Modeling the Soil Surface Temperature–Wind Speed–Evaporation Relationship Using a Feedforward Backpropagation ANN in Al Medina, Saudi Arabia

Abstract

1. Introduction

2. Materials and Methods

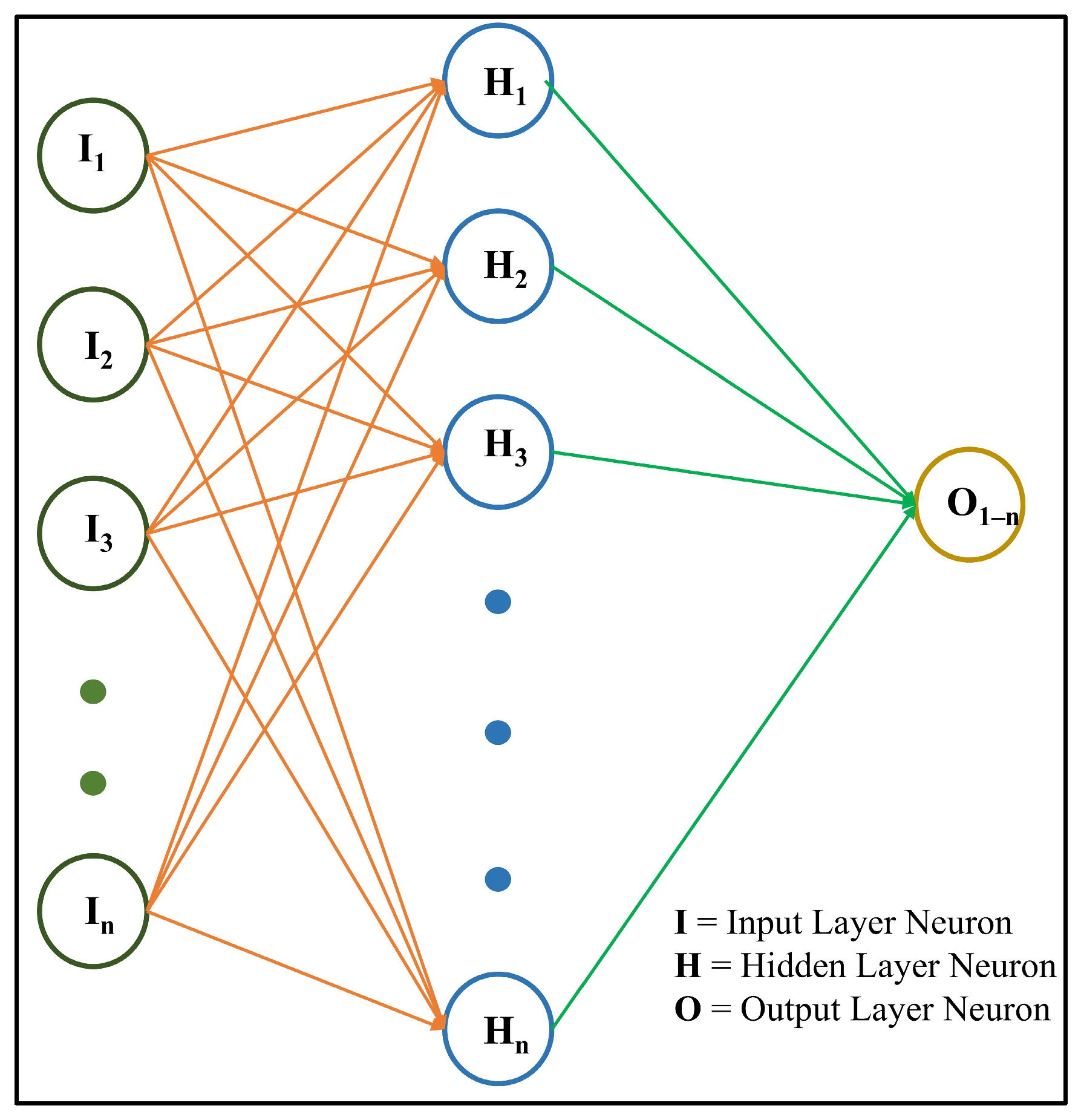

2.1. Feedforward Backpropagation Algorithm (FFBP)
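To illustrate the feedforward backpropagation (FFBP) idea, the sketch below trains a network with one hidden layer and one output. The forward pass computes hidden activations and an output. The backward pass then propagates the squared-error gradient from the output back to the hidden weights. This is a minimal pure-Python sketch, not the authors' implementation. The following are all hypothetical choices: the sigmoid hidden activation, the linear output unit, per-sample (online) gradient descent, the learning rate, the epoch count, the weight initialization, and the class name `FFBPNet`.

```python
import math
import random


def sigmoid(z):
    """Logistic activation used in the hidden layer."""
    return 1.0 / (1.0 + math.exp(-z))


class FFBPNet:
    """Illustrative n-h-1 feedforward network trained by backpropagation.

    Hypothetical choices (not taken from the article): sigmoid hidden units,
    a linear output unit, uniform(-0.5, 0.5) initial weights, and online
    (per-sample) gradient descent on the squared error.
    """

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Hidden layer: weighted sum of inputs plus bias, then sigmoid.
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # Output layer: linear combination of hidden activations.
        y = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2
        return h, y

    def train_step(self, x, target, lr):
        h, y = self.forward(x)
        err = y - target  # gradient of 0.5 * (y - target)**2 with respect to y
        # Backward pass: hidden deltas use the output weights before they change.
        deltas = [err * w * hj * (1.0 - hj) for w, hj in zip(self.w2, h)]
        self.w2 = [w - lr * err * hj for w, hj in zip(self.w2, h)]
        self.b2 -= lr * err
        for j, d in enumerate(deltas):
            self.w1[j] = [w - lr * d * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= lr * d
        return 0.5 * err * err

    def fit(self, X, Y, epochs=200, lr=0.1):
        for _ in range(epochs):
            for x, t in zip(X, Y):
                self.train_step(x, t, lr)

    def predict(self, x):
        return self.forward(x)[1]

    def mse(self, X, Y):
        return sum((self.predict(x) - t) ** 2 for x, t in zip(X, Y)) / len(X)


# Toy usage on synthetic data (not the Al Medina records): two inputs in [0, 1].
X = [[i / 4, j / 4] for i in range(5) for j in range(5)]
Y = [0.3 * a + 0.6 * b for a, b in X]
net = FFBPNet(n_in=2, n_hidden=5, seed=1)
before = net.mse(X, Y)
net.fit(X, Y, epochs=200, lr=0.1)
print(f"MSE before: {before:.4f}, after: {net.mse(X, Y):.4f}")
```

A linear output unit is used here because the inputs and targets are assumed to be normalized. A sigmoid output unit is an equally common choice when targets are scaled to [0, 1].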

2.2. Data Collection

2.3. Data Normalization
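Min-max scaling to a fixed interval is a common way to normalize ANN inputs and targets. The normalization scheme is not stated here, so the sketch below assumes min-max scaling to [0, 1]; the bounds `a` and `b` are adjustable. It also includes the inverse transform, which returns predictions to physical units. The function names are hypothetical.

```python
def minmax_fit(values):
    """Return the (min, max) of a variable; compute these on the training data."""
    return min(values), max(values)


def minmax_scale(values, lo, hi, a=0.0, b=1.0):
    """Map values linearly from [lo, hi] to [a, b]."""
    if hi == lo:
        raise ValueError("a constant variable cannot be min-max scaled")
    return [a + (b - a) * (v - lo) / (hi - lo) for v in values]


def minmax_inverse(scaled, lo, hi, a=0.0, b=1.0):
    """Map scaled values from [a, b] back to the original [lo, hi] units."""
    return [lo + (s - a) * (hi - lo) / (b - a) for s in scaled]


# Hypothetical evaporation values whose range (0 to 43) matches the reported min and max.
evap = [0.0, 9.6, 43.0]
lo, hi = minmax_fit(evap)
scaled = minmax_scale(evap, lo, hi)
restored = minmax_inverse(scaled, lo, hi)
print(scaled)
```

For example, with the evaporation range in the descriptive statistics (minimum 0, maximum 43), a value of 9.6 maps to 9.6 / 43 ≈ 0.223.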

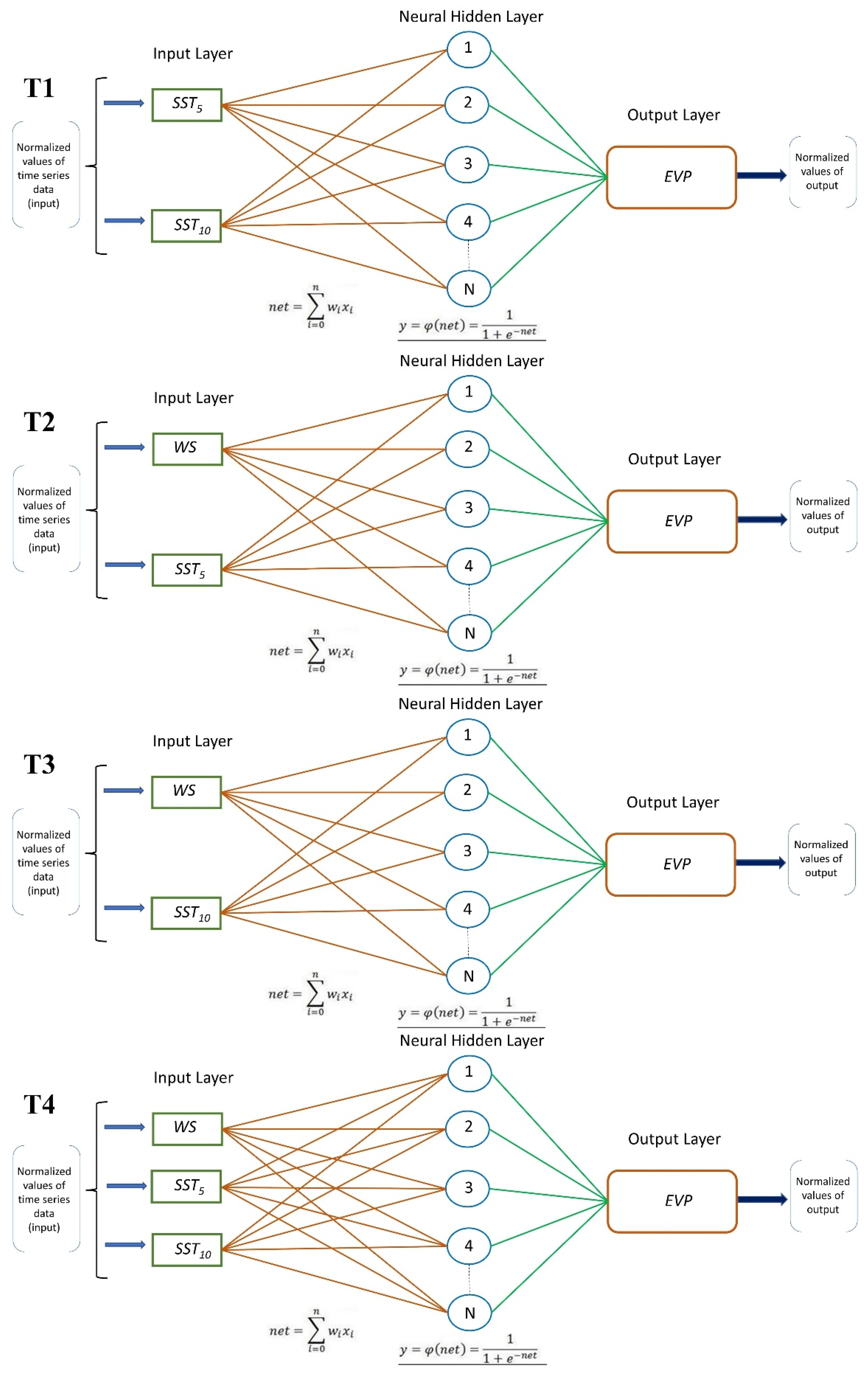

2.4. Architecture of Modeled NN
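The results table writes topologies as strings such as `2-5-1` or `3-5-1`. The helper below assumes the conventional reading: input, hidden, and output neuron counts for a fully connected network. It parses a topology string and counts the trainable weights and biases. Both this reading of the notation and the function names are assumptions for illustration.

```python
def parse_topology(spec):
    """Parse an 'inputs-hidden-outputs' string such as '3-5-1' into layer sizes."""
    sizes = [int(part) for part in spec.split("-")]
    if len(sizes) < 2 or any(s <= 0 for s in sizes):
        raise ValueError(f"invalid topology: {spec!r}")
    return sizes


def count_parameters(sizes):
    """Count weights plus biases in a fully connected feedforward network."""
    # Each layer transition contributes n_in * n_out weights and n_out biases.
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


for spec in ("2-1-1", "2-5-1", "3-5-1", "3-10-1"):
    print(spec, count_parameters(parse_topology(spec)))
```

Under this reading, `2-5-1` has 2×5 + 5 + 5×1 + 1 = 21 parameters and `3-10-1` has 51. The T4 rows use three inputs, which would be consistent with the three predictors (WS, SST at 5 cm, SST at 10 cm) in the descriptive statistics.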

2.5. Datasets

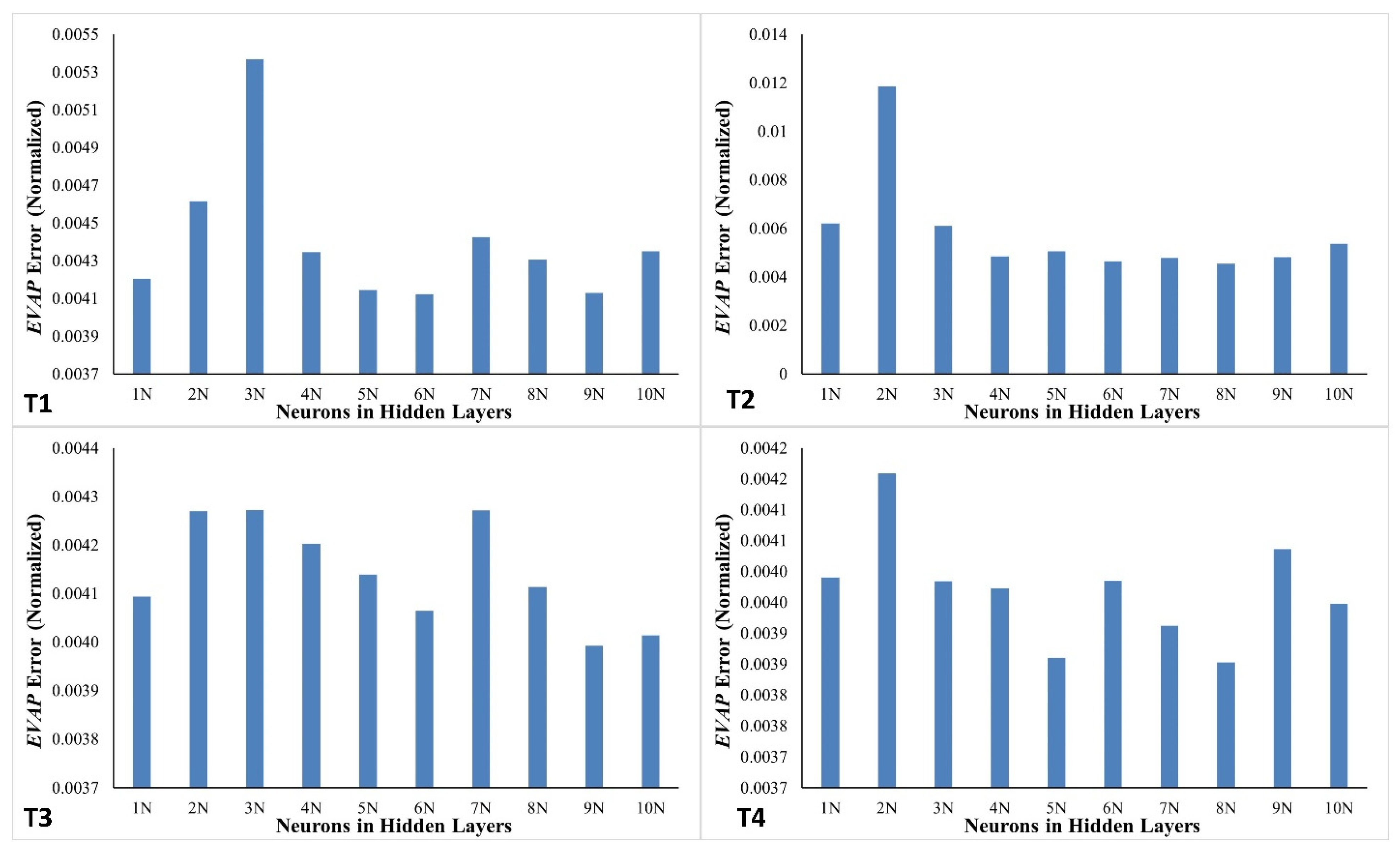

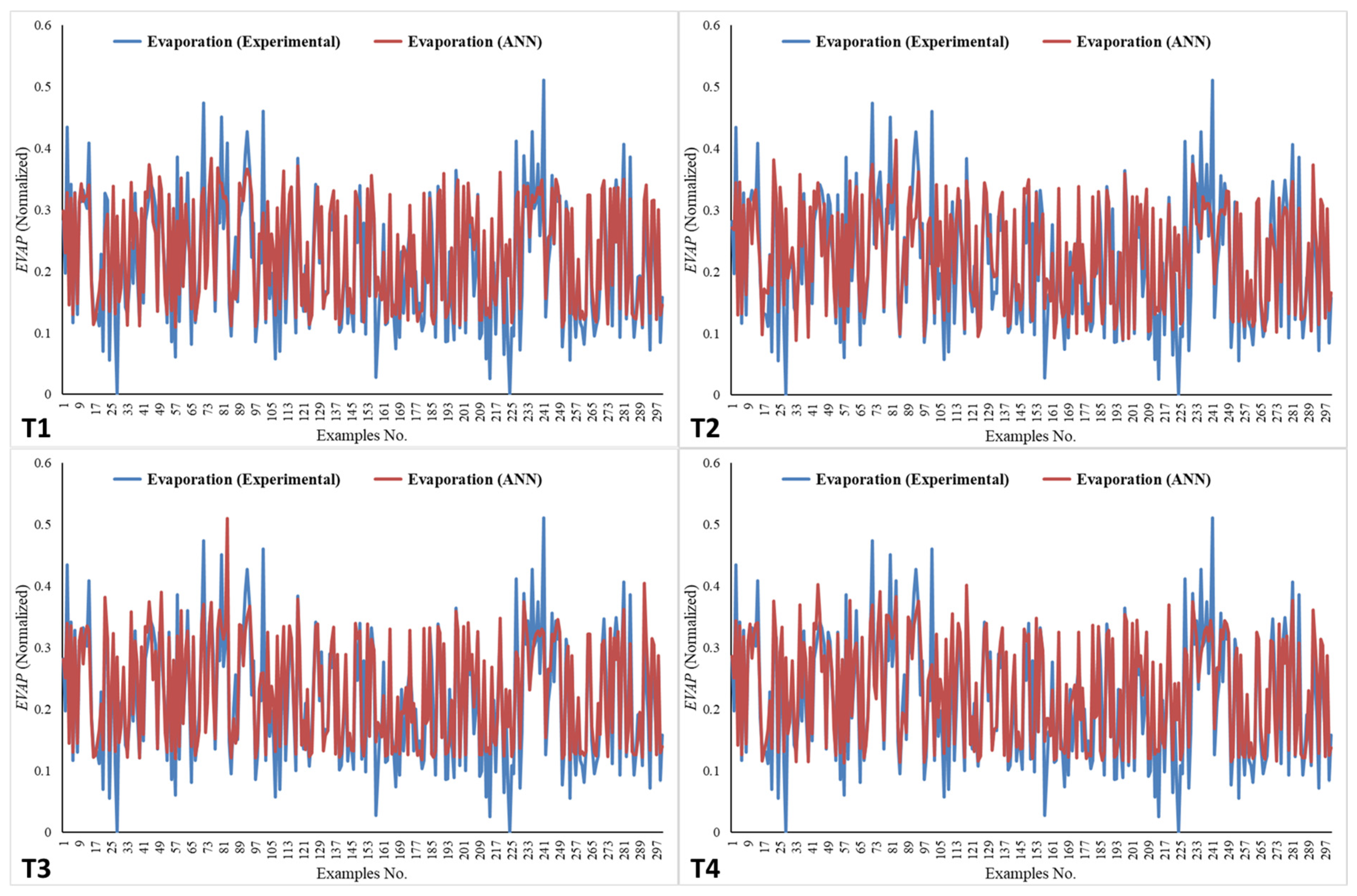

3. Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Descriptive statistics of wind speed (WS), soil surface temperature (SST) at 5 cm and 10 cm, and evaporation (EVAP).

| Parameter | WS | SST (5 cm) | SST (10 cm) | EVAP |
|---|---|---|---|---|
| Mean | 9.036 | 33.762 | 29.129 | 9.861 |
| SE | 0.069 | 0.196 | 0.164 | 0.057 |
| Median | 8.4 | 35.2 | 30.6 | 9.6 |
| Mode | 7 | 41 | 40 | 5 |
| SD | 3.826 | 10.843 | 9.091 | 4.703 |
| Kurtosis | 8.892 | 19.308 | −1.22 | 1.312 |
| Skewness | 1.513 | 1.054 | −0.3 | 0.609 |
| Range | 52 | 198 | 42.8 | 43 |
| Min | 0 | 8 | 6.8 | 0 |
| Max | 52 | 206 | 49.6 | 43 |
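The sketch below reproduces the statistics in this table (mean, standard error, median, mode, standard deviation, kurtosis, skewness, range, minimum, maximum) from a raw series. It assumes the sample (n − 1) standard deviation and SE = SD/√n. It also assumes the bias-adjusted sample skewness and excess kurtosis computed by common spreadsheet tools. The negative kurtosis reported for SST (10 cm) can only come from an excess-kurtosis convention, but the exact estimator is not stated. The function name `describe` is hypothetical.

```python
import math
import statistics


def describe(x):
    """Summary statistics in the same order as the descriptive-statistics table.

    Assumed conventions (not stated in the article): sample SD with an n - 1
    denominator, SE = SD / sqrt(n), and bias-adjusted sample skewness and
    excess kurtosis as computed by common spreadsheet SKEW/KURT functions.
    """
    n = len(x)
    if n < 4:
        raise ValueError("at least 4 observations are needed for sample kurtosis")
    mean = statistics.fmean(x)
    sd = statistics.stdev(x)
    if sd == 0:
        raise ValueError("skewness and kurtosis are undefined for a constant series")
    z = [(v - mean) / sd for v in x]  # standardized values
    skew = n / ((n - 1) * (n - 2)) * sum(t ** 3 for t in z)
    kurt = (n * (n + 1) / ((n - 1) * (n - 2) * (n - 3)) * sum(t ** 4 for t in z)
            - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))
    return {
        "Mean": mean,
        "SE": sd / math.sqrt(n),
        "Median": statistics.median(x),
        "Mode": statistics.mode(x),  # first most frequent value (Python 3.8+)
        "SD": sd,
        "Kurtosis": kurt,
        "Skewness": skew,
        "Range": max(x) - min(x),
        "Min": min(x),
        "Max": max(x),
    }


# Toy series, not the study data.
for name, value in describe([1, 2, 2, 3, 4, 7]).items():
    print(f"{name}: {value:.3f}")
```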

R² and mean squared error (MSE) of each tested network topology for T1, T2, T3, and T4.

| Network Topology | T1 R² | T1 MSE | T2 R² | T2 MSE | T3 R² | T3 MSE | Network Topology | T4 R² | T4 MSE |
|---|---|---|---|---|---|---|---|---|---|
| 2-1-1 | 0.782 | 0.00421 | 0.739 | 0.00620 | 0.796 | 0.00404 | 3-1-1 | 0.797 | 0.00399 |
| 2-2-1 | 0.77 | 0.00462 | 0.031 | 0.01187 | 0.698 | 0.00592 | 3-2-1 | 0.797 | 0.00416 |
| 2-3-1 | 0.765 | 0.00537 | 0.746 | 0.00611 | 0.783 | 0.00422 | 3-3-1 | 0.798 | 0.00398 |
| 2-4-1 | 0.775 | 0.00435 | 0.749 | 0.00485 | 0.796 | 0.00415 | 3-4-1 | 0.787 | 0.00397 |
| 2-5-1 | 0.787 | 0.00414 | 0.749 | 0.00505 | 0.789 | 0.00409 | 3-5-1 | 0.808 | 0.00386 |
| 2-6-1 | 0.784 | 0.00412 | 0.763 | 0.00465 | 0.798 | 0.00401 | 3-6-1 | 0.797 | 0.00398 |
| 2-7-1 | 0.781 | 0.00443 | 0.734 | 0.00478 | 0.766 | 0.00422 | 3-7-1 | 0.805 | 0.00391 |
| 2-8-1 | 0.777 | 0.00431 | 0.766 | 0.00455 | 0.791 | 0.00406 | 3-8-1 | 0.805 | 0.00385 |
| 2-9-1 | 0.788 | 0.00413 | 0.751 | 0.00482 | 0.784 | 0.00394 | 3-9-1 | 0.799 | 0.00404 |
| 2-10-1 | 0.784 | 0.00435 | 0.747 | 0.00537 | 0.787 | 0.00396 | 3-10-1 | 0.807 | 0.00395 |
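The two metrics in this table can be computed as shown below. The text does not say whether R² is the coefficient of determination or the squared Pearson correlation. The sketch assumes the coefficient of determination, 1 − SS_res/SS_tot. It then ranks the T4 topologies using the values transcribed from the table. The function and variable names are illustrative.

```python
def mse(obs, pred):
    """Mean squared error between observed and predicted values."""
    return sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs)


def r2(obs, pred):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    mean_obs = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when the observations are constant")
    return 1.0 - ss_res / ss_tot


# T4 results transcribed from the table: topology -> (R2, MSE).
T4 = {
    "3-1-1": (0.797, 0.00399), "3-2-1": (0.797, 0.00416),
    "3-3-1": (0.798, 0.00398), "3-4-1": (0.787, 0.00397),
    "3-5-1": (0.808, 0.00386), "3-6-1": (0.797, 0.00398),
    "3-7-1": (0.805, 0.00391), "3-8-1": (0.805, 0.00385),
    "3-9-1": (0.799, 0.00404), "3-10-1": (0.807, 0.00395),
}
best_by_r2 = max(T4, key=lambda k: T4[k][0])
best_by_mse = min(T4, key=lambda k: T4[k][1])
print("highest R2:", best_by_r2, "lowest MSE:", best_by_mse)
```

On these values the two criteria disagree. R² is highest for 3-5-1 (0.808), while MSE is lowest for 3-8-1 (0.00385). Any topology-selection rule therefore needs to state which criterion takes precedence.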

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Refadah, S.S.; AlAbadi, S.; Almazroui, M.; Khan, M.A.; ElKashouty, M.; Khan, M.Y.A. Modeling the Soil Surface Temperature–Wind Speed–Evaporation Relationship Using a Feedforward Backpropagation ANN in Al Medina, Saudi Arabia. Technologies 2025, 13, 461. https://doi.org/10.3390/technologies13100461

Refadah SS, AlAbadi S, Almazroui M, Khan MA, ElKashouty M, Khan MYA. Modeling the Soil Surface Temperature–Wind Speed–Evaporation Relationship Using a Feedforward Backpropagation ANN in Al Medina, Saudi Arabia. Technologies. 2025; 13(10):461. https://doi.org/10.3390/technologies13100461

Chicago/Turabian Style: Refadah, Samyah Salem, Sultan AlAbadi, Mansour Almazroui, Mohammad Ayaz Khan, Mohamed ElKashouty, and Mohd Yawar Ali Khan. 2025. "Modeling the Soil Surface Temperature–Wind Speed–Evaporation Relationship Using a Feedforward Backpropagation ANN in Al Medina, Saudi Arabia" Technologies 13, no. 10: 461. https://doi.org/10.3390/technologies13100461

APA Style: Refadah, S. S., AlAbadi, S., Almazroui, M., Khan, M. A., ElKashouty, M., & Khan, M. Y. A. (2025). Modeling the Soil Surface Temperature–Wind Speed–Evaporation Relationship Using a Feedforward Backpropagation ANN in Al Medina, Saudi Arabia. Technologies, 13(10), 461. https://doi.org/10.3390/technologies13100461