Abstract

This paper presents an intelligent system for the dynamic estimation of sheep body weight (BW). The methodology used to estimate body weight is based on measuring seven biometric parameters: height at withers, rump height, body length, body diagonal length, total body length, semicircumference of the abdomen, and semicircumference of the girth. A biometric parameter acquisition system was developed using a Kinect as the sensor. The results were contrasted with measurements obtained manually with a flexometer. The comparison gives an average root mean square error (RMSE) of 9.91 and a mean R² of 0.81. Subsequently, the parameters were used as inputs to a back-propagation artificial neural network. Performance tests were run with different combinations to select the best architecture. In this way, an intelligent body weight estimation system was obtained from biometric parameters, with a 5.8% RMSE in the weight estimations for the best architecture. This approach represents an innovative, feasible, and economical alternative to support decision-making in livestock production systems.

1. Introduction

Body weight (BW) has been identified as the foremost parameter for determining the growth of farm animals and plays a critical role in decision support systems for livestock management [1]. However, its measurement in live animals is a complex and slow process. For this reason, multiple researchers have studied the correlation of BW with biometric measurements that are simpler and easier to obtain [2]. Various zones that can be correlated with BW are commonly measured, such as withers height, rump height, body length, diagonal body length, rib depth, chest depth, chest width, dorsal height, abdomen width, and thorax width, among others [3,4].

In order to estimate the body weight of livestock, different methodologies are used that largely depend on the advancement of technology in both hardware and computational algorithms. Regarding hardware technologies, we can mention those based on lasers, which are exemplified by the study conducted in [5] using Light Detection and Ranging (LiDAR) for precise non-contact body measurement of Qinchuan cattle. The process involves filtering techniques, segmentation, and feature extraction, culminating in the reconstruction of a 3D model. An accuracy of 2% is achieved. Additionally, in [6], manual measurements on Holstein cows are compared to those obtained using a 3D scanner (Morpho3D), showing high correlations and thus enabling more precise morphological estimations.

Another technology is based on depth cameras, which have been used for 3D reconstructions. For example, in [2], a Kinect sensor is used to estimate biometric parameters in pigs and correlate them with weight, achieving an R² > 0.95. A similar study, but for estimating body weight in dairy cows, is described in [7], where four Kinect devices are used to reconstruct the entire animal body, obtaining coefficients of determination > 0.84 and deviations lower than 6% from manual measurements.

Due to their affordability and efficiency, 2D cameras have gained popularity in computer vision in recent decades. Many researchers have proposed algorithms to extract animal body dimensions from 2D photos. For example, in [8], an algorithm is proposed to automatically extract pig body surface dimension parameters from top-view pig images, achieving an accuracy of 97% (SE = 1.64%) in the body length parameter. Furthermore, in [9], lateral and dorsal images are used to estimate different biometric parameters, resulting in a correlation coefficient of 0.71. In [10], stereoscopic vision is employed to estimate the weight of pigs; the body length and withers height were estimated with an R² ranging from 0.91 to 0.98, and the weight was estimated with a coefficient of determination of 0.993. However, techniques relying on 2D images face obstacles such as sensitivity to changes in lighting conditions, difficulty in separating the animal from the background, and the need for optimal image capture settings.

Finally, it is also worth mentioning that advances in computational algorithms have contributed to improving the estimations provided by the previously described devices. One of the most promising approaches is based on artificial neural networks (ANNs), as demonstrated in [11], which proposes a Deep Neural Network (DNN) weight estimation method for pigs using a mesh model obtained from a depth camera. The weights are estimated with a high accuracy of 97.89%. Another example uses RGB and depth images of sheep [12] with the LiteHRNet model (a Lightweight High-Resolution Network), which is based on Convolutional Neural Networks (CNNs), and achieves acceptable weight estimation results with a mean absolute percentage error (MAPE) of 14.605%. Additionally, another work employs a Recurrent Neural Network (RNN) and reports an accuracy of 88.6% for bite detection and 94.1% for chew identification from video and audio recorded while horses were grazing [13]. Other studies use depth images and CNNs to estimate the body condition score in cows [14,15]. A similar analysis is performed in pigs to calculate live weights in sow stalls [16].

This study aims to provide a new alternative for determining BW by combining images from a depth camera with an ANN. The present study presents a neural network trained with the biometric parameters of Pelibuey sheep.

2. Methods and Methodology

2.1. Pelibuey Sheep Selection

Sheep were treated under the standards and laws governing ethical animal research of the Academic Division of Agricultural Sciences of the Juarez Autonomous University of Tabasco (ID project PFI: UJAT-DACA-2015-IA-02).

This study took place at the Southeastern Center for Ovine Integration (17°78′ N, 92°96′ W; ). A total of fifty-six Pelibuey ewes older than four months and younger than one year, with mean body weights of , were used for the measurements and digital images. This age range was selected because the selection of Pelibuey sheep for commercial activities must begin after weaning, which occurs at approximately 90 days [17]. Given the characteristics of the breed, samples were taken up to twelve months of age: although weight continues to increase after that point, some of the biometric parameters no longer vary significantly in size, and within this period the animals pass through infancy and adolescence and come to be considered adults [17]. Each sheep was passed individually through a weighing area and weighed with a mechanical scale (Torrey, Mexico). This scale had a weight limit of 100 kg, a precision of 10 g, and kg as its unit of measurement.

The sheep were kept in cages with raised slatted floors and fed as a group in a feedlot arrangement. For the experiment, the diet consisted of star grass hay, soybean meal, ground corn, minerals, and vitamins, with a crude protein level of 16% of dry matter [18].

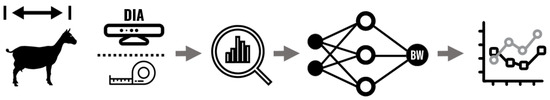

The general weight estimation process through digital image analysis (DIA) and artificial intelligence is shown in Figure 1. In the first step, the measurements of the sheep are extracted through DIA. To determine their reliability, they are contrasted against traditional measurements made with flexible fiberglass tape. Subsequently, the data estimated with DIA are analyzed to find the biometric parameters that most influence body weight estimation. Finally, the biometric parameters obtained by DIA are used as training data for an ANN that estimates the BW of the animal. The weight calculated by the neural network is then compared with the actual weights of the animals and with the values obtained by other models.

Figure 1.

General process for obtaining the live weight of the animal through digital image analysis and artificial intelligence.

2.2. Software

Python [19], with the modules OpenCV [20], NumPy [21], and Pandas [22], was used as the programming language. The image acquisition software was written to acquire images from a Kinect camera and display them on a portable computer for data collection.

The Kinect® sensor was mounted on a frame in front of the animals, at a distance of around 1.5 m, to record the complete body length.

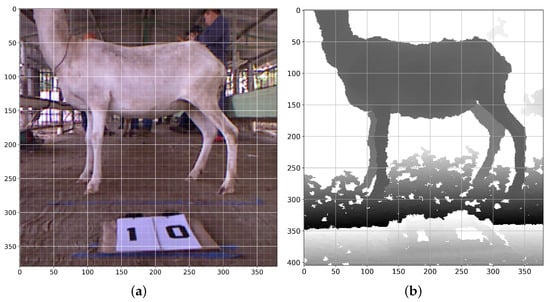

Subsequently, two digital color images (Figure 2a) and two depth images (Figure 2b) were collected from the Kinect® sensor at approximately one-second intervals. Color digital images were saved in PNG format. The depth image values were stored in a comma-separated value (CSV) file and processed using Python and the modules OpenCV and NumPy. The color image had a resolution of 0.3 Mpixels, and the angular field of view for the acquisition was 62.0° on the X-axis and 48.6° on the Y-axis. An infrared camera and IR projector were used to obtain the depth image. This hardware had a resolution of 0.3 Mpixels, and the angular field of view of this camera was 58.5° horizontally and 46.6° vertically [23].

Figure 2.

Pictures taken with a Kinect® sensor (version 1) placed in front of the animal; (a) digital color image; (b) depth image.

Digital RGB color photos were used to identify the animals. As each sheep entered the measuring area, a small number corresponding to its identification number (ID) was registered. The number was recorded by the Kinect® sensor to guarantee that each image could be matched to its animal.

2.3. Image Processing

Following the procedures specified in [24], it was necessary to calibrate the pixels to appropriately collect the depth information on the Z-axis and the dimensions in the X- and Y-axes for processing the Kinect® sensor images.
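As a rough illustration of why the pixel calibration depends on depth, the following sketch uses a simple pinhole approximation to estimate the real-world size covered by one depth pixel at a given distance. It is not the calibration procedure of [24]. The 640 × 480 depth resolution is an assumption consistent with the 0.3 Mpixel figure given in Section 2.2, the 1.5 m working distance is assumed to be in meters, and the function name is hypothetical.

```python
import math

def pixel_footprint_cm(depth_m, fov_deg, n_pixels):
    """Approximate size (cm) covered by one pixel at a given depth (pinhole model)."""
    # Extent of the scene visible along this axis at the given depth, in meters.
    scene_m = 2.0 * depth_m * math.tan(math.radians(fov_deg) / 2.0)
    return 100.0 * scene_m / n_pixels

# Depth-camera field of view from Section 2.2; the pixel counts are assumed.
horizontal = pixel_footprint_cm(1.5, 58.5, 640)
vertical = pixel_footprint_cm(1.5, 46.6, 480)
print(f"{horizontal:.3f} cm/pixel (X), {vertical:.3f} cm/pixel (Y)")
```

Under these assumptions, one pixel covers roughly a quarter of a centimeter at the working distance, and the footprint grows linearly with depth, which is why a calibration holds only over a limited range.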

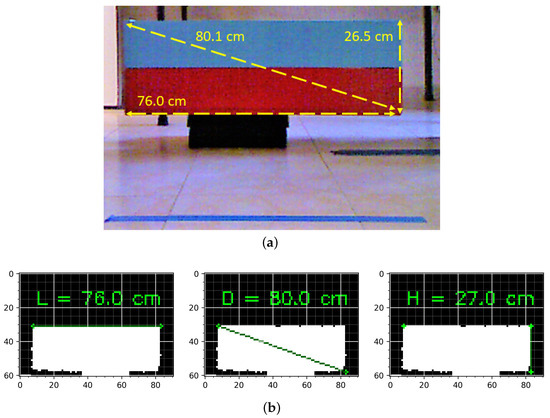

Regular objects were used to test the calibration, ensuring the exact dimensions were obtained. Figure 3 compares the measurements of an object with a rectangular face with those obtained after calibration. The calibration of any image sensor is only valid for a defined range where acquisition conditions are similar.

Figure 3.

Comparison of measurements made after Kinect® calibration; (a) measurement test object; (b) measurement of length, diagonal, and height after calibration.

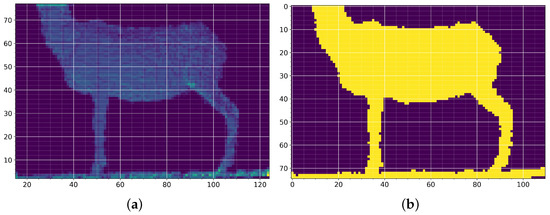

In sheep measurement, the error estimated was ±0.5. Once the image was calibrated, a depth threshold was applied on the Z-axis to remove the background. This process filters pixels by their depth values: the pixels at a depth of less than 1.3 m or more than 1.7 m were eliminated (Figure 2a). After creating a two-dimensional histogram, an image was generated using a conversion that set 1 pixel equal to 1 cm, as shown in Figure 4b. This last image was used to obtain the measurements at the points of interest.
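The depth thresholding step can be sketched with NumPy as follows. This is a minimal illustration rather than the authors' code: the function name is hypothetical, the depth values are assumed to be in meters, and the small array stands in for a depth image loaded from the CSV file.

```python
import numpy as np

def remove_background(depth_m, near=1.3, far=1.7):
    """Keep pixels whose depth lies in [near, far]; set all others to 0."""
    depth_m = np.asarray(depth_m, dtype=float)
    mask = (depth_m >= near) & (depth_m <= far)
    return np.where(mask, depth_m, 0.0), mask

# Illustrative depth image (values in meters, not taken from the study).
depth = np.array([[0.9, 1.4, 1.5],
                  [1.6, 1.8, 2.5]])
foreground, mask = remove_background(depth)
print(mask)
```

The returned mask can also be reused to blank out the same pixels in the color image.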

Figure 4.

Comparison of measurements made after Kinect® calibration; (a) measurement test object; (b) measurement of length, diagonal, and height after calibration.

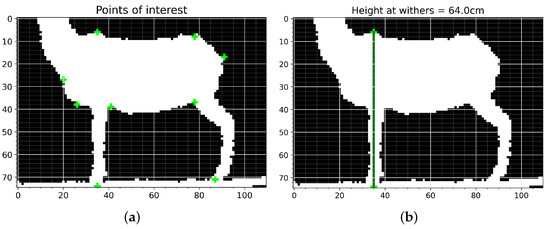

The measurements were obtained from the processed Kinect® sensor images through a purpose-built graphical user interface (GUI), shown in Figure 5a. The operator only needs to mark the points of interest, and the values are generated automatically, as shown in Figure 5b. Using nine points, the GUI calculates the geometric distance between each pair of points in centimeters along the X- and Y-axes, since 1 pixel is equivalent to 1 cm.
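The distance calculation performed by the GUI can be sketched as below, assuming the points are given as pixel coordinates in the rescaled image. The point names, coordinates, and function name are hypothetical and serve only to illustrate the computation.

```python
import math
from itertools import combinations

def pairwise_distances_cm(points, cm_per_pixel=1.0):
    """Euclidean distance between every pair of labeled points, in cm."""
    distances = {}
    for (name_a, a), (name_b, b) in combinations(points.items(), 2):
        distances[(name_a, name_b)] = cm_per_pixel * math.dist(a, b)
    return distances

# Hypothetical points of interest in pixel coordinates (x, y).
points = {"withers": (120, 40), "ground": (120, 105), "rump": (180, 40)}
d = pairwise_distances_cm(points)
print(d[("withers", "ground")])  # vertical distance between the first two points
```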

Figure 5.

Graphical user interface to obtain the measurements. (a) Interface through which the user enters points of interest to obtain measurements. (b) Example of automatic height at withers (HW) measurement.

2.4. Biometric Measurements

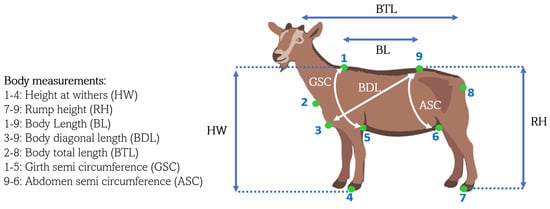

Immediately after data collection with the Kinect®, the following biometric measurements (BMs) were obtained for each animal, as previously described in [25]: body diagonal length (BDL), abdomen semicircumference (ASC), height at withers (HW), rump height (RH), body length (BL), total body length (BTL), and girth semicircumference (GSC) (Figure 6).

Figure 6.

Biometric Measurements.

Every BM was recorded in centimeters. The animals' body weight (BW) was determined using a digital scale with 10 g of precision. The manual measurements were taken with flexible fiberglass tape. The letter K was appended to the measurements collected by the camera to distinguish them. For instance, body length measured by the camera is BLK.

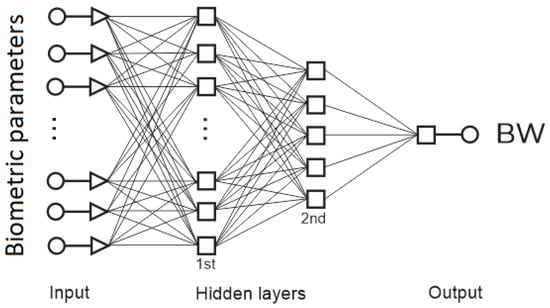

2.5. Artificial Neural Network as Body Weight Predictor

The use of artificial intelligence has grown rapidly in recent years, and there are currently several types of neural networks, each with its own advantages and disadvantages. However, the most widely used artificial neural network (ANN) is the back-propagation (BP) ANN. Thus, a BP-ANN was used for all testing in this research (Figure 7). The performance of the network depends to a great extent on its architecture. The architecture was adjusted by progressively increasing the number of neurons in the hidden layers and changing the activation functions to find the best performance of the network as a weight predictor.
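To make the idea of a BP-ANN concrete, the following is a minimal NumPy sketch of a network with one hidden layer trained by back-propagation. It is not the network used in this study: the hidden size, learning rate, number of epochs, and the synthetic data are all assumptions chosen for illustration.

```python
import numpy as np

def train_bp_ann(X, y, hidden=4, lr=0.05, epochs=2000, seed=0):
    """Train a one-hidden-layer network (tanh hidden, linear output) by back-propagation."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 0.5, (X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.5, (hidden, 1))
    b2 = np.zeros(1)
    target = y.reshape(-1, 1)
    history = []
    for _ in range(epochs):
        # Forward pass.
        hidden_out = np.tanh(X @ W1 + b1)
        pred = hidden_out @ W2 + b2
        err = pred - target
        history.append(float(np.mean(err ** 2)))
        # Backward pass: gradients of the mean squared error.
        grad_pred = 2.0 * err / len(X)
        grad_W2 = hidden_out.T @ grad_pred
        grad_b2 = grad_pred.sum(axis=0)
        grad_hidden = (grad_pred @ W2.T) * (1.0 - hidden_out ** 2)
        grad_W1 = X.T @ grad_hidden
        grad_b1 = grad_hidden.sum(axis=0)
        # Gradient descent update.
        W1 -= lr * grad_W1
        b1 -= lr * grad_b1
        W2 -= lr * grad_W2
        b2 -= lr * grad_b2

    def predict(X_new):
        return (np.tanh(X_new @ W1 + b1) @ W2 + b2).ravel()

    return predict, history

# Synthetic stand-in data: 56 samples with 7 normalized inputs (not study data).
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, (56, 7))
y = X @ np.linspace(0.05, 0.25, 7) + rng.normal(0.0, 0.01, 56)
predict, history = train_bp_ann(X, y)
print(f"MSE at first epoch: {history[0]:.4f}, at last epoch: {history[-1]:.4f}")
```

Changing `hidden` or swapping `np.tanh` for another activation mirrors the kind of architecture search described above.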

Figure 7.

General structure of the ANN.

The data used are those shown in Table 1. The data were normalized with the max–min method, then used to build the network. The output was the weight of the sheep, and the biometric parameters were considered as inputs.
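A minimal sketch of max–min normalization is given below, scaling each column to the range [0, 1] and keeping the column limits so that predictions can be converted back to physical units. The function names and sample values are illustrative assumptions, not data from the study.

```python
import numpy as np

def min_max_normalize(data):
    """Scale each column to [0, 1]; return the scaled data and the column limits."""
    data = np.asarray(data, dtype=float)
    lo = data.min(axis=0)
    hi = data.max(axis=0)
    # Avoid division by zero for constant columns.
    span = np.where(hi > lo, hi - lo, 1.0)
    return (data - lo) / span, lo, hi

def denormalize(scaled, lo, hi):
    """Map normalized values back to the original units."""
    return scaled * (hi - lo) + lo

# Illustrative values: two measurements (cm) for three animals.
sample = [[60.0, 20.0], [70.0, 30.0], [80.0, 25.0]]
scaled, lo, hi = min_max_normalize(sample)
print(scaled)
```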

Table 1.

Variables used in the artificial neural networks for the estimation of the body weight of a sheep through biometric parameters.

The performance of an ANN can be measured with various statistical indicators. Among the most common is the root mean square error (RMSE), which reduces the effect of minor errors and gives more weight to larger ones; the formula that describes it is given in Equation (1). The data set was divided into 70% for training, 15% for validation, and 15% for evaluating the network performance. Several tests with different combinations of numbers of neurons and activation functions were run to minimize the RMSE.
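For reference, the RMSE is conventionally defined as follows, where $n$ is the number of samples, $y_i$ is the measured weight, and $\hat{y}_i$ is the weight estimated by the network. The symbol names are assumptions and are not taken from the source.

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^{2}}
$$

Because each error is squared before averaging, a single large error contributes far more to the RMSE than several small ones.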

Finally, since the starting points in the neuron weights were random values and the data were randomly split, each architecture was simulated ten times to cross-reference and account for the variability in this type of neural network.
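The random 70/15/15 division repeated over ten runs can be sketched as follows. The seed, the rounding of the subset sizes, and the function name are assumptions; the source does not state how fractional sizes were handled.

```python
import numpy as np

def split_indices(n_samples, rng, train=0.70, val=0.15):
    """Randomly split sample indices into training, validation, and test subsets."""
    order = rng.permutation(n_samples)
    n_train = int(round(train * n_samples))
    n_val = int(round(val * n_samples))
    return (order[:n_train],
            order[n_train:n_train + n_val],
            order[n_train + n_val:])

rng = np.random.default_rng(42)
# One independent random split per run, for ten runs over the 56 animals.
runs = [split_indices(56, rng) for _ in range(10)]
train_idx, val_idx, test_idx = runs[0]
print(len(train_idx), len(val_idx), len(test_idx))
```

Averaging the indicators over the ten runs then gives a figure that is less sensitive to any single split or weight initialization.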

3. Results

This section shows the results of each process step for the weight estimation neural system. Firstly, the correlation of the parameters with the weight is shown. Later, the result using the biometric parameters as inputs in the neural network to estimate the weight is exhibited.

3.1. Selection of Network Architecture and Network Performance with the Seven Biometric Parameters as Input

The architecture selection was based on the geometric pyramid rule, and the hyperparameters were varied to obtain the best performance of the network. The varied parameters were the number of neurons per layer, the number of layers, and the type of activation function. The activation functions used were as follows: the hyperbolic tangent sigmoid, represented by the letter T; the log-sigmoid transfer function, represented by L; and the linear transfer function, represented by P. A cross-validation was necessary since the network weights were initialized with random values. For this, ten runs were made. The average results of these runs are found in Table 2.
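As commonly stated, the geometric pyramid rule sizes the hidden layers so that the layer widths form a geometric progression from the number of inputs down to the number of outputs. The sketch below computes such starting sizes; the function name is hypothetical, and the rounding to whole neurons is an assumption. The source indicates that the neuron counts were subsequently varied around this starting point.

```python
def pyramid_hidden_sizes(n_inputs, n_outputs, n_hidden_layers):
    """Hidden-layer sizes forming a geometric progression between inputs and outputs."""
    ratio = (n_inputs / n_outputs) ** (1.0 / (n_hidden_layers + 1))
    sizes = [n_outputs * ratio ** k for k in range(n_hidden_layers, 0, -1)]
    # Round to whole neurons, keeping at least one neuron per layer.
    return [max(1, round(size)) for size in sizes]

# Seven biometric inputs and one output (body weight).
print(pyramid_hidden_sizes(7, 1, 1))
print(pyramid_hidden_sizes(7, 1, 2))
```

For one hidden layer this reduces to the square root of the product of the input and output counts.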

Table 2.

Tests performed to select the network architecture used.

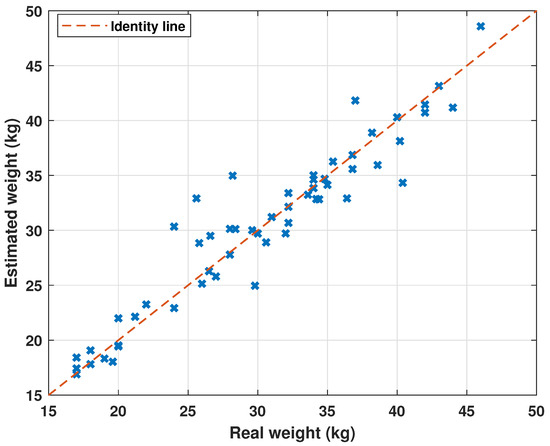

Considering the architecture tests, the network called N-L was the best estimator of the weight. This network delivers an average RMSE of 5.66%, an R² of 88.37%, a mean absolute percentage error (MAPE) of 4.53%, and a mean bias error (MBE) of 0.59%.
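The four indicators can be computed as in the following sketch, which uses their standard definitions. Two points are assumptions because the source does not specify them: the percentage RMSE is expressed here relative to the mean actual weight, and the bias is taken as predicted minus actual. The sample weights are illustrative only.

```python
import numpy as np

def regression_metrics(actual, predicted):
    """Return RMSE, percentage RMSE, MAPE, MBE, and R2 for paired values."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    err = predicted - actual  # assumed sign convention for the bias
    rmse = float(np.sqrt(np.mean(err ** 2)))
    ss_res = float(np.sum(err ** 2))
    ss_tot = float(np.sum((actual - actual.mean()) ** 2))
    return {
        "rmse": rmse,
        "rmse_pct": 100.0 * rmse / float(actual.mean()),  # assumed normalization
        "mape": 100.0 * float(np.mean(np.abs(err) / np.abs(actual))),
        "mbe": float(np.mean(err)),
        "r2": 1.0 - ss_res / ss_tot,
    }

# Illustrative weights in kg (not data from the study).
metrics = regression_metrics([20.0, 30.0, 40.0], [22.0, 29.0, 41.0])
print(metrics)
```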

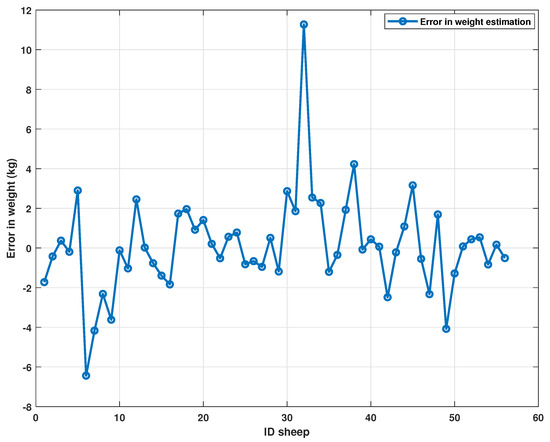

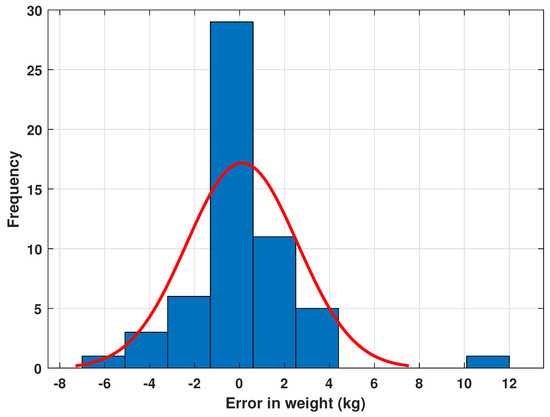

A comparison of the weight estimation of the sheep and the actual weight is displayed in Figure 8. The graph of the error is shown in Figure 9. Finally, Figure 10 depicts the Gaussian distribution and the frequency of the errors.

Figure 8.

Comparison between the estimated weight of sheep and the real weight.

Figure 9.

Errors in estimating the weight of sheep using neural networks trained with biometric parameters.

Figure 10.

Gaussian distribution of errors and frequency of appearance.

Although the statistical indicators show acceptable performance, the results must be compared with proven models.

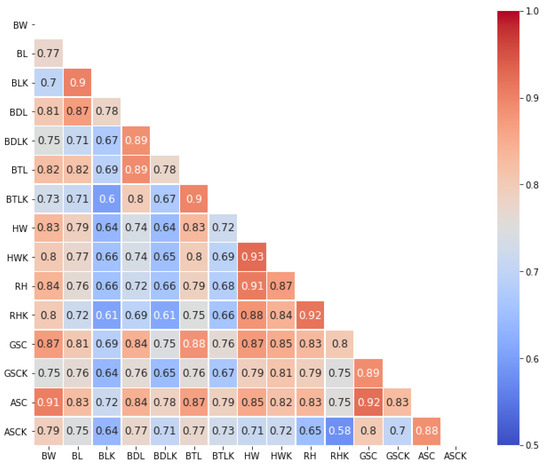

3.2. Correlation between Real Biometric Measurements and those Obtained with Digital Image Analysis

Figure 11 shows a correlation matrix summarizing the existing correlations between the real measurements and measurements obtained through digital image analysis. Pearson’s correlation coefficient was used to estimate the correlation between the measurements. The parameters with more correlation are closer to brown, and those with less correlation are closer to blue.
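A correlation matrix of this kind can be obtained with Pandas as sketched below. The column names follow the paper's convention of appending K to camera measurements, but the values are invented for illustration and are not data from the study.

```python
import pandas as pd

# Illustrative values only: manual (HW) and Kinect (HWK) withers height in cm,
# and body weight (BW) in kg.
data = pd.DataFrame({
    "HW": [60.0, 62.5, 65.0, 67.0, 70.0],
    "HWK": [61.0, 62.0, 66.0, 66.5, 71.0],
    "BW": [22.0, 24.5, 27.0, 29.5, 33.0],
})

# Pearson's correlation coefficient between every pair of columns.
corr = data.corr(method="pearson")
print(corr.round(2))
```

The entry `corr.loc["HW", "HWK"]` compares a manual measurement with its camera counterpart, while the `BW` row shows how strongly each measurement tracks body weight.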

Figure 11.

Correlation matrix between real Biometric Measurements and those obtained with artificial vision.

Considering only the correlation between each real measurement and its Kinect® counterpart, the maximum value is 0.93, found in HW, and the minimum value is 0.88, found in ASC. In addition, the correlation of each variable with body weight (BW) was observed; for example, the real rump height (RH) has a correlation of 0.84 with BW, and the rump height measured by the Kinect (RHK) has a correlation of 0.8.

3.3. Network Performance with Fewer Inputs and Comparison with Heuristic Models

Once the validity of the biometric parameters obtained by artificial vision was established, their correlation with the live weight of the sheep was examined, since measuring all seven parameters would be impractical and could introduce more errors. According to the correlation matrix in Figure 11, BTLK and BLK are the parameters with the highest correlation with BW. However, they are strongly correlated with each other, as are HW and RH. Therefore, BTLK and HWK were tested in neural networks with fewer inputs.

Considering the previous parameters and those used in the literature, a new group of networks was trained with fewer biometric parameters. All networks have the architecture of Net-L from Table 2. In addition, the Bayesian information criterion (BIC) and the Akaike information criterion (AIC) were calculated because both account for the number of parameters, which makes them essential for this comparison. The results are shown in Table 3.
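The way both criteria penalize model size can be illustrated with their common least-squares forms, which hold up to an additive constant under Gaussian errors. The source does not state which form was used, so this sketch is an assumption, as are the function name and the residual values.

```python
import math

def aic_bic_least_squares(residuals, n_params):
    """AIC and BIC for a least-squares fit (Gaussian errors, constants dropped)."""
    n = len(residuals)
    rss = sum(r * r for r in residuals)
    fit_term = n * math.log(rss / n)
    aic = fit_term + 2 * n_params
    bic = fit_term + n_params * math.log(n)
    return aic, bic

# Illustrative residuals in kg (not data from the study).
residuals = [0.5, -1.2, 0.8, -0.3, 1.1, -0.7, 0.2, -0.9, 0.4, 0.6]
aic_small, bic_small = aic_bic_least_squares(residuals, n_params=3)
aic_large, bic_large = aic_bic_least_squares(residuals, n_params=7)
print(aic_small, bic_small, aic_large, bic_large)
```

With identical residuals, the model with more parameters receives higher (worse) AIC and BIC values, which is the effect that favors the networks with fewer inputs.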

Table 3.

Statistical indicators for the evaluated models.

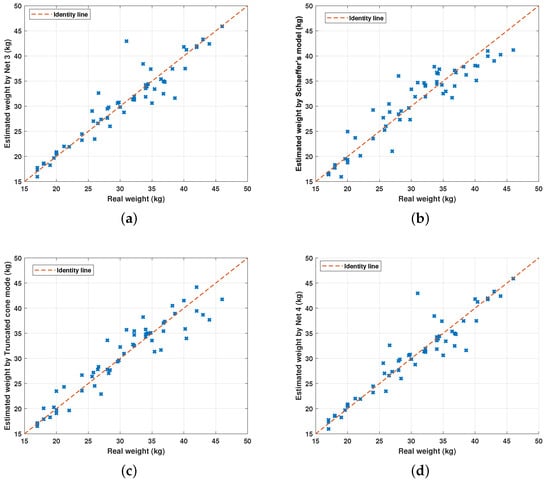

The best model (N 4) was compared with the typical models used in previous investigations to assess the performance of the artificial neural networks. Schaeffer's formula provides a good estimate of body weight and was therefore used as the first reference for calculating the weight (see Equation (2)). The model typically used for rapid measurement is Schaeffer's formula adapted to Pelibuey (Equation (3)), and the truncated cone model is the best model reported for this type of animal [26] (Equation (4)). In these equations, the complete circumferences were used, that is, twice the measured semicircumferences (2 × ASC and 2 × GSC). The results of the comparison are shown in Table 4.

Table 4.

Statistical indicators for the evaluated empirical models.

Finally, Figure 12 depicts the comparison between the weight estimation of the models shown in Table 3.

Figure 12.

Comparison between the weight estimation of the models shown in Table 3. (a) Comparison between the real weight of sheep and the estimated weight by N 3. (b) Comparison between the real weight and the estimated weight by Schaeffer’s adjusted model. (c) Comparison between the real weight and the estimated weight by the truncated cone model. (d) Comparison between the real weight and the estimated weight by the heuristic model.

4. Discussion

Accurate monitoring of performance and daily weight gain are the essential data needed to make appropriate management decisions. Furthermore, these data are necessary for building efficient production systems [27]. Knowing the daily weight gain would allow producers to adjust the diet according to the needs of each animal and also allow weight changes to be predicted and controlled. These actions allow better livestock management and can even help detect health problems among individuals [27].

This study used biometric parameters for body weight prediction, as suggested by [28]. Various techniques were applied to carry out the forecasts, from simple linear regressions to very complex ones based on artificial intelligence.

The biometric parameters estimated with the Kinect® sensor correlate well with the corresponding real measurements, as shown in Figure 11; the values obtained for Pearson's correlation coefficient range from 0.88 to 0.96. These results are encouraging for implementing this process on a large scale.

The BP-ANN was also run with the manually measured parameters to verify how the measurement error in the biometric data obtained by artificial vision affects the final results. A mean RMSE of 3.6% and an R² of 89.7% were obtained over ten runs. The neural network shows a performance similar to that reported for mathematical models in the literature. However, the reported models work with manual measurements, which suggests that the error of the heuristic models could increase when they use biometric parameters obtained through digital image analysis (DIA). Network 4, which uses the seven parameters, has a high BIC because of its high number of parameters, and using a model with many parameters is not advisable. Therefore, alternatives such as Network 3 can be implemented with greater certainty since it has only three parameters, just like the truncated cone model. The results show no significant improvement over the heuristic models. This may be due to the low number of samples and to the fact that the heuristic models do not have to contend with the error inherent to measurement through DIA. Even with these adversities, however, the network's performance falls within the range of the heuristic models. Therefore, repeating the experiment with a larger sample is suggested.

A variety of studies that employed DIA reported that the generated models had limited predictive capacity. This imperfect estimate can be related to potential errors, such as (a) errors on the part of the user, (b) position or selection errors during image analysis, (c) errors in size due to perspective and a poor relationship between the position of the camera and the object to be measured, and (d) poor calibration of the camera and the movement of the animals. This can cause variations in repeated measurements and different postures. Additionally, some studies suggest that the accuracy of weight estimation depends on the environment and the position in which the animal is measured [29,30]. These studies also question the reproducibility of the method. The main problem is that a controlled environment will be challenging to achieve. However, other variables are easier to control. It is necessary to take into account some technical difficulties to improve the precision and accuracy of the work. According to [31], it is essential to make periodic reviews of the quality and calibration of the sensors used for the analysis, and in this way have reliable measurements for the estimation of animal parameters. This is because the pixel values of an animal image significantly affect the estimate of the actual values. Furthermore, ref. [32] mentioned that digital image analysis has great potential for estimating animal weight and body size. However, multiple problems still need to be solved, such as multimedia data storage and analysis execution statistics.

Figure 8 shows an adequate prediction for the entire data set except for the sheep with ID 32. In this case, an estimated weight is offered with a 31% error in the sheep weight, which is an outlier in the data set. The error is caused in turn by a high RH measurement with an error of 40%. Multiple measurements can be made to detect the disparity between measurements and avoid this type of bias. In general, the errors oscillate mainly around zero with a standard deviation less than one, as shown in Figure 10. The data point for sheep with ID 32 is a datum with a standard deviation six times greater than most of the data.

A typical linear regression is limited by the high number of variables. The results in Table 2 show that an architecture with a relatively low number of neurons and two layers is sufficient to reach a mean percentage RMSE of 5.5%.

5. Conclusions

The weight estimator presented in this research shows an average Pearson's correlation coefficient of 0.90 for the data estimated with artificial vision. The proposed ANN calculates the weight with an RMSE of 0.055 and an R² of 0.88. These results support implementing the same process on a larger scale. A greater number of animals, or applying the procedure in different herds, would allow the variation of the proposed strategy to be analyzed in detail. In general terms, a better fit of the predictor is expected when the neural network is trained with a more extensive training data set. However, the present data set is smaller than would be required to apply a deep learning procedure. Additionally, a study is required that considers a wider age range and the correlation of age with weight.

Author Contributions

Conceptualization, J.R.-R., M.C.-F., O.R.-A. and E.C.-P.; methodology, A.J.C.-C., J.R.-R., O.R.-A. and M.C.-F.; writing—original draft preparation, A.J.C.-C., F.C.-L. and J.R.-R.; writing—review and editing, M.C.-F. and O.R.-A.; supervision, F.C.-L. and E.C.-P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted with permission from the Ethics Committee of the Academic Division of Agricultural Sciences of the Juarez Autonomous University of Tabasco (ID project PFI: UJAT-DACA-2015-IA-02).

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

This research was performed with the help of the Polytechnic University of Queretaro (UPQ), the Juárez Autonomous University of Tabasco, and the National Council of Humanities, Sciences and Technologies of Mexico (CONAHCYT).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Wishart, H.; Morgan-Davies, C.; Stott, A.; Wilson, R.; Waterhouse, T. Liveweight loss associated with handling and weighing of grazing sheep. Small Rumin. Res. 2017, 153, 163–170.

2. Pezzuolo, A.; Guarino, M.; Sartori, L.; González, L.A.; Marinello, F. On-barn pig weight estimation based on body measurements by a Kinect v1 depth camera. Comput. Electron. Agric. 2018, 148, 29–36.

3. Kongsro, J. Estimation of pig weight using a Microsoft Kinect prototype imaging system. Comput. Electron. Agric. 2014, 109, 32–35.

4. Zhang, A.L.N.; Wu, B.P.; Jiang, C.X.H.; Xuan, D.C.Z.; Ma, E.Y.H.; Zhang, F.Y.A. Development and validation of a visual image analysis for monitoring the body size of sheep. J. Appl. Anim. Res. 2018, 46, 1004–1015.

5. Huang, L.; Li, S.; Zhu, A.; Fan, X.; Zhang, C.; Wang, H. Non-contact body measurement for qinchuan cattle with LiDAR sensor. Sensors 2018, 18, 3014.

6. Le Cozler, Y.; Allain, C.; Caillot, A.; Delouard, J.; Delattre, L.; Luginbuhl, T.; Faverdin, P. High-precision scanning system for complete 3D cow body shape imaging and analysis of morphological traits. Comput. Electron. Agric. 2019, 157, 447–453.

7. Pezzuolo, A.; Guarino, M.; Sartori, L.; Marinello, F. A feasibility study on the use of a structured light depth-camera for three-dimensional body measurements of dairy cows in free-stall barns. Sensors 2018, 18, 673.

8. Lu, M.; Norton, T.; Youssef, A.; Radojkovic, N.; Fernández, A.P.; Berckmans, D. Extracting body surface dimensions from top-view images of pigs. Int. J. Agric. Biol. Eng. 2018, 11, 182–191.

9. Weber, V.A.d.M.; Weber, F.d.L.; Gomes, R.d.C.; Oliveira Junior, A.d.S.; Menezes, G.V.; Abreu, U.G.P.d.; Belete, N.A.d.S.; Pistori, H. Prediction of Girolando cattle weight by means of body measurements extracted from images. Rev. Bras. Zootec. 2020, 49, e20190110.

10. Shi, C.; Zhang, J.; Teng, G. Mobile measuring system based on LabVIEW for pig body components estimation in a large-scale farm. Comput. Electron. Agric. 2019, 156, 399–405.

11. Hao, H.; Jincheng, Y.; Ling, Y.; Gengyuan, C.; Sumin, Z.; Huan, Z. An improved PointNet++ point cloud segmentation model applied to automatic measurement method of pig body size. Comput. Electron. Agric. 2023, 205, 107560.

12. He, C.; Qiao, Y.; Mao, R.; Li, M.; Wang, M. Enhanced LiteHRNet based sheep weight estimation using RGB-D images. Comput. Electron. Agric. 2023, 206, 107667.

13. Nunes, L.; Ampatzidis, Y.; Costa, L.; Wallau, M. Horse foraging behavior detection using sound recognition techniques and artificial intelligence. Comput. Electron. Agric. 2021, 183, 106080.

14. Rodríguez Alvarez, J.; Arroqui, M.; Mangudo, P.; Toloza, J.; Jatip, D.; Rodriguez, J.M.; Teyseyre, A.; Sanz, C.; Zunino, A.; Machado, C.; et al. Estimating body condition score in dairy cows from depth images using convolutional neural networks, transfer learning and model ensembling techniques. Agronomy 2019, 9, 90.

15. Alvarez, J.R.; Arroqui, M.; Mangudo, P.; Toloza, J.; Jatip, D.; Rodríguez, J.M.; Teyseyre, A.; Sanz, C.; Zunino, A.; Machado, C.; et al. Body condition estimation on cows from depth images using Convolutional Neural Networks. Comput. Electron. Agric. 2018, 155, 12–22.

16. Cang, Y.; He, H.; Qiao, Y. An intelligent pig weights estimate method based on deep learning in sow stall environments. IEEE Access 2019, 7, 164867–164875.

17. Aguilar-Martínez, C.U.; Berruecos-Villalobos, J.M.; Espinoza-Gutiérrez, B.; Segura-Correa, J.C.; Valencia-Méndez, J.; Roldán-Roldán, A. Origen, historia y situacion actual de la oveja pelibuey en Mexico. Trop. Subtrop. Agroecosyst. 2017, 20, 429–439.

18. AFRC. Energy and Protein Requirements of Ruminants; Agricultural and Food Research Council; University of Wisconsin: Madison, WI, USA, 1993.

19. Van Rossum, G.; Drake, F.L., Jr. Python Reference Manual; Centrum voor Wiskunde en Informatica: Amsterdam, The Netherlands, 1995.

20. Bradski, G. The OpenCV Library. Dr. Dobb’s J. Softw. Tools 2000, 120, 122–125.

21. Oliphant, T.E. A Guide to NumPy; Trelgol Publishing: Austin, TX, USA, 2006; Volume 1.

22. McKinney, W. Data Structures for Statistical Computing in Python. In Proceedings of the 9th Python in Science Conference, Austin, TX, USA, 28–30 June 2010; van der Walt, S., Millman, J., Eds.; pp. 56–61.

23. Cai, Z.; Han, J.; Liu, L.; Shao, L. RGB-D datasets using microsoft kinect or similar sensors: A survey. Multimed. Tools Appl. 2017, 76, 4313–4355.

24. Pagliari, D.; Pinto, L. Calibration of Kinect for Xbox One and Comparison between the Two Generations of Microsoft Sensors. Sensors 2015, 15, 27569–27589.

25. Bautista-Díaz, E.; Salazar-Cuytun, R.; Chay-Canul, A.J.; Garcia Herrera, R.A.; Piñeiro-Vázquez, Á.T.; Magaña Monforte, J.G.; Tedeschi, L.O.; Cruz-Hernández, A.; Gómez-Vázquez, A. Determination of carcass traits in Pelibuey ewes using biometric measurements. Small Rumin. Res. 2017, 147, 115–119.

26. Montoya-Santiyanes, L.A.; Chay-Canul, A.J.; Camacho-Pérez, E.; Rodríguez-Abreo, O. A novel model for estimating the body weight of Pelibuey sheep through Gray Wolf Optimizer algorithm. J. Appl. Anim. Res. 2022, 50, 635–642.

27. Kashiha, M.; Bahr, C.; Ott, S.; Moons, C.P.; Niewold, T.A.; Ödberg, F.O.; Berckmans, D. Automatic weight estimation of individual pigs using image analysis. Comput. Electron. Agric. 2014, 107, 38–44.

28. Bassano, B.; Bergero, D.; Peracino, A. Accuracy of body weight prediction in Alpine ibex (Capra ibex, L. 1758) using morphometry. J. Anim. Physiol. Anim. Nutr. 2003, 87, 79–85.

29. Wang, Y.; Yang, W.; Winter, P.; Walker, L. Walk-through weighing of pigs using machine vision and an artificial neural network. Biosyst. Eng. 2008, 100, 117–125.

30. Wongsriworaphon, A.; Arnonkijpanich, B.; Pathumnakul, S. An approach based on digital image analysis to estimate the live weights of pigs in farm environments. Comput. Electron. Agric. 2015, 115, 26–33.

31. Lasfeto, D.B.; Letik, M. A measuring weight model of Timor’s cattle based on image. Int. J. Eng. Technol. 2017, 9, 677–688.

32. Morota, G.; Ventura, R.V.; Silva, F.F.; Koyama, M.; Fernando, S.C. Big data analytics and precision animal agriculture symposium: Machine learning and data mining advance predictive big data analysis in precision animal agriculture. J. Anim. Sci. 2018, 96, 1540–1550.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).