1. Introduction

Blood pressure (BP) is widely used to support health monitoring and diagnosis in healthcare settings. However, inaccuracies in BP measurement can result in misjudgments, potentially leading to severe consequences [1]. The clinical gold standard for BP measurement is arterial cannulation [2]; however, arterial cannulation is invasive and time-consuming and can only be performed by skilled personnel. It is also associated with ischemia, lesions of nerves or vessels, embolism, and other complications [3]. In routine practice, BP is measured non-invasively [4], which introduces measurement inaccuracies. Even slight measurement inaccuracies can result in misclassifying millions of individuals [5]. Hence, precise measurement of BP is of significant importance to public health. Underestimating true BP by 5 mmHg or less can have significant clinical consequences: several studies have inferred that more than 20 million Americans would be incorrectly labeled as pre-hypertensive when, in fact, they have hypertension. Untreated hypertension can lead to a 25% increased risk of fatal strokes and fatal myocardial infarctions [1]. Conversely, if true BP is overestimated by 5 mmHg, nearly 30 million Americans may receive inappropriate treatment with antihypertensive medications. This could result in exposure to potential side effects of the drugs, psychological distress due to misdiagnosis, and unnecessary financial liability [5]. In healthcare domains such as intensive care, accurate BP measurement is even more crucial. As a result, regulating BP measurement devices is a critical matter, and suitable processes must be used in clinical investigations to validate BP devices.

National regulators have made significant efforts towards global harmonization of the standards for medical devices. When designing a BP measurement device, manufacturers must adhere to standardized protocols, ensuring that the device's inaccuracy falls within an acceptable range, typically expressed as mean error ± standard deviation of BP errors for non-invasive techniques. Even when a device is within acceptable limits, continuous efforts are made to improve accuracy using improved methods that add parameters associated with blood pressure [6, 7, 8]. This pursuit aims to provide healthcare professionals with more reliable BP readings, reducing the likelihood of errors and supporting informed decision-making. The International Organization for Standardization (ISO), established in 1947, defines standards that are accepted worldwide. It comprises representatives from various national standards organizations. The ISO 81060-2:2018 standard defines the criteria for the clinical investigation of automated, non-invasive sphygmomanometers [9]; it is currently approved for use and is recognized in whole or in part by many national regulators. It supersedes region-specific standards such as EN 1060-4:2004 [10] and has been adopted in law, in contrast to validation protocols such as those recommended by the British Hypertension Society [11] and the European Society of Hypertension [12].

ISO 81060-2:2018 stipulates criteria for determining the acceptable accuracy of sphygmomanometers. These criteria originated from the initial work of a committee of the US Association for the Advancement of Medical Instrumentation (AAMI) in creating the American standard for manual, electronic, or automated sphygmomanometers, known as SP10 [13]. The standard also specifies safety, labeling, and performance requirements designed to ensure the safety and effectiveness of the device. ISO 81060-2, like SP10, mandates a minimum sample size (N) of 85 participants for evaluating BP device inaccuracy [9]. In addition to N ≥ 85, the standard requires the BP errors to be within −10 mmHg to 10 mmHg, also known as the tolerable error limit, and the estimated probability of tolerable error to be at least 85%. In practice, the accuracy requirements are difficult to achieve, and the process requirements are costly. Manufacturers attempt to adhere to this standard; however, only a small fraction of them can do so [14]. One study reports that less than 20% of the devices accessible today conform to an established guideline [2].
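To make the tolerable-error criterion concrete, the following minimal Python sketch computes the probability that a single measurement error falls within ±10 mmHg. It assumes, purely for illustration, that errors are normally distributed with a given mean and SD; the function names are hypothetical and are not taken from the standard or from any package.

```python
import math


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def tolerable_error_probability(mean: float, sd: float, limit: float = 10.0) -> float:
    """Probability that one error lies in [-limit, +limit] mmHg.

    Assumption (illustrative): errors ~ Normal(mean, sd^2).
    """
    if sd <= 0:
        raise ValueError("sd must be positive")
    upper = (limit - mean) / sd
    lower = (-limit - mean) / sd
    return normal_cdf(upper) - normal_cdf(lower)
```

Under this assumption, `tolerable_error_probability(0.0, 6.9)` is slightly above 0.85, whereas shifting the mean error to 5 mmHg with the same SD brings the probability clearly below that threshold.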

While compliance with this standard is appropriate for devices that are to be marketed, there are purposes other than the regulation of medical devices for which studies involving fewer participants can still yield useful information. For instance, early evaluation of experimental devices would benefit from an earlier checkpoint, as it is often difficult for clinicians to recruit 85 participants [15, 16, 17]. To our knowledge, there is currently no official method for evaluating studies with fewer participants. As a result, various research works in this field have applied the pass/fail criteria of the standard in a potentially incorrect way, apparently without recognizing the difference between their research methods and those assumed by the standard. This paper aims to inform researchers and BP device manufacturers about the potential effects of employing different sample sizes for the validation of a BP measurement device.

We also offer recommendations to adjust the appropriate acceptance range (upper limit of acceptable standard deviation for a certain mean error) required for any study to adhere to criteria similar to the SP10 requirements. In addition to the different acceptance limits for different sample sizes, this paper provides a brief comparison of previous studies that investigated novel BP measurement methods with different sample sizes, and also assesses their adherence to the current standard.

6. Discussion and Conclusions

International standards such as ISO 81060-2 serve an important purpose in providing clarity to consumers, manufacturers, and regulators that medical devices (at least with respect to the scope of the standard) are safe and effective. To this end, standards provide clear pass/fail criteria, which reflect the level of a device's performance and acceptability. In this regard, the pass/fail criteria set out in ISO 81060-2:2018, inherited from SP10, are broadly recognized and represent an implicit definition of what constitutes acceptable errors in blood pressure measurement. In SP10, the mathematical translation of this definition into pass/fail criteria uses an approximate approach that results in formulas for confidence intervals that are functions only of the sample size, disregarding the sampling error in the estimated probability of tolerable error. In this work, we have proposed a method grounded in statistical theory to determine confidence intervals. Using the sampling distribution of the proportion is a more accurate statistical approach for studying random errors, since it additionally takes the mean and the standard deviation of the measurement errors into consideration.
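The role of the mean, the SD, and the sample size can be illustrated with a short Python sketch. It is not the method implemented in this paper: it assumes normally distributed errors and uses the textbook normal approximation to the sampling distribution of a sample proportion. The acceptance rule (`threshold`) and all function names are hypothetical, chosen only for illustration.

```python
import math


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def tolerable_error_probability(mean: float, sd: float, limit: float = 10.0) -> float:
    """P(|error| <= limit), assuming errors ~ Normal(mean, sd^2)."""
    return normal_cdf((limit - mean) / sd) - normal_cdf((-limit - mean) / sd)


def proportion_standard_error(mean: float, sd: float, n: int, limit: float = 10.0) -> float:
    """Standard error of the sample proportion of tolerable errors for n subjects."""
    p = tolerable_error_probability(mean, sd, limit)
    return math.sqrt(p * (1.0 - p) / n)


def prob_sample_proportion_at_least(
    threshold: float, mean: float, sd: float, n: int, limit: float = 10.0
) -> float:
    """Approximate P(sample proportion >= threshold) via the normal approximation.

    `threshold` is a hypothetical acceptance rule, not a value from the standard.
    """
    p = tolerable_error_probability(mean, sd, limit)
    se = proportion_standard_error(mean, sd, n, limit)
    if se == 0.0:
        # Degenerate case: every error is (numerically) tolerable or none is.
        return 1.0 if p >= threshold else 0.0
    return 1.0 - normal_cdf((threshold - p) / se)
```

In this sketch the standard error depends on the mean and SD through the tolerable-error probability, and it shrinks as the sample size grows, so the same reported mean and SD carry less certainty when they come from a smaller study.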

By detailing the expected changes in device acceptability, the paper contributes valuable knowledge to the existing research in this field. This work also provides an adjusted acceptance limit of BP errors based on the same definition of acceptable performance underlying the SP10 standard for studies that use a sample size of less than 85. The adjusted limits are expected to be useful in the initial validation of BP technologies. Device manufacturers can use these adjusted acceptance limits to estimate compliance with the standards using smaller sample sizes, reducing the cost of development and/or allowing faster iterative development.

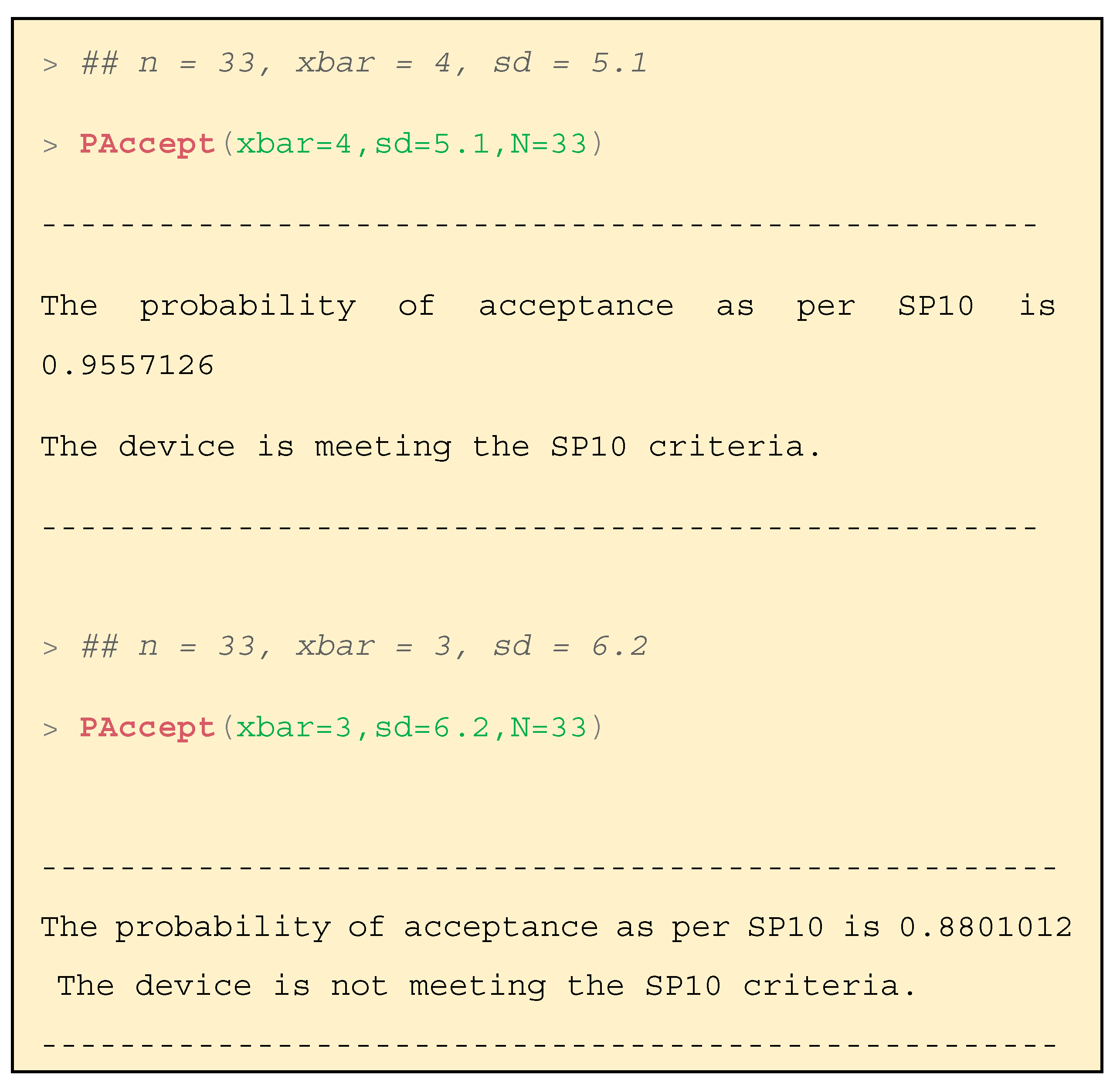

An important use case for this research is the ability to compare reported results, for example, when several technologies, methods, or algorithms are used for estimating BP and the individual reports use different sample sizes, as shown in Table 5. In each case, the reported device inaccuracy (mean and SD) appears to be within the SP10 criteria. However, the sample sizes are significantly smaller. Using the methods presented here, calculating the probability of acceptance allows a quantitative comparison both within the existing literature and against the SP10 criteria. For most of the presented studies, it is apparent that the reported results do not reach a level of confidence equivalent to SP10. The probability of acceptance also allows direct comparison of results with different means and standard deviations, for example, a study with a high mean and low SD [15] and a study with a much lower mean and higher SD [26]. Many hypertension societies now offer clinicians a comprehensive list of blood pressure measurement devices, facilitating informed decision-making for clinical trials. Currently, such a list can only indicate whether a device is recommended or not based on its performance, using the acceptance range specified in the standards [28]. With the proposed method, clinicians can more appropriately compare the reported inaccuracies across varying sample sizes when evaluating devices for their specific research needs.

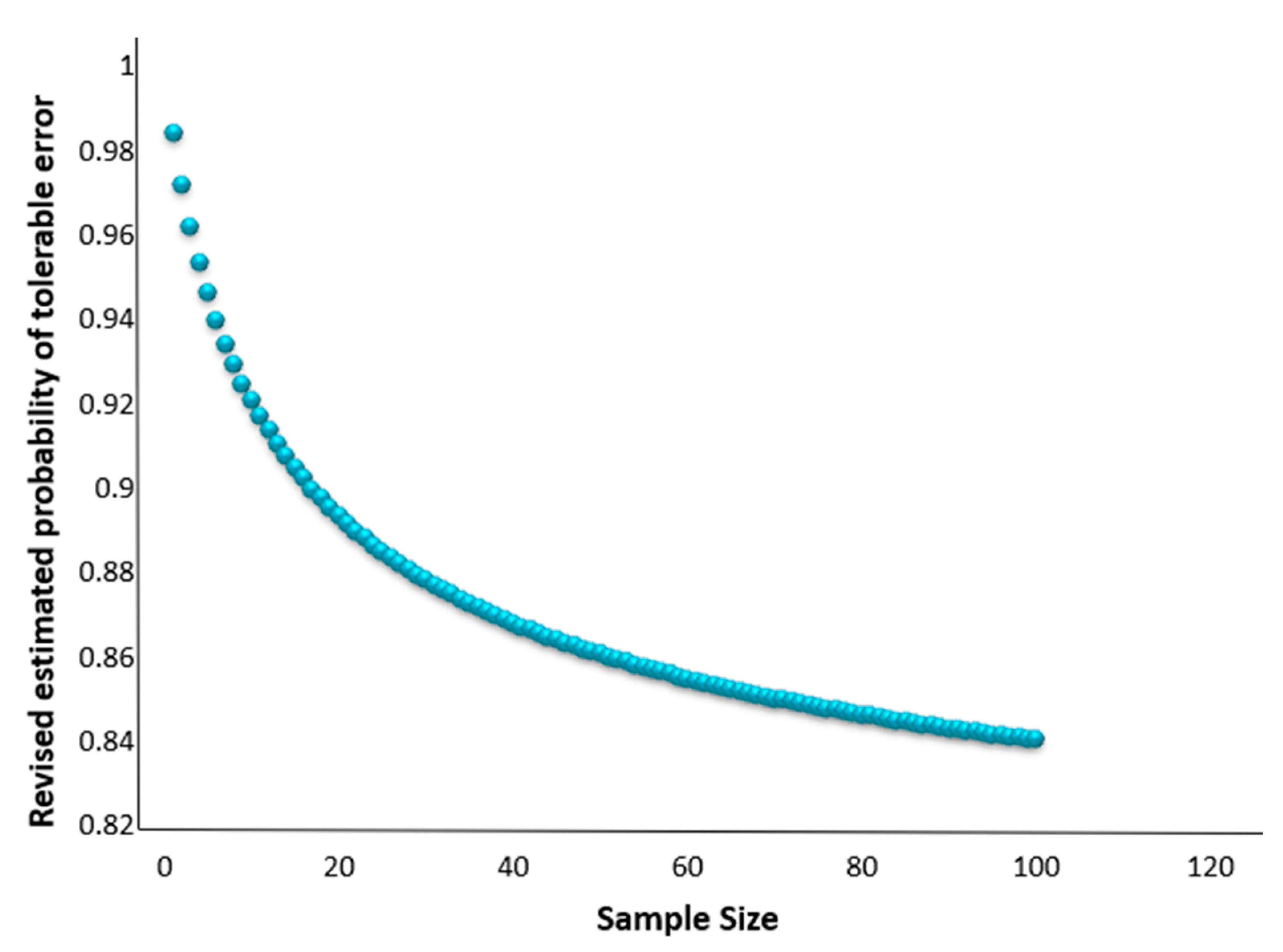

We demonstrate that no more than 70 samples are required to maintain the 85% estimated probability of tolerable error, as opposed to the N = 85 stated in the standards, as illustrated in Figure 4. There are cases where being able to correctly interpret the results of a study with a smaller sample would be beneficial, for example, with a population subset consisting only of infants. Our framework still makes the statistical assumption of reasonably large sample sizes, ideally N ≥ 39. Although the proposed method is optimized for at least 39 samples, it is instructive to see how the framework performs for fewer samples. For this, we performed a simulation study in which we varied the mean and the standard deviation of the error distribution. We experimented with high variance, but without exceeding the SP10 mandate for the standard deviation, that is, SD ≤ 6.9 mmHg. The approximations obtained with the proposed framework were closer to the simulated values than those obtained with the existing framework. Table 2 presents one such result, while additional scenarios are elaborated in detail in Appendix D. The results become more distant for both frameworks as the sample size decreases. This occurs because, with smaller sample sizes, the sampling distribution is less well approximated by a normal distribution. These results further confirm that caution should be applied when using smaller sample sizes, particularly when N < 39. Further research is required to extend the proposed framework to fewer than 39 samples.
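The effect of sample size on the observed proportion of tolerable errors can be explored with a small Monte Carlo sketch such as the one below. It is not the simulation study reported in this paper: it simply draws normally distributed errors (an assumption) and records, for each simulated study, the fraction of errors within ±10 mmHg. All names, the number of trials, and the seed are hypothetical choices.

```python
import random
import statistics


def simulate_tolerable_fractions(mean, sd, n, trials=2000, limit=10.0, seed=0):
    """Simulate `trials` studies of size n and return the fraction of
    errors within +/- limit mmHg observed in each study.

    Assumption (illustrative): errors ~ Normal(mean, sd^2).
    """
    rng = random.Random(seed)  # local generator, so results are reproducible
    fractions = []
    for _ in range(trials):
        within = sum(1 for _ in range(n) if abs(rng.gauss(mean, sd)) <= limit)
        fractions.append(within / n)
    return fractions


def spread_by_sample_size(mean, sd, sizes, **kwargs):
    """Standard deviation of the simulated fractions for each sample size."""
    return {
        n: statistics.pstdev(simulate_tolerable_fractions(mean, sd, n, **kwargs))
        for n in sizes
    }
```

Calling, for instance, `spread_by_sample_size(0.0, 6.9, [20, 39, 85])` returns one spread value per sample size; the spread of the observed fraction is expected to widen as N decreases, which is one reason small studies call for caution.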

This study presents a formal statistical evaluation of a device's conformity with international standards, primarily through the evaluation of the probability of acceptance as a function of the mean error, the standard deviation of the error, and the sample size. When evaluating a device's inaccuracy, international standards also mandate that the investigation follow guidelines regarding, for example, the selection of the cuff size and the provision of a resting period for the subject prior to measurement. Any deviation from these protocols has the potential to introduce bias, though the specific impact remains inconclusive, as most studies do not explicitly state whether these protocols were adhered to. Additionally, to ensure enough samples in varied categories, including high and low blood pressure groups, ranges, and distributions of arm sizes, a minimum sample size of N = 85 is necessary. In such cases, a smaller sample will not be representative of the population.

With significant modifications to the SP10 standard, we introduce a mathematical framework that accommodates different underlying definitions of acceptable error and confidence. This is relevant to those developing new BP measurement technologies that are often tested initially in smaller samples, such as cuffless, wearable BP measurement devices that take continuous readings to capture trends over a long period of time. These technologies help in assessing real-time fluctuations, which might be useful for clinical trials that aim to gather longitudinal data on blood pressure trends and responses to interventions.

Finally, to assist in the calculations presented, this paper also introduces the companion R package “bpAcc”, an implementation of this methodology with functions that directly compute the acceptance limit without requiring the user to deal with the mathematical complexities. At present, our framework supports normally distributed errors, as specified by the argument “distribution” of “PAccept()” and “AcceptR()”. Future work includes upgrading both functions to handle error distributions other than the normal. Initial steps have been taken in this direction, with both functions currently being trained and tested using the one-parameter (degrees of freedom) Student-t distribution. We are focused on selecting real-valued distributions with practical benefits for clinicians and manufacturers, rather than on a theory-based selection of choices. Essentially, more data are useful, but experiments are often expensive. We aim to provide choices spanning various sample sizes by providing the statistical infrastructure to maximize the user's ability to identify faulty devices (e.g., Type I error) for BP measurement. Over time, both functions will be enhanced with further arguments to handle, e.g., criteria other than “SP10:2006”.