Abstract

Image-based colorimetry has been gaining relevance owing to the wide availability of smartphones with image sensors and their increasing computational power. Low cost, portable designs with user-friendly interfaces, and compatibility with data acquisition and processing make such systems very attractive for interdisciplinary applications in fields ranging from art, the fashion industry, food science, medical science and oriental medicine to agriculture, geology, chemistry, biology, material science and environmental engineering. This work describes the image-based quantification of color and its machine vision and offline applications in interdisciplinary fields using specifically developed image analysis software. Color information is extracted from areas ranging from a single pixel to predetermined sizes/shapes, including customized regions of interest (ROIs), in various digital images of dyed T-shirts, tongues and assays. The corresponding RGB, HSV, CIELAB, Munsell color and hexadecimal color codes, from a single pixel to ROIs, are extracted for machine vision and offline applications in various fields, and histograms and statistical analyses of these colors are demonstrated. Reliable image-based quantification of color is proposed for a wide range of potential applications, and its validity is verified with color quantification examples from several fields. The image-based quantification of color proposed in this study can significantly improve the objectivity of color-based diagnosis, judgment and control.

1. Introduction

Color is an important factor in our perception of substances. While variations in luminance can be caused both by changes in substances and by changes in illumination, variations in color are highly diagnostic of changes in the physical and/or chemical properties of substances [1,2,3]. The color of a substance depends on the wavelengths of light it absorbs: the light reaching the eye lacks the absorbed part of the spectrum, so the perceived color corresponds to the complementary (subtractive) spectrum. Which wavelengths are absorbed depends on the chemical nature of the substance. Color matching is the most basic and important task in color vision, and it forms the basis of colorimetry for a wide range of applications [1,2,3].

In early color-matching experiments, homogeneously colored stimuli were used [1]. These experiments were intended to assess the sensitivity of the human visual system to different colors and thereby to understand color perception at the level of the photoreceptors. However, very few surfaces in our visual environment are precisely uniform or identical in color. Moreover, these surfaces are made of a wide range of substances and have a wide range of geometries [4]. Because different substances differ in their reflection and scattering properties, they affect the color perceived by the human eye; as a result, we perceive variations in the reflected light spectra (i.e., color).

Colorimetric information, including brightness, contrast and uniformity, is frequently used to characterize and describe the properties of objects and substances of interest, both in daily life and in specialized settings. Its applications range widely, from art, the fashion industry, food science, medical science and oriental medicine to agriculture, geology, chemistry, biology, material science, environmental engineering and beyond [5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20]. For ease of access and portability, smartphone-based spectrometers and colorimeters have been studied worldwide [21,22,23,24,25,26,27,28,29,30]. The ability to post-process acquired images is very important for developing optimized algorithms for colorimetric applications. Once an optimized algorithm has been developed, it can be integrated with image-capturing devices for machine vision applications.

We have previously reported newly developed image analysis software (PicMan, WaferMasters, Inc., Dublin, CA, USA) with image-capturing and video-recording functions, together with application examples in various fields, including engineering, cultural heritage evaluation/conservation, archaeology, and chemical, biological and medical applications [6,31,32,33,34,35,36,37,38]. The software has also been used by third-party research groups, who demonstrated its capability for quantitative color analysis in studies of color pigments, painting cultural properties, material science and cell biology [7,8,39,40,41]. For machine vision applications, video clip analysis with the software, using commercially available general-purpose image sensors such as digital cameras, USB cameras, smart phones and camcorders, has been demonstrated [7,31,38].

In this paper, the image-based quantification of color and its machine vision and offline applications in interdisciplinary fields using specifically developed image analysis software (PicMan) are introduced with examples. The colorimetric information of individual pixels and regions of interest (ROIs) in acquired images was extracted as RGB (red, green and blue) intensities, HSV (hue, saturation and value) values and CIE L*a*b* values. The conversion of RGB intensity values into equivalent Munsell color indices and hexadecimal color codes is also demonstrated, with a view to expanding machine vision and offline colorimetric applications.

2. Experimental

To demonstrate the image-based quantification of color, four types of images were used. Color information was extracted from areas ranging from a single pixel to predetermined sizes/shapes, including customized regions of interest (ROIs), in digital images of dyed T-shirts, assays, color charts and tongues obtained from various sources, including the internet. Average colors and histograms of color components (RGB, HSV, CIE L*a*b*, Munsell color and hexadecimal color) were extracted for the ROIs. Colorimetric analysis was conducted using newly developed image analysis software (PicMan from WaferMasters, Inc., Dublin, CA, USA) [6,7,8,31,32,33,34,35,36,37,38,39,40,41]. For machine vision applications, PicMan can be connected to image sensor devices, including USB cameras and digital microscopes, to acquire snapshot photographs and video images.

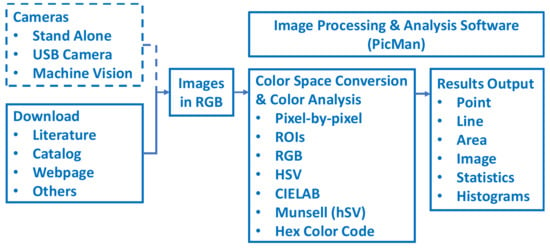

For the first set of images, twenty-nine dyed T-shirt images downloaded from an internet shopping site [42] were selected for the color analysis demonstration. For the second set, an image of methylene blue (MB) solutions with concentrations ranging from 0.1 to 10 ppm was selected from a publicly available literature source [23]. For the third set, a commercially available AquaChek Color Chart, which indicates the chemical levels in hot tub water, was selected as a quantifiable set of colors [43]. Total hardness, total chlorine/total bromine, free chlorine, pH, total alkalinity and cyanuric acid levels can be read from the AquaChek test strip color chart. As the last example, a tongue diagnosis chart [44] used in traditional oriental medicine (traditional Chinese medicine (TCM, 中医, Zhōngyī), traditional Korean medicine (한방, 韓方, Hanbang) and traditional Chinese medicine in Japan (漢方, Kampo)) was selected. A block diagram covering image acquisition, color space conversion, image analysis and results output is illustrated in Figure 1. A customized system can be configured with any imaging device and a PC running the image analysis software, PicMan.

Figure 1.

Block diagram covering image acquisition, color space conversion, image analysis and results output.

Quantitative colorimetric information on individual pixels, lines and regions of interest (ROIs) in digital images from various sources was extracted to demonstrate the feasibility of offline colorimetric analysis with traceability for further statistical analysis. Examples of machine vision applications of colorimetric analysis, monitoring and quality control are provided towards the end of this paper. Once the technique has proven effective, the hardware configuration, illumination, calibration and optical properties of the target objects should be carefully considered and optimized to obtain reliable results.

3. Results

The colors of areas of interest, ranging from a single pixel to predetermined sizes/shapes, in four different types of images were quantified using the newly developed image analysis software, PicMan. The extracted color information was exported in commonly used color formats (RGB, HSV, CIE L*a*b*, Munsell color and hexadecimal codes) to assist in image analysis and quality monitoring/control. The RGB color system is one of many color-defining systems [45,46,47]. Image display on monitor screens and projection devices uses the RGB color system, and every displayable color can be uniquely identified by an RGB-based hexadecimal color code. A 24-bit color signal (8 bits per channel for the red, green and blue channels) can display $2^{24}$ (= 16,777,216) colors [6,7,8]. Histograms and statistical analysis of the extracted color information help us find correlations between color and the input variables/conditions of the objects of interest at the time of image acquisition. Detailed examples using four types of images from different disciplines are described in the following subsections.
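To make the hexadecimal notation concrete, here is a minimal Python sketch (the function names are illustrative and not part of PicMan) that packs an 8-bit RGB triplet into an rrggbb code, parses it back, and confirms the $2^{24}$ count of 24-bit colors.

```python
def rgb_to_hex(r: int, g: int, b: int) -> str:
    """Format 8-bit R, G, B intensities (0-255) as an 'rrggbb' hex color code."""
    for v in (r, g, b):
        if not 0 <= v <= 255:
            raise ValueError(f"channel value {v} is outside 0-255")
    return f"{r:02x}{g:02x}{b:02x}"


def hex_to_rgb(code: str) -> tuple:
    """Parse an 'rrggbb' (or '#rrggbb') code back into an (R, G, B) tuple."""
    code = code.lstrip("#")
    if len(code) != 6:
        raise ValueError("expected six hex digits")
    return tuple(int(code[i:i + 2], 16) for i in (0, 2, 4))


# 8 bits per channel x 3 channels = 24 bits, i.e. 2**24 distinct color codes
N_COLORS_24BIT = 2 ** 24

print(rgb_to_hex(255, 128, 0))   # ff8000
print(N_COLORS_24BIT)            # 16777216
```

Because the mapping is one-to-one, a hex code and an RGB triplet carry exactly the same information; the code is simply a compact label.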

3.1. Dyed T-Shirts

Twenty-nine tie-dye T-shirt images selected for the color analysis demonstration are shown in Figure 2. The term tie-dye describes a number of resist dyeing techniques. The process typically consists of a few ‘resist’ preparation steps, such as folding, twisting, pleating or crumpling the fabric or garment, followed by binding with string or rubber bands. Dye or dyes are then applied to the tied fabric. These preparation steps are called ‘resists’ because they partially or completely prevent the applied dye(s) from coloring the fabric. Tie-dye can be used to create a wide variety of designs on fabric; standard and popular patterns include the spiral, the peace sign and the diamond, and a “marble effect” can also be created in this manner. The resulting multicolored patterns make tie-dyed T-shirts an ideal test case for demonstrating color analysis.

Figure 2.

Twenty-nine dyed T-shirt images (a–ac) are shown for color analysis demonstration.

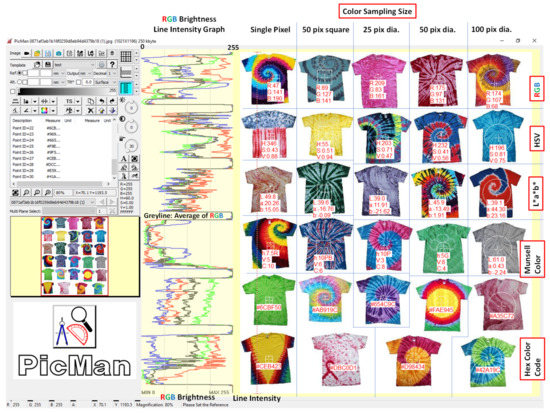

Figure 3 shows the twenty-nine tie-dye T-shirt images opened in the image processing software, PicMan, for color analysis. The colored line intensity graph shows the brightness of the RGB (red, green and blue) channels, in 256 levels (0–255), for the pixels along the red dotted vertical line. The grey line intensity graph shows the weighted average brightness of the RGB channels of the same pixels. The weighted average brightness was calculated as (R + 2G + B)/4, taking into account the luminosity curve and the color filter array pattern used in typical CMOS image sensors [48]: in the Bayer color filter mosaic, each two-by-two sub-mosaic contains one red, two green and one blue filter, each filter covering one pixel sensor, reflecting the human eye's greater sensitivity to green [45]. Color information of various ROIs, from single pixels to squares and circles of different sizes, was quantified in various color-describing formats, such as RGB, HSV, L*a*b*, Munsell color and hexadecimal codes. The color information of any shape and size, for example, an individual tie-dye T-shirt or the entire image of twenty-nine T-shirts, can be analyzed and exported either pixel by pixel or as an average color in the color format of choice for further analysis, color monitoring and quality control.
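The weighted brightness used in the line graphs is easy to reproduce. The sketch below is an assumption-level illustration with NumPy, not PicMan's code; it applies (R + 2G + B)/4 to any array whose last axis holds 8-bit R, G, B values.

```python
import numpy as np


def weighted_brightness(rgb) -> np.ndarray:
    """Per-pixel brightness (R + 2G + B) / 4 for an (..., 3) array of 8-bit RGB values.

    Green gets twice the weight, matching the two green filters in each 2x2
    Bayer cell (one red, two green, one blue).
    """
    rgb = np.asarray(rgb, dtype=np.float64)  # float avoids uint8 overflow in the sum
    return (rgb[..., 0] + 2.0 * rgb[..., 1] + rgb[..., 2]) / 4.0


pixels = np.array([[255, 255, 255], [0, 255, 0], [100, 50, 200]], dtype=np.uint8)
result = weighted_brightness(pixels)   # values: 255.0, 127.5, 100.0
```

Converting to floating point before summing matters: adding uint8 arrays directly would wrap around above 255.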

Figure 3.

Examples of color information extraction of individual pixels and regions of interest (ROIs) with different sizes and shapes from twenty-nine dyed T-shirt images.

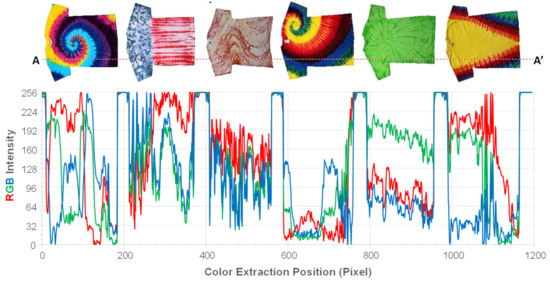

Examples of color information extraction and the RGB intensity line graph across the line A-A’ on six dyed T-shirt images (far left column of Figure 2) are shown in Figure 4. The RGB intensity line graph in Figure 4 was plotted using the exported pixel-by-pixel RGB intensity values across the line A-A’.
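A line such as A-A’ can be sampled directly from the pixel array. This NumPy sketch assumes a vertical line, an image already loaded as an (H, W, 3) uint8 RGB array, and hypothetical coordinates; it returns the R, G, B and weighted-brightness values that a line intensity graph would plot.

```python
import numpy as np


def vertical_line_profile(image: np.ndarray, x: int, y_start: int, y_end: int) -> np.ndarray:
    """Pixel-by-pixel values along a vertical line such as A-A'.

    image: (H, W, 3) uint8 RGB array; x: column index; rows y_start..y_end inclusive.
    Returns an (N, 4) float array of R, G, B and weighted brightness (R + 2G + B)/4.
    """
    h, w = image.shape[:2]
    if not (0 <= x < w and 0 <= y_start <= y_end < h):
        raise IndexError("line lies outside the image")
    rgb = image[y_start:y_end + 1, x, :].astype(np.float64)   # (N, 3)
    brightness = (rgb[:, 0] + 2 * rgb[:, 1] + rgb[:, 2]) / 4
    return np.column_stack([rgb, brightness])


# Synthetic 5 x 4 test image whose third column is orange
img = np.zeros((5, 4, 3), dtype=np.uint8)
img[:, 2] = [200, 100, 0]
profile = vertical_line_profile(img, x=2, y_start=1, y_end=3)   # 3 rows x 4 values
```

The resulting array could be written to a CSV file (for example with `numpy.savetxt`) for plotting in other tools.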

Figure 4.

Examples of color information extraction of individual pixels across the line A-A’ on six dyed T-shirt images in the left column of Figure 2.

Table 1 summarizes the color analysis results for the individual T-shirts. The measured area of each T-shirt in the image was counted in pixels. The weighted average RGB intensity and its standard deviation, as well as the average intensity and standard deviation of the red, green and blue channels, were calculated over the pixels within each of the twenty-nine T-shirts. The average HSV values, average CIELAB L*a*b* values and average Munsell color values were calculated from the RGB values of all pixels within each T-shirt image. In addition, the average color was expressed as a hexadecimal code for easy understanding. The image analysis software can also calculate width, height, circumference, area/circumference ratio, circularity and many other parameters to characterize the individual T-shirts (or ROIs of any shape and size). Together, these numbers uniquely characterize the size, shape and color of each T-shirt. They can be used to find correlations with statistics on human reactions, impressions, preferences, etc., for human interface algorithm development, machine learning (ML), artificial intelligence (AI), psycho-visual modeling, human visual system modeling, product design and so on.
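The per-T-shirt statistics in Table 1 amount to simple reductions over the pixels inside each ROI. The sketch below assumes the ROI is already available as a boolean mask (segmenting the T-shirt is a separate step) and uses population standard deviations (ddof=0), which the text does not specify; it is illustrative and not PicMan's implementation.

```python
import numpy as np


def roi_color_stats(image: np.ndarray, mask: np.ndarray) -> dict:
    """Summarize the color of the pixels selected by a boolean mask.

    image: (H, W, 3) uint8 RGB array; mask: (H, W) bool array marking the ROI.
    """
    pixels = image[mask].astype(np.float64)          # (N, 3); N = ROI area in pixels
    if pixels.size == 0:
        raise ValueError("ROI mask selects no pixels")
    weighted = (pixels[:, 0] + 2 * pixels[:, 1] + pixels[:, 2]) / 4
    mean_rgb = pixels.mean(axis=0)
    r, g, b = (int(v) for v in np.rint(mean_rgb))    # round the mean to 8-bit levels
    return {
        "area_px": int(mask.sum()),
        "mean_rgb": mean_rgb,
        "std_rgb": pixels.std(axis=0),               # population std (ddof=0), assumed
        "mean_weighted": float(weighted.mean()),
        "std_weighted": float(weighted.std()),
        "mean_hex": f"{r:02x}{g:02x}{b:02x}",
    }


# Synthetic ROI: the top half of a 4 x 4 image, half red and half blue
img = np.zeros((4, 4, 3), dtype=np.uint8)
img[:2, :2] = [255, 0, 0]
img[:2, 2:] = [0, 0, 255]
mask = np.zeros((4, 4), dtype=bool)
mask[:2, :] = True
stats = roi_color_stats(img, mask)
```

Note that this red/blue ROI has a weighted-brightness standard deviation of zero while its R and B channels vary strongly, which is one reason Table 1 reports the channels separately.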

Table 1.

Statistical summary of color information on twenty-nine dyed T-shirts (mean and standard deviation of brightness and RGB values, as well as average HSV (hue, saturation, value) values, CIE L*a*b* values, Munsell color values and hex color codes for the average color of the individual dyed T-shirts). The means and standard deviations of the red, green and blue channel intensities are shown in their representative colors.

The RGB color model is an additive color model in which the red, green and blue primary colors of light are added together to reproduce a broad range of colors. For 24-bit colors, 8-bit brightness (or intensity) information in the range of 0 to 255 is used per color channel. The CIELAB color space (L*a*b*) was defined by the International Commission on Illumination (CIE) in 1976. It expresses color as three values in Cartesian coordinates: L* for perceptual lightness, and a* and b* for the four unique colors of human vision, with a* running from green to red and b* from blue to yellow. For Munsell color, the H (hue) number is obtained by mapping the hue rings onto numbers between 0 and 100, while C and V are the usual Munsell chroma and value. A hexadecimal color is specified in the rrggbb format, where rr (red), gg (green) and bb (blue) are hexadecimal integers between 00 and ff specifying the intensity of each color channel.
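For readers who want to reproduce L*a*b* values outside the software, a minimal sketch of the standard sRGB-to-CIELAB conversion follows. It assumes the pixel values are sRGB-encoded and uses the D65 reference white; the conversion settings used by PicMan are not stated in the text, so results may differ slightly. Munsell conversion, which is usually done by interpolating the Munsell renotation data, is not attempted here.

```python
import numpy as np

# sRGB (D65) -> CIE XYZ matrix and D65 reference white
_M = np.array([[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]])
_WHITE_D65 = np.array([0.95047, 1.00000, 1.08883])


def srgb_to_lab(rgb) -> np.ndarray:
    """Convert 8-bit sRGB values with shape (..., 3) to CIE 1976 L*a*b* (D65 white)."""
    c = np.asarray(rgb, dtype=np.float64) / 255.0
    # Undo the sRGB transfer curve to get linear-light values
    lin = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    xyz = lin @ _M.T / _WHITE_D65            # XYZ normalized by the reference white
    delta = 6 / 29
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])        # green (-) to red (+)
    b = 200 * (f[..., 1] - f[..., 2])        # blue (-) to yellow (+)
    return np.stack([L, a, b], axis=-1)


red_lab = srgb_to_lab([255, 0, 0])   # approx. [53.24, 80.09, 67.20]
```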

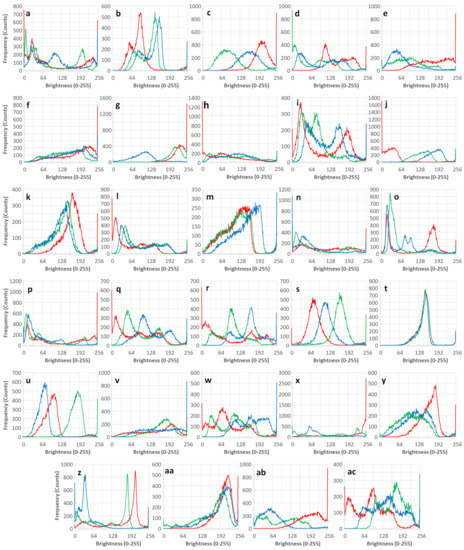

Figure 5 shows RGB intensity histograms of the twenty-nine T-shirt images. The RGB values of all pixels within each T-shirt image were extracted and binned into 256 intensity levels (0–255). The histogram contains all the color information of each T-shirt except pixel position: the area under each channel's histogram equals the number of pixels, but the x, y coordinates, and hence the order, of the pixels are discarded. The histogram therefore treats the group of pixels as a totally mixed ‘soup’ of RGB pixels with brightness ranging from 0 to 255. It describes the population of RGB brightness values, but it is very difficult to imagine the resulting color from the histogram. It is, however, useful for detecting signal saturation and the range of brightness used in each channel.
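The 256-bin histograms can be built by counting the level of every pixel, which also makes the loss of position information explicit. In this NumPy sketch (the function names are hypothetical), each row of the result sums to the number of pixels, and the fraction of pixels at 0 or 255 gives a quick check for saturated channels.

```python
import numpy as np


def rgb_histograms(image: np.ndarray, mask=None) -> np.ndarray:
    """Count pixels at each 8-bit level (0-255) in the R, G and B channels.

    Pixel positions are discarded; only the population of levels is kept.
    Returns a (3, 256) int array; each row sums to the number of pixels counted.
    """
    pixels = image[mask] if mask is not None else image.reshape(-1, 3)
    pixels = pixels.astype(np.intp)          # bincount needs non-negative ints
    return np.stack([np.bincount(pixels[:, ch], minlength=256) for ch in range(3)])


def clipped_fraction(hist: np.ndarray) -> np.ndarray:
    """Fraction of pixels at level 0 or 255 in each channel (a hint of saturation)."""
    return (hist[:, 0] + hist[:, 255]) / hist.sum(axis=1)


img = np.array([[[0, 10, 255], [0, 20, 255]],
                [[5, 10, 100], [255, 10, 0]]], dtype=np.uint8)
hist = rgb_histograms(img)
```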

Figure 5.

RGB intensity histograms of twenty-nine T-shirt images (a–ac).

For easy recognition, the twenty-nine dyed T-shirt images and their corresponding average colors are shown in Figure 6. When multiple, highly contrasting colors are used, the average color does not match our impression (a, f, h, i, n, o, p, v, w, x and z in Figure 6). This is because each average color is the arithmetic mean of the RGB brightness values of all pixels, i.e., the color of a totally mixed ‘RGB pixel solution.’ Such simple statistics often cannot represent what we actually see, but they still have meaning, value and usage as one of many quantifiable characterization factors.
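A two-color example shows why the mean can mislead. Assuming the average color is the plain arithmetic mean of RGB values, as described above, an ROI that is half saturated red and half saturated cyan averages to a neutral gray that appears nowhere in it.

```python
import numpy as np


def average_color(pixels) -> np.ndarray:
    """Arithmetic mean of RGB values: the color of a fully mixed 'pixel solution'."""
    return np.asarray(pixels, dtype=np.float64).reshape(-1, 3).mean(axis=0)


# Half saturated red, half saturated cyan (100 pixels in total)
red_cyan = [[255, 0, 0]] * 50 + [[0, 255, 255]] * 50
mean_rgb = average_color(red_cyan)   # R, G and B all 127.5: a neutral gray
```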

Figure 6.

Twenty-nine dyed T-shirt images and their corresponding averaged color (a–ac).

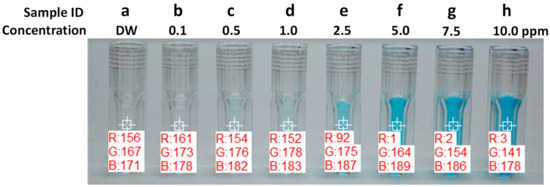

3.2. Methylene Blue (MB) Solutions

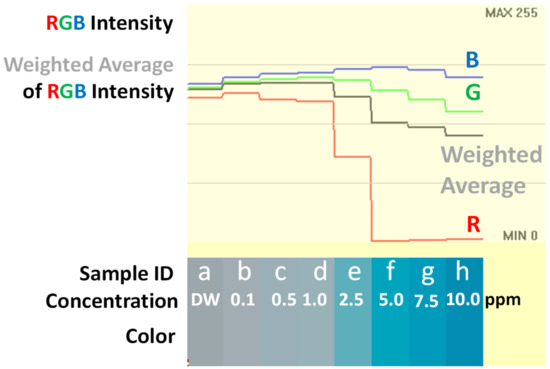

To test the sensitivity of color analysis for liquid samples, an image of methylene blue (MB: methylthioninium chloride, C₁₆H₁₈ClN₃S) solutions was adapted from the published literature [23]. The MB solutions with different concentrations are shown in Figure 7. The MB concentration varies in eight steps from 0 ppm (100% distilled water (DW)) to 10 ppm (rightmost). RGB values of 15 × 15 pixel square areas of the MB solutions from 0.1 to 10 ppm were extracted from the image to quantify the color change. The color change of the MB solutions with MB concentration is easily seen. As the MB concentration increases, the red channel intensity decreases rapidly. The green channel intensity starts to decrease above an MB concentration of 5.0 ppm. The decrease in red intensity with increasing MB concentration is consistent with that reported in the smart phone spectrometer study [23].
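Averaging a fixed square, rather than reading one pixel, reduces the influence of sensor noise. The sketch below takes the mean RGB of a 15 × 15 square centered on a chosen pixel; the center coordinates are hypothetical inputs that would be picked at each vial, and the function is an illustration rather than PicMan's routine.

```python
import numpy as np


def square_roi_mean(image: np.ndarray, cx: int, cy: int, size: int = 15) -> np.ndarray:
    """Mean RGB of a size x size square centered on pixel (cx, cy).

    size must be odd so that the square has a well-defined center pixel.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    h = size // 2
    y0, y1, x0, x1 = cy - h, cy + h + 1, cx - h, cx + h + 1
    if y0 < 0 or x0 < 0 or y1 > image.shape[0] or x1 > image.shape[1]:
        raise IndexError("square ROI extends beyond the image")
    patch = image[y0:y1, x0:x1].astype(np.float64)   # (size, size, 3)
    return patch.reshape(-1, 3).mean(axis=0)


# Synthetic image with a uniform 15 x 15 "vial" patch at rows/columns 10..24
img = np.zeros((40, 40, 3), dtype=np.uint8)
img[10:25, 10:25] = [20, 150, 200]
vial_rgb = square_roi_mean(img, cx=17, cy=17)
```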

Figure 7 shows the corresponding colors of the eight MB solutions with concentrations from 0 to 10.0 ppm. Their RGB intensities and weighted average brightness (intensity) are shown as line intensity graphs (a partial screen capture of PicMan) in Figure 8. The trends of RGB channel intensity as a function of MB concentration can be used to formulate the relationship between apparent color and MB concentration. Unlike the red channel brightness, both the green and blue channel intensities remained meaningful, showing clear trends above the noise level over the entire MB concentration range. The response surface method (RSM) can be used to find the relationships between MB concentration and the RGB intensities of the tested MB solutions.

Figure 7.

RGB values of MB solutions from 0.1 to 10 ppm (adapted from [23]; 15 × 15 pixel square areas were chosen for color analysis).

Figure 8.

Representation of the colors of MB solutions from 0.1 to 10 ppm (adapted from [23]), with RGB values and weighted RGB values extracted using PicMan. The weighted average of RGB values was calculated using the formula (R + 2G + B)/4.

Table 2 summarizes the color of MB solutions with different concentrations in various color expression formats. All values are averages over 15 × 15 pixel square areas of the MB solutions from 0.1 to 10 ppm, as shown in Figure 7. Quantifying the color allows objective analysis. As the RGB values show, the red channel intensity starts at 161 for 0.1 ppm and decreases with increasing concentration. At concentrations between 1.0 and 2.5 ppm, the red component (longer-wavelength photons) is absorbed, so almost no red signal is detected. The green intensity remains constant at around 175 up to 1.0 ppm and then decreases as the concentration increases. The blue intensity remains very strong (above 178) at all concentrations, with a maximum at around 2.5 ppm. Using this color change with concentration, the concentration of MB solutions can be estimated from color analysis results.
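One simple way to turn such trends into a concentration estimate is an inverse calibration curve: fit concentration as a polynomial in one channel's intensity using standards of known concentration, then evaluate it for an unknown sample. This is a one-variable stand-in for the response surface method mentioned earlier, and the calibration points below are made up for illustration (they are not the Table 2 values). The fit is only meaningful where the chosen channel changes monotonically, which, given the near-zero red signal reported above 1.0–2.5 ppm, limits the red channel to low concentrations.

```python
import numpy as np


def fit_calibration(intensity, concentration, degree: int = 2) -> np.poly1d:
    """Fit concentration as a polynomial in one channel's intensity (inverse calibration).

    Only valid over the range where the channel changes monotonically with concentration.
    """
    coeffs = np.polyfit(np.asarray(intensity, dtype=float),
                        np.asarray(concentration, dtype=float), degree)
    return np.poly1d(coeffs)


# Hypothetical calibration standards (NOT measured values from Table 2)
standards_ppm = np.array([0.0, 0.5, 1.0, 1.5, 2.0])
green_level = np.array([180.0, 170.0, 155.0, 135.0, 110.0])   # made-up, monotonic
model = fit_calibration(green_level, standards_ppm)
estimate_ppm = float(model(150.0))   # estimated concentration of an unknown sample
```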

Table 2.

Summary of color analysis results of MB solutions from 0.1 to 10 ppm in various color expression methods (RGB, weighted RGB, HSV, CIE L*a*b*, Munsell and hexadecimal codes). Representative colors for samples a–h are shown for easy recognition. The red, green and blue channel intensities are shown in their representative colors.

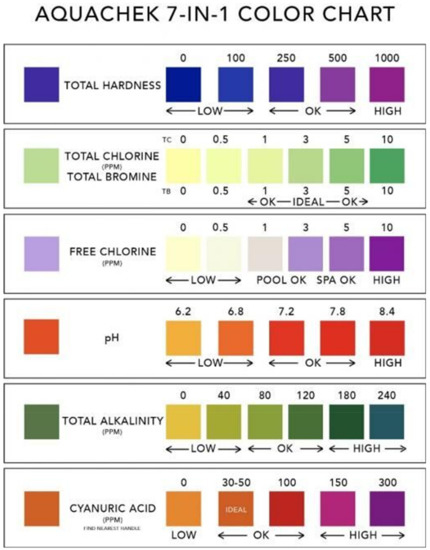

3.3. Water Quality Inspection Color Chart

Pools and hot tubs require periodic water quality tests to maintain the quality of water for the health and safety of users. Paper-based test strips are commonly used for water quality checks. Color change, after dipping a test strip into the testing water, is used as an indicator for various testing categories, such as the total hardness, total chlorine/total bromine, free chlorine, pH, total alkalinity, and cyanuric acid levels. A commercially available AquaChek Color Chart [43], which indicates the chemical levels in hot tub water, was selected as the color analysis example. The color of the test strip after dipping is compared with a color chart to interpret the test results and find a remedy for any issue.

Figure 9 shows a commercially available AquaChek Color Chart, which indicates the chemical levels in hot tub water. A printable AquaChek 7-in-1 Color Chart for a specific test strip product can be digitally downloaded and referenced for interpretation of proper test results. The downloaded AquaChek 7-in-1 Color Chart can be printed out on white filter papers with colored dots to prevent a color shift between a digital file and its printed chart.

Figure 9.

A commercially available AquaChek Color Chart, which indicates the chemical levels in hot tub water [43].

The AquaChek test strips have seven elements. From top to bottom, the Total Hardness, Total Chlorine, Total Bromine, Free Chlorine, pH, Total Alkalinity and Cyanuric Acid levels are tested and read from the chart. The reading relies on visual inspection and interpretation and is therefore subject to each individual’s color vision.
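Comparing a pad with the chart can be made objective by choosing the swatch with the smallest color difference. This sketch uses the CIE 1976 difference ΔE*ab, the Euclidean distance in L*a*b*; CIEDE2000 is a more perceptually uniform alternative. The pH levels and swatch L*a*b* values are placeholders, not AquaChek data, and the pad color would come from an image of the dipped strip taken under the same illumination as the chart.

```python
import numpy as np


def delta_e76(lab1, lab2) -> np.ndarray:
    """CIE 1976 color difference: Euclidean distance in L*a*b*."""
    return np.linalg.norm(np.asarray(lab1, float) - np.asarray(lab2, float), axis=-1)


def read_pad(pad_lab, chart_labs, chart_levels):
    """Return the chart level whose swatch is closest to the pad color, and its ΔE."""
    d = delta_e76(np.asarray(chart_labs, float), pad_lab)   # one ΔE per swatch
    i = int(np.argmin(d))
    return chart_levels[i], float(d[i])


# Hypothetical pH swatches in L*a*b* (placeholders, not AquaChek values)
ph_levels = [6.8, 7.2, 7.8, 8.4]
ph_swatches = [[70, 20, 60], [65, 30, 50], [55, 40, 35], [45, 50, 20]]
level, de = read_pad([64, 31, 48], ph_swatches, ph_levels)
```

Reporting the ΔE alongside the chosen level also flags readings that fall between swatches or outside the chart.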

Table 3 shows the color quantification summary of the AquaChek Color Chart, which indicates the chemical levels in hot tub water.

Table 3.

Summary of color analysis results of a commercially available AquaChek Color Chart, which indicates the chemical levels in hot tub water [43]. The red, green and blue channel intensities are shown in their representative colors.

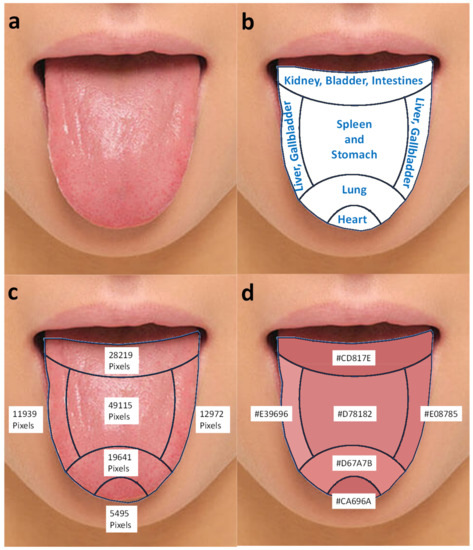

3.4. Tongue Color

As an effective alternative medicine, oriental medicine (called by different names in different countries: traditional Chinese medicine (TCM, 中医, Zhōngyī), traditional Korean medicine (한방, 韓方, Hanbang) and traditional Chinese medicine in Japan (漢方, Kampo)) uses tongue diagnosis as a major method of assessing a patient’s health conditions. Oriental medicine doctors examine the color, shape and texture of the patient’s tongue [12,13,14,15,16,17,18,19,20,44]. Tongue color, shape and texture can give early indications of disease before any significant health problems or disease-related symptoms appear. They therefore provide a basis for preventive medicine and for advising patients on lifestyle adjustments. However, traditional tongue diagnosis by visual inspection has limitations, as the inspection is subjective and inconsistent between doctors. To provide more consistent and objective health assessments through tongue diagnosis, quantifying tongue color and texture from digital images seems a very attractive approach. Many researchers have reported ideas and approaches, with very encouraging results, over the years [12,13,14,15,16,17,18,19,20,44].

Figure 10 shows a sample tongue image from an acupuncturist’s site [44] and the results of ROI-based color analysis. Figure 10a is a sample tongue photo of a patient, and in Figure 10b the tongue photo is overlaid with a tongue chart used in oriental medicine. Figure 10c shows the area of each section in pixels, and Figure 10d shows the tongue repainted with the average color of each section, labeled with the corresponding hexadecimal color codes. The color difference between (a) and (d) can be used to quantify the color variation and texture of a real tongue by image-based color quantification. The average tongue color can also be identified after image processing and used for tongue color-based diagnosis of a patient’s health conditions. Since the authors are not medical doctors, they cannot diagnose the health conditions of the patient. However, the quantified color information can be very useful for medical doctors seeking to standardize their experience-based knowledge into verifiable datasets. Table 4 summarizes the color analysis results of the sample tongue photo [44] by section in various color expression formats.
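The repainted tongue in Figure 10d corresponds to replacing every pixel of a section with that section’s mean color. A minimal sketch follows, assuming the chart sections are given as an integer label image (0 for background, 1..K for sections), which in practice would come from the chart overlay; the function name is hypothetical.

```python
import numpy as np


def repaint_by_section(image: np.ndarray, labels: np.ndarray):
    """Replace every pixel of each labeled section with that section's mean color.

    image: (H, W, 3) uint8 RGB; labels: (H, W) int array, 0 = background,
    1..K = tongue sections. Returns (repainted image, {label: (area_px, hex)}).
    """
    out = image.copy()
    summary = {}
    for lab in np.unique(labels):
        if lab == 0:
            continue                                  # leave the background untouched
        sel = labels == lab
        mean = image[sel].astype(np.float64).mean(axis=0)
        rgb = np.clip(np.rint(mean), 0, 255).astype(np.uint8)
        out[sel] = rgb
        summary[int(lab)] = (int(sel.sum()), "{:02x}{:02x}{:02x}".format(*(int(v) for v in rgb)))
    return out, summary


# Synthetic 2 x 4 "tongue" with two sections
img = np.zeros((2, 4, 3), dtype=np.uint8)
img[:, :2] = [200, 80, 80]
img[0, 2:] = [180, 60, 90]
img[1, 2:] = [160, 40, 70]
labels = np.array([[1, 1, 2, 2], [1, 1, 2, 2]])
repainted, sections = repaint_by_section(img, labels)
```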

Figure 10.

(a) A sample tongue photo of a patient, (b) a tongue chart used in oriental medicine, (c) areas of each section, and (d) a tongue repainted by representing average colors with corresponding hexadecimal color codes of individual sections of a tongue.

Table 4.

Summary of color analysis results of a sample tongue photo of a patient [44].

Zhang et al. proposed an in-depth, systematic tongue color analysis system, including a tongue image capture device, for medical applications in 2013 [18]. A tongue color gamut was used in the study: tongue foreground pixels are first extracted and assigned to one of 12 colors representing this gamut. The ratio of each color over the entire image is then calculated to form a tongue color feature vector, which suppresses subjectivity and significantly improves the objectivity of tongue color determination. A relationship between the health condition of the human body and tongue color was demonstrated using 1045 tongue images from 143 healthy subjects and 902 patients with health issues. The patients with health issues were classified into 13 disease groups, each with more than 10 tongue images, and one miscellaneous group. The study reported that a given tongue sample can be classified into one of two classes (healthy and disease) with an average accuracy of 91.99%. Moreover, disease tongue images could be classified into three clusters, and within each cluster most of the illnesses were distinguished from one another; in total, 11 illnesses had a classification rate greater than 70%. These colorimetric results were very encouraging for oriental medicine.
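The color feature vector described by Zhang et al. can be sketched as nearest-color assignment followed by counting. The three-color palette below is hypothetical (the study used 12 gamut colors, whose values are not given here), and the Euclidean distance in RGB is an assumption made for brevity; the original study’s color space and distance may differ.

```python
import numpy as np


def color_ratio_vector(pixels, palette) -> np.ndarray:
    """Assign each pixel to its nearest palette color and return the ratio of each.

    pixels: (N, 3) foreground pixel colors; palette: (K, 3) reference colors.
    Returns a length-K vector that sums to 1.
    """
    px = np.asarray(pixels, dtype=np.float64)
    pal = np.asarray(palette, dtype=np.float64)
    # (N, K) distances from every pixel to every palette color
    d = np.linalg.norm(px[:, None, :] - pal[None, :, :], axis=-1)
    nearest = d.argmin(axis=1)
    counts = np.bincount(nearest, minlength=len(pal))
    return counts / counts.sum()


# Hypothetical 3-color palette and four foreground pixels
palette = [[200, 80, 80], [220, 150, 150], [120, 60, 120]]
pixels = [[205, 85, 80], [198, 78, 82], [118, 62, 118], [215, 150, 148]]
feature = color_ratio_vector(pixels, palette)
```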

A research group at the University of Macau has reported TongueNet, a precise and fast tongue segmentation system using U-Net with a morphological processing layer [19]. Quantification of tongue color using ML has been reported by Japanese and Malaysia–Japan international research groups [17].

4. Discussion

The characteristics of a color are determined by three elements in the HSV color space: hue, chroma (saturation) and value (brightness). Hue is the most recognizable characteristic of a color, and most people refer to hue as color. Hue varies continuously, so there are infinitely many possible hues, which would be difficult to describe precisely in words in any language. Chroma refers to the purity and intensity of a color: high-chroma colors look rich and full, while low-chroma colors look dull or pale. Value is the lightness or darkness of a color. Light colors are often called tints, and dark colors are often called shades. Personal impressions are very subjective, and verbal expressions of them are not suited to precise descriptions of color. Objective characterization of color therefore requires quantitative characterization.
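Python’s standard `colorsys` module makes the three HSV elements easy to inspect. In this sketch the hue is scaled to degrees; note that HSV value is the largest of the three channel values rather than a luminance. Mixing a red roughly 50/50 with white (a tint) lowers saturation and raises value, while halving it toward black (a shade) lowers only value, and the hue stays at 0° in all three cases. The helper name is illustrative.

```python
import colorsys


def rgb_to_hsv_deg(r: int, g: int, b: int) -> tuple:
    """8-bit RGB -> (hue in degrees 0-360, saturation 0-1, value 0-1)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h * 360.0, s, v


base = rgb_to_hsv_deg(200, 40, 40)    # a red: hue 0, saturation 0.8
tint = rgb_to_hsv_deg(228, 148, 148)  # about half white mixed in: lower s, higher v
shade = rgb_to_hsv_deg(100, 20, 20)   # halved toward black: same s, lower v
```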

The ability to quantify color information can be very useful for color monitoring, color control, machine vision, ML- and AI-based algorithm development and smart phone app development, for both daily life and sophisticated applications. For accurate color quantification, illumination and image acquisition methods must be optimized in addition to developing the image processing algorithm. Medical image processing and analysis has become a very active research field [11,12,13,14,15,16,17,18,19,20,38,41]. Examples and approaches for image classification using deep convolutional neural networks, deep learning (DL) and ML have been reported [49,50,51,52]. Deep neural networks for image classification are an important part of DL and are of great significance in computer vision, including medical image analysis and diagnosis. These examples can help identify realistic directions for developing ML-, DL- and AI-based algorithms for medical applications.

The quantitative colorimetric analysis technique has been extremely useful in digital forensic studies for cultural heritage identification, in identifying particular printing techniques through ink tone analyses and character image comparisons [53,54,55,56]. Using this image analysis technique, the world’s oldest metal-type printed book, The Song of Enlightenment (南明泉和尙頌證道歌), printed in Korea in 1239, was identified by comparing six nearly identical books from Korea dating from the 13th to 16th centuries, the Jikji (直指) printed in 1377 and the Gutenberg 42-line Bible printed in 1455. Diagnoses of the conservation status of painting cultural heritage and of the color fading characteristics of pigments were also very promising [7,8]. Many other applications based on color and shape extraction techniques using the newly developed image processing/analysis software (PicMan) have been introduced [57]. Applications of the image processing software combined with imaging devices have been reported in previous papers [6,31,38,56].

5. Conclusions

The concept of an image-based colorimetry technique has been introduced. Owing to the wide availability of smart phones with image sensors and increasing computational power, smart-phone-based, low-cost colorimeters have become very attractive tools. Their low cost, portable designs with user-friendly interfaces, and compatibility with data acquisition and processing make them well suited to interdisciplinary applications in a wide range of fields, such as art, the fashion industry, food science, medical science, oriental medicine, agriculture, geology, chemistry, biology, material science and environmental engineering.

In this study, examples of image-based color quantification using specifically developed image processing/analysis software were described, and the feasibility of machine vision and offline applications of the software in interdisciplinary fields was demonstrated. Color information was extracted from areas ranging from a single pixel to predetermined sizes/shapes, including customized ROIs, in various digital images of dyed T-shirts, a color chart, assays and tongues. Conversion between RGB, HSV, CIELAB, Munsell color and hexadecimal color codes was demonstrated for the extracted colors, from a single pixel to ROIs. This capability for color extraction and conversion between color spaces is very useful for machine vision and offline applications in various fields. Histograms and statistical analyses of colors from a single pixel to ROIs were successfully demonstrated in four different fields of application.

Flat colors, colors with textures, segregated colors, and totally mixed colors appear very different to the human eye depending on the individual’s perception. Color-based judgments by humans are very subjective. The judgments are strongly dependent on the examiner’s vision, experience and knowledge. We were able to demonstrate image-based color quantification techniques using newly developed image processing software for various fields of applications.

Reliable image-based quantification of color, for a wide range of potential applications including ML, AI, algorithm development and new smart phone app development, is proposed as a realistic task to be pursued. The successful development and demonstration of image-based color quantification for areas of any size and shape, using specifically developed software, makes it easier to customize new applications that require quantitative colorimetry.

Author Contributions

All authors contributed equally to this study. Conceptualization, W.S.Y., K.K. and Y.Y.; material preparation, Y.Y., J.G.K. and W.S.Y.; methodology, Y.Y. and J.G.K.; software, W.S.Y. and K.K.; data acquisition and analysis, Y.Y., J.G.K. and W.S.Y.; writing (original draft preparation, review and editing), W.S.Y. and Y.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

Woo Sik Yoo, Kitaek Kang and Jung Gon Kim were employed by WaferMasters, Inc. The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. All authors declare no conflict of interest.

References

- Giesel, M.; Gegenfurtner, K.R. Color appearance of real objects varying in material, hue, and shape. J. Vis. 2010, 10, 1–21.

- Dische, Z. Qualitative and quantitative colorimetric determination of heptoses. J. Biol. Chem. 1953, 204, 983–998.

- Nimeroff, I. Colorimetry, National Bureau of Standards Monograph 104. 1968. Available online: https://nvlpubs.nist.gov/nistpubs/Legacy/MONO/nbsmonograph104.pdf (accessed on 28 February 2023).

- Bäuml, K.H. Simultaneous color constancy: How surface color perception varies with the illuminant. Vis. Res. 1999, 39, 1531–1550.

- Mohtasebi, A.; Broomfield, A.D.; Chowdhury, T.; Selvaganapathy, P.R.; Kruse, P. Reagent-Free Quantification of Aqueous Free Chlorine via Electrical Readout of Colorimetrically Functionalized Pencil Lines. ACS Appl. Mater. Interfaces 2017, 9, 20748–20761.

- Yoo, W.S.; Kim, J.G.; Kang, K.; Yoo, Y. Development of Static and Dynamic Colorimetric Analysis Techniques Using Image Sensors and Novel Image Processing Software for Chemical, Biological and Medical Applications. Technologies 2023, 11, 23.

- Chua, L.; Quan, S.Z.; Yan, G.; Yoo, W.S. Investigating the Colour Difference of Old and New Blue Japanese Glass Pigments for Artistic Use. J. Conserv. Sci. 2022, 38, 1–13.

- Eom, T.H.; Lee, H.S. A Study on the Diagnosis Technology for Conservation Status of Painting Cultural Heritage Using Digital Image Analysis Program. Heritage 2023, 6, 1839–1855.

- Lehnert, M.S.; Balaban, M.O.; Emmel, T.C. A new method for quantifying color of insects. Fla. Entomol. 2011, 94, 201–207.

- Taweekarn, T.; Wongniramaikul, W.; Limsakul, W.; Sriprom, W.; Phawachalotorn, C.; Choodum, A. A novel colorimetric sensor based on modified mesoporous silica nanoparticles for rapid on-site detection of nitrite. Microchim. Acta 2020, 187, 643.

- Kim, J.; Wu, Y.; Luan, H.; Yang, D.S.; Cho, D.; Kwak, S.S.; Liu, S.; Ryu, H.; Ghaffari, R.; Rogers, J.A. A Skin-Interfaced, Miniaturized Microfluidic Analysis and Delivery System for Colorimetric Measurements of Nutrients in Sweat and Supply of Vitamins Through the Skin. Adv. Sci. 2022, 9, 2103331.

- Horzov, L.; Goncharuk-Khomyn, M.; Hema-Bahyna, N.; Yurzhenko, A.; Melnyk, V. Analysis of tongue color-associated features among patients with PCR-confirmed Covid-19 infection in Ukraine. Pesqui. Bras. Odontopediatria Clín. Integr. 2021, 21, e0011.

- Chen, H.S.; Chen, S.M.; Jiang, C.Y.; Zhang, Y.C.; Lin, C.Y.; Lin, C.E.; Lee, J.A. Computational tongue color simulation in tongue diagnosis. Color Res. Appl. 2021, 9, 2103331.

- Segawa, M.; Iizuka, N.; Ogihara, H.; Tanaka, K.; Nakae, H.; Usuku, K.; Hamamoto, Y. Construction of a Standardized Tongue Image Database for Diagnostic Education: Development of a Tongue Diagnosis e-Learning System. Front. Med. Technol. 2021, 3, 760542.

- Xie, J.; Jing, C.; Zhang, Z.; Xu, J.; Duan, Y.; Xu, D. Digital tongue image analyses for health assessment. Med. Rev. 2021, 1, 172–198.

- Sun, Z.M.; Zhao, J.; Qian, P.; Wang, Y.Q.; Zhang, W.F.; Guo, C.R.; Pang, X.Y.; Wang, S.C.; Li, F.F.; Li, Q. Metabolic markers and microecological characteristics of tongue coating in patients with chronic gastritis. BMC Complement. Altern. Med. 2013, 13, 227. Available online: http://www.biomedcentral.com/1472-6882/13/227 (accessed on 28 February 2023).

- Kawanabe, T.; Kamarudin, N.D.; Ooi, C.Y.; Kobayashi, F.; Mi, X.; Sekine, M.; Wakasugi, A.; Odaguchi, H.; Hanawa, T. Quantification of tongue colour using machine learning in Kampo medicine. Eur. J. Integr. Med. 2016, 8, 932–941.

- Zhang, B.; Wang, X.; You, J.; Zhang, D. Tongue Color Analysis for Medical Application. Evid.-Based Complement. Altern. Med. 2013, 2013, 264742.

- Zhou, J.; Zhang, Q.; Zhang, B.; Chen, X. TongueNet: A Precise and Fast Tongue Segmentation System Using U-Net with a Morphological Processing Layer. Appl. Sci. 2019, 9, 3128.

- Takahoko, K.; Iwasaki, H.; Sasakawa, T.; Suzuki, A.; Matsumoto, H.; Iwasaki, H. Unilateral Hypoglossal Nerve Palsy after Use of the Laryngeal Mask Airway Supreme. Case Rep. Anesthesiol. 2014, 2014, 369563.

- Kılıç, V.; Alankus, G.; Horzum, N.; Mutlu, A.Y.; Bayram, A.; Solmaz, M.E. Single-Image-Referenced Colorimetric Water Quality Detection Using a Smartphone. ACS Omega 2018, 3, 5531–5536.

- Alberti, G.; Zanoni, C.; Magnaghi, L.R.; Biesuz, R. Disposable and Low-Cost Colorimetric Sensors for Environmental Analysis. Int. J. Environ. Res. Public Health 2020, 17, 8331.

- Kılıç, V.; Horzum, N.; Solmaz, M.E. From Sophisticated Analysis to Colorimetric Determination: Smartphone Spectrometers and Colorimetry. In Color Detection; IntechOpen: London, UK, 2018.

- O’Donoghue, J. Simplified Low-Cost Colorimetry for Education and Public Engagement. J. Chem. Educ. 2019, 96, 1136–1142.

- Hermida, I.D.P.; Prabowo, B.A.; Kurniawan, D.; Manurung, R.V.; Sulaeman, Y.; Ritadi, M.A.; Wahono, M.D. Use of Smartphone Based on Android as a Color Sensor. In Proceedings of the 2018 Electrical Power, Electronics, Communications, Controls and Informatics Seminar (EECCIS), Batu, Indonesia, 9–11 October 2018; pp. 424–429.

- Han, P.; Dong, D.; Zhao, X.; Jiao, L.; Lang, Y. A smartphone-based soil color sensor: For soil type classification. Comput. Electron. Agric. 2016, 123, 232–241.

- Alawsi, T.; Mattia, G.P.; Al-Bawa, Z.; Beraldi, R. Smartphone-based colorimetric sensor application for measuring biochemical material concentration. Sens. Bio-Sens. Res. 2021, 32, 100404.

- Chellasamy, G.; Ankireddy, S.R.; Lee, K.N.; Govindaraju, S.; Yun, K. Smartphone-integrated colorimetric sensor array-based reader system and fluorometric detection of dopamine in male and female geriatric plasma by bluish-green fluorescent carbon quantum dots. Mater. Today Bio 2021, 12, 100168.

- Böck, F.C.; Helfer, G.A.; da Costa, A.B.; Dessuy, M.B.; Flôres Ferrão, M. PhotoMetrix and colorimetric image analysis using smartphones. J. Chemom. 2020, 34, e3251.

- Wongniramaikul, W.; Kleangklao, B.; Boonkanon, C.; Taweekarn, T.; Phatthanawiwat, K.; Sriprom, W.; Limsakul, W.; Towanlong, W.; Tipmanee, D.; Choodum, A. Portable Colorimetric Hydrogel Test Kits and On-Mobile Digital Image Colorimetry for On-Site Determination of Nutrients in Water. Molecules 2022, 27, 7287.

- Yoo, Y.; Yoo, W.S. Turning Image Sensors into Position and Time Sensitive Quantitative Colorimetric Data Sources with the Aid of Novel Image Processing/Analysis Software. Sensors 2020, 20, 6418.

- Kim, G.; Kim, J.G.; Kang, K.; Yoo, W.S. Image-Based Quantitative Analysis of Foxing Stains on Old Printed Paper Documents. Heritage 2019, 2, 2665–2677.

- Yoo, W.S.; Kim, J.G.; Kang, K.; Yoo, Y. Extraction of Colour Information from Digital Images Towards Cultural Heritage Characterisation Applications. SPAFA J. 2021, 5, 1–14.

- Yoo, W.S.; Kang, K.; Kim, J.G.; Yoo, Y. Extraction of Color Information and Visualization of Color Differences between Digital Images through Pixel-by-Pixel Color-Difference Mapping. Heritage 2022, 5, 3923–3945.

- Yoo, Y.; Yoo, W.S. Digital Image Comparisons for Investigating Aging Effects and Artificial Modifications Using Image Analysis Software. J. Conserv. Sci. 2021, 37, 1–12.

- Kim, J.G.; Yoo, W.S.; Jang, Y.S.; Lee, W.J.; Yeo, I.G. Identification of Polytype and Estimation of Carrier Concentration of Silicon Carbide Wafers by Analysis of Apparent Color using Image Processing Software. ECS J. Solid State Sci. Technol. 2022, 11, 064003.

- Yoo, W.S.; Kang, K.; Kim, J.G.; Jung, Y.-H. Development of Image Analysis Software for Archaeological Applications. Adv. Southeast Asian Archaeol. 2019, 2, 402–411.

- Yoo, W.S.; Han, H.S.; Kim, J.G.; Kang, K.; Jeon, H.-S.; Moon, J.-Y.; Park, H. Development of a tablet PC-based portable device for colorimetric determination of assays including COVID-19 and other pathogenic microorganisms. RSC Adv. 2020, 10, 32946–32952.

- Wakamoto, K.; Otsuka, T.; Nakahara, K.; Namazu, T. Degradation Mechanism of Pressure-Assisted Sintered Silver by Thermal Shock Test. Energies 2021, 14, 5532.

- Jo, S.-I.; Jeong, G.-H. Single-Walled Carbon Nanotube Synthesis Yield Variation in a Horizontal Chemical Vapor Deposition Reactor. Nanomaterials 2021, 11, 3293.

- Zhu, C.; Espulgar, W.V.; Yoo, W.S.; Koyama, S.; Dou, X.; Kumanogoh, A.; Tamiya, E.; Takamatsu, H.; Saito, M. Single Cell Receptor Analysis Aided by a Centrifugal Microfluidic Device for Immune Cells Profiling. Bull. Chem. Soc. Jpn. 2019, 92, 1834–1839.

- Men’s T-Shirts. Available online: https://www.pinterest.com/pin/624733779558363886/ (accessed on 28 February 2023).

- AquaChek Color Chart. Available online: https://www.masterspaparts.com/aquachek-color-chart/ (accessed on 28 February 2023).

- What Is Chinese Tongue Diagnosis? Available online: https://www.lucyclarkeacupuncture.co.uk/what-is-chinese-tongue-diagnosis/ (accessed on 28 February 2023).

- Color Model. Available online: https://en.wikipedia.org/wiki/Color_model (accessed on 28 February 2023).

- Color Space. Available online: https://en.wikipedia.org/wiki/Color_space (accessed on 28 February 2023).

- Color Conversion. Available online: https://en.wikipedia.org/wiki/HSL_and_HSV (accessed on 28 February 2023).

- Palum, R. Image Sampling with the Bayer Color Filter Array. In PICS 2001: Image Processing, Image Quality, Image Capture, Systems Conference, Montréal, QC, Canada, 22–25 April 2001; The Society for Imaging Science and Technology: Cambridge, MA, USA; pp. 239–245. ISBN 0-89208-232-1.

- Zhao, M.; Liu, Q.; Jha, A.; Deng, R.; Yao, T.; Mahadevan-Jansen, A.; Tyska, M.J.; Millis, B.A.; Huo, Y. VoxelEmbed: 3D Instance Segmentation and Tracking with Voxel Embedding based Deep Learning. In Machine Learning in Medical Imaging; Lian, C., Cao, X., Rekik, I., Xu, X., Yan, P., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2021; Volume 12966, pp. 437–446.

- Yao, T.; Qu, C.; Liu, Q.; Deng, R.; Tian, Y.; Xu, J.; Jha, A.; Bao, S.; Zhao, M.; Fogo, A.B.; et al. Compound Figure Separation of Biomedical Images with Side Loss. In Deep Generative Models, and Data Augmentation, Labelling, and Imperfections; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2021; Volume 13003, p. 183.

- Zheng, Q.; Yang, M.; Zhang, Q.; Zhang, X. Fine-grained image classification based on the combination of artificial features and deep convolutional activation features. In Proceedings of the 2017 IEEE/CIC International Conference on Communications in China (ICCC), Qingdao, China, 22–24 October 2017; pp. 1–6.

- Zheng, Q.; Yang, M.; Tian, X.; Wang, X.; Wang, D. Rethinking the Role of Activation Functions in Deep Convolutional Neural Networks for Image Classification. Eng. Lett. 2020, 28, EL_28_1_11.

- Yoo, W.S. The World’s Oldest Book Printed by Movable Metal Type in Korea in 1239: The Song of Enlightenment. Heritage 2022, 5, 1089–1119.

- Yoo, W.S. How Was the World’s Oldest Metal-Type-Printed Book (The Song of Enlightenment, Korea, 1239) Misidentified for Nearly 50 Years? Heritage 2022, 5, 1779–1804.

- Yoo, W.S. Direct Evidence of Metal Type Printing in The Song of Enlightenment, Korea, 1239. Heritage 2022, 5, 3329–3358.

- Yoo, W.S. Ink Tone Analysis of Printed Character Images towards Identification of Medieval Korean Printing Technique: The Song of Enlightenment (1239), the Jikji (1377) and the Gutenberg Bible (~1455). Heritage 2023, 6, 2559–2581.

- PicManTV. Available online: https://www.youtube.com/@picman-TV (accessed on 28 February 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).