The Dual Functions of Adaptors

Abstract

1. Introduction

2. Literature Review

2.1. Methodological Considerations with Adaptors

2.2. Functions

3. Materials and Methods

3.1. Participants

3.2. Procedure

3.3. Analysis

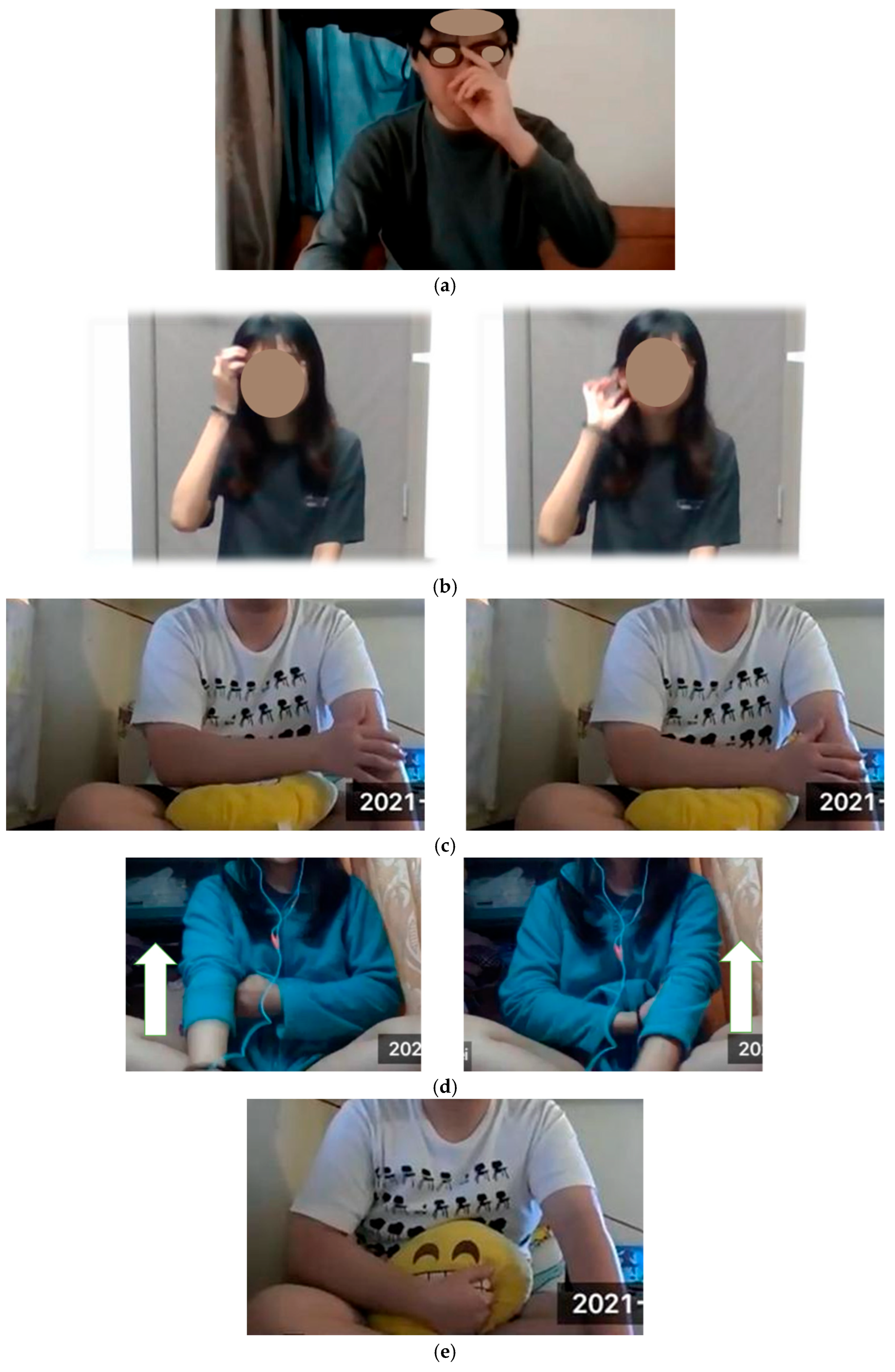

- Pushing glasses up (most common in our corpus, as the majority of our speakers wore glasses) (Figure 5a).

- Brushing away or smoothing hair (this can be a longer gesture if performed slowly) (Figure 5b).

- Touching ear/nose/face mask (Figure 4).

- Hand to neck/chin/forehead as if thinking (the hand can remain in place for longer than 3 s) (Figure 1).

- Hand to wrist/elbow, usually with a change in posture (Figure 5c).

- Pulling sleeve/trouser cuffs (Figure 5d).

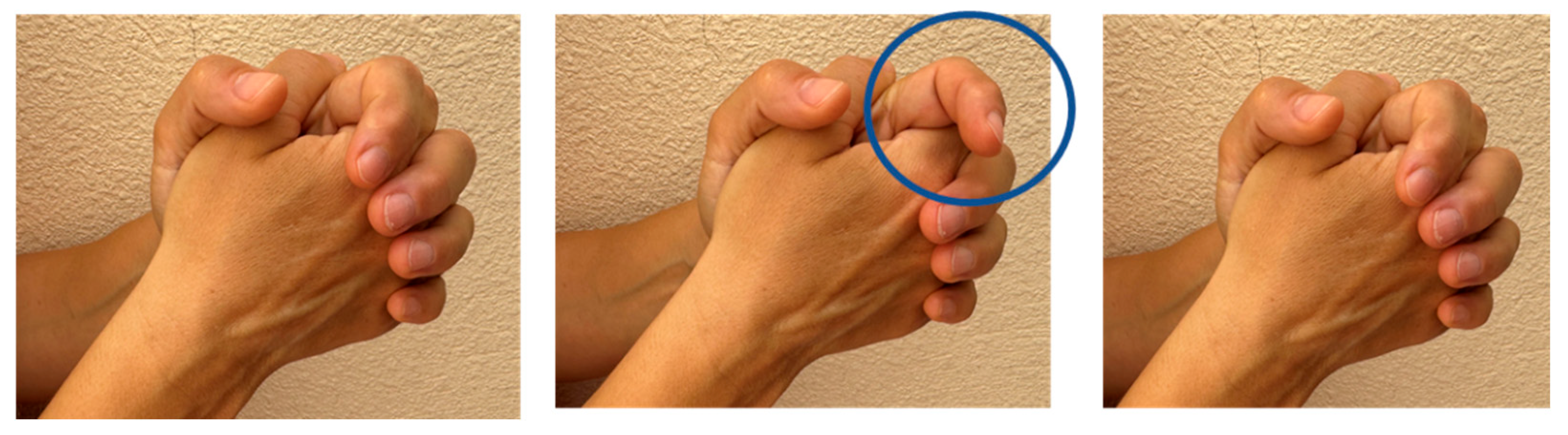

- Distinct continuous gestures, potentially responding to environmental or bodily needs, such as scratching, rubbing the hands together, or rubbing a hand against another part of the body or clothing (Figure 2).

- Handling items not related to speech, such as fidgeting with a tissue, the back of a mat, the back of a shoe, or a cushion (Figure 5e).

4. Results

4.1. Context Differences in Monologues: F2F vs. Online

4.2. Context Differences in Dialogues: F2F vs. Online

4.3. Task Differences: Dialogues vs. Monologues

4.4. Interaction of Medium and Task

4.5. Task Familiarity

5. Discussion

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Flutters in dialogues (speaking role) | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | Effect Size | CI_Lower | CI_Upper |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Women | 27 | 0.171 | 0.149 | 0.137 | 0.081 | 0.218 | 181 | 0.887 | 0.0251 | 0.0054 | 0.37 |
| Men | 13 | 0.177 | 0.160 | 0.103 | 0.060 | 0.265 | | | | | |

| Adaptors in dialogues (speaking role) | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | Effect Size | CI_Lower | CI_Upper |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Women | 27 | 1.796 | 1.926 | 1.225 | 0.449 | 2.571 | 185 | 0.795 | 0.0434 | 0.0045 | 0.34 |
| Men | 13 | 1.280 | 0.757 | 1.075 | 0.621 | 1.966 | | | | | |

| Flutters in monologues | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | Effect Size | CI_Lower | CI_Upper |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Women | 26 | 0.138 | 0.176 | 0.073 | 0.014 | 0.179 | 148.5 | 0.551 | 0.0978 | 0.0047 | 0.42 |
| Men | 13 | 0.120 | 0.089 | 0.106 | 0.044 | 0.201 | | | | | |

| Other adaptors in monologues | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | Effect Size | CI_Lower | CI_Upper |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Women | 26 | 2.985 | 2.324 | 2.667 | 1.167 | 4.154 | 176 | 0.848 | 0.0330 | 0.005 | 0.35 |
| Men | 13 | 2.547 | 1.586 | 2.857 | 1.768 | 3.476 | | | | | |
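The appendix does not state how the Effect Size column for the Mann–Whitney tests was computed. The printed values are consistent with r = |Z|/√N, where Z is the plain normal approximation to U (no continuity or tie correction) and N = n₁ + n₂; this is inferred from the numbers rather than documented in the source. A minimal Python sketch under that assumption, applied to the gender comparisons above:

```python
import math


def mann_whitney_r(u: float, n1: int, n2: int) -> float:
    """Effect size r = |Z| / sqrt(n1 + n2) for a Mann-Whitney U statistic.

    Assumption (inferred from the printed values, not documented):
    Z uses the plain normal approximation to U, without continuity
    or tie correction.
    """
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    return abs(z) / math.sqrt(n1 + n2)


# Women vs. men, U statistics copied from the tables above
print(round(mann_whitney_r(181, 27, 13), 4))    # flutters in dialogues: 0.0251
print(round(mann_whitney_r(185, 27, 13), 4))    # adaptors in dialogues: 0.0434
print(round(mann_whitney_r(148.5, 26, 13), 4))  # flutters in monologues: 0.0978
```

The same function gives 0.445 for the online vs. F2F comparison of flutters in dialogues (U = 304, 20 vs. 20 participants). Small discrepancies would be expected in rows with many tied values, since a tie correction reduces the variance of U.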

| Proficiency | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | Effect Size | CI_Lower | CI_Upper |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Online | 20 | 85.927 | 8.213 | 85.600 | 78.533 | 90.000 | 201.5 | 0.978 | 0.00641639 | 0.0044 | 0.35 |
| F2F | 20 | 86.027 | 10.060 | 83.033 | 79.317 | 92.100 | | | | | |

| Speech rate in dialogues | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | Effect Size | CI_Lower | CI_Upper |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Online | 20 | 137.716 | 25.363 | 139.162 | 130.671 | 151.843 | 229 | 0.445 | 0.124 | 0.006 | 0.44 |
| F2F | 20 | 136.647 | 28.633 | 127.304 | 120.381 | 152.549 | | | | | |

| Speech rate in monologues | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | Effect Size | CI_Lower | CI_Upper |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Online | 19 | 133.072 | 20.987 | 132.171 | 127.369 | 147.670 | 193 | 0.945 | 0.013 | 0.005 | 0.37 |
| F2F | 20 | 136.521 | 29.684 | 132.503 | 115.822 | 146.476 | | | | | |

| Flutters in online monologues by session | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Wilcoxon signed-rank | p-value | Effect Size | CI_Lower | CI_Upper |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Session 1 | 19 | 0.099 | 0.114 | 0.072 | 0.020 | 0.132 | 54 | 0.103 | 0.368 | −0.053 | 0.684 |
| Session 2 | 19 | 0.193 | 0.286 | 0.095 | 0.057 | 0.204 | | | | | |

| Other adaptors in online monologues by session | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Wilcoxon signed-rank | p-value | Effect Size | CI_Lower | CI_Upper |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Session 1 | 19 | 2.249 | 1.863 | 1.835 | 0.769 | 2.911 | 126 | 0.082 | −0.421 | −0.789 | 0 |
| Session 2 | 19 | 1.861 | 2.460 | 0.800 | 0.252 | 2.122 | | | | | |

Other adaptors per 100 words and flutter duration: by task

| Other adaptors in monologues | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | adjusted p | Effect Size | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Online | 19 | 2.249 | 1.863 | 1.835 | 0.769 | 2.911 | 123 | 0.0612 | 0.1836 | 0.301 | 0.03 |
| F2F | 20 | 3.400 | 2.194 | 3.085 | 2.357 | 4.511 | | | | | |

| Other adaptors in dialogues | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | adjusted p | Effect Size | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Online | 20 | 1.190 | 1.100 | 0.967 | 0.418 | 1.582 | 140 | 0.107 | 0.214 | 0.257 | 0.01 |
| F2F | 20 | 2.067 | 1.987 | 1.598 | 0.723 | 2.505 | | | | | |

| Flutters in monologues | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | adjusted p | Effect Size | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Online | 19 | 0.099 | 0.114 | 0.072 | 0.020 | 0.132 | 141 | 0.173 | 0.214 | 0.220 | 0.0091 |
| F2F | 20 | 0.164 | 0.177 | 0.118 | 0.022 | 0.211 | | | | | |

| Flutters in dialogues | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | adjusted p | Effect Size | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Online | 20 | 0.227 | 0.161 | 0.165 | 0.125 | 0.286 | 304 | 0.004 | 0.01708 | 0.445 | 0.15 |
| F2F | 20 | 0.119 | 0.120 | 0.061 | 0.035 | 0.179 | | | | | |
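The adjusted p values are likewise not explained in the appendix. They agree with a Holm step-down correction applied within each family of tests: for the four by-task comparisons above, the smallest p is multiplied by 4, the next by 3, and so on, with a running maximum so that adjusted values never decrease. This reading is inferred from the printed numbers. A standard-library sketch:

```python
def holm_adjust(p_values: list[float]) -> list[float]:
    """Holm step-down adjustment; returns adjusted p-values in input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # Multiply the (rank+1)-th smallest p by (m - rank), keep the
        # sequence non-decreasing with a running maximum, and cap at 1.
        running_max = max(running_max, (m - rank) * p_values[i])
        adjusted[i] = min(1.0, running_max)
    return adjusted


# Unadjusted p-values as printed in the by-task tables: other adaptors
# (monologues, dialogues), then flutters (monologues, dialogues)
p = [0.0612, 0.107, 0.173, 0.004]
print([round(x, 4) for x in holm_adjust(p)])
```

This prints [0.1836, 0.214, 0.214, 0.016]. The first three match the table, and the flutters-in-monologues row (p = 0.173, adjusted 0.214) shows the running maximum at work. The last value is 0.016 rather than 0.01708 because the input p = 0.004 is rounded; 0.01708 / 4 implies an unrounded p of about 0.00427.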

Other adaptors per 100 words and flutter duration: by role

| Other adaptors while speaking | N | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | adjusted p | Effect Size | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|
| Online | 20 | 0.967 | 0.418 | 1.58 | 140 | 0.107 | 0.107 | 0.257 | 0.02 |
| F2F | 20 | 1.598 | 0.723 | 2.505 | | | | | |

| Other adaptors while listening | N | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | adjusted p | Effect Size | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|
| Online | 20 | 0.488 | 0 | 0.952 | 105 | 0.010 | 0.0309 | 0.408 | 0.12 |
| F2F | 20 | 1.569 | 0.544 | 2.295 | | | | | |

| Flutters while speaking | N | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | adjusted p | Effect Size | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|
| Online | 20 | 0.165 | 0.125 | 0.286 | 304 | 0.004 | 0.01708 | 0.445 | 0.15 |
| F2F | 20 | 0.061 | 0.035 | 0.179 | | | | | |

| Flutters while listening | N | Median | IQR_Lower | IQR_Upper | Mann–Whitney U | p-value | adjusted p | Effect Size | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|
| Online | 20 | 0.377 | 0.193 | 0.649 | 275 | 0.043 | 0.086 | 0.321 | 0.04 |
| F2F | 20 | 0.242 | 0.081 | 0.329 | | | | | |

All data

| Flutter duration per speech duration | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Wilcoxon signed-rank | p-value | adjusted p | Effect Size (r) | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dialogue | 39 | 0.158 | 0.121 | 0.133 | 0.061 | 0.228 | 509 | 0.098 | 0.295 | 0.265 | −0.590 |
| Monologue | 39 | 0.132 | 0.151 | 0.078 | 0.021 | 0.194 | | | | | |

| Other adaptors per 100 words | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Wilcoxon signed-rank | p-value | adjusted p | Effect Size (r) | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dialogue | 39 | 1.670 | 1.646 | 1.213 | 0.569 | 2.108 | 198 | 0.008 | 0.038 | 0.428 | −0.026 |
| Monologue | 39 | 2.839 | 2.096 | 2.679 | 1.176 | 3.501 | | | | | |

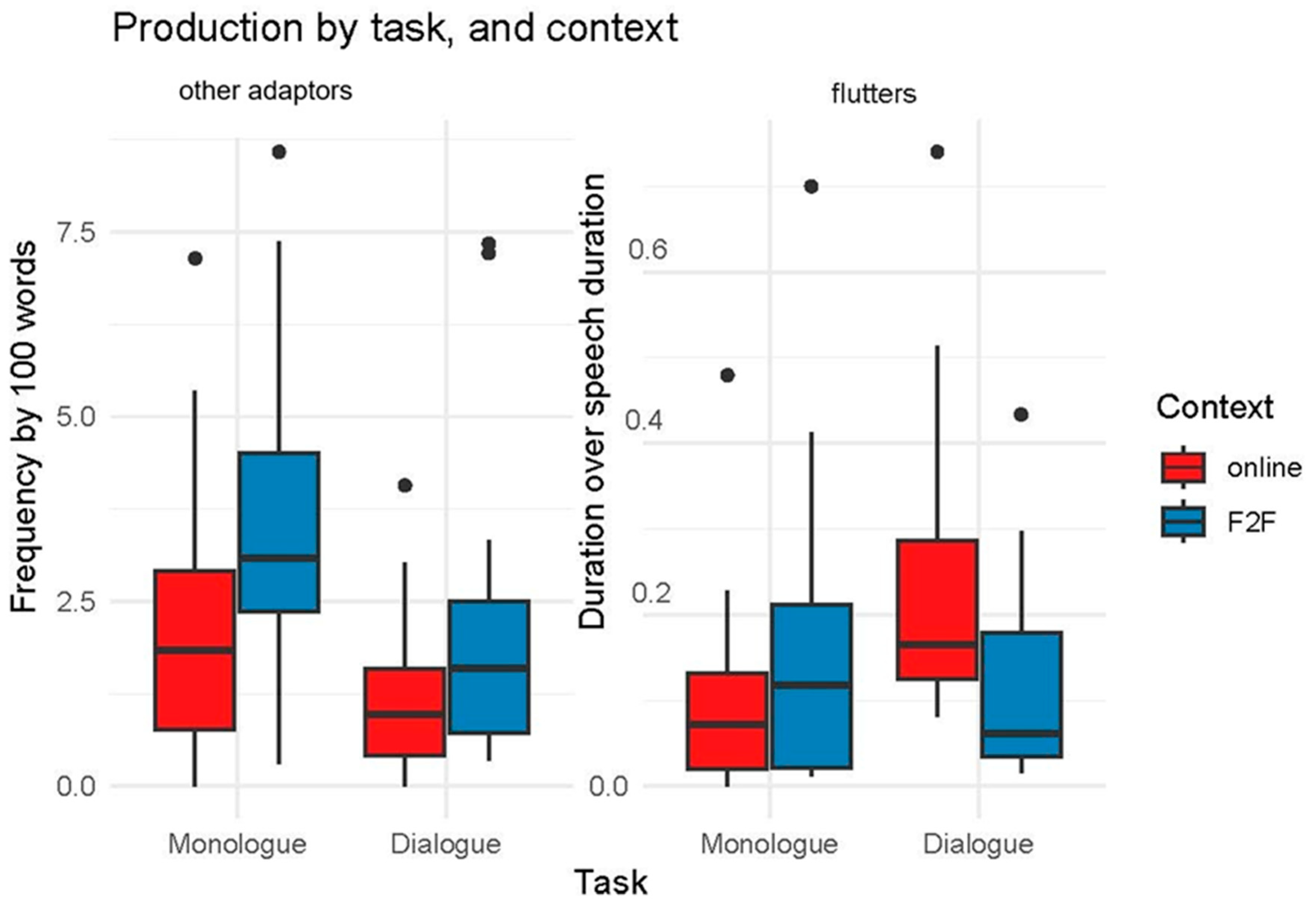

Production by task and context

| Flutter duration per speech duration (online) | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Wilcoxon signed-rank | p-value | adjusted p | Effect Size (r) | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dialogue | 19 | 0.200 | 0.109 | 0.159 | 0.124 | 0.258 | 166 | 0.005 | 0.027 | 0.651 | −1 |
| Monologue | 19 | 0.099 | 0.114 | 0.072 | 0.020 | 0.132 | | | | | |

| Other adaptors per 100 words (online) | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Wilcoxon signed-rank | p-value | adjusted p | Effect Size (r) | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dialogue | 19 | 1.252 | 1.093 | 1.205 | 0.449 | 1.587 | 55 | 0.112 | 0.295 | 0.365 | −0.263 |
| Monologue | 19 | 2.249 | 1.863 | 1.835 | 0.769 | 2.911 | | | | | |

| Flutter duration per speech duration (F2F) | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Wilcoxon signed-rank | p-value | adjusted p | Effect Size (r) | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dialogue | 20 | 0.119 | 0.120 | 0.061 | 0.035 | 0.179 | 85 | 0.467 | 0.467 | 0.163 | −0.3 |
| Monologue | 20 | 0.164 | 0.177 | 0.118 | 0.022 | 0.211 | | | | | |

| Other adaptors per 100 words (F2F) | N | Mean | SD | Median | IQR_Lower | IQR_Upper | Wilcoxon signed-rank | p-value | adjusted p | Effect Size (r) | CI_Lower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dialogue | 20 | 2.067 | 1.987 | 1.598 | 0.723 | 2.505 | 50 | 0.042 | 0.168 | 0.455 | 0 |
| Monologue | 20 | 3.400 | 2.194 | 3.085 | 2.357 | 4.511 | | | | | |
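For the paired Wilcoxon signed-rank comparisons in the "All data" and "Production by task and context" tables, the printed p-values and effect sizes are reproduced by the normal approximation with a 0.5 continuity correction and r = Z/√n, where n is the number of pairs. This is inferred from the numbers, not stated in the appendix, and it assumes no zero differences or tied ranks. A sketch using only the Python standard library (`math.erfc` gives the two-sided normal tail):

```python
import math


def wilcoxon_normal_approx(w: float, n: int) -> tuple[float, float]:
    """Two-sided p-value and effect size r for a Wilcoxon signed-rank statistic.

    Assumptions (inferred from the printed values, not documented):
    no zero differences or tied ranks, a 0.5 continuity correction,
    and r = Z / sqrt(n), where n is the number of pairs.
    """
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = max(0.0, abs(w - mean_w) - 0.5) / sd_w
    p_two_sided = math.erfc(z / math.sqrt(2))  # equals 2 * (1 - Phi(z))
    return p_two_sided, z / math.sqrt(n)


# (label, W, number of pairs), copied from the tables above
rows = [
    ("All data, flutter duration", 509, 39),
    ("All data, other adaptors", 198, 39),
    ("Online, flutter duration", 166, 19),
    ("F2F, other adaptors", 50, 20),
]
for label, w, n in rows:
    p, r = wilcoxon_normal_approx(w, n)
    print(f"{label}: p = {p:.3f}, r = {r:.3f}")
```

Each printed line agrees with the corresponding table row to three decimals (for example, p = 0.098 and r = 0.265 for flutter duration over all data). The by-session rows are not reproduced exactly by this sketch, which may reflect zero differences or ties in those data.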


| Context | Task | Total Speaking Time (min) | Total Gestures | Total No. of Other Adaptors | Other Adaptors as % of All Gestures | Total Flutter Time (min) | Flutter Time as % of All Gesture Time |
|---|---|---|---|---|---|---|---|
| Online | Dialogue | 58.323 | 1583 | 99 | 6% | 13.98 | 24% |
| F2F | Dialogue | 50.134 | 1364 | 124 | 9% | 6.23 | 12% |
| Online | Monologue | 58.648 | 1838 | 162 | 9% | 5.6 | 10% |
| F2F | Monologue | 52.41 | 1537 | 230 | 15% | 8.12 | 15% |
| Total | | 219.515 | 6322 | 615 | 10% | 33.93 | 15% |
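The share of other adaptors in the table above is a simple ratio of other adaptors to all gestures within each context and task, and the Total row is the column sum. A short check that recomputes both from the printed values (the flutter-time percentages cannot be recomputed here, because total gesture time is not reported):

```python
# (context, task): (speaking min, total gestures, other adaptors, flutter min),
# copied from the table above
rows = {
    ("Online", "Dialogue"): (58.323, 1583, 99, 13.98),
    ("F2F", "Dialogue"): (50.134, 1364, 124, 6.23),
    ("Online", "Monologue"): (58.648, 1838, 162, 5.6),
    ("F2F", "Monologue"): (52.41, 1537, 230, 8.12),
}

for (context, task), (_, n_gestures, n_adaptors, _) in rows.items():
    share = 100 * n_adaptors / n_gestures
    print(f"{context} {task}: {share:.0f}% of gestures are other adaptors")

# Column sums for the Total row
speaking = sum(r[0] for r in rows.values())
gestures = sum(r[1] for r in rows.values())
adaptors = sum(r[2] for r in rows.values())
flutter = sum(r[3] for r in rows.values())
print(f"Total: {speaking:.3f} min, {gestures} gestures, {adaptors} other adaptors "
      f"({100 * adaptors / gestures:.0f}%), {flutter:.2f} min of flutters")
```

The recomputed shares (6%, 9%, 9%, 15%, and 10% overall) and column sums match the printed table.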

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lopez-Ozieblo, R. The Dual Functions of Adaptors. Languages 2025, 10, 231. https://doi.org/10.3390/languages10090231

Lopez-Ozieblo R. The Dual Functions of Adaptors. Languages. 2025; 10(9):231. https://doi.org/10.3390/languages10090231

Chicago/Turabian Style: Lopez-Ozieblo, Renia. 2025. "The Dual Functions of Adaptors" Languages 10, no. 9: 231. https://doi.org/10.3390/languages10090231

APA Style: Lopez-Ozieblo, R. (2025). The Dual Functions of Adaptors. Languages, 10(9), 231. https://doi.org/10.3390/languages10090231