Distribution and Timing of Verbal Backchannels in Conversational Speech: A Quantitative Study

Abstract

1. Introduction

Design of the Current Study

2. Materials and Annotations

2.1. GRASS

2.2. Turn-Taking Annotations: Points of Potential Completion (PCOMP)

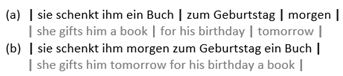

2.2.1. Identifying PCOMPs

“We judged an utterance to be grammatically complete if, in its sequential context, it could be interpreted as a complete clause, i.e., with an overt or directly recoverable predicate, without considering intonation. In the category of grammatically complete utterances, we also included elliptical clauses and answers to questions. […] A grammatical completion point, then, is a point at which the speaker could have stopped and have produced a grammatically complete utterance, though not necessarily one that is accompanied by intonational or interactional completion.”

- Example 1

- Example 2

2.2.2. PCOMP Labels

2.2.3. Annotation Process

2.2.4. Validation of Annotations

3. Methods

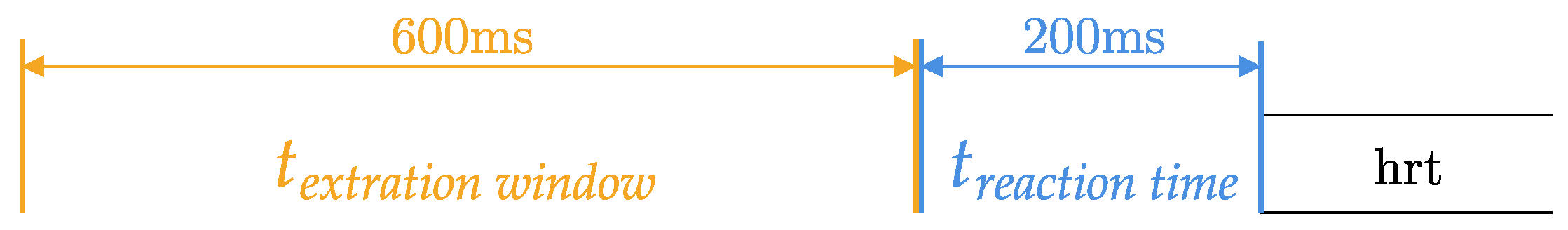

3.1. Label Extraction

- (a) The hearer response token and preHRT do not overlap, and the pause between the two utterances is greater than . The extraction window begins at the end of the preHRT and extends backwards.

- (b) The hearer response token occurs in the middle of the interlocutor’s turn, resulting in overlap. In this case, the extraction window starts before the hearer response token and spans backwards.

- (c) The hearer response token overlaps with a newly started PCOMP interval. If the onset of that interval occurred less than before the hearer response token, it is skipped. The extraction window then starts at the end of the preceding utterance and extends backwards.

- (d) The preHRT is shorter than the default extraction window. In this case, the extraction window is shortened to match the duration of the preHRT.

- (e) The hearer response token overlaps with multiple PCOMP annotations. The extraction window is defined as in (b). When multiple labels fall within the extraction window, the one with the greatest overlap, that is, more than of its duration within the extraction window, is selected.
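The five cases above can be sketched in a few lines of Python. This is a minimal illustration, not the extraction script used in the study. The pause threshold, the onset threshold in (c), the overlap fraction in (e), and the default window length are not given in the text here, so they appear as the hypothetical parameters `min_lead`, `min_fraction`, and `window_len`, and the values in the usage lines are placeholders. Anchoring the window exactly at the onset of the hearer response token in case (b), and measuring overlap as a share of each label's own duration in case (e), are likewise assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Interval:
    start: float  # seconds
    end: float    # seconds
    label: str = ""


def pick_pre_hrt(intervals: List[Interval], hrt_onset: float,
                 min_lead: float) -> Optional[Interval]:
    """Case (c): skip intervals that started less than `min_lead` before the HRT."""
    started = [iv for iv in sorted(intervals, key=lambda iv: iv.start)
               if iv.start < hrt_onset]
    while started and hrt_onset - started[-1].start < min_lead:
        started.pop()
    return started[-1] if started else None


def extraction_window(pre_hrt: Interval, hrt_onset: float,
                      window_len: float) -> Tuple[float, float]:
    """Return (start, end) of a window that extends backwards from its anchor."""
    # (a) no overlap: anchor at the end of the preHRT.
    # (b)/(e) overlap: anchor at the HRT onset (assumption, see lead-in).
    anchor = min(pre_hrt.end, hrt_onset)
    # (d) never reach back further than the start of the preHRT.
    start = max(pre_hrt.start, anchor - window_len)
    return start, anchor


def select_label(intervals: List[Interval], window: Tuple[float, float],
                 min_fraction: float) -> Optional[str]:
    """Case (e): label with the largest share of its own duration in the window.

    Assumes every interval has a positive duration.
    """
    w_start, w_end = window
    best_label, best_share = None, 0.0
    for iv in intervals:
        inside = max(0.0, min(iv.end, w_end) - max(iv.start, w_start))
        share = inside / (iv.end - iv.start)
        if share > min_fraction and share > best_share:
            best_label, best_share = iv.label, share
    return best_label


# Toy annotation tier; all numeric settings below are placeholders.
pcomps = [Interval(0.0, 1.2, "hold"), Interval(1.2, 2.0, "continuing")]
pre = pick_pre_hrt(pcomps, hrt_onset=2.3, min_lead=0.2)
window = extraction_window(pre, hrt_onset=2.3, window_len=1.0)
label = select_label(pcomps, window, min_fraction=0.5)
```

In the toy tier, the second interval is shorter than the placeholder window length, so the window is shortened as in case (d).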

3.1.1. Combining of Labels

3.1.2. Categorical Factors

3.2. Acoustic Feature Extraction

3.2.1. Durational Features

3.2.2. F0 Features

3.2.3. Intensity Features

3.2.4. Articulation Rate Features

3.3. Data Cleaning and Filtering

3.4. Statistical Methods

3.4.1. Conditional Inference Trees

3.4.2. Feature Importance

3.4.3. Linear Mixed-Effects Regression Models

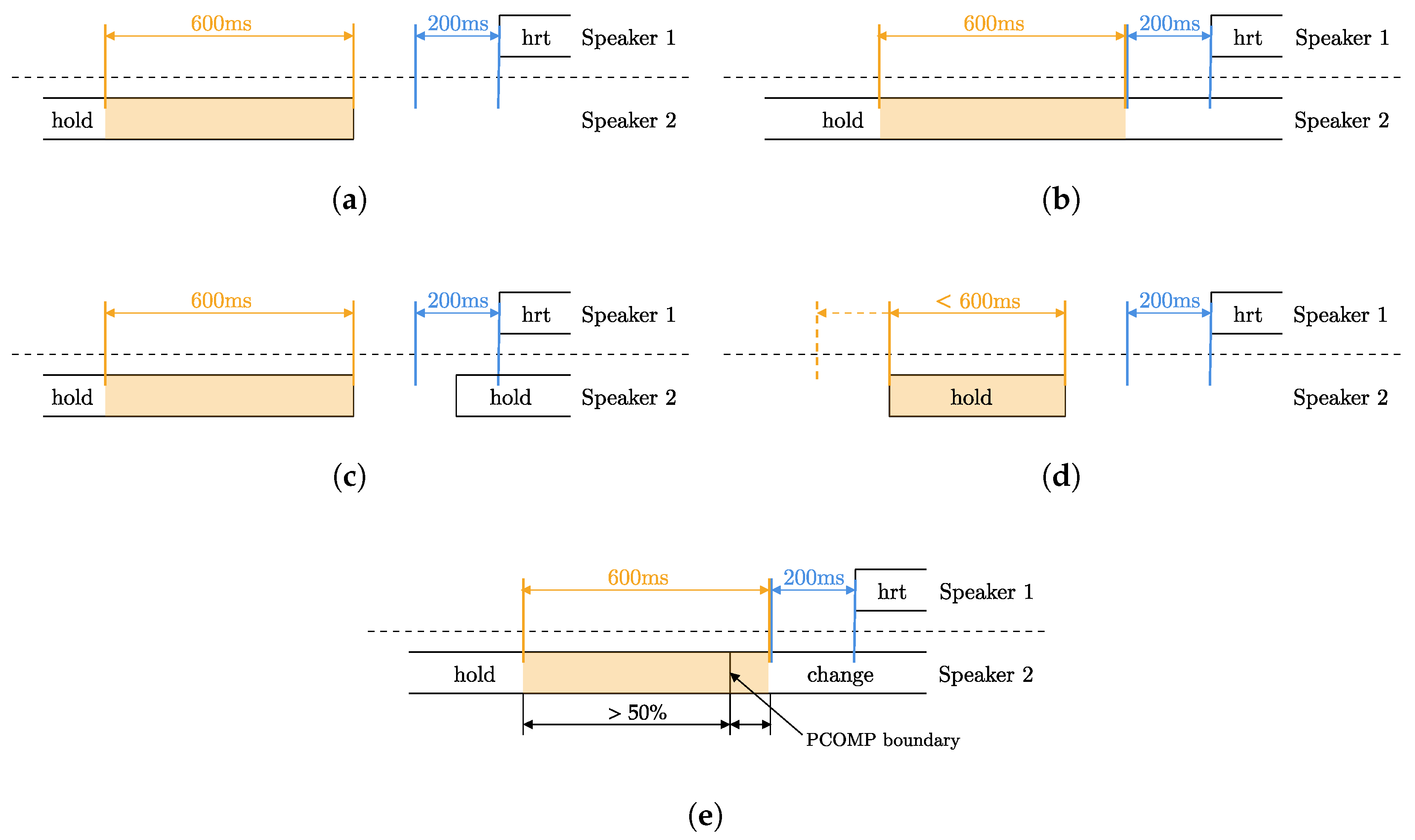

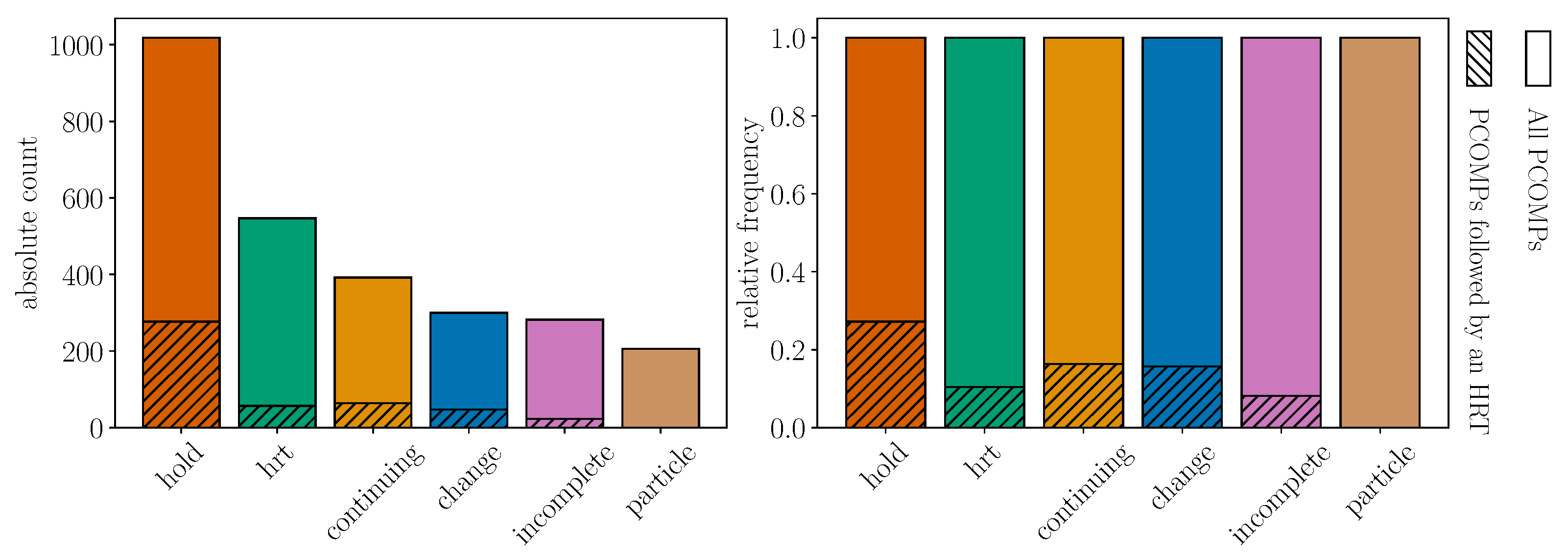

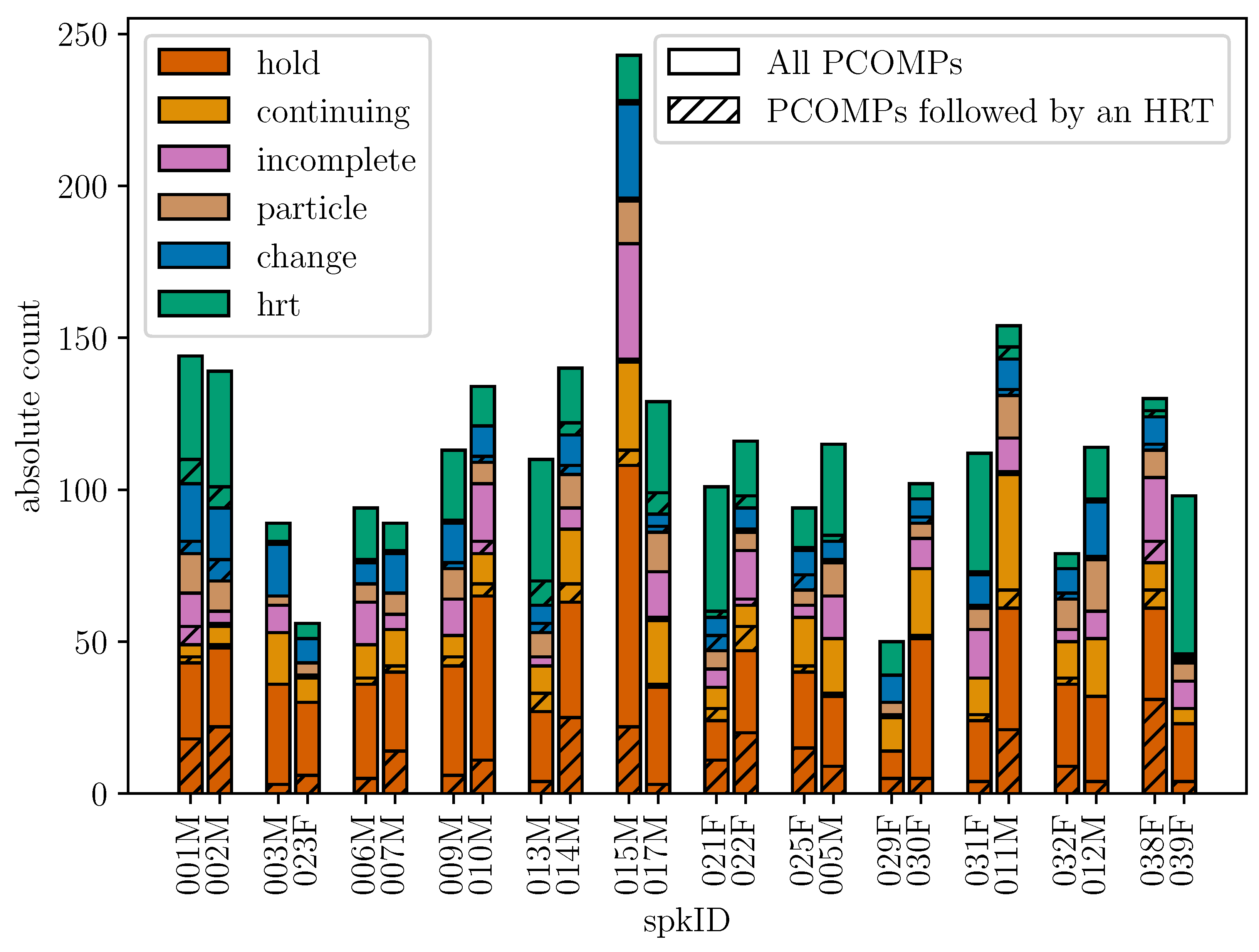

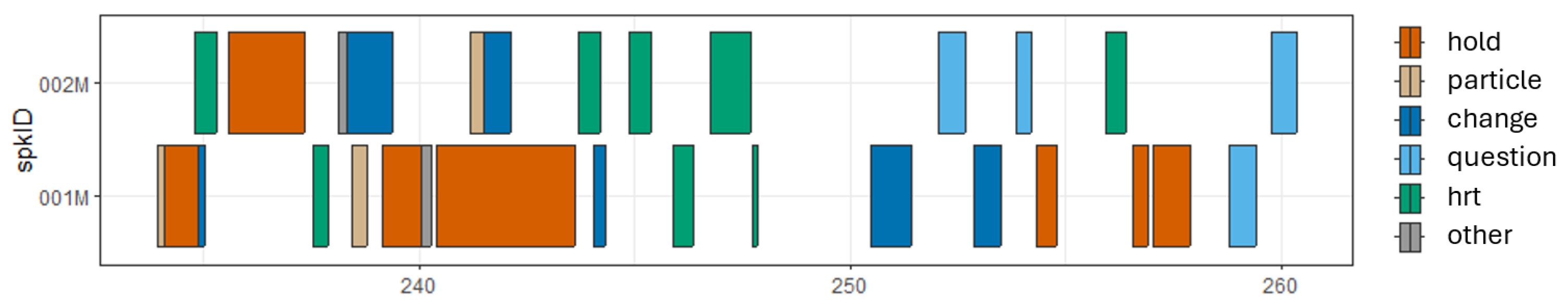

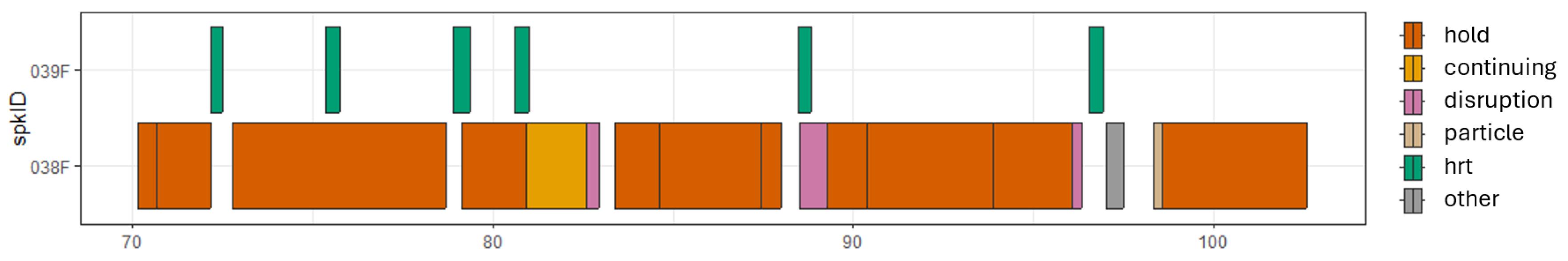

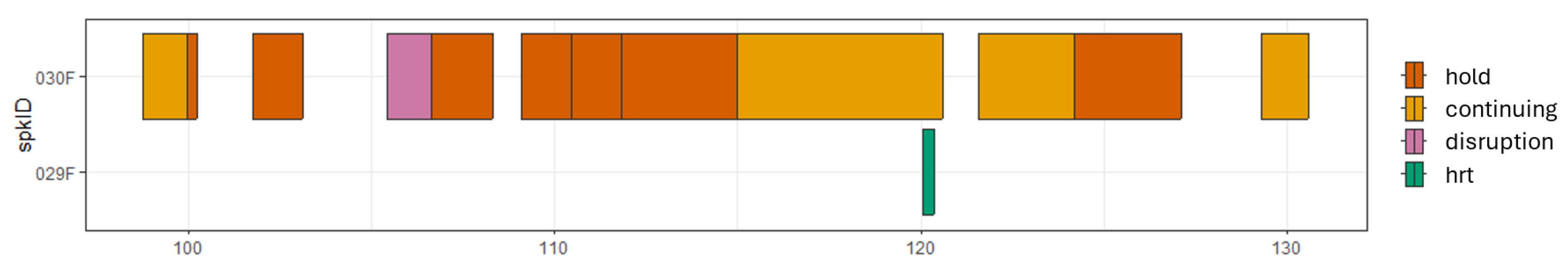

4. How PCOMP Annotations Capture Conversational Dynamics and Backchanneling Behavior

5. Acoustic Analysis of the Interlocutor’s Speech Preceding Hearer Response Tokens

5.1. How Turn-Taking Function and Prosody Affect Whether a Hearer Response Token Occurs or Not

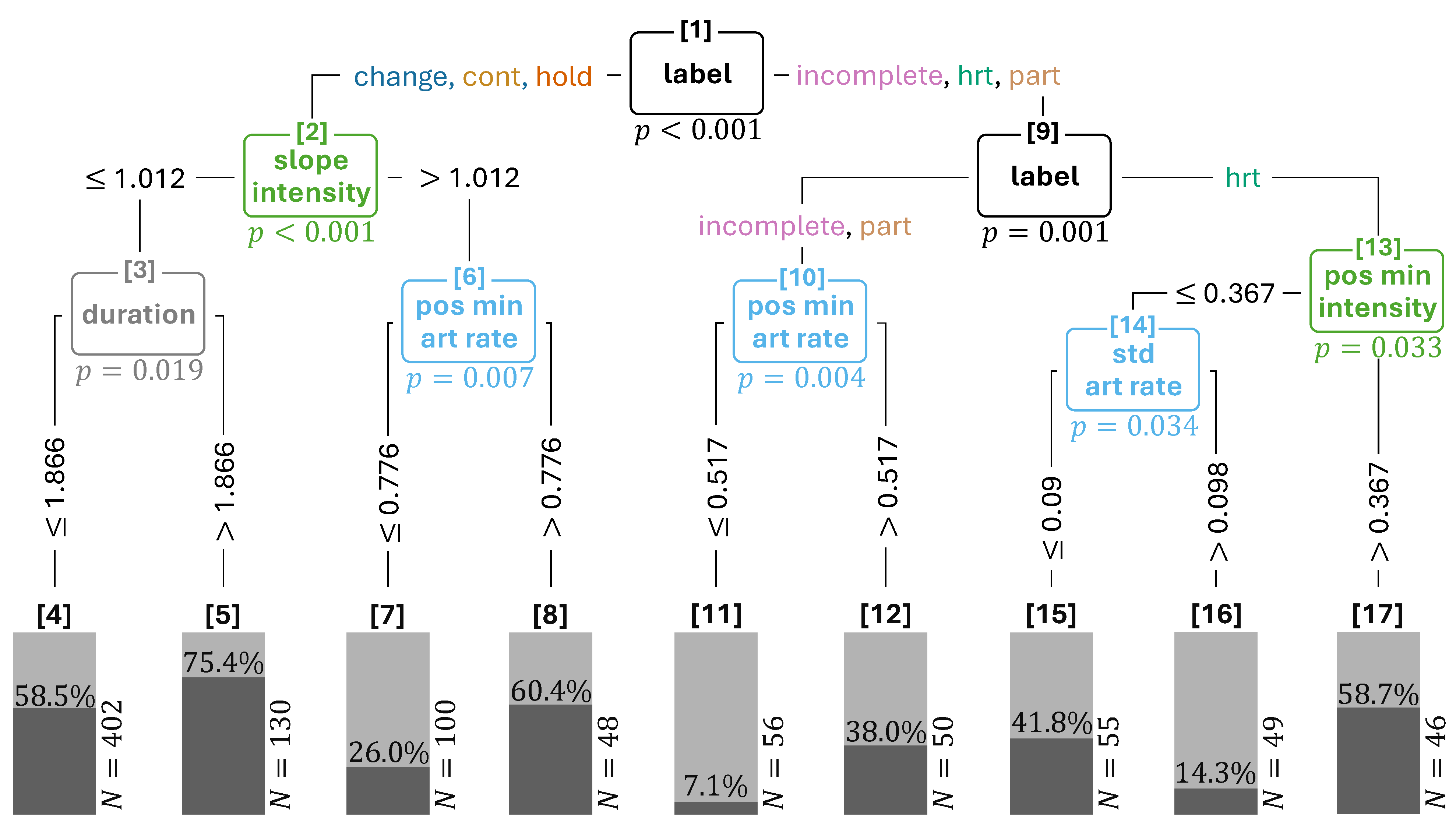

- The tree is not very deep (only three layers of splits), which can be expected given our relatively small number of observations (total N = 936). The resulting buckets contain between 46 and 402 tokens, showing that some of the trends cover a much larger group of tokens than others.

- Of all features that we entered into the tree (eight F0-related, eight intensity-related, ten durational, and the PCOMP label), only six produced significant splits.

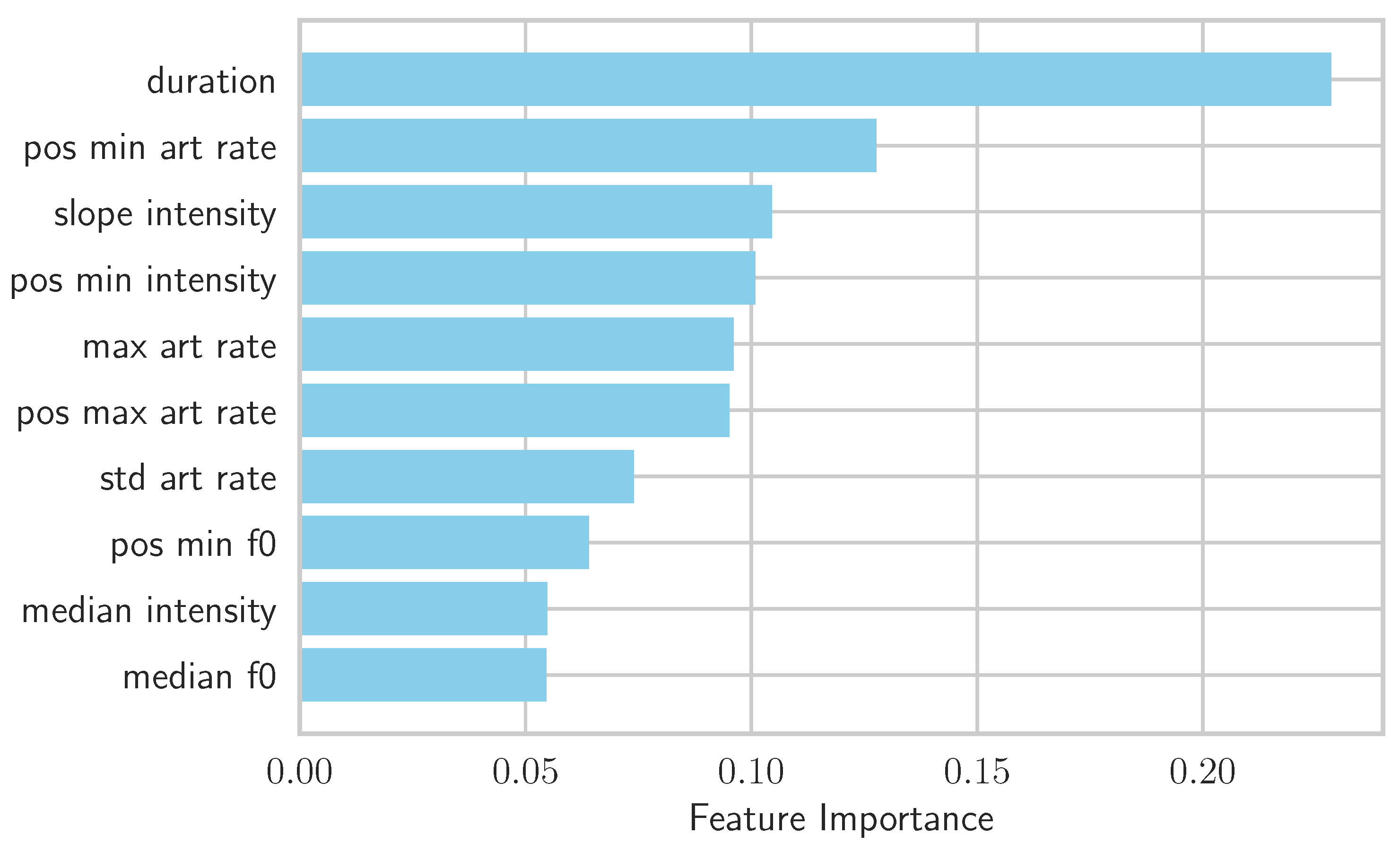

- The highest ranking feature is the PCOMP label (nodes 1 and 9), followed by two intensity-related features (nodes 2 and 13, indicated in green), and the durational features (nodes 3, 6, 10 and 14, indicated in gray and blue).

- None of the F0-related features contributed significantly to describing the variation seen in the data, i.e., to distinguishing whether a PCOMP segment was followed by a hearer response token or not.
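Why some features produce splits and others do not follows from how conditional inference trees select variables: at each node, the association between every candidate feature and the response is tested, and the node is only split if the null hypothesis of independence can be rejected (Hothorn et al., 2006). The sketch below illustrates that idea with a simple Monte Carlo permutation test on a difference in group means. It is not the ctree implementation, which uses its own test statistics, and the function name, the toy values, and the choice of statistic are assumptions made purely for illustration.

```python
import random
from statistics import mean


def permutation_p_value(feature, followed_by_hrt, n_perm=2000, seed=0):
    """Two-sided Monte Carlo permutation test for a difference in group means."""
    rng = random.Random(seed)

    def abs_mean_diff(labels):
        with_hrt = [x for x, y in zip(feature, labels) if y]
        without = [x for x, y in zip(feature, labels) if not y]
        return abs(mean(with_hrt) - mean(without))

    observed = abs_mean_diff(followed_by_hrt)
    labels = list(followed_by_hrt)
    hits = 0
    for _ in range(n_perm):
        # Shuffling keeps the group sizes fixed and breaks any real association.
        rng.shuffle(labels)
        if abs_mean_diff(labels) >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly positive.
    return (hits + 1) / (n_perm + 1)


# Invented toy values (not corpus data): segment durations in seconds and
# whether each segment was followed by a hearer response token.
toy_duration = [0.4, 0.5, 0.6, 0.7, 1.8, 1.9, 2.0, 2.2]
toy_followed = [False, False, False, False, True, True, True, True]
p_value = permutation_p_value(toy_duration, toy_followed)
```

A feature whose p-value stays above the significance threshold at every node never appears in the tree, which is the situation described above for the F0-related features.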

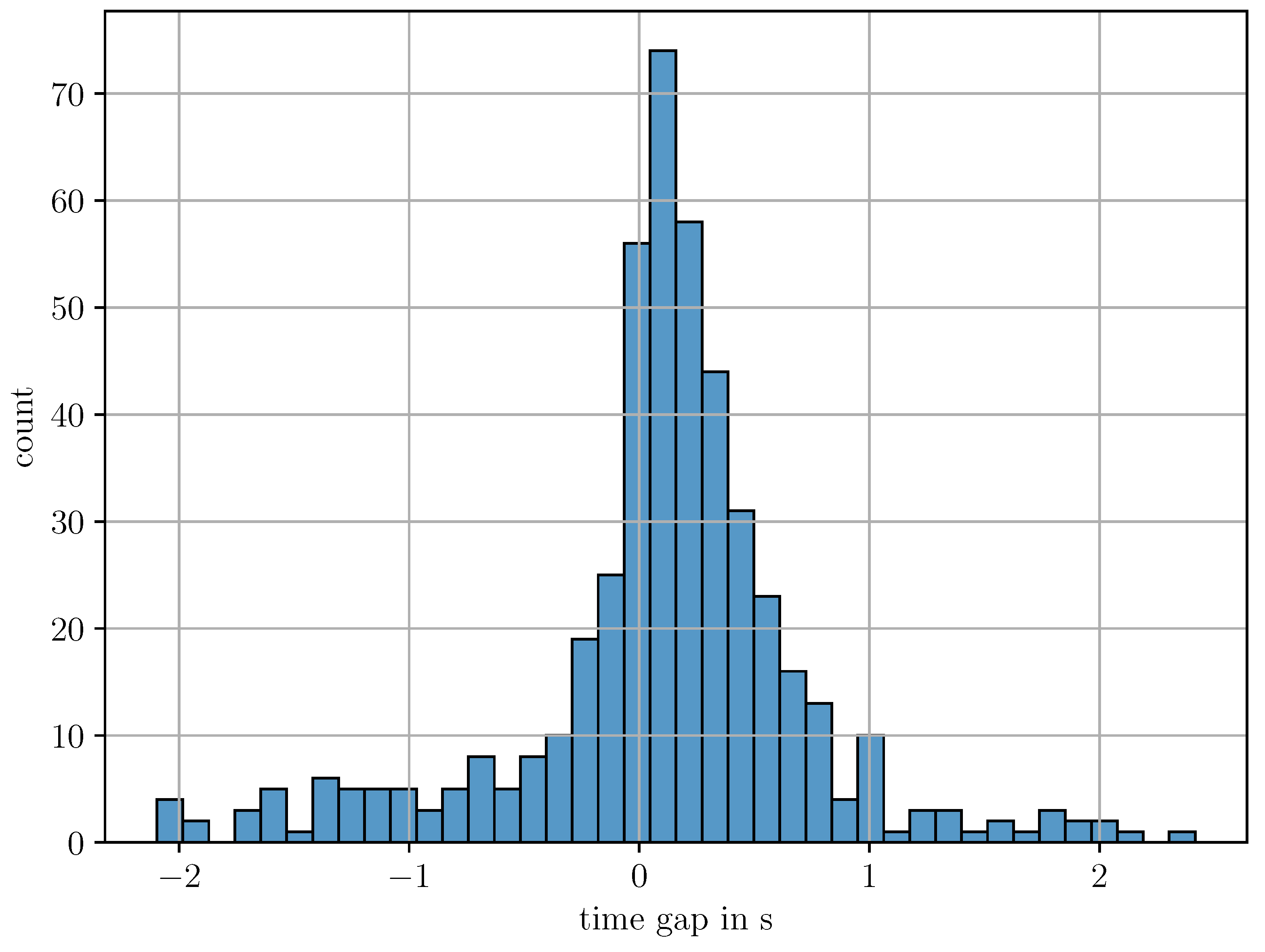

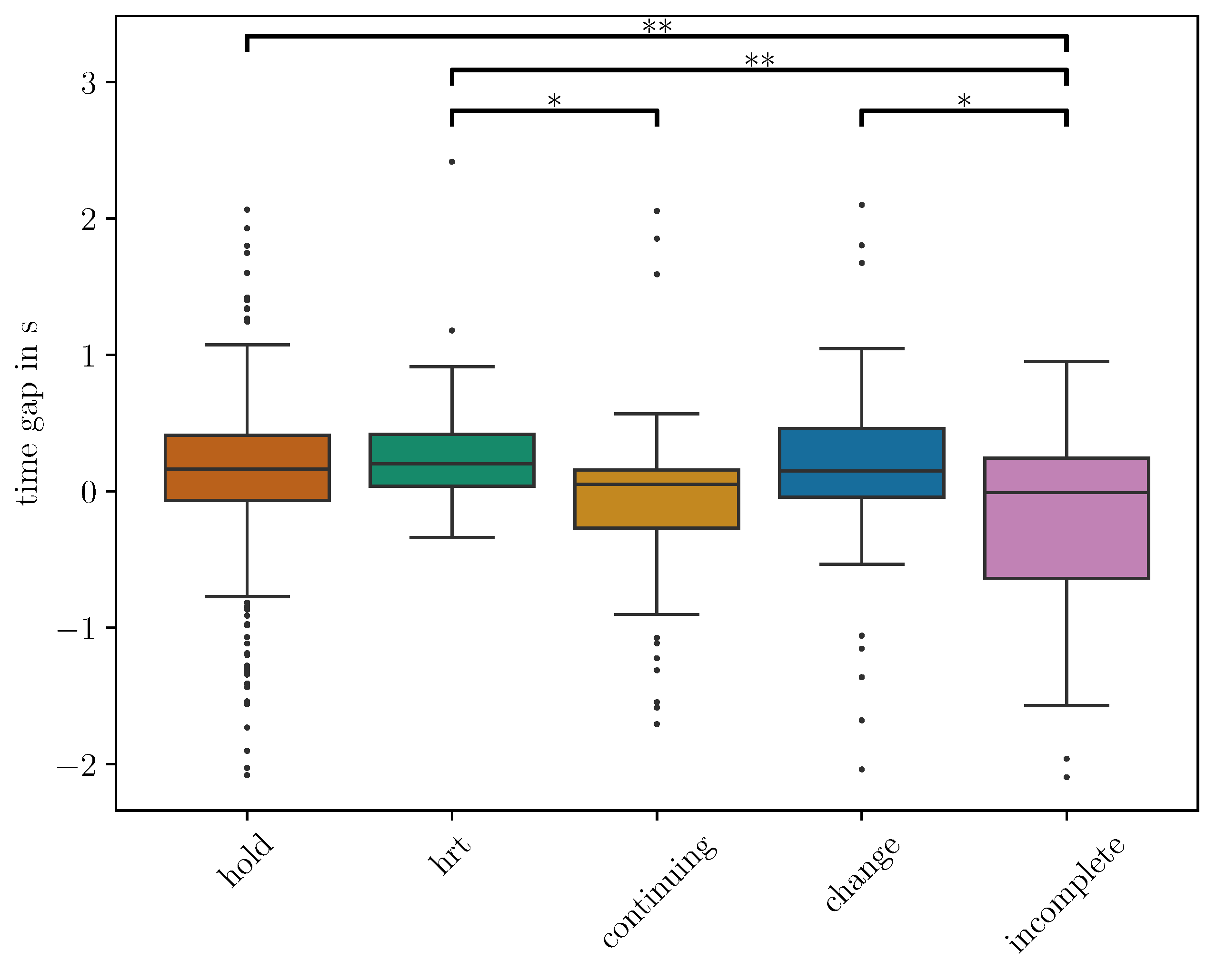

5.2. How Turn-Taking Function and Prosody Affect Hearer Response Token Timing

6. General Discussion

7. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| F0 | Fundamental Frequency |

| preHRT | Pre Hearer Response Token |

| noHRT | Non-Hearer Response Token |

| PCOMP | (point of) Potential Completion |

| CA | Conversation Analysis |

| TCU | Turn Construction Unit |

| CIT | Conditional Inference Tree |

| LMER | Linear Mixed-Effects Regression Model |

| GBC | Gradient Boosting Classifier |

| AIC | Akaike Information Criterion |

Appendix A

| Predictor | Estimate | Std. Error | t-Value | p-Value |

|---|---|---|---|---|

| Intercept | 0.10 | 0.11 | 0.88 | 0.38 |

| std_art_rate | 1.49 | 0.39 | 3.78 | <0.001 |

| duration | −0.11 | 0.02 | −4.53 | <0.001 |

| slope_intensity | −0.02 | 0.02 | −0.93 | 0.35 |

| label (continuing) | −0.12 | 0.12 | −1.01 | 0.31 |

| label (incomplete) | −0.37 | 0.16 | −2.41 | 0.02 |

| label (hold) | −0.03 | 0.10 | −0.29 | 0.78 |

| label (hrt) | 0.11 | 0.12 | 0.93 | 0.35 |

| duration:slope_intensity | 0.04 | 0.01 | 3.25 | <0.01 |

| Predictor | Estimate | Std. Error | t-Value | p-Value |

|---|---|---|---|---|

| Intercept | −0.02 | 0.10 | −0.16 | 0.87 |

| std_art_rate | 1.49 | 0.39 | 3.78 | <0.001 |

| duration | −0.11 | 0.02 | −4.53 | <0.001 |

| slope_intensity | −0.02 | 0.02 | −0.93 | 0.35 |

| label (change) | 0.12 | 0.12 | 1.01 | 0.31 |

| label (incomplete) | −0.26 | 0.15 | −1.74 | 0.08 |

| label (hold) | 0.09 | 0.08 | 1.08 | 0.28 |

| label (hrt) | 0.23 | 0.12 | 2.00 | 0.05 |

| duration:slope_intensity | 0.04 | 0.01 | 3.25 | <0.01 |

| Predictor | Estimate | Std. Error | t-Value | p-Value |

|---|---|---|---|---|

| Intercept | 0.21 | 0.10 | 2.23 | 0.03 |

| std_art_rate | 1.49 | 0.39 | 3.78 | <0.001 |

| duration | −0.11 | 0.02 | −4.53 | <0.001 |

| slope_intensity | −0.02 | 0.02 | −0.93 | 0.35 |

| label (change) | −0.11 | 0.12 | −0.93 | 0.35 |

| label (continuing) | −0.23 | 0.12 | −2.00 | 0.05 |

| label (incomplete) | −0.49 | 0.15 | −3.14 | <0.01 |

| label (hold) | −0.14 | 0.10 | −1.48 | 0.14 |

| duration:slope_intensity | 0.04 | 0.01 | 3.25 | <0.01 |

| Predictor | Estimate | Std. Error | t-Value | p-Value |

|---|---|---|---|---|

| Intercept | 0.07 | 0.09 | 0.85 | 0.40 |

| std_art_rate | 1.49 | 0.39 | 3.78 | <0.001 |

| duration | −0.11 | 0.02 | −4.53 | <0.001 |

| slope_intensity | −0.02 | 0.02 | −0.93 | 0.35 |

| label (change) | 0.03 | 0.10 | 0.29 | 0.78 |

| label (continuing) | −0.09 | 0.08 | −1.08 | 0.28 |

| label (incomplete) | −0.35 | 0.13 | −2.63 | <0.01 |

| label (hrt) | 0.14 | 0.10 | 1.48 | 0.14 |

| duration:slope_intensity | 0.04 | 0.01 | 3.25 | <0.01 |
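The tables above appear to report the same model with different reference levels for the PCOMP label. The tables carry no captions here, so the reference level of each is inferred from the label that is absent from it, which is an assumption. Under treatment (dummy) coding, and because the label does not interact with the other predictors, the estimates are related as follows, where $\beta_0^{(r)}$ denotes the intercept and $\beta_\ell^{(r)}$ the coefficient of label $\ell$ when $r$ is the reference level (this notation is introduced here for illustration):

```latex
\begin{align}
  \beta_0^{(s)}    &= \beta_0^{(r)} + \beta_s^{(r)}, \\
  \beta_\ell^{(s)} &= \beta_\ell^{(r)} - \beta_s^{(r)}, \qquad \ell \neq s.
\end{align}
% Worked check with r = change (first table) and s = continuing (second table):
\begin{align}
  \beta_0^{(\text{continuing})}            &= 0.10 + (-0.12) = -0.02, \\
  \beta_{\text{hold}}^{(\text{continuing})} &= -0.03 - (-0.12) = 0.09, \\
  \beta_{\text{hrt}}^{(\text{continuing})}  &= 0.11 - (-0.12) = 0.23.
\end{align}
```

The estimates for std_art_rate, duration, slope_intensity, and their interaction are unaffected by the choice of reference level, which is why they are identical across the tables. Differences of 0.01 between a computed and a reported value, as for the label incomplete, are consistent with rounding.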

| 1 | The analyses presented here build on an earlier qualitative analysis by the first author (Paierl, 2024) in the same corpus. The paper also connects to our ongoing work on real-time prediction of backchannels for human–robot interaction (Paierl et al., 2025). |

| 2 | For more information, see https://www.spsc.tugraz.at/databases-and-tools/grass-the-graz-corpus-of-read-and-spontaneous-speech.html (accessed on 30 July 2025). |

| 3 | There is also one backward-looking label indicating when one speaker collaboratively finishes another speaker’s utterance. These (rare) cases always received a double-label with the respective forward-looking label, but are not relevant for the present investigation and are thus not described further here. For a description of this label, see Kelterer and Schuppler (2025, Section 4.2.2). |

| 4 | These units overlap to some degree with the concept of Turn Construction Units (TCU) (Sacks et al., 1974; Selting, 2000), not, however, taking into account prosody. |

| 5 | A detailed description of these labels illustrated by examples is provided in Kelterer and Schuppler (2025). |

| 6 | The annotation process and the training is documented in detail in Kelterer and Schuppler (2025). |

| 7 | Even though the second author did not annotate any of the other data, she also segmented the same for evaluation. The inter-annotator agreement for boundaries between this and the other two annotations by the primary annotator team is 0.93 and 0.95. |

| 8 | The category incomplete in Figure 3 comprises turn-holding as well as turn-yielding annotations (cf. Section 3.3). The main criterion for this group, which merged two different annotations, is that no PCOMP point is actually reached because speakers interrupted themselves. |

| 9 | We are aware that this is an oversimplification of the concept of TCUs (cf. Selting, 2000), since we neither considered prosody nor multi-unit turn projection for our concept of PCOMP. |

| 10 | |

| 11 | Half of the conversations in GRASS were also filmed, which is necessary for multi-modal analyses. So far, no multi-modal annotations exist of these video recordings. |

| 12 | For more information, see Kelterer and Schuppler (2025). |

References

- Baayen, R. H., Davidson, D. J., & Bates, D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language, 59(4), 390–412. [Google Scholar] [CrossRef]

- Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. [Google Scholar] [CrossRef]

- Bavelas, J. B., Coates, L., & Johnson, T. (2002). Listener responses as a collaborative process: The role of gaze. Journal of Communication, 52(3), 566–580. [Google Scholar] [CrossRef]

- Beňuš, Š., Gravano, A., & Hirschberg, J. (2011). Pragmatic aspects of temporal accommodation in turn-taking. Journal of Pragmatics, 43(12), 3001–3027. [Google Scholar] [CrossRef]

- Berger, E., Schuppler, B., Pernkopf, F., & Hagmüller, M. (2023, September 20–22). Single channel source separation in the wild—Conversational speech in realistic environments. Speech Communication, 15th ITG Conference (pp. 96–100), Aachen, Germany. [Google Scholar] [CrossRef]

- Blomsma, P., Vaitonyté, J., Skantze, G., & Swerts, M. (2024). Backchannel behavior is idiosyncratic. Language and Cognition, 16(4), 1158–1181. [Google Scholar] [CrossRef]

- Boersma, P., & Weenink, D. (2001). PRAAT, a system for doing phonetics by computer. Glot International, 5(9), 341–345. [Google Scholar]

- Bögels, S., & Torreira, F. (2021). Turn-end estimation in conversational turn-taking: The roles of context and prosody. Discourse Processes, 58(10), 903–924. [Google Scholar] [CrossRef]

- Bravo Cladera, N. (2010). Backchannels as a realization of interaction: Some uses of mm and mhm in Spanish. In Dialogue in Spanish: Studies in functions and contexts (pp. 137–155). John Benjamins Publishing Company. [Google Scholar]

- Breiman, L. (2001). Random Forests. Machine Learning, 45(1), 5–32. [Google Scholar] [CrossRef]

- Clancy, B., & McCarthy, M. (2014). Co-constructed turn-taking. In Corpus Pragmatics: A Handbook (pp. 430–453). Cambridge University Press. [Google Scholar] [CrossRef]

- Clancy, P. M., Thompson, S. A., Suzuki, R., & Tao, H. (1996). The conversational use of reactive tokens in English, Japanese, and Mandarin. Journal of Pragmatics, 26(3), 355–387. [Google Scholar] [CrossRef]

- Coates, J., & Sutton-Spence, R. (2001). Turn-taking patterns in deaf conversation. Journal of Sociolinguistics, 5(4), 507–529. [Google Scholar] [CrossRef]

- Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2019, June 2–7). BERT: Pre-training of deep bidirectional transformers for language understanding. NAACL: Human Language Technologies (pp. 4171–4186), Minneapolis, MN, USA. [Google Scholar] [CrossRef]

- Ekstedt, E., & Skantze, G. (2022, September 18–22). Voice activity projection: Self-supervised learning of turn-taking events. Interspeech (pp. 5190–5194), Incheon, Republic of Korea. [Google Scholar] [CrossRef]

- Ernestus, M. (2000). Voice assimilation and segment reduction in casual Dutch: A corpus-based study of the phonology-phonetics interface [Ph.D. thesis, Netherlands Graduate School of Linguistics, Vrije Universiteit te Amsterdam]. Available online: https://research.vu.nl/ws/portalfiles/portal/42168786/complete%20dissertation.pdf (accessed on 30 July 2025).

- Ernestus, M., Kočková-Amortová, L., & Pollak, P. (2014, May 26–31). The Nijmegen corpus of casual Czech. LREC (pp. 365–370), Reykjavik, Iceland. Available online: https://hdl.handle.net/11858/00-001M-0000-0018-66A2-3 (accessed on 30 July 2025).

- Friedman, J. H. (2001). Greedy function approximation: A gradient boosting machine. Annals of Statistics, 29(5), 1189–1232. Available online: https://www.jstor.org/stable/2699986 (accessed on 30 July 2025). [CrossRef]

- Fry, D. (1975). Simple reaction-times to speech and non-speech stimuli. Cortex, 11(4), 355–360. [Google Scholar] [CrossRef]

- Gardner, R. (1998). Between speaking and listening: The vocalisation of understandings. Applied Linguistics, 19(2), 204–224. [Google Scholar] [CrossRef]

- Geiger, B. C., & Schuppler, B. (2023, August 20–24). Exploring graph theory methods for the analysis of pronunciation variation in spontaneous speech. Interspeech (pp. 596–600), Dublin, Ireland. [Google Scholar] [CrossRef]

- Gravano, A., & Hirschberg, J. (2011). Turn-taking cues in task-oriented dialogue. Computer Speech & Language, 25(3), 601–634. [Google Scholar] [CrossRef]

- Harris, C. R., Millman, K. J., van der Walt, S. J., Gommers, R., Virtanen, P., Cournapeau, D., Wieser, E., Taylor, J., Berg, S., Smith, N. J., Kern, R., Picus, M., Hoyer, S., van Kerkwijk, M. H., Brett, M., Haldane, A., Fernández del Río, J., Wiebe, M., Peterson, P., … Oliphant, T. E. (2020). Array programming with NumPy. Nature, 585(7825), 357–362. [Google Scholar] [CrossRef]

- Heinz, B. (2003). Backchannel responses as strategic responses in bilingual speakers’ conversations. Journal of Pragmatics, 35(7), 1113–1142. [Google Scholar] [CrossRef]

- Hirschberg, J., & Gravano, A. (2009, September 6–10). Backchannel-inviting cues in task-oriented dialogue. Interspeech (pp. 1019–1022), Brighton, UK. [Google Scholar] [CrossRef]

- Hjalmarsson, A. (2011). The additive effect of turn-taking cues in human and synthetic voice. Speech Communication, 53(1), 23–35. [Google Scholar] [CrossRef]

- Hothorn, T., Hornik, K., & Zeileis, A. (2006). Unbiased recursive partitioning: A conditional inference framework. Journal of Computational and Graphical Statistics, 15(3), 651–674. [Google Scholar] [CrossRef]

- Hothorn, T., Hornik, K., & Zeileis, A. (2015). ctree: Conditional Inference Trees. The Comprehensive R Archive Network, 8, 1–34. [Google Scholar]

- Inoue, K., Lala, D., Skantze, G., & Kawahara, T. (2025, April 29–May 4). Yeah, Un, Oh: Continuous and real-time backchannel prediction with fine-tuning of Voice Activity Projection. NAACL: Human Language Technologies (pp. 7171–7181), Albuquerque, NM, USA. [Google Scholar] [CrossRef]

- Jadoul, Y., Thompson, B., & de Boer, B. (2018). Introducing parselmouth: A Python interface to Praat. Journal of Phonetics, 71, 1–15. [Google Scholar] [CrossRef]

- Kelterer, A., & Schuppler, B. (2025). Turn-taking annotation for quantitative and qualitative analyses of conversation. arXiv, arXiv:2504.09980. [Google Scholar] [CrossRef]

- Kelterer, A., Zellers, M., & Schuppler, B. (2023, August 20–24). (Dis)agreement and preference structure are reflected in matching along distinct acoustic-prosodic features. Interspeech (pp. 4768–4772), Dublin, Ireland. [Google Scholar] [CrossRef]

- Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33(1), 159–174. [Google Scholar] [CrossRef]

- Levshina, N. (2021). Conditional inference trees and random forests. In M. Paquot, & S. T. Gries (Eds.), A practical handbook of corpus linguistics (pp. 611–643). Springer. [Google Scholar] [CrossRef]

- Linke, J., Wepner, S., Kubin, G., & Schuppler, B. (2023). Using Kaldi for automatic speech recognition of conversational Austrian German. arXiv, arXiv:2301.06475. [Google Scholar] [CrossRef]

- Local, J., & Walker, G. (2012). How phonetic features project more talk. Journal of the International Phonetic Association, 42(3), 255–280. [Google Scholar] [CrossRef]

- Maynard, S. K. (1986). On back-channel behavior in Japanese and English casual conversation. Linguistics, 24(6), 1079–1108. [Google Scholar] [CrossRef]

- Maynard, S. K. (1990). Conversation management in contrast: Listener response in Japanese and American English. Journal of Pragmatics, 14(3), 397–412. [Google Scholar] [CrossRef]

- McCarthy, M. (2003). Talking back: “Small” interactional response tokens in everyday conversation. Research on Language and Social Interaction, 36(1), 33–63. [Google Scholar] [CrossRef]

- Noguchi, H., & Den, Y. (1998, November 30–December 4). Prosody-based detection of the context of backchannel responses. ICSLP, Sydney, Australia. [Google Scholar] [CrossRef]

- Ogden, R. (2012). The phonetics of talk in interaction—Introduction to the special issue. Language and Speech, 55(1), 3–11. [Google Scholar] [CrossRef]

- Paierl, M. (2024). Modeling backchannels for human-robot interaction [Master’s thesis, Graz University of Technology]. [Google Scholar] [CrossRef]

- Paierl, M., Schuppler, B., & Hagmüller, M. (2025, August 17–21). Continuous prediction of backchannel timing for human-robot interaction. Interspeech, Rotterdam, The Netherlands. (Accepted for publication). [Google Scholar]

- Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., & Duchesnay, E. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12, 2825–2830. [Google Scholar]

- Poppe, R., Truong, K. P., & Heylen, D. (2011). Backchannels: Quantity, type and timing matters. In H. H. Vilhjálmsson, S. Kopp, S. Marsella, & K. R. Thórisson (Eds.), Intelligent virtual agents (pp. 228–239). Springer. [Google Scholar] [CrossRef]

- Sacks, H., Schegloff, E., & Jefferson, G. (1974). A simplest systematics for the organisation of turn-taking for conversation. Language, 50(4), 696–735. [Google Scholar] [CrossRef]

- Sbranna, S., Möking, E., Wehrle, S., & Grice, M. (2022, May 23–26). Backchannelling across languages: Rate, lexical choice and intonation in L1 Italian, L1 German and L2 German. Speech Prosody (pp. 734–738), Lisbon, Portugal. [Google Scholar] [CrossRef]

- Schegloff, E. A. (1982). Discourse as an interactional achievement: Some uses of “uh huh” and other things that come between sentences. In D. Tannen (Ed.), Analyzing discourse: Text and talk (pp. 71–93). Georgetown University Press. [Google Scholar]

- Schuppler, B., Hagmüller, M., Morales-Cordovilla, J. A., & Pessentheiner, H. (2014, May 26–31). GRASS: The Graz corpus of Read And Spontaneous Speech. LREC (pp. 1465–1470), Reykjavik, Iceland. [Google Scholar]

- Schuppler, B., Hagmüller, M., & Zahrer, A. (2017). A corpus of read and conversational Austrian German. Speech Communication, 94, 62–74. [Google Scholar] [CrossRef]

- Schuppler, B., & Kelterer, A. (2021, October 4–5). Developing an annotation system for communicative functions for a cross-layer ASR system. First Workshop on Integrating Perspectives on Discourse Annotation (pp. 14–18), Tübingen, Germany. Available online: https://aclanthology.org/2021.discann-1.3/ (accessed on 30 July 2025).

- Selting, M. (2000). The construction of units in conversational talk. Language in Society, 29(4), 477–517. [Google Scholar] [CrossRef]

- Sikveland, R. O. (2012). Negotiating towards a next turn: Phonetic resources for ‘doing the same’. Language and Speech, 55(1), 77–98. [Google Scholar] [CrossRef]

- Stalnaker, R. (2002). Common ground. Linguistics and Philosophy, 25(5/6), 701–721. Available online: https://www.jstor.org/stable/25001871 (accessed on 30 July 2025). [CrossRef]

- Stubbe, M. (1998). Are you listening? Cultural influences on the use of supportive verbal feedback in conversation. Journal of Pragmatics, 29(3), 257–289. [Google Scholar] [CrossRef]

- Torreira, F., & Ernestus, M. (2012). Weakening of intervocalic /s/ in the Nijmegen corpus of casual Spanish. Phonetica, 69(3), 124–148. [Google Scholar] [CrossRef]

- Virtanen, P., Gommers, R., Oliphant, T. E., Haberland, M., Reddy, T., Cournapeau, D., Burovski, E., Peterson, P., Weckesser, W., Bright, J., van der Walt, S. J., Brett, M., Wilson, J., Millman, K. J., Mayorov, N., Nelson, A. R. J., Jones, E., Kern, R., Larson, E., … van Mulbregt, P. (2020). SciPy 1.0: Fundamental algorithms for scientific computing in Python. Nature Methods, 17, 261–272. [Google Scholar] [CrossRef]

- Vogel, A. P., Maruff, P., Snyder, P. J., & Mundt, J. C. (2009). Standardization of pitch-range settings in voice acoustic analysis. Behavior Research Methods, 41(2), 318–324. [Google Scholar] [CrossRef]

- Ward, N., & Tsukahara, W. (2000). Prosodic features which cue back-channel responses in English and Japanese. Journal of Pragmatics, 32(8), 1177–1207. [Google Scholar] [CrossRef]

- White, S. (1989). Backchannels across cultures: A study of Americans and Japanese. Language in Society, 18(1), 59–76. [Google Scholar] [CrossRef]

- Yngve, V. H. (1970, April 16–18). On getting a word in edgewise. Chicago Linguistic Society (pp. 567–578), Chicago, IL, USA. [Google Scholar]

- Zellers, M. (2017). Prosodic variation and segmental reduction and their roles in cuing turn transition in Swedish. Language and Speech, 60(3), 454–478. [Google Scholar] [CrossRef] [PubMed]

- Zellers, M., Gubian, M., & Post, B. (2010, September 26–30). Redescribing intonational categories with Functional Data Analysis. Interspeech (pp. 1141–1144), Makuhari, Japan. [Google Scholar]

| PCOMP Label | Definition |

|---|---|

| hold | same speaker continues speaking after the PCOMP by starting a new sentence |

| continuing | same speaker continues speaking after the PCOMP by continuing the same sentence with the addition of increments |

| change | other speaker continues speaking after the current speaker reaches a PCOMP |

| particle | discourse particle uttered after a PCOMP or at the beginning of a turn |

| question-particle | question particle (tag question) that transforms a declarative utterance into a question or is used to elicit some kind of listener feedback (e.g., a backchannel) |

| question | syntactic and/or prosodic question |

| hesitation | hesitation particle uttered after a PCOMP or at the beginning of a turn |

| hrt | hearer response token; usually short backchannels, continuers, acknowledgments, etc., that do not contain a (new) proposition of their own and do not take up the turn (though a new turn may be started by the interlocutor who uttered the hrt if the previous speaker does not continue their turn after the hrt) |

| disruption | current speaker does not reach a PCOMP, but interrupts themselves to rephrase and start a new sentence |

| incomplete | current speaker does not reach a PCOMP before the other speaker takes up the turn |

| label_label | combination of two of the labels above, e.g., when both interlocutors start speaking simultaneously after a pause |

| @ | indicates uncertainty about a label (cf. Section 2.2.3); may also co-occur with a combined label to indicate the uncertainty between two specific labels (cf. Section 2.2.4) |

| Factor/Feature | Type | Description |

|---|---|---|

| PCOMP label | categorical | functional label assigned to each preHRT, indicating its turn-taking role (e.g., hold, change, hrt) |

| Durational features | float | the total duration (in seconds) of the preHRT and the temporal gap (in seconds) between the end of the preHRT and the onset of the following hearer response token |

| F0 features | float | mean, median, standard deviation, slope, maximum (and its position), and minimum (and its position) of the fundamental frequency (F0) |

| Intensity features | float | mean, median, standard deviation, slope, maximum (and its position), and minimum (and its position) of the signal intensity |

| Articulation rate features | float | mean, median, standard deviation, slope, maximum (and its position), and minimum (and its position) of the articulation rate |
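The eight summary statistics listed for F0, intensity, and articulation rate can be computed from any sampled contour. The sketch below uses only the Python standard library. The function name `summarize_contour`, the use of the sample standard deviation, the least-squares definition of the slope, and the expression of positions as a fraction of the segment (0 at the start, 1 at the end) are assumptions, and the study's own extraction may define them differently.

```python
from statistics import mean, median, stdev


def summarize_contour(times, values):
    """Eight summary features of one contour sampled at `times` (in seconds).

    Expects at least two samples and increasing time stamps.
    """
    t_bar, v_bar = mean(times), mean(values)
    # Ordinary least-squares slope of value over time.
    sxx = sum((t - t_bar) ** 2 for t in times)
    sxy = sum((t - t_bar) * (v - v_bar) for t, v in zip(times, values))
    i_max = max(range(len(values)), key=values.__getitem__)
    i_min = min(range(len(values)), key=values.__getitem__)
    span = times[-1] - times[0]
    return {
        "mean": v_bar,
        "median": median(values),
        "sd": stdev(values),
        "slope": sxy / sxx,
        "max": values[i_max],
        "max_pos": (times[i_max] - times[0]) / span,
        "min": values[i_min],
        "min_pos": (times[i_min] - times[0]) / span,
    }


# Toy falling contour (invented values, e.g. F0 in Hz over two seconds).
toy_times = [0.0, 0.5, 1.0, 1.5, 2.0]
toy_values = [200.0, 190.0, 180.0, 170.0, 160.0]
features = summarize_contour(toy_times, toy_values)
```

Applying the same function to each of the three contours keeps the feature definitions identical across them.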

| PCOMP Label | noHRT PCOMPs | preHRT PCOMPs | Total |

|---|---|---|---|

| hold | 741 | 277 | 1018 |

| hrt | 490 | 57 | 547 |

| continuing | 328 | 64 | 392 |

| change | 253 | 47 | 300 |

| incomplete | 259 | 23 | 282 |

| particle | 206 | 0 | 206 |

| total | 2277 | 468 | 2745 |
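To compare labels of very different frequency, the counts can be converted into the share of PCOMPs of each label that are followed by a hearer response token. The short sketch below does this from the two count columns of the table above. The variable names and the notion of a per-label "rate" are introduced here for illustration only, and the row totals are recomputed rather than read from the table.

```python
# label: (noHRT PCOMPs, preHRT PCOMPs), copied from the table above
counts = {
    "hold": (741, 277),
    "hrt": (490, 57),
    "continuing": (328, 64),
    "change": (253, 47),
    "incomplete": (259, 23),
    "particle": (206, 0),
}


def pre_hrt_rate(no_hrt: int, pre_hrt: int) -> float:
    """Share of PCOMPs with this label that precede a hearer response token."""
    return pre_hrt / (no_hrt + pre_hrt)


rates = {label: pre_hrt_rate(*pair) for label, pair in counts.items()}
grand_total = sum(no_hrt + pre_hrt for no_hrt, pre_hrt in counts.values())

# Print the labels from the highest to the lowest rate.
for label, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{label:<12}{rate:6.1%}")
```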

| Accuracy | Recall | Precision | F1 |

|---|---|---|---|

| 0.6917 | 0.6983 | 0.6944 | 0.6934 |

| Predictor | Estimate | Std. Error | t-Value | p-Value |

|---|---|---|---|---|

| Intercept | −0.27 | 0.15 | −1.80 | 0.07 |

| std_art_rate | 1.49 | 0.39 | 3.78 | <0.001 |

| duration | −0.11 | 0.02 | −4.53 | <0.001 |

| slope_intensity | −0.02 | 0.02 | −0.93 | 0.35 |

| label (change) | 0.37 | 0.16 | 2.41 | 0.02 |

| label (continuing) | 0.26 | 0.15 | 1.74 | 0.08 |

| label (hold) | 0.35 | 0.13 | 2.63 | <0.01 |

| label (hrt) | 0.49 | 0.15 | 3.14 | <0.01 |

| duration:slope_intensity | 0.04 | 0.01 | 3.25 | <0.01 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Paierl, M.; Kelterer, A.; Schuppler, B. Distribution and Timing of Verbal Backchannels in Conversational Speech: A Quantitative Study. Languages 2025, 10, 194. https://doi.org/10.3390/languages10080194
