3.1. Acoustic Features at Phrase Boundaries

Number and duration of pauses. While reading the dictation, the speaker paused after all IPs and after 84% of ips (see Table 1). In the news readings, pauses followed 84% of IPs and 13% of ips. In spontaneous speech, pauses followed 89% of IPs and 29% of ips. In all recordings, some pauses also occurred within phrases; however, such interruptions were rare: 5% in the dictations, 3% in the news recordings, and 2% in the interview. These may be genuine phrase boundaries that were not marked during annotation because of very tight syntactic or semantic connections between the word groups.

The data were also evaluated statistically. To determine whether there was a significant relationship between phrase boundaries and the distribution of pauses, a chi-square test (McHugh, 2013) was conducted. The results showed that in all cases the relationship between phrase boundaries and pauses was statistically significant (p < 0.05). Although pauses followed only 13% of ips in the news recordings, the chi-square test still indicated a significant association, suggesting that pauses at these boundaries were not distributed randomly.
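The text does not specify the layout of the contingency table. A minimal sketch, assuming a 2×2 table that crosses position type (phrase boundary vs. phrase-internal word boundary) with pause presence (pause vs. no pause), shows how Pearson's chi-square statistic and its p-value for 1 degree of freedom can be computed with the Python standard library. The function name `chi_square_2x2` and the toy counts are illustrative only and are not the study's data.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 contingency table.

    Assumed (hypothetical) layout: rows = phrase boundary / phrase-internal
    word boundary, columns = pause / no pause. No Yates continuity correction.
    Returns (chi2, p_value) for 1 degree of freedom.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Toy counts for illustration only (not the study's data).
chi2, p = chi_square_2x2([[20, 10], [10, 20]])
print(f"chi2 = {chi2:.3f}, p = {p:.4f}")
```

A statistics package would normally be used in practice; the closed form for the p-value holds only for 1 degree of freedom, which is why the sketch is restricted to 2×2 tables.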

The average pause durations show that the longest pauses after IPs and ips occurred while reading the dictations (averages of 910 ms and 266 ms, respectively); there, pauses after IPs were on average 3.4 times longer than pauses after ips. In the interview, pauses after IPs were about half as long as in the dictations, averaging 478 ms. Pauses after ips were also shorter, but to a lesser extent, averaging 234 ms (about 0.9 times the dictation value). In the interview, pauses after IPs were, on average, about twice as long as pauses after ips. The shortest pauses occurred while reading the news: the average pause after IPs was 438 ms, and after ips only 156 ms, so pauses after IPs were, on average, 2.8 times longer than those after ips. The large standard deviations indicate that pause durations vary greatly, and in some cases the durations of pauses after IPs and ips may overlap. Therefore, the relationship between pause duration and phrase type should always be interpreted cautiously.
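The ratios in this paragraph follow directly from the reported mean durations. The short Python check below recomputes them using only the means given in the text; treating each reported ratio as a ratio of the two means is an assumption about how the figures were derived.

```python
# Mean pause durations in ms, as reported in the text.
MEAN_PAUSE_MS = {
    "dictation": {"IP": 910, "ip": 266},
    "news": {"IP": 438, "ip": 156},
    "interview": {"IP": 478, "ip": 234},
}


def ratio_of_means(numerator, denominator):
    """Ratio of two mean durations (assumed derivation of the reported ratios)."""
    return numerator / denominator


# Pauses after IPs relative to pauses after ips, per recording type.
for style, means in MEAN_PAUSE_MS.items():
    ratio = ratio_of_means(means["IP"], means["ip"])
    print(f"{style}: IP pauses are {ratio:.1f} times longer than ip pauses")

# Interview pauses relative to dictation pauses, per boundary type.
for unit in ("IP", "ip"):
    ratio = ratio_of_means(MEAN_PAUSE_MS["interview"][unit], MEAN_PAUSE_MS["dictation"][unit])
    print(f"{unit}: interview/dictation = {ratio:.2f}")
```

Run as is, this gives IP/ip ratios of 3.4 for the dictations, 2.8 for the news, and 2.0 for the interview, and interview/dictation ratios of 0.53 for IPs and 0.88 for ips.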

Figure 2 further illustrates the variation in pause duration by showing the distribution of phrase-final pauses, with pauses after IPs consistently longer than those after ips, and the greatest variability observed in dictations.

To determine whether the differences in pause duration were statistically significant, the Mann–Whitney U test (Mann & Whitney, 1947) was used to compare pause durations after IPs with those after ips in each type of recording. In the dictation, news, and interview recordings, pause durations after IPs and ips differed significantly (p < 0.001, p < 0.001, and p = 0.0003, respectively).
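For readers unfamiliar with the test, a minimal standard-library sketch of the two-sided Mann–Whitney U test is given below. It uses the large-sample normal approximation with a tie correction and no continuity correction. The study does not state which implementation or approximation was used (statistical packages may compute exact p-values for small samples), and the duration lists in the usage lines are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U for sample x, p_value). No continuity correction is applied.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    # Tie correction: subtract sum(t^3 - t) over groups of tied values.
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:  # all values identical: no evidence of a difference
        return u1, 1.0
    z = (u1 - mean_u) / math.sqrt(var_u)
    return u1, math.erfc(abs(z) / math.sqrt(2))


# Hypothetical pause durations (ms), for illustration only.
pauses_after_IP = [900, 950, 1000, 1100, 1200]
pauses_after_ip = [100, 150, 200, 250, 300]
u, p = mann_whitney_u(pauses_after_IP, pauses_after_ip)
print(f"U = {u}, p = {p:.4f}")
```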

The results indicate that pauses are a clear marker of phrase boundaries, although their occurrence may depend on the type of phrase and speech. While reading the dictation, the speaker always separates IPs with pauses, and there are many pauses between ips as well. In the news recordings, pauses are both less frequent and shorter, which is likely related to speech rate: the articulation rate was 17 sounds per second in the news, 15 in the interview, and 12 in the dictations. Speech rate was measured in sounds rather than in words or syllables because a Lithuanian syllable can contain from one to seven sounds (Kazlauskienė, 2023), e.g., išskleisk [ɪ.ˈsʲklʲɛɪˑsk] ‘spread’. In the interview, some pauses may also reflect the spontaneity of unprepared speech, when the speaker needs time to think about what to say. These findings align with the broader observation that the use of pausing to signal prosodic boundaries varies across languages. For instance, in German, intonational phrase boundaries are only occasionally marked by pauses (Kohler et al., 2017, as cited in Ip & Cutler, 2022), whereas in Mandarin, pausing is a much more frequent cue (Wang et al., 2019, as cited in Ip & Cutler, 2022).
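The articulation rate used in this study counts sounds per second of speaking time, excluding pauses and filled pauses. A minimal sketch, assuming a phone-level segmentation given as `(label, start, end)` tuples in seconds and hypothetical pause labels `<p>` and `<fp>`, shows the computation:

```python
# Hypothetical labels for silent and filled pauses in the segmentation.
PAUSE_LABELS = frozenset({"<p>", "<fp>"})


def articulation_rate(segments, pause_labels=PAUSE_LABELS):
    """Sounds per second of speaking time, excluding pauses and filled pauses.

    segments: iterable of (label, start_s, end_s) tuples, one per sound or pause.
    """
    sound_durations = [end - start for label, start, end in segments
                       if label not in pause_labels]
    if not sound_durations:
        raise ValueError("no sound segments")
    return len(sound_durations) / sum(sound_durations)


# Toy segmentation: four sounds of 0.1 s each around a 0.5 s pause.
example = [("ɪ", 0.0, 0.1), ("s", 0.1, 0.2), ("<p>", 0.2, 0.7),
           ("k", 0.7, 0.8), ("a", 0.8, 0.9)]
print(f"{articulation_rate(example):.1f} sounds/s")
```

Because the pause time is excluded from the denominator, the toy example yields 10 sounds per second even though the whole stretch lasts 0.9 s; how diphthongs or affricates are counted depends entirely on the segmentation conventions.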

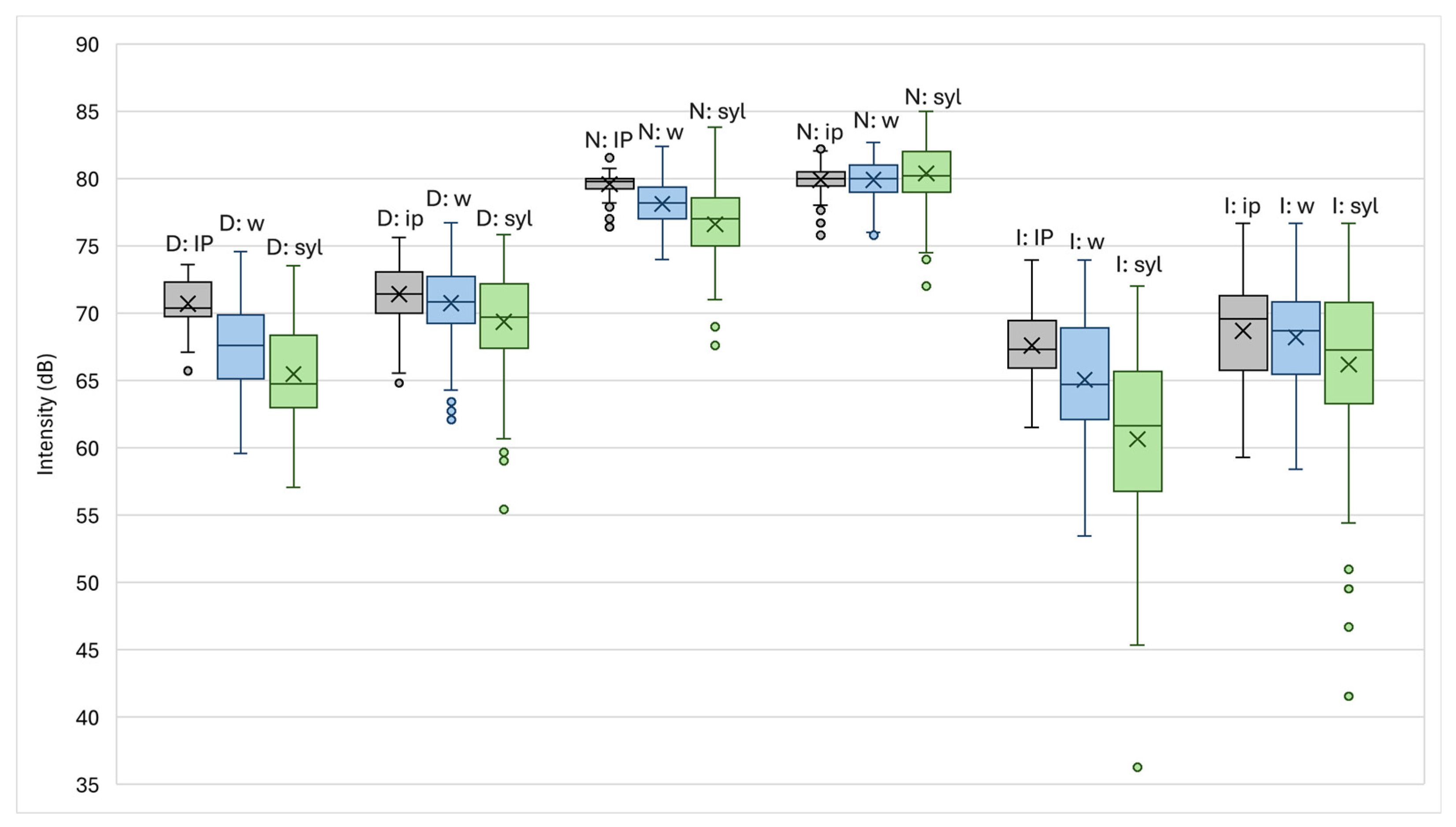

Intensity. When reading dictations and when speaking spontaneously, the speaker shows similar patterns of intensity decrease at the end of IPs and ips. Compared to the overall intensity of the IP, the intensity of the last word decreased by 50% in the dictations and by 40% in the interview. The decrease in intensity of the last word of the ip was minor, around 10% in both types of recordings. The intensity of the last syllable, compared to the overall intensity of the IP, decreased by 70% in both types of recordings, whereas the decrease in intensity of the last syllable of the ip was much smaller: 30% in the dictations and 40% in the interview (see Table 2).

While reading the news, the intensity of the final word in an IP decreased by 30%, and the intensity of the final syllable was reduced by half. This pattern is similar to that observed in the other types of recordings, although the decrease in intensity was somewhat smaller. However, the intensity of the final syllable of ips was about 10% higher than that of the entire phrase and its final word. This suggests that when reading the news, the speaker does not reduce intensity at ip boundaries, likely because of the relatively fast speech rate, resulting in a somewhat flatter intensity contour. Similar inconsistency in the use of intensity to mark phrase boundaries has been observed in other languages; for example, Kim et al. (2004, as cited in Wagner & Watson, 2010) found that stronger boundaries were sometimes, but not consistently, associated with lower pre-boundary intensity across speakers.

Figure 3 illustrates this pattern across all speech types: intensity consistently decreases in the final syllable of IPs, while the final syllable of ips often retains or even exceeds the intensity of the overall phrase or final word. This trend is most pronounced in the news recordings, where intensity remains relatively high between internal phrases, in contrast to the more marked decline observed in dictations and interviews.

The Kruskal–Wallis test (Kruskal & Wallis, 1952) showed that the differences in intensity between IPs and their final syllables were statistically significant in all recordings (p < 0.05). When comparing the final word of these phrases with its final syllable, a statistically significant difference was found only in the news recordings; in the dictation and interview recordings, the intensity differences between these elements were not statistically significant (p = 0.28 and p = 0.08, respectively). For ips, a statistically significant difference was observed in only one comparison: in the news recordings, between the final word of the phrase and its final syllable. In all other cases, the differences were not statistically significant. This suggests that, except in the news recordings, the intensity of the final word and that of its final syllable do not differ reliably.
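A minimal standard-library sketch of the Kruskal–Wallis H statistic (with the usual tie correction) and its chi-square p-value with k − 1 degrees of freedom follows. It computes only the omnibus test across groups such as whole-phrase, final-word, and final-syllable measurements; the pairwise p-values reported above presumably come from a post-hoc procedure that the text does not specify, and this sketch does not reproduce it. Like the test itself, the code treats the groups as independent samples, and the values in the usage lines are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def chi2_sf(x, df):
    """Survival function P(X > x) of the chi-square distribution (integer df >= 1)."""
    y = x / 2
    if df % 2 == 0:
        # Even df: exp(-y) * sum_{j=0}^{df/2 - 1} y^j / j!
        return math.exp(-y) * sum(y ** j / math.factorial(j) for j in range(df // 2))
    # Odd df: erfc(sqrt(y)) + exp(-y) * sum_{j=1}^{(df-1)/2} y^(j - 1/2) / Gamma(j + 1/2)
    tail = sum(y ** (j - 0.5) / math.gamma(j + 0.5) for j in range(1, (df - 1) // 2 + 1))
    return math.erfc(math.sqrt(y)) + math.exp(-y) * tail


def kruskal_wallis(*groups):
    """Omnibus Kruskal-Wallis test; returns (H, p_value) with len(groups) - 1 df."""
    values = [v for group in groups for v in group]
    n = len(values)
    ranks = average_ranks(values)
    rank_term = 0.0
    start = 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        rank_term += rank_sum ** 2 / len(group)
        start += len(group)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(values).values())
    correction = 1 - ties / (n ** 3 - n)
    if correction == 0:  # all values identical
        return 0.0, 1.0
    h = max(h / correction, 0.0)  # guard against tiny negative rounding errors
    return h, chi2_sf(h, len(groups) - 1)


# Hypothetical measurements for three positions, for illustration only.
h, p = kruskal_wallis([62, 64, 65, 66], [60, 61, 63, 64], [50, 52, 55, 57])
print(f"H = {h:.2f}, p = {p:.4f}")
```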

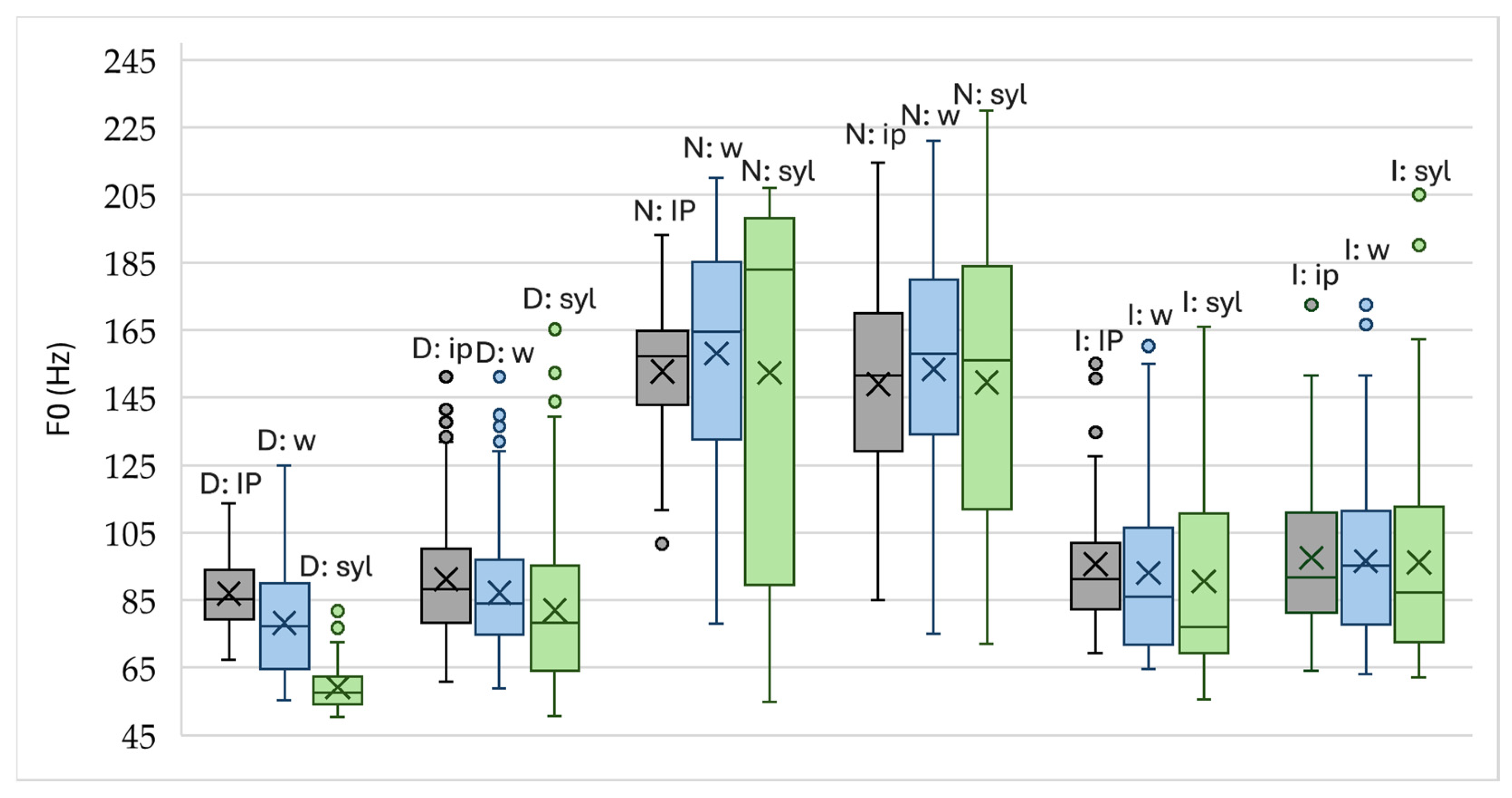

Fundamental frequency. The fundamental frequency (F0) may either fall or rise at the end of a phrase. A falling F0 is typical of declarative statements, whereas a rising F0 often signals a question or an unfinished thought. For this part of the study, we analyzed only phrases with falling F0 contours, because those with rising contours often require detailed semantic and syntactic analysis; such cases were noted but not examined in depth and are left for future investigation. Thus, this section analyzes 125 IPs (82%) and 162 ips (59%) from the dictation recordings, 34 IPs (51%) and 131 ips (54%) from the news recordings, and 49 IPs (61%) and 41 ips (49%) from the interview recordings, all with falling F0.

Additionally, the final F0 of 3% of IPs in the dictation and news recordings and of 10% of IPs in the interview recordings could not be determined because of creaky voice. Likewise, the final F0 of 1% of ips in the dictation and interview recordings was unmeasurable for the same reason. Creaky voice in these instances also signals the end of a phrase; however, they were excluded from this analysis.

The data from the analyzed material (see Table 3) show that the F0 of the final syllable is, on average, one-fifth lower than that of the entire IP or ip. In the dictation and news recordings, the F0 of the final syllable of IPs decreased by 30% compared to the entire phrase (differences in Hz are shown in Table 3), while in the interview recordings it decreased by 20%. The F0 of the final syllable of ips decreased by 20% in the dictations and by 10% in both the news and interview recordings. The data on the F0 of the final word relative to the entire phrase, however, are less consistent. In IPs, the F0 of the final word decreased by 20% in the dictations and by 10% in the interview, and showed no change in the news recordings. Similarly, no F0 change was observed in the final word of ips. Additionally, F0 in the news recordings was noticeably higher than in the dictations or in spontaneous speech. In the read speech recordings, the F0 of ips (for the entire phrase, the final word, and the final syllable alike) was higher than that of IPs.
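The percentages above express the F0 of the final word or syllable relative to the F0 of the whole phrase. A short sketch of that calculation follows; the helper name `relative_change_percent` and the 100 Hz / 70 Hz example are hypothetical. Averaging the six reported final-syllable decreases without weighting (an assumption, since the text does not say how the overall figure was obtained) reproduces the one-fifth summary.

```python
def relative_change_percent(reference, value):
    """Change of `value` relative to `reference`, in percent (negative = decrease)."""
    return (value - reference) / reference * 100


# Hypothetical example: phrase F0 of 100 Hz, final-syllable F0 of 70 Hz.
print(round(relative_change_percent(100.0, 70.0)))  # -30

# Final-syllable F0 decreases relative to the whole phrase, as reported in the text (%).
REPORTED_DECREASE = {
    ("IP", "dictation"): 30, ("IP", "news"): 30, ("IP", "interview"): 20,
    ("ip", "dictation"): 20, ("ip", "news"): 10, ("ip", "interview"): 10,
}
# Unweighted mean over phrase types and recording types (an assumption).
print(sum(REPORTED_DECREASE.values()) / len(REPORTED_DECREASE))  # 20.0
```

Because F0 in Hz is a ratio scale, such percentages are meaningful for it; a weighted mean (by number of phrases per cell) could give a slightly different overall figure.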

Statistical analysis using the Kruskal–Wallis test revealed that in the dictation recordings, the F0 of IPs differed significantly when comparing the entire phrase and the final word with the final syllable (p < 0.05). In ips from the dictations, the F0 of the entire phrase also differed significantly from that of the final syllable (p < 0.05). However, the difference between the F0 of the final word and its final syllable was not statistically significant (p = 0.09). In the news recordings, the differences in F0 between the final word and the final syllable were not statistically significant for either IPs or ips (p = 0.25 and p = 0.05, respectively). In the interview recordings, none of the compared F0 differences were statistically significant (for IPs: phrase vs. syllable, p = 0.05; word vs. syllable, p = 0.08; for ips: phrase vs. syllable, p = 0.37; word vs. syllable, p = 0.56).

As shown in Table 4, an examination of phrases with rising F0 contours reveals that in the dictation recordings, the F0 of both the final word and the final syllable of an IP is lower than that of the entire phrase. However, the F0 of the final syllable is slightly higher than that of the final word as a whole, although the difference is minimal. In all other cases (both IPs and ips), the F0 of the final word is either slightly higher than or comparable to the overall phrase F0, while the F0 of the final syllable rises, on average, by about 20%. These cases involving rising F0 contours warrant more detailed analysis, which is planned for future research.

The boxplot in Figure 4 includes phrases with both falling and rising F0. It shows that F0 tends to decrease at the end of IPs, especially in the dictation and news recordings, while ips, particularly in the interview, often maintain or even slightly raise final-syllable F0. This reflects the influence of rising contours and indicates that final lowering is not consistent across all phrase types and speech styles.

These patterns correspond to some previous observations summarized by Yuan and Liberman (2014), who argue that the F0 declination rate is shaped by speaking style and sentence type. Read speech has been shown to display steeper and more frequent F0 declination than spontaneous speech, with greater control over the declination slope in read contexts (Laan, 1997; Lieberman et al., 1984; Tøndering, 2011, as cited in Yuan & Liberman, 2014). Sentence modality also plays a role: declaratives typically exhibit the steepest falling contours, while syntactically unmarked questions and non-terminal utterances show flatter or rising intonation (Thorsen, 1980, as cited in Yuan & Liberman, 2014). Thus, instances with rising F0 require more detailed syntactic and semantic interpretation.

Final Sound Duration. In the relevant positions, that is, at the end of a phrase-medial word and at the end of both types of phrases, the following sounds were observed: [s], [ɪ], [ɐ], and [oː]. However, their frequency of occurrence was uneven; in some positions, only two or three instances were found. Nevertheless, general trends can still be identified.

Although the sounds were expected to lengthen the most at the end of IPs, the results only partially confirm this hypothesis (see Table 5). One pattern, however, is consistent: in all cases, the final sound of any phrase is longer than the final sound of a phrase-medial word. In the dictation recordings, the final sound of both IPs and ips was, on average, 1.8 times longer than the corresponding final sound of a phrase-medial word. In the interview, the final sound of IPs was on average 1.9 times longer, and that of ips 1.7 times longer, than the corresponding phrase-medial word-final sound. In the news recordings, the final vowel of IPs was on average 1.4 times longer and the final consonant 2.3 times longer, whereas the final sound of ips was only 1.3 times longer than the corresponding sound in phrase-medial position. Noticeable differences in sound duration were observed between the dictation and news recordings, which are likely attributable to the much faster reading pace in the news, where sounds are a third to a half shorter than in the dictation recordings.
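The lengthening factors above compare the mean duration of a sound in phrase-final position with the mean duration of the same sound at the end of a phrase-medial word. A minimal sketch, assuming token durations labeled with the hypothetical position tags `medial`, `ip`, and `IP`, computes these ratios per sound; whether the study used a ratio of means (as here) or a mean of per-token ratios is not stated, and the token values are toy data.

```python
from collections import defaultdict
from statistics import mean


def lengthening_ratios(tokens):
    """Mean phrase-final duration / mean phrase-medial word-final duration, per sound.

    tokens: iterable of (sound, position, duration_ms); position is one of the
    hypothetical tags "medial", "ip", "IP". Sounds without medial tokens are skipped.
    """
    durations = defaultdict(list)
    for sound, position, duration in tokens:
        durations[(sound, position)].append(duration)
    ratios = {}
    for (sound, position), values in durations.items():
        medial = durations.get((sound, "medial"))  # .get does not create new keys
        if position == "medial" or not medial:
            continue
        ratios[(sound, position)] = mean(values) / mean(medial)
    return ratios


# Toy durations (ms), for illustration only.
tokens = [("s", "medial", 50), ("s", "medial", 70), ("s", "IP", 120),
          ("s", "ip", 90), ("oː", "IP", 150)]
for key, ratio in sorted(lengthening_ratios(tokens).items()):
    print(key, round(ratio, 2))
```

With only two or three tokens in some cells, as the text notes, such ratios are unstable, which is one reason to complement them with a rank-based test.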

Another Kruskal–Wallis test was conducted to determine whether the differences in the duration of the final sounds are statistically significant. The results show that in read speech, the duration of all sounds differs significantly when comparing the duration of a final sound in a word located in the middle of a phrase with the final sound of either phrase type (p < 0.05). However, when comparing the duration of final sounds between IPs and ips, a statistically significant difference was found only for the sound [s] (p < 0.05).

In the interview recordings, a statistically significant difference was observed only for the consonant [s] and the vowel [ɐ] when comparing the phrase-medial position with either phrase-final position (p < 0.05). The duration differences for the other vowels were not statistically significant ([ɪ], p = 0.12; [oː], p = 0.41). Lithuanian has a phonological vowel length contrast (for more details on the phonological structure of Lithuanian, see Girdenis, 2014). Our analysis includes two short vowels and one long vowel. The results from the dictation and news recordings do not show a clear difference in the lengthening of these vowels. In the interview data, however, short vowels are lengthened more at the end of ips than at the end of IPs, whereas the long vowel [oː] is lengthened more at the end of IPs than at the end of ips. Nevertheless, we cannot determine whether phonological length contrasts remained stable (cf. the significant findings in Paschen et al., 2022), as this was not the aim of our study. Future research should explore these issues further.

3.2. Reliability of Acoustic Features in Automatic Phrasing

Based on all the examined features, the overall accuracy of prosodic unit identification using logistic model trees (LMT; Landwehr et al., 2005) reached 80%. However, the accuracy varied depending on the type of unit.

Table 6 presents a confusion matrix of annotated versus predicted prosodic units, which helps to clarify (1) how each annotated unit was predicted and with which other categories it was confused, and (2) which annotated units were predicted as a given category.

The row data answer the first question: of the 299 IP boundaries marked by annotators, 247 (83%) were correctly predicted as IPs, 44 were predicted as ips, and 8 as word-final boundaries. Of the 605 annotated intermediate phrase boundaries, 298 (49%) were correctly predicted, 38 were mispredicted as IPs, and as many as 269 were predicted as word-final boundaries. Nearly all word-final boundaries (1051 of 1086) were correctly predicted as such, with only 30 predicted as ips and 5 as IPs.

The answer to the second question is reflected in the column data: 290 instances were predicted as IP boundaries, of which 247 were annotated as IPs, 38 as ips, and 5 as word-final boundaries. A total of 372 instances were predicted as ip boundaries, of which 298 were annotated as ips, 30 as word-final boundaries, and 44 as IPs. Of the 1328 instances predicted as word-final boundaries, 1051 were annotated as such, 269 as ips, and 8 as IPs.

Thus, 69% of the phrase boundaries marked by annotators were predicted as phrase boundaries. The accuracy of identifying the specific phrase type (IP or ip) was lower, at 60%, because some IPs were misclassified as ips and vice versa. The greatest confusion occurred within the ip group: as many as 44% of the ip boundaries annotated by linguists were not predicted as phrase boundaries, and a small portion (6%) were misclassified as IPs. These findings prompted a separate evaluation of each type of recording.
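The percentages in this section can be derived from the confusion-matrix counts reported in the text (rows: annotated category; columns: predicted category). The Python sketch below recomputes them; the label `word` for word-final boundaries is a naming choice made here, and the per-class precision values are computed for illustration rather than taken from the text.

```python
LABELS = ("IP", "ip", "word")  # "word" = word-final (phrase-internal) boundary
PHRASES = ("IP", "ip")

# Counts reported in the text for Table 6.
# Outer key: annotated category; inner key: predicted category.
CONFUSION = {
    "IP": {"IP": 247, "ip": 44, "word": 8},
    "ip": {"IP": 38, "ip": 298, "word": 269},
    "word": {"IP": 5, "ip": 30, "word": 1051},
}


def row_total(annotated):
    return sum(CONFUSION[annotated].values())


def column_total(predicted):
    return sum(CONFUSION[a][predicted] for a in LABELS)


total = sum(row_total(a) for a in LABELS)
overall_accuracy = sum(CONFUSION[c][c] for c in LABELS) / total

annotated_phrases = sum(row_total(a) for a in PHRASES)
# Any phrase label counts as a detected boundary, regardless of its type.
boundary_detection = sum(CONFUSION[a][p] for a in PHRASES for p in PHRASES) / annotated_phrases
# Only the correct phrase type counts.
type_accuracy = sum(CONFUSION[a][a] for a in PHRASES) / annotated_phrases

recall = {c: CONFUSION[c][c] / row_total(c) for c in LABELS}
precision = {c: CONFUSION[c][c] / column_total(c) for c in LABELS}
ip_missed = CONFUSION["ip"]["word"] / row_total("ip")

print(f"overall accuracy: {overall_accuracy:.0%}")
print(f"phrase boundaries detected: {boundary_detection:.0%}")
print(f"phrase type correct: {type_accuracy:.0%}")
print(f"ip boundaries predicted as word-final: {ip_missed:.1%}")
for c in LABELS:
    print(f"{c}: recall {recall[c]:.0%}, precision {precision[c]:.0%}")
```

Run as is, the first three lines print 80%, 69%, and 60%, and the share of annotated ip boundaries predicted as word-final is 269 of 605 (44.5% to one decimal place).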

In the news recordings, annotators marked only 67 IPs, which tend to be very long (cf. 152 IPs in the dictation recordings, although the number of phonetic words differs only slightly: 893 in the dictations vs. 732 in the news). Of the IPs annotated by humans, 82% were predicted as IPs; the remainder were classified as ips, typically when the phrases were very short, such as greetings.

However, only 30% of the annotated ips were predicted as ips, while as many as 67% were misclassified as word-final boundaries. This makes ips the most poorly predicted prosodic unit in the news recordings. Such low ip accuracy substantially lowers the overall phrase boundary identification rate: only 47% of the phrase boundaries annotated by humans were predicted as such.

News recordings are characterized by a particularly high speech rate: 17 sounds per second, compared to 15 in the interview and 12 in the dictations (measuring articulation rate only, excluding pauses and filled pauses). As shown in the first part of the study, news recordings also contain the fewest pauses (see Table 1), which means that one of the main prosodic markers, pausing, is often absent. Moreover, final sounds of ips are lengthened the least in the news recordings (see Table 5). News segments are read with relatively high pitch: the average F0 median is 108 Hz in the news, compared to 84 Hz in the interview and 85 Hz in the dictations. This somewhat limits the possible F0 variation, although the ratio of the average phrase F0 to the final syllable F0 does not differ significantly across the three types of recordings (see Table 3). News recordings also differ from the other recording types in their intensity patterns (see Table 2): while intensity noticeably decreases at the end of ips in the dictations and the interview, it remains the same or even slightly increases in the news.

The annotators marked phrase boundaries based not only on the features analyzed in this paper (pause, lengthening of the final sound, F0, and intensity) but also on articulation-related features such as assimilation, degemination, the presence or absence of accommodation of adjacent sounds, qualitative characteristics of the sounds, and the strengthening or weakening of articulation. These features were not included in the current analysis because the behavior of Lithuanian sounds in connected speech remains under-researched. In preparing the recordings, the primary focus was on aligning phonetic words with their textual representations, and context-driven or prosodic modifications of sounds were largely disregarded. Because the annotators also relied on these additional criteria, which were not available to the model, the automatic identification of ips in the news recordings was largely unsuccessful.

Almost all (97%) word-final boundaries in news recordings were correctly predicted as such.

In the dictation recordings, 86% of the IPs annotated by humans were predicted as IPs, while the remainder were labeled as ips. An analysis of these cases reveals that the pauses were somewhat shorter than at typical IP boundaries and other acoustic cues were less pronounced; for example, final sounds were lengthened less. In some cases, however, the final syllable was produced with creaky voice, which usually signals an IP boundary in Lithuanian.

In the dictations, a larger proportion of ips (72%) was correctly predicted than in the news. A total of 8% of ips were classified as IPs; in some of these cases, the acoustic cues did suggest IP boundaries, but the annotators labeled them as ips for syntactic and semantic reasons. There were also a few cases in which the final syllable was produced with a sharply rising F0, even though the phrase was not a question. Because this pitch contour deviates from the typical pattern, such cases were classified as IPs in the automatic analysis.

The remaining 20% of the annotated ips were predicted as phrase-internal word boundaries rather than phrase-final boundaries. Analysis shows that these boundaries may have been cued not by the selected acoustic features but by other phonetic phenomena mentioned earlier in relation to the news recordings, such as the absence of assimilation and degemination (typical of word boundaries but not of phrase boundaries), articulatory clarity or weakening, and F0 reset at the beginning of a new phrase. This last cue should be included in future versions of the algorithm. These mispredicted boundaries most often occurred between the subject and predicate groups.

In summary, 87% of the phrase boundaries marked by annotators in the dictation recordings were predicted as phrase boundaries, although the accuracy of identifying the specific type (IP or ip) was lower, at 77%. Thus, phrase boundaries in the dictations were predicted quite accurately based solely on the analyzed acoustic features, without accounting for syntactic and semantic factors.

As in the news recordings, nearly all (97%) word-final boundaries in dictations were predicted as such. A very small number were classified as ips, and in nearly all those cases, the acoustic features did suggest an ip, but syntactic and semantic factors led annotators not to mark them.

It is important to note that the dictation texts exhibit considerable syntactic complexity, including intricate sentence structures and numerous punctuation marks, all of which influenced the speaker’s phrasing. Annotators deliberated extensively over certain phrasing decisions. For example, homogeneous parts of a sentence are often read as an intermediate variant between IP and ip—that is, without pauses and with only minor changes in intensity and F0. In each case, the final decision was made after careful comparative analysis with similar examples.

In the interview, 76% of the annotated IPs were predicted as IPs, but only 29% of ips were predicted as such, and 61% were labeled as word boundaries. This substantially reduced the overall accuracy of phrase boundary identification: only 64% of the boundaries marked by annotators were predicted as phrase boundaries. As in read speech, nearly all (94%) word-final boundaries were correctly predicted.

Spontaneous speech exhibits specific characteristics that likely influence phrasing. Speakers often begin an IP with high intensity and pitch, which gradually decreases throughout the phrase. As a result, at the end of the IP, intensity and F0 are often too low to be measured reliably, and vowel quality and sound duration may also be unclear. This made it difficult to assess and automatically detect ip boundaries in the second part of IPs.

Moreover, this recording is an excerpt from a talk show, in which thought transitions are common and the speech rate varies, making it difficult to rely on final sound duration for boundary detection. Although the interviewer's speech was excluded and interrupted segments were avoided, the conversational setting may still have affected the speaker's phrasing. This is partially reflected in IPs ending with a sharply rising F0, even though they are not questions: the speaker may be seeking confirmation from the interviewer or signaling that something is left unsaid.

The interview also includes many phrases ending with non-modal phonation (specifically, creaky voice), which makes F0 measurements nearly impossible. Such instances also occurred in read speech but were rare. Since this feature was not included in the study, it was not used to detect phrase boundaries.

During recording preparation and the analysis of acoustic correlates, it became evident that not all features are equally relevant or salient. Therefore, we evaluated the accuracy of prosodic unit identification based on individual features. The results confirmed the hypothesis that identification accuracy varies depending on the feature used (see Table 7). The most informative feature was the presence of a pause: using this cue alone, prosodic units were predicted with 76% accuracy. In comparison, combining all features yielded 80% accuracy, meaning that the additional features improved accuracy by only 4 percentage points.

Final sound lengthening was considerably less informative, yielding 65% accuracy. Intensity and F0 changes were the least effective (59% and 60% accuracy, respectively), contrary to common assumptions in Lithuanian phonetics. However, these low values were strongly influenced by the news recordings, in which ip-final intensity remained nearly unchanged.

Naturally, the most relevant aspect is phrase identification, that is, whether a phrase boundary is detected regardless of its type. The results indicate that 60% of phrase boundaries are detected based on pauses, 57% based on final sound lengthening, 33% based on F0 changes, and only 25% based on intensity changes (cf. 69% when all features are combined).

The findings clearly demonstrate that the examined features vary in significance when identifying specific types of phrases. The accuracy of IP detection based on pauses is 74%, while intensity and F0 contribute similarly, with accuracies of 52% and 50%, respectively. The poorest accuracy was obtained using only the final sound duration parameter (8%), which may be partly due to difficulties in precisely identifying the boundaries of the final IP sound, as intensity tends to decrease substantially.

The highest accuracy in identifying ip was achieved based on final sound lengthening (49%), while pauses proved less reliable, with 38% accuracy. Changes in intensity and F0 were considerably less relevant for identifying ips than for IPs, yielding only 3% and 11% accuracy, respectively.