Developing an AI-Powered Pronunciation Application to Improve English Pronunciation of Thai ESP Learners

Abstract

1. Introduction

2. Literature Review

2.1. Pronunciation Challenges for Thai Learners of English

2.2. L2 Pronunciation Learning Theory

2.2.1. Speech Learning Model (SLM)

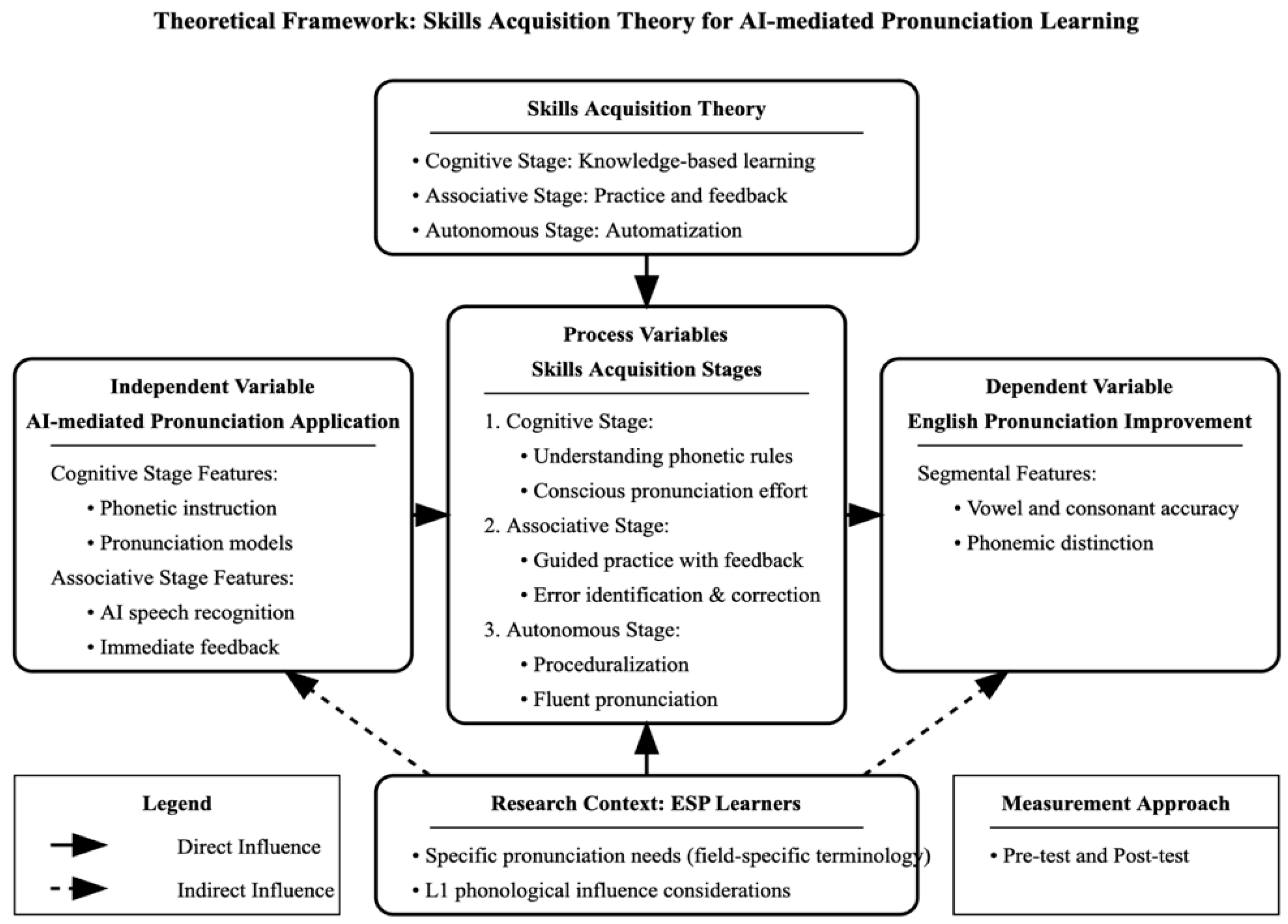

2.2.2. Skill Acquisition Theory (SAT)

2.3. AI-Mediated Applications in Pronunciation Teaching

2.4. Pronunciation Learning in English for Specific Purposes

3. Research Methodology

3.1. Participants

3.2. Research Instruments

3.2.1. English Pronunciation Test

- The folk theatre performance requires careful choreography.

- Traditional musicians use string instruments like the zither.

- They preserve ancient methods of dance.

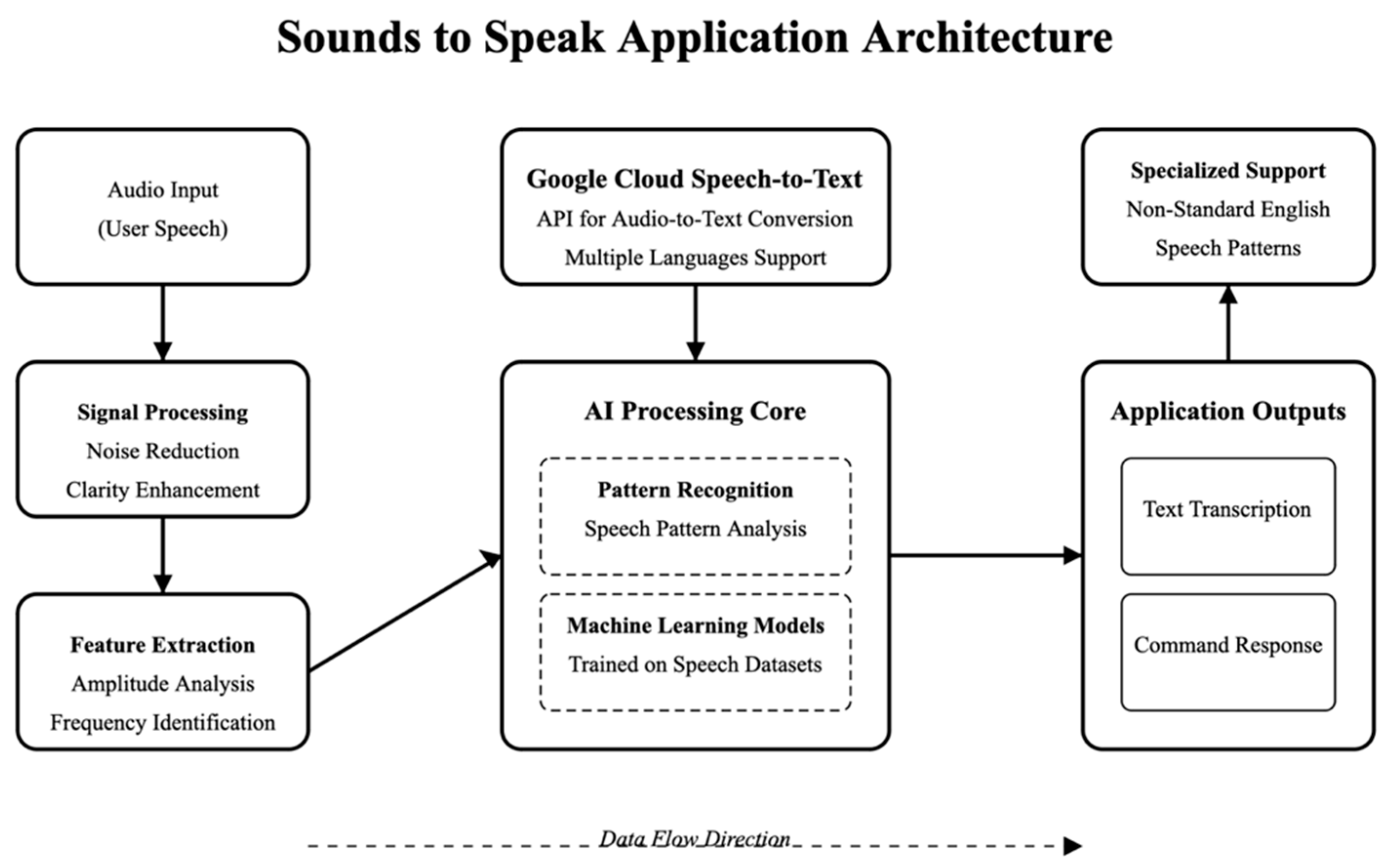

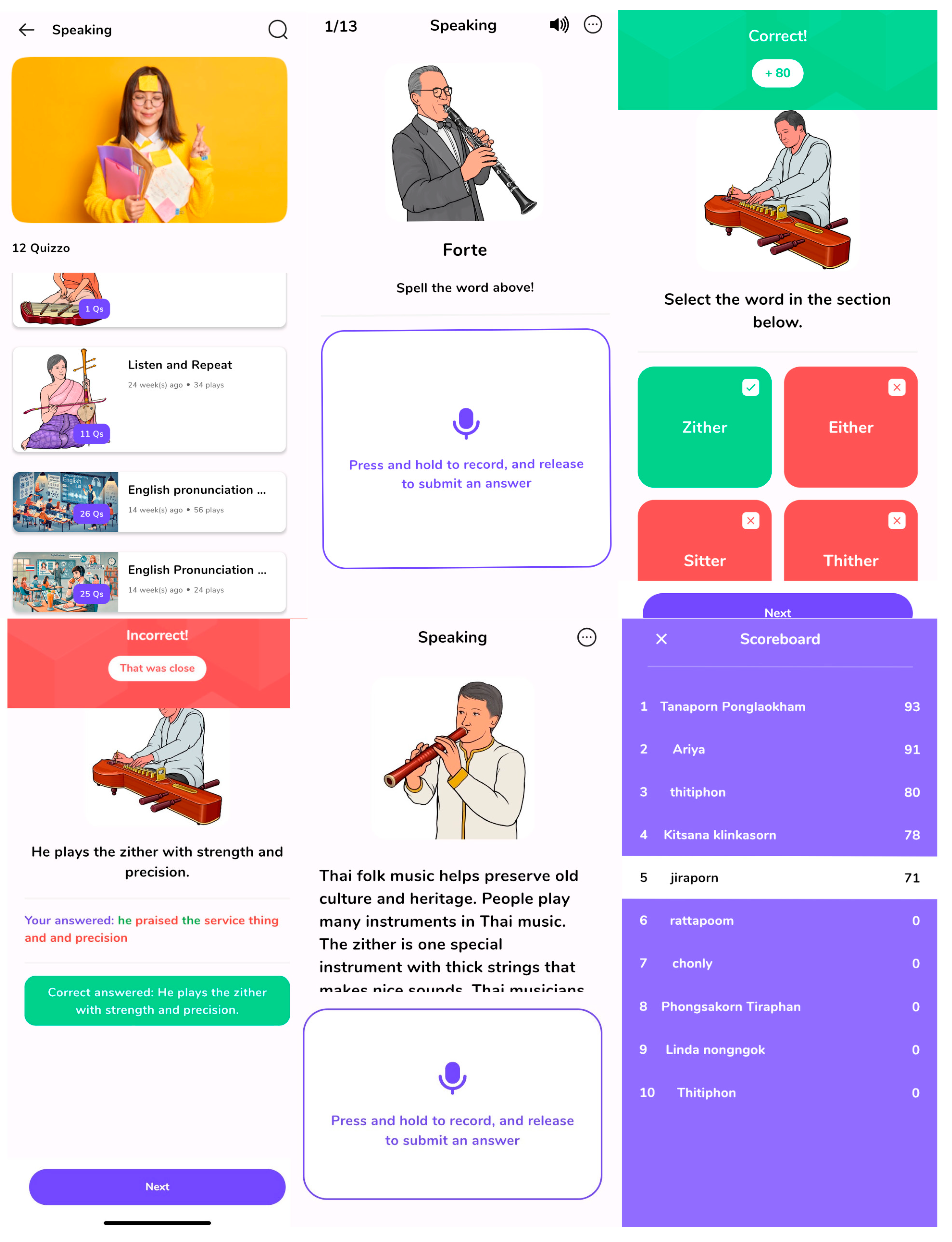

3.2.2. AI-Mediated Pronunciation Application

- Speech analysis capability that identifies specific errors in fricative production;

- Explicit and implicit textual feedback on specific pronunciation errors;

- Personalized practice exercises targeting individual learners’ difficulties;

- Progress tracking and analytics;

- Specialized vocabulary modules relevant to participants’ ESP contexts.
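
The personalized-practice and progress-tracking features listed above can be illustrated with a minimal Python sketch. This is not the application's actual implementation, which is not described at this level of detail; the class `LearnerProgress`, its method names, and the 70% threshold are hypothetical choices made only for illustration. It assumes the speech-analysis component returns an accuracy percentage per fricative attempt.

```python
from collections import defaultdict
from statistics import mean

# The nine English fricatives covered by the study's pronunciation test.
FRICATIVES = ["f", "v", "θ", "ð", "s", "z", "ʃ", "ʒ", "h"]


class LearnerProgress:
    """Hypothetical per-learner store of accuracy scores (0-100) per fricative."""

    def __init__(self):
        self._scores = defaultdict(list)

    def record(self, fricative, accuracy):
        """Store one attempt's accuracy for a fricative."""
        if fricative not in FRICATIVES:
            raise ValueError(f"unknown fricative: {fricative}")
        if not 0 <= accuracy <= 100:
            raise ValueError("accuracy must be between 0 and 100")
        self._scores[fricative].append(accuracy)

    def average(self, fricative):
        """Mean accuracy for a fricative, or None if it was never attempted."""
        scores = self._scores.get(fricative)
        return mean(scores) if scores else None

    def practice_targets(self, threshold=70.0, limit=3):
        """Attempted fricatives whose mean accuracy is below the
        (assumed) threshold, weakest first, at most `limit` items."""
        averages = {f: mean(s) for f, s in self._scores.items()}
        weak = [f for f, avg in averages.items() if avg < threshold]
        return sorted(weak, key=lambda f: averages[f])[:limit]


progress = LearnerProgress()
progress.record("θ", 55)
progress.record("θ", 65)
progress.record("ʒ", 40)
progress.record("s", 92)
targets = progress.practice_targets()  # weakest sounds first
```

In this sketch a learner who scores well on /s/ but poorly on /ʒ/ and /θ/ would be routed to exercises for the latter two, which is one simple way to realize practice that targets individual difficulties.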

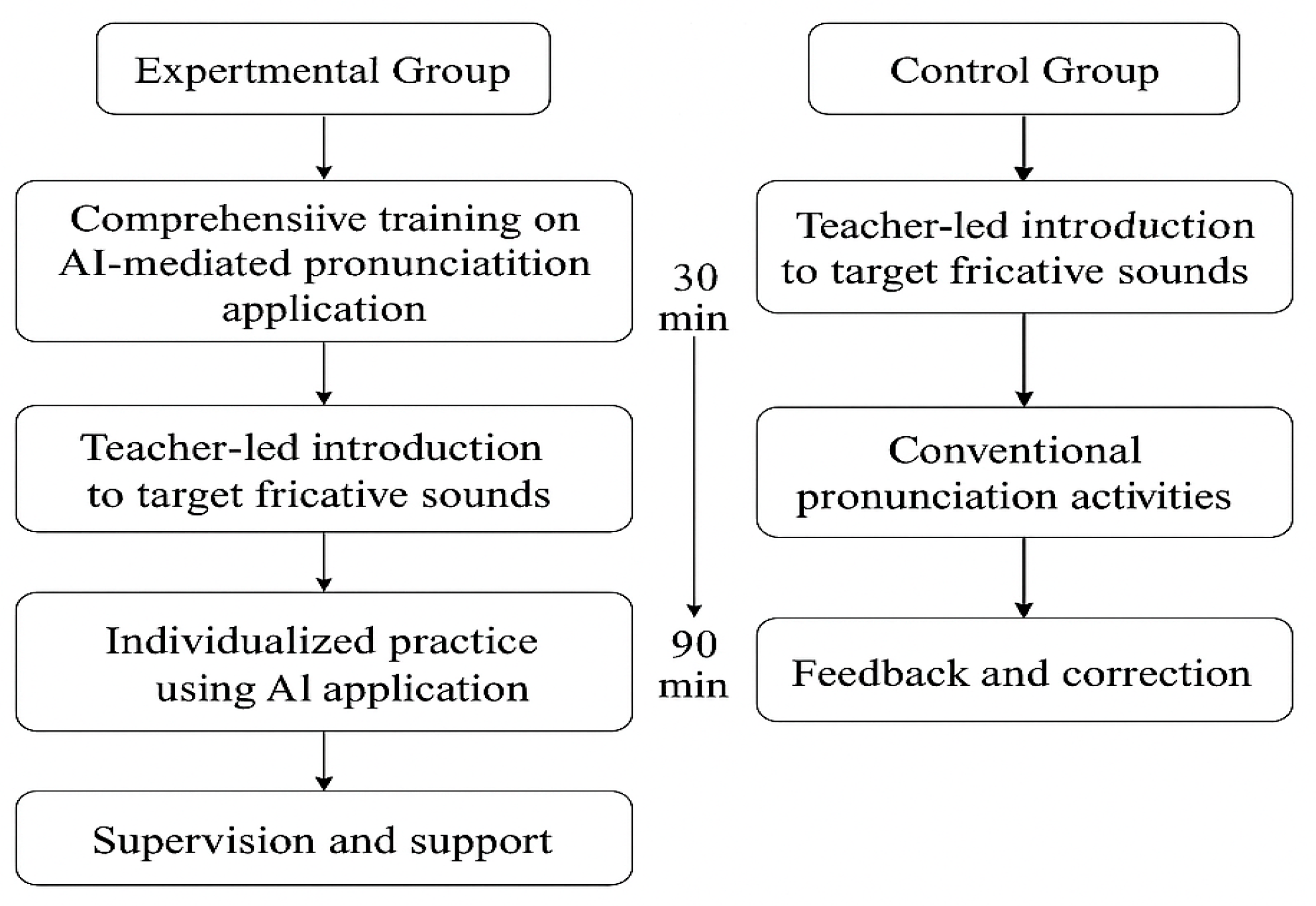

3.3. Research Procedures

3.3.1. Pre-Testing Phase

3.3.2. Intervention Phase

3.3.3. Post-Testing Phase

3.4. Data Analysis

4. Results

4.1. Within-Group Comparisons: Pre-Test and Post-Test Performance

4.2. Between-Group Comparisons: Overall Pronunciation Performance

4.3. Between-Group Comparisons: Specific Fricative Sounds

5. Discussion

5.1. Differential Effects on Specific Fricative Sounds

5.2. Implications for ESP Pronunciation Pedagogy

5.3. Theoretical Contributions

5.4. Limitations and Directions for Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. English Pronunciation Test Items

Section A: Individual Word Reading Task

1. Folk (initial position)
2. Performance (medial position)
3. Clef (final position)
4. Voice (initial position)
5. Movement (medial position)
6. Preserve (final position)
7. Theatre (initial position)
8. Method (medial position)
9. Cloth (final position)
10. They (initial position)
11. Rhythm (medial position)
12. Breathe (final position)
13. String (initial position)
14. Instrument (medial position)
15. Dance (final position)
16. Zither (initial position)
17. Musician (medial position)
18. Dulcimers (final position)
19. Choreography (medial position)
20. Percussion (medial position)
21. Finish (final position)
22. Culture (medial position)
23. Rouge (final position)
24. Homage (final position)
25. Rehearsal (initial position)

References

- Akhila, K., Nair, A. P., Jyothsana, K. N., Sreepriya, S., & Akhila, E. (2024). Fluttering into fluent English: Building an interactive voice-based AI learning app for language acquisition. Journal of Artificial Intelligence and Capsule Networks, 6(2), 158–170.
- Anderson, J. R. (1982). Acquisition of cognitive skill. Psychological Review, 89(4), 369–406.
- Anderson, J. R. (1992). Automaticity and the ACT* theory. American Journal of Psychology, 105, 165–180.
- Aryanti, R. D., & Santosa, M. H. (2024). A systematic review on artificial intelligence applications for enhancing EFL students’ pronunciation skill. The Art of Teaching English as a Foreign Language, 5(1), 102–113.
- Bashori, M., van Hout, R., Strik, H., & Cucchiarini, C. (2021). Effects of ASR-based websites on EFL learners’ vocabulary, speaking anxiety, and language enjoyment. System, 99, 102496.
- Bashori, M., van Hout, R., Strik, H., & Cucchiarini, C. (2024). I can speak: Improving English pronunciation through automatic speech recognition-based language learning systems. Innovation in Language Learning and Teaching, 18(5), 443–461.
- Basturkmen, H. (2010). Developing courses in English for specific purposes. Palgrave Macmillan.
- Behr, N. S. (2022). English diphthong characteristics produced by Thai EFL learners: Individual practice using PRAAT. Computer-Assisted Language Learning Electronic Journal, 23, 401–424.
- Best, C. T., & Tyler, M. D. (2007). Nonnative and second-language speech perception: Commonalities and complementarities. In M. J. Munro, & O.-S. Bohn (Eds.), Second language speech learning: The role of language experience in speech perception and production (pp. 13–34). John Benjamins.
- Cedar, P., & Termjai, M. (2021). Teachers’ training of English pronunciation skills through social media. Journal of Education and Innovation, 23(3), 32–47.
- Chanaroke, U., & Niemprapan, L. (2020). The current issues of teaching English in Thai context. Eau Heritage Journal Social Science and Humanities, 10(2), 34–45.
- DeKeyser, R. (2007a). Practice in a second language: Perspectives from applied linguistics and cognitive psychology. Cambridge University Press.
- DeKeyser, R. (2007b). Skill acquisition theory. In B. VanPatten, & J. Williams (Eds.), Theories in second language acquisition (pp. 97–113). Routledge.
- DeKeyser, R. M. (1997). Beyond explicit rule learning: Automatizing second language morphosyntax. Studies in Second Language Acquisition, 19(2), 195–221.
- DeKeyser, R. M. (2009). Cognitive-psychological processes in second language learning. In M. Long, & C. Doughty (Eds.), Handbook of Second Language Teaching (pp. 119–138). Wiley-Blackwell.
- DeKeyser, R. M. (2015). Skill acquisition theory. In B. VanPatten, & J. Williams (Eds.), Theories in second language acquisition: An introduction (2nd ed., pp. 94–112). Routledge.
- Dennis, N. K. (2024). Using AI-powered speech recognition technology to improve English pronunciation and speaking skills. IAFOR Journal of Education: Technology in Education, 12(2), 107–126.
- Derwing, T. M., & Munro, M. J. (2015). Pronunciation fundamentals: Evidence-based perspectives for L2 teaching and research. John Benjamins.
- Ellis, N. C. (2005). At the interface: Dynamic interactions of explicit and implicit language knowledge. Studies in Second Language Acquisition, 27(2), 305–352.
- Flege, J. E. (1995). Second-language speech learning: Theory, findings, and problems. In W. Strange (Ed.), Speech perception and linguistic experience: Issues in cross-language research (pp. 229–273). York Press.
- Flege, J. E., & Bohn, O.-S. (2021). The revised Speech Learning Model (SLM-r). In R. Wayland (Ed.), Second language speech learning: Theoretical and empirical progress (pp. 3–83). Cambridge University Press.
- Guskaroska, A. (2019). ASR as a tool for providing feedback for vowel pronunciation practice [Master’s thesis, Iowa State University].
- Huang, Q., & Kitikanan, P. (2022). Production of the English “sh” by L2 Thai learners: An acoustic study. Theory and Practice in Language Studies, 12(8), 1508–1515.
- Isarankura, S. (2016). Using the audio-articulation method to improve EFL learners’ pronunciation of the English /v/ sound. Thammasat Review, 18(2), 116–137.
- Jenkins, J. (2000). The phonology of English as an international language: New models, new norms, new goals. Oxford University Press.
- Kachinske, I. (2021). Skill acquisition theory and the role of rule and example learning. Journal of Contemporary Philology, 4(2), 25–41.
- Kaewpet, C. (2009). A framework for investigating learner needs: Needs analysis extended to curriculum development. Electronic Journal of Foreign Language Teaching, 6(3), 209–220.
- Kang, S. W., Lee, Y. J., Lim, H. J., & Choi, W. K. (2024). Development of AI convergence education model based on machine learning for data literacy. Advanced Industrial Science, 3(1), 1–16.
- Kanokpermpoon, M. (2007). Thai and English consonantal sounds: A problem or a potential for EFL learning? ABAC Journal, 27(1), 57–66.
- Kaya, S. (2021). From needs analysis to development of a vocational English language curriculum: A practical guide for practitioners. Journal of Pedagogical Research, 5(1), 154–171.
- Khamkhien, A. (2010). Teaching English speaking and English speaking tests in the Thai context: A reflection from Thai perspective. English Language Teaching, 3(1), 184.
- Khampusaen, D., Chanprasopchai, T., & Lao-un, J. (2023). Empowering Thai community-based tourism operators: Enhancing English pronunciation abilities with AI-based lessons. Mekong Journal, 21(1), 45–60.
- Kitikanan, P. (2017). The effects of L2 experience and vowel context on the perceptual assimilation of English fricatives by L2 Thai learners. English Language Teaching, 10(12), 72.
- Kormos, J. (2006). Speech production and second language acquisition. Lawrence Erlbaum Associates.
- Lao-un, J., & Bunyaphithak, W. (2025). Needs analysis of English language in Thai performing arts profession. Asia Social Issues, 18(5), 2–13.
- Lara, M. S., Subhashini, R., Shiny, C., Lawrance, J. C., Prema, S., & Muthuperumal, S. (2024, April 12–14). Constructing an AI-assisted pronunciation correction tool using speech recognition and phonetic analysis for ELL. 2024 10th International Conference on Communication and Signal Processing (ICCSP) (pp. 1021–1026), Melmaruvathur, India.
- Lee, C., Zhang, Y., & Glass, J. (2013, October 18–21). Joint learning of phonetic units and word pronunciations for ASR. The 2013 Conference on Empirical Methods in Natural Language Processing (pp. 182–192), Seattle, WA, USA.
- Levis, J. M. (2018). Intelligibility, oral communication, and the teaching of pronunciation. Cambridge University Press.
- Liu, Y., binti Ab Rahman, F., & binti Mohamad Zain, F. (2025). A systematic literature review of research on automatic speech recognition in EFL pronunciation. Cogent Education, 12(1), 2466288.
- McCrocklin, S. (2019). Dictation programs for second language pronunciation learning. Journal of Second Language Pronunciation, 5(2), 242–258.
- McCrocklin, S., Fettig, C., & Markus, S. (2022, September 14). SalukiSpeech: Integrating a new ASR tool into students’ English pronunciation practice. Virtual PSLLT, Virtual.
- Mohammadkarimi, E. (2024). Exploring the use of artificial intelligence in promoting English language pronunciation skills. LLT Journal: A Journal on Language and Language Teaching, 27(1), 98–115.
- Naruemon, D., Bhoomanee, C., & Pothisuwan, P. (2024). Challenges of Thai learners in pronouncing English consonant sounds. The Golden Teak: Humanity and Social Science Journal, 30(3), 1–19.
- Neri, A., Cucchiarini, C., & Strik, W. (2003, August 3–9). Automatic Speech Recognition for second language learning: How and why it actually works. 15th ICPhS (pp. 1157–1160), Barcelona, Spain.
- Ngo, T. T. N., Chen, H. H. J., & Lai, K. K. W. (2024). The effectiveness of automatic speech recognition in ESL/EFL pronunciation: A meta-analysis. ReCALL, 36(1), 4–21.
- Noom-ura, S. (2013). English-teaching problems in Thailand and Thai teachers’ professional development needs. English Language Teaching, 6(11), 139.
- Pawlak, M. (2022). Research into individual differences in SLA and CALL: Looking for intersections. Language Teaching Research Quarterly, 31, 200–233.
- Peerachachayanee, S. (2022). Towards the phonology of Thai English. Academic Journal of Humanities and Social Sciences Burapha University, 30(3), 64–92.
- Plonsky, L., & Oswald, F. L. (2014). How big is “big”? Interpreting effect sizes in L2 research. Language Learning, 64(4), 878–912.
- Rajkumari, Y., Jegu, A., Fatma, D. G., Mythili, M., Vuyyuru, V. A., & Balakumar, A. (2024, October 25–26). Exploring neural network models for pronunciation improvement in English language teaching: A pedagogical perspective. 2024 International Conference on Intelligent Systems and Advanced Applications (ICISAA) (pp. 1–6), Pune, India.
- Rebuschat, P. (2013). Measuring implicit and explicit knowledge in second language research. Language Learning, 63(3), 595–626.
- Schmidt, R. (1990). The noticing hypothesis: Awareness and second language acquisition. Studies in Second Language Acquisition, 12(3), 1–20.
- Schmidt, R. (2001). Attention. In P. Robinson (Ed.), Cognition and second language instruction (pp. 3–32). Cambridge University Press.
- Schmidt, T., & Strassner, T. (2022). Artificial intelligence in foreign language learning and teaching. Anglistik, 33(1), 165–184.
- Segalowitz, N. (2010). Cognitive bases of second language fluency (1st ed.). Routledge.
- Seidlhofer, B. (2011). Understanding English as a lingua franca. Oxford University Press.
- Shafiee Rad, H., & Roohani, A. (2024). Fostering L2 learners’ pronunciation and motivation via affordances of artificial intelligence. Computers in the Schools, 42(3), 1–12.
- Skehan, P. (2015). Foreign language aptitude and its relationship with grammar: A critical overview. Applied Linguistics, 36(3), 367–384.
- Strange, W. (2011). Automatic selective perception (ASP) of first and second language speech: A working model. Journal of Phonetics, 39(4), 456–466.
- Sukying, A., Supunya, N., & Phusawisot, P. (2023). ESP teachers: Insights, challenges and needs in the EFL context. Theory and Practice in Language Studies, 13(2), 396–406.
- Taie, M. (2014). Skill acquisition theory and its important concepts in SLA. Theory and Practice in Language Studies, 4(9), 1971–1976.
- Thomson, R. I., & Derwing, T. M. (2015). The effectiveness of L2 pronunciation instruction: A narrative review. Applied Linguistics, 36(3), 326–344.
- Tomasello, M. (2003). Constructing a language: A usage-based theory of language acquisition. Harvard University Press.
- Wang, J. (2024). Optimizing English pronunciation teaching through motion analysis and intelligent speech feedback systems. Molecular & Cellular Biomechanics, 21(4), 652.
- Wongsuriya, P. (2020). Improving the Thai students’ ability in English pronunciation through mobile application. Educational Research and Reviews, 15(4), 175–185.
- Wulff, S., & Ellis, N. C. (2018). Usage-based approaches to second language acquisition. In D. Miller, F. Bayram, J. Rothman, & L. Serratrice (Eds.), Bilingual cognition and language: The state of the science across its subfields (pp. 37–56). John Benjamins Publishing Company.

| Authors/Tools | Description | Methodologies | Findings | Limitations |
|---|---|---|---|---|
| I Love Indonesia (ILI) and NovoLearning (NOVO) (Bashori et al., 2021) | Immediate and corrective feedback on phonetic details | Experimental research; short intervention (5 weeks) | Both ASR systems improved learners’ pronunciation, with NOVO (richer feedback) showing more substantial gains. | Short study duration; no long-term effects measured. |
| SpeakBuddy (Akhila et al., 2024) | Interactive conversation and instant feedback | Case study | Enhanced learner autonomy and engagement; increased confidence and accuracy in pronunciation. | Focused on one context; generalizability limited. |
| SalukiSpeech (McCrocklin et al., 2022) | Flexible, student-driven, implicit feedback for sound segments | Tool development; user trials | Segmental accuracy improved, especially with problematic vowels/consonants; learners actively engage in their own process. | Limited practice variety (only one picture description task). |
| LangGo (Khampusaen et al., 2023) | Contextualized lessons and error feedback aligned with learner backgrounds | Case study: Thai EFL learners | Itemized individual error analysis; improved target segmental features; context improves relevance. | Applicability beyond Thai/ESP context not tested. |
| ASR + Phonetic Analysis System (Lara et al., 2024) | Combination of ASR + phonetic analysis for precision | Technical evaluation with learners | Precise feedback on articulation/intonation, revealing subtle errors hard to catch by human teachers. | Technology-dependent; user interface challenges. |
| Listnr & Murf (Mohammadkarimi, 2024) | AI tools with instant feedback and consumable practice exercises | Mixed methods | Notable pronunciation gains; increased learner motivation. | Black-box feedback logic; possible over-reliance on technology. |
| CNN-Based Systems (Rajkumari et al., 2024) | Neural network-powered personalized feedback on accuracy and fluency | Experimental | Personalized, real-time corrections outperformed static approaches; improved both accuracy and fluency. | Resource-intensive; requires large, clean training sets. |

| Test | Group | Kolmogorov–Smirnov Statistic | Kolmogorov–Smirnov df | Kolmogorov–Smirnov Sig. | Shapiro–Wilk Statistic | Shapiro–Wilk df | Shapiro–Wilk Sig. |
|---|---|---|---|---|---|---|---|
| Pretest | Exp | 0.12 | 38 | 0.174 | 0.96 | 38 | 0.186 |
| Pretest | Control | 0.14 | 36 | 0.077 | 0.96 | 36 | 0.216 |
| Posttest | Exp | 0.11 | 38 | 0.200 | 0.98 | 38 | 0.579 |
| Posttest | Control | 0.09 | 36 | 0.200 | 0.97 | 36 | 0.540 |

| Week | Instructional Stage (SAT) | Focus Content | Control Group (Traditional Instruction) | Experimental Group (AI-Mediated Practice) |
|---|---|---|---|---|
| 1–2 | Cognitive (Declarative) | | | |
| 3–4 | Cognitive to Associative | | | |
| 5–6 | Associative (Procedural) | Voiced fricatives (/v/, /z/) | | |
| 7–8 | Associative | Interdental fricatives (/θ/, /ð/) | | |
| 9–10 | Associative to Autonomous | Post-alveolar fricatives (/ʃ/, /ʒ/) | | |
| 11–12 | Autonomous | Sentence-level and ESP wordlist practice | | |
| 13–14 | Autonomous | Contextualized speech production | | |
| 15–16 | Post-Instruction Evaluation | Pronunciation test (ESP-specific words) and reflective evaluation | | |

| AI Accuracy | Rating Scale | Descriptions |
|---|---|---|
| >90% | 8 | The rater would perceive the fricative as consistently and clearly produced, closely matching native-like pronunciation with minimal or no discernible errors. |
| 80–90% | 7 | |
| 70–80% | 6 | The rater might notice some inconsistencies or minor deviations in the production of the fricative, but the sound remains largely intelligible. |
| 60–70% | 5 | |
| 50–60% | 4 | |
| 40–50% | 3 | The rater would identify significant and frequent errors in the production of the fricative, potentially leading to reduced intelligibility. |
| 30–40% | 2 | |
| <30% | 1 | |
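
The mapping from AI accuracy to the 8-point rating scale in the table above can be sketched as a small Python function. The function name `accuracy_to_rating` is hypothetical, and the table does not say which band a boundary value such as exactly 80% belongs to; the sketch assumes each band includes its lower bound, and that exactly 90% falls in the 80–90% band because the top band is written as ">90%".

```python
def accuracy_to_rating(accuracy):
    """Map an AI accuracy percentage (0-100) to the 8-point rating scale.

    Assumption (not stated in the table): each band includes its lower
    bound, and exactly 90% belongs to the 80-90% band.
    """
    if not 0 <= accuracy <= 100:
        raise ValueError("accuracy must be between 0 and 100")
    if accuracy > 90:
        return 8
    # Lower bounds of the bands for ratings 7 down to 2.
    for rating, lower in zip(range(7, 1, -1), (80, 70, 60, 50, 40, 30)):
        if accuracy >= lower:
            return rating
    return 1  # below 30%


ratings = [accuracy_to_rating(a) for a in (95, 90, 72.5, 45, 12)]
```

Under these boundary assumptions the five sample accuracies map to ratings 8, 7, 6, 3 and 1.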

| Test | Group | M | SD | MD | df | t | p | Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| Pretest | Exp (N = 36) | 87.67 | 10.07 | 47.36 | 35 | −14.21 | <0.001 | 2.32 |
| Posttest | Exp (N = 36) | 135.03 | 16.99 | | | | | |
| Pretest | Control (N = 38) | 85.45 | 15.39 | 35.71 | 37 | −15.10 | <0.001 | −2.45 |
| Posttest | Control (N = 38) | 121.16 | 23.98 | | | | | |

| Test | Group | M | SD | df | t | p | Cohen’s d |
|---|---|---|---|---|---|---|---|
| Pre-test | Control (N = 38) | 87.67 | 15.39 | 72 | 0.73 | 0.468 | −0.17 |
| Pre-test | Exp (N = 36) | 85.45 | 16.99 | | | | |
| Post-test | Control (N = 38) | 121.16 | 23.98 | 72 | 2.86 | 0.006 | −0.66 |
| Post-test | Exp (N = 36) | 135.03 | 16.99 | | | | |
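
As a worked check of the post-test comparison, the following Python sketch recomputes the independent-samples t statistic and Cohen's d from the summary statistics in the table. It assumes a Student's t-test with equal variances (consistent with df = 72) and a pooled-standard-deviation Cohen's d; the function name `independent_t_from_summary` is hypothetical, and the authors' actual analysis software is not assumed.

```python
import math


def independent_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's t (equal variances assumed), df, and pooled-SD Cohen's d
    computed from group means, standard deviations, and sample sizes."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    pooled_sd = math.sqrt(pooled_var)
    diff = m1 - m2
    t = diff / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))
    d = diff / pooled_sd
    return t, df, d


# Post-test row: control group first, experimental group second.
t, df, d = independent_t_from_summary(121.16, 23.98, 38, 135.03, 16.99, 36)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")  # prints "t(72) = -2.86, d = -0.66"
```

The magnitudes agree with the reported post-test values (t = 2.86, d = −0.66). The sign depends only on which group is entered first, so a negative value here means the experimental group scored higher.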

| Fricative | Group | M | SD | df | t | p | Mean Difference | Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| /f/ | Control (N = 38) | 14.32 | 2.27 | 72 | −1.89 | 0.063 | −1.02 | −0.44 |
| /f/ | Exp (N = 36) | 15.32 | 3.59 | | | | | |
| /v/ | Control (N = 38) | 15.18 | 3.59 | 72 | −0.86 | 0.394 | −0.76 | −0.20 |
| /v/ | Exp (N = 36) | 15.94 | 4.04 | | | | | |
| /θ/ | Control (N = 38) | 13.79 | 3.76 | 72 | −2.28 | 0.026 * | −2.02 | −0.53 |
| /θ/ | Exp (N = 36) | 15.81 | 3.85 | | | | | |
| /ð/ | Control (N = 38) | 13.32 | 3.30 | 72 | −2.12 | 0.037 * | −1.74 | −0.49 |
| /ð/ | Exp (N = 36) | 15.06 | 3.76 | | | | | |
| /s/ | Control (N = 38) | 14.76 | 7.88 | 72 | −1.37 | 0.175 | −1.90 | −0.32 |
| /s/ | Exp (N = 36) | 16.67 | 2.82 | | | | | |
| /z/ | Control (N = 38) | 14.71 | 4.45 | 72 | −2.92 | 0.005 ** | −2.51 | −0.68 |
| /z/ | Exp (N = 36) | 17.22 | 2.68 | | | | | |
| /ʃ/ | Control (N = 38) | 15.39 | 4.48 | 72 | −2.74 | 0.008 ** | −2.36 | −0.64 |
| /ʃ/ | Exp (N = 36) | 17.75 | 2.61 | | | | | |
| /ʒ/ | Control (N = 38) | 9.39 | 3.12 | 72 | −0.16 | 0.877 | −0.11 | −0.04 |
| /ʒ/ | Exp (N = 36) | 9.50 | 2.67 | | | | | |
| /h/ | Control (N = 38) | 10.29 | 2.20 | 72 | −2.61 | 0.011 * | −1.46 | −0.61 |
| /h/ | Exp (N = 36) | 11.75 | 2.60 | | | | | |
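
To help read the effect sizes in the table, the Python sketch below labels each Cohen's d using the between-group benchmarks proposed by Plonsky and Oswald (2014) for L2 research (small = 0.40, medium = 0.70, large = 1.00). Whether the authors applied exactly these cut-offs to each fricative is an assumption here; the function name `interpret_d` and the label "below small" are hypothetical.

```python
# Between-group benchmarks proposed by Plonsky and Oswald (2014).
BENCHMARKS = ((1.00, "large"), (0.70, "medium"), (0.40, "small"))


def interpret_d(d):
    """Label the magnitude of Cohen's d; the sign is ignored."""
    size = abs(d)
    for cutoff, label in BENCHMARKS:
        if size >= cutoff:
            return label
    return "below small"


# Cohen's d values copied from the table above.
table_d = {"f": -0.44, "v": -0.20, "θ": -0.53, "ð": -0.49, "s": -0.32,
           "z": -0.68, "ʃ": -0.64, "ʒ": -0.04, "h": -0.61}
labels = {fricative: interpret_d(d) for fricative, d in table_d.items()}
```

On these cut-offs, /z/, /ʃ/ and /h/ fall in the small band but sit closest to the medium threshold, while /v/, /s/ and /ʒ/ fall below the small benchmark.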

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lao-un, J.; Khampusaen, D. Developing an AI-Powered Pronunciation Application to Improve English Pronunciation of Thai ESP Learners. Languages 2025, 10, 273. https://doi.org/10.3390/languages10110273
