Abstract

A complex command and control task was selected as the test task, which included observing the overall and local situation, the interactive operation and situation display of detection equipment, the erection and launch of air defense equipment, and the check and display status. The disadvantages of the traditional two-dimensional display interactive system include poor intuitiveness, insufficient information display dimension and complicated interactive operation. The mixed reality display interaction system can avoid these problems well and has the advantages of good portability and high efficiency, but this display interaction system has the problem of high cognitive load. Therefore, based on the premise of completing the same complex task, how to select and improve the display interaction system has become a problem worthy of urgent research. Based on the same complex command and control task, this paper compared the traditional two-dimensional display interaction system and the mixed reality display interaction system and analyzed the performance and cognitive load of the two systems. It is concluded that when completing the same task, the performance of the mixed reality display interaction system is significantly higher than that of the traditional two-dimensional display interaction system, but the cognitive load is slightly higher than that of the traditional two-dimensional display. Cognitive load was reduced while task performance was improved through multi-channel improvements to the mixed reality display interaction system. Considering the effects of performance and cognitive load, the improved multi-channel mixed reality display interaction system is superior to the unimproved mixed reality display interaction system and the two-dimensional display interaction system. 
This research provides an improvement strategy for the existing display interaction system and provides a new display interaction mode for future aerospace equipment and multi-target, multi-dimensional command and control tasks in war.

1. Introduction

With the development of science and technology and the evolution of the war system, the mode of war has changed from mechanized war to information war, and combat has changed from single-service operations to multi-service joint operations. The type of war has also changed from quantity-scale and manpower-intensive to quality-efficiency and technology-intensive [1]. Highly informatized weapon systems, sensitive information resources and ultra-multi-dimensional combat space are the development directions of today’s informatized warfare [2]. Under this trend, weapons and equipment are also being upgraded towards generalization, networking and miniaturization [3]. As the form of warfare evolves and the information perception capability of aerospace equipment continues to grow, the information processed and exchanged through the human–machine interface of aerospace equipment is changing from traditional situational information to multi-dimensional, complex information spanning land, sea, air, space and the electromagnetic domain [4,5]. The human–machine interface of the command-and-control system needs to process multi-dimensional, high-density and multi-form information, such as battlefield situations, equipment status and combat instructions, in a shorter time [6]. An investigation of existing aerospace equipment shows that information perception and acquisition are currently performed mainly on a two-dimensional basis. Multi-dimensional information and situations put great pressure on this two-dimensional perception and acquisition mechanism and impose obstacles and limitations on operators’ information perception [7]. The two-dimensional mechanism struggles to display and support interaction with multi-dimensional, complex information spanning land, sea, air, space and the electromagnetic domain, and cannot adapt to the combat needs of air and space equipment in future wars [8]. The development of mixed reality (hereinafter referred to as MR) technology provides a new display interaction mode to solve this problem, which can display information in a higher dimension and realize holographic perception of the overall situation information [9]. Moreover, with the evolution of war forms, the small size and portability of MR devices become particularly important. However, while MR brings good interactive modes of information display, it also brings higher visual fatigue and cognitive load to users [10]. Multi-channel interaction is an important interaction method in human–computer interaction. Through the cooperation of multiple sensory channels, task performance can be improved and cognitive load reduced [11]. Using multi-channel interaction to improve MR can not only improve performance and reduce visual fatigue and cognitive load, but also free users from complex interactions so that they can focus more on actual tasks [12].

The command and control of air defense tasks has so far been carried out under the two-dimensional display interaction system. This display interaction method has several problems, such as insufficient information display dimensions, low display quality, and complex interactive operations, which greatly affect the performance of air defense missions [13,14,15]. Mission performance, in turn, is a key factor in selecting and optimizing display interaction systems for air defense missions. An MR display interactive system offers good immersion and interaction modes. When it is used to complete a complex command and control task, it can achieve higher task performance and establish a better interaction system [16,17], and it has good portability. However, the MR display interactive system has the defect of high cognitive load [18]. Therefore, how to optimize and improve the display interactive system for the same complex command and control task is an urgent problem to be solved.

The research content of this paper is therefore as follows. For the same complex command and control task, the performance and cognitive load of the MR display interactive system and the two-dimensional display interactive system were compared, and the two display interactive systems were evaluated by combining task completion time, eye movement, EEG and subjective cognitive load data. In this way, the problems of the MR display interactive system in complex command and control tasks were explored, and a multi-channel improvement was carried out to address them. A comprehensive evaluation was then conducted between the improved multi-channel MR display interactive system and the first two display interactive systems to determine the most suitable display interactive system for the complex command and control system.

Patrzyk and M. Klee pointed out that the traditional two-dimensional display interactive system leads to a lack of depth perception, and that novices have a high error rate when using it to perform complex tasks [19]. Nicolas Gerig, Johnathan Mayo and others pointed out that the quantity and quality of the visual information cues presented by traditional two-dimensional display modes are limited, which affects the performance and quality of user interaction [20]. After comparing and analyzing various display modes, Chiuhsiang Joe Lin et al. concluded that the traditional two-dimensional display mode has low immersion and low visual search efficiency [21]. It can be seen that the traditional two-dimensional display cannot meet the information display and interaction needs of the complex early warning and interception task considered in this paper. There is an urgent need to explore a new way of display interaction.

MR is a new technology that seamlessly integrates real-world information and virtual-world information [22]: physical information (visual information, sound, taste, touch, etc.) that is difficult to experience within a certain time and space of the real world is simulated by computer science and other technologies and superimposed, so that virtual information is applied to the real world [23,24,25]. Perceived through the human senses, it makes it possible to experience a feeling beyond reality [26,27]. The real environment and virtual objects coexist in the same picture or space [28]. Mixed reality technology displays real-world information and virtual information at the same time. The user wears a head-mounted display that combines the real world with computer graphics, and sees the real world with the virtual world superimposed on and surrounding it [29].

MR head-mounted displays are highly immersive, but because of conflicting multi-sensory inputs, head-mounted displays in MR environments are highly prone to causing visually induced motion sickness (VIMS) [30,31,32]. Additionally, the research of Hoffman, Shibata and Jeng shows that, owing to the physiological characteristics of the vergence adjustment system of the human eye, the degree of visual fatigue is very high when people receive information in a mixed reality environment [33,34,35]. Kim, Kane, Banks, and Kanda et al. explained in their studies that vergence-accommodation conflicts in mixed reality environments cause greater binocular stress [36,37].

Because MR head-mounted displays project parallax images directly into both eyes, they cause greater visual stress to the human eye [38,39]. Therefore, over the same usage time, the visual stress and fatigue caused by MR head-mounted displays are greater than those caused by 2D screens [40].

2. Method

2.1. Subject

Twenty healthy male students aged 20–30 years from an engineering university were selected (SD = 2.4). All participated voluntarily, and all underwent an eye exam confirming a visual acuity or corrected visual acuity of 5.0 and no color blindness or color weakness. Before the experiment, each subject was informed of the research nature of the experiment and possible complications, and their consent was obtained. The 20 students were randomly divided into two groups of 10 students each.
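The random division into two groups of 10 can be illustrated with a minimal sketch. The participant labels, the function name `assign_groups` and the seed are assumptions made for illustration; the paper does not state how the randomization was actually performed.

```python
import random


def assign_groups(participant_ids, group_size, seed=None):
    """Shuffle the participants and split them into two equal groups."""
    if len(participant_ids) != 2 * group_size:
        raise ValueError("expected exactly two groups' worth of participants")
    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return shuffled[:group_size], shuffled[group_size:]


# Hypothetical participant labels P01..P20; the seed value is arbitrary.
participants = [f"P{i:02d}" for i in range(1, 21)]
group_a, group_b = assign_groups(participants, group_size=10, seed=42)
```

Fixing the seed is only a convenience for reproducing the split; any unbiased shuffle would serve the same purpose.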

Experimental Site and Experimental Equipment

The experimental site is located indoors and the experiments were carried out in a special mixed reality training room. The site area was about 40 m². The indoor temperature was constant at 25 °C and the humidity was 52%.

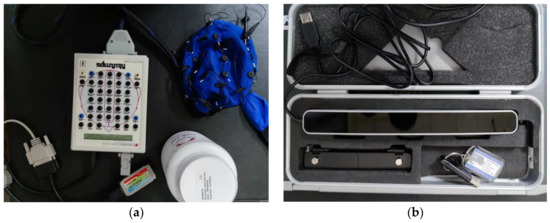

The MR display device is Microsoft’s second-generation HoloLens (HoloLens2); Figure 1a is a photo of the device. As a typical hardware device for mixed reality technology, it lets users keep walking in real space without being cut off from it. HoloLens2 can track the user’s movement and changes in line of sight and project virtual holograms onto the user’s eyes through light projection, supporting real-time interaction between users and virtual objects through gestures, voice and gaze. The two-dimensional display device is an AOC 27-inch monitor with a resolution of 1920 × 1080 pixels, a screen refresh rate of 75 Hz, an aspect ratio of 16:9, and a brightness set to 200 cd/m². Figure 1b is a photo of the device.

Figure 1.

Display devices ((a) Mixed reality display: HoloLens2; (b) Two-dimensional display: AOC monitor).

The experimental test devices include an EEG recorder and an eye tracker. The EEG model is Nuamps7181. It has 40 monopolar analog input channels, selectable sampling frequencies of 125, 250, 500 and 1000 Hz per channel, an input range of ±130 mV, an input impedance of no less than 80 MOhm, a common mode rejection ratio of 100 dB at 50/60 Hz, and an input noise of 0.7 μV RMS (4 μV peak-to-peak). Its interface is USB with full hot-plug support. Figure 2a is a photo of the device. The eye tracker is a Tobii Pro Glasses 2 with a sampling rate of 50 Hz/100 Hz; it performs parallax correction automatically and has built-in slip compensation and tracking technology. The device also supports absolute measurement of pupil size and provides HDMI, USB and 3.5 mm interfaces. Figure 2b is a photo of the device.

Figure 2.

Experimental Test Setup ((a) Electroencephalograph; (b) Eye tracker).

2.2. Experimental Task Model

The experimental task model is a command-and-control sand table system developed in Microsoft Visual C#; 3ds Max was used for 3D modeling, and Unity 3D was used for animation production and rendering. In the simulated air defense early warning and interception scenario, there were two blue-side planes, one conducting low-altitude penetration and the other conducting reconnaissance at high altitude. The red side deployed two air defense positions on the battlefield, each with radar and missile vehicles. The radars of the two red-side positions could not directly detect the high-speed, low-altitude aircraft, so the red side used a drone group to conduct situational awareness on the battlefield and detect the low-altitude penetration aircraft of the blue side. The drone group transmitted its situational awareness results to the commander. The commander locked the position of the blue plane according to the data transmitted by the drone group and used the missile vehicle in the air defense position closer to the blue plane to launch a missile and shoot it down. The high-altitude blue-side reconnaissance plane could be discovered directly by the red-side radar, which could lock its position directly. Based on the radar information, the commander controlled the missile vehicle in the air defense position closer to the high-altitude blue plane to fire missiles and shoot it down.
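The rule that the commander engages from the air defense position closer to the locked aircraft can be sketched as a nearest-point selection. This is only an illustration: the coordinates, position names and the function `nearest_position` are hypothetical, and in the experiment the choice was made by the human commander rather than by software.

```python
import math


def nearest_position(positions, target):
    """Return the name of the air defense position closest to the target.

    positions: dict mapping a position name to its (x, y) map coordinates.
    target: (x, y) coordinates of the locked blue-side aircraft.
    """
    return min(positions, key=lambda name: math.dist(positions[name], target))


# Hypothetical coordinates in arbitrary map units.
red_positions = {"position_1": (0.0, 0.0), "position_2": (100.0, 0.0)}
low_altitude_plane = (80.0, 10.0)
chosen = nearest_position(red_positions, low_altitude_plane)
```

With these placeholder coordinates the aircraft is nearer to `position_2`, so that position's missile vehicle would be the one to erect and launch.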

Task Model Establishment

To establish the virtual model and the holographic human–computer interaction interface, the equipment models required for the experiment, such as radar vehicles, drones and fighter jets, were built with 3ds Max [41], as shown in Figure 3.

Figure 3.

Some of the relevant models.

The selection of domestic and foreign air defense exercise venues was analyzed and it was concluded that the exercise locations for air defense tasks are mainly concentrated in the desert, such as the Gobi, and other sparsely populated terrain areas. A desert terrain was chosen as the map for this experiment in order to better fit the actual exercise scene, as shown in Figure 4. In practical exercises, commanders often erase terrain details in order to reduce operational burden and error rate when conducting command and control of air defense weapons. Therefore, the selection of a terrain basically meets the general requirements of the exercise and does not affect the process and results of the experiment.

Figure 4.

Map.

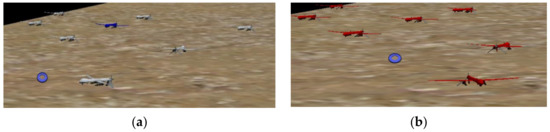

Three UAV swarms were designed. When no enemy has been found, the three swarms fly a preset search formation to perceive the situation on the battlefield and guard against low-altitude attacks by blue-side aircraft, as shown in Figure 5a. When one UAV in a formation spots the blue-side plane, the UAVs change their formation. Figure 5b shows the response of the UAVs after finding the blue-side aircraft.
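The swarm behaviour described above amounts to a two-state switch: a swarm searches until one of its UAVs reports a detection, then changes formation and color. A minimal sketch follows; the class, state and color names are hypothetical, and the actual system was built in Unity 3D rather than Python.

```python
from dataclasses import dataclass
from enum import Enum


class Formation(Enum):
    SEARCH = "search"      # preset search formation, no target found yet
    TRACKING = "tracking"  # formation adopted after a target is spotted


@dataclass
class Swarm:
    formation: Formation = Formation.SEARCH
    color: str = "default"

    def report_detection(self):
        """Called when any UAV in the swarm spots the blue-side aircraft."""
        self.formation = Formation.TRACKING
        self.color = "alert"


swarm = Swarm()
state_before = swarm.formation
swarm.report_detection()
```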

Figure 5.

Battlefield awareness of drone formations ((a) UAVs on search mission; (b) UAVs change formation and color after spotting a target).

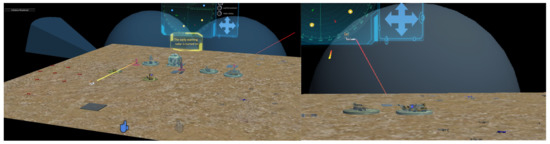

In the real world, radar waves cannot be observed with the naked eye and can only be represented through planar scan displays. To display the radar beam visually, this paper adopts 3D animations of hemispherical and sector scanning, which intuitively express the effect of radar waves. In addition, to avoid blocking the line of sight, a light blue translucent material was selected. The specific effect is shown in Figure 6.

Figure 6.

Radar situation.

After the blue-side aircraft is detected by the warning radar, it is exposed, and the system locks onto it and displays a dynamic connection line to the locked target. The specific effect is shown in Figure 7.

Figure 7.

Schematic diagram of blue-side aircraft target locking.

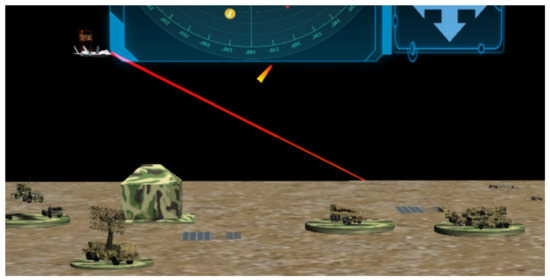

After the blue plane was stably tracked, the missile vehicle entered the ready state. On the commander’s order, the missile flew towards the enemy plane at high speed. After approaching the blue-side plane, the missile exploded, and the blue-side plane and missile disappeared. These actions were produced as Unity 3D animations, using techniques such as keyframe animation, particle animation and sprite animation. The specific effect is shown in Figure 8.

Figure 8.

Strike interception diagram.

Finally, the options for loading the red-side terrain, displaying blue-side information, loading landmarks and displaying radar were checked. The specific effect is shown in Figure 9.

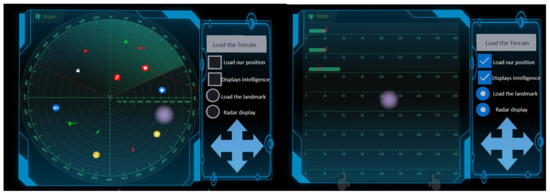

Figure 9.

Select interface display content.

2.3. Task Flow

- Task 1: Observe the entire command sand table.

- Task 2: Turn on the radars of the two air defense areas.

- Task 3: Observe the whole process in which the drone group changes color and formation after finding the blue-side aircraft.

- Task 4: Control the missile vehicle in the air defense area near the low-altitude blue-side aircraft to prepare for erection.

- Task 5: Control the missile vehicle in the air defense area near the low-altitude blue-side aircraft to launch missiles and shoot down the low-altitude blue-side aircraft.

- Task 6: When the high-altitude blue-side aircraft is observed to enter the radar detection range of its adjacent air defense area, control the missile vehicle in that air defense area to prepare for erection.

- Task 7: Control the missile vehicle in the air defense area near the high-altitude blue-side aircraft to launch missiles and shoot down the high-altitude blue-side aircraft.

- Task 8: Check the options for loading the red-side terrain, displaying blue-side intelligence, loading landmarks and displaying radar.

Operational Approach

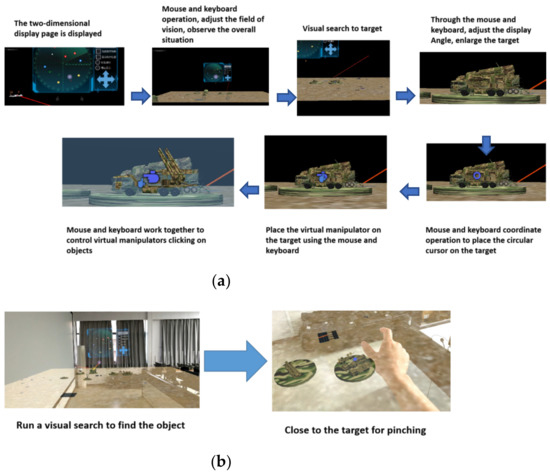

Figure 10a shows the general process of interactive operation in the traditional two-dimensional display mode, and Figure 10b shows the general process of interactive operation in the MR environment. Table 1 shows the operation flow of the two display interactive systems when they complete various tasks.

Figure 10.

Interactive operation method diagram ((a) The operation process in 2D display; (b) Operation process in MR environment).

Table 1.

Operation steps of each task completed by the two display interactive systems.

2.4. Experimental Objective

In the experiment, the tasks completed by the two display interaction systems were completely consistent in task flow and task effect. In this case, collecting and analyzing the differences in performance and cognitive load of the two display interaction systems can reveal the pros and cons of the performance and cognitive load of the two display interaction systems under the same task.

The experimenters used the traditional two-dimensional display interactive system and the MR display interactive system to complete the same task. The task performance and cognitive load of the two display interactive systems were then compared to identify the advantages and disadvantages of each.

The selected tasks include the observation of the overall and local situation, the interactive operation and situation display of detection equipment, the erection and launching of weapons and equipment, and the checking of status display. The above task types are proposed by experts in the field of air defense, which are typical tasks in air defense missions and can represent the general process of the commander’s command operation in air defense missions.

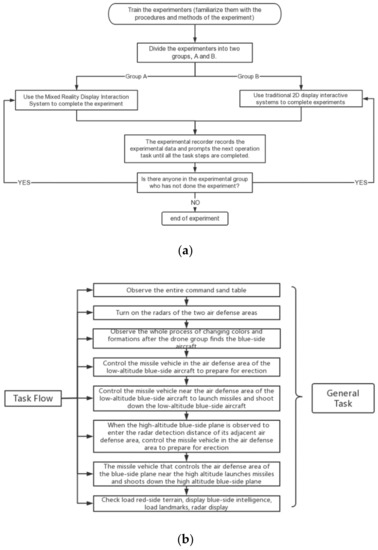

2.5. Experiment Process

- In order to avoid the learning effect, all the testers were trained to be familiar with the operation process and operation method of the whole experiment and performed a rehearsal first.

- It was important that the tester was in a good state of mind and relaxed.

- The experimenters were divided into two groups, A and B, and conducted the experiments on the mixed reality display interaction system and the traditional two-dimensional display interaction system, respectively.

- The experimental data recorder prompts the next operation task, the tester raises his hand to signal after completion, and the recorder records the interval between the prompt operation task and the tester raising his hand as the completion time of this operation task; this cycle was repeated until the entire experimental task was completed.
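The timing procedure in the last step (prompt, hand raise, record the interval, repeat) can be sketched as a small timer. The class `TaskTimer` and its method names are hypothetical; in the experiment the recorder was a person, and nothing here is taken from the authors' tooling.

```python
import time


class TaskTimer:
    """Records the interval between a task prompt and the completion signal."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock    # injectable so the timer can be tested
        self._current = None   # task that is currently being timed
        self._started = None   # clock reading at the prompt
        self.durations = {}    # task name -> completion time in seconds

    def prompt(self, task):
        """The recorder announces the next operation task."""
        self._current = task
        self._started = self._clock()

    def hand_raised(self):
        """The participant signals completion; store the elapsed time."""
        if self._started is None:
            raise RuntimeError("no task has been prompted")
        self.durations[self._current] = self._clock() - self._started
        self._started = None

    def total(self):
        """Total completion time over all recorded tasks."""
        return sum(self.durations.values())
```

A session would call `prompt("Task 1")`, wait for the participant, call `hand_raised()`, and continue through Task 8; `total()` then gives the overall completion time for that participant.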

The experimental process and task flow are shown in Figure 11.

Figure 11.

Experiment flowchart and task flow ((a) Experimental process; (b) Task flow).

After the experimenter puts on the HoloLens2, the corresponding program is opened, and the headset performs three-dimensional registration based on natural features. Figure 12 shows the experimenter operating on site.

Figure 12.

Field Operation Diagram.

In actual operation, the overall process was clear, consistent and smooth, and the expected effect was achieved. The first-person view of the operation is shown in Figure 13.

Figure 13.

First-person view of the experimenter using the HoloLens2 to complete the task.

2.6. Experimental Data Type

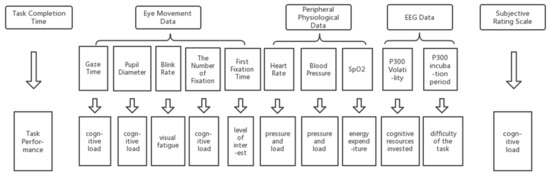

The types of data collected in the experiment are as follows: 1. Task completion time, 2. Eye movement data, 3. Peripheral physiological data, 4. EEG data, and 5. Subjective evaluation scale scoring. Eye movement data included average fixation time, pupil diameter, blink frequency, number of fixation points, and first fixation time; peripheral physiological data included heart rate, blood pressure and blood oxygen saturation; EEG data collected were the amplitude and latency of P300 when subjects completed the task. The specific data types and their contributions to the experimental results are shown in Figure 14.

Figure 14.

Data Types and Contributions.

3. Result

3.1. Task Performance

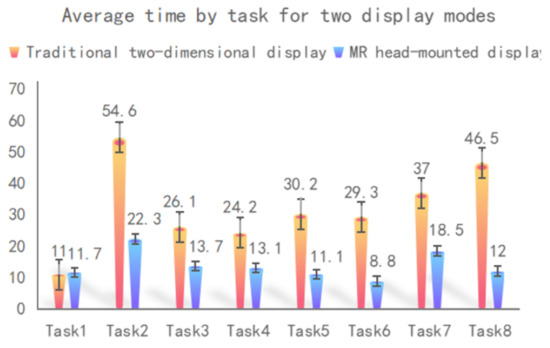

Task completion performance: The average time and total average time to complete each task of the experimental group using the traditional two-dimensional display interactive system and the experimental group using MR display interactive system are shown in Table 2.

Table 2.

Average time to complete tasks using traditional 2D display interaction system and using MR display interaction system.

The data in Table 2 show that the total average time of the traditional two-dimensional display interaction system to complete the task is 258.8 s, far longer than the total average time of 111.2 s for the MR display interaction system. A paired t-test was performed on the two display interaction systems (), together with one-sample t-tests for the traditional two-dimensional display interactive system () and the MR display interactive system ().
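For readers who want to reproduce this kind of comparison, the paired t statistic can be computed from the per-participant differences as sketched below. The function name and the sample values are placeholders, not the data of Table 2, and the sketch stops at the statistic and degrees of freedom; obtaining a p-value additionally requires the t distribution from a statistics package.

```python
import math
import statistics


def paired_t_statistic(sample_a, sample_b):
    """Return (t, degrees_of_freedom) for a paired t-test on two samples."""
    if len(sample_a) != len(sample_b) or len(sample_a) < 2:
        raise ValueError("paired samples must have equal length >= 2")
    diffs = [a - b for a, b in zip(sample_a, sample_b)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Placeholder completion times in seconds, NOT the values from Table 2.
times_2d = [10.0, 12.0, 14.0]
times_mr = [8.0, 9.0, 13.0]
t_value, dof = paired_t_statistic(times_2d, times_mr)
```

A large positive `t_value` here would indicate that the first system's completion times are consistently longer than the second's.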

The results show that different display interaction systems have a significant impact on the task completion time, and the MR display interaction system can improve the time performance of the total task more than the traditional display interaction system under the same air defense command and control task.

Figure 15 was obtained by analyzing the average time for the two display interactive systems to complete 1–8 sub-tasks.

Figure 15.

Average time by task for different display interactive systems.

The analysis of Figure 15 shows that the traditional two-dimensional display interactive system has advantages over the MR display interactive system for observing the whole environment in task 1. However, for tasks 2 to 8, including operational tasks, the performance of the MR display interactive system is higher than that of the traditional two-dimensional display interactive system.

3.2. Eye Movement Data

Among the eye movement indices, blink frequency indicates the degree of visual fatigue [42], first fixation time reflects the degree of interest of the experimenter, and pupil diameter, number of fixation points and average fixation time represent the experimenter’s cognitive load level [43]. Because subjects using the MR display interactive system could not wear the HoloLens2 and the eye tracker at the same time, the solution proposed by Hirota, Masakazu, Kanda and Hiroyuki was adopted in this experiment [44]. Using the screen casting function of the HoloLens2, the experimenter and the tester were separated: the experimenter cast the first-person view in real time while completing the task, and the tester watched this first-person view. The eye movement data of the tester were collected using the eye tracker. Although the experimental data vary from person to person, the patterns of visual fatigue and the characteristics of visual cognitive load are consistent across people; therefore, the data obtained by this experimental method are valid and can reflect the difference in visual fatigue and cognitive load between the traditional two-dimensional display interactive system and the MR display interactive system.

Table 3 gives the mean and standard deviation of the eye movement data of 10 male experimenters under the two display interaction systems. The results show that the average fixation time, pupil diameter, blink frequency, number of fixation points and first fixation time of the MR display interaction system are all higher than those of the traditional two-dimensional display interaction system. The paired t-test results show that the eye movement data of the two systems are significantly different.

Table 3.

Average data and standard deviation data of eye movement characteristics of traditional 2D display interactive system and MR display interactive system.

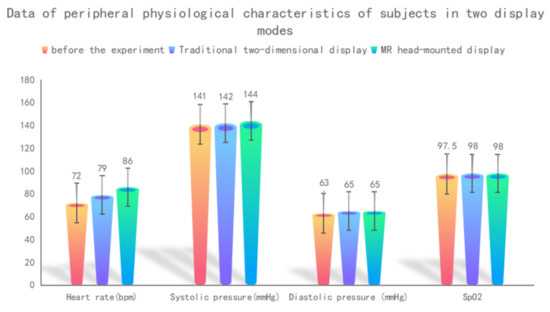

3.3. Peripheral Physiological Characteristics

The peripheral physiological characteristic data were collected when the experimenter did not conduct the experiment, after the traditional two-dimensional display interactive system experiment and after the MR display interactive system experiment [44], as shown in Table 4 and Figure 16.

Table 4.

Peripheral physiological characteristic data of traditional two-dimensional display interactive system and MR display interactive system.

Figure 16.

Peripheral physiological characteristic data of experimenters under different display systems.

In Table 4, the systolic blood pressure, diastolic blood pressure and blood oxygen saturation values did not differ significantly between the traditional two-dimensional display interactive experiment and the MR display interactive experiment, but all three were higher in both groups than before the experiment. The heart rate data in Table 4 show that using either the MR display interactive system or the traditional two-dimensional display interactive system increases the experimenter’s heart rate. Additionally, the heart rate after using the MR display interaction system is higher than after using the traditional two-dimensional display interaction system.

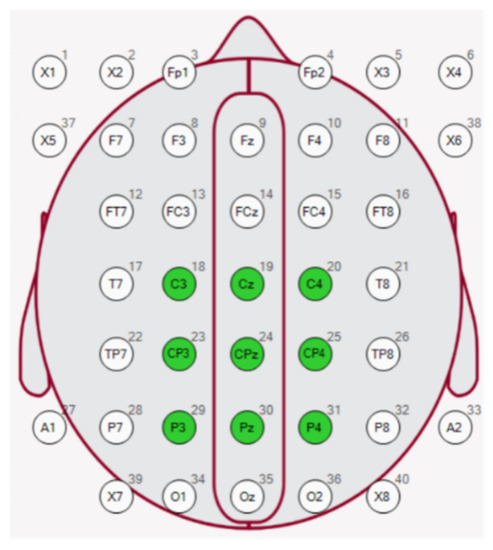

3.4. EEG Data

The amplitude and latency of the P300 EEG component are closely related to human cognitive load [26]. The larger the amplitude and the longer the latency, the greater the cognitive load [45]. In this experiment, nine electrode sites (C3, CZ, C4, CP3, CPZ, CP4, P3, PZ and P4) from the central region to the parietal region, as shown in Figure 17, were selected as the analysis electrodes for the P300 component. Participants in the EEG experiment were all men with normal hair volume.

Figure 17.

Electrode position layout.

Table 5.

P300 Amplitude and Latency.

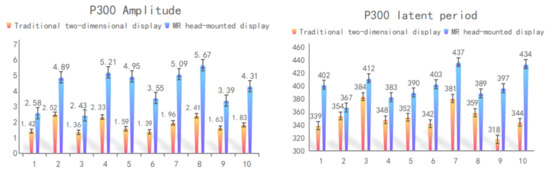

Figure 18.

The experimenter’s P300 EEG data under the two display interaction systems.

Further analysis of the P300 EEG data shows that the average amplitudes for the traditional two-dimensional display interactive system and the MR display interactive system are 1.84 and 4.21, respectively, and the average latencies are 352.1 and 401.4, respectively. The paired t-test results showed that the P300 EEG data of the experimenters using the two systems were significantly different. Both the amplitude and the latency are higher for the MR display interactive system than for the traditional two-dimensional display interactive system. In particular, the average P300 amplitude for the MR display interactive system is 2.29 times that of the traditional two-dimensional display. This shows that the experimenter invests more cognitive resources when using the MR display interaction system than when using the traditional two-dimensional display interaction system.
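The ratios quoted for the P300 data follow directly from the reported means, as the short check below illustrates. The variable names are chosen here for illustration; the four input values are the averages stated in the text, with their units left as reported.

```python
# Mean P300 amplitudes and latencies as reported in the text (2D vs. MR).
amplitude_2d, amplitude_mr = 1.84, 4.21
latency_2d, latency_mr = 352.1, 401.4

# Ratio of the MR value to the two-dimensional value, rounded to 2 decimals.
amplitude_ratio = round(amplitude_mr / amplitude_2d, 2)
latency_ratio = round(latency_mr / latency_2d, 2)

print(amplitude_ratio, latency_ratio)  # prints: 2.29 1.14
```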

3.5. Subjective Evaluation

In addition to the objective performance, eye movement and EEG data obtained with the two display interaction systems, the subjective feelings of the experimenter when interacting with each display mode are an important indicator of cognitive load and visual fatigue [46,47]. The NASA-TLX scale is a widely used and relatively accurate method for collecting subjective cognitive load data in cognitive experiments. The scale includes six evaluation dimensions: mental demand, physical demand, time demand, effort level, performance level, and frustration level [48]. Each evaluation dimension has 20 equal intervals, each representing 5 points; the more intervals marked on a dimension, the higher the load on that dimension. The cognitive load value of the scale is obtained by multiplying the score of each dimension by its corresponding weight and summing over the dimensions. The weight is determined by having the experimenter compare the importance of the six dimensions in pairs and recording the dimension selected as more important in each pair. After $\binom{6}{2} = 15$ comparisons, the number of times each dimension was selected as more important is counted, and 1/15 of that count is the weight of the dimension.

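Then, the NASA-TLX evaluation scores of the two display interaction systems are calculated as shown in Equation (1). The weight of the ith dimension is

$$W_i = \frac{N_i}{15},$$

where $N_i$ is the number of times that the ith dimension is selected as an important dimension by the experimenter. The evaluation score is

$$\mathrm{TLX} = \sum_{i=1}^{6} S_i W_i, \qquad (1)$$

where $S_i$ is the score of the ith dimension, and $W_i$ is the weight of the ith dimension.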
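The weighting procedure described above (15 pairwise comparisons, weight equal to 1/15 of the times a dimension is chosen, score equal to the weighted sum of the dimension ratings) can be sketched as follows. The function names and the sample tallies and ratings are hypothetical and are not taken from Table 6.

```python
DIMENSIONS = ("mental demand", "physical demand", "time demand",
              "effort level", "performance level", "frustration level")


def tlx_weights(times_chosen):
    """Convert pairwise-comparison tallies into weights that sum to 1."""
    if set(times_chosen) != set(DIMENSIONS):
        raise ValueError("tallies must cover all six dimensions")
    if sum(times_chosen.values()) != 15:
        raise ValueError("six dimensions give exactly 15 pairwise comparisons")
    return {dim: count / 15 for dim, count in times_chosen.items()}


def tlx_score(ratings, times_chosen):
    """Weighted NASA-TLX score: sum of rating x weight over the dimensions."""
    weights = tlx_weights(times_chosen)
    return sum(ratings[dim] * weights[dim] for dim in DIMENSIONS)


# Hypothetical answers from one participant (ratings in steps of 5, 0-100).
tallies = dict(zip(DIMENSIONS, (5, 1, 3, 3, 2, 1)))
ratings = dict(zip(DIMENSIONS, (70, 30, 55, 60, 40, 25)))
score = tlx_score(ratings, tallies)
```

Because the tallies always sum to 15, the weights sum to 1 and the resulting score stays on the same 0 to 100 range as the individual ratings.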

Following this method, the cognitive load values obtained are shown in Table 6.

Table 6.

Subjective Cognitive Load.

The paired t-test () on the above data showed significant differences, and the score of the traditional two-dimensional display interactive system is lower than that of the MR display interactive system.

3.6. Experimental Result 1

In the MR environment, the whole scene is constructed in three-dimensional space, and the experimenter needs to keep changing perspective and orientation to observe the whole environment. In the traditional two-dimensional interactive display system, the experimenter can adjust the display size of the sand table with the mouse and keyboard, so that the whole command sand table can be observed directly. On the other hand, in the MR environment the experimenter can interact with a target directly after finding it, whereas in the traditional two-dimensional display interaction system the experimenter must adjust the position, angle and target size of the interface display with the mouse and keyboard together to complete the interaction. In the two-dimensional mode it is impossible to interact with the target directly, and the interaction process is more complicated. Therefore, when observing the whole environment, the performance of the two-dimensional display interactive system is higher than that of the head-mounted display mode, but once interaction is involved in the task, the performance of the MR display interactive system is much higher than that of the two-dimensional display interactive system.

The HoloLens2 builds a mixed reality environment by projecting parallax images directly into the eyes. As a result, the depth at which the eyes focus does not match the depth at which they converge, the strain of vergence and accommodation adjustment increases, and the experimenter experiences greater visual fatigue. In the mixed reality environment, the experimenter has a better sense of immersion and often shows strong interest after entering it. However, the experimenter also receives more information, in more dimensions, so the cognitive load in the MR environment is often higher. The experimenter is therefore more interested in the MR display interaction mode, but when completing the same task, the cognitive load and visual fatigue of the MR display interaction system are slightly higher than those of the traditional two-dimensional screen display.

When people are in a stressful or tense environment, or exert themselves physically, their heart rate increases. The greater the pressure and the higher the load, the higher the heart rate. The data show that experimenters experienced a certain degree of pressure, tension and cognitive load when carrying out the experimental tasks with both the MR display interaction system and the traditional two-dimensional display interaction system. However, experimenters had a higher cognitive load and were more physically exhausted when using the MR display interaction system.

The average amplitude of the P300 EEG component for the MR display interactive system is 2.29 times higher than that for the traditional two-dimensional display. This shows that the experimenter invests more cognitive resources when using the MR display interaction system than when using the traditional two-dimensional display interaction system. The average latency of the P300 EEG component for the MR display interactive system is 1.14 times longer than that for the traditional two-dimensional display interactive system. This shows that it is more difficult for the experimenter to obtain visual information when performing experimental tasks with the MR display interaction system. Taken together, the cognitive load of the traditional two-dimensional display interactive system is significantly lower than that of the MR display interactive system, and visual information is obtained more easily with the traditional two-dimensional display interactive system.

The experimenter adapts more readily to the traditional two-dimensional display interactive system while completing the task, which is related to daily habits: the experimenter uses traditional two-dimensional displays far more often in daily life than MR display interactive systems. Therefore, in the subjective evaluation, experimenters find it more acceptable to complete the experimental tasks with the traditional two-dimensional display interactive system. The data show that the experimenter rates the cognitive load of the traditional two-dimensional display interactive system as lower than that of the MR display interactive system.

4. Conclusions 1

According to the above experimental data analysis, the performance of the MR display interactive system is better than that of the traditional two-dimensional display interactive system, but it is inferior in cognitive load and visual fatigue.

With the future development of space equipment, the traditional two-dimensional display interactive system cannot meet the needs of increasingly complex command and control tasks. The MR display interactive system has better task performance, more information display dimensions and better portability, and can better adapt to the future development of space equipment, but it suffers from visual fatigue and high cognitive load. Therefore, it is necessary to improve the MR display interactive system to reduce its visual fatigue and cognitive load when completing tasks.

5. Multi-Channel Interaction Improvements for MR Display Interactive System

Because of its good immersion and the complexity and diversity of the displayed information, the MR interactive display system occupies more cognitive resources when people obtain the information they need in real time, which is why its cognitive load and visual fatigue are higher than those of the traditional two-dimensional interactive display system. A single visual channel is poorly suited to today’s complex command and control tasks. Therefore, gesture and voice channels are added to the MR display interactive system to work together with vision during task operation [49,50,51].

In the improved mixed reality environment, enabling the radar, controlling the missile vehicle for erection preparation, and controlling the missile vehicle to launch missiles were changed from the original pinch trigger to a voice trigger. The check-box operations (loading our terrain, displaying blue-side information, loading landmarks and displaying the radar) were changed from the original click to a gesture operation. For example, after the multi-channel improvements are made to MR, the task of controlling a missile vehicle to launch a missile and shoot down an aircraft consists of the following steps. 1. The experimenter puts on the HoloLens2 and starts voice input. 2. The experimenter says: “missile vehicle launch”. The virtual hand in the mixed reality environment then automatically moves to the missile vehicle and clicks the target. Figure 19 shows the task process of the voice-controlled missile vehicle launching missiles.

Figure 19.

Flowchart of using voice to control missile launch.

The multi-channel improved MR, as a new display interaction system, is compared with the unimproved MR display interaction system and the traditional two-dimensional display interaction system. The subjects used the multi-channel improved MR display interaction system to complete the same experimental task as above, and their task performance and cognitive load under this task condition were collected. These were then compared with those of the unimproved MR display interactive system and the traditional two-dimensional display interactive system in the above experiments, so as to identify the advantages and disadvantages of the three display interactive systems under the same task.

In this experiment, the experimental steps and task flow of the improved multi-channel MR display interactive system are consistent with Figure 11.

The performance experiment of the multi-channel improved MR display interaction system was carried out, and the results are shown in Table 7.

Table 7.

Average task time of the multi-channel improved MR display interaction system.

Analysis of the data in Table 2 and Table 7 shows that the total average task completion time is 258.8 s for the traditional two-dimensional display interactive system, 111.2 s for the MR display interactive system, and 61.9 s for the MR display interactive system after multi-channel improvement. A one-way ANOVA across the three display interaction systems was significant (F(2, 27) = 748.79, p < 0.001), as was a one-sample t-test on the multi-channel improved MR display interaction system (t(9) = 61.89, p < 0.001). The results show that the display interactive system has a significant effect on task completion time. Under the same task conditions, the improved MR display interactive system had the highest task performance of the three display interactive modes.
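For readers who want to see how an F statistic of the form F(2, 27) arises, the following is a minimal sketch of a one-way ANOVA computed from scratch. The per-participant completion times are not listed here, so the numbers in the example are placeholders chosen for easy arithmetic, not experimental data; the function name is likewise an assumption.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of samples.

    F is the ratio of the between-group mean square to the
    within-group mean square.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Variation of each observation around its own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Placeholder samples (three conditions, three observations each).
toy_groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
toy_f, toy_df_b, toy_df_w = one_way_anova_f(toy_groups)
print(toy_f, toy_df_b, toy_df_w)  # 13.0 2 6
```

With three conditions and ten observations per condition, the same function yields degrees of freedom of 2 and 27, which matches the form of the statistic reported above.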

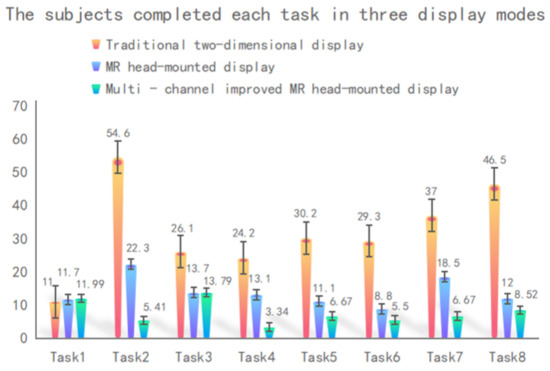

The average time for completing 1–8 sub-tasks in the three display interaction systems was analyzed and Figure 20 was obtained.

Figure 20.

The time taken by the subjects to complete each task in the three display interaction systems.

Analysis of Figure 20 shows that after multi-channel improvement, the completion time of the MR display interaction system is significantly reduced in tasks requiring interactive operation such as task 2, task 4, task 5, task 6, task 7 and task 8, and the total task completion time is also significantly reduced.

The eye movement data, peripheral physiological data and EEG data of the multi-channel improved MR display interaction system are shown in Table 8 and Table 9.

Table 8.

Eye movement and peripheral physiological characteristic data of the multi-channel improved MR display interaction system.

Table 9.

MR display interaction system P300 EEG data after multi-channel improvement.

As shown in Table 8, the eye movement data and peripheral physiological characteristic data of the MR display interaction system after the multi-channel improvement are significantly lower than those of the MR display interaction system without the multi-channel improvement, and even lower than those of the traditional two-dimensional display interaction system.

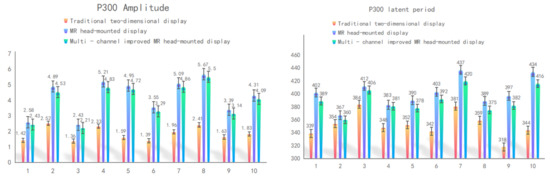

Combined analysis of the P300 EEG data of the experimenter in three display interaction systems is shown in Figure 21.

Figure 21.

Subject’s P300 EEG data in three display interaction systems.

Analysis of the P300 EEG data shows that the average P300 amplitude and latency of the multi-channel improved MR display interaction system were 1.56 and 347.3, respectively. Both are lower than those of the traditional two-dimensional display interaction system and the unimproved MR display interaction system.

Subjective scores were collected for the multi-channel improved MR display interaction system, and the data are shown in Table 10.

Table 10.

Subjective cognitive load of MR display interactive system after multi-channel improvement.

Comparing Table 6 and Table 10, the experimenter rates the cognitive load of the multi-channel improved MR display interaction system as lower than that of the traditional two-dimensional display interaction system and the unimproved MR display interaction system. The results of a one-way ANOVA show that the three display interaction systems differ significantly in the subjective feelings of the experimenters.

5.1. Experimental Result 2

After the multi-channel improvement, the interaction mode of MR display interactive system becomes more concise, which not only improves the performance of interactive task in the MR display interactive system, but also greatly simplifies the process of visual search for objects. This resulted in a dramatic increase in performance after the multi-channel improvement of the MR display interaction system.

After the multi-channel improvement of the MR display interactive system, both the cognitive load and the visual fatigue of the subjects were reduced, and they were even slightly lower than those of the traditional two-dimensional display interactive system. There are two reasons for this. First, the task time was greatly reduced, which greatly reduced the visual and cognitive resources the experimenter spent over the entire task process. Second, the difficulty of interactive operations was reduced and the visual search process was simplified, so the overall task difficulty fell and the share of the task carried by vision was reduced to a certain extent.

After the multi-channel improvement, the convenient and fast interaction mode, low visual and cognitive fatigue, and high task performance of the MR display interaction system make it favored by experimenters, who feel more comfortable when performing tasks in this display interaction system.

5.2. Data Normalization Processing

Further statistical processing was performed on the measured subjective and objective data [52], and the results are shown in Table 11.

Table 11.

Subjective and objective data.

Build the matrix $X = (x_{ij})_{m \times n}$, where $x_{ij}$ represents the data of the jth indicator under the ith scheme.

The data in $X$ are then normalized to obtain the normalized matrix.

The measured data in Table 11 were put into the matrix and normalized, and the normalized data were then solved and weighted.

5.3. Determine the Weight of Evaluation Indicators

A hierarchical structure model was established using the Analytic Hierarchy Process (AHP) [53]. A total of 12 experts participated in determining the evaluation index weights, all of whom have extensive knowledge of human–machine efficacy, human–machine evaluation and mixed reality. After statistics, analysis and calculation, the weight of each indicator was obtained, as shown in Table 12.

Table 12.

Weights of evaluation indicators.
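As an illustration of how AHP turns expert pairwise judgments into indicator weights, the sketch below uses the row geometric mean method, a common approximation to the principal eigenvector, together with Saaty's consistency ratio. Which variant of AHP was applied in the study is not stated, so the method choice, the function names and the 3 × 3 judgment matrix are all assumptions made for the example.

```python
import math

# Saaty's random consistency index for matrices of order 1 to 9.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_weights(matrix):
    """Weights from a pairwise comparison matrix (row geometric means)."""
    n = len(matrix)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def consistency_ratio(matrix, weights):
    """Consistency ratio CR = CI / RI; values below 0.1 are usually accepted."""
    n = len(matrix)
    if n < 3:
        return 0.0  # 1x1 and 2x2 matrices are always consistent
    # Estimate the largest eigenvalue as the mean of (A w)_i / w_i.
    aw = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / weights[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n]


# Hypothetical, perfectly consistent judgments built from known weights.
true_w = [0.5, 0.3, 0.2]
judgments = [[wi / wj for wj in true_w] for wi in true_w]
est_w = ahp_weights(judgments)
cr = consistency_ratio(judgments, est_w)
print([round(w, 3) for w in est_w])  # [0.5, 0.3, 0.2]
```

With judgments from several experts, one common option is to average the individual matrices element-wise with a geometric mean before calling `ahp_weights`; whether that was done here is not specified.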

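The weighted quantitative scores for the three display interaction systems are obtained by multiplying each normalized indicator value by the weight of that indicator.

The weighted scores of all indicators are then summed for each of the three display interaction systems to give the weighted total score of each system mode:

$$S_i = \sum_{j=1}^{n} w_j y_{ij}$$

where $w_j$ is the weight of the jth indicator and $y_{ij}$ is the normalized value of the jth indicator under the ith scheme.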

After calculation, the total score of the traditional two-dimensional display interaction system is 96.45, the total score of the MR display interaction system without multi-channel improvement is 95.04, and the total score of the MR display interaction system with multi-channel improvement is 108.51.
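The normalize-then-weight pipeline can be sketched as follows. The exact normalization formula used in the study is not given in this text, so the sketch assumes a mean-referenced scaling: each value is divided by its column mean and multiplied by 100, and indicators for which lower is better (such as task time or load) are mirrored around 100. The formula actually used may differ, and the function names, data and weights below are invented for the example.

```python
def normalize(matrix, lower_is_better):
    """Scale each indicator column relative to its mean (assumed scheme).

    matrix[i][j] is the raw value of indicator j under scheme i.
    lower_is_better[j] marks cost-type indicators, which are mirrored
    around 100 so that a larger normalized value is always better.
    """
    n_schemes = len(matrix)
    n_indicators = len(matrix[0])
    col_means = [sum(row[j] for row in matrix) / n_schemes
                 for j in range(n_indicators)]
    normalized = []
    for row in matrix:
        out = []
        for j, x in enumerate(row):
            ratio = 100.0 * x / col_means[j]
            out.append(200.0 - ratio if lower_is_better[j] else ratio)
        normalized.append(out)
    return normalized


def weighted_totals(normalized, weights):
    """Weighted total per scheme: sum over indicators of weight * value."""
    return [sum(w * y for w, y in zip(weights, row)) for row in normalized]


# Hypothetical data: three schemes, two cost-type indicators.
raw = [[200.0, 40.0],
       [100.0, 60.0],
       [60.0, 20.0]]
demo_weights = [0.5, 0.5]
totals = weighted_totals(normalize(raw, [True, True]), demo_weights)
print([round(t, 2) for t in totals])  # [66.67, 83.33, 150.0]
```

Under this assumed scheme, with weights that sum to 1, the totals of all schemes average to 100, so a score above 100 indicates a system that is better than the average of the compared systems.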

Summarizing the above data, after the multi-channel improvement of the MR display interaction system, its performance improved, and its cognitive load and visual fatigue were also significantly reduced compared with the unimproved MR display interaction system. It even has advantages in cognitive load and visual fatigue over the traditional two-dimensional display interaction system. The multi-channel improved MR display interaction system scored the highest among the three systems in the overall evaluation of completing the same task.

This result indicates that the multi-channel improved MR display interaction system is the better choice in terms of comprehensive performance, cognitive load, visual fatigue and subjective evaluation when completing the same operational task.

6. Conclusions 2

When performing the same complex task, the direct use of the MR display interaction system improves performance to a certain extent compared with the traditional two-dimensional display interaction system and reduces the time needed to complete the task. However, its cognitive load and visual fatigue when completing this task are still higher than those of the two-dimensional display. After the multi-channel improvement of MR, task performance is further improved, and the cognitive load and visual fatigue of completing the same task are significantly reduced, to levels even lower than those of the traditional two-dimensional display interactive system. The comprehensive scores for task performance, cognitive load and visual fatigue rank as multi-channel improved MR (108.51) > traditional two-dimensional (96.45) > unimproved MR (95.04).

The research results show that under complex command and control tasks, the multi-channel improved MR display interaction system is the best display method to complete the task, integrating performance, visual fatigue, cognitive load and subjective evaluation.

This paper has two limitations. 1. The paper compares the cognitive load of the two display interactive systems only qualitatively: it concludes that the cognitive load of the MR display interactive system is higher, but not how much higher. 2. The experimental tasks in this paper are only typical air defense tasks, and the types of experimental tasks were not extended.

Author Contributions

Conceptualization, W.W. and J.Q.; methodology, X.H.; software, X.H. and N.X.; data curation, X.H.; validation, W.W., J.Q., X.H., N.X. and T.C.; formal analysis, W.W. and X.H.; investigation, W.W. and X.H.; resources, W.W., J.Q. and X.H.; writing—original draft preparation, X.H.; writing—review and editing, W.W.; visualization, X.H.; supervision, T.C.; project administration, W.W.; funding acquisition, W.W. and J.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study because all the instruments only measured the physiological data of the experimenter and had no effect on the physical and mental health of the human body. The device has no adverse effects and does not harm the human body when it comes into contact with human body parts.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available because some of them involve detailed equipment parameters that cannot be disclosed.

Acknowledgments

The research was supported by the National Natural Science Foundation of China (grant number 52175282).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, C.; You, X. View the information-based battlefield environment system from network-centric warfare (NCW). In Geoinformatics 2007: Geospatial Information Science; SPIE: Bellingham, WA, USA, 2007; Volume 6753, pp. 1189–1198.

- Prislan, K.; Bernik, I. From Traditional Local to Global Cyberspace–Slovenian Perspectives on Information Warfare. In Proceedings of the 7th International Conference on Information Warfare and Security, Seattle, WA, USA, 22–23 March 2011; p. 237.

- War, X. Discussion on some problems of informationized war and weapon equipment informationization. Fundam. Natl. Def. Technol. 2010, 4, 4–48.

- Sun, Z. The evolution of war patterns since the Industrial Revolution. Future Dev. 2016, 40, 17–23.

- Orend, B. Fog in the fifth dimension: The ethics of cyber-war. In The Ethics of Information Warfare; Springer: Cham, Switzerland, 2014; pp. 3–23.

- Gous, E. Utilising cognitive work analysis for the design and evaluation of command and control user interfaces. In Proceedings of the 2013 International Conference on Adaptive Science and Technology, Pretoria, South Africa, 25–27 November 2013; pp. 1–7.

- Liu, W. Some Reflections on the Command and Control System. In Proceedings of the 2nd China Command and Control Conference 2014, Beijing, China, 4–5 August 2014.

- Liu, J. Research on Battlefield Environment Change and Weapon Equipment Maintenance Support. Master’s Thesis, National University of Defense Technology, Changsha, China, 2013.

- Lee, J.Y.; Rhee, G.W.; Seo, D.W. Hand gesture-based tangible interactions for manipulating virtual objects in a mixed reality environment. Int. J. Adv. Manuf. Technol. 2010, 51, 1069–1082.

- Souchet, A.; Philippe, S.; Ober, F.; Léveque, A.; Leroy, L. Investigating cyclical stereoscopy effects over visual discomfort and fatigue in virtual reality while learning. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Beijing, China, 10–18 October 2019; pp. 328–338.

- Fu, Q.; Lv, J.; Zhao, Z.; Yue, D. Research on optimization method of VR task scenario resources driven by user cognitive needs. Information 2020, 11, 64.

- Wang, M.; Shen, Y.; Zhou, H.; Zhang, X. Design and Implementation of new Ship Electronic Sand Table Interaction System Based on AR/VR Technology. In Proceedings of the Collection of the 6th China Command and Control S Conference, Beijing, China, 17–19 August 2018; Volume 1.

- Hirota, M.; Morimoto, T.; Kanda, H.; Endo, T.; Miyoshi, T.; Miyagawa, S. Objective Evaluation of Visual Fatigue Using Binocular Fusion Maintenance. Transl. Vis. Sci. Technol. 2018, 7, 9.

- Hirota, M.; Yada, K.; Morimoto, T.; Endo, T.; Miyoshi, T.; Miyagawa, S.; Hirohara, Y.; Yamaguchi, T.; Saika, M.; Fujikado, T. Objective evaluation of visual fatigue in patients with intermittent exotropia. PLoS ONE 2020, 15, e0230788.

- Rodriguez Aguinaga, A.; Realyvazquez, A.; Lopez Ramirez, M.A.; Quezada, A. Cognitive Ergonomics Evaluation Assisted by an Intelligent Emotion Recognition Technique. Appl. Sci. 2020, 10, 1736.

- Liu, P.; Jiang, G.; Liu, Y. Head-mounted Display and Desktop in Virtual Training System Versus Maps for Navigation Training: A Comparative Evaluation. J. Comput. Aided Des. Graph. 2017, 29, 10.

- Liu, P.; Forte, J.; Sewell, D.; Carter, O. Cognitive load effects on early visual perceptual processing. Atten. Percept. Psychophys. 2018, 80, 929–950.

- Kyritsis, M.; Gulliver, S.R.; Feredoes, E. Environmental factors and features that influence visual search in a 3D WIMP interface. Int. J. Hum. Comput. Stud. 2016, 92, 30–43.

- Patrzyk, M.; Klee, M.; Stefaniak, T.; Heidecke, C.D.; Beyer, K. Randomized study of the influence of two-dimensional versus three-dimensional imaging using a novel 3D head-mounted display (HMS-3000MT) on performance of laparoscopic inguinal hernia repair. Surg. Endosc. 2018, 32, 4624–4631.

- Gerig, N.; Mayo, J.; Baur, K.; Wittmann, F.; Riener, R.; Wolf, P. Missing depth cues in virtual reality limit performance and quality of three dimensional reaching movements. PLoS ONE 2018, 13, e0189275.

- Lin, C.J.; Chen, H.J.; Cheng, P.Y.; Sun, T.L. Effects of displays on visually controlled task performance in three-dimensional virtual reality environment. Hum. Factors Ergon. Manuf. Serv. Ind. 2015, 25, 523–533.

- Rokhsaritalemi, S.; Sadeghi-Niaraki, A.; Choi, S.M. A review on mixed reality: Current trends, challenges and prospects. Appl. Sci. 2020, 10, 636.

- Taylor, A.G. Develop Microsoft Hololens Apps Now; Apress: New York, NY, USA, 2016; pp. 91–100.

- Chen, H.; Lee, A.S.; Swift, M.; Tang, J.C. 3D collaboration method over HoloLens™ and Skype™ end points. In Proceedings of the 3rd International Workshop on Immersive Media Experiences, Brisbane, Australia, 30 October 2015; ACM: New York, NY, USA, 2015; pp. 27–30.

- Hockett, P.; Ingleby, T. Augmented Reality with Hololens: Experiential Architectures Embedded in the Real World. arXiv 2016, arXiv:1610.04281.

- Boring, M.J.; Ridgeway, K.; Shvartsman, M.; Jonker, T.R. Continuous decoding of cognitive load from electroencephalography reveals task-general and task-specific correlates. J. Neural Eng. 2020, 17, 056016.

- Su, Y.; Cai, Z.; Shi, L.; Zhou, F.; Guo, P.; Lu, Y.; Wu, J. A multi-plane optical see-through holographic three-dimensional display for augmented reality applications. Int. J. Light Electron Opt. 2017, 157, 190–196.

- Chen, B.; Qin, X. Mixed reality can blend with human wit. Chin. Sci. Inf. Sci. 2016, 46, 1737–1747.

- Webel, S. Evaluating virtual reality and augmented reality training for industrial maintenance and assembly tasks. Interact. Learn. Environ. 2015, 23, 778–798.

- Bos, J.E.; Bles, W.; Groen, E.L. A theory on visually induced motion sickness. Displays 2008, 29, 47–57.

- Keshavarz, B.; Saryazdi, R.; Campos, J.L.; Golding, J.F. Introducing the VIMSSQ: Measuring susceptibility to visually induced motion sickness. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Seattle, WA, USA, 28 October–1 November 2019; Sage CA: Los Angeles, CA, USA, 2019; Volume 63, pp. 2267–2271.

- Kim, H.K.; Park, J.; Choi, Y.; Choe, M. Virtual reality sickness questionnaire (VRSQ): Motion sickness measurement index in a virtual reality environment. Appl. Ergon. 2018, 69, 66–73.

- Hoffman, D.M.; Girshick, A.R.; Akeley, K.; Banks, M.S. Vergence–Accommodation conflicts hinder visual performance and cause visual fatigue. J. Vis. 2008, 8, 33.

- Shibata, T.; Kim, J.; Hoffman, D.M.; Banks, M.S. The zone of comfort: Predicting visual discomfort with stereo displays. J. Vis. 2011, 11, 11.

- Jeng, W.D.; Ouyang, Y.; Huang, T.W.; Duann, J.R.; Chiou, J.C.; Tang, Y.S.; Ou-Yang, M. Research of accommodative microfluctuations caused by visual fatigue based on liquid crystal and laser displays. Appl. Opt. 2014, 53, 76–84.

- Kim, J.; Kane, D.; Banks, M.S. The rate of change of vergence–accommodation conflict affects visual discomfort. Vis. Res. 2014, 105, 159–165.

- Hiroyuki, K.; Mariko, K.; Toshifumi, M. Clinical Investigation: Serial measurements of accommodation by open-field Hartmann-Shack wavefront aberrometer in eyes with accommodative spasm. Jpn. J. Ophthalmol. 2012, 56, 617.

- Blehm, C.; Vishnu, S.; Khattak, A.; Mitra, S.; Yee, R.W. Computer vision syndrome: A review. Surv. Ophthalmol. 2005, 50, 253–262.

- Berens, C.; Le, G.; Pierce, H.F. Studies in Ocular Fatigue. II. Convergence Fatigue in Practice. Trans. Am. Ophthalmol. Soc. 1926, 24, 262–287.

- Bowman, D.A.; McMahan, R.P. Virtual Reality: How Much Immersion Is Enough? Computer 2007, 40, 36–43.

- Gao, B.; Kim, B.; Kim, J.I.; Kim, H. Amphitheater layout with egocentric distance-based item sizing and landmarks for browsing in virtual reality. Int. J. Hum. Comput. Interact. 2018, 35, 831–845.

- Chen, Y.; Yu, S.; Chu, J.; Cun, W. Effect of alertness light environment on flight cognitive task performance. Proc. Beijing Univ. Aeronaut. 2021, 47, 9.

- Li, H.; Wang, S.; Li, J. Research on human-machine interface evaluation method based on QFD-PUGH. J. Graph Stud. 2021, 42, 8.

- Hirota, M.; Kanda, H.; Endo, T.; Miyoshi, T.; Miyagawa, S.; Hirohara, Y.; Yamaguchi, T.; Saika, M.; Morimoto, T.; Fujikado, T. Comparison of visual fatigue caused by head-mounted display for virtual reality and two-dimensional display using objective and subjective evaluation. Ergonomics 2019, 62, 759–766.

- Yue, K.; Wang, D. EEG-Based 3D Visual Fatigue Evaluation Using CNN. Electronics 2019, 8, 1208.

- Muraoka, T.; Uchimura, S.; Ikeda, H. Evaluating Visual Fatigue to Keep Eyes Healthy Within Circadian Change During Continuous Display Operations. J. Disp. Technol. 2016, 12, 1464–1471.

- Lim, K.; Li, X.; Tu, Y. Effects of curved computer displays on visual performance, visual fatigue, and emotional image quality. J. Soc. Inf. Disp. 2019, 27, 543–554.

- Shuzhi, Y.; Hongni, G.; Wei, W.; Jue, Q.; Xiaowei, L.; Kang, L. Multi-image evaluation for human-machine interface based on Kansei engineering. Chin. J. Eng. Des. 2017, 24, 523–529.

- Zhang, S.; Tian, Y.; Wang, C.; Wei, K. Target selection by gaze pointing and manual confirmation: Performance improved by locking the gaze cursor. Ergonomics 2020, 7, 884–895.

- Kang, N.; Sah, Y.J.; Lee, S. Effects of visual and auditory cues on haptic illusions for active and passive touches in mixed reality. Int. J. Hum. Comput. Stud. 2021, 150, 102613.

- Yang, M.; Tao, J. Intelligent approach for multi-channel human-computer Interaction information fusion. Chin. Sci. Inf. Sci. 2018, 48, 433–448.

- Sun, L.; Han, B.; Zhang, W. Evaluation of Mobile APP Interface Design for English Learning Based on Eye Movement Experiment. Hum. Ergon. 2021, 27, 8.

- Zhao, Y.; Liu, Y. Research on Subjective Evaluation Indexes for Vehicle Handling Stability Based on Improved Analytic Hierarchy Process and Fuzzy Comprehensive Evaluation. China Mech. Eng. 2017, 45, 620–623.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).