1. Introduction

With the increasing importance of Earth observation projects, staring imaging outperforms other sensing techniques owing to its unique capability of capturing continuous images of a ground target [1,2]. As its name suggests, staring control requires the camera to point at the target constantly, so that the target always stays at the center of the images. To achieve this, the optical axis of the camera must be aimed at the target throughout the whole observation phase. Many video satellites (e.g., TUBSAT [3], Tiantuo-2 and Jilin-1) are equipped with a staring mode and can therefore be applied in various scenarios, such as emergency rescue and target monitoring [4,5,6]. In a staring control case, the satellite moves along its orbit while the ground target, fixed on the rotating Earth’s surface, is not stationary in inertial space either, which leads to a time-varying relative motion. Therefore, a dedicated attitude controller must be designed to keep the satellite staring at the target.

Conventional methods for staring control mainly require the relative position between the satellite and the target, normally obtained from orbital data and geographic information, respectively. Furthermore, staring imaging of a single point constrains only the optical axis, so the rotation about the optical axis remains free. Refs. [2,7] both propose PD-like controllers to achieve staring imaging, while Refs. [8,9,10] pursue optimality during the attitude maneuver. Ref. [11] realizes a similar real-time optimal control method with an emphasis on pointing accuracy. However, these studies do not consider image feedback, even though the image error directly and precisely reflects the controller’s accuracy. In light of this, it is worthwhile to make use of the camera itself to achieve more precise staring.

Besides traditional methods, whose attitude control torques are generated by establishing the inertial geometry and decomposing the relative orientation into different rotation angles, state-of-the-art image-processing technologies [12,13,14,15] have made it possible to develop a novel image-based staring controller that relies on the target’s projection on the image plane. As cameras play an essential role in many engineering applications [16,17,18,19,20,21,22], various image-based control methods have been developed. As in staring control, some of these control schemes use the image to obtain the orientation of the target point. For example, Refs. [23,24] study positioning control of robots, Ref. [25] addresses motion control of an unmanned aerial vehicle (UAV), and Ref. [26] uses images for space debris tracking. However, these studies either neglect the uncertainties of the camera or do not consider the spacecraft kinematics and dynamics. A spaceborne camera is hard to calibrate in a complicated working environment such as space; therefore, this paper focuses on an image-based adaptive staring attitude controller for a video satellite using an uncalibrated camera.

To take advantage of the images, the projection model must be analyzed. The target’s projection on the image plane is determined by the relative position between the target and the satellite in inertial space, the satellite’s attitude, and the camera’s configuration. The camera configuration consists of the intrinsic structure and the extrinsic mounting position and orientation. The camera parameters must be defined properly so that they can be linearly parameterized and then estimated online. It is worth noting that the relative orientation in staring imaging is influenced by both the attitude motion and the orbital motion, which differs from a robotic model. In short, for a video satellite whose camera is uncalibrated, the image-based staring attitude controller is built upon a thorough analysis of the camera structure.

This paper differs from traditional staring attitude control methods by focusing on an image-based adaptive algorithm that accommodates unknown camera parameters; therefore, the kinematic relationship between the image and the attitude is established first. Through linear parameterization, the negative gradients of the projection errors are chosen as the direction of parameter adjustment. The estimated parameters and the image information are then used to formulate the staring controller, which drives the target’s projection to the desired coordinates. A potential function is also introduced to guarantee the controller’s stability. The convergence of the ground target’s projection to its desired location indicates that the optical axis has reached the desired orientation. Finally, simulations show the trajectory of the projection on the image plane: as the projection moves along the trajectory, both the image errors and the estimated projection errors approach zero.

The remainder of this paper is organized as follows. The camera model is introduced in Section 2, where the projection kinematics are derived for a video satellite. In Section 3, we propose the adaptive controller, including the parameter extraction and estimation. Simulations are presented in Section 4, and the results demonstrate the effectiveness of our controller. Conclusions are drawn in the last section.

2. Problem Formulation

This section starts with a brief introduction of satellite attitude kinematics and dynamics, and then establishes the camera projection model between the target’s position in the Earth-centered inertial (ECI) frame and its pixel coordinates on the image plane. Finally, the projection kinematics in the form of pixel coordinates are derived.

2.1. Attitude Kinematics and Dynamics

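A quaternion $\boldsymbol q$, which includes a scalar part $q_0$ and a vector part $\boldsymbol q_v$, is adopted to describe the attitude:

$$\boldsymbol q = \begin{bmatrix} q_0 \\ \boldsymbol q_v \end{bmatrix} = \begin{bmatrix} \cos(\phi/2) \\ \boldsymbol e\sin(\phi/2) \end{bmatrix}, \tag{1}$$

where $\boldsymbol e$ is the Euler axis and $\phi$ is the rotation angle. The quaternion avoids the singularity of Euler angles and must satisfy the normalization condition $q_0^2 + \boldsymbol q_v^T\boldsymbol q_v = 1$. The attitude kinematics and dynamics of a satellite as a rigid body are given by

$$\begin{aligned} \dot q_0 &= -\tfrac{1}{2}\boldsymbol q_v^T\boldsymbol\omega, \\ \dot{\boldsymbol q}_v &= \tfrac{1}{2}\left(q_0\boldsymbol I_3 + \boldsymbol q_v^\times\right)\boldsymbol\omega, \\ \boldsymbol J\dot{\boldsymbol\omega} &= -\boldsymbol\omega^\times\boldsymbol J\boldsymbol\omega + \boldsymbol\tau, \end{aligned} \tag{2}$$

where $\boldsymbol I_3$ is the $3\times3$ identity matrix, $\boldsymbol J$ is the inertia matrix of the satellite, and $\boldsymbol\omega$ is the angular velocity of the satellite relative to the inertial frame, expressed in the body frame. $\boldsymbol\tau$ is the attitude control torque. For a vector $\boldsymbol a = [a_1, a_2, a_3]^T$, the operation $\boldsymbol a^\times$ is defined as the skew-symmetric matrix

$$\boldsymbol a^\times = \begin{bmatrix} 0 & -a_3 & a_2 \\ a_3 & 0 & -a_1 \\ -a_2 & a_1 & 0 \end{bmatrix}. \tag{3}$$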
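To make the attitude model concrete, the sketch below propagates the quaternion kinematics and the rigid-body dynamics with a fixed-step fourth-order Runge-Kutta integrator and renormalizes the quaternion after each step. It is a minimal Python/NumPy illustration, assuming the scalar-first convention $\boldsymbol q = [q_0, \boldsymbol q_v^T]^T$ with $\dot q_0 = -\frac12\boldsymbol q_v^T\boldsymbol\omega$ and $\dot{\boldsymbol q}_v = \frac12(q_0\boldsymbol I_3 + \boldsymbol q_v^\times)\boldsymbol\omega$; the inertia matrix, initial state, and step size are hypothetical values, not the paper’s simulation settings.

```python
import numpy as np

def skew(a):
    """Skew-symmetric matrix a^x, so that skew(a) @ b == np.cross(a, b)."""
    return np.array([[0.0, -a[2], a[1]],
                     [a[2], 0.0, -a[0]],
                     [-a[1], a[0], 0.0]])

def attitude_rhs(q, w, J, tau):
    """Quaternion kinematics and rigid-body dynamics; q = [q0, qv] (scalar first)."""
    q0, qv = q[0], q[1:]
    q0_dot = -0.5 * qv @ w
    qv_dot = 0.5 * (q0 * np.eye(3) + skew(qv)) @ w
    w_dot = np.linalg.solve(J, -skew(w) @ J @ w + tau)
    return np.concatenate(([q0_dot], qv_dot)), w_dot

def rk4_step(q, w, J, tau, dt):
    """One classical Runge-Kutta step with the torque held constant over dt."""
    k1q, k1w = attitude_rhs(q, w, J, tau)
    k2q, k2w = attitude_rhs(q + 0.5 * dt * k1q, w + 0.5 * dt * k1w, J, tau)
    k3q, k3w = attitude_rhs(q + 0.5 * dt * k2q, w + 0.5 * dt * k2w, J, tau)
    k4q, k4w = attitude_rhs(q + dt * k3q, w + dt * k3w, J, tau)
    q_new = q + dt / 6.0 * (k1q + 2 * k2q + 2 * k3q + k4q)
    w_new = w + dt / 6.0 * (k1w + 2 * k2w + 2 * k3w + k4w)
    return q_new / np.linalg.norm(q_new), w_new  # re-impose q0^2 + qv^T qv = 1

# Hypothetical example values, not taken from the paper's simulation.
J = np.diag([10.0, 12.0, 8.0])        # inertia matrix, kg m^2
q = np.array([1.0, 0.0, 0.0, 0.0])    # identity attitude
w = np.array([0.0, 0.0, 0.01])        # rad/s, spin about the body z axis
for _ in range(100):
    q, w = rk4_step(q, w, J, np.zeros(3), dt=1.0)
```

For a torque-free spin about a principal axis, $\boldsymbol\omega$ stays constant and the quaternion follows $q_0 = \cos(\phi/2)$, $\boldsymbol q_v = \boldsymbol e\sin(\phi/2)$ with $\phi = \omega t$, which is a quick sanity check on the sign conventions.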

In traditional attitude tracking controllers, including staring control, a desired quaternion is designed first and the error quaternion is then obtained. Depending on the control strategy, the control torque $\boldsymbol\tau$ is calculated from the error quaternion and the angular velocity error. For an image-based staring control case, an alternative attitude representation is needed, because the attitude errors are embodied in the image errors, i.e., the pixel coordinate errors between the current and the desired projections. Therefore, we measure the relative attitude via image recognition. Inevitably, an uncalibrated camera introduces extra uncertainties into the images, which makes an analysis of the camera model necessary.

2.2. Earth-Staring Observation

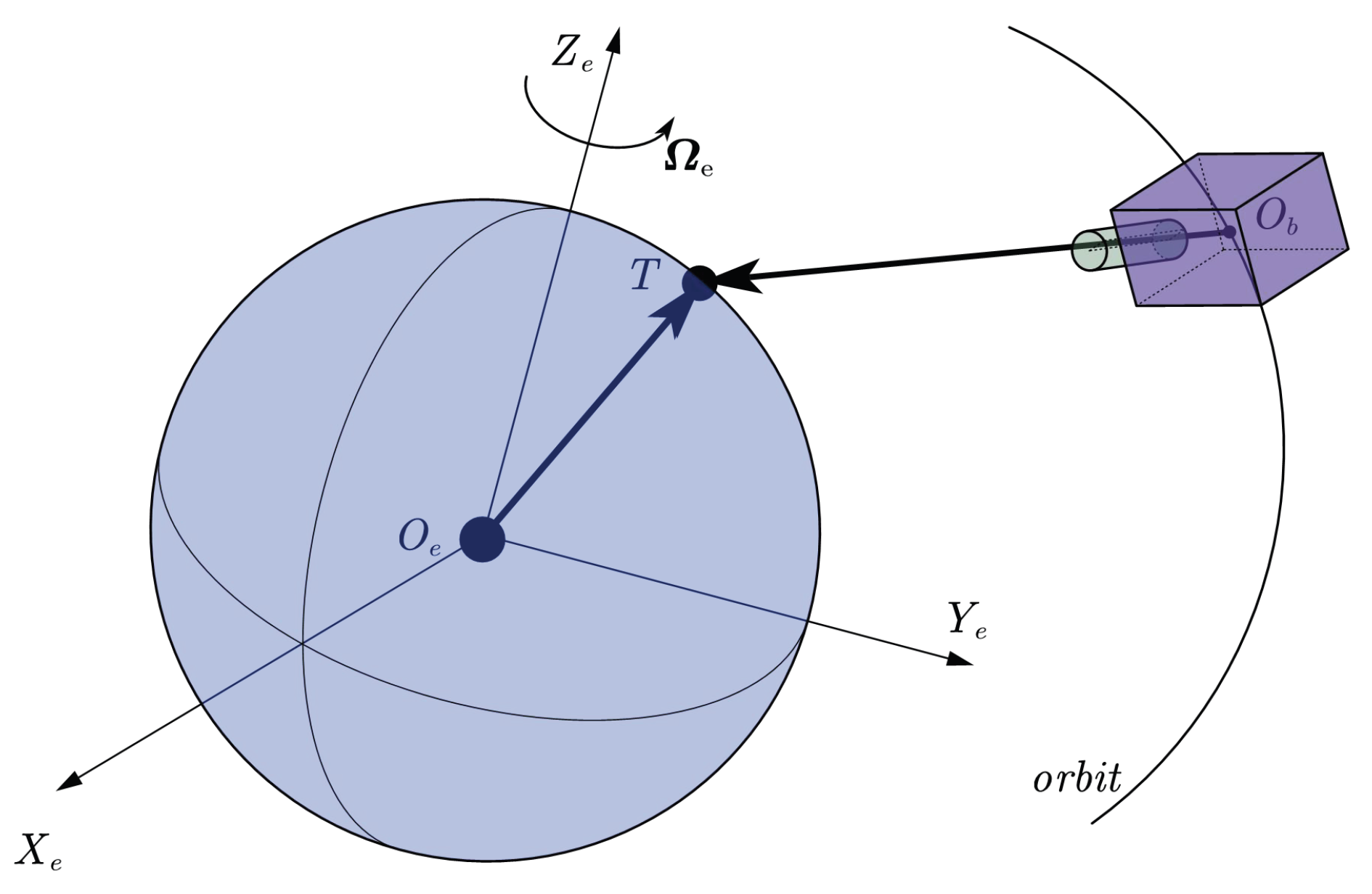

Earth-staring observation requires the camera’s optical axis to point towards the ground target for a period of time. The scenario is shown in Figure 1. $O_e X_i Y_i Z_i$ is the ECI frame. $O_e$ and $O_s$ are the centers of mass of Earth and the satellite, respectively. The satellite carrying the camera moves along its orbit, and the ground target T is located on the Earth’s surface, rotating around $Z_i$ at the angular velocity $\omega_e$. The satellite’s position is expressed in ECI as $\boldsymbol r_s$, and $\boldsymbol R$ represents the rotation matrix from ECI to the body frame. Define the homogeneous transform matrix from the inertial frame to the body frame, $\boldsymbol T$:

$$\boldsymbol T = \begin{bmatrix} \boldsymbol R & -\boldsymbol R\boldsymbol r_s \\ \boldsymbol 0^T & 1 \end{bmatrix}. \tag{4}$$

$\boldsymbol r_t$ is the target’s position in ECI, and $\boldsymbol p$ is the vector from the satellite to the target expressed in the body frame. Homogeneous coordinates are adopted to better describe the transformation. According to the geometrical relationship, we have

$$\begin{bmatrix} \boldsymbol p \\ 1 \end{bmatrix} = \boldsymbol T\begin{bmatrix} \boldsymbol r_t \\ 1 \end{bmatrix}. \tag{5}$$
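The staring geometry can be sketched numerically as follows, assuming that the homogeneous transform is built from the ECI-to-body rotation matrix $\boldsymbol R$ and the satellite position $\boldsymbol r_s$ as $[\boldsymbol R, -\boldsymbol R\boldsymbol r_s; \boldsymbol 0^T, 1]$, and that the ground target rotates with the Earth about the ECI $Z$ axis. The helper names and the equatorial test geometry are hypothetical.

```python
import numpy as np

OMEGA_E = 7.292115e-5  # Earth rotation rate (WGS 84 nominal value), rad/s

def homogeneous_T(R, r_s):
    """Homogeneous transform from ECI to the body frame."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = -R @ r_s
    return T

def target_eci(r_t0, t):
    """Ground target fixed on Earth: rotate its initial ECI position about Z.
    Returns position and inertial velocity v_t = omega_e x r_t."""
    c, s = np.cos(OMEGA_E * t), np.sin(OMEGA_E * t)
    Rz = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    r_t = Rz @ r_t0
    v_t = np.cross([0.0, 0.0, OMEGA_E], r_t)
    return r_t, v_t

# Hypothetical geometry: target on the equator, satellite 500 km above it.
r_t0 = np.array([6378.137e3, 0.0, 0.0])
r_s = np.array([6878.137e3, 0.0, 0.0])
R = np.eye(3)                                     # body axes aligned with ECI (illustrative)
r_t, v_t = target_eci(r_t0, t=0.0)
p_h = homogeneous_T(R, r_s) @ np.append(r_t, 1.0)  # homogeneous body-frame vector
p = p_h[:3]                                       # satellite-to-target vector, body frame
```

Because the target is fixed on the Earth, its inertial velocity is simply $\omega_e\hat{\boldsymbol Z}\times\boldsymbol r_t$, which is the known target velocity that the projection kinematics rely on.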

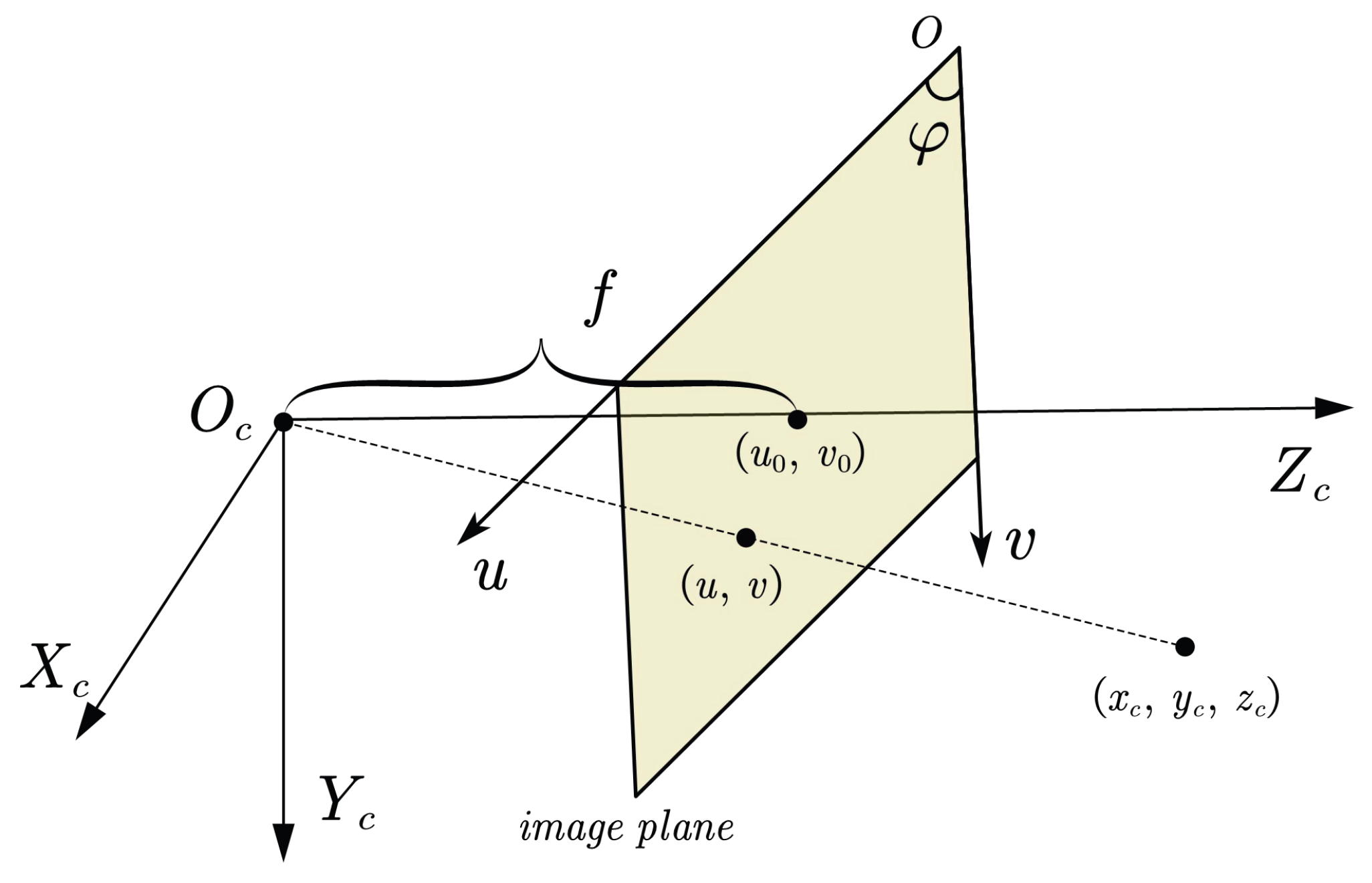

2.3. The Intrinsic Camera Model

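Inside the camera, the target is projected onto the image plane through the lens. Assume the camera has a focal length $f$ and a pixel size of $d_u\times d_v$. Figure 2 depicts the camera frame $O_c x_c y_c z_c$ and the 2D pixel frame, whose intersection with the optical axis is the principal point with pixel coordinates $(u_0, v_0)$. $\theta$ is the angle between the $u$ and $v$ axes. The target in the camera frame is expressed as $\boldsymbol p_c = [x_c, y_c, z_c]^T$, and its projection is $\boldsymbol y = [u, v]^T$. Thus, we have the following projection transformation:

$$z_c\begin{bmatrix} \boldsymbol y \\ 1 \end{bmatrix} = \boldsymbol\Omega\begin{bmatrix} \boldsymbol p_c \\ 1 \end{bmatrix}, \tag{6}$$

where $\boldsymbol\Omega$ is defined as

$$\boldsymbol\Omega = \begin{bmatrix} f/d_u & -f\cot\theta/d_u & u_0 & 0 \\ 0 & f/(d_v\sin\theta) & v_0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}. \tag{7}$$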
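A minimal sketch of the intrinsic projection follows, assuming the standard skewed pinhole form in which the $3\times4$ matrix $\boldsymbol\Omega$ has rows $[f/d_u, -f\cot\theta/d_u, u_0, 0]$, $[0, f/(d_v\sin\theta), v_0, 0]$ and $[0, 0, 1, 0]$. The camera numbers below are hypothetical.

```python
import numpy as np

def intrinsic_omega(f, du, dv, u0, v0, theta):
    """3x4 intrinsic matrix: focal length f, pixel size du x dv,
    principal point (u0, v0), angle theta between the u and v axes."""
    return np.array([
        [f / du, -f / (du * np.tan(theta)), u0, 0.0],
        [0.0, f / (dv * np.sin(theta)), v0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
    ])

def project(Omega, p_c):
    """Pinhole projection: returns pixel coordinates y and the depth z_c."""
    h = Omega @ np.append(p_c, 1.0)
    z_c = h[2]                  # the third row of Omega picks out z_c
    return h[:2] / z_c, z_c

# Hypothetical camera: f = 1 m, 10 um square pixels, principal point (512, 512).
Omega = intrinsic_omega(f=1.0, du=1e-5, dv=1e-5, u0=512.0, v0=512.0, theta=np.pi / 2)
y_axis, z = project(Omega, np.array([0.0, 0.0, 500e3]))    # point on the optical axis
y_off, _ = project(Omega, np.array([50.0, -25.0, 500e3]))  # 50 m and -25 m off axis
```

A point on the optical axis lands on the principal point, and with $\theta = \pi/2$ the skew term vanishes; a 50 m lateral offset at 500 km range then maps to 10 pixels for these hypothetical parameters.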

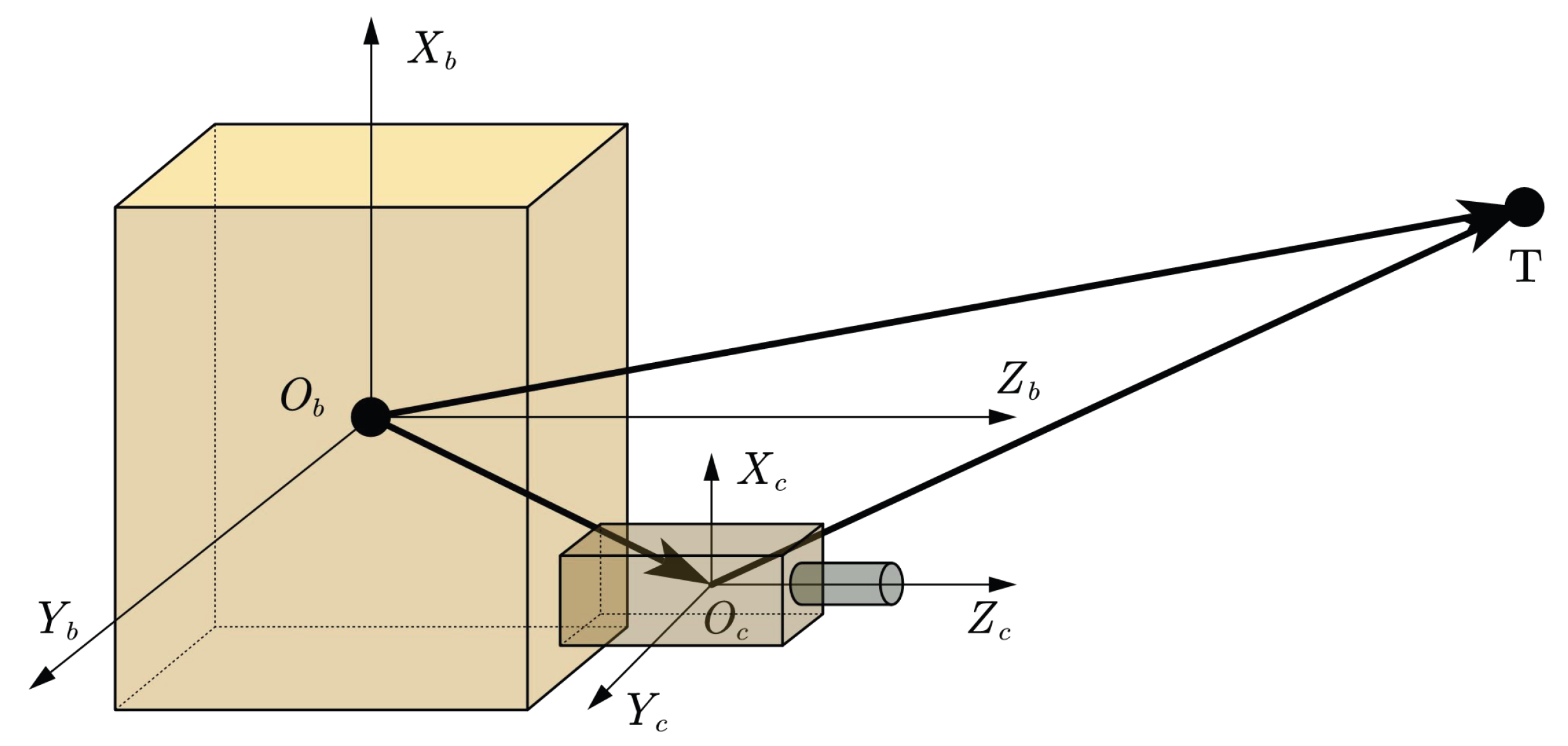

2.4. The Extrinsic Camera Model

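The position and attitude of the camera frame $O_c x_c y_c z_c$ with respect to the body frame are displayed in Figure 3. $\boldsymbol t_c$ represents the position of $O_c$ in the body frame, and $\boldsymbol R_c$ is the rotation matrix from the body frame to the camera frame. Similarly, we define the homogeneous transform matrix from the body frame to the camera frame, $\boldsymbol T_c$:

$$\boldsymbol T_c = \begin{bmatrix} \boldsymbol R_c & -\boldsymbol R_c\boldsymbol t_c \\ \boldsymbol 0^T & 1 \end{bmatrix}. \tag{8}$$

The target’s position is $\boldsymbol p_c$ in the camera frame and $\boldsymbol p$ in the body frame. The transformation between them is given by

$$\begin{bmatrix} \boldsymbol p_c \\ 1 \end{bmatrix} = \boldsymbol T_c\begin{bmatrix} \boldsymbol p \\ 1 \end{bmatrix}. \tag{9}$$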
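The extrinsic transform and its composition with the intrinsic matrix can be checked numerically: projecting a body-frame point with $\boldsymbol N = \boldsymbol\Omega\boldsymbol T_c$ in one step should give the same pixels as first transforming it into the camera frame and then applying $\boldsymbol\Omega$. This sketch assumes $\boldsymbol T_c = [\boldsymbol R_c, -\boldsymbol R_c\boldsymbol t_c; \boldsymbol 0^T, 1]$, with $\boldsymbol R_c$ the body-to-camera rotation and $\boldsymbol t_c$ the camera position in the body frame; all numbers are hypothetical.

```python
import numpy as np

def extrinsic_Tc(R_c, t_c):
    """Homogeneous transform from the body frame to the camera frame."""
    T_c = np.eye(4)
    T_c[:3, :3] = R_c
    T_c[:3, 3] = -R_c @ t_c
    return T_c

# Hypothetical zero-skew intrinsics and camera mounting.
Omega = np.array([[1e5, 0.0, 512.0, 0.0],
                  [0.0, 1e5, 512.0, 0.0],
                  [0.0, 0.0, 1.0, 0.0]])
t_c = np.array([0.2, 0.0, 0.5])          # camera origin in the body frame, m
R_c = np.eye(3)                          # camera axes aligned with body axes
N = Omega @ extrinsic_Tc(R_c, t_c)       # 3x4 projection matrix

p = np.array([100.0, -40.0, 500e3])      # target in the body frame, m
h = N @ np.append(p, 1.0)
z_c, y = h[2], h[:2] / h[2]
# Same result in two steps: body -> camera frame, then camera frame -> pixels.
p_c = R_c @ (p - t_c)
y_two_step = (Omega @ np.append(p_c, 1.0))[:2] / p_c[2]
```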

2.5. The Projection Kinematics of Staring Imaging

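According to Equations (6) and (9), we have

$$z_c\begin{bmatrix} \boldsymbol y \\ 1 \end{bmatrix} = \boldsymbol N\begin{bmatrix} \boldsymbol p \\ 1 \end{bmatrix}, \tag{10}$$

where the projection matrix $\boldsymbol N\in\mathbb R^{3\times4}$ is defined by $\boldsymbol N = \boldsymbol\Omega\boldsymbol T_c$, and its elements are denoted as $n_{ij}$. Then, combining (5) and (10), we obtain

$$z_c\begin{bmatrix} \boldsymbol y \\ 1 \end{bmatrix} = \boldsymbol N\boldsymbol T\begin{bmatrix} \boldsymbol r_t \\ 1 \end{bmatrix}. \tag{11}$$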

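The above equation reveals the mapping between the target’s position in ECI and its projection coordinates on the image plane. The matrix $\boldsymbol N$ reflects the camera’s role in the transformation, while $\boldsymbol T$ contains the effects of the satellite’s attitude and orbital motion. Define

$$\boldsymbol N = \begin{bmatrix} \boldsymbol P \\ \boldsymbol n_3^T \end{bmatrix}, \tag{12}$$

where $\boldsymbol P\in\mathbb R^{2\times4}$ is the matrix consisting of the first two rows of $\boldsymbol N$, and $\boldsymbol n_3^T$ is the third row. To derive the kinematic equations more clearly, we explicitly denote the time-varying states. Equation (11) can be rewritten as

$$z_c(t) = \boldsymbol n_3^T\boldsymbol T(t)\begin{bmatrix} \boldsymbol r_t(t) \\ 1 \end{bmatrix}, \qquad \boldsymbol y(t) = \frac{1}{z_c(t)}\boldsymbol P\boldsymbol T(t)\begin{bmatrix} \boldsymbol r_t(t) \\ 1 \end{bmatrix}. \tag{13}$$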

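For simplicity, let $\boldsymbol p(t)$ denote the vector formed by the first three elements of $\boldsymbol T(t)[\boldsymbol r_t^T(t), 1]^T$, and let $\bar{\boldsymbol N} = [\bar{\boldsymbol P}^T, \bar{\boldsymbol n}_3]^T$ denote the matrix formed by the first three columns of $\boldsymbol N$. Since $\dot{\boldsymbol R} = -\boldsymbol\omega^\times\boldsymbol R$, differentiating (5) gives $\dot{\boldsymbol p} = \boldsymbol p^\times\boldsymbol\omega + \boldsymbol R(\boldsymbol v_t - \boldsymbol v_s)$, where $\boldsymbol v_t$ and $\boldsymbol v_s$ are the velocities of the target and the satellite in ECI. Differentiating the depth $z_c(t)$ of the target in the camera frame, we obtain

$$\dot z_c(t) = \bar{\boldsymbol n}_3^T\dot{\boldsymbol p}(t) = \bar{\boldsymbol n}_3^T\left[\boldsymbol p^\times(t)\boldsymbol\omega(t) + \boldsymbol R(t)\left(\boldsymbol v_t(t) - \boldsymbol v_s(t)\right)\right]. \tag{14}$$

To simplify the expression, we define

$$\boldsymbol a^T(t) = \bar{\boldsymbol n}_3^T\boldsymbol p^\times(t), \qquad b(t) = \bar{\boldsymbol n}_3^T\boldsymbol R(t)\left(\boldsymbol v_t(t) - \boldsymbol v_s(t)\right), \tag{15}$$

so that

$$\dot z_c(t) = \boldsymbol a^T(t)\boldsymbol\omega(t) + b(t). \tag{16}$$

Similarly, we define

$$\boldsymbol A(t) = \left(\bar{\boldsymbol P} - \boldsymbol y(t)\bar{\boldsymbol n}_3^T\right)\boldsymbol p^\times(t), \qquad \boldsymbol B(t) = \left(\bar{\boldsymbol P} - \boldsymbol y(t)\bar{\boldsymbol n}_3^T\right)\boldsymbol R(t)\left(\boldsymbol v_t(t) - \boldsymbol v_s(t)\right), \tag{17}$$

then we have the derivative of the image coordinates given by

$$\dot{\boldsymbol y}(t) = \frac{1}{z_c(t)}\left[\boldsymbol A(t)\boldsymbol\omega(t) + \boldsymbol B(t)\right]. \tag{18}$$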
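Because depth and image-rate expressions of this kind are easy to get wrong by a sign, a finite-difference check is a useful companion to the derivation. The sketch below assumes the forms $\dot z_c = \bar{\boldsymbol n}_3^T[\boldsymbol p^\times\boldsymbol\omega + \boldsymbol R(\boldsymbol v_t - \boldsymbol v_s)]$ and $\dot{\boldsymbol y} = (\bar{\boldsymbol P} - \boldsymbol y\bar{\boldsymbol n}_3^T)[\boldsymbol p^\times\boldsymbol\omega + \boldsymbol R(\boldsymbol v_t - \boldsymbol v_s)]/z_c$, a constant body angular velocity (so that $\boldsymbol R(t) = \exp(-\boldsymbol\omega^\times t)\boldsymbol R_0$), a straight-line satellite trajectory over the short differencing window, and a hypothetical camera and geometry.

```python
import numpy as np

OMEGA_E = 7.292115e-5  # Earth rotation rate, rad/s

def skew(a):
    """a^x such that skew(a) @ b == np.cross(a, b)."""
    return np.array([[0.0, -a[2], a[1]], [a[2], 0.0, -a[0]], [-a[1], a[0], 0.0]])

def rot_exp(phi):
    """Rodrigues formula for the matrix exponential exp(phi^x)."""
    ang = np.linalg.norm(phi)
    if ang < 1e-12:
        return np.eye(3) + skew(phi)
    K = skew(phi / ang)
    return np.eye(3) + np.sin(ang) * K + (1.0 - np.cos(ang)) * K @ K

# Hypothetical camera: zero-skew intrinsics, slightly tilted mounting, offset origin.
Omega = np.array([[1e5, 0.0, 512.0, 0.0], [0.0, 1e5, 512.0, 0.0], [0.0, 0.0, 1.0, 0.0]])
R_c = rot_exp(np.array([0.01, -0.02, 0.015]))
T_c = np.eye(4)
T_c[:3, :3], T_c[:3, 3] = R_c, -R_c @ np.array([0.2, 0.0, 0.5])
N = Omega @ T_c

# Hypothetical geometry (ECI, metres): target near the sub-satellite point.
r_t0 = np.array([6378.137e3, 200.0, 100.0])
r_s0 = np.array([6878.137e3, 0.0, 0.0])
v_s = np.array([0.0, 7612.6, 0.0])
R0 = np.array([[0.0, -1.0, 0.0], [0.0, 0.0, 1.0], [-1.0, 0.0, 0.0]])  # body z toward -X
omega = np.array([1e-3, -2e-3, 5e-4])   # constant body angular velocity, rad/s

def state(t):
    R = rot_exp(-omega * t) @ R0        # solves R_dot = -omega^x R
    r_s = r_s0 + v_s * t                # straight-line approximation over a short window
    c, s = np.cos(OMEGA_E * t), np.sin(OMEGA_E * t)
    r_t = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]) @ r_t0
    v_t = np.cross([0.0, 0.0, OMEGA_E], r_t)
    return R, r_s, r_t, v_t

def measure(t):
    """Depth z_c and pixel coordinates y at time t, as in Eq. (13)."""
    R, r_s, r_t, _ = state(t)
    g = N @ np.append(R @ (r_t - r_s), 1.0)
    return g[2], g[:2] / g[2]

def predicted_rates(t):
    """Analytic rates: z_c_dot = a^T w + b and y_dot = (A w + B) / z_c."""
    R, r_s, r_t, v_t = state(t)
    p = R @ (r_t - r_s)
    z_c, y = measure(t)
    P_bar, n3_bar = N[:2, :3], N[2, :3]
    rel = R @ (v_t - v_s)
    a, b = n3_bar @ skew(p), n3_bar @ rel
    M = P_bar - np.outer(y, n3_bar)
    A, B = M @ skew(p), M @ rel
    return a @ omega + b, (A @ omega + B) / z_c

h = 1e-2  # central-difference step, s
(zp, yp), (zm, ym) = measure(h), measure(-h)
z_dot_fd, y_dot_fd = (zp - zm) / (2 * h), (yp - ym) / (2 * h)
z_dot, y_dot = predicted_rates(0.0)
```

Agreement between the analytic and the numerical rates also makes the linearity in $\boldsymbol\omega$ easy to inspect: only the terms `a @ omega` and `A @ omega` depend on the body rate.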

Equations (16) and (18) are the staring imaging kinematics. From prior information about the target point, the position $\boldsymbol r_t$ and velocity $\boldsymbol v_t$ of the ground point are already known. Moreover, since attitude and orbit determination can provide the rotation matrix $\boldsymbol R$, the orbital position $\boldsymbol r_s$ and the velocity $\boldsymbol v_s$, the only uncertain parameters are those of the intrinsic matrix $\boldsymbol\Omega$ and the extrinsic matrix $\boldsymbol T_c$. Several characteristics of the kinematic equations are worth analyzing. First, the depth $z_c$ is not observable through images; therefore, our controller cannot access depth information. Second, $b(t)$ and $\boldsymbol B(t)$ contain the relative orbital motion and are uncontrollable, since no orbit maneuver is conducted. Consequently, if the relative motion between the target and the satellite is too fast, the demand to track the target may exceed the satellite’s attitude maneuver capability, and the target may be lost from the field of view. Hence, the satellite should have sufficient angular maneuver capability to keep up with the relative rotation; see [2] for further analysis of the relative angular velocity. Third, the image-based kinematics (16) and (18) share a feature with the quaternion-based kinematics (2): they are all linear with respect to $\boldsymbol\omega$. Fourth, the unknown camera parameters appear in $\boldsymbol a$, $b$, $\boldsymbol A$ and $\boldsymbol B$, so the projection change rate cannot be derived accurately from the kinematics, and a self-updating rule is proposed to estimate these parameters online.
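The maneuver-capability requirement can be quantified with the inertial angular rate of the satellite-to-target line of sight, $|\boldsymbol\rho\times\dot{\boldsymbol\rho}|/|\boldsymbol\rho|^2$ with $\boldsymbol\rho = \boldsymbol r_t - \boldsymbol r_s$. Since the optical axis is fixed in the body, the body angular velocity component perpendicular to the line of sight must match this rate, so it is a lower bound on $|\boldsymbol\omega|$ during staring. The sketch below is a hypothetical case (circular equatorial orbit at 500 km, target at the sub-satellite point), not the paper’s scenario.

```python
import numpy as np

MU_EARTH = 3.986004418e14   # Earth gravitational parameter, m^3/s^2
OMEGA_E = 7.292115e-5       # Earth rotation rate, rad/s
R_E = 6378.137e3            # Earth equatorial radius, m

def los_rate(r_s, v_s, r_t, v_t):
    """Inertial angular rate of the satellite-to-target line of sight, rad/s."""
    rho = r_t - r_s
    rho_dot = v_t - v_s
    return np.linalg.norm(np.cross(rho, rho_dot)) / (rho @ rho)

# Hypothetical case: circular equatorial orbit at 500 km, target at the sub-satellite point.
r_s = np.array([R_E + 500e3, 0.0, 0.0])
v_s = np.array([0.0, np.sqrt(MU_EARTH / np.linalg.norm(r_s)), 0.0])
r_t = np.array([R_E, 0.0, 0.0])
v_t = np.cross([0.0, 0.0, OMEGA_E], r_t)
rate = los_rate(r_s, v_s, r_t, v_t)
print(f"required line-of-sight rate: {np.degrees(rate):.3f} deg/s")
```

Overhead, the rate is simply the relative tangential speed divided by the range, roughly 0.82 deg/s in this hypothetical case; lower orbits and faster relative motion raise it accordingly.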

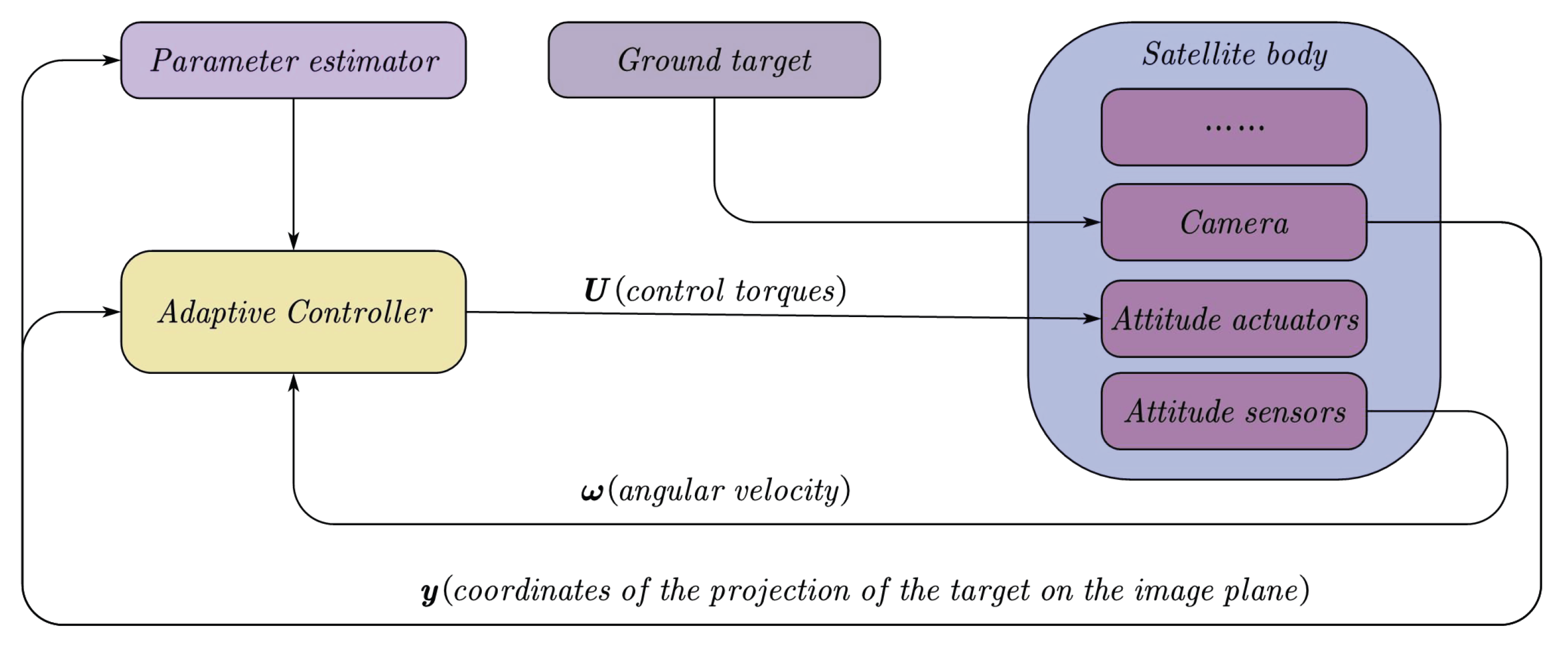

2.6. Control Objective

The control objective is to guarantee that the projection coordinate $\boldsymbol y$ approaches its desired location on the image plane. $\boldsymbol y$ is extracted from the real-time images, and the desired location $\boldsymbol y_d$ is predetermined. Normally, we expect to fix the target point at the center of the image to gain a better view; thus, without loss of generality, $\boldsymbol y_d$ is selected as the image center. To realize this purpose, an adaptive controller is designed to specifically address the unknown camera parameters. Defining the image error $\Delta\boldsymbol y = \boldsymbol y - \boldsymbol y_d$, the control objective is that $\Delta\boldsymbol y$ asymptotically converges to $\boldsymbol 0$.

Figure 4 shows the control framework. The camera captures the target when it appears in the field of view, and the image is then processed to obtain the corresponding target coordinate $\boldsymbol y$. With $\boldsymbol y$, the camera parameters are estimated online and thereafter applied in the attitude controller. Governed by the kinematics and dynamics, the satellite finally accomplishes the staring attitude maneuver.

4. Simulation and Discussion

In this section, the proposed image-based adaptive staring controller and a conventional position-based controller are applied to ground target observation.

At the initial time (12 Jul 2021 04:30:00.000 UTC), the ground target’s location is given in Table 1, and the orbital elements of the satellite are listed in Table 2. The target is initially near the sub-satellite point. The real and theoretical camera parameters are listed in Table 3, where the rotation matrices are parameterized with a 3-2-1 rotation sequence. The theoretical parameters, which were initially the real states of the camera, are used as the initial estimates. Due to various causes, e.g., long-term oscillation, the real parameters have deviated from the theoretical ones and reflect the current state of the camera. The desired projection location is at the center of the image plane. The initial attitude is presented in Table 4, where the camera is roughly pointing to the Earth’s center.
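Since the camera rotation matrices in Table 3 are given through a 3-2-1 sequence, a small helper to build and invert such matrices is convenient when reproducing the setup. The sketch below assumes the common aerospace frame-rotation (passive) convention $\boldsymbol C = \boldsymbol R_1(\phi)\boldsymbol R_2(\theta)\boldsymbol R_3(\psi)$; the paper’s exact sign convention is not restated in this section, so treat it as an assumption. The angles in the example are hypothetical, not the values of Table 3.

```python
import numpy as np

def R1(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]])

def R2(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]])

def R3(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])

def R321(psi, theta, phi):
    """Frame-rotation matrix for a 3-2-1 sequence: psi about z, then theta about
    the new y, then phi about the new x (passive convention assumed)."""
    return R1(phi) @ R2(theta) @ R3(psi)

def angles321(C):
    """Inverse map (psi, theta, phi), valid for |theta| < 90 deg."""
    return (np.arctan2(C[0, 1], C[0, 0]),
            -np.arcsin(C[0, 2]),
            np.arctan2(C[1, 2], C[2, 2]))

C = R321(np.radians(10.0), np.radians(-4.0), np.radians(2.5))  # hypothetical angles
```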

Under the given initial conditions, the ground target already appears in the image. The control torques are bounded by the maximum output of the attitude actuators, i.e., reaction flywheels, so each component satisfies $|\tau_i|\le\tau_{\max}$ for $i = 1, 2, 3$, where $\tau_{\max}$ (in N·m) is the actuator limit used in the simulation. The following two cases are simulated using the same uncalibrated camera and the same initial attitude and orbit conditions. In case 1, the conventional position-based controller uses only the target’s location information, without taking advantage of the images. In case 2, we assume that the image processing algorithm detects the target’s pixel coordinates, and the image-based adaptive controller outputs control torques that incorporate both the image and the location information.
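The bound $|\tau_i|\le\tau_{\max}$ can be imposed in more than one way, and the text does not specify which one the simulation uses. The sketch below shows per-axis clipping, which matches the component-wise inequality literally, next to a direction-preserving alternative that scales the whole vector; the function names, the limit, and the commanded torque are hypothetical.

```python
import numpy as np

def saturate_per_axis(tau_cmd, tau_max):
    """Clip each component to [-tau_max, tau_max]; may change the torque direction."""
    return np.clip(tau_cmd, -tau_max, tau_max)

def saturate_scaled(tau_cmd, tau_max):
    """Scale the whole vector so its largest component meets the bound; keeps direction."""
    peak = np.max(np.abs(tau_cmd))
    return tau_cmd if peak <= tau_max else tau_cmd * (tau_max / peak)

tau_cmd = np.array([0.3, -0.05, 0.1])          # hypothetical controller output, N m
tau_max = 0.1                                  # hypothetical actuator limit, N m
clipped = saturate_per_axis(tau_cmd, tau_max)  # [0.1, -0.05, 0.1]
scaled = saturate_scaled(tau_cmd, tau_max)     # about [0.1, -0.0167, 0.0333]
```

Per-axis clipping uses the full capacity of every wheel but tilts the applied torque away from the commanded direction; uniform scaling preserves the direction at the cost of a smaller magnitude on the unsaturated axes.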

4.1. Case 1: Conventional Position-Based Staring Controller

Conventional staring control methods are normally based on the location of the ground target. By designing the desired orientation and angular velocity, the optical axis of the camera is aimed at the target, provided that the camera is ideally calibrated. Using the uncalibrated camera and the initial conditions presented in this section, we adopt the position-based staring controller from [2]. This PD-like controller uses two coefficient matrices acting on an angle error and an angular velocity error. The angle error is formed by the two rotation angles between the camera’s optical axis and its desired orientation aiming at the target, and the angular velocity error is the difference between the satellite’s angular velocity and the desired angular velocity. These desired quantities are designed based on the target’s position; refer to [2] for more detailed definitions.

Table 5 shows the coefficient values adopted in the simulation.

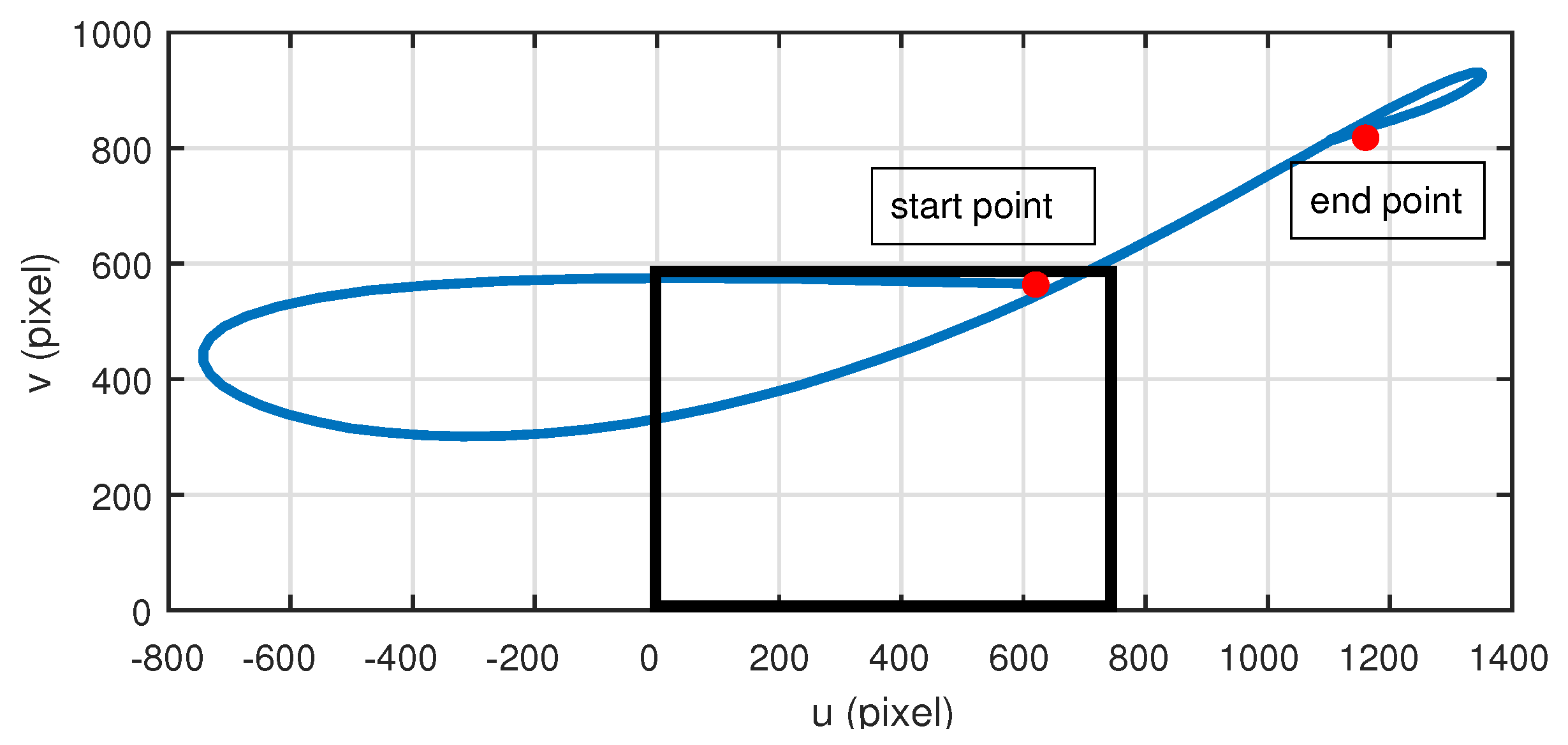

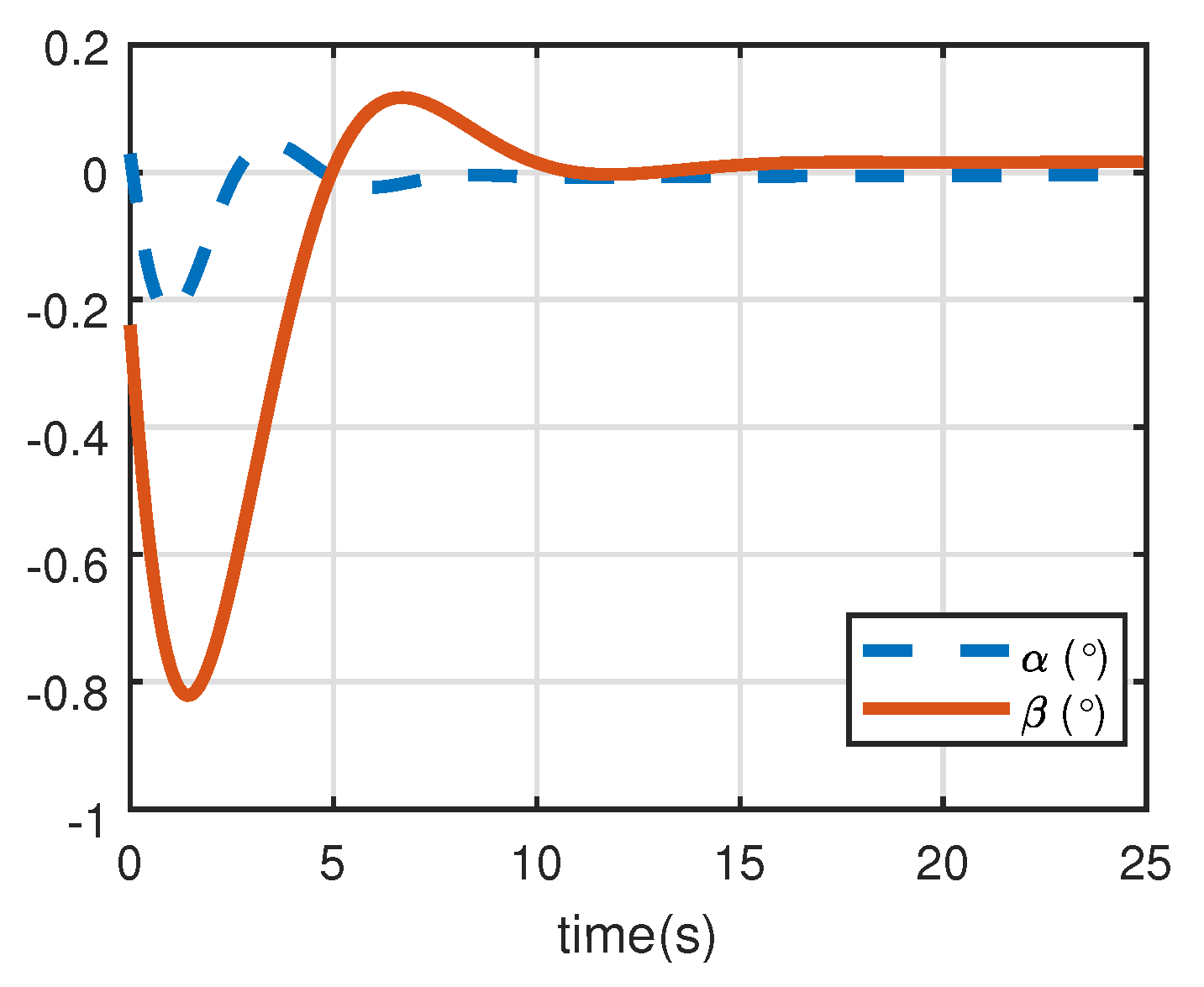

The target’s trajectory on the image plane is shown in Figure 5, where the black box is the field of view. Figure 6 depicts the evolution of the two rotation angles. The ground point initially lies in the upper right corner of the image plane, marked by the start point. As the controller starts working, the target gradually moves out of the field of view, which means the camera temporarily cannot see the target. Since this controller depends only on the position, it can still work without sight of the target. However, the end point shown in Figure 5 demonstrates that when the satellite finishes the attitude maneuver and enters the stable staring stage, the target is still lost from the image. This failure to observe the ground target results from the uncalibrated camera. According to the transform matrix $\boldsymbol R_c$ from the body frame to the camera frame in Table 3, the optical axis of the camera deviates considerably from its ideal orientation in the body frame. Hence, when the position-based controller considers the optical axis to be aimed at the target, the target is actually out of view.
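The effect of a mounting error on pointing can be sketched by comparing the ideal and real optical axes in the body frame. Assuming that $\boldsymbol R_c$ maps body coordinates to camera coordinates and that the optical axis is the camera $z$ axis, the axis in body coordinates is $\boldsymbol R_c^T\boldsymbol e_3$, and the angle between the two axes follows from their dot product. The tilt angle, the helper names, and the ratio $f/d_u$ below are hypothetical, not the values of Table 3.

```python
import numpy as np

def rot_y(a):
    """Frame rotation about the y axis (passive convention)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]])

def rot_z(a):
    """Frame rotation about the z axis (passive convention)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])

def boresight_deviation(R_c_ideal, R_c_real):
    """Angle between the ideal and real optical axes, both expressed in the body frame.
    R_c maps body to camera coordinates, so the camera z axis in body axes is R_c.T @ e3."""
    e3 = np.array([0.0, 0.0, 1.0])
    a_ideal, a_real = R_c_ideal.T @ e3, R_c_real.T @ e3
    return np.arccos(np.clip(a_ideal @ a_real, -1.0, 1.0))

R_ideal = np.eye(3)
R_tilted = rot_y(np.radians(3.0))   # hypothetical 3 deg mounting error about y
R_rolled = rot_z(np.radians(3.0))   # same magnitude about the optical axis itself
dev_tilted = boresight_deviation(R_ideal, R_tilted)
dev_rolled = boresight_deviation(R_ideal, R_rolled)
pixel_offset = 1e5 * np.tan(dev_tilted)  # image offset of the target, hypothetical f/d_u = 1e5
```

For the hypothetical 3° tilt, the offset is about 5.2 × 10³ pixels, far outside a 1024-pixel-wide image, which mirrors how a modest mounting error can push the target out of view in Case 1; the same rotation about the boresight leaves the optical axis unchanged.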

4.2. Case 2: Image-Based Adaptive Staring Controller

Table 6 shows the control coefficients of the controller. The operation $\mathrm{diag}(\cdot)$ denotes a diagonal matrix whose diagonal entries are the listed elements, in order.

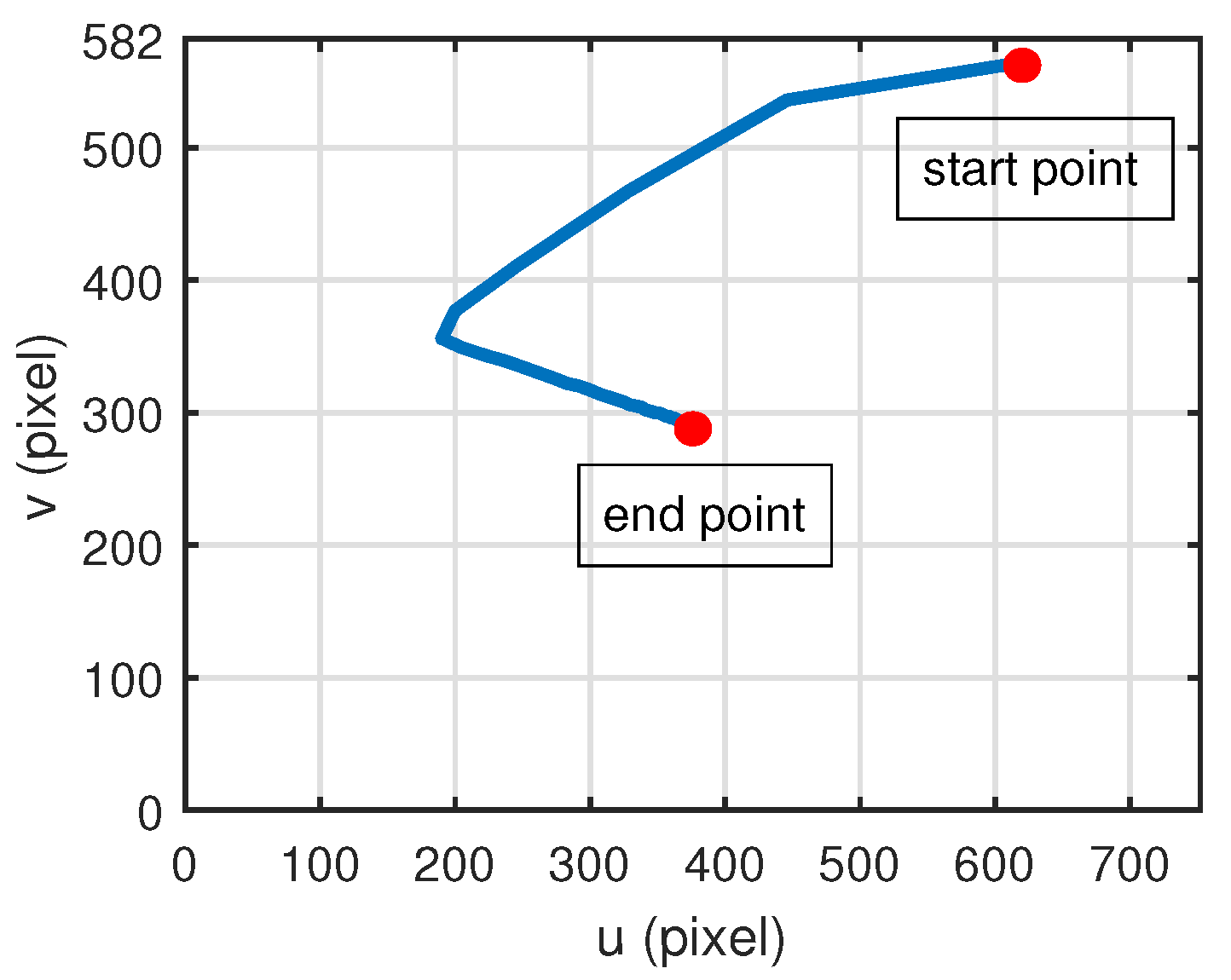

Figure 7 depicts the trace of the target’s projection on the image plane. Initially, the target appears at the same location on the image plane as in Section 4.1. With the staring controller working properly, the projection moves along the trajectory and finally reaches the end point, which is also the image center $\boldsymbol y_d$. The trajectory indicates that it takes some time for the controller to find the proper direction towards the desired destination, because the initial guess of the parameters is the primary factor affecting the accuracy of the controller at the starting stage. Furthermore, the initial angular velocity of the satellite also determines the initial moving direction of the target.

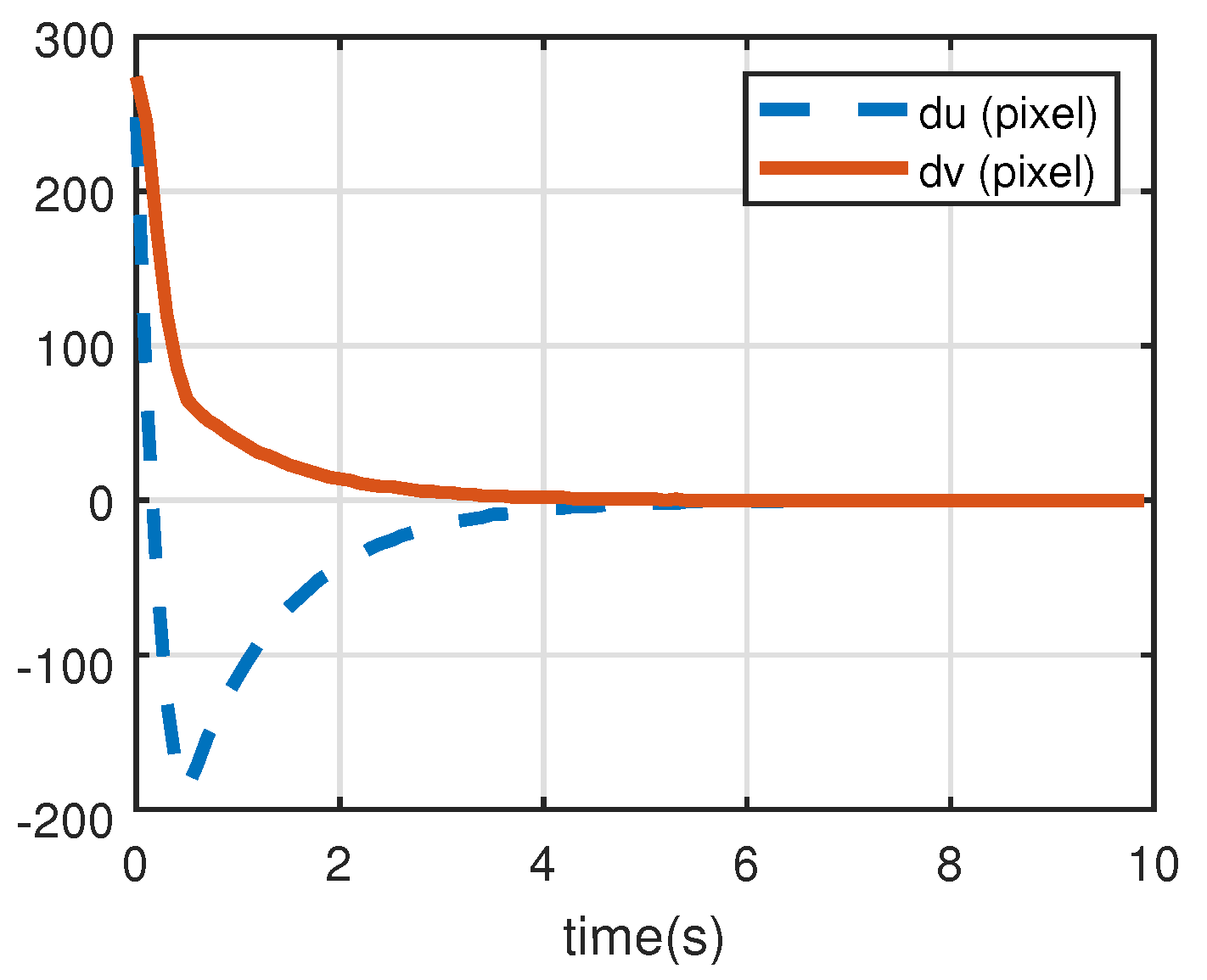

Figure 8 shows the differences between the current and the desired coordinates and it reflects the same trend as

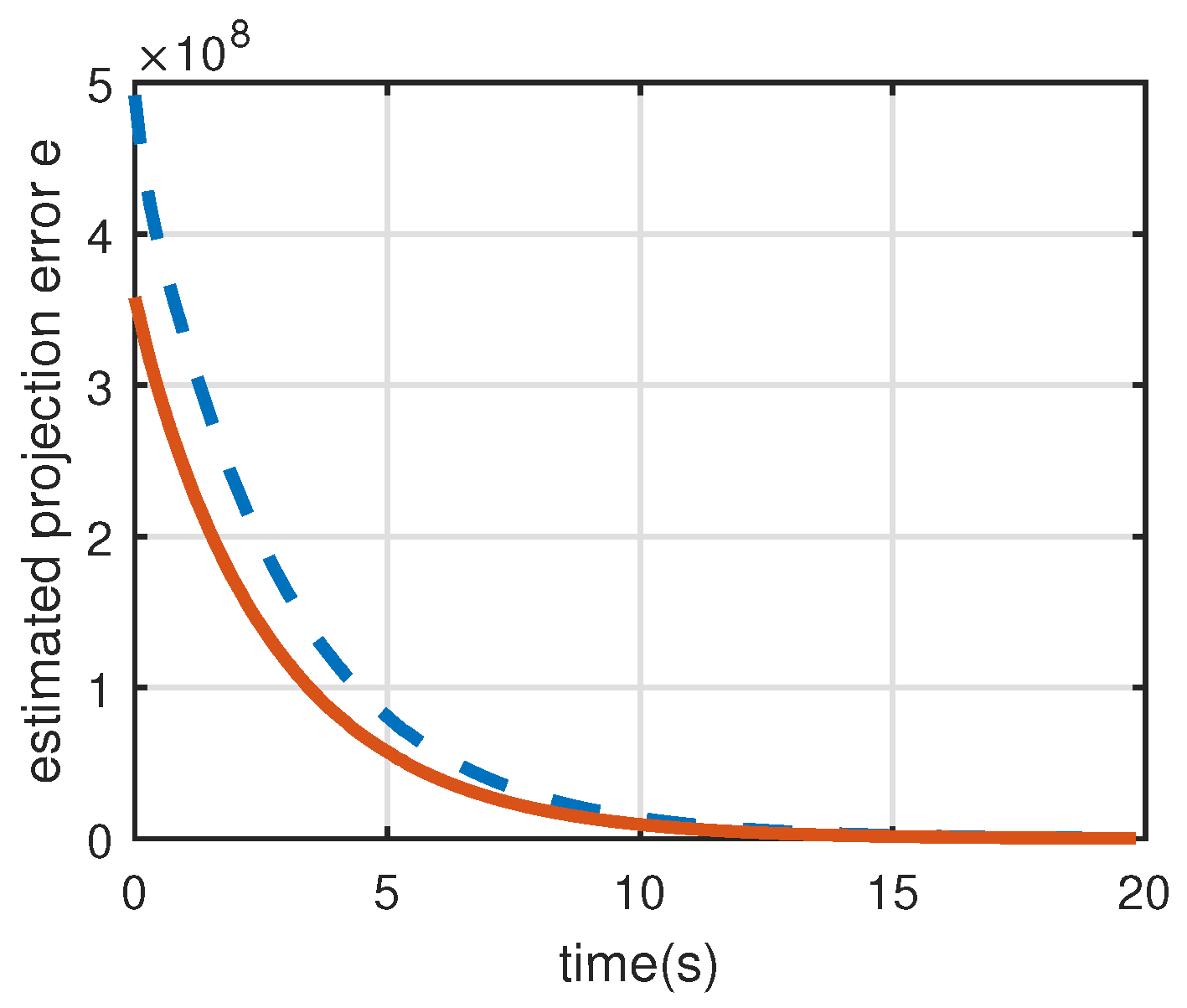

Figure 7.

Figure 9 shows the time evolution of the estimated projection errors. The adaptive rule continuously updates the parameters in the negative gradient direction of the estimated projection errors, so these errors are reduced by the parameter estimation.
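The gradient idea behind the parameter update can be illustrated with a minimal discrete-time, batch sketch. It assumes the projection model $z_c[\boldsymbol y; 1] = \boldsymbol N[\boldsymbol p; 1]$, so that for each measured pair $(\boldsymbol y, \boldsymbol p)$ the estimated projection error $\hat{\boldsymbol P}[\boldsymbol p; 1] - \boldsymbol y\,\hat{\boldsymbol n}_3^T[\boldsymbol p; 1]$ is linear in the twelve entries of $\hat{\boldsymbol N}$. It is not the continuous-time adaptive law of this paper; the cameras, the data, the fixed-scale choice, and the step-size rule are all assumptions made for illustration.

```python
import numpy as np

def regressor(y, p):
    """W(y, p) with W @ theta = P_hat [p;1] - y * (n3_hat . [p;1]),
    where theta = N_hat.ravel() (row-major 3x4 projection matrix)."""
    h = np.append(p, 1.0)
    W = np.zeros((2, 12))
    W[0, 0:4], W[0, 8:12] = h, -y[0] * h
    W[1, 4:8], W[1, 8:12] = h, -y[1] * h
    return W

def project(N, p):
    g = N @ np.append(p, 1.0)
    return g[:2] / g[2]

# Hypothetical cameras (positions in km); N_true keeps n33 = 1 so the scale matches.
N_theory = np.array([[1e5, 0.0, 512.0, 0.0],
                     [0.0, 1e5, 512.0, 0.0],
                     [0.0, 0.0, 1.0, 0.0]])
N_true = N_theory + np.array([[2e3, -50.0, 800.0, 0.3],
                              [40.0, -1.5e3, -600.0, -0.2],
                              [0.004, -0.003, 0.0, 0.0]])

rng = np.random.default_rng(1)
pts = np.column_stack([rng.uniform(-2, 2, 30), rng.uniform(-2, 2, 30),
                       rng.uniform(480, 520, 30)])        # target positions p_k
W_all = np.vstack([regressor(project(N_true, p), p) for p in pts])

free = np.ones(12, dtype=bool)
free[10] = False                  # keep n33 fixed: N is only observable up to scale
gamma = 1.0 / np.linalg.norm(W_all[:, free], 2) ** 2     # step 1/L guarantees descent

theta = N_theory.ravel().copy()   # initial guess = theoretical parameters
costs = []
for _ in range(500):
    r = W_all @ theta             # stacked estimated projection errors
    costs.append(0.5 * r @ r)
    grad = W_all.T @ r
    grad[~free] = 0.0
    theta -= gamma * grad         # step along the negative gradient
```

One entry is frozen because the pixel coordinates do not change when $\boldsymbol N$ is multiplied by a nonzero scalar, so without a normalization the trivial estimate $\hat{\boldsymbol N} = \boldsymbol 0$ would also remove the error. The step $1/L$, with $L$ the largest squared singular value of the free-parameter regressor, ensures that the cost never increases; in practice, a gain matrix and scaled units would mitigate the slow convergence caused by the poor conditioning of raw pixel and kilometer values.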

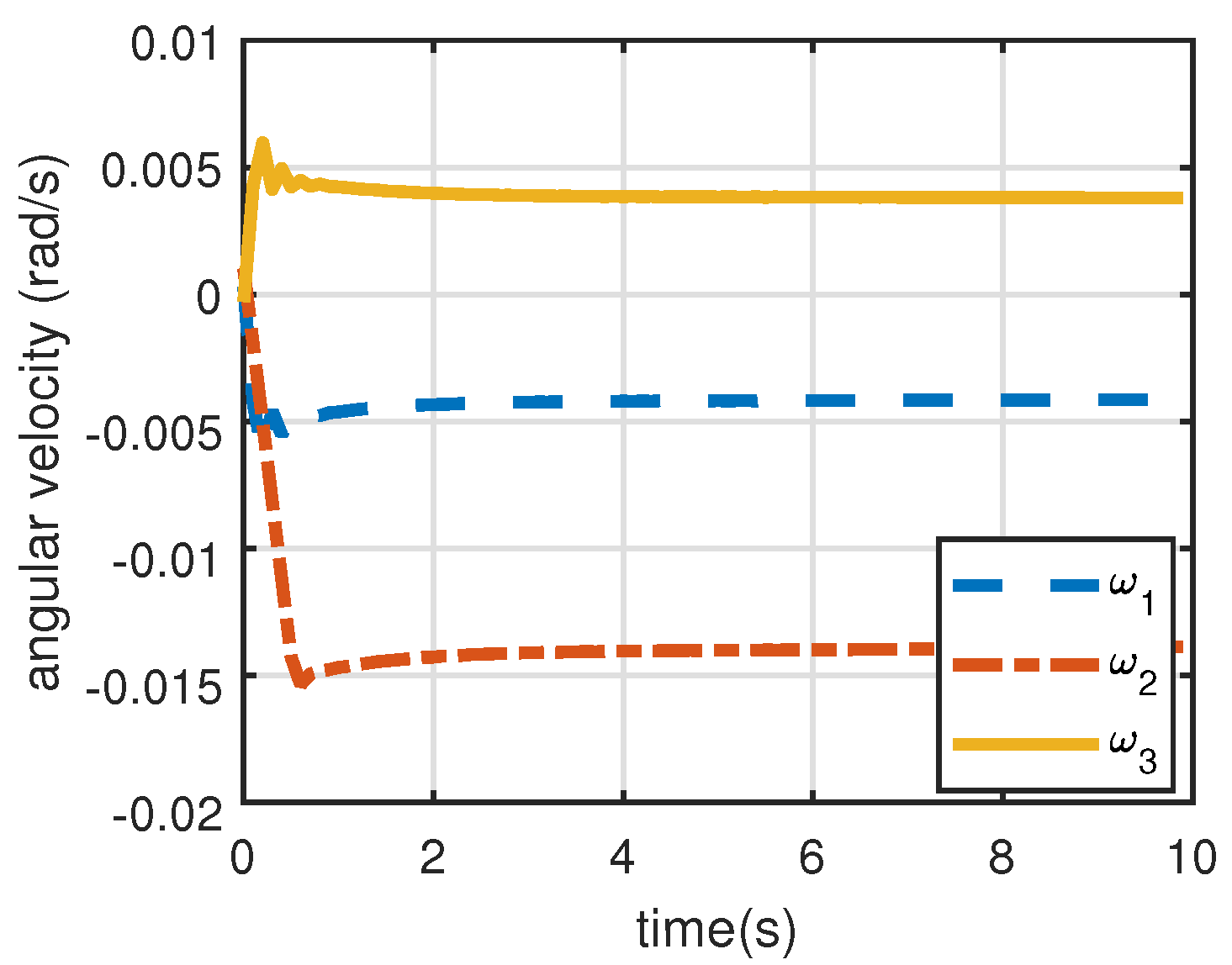

Figure 10 shows the angular velocities of the satellite. At first, $\boldsymbol\omega$ changes rapidly and then gradually stabilizes. It is worth noting that $\boldsymbol\omega$ does not converge to $\boldsymbol 0$, because the ground target is a moving point in inertial space, and the satellite must rotate at a certain rate to keep staring at it. Moreover, Figure 10 shows that the final angular velocity is not constant but varies slowly because of the relative motion between the ground point and the satellite.

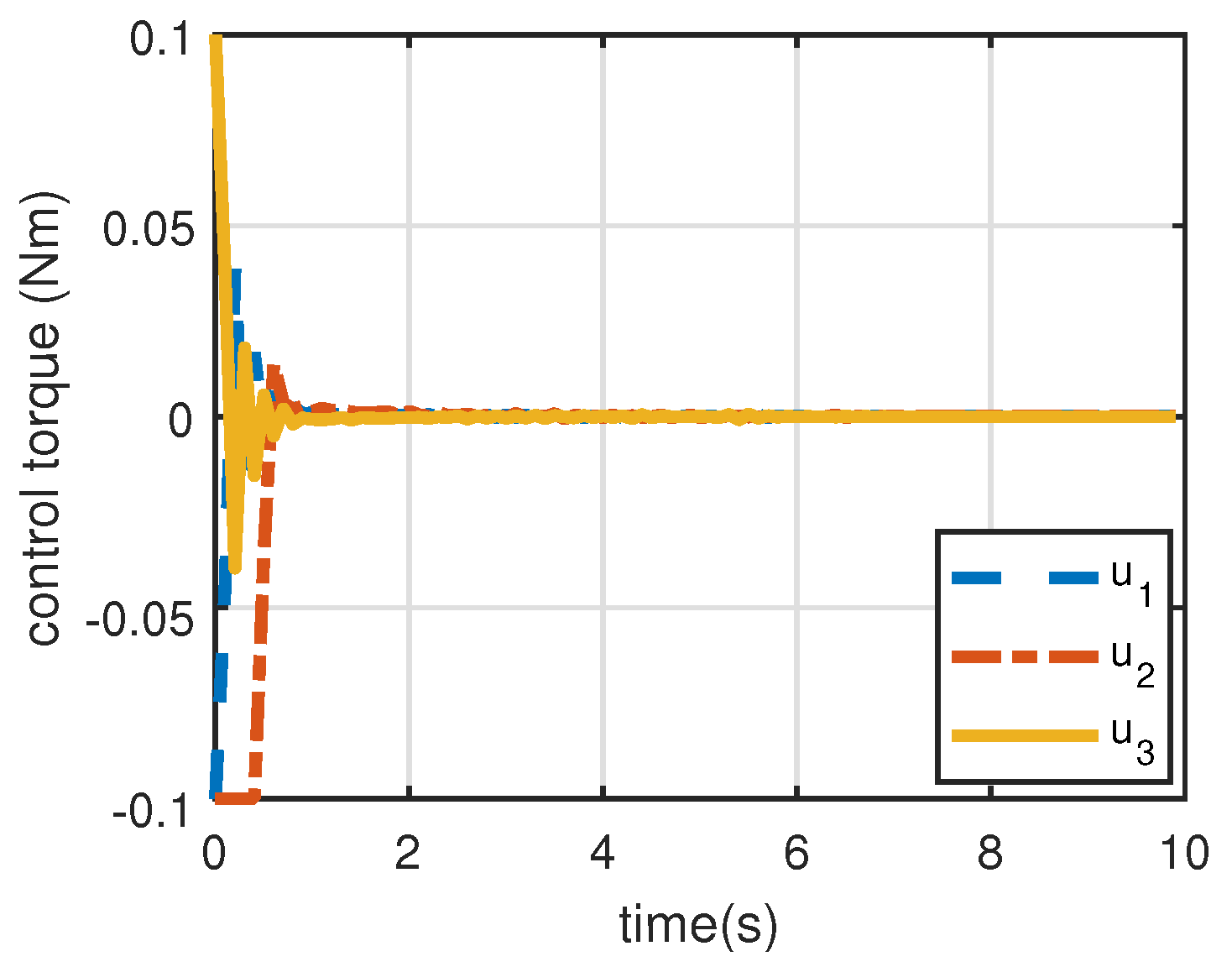

Figure 11 shows the control torques generated by the attitude actuators. In the starting process, $\boldsymbol\tau$ reaches its upper bound, which is the joint result of the parameter estimation, the initial image errors, and the initial angular velocity. As the target projection approaches its desired location, the control torques decrease and eventually remain within a narrow range, providing the minor angular acceleration adjustments mentioned above.

Here, we summarize the two cases. The position-based staring controller fails to cope with the deviation caused by the uncalibrated camera. For the proposed image-based adaptive staring controller, although the camera parameters are unknown, online estimation reduces the estimated projection errors, and the resulting control torques drive the target projection to its desired location. The simulation demonstrates that the adaptive controller keeps the target’s projection at the image center, which suggests that high-precision ground target staring observation can be achieved. Only small control torques are needed to maintain continuous tracking during the stable staring process.

5. Conclusions and Outlook

For a video satellite, staring attitude control is a main working mode and has enabled many promising applications. This paper proposes an adaptive controller that takes the camera model into account. First, the projection kinematics are established for the staring imaging scenario, where constant relative motion exists. Second, an attitude reference trajectory is introduced to avoid designing the desired angular velocity, and a potential function is introduced to guarantee that the reference angular velocity is well defined. Third, the parameters to be estimated are defined, and a corresponding parameter updating rule is proposed. Finally, the image and attitude information are combined to form the adaptive staring controller, which is constructed using the estimated variables. Stability is proved, and in simulation the projection is successfully driven to the predetermined desired location on the image plane. Thus, an image-based staring controller for an uncalibrated camera is formulated.

While the motion of ground targets is known in advance, it is hard to predict the motion of many moving targets such as aircraft and ships. Future studies should address such non-cooperative targets, whose relative motion is unknown.