Physics-Informed MTA-UNet: Prediction of Thermal Stress and Thermal Deformation of Satellites

Abstract

1. Introduction

- (1)

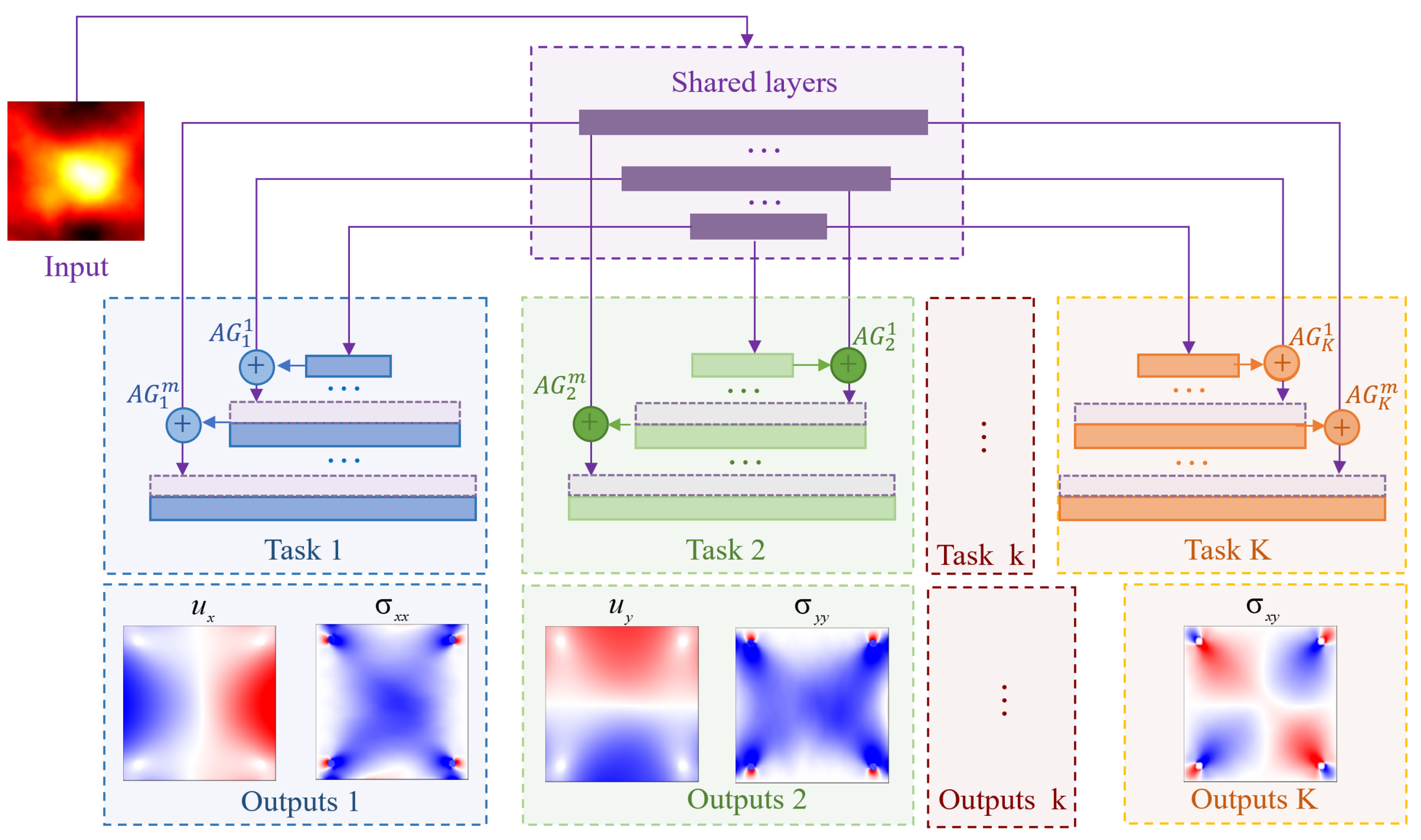

- This paper integrates the advantages of the U-Net and MTL, and proposes the Multi-Task Attention UNet network (MTA-UNet). This network not only shares feature information between high-level layers and low-level layers, but also shares feature information between different tasks. Specifically, it shares the parameters in a targeted way through the attention mechanism. The MTA-UNet effectively reduces the training time of the model and improves the accuracy of prediction compared with STL U-Net.

- (2)

- A physics-informed approach is applied in training the deep learning-based surrogate model, where the finite difference is applied to discrete thermoelastic and thermal equilibrium equations. The equations are encoded into a loss function to fully exploit the existing physics knowledge.

- (3)

- Faced with multiple physics tasks, an uncertainty-based loss balancing strategy is adopted to weigh the loss functions of different tasks during the training process. This strategy solves the problem that the training speed and accuracy between different tasks are difficult to balance if trained together, effectively reducing the phenomenon of competition between tasks.
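The attention-based sharing described in contribution (1) can be illustrated with a task-specific soft mask applied to shared features, in the spirit of the multi-task attention network of Liu, Johns and Davison (2019). This is a minimal sketch under stated assumptions, not the MTA-UNet attention gate itself: a feature map is reduced to one pixel's channel vector, a hypothetical 1x1 convolution is written as a plain C x C matrix, and the names `task_attention`, `stress_features` and `deform_features` are illustrative. The biases are hand-picked so that each task visibly keeps a different subset of channels; in a trained network they would be learned.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def task_attention(shared: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Gate shared features with a task-specific soft mask (illustrative sketch).

    shared: shared feature vector at one pixel (C channels)
    weight: C x C matrix standing in for a hypothetical 1x1 convolution
    bias:   length-C bias vector
    Returns mask * shared, where every mask entry lies in (0, 1).
    """
    channels = len(shared)
    mask = [
        sigmoid(sum(weight[i][j] * shared[j] for j in range(channels)) + bias[i])
        for i in range(channels)
    ]
    return [m * s for m, s in zip(mask, shared)]


# Two hypothetical task branches reading the same shared features.
# Zero weights make each mask depend on the bias alone, for clarity.
shared = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]
stress_features = task_attention(shared, zeros, [10.0, -10.0, 10.0])
deform_features = task_attention(shared, zeros, [-10.0, 10.0, -10.0])
```

Both branches read the same shared vector, but the stress branch keeps roughly channels 0 and 2 while the deformation branch keeps roughly channel 1; this selective reuse of one shared representation is the idea behind attention-based parameter sharing.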
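For contribution (2), the sketch below shows how a finite-difference residual can serve as a physics-informed loss term. It covers only a steady heat conduction equation, assumed here to take the form k·(T_xx + T_yy) + φ = 0 with constant conductivity k and uniform grid spacing h; the thermoelastic displacement equations and the boundary conditions used in the paper are not included. The function name `heat_residual_loss` is hypothetical.

```python
from typing import List

Grid = List[List[float]]


def heat_residual_loss(T: Grid, phi: Grid, k: float, h: float) -> float:
    """Mean squared finite-difference residual of k * (T_xx + T_yy) + phi = 0.

    T:   predicted temperature field on a uniform grid (rows x cols)
    phi: heat source intensity on the same grid
    k:   constant thermal conductivity (assumption)
    h:   grid spacing, equal in both directions (assumption)
    Only interior nodes are used; boundary conditions would need separate terms.
    """
    rows, cols = len(T), len(T[0])
    total, count = 0.0, 0
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            # Standard 5-point stencil for the Laplacian.
            lap = (T[i + 1][j] + T[i - 1][j] + T[i][j + 1] + T[i][j - 1] - 4.0 * T[i][j]) / h**2
            residual = k * lap + phi[i][j]
            total += residual * residual
            count += 1
    return total / count


# T = x^2 + y^2 has Laplacian 4, so it satisfies the equation when k = 1 and phi = -4.
h = 0.25
T = [[(i * h) ** 2 + (j * h) ** 2 for j in range(5)] for i in range(5)]
loss_exact = heat_residual_loss(T, [[-4.0] * 5 for _ in range(5)], k=1.0, h=h)
loss_wrong = heat_residual_loss(T, [[0.0] * 5 for _ in range(5)], k=1.0, h=h)
```

Because the 5-point stencil is exact for quadratic fields, this T gives a zero residual when φ = -4, and a residual of 4 at every interior node (a loss of 16) when φ = 0. In training, T would be the network's prediction, so minimizing this loss pushes the prediction toward fields that satisfy the discretized equation.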
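The uncertainty-based balancing in contribution (3) is commonly implemented with the homoscedastic-uncertainty weighting of Kendall, Gal and Cipolla (2018). The sketch below assumes that regression form, with one learnable log-variance s_i per task; the paper's exact formulation may differ. To keep the example self-contained, the task losses are held fixed and only the s_i are optimized; the loss values 4.0 and 0.25 and the function names are hypothetical.

```python
import math
from typing import List


def uncertainty_weighted_loss(losses: List[float], log_vars: List[float]) -> float:
    """Homoscedastic-uncertainty weighting in the style of Kendall et al. (2018).

    With s_i = log(sigma_i^2) learned per task:
        total = sum_i 0.5 * exp(-s_i) * L_i + 0.5 * s_i
    The 0.5 * s_i term stops every weight from collapsing to zero.
    """
    return sum(0.5 * math.exp(-s) * L + 0.5 * s for L, s in zip(losses, log_vars))


def fit_log_vars(losses: List[float], lr: float = 0.1, steps: int = 2000) -> List[float]:
    """Gradient descent on s_i with the task losses held fixed (illustration only)."""
    log_vars = [0.0] * len(losses)
    for _ in range(steps):
        # d(total)/d(s_i) = -0.5 * exp(-s_i) * L_i + 0.5
        grads = [-0.5 * math.exp(-s) * L + 0.5 for L, s in zip(losses, log_vars)]
        log_vars = [s - lr * g for s, g in zip(log_vars, grads)]
    return log_vars


# Hypothetical per-task losses, e.g. one stress task and one deformation task.
losses = [4.0, 0.25]
log_vars = fit_log_vars(losses)
weights = [0.5 * math.exp(-s) for s in log_vars]
```

Setting the derivative -0.5·exp(-s_i)·L_i + 0.5 to zero gives s_i = log L_i, so each task's effective weight 0.5·exp(-s_i) tends to 0.5/L_i (here 0.125 and 2.0). Tasks with a larger loss are therefore down-weighted, which keeps one task from dominating the shared gradient.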

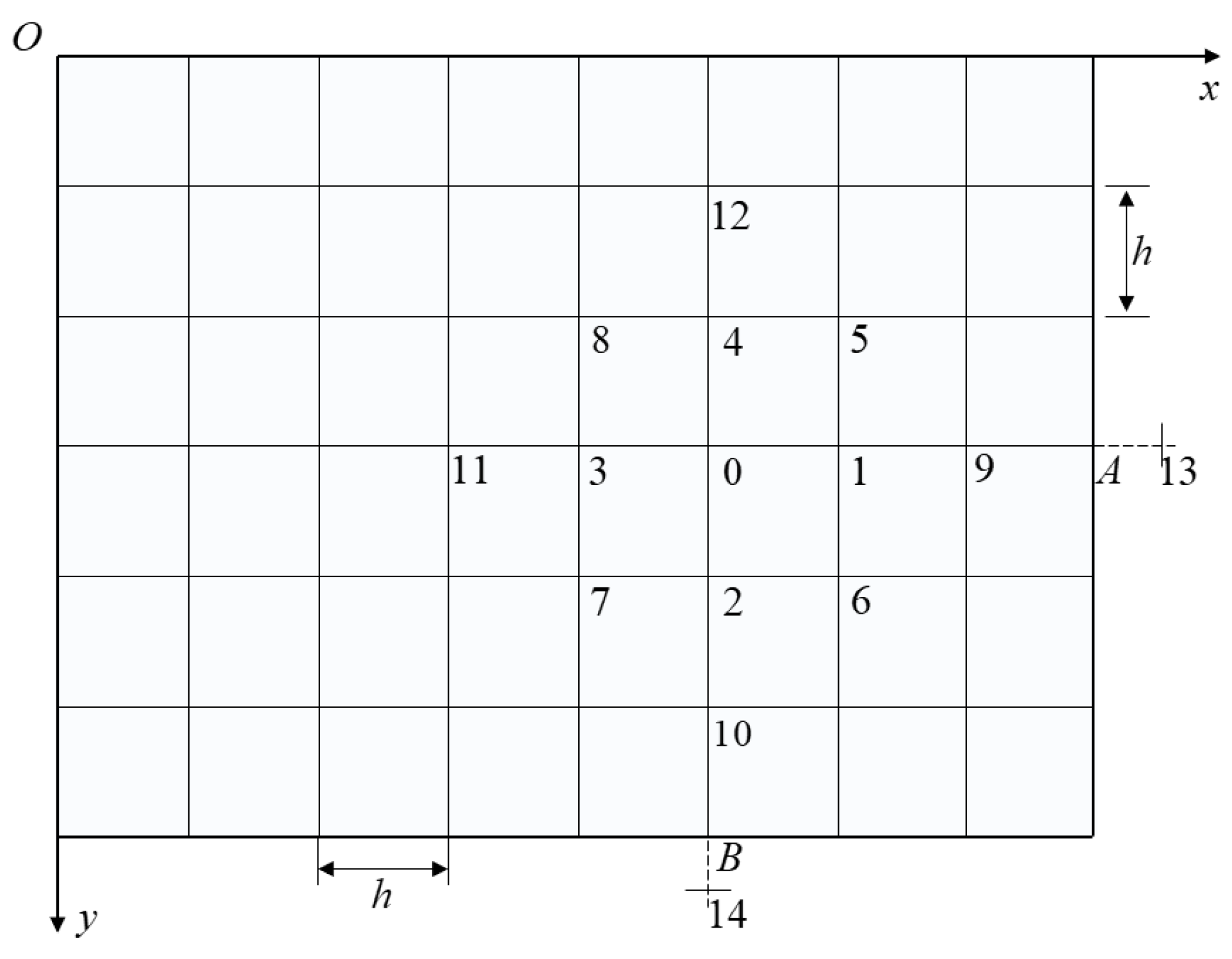

2. Mathematical Modeling of Thermal Stress and Deformation Prediction

3. Method

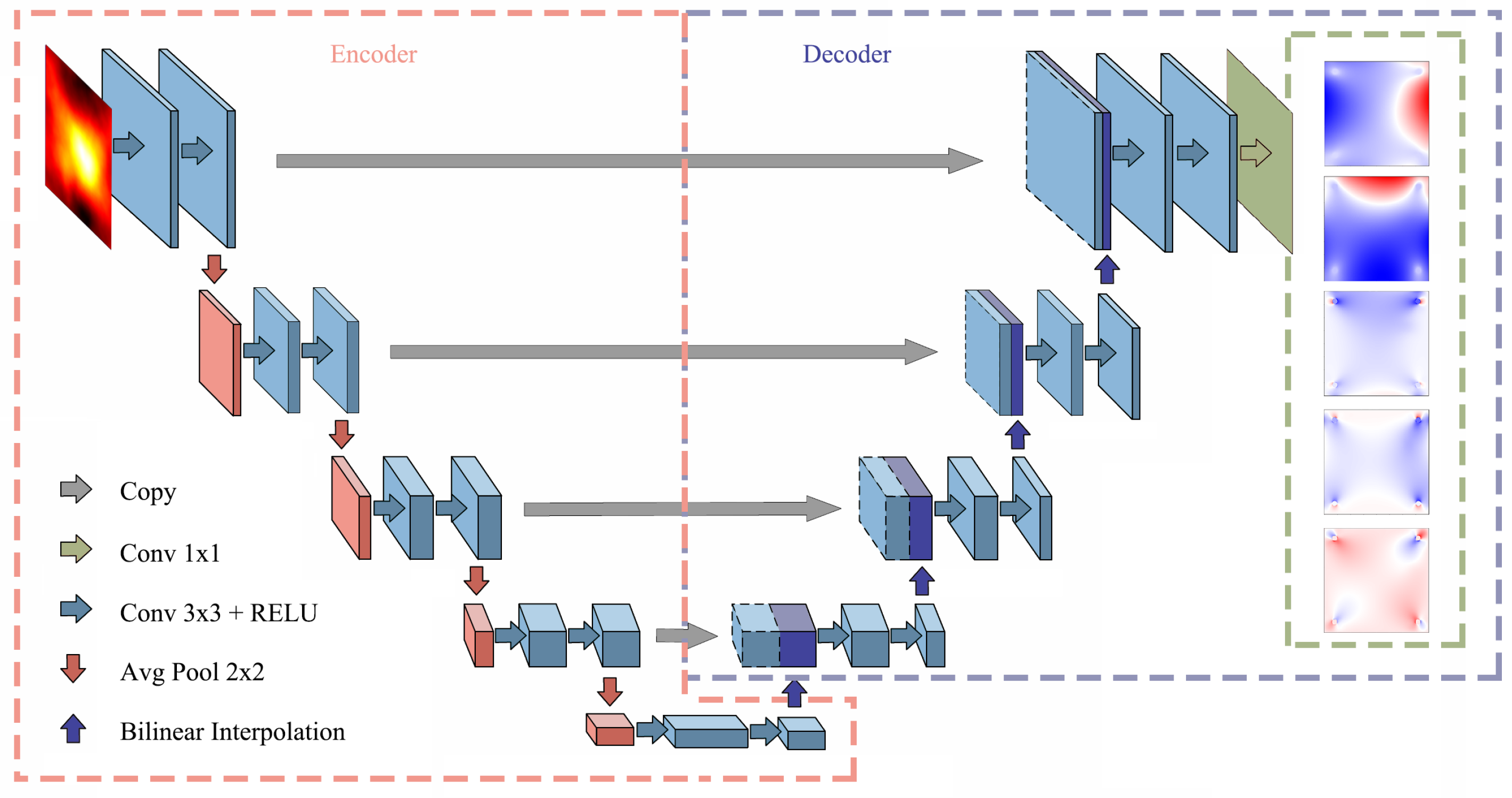

3.1. MTA-UNet Network Structure

3.1.1. Architecture of the MTA-UNet Network

3.1.2. Feature Sharing Mechanism of MTA-UNet

3.1.3. Attention Gate of MTA-UNet

3.2. A Physics-Informed Training Strategy

3.3. Uncertainty-Based Multi-Task Loss Balancing Strategy

4. Experiment Results

4.1. Training Steps

4.2. Performance of Multiple Strategies

4.2.1. Effectiveness of Dynamic Loss Balancing Strategies

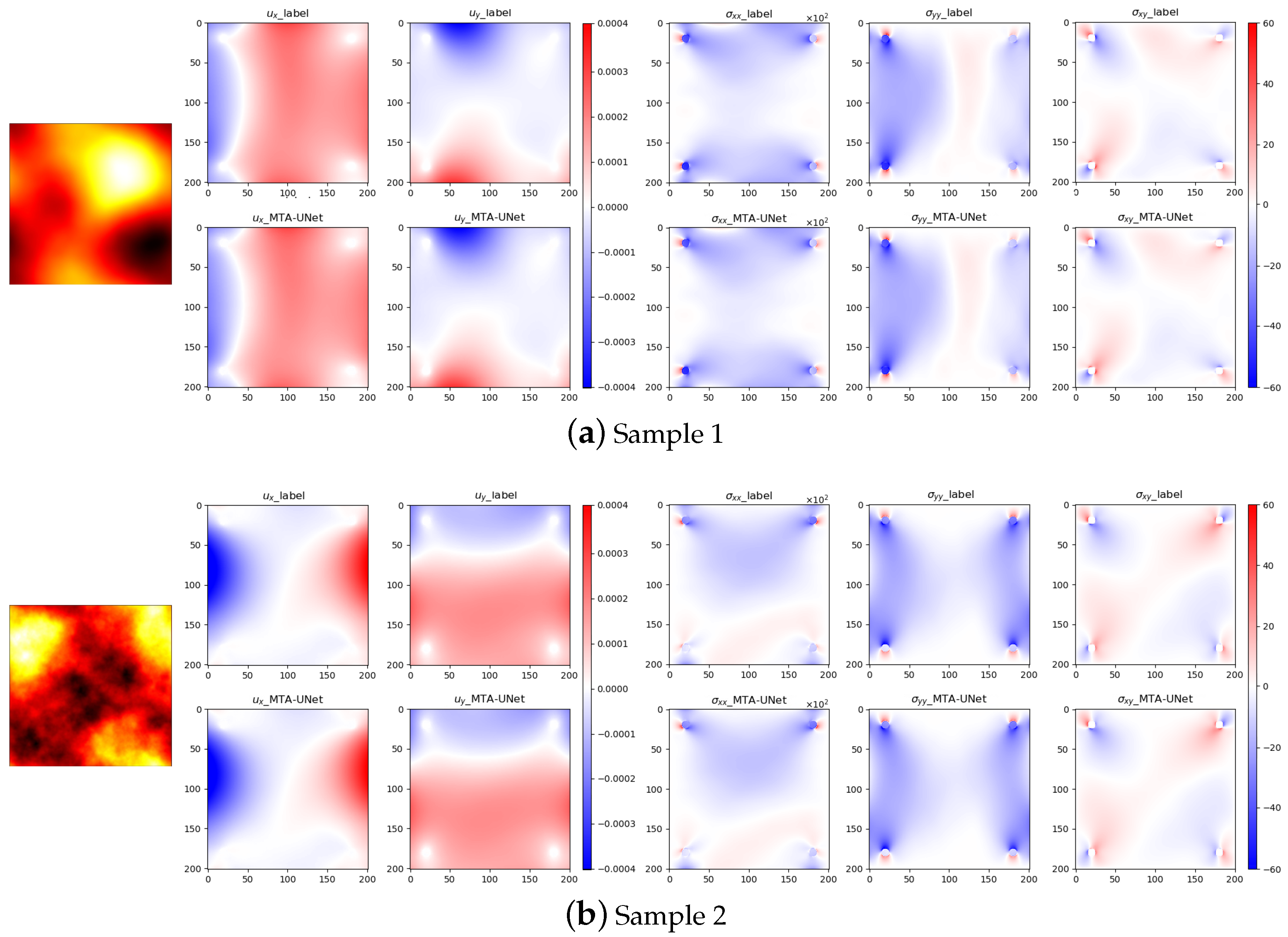

4.2.2. Performance of the Models

4.2.3. Effects of the Physics-Informed Strategy

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Task | U-Net | MTA-UNet | U-Net | MTA-UNet |
|---|---|---|---|---|
|  | 1.9754 | 1.1556 | ±8.2727 | ±1.9903 |
|  | 2.0947 | 1.0721 | ±8.3263 | ±2.2980 |
|  | 0.0945 | 0.0713 | ±0.01 | ±0.0097 |
|  | 0.0924 | 0.0728 | ±0.01 | ±0.0098 |
|  | 0.0474 | 0.0402 | ±0.01 | ±0.0087 |

| Task | U-Net | MTA-UNet | U-Net | MTA-UNet |
|---|---|---|---|---|
|  | 2.23 | 1.30 | ±0.0056 | ±0.0044 |
|  | 2.39 | 1.21 | ±0.0058 | ±0.0039 |
|  | 1.27 | 0.96 | ±0.0036 | ±0.0027 |
|  | 1.29 | 0.98 | ±0.0039 | ±0.0027 |
|  | 1.36 | 1.03 | ±0.0038 | ±0.0030 |

| Scale | Method |  |  |  |  |  |  |  |  |  |  |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 200 | Data | 6.46 | 6.42 | 4.43 | 4.55 | 5.52 | ±0.0421 | ±0.0396 | ±0.0145 | ±0.0143 | ±0.0200 |
| 200 | PDE | 6.07 | 6.02 | 4.02 | 4.17 | 5.11 | ±0.0305 | ±0.0297 | ±0.0103 | ±0.0102 | ±0.0142 |
| 500 | Data | 3.72 | 3.76 | 2.77 | 2.78 | 2.93 | ±0.0185 | ±0.0193 | ±0.0083 | ±0.0088 | ±0.0104 |
| 500 | PDE | 3.48 | 3.50 | 2.37 | 2.45 | 2.66 | ±0.0095 | ±0.0096 | ±0.0065 | ±0.0056 | ±0.0093 |
| 1000 | Data | 3.11 | 3.19 | 1.99 | 2.01 | 2.29 | ±0.0114 | ±0.0115 | ±0.0056 | ±0.0057 | ±0.0086 |
| 1000 | PDE | 2.96 | 2.98 | 1.82 | 1.78 | 2.09 | ±0.0073 | ±0.0073 | ±0.0046 | ±0.0044 | ±0.0071 |
| 2000 | Data | 2.62 | 2.65 | 1.55 | 1.61 | 1.72 | ±0.0089 | ±0.0092 | ±0.0049 | ±0.0052 | ±0.0065 |
| 2000 | PDE | 2.49 | 2.51 | 1.41 | 1.40 | 1.53 | ±0.0063 | ±0.0062 | ±0.0044 | ±0.0042 | ±0.0044 |
| 5000 | Data | 2.28 | 2.41 | 1.19 | 1.25 | 1.41 | ±0.0057 | ±0.0069 | ±0.0039 | ±0.0040 | ±0.0046 |
| 5000 | PDE | 2.17 | 2.30 | 1.11 | 1.12 | 1.22 | ±0.0048 | ±0.0052 | ±0.0029 | ±0.0030 | ±0.0031 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cao, Z.; Yao, W.; Peng, W.; Zhang, X.; Bao, K. Physics-Informed MTA-UNet: Prediction of Thermal Stress and Thermal Deformation of Satellites. Aerospace 2022, 9, 603. https://doi.org/10.3390/aerospace9100603
