1.2.1. Eye Movements

An enormous effort by cognitive psychologists and neuroscientists has been made toward understanding ATCOs’ eye movements. These studies implicitly or explicitly assume that eye movements, as an information-seeking behavior, is closely related to

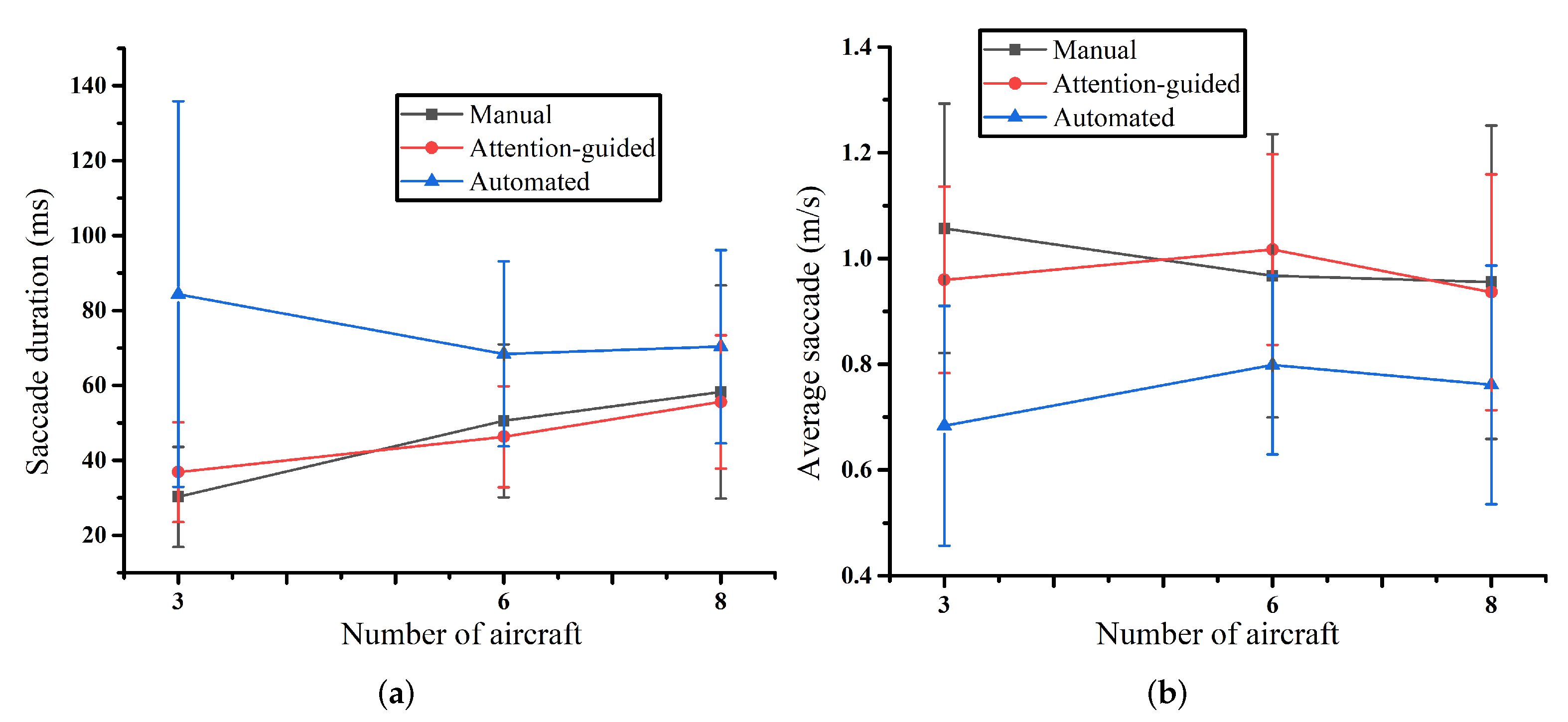

workload, conflict detection, and performance. Eye movements data were recorded and analyzed to identify their relationships with workload. For example, Ahlstrom et al. studied the correlation between eye movements and the ATCO workload. They investigated the relationship between ATCO saccades, blink frequency, pupil diameter, and air traffic flow [

10]. The results showed that the use of DSTs in supervision work can reduce the ATCO workload. Tokuda studied the relationship between saccadic intrusion and workload [

11]. It is found that saccadic intrusion was closely connected to the workload. The correlation coefficient between the workload and saccadic intrusion was up to 0.84. In another study, Stasi set up three tasks with different levels of difficulty in a simplified control simulation experiment to study the relationship between workload and saccade [

12]. The results showed that the increase in workload led to an increase in response time and decrease in peak saccade speed. Muller et al. found that the value of pupil restlessness gradually increased when the workload increased. However, the value of pupil restlessness cannot be used as a workload indicator when workload reaches a certain threshold [

13]. Imants et al. studied the relationship between eye movement indicators and task performance [

14]. They found that the saccade path, saccade time, and gaze duration of different ATCOs were significantly different when performing surveillance, planning, and control tasks.

Few studies have investigated ATCOs’ eye movements in conflict detection tasks [15,16]. Kang et al. investigated the visual search strategies and conflict detection strategies used by air traffic control experts [15]. Based on the collected data, the saccade strategies were classified into five categories, while the strategies for managing air traffic were classified into four groups. Marchitto et al. studied the impact of scene complexity on ATCOs’ workload in conflict detection tasks [17]. The results suggested that ATCOs tended to make more gazes and more saccades in the conflict scenes than in the conflict-free scene.

Another line of research has sought to understand the relationship between eye movements and human performance. For instance, Mertens et al. collected ATCOs’ eye-movement data, including gaze, saccade, and blink, to investigate how effectively display cues can reduce human errors [18]. They found that the ATCOs’ attention was mainly concentrated on areas with dense traffic, especially on aircraft that had just entered the sector. To improve ATCOs’ performance, they suggested that the aircraft that have most recently entered the sector be marked with color, flashing, or other prompts to attract ATCOs’ visual attention. Meeuwen et al. studied the visual search strategy and task performance of ATCOs at three levels of expertise: novice, intermediate, and expert [19]. Performance results showed that experts used more efficient scan paths and less mental effort to retrieve relevant information. Wang et al. studied the effect of working experience on ATCOs’ eye movement behaviors. The results showed that working experience had a notable effect on eye-movement patterns: both fixations and saccades differed between qualified ATCOs and novices [20].

The extensive literature on eye movements in the air traffic management domain demonstrates that ATCOs’ visual search behaviors are closely related to their mental workload, conflict detection strategies, and task performance. Notable differences in eye movement indicators can be found among ATCOs with different levels of expertise or working experience. However, few works in the literature focus on the effect of the levels of automation on ATCOs’ eye movements.

1.2.2. Classification of the Levels of Automation

The level of automation (LOA) generally refers to the degree to which a task is automated. The definition of LOA specifies the roles and responsibilities of human and machine in a complex system. Sheridan et al. [21] proposed standards for classifying the levels of system automation. They divided system automation into ten levels, ranging from 1 to 10, and described the main functions of the automation at each level. A higher level indicates higher automation with less manual intervention. Fitts et al. proposed the concept of the automation phase and divided it into four sub-phases: information filtering, information integration, decision making, and implementation [22]. Parasuraman et al. developed a model for selecting appropriate types and levels of automation for a system [23]. Four types of functions in a system were proposed, namely information acquisition, information analysis, decision and action selection, and action implementation. Automation can be applied within each type, from a low level to a high level. They suggested that the selection or design of the automation level be based mainly on human performance consequences, automation reliability, and the costs of decision/action consequences.
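The idea that automation is applied separately within each of the four function types can be pictured as a small data structure. The following Python sketch is illustrative only: the class names `FunctionType` and `AutomationProfile` are hypothetical, and reusing the ten-level 1 to 10 scale for every function type is an assumption made here for concreteness, not something the cited model prescribes.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class FunctionType(Enum):
    """The four function types named in the text."""
    INFORMATION_ACQUISITION = "information acquisition"
    INFORMATION_ANALYSIS = "information analysis"
    DECISION_AND_ACTION_SELECTION = "decision and action selection"
    ACTION_IMPLEMENTATION = "action implementation"


# Assumption: each function type is rated on a ten-level scale (1 = lowest).
MIN_LEVEL, MAX_LEVEL = 1, 10


@dataclass(frozen=True)
class AutomationProfile:
    """Hypothetical container: one automation level per function type."""
    levels: Dict[FunctionType, int]

    def __post_init__(self) -> None:
        # Every function type must be assigned exactly one level.
        missing = set(FunctionType) - set(self.levels)
        if missing:
            names = sorted(ft.value for ft in missing)
            raise ValueError(f"missing levels for: {names}")
        # Each level must lie on the assumed 1..10 scale.
        for function_type, level in self.levels.items():
            if not MIN_LEVEL <= level <= MAX_LEVEL:
                raise ValueError(
                    f"{function_type.value}: level {level} is outside "
                    f"{MIN_LEVEL}..{MAX_LEVEL}"
                )

    def most_automated(self) -> FunctionType:
        """Return the function type with the highest automation level."""
        return max(self.levels, key=self.levels.get)
```

A system with highly automated information acquisition but largely manual action implementation would then be described by one profile with a high value for the first function type and a low value for the last, rather than by a single overall level.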

In the field of autonomous driving, the National Highway Traffic Safety Administration (NHTSA) proposed a set of classification standards for self-driving cars with four LOA in 2013. Later, the Society of Automotive Engineers (SAE) proposed a set of self-driving car classification standards building on the NHTSA’s standards. The SAE divides autonomous driving into five levels: driving support, partial automation, conditional automation, high degree of automation, and full automation [5].

1.2.3. Situation Awareness

Situation awareness (SA) is recognized as a critical foundation for humans making correct decisions in a complex and dynamic environment. According to Endsley [24], situation awareness is defined as “the perception of the elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future”. Wickens et al. [9] described situation awareness as follows: the operator obtains relevant situational information from the system and environment, assesses the overall situation based on his/her understanding and knowledge, and then adjusts the methods of information acquisition and prejudges the future situation. Similarly, situation awareness is referred to as a cognitive process in which operators observe, understand, predict, and control the status and future trends of systems and environments [25]. Situation awareness is also considered to be a comprehensive analysis and understanding of the various information in the task, as well as a cognitive process of predicting its trend [26]. Here, we define situation awareness as a complete process in which the operator observes and obtains relevant information from the system and environment, integrates this information into a complete representation, and then plans future information acquisition in order to predict the system’s state.

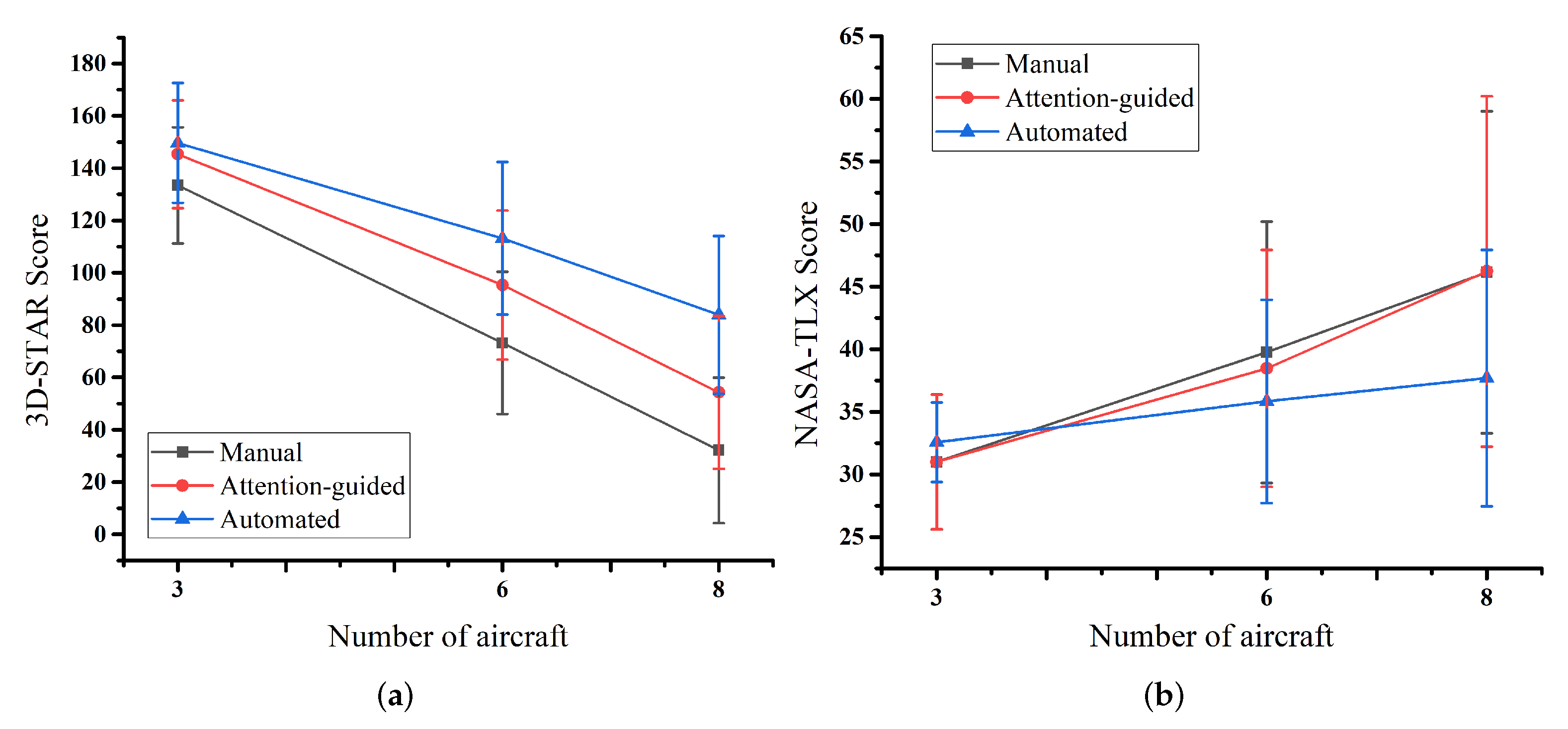

Several pioneering studies on situation awareness in air traffic management can be found in [27,28]. These reports suggested that ATCOs relied on situation awareness to detect and resolve conflicts. Moreover, the level of situation awareness was positively correlated with ATCO performance: ATCOs tended to make more mistakes when they had lower situation awareness [28].

There are four commonly used methods to assess situation awareness: subjective measurement, physiological measurement, performance assessment, and memory probing [29]. Subjective measurement takes place once the subject has completed the tasks: the subject rates his/her own SA. The Situation Awareness Rating Technique (SART), originally developed for the assessment of pilot SA, is widely used in various fields. The participant is required to rate each of the ten dimensions of SA based on performance and task. It is a simple post-trial subjective rating technique. Physiological indicators have been widely proposed to measure human workload, but they are rarely used to assess situation awareness. Vidulich [30] set up a flight experiment and collected electroencephalography (EEG) data from the subjects. The activity of the α-wave decreased and that of the θ-wave increased when the subject was experiencing lower SA. A few studies have shown that situation awareness can be inferred through EEG, event-related potential (ERP), heart rate variability (HRV), and skin conductance level (SCL) [31,32]. A recent review of physiological measures of SA summarizes the findings of studies that investigated the correlations between physiological measures and situation awareness [33]. Eye movement measures and EEG were commonly reported to correlate with SA, whereas other measures, such as cardiovascular indicators, yielded mixed results.
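To make the SART rating described above concrete, the sketch below combines ten dimension ratings into a single score. It rests on assumptions that do not come from this paper: the grouping of the ten dimensions into demand (D), supply (S), and understanding (U), the 1 to 7 rating scale, and the combination rule SA = U - (D - S) follow the formulation of SART as it is commonly described, and the dictionary keys are hypothetical labels chosen for this example.

```python
# Assumed grouping of the ten SART dimensions into three domains.
# The key names are illustrative labels, not an official vocabulary.
SART_DOMAINS = {
    "demand": ("instability", "variability", "complexity"),
    "supply": (
        "arousal",
        "spare_mental_capacity",
        "concentration",
        "division_of_attention",
    ),
    "understanding": (
        "information_quantity",
        "information_quality",
        "familiarity",
    ),
}

# Assumed rating scale: each dimension is rated from 1 (low) to 7 (high).
RATING_MIN, RATING_MAX = 1, 7


def sart_score(ratings):
    """Combine ten dimension ratings into one score, SA = U - (D - S)."""
    expected = {dim for dims in SART_DOMAINS.values() for dim in dims}
    if set(ratings) != expected:
        raise ValueError("ratings must cover exactly the ten SART dimensions")
    for dimension, value in ratings.items():
        if not RATING_MIN <= value <= RATING_MAX:
            raise ValueError(f"{dimension}: rating {value} is out of range")

    # Sum the ratings within each domain.
    totals = {
        domain: sum(ratings[dim] for dim in dims)
        for domain, dims in SART_DOMAINS.items()
    }
    # Higher understanding and supply raise the score; higher demand lowers it.
    return totals["understanding"] - (totals["demand"] - totals["supply"])
```

Under these assumptions, a participant who rates every dimension at the scale midpoint of 4 obtains D = 12, S = 16, and U = 12, which gives a score of 12 - (12 - 16) = 16.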

The performance assessment method assesses situation awareness based on the subject’s performance during the experiment. Its advantages are that it is low cost, objective, less influenced by exogenous factors, and requires no additional space, while its disadvantage is that it may be influenced by the stress, workload, and arousal of the subjects [32]. In the memory-probing method, the subject is asked to report what he/she remembers of the experiment, and the correctness and completeness of the report are used to assess situation awareness.