The Effect of Real-World Interference on CNN Feature Extraction and Machine Learning Classification of Unmanned Aerial Systems

Abstract

1. Introduction

2. Materials and Methods

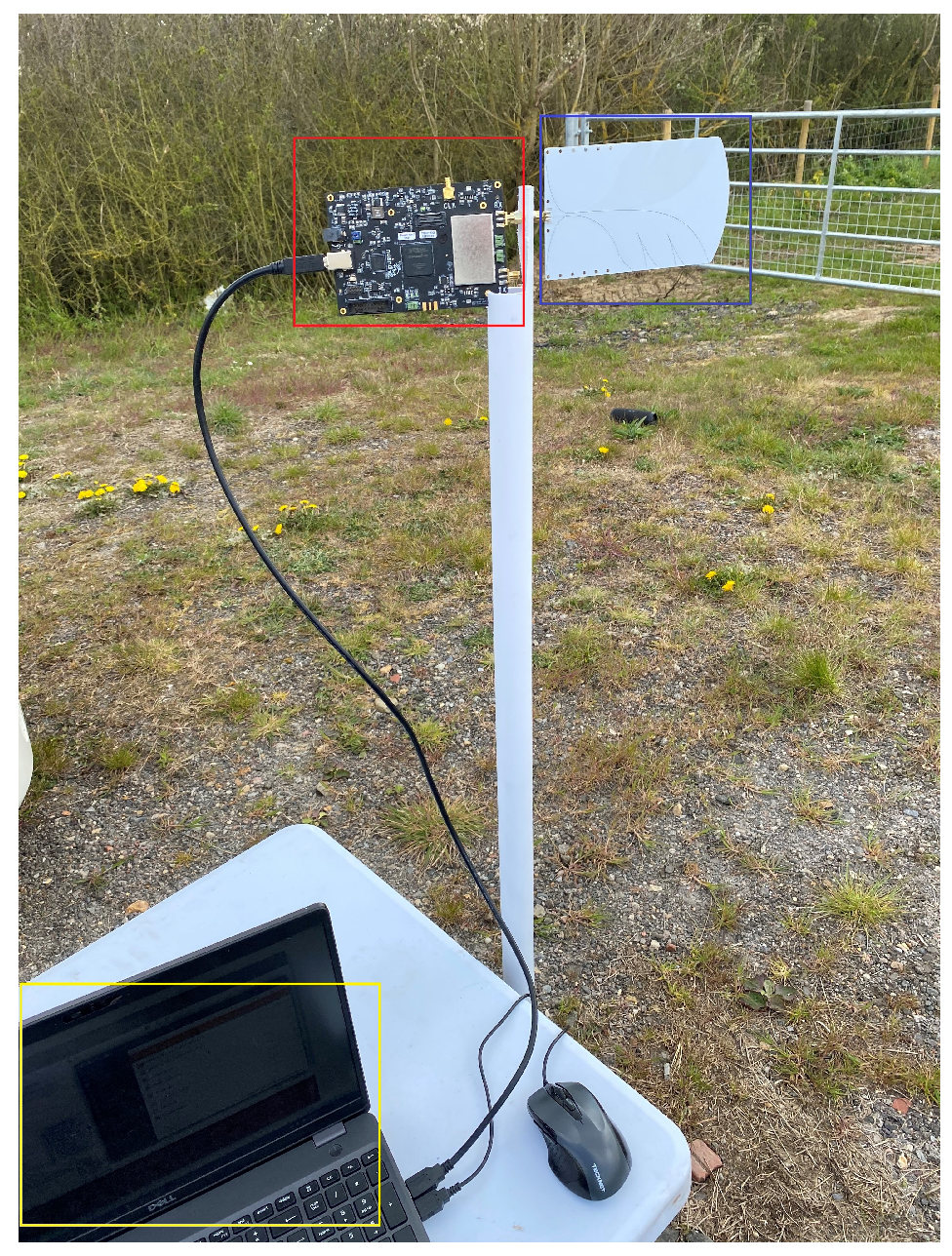

2.1. Experimental Setup

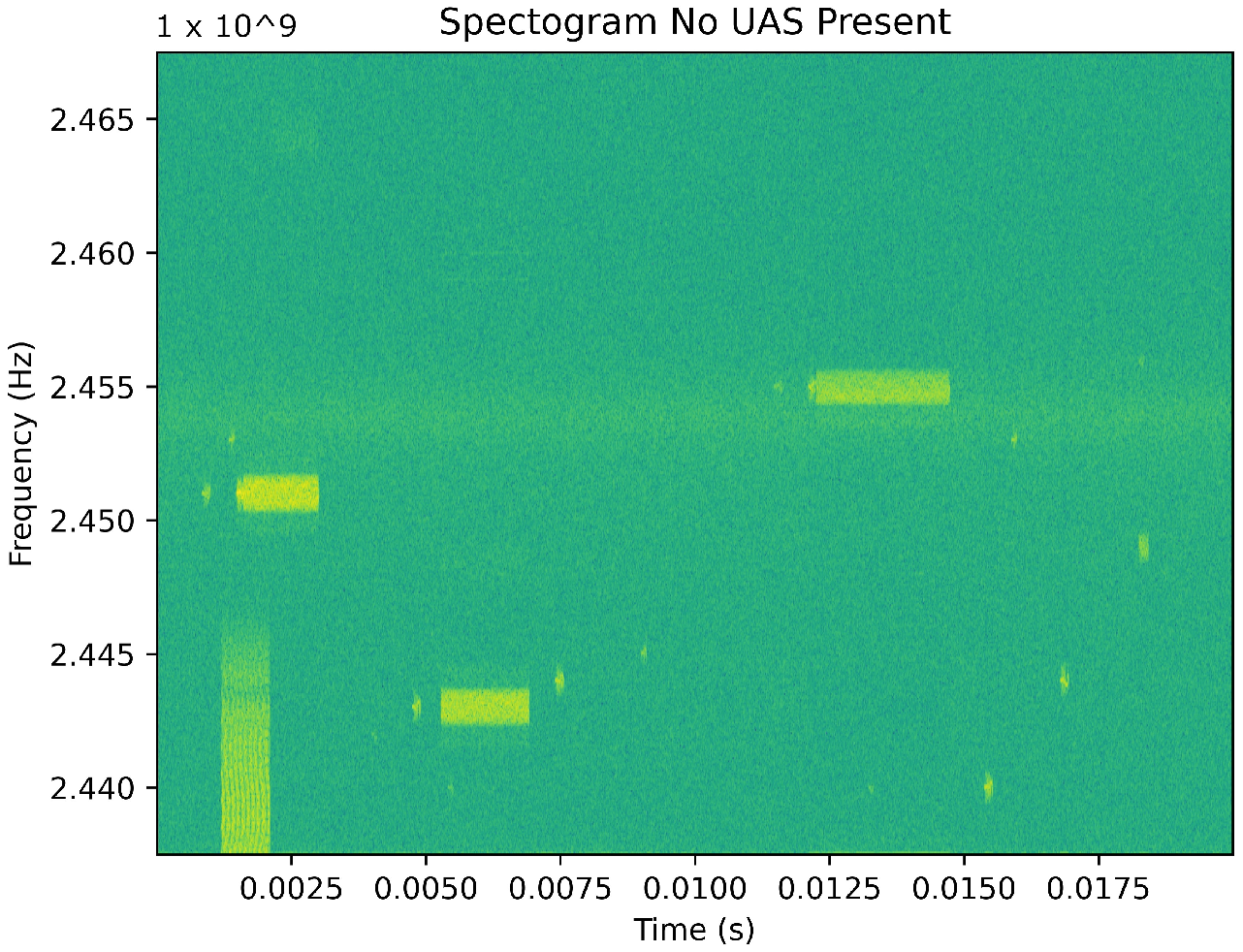

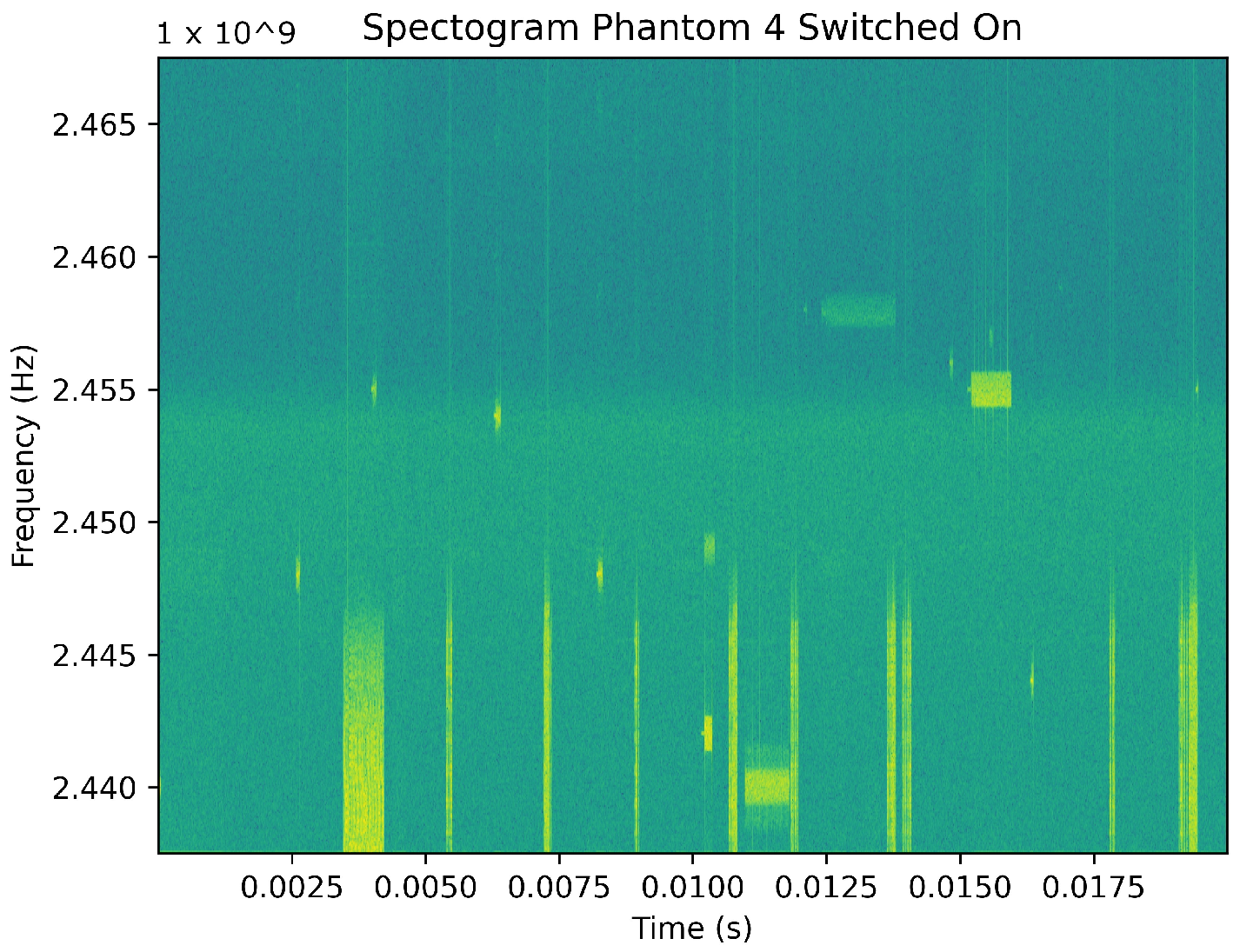

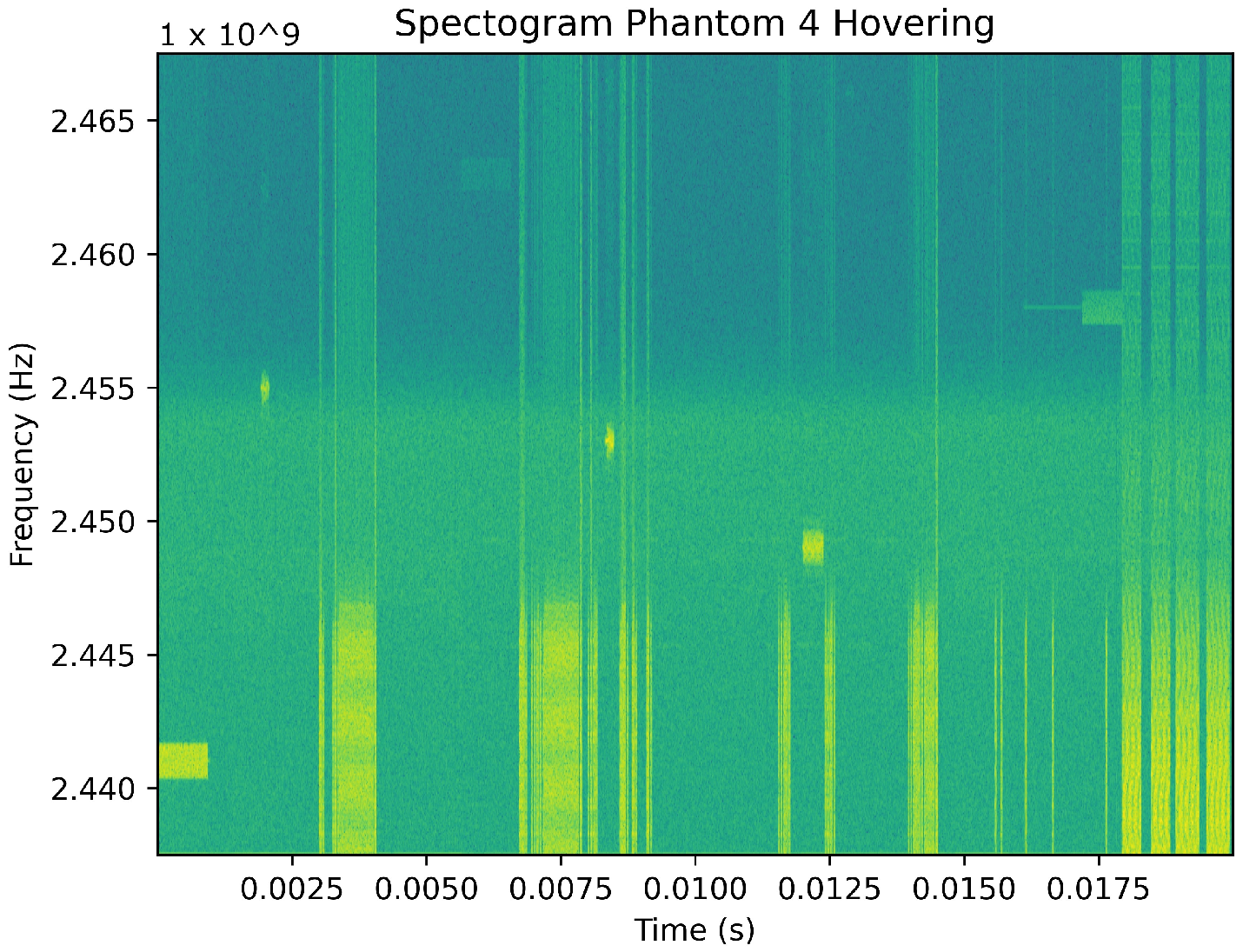

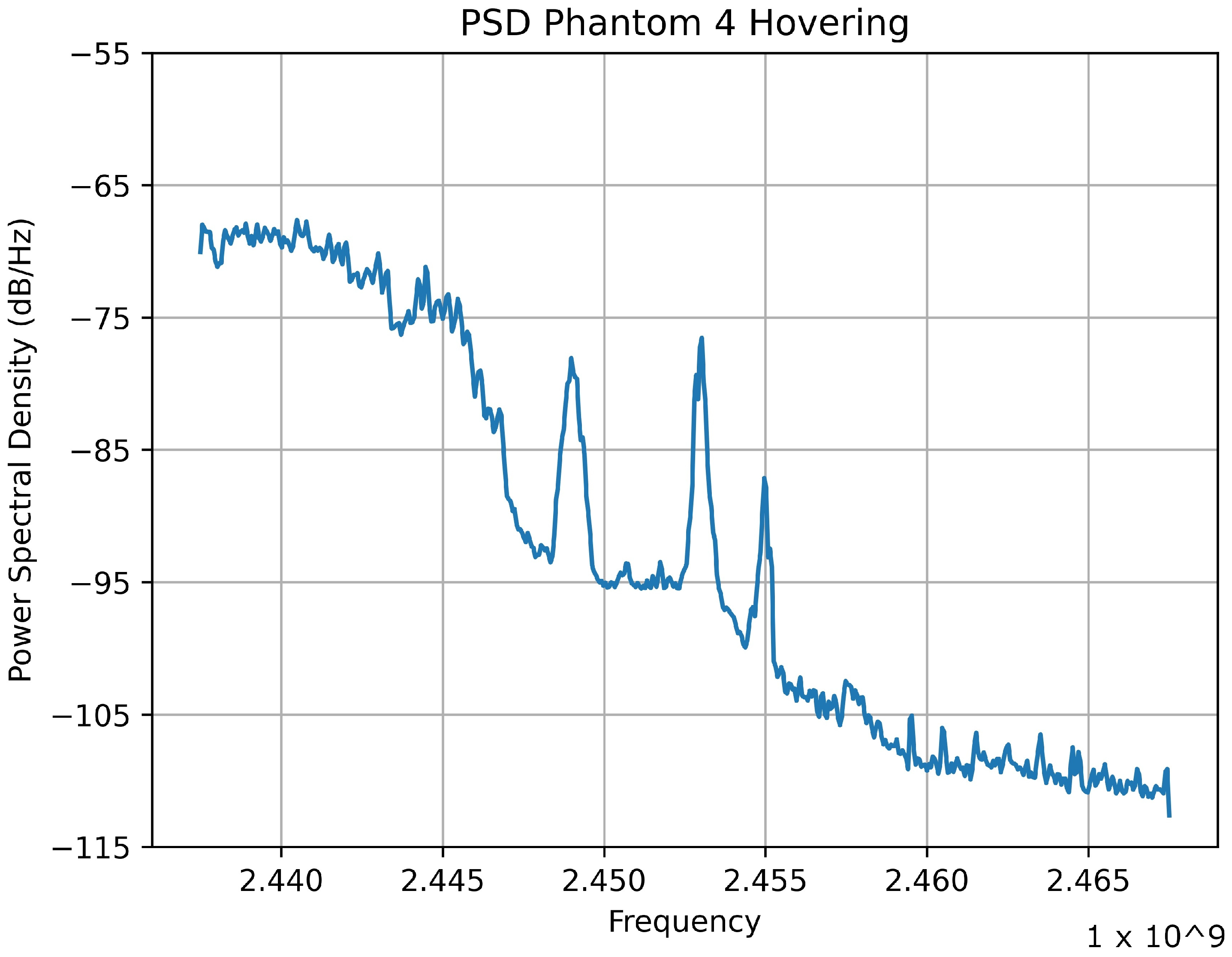

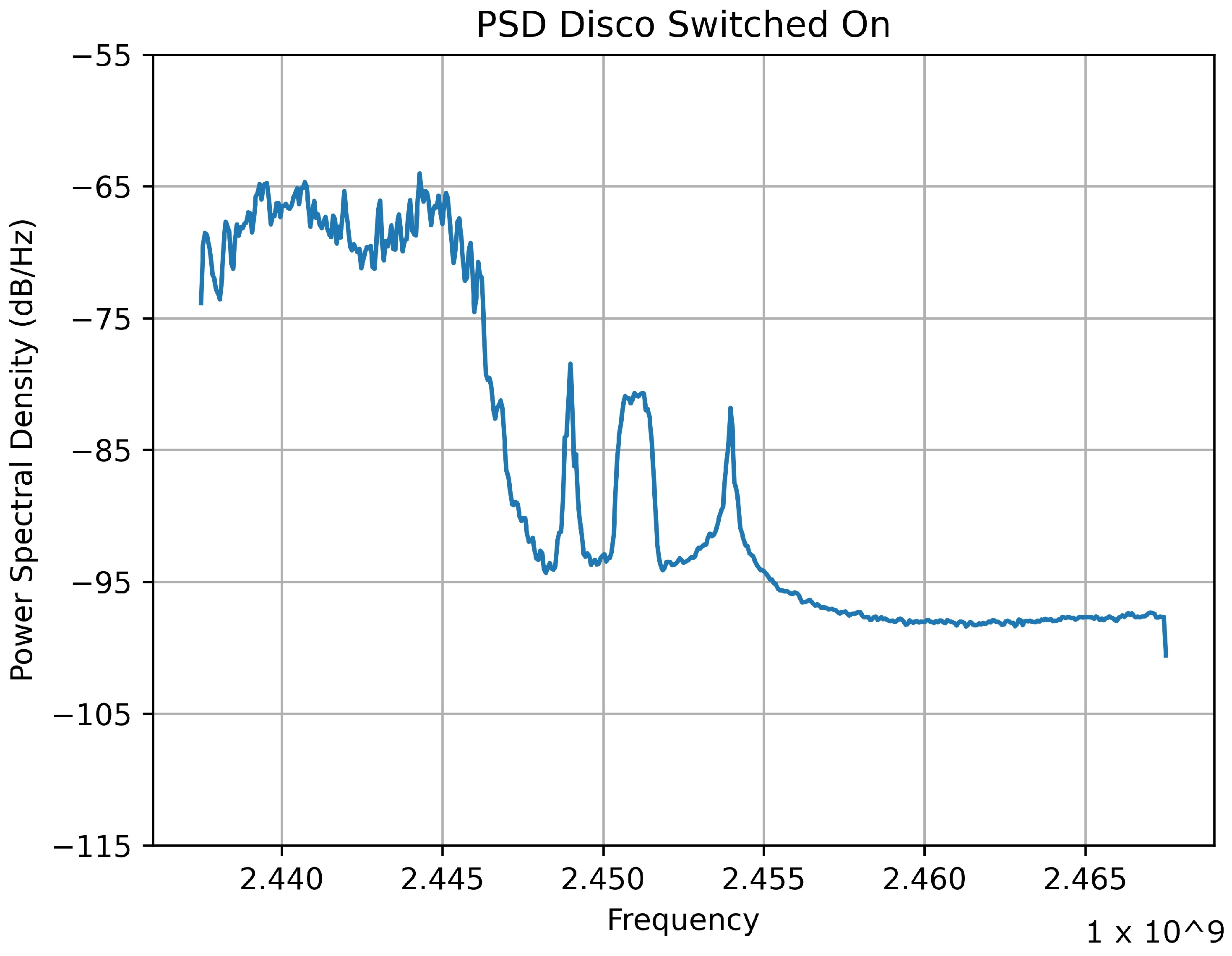

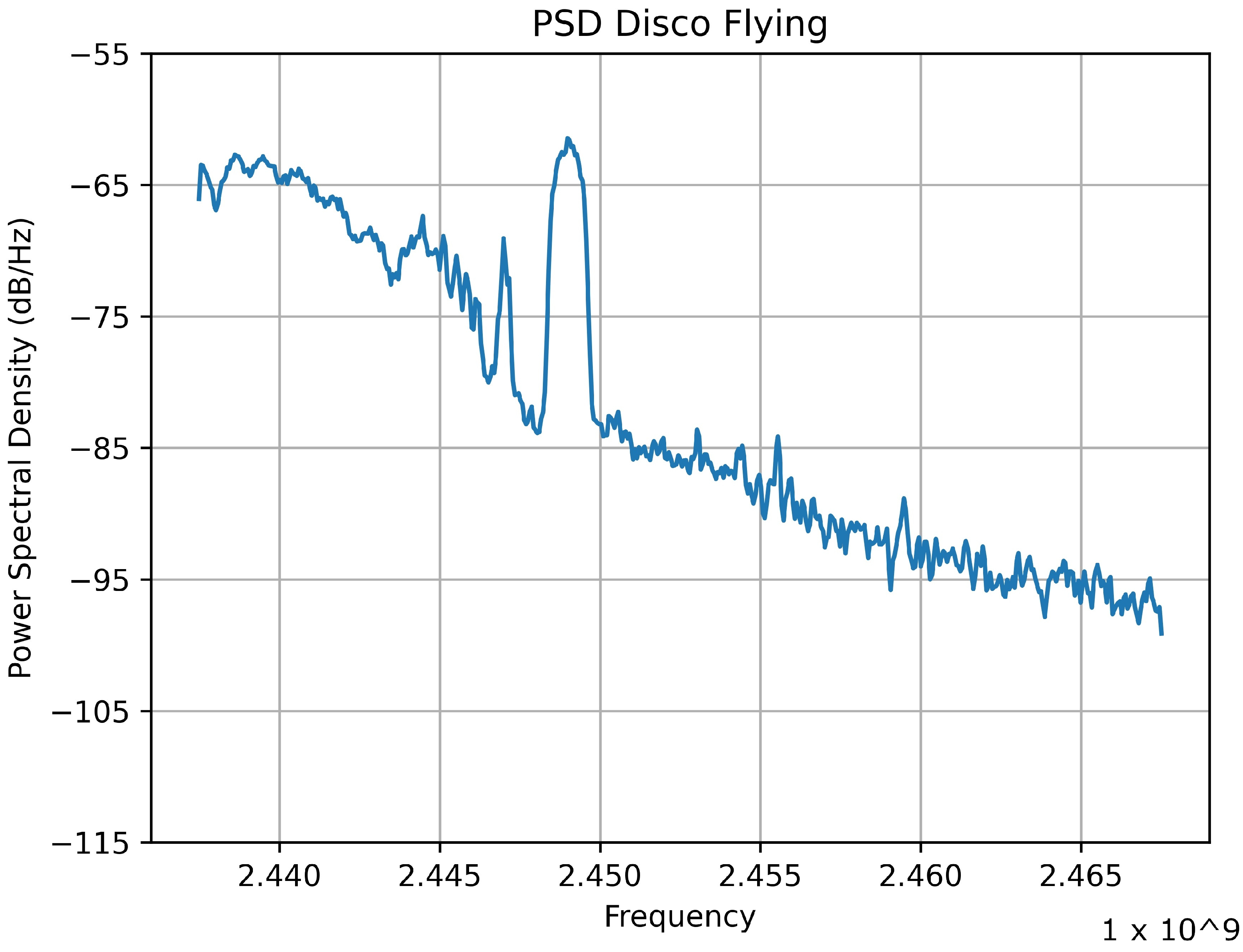

2.2. Graphical Signal Representation

2.3. Classification

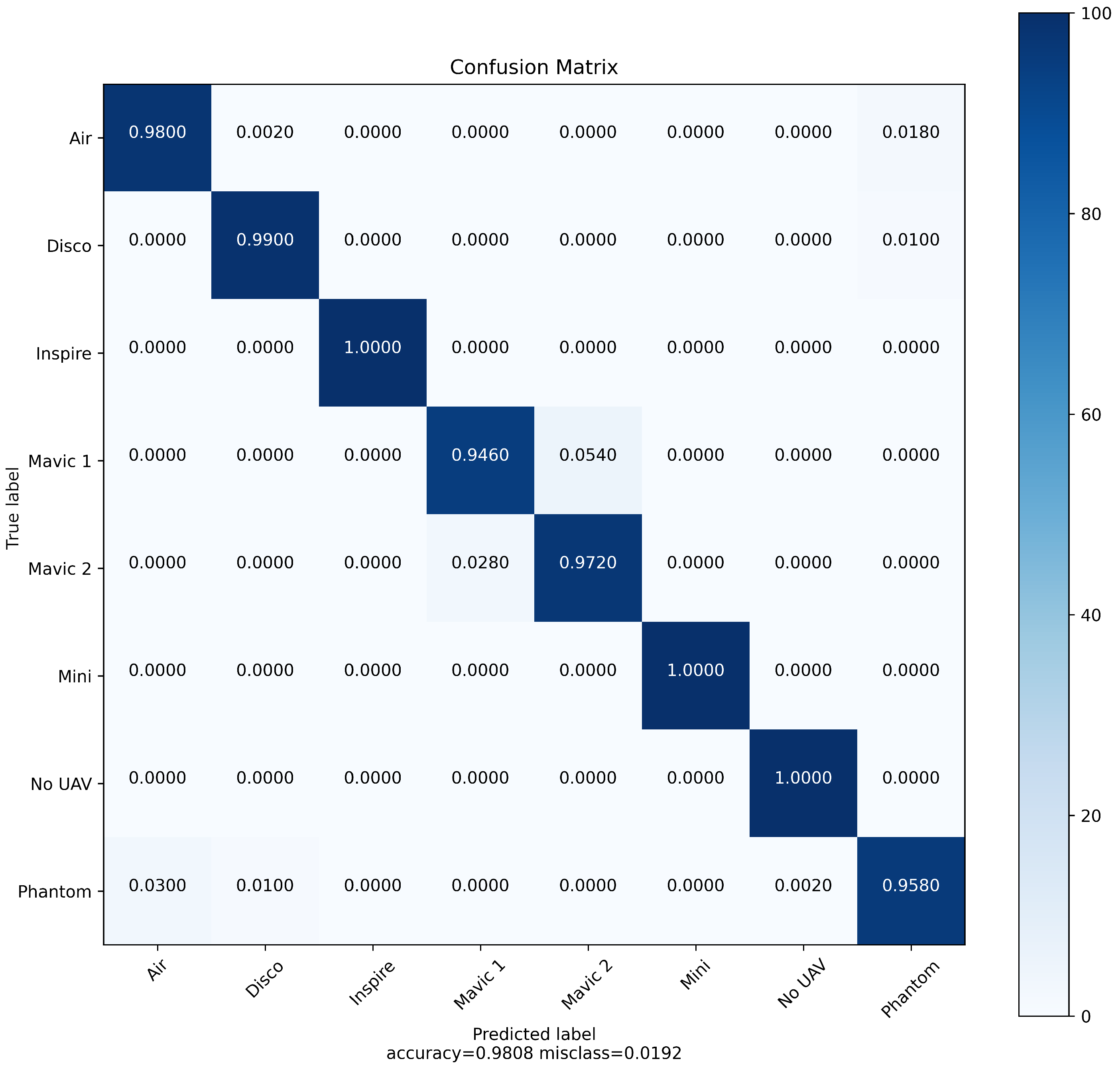

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Tagabe, P.M. Economy-wide impact of drones. Infrastruct. Mag. 2021. Available online: https://infrastructuremagazine.com.au/2021/02/10/economy-wide-impact-of-drones/ (accessed on 23 June 2021).

- Partridge, J. Royal Mail to deliver to Scilly Isles by drone in first UK trial of its kind. The Guardian, 2021. Available online: https://www.theguardian.com/business/2021/may/10/royal-mail-to-deliver-to-scilly-isles-by-drone-in-first-uk-trial-of-its-kind (accessed on 23 June 2021).

- McKenzie, K. US Army General: Small Drones Biggest Threat Since IEDs. The Defense Post, 2021. Available online: https://www.thedefensepost.com/2021/02/10/small-drones-threat-us-general/ (accessed on 23 June 2021).

- Nassi, B.; Shabtai, A.; Masuoka, R.; Elovici, Y. SoK - Security and privacy in the age of drones: Threats, challenges, solution mechanisms, and scientific gaps. arXiv 2019, arXiv:1903.05155.

- Altawy, R.; Youssef, A.M. Security, privacy, and safety aspects of civilian drones: A survey. ACM Trans. Cyber Phys. Syst. 2017, 1.

- Lykou, G.; Moustakas, D.; Gritzalis, D. Defending airports from UAS: A survey on cyber-attacks and counter-drone sensing technologies. Sensors 2020, 20, 3537.

- Department for Transport. Small Remotely Piloted Aircraft Systems (Drones) Mid-Air Collision Study; Department of Transport: London, UK, 2017; p. 18. Available online: https://www.gov.uk/government/publications/drones-and-manned-aircraft-collisions-test-results (accessed on 23 June 2021).

- A Joint Civil Aviation Authority/Military Aviation Authority Service: Report Number 35. Analysis of Airprox in UK Airspace; UK AirProx Board: Middlesex, UK, 2019.

- BBC News. ‘Kill switch’ Failed as Drone Hit Controlled Space near Gatwick. BBC News, 2021. Available online: https://www.bbc.co.uk/news/uk-england-sussex-56112694 (accessed on 23 June 2021).

- NZ Herald. Drone Spotted 30 Metres from Plane at Auckland Airport; NZ Herald, 2021; Available online: https://www.nzherald.co.nz/nz/drone-spotted-30-metres-from-plane-at-auckland-airport/JLFJA4D6OLRHIAHHRZO3W3LVXY/ (accessed on 23 June 2021).

- US News. Flights Halted at North Carolina Airport After Drone Sighted. US News, 2021. Available online: https://www.usnews.com/news/best-states/north-carolina/articles/2021-03-10/flights-halted-at-north-carolina-airport-after-drone-sighted (accessed on 23 June 2021).

- Snuggs, T. Madrid Airport Forced to Close for Two Hours after Drone Sightings. Sky News, 2020. Available online: https://news.sky.com/story/madrid-airport-forced-to-close-for-two-hours-after-drone-sightings-11925578 (accessed on 23 June 2021).

- ITV News. Flights grounded for two hours at Frankfurt airport after drone sighting. ITV News, 2020. Available online: https://www.itv.com/news/2020-03-02/flights-grounded-for-two-hours-at-frankfurt-airport-after-drone-sighting (accessed on 23 June 2021).

- Corr, S. Apache Attack Helicopters Assist Essex Police in Hunt for Stansted Airport Drone; Bishops Stortford Independent, 2020; Available online: https://www.bishopsstortfordindependent.co.uk/news/army-helicopters-help-police-hunt-drone-above-stansted-airport-9142882/ (accessed on 23 June 2021).

- BBC News. Changi Airport: Drones disrupt flights in Singapore. BBC News, 2019. Available online: https://www.bbc.co.uk/news/business-48754432 (accessed on 23 June 2021).

- International Airport Review. Drone Sighting at Dubai International Airport Temporarily Suspends Flights. 2019. Available online: https://www.internationalairportreview.com/news/81308/drone-dubai-suspend-flights/ (accessed on 23 June 2021).

- Mee, E. Flights resume at Dublin Airport after Drone Sighting. Sky News, 2019. Available online: https://news.sky.com/story/dublin-airport-suspends-flights-after-drone-sighting-11643644 (accessed on 23 June 2021).

- Lomas, N. Drone sighting at Germany’s busiest airport grounds flights for about an hour. TechCrunch, 9 May 2019.

- DW News. Frankfurt Airport halts flights after drone sighted. DW News, 2019. Available online: https://www.dw.com/en/frankfurt-airport-halts-flights-after-drone-sighted/a-48030789 (accessed on 23 June 2021).

- BBC News. Heathrow airport drone investigated by police and military. BBC News, 12 January 2019.

- Morrison, S. Heathrow drone protest fails as activists marred by technical breakdowns before police make two arrests. London Evening Standard, 2019. Available online: https://www.standard.co.uk/news/transport/heathrow-drone-protest-four-men-arrested-at-airport-as-climate-activists-prepared-to-disrupt-flights-with-drones-a4235641.html (accessed on 23 June 2021).

- Japan Today. Drone disrupts operations at Kansai airport. Japan Today, 2019. Available online: https://japantoday.com/category/national/Drone-disrupts-operations-at-Kansai-airport (accessed on 23 June 2021).

- Lee, D. Drone sighting disrupts major US airport. BBC News, 23 January 2019.

- Shackle, S. The mystery of the Gatwick drone. The Guardian, 2020. Available online: https://www.theguardian.com/uk-news/2020/dec/01/the-mystery-of-the-gatwick-drone (accessed on 23 June 2021).

- ALADDIN Project. ALADDIN: Advanced hoListic Adverse Drone Detection, Identification and Neutralization. ALADDIN Project, 2020. Available online: https://aladdin2020.eu/ (accessed on 23 June 2021).

- De Cubber, G.; Shalom, R.; Coluccia, A.; Borcan, O.; Chamrád, R.; Radulescu, T.; Izquierdo, E.; Gagov, Z. The SafeShore system for the detection of threat agents in a maritime border environment. In Proceedings of the IARP Workshop on Risky Interventions and Environmental Surveillance, Les Bons Villers, Belgium, 18–19 May 2017.

- Mezei, J.; Fiaska, V.; Molnar, A. Drone sound detection. In Proceedings of the CINTI 2015: 16th IEEE International Symposium on Computational Intelligence and Informatics, Budapest, Hungary, 19–21 November 2016; pp. 333–338.

- Anwar, M.Z.; Kaleem, Z.; Jamalipour, A. Machine Learning Inspired Sound-Based Amateur Drone Detection for Public Safety Applications. IEEE Trans. Veh. Technol. 2019, 68, 2526–2534.

- Shi, Z.; Chang, X.; Yang, C.; Wu, Z.; Wu, J. An Acoustic-Based Surveillance System for Amateur Drones Detection and Localization. IEEE Trans. Veh. Technol. 2020, 69, 2731–2739.

- Yaacoub, J.P.; Noura, H.; Salman, O.; Chehab, A. Security analysis of drones systems: Attacks, limitations, and recommendations. Internet Things 2020, 11, 100218.

- Thai, V.P.; Zhong, W.; Pham, T.; Alam, S.; Duong, V. Detection, Tracking and Classification of Aircraft and Drones in Digital Towers Using Machine Learning on Motion Patterns. In Proceedings of the 2019 Integrated Communications, Navigation and Surveillance Conference (ICNS), Herndon, VA, USA, 9–11 April 2019; pp. 1–8.

- Schumann, A.; Sommer, L.; Klatte, J.; Schuchert, T.; Beyerer, J. Deep cross-domain flying object classification for robust UAV detection. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance, Lecce, Italy, 29 August–1 September 2017.

- AVSS. Drone-vs-Bird Detection Challenge; WOSDETC, 2019; Available online: https://wosdetc2019.wordpress.com/challenge/ (accessed on 23 June 2021).

- Coluccia, A.; Fascista, A.; Schumann, A.; Sommer, L.; Dimou, A.; Zarpalas, D.; Méndez, M.; de la Iglesia, D.; González, I.; Mercier, J.-P.; et al. Drone vs. Bird detection: Deep learning algorithms and results from a grand challenge. Sensors 2021, 21, 2824.

- Mendis, G.J.; Randeny, T.; Wei, J.; Madanayake, A. Deep learning based doppler radar for micro UAS detection and classification. In Proceedings of the IEEE Military Communications Conference MILCOM, Cleveland, OH, USA, 27–28 June 2016; pp. 924–929.

- Zulkifli, S.; Balleri, A. Design and Development of K-Band FMCW Radar for Nano-Drone Detection. In Proceedings of the IEEE National Radar Conference, Florence, Italy, 21–25 September 2020.

- Semkin, V.; Yin, M.; Hu, Y.; Mezzavilla, M.; Rangan, S. Drone detection and classification based on radar cross section signatures. In Proceedings of the 2020 International Symposium on Antennas and Propagation, Montreal, QC, Canada, 4–11 July 2021; pp. 223–224.

- Andraši, P.; Radišić, T.; Muštra, M.; Ivošević, J. Night-time Detection of UAVs using Thermal Infrared Camera. Transp. Res. Procedia 2017, 28.

- Coluccia, A.; Parisi, G.; Fascista, A. Detection and Classification of Multirotor Drones in Radar Sensor Networks: A Review. Sensors 2020, 20, 4172.

- Passafiume, M.; Rojhani, N.; Collodi, G.; Cidronali, A. Modeling Small UAV Micro-Doppler Signature Using Millimeter-Wave FMCW Radar. Electronics 2020, 10, 747.

- Ezuma, M.; Erden, F.; Anjinappa, C.K. Detection and Classification of UAVs Using RF Fingerprints in the Presence of Wi-Fi and Bluetooth Interference. IEEE Open J. Commun. Soc. 2020, 1, 60–76.

- Zhao, C.; Chen, C.; Cai, Z.; Shi, M.; Du, X.; Guizani, M. Classification of Small UAVs Based on Auxiliary Classifier Wasserstein GANs. In Proceedings of the 2018 IEEE Global Communications Conference, GLOBECOM, Abu Dhabi, United Arab Emirates, 9–13 December 2018.

- Al-Sa’d, M.F.; Al-Ali, A.; Mohamed, A.; Khattab, T.; Erbad, A. RF-based drone detection and identification using deep learning approaches: An initiative towards a large open source drone database. Future Gener. Comput. Syst. 2019, 100, 86–97.

- Swinney, C.J.; Woods, J.C. Unmanned Aerial Vehicle Flight Mode Classification using Convolutional Neural Network and Transfer Learning. In Proceedings of the 2020 16th International Computer Engineering Conference (ICENCO), Cairo, Egypt, 29–30 December 2020; pp. 83–87.

- Swinney, C.J.; Woods, J.C. Unmanned Aerial Vehicle Operating Mode Classification Using Deep Residual Learning Feature Extraction. Aerospace 2021, 8, 79.

- Swinney, C.J.; Woods, J.C. DroneDetect Dataset: A Radio Frequency dataset of Unmanned Aerial System (UAS) Signals for Machine Learning Detection and Classification. IEEE Dataport 2021.

- GNU Radio. About GNU Radio. GNU Radio. Available online: https://www.gnuradio.org/about/ (accessed on 23 June 2021).

- Nuand. bladeRF 2.0. Available online: https://www.nuand.com/bladerf-2-0-micro/ (accessed on 26 April 2021).

- Nassar, I.T.; Weller, T.M. A Novel Method for Improving Antipodal Vivaldi Antenna Performance. IEEE Trans. Antennas Propag. 2015, 63, 3321–3324.

- De Oliveira, A.M.; Perotoni, M.B.; Kofuji, S.T.; Justo, J.F. A palm tree Antipodal Vivaldi Antenna with exponential slot edge for improved radiation pattern. IEEE Antennas Wirel. Propag. Lett. 2015, 14, 1334–1337.

- Tindie. Ultra-WideBand Vivaldi Antenna 800 MHz to 6 GHz+ from Hex and Flex. Tindie. Available online: https://www.tindie.com/products/hexandflex/ultra-wideband-vivaldi-antenna-800mhz-to-6ghz/ (accessed on 23 June 2021).

- Mavic Mini—Specifications—DJI. DJI. Available online: https://www.dji.com/uk/mavic-mini/specs (accessed on 23 June 2021).

- DJI Lightbridge 2—Product Information—DJI. DJI. Available online: https://www.dji.com/uk/lightbridge-2/info (accessed on 23 June 2021).

- DJI. Mavic Pro—Product Information. DJI. Available online: https://www.dji.com/uk/mavic/info (accessed on 23 June 2021).

- DJI Store Sofia. What Is DJI OcuSync And How Does it Work? DJI Store Sofia, 2019; Available online: https://store.dji.bg/en/blog/what-is-dji-ocusync-and-how-does-it-work (accessed on 23 June 2021).

- DroneLabs CA. Mavic Air 2s. DroneLabs CA. Available online: https://www.dronelabs.ca/products/mavic-air-2s-1 (accessed on 23 June 2021).

- Brown, J. Parrot Disco: Features, Reviews, Specifications, Competitors; My Drone Lab, 2021; Available online: https://www.mydronelab.com/reviews/parrot-disco.html (accessed on 23 June 2021).

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 6, 84–90.

- Kaggle. VGGNet-16 Architecture: A Complete Guide. Kaggle 2020. Available online: https://www.kaggle.com/blurredmachine/vggnet-16-architecture-a-complete-guide (accessed on 24 June 2021).

- Hale, J. Don’t Sweat the Solver Stuff. Tips for Better Logistic Regression. Towards Data Sci. 2019. Available online: https://towardsdatascience.com/dont-sweat-the-solver-stuff-aea7cddc3451 (accessed on 23 June 2021).

- Pandey, A. The Math Behind KNN. Artif. Intell. Plain Engl. 2021. Available online: https://ai.plainenglish.io/the-math-behind-knn-7883aa8e314c (accessed on 23 June 2021).

Short Biography of Authors

Carolyn J. Swinney received a B.Eng. (hons.) degree (first class) in 2007 and an M.Sc. (dist.) in Electronics Engineering from the University of Essex, Colchester, UK in 2013. She graduated as a Communications and Electronics Engineering Officer in the Royal Air Force in 2014. She currently works within the Air and Space Warfare Centre and is working towards a Ph.D. degree in Electronic Systems Engineering at the University of Essex, Colchester, UK. Her main research interests are signal processing, unmanned aerial vehicles, neural networks, machine learning and cyber security.

John C. Woods was born in a small fishing village near Colchester, UK, in 1964. He received the B.Eng. (hons.) degree (first class) in 1996 and the Ph.D. degree in 1999 from the University of Essex, Colchester, UK. He has been a Lecturer in the Department of Computer Science and Electronic Systems Engineering, University of Essex, since 1999. Although his field of expertise is image processing, he has a wide range of interests including telecommunications, autonomous vehicles and robotics.

| Date | Location | Observation | Disruption |
|---|---|---|---|
| 2021 | Auckland Airport, New Zealand [10] | Pilot sighting of drone 30 m from helicopter and 5 m above | Flights grounded 15 min |
| 2021 | Piedmont Triad International Airport, North Carolina, USA [11] | Drone sightings over airport | Flights suspended 2 h, 1 flight diverted |
| 2020 | Adolfo Suárez Madrid-Barajas Airport, Spain [12] | 2 pilot sightings of drones | Flights grounded 1 h, 26 flights diverted |
| 2020 | Frankfurt Airport, Germany [13] | Pilot sighting of drone | Flights grounded 2 h, flights diverted and cancelled |
| 2020 | Stansted Airport, UK [14] | Military helicopter confirmed sighting of drone | No flight disruption, one police arrest made |
| 2019 | Changi Airport, Singapore [15] | Drone sightings in vicinity of airport | 37 flights delayed, 1 flight diverted |
| 2019 | Dubai International Airport, UAE [16] | Drone sightings in vicinity of airport | 30 min suspension of flights |
| 2019 | Dublin Airport, Ireland [17] | Pilot sighting of drone | Flights grounded 30 min, 3 flights diverted |
| 2019 | Frankfurt Airport, Germany [18] | Sighting of drone | Flights grounded 1 h, 100 take-offs and landings cancelled |
| 2019 | Frankfurt Airport, Germany [19] | Sighting of drone | Flights grounded 30 min |
| 2019 | Heathrow Airport, UK [20] | Undisclosed number of sightings | Flights grounded for 1 h |
| 2019 | Heathrow Airport, UK [21] | Heathrow Pause group planned drone flights to disrupt flights | 1 attempted flight, which was unsuccessful |
| 2019 | Kansai International Airport, Japan [22] | Drone sighted hovering near terminal and flying over runway; 1 week prior, aircrew sighting of drone in vicinity of incoming aircraft | 1 h suspension of flights; 40 min suspension of flights |
| 2019 | Newark Airport, USA [23] | 2 pilot sightings en route into Newark, above Teterboro Airport, drone coming within 9 m of aircraft | Flights disrupted for short duration |
| 2018 | Gatwick Airport, UK [24] | 170 sightings, 115 sightings deemed credible | Airport closed for 33 h, 1000 flights cancelled, 140,000 passengers affected at a cost of GBP 50 million. 18-month police operation costing GBP 800,000 across 5 different forces. |

| Classification Type | Class | Description |
|---|---|---|
| Detection | 1 | No UAS detected |
| Detection | 2 | UAS detected |
| Type | 1 | No UAS detected |
| Type | 2 | Mavic 2 Air S detected |
| Type | 3 | Parrot Disco detected |
| Type | 4 | Inspire 2 Pro detected |
| Type | 5 | Mavic Pro detected |
| Type | 6 | Mavic Pro 2 detected |
| Type | 7 | Mavic Mini detected |
| Type | 8 | Phantom 4 detected |
| Flight Mode | 1 | No UAS detected |
| Flight Mode | 2 | Air Mode 1: Switched on |
| Flight Mode | 3 | Air Mode 2: Hovering |
| Flight Mode | 4 | Air Mode 3: Flying |
| Flight Mode | 5 | Disco Mode 1: Switched on |
| Flight Mode | 6 | Disco Mode 3: Flying |
| Flight Mode | 7 | Inspire Mode 1: Switched on |
| Flight Mode | 8 | Inspire Mode 2: Hovering |
| Flight Mode | 9 | Inspire Mode 3: Flying |
| Flight Mode | 10 | Mavic 1 Mode 1: Switched on |
| Flight Mode | 11 | Mavic 1 Mode 2: Hovering |
| Flight Mode | 12 | Mavic 1 Mode 3: Flying |
| Flight Mode | 13 | Mavic Pro 2 Mode 1: Switched on |
| Flight Mode | 14 | Mavic Pro 2 Mode 2: Hovering |
| Flight Mode | 15 | Mavic Pro 2 Mode 3: Flying |
| Flight Mode | 16 | Mini Mode 1: Switched on |
| Flight Mode | 17 | Mini Mode 2: Hovering |
| Flight Mode | 18 | Mini Mode 3: Flying |
| Flight Mode | 19 | Phantom 4 Mode 1: Switched on |
| Flight Mode | 20 | Phantom 4 Mode 2: Hovering |
| Flight Mode | 21 | Phantom 4 Mode 3: Flying |
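The three classification levels in the table above are nested: every flight-mode class implies a UAS type, and every UAS type implies a detection outcome. A minimal Python sketch of that relationship follows. The helper name `labels_from_flight_mode` and the `TYPE_RANGES` lookup are hypothetical and are not taken from the authors' code; the sketch also assumes that the "Mavic 1" flight modes correspond to the Mavic Pro type class.

```python
from typing import List, Tuple

# (type class, first flight-mode class, last flight-mode class),
# read off the class table. This lookup is illustrative only.
TYPE_RANGES: List[Tuple[int, int, int]] = [
    (2, 2, 4),    # Mavic 2 Air S
    (3, 5, 6),    # Parrot Disco (no hovering mode is listed)
    (4, 7, 9),    # Inspire 2 Pro
    (5, 10, 12),  # Mavic Pro (assumed to be "Mavic 1" in the table)
    (6, 13, 15),  # Mavic Pro 2
    (7, 16, 18),  # Mavic Mini
    (8, 19, 21),  # Phantom 4
]


def labels_from_flight_mode(flight_class: int) -> Tuple[int, int]:
    """Return (detection class, type class) implied by a flight-mode class."""
    if flight_class == 1:
        return 1, 1  # "No UAS detected" at every level
    for type_class, first, last in TYPE_RANGES:
        if first <= flight_class <= last:
            return 2, type_class  # detection class 2 means "UAS detected"
    raise ValueError(f"unknown flight-mode class: {flight_class}")


# Example: flight-mode class 6 (Disco Mode 3, flying)
detection, uas_type = labels_from_flight_mode(6)
```

Deriving the coarser labels from the finest one keeps the three label sets consistent if a single annotated dataset is reused for all three experiments.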

| UAS Type | Transmission System |
|---|---|
| Mavic 2 Air S | OcuSync 3.0 |
| Parrot Disco | Wi-Fi |
| Inspire 2 Pro | Lightbridge 2.0 |
| Mavic Pro | OcuSync 1.0 |
| Mavic Pro 2 | OcuSync 2.0 |
| Mavic Mini | Wi-Fi |
| Phantom 4 | Lightbridge 2.0 |

| Layer Type | Size | Feature Map |
|---|---|---|
| Input Image | 224 × 224 × 3 | 1 |
| 2× Convolutional | 224 × 224 × 64 | 64 |
| Max Pooling | 112 × 112 × 64 | 64 |
| 2× Convolutional | 112 × 112 × 128 | 128 |
| Max Pooling | 56 × 56 × 128 | 128 |
| 3× Convolutional | 56 × 56 × 256 | 256 |
| Max Pooling | 28 × 28 × 256 | 256 |
| 3× Convolutional | 28 × 28 × 512 | 512 |
| Max Pooling | 14 × 14 × 512 | 512 |
| 3× Convolutional | 14 × 14 × 512 | 512 |
| Max Pooling | 7 × 7 × 512 | 512 |
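The sizes in the layer table above follow from two standard VGG-16 properties: the 3 × 3 convolutions are padded so that they preserve height and width, and each 2 × 2 max pooling with stride 2 halves them. The short Python sketch below reproduces the size column under those assumptions. The function name `vgg16_feature_shapes` is hypothetical, and treating the flattened final feature map as the vector passed to the classifier is an assumption, not something stated in the table.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]


def vgg16_feature_shapes(height: int = 224, width: int = 224) -> List[Tuple[str, Shape]]:
    """Trace (height, width, channels) through the five VGG-16 conv blocks."""
    shapes: List[Tuple[str, Shape]] = [("input", (height, width, 3))]
    for channels in (64, 128, 256, 512, 512):
        # Same-padded 3x3 convolutions change only the channel count.
        shapes.append((f"conv block ({channels})", (height, width, channels)))
        # 2x2 max pooling with stride 2 halves height and width.
        height, width = height // 2, width // 2
        shapes.append(("max pool", (height, width, channels)))
    return shapes


shapes = vgg16_feature_shapes()
final_h, final_w, final_c = shapes[-1][1]
# Length of the feature vector if the last feature map is flattened (assumption).
flattened = final_h * final_w * final_c

for name, shape in shapes:
    print(f"{name:>16}: {shape}")
```

For a 224 × 224 × 3 input, the last entry is 7 × 7 × 512, which gives 25,088 values per image when flattened.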

| Classifier | Representation | Metric | Detection | Type | Flight Mode |
|---|---|---|---|---|---|
| LR | PSD | Acc | 100 (+/−0.0) | 98.1 (+/−0.4) | 95.4 (+/−0.3) |
| LR | PSD | F1 | 100 (+/−0.0) | 98.1 (+/−0.4) | 95.4 (+/−0.3) |
| LR | Spectrogram | Acc | 96.7 (+/−1.5) | 90.5 (+/−0.8) | 87.3 (+/−0.4) |
| LR | Spectrogram | F1 | 96.7 (+/−1.5) | 90.5 (+/−0.9) | 87.3 (+/−0.4) |
| kNN | PSD | Acc | 99.6 (+/−0.2) | 93.5 (+/−0.6) | 86.5 (+/−0.5) |
| kNN | PSD | F1 | 99.6 (+/−0.2) | 93.4 (+/−0.7) | 86.3 (+/−0.5) |
| kNN | Spectrogram | Acc | 88.0 (+/−1.3) | 75.1 (+/−1.5) | 64.6 (+/−0.9) |
| kNN | Spectrogram | F1 | 87.9 (+/−1.4) | 75.3 (+/−1.5) | 64.8 (+/−0.8) |
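To make the comparison between the two signal representations easier to read, the following Python sketch computes the difference between PSD and spectrogram accuracy for each classifier and task, using only the mean accuracy values reported in the results table. The dictionary layout and the helper name `psd_minus_spec` are illustrative choices; the reported spreads (+/−) are ignored here.

```python
from typing import Dict, Tuple

TASKS: Tuple[str, ...] = ("detection", "type", "flight mode")

# Mean accuracy values as reported in the results table (Spec = spectrogram).
# Order of values follows TASKS.
ACCURACY: Dict[Tuple[str, str], Tuple[float, float, float]] = {
    ("LR", "PSD"): (100.0, 98.1, 95.4),
    ("LR", "Spec"): (96.7, 90.5, 87.3),
    ("kNN", "PSD"): (99.6, 93.5, 86.5),
    ("kNN", "Spec"): (88.0, 75.1, 64.6),
}


def psd_minus_spec(classifier: str) -> Dict[str, float]:
    """Accuracy gap (PSD minus spectrogram) per task for one classifier."""
    psd = ACCURACY[(classifier, "PSD")]
    spec = ACCURACY[(classifier, "Spec")]
    # Round to one decimal place to match the precision of the table.
    return {task: round(p - s, 1) for task, p, s in zip(TASKS, psd, spec)}


gaps = {clf: psd_minus_spec(clf) for clf in ("LR", "kNN")}
for clf, gap in gaps.items():
    print(clf, gap)
```

On these figures the gap widens from detection to type to flight-mode classification for both classifiers, and it is larger for kNN than for LR at every level.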

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Swinney, C.J.; Woods, J.C. The Effect of Real-World Interference on CNN Feature Extraction and Machine Learning Classification of Unmanned Aerial Systems. Aerospace 2021, 8, 179. https://doi.org/10.3390/aerospace8070179
