Engineering Methodology for Student-Driven CubeSats

Abstract

1. Introduction

2. Background

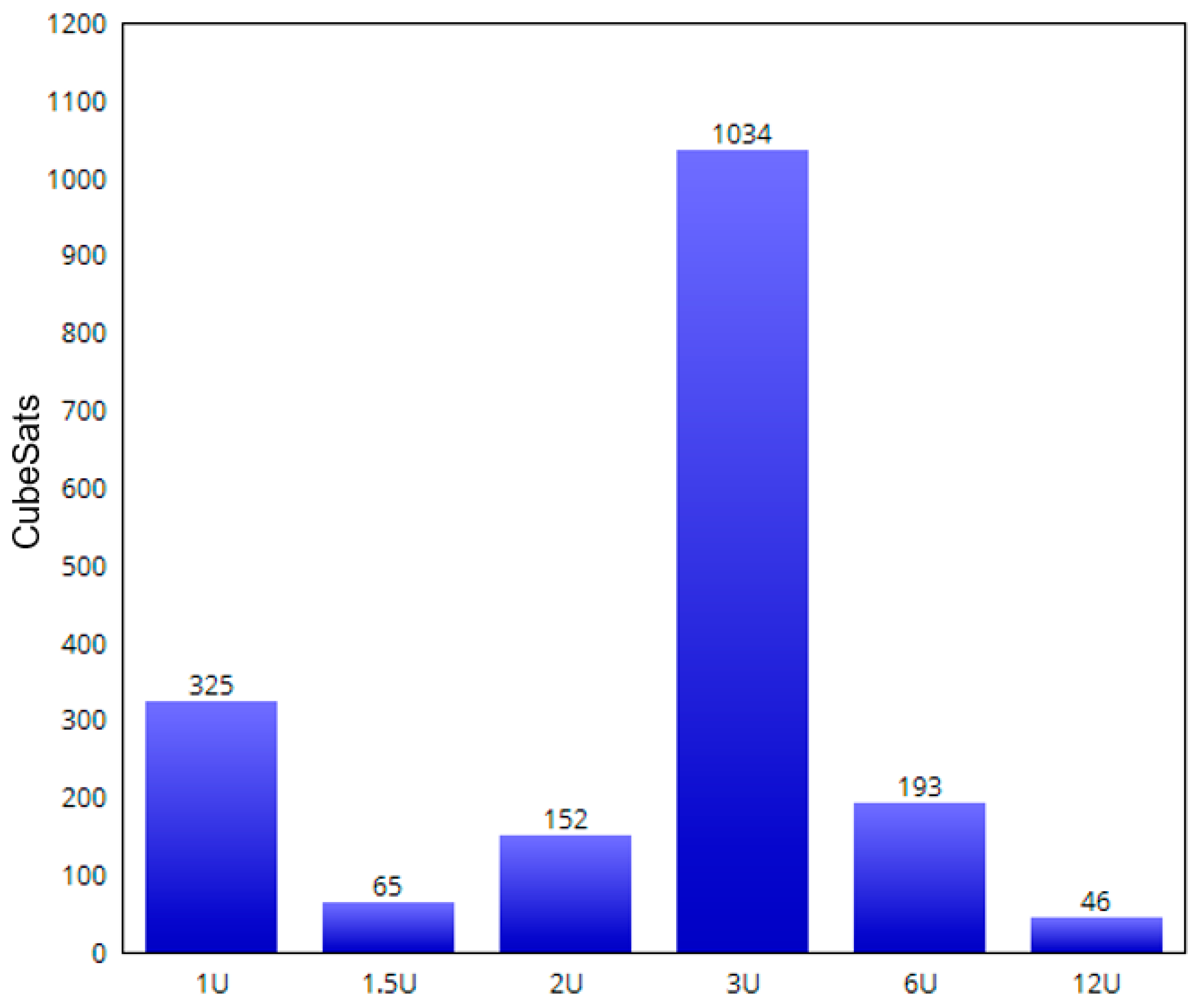

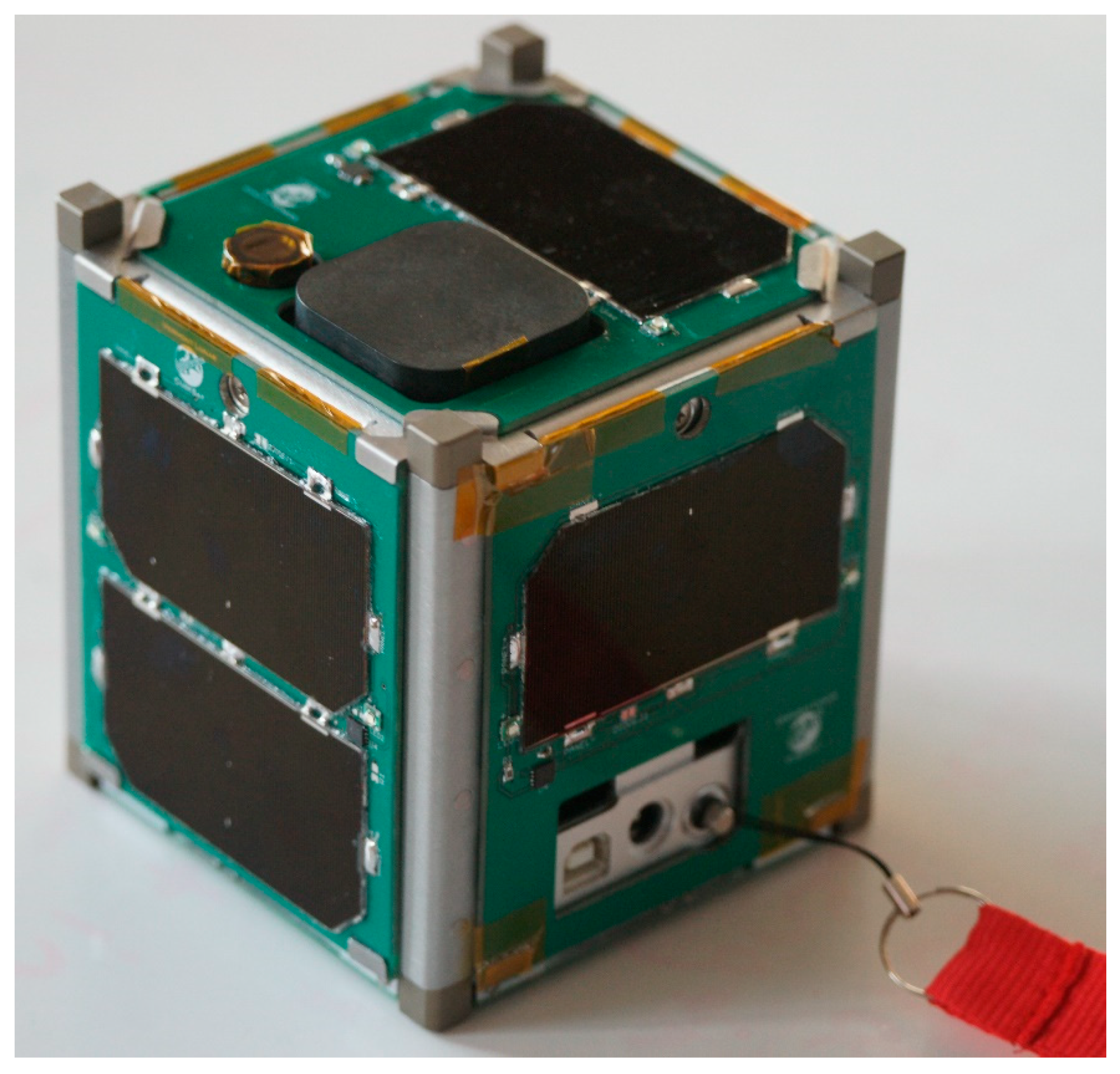

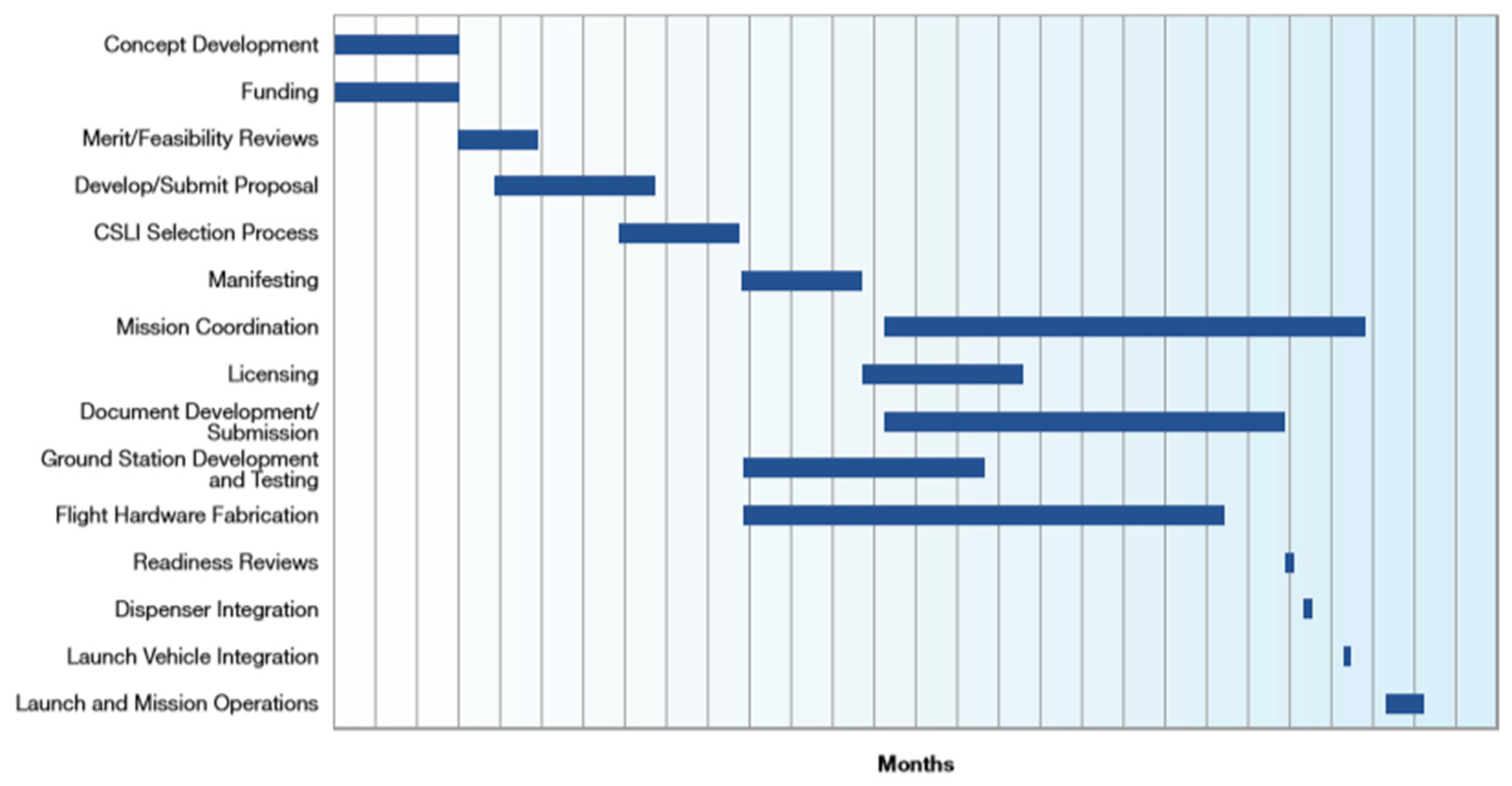

2.1. CubeSat Design and Development Processes

2.2. University Demand for CubeSats

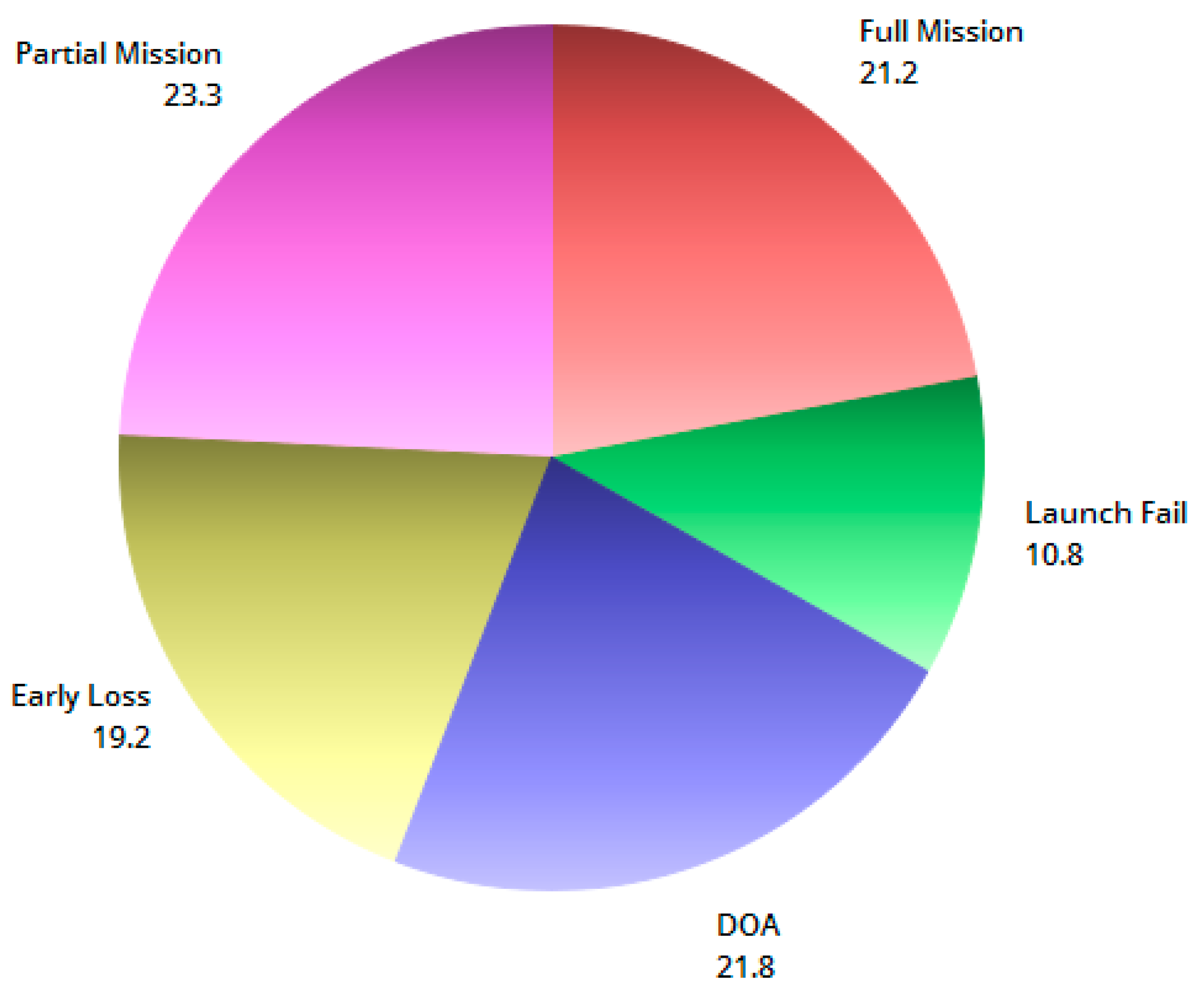

3. Investigating Reasons for Failure

- Exceeding development time: the typical development time is 1–2 years, but some projects exceed it because the participants are inexperienced or graduate and leave the project.

- Limited functionality of components: space-grade components may be too expensive for CubeSat projects. For instance, the average cost of GaAs solar cells is $3000 [20]. Students may therefore choose less expensive commercial off-the-shelf (COTS) components with limited functionality instead.

- Lack of system testing: this may be due to the limited availability of testing tools. In addition, the time needed for testing may affect the project life-cycle.

- Requirements analysis: misunderstandings and communication problems between student developers and stakeholders (i.e., the principal investigator, developers and testers).

- Inexperienced team: students learn through trial and error while working on the project. Some may participate only to receive extra credit, and some may graduate and leave school before the project is completed [21].

- Lack of documentation: students may not properly document their project.

- Testing time reduction (variable name: TTR): coded as 0 if it did not occur and as 1 if it occurred.

- Design problems (variable name: DesPr): coded as 1 if the problems were related to tools, 2 if the problems were related to the models and 3 if the problems were related to both.

- Availability of a model for modification (variable name: Mod): coded as 0 if not available and as 1 if available.

- Ease of addition or deletion of components (variable name: AddDel): coded as 1 if easy and as 2 if difficult.

- System design objectives met (variable name: SysMet): coded as 0 if not met and as 1 if they were.

- Mission objectives met (variable name: MMet): coded as 0 if not met and as 1 if they were.

- Whether one model was employed as a reference model for different missions (variable name: Mission): coded as 0 if not employed and as 1 if it was.

4. Student-Driven CubeSat Practices

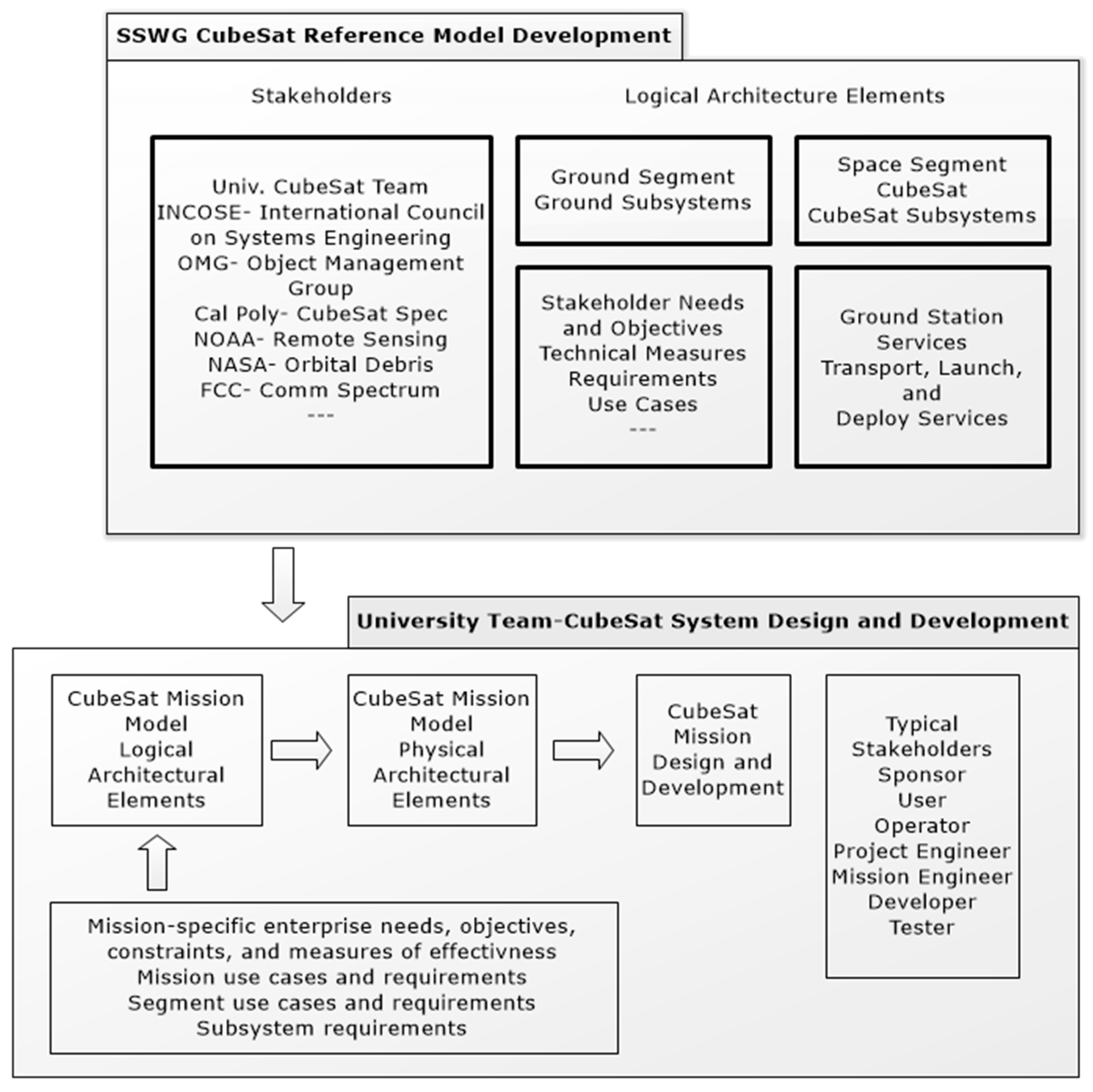

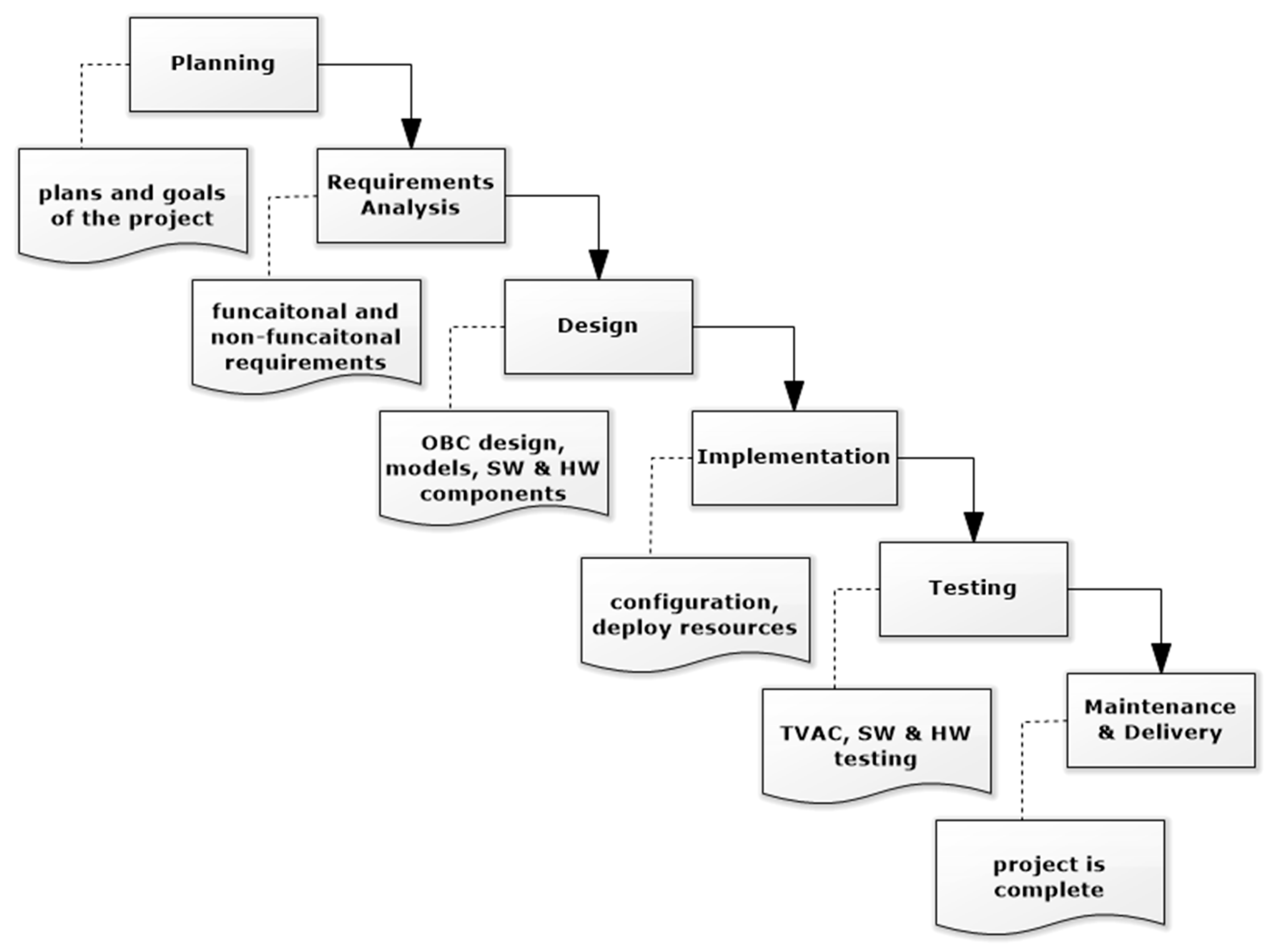

5. Engineering Methodology for University-Class CubeSats

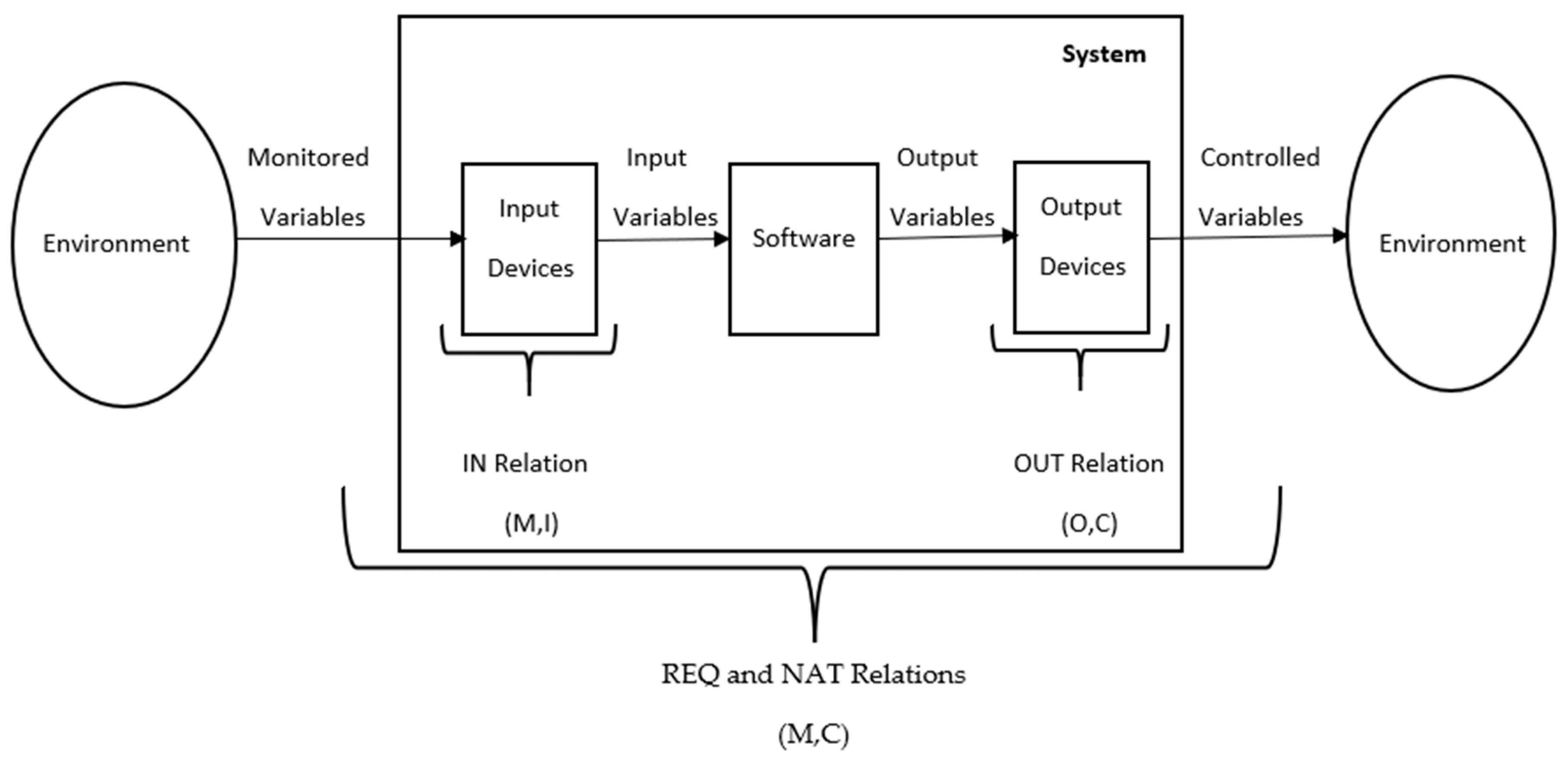

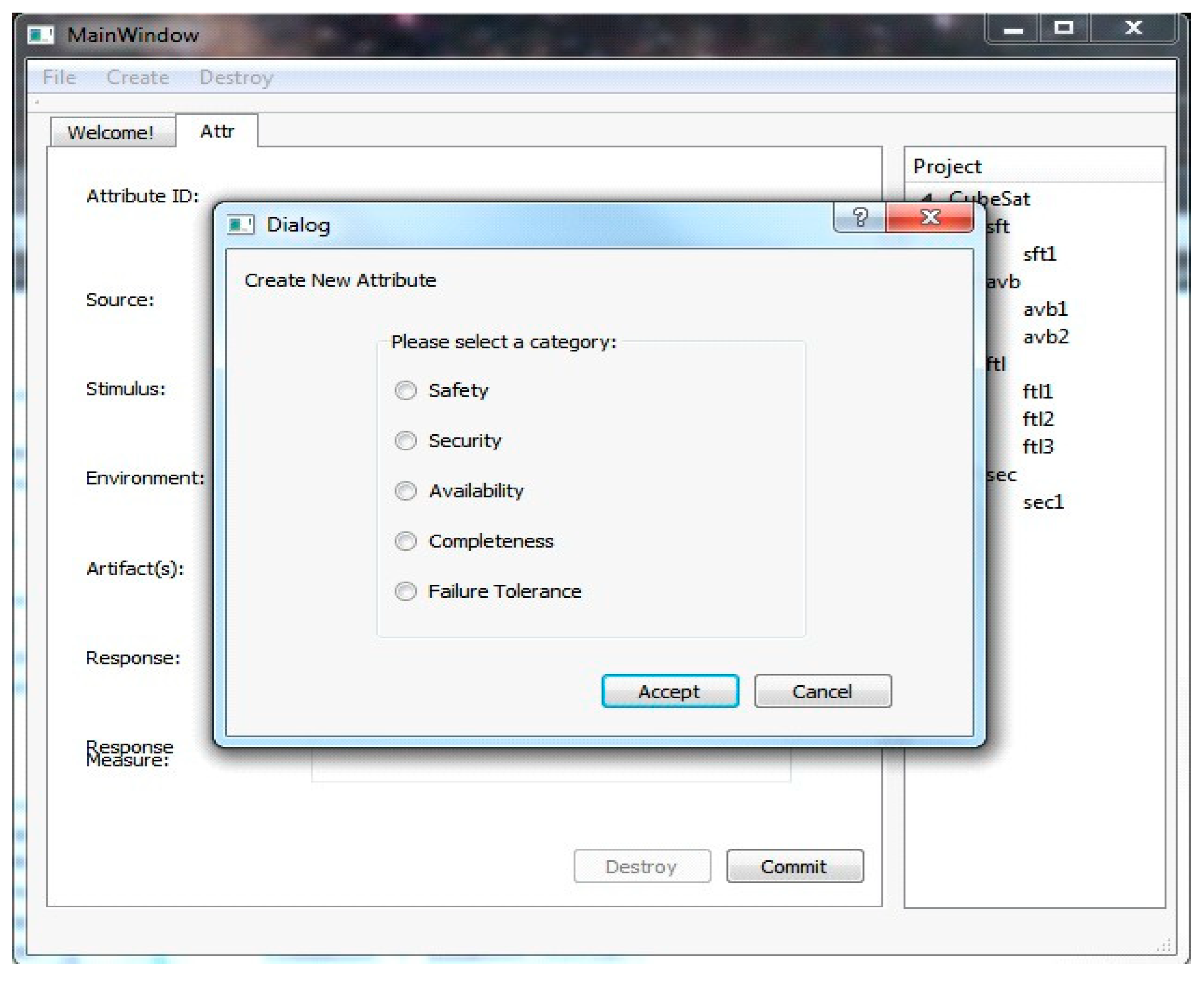

6. CubeSat Software Requirements

- Valid: a number from −40 to 40 (inclusive)

- Invalid:

  - a number greater than or equal to 41

  - a number less than or equal to −41
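The valid and invalid ranges above are equivalence classes of the kind used in equivalence partitioning and boundary-value testing. The following is a minimal sketch, assuming the checked value is an integer (the source does not name the quantity being validated). It encodes the valid class and generates the usual boundary test points, namely each bound and its immediate neighbours. The names `is_valid` and `boundary_test_values` are hypothetical and not taken from any CubeSat flight software.

```python
# Hypothetical names; bounds come from the requirement, integer input assumed.
VALID_MIN, VALID_MAX = -40, 40


def is_valid(value: int) -> bool:
    """Valid equivalence class: VALID_MIN <= value <= VALID_MAX."""
    return VALID_MIN <= value <= VALID_MAX


def boundary_test_values(lo: int, hi: int) -> list[int]:
    """Boundary-value analysis: each bound plus its nearest neighbours."""
    return [lo - 1, lo, lo + 1, hi - 1, hi, hi + 1]


if __name__ == "__main__":
    for v in boundary_test_values(VALID_MIN, VALID_MAX):
        print(v, "valid" if is_valid(v) else "invalid")
```

Six test points suffice here because the two invalid classes (≥ 41 and ≤ −41) each start exactly one step beyond a valid bound, so −41 and 41 exercise both invalid partitions.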

7. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Chin, A.; Coelho, R.; Nugent, R.; Munakata, R.; Puig-Suari, J. Cubesat: The pico-satellite standard for research and education. In Proceedings of the AIAA Space 2008 Conference & Exposition, San Diego, CA, USA, 9–11 September 2008. [Google Scholar]

- Erik, E. Nanosats Database [online Database]. Available online: https://www.nanosats.eu/ (accessed on 10 August 2018).

- Swartwout, M. University-class satellites: From marginal utility to ‘disruptive’ research platforms. In Proceedings of the 18th Annual Conference on Small Satellites, Logan, UT, USA, 9–12 August 2004. [Google Scholar]

- Robert, B. Distributed Electrical Power System in CubeSat Applications. Master’s Thesis, Master of Science in Electrical Engineering, Utah State University, Logan, UT, USA, December 2011. [Google Scholar]

- Swartwout, M. Reliving 24 Years in the Next 12 Minutes: A Statistical and Personal History of University-Class Satellites. In Proceedings of the 32nd Annual AIAA/USU Conference on Small Satellites, Logan, UT, USA, 4–9 August 2018. SSC18-WKVIII-03. [Google Scholar]

- Xiaozhou, Y. The Status of University Nanosatellites in China. In Proceedings of the 14th Annual CubeSat Workshop, San Luis Obispo, CA, USA, 26–28 April 2017. [Google Scholar]

- National Aeronautics and Space Administration. CubeSat Picture. Available online: https://www.nasa.gov/press-release/nasa-sets-coverage-schedule-for-cubesat-launch-events (accessed on 12 May 2019).

- Selva, D.; Krejci, D. A survey and assessment of the capabilities of CubeSats for Earth observation. Acta Astronaut. 2012, 74. [Google Scholar] [CrossRef]

- Dániel, V.; Pína, L.; Inneman, A.; Zadražil, V.; Báča, T.; Platkevič, M.; Stehlíková, V.; Nentvich, O.; Urban, M. Terrestrial gamma-ray flashes monitor demonstrator on CubeSat. Cubesats Nanosats Remote Sens. 2016. [Google Scholar] [CrossRef]

- Garrick-Bethell, I.; Lin, R.P.; Sanchez, H.; Jaroux, B.A.; Bester, M.; Brown, P.; Cosgrove, D.; Dougherty, M.K.; Halekas, J.S.; Hemingway, D.; et al. Lunar magnetic field measurements with a CubeSat. Sens. Syst. Space Appl. 2013, 8739, 873903. [Google Scholar] [CrossRef]

- Lucken, R.; Hubert, N.; Giolito, D. Systematic space debris collection using CubeSat constellation. In Proceedings of the 7th European Conference for Aeronautics and Aerospace Sciences (EUCASS), Milan, Italy, 3–6 July 2017. [Google Scholar] [CrossRef]

- Fortescue, P.; Swinerd, G.; Stark, J. CubeSat Structures. In Spacecraft Systems Engineering, 4th ed.; John Wiley and Sons Inc.: Hoboken, NJ, USA, 2011; pp. 540–542. ISBN 9780470750124. [Google Scholar]

- CubeSat 101: Basic Concepts and Processes for First-Time CubeSat Developers. Available online: https://www.nasa.gov/sites/default/files/atoms/files/nasa_csli_cubesat_101_508.pdf (accessed on 20 October 2018).

- Twiggs, B.; Puig-Suari, J. CUBESAT Design Specifications Document; Stanford University and California Polytechnical Institute: San Luis Obispo, CA, USA, August 2003. [Google Scholar]

- Zea, L.; Ayerdi, V.; Argueta, S.; Muñoz, A. A Methodology for CubeSat Mission Selection. J. Small Satell. 2016, 5, 483–511. [Google Scholar]

- Dabrowski, M.J. The Design of a Software System for a Small Space Satellite. Master’s Thesis, Graduate College, University of Illinois at Urbana-Champaign, Urbana, IL, USA, 2005. [Google Scholar]

- The Open Source Hardware Association (OSHWA). Available online: https://www.oshwa.org (accessed on 15 October 2018).

- The Open Source Initiative (OSI). Available online: https://www.opensource.org (accessed on 15 October 2018).

- Straub, J.; Whalen, D. Evaluation of the Educational Impact of Participation Time in a Small Spacecraft Development Program. Educ. Sci. 2014, 4, 141–154. [Google Scholar] [CrossRef]

- List of CubeSat Components Prices. Available online: https://www.cubesatshop.com/ (accessed on 15 October 2018).

- Straub, J. Extending the student qualitative undertaking involvement risk model. J. Aerosp. Technol. Manag. 2014, 6, 333–352. [Google Scholar] [CrossRef]

- Alanazi, A.; Straub, J. Statistical Analysis of CubeSat Mission Failure. In Proceedings of the 2018 AIAA/USU SmallSat Conference, Logan, UT, USA, 4–9 August 2018. [Google Scholar]

- Swartwout, M. Statistical Figures. Available online: https://sites.google.com/a/slu.edu/swartwout/home/cubesat-database (accessed on 30 October 2018).

- Open-Source CubeSat Database Repository. Available online: http://www.dk3wn.info/p/ (accessed on 30 October 2018).

- Reza, H.; Rashmi, S.; Alexander, N.; Straub, J. Toward Model-Based Requirement Engineering Tool Support. In Proceedings of the IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2017. [Google Scholar] [CrossRef]

- Weiz, N. Analysis of Verification and Validation Techniques for Educational CubeSat Programs. Master’s Thesis, Master of Science in Computer Science, California Polytechnic State University, San Luis Obispo, CA, USA, May 2018. [Google Scholar]

- Ward, P.T.; Mellor, S.J. Structured Development for Real-Time Systems; Prentice Hall Professional Technical Reference: Upper Saddle River, NJ, USA, 1991. [Google Scholar]

- Weisgerber, M.; Langer, M.; Schummer, F.; Steinkirchner, K. Risk Reduction and Process Acceleration for Small Spacecraft Assembly and Testing by Rapid Prototyping. In Proceedings of the German Aerospace Congress, Munich, Germany, 5–7 September 2017. [Google Scholar]

- González, D.; Rodríguez, D.; Birnie, J.; Bagur, J.; Paz, R.; Miranda, E.; Solórzano, F.; Esquit, C.; Gallegos, J.; Álvarez, E.; et al. Guatemala’s Remote Sensing CubeSat—Tools and Approaches to Increase the Probability of Mission Success. In Proceedings of the 2018 AIAA/USU SmallSat Conference, Logan, UT, USA, 4–9 August 2018. [Google Scholar]

- The International Council on Systems Engineering. Systems Engineering Definition. Available online: https://www.incose.org (accessed on 5 November 2018).

- Alanazi, A. Methodology and Tools for Reducing CubeSat Mission Failure. In Proceedings of the 2018 AIAA Space and Astronautics Forum and Exposition, AIAA SPACE Forum, Orlando, FL, USA, 17–19 September 2018. AIAA 2018-5122. [Google Scholar]

- Kaslow, D.; Louise, A.; Asundi, S.; Bradley, A.; Curtis, I.; Bungo, S.; Robert, R. Developing a CubeSat Model-Based System Engineering (MBSE) Reference Model-interim status. In Proceedings of the IEEE Aerospace Conference 2015, Big Sky, MT, USA, 7–14 March 2015. [Google Scholar] [CrossRef]

- Hammond, W.E. Space Transportation: A Systems Approach to Design and Analysis; AIAA: Reston, VA, USA, 1999. [Google Scholar]

- Campos, S. Design of a Nanosatellite Ground Monitoring and Control Software—A Case Study. J. Aerosp. Technol. Manag. 2016, 8, 211–231. [Google Scholar]

- Manyak, G. Fault Tolerant and Flexible CubeSat Software Architecture. Master’s Thesis, Master of Science in Electrical Engineering, California Polytechnic State University, San Luis Obispo, CA, USA, June 2011. [Google Scholar]

| Factor | Variable Name | Code |
|---|---|---|
| testing time reduction | TTR | occurred (1), did not occur (0) |
| design problems | DesPr | tools (1), models (2), both (3) |
| availability of a model for modification | Mod | yes (1), no (0) |
| adding/deleting components | AddDel | easy (1), difficult (0) |
| system design objectives | SysMet | yes (1), no (0) |
| mission objectives | MMet | yes (1), no (0) |
| using a reference model | Mission | yes (1), no (0) |

| Test | Value | df | Asymptotic Significance (2-Sided) | Exact Sig. (2-Sided) | Exact Sig. (1-Sided) |
|---|---|---|---|---|---|
| Pearson Chi-Square | 1.020 | 1 | 0.313 | | |
| Continuity Correction | 0.431 | 1 | 0.512 | | |
| Likelihood Ratio | 1.018 | 1 | 0.313 | | |
| Fisher’s Exact Test | | | | 0.481 | 0.255 |
| Linear-by-Linear Association | 0.991 | 1 | 0.320 | | |
| N of Valid Cases | 35 | | | | |
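To show where the rows of these tables come from, here is a minimal sketch that computes the same family of tests for a single 2×2 contingency table. The counts in the usage example are hypothetical (chosen only so that N = 35) and are not the study's data. The sketch assumes all row and column totals are non-zero. It uses the common conventions for each row. The continuity correction is Yates' correction with |ad − bc| shrunk by N/2 and floored at zero. The linear-by-linear statistic is (N − 1)r², which for a 2×2 table equals (N − 1)/N times the Pearson statistic. The two-sided Fisher p-value sums every table with the same margins that is no more probable than the observed one. The function names are illustrative only.

```python
from math import comb, erfc, sqrt


def chi2_sf_df1(x: float) -> float:
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return erfc(sqrt(x / 2.0))


def two_by_two_tests(a: int, b: int, c: int, d: int) -> dict:
    """Tests for the 2x2 table [[a, b], [c, d]]; assumes non-zero margins."""
    n = a + b + c + d
    r1, r2 = a + b, c + d          # row totals
    c1, c2 = a + c, b + d          # column totals
    denom = r1 * r2 * c1 * c2
    diff = a * d - b * c

    # Pearson chi-square: n (ad - bc)^2 / (r1 r2 c1 c2)
    pearson = n * diff ** 2 / denom
    # Yates continuity correction: shrink |ad - bc| by n/2, never below zero
    yates = n * max(abs(diff) - n / 2.0, 0.0) ** 2 / denom
    # Linear-by-linear association (n - 1) r^2; for a 2x2 table r^2 = pearson / n
    linear = (n - 1) * pearson / n

    # Fisher's exact test: hypergeometric probability of each table with these margins
    def prob(x: int) -> float:
        return comb(r1, x) * comb(r2, c1 - x) / comb(n, c1)

    support = range(max(0, c1 - r2), min(r1, c1) + 1)
    p_obs = prob(a)
    # Two-sided: all tables no more probable than the observed one
    fisher_two = sum(prob(x) for x in support if prob(x) <= p_obs * (1 + 1e-9))
    # One-sided: the tail in the direction of the observed deviation
    if a * n >= r1 * c1:
        fisher_one = sum(prob(x) for x in support if x >= a)
    else:
        fisher_one = sum(prob(x) for x in support if x <= a)

    return {
        "pearson": (pearson, chi2_sf_df1(pearson)),
        "continuity_correction": (yates, chi2_sf_df1(yates)),
        "linear_by_linear": (linear, chi2_sf_df1(linear)),
        "fisher_exact": (min(fisher_two, 1.0), min(fisher_one, 1.0)),
    }


if __name__ == "__main__":
    # Hypothetical counts (N = 35), NOT the study's data
    for name, values in two_by_two_tests(10, 8, 6, 11).items():
        print(name, [round(v, 3) for v in values])
```

When |ad − bc| is smaller than N/2 the corrected statistic is exactly 0 and its p-value is 1, which is the pattern visible in several "Continuity Correction" rows of these tables.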

| Test | Value | df | Asymptotic Significance (2-Sided) | Exact Sig. (2-Sided) | Exact Sig. (1-Sided) |
|---|---|---|---|---|---|
| Pearson Chi-Square | 0.326 | 2 | 0.849 | 1.000 | |
| Likelihood Ratio | 0.333 | 2 | 0.847 | 0.904 | |
| Fisher’s Exact Test | 0.432 | | | 1.000 | |
| Linear-by-Linear Association | 0.007 | 1 | 0.931 | 1.000 | 0.546 |
| N of Valid Cases | 35 | | | | |

| Test | Value | df | Asymptotic Significance (2-Sided) | Exact Sig. (2-Sided) | Exact Sig. (1-Sided) |
|---|---|---|---|---|---|
| Pearson Chi-Square | 1.225 | 1 | 0.268 | 0.541 | 0.306 |
| Continuity Correction | 0.232 | 1 | 0.630 | | |
| Likelihood Ratio | 1.177 | 1 | 0.278 | 0.541 | 0.306 |
| Fisher’s Exact Test | | | | 0.541 | 0.306 |
| Linear-by-Linear Association | 1.190 | 1 | 0.275 | 0.541 | 0.306 |
| N of Valid Cases | 35 | | | | |

| Test | Value | df | Asymptotic Significance (2-Sided) | Exact Sig. (2-Sided) | Exact Sig. (1-Sided) |
|---|---|---|---|---|---|
| Pearson Chi-Square | 0.122 | 1 | 0.726 | 1.000 | 0.525 |
| Continuity Correction | 0.000 | 1 | 1.000 | | |
| Likelihood Ratio | 0.121 | 1 | 0.728 | 1.000 | 0.525 |
| Fisher’s Exact Test | | | | 1.000 | 0.525 |
| Linear-by-Linear Association | 0.119 | 1 | 0.730 | 1.000 | 0.525 |
| N of Valid Cases | 35 | | | | |

| Test | Value | df | Asymptotic Significance (2-Sided) | Exact Sig. (2-Sided) | Exact Sig. (1-Sided) |
|---|---|---|---|---|---|
| Pearson Chi-Square | 3.512 | 1 | 0.061 | 0.079 | 0.067 |
| Continuity Correction | 2.267 | 1 | 0.132 | | |
| Likelihood Ratio | 3.477 | 1 | 0.062 | 0.139 | 0.067 |
| Fisher’s Exact Test | | | | 0.079 | 0.067 |
| Linear-by-Linear Association | 3.412 | 1 | 0.065 | 0.079 | 0.067 |
| N of Valid Cases | 35 | | | | |

| Test | Value | df | Asymptotic Significance (2-Sided) | Exact Sig. (2-Sided) | Exact Sig. (1-Sided) |
|---|---|---|---|---|---|
| Pearson Chi-Square | 0.015 | 1 | 0.901 | 1.000 | 0.591 |
| Continuity Correction | 0.000 | 1 | 1.000 | | |
| Likelihood Ratio | 0.015 | 1 | 0.901 | 1.000 | 0.591 |
| Fisher’s Exact Test | | | | 1.000 | 0.591 |
| Linear-by-Linear Association | 0.015 | 1 | 0.903 | 1.000 | 0.591 |
| N of Valid Cases | 35 | | | | |

| Test | Value | df | Asymptotic Significance (2-Sided) | Exact Sig. (2-Sided) | Exact Sig. (1-Sided) |
|---|---|---|---|---|---|
| Pearson Chi-Square | 0.015 | 1 | 0.901 | 1.000 | 0.591 |
| Continuity Correction | 0.000 | 1 | 1.000 | | |
| Likelihood Ratio | 0.015 | 1 | 0.901 | 1.000 | 0.591 |
| Fisher’s Exact Test | | | | 1.000 | 0.591 |
| Linear-by-Linear Association | 0.015 | 1 | 0.903 | 1.000 | 0.591 |
| N of Valid Cases | 35 | | | | |
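The printed statistics can be cross-checked against two standard identities. First, the asymptotic p-value of a chi-square statistic has a closed form for df = 1 (erfc(√(χ²/2))) and for df = 2 (exp(−χ²/2)). Second, for a 2×2 table the linear-by-linear association equals (N − 1)/N times the Pearson chi-square. The sketch below is a consistency check only. It assumes every printed value is rounded to three decimals and tests whether each reported p-value and linear-by-linear value is reachable from a Pearson statistic that rounds to the printed one. The helper names are hypothetical. Because the last two tables print identical Pearson rows, that row appears once.

```python
from math import erfc, exp, sqrt

N = 35         # "N of Valid Cases" reported in every table
HALF = 0.0005  # half a unit in the third decimal place (assumed rounding)


def asymptotic_p(chi2: float, df: int) -> float:
    """Upper-tail chi-square p-value; closed forms for df = 1 and df = 2 only."""
    if df == 1:
        return erfc(sqrt(chi2 / 2.0))
    if df == 2:
        return exp(-chi2 / 2.0)
    raise ValueError("this sketch only handles df = 1 or df = 2")


def p_consistent(stat: float, df: int, reported_p: float) -> bool:
    """True if reported_p is reachable from some statistic that rounds to stat."""
    lowest = asymptotic_p(stat + HALF, df) - HALF   # p falls as chi2 grows
    highest = asymptotic_p(max(stat - HALF, 0.0), df) + HALF
    return lowest <= reported_p <= highest


def linear_consistent(pearson: float, reported_linear: float, n: int = N) -> bool:
    """2x2 tables only: linear-by-linear = (n - 1) / n * Pearson chi-square."""
    tolerance = (n - 1) / n * HALF + HALF
    return abs((n - 1) / n * pearson - reported_linear) <= tolerance


# (Pearson chi-square, df, asymptotic p, linear-by-linear) as printed above
PEARSON_ROWS = [
    (1.020, 1, 0.313, 0.991),
    (0.326, 2, 0.849, None),   # df = 2 table: the 2x2 identity does not apply
    (1.225, 1, 0.268, 1.190),
    (0.122, 1, 0.726, 0.119),
    (3.512, 1, 0.061, 3.412),
    (0.015, 1, 0.901, 0.015),
]

if __name__ == "__main__":
    for stat, df, p, linear in PEARSON_ROWS:
        ok_p = p_consistent(stat, df, p)
        ok_linear = linear is None or linear_consistent(stat, linear)
        print(f"chi2={stat:.3f} df={df}: p ok={ok_p}, linear-by-linear ok={ok_linear}")
```

A check like this cannot confirm the underlying counts. It only shows that the printed Pearson, p-value and linear-by-linear columns agree with one another within rounding.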

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Alanazi, A.; Straub, J. Engineering Methodology for Student-Driven CubeSats. Aerospace 2019, 6, 54. https://doi.org/10.3390/aerospace6050054
