Abstract

Traditional methods for requirement identification depend on the manual transformation of unstructured requirement texts into formal documents, a process that is both inefficient and prone to errors. Although requirement knowledge graphs offer structured representations, current named entity recognition (NER) and relation extraction (RE) techniques continue to face significant challenges in processing the specialized terminology and intricate sentence structures characteristic of the aerospace domain. To overcome these limitations, this study introduces a novel approach for constructing aerospace-specific requirement knowledge graphs using a large language model. The method first employs the GPT model for data augmentation, followed by BERTScore filtering to ensure data quality and consistency. An efficient continual learning approach based on token index encoding is then implemented, guiding the model to focus on key information and enhancing domain adaptability through fine-tuning of the Qwen2.5 (7B) model. Furthermore, a chain-of-thought reasoning framework is established for improved entity and relation recognition, coupled with a dynamic few-shot learning strategy that selects examples adaptively based on input characteristics. Experimental results validate the effectiveness of the proposed method, which achieves F1 scores of 88.75% in NER and 89.48% in RE.

1. Introduction

With the continuous expansion of aerospace application domains, the complexity of associated missions has been steadily increasing. Mission objectives have evolved from single-purpose goals to multi-functional, multi-domain integrated tasks. This evolution imposes heightened demands on aerospace equipment development, necessitating enhanced adaptability and reliability. As a foundational preliminary phase in systems engineering-driven aerospace equipment development, requirements engineering plays a pivotal role by articulating stakeholder expectations, clarifying system needs, and specifying the functions to be delivered, thereby establishing a solid basis for subsequent development [1]. Within the scope of requirements engineering, requirement identification constitutes a critical component of the development process. It entails extracting essential system requirements and relevant information from stakeholder inputs, unstructured documents, meeting records, and interview transcripts and subsequently transforming them into standardized system specifications to support later design and development stages. However, with the growing complexity of aerospace missions and systems, traditional manual methods for retrieving and classifying requirements increasingly fall short of meeting the efficiency and accuracy demands of modern aerospace development. Manual processing is labor-intensive, error-prone, and inefficient, thereby amplifying risks in subsequent development stages. This underscores the urgent need for intelligent approaches to enhance both the accuracy and efficiency of requirement identification.

The Knowledge Graph (KG), as an effective method for knowledge integration, models key entities and their interrelationships within textual information by employing a graph-based structure, thereby offering an innovative approach to organizing and managing complex data. Specifically, within a knowledge graph, entities are represented as nodes, while relationships between entities are represented as edges, thus facilitating the structured representation of information. This graph-based representation not only uncovers the semantic associations embedded within the data but also facilitates semantic understanding and reasoning across complex information networks. In the context of requirements engineering, constructing KG from requirement texts not only facilitates the organization and management of knowledge across diverse documents but also uncovers dependencies among various requirements. This process enhances the clarity and precision of requirement semantic interpretation and reasoning, thereby contributing to a more comprehensive understanding of the requirements [2]. By visualizing key entities and their interrelationships extracted from requirement texts, system engineers can intuitively comprehend internal connections and hierarchical structures among entities, thereby offering robust support for subsequent equipment development. Furthermore, KG can be integrated with requirement models defined in the Systems Modeling Language (SysML), thereby opening new avenues for standardized representation and automated processing of requirements. By leveraging the structured nature of KG, it becomes feasible to automatically generate requirement-related models, thereby further enhancing the efficiency and accuracy of requirement identification [3].

Named Entity Recognition (NER) and Relation Extraction (RE) constitute essential components in the construction of KGs. These techniques facilitate the precise extraction of key information from large volumes of text, thereby establishing a robust data foundation for KG construction. In prior research, Natural Language Processing (NLP) techniques have been extensively applied to NER and RE tasks [4]. Although these approaches have made notable progress in general KG construction, they exhibit significant limitations when applied to aerospace requirement texts, primarily due to the following domain-specific challenges:

Data scarcity: Aerospace requirement texts are relatively scarce and often inaccessible. The limited availability of standardized documents constrains both research and model training efforts in this domain, thereby complicating the construction of KG.

Domain specificity: These texts feature a high density of specialized terminology, with technical expressions that are more intricate and fine-grained than those found in other domains.

Text complexity: Aerospace requirement texts often contain multi-layered, ambiguous, or incomplete descriptions, accompanied by intricate performance metrics and constraint specifications. Additionally, they frequently describe interdependencies among multiple systems and subsystems, while the inherently unstructured language further impedes clarity. Collectively, these factors pose substantial challenges to the effective extraction of information from aerospace requirement texts using traditional NLP approaches.

With the advent of Large Language Models (LLMs), capabilities in sentence analysis, semantic understanding, and advanced summarization and abstraction have come to significantly surpass those of traditional NLP models. LLMs exhibit markedly superior precision in processing complex natural language texts, particularly in semantic comprehension. Against this backdrop, this study introduces an innovative application of an LLM for constructing a KG from aerospace requirement texts. First, the learning capabilities of GPT models are leveraged alongside specially designed prompts to perform data augmentation, thereby enhancing data diversity and generalization. The quality of the generated data is evaluated using the BERTScore metric to ensure its validity. Based on this process, a high-quality training dataset is constructed through meticulous annotation. Second, to enhance the model’s adaptability to domain-specific information in aerospace texts, a token index encoding mechanism is proposed to assist the LLM in more accurately identifying entity boundaries and understanding semantic relations within the requirement texts. In addition, the Qwen2.5 (7B) model is further adapted to the domain using Low-Rank Adaptation (LoRA) fine-tuning, thereby improving its ability to capture key features in aerospace-specific texts. Finally, for the task of entity and relation reasoning in requirement texts, a Chain of Thought (CoT) reasoning framework is developed. By incorporating hierarchical reasoning logic, the model can more accurately identify entities and relations in requirement texts characterized by complex semantic associations. Furthermore, a dynamic few-shot strategy is introduced, enabling the model to adaptively select examples during inference based on the characteristics of the input texts. This approach maximizes information utilization under resource-constrained conditions and further improves the stability and generalization performance in NER and RE tasks. Ultimately, a KG specific to aerospace requirement texts is constructed. The main contributions of this study are summarized as follows:

- (1) This study proposes Large Language Model with Augmented Construction, Continual Learning, and Chain-of-Thought Reasoning (LLM-ACNC), a method for constructing KG from aerospace requirement texts. The proposed method automatically extracts key entities and relations from unstructured requirement texts and effectively constructs high-quality KG to support intelligent requirement management.
- (2) An efficient, domain-adaptive continual learning approach based on token index encoding is introduced. This approach enhances the model’s focus on key textual information through token index encoding and improves its understanding of aerospace-specific texts via LoRA fine-tuning.
- (3) A CoT reasoning framework for entity and relation extraction is developed, complemented by a dynamic few-shot strategy; this enables the model to adaptively select few-shot examples based on the characteristics of input texts, thereby enhancing stability and accuracy in NER and RE reasoning tasks.
- (4) Experimental results demonstrate substantial improvements in NER and RE performance on aerospace requirement texts, achieving F1 scores of 88.75% and 89.48%, respectively.

2. Background

This section presents an illustrative example to clarify the motivation behind the proposed method and offers an overview of LLMs along with their practical applications.

2.1. Motivation Example

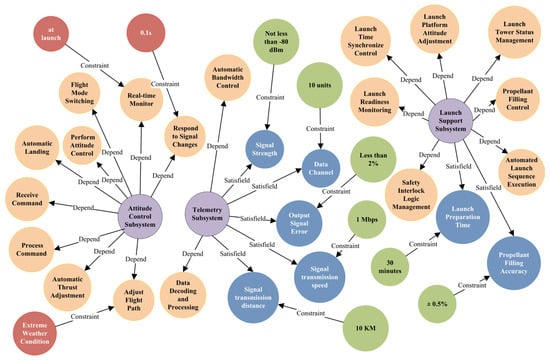

Figure 1 illustrates the construction of a KG from unstructured requirements text. The upper portion of the figure depicts a segment of unstructured requirements text from the aerospace domain, encompassing key design elements associated with the functions and performance of the propulsion system. At present, most mainstream approaches rely on traditional NLP techniques for NER and RE to construct corresponding requirement KG from unstructured text. However, these methods continue to encounter substantial challenges when applied to the aerospace domain. On the one hand, aerospace requirement texts often feature complex syntactic structures, lengthy and deeply nested sentences, and strong contextual dependencies, all of which contribute to frequent omissions and misidentifications of key entities by traditional NLP methods. On the other hand, the terminology is highly specialized, characterized by numerous domain-specific expressions, which hinder the ability of models to accurately identify relationships and comprehend the complex interconnections among entities.

Figure 1.

A motivational example illustrating the construction of a KG from aerospace requirement texts. The upper section presents the aerospace requirements text, whereas the lower section depicts the extracted key entities and their interrelationships. Purple represents subsystem-type entities, pink represents function-type entities, green represents environment condition-type entities, and yellow represents indicator parameter-type entities.

To enhance the efficiency and accuracy of requirements analysis in aerospace system development, this study aims to automatically extract key requirement-related entities from unstructured text and accurately identify the relationships among them. As illustrated in the lower part of Figure 1, the automated extraction of core information reduces manual effort and improves the completeness and consistency of requirement identification. During the early stages of development, system engineers can leverage this method to rapidly obtain structured requirement data across various dimensions, including functional modules, performance indicators, and environmental constraints. Based on the extracted entities and relationships, a requirements KG can be constructed to provide clear semantic associations and a coherent logical structure, serving as a crucial reference for requirements modeling, verification, and design decision-making. This KG significantly enhances the comprehension and management of complex system requirements, providing robust intelligent support for the design and development of aerospace systems.
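The structure described here, with entities as typed nodes and relations as labeled edges, can be illustrated with a minimal sketch. The entity names, the relation labels (`performs`, `operates_in`, `constrained_by`), and the helper functions are hypothetical placeholders chosen for illustration; only the four entity type names follow the Figure 1 caption.

```python
from collections import defaultdict

# Hypothetical entities; the four type names follow the Figure 1 caption.
entities = {
    "propulsion system": "subsystem",
    "provide thrust": "function",
    "vacuum environment": "environment condition",
    "specific impulse": "indicator parameter",
}

# Hypothetical (head, relation, tail) triples; relation labels are placeholders.
triples = [
    ("propulsion system", "performs", "provide thrust"),
    ("propulsion system", "operates_in", "vacuum environment"),
    ("provide thrust", "constrained_by", "specific impulse"),
]


def build_graph(triples):
    """Return an adjacency map: head -> list of (relation, tail) edges."""
    graph = defaultdict(list)
    for head, relation, tail in triples:
        graph[head].append((relation, tail))
    return dict(graph)


def neighbors_of_type(graph, entities, head, entity_type):
    """List one-hop tails of `head` whose entity type matches `entity_type`."""
    return [
        tail
        for _, tail in graph.get(head, [])
        if entities.get(tail) == entity_type
    ]


graph = build_graph(triples)
functions = neighbors_of_type(graph, entities, "propulsion system", "function")
```

A query such as `neighbors_of_type(...)` shows how a system engineer could retrieve, for example, all functions attached to a given subsystem once the triples are available.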

2.2. LLM for KG

LLMs [5], built upon the Transformer architecture, have exhibited exceptional capabilities across a broad spectrum of NLP tasks in recent years. Representative models such as GPT-3 [6], PaLM [7], LLaMA [8], and ChatGPT [9] have marked significant milestones in the field. Their success is largely attributed to their massive parameter scales, often reaching hundreds of billions, enabling effective comprehension and generation of natural language in complex linguistic contexts [10]. ChatGPT (GPT-3.5, GPT-4), as one of the most widely adopted models, excels in conversational capabilities and performs tasks based on user prompts, demonstrating human-like interaction. In LLM-based KG construction, entities and their relationships can be identified within complex and dynamic contexts, with implicit connections captured, thereby enhancing the accuracy of entity and relation extraction and improving semantic comprehension. Furthermore, the introduction of CoT reasoning has significantly enhanced LLM reasoning capabilities. Incorporation of intermediate reasoning steps into prompts has been shown to improve performance in arithmetic, commonsense, and symbolic reasoning tasks [11], thereby broadening the scope of applications.

Li et al. [12] proposed the MuKDC framework, which systematically enriches the original knowledge graph through a multi-level knowledge generation mechanism and rigorously filters the generated knowledge via a consistency assessment mechanism to ensure high-quality completion. Experimental results demonstrate that this method consistently outperforms existing approaches across multiple conventional and multimodal knowledge graph datasets, highlighting a promising direction for enhancing few-shot knowledge graph completion (FKGC) performance through generation-based methodologies. Lan et al. [13] introduced the NLP-AKG framework, which utilizes an LLM to extract semantic elements from research papers (including tasks, methods, and models) and citation relationships, thereby constructing a large-scale KG comprising over 620,000 entities and 2.2 million relations. Additionally, a subgraph community summarization method was developed to improve the literature-based question-answering capabilities of an LLM. Experimental results show that NLP-AKG significantly surpasses retrieval-augmented and knowledge-augmented baseline methods across multiple scientific QA tasks. Zhang et al. [14] proposed the GKG-LLM framework, aiming to unify the construction of three types of knowledge graphs: traditional KG, Event Knowledge Graph (EKG), and Commonsense Knowledge Graph (CKG). Employing a three-stage curriculum learning strategy (KG empowerment, EKG enhancement, and CKG generalization) along with LLM integration, the framework effectively addresses the challenge of unified modeling across diverse subtask definitions. Experimental results confirm that GKG-LLM consistently outperforms existing methods on in-domain, cross-task, and out-of-distribution datasets, validating the feasibility and superiority of unified GKG construction. Tian et al. [15] proposed KG-Adapter, a parameter-efficient fine-tuning method for integrating knowledge graphs into LLMs. This method encodes KG information from both node-centric and relation-centric perspectives through Sub-word to Entity Hybrid Initialization and multi-layer adapter structures, thereby achieving deep bidirectional reasoning fusion with LLMs. Experimental results demonstrate that, with only approximately 28 M trainable parameters, KG-Adapter outperforms full-parameter fine-tuning methods and achieves performance comparable to advanced prompt-augmented approaches, effectively mitigating issues such as structural information loss, knowledge conflicts, and overreliance on ultra-large models.

Despite notable success across many NLP tasks, several challenges remain. In entity and relation extraction, domain-specific terminology and specialized vocabulary often reduce model accuracy, resulting in incorrect or incomplete KG triples [16]. Moreover, overly complex or ambiguous texts often hinder correct interpretation of entity relationships, thereby compromising the overall quality of the constructed KG.

2.3. Continual Learning

Continual learning in LLMs seeks to facilitate adaptation to new tasks while alleviating catastrophic forgetting. Existing approaches are commonly categorized into three paradigms: consolidation-based, dynamic-architecture-based, and memory-based methods. Consolidation-based methods preserve critical parameter stability through regularization [17,18] or knowledge distillation [19,20], yet often lack efficiency in leveraging historical data. Dynamic-architecture-based methods [21] address novel tasks by expanding model architecture, mitigating forgetting at the expense of training costs that increase linearly as tasks accumulate. Memory-based methods [22] enhance adaptability by storing and replaying prior task data, though often at the risk of overfitting. Parameter-Efficient Tuning (PET) has emerged as a recent solution for reducing the computational burden in adapting LLMs to novel tasks. For example, LFPT5 [23,24] employs soft prompts to extend task capabilities and integrates pseudo-sample replay mechanisms, although scalability remains a concern. AdapterCL [25] enhances adaptability via independently trained adapters, though inference costs scale with task quantity. LAE (Lifelong Adaptive Ensemble) adopts a dual-expert framework to jointly learn from new and prior tasks, alleviating forgetting while remaining susceptible to overfitting [5]. Continual pretraining offers another strategy for enhancing continual learning in LLMs, though its intensive computational demands constrain applicability in resource-limited settings [26,27]. Overall, balancing computational cost, catastrophic forgetting, and scalability remains a central challenge in continual learning for LLMs. Enhancing fine-tuning efficiency while preserving model performance remains a key open research problem.

2.4. Chain of Thought

The CoT prompting approach has markedly enhanced the reasoning capabilities of LLMs, enabling them to address complex problems through step-by-step inference. In the domain of logical reasoning, Wei et al. [28] were the first to introduce CoT and demonstrated its effectiveness in addressing complex tasks. Subsequently, Kojima et al. [29] found that appending the phrase “Let’s think step by step” to prompts significantly improved the model’s reasoning ability in zero-shot settings. To further improve the applicability of CoT across diverse reasoning tasks, Wang et al. [30] introduced CoT-SC, which integrates a self-consistency (SC) strategy to enhance reasoning stability via multi-path inference. In contrast, Auto-CoT, introduced by Zhang et al. [31], addresses the instability of manually crafted prompts by automatically generating reasoning chains, thereby reducing CoT’s variability across tasks. Fu et al. [32] proposed Complex-CoT, a multi-step reasoning technique tailored for tasks that necessitate layered inference, whereas RE2 [33] enhances model robustness in complex problem-solving by rewriting questions to improve semantic comprehension. Furthermore, Wang et al. [34] presented the Plan-and-Solve framework, which separates planning and solving into distinct reasoning phases, thereby enabling CoT approaches to better manage structured problems. Nevertheless, conventional CoT approaches continue to encounter challenges in tasks that involve multiple entities and their implicit relationships. While NER effectively identifies entities in text, it often struggles to accurately extract the relationships among them. Meanwhile, zero-shot RE remains constrained by performance bottlenecks, primarily due to the absence of explicit relational cues in the input. Therefore, effectively integrating CoT reasoning with information extraction techniques to enhance model generalization and stability in complex reasoning tasks remains a critical open challenge.

In the field of aerospace requirements engineering, the prevalence of highly specialized terminology presents significant challenges to the application of general-purpose NLP models in constructing aerospace-specific knowledge graphs. Moreover, training such models typically demands a substantial amount of annotated data; however, data acquisition in the aerospace domain is extremely challenging, and standardized documents are relatively scarce, thereby limiting research progress in this field. Among the limited studies, Norheim et al. [35] investigated the challenges and opportunities associated with applying LLMs in requirements engineering; they discussed the development of NLP technologies in requirements engineering, particularly the use of LLMs, and highlighted key challenges such as the lack of domain-specific data, inconsistent data annotation, and unclear definitions of use cases in requirements engineering. Ronanki et al. [36] further examined the application of LLMs in requirements engineering, exploring their technical capabilities, ethical constraints, and regulatory limitations. They proposed a systematic approach to integrating LLMs into the requirements engineering process, and the paper evaluated the performance of LLMs in tasks such as requirements generation, user story quality assessment, requirements classification, and traceability tracking through experiments, interviews, and case studies, emphasizing the impact of effective prompt engineering and ethical governance on their practical application. Vogelsang et al. [37] systematically discussed the use of LLMs to address NLP tasks in requirements engineering, providing a practical guide to assist researchers and practitioners in understanding the basic principles of LLMs and selecting and adapting appropriate model architectures for specific requirements engineering tasks. Tikayat Ray et al. 
[38] proposed an NER method based on the BERT model—named aeroBERT-NER—for identifying named entities in aerospace requirements engineering. By creating a dedicated aerospace text corpus and annotating it, the authors fine-tuned the BERT model to accurately identify aerospace-related entities, such as system names, resources, and dates. The experiments demonstrated that aeroBERT-NER outperformed the general BERT model in entity recognition within aerospace domain texts, providing effective support for the standardization of aerospace requirements and the development of a terminology glossary. Tikayat Ray et al. [39] developed a novel model specifically tailored for entity recognition in the aerospace domain, demonstrating outstanding performance in identifying entities within aerospace requirements texts. Their work contributes to the development of aerospace-specific vocabularies, promotes the consistent use of terminology, and mitigates challenges associated with the standardization of natural language requirements. Regarding Chinese solid rocket engine data, Zheng et al. [40] proposed a BERT-based dual-channel named entity recognition model, achieving remarkable results on solid rocket engine datasets. This approach overcomes the difficulties faced by traditional NER methods in simultaneously learning character-level features and contextual sequence information. In this study, a task-oriented prompt construction strategy is integrated with the advanced learning capabilities of GPT models to perform data augmentation on aerospace requirements texts. Subsequently, the Qwen2.5 (7B) model is utilized and fine-tuned using a LoRA-based approach for continual domain adaptation, thereby enhancing its capacity to comprehend and extract key requirement features from the text. 
In addition, a CoT reasoning method, grounded in a few-shot learning paradigm, is designed to identify entities and relationships, thereby facilitating the construction of a KG from aerospace requirements text.

3. Methods

This section presents a comprehensive description of the proposed LLM-ACNC method for constructing KG from aerospace requirements texts. It encompasses an overview of the method, data generation, domain-adaptive continual learning, and dynamic few-shot CoT reasoning. The ultimate objective is to construct a high-quality KG from aerospace requirement texts to provide intelligent support for requirements engineering in aerospace equipment development.

3.1. Overview of the Method

This paper introduces LLM-ACNC, a KG construction framework specifically designed for aerospace requirement texts. The objective is to automatically extract entities and relations from unstructured aerospace requirement texts, thereby constructing a requirement KG. The overall model architecture is illustrated in Figure 2. Due to the limited availability of requirement data in the aerospace domain, we initially perform data generation based on a set of collected aerospace requirement texts. A GPT model is employed to leverage its language understanding capabilities, in combination with two specifically designed prompts for data augmentation. To ensure the quality and consistency of the augmented data, the BERTScore evaluation metric is used for automatic filtering, thereby enhancing the overall quality of the training dataset. The data are subsequently annotated with high precision according to predefined entity and relation types, resulting in a high-quality dataset for model training. To address the challenge posed by the highly specialized terminology in aerospace requirement texts, a position-enhanced embedding technique based on token index encoding is developed to improve the model’s ability to accurately identify entity locations within the text. In addition, LoRA fine-tuning is applied to facilitate continual learning on the Qwen2.5 (7B) model, thereby enhancing its domain adaptability and semantic understanding in aerospace-specific contexts. Subsequently, to address the complexity of aerospace requirement texts during the reasoning and identification of key entities and relations, a CoT reasoning mechanism is introduced. A CoT-based framework is designed to enable step-by-step reasoning for the generation of entities and relations. Building on a limited CoT few-shot dataset, a dynamic few-shot strategy is proposed, enabling the model to adaptively select the most relevant examples based on the input text. 
This mechanism guides the LLM to progressively and accurately extract entities and relations. Finally, the extracted entities and relations are integrated to construct a high-quality KG tailored to aerospace requirement texts in the context of equipment development.

Figure 2.

The framework for LLM-ACNC.
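The dynamic few-shot idea outlined in the overview, selecting the most relevant examples for each input, can be sketched as a simple retrieval step. This is only an illustration under stated assumptions: the overview does not specify the relevance criterion, so the sketch substitutes Jaccard overlap of lowercase word sets, and the example pool, its `text` and `reasoning` fields, and the function names are all hypothetical.

```python
def tokenize(text):
    """Lowercase whitespace tokenization into a set of words."""
    return set(text.lower().split())


def jaccard(a, b):
    """Jaccard similarity between two token sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def select_examples(query, pool, k=2):
    """Return the k pool examples most similar to the query text.

    Assumption: relevance is approximated by word overlap; an embedding-based
    similarity could be swapped in without changing the interface.
    """
    query_tokens = tokenize(query)
    ranked = sorted(
        pool,
        key=lambda ex: jaccard(query_tokens, tokenize(ex["text"])),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical CoT few-shot pool; the reasoning strings are placeholders.
pool = [
    {"text": "The propulsion system shall provide thrust of 500 N",
     "reasoning": "Step 1: find subsystem. Step 2: find function."},
    {"text": "The telemetry system shall transmit data at 2 Mbps",
     "reasoning": "Step 1: find subsystem. Step 2: find indicator."},
    {"text": "The control subsystem shall maintain attitude within 0.1 degrees",
     "reasoning": "Step 1: find subsystem. Step 2: find constraint."},
]

query = "The propulsion system shall provide thrust in vacuum"
selected = select_examples(query, pool, k=2)
```

The selected examples would then be placed ahead of the input text in the CoT prompt, so that each inference call sees demonstrations that resemble its own input.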

3.2. Data Generation

Data acquisition represents a crucial step in the construction of a high-quality training dataset. Publicly available literature and technical documents related to spacecraft systems, published between 2010 and 2022, were initially collected from sources such as the International Astronautical Congress (IAC) and the NASA Technical Reports Server. These sources encompass design requirements and performance parameters of several key subsystems—including propulsion, control, and telemetry systems—demonstrating strong representativeness and practical engineering relevance. This time frame was specifically selected due to the significant advancements in digital technologies for aerospace equipment development achieved during this period. To enhance data quality, the collected texts were preprocessed by removing irrelevant content, trimming extraneous spaces, and eliminating special characters. The cleaned data were subsequently stored using blank lines to delimit text segments, forming the foundational data source for this study.

To address the scarcity of requirement texts in the aerospace domain, this study leverages the text understanding and data generation capabilities of the GPT model for data augmentation. We adopt GPT-4 as the requirement text generation model and generate new requirement text samples using a standard “Instruction + Input + Output” template based on existing text data. The selection of the GPT model is motivated by its outstanding capabilities in processing long-form texts, performing complex contextual reasoning, and generating coherent narratives, which are well aligned with the characteristics of aerospace requirement texts featuring intricate descriptions and nested logic structures. In this template, the instruction specifies the data generation task, the input provides the original textual content, and the output represents the text generated by GPT-4 after reasoning. To enhance the generalization ability of the generated data, we design two types of data generation templates, as shown in Figure 3. In template (a), the input consists of complete requirement text without any entity or relation annotations, whereas in template (b), the input includes only entity and relation information, without the corresponding requirement text. For each original data instance, we apply both prompt templates to generate three different augmented samples, aiming to improve the diversity and effectiveness of the augmented dataset.

Figure 3.

Data generation prompts. (a) The input consists of complete requirement text without any entity or relation annotations; (b) the input includes only entity and relation information, without the corresponding requirement text.
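The two templates can be pictured as records with the three fields named in the text. The sketch below is an assumption-laden illustration: the instruction wording, the function names, and the sample triple are hypothetical and are not the prompts shown in Figure 3; the `output` field is left empty because it would be filled by the generation model.

```python
def build_prompt_a(requirement_text):
    """Template (a): the input is the raw requirement text, no annotations."""
    return {
        # Hypothetical instruction wording, not the paper's actual prompt.
        "instruction": "Generate a new aerospace requirement that preserves "
                       "the meaning of the input requirement.",
        "input": requirement_text,
        "output": "",  # to be filled by the generation model
    }


def build_prompt_b(triples):
    """Template (b): the input holds only entity and relation information."""
    lines = [f"({head}, {relation}, {tail})" for head, relation, tail in triples]
    return {
        # Hypothetical instruction wording, not the paper's actual prompt.
        "instruction": "Write an aerospace requirement that expresses the "
                       "entities and relations given in the input.",
        "input": "\n".join(lines),
        "output": "",  # to be filled by the generation model
    }


# Hypothetical source instance with one annotated triple.
text = "The propulsion system shall provide thrust in vacuum."
triples = [("propulsion system", "performs", "provide thrust")]

record_a = build_prompt_a(text)
record_b = build_prompt_b(triples)
```

Keeping both templates behind the same three-field record makes it straightforward to send either one to the generation model and to store the outputs in a uniform format for later filtering.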

To ensure semantic consistency between the generated data and the original text, BERTScore is adopted as an automatic filtering method for evaluating and selecting the generated samples. BERTScore leverages a pre-trained BERT model to compute the semantic alignment between generated requirement texts and reference requirement texts. It is particularly effective in capturing synonym substitutions, syntactic variations, and long-range dependencies, thereby enhancing the accuracy of data filtering [41].

The method first encodes both the reference and generated texts using a pre-trained BERT model, transforming the token sets into high-dimensional embeddings, denoted as $x = \{x_1, \dots, x_m\}$ and $\hat{x} = \{\hat{x}_1, \dots, \hat{x}_n\}$, respectively. Here, $x_i$ and $\hat{x}_j$ represent the token embeddings corresponding to the reference and generated texts. Subsequently, cosine similarity is applied to compute the precision and recall between the two token sets. The cosine similarity is calculated as follows:

$$\mathrm{sim}(x_i, \hat{x}_j) = \frac{x_i^{\top} \hat{x}_j}{\lVert x_i \rVert \, \lVert \hat{x}_j \rVert}$$

Recall reflects whether each token embedding in the reference text can find a semantically similar token in the generated text. This is a similarity matching process from the perspective of the reference text. The recall is calculated as follows:

$$R_{\mathrm{BERT}} = \frac{1}{|x|} \sum_{x_i \in x} \max_{\hat{x}_j \in \hat{x}} \mathrm{sim}(x_i, \hat{x}_j)$$

Precision indicates whether each token embedding in the generated text can find a semantically aligned token in the reference text. This is evaluated from the perspective of the generated text. The precision is calculated as follows:

$$P_{\mathrm{BERT}} = \frac{1}{|\hat{x}|} \sum_{\hat{x}_j \in \hat{x}} \max_{x_i \in x} \mathrm{sim}(x_i, \hat{x}_j)$$

Based on the computed precision and recall, the F1 score can be derived. The F1 score is considered the most stable metric in BERTScore and can accurately measure the semantic similarity between generated and reference texts. A higher F1 score indicates better semantic alignment between the two texts. The F1 score is computed as follows:

$$F_{\mathrm{BERT}} = 2 \cdot \frac{P_{\mathrm{BERT}} \cdot R_{\mathrm{BERT}}}{P_{\mathrm{BERT}} + R_{\mathrm{BERT}}}$$

In this study, the F1 score is employed as the evaluation criterion for data filtering to assess the quality of data generated using two distinct prompt templates. The sample with the highest F1 score is selected and incorporated into the dataset as newly generated data.
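The filtering step can be sketched in a few lines: recall and precision come from greedy cosine matching between token embeddings, the F1 score combines them, and the candidate with the highest F1 is kept. This is a minimal sketch under stated assumptions: the 2-D vectors stand in for real BERT token embeddings, and the function names and candidate labels are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def bertscore_f1(ref_emb, gen_emb):
    """Greedy-matching F1 between reference and generated token embeddings."""
    # Recall: each reference token is matched to its best generated token.
    recall = sum(max(cosine(r, g) for g in gen_emb) for r in ref_emb) / len(ref_emb)
    # Precision: each generated token is matched to its best reference token.
    precision = sum(max(cosine(g, r) for r in ref_emb) for g in gen_emb) / len(gen_emb)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def select_best(ref_emb, candidates):
    """Return the label of the candidate with the highest F1 score."""
    return max(candidates, key=lambda name: bertscore_f1(ref_emb, candidates[name]))


# Toy embeddings (assumption): real inputs would be BERT token vectors.
reference = [[1.0, 0.0], [0.0, 1.0]]
candidates = {
    "identical": [[1.0, 0.0], [0.0, 1.0]],
    "partial": [[1.0, 0.0], [1.0, 0.0]],
}

f1_identical = bertscore_f1(reference, candidates["identical"])
f1_partial = bertscore_f1(reference, candidates["partial"])
best = select_best(reference, candidates)
```

In the toy data, the `partial` candidate covers only one of the two reference tokens, so its recall drops to 0.5 while its precision stays at 1.0, which lowers its F1 relative to the `identical` candidate.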

Finally, the dataset was annotated according to predefined entity and relation types using the open-source tool doccano. The definitions of entity and relation types are presented in Table 1 and Table 2, respectively. The design of the entity and relation types presented above was systematically derived from the summarization of typical aerospace requirement documents. These types sufficiently cover the key elements and semantic structures of major requirement texts. The selection of relation verbs adheres to two core principles: generality and standardization. Upon completion of the annotation process, the text data and triplet relationships were embedded into two distinct prompt templates, corresponding to the two designed formats, for the purposes of data augmentation and filtering.

Table 1. Entity types.

Table 2. Relation types.

3.3. Domain-Adaptive Continual Learning

Although LLMs acquire extensive general knowledge through pretraining, they continue to encounter significant challenges in understanding and identifying domain-specific knowledge and critical information within the highly specialized context of aerospace requirement texts [42,43]. One of the primary challenges lies in the limited ability of these models to effectively adapt to the domain-specific expressions and technical terminology commonly found in aerospace requirement documents, thereby impairing the accurate recognition of entity boundaries and semantic relationships. For instance, when processing requirement texts containing the term “control subsystem”, LLMs frequently misidentify it as two separate entities—“control” and “subsystem”—thereby failing to capture its intended meaning as a unified concept. Such errors are particularly prevalent in the processing of aerospace requirement texts and can significantly compromise the accuracy of subsequent RE and KG construction. To address this issue, we propose an efficient fine-tuning approach based on token index encoding to facilitate domain-adaptive continual learning. This approach enhances the model’s capacity to comprehend specialized terminology and logical relationships within aerospace texts, thereby improving its adaptability and overall performance in domain-specific tasks.

Specifically, the requirement texts are first encoded using the tokenizer of the Qwen2.5(7B) LLM, transforming the original text into a sequence of tokens. The entities within the text are subsequently mapped to the token sequence to obtain their corresponding position indices. Drawing inspiration from prompt tuning methods [44], we propose a position-enhanced embedding mechanism, wherein the token position indices of entities are integrated as additional features into the prompt template. By explicitly incorporating both entity information and positional context into the input, the model is guided to more accurately locate and identify entities, thereby enhancing its ability to recognize long and complex nested entities. This approach enables the model to more accurately parse aerospace requirement texts and enhances its understanding of domain-specific terminology and complex entity relationships. The structure of the designed prompt template is illustrated in Figure 4.
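The mapping from an entity mention to token position indices can be sketched as follows. This is an illustrative sketch only: a simple regex tokenizer stands in for the Qwen2.5 tokenizer (a subword tokenizer would yield different indices), and the example sentence, the function names, and the prompt layout are assumptions rather than the template of Figure 4. With Hugging Face fast tokenizers, character offsets can typically be obtained by passing `return_offsets_mapping=True`.

```python
import re


def tokenize_with_offsets(text):
    """Stand-in tokenizer (assumption): words and punctuation with character offsets."""
    return [(m.group(), m.start(), m.end()) for m in re.finditer(r"\w+|[^\w\s]", text)]


def entity_token_indices(text, entity):
    """Return indices of the tokens covered by the first occurrence of `entity`."""
    start = text.find(entity)
    if start == -1:
        return []
    end = start + len(entity)
    tokens = tokenize_with_offsets(text)
    # A token belongs to the entity if its character span overlaps the entity span.
    return [i for i, (_, s, e) in enumerate(tokens) if s < end and e > start]


# Hypothetical requirement sentence used only for illustration.
text = "The control subsystem shall maintain attitude stability."
indices = entity_token_indices(text, "control subsystem")

# Hypothetical prompt layout that carries the entity and its token positions.
prompt = (
    f"Text: {text}\n"
    f"Entity: control subsystem\n"
    f"Token indices: {indices}"
)
```

Because both tokens of "control subsystem" are reported as one contiguous index range, the prompt presents the term as a single entity rather than as two separate words.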

Figure 4. Prompt template based on token index encoding.

Secondly, to enhance continual learning of domain-specific knowledge in the aerospace domain, this study adopts the LoRA fine-tuning approach to efficiently adapt the Qwen2.5(7B) model to domain-specific tasks. The adoption of the LoRA fine-tuning strategy is due to its ability to significantly reduce the number of trainable parameters and computational overhead while maintaining model performance, making it well-suited for the characteristics of small-sample aerospace requirement datasets.

Let the model parameter matrix to be optimized be denoted as $W \in \mathbb{R}^{m \times n}$; the parameter update process during training is expressed as follows:

$$W = W_0 + \Delta W$$

where $W_0$ represents the original weight matrix and $\Delta W$ denotes the weight update matrix. In the LoRA fine-tuning process, the original matrix $W_0$ remains frozen, and only $\Delta W$ is updated. LoRA optimizes $\Delta W$ by applying low-rank decomposition, defined as follows:

$$\Delta W = BA$$

where $B \in \mathbb{R}^{m \times r}$ and $A \in \mathbb{R}^{r \times n}$, with $r \ll \min(m, n)$, in order to reduce computational complexity.

During the forward pass, the output is computed as follows:

$$h = W_0 x + \Delta W x = W_0 x + BAx$$

where $x$ is the input vector and $h$ is the output vector. As shown in the above equations, the LoRA fine-tuning method updates only the low-rank matrices within the LLM. Compared with traditional full-parameter continual pretraining, this approach significantly reduces the tuning scale, thereby lowering both computational cost and GPU memory consumption.

Leveraging the LoRA fine-tuning method, we perform domain-adaptive continual learning using the constructed dataset in conjunction with token index encoding-based prompt templates. This enhances the model’s adaptability to aerospace domain texts and strengthens its ability to comprehend domain-specific knowledge and extract critical information.
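The low-rank update can be illustrated with a small pure-Python sketch. The matrices, the rank, and the 4096 × 4096 layer size below are illustrative assumptions and are not taken from the experimental setup; the function names are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, vector)) for row in matrix]


def lora_forward(w0, b, a, x):
    """Compute h = W0 x + B (A x); W0 stays frozen, only B and A would be trained."""
    base = matvec(w0, x)
    update = matvec(b, matvec(a, x))
    return [base_i + update_i for base_i, update_i in zip(base, update)]


def trainable_params(m, n, r):
    """Return (full fine-tuning parameters, LoRA parameters) for one m x n matrix."""
    return m * n, r * (m + n)


# Toy example (assumption): m = 2, n = 3, rank r = 1.
W0 = [[1.0, 0.0, 0.0],
      [0.0, 1.0, 0.0]]
B = [[0.5],
     [0.0]]               # m x r
A = [[0.0, 0.0, 2.0]]     # r x n
x = [1.0, 2.0, 3.0]

h = lora_forward(W0, B, A, x)

# Hypothetical layer size, chosen only to show the scale of the reduction.
full, lora = trainable_params(4096, 4096, 8)
```

For the hypothetical 4096 × 4096 layer with rank 8, the count drops from 16,777,216 trainable parameters to 65,536, which is the source of the memory savings described above.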

3.4. Dynamic Few-Shot Chain of Thought Reasoning

Although the Qwen2.5(7B) LLM has acquired substantial domain-specific terminology and knowledge from aerospace requirement texts through domain-adaptive continual learning, it still exhibits limitations in reasoning and generating complex entity–relation triples. The primary challenge lies in the absence of an optimal reasoning pathway, which limits the model’s ability to accurately identify entities and their relationships, despite improvements in understanding aerospace terminology, background knowledge, and contextual semantics. Moreover, a single requirement text often involves interactions among multiple entities, such as subsystems and functions, thereby requiring strong reasoning capabilities from the LLM. The model must conduct deep semantic inference to mitigate potential misjudgments and accurately extract complex inter-entity relationships. To address this issue, we introduce a CoT reasoning mechanism that enables the model to perform step-by-step inference for entity recognition, relational reasoning, and ultimately, the accurate generation of structured triples. In addition, considering the input token limitations of LLM, we design a dynamic few-shot strategy that enables the model to adaptively adjust exemplar texts and optimize prompt templates based on input characteristics. This strategy enhances the model’s generalization capabilities across diverse aerospace requirement scenarios and ensures the effective utilization of informative exemplars under resource-constrained conditions.

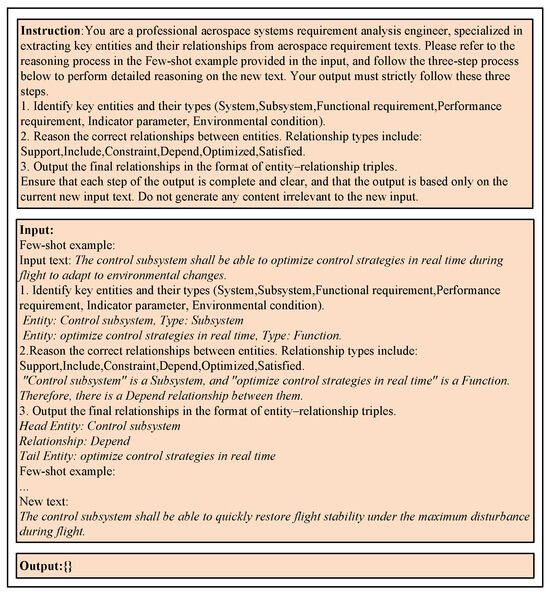

Specifically, we propose a step-by-step CoT reasoning framework to enhance the model’s capabilities in NER and RE tasks, as illustrated by the few-shot example in Figure 5. This framework comprises three core steps: first, the domain-adapted LLM analyzes the requirement text to identify key entities along with their corresponding entity types; second, based on the recognized entities, the model performs relational reasoning to infer the associations between entities and generate appropriate relation labels; and finally, the outputs of entity recognition and relational reasoning are organized into structured entity–relation triples, thereby improving the readability and usability of the extracted information.

Figure 5. Example of dynamic few-shot CoT reasoning prompt.

This CoT-based reasoning approach equips the model with enhanced learning and comprehension capabilities. It not only reduces the likelihood of misjudgments but also significantly improves the model’s generalization capabilities across diverse aerospace requirement scenarios. To mitigate the time cost associated with large-scale manual annotation for multi-step reasoning tasks, we construct a compact reasoning example dataset M, in which ten exemplar instances are annotated for each relation type. These few-shot samples contain complete step-by-step reasoning processes along with accurate final outputs.

Considering the token limitations of LLMs, we propose an example selection method based on the Entity Overlap Degree (EOD). This method first utilizes a pre-trained BERT model to generate an embedding vector $v_x$ for the input text and an embedding vector $v_{e_{ij}}$ for the j-th entity in the i-th reasoning example from the example dataset M. The semantic similarity between the input text and each entity is then computed using cosine similarity, as defined below:

$$\operatorname{sim}(v_x, v_{e_{ij}}) = \frac{v_x \cdot v_{e_{ij}}}{\lVert v_x \rVert \, \lVert v_{e_{ij}} \rVert}$$

Subsequently, we compute the average similarity between the input text and all entities within a given example, which we define as the EOD:

$$\mathrm{EOD}_i = \frac{1}{N_i} \sum_{j=1}^{N_i} \operatorname{sim}(v_x, v_{e_{ij}})$$

where $N_i$ is the number of entities in the i-th example.

Finally, the example with the highest EOD score is selected from the example dataset M and embedded into the prompt template, so that the exemplar most relevant to the input text is used. An example selected using this strategy is illustrated in Figure 5.

This method not only enables dynamic adjustment of few-shot examples based on the input text, allowing the model to select the most relevant examples during the reasoning process and enhance contextual adaptability, but also effectively reduces redundant information and improves input efficiency.
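A minimal sketch of EOD-based selection is given below. The embeddings are toy two-dimensional vectors standing in for BERT outputs, and the example identifiers and function names are hypothetical. The parameter `k` is an assumption added so that the same routine can return the several highest-scoring examples when more than one is placed in the prompt.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def eod(input_vec, entity_vecs):
    """Entity Overlap Degree: mean cosine similarity between input and entity vectors."""
    return sum(cosine(input_vec, e) for e in entity_vecs) / len(entity_vecs)


def select_examples(input_vec, examples, k=1):
    """Return the k examples with the highest EOD, best first."""
    ranked = sorted(examples, key=lambda ex: eod(input_vec, ex["entity_vecs"]), reverse=True)
    return ranked[:k]


# Toy example pool (assumption): each entry holds the embeddings of its entities.
examples = [
    {"id": "ex_telemetry", "entity_vecs": [[1.0, 0.0], [1.0, 1.0]]},
    {"id": "ex_propulsion", "entity_vecs": [[0.0, 1.0], [-1.0, 1.0]]},
]
input_vec = [1.0, 0.0]

chosen = select_examples(input_vec, examples, k=1)[0]
```

Here the first example is chosen because its entity vectors point in nearly the same direction as the input vector, whereas those of the second are orthogonal or opposed to it.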

3.5. KG Generation

The storage and visualization of entities and their relationships constitute essential components for enabling the practical application of requirement KG. Efficient storage technologies not only facilitate the management and rapid retrieval of large-scale requirement text data but also enhance the structural organization of knowledge. In parallel, visualization technologies play a vital role in presenting KG in an intuitive and interpretable manner. By displaying the structure and relationships within the requirement KG via graphical interfaces, system designers are better equipped to comprehend and utilize the knowledge embedded in requirement texts. Neo4j is a graph database built upon a node–relationship data model, making it particularly well-suited for storing and querying complex inter-entity relationships. With its core data structure centered on nodes and edges, Neo4j is ideal for constructing KG, social networks, recommendation engines, and other systems involving highly relational data.

In this study, following the extraction of entities and relationships from requirement texts using the proposed LLM-ACNC method, Neo4j is employed for data storage and visualization. The resulting interactive interface presents the hierarchical structure and inter-entity relationships of the requirement KG, thereby facilitating more efficient requirement identification and offering intelligent support for requirement management, optimization, and decision-making in system engineering.
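One way to write an extracted triple into Neo4j is to generate a parameterized Cypher `MERGE` statement, as sketched below. The node labels and the relation name (`Subsystem`, `DEPENDS_ON`) are hypothetical placeholders rather than the types defined in Table 1 and Table 2, and the helper name is an assumption. Cypher does not accept parameters for labels or relationship types, so they are validated and interpolated, while entity names are passed as parameters.

```python
import re

# Labels and relationship types are interpolated into the query, so restrict them
# to plain identifiers to avoid malformed or injected Cypher.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def triple_to_cypher(head, head_type, relation, tail, tail_type):
    """Build a parameterized MERGE query for one (head, relation, tail) triple."""
    for identifier in (head_type, relation, tail_type):
        if not _IDENTIFIER.match(identifier):
            raise ValueError(f"invalid label or relation type: {identifier!r}")
    query = (
        f"MERGE (h:{head_type} {{name: $head}}) "
        f"MERGE (t:{tail_type} {{name: $tail}}) "
        f"MERGE (h)-[:{relation}]->(t)"
    )
    return query, {"head": head, "tail": tail}


# Hypothetical triple for illustration.
query, params = triple_to_cypher(
    "Telemetry system", "Subsystem", "DEPENDS_ON", "Attitude control subsystem", "Subsystem"
)
```

Using `MERGE` rather than `CREATE` keeps repeated mentions of the same entity from producing duplicate nodes. The resulting pair could then be executed through the official Neo4j Python driver, for example with `session.run(query, params)`.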

4. Experiment and Analysis

In this study, a total of 2284 original requirement data points were collected from requirement documents of various spacecraft system types, including telemetry systems, launch support systems, and attitude control subsystems. Data augmentation was performed based on the data generation method described in Section 3.2, resulting in a final dataset of 7923 unstructured requirement texts. The dataset was split into training and test sets at a ratio of 80:20. The distribution of unstructured requirement texts across subsystems is presented in Table 3. Model fine-tuning was conducted using Python 3.11 in an environment equipped with two 4090D GPUs (24 GB of memory each). The maximum learning rate was set to 1 × 10⁻⁴, and training was performed for up to 10 epochs. In addition, due to the incorporation of few-shot examples in the downstream entity and relation recognition tasks, the maximum token length was set to 1024. To evaluate the performance of the proposed model, recall, precision, and F1-score were adopted as evaluation metrics, followed by a series of performance evaluations and comparative experimental analyses.
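The three metrics can be computed over sets of predicted and gold items, as in the sketch below. It assumes exact-match scoring, in which a predicted entity or triple counts as correct only if it is identical to a gold one; the matching criterion is not stated in the text, and the sample triples and relation names are hypothetical.

```python
def precision_recall_f1(predicted, gold):
    """Exact-match precision, recall, and F1 between predicted and gold items."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical gold and predicted triples for a single requirement text.
gold = {
    ("Telemetry system", "HAS_FUNCTION", "Data decoding"),
    ("Telemetry system", "HAS_FUNCTION", "Status monitoring"),
    ("Telemetry system", "CONSTRAINED_BY", "Data rate"),
    ("Telemetry system", "DEPENDS_ON", "Propulsion system"),
}
predicted = {
    ("Telemetry system", "HAS_FUNCTION", "Data decoding"),
    ("Telemetry system", "CONSTRAINED_BY", "Data rate"),
    ("Telemetry system", "DEPENDS_ON", "Ground control center"),
}

precision, recall, f1 = precision_recall_f1(predicted, gold)
```

Two of the three predictions are correct and two of the four gold triples are recovered, so precision is 2/3, recall is 1/2, and F1 is 4/7.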

Table 3. Number of data samples.

4.1. Evaluation of Model Performance

4.1.1. NER and RE Performance Evaluation

To verify the effectiveness of the proposed method, a set of comparative experiments was conducted to evaluate the performance of different approaches. First, the CasRel model was trained on the constructed original dataset for 50 epochs. CasRel is a cascade binary tagging-based pointer network that incrementally extracts entities and their corresponding relations through a two-stage tagging strategy [45]. In addition, GPT-4 was selected as a baseline comparison model. As one of the most advanced LLMs to date, GPT-4 is capable of generating effective reasoning outputs based on various input prompts. Two GPT-4-based reasoning approaches were implemented, and two generative extraction models, REBEL and KnowGL, were included as further baselines:

GPT-4 (Zero-shot): Performs entity and relation recognition directly based on the model’s pre-trained knowledge, without incorporating any few-shot examples.

GPT-4 (Few-shot): Utilizes the prompt template shown in Figure 5 to perform entity and relation reasoning with few-shot examples.

REBEL [46]: An end-to-end model for generative relation extraction.

KnowGL [47]: An instruction-guided model for triplet generation and entity normalization.

All models were evaluated on the same test dataset, and the corresponding results are presented in Table 4.

Table 4. NER and RE performance evaluation results.

Experimental results indicate that the CasRel model demonstrates relatively poor performance in entity and relation recognition. This is primarily attributed to its pipeline structure, which sequentially identifies the subject entity, predicts the relation type, and subsequently extracts the object entity. Such a sequential approach is prone to error propagation, particularly in requirement texts containing multiple entities and relations, where inaccurate subject recognition may undermine the accuracy of relation prediction. Moreover, in aerospace requirement texts, entity relationships often exhibit strong contextual dependencies. However, CasRel primarily relies on local feature modeling and fails to capture long-range dependencies, thereby reducing its effectiveness in identifying complex relational structures. The GPT-4 (Zero-shot) approach leverages the zero-shot reasoning capabilities of LLM to directly perform information extraction. Although it achieves a certain degree of entity and relation recognition without additional example data, it suffers from limited task adaptability and unstable reasoning performance due to the absence of task-specific guidance. REBEL builds upon CasRel through end-to-end generation; however, its limited reasoning depth and emphasis on local features constrain its effectiveness in capturing long-range entity relations. KnowGL further enhances entity consistency and relation extraction by utilizing instruction-guided prompts and entity normalization; nevertheless, it encounters challenges in domain-specific and fine-grained semantic scenarios due to the limited coverage of underlying knowledge. In contrast, GPT-4 (Few-shot) improves task adaptability by incorporating a small number of examples into the prompt design, thereby effectively reducing the generalization error commonly observed in zero-shot reasoning. 
Even with few-shot examples, however, GPT-4 lacks domain-specific knowledge of the aerospace field, and its performance remains suboptimal when applied to highly specialized requirement texts. By comparison, the proposed method benefits from domain-adaptive knowledge learning and dynamically selects the most relevant examples based on the characteristics of the input text. This results in improved accuracy and stability in both NER and RE tasks. The proposed method significantly outperforms the baseline models in terms of F1-score on both NER and RE tasks, with particularly notable improvements in recall. These findings highlight its effectiveness in mitigating information loss during complex entity recognition and relation reasoning, thereby enabling more robust entity–relation identification.

4.1.2. Evaluation of Domain-Adaptive Continual Learning Performance

To evaluate the impact of token index encoding-based domain-adaptive continual learning on NER and RE performance, we conducted experiments under three different configurations: the first without domain-adaptive continual learning (Without DACL), the second with domain-adaptive continual learning but without token index encoding (DACL-No TIE), and the third being the proposed method. In the configuration without domain-adaptive continual learning, entity and relation recognition are performed directly on the test set without any prior domain-specific adaptation. In the setting without token index encoding, the model undergoes domain-adaptive continual learning; however, the prompt template (as shown in Figure 4) does not incorporate token position information for entities. After training, the model is applied to entity and relation recognition.

The experimental results are presented in Table 5. Domain-adaptive continual learning significantly enhances the model’s adaptability to domain-specific tasks, while the proposed token index encoding-based domain-adaptive continual learning further improves performance on NER and RE tasks within aerospace requirement texts. In the absence of domain-adaptive continual learning, the model achieves F1 scores of 59.77% for NER and 57.62% for RE, indicating that relying solely on the LLM’s general reasoning capabilities is insufficient for accurately identifying domain-specific entities and relations in the aerospace domain. With DACL-No TIE, the model benefits from exposure to aerospace-specific knowledge, achieving an NER F1-score of 80.58% and an RE F1-score of 71.17%. These results demonstrate that domain-adaptive continual learning enhances the model’s ability to recognize domain-specific terminology and relational patterns. However, due to the absence of explicit guidance on entity boundaries, the accuracy of key entity recognition remains limited, resulting in constrained improvements in identifying complex entity structures and performing long-range relation reasoning. In contrast, the proposed method incorporates token index encoding into the prompt template, further optimizing the domain-adaptive training process. It achieves NER and RE F1 scores of 88.75% and 89.48%, respectively, which are 8.17 and 18.31 percentage points higher than those of DACL-No TIE, with substantial improvements in both precision and recall. These findings indicate that the token index encoding approach not only enhances the model’s ability to accurately identify domain-specific terminology but also reduces information loss and improves both entity and relation recall, making it the most effective of the three configurations evaluated.

Table 5. Domain-adaptive continual learning evaluation results.

4.1.3. Evaluation of Dynamic Few-Shot CoT Reasoning

The dynamic few-shot CoT reasoning method proposed in this study provides an efficient approach for few-shot inference, enabling the LLM to perform high-quality NER and RE with limited example support. To evaluate the effectiveness of the proposed approach, a comprehensive comparative analysis was conducted under four different configurations: Fixed Few-shot, Fixed Few-shot + CoT, Dynamic Few-shot, and the proposed method. In the Fixed Few-shot setting, a static set of few-shot examples is provided during inference and remains unchanged regardless of the input text. This configuration excludes the CoT reasoning process (as illustrated in Figure 5), and the model directly generates recognition outputs. Fixed Few-shot + CoT incorporates the CoT reasoning process into the prompt, enabling the model to construct responses step by step through logical inference, while the few-shot examples remain static. Dynamic Few-shot dynamically adjusts the few-shot examples in the prompt based on the input text using the entity overlap degree method proposed in this study. However, the examples do not contain explicit reasoning steps. The recognition performance of all configurations is presented in Table 6.

Table 6. Evaluation results of dynamic few-shot CoT reasoning.

Experimental results demonstrate that the Fixed Few-shot method alone yields limited recognition performance in NER and RE tasks, primarily due to the static nature of the provided examples. This limitation hampers the model’s adaptability to diverse entity and relation structures across varying input texts. In contrast, the Dynamic Few-shot method enables the model to select more relevant examples from the dataset based on the input text, thereby improving its adaptability during entity and relation recognition. However, both methods lack intermediate reasoning logic, resulting in reduced accuracy—particularly in processing texts involving multiple relationships or hierarchical structures, where model performance tends to be unstable. The Fixed Few-shot + CoT method, by incorporating CoT reasoning, significantly enhances recognition performance—particularly in RE tasks—where both precision and recall demonstrate notable gains. These results suggest that the CoT reasoning strategy enhances the model’s coherence and stability when addressing complex tasks. Nevertheless, the use of fixed examples restricts the model’s ability to adapt to varying input characteristics, thereby limiting its effectiveness in domain-specific entity and relation recognition.

In comparison, the proposed method achieves superior performance in both NER and RE tasks, exhibiting significant improvements in precision and recall. These findings indicate that the proposed method not only reduces incorrect predictions but also minimizes information loss, thereby enhancing the model’s robustness in complex entity–relation reasoning scenarios.

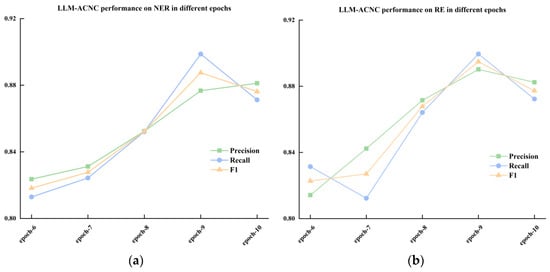

4.2. Fine-Tuning Epoch Settings

In the training of deep learning models, selecting an appropriate number of training epochs is critical to final model performance. Different models require varying numbers of epochs to achieve optimal performance. This consideration is particularly important when fine-tuning LLMs, as the number of training epochs directly influences model effectiveness. Compared to smaller pre-trained models such as BERT, LLMs contain significantly more parameters. Although they typically require fewer epochs for fine-tuning, each epoch incurs significantly higher computational cost, making it essential to balance training efficiency and convergence. To determine the optimal number of training epochs, we evaluated the model on a fixed dataset using varying epoch settings and observed the resulting performance variations.

As shown in Figure 6, when the number of training epochs is too low, the model fails to adequately capture the features of the text data, resulting in suboptimal performance. As the number of epochs increases, the model continues to optimize and its performance steadily improves. However, once the model reaches near-optimal accuracy, further training leads to fluctuations in the F1-score. This is likely attributable to overfitting, which adversely affects the model’s generalization capability.

Figure 6. NER and RE performance at different epochs. (a) LLM-ACNC performance on NER at different epochs; (b) LLM-ACNC performance on RE at different epochs.

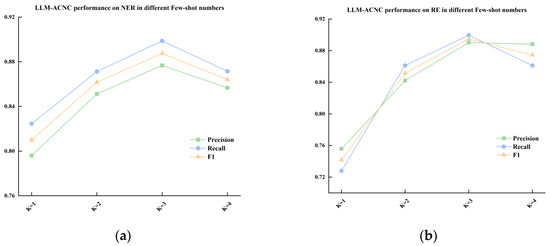

4.3. Few-Shot Example Number Configuration

In dynamic few-shot CoT reasoning, the number of few-shot examples plays a critical role in determining the effectiveness of model inference. A limited number of few-shot examples may prevent the model from adequately learning task-specific patterns, thereby reducing reasoning accuracy. Conversely, an excessive number of examples may introduce information redundancy and exceed token limits, which can negatively affect both reasoning efficiency and output completeness.

To determine an appropriate number of examples, we evaluated different few-shot configurations and analyzed the corresponding performance variations. As shown in Figure 7, with K = 1, the model achieves F1-scores of 81.10% for NER and 74.15% for RE, suggesting that a minimal number of examples is insufficient for effectively learning entity and relation patterns. Increasing the number of examples to K = 2 significantly improves the F1-score to 86.17% (NER) and 85.15% (RE), indicating that a moderate increase in support examples greatly enhances the model’s reasoning performance. Further increasing the number of examples to K = 3 results in F1-score of 88.75% for NER and 89.48% for RE; however, the performance gain becomes marginal. When increased to K = 4, model performance begins to decline, suggesting that an excessive number of few-shot examples may introduce redundancy and impair the model’s learning effectiveness. Additionally, the above analysis indicates that the RE task is more sensitive to the number of support examples than the NER task. Under the K = 1 setting, the RE F1-score is significantly lower than that of NER; however, as K increases, RE exhibits a greater relative improvement. This suggests that RE involves more complex semantic structures, and additional examples help the model better capture inter-entity interactions and relational dependencies.
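The step-by-step gains behind this comparison can be worked out directly from the F1-scores quoted above. The short sketch below uses only those reported values (no figure is given for K = 4, so it is omitted); the helper name is hypothetical.

```python
# F1-scores (%) reported in the text for K = 1, 2, 3 few-shot examples.
f1_scores = {
    "NER": {1: 81.10, 2: 86.17, 3: 88.75},
    "RE": {1: 74.15, 2: 85.15, 3: 89.48},
}


def stepwise_gains(scores):
    """Absolute F1 gain (percentage points) between consecutive K values."""
    ks = sorted(scores)
    return {(a, b): round(scores[b] - scores[a], 2) for a, b in zip(ks, ks[1:])}


gains = {task: stepwise_gains(scores) for task, scores in f1_scores.items()}
total = {task: round(scores[3] - scores[1], 2) for task, scores in f1_scores.items()}
```

From K = 1 to K = 2 the gains are 5.07 points for NER and 11.00 points for RE; from K = 2 to K = 3 they shrink to 2.58 and 4.33 points. Over the whole range, RE improves by 15.33 points against 7.65 for NER, which is the greater sensitivity of RE noted above.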

Figure 7. Model performance with different numbers of few-shot examples. (a) LLM-ACNC performance on NER with different numbers of few-shot examples; (b) LLM-ACNC performance on RE with different numbers of few-shot examples.

4.4. Performance on Real Datasets

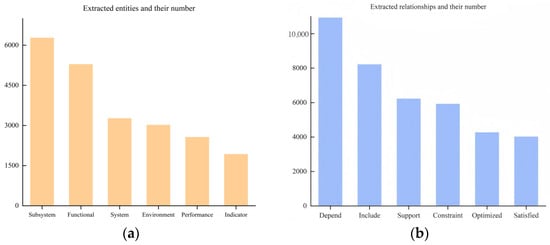

The trained model was employed to extract entities and relationships from the test dataset, with the resulting information stored in the Neo4j graph database. By leveraging its graph modeling capabilities, Neo4j automatically organizes the extracted entities and relationships into a graph format. The extraction results are presented in Figure 8.

Figure 8. The number of extracted entities and relationships. (a) The extracted entities; (b) the highest number of relationships.

Among the identified entity types, subsystem entities constituted the largest proportion, followed by function-type entities, encompassing functions such as Real-time control, Data decoding, and Status monitoring. With respect to relationship types, dependency relationships were the most prevalent, reflecting a high degree of functional coordination between subsystems and their associated functions. For instance, the Telemetry system is closely linked to multiple functional entities and is constrained by various performance indicators, including Communication range, Data rate, and Response time. Moreover, it depends on support from other subsystems—such as the Attitude control subsystem, Propulsion system, and Ground control center—to facilitate flight status data acquisition and coordinated system control. Figure 9 displays a segment of the constructed KG, illustrating several requirement-related entities associated with the Telemetry subsystem. This figure highlights the hierarchical structure and semantic relationships among entities, thereby laying a solid foundation for subsequent graph-based reasoning and system-level design.

Figure 9. A partial KG structure representing aerospace system requirements. Purple nodes represent subsystem types, yellow nodes denote function requirement types, blue nodes correspond to performance requirement types, and red nodes indicate parameter types. The directed edges illustrate various types of relationships among the entities.

5. Case Study

This section explains, through practical examples, how the constructed aerospace requirement KG is applied to requirements engineering to automatically generate structured system requirement items.

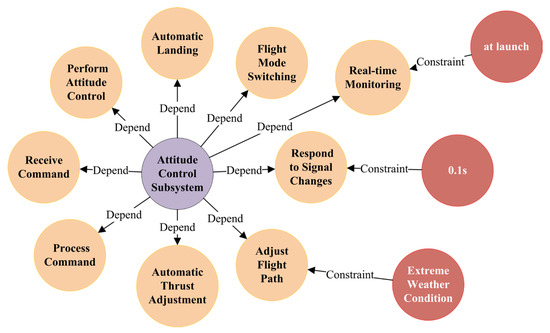

By identifying and constructing a KG from unstructured requirement texts, the textual knowledge embedded in aerospace requirements can be transformed into a graph-structured format for effective representation and management. Figure 10 illustrates a subset of requirement entities and their corresponding relationships associated with the attitude control subsystem. Drawing on the standard terminology of requirements engineering [48,49], we designed a standardized template for aerospace system requirement items, as shown in Table 7. Building on this foundation, a plug-in based on a template-matching strategy was developed. This plug-in automatically selects and populates templates based on the extracted entities and their relationships. Specifically, it first identifies the relation type of the given triple in order to determine the corresponding semantic template. Subsequently, the names and types of the involved entities are extracted, and the most appropriate sentence template is matched from a predefined library, according to the combination of relation type and entity types. Finally, the entity names are dynamically inserted into designated placeholders within the selected template, thereby generating a complete and structured system requirement item, as illustrated in Figure 11.
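The template-matching steps described above can be sketched as a dictionary lookup followed by placeholder substitution. Everything specific in this sketch is an assumption made for illustration: the relation names, entity types, and sentence templates are hypothetical and do not reproduce the template of Table 7 or the plug-in itself.

```python
# Hypothetical template library keyed by (relation type, head type, tail type).
TEMPLATES = {
    ("HAS_FUNCTION", "Subsystem", "Function"):
        "The {head} shall provide the {tail} function.",
    ("DEPENDS_ON", "Subsystem", "Subsystem"):
        "The {head} shall operate with support from the {tail}.",
}


def generate_requirement(triple):
    """Select a template by relation and entity types, then fill in the entity names."""
    head, head_type, relation, tail, tail_type = triple
    template = TEMPLATES.get((relation, head_type, tail_type))
    if template is None:
        return None  # No matching template; leave the triple for manual handling.
    return template.format(head=head, tail=tail)


# Hypothetical triple extracted for the attitude control subsystem.
item = generate_requirement(
    ("attitude control subsystem", "Subsystem", "HAS_FUNCTION", "real-time control", "Function")
)
```

Keying the library on the combination of relation type and entity types means a new requirement pattern can be supported by adding one dictionary entry, without changing the matching logic.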

Figure 10. Partial KG of the attitude control subsystem, where purple nodes represent subsystem-type entities, yellow nodes represent function-type entities, and red nodes represent environmental condition-type entities.

Table 7. System requirement items template.

Figure 11. Attitude control subsystem requirement item examples.

This process significantly enhances the efficiency of converting the KG into actionable system requirements. The generated outputs can be directly utilized as inputs for system design, modeling, and verification, thereby promoting the evolution of requirement engineering toward automation and structured development and offering robust engineering support for the development of aerospace systems.

6. Discussion

This study proposes an automated approach, LLM-ACNC, for constructing aerospace requirement KGs using an LLM. Initially, the learning capabilities of the GPT model are leveraged to augment textual data via carefully designed prompts. BERTScore is subsequently employed to filter the augmented samples, resulting in a high-quality dataset for model training. To enhance the model’s ability to learn and comprehend domain-specific aerospace knowledge, an efficient domain-adaptive continual learning strategy based on token index encoding is proposed. This method enriches prompt inputs with token position information and incorporates the LoRA fine-tuning strategy to efficiently adapt the Qwen2.5(7B) model, thereby improving its understanding of aerospace-related texts. To further strengthen the model’s capacity for extracting key entities and relationships from text, a CoT reasoning framework is constructed for the inference stage, along with a dynamic few-shot learning strategy. This strategy allows the model to adaptively adjust exemplar texts and optimize the prompt templates according to the characteristics of various input requirements, thereby enhancing the model’s stability and accuracy in NER and RE tasks. As a result, an aerospace requirement knowledge graph is successfully constructed. To evaluate the effectiveness of the proposed approach, a series of comparative experiments were conducted. Experimental results demonstrate that the proposed method effectively extracts entities and relationships from aerospace requirement texts, achieving F1 scores of 88.75% in NER and 89.48% in RE.

Future work will focus on fully exploiting the potential of LLMs in the context of aerospace requirement engineering. In particular, efforts will be directed towards integrating domain knowledge embeddings and dynamic prompt generation techniques to improve the model’s performance under extreme zero-shot conditions. The proposed approach will also be validated on larger and more diverse aerospace requirements datasets to comprehensively evaluate its generalization capabilities and scalability across various systems and application domains.

Author Contributions

Y.L.: methodology, software, writing—original draft, validation; J.H.: writing—review and editing, supervision; Y.C.: writing—review and editing, funding acquisition; J.J.: writing—review and editing; W.W.: writing—review and editing, investigation. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Civil Aerospace Project (D020101) of the China State Administration of Science, Technology and Industry for National Defense.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available because they involve sensitive information related to confidential aspects of the research project.

Acknowledgments

During the preparation of this manuscript, the authors used ChatGPT (GPT-4, OpenAI) for the purpose of requirement data augmentation. Specifically, the tool was employed to generate synthetic aerospace requirement texts based on a set of collected examples, guided by two specially designed prompts. The authors have reviewed and edited the generated content and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| KG | Knowledge Graph |
| SysML | Systems Modeling Language |
| NER | Named Entity Recognition |
| RE | Relation Extraction |
| NLP | Natural Language Processing |
| LLM | Large Language Model |
| CoT | Chain of Thought |
| LoRA | Low-Rank Adaptation of Large Language Models |
| LLM-ACNC | Large Language Model with Augmented Construction, Continual Learning, and Chain-of-Thought Reasoning |

References

- INCOSE. INCOSE Systems Engineering Handbook; John Wiley & Sons: Hoboken, NJ, USA, 2023.

- Jia, J.; Zhang, Y.; Saad, M. An approach to capturing and reusing tacit design knowledge using relational learning for knowledge graphs. Adv. Eng. Inform. 2022, 51, 101505.

- Liu, Q.; Wang, K.; Li, Y.; Liu, Y. Data-driven concept network for inspiring designers’ idea generation. J. Comput. Inf. Sci. Eng. 2020, 20, 031004.

- AlDhafer, O.; Ahmad, I.; Mahmood, S. An end-to-end deep learning system for requirements classification using recurrent neural networks. Inf. Softw. Technol. 2022, 147, 106877.

- Gao, Q.; Zhao, C.; Sun, Y.; Xi, T.; Zhang, G.; Ghanem, B.; Zhang, J. A unified continual learning framework with general parameter-efficient tuning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 11483–11493.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S. PaLM: Scaling language modeling with pathways. J. Mach. Learn. Res. 2023, 24, 11324–11436.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.-A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F. LLaMA: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

- Roumeliotis, K.I.; Tselikas, N.D. ChatGPT and Open-AI models: A preliminary review. Future Internet 2023, 15, 192.

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S. Llama 2: Open foundation and fine-tuned chat models. arXiv 2023, arXiv:2307.09288.

- Wei, J.; Tay, Y.; Bommasani, R.; Raffel, C.; Zoph, B.; Borgeaud, S.; Yogatama, D.; Bosma, M.; Zhou, D.; Metzler, D. Emergent abilities of large language models. arXiv 2022, arXiv:2206.07682.

- Li, Q.; Chen, Z.; Ji, C.; Jiang, S.; Li, J. LLM-based multi-level knowledge generation for few-shot knowledge graph completion. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, Jeju, Republic of Korea, 3–9 August 2024.

- Lan, J.; Li, J.; Wang, B.; Liu, M.; Wu, D.; Wang, S.; Qin, B. NLP-AKG: Few-Shot Construction of NLP Academic Knowledge Graph Based on LLM. arXiv 2025, arXiv:2502.14192.

- Zhang, J.; Wei, B.; Qi, S.; Liu, J.; Lin, Q. GKG-LLM: A Unified Framework for Generalized Knowledge Graph Construction. arXiv 2025, arXiv:2503.11227.

- Tian, S.; Luo, Y.; Xu, T.; Yuan, C.; Jiang, H.; Wei, C.; Wang, X. KG-adapter: Enabling knowledge graph integration in large language models through parameter-efficient fine-tuning. In Proceedings of the Findings of the Association for Computational Linguistics ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 3813–3828.

- Sun, L.; Zhang, P.; Gao, F.; An, Y.; Li, Z.; Zhao, Y. SF-GPT: A training-free method to enhance capabilities for knowledge graph construction in LLMs. Neurocomputing 2025, 613, 128726.

- Chaudhry, A.; Dokania, P.K.; Ajanthan, T.; Torr, P.H. Riemannian walk for incremental learning: Understanding forgetting and intransigence. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 532–547.

- Kirkpatrick, J.; Pascanu, R.; Rabinowitz, N.; Veness, J.; Desjardins, G.; Rusu, A.A.; Milan, K.; Quan, J.; Ramalho, T.; Grabska-Barwinska, A. Overcoming catastrophic forgetting in neural networks. Proc. Natl. Acad. Sci. USA 2017, 114, 3521–3526.

- Li, Z.; Hoiem, D. Learning without forgetting. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2935–2947.

- Zhang, J.; Zhang, J.; Ghosh, S.; Li, D.; Tasci, S.; Heck, L.; Zhang, H.; Kuo, C.-C.J. Class-incremental learning via deep model consolidation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 1131–1140.

- Chen, T.; Goodfellow, I.; Shlens, J. Net2Net: Accelerating learning via knowledge transfer. arXiv 2015, arXiv:1511.05641.

- Isele, D.; Cosgun, A. Selective experience replay for lifelong learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Qin, C.; Joty, S. LFPT5: A unified framework for lifelong few-shot language learning based on prompt tuning of T5. arXiv 2021, arXiv:2110.07298.

- Razdaibiedina, A.; Mao, Y.; Hou, R.; Khabsa, M.; Lewis, M.; Almahairi, A. Progressive prompts: Continual learning for language models. arXiv 2023, arXiv:2301.12314.

- Madotto, A.; Lin, Z.; Zhou, Z.; Moon, S.; Crook, P.; Liu, B.; Yu, Z.; Cho, E.; Wang, Z. Continual learning in task-oriented dialogue systems. arXiv 2020, arXiv:2012.15504.

- Ke, Z.; Shao, Y.; Lin, H.; Konishi, T.; Kim, G.; Liu, B. Continual pre-training of language models. arXiv 2023, arXiv:2302.03241.

- Qin, Y.; Zhang, J.; Lin, Y.; Liu, Z.; Li, P.; Sun, M.; Zhou, J. ELLE: Efficient lifelong pre-training for emerging data. arXiv 2022, arXiv:2203.06311.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

- Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large language models are zero-shot reasoners. Adv. Neural Inf. Process. Syst. 2022, 35, 22199–22213.

- Wang, X.; Wei, J.; Schuurmans, D.; Le, Q.; Chi, E.; Narang, S.; Chowdhery, A.; Zhou, D. Self-consistency improves chain of thought reasoning in language models. arXiv 2022, arXiv:2203.11171.

- Zhang, Z.; Zhang, A.; Li, M.; Smola, A. Automatic chain of thought prompting in large language models. arXiv 2022, arXiv:2210.03493.

- Fu, Y.; Peng, H.; Sabharwal, A.; Clark, P.; Khot, T. Complexity-based prompting for multi-step reasoning. arXiv 2022, arXiv:2210.00720.

- Xu, X.; Tao, C.; Shen, T.; Xu, C.; Xu, H.; Long, G.; Lou, J.-G. Re-reading improves reasoning in language models. arXiv 2023, arXiv:2309.06275.

- Wang, L.; Xu, W.; Lan, Y.; Hu, Z.; Lan, Y.; Lee, R.K.-W.; Lim, E.-P. Plan-and-solve prompting: Improving zero-shot chain-of-thought reasoning by large language models. arXiv 2023, arXiv:2305.04091.

- Norheim, J.J.; Rebentisch, E.; Xiao, D.; Draeger, L.; Kerbrat, A.; de Weck, O.L. Challenges in applying large language models to requirements engineering tasks. Des. Sci. 2024, 10, e16.

- Ronanki, K. Enhancing Requirements Engineering Practices Using Large Language Models. Licentiate Thesis, University of Gothenburg, Gothenburg, Sweden, 2024.

- Vogelsang, A.; Fischbach, J. Using large language models for natural language processing tasks in requirements engineering: A systematic guideline. In Handbook on Natural Language Processing for Requirements Engineering; Springer: Berlin/Heidelberg, Germany, 2025; pp. 435–456.

- Tikayat Ray, A.; Pinon-Fischer, O.J.; Mavris, D.N.; White, R.T.; Cole, B.F. aeroBERT-NER: Named-Entity Recognition for Aerospace Requirements Engineering Using BERT. In Proceedings of the AIAA SciTech 2023 Forum, National Harbor, MD, USA, 23–27 January 2023; p. 2583.

- Tikayat Ray, A.; Pinon Fischer, O.J.; White, R.T.; Cole, B.F.; Mavris, D.N. Development of a language model for named-entity-recognition in aerospace requirements. J. Aerosp. Inf. Syst. 2024, 21, 489–499.

- Zheng, Z.; Liu, M.; Weng, Z. A Chinese BERT-based dual-channel named entity recognition method for solid rocket engines. Electronics 2023, 12, 752.

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating text generation with BERT. arXiv 2019, arXiv:1904.09675.

- Song, C.; Han, X.; Zeng, Z.; Li, K.; Chen, C.; Liu, Z.; Sun, M.; Yang, T. ConPET: Continual parameter-efficient tuning for large language models. arXiv 2023, arXiv:2309.14763.

- Wu, T.; Luo, L.; Li, Y.-F.; Pan, S.; Vu, T.-T.; Haffari, G. Continual learning for large language models: A survey. arXiv 2024, arXiv:2402.01364.

- Ding, N.; Chen, Y.; Han, X.; Xu, G.; Xie, P.; Zheng, H.-T.; Liu, Z.; Li, J.; Kim, H.-G. Prompt-learning for fine-grained entity typing. arXiv 2021, arXiv:2108.10604.

- Wei, Z.; Su, J.; Wang, Y.; Tian, Y.; Chang, Y. A novel cascade binary tagging framework for relational triple extraction. arXiv 2019, arXiv:1909.03227.

- Cabot, P.-L.H.; Navigli, R. REBEL: Relation extraction by end-to-end language generation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2021, Punta Cana, Dominican Republic, 16–20 November 2021; pp. 2370–2381.

- Rossiello, G.; Chowdhury, M.F.M.; Mihindukulasooriya, N.; Cornec, O.; Gliozzo, A.M. KnowGL: Knowledge generation and linking from text. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 16476–16478.

- Laplante, P.A.; Kassab, M. Requirements Engineering for Software and Systems; Auerbach Publications: Boca Raton, FL, USA, 2022.

- Rolls-Royce, P. EARS (Easy Approach to Requirements Syntax). In Proceedings of the 17th IEEE International Requirements Engineering Conference, Atlanta, GA, USA, 31 August–4 September 2009.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).