1. Introduction

With the continuous development of modern technology, precision guidance has become an indispensable core technology, widely applied in systems such as unmanned aerial vehicles (UAVs) and air defense. However, traditional precision guidance methods face significant challenges against low, slow, and small (LSS) UAVs. As an emerging threat, LSS UAVs are characterized by low flight altitude, low speed, and a small radar cross-section (RCS). Existing defense systems are costly and poorly suited to engagements against LSS UAVs. It is therefore necessary to develop a low-cost, low-overload defender capable of intercepting LSS UAVs effectively.

Successful interception requires a small miss distance and a relatively uniform distribution of overload along the trajectory. Given the defender's kill radius, the required miss distance is 5 m. Because the defender's maneuverability is limited, the overload must be planned over the entire trajectory, which further complicates the interception problem. Proportional navigation guidance (PNG) [1] has become one of the most widely used guidance schemes owing to its simple computation and broad applicability. However, PNG tends to concentrate the overload demand in the terminal phase, so for interceptors with limited available overload, interception accuracy drops significantly when the target maneuvers. Compared with PNG, augmented proportional navigation guidance (APNG) [2] compensates for target maneuvers, which reduces the terminal overload to a certain extent. For a defender with low overload capacity, however, the overload commanded by these traditional methods is still too concentrated and unevenly distributed, which may limit interception effectiveness.

The development of modern control theory has opened new avenues for designing guidance laws to intercept maneuvering UAVs. Optimal guidance laws [3], sliding mode control-based guidance laws [4,5], and similar approaches have attracted significant attention from researchers. Asher et al. [6] were the first to introduce optimal control theory into the interception of maneuvering targets and analytically derived the optimal closed-loop guidance law for a missile with limited bandwidth engaging a maneuvering target. Dun et al. [7] applied optimal control theory with energy management considerations, deriving an optimal guidance law for high-speed and maneuvering targets. Kim et al. [8] used optimal control concepts to provide a unified formulation of the optimal collision course law for targets with arbitrary acceleration or deceleration, ensuring optimal guidance commands even in nonlinear engagement scenarios. In [9], Shima et al. adopted sliding mode control as the primary approach to guidance law design for interception and developed a guidance law capable of handling all engagement geometries; however, it could not guarantee finite-time convergence of the error. Zhang et al. [5] proposed an optimal sliding mode guidance law in which an adaptive sliding mode switching term addresses prediction error and actuator saturation, effectively reducing both the interceptor's energy consumption and its terminal acceleration commands. Zheng et al. [10] presented an adaptive sliding mode guidance law that overcomes the large initial control input and chattering of traditional sliding mode guidance. However, guidance laws based on modern control theory often require more measured information. In practice, limited sensor observability makes many states difficult to obtain, and partial observability remains an open challenge for modern control methods.

With the development of artificial intelligence, reinforcement learning (RL) [11] and deep reinforcement learning (DRL) [12] have become core data-driven paradigms for solving complex decision optimization and control problems. They have already been widely applied in fields such as gaming [13], robotics [14,15], and autonomous driving [16]. RL is based on the Markov decision process (MDP), in which an agent interacts with the environment to continuously learn an optimal policy. In DRL, the core idea is to approximate the policy and value function with neural networks, while techniques such as experience replay [17] and target networks improve learning efficiency and stability. By combining the advantages of deep learning and reinforcement learning [18], DRL can handle high-dimensional state spaces and continuous action spaces [19]. To overcome the limitations of traditional guidance methods, RL and DRL algorithms have also been used to design guidance laws. Hu et al. [20] proposed a second-order sliding mode guidance law with a terminal impact angle constraint by integrating the twin-delayed deep deterministic policy gradient (TD3) [21] framework with nonsingular terminal sliding mode (NTSM) control, effectively mitigating the chattering inherent in sliding mode control. Gaudet et al. [22] applied reinforcement meta-learning (Meta-RL) to intercept maneuvering targets in outer space, generating guidance commands using only the line-of-sight (LOS) angle and the LOS angular rate as observations. Compared with traditional RL, an agent trained with Meta-RL generalizes better to uncertain scenarios. The authors also extended this method to applications such as lunar landing [23] and asteroid exploration, demonstrating the feasibility of RL in practical applications [24,25]. Qiu et al. [26] proposed a recorded recurrent twin delayed deep deterministic (RRTD3) policy gradient algorithm for intercepting maneuvering targets in the atmosphere. This approach addresses uncertainty and observation noise by modeling the engagement as a partially observable Markov decision process (POMDP), and it incorporates a recurrent layer into the policy network to improve training speed and stability [27].

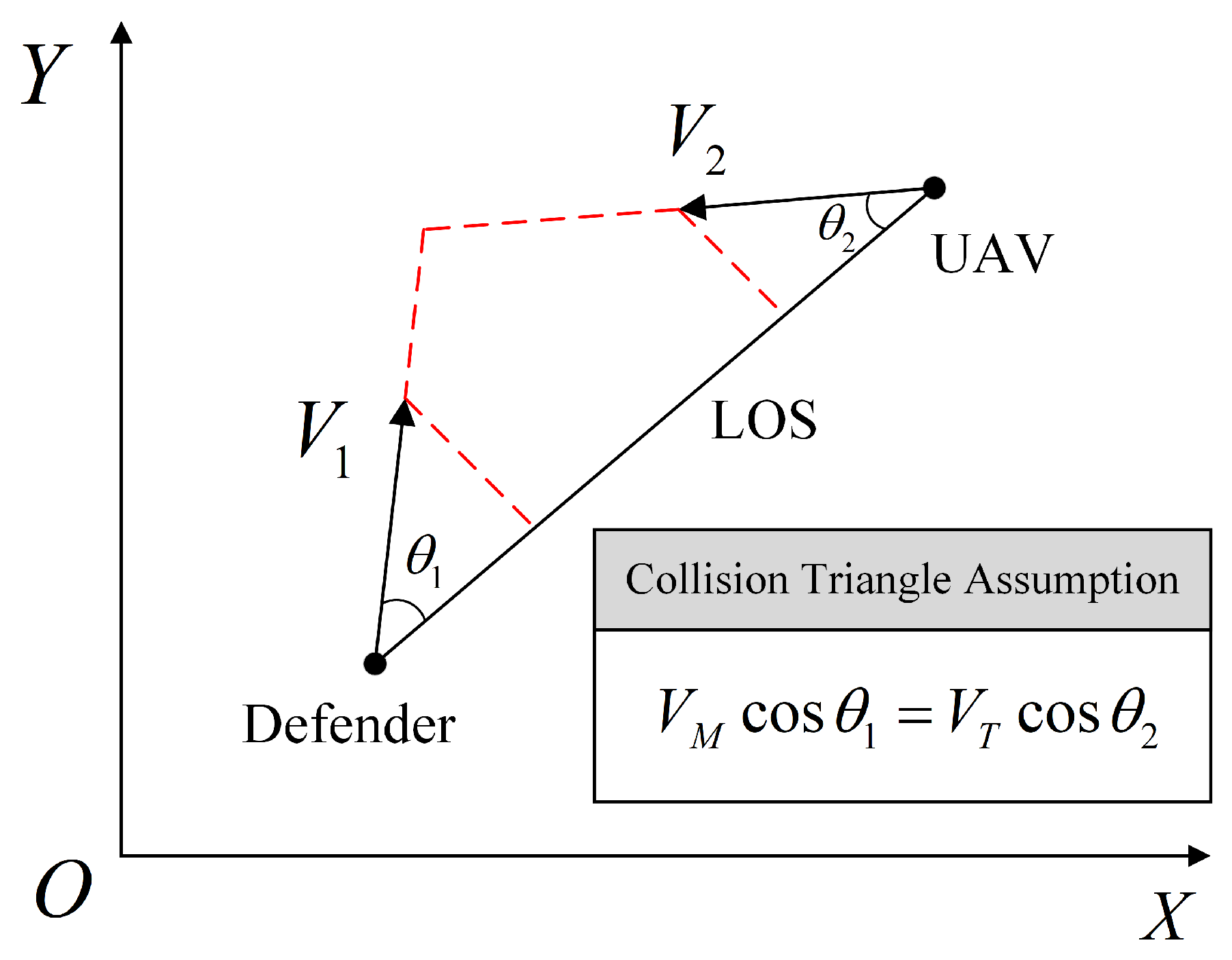

Despite the significant progress of DRL in guidance law design, current research broadly relies on simplifying assumptions. Existing works typically reduce the engagement to a two-dimensional plane and give the defender an ideal initial launch angle by presetting a collision triangle [28], as shown in Figure 1. This idealized setup accelerates convergence by shrinking the state space and avoiding the need to correct large initial heading errors, but it sacrifices model fidelity. Moreover, to ensure rapid response, radar is typically not used to provide initial launch angles for low-overload defenders, which invalidates this assumption in our study. Our work therefore addresses the core challenge that arises when these idealized constraints are removed: the expanded exploration space of a three-dimensional environment without prior guidance information, which makes training with existing DRL methods inefficient or even prevents convergence.

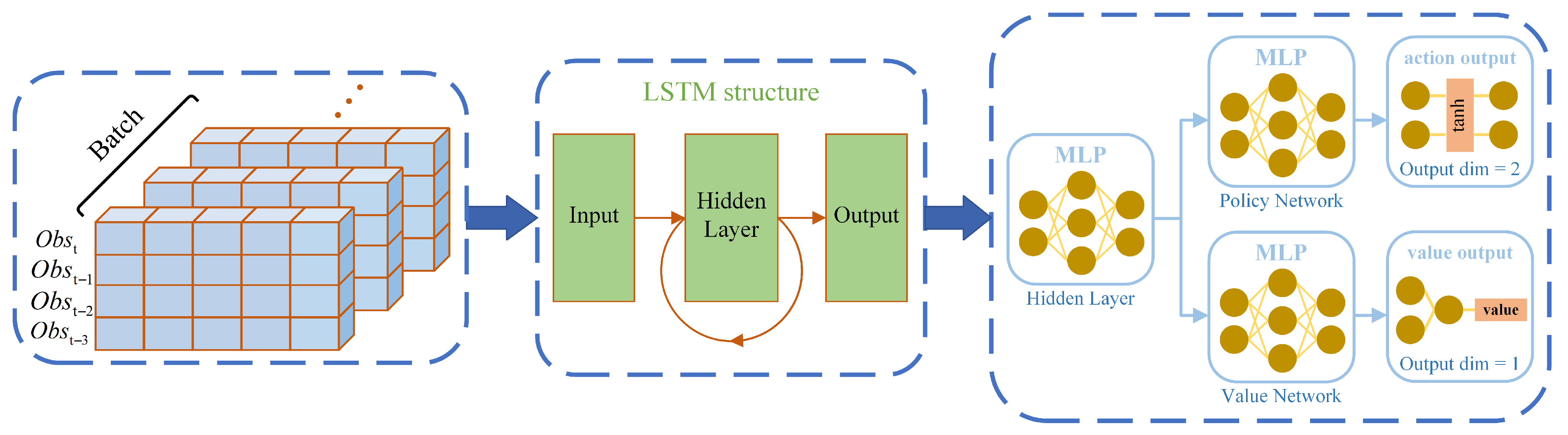

In this work, we propose a novel recurrent proximal policy optimization (RPPO) guidance law framework, designed to solve the challenge of high-precision interception of LSS UAVs by a low-overload defender with limited observations. The main contributions of our work are as follows:

The RPPO guidance law framework for intercepting LSS UAVs is proposed, which leverages a recurrent neural network to extract temporal information from the observation sequence and address the limitations of traditional DRL algorithms in partially observable scenarios.

A three-dimensional guidance law modeling method is proposed, which formulates the interception problem as a POMDP and introduces a random launch angle mechanism that does not rely on a collision triangle, thereby broadening the admissible launch conditions.

A novel reward function is proposed, which uses UAV velocity prediction to accelerate convergence and incorporates overload distribution constraints to improve interception accuracy, thereby alleviating the sparse reward problem and improving training performance.

The remainder of this paper is organized as follows. Section 2 constructs the engagement scenario and briefly reviews the reinforcement learning framework and the PPO algorithm. Section 3 introduces the proposed RPPO algorithm framework and its implementation details in the engagement scenario. Section 4 presents simulation experiments that verify the performance of the proposed method. Section 5 presents the conclusions.

2. Problem Formulation

This section introduces the relative motion equations between the defender and the UAV in three-dimensional space, as well as specific details of the training environment. We first state the following widely accepted assumptions [29,30]:

Assumption 1: The entire engagement is modeled as a head-on scenario, in which the defender is launched toward the UAV so that the two close on each other. This is consistent with practical interception problems, in which the defender engages the UAV head-on [31].

Assumption 2: The influence of gravity is neglected throughout the guidance process. Neglecting gravity is common practice during the initial development of new guidance laws [32].

Assumption 3: The speeds of both the defender and the UAV are assumed to be constant. This assumption is justified because the terminal guidance phase is relatively short.

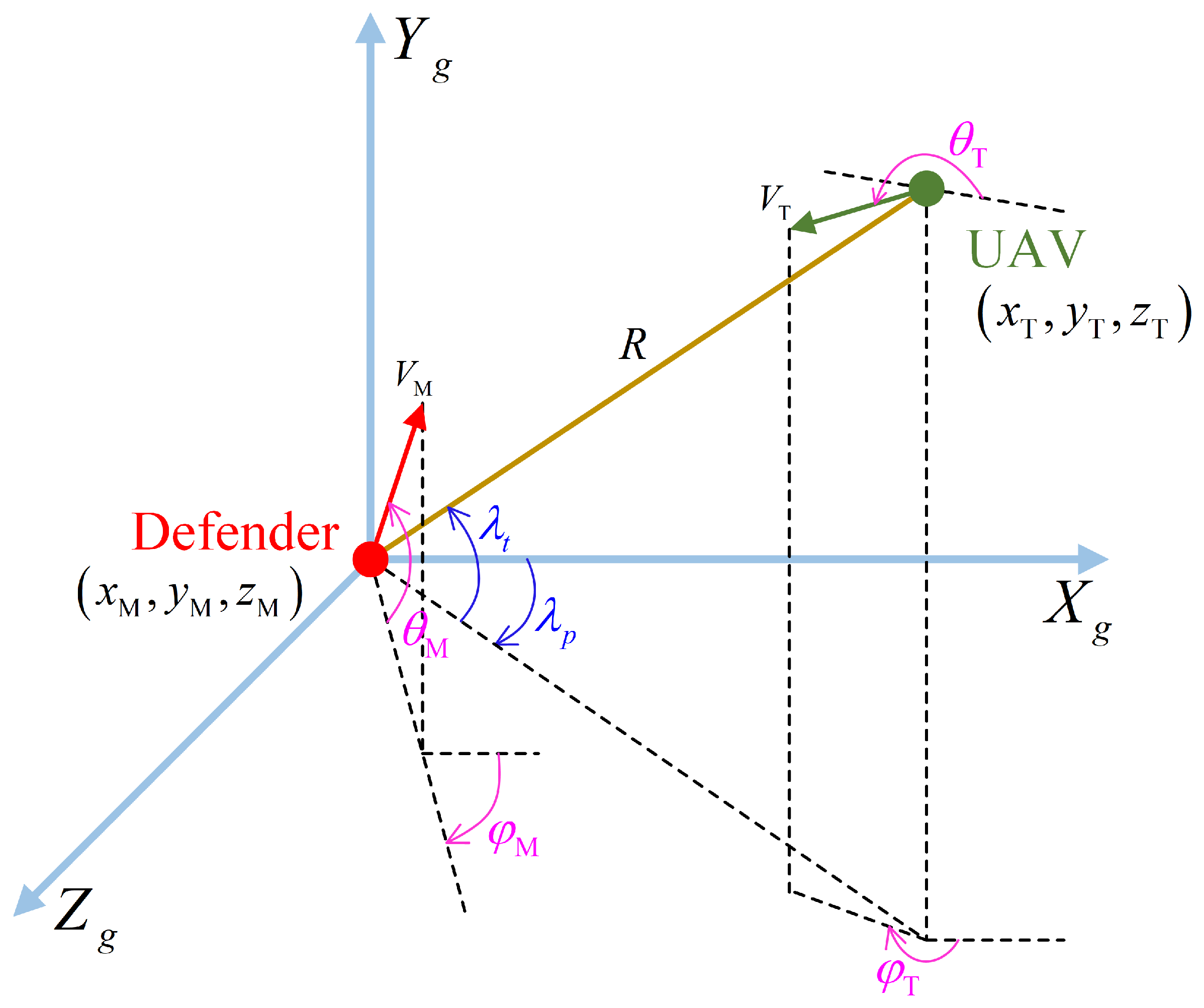

In this study, the three-dimensional engagement scenario is depicted in Figure 2. M represents the defender launched from the origin of the coordinate system, while T represents the UAV approaching from a distance. The mission of M is to intercept T.

2.1. Equations of Engagement

A three-dimensional spatial coordinate system

is established as shown in

Figure 2, with the defender being located at the origin. The defender position vector and UAV position vector are represented as

,

, and the relative distance is represented as

R. The position vectors are defined as

, and

. The velocity vectors of the defender and the UAV are represented by

and

, respectively. The position vectors are defined as

, and

. The angles between the velocity vectors

,

and the

plane are

and

, respectively, where the upward direction is positive. The angles between the projections of

,

on the

plane and the

-axis are

and

, respectively, where the direction is defined as positive for a clockwise rotation from the positive

-axis to the positive

-axis. The normal acceleration of the defender is represented as

. The LOS angles between the defender and the UAV are defined as

and

, with the positive direction of the angles being specified in

Figure 2. They can be calculated using Equations (1) and (2).

According to the definition, the Euler angles between the LOS coordinate frame and the inertial coordinate frame are

and

. The direction cosine matrix (DCM) from the inertial coordinate system to the LOS coordinate system can be expressed as follows:

The relative relationship between the defender and the UAV can be calculated using Equation (4).
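The LOS geometry above can be illustrated with a short Python sketch that computes the range, the two LOS angles, and their rates from the defender and UAV states. It assumes a Z-up inertial frame, an elevation angle measured from the XY plane, and an azimuth measured from the +X axis toward +Y; these conventions and the function name `los_kinematics` are hypothetical and may not match the sign conventions of Figure 2 or Equations (1) to (4).

```python
import math


def los_kinematics(r_m, v_m, r_t, v_t):
    """Range, LOS angles, and their rates for defender M and UAV T.

    Hypothetical conventions: Z-up inertial frame, elevation q_eps measured
    from the XY plane, azimuth q_beta measured from +X toward +Y.
    """
    # Relative position and velocity of the UAV with respect to the defender
    dx, dy, dz = (t - m for t, m in zip(r_t, r_m))
    dvx, dvy, dvz = (t - m for t, m in zip(v_t, v_m))
    rng = math.sqrt(dx * dx + dy * dy + dz * dz)
    q_eps = math.atan2(dz, math.hypot(dx, dy))   # LOS elevation angle
    q_beta = math.atan2(dy, dx)                  # LOS azimuth angle

    ce, se = math.cos(q_eps), math.sin(q_eps)
    cb, sb = math.cos(q_beta), math.sin(q_beta)
    # Rows of the DCM from the inertial frame to the LOS frame: unit vectors
    # along the LOS, toward increasing elevation, and toward increasing azimuth
    dcm = [
        [ce * cb, ce * sb, se],
        [-se * cb, -se * sb, ce],
        [-sb, cb, 0.0],
    ]
    v_los = [row[0] * dvx + row[1] * dvy + row[2] * dvz for row in dcm]

    r_dot = v_los[0]                     # negative while the two are closing
    q_eps_dot = v_los[1] / rng
    q_beta_dot = v_los[2] / (rng * ce)   # singular when the LOS is vertical
    return rng, q_eps, q_beta, r_dot, q_eps_dot, q_beta_dot
```

Rotating the relative velocity into the LOS frame separates the range rate (first component) from the elevation and azimuth LOS rates (second and third components divided by R and R cos q_ε, respectively).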

In this work, we focus on the relative relationship between the defender and the UAV rather than on absolute states; this relative formulation is vital for the algorithm to generalize to a wider range of scenarios.

2.2. Engagement Scenario

We provide sufficient samples for DRL training by randomly initializing the environment, with all environment parameters selected randomly according to Table 1. The environment ensures that, at initialization, the defender and the UAV are in a head-on engagement, with no constraint on the defender's initial launch angle. This setup reflects real-world conditions, in which it is difficult for the defender to form a collision triangle with the UAV at launch: doing so typically requires knowledge of the UAV's current velocity, that is, cueing information from radar. We instead consider it reasonable to launch the defender within a bounded angular range roughly toward the UAV.

Without loss of generality, we assume that the UAV moves in a straight line at constant velocity, with only its initial position and velocity direction randomized. For the defender, the acceleration is orthogonal to its velocity vector, and its magnitude is provided by the RPPO-trained policy network. The feasible region of this command is bounded by the maximum overload of the defender.
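A minimal Python sketch of the command constraint described above, assuming the policy output is a raw 3-D acceleration vector (a hypothetical interface): the component along the velocity is removed so that the speed stays constant, and the magnitude is saturated at the maximum overload. The function name, the `n_max` parameter, and G = 9.81 m/s² are illustrative assumptions.

```python
import math

G = 9.81  # m/s^2, assumed value used to convert overload (in g) to acceleration


def constrain_command(v_m, a_cmd, n_max):
    """Project a raw acceleration command onto the plane normal to the defender
    velocity and saturate its magnitude at n_max * G.

    v_m, a_cmd: 3-vectors; n_max: maximum overload in g (hypothetical parameter).
    """
    speed_sq = sum(c * c for c in v_m)
    along = sum(a * v for a, v in zip(a_cmd, v_m)) / speed_sq
    # Remove the component parallel to the velocity so the speed is unchanged
    a_perp = [a - along * v for a, v in zip(a_cmd, v_m)]
    mag = math.sqrt(sum(c * c for c in a_perp))
    limit = n_max * G
    if mag > limit:
        # Scale down to the overload limit while keeping the direction
        a_perp = [c * limit / mag for c in a_perp]
    return a_perp
```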

During training, we define the following termination conditions:

The relative distance R falls within the killing distance of the defender, which indicates a successful interception of the UAV.

The relative distance R increases at timestep t, indicating that the defender is moving away from the UAV; the interception has failed.

The interception time exceeds the maximum allowed interception time, which also counts as a failed interception.
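The three termination conditions can be checked as in the following Python sketch. The 5 m kill radius follows the miss-distance requirement stated in the Introduction, while `t_max` = 30 s and all names are placeholders. In a discrete-time simulation the closest approach usually falls between steps, so a fuller implementation would also interpolate the miss distance when the range rate changes sign; that refinement is omitted.

```python
from enum import Enum


class Outcome(Enum):
    RUNNING = 0
    HIT = 1        # relative distance within the kill radius
    RECEDING = 2   # relative distance increasing: defender moving away
    TIMEOUT = 3    # maximum interception time exceeded


def check_termination(rng, r_dot, t, kill_radius=5.0, t_max=30.0):
    """Return the episode outcome; t_max is a placeholder value."""
    # A hit is checked first so that the final closing step still counts
    if rng <= kill_radius:
        return Outcome.HIT
    if r_dot > 0.0:
        return Outcome.RECEDING
    if t > t_max:
        return Outcome.TIMEOUT
    return Outcome.RUNNING
```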

2.3. RL Framework and PPO Algorithm

DRL achieves decision optimization through interactive learning between the agent and the environment. Its core paradigm can be described as an MDP, represented by the five-tuple $(S, A, P, r, \gamma)$, where $S$ denotes the state space, $A$ the action space, $P$ the state transition probability, $r$ the immediate reward, and $\gamma$ the reward discount factor [33]. As shown in Figure 3, the agent generates an action based on its current observation and sends it to the environment. The environment then uses the action and the current state to generate the next state and a scalar reward signal. The reward and the observation of the next state are passed back to the agent, and this process repeats until the environment signals termination.

In a standard MDP, the agent has access to the full state. In guidance law design, however, hardware sensor limitations allow the defender to observe only part of the information, so not all environment states can be treated as observable. The problem thus becomes a partially observable Markov decision process, which is the focus of this paper.
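To make the agent-environment loop and the effect of partial observability concrete, here is a hypothetical Python toy, not the paper's engagement model. The environment keeps a hidden drift, and the agent sees only the gap. A memoryless policy cannot cancel the hidden drift, whereas a policy that remembers its previous observation and action can infer it. All class and function names are illustrative, and the memory is hand-coded here; it is only loosely analogous to what a recurrent layer is meant to learn.

```python
class HiddenDriftEnv:
    """Hypothetical 1-D POMDP toy: the full state is (gap, drift), but the
    agent observes only the gap; the drift stays hidden."""

    def __init__(self, drift, start_gap=100.0):
        self.drift = drift
        self.start_gap = start_gap

    def reset(self):
        self.gap = self.start_gap
        return self.gap                      # partial observation: gap only

    def step(self, action):
        # The transition uses the action and the full (partly hidden) state
        self.gap = self.gap + self.drift - action
        reward = -abs(self.gap) / self.start_gap
        done = abs(self.gap) < 1.0
        return self.gap, reward, done


def run_episode(env, policy, max_steps=500):
    """Act on the observation, receive the next observation and a scalar
    reward, and repeat until termination or the step limit."""
    obs, total, done, steps = env.reset(), 0.0, False, 0
    while not done and steps < max_steps:
        action = policy(obs)
        obs, reward, done = env.step(action)
        total += reward
        steps += 1
    return total, steps, done


def memoryless_policy(obs):
    return obs                               # try to close the observed gap at once


class MemoryPolicy:
    """Infers the hidden drift from consecutive observations and its last action."""

    def __init__(self):
        self.prev_obs = None
        self.prev_action = None

    def __call__(self, obs):
        drift_hat = 0.0
        if self.prev_obs is not None:
            # gap_t = gap_{t-1} + drift - action_{t-1}  =>  solve for drift
            drift_hat = obs - self.prev_obs + self.prev_action
        action = obs + drift_hat
        self.prev_obs, self.prev_action = obs, action
        return action
```

With a drift of 1.5, the memoryless policy stalls at a gap equal to the drift until the step limit, while the memory-based policy terminates after two steps.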

Proximal policy optimization (PPO) [34] is an on-policy policy gradient algorithm that has been widely applied in reinforcement learning. PPO offers excellent stability and efficiency on high-dimensional, complex problems. It improves on traditional policy gradient methods by addressing both the instability caused by large policy updates and high computational complexity. The process of the PPO algorithm is illustrated in Figure 4. The remainder of this subsection explains its underlying principles and update method.

In PPO, the agent's policy is optimized with the policy gradient method, and the updates are guided by an objective function built on the trust-region concept, which prevents drastic changes to the policy. PPO uses a technique known as clipping to limit the magnitude of each policy update, ensuring that the new policy does not deviate too far from the current one and preventing instability caused by large adjustments. Specifically, the objective function of PPO is

$$L^{\mathrm{CLIP}}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\hat{A}_t,\ \operatorname{clip}\left(r_t(\theta), 1-\epsilon, 1+\epsilon\right)\hat{A}_t\right)\right]$$

where $r_t(\theta)$ denotes the ratio between the current policy and the old policy, $\hat{\mathbb{E}}_t$ denotes the empirical expectation over the trajectory, $\hat{A}_t$ is the advantage function, which reflects the relative quality of taking action $a_t$ in state $s_t$, and $\epsilon$ is a small clipping hyperparameter, set to 0.2 in our implementation.

The advantage function evaluates the relative benefit of a particular action with respect to the current policy. It is defined as

$$\hat{A}_t = R_t - V(s_t)$$

where $R_t$ represents the cumulative return from time step $t$ until the end of the episode, and $V(s_t)$ is the value function of state $s_t$. By assessing the quality of actions, the advantage function guides the algorithm to update the policy in the most advantageous direction.
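The rewards-to-go and the advantage described above (the return minus the state value) can be computed backward over an episode, as in this Python sketch. It implements exactly that Monte Carlo definition; many PPO implementations use generalized advantage estimation (GAE) instead, and this sketch makes no claim about which variant RPPO uses. Function names are illustrative.

```python
def rewards_to_go(rewards, gamma):
    """Discounted return R_t = r_t + gamma * R_{t+1}, computed backward from the episode end."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def advantages(rewards, values, gamma):
    """Monte Carlo advantage estimate A_t = R_t - V(s_t)."""
    return [ret - v for ret, v in zip(rewards_to_go(rewards, gamma), values)]
```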

In PPO, $r_t(\theta)$ is the ratio between the current and the old policy, defined as

$$r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\mathrm{old}}}(a_t \mid s_t)}$$

where $\pi_\theta(a_t \mid s_t)$ is the probability of taking action $a_t$ in state $s_t$ under the current policy, and $\pi_{\theta_{\mathrm{old}}}(a_t \mid s_t)$ is the corresponding probability under the old policy. This ratio quantifies the difference between the current and old policies and serves as the basis for the policy update.
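Combining the probability ratio with the advantage gives the clipped surrogate objective. The Python sketch below evaluates it from log-probabilities, which is how the ratio is commonly computed for numerical stability; the function name is hypothetical, and `eps` = 0.2 mirrors the value stated above.

```python
import math


def ppo_clip_objective(new_logp, old_logp, adv, eps=0.2):
    """Sample mean of min(r_t * A_t, clip(r_t, 1 - eps, 1 + eps) * A_t)."""
    total = 0.0
    for lp_new, lp_old, a in zip(new_logp, old_logp, adv):
        ratio = math.exp(lp_new - lp_old)               # r_t(theta)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        total += min(ratio * a, clipped * a)            # pessimistic (lower) bound
    return total / len(adv)                             # empirical expectation
```

Taking the minimum means a large ratio cannot inflate the objective when the advantage is positive, and a small ratio cannot hide a negative advantage, which is what keeps each update close to the old policy.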

The procedure of the PPO method is outlined in Algorithm 1.

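Algorithm 1 PPO algorithm

Input: initial policy parameters $\theta_0$, initial value function parameters $\phi_0$

1: for k = 0, 1, 2, … do

2: Collect a set of trajectories $\mathcal{D}_k = \{\tau_i\}$ by running policy $\pi_k = \pi(\theta_k)$ in the environment

3: Compute rewards-to-go $\hat{R}_t$

4: Compute advantage estimates $\hat{A}_t$ based on the current value function $V_{\phi_k}$

5: Update the policy by maximizing the PPO objective:

$$\theta_{k+1} = \arg\max_\theta \frac{1}{|\mathcal{D}_k| T} \sum_{\tau \in \mathcal{D}_k} \sum_{t=0}^{T} \min\left(\frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_k}(a_t \mid s_t)} \hat{A}_t,\ \operatorname{clip}\left(\frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_k}(a_t \mid s_t)}, 1-\epsilon, 1+\epsilon\right)\hat{A}_t\right)$$

typically via stochastic gradient ascent with Adam

6: Fit the value function by regression on the mean-squared error:

$$\phi_{k+1} = \arg\min_\phi \frac{1}{|\mathcal{D}_k| T} \sum_{\tau \in \mathcal{D}_k} \sum_{t=0}^{T} \left(V_\phi(s_t) - \hat{R}_t\right)^2$$

typically via a gradient descent algorithm

7: end for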
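Algorithm 1 can be exercised end to end on a deliberately tiny problem. The Python sketch below is a hypothetical illustration, not the RPPO implementation: it runs the PPO steps on a one-step, two-armed bandit (arm 1 pays 1, arm 0 pays 0) with a tabular softmax policy and a scalar value baseline, and it uses plain gradient ascent with a hand-derived gradient instead of Adam and automatic differentiation. All names and hyperparameter values are illustrative.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ppo_bandit(iterations=30, batch=64, epochs=10, lr=0.5, eps=0.2, seed=0):
    """PPO-clip (Algorithm 1) on a hypothetical one-step, two-armed bandit.

    The policy is a softmax over two logits (theta); the value function is a
    single scalar v (phi), because every episode starts in the same state.
    """
    rng = random.Random(seed)
    theta = [0.0, 0.0]
    v = 0.0
    for _ in range(iterations):
        # Step 2: collect one-step trajectories with the current policy pi_k
        probs_old = softmax(theta)
        actions = [0 if rng.random() < probs_old[0] else 1 for _ in range(batch)]
        # Step 3: rewards-to-go (one-step episodes, so the return is the reward)
        returns = [float(a) for a in actions]
        # Step 4: advantage estimates against the current value function
        adv = [ret - v for ret in returns]
        # Step 5: several epochs of gradient ascent on the clipped objective
        for _ in range(epochs):
            probs = softmax(theta)
            grad = [0.0, 0.0]
            for a, adv_t in zip(actions, adv):
                ratio = probs[a] / probs_old[a]
                # Once the ratio leaves [1 - eps, 1 + eps] in the direction the
                # advantage favors, the clipped term is active and has zero gradient
                if (adv_t > 0 and ratio > 1 + eps) or (adv_t < 0 and ratio < 1 - eps):
                    continue
                for j in range(2):
                    dlogp = (1.0 if j == a else 0.0) - probs[j]  # d log pi(a) / d theta_j
                    grad[j] += adv_t * ratio * dlogp / batch
            theta = [t + lr * g for t, g in zip(theta, grad)]
        # Step 6: fit v by minimizing the mean-squared error (closed form: mean return)
        v = sum(returns) / batch
    return softmax(theta), v
```

Running `ppo_bandit()` should concentrate almost all probability on arm 1; the clip check keeps each iteration's change in action probability near the ±20% band set by `eps`.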

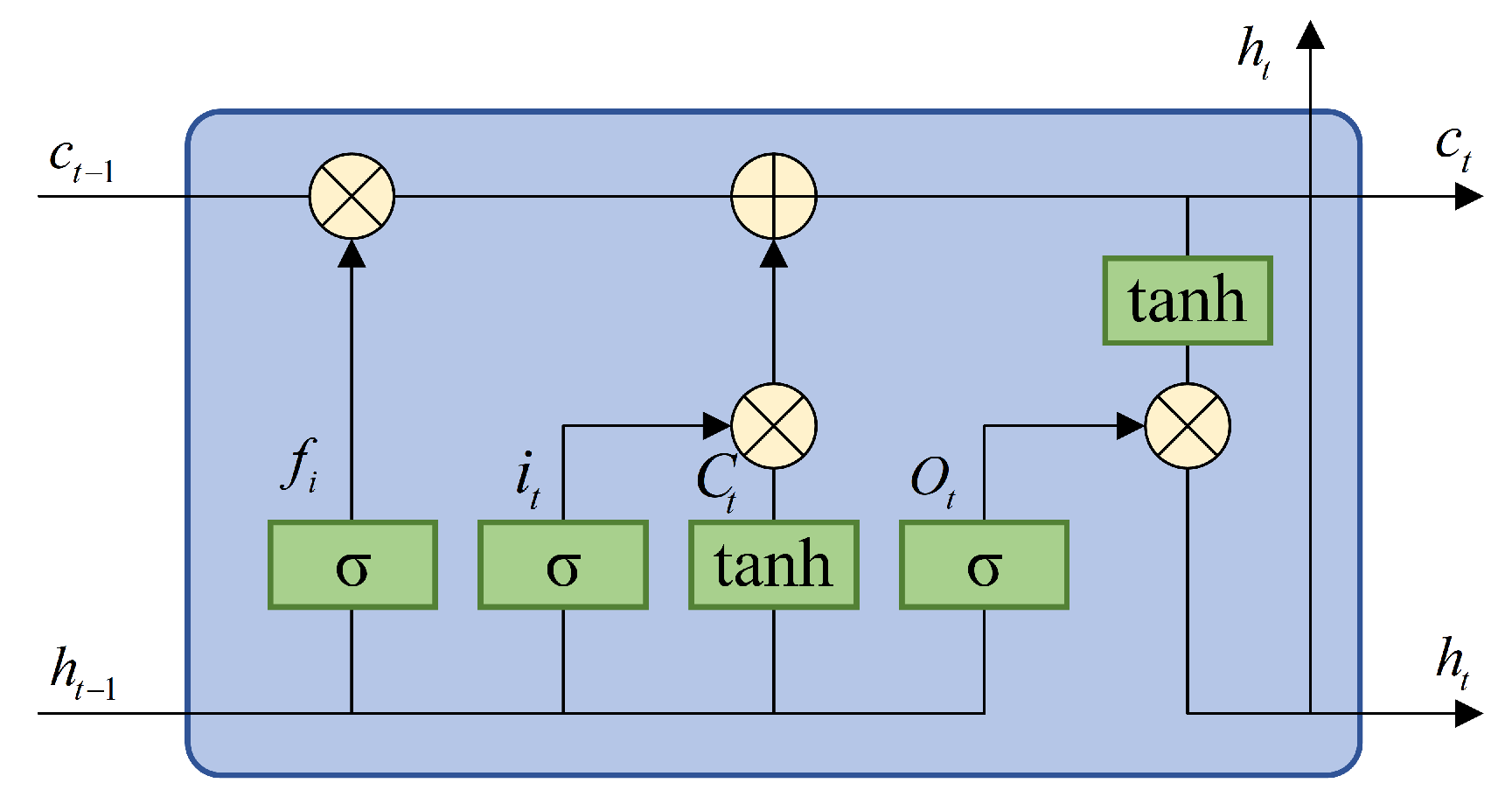

2.4. Long Short-Term Memory Network

Due to the inherent characteristics of POMDP problems, directly applying the PPO algorithm to generate guidance strategies may result in poor performance or even failure to converge. To address this issue, we incorporate a recurrent layer into the algorithm to extract hidden information from the observation sequences.

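Long short-term memory (LSTM) [35] is a class of recurrent neural networks with memory capabilities designed to handle sequential data; its structure is illustrated in Figure 5. LSTM uses hidden states to capture temporal information in sequential data. Its core is the memory cell state, whose gating mechanism determines which information is retained, updated, or discarded. This mechanism comprises the forget gate $f_t$, the input gate $i_t$, and the output gate $o_t$. The basic formulas are as follows:

$$f_t = \sigma\left(W_f [h_{t-1}, x_t] + b_f\right)$$

$$i_t = \sigma\left(W_i [h_{t-1}, x_t] + b_i\right)$$

$$\tilde{c}_t = \tanh\left(W_c [h_{t-1}, x_t] + b_c\right)$$

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

$$o_t = \sigma\left(W_o [h_{t-1}, x_t] + b_o\right)$$

$$h_t = o_t \odot \tanh(c_t)$$

where $W_f, W_i, W_c, W_o$ and $b_f, b_i, b_c, b_o$ are the weight matrices and bias vectors of the LSTM network, $\sigma$ is the sigmoid function, and $\odot$ denotes element-wise multiplication.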
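A single LSTM time step following the standard gate equations (forget, input, and output gates plus a candidate cell state) can be written in dependency-free Python as below. It is an illustrative sketch: the hypothetical `params` layout maps each gate to a weight matrix acting on the concatenation of the previous hidden state and the current input, and a practical RPPO implementation would use a deep learning library's LSTM layer instead.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(w, v):
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; params maps gate names 'f', 'i', 'c', 'o' to (W, b),
    where each W acts on the concatenation [h_prev, x]."""
    z = list(h_prev) + list(x)

    def gate(name, act):
        w, b = params[name]
        return [act(s + bi) for s, bi in zip(matvec(w, z), b)]

    f = gate("f", sigmoid)          # forget gate
    i = gate("i", sigmoid)          # input gate
    c_tilde = gate("c", math.tanh)  # candidate cell state
    o = gate("o", sigmoid)          # output gate
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, c_tilde)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c
```

Calling `lstm_step` repeatedly over an observation sequence, feeding back `h` and `c`, yields a hidden state that summarizes the history; in a recurrent actor–critic, that hidden state would feed the policy and value heads.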

4. Numerical Simulation

4.1. Training Process

As described in Section 2.2, the initial states of both the defender and the UAV are randomly initialized during training so that the trained strategy generalizes across conditions. A fourth-order Runge–Kutta integrator is employed in the experiment, with its step size chosen according to the defender's detection frequency. The value of m in Equation (9) is set to 8. Table 3 lists all the hyperparameters used in the training process.
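The fourth-order Runge–Kutta integration mentioned above can be sketched in Python as follows. The step function is the classical RK4 scheme; the planar turning point-mass model and any step size used with it are hypothetical stand-ins, chosen because a purely normal (turning) acceleration should leave the speed unchanged, which gives an easy accuracy check.

```python
import math


def rk4_step(f, t, y, h):
    """One classical fourth-order Runge-Kutta step for dy/dt = f(t, y); y is a list of floats."""
    k1 = f(t, y)
    k2 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k1)])
    k3 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k2)])
    k4 = f(t + h, [yi + h * ki for yi, ki in zip(y, k3)])
    return [yi + h / 6 * (a + 2 * b + 2 * c + d)
            for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]


def turning_point_mass(omega):
    """Hypothetical planar defender model: acceleration always normal to the
    velocity (constant turn rate omega), so the speed should stay constant."""
    def deriv(t, s):
        x, y, vx, vy = s
        return [vx, vy, -omega * vy, omega * vx]
    return deriv
```

For example, integrating `turning_point_mass(0.2)` from a 200 m/s initial velocity with a 0.01 s step for 10 s keeps the speed at 200 m/s to well within 1e-6 m/s.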

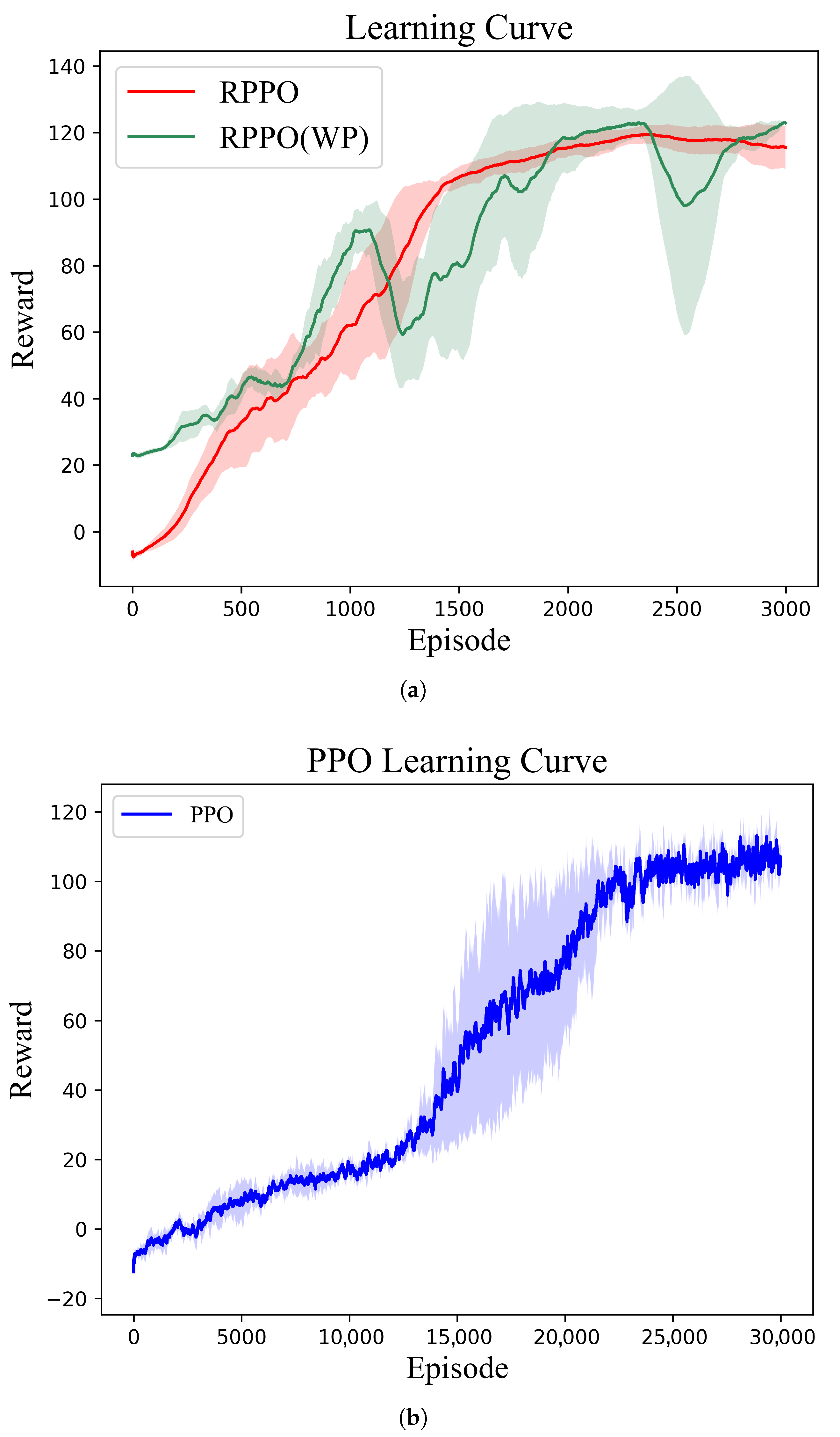

The proposed RPPO guidance law was trained in the randomized scenario described above; the learning curve over 3000 training episodes is shown in Figure 9a. During the first 1500 episodes, the average reward of RPPO increased steadily; afterward it rose more slowly and stabilized, indicating that the trained policy network had converged.

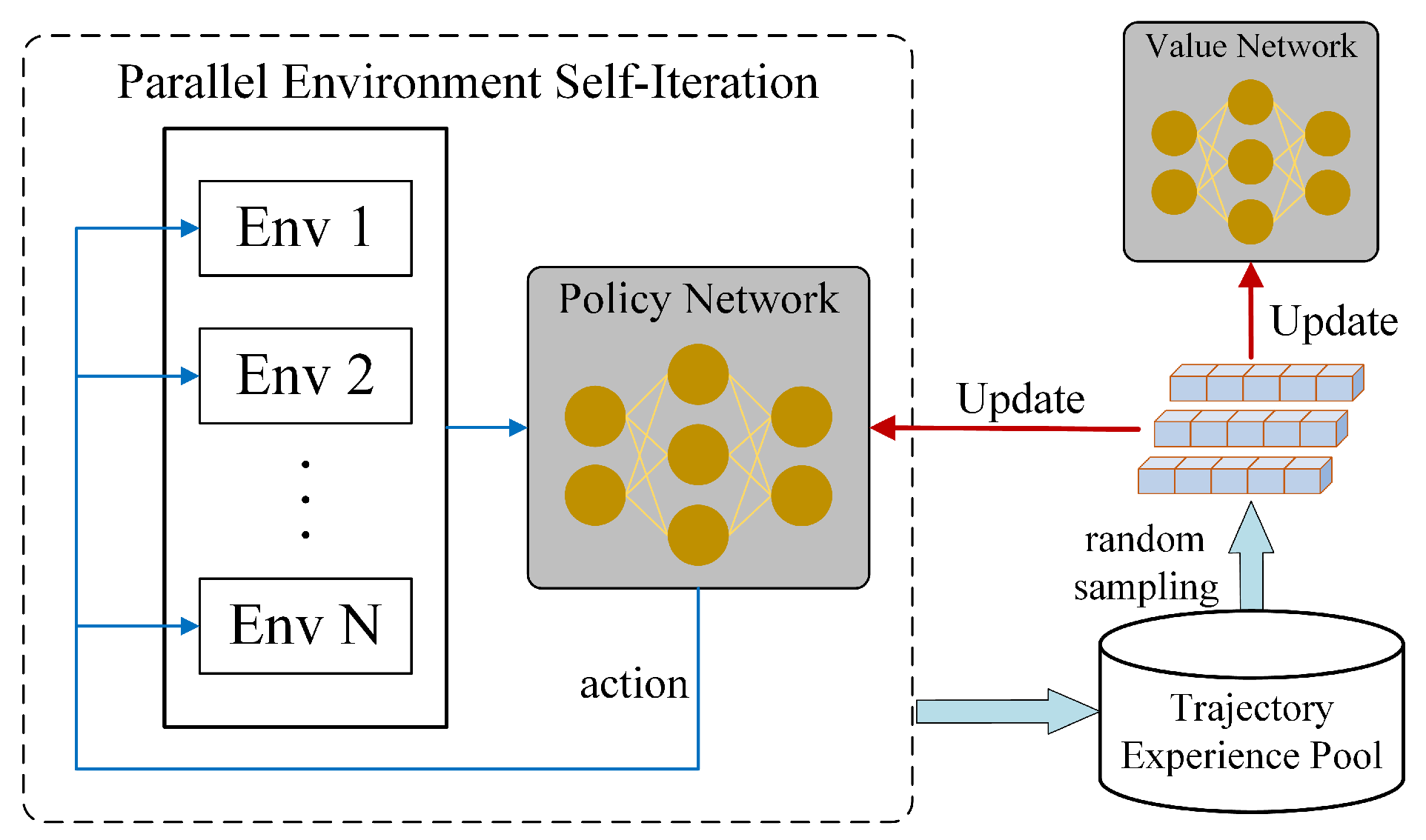

For comparison, we also trained the classical PPO algorithm; its learning curve is shown in Figure 9b. Classical PPO learns inefficiently in this complex environment: it takes approximately 25,000 episodes to converge to a suboptimal solution, and its reward curve fails to rise further toward the maximum. In contrast, the proposed RPPO method uses a multi-environment parallel computing framework that iterates simultaneously in diverse environments, improving data utilization and accelerating training. Moreover, incorporating a recurrent network into the actor–critic architecture mitigates the impact of partial observability, enabling the agent to learn a superior strategy.

We further conducted an ablation test on the predictive reward term. As shown in Figure 9a, when the predictive information is removed from the reward while all other conditions remain unchanged, the reward curve fluctuates more and converges more slowly. This highlights the crucial role of the predictive term in the reward design: it guides the network to converge rapidly and learn a good strategy despite sparse rewards.

In conclusion, the proposed RPPO algorithm framework accelerates training, alleviates the negative effects of partial observability, and improves the stability of the training process.

4.2. Tests in the Training Scenario

To verify the performance of the proposed RPPO-based guidance law, we conducted the following simulation tests on the trained model.

We first tested the model in the environment introduced in Section 2 and compared it with the APNG law given in Equation (19), which uses an estimate of the defender acceleration.
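For reference, the textbook single-channel form of APNG is a = N·V_c·q̇ + (N/2)·a_T, where N is the navigation ratio, V_c the closing speed, q̇ the LOS rate, and a_T the target acceleration normal to the LOS. The Python sketch below implements that textbook form with saturation at the defender's maximum overload. It is an assumption-labeled illustration that may differ from Equation (19); the function name, `nav_ratio`, and G = 9.81 m/s² are illustrative. In this textbook form, the augmented term vanishes for a constant-velocity UAV, so the law reduces to PNG in the training scenario.

```python
import math

G = 9.81  # m/s^2, assumed


def apng_command(nav_ratio, closing_speed, los_rate, target_accel_normal, n_max):
    """Textbook APNG in one guidance channel, a = N*Vc*q_dot + (N/2)*a_T,
    saturated at the defender's maximum overload n_max (in g)."""
    a = nav_ratio * closing_speed * los_rate + 0.5 * nav_ratio * target_accel_normal
    limit = n_max * G
    return max(-limit, min(limit, a))
```

In three dimensions the same expression would typically be applied separately in the elevation and azimuth channels.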

We conducted 1000 Monte Carlo simulations for each method; the results are presented in Table 4. The proposed RPPO guidance law framework achieves an interception rate of 95.3%, with an average miss distance of 1.2935 m and a miss distance variance of 0.5493 m². It outperforms the classical APNG law, and under limited observability and maneuverability it still achieves a high interception rate, demonstrating its practical engineering significance.

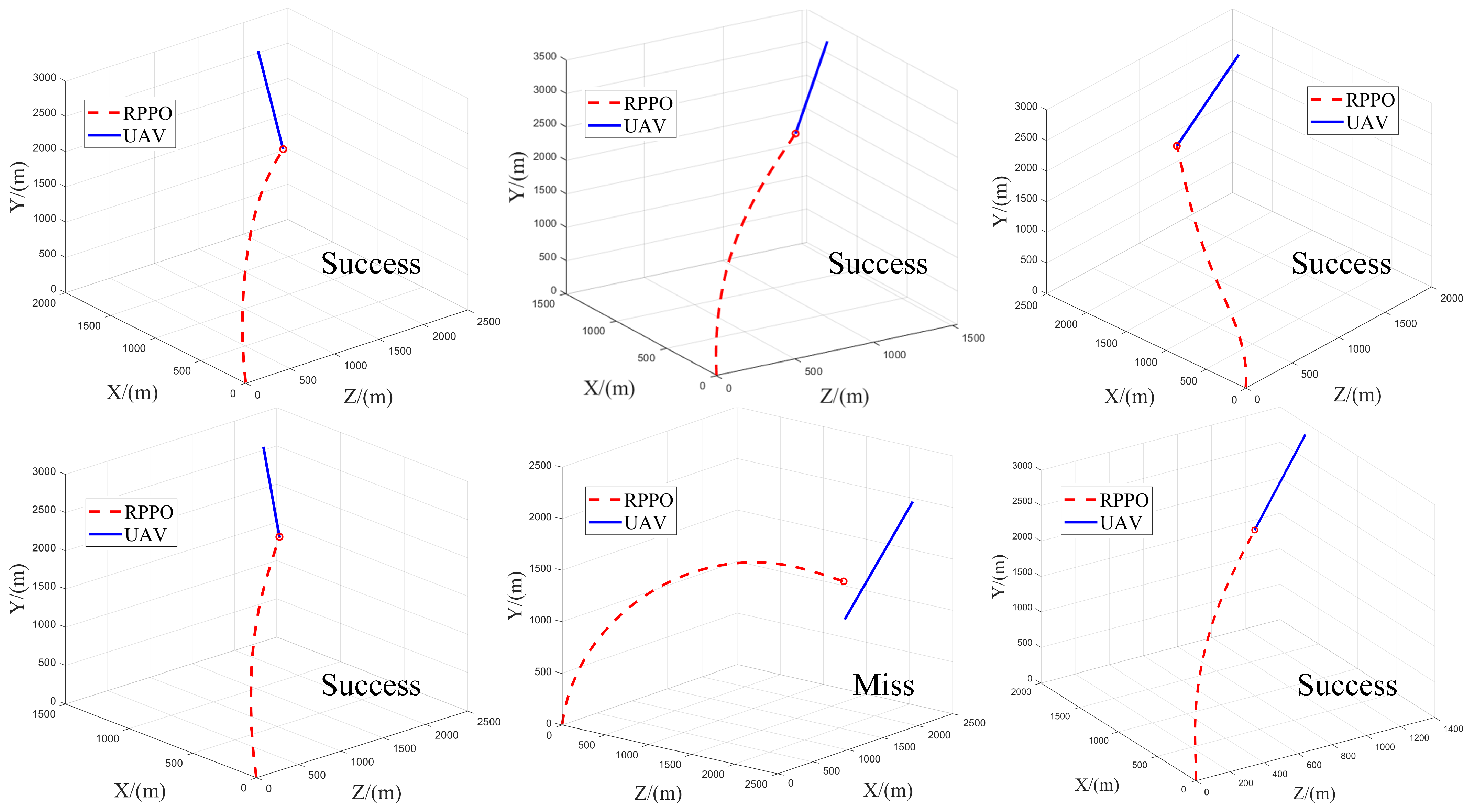

We visualized a portion of the trajectories, as shown in Figure 10. The visualization shows that the proposed RPPO guidance law framework effectively confines the overload to the early phase of the trajectory. If the initial launch angle deviation between the defender and the UAV is so large that the required maneuver exceeds the defender's maneuverability, the defender cannot intercept the UAV; in all other scenarios, the defender intercepts the UAV effectively through maneuvering.

Figure 11 illustrates the engagement scenario of the defender and the UAV, including the flight paths of both the defender and the UAV, as well as the defender’s normal acceleration curves. In this scenario, the initial distance

, and the LOS angle

,

. The RPPO and APNG methods both successfully intercept the UAV, with miss distances of

and

, respectively.

Figure 11a,b shows that the guidance law based on the RPPO framework effectively shapes the trajectory overload distribution. It concentrates the overload in the initial phase, leaving sufficient margin for subsequent maneuvers. In contrast, traditional guidance laws concentrate the overload in the terminal phase, where the required overload can far exceed what is available, easily resulting in a miss. This demonstrates the effectiveness and necessity of the RPPO guidance law framework design.

4.3. Generalization to Unseen Scenarios

The above tests demonstrate the advantages of the proposed RPPO guidance law framework in the training scenarios. This section evaluates how well the trained policy adapts to new scenarios, that is, its generalization capability. During training, we only considered a UAV moving at constant velocity. To assess the defender's interception rate when the UAV's maneuvering mode changes, a new maneuvering mode is assigned to the UAV, as shown in Equation (20).

Here,

is defined as the unit step size, so that the UAV performs a sinusoidal maneuver along the z-axis. We again conducted 1000 Monte Carlo simulations; the results are shown in Table 5. They indicate that even in previously unseen scenarios, the policy trained with RPPO generalizes well, achieving higher interception accuracy than traditional guidance law algorithms.
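As a hedged illustration of a z-axis sinusoidal maneuver, the Python sketch below assumes a simple profile, vz(t) = vz0 + A·sin(ωt), with the X and Y velocities held constant, and integrates it in closed form. Equation (20) may use a different form; the amplitude, frequency, and function name are illustrative assumptions, not the paper's parameters.

```python
import math


def sinusoidal_uav_state(t, r0, v0, amplitude, omega):
    """Closed-form UAV position and velocity for a hypothetical z-axis sinusoid.

    X/Y velocities stay constant; the z velocity is
    vz(t) = vz0 + amplitude * sin(omega * t), whose integral gives
    z(t) = z0 + vz0 * t + (amplitude / omega) * (1 - cos(omega * t)).
    """
    x0, y0, z0 = r0
    vx0, vy0, vz0 = v0
    vz = vz0 + amplitude * math.sin(omega * t)
    z = z0 + vz0 * t + amplitude / omega * (1.0 - math.cos(omega * t))
    return (x0 + vx0 * t, y0 + vy0 * t, z), (vx0, vy0, vz)
```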

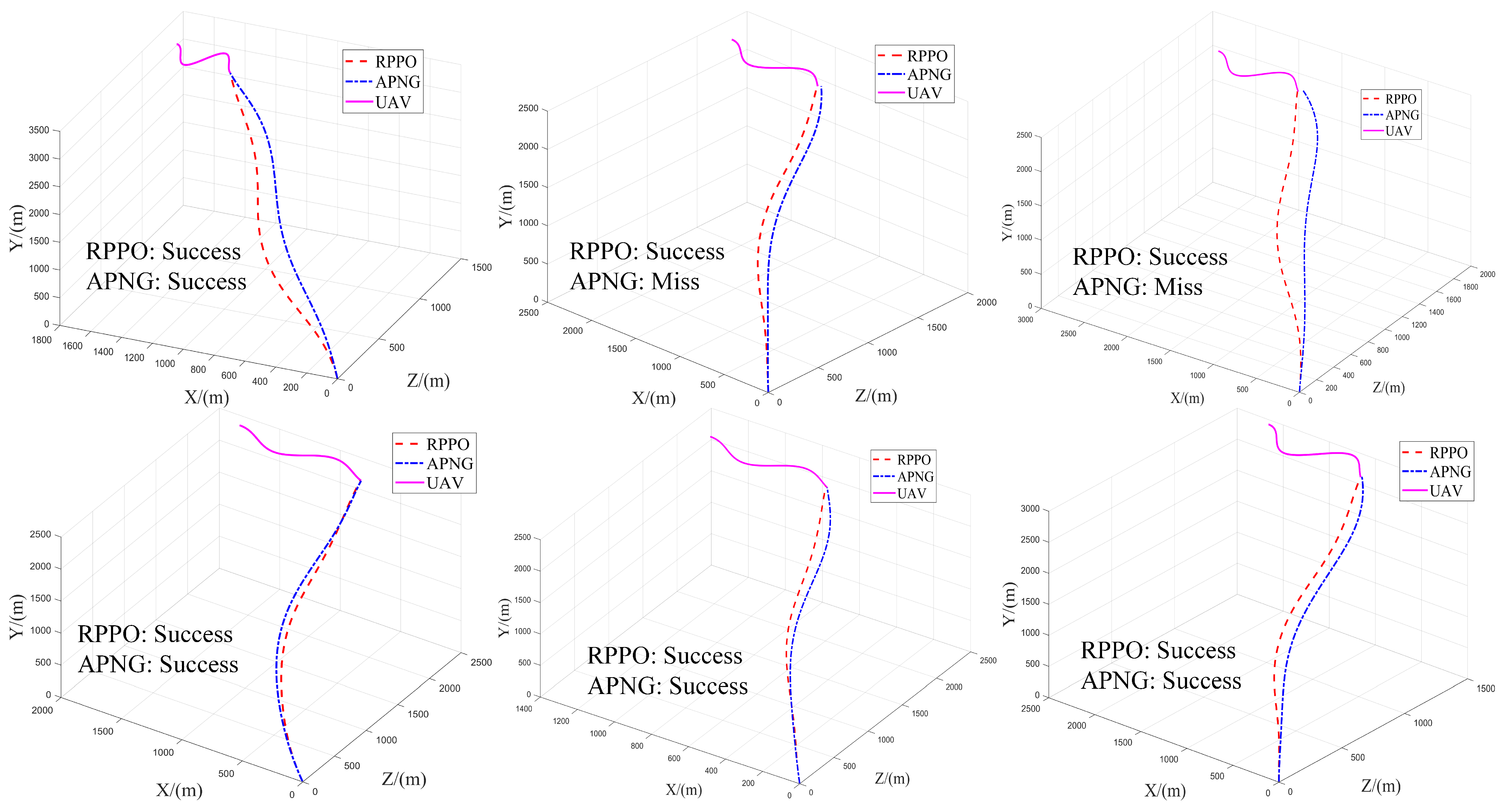

We also visualized some trajectories, as shown in Figure 12. Facing the UAV's complex maneuvers, the RPPO guidance law still intercepts the UAV effectively, whereas the APNG method does not handle this situation well.

We attribute the strong generalization ability of the RPPO guidance law framework to its focus during training on the relative relationship between the defender and the UAV rather than on their individual motions. Additionally, the RPPO guidance law concentrates the defender's maneuvering in the early stage of the trajectory, preserving maneuvering margin for the later stage. This allows the low-overload defender to better handle UAV maneuvers and improves its hit accuracy.

In actual engagements, UAVs typically adjust their maneuvering strategies according to the perceived threat level. To simulate such sudden maneuvering behavior, the UAV switches its maneuver mode when its relative distance to the defender falls below a threshold. Specifically, when the relative distance R exceeds 1000 m, the UAV moves at constant velocity, as in Section 4.2, with an initial speed between 50 m/s and 80 m/s. When R drops below 1000 m, the UAV switches to a new maneuver mode, performing sinusoidal maneuvers along the Z-axis while its velocity components along the X- and Y-axes remain unchanged.
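The distance-triggered mode switch can be sketched as a small Python class. The 1000 m threshold comes from the text; the sinusoid amplitude and frequency, the class name, and the choice to latch the sinusoidal mode once triggered (so the UAV does not flip back if R briefly exceeds 1000 m) are assumptions.

```python
import math


class SwitchingUAV:
    """Constant velocity while R > switch_range; afterward a hypothetical
    sinusoidal term is added to the Z velocity, with X/Y velocity unchanged."""

    def __init__(self, velocity, amplitude, omega, switch_range=1000.0):
        self.v0 = tuple(velocity)
        self.amplitude = amplitude
        self.omega = omega
        self.switch_range = switch_range
        self.t_switch = None  # time at which the maneuver mode switched

    def velocity(self, t, rng):
        if self.t_switch is None and rng < self.switch_range:
            self.t_switch = t  # latch: once switched, stay in sinusoidal mode
        if self.t_switch is None:
            return self.v0
        vx, vy, vz = self.v0
        phase = self.omega * (t - self.t_switch)
        return (vx, vy, vz + self.amplitude * math.sin(phase))
```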

We also conducted Monte Carlo simulations for this scenario; the results are presented in Table 6. They show that the RPPO guidance law still performs excellently even when the UAV maneuvers suddenly.

4.4. Noise Robustness Test

Because noise introduces uncertainty in real-world scenarios, the proposed RPPO guidance law must also be robust. To test this, Gaussian white noise is added to the observation data, as described in Equation (21).
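The observation corruption can be sketched in Python as follows, assuming zero-mean noise applied independently to each observation component; the zero mean, the per-component independence, and the function name are assumptions, and Equation (21) defines the exact form. Because Python's `random.gauss` takes a standard deviation, the variance values used in the tests must be square-rooted first.

```python
import math
import random


def add_observation_noise(obs, variance, rng):
    """Add zero-mean Gaussian white noise with the given variance to each component.

    random.gauss expects a standard deviation, hence the square root.
    """
    sigma = math.sqrt(variance)
    return [o + rng.gauss(0.0, sigma) for o in obs]
```

Passing a seeded `random.Random` instance makes each Monte Carlo run reproducible.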

The tests are conducted in the scenario presented in Section 4.3, with different noise variances used to evaluate the interception rate and the miss distance. The variance of the normally distributed observation noise is set to 0, 0.1, 0.2, 0.3, 0.4, and 0.5.

Figure 13 presents the probability density curves under different variance conditions.

For each noise setting, we performed three independent Monte Carlo runs of 1000 episodes each and report the results averaged across these runs. The final results are shown in Table 7.

The results show that the proposed RPPO guidance law outperforms the APNG guidance law at every noise variance level. In all cases, RPPO achieves a higher interception rate and a smaller miss distance, indicating stronger robustness to unknown noise.

4.5. Significance Testing Experiment

We conducted a significance analysis of the above results; the findings are presented in Table 8. The analysis indicates that the RPPO guidance law outperforms the APNG guidance law, as supported by the statistical significance tests, effect sizes, and Bayes factors.

Case 1 corresponds to the test results in Section 4.2, Case 2 to those in Section 4.3, and Cases 3 to 7 to those in Section 4.4 with noise variances of 0.1, 0.2, 0.3, 0.4, and 0.5, respectively.

The p-values for all seven cases are much smaller than 0.05, indicating that the difference in each case is statistically significant. For instance, Case 2 yields a t-value of 9.003, an effect size of 0.226, and a Bayes factor of 4.624, indicating a clear difference between the two algorithms. In Cases 3 to 7, the t-value, effect size, and Bayes factor all gradually increase with the noise variance, and the differences are especially strong in Cases 6 and 7. These results consistently support the superior performance of the RPPO guidance law.
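The text does not state which t-test or effect-size definition underlies Table 8. As a hedged illustration, the Python sketch below computes two common choices from per-episode samples of each method: Welch's t statistic and Cohen's d with a pooled standard deviation. Both choices and the function names are assumptions; p-values and Bayes factors require additional distributional machinery and are omitted.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic for two independent samples with unequal variances."""
    return (mean(a) - mean(b)) / math.sqrt(variance(a) / len(a) + variance(b) / len(b))


def cohens_d(a, b):
    """Cohen's d using the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled)
```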