1. Introduction

Amid rapid iteration in artificial intelligence (AI) technology, AI has transcended the boundaries of computer science and now permeates multiple disciplines, including mathematics, physics, and information theory [1,2,3]. The maturation of technologies such as big data and cloud computing has further established a broad platform for integrating AI across industries [4,5,6,7,8,9]. Within the aviation sector, this convergence is reshaping the industry’s development landscape [10,11] and accelerating the evolution of traditional air confrontation paradigms towards intelligent and autonomous systems. Experimental aircraft platforms serve as the pivotal link between the theory of intelligent air confrontation technology and engineering practice [12,13,14]. Their technological advancement and operational deployment directly determine the pace of aviation’s intelligent transformation, making them a critical research focus in contemporary aeronautics [15].

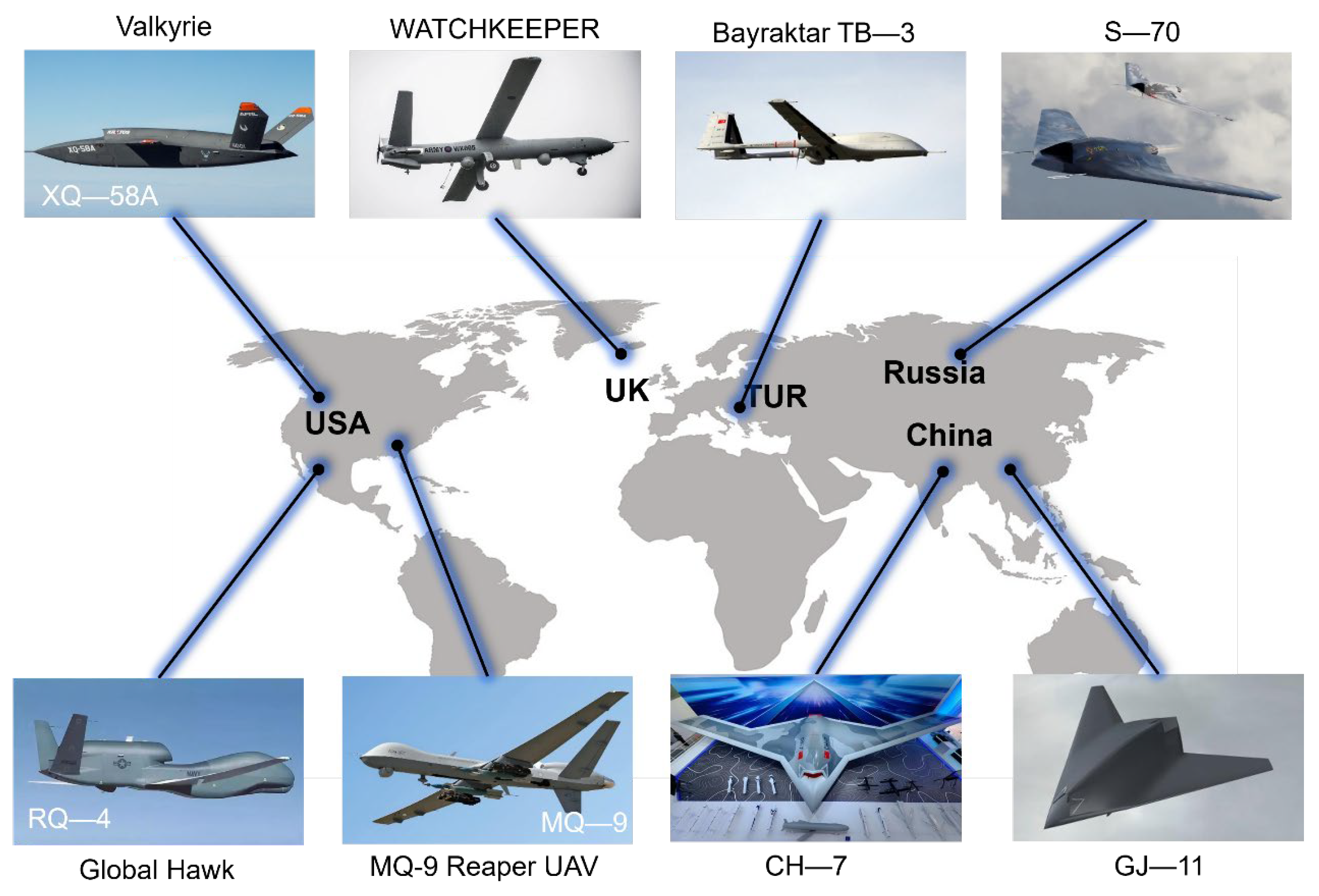

At present, the application of AI in advanced unmanned aerial vehicles (UAVs) worldwide is diverse and high-end, with particularly significant achievements in collaborative operations between UAVs and manned aircraft [16,17,18,19]. For instance, the American XQ-58A Valkyrie employs AI systems to achieve coordinated reconnaissance and strike operations with manned aircraft, while China’s CH-7 has demonstrated the confrontation effectiveness of intelligent payloads in multi-mission scenarios (as illustrated in Figure 1). From a technological application perspective, AI continues to permeate aircraft operation, design, and integration.

At the operational level, AI can assist in developing Tactical Decision Generators (TDGs) for close-range aerial confrontation [20,21]. It can also construct adaptive air confrontation simulation programs that provide pilots with dynamic adversarial training and enable offline performance analysis of fighter aircraft and weapon systems [3,22,23,24]. The simulation program developed by George H. Burgin’s team achieves real-time simulation of one-on-one air-to-air confrontation scenarios. At the design level, a genetic algorithm-based tactical generation system can automatically devise new maneuvering strategies for experimental fighters such as the X-31 through digital air confrontation simulation [25,26,27,28,29,30,31]. Approximate dynamic programming (ADP) enables drones to make real-time decisions autonomously in complex aerial confrontation environments without predefined tactical rules; research by McGrew et al. has demonstrated its value in balancing tactical responses with strategic planning [32,33,34,35,36,37,38,39]. At the integration level, automated testing frameworks leverage Operational Design Domain (ODD) models to generate synthetic data, enabling the safety and performance of AI in novel airborne collision avoidance systems (ACAS X) to be verified in simulation environments [40,41,42]. Meanwhile, the test aircraft platform flexibly integrates multi-source AI algorithms and sensor systems through a Modular Open System Architecture (MOSA). For instance, the X-62A can rapidly incorporate the Model Following Algorithm (MFA) and the System for Autonomous Control of the Simulation (SACS), achieving hardware–software decoupling and rapid technological adaptation [21,43,44,45].

This research falls under “test verification adaptation” within the integration direction. Its core lies in establishing a technical implementation framework that deeply integrates intelligent technologies with flight testing, covering requirement analysis, construction of the intelligent test aircraft platform, and ground verification. Although it does not directly involve design optimization or geometric optimization, it provides high-fidelity verification support for design optimization results (such as new aerodynamic layouts) and offers a flight test verification carrier for AI applications in the operation direction (such as the autonomous control algorithm of the X-62A). It thus forms a collaborative “design–verification–application” closed loop with the design and operation directions, supporting the transformation of air confrontation modes from “human-in-the-loop” to “Autonomous Gaming”.

However, the application of artificial intelligence in the aviation sector continues to face significant bottlenecks. Most achievements remain confined to laboratory proof-of-concept stages and lack comprehensive validation in real-flight scenarios, which limits their practical utility [50,51,52,53,54,55]. Certain intelligent algorithms exhibit insufficient adaptability and robustness in the complex electromagnetic environments and extreme meteorological conditions typical of real battlefield situations. Moreover, model generalizability across diverse aircraft types and operational contexts remains poor, hindering large-scale deployment [56,57,58,59]. To overcome these challenges, leading aviation powers worldwide are intensifying efforts in experimental aircraft platform development. The X-62A test aircraft selected by the U.S. military, modified from the F-16D, features a highly flexible open-system architecture capable of rapidly integrating various AI algorithms while simulating the flight control systems and autonomous characteristics of different aircraft. It has successfully implemented deep reinforcement learning-driven tactical decision-making in the Autonomous Air Confrontation Operations (AACO) program [60,61,62] and, notably, under the Air Confrontation Evolution (ACE) program [63,64,65,66], achieved the first-ever AI-controlled complex air confrontation maneuvers in a full-scale fighter jet, outperforming human pilots. This milestone marks the advent of a new era in autonomous air confrontation capabilities [67].

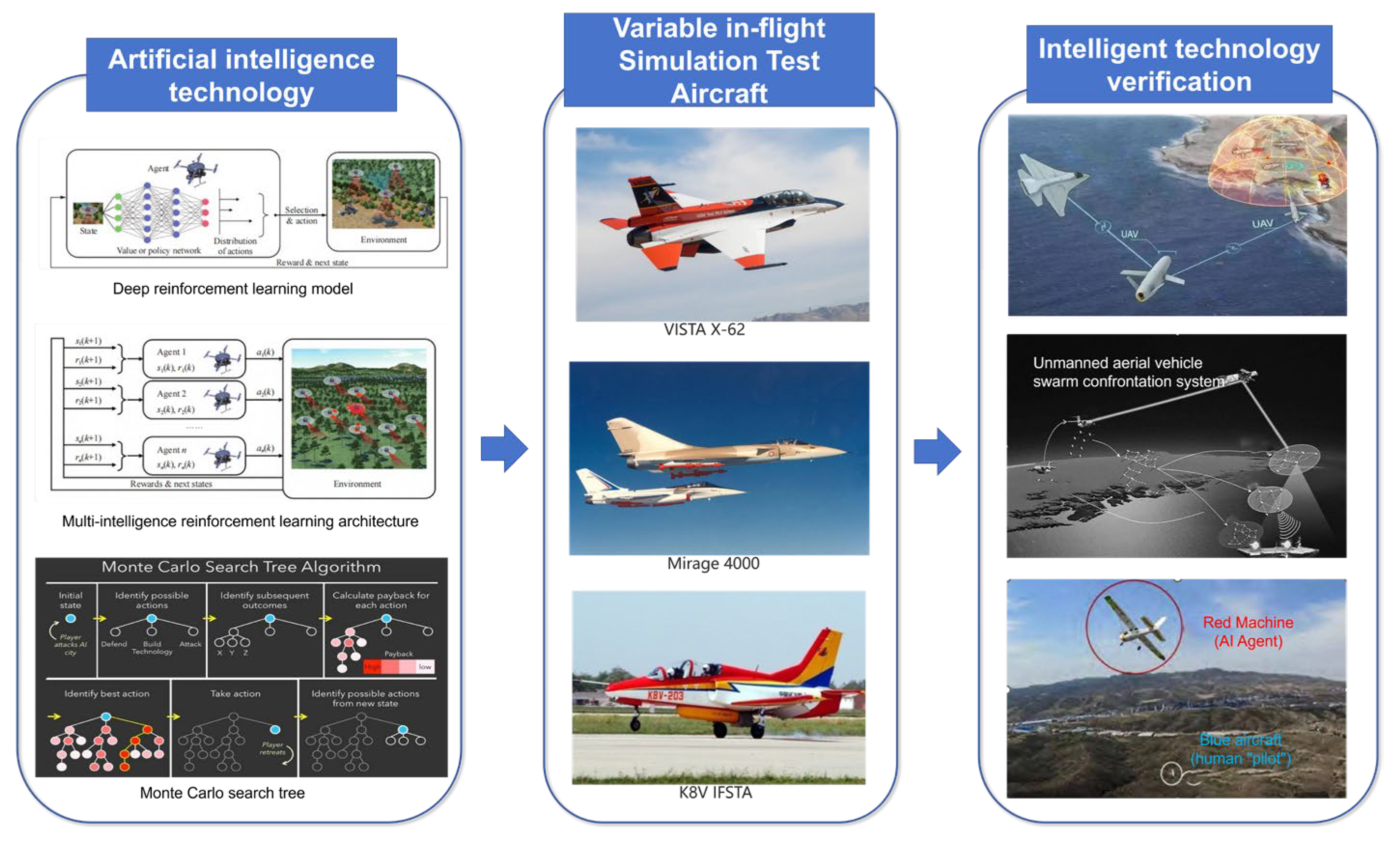

Although flight simulation and confrontation simulation systems, supported by AR/VR and large-scale model technologies, have already demonstrated the ability to replace some actual test flights, the practical application of any intelligent technology must undergo rigorous verification in a real flight environment. Only by replicating extreme conditions on the test aircraft platform, testing system redundancy, and verifying human–machine collaboration can the reliability of the technology in complex battlefield environments be ensured, as shown in Figure 2. On this basis, this paper addresses four dimensions. First, it systematically reviews the technological evolution of the test aircraft platform and clarifies its position in aviation intelligence. Second, drawing on typical cases such as the X-62A and X-37B, it analyzes how intelligent technology enhances the efficiency of the test aircraft platform and reveals the technical value of their deep integration. Third, it constructs a technical implementation framework for the deep integration of intelligent technology and flight tests, covering key links such as requirement analysis, platform construction, ground verification, and technical assessment, and integrating core technologies such as network security, data management, and human–machine collaboration. Fourth, it summarizes the theoretical support this framework offers for the deep integration of AI and aviation, providing a clear path for the engineering application of intelligent air confrontation technology. This research helps transform the air confrontation mode from “human in the loop” to “autonomous competition”, enhances the intelligence level and confrontation effectiveness of the aviation field, and provides a reference for the subsequent research and verification of intelligent test aircraft platforms.

The core contributions of this study are as follows. Firstly, it systematically integrates, for the first time, the interrelated logic of “AI technology–test aircraft platform–intelligent air confrontation”, filling a gap in the current literature, which lacks a systematic review of their collaborative integration. Secondly, the identified advantages of the test aircraft platform provide practical guidance for R&D in intelligent air confrontation technology. For instance, the “high-efficiency iterative verification” feature of the X-62A can guide the shortening of AI algorithm flight test cycles. Thirdly, the established technical implementation framework covers the full lifecycle of AI algorithms, from design and ground-based virtual validation to flight testing, providing a standardized engineering pathway for transforming intelligent air confrontation technology from “theory” to “operational deployment”. This directly supports the evolution of air confrontation modes from “human-in-the-loop” to “autonomous competition”, thereby enhancing the intelligence level and confrontation effectiveness within the aviation domain.

The structure of this paper is as follows. The first part is the introduction, which describes the development background, application status, and research significance of artificial intelligence in the aviation field and clarifies the core value of the test aircraft platform. The second part analyzes the application status and development prospects of the test aircraft platform, including its definition, its domestic and international development history, and the details of integrating artificial intelligence with typical test aircraft such as the X-62A and X-37B. The third part analyzes three typical intelligent air confrontation projects and summarizes the four core advantages of the test aircraft platform in intelligent technology research. The fourth part constructs a technical implementation framework for the deep integration of intelligent technology and flight testing and elaborates on the core contents and key technologies at each level. The fifth part is the summary and outlook, which summarizes the research results of the paper and points out future development directions for artificial intelligence technology and the test aircraft platform in the aviation field.

2. Application Status and Development Prospects of Experimental Aircraft Platforms

To clearly demonstrate the core value of the test aircraft platform in verifying intelligent air confrontation technology, this section addresses three dimensions: “definition of essence, development context, and AI integration practice”. First, it clarifies the essential attributes and classification of test aircraft platforms, analyzing the broad scope of the “airborne laboratory” and the technical definition of the variable stability aircraft as a key subcategory. By comparing variable stability aircraft with general test aircraft, it delineates their functional positioning, technical characteristics, and application scenarios. Second, it reviews the development history of test aircraft platforms at home and abroad, from the US X series and the Soviet/Russian Tu-154LL to the Chinese BW-1 and other typical cases, and traces the technological evolution from mechanical performance verification to intelligent integration. Finally, it focuses on the deep integration of AI with test aircraft platforms. Taking the X-62A and X-37B as core cases, it analyzes the breakthroughs of AI in autonomous decision-making, collaborative confrontation, and open architecture, and supplements these with the integration practices of the X-47B, the J-10B/C thrust-vectoring demonstrator, and other platforms. Together, these reveal the key role of the test aircraft platform as a carrier for AI technology verification and lay the foundation for the subsequent analysis of its core advantages and the construction of a technical framework.

2.1. The Connotation of Experimental Aircraft Platform

With the rapid development of technology, new systems and technologies are continually emerging from industries such as aviation, aerospace, engines, and radar electronics. To verify the functions and performance of these new systems in the air, an aircraft must be modified into a test aircraft [68,69] and fitted with the new systems. Such a modification is called the “airborne laboratory” modification for the new system.

The “airborne laboratory” is a broad concept that encompasses various types of modified test aircraft, such as airborne flight simulators, thrust-vectoring demonstrators, radar and electronic test aircraft, integrated avionics test aircraft, and icing spray test aircraft. Among them, the “airborne laboratory” specifically designed to simulate the flight performance of different aircraft is called an “airborne flight simulator” or variable stability aircraft. It is an airborne flight test platform that uses a variable stability fly-by-wire control system and a variable feel system to change the basic flight dynamics, stability, and controllability of the aircraft, enabling high-fidelity in-flight simulation of the flight characteristics and human–machine interface of new aircraft and advanced weapon systems [70,71]. In addition, there is another type of test aircraft: a new aircraft design fitted with a tailored “evaluation” system. Only when this testing system is installed can a new aircraft be called a true test aircraft. During flight testing, the system acquires real-time data on the aircraft’s functions and performance indicators, thereby determining whether the aircraft meets design expectations for safety, stability, flight speed, altitude, range, and so on.

In a broad sense, the variable stability aircraft can be regarded as a type of test aircraft. It is an airborne test platform that uses a variable stability fly-by-wire control system and a variable feel system to actively adjust its basic flight dynamics, stability, and maneuverability parameters. It does so without changing physical hardware attributes such as geometry, control surface area, or actuator response time, relying instead on software logic and parameter configuration to replicate the flight qualities of other target aircraft with high fidelity. The differences between the two are shown in Table 1.
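The idea of replicating a target aircraft’s response through software alone can be illustrated with a toy model-following law. The sketch below is a minimal illustration under stated assumptions, not the control law of any real variable stability aircraft: it assumes hypothetical first-order pitch-rate dynamics for both the host and the target aircraft, and the function name and all parameter values are invented for the example.

```python
def simulate_model_following(a_host, b_host, a_model, b_model,
                             pilot_input, dt=0.01, steps=500):
    """Toy model-following loop (hypothetical first-order dynamics).

    Host aircraft:  dq/dt   = -a_host  * q   + b_host  * u
    Target model:   dq_m/dt = -a_model * q_m + b_model * r
    where q is pitch rate, u is the surface command and r is the pilot input.
    """
    q_host = 0.0
    q_model = 0.0
    max_error = 0.0
    for _ in range(steps):
        # Control law: cancel the host dynamics and impose the target dynamics.
        # Only software parameters change; the "hardware" (a_host, b_host) does not.
        u = ((a_host - a_model) * q_host + b_model * pilot_input) / b_host
        # Forward-Euler integration of both systems over one time step.
        q_host += dt * (-a_host * q_host + b_host * u)
        q_model += dt * (-a_model * q_model + b_model * pilot_input)
        max_error = max(max_error, abs(q_host - q_model))
    return q_host, q_model, max_error
```

Calling `simulate_model_following(1.0, 2.0, 2.0, 4.0, pilot_input=1.0)` makes a sluggish host track a faster target model; changing only `a_model` and `b_model` emulates a different target aircraft. A real system would also have to handle actuator limits, unmodeled dynamics, and higher-order modes, which this sketch ignores.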

2.2. The Development Status of Experimental Aircraft Platforms

This section analyzes the current application status and future development prospects of test aircraft, including variable stability aircraft.

The X series of test aircraft has pioneered American aerospace technology since the 1940s [72,73,74,75]. From the X-1 breaking the sound barrier, to the X-15 setting records for speed and altitude, to the X-20 proposing the spaceplane concept, the X series has continuously pushed the boundaries of aerospace technology. In the era of unmanned and intelligent systems, the X-45 and X-47 pioneered unmanned confrontation aircraft, and the X-56 explored flexible wing technology, providing technical reserves for future unmanned and manned aircraft. The X-62, a variable stability test aircraft modified from the F-16, has pushed flight control research in the transition to unmanned, intelligent systems to new heights. Additionally, the long-term in-orbit operation of the X-37B small spaceplane and the X-59 research on quiet supersonic technology both demonstrate the outstanding capabilities of the X series in aerospace integration and new concept verification. The X-60’s verification of hypersonic flight and propulsion technology laid a solid foundation for the verification of hypersonic weapon systems.

Apart from the X-series test aircraft of the United States, other countries have also achieved remarkable results in the field of test aircraft. For instance, the Tu-154LL test aircraft of the Soviet Union/Russia was used to test automatic landing systems and for a series of other tests, making significant contributions to the development of Soviet aviation technology [76]. Although the Tu-154LL platform did not deeply integrate AI technology, its practice in “extreme environment flight verification” provided an alternative approach for reducing technical framework risks: the platform was long used for testing automatic landing systems and flight control systems, was capable of replicating extremely cold conditions of −50 °C and strong sandstorms, and prioritized environmental adaptability verification over the integration of AI functions. This complements the path of the X-62A, which first achieved AI autonomous decision-making and then expanded environmental adaptability. Together they show that test platform technology can evolve in two alternative directions, prioritizing environmental adaptation or prioritizing intelligent functions, both of which can support the implementation of intelligent air confrontation technology. In addition, the Soviet/Russian Tu-154M-LL (FACT) CCV was modified into a full-freedom flight simulator to verify new technologies for the control and flight data display of future transport and passenger aircraft. In Europe, Dassault’s Mirage 2000, the first fly-by-wire aircraft in Europe, used the Mirage 4000 as a technology demonstrator for aerodynamic research and test flights of the close-coupled delta configuration, accumulating rich design experience [77]. Additionally, the RBE2 radar of Dassault’s Rafale, the first electronically scanned airborne phased-array radar developed in Europe, underwent a large number of test flights on aircraft such as the Mirage 2000, promoting the development of European avionics technology. As a verification carrier for fly-by-wire technology, the Mirage 2000 first underwent ground simulation verification of its flight control logic and then carried out test flights, following the logic of “ground verification–flight evaluation”. However, it focused on “flight control system and radar collaborative verification” rather than the AI autonomous decision-making of the X-62A. This difference reflects the differentiated development paths of test platforms under different technical requirements and provides practical support for the framework from a non-American perspective.

Figure 3 shows the development history of test aircraft worldwide.

The variable stability aircraft is also a type of test aircraft. Since the 1940s, many countries have made significant progress in this field, with the United States, Germany, and Russia particularly prominent in the research and application of variable stability technology.

The United States holds a leading position in the field of in-flight simulation. The NT-33A variable stability aircraft, developed in 1952, was widely used to verify flight control systems, human–machine interfaces, and flying qualities specifications, becoming the most widely used and longest-serving airborne flight simulator in the world [79]. In addition, the United States developed the Learjet-24, the F-104G CCV, and the NF-16D VISTA (now known as the X-62A) variable stability aircraft [80,81], all of which achieved notable results. Germany developed the ATTAS (Advanced Technology Testing Aircraft System) in the 1990s, mainly for research on flight control systems, active aerodynamic control, and aircraft stability [82]. Russia converted the Tu-154 passenger aircraft into the Tu-154LL variable stability aircraft to verify automatic landing systems and flight control systems. Moreover, after the mid-1990s, several flight control system test platforms emerged, including the X-45, X-47B, and T-50A [83]. Although not all of them are traditional variable stability aircraft, they all involve the research and testing of aircraft design, flight stability, and flight control systems.

These variable stability aircraft not only promoted the development of aviation technology in various countries but also provided valuable data for the design of future aircraft and the innovation of control systems.

2.3. Technical Analysis of the Integration of Artificial Intelligence and Test Aircraft

In terms of the integration of artificial intelligence technology and autonomous stabilization systems, certain achievements have been made. Among them, the X-62A autonomous stabilization aircraft serves as the core test platform, successfully supporting multiple cutting-edge projects such as the US military’s autonomous air confrontation operations (AACO), Skyborg Vanguard program, and Air Confrontation Evolution (ACE), achieving full-chain support from algorithm verification to practical testing [

84,

85,

86]. This section will focus on

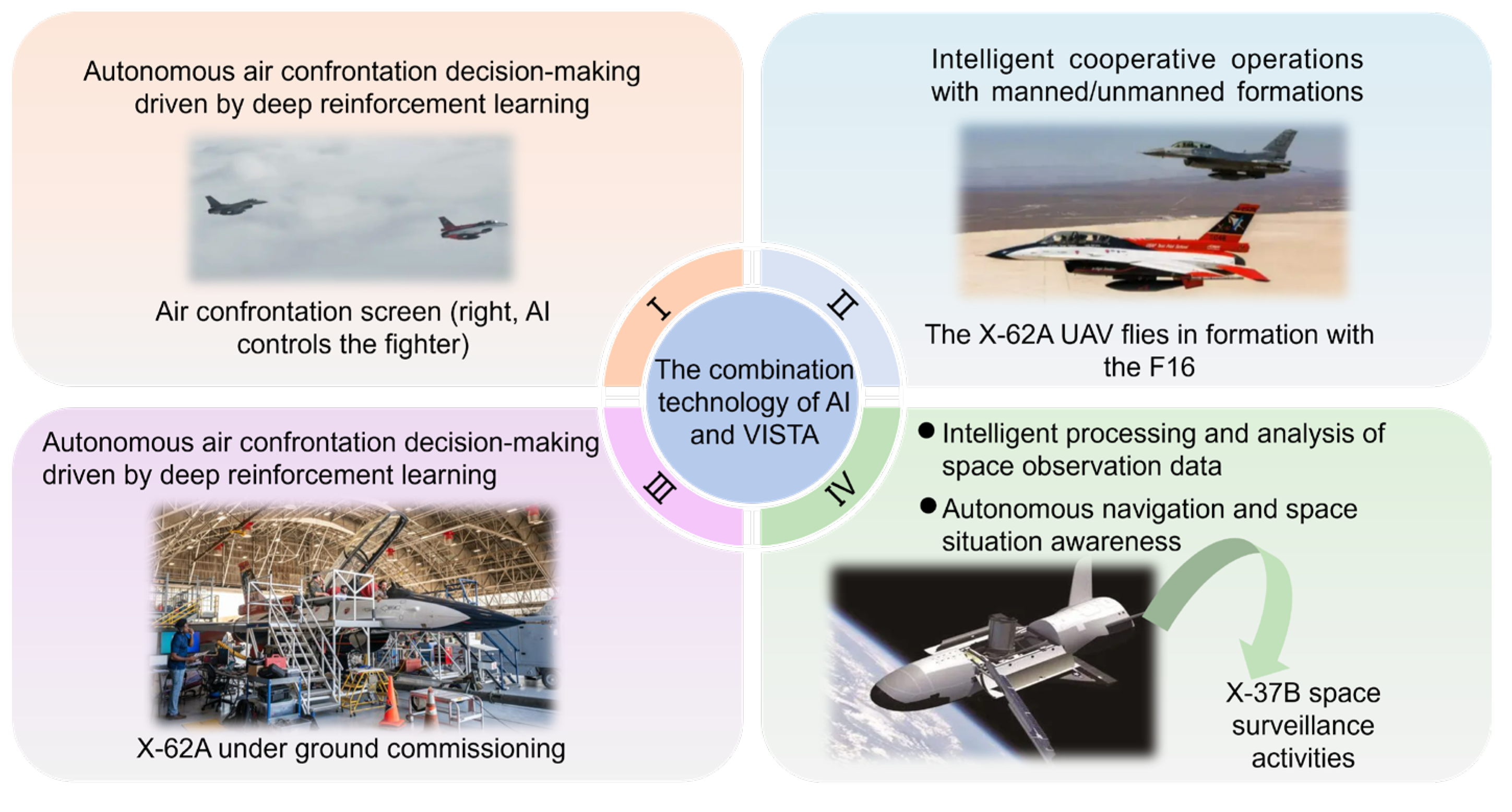

Figure 4, conducting in-depth analysis of the current application status and development prospects of X-62A and X-37B test aircrafts in the field of artificial intelligence, as well as analyzing the integration of X-47B, J-10B/C vector verification aircraft, and other related projects with artificial intelligence.

2.3.1. The Integration of X-62A and Artificial Intelligence Technology

In the DARPA “Air Confrontation Evolution” project, the AI system achieved its first close-range confrontation with human pilots [87]. Through self-play simulation algorithms, the AI demonstrated innovative tactics that surpassed traditional methods in simulated engagements. Its decision-making response time was at the microsecond level, and it could dynamically adjust strategies based on the tactical habits of human pilots. When humans adopted the traditional “scissors maneuver”, the AI could autonomously generate asymmetric tactics. When facing sensor data loss caused by electromagnetic interference, it could reconstruct the situation and optimize decisions through multimodal fusion, completing the confrontation loop without human intervention. This is precisely the core feature that distinguishes “Autonomous Gaming” from simple autonomous execution, and it also verifies the value of the variable stability platform in supporting high-level autonomous capabilities.

In the fields of intelligent collaborative operations and manned/unmanned formations, the X-62A embodies the core concept of the “Loyal Wingman” program: it builds a real-time collaborative network with manned aircraft (such as the F-35) through the Link 16 data link. The AI system can autonomously analyze the distribution of battlefield threats, provide the lead aircraft with attack position suggestions, electronic warfare support, and other decision-making assistance, and even actively undertake reconnaissance tasks. In the “Collaborative Confrontation Aircraft” project, the X-62A further assumes the role of “digital commander” for the unmanned wingmen, dynamically allocating strike targets and planning formation routes. This forms a hybrid “manned decision-making–unmanned execution” command mode that significantly enhances confrontation effectiveness and survivability in complex tasks [88]. For example, as a technical extension of the X-62A, the XQ-58A has been equipped with an AI autonomous formation system that can execute reconnaissance-attack tasks in a mixed manned–unmanned formation, and it completed its first practical deployment in 2023 [89].

In terms of open system architecture and rapid technological iteration, the X-62A is designed around a modular open architecture that supports plug-and-play third-party artificial intelligence applications and decouples hardware from software. For example, it can flexibly integrate Lockheed Martin’s Model Following Algorithm (MFA) and System for Autonomous Control of the Simulation (SACS), together with the VISTA Simulation System (VSS) provided by Calspan, thereby supporting the rapid adaptation of multi-source technologies. The core of its architecture is the Skunk Works Enterprise-wide Open Systems Architecture (E-OSA), which powers the Enterprise Mission Computer version 2 (EMC2) and integrates advanced sensors, multi-level security solutions, and Getac tablet displays in the cockpit. This ensures safety while enhancing system scalability. Through this architecture, the X-62A can quickly update software to adjust flight control logic or AI models, significantly shortening the technical iteration cycle, and can use high-frequency test flights to verify algorithm improvements, thereby accelerating the development of AI and autonomous capabilities.
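The plug-and-play idea described above can be sketched in a few lines. The example below is an illustrative toy, not the E-OSA or EMC2 interface: the `MissionComputer` class, the `AircraftState` fields, and the pitch-rate limit are all hypothetical names and values chosen for the sketch. It shows third-party autonomy “apps” being registered and swapped at run time behind one stable interface, with a host-side safety limit applied regardless of which app is active.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class AircraftState:
    """Hypothetical, heavily simplified state passed to every app."""
    altitude_m: float
    pitch_rate_dps: float


# An "app" is any callable mapping state to a commanded pitch rate (deg/s).
AutonomyApp = Callable[[AircraftState], float]


class MissionComputer:
    """Hosts interchangeable autonomy apps behind a fixed safety envelope."""

    def __init__(self, max_pitch_rate_dps: float) -> None:
        self._apps: Dict[str, AutonomyApp] = {}
        self._active: Optional[str] = None
        self._limit = max_pitch_rate_dps

    def register(self, name: str, app: AutonomyApp) -> None:
        # New algorithms are added without touching the host code.
        self._apps[name] = app

    def activate(self, name: str) -> None:
        if name not in self._apps:
            raise KeyError(f"unknown app: {name}")
        self._active = name

    def command(self, state: AircraftState) -> float:
        # With no app active, fall back to a neutral command.
        if self._active is None:
            return 0.0
        raw = self._apps[self._active](state)
        # The host, not the app, enforces the safety limit.
        return max(-self._limit, min(self._limit, raw))
```

In use, one would `register` several candidate algorithms, `activate` one by name, and call `command` each control cycle; switching algorithms is a single `activate` call. Keeping the limit check in the host is one possible way to let untrusted or experimental apps run without being able to exceed the envelope.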

The X-62A has achieved a deep integration of autonomy, collaboration, and openness through AI technology. Its technological achievements have been directly applied to the “Loyal Wingman” program, electronic warfare system upgrades, and other fields, becoming a benchmark for future intelligent air confrontation systems.

2.3.2. The Integration of X-37B and Artificial Intelligence Technology

The X-37B spaceplane test vehicle of the United States is the core technology verification platform of the Space Maneuver Vehicle (SMV) program. Its research and testing reflect the United States’ exploration strategy in the aerospace field [90]. By integrating advanced AI systems, the X-37B achieved an intelligent upgrade in space technology during the OTV-7 mission, particularly in intelligence processing and mission reconnaissance [91].

In the intelligent processing and analysis of space observation data, the OTV-7 mission demonstrated the core advantages of artificial intelligence. Using a Generative Adversarial Network (GAN) algorithm [92], the AI can restore details from low-quality images, significantly improving the clarity and color fidelity of the photos. This technology improves both the efficiency of data processing and the quality of scientific data and environmental perception, providing more efficient intelligence processing capabilities for future space missions.

In terms of autonomous navigation and space situational awareness, the X-37B’s autonomous navigation system can analyze data from multiple sensors in real time, dynamically adjust the flight trajectory through machine learning models, and maintain high-precision maneuvering in complex space environments. According to the latest mission data, the X-37B achieved its first autonomous orbit change in a highly elliptical orbit during the OTV-7 mission, demonstrating strong space situational awareness.

2.3.3. The Integration of Artificial Intelligence Technology with Other Test Aircraft Platforms

The X-47B focuses on autonomous takeoff and landing capabilities for unmanned carrier-based aircraft and carrier integration technology. It is the world’s first “tail-less, jet-powered unmanned aircraft” requiring no human intervention and operated entirely by computer. Its onboard adaptive mission planning system can dynamically adjust flight paths in real time to respond to sudden sea conditions, with its decision-making algorithms laying the foundation for subsequent Loyal Wingman projects.

Fitted with a domestically produced thrust-vectoring engine, the J-10B/C thrust-vectoring demonstrator was able to perform extraordinary maneuvers such as the “Serpent Maneuver” [93]. Its flight control system has an adaptive adjustment function that can simulate the control characteristics of different generations of fighter aircraft. This provides data support for optimizing the flight control algorithms of domestic stealth fighter jets (such as the J-20) and is similar in purpose to the variable stability testing of the X-62A.

The deep integration of the Airbus A310 MRTT tanker aircraft with artificial intelligence is demonstrated by its “Auto’Mate Demonstrator” system [94]. Through multi-source sensor fusion and collaborative control algorithms, this system has for the first time achieved autonomous formation flight and in-flight refueling operations for unmanned aircraft without human interaction, verifying the value of artificial intelligence in formation flight and precise control. Its algorithm framework can be transferred to autonomous escort missions for fighter jets, complementing the collaborative confrontation vision of the X-62A.

Figure 4. The integration technology of artificial intelligence and test aircraft.


In conclusion, the integration of artificial intelligence and test aircraft represents a significant leap in aviation technology. The test aircraft provides a crucial validation platform for artificial intelligence, allowing algorithms to be tested and optimized in real flight environments. Conversely, artificial intelligence enhances the capabilities and autonomy of the test aircraft, including autonomous navigation, intelligent decision-making, and intelligent collaboration. This integration not only accelerates the technological iteration and validation of artificial intelligence in aviation but also drives the evolution of air confrontation models towards intelligence and autonomy, profoundly changing the research and development model of aviation technology and the future form of warfare.

Apart from military-led test platforms, technological innovation by defense industrial enterprises is becoming an important supplement to the verification of intelligent air confrontation, and it is highly consistent with the “platform flexibility” requirement of the technical framework in this article. The “Hivemind” autonomous collaborative system of Shield AI [95] has been adapted to platforms such as the US Air Force’s F-16V and the Navy’s MQ-9A [96,97]. Its core advantage lies in “distributed autonomous decision-making without the support of satellites/data links”: the system optimizes multi-aircraft collaborative path planning through reinforcement learning algorithms. In the 2023 US military “Red Flag” exercise, the system was rapidly loaded via the MOSA architecture of the X-62A platform and completed autonomous reconnaissance and strike tasks with a 12-vehicle drone cluster. This verified the compatibility between enterprise technology and military test platforms and provides industrial practice support for the “heterogeneous algorithm compatibility deployment technology” in this article. The “Ghost” unmanned aircraft system of Anduril focuses on “intelligent verification of low-cost expendable platforms”, and its “Lattice” AI operating system can simulate target recognition and evasion in complex electromagnetic environments [98,99]. It has been used in the US military’s “Agile Confrontation Deployment” (ACE) concept test, forming an “air–space collaborative” verification link with the X-37B’s space situational awareness technology. This compensates for the shortcomings of traditional test platforms in “low-cost cluster verification” and echoes the design logic in this article that “platforms need to cover multiple scenarios of verification requirements”.

The technical integration practices of the aforementioned domestic and foreign experimental aircraft platforms (such as the US X-62A and X-37B, the Russian Tu-154LL, and the Chinese J-10B/C) demonstrate that these platforms play an irreplaceable core role in the research of intelligent air confrontation technology. To further quantify this role, this chapter analyzes three typical intelligent air confrontation projects, systematically extracts the four advantages of the experimental platforms, and provides a practical basis for the subsequent construction of the technical framework.

3. Analysis of Advantages of Intelligent Technology Research on Experimental Aircraft Platforms

3.1. Analysis of Three Typical Intelligent Air Confrontation Projects

Currently, intelligent air confrontation has become a strategic direction where artificial intelligence technology and the aviation field are deeply integrated, aiming to reshape the military landscape. The development level of this field directly reflects a country’s technological and military capabilities [

100,

101,

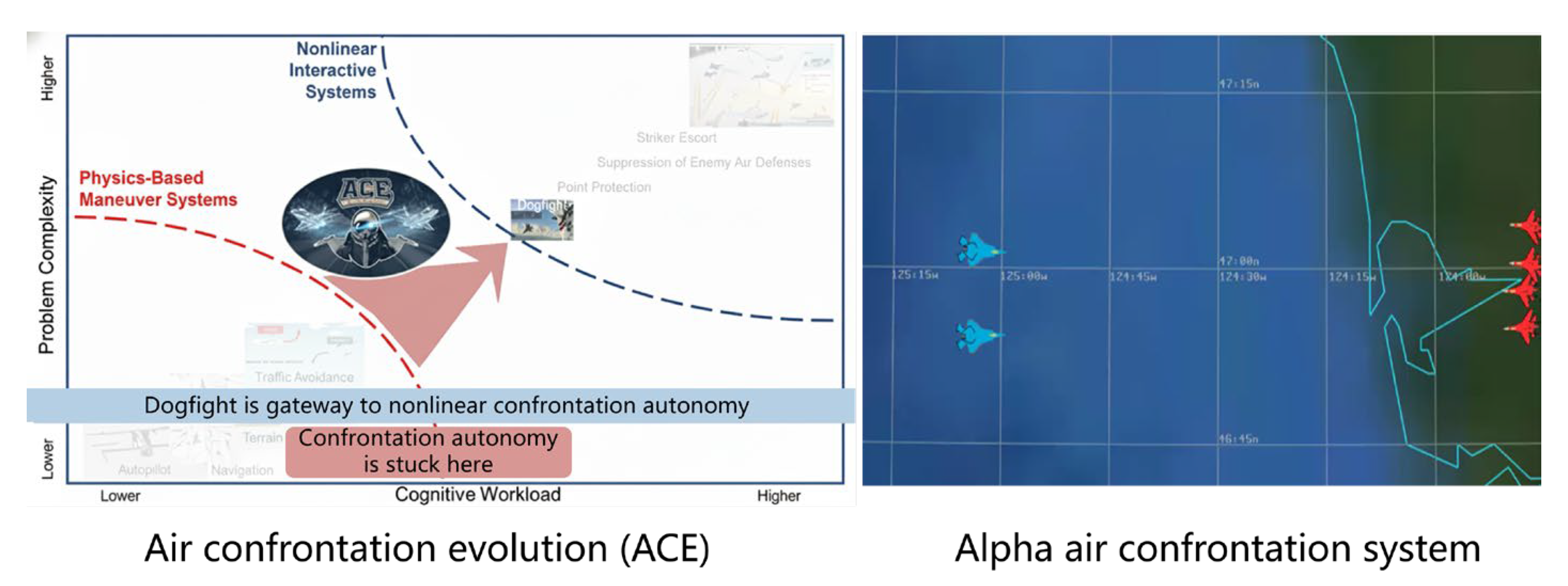

102]. Intelligent air confrontation is centered on artificial intelligence. Its complexity is manifested in three major characteristics: emergence (the overall performance of the system exceeds the sum of individual components), adaptability (autonomous adjustment to cope with battlefield changes), and uncertainty (especially the uncertainty of the game when both sides have comparable strength). This complexity has overturned the traditional understanding of air confrontation and has given rise to new trends such as autonomous evolution, decentralization, unmanned operation, cross-domain integration, and the combination of virtual and real worlds, as shown in

Figure 5.

The complexity of intelligent air confrontation cannot be eliminated. Only a deeper understanding of its essence makes it possible to seize the initiative in future air battles. The test aircraft is the key platform for verifying the theory and technology of intelligent air confrontation. On the one hand, characteristics of intelligent air confrontation such as autonomous evolution and cross-domain integration must be validated through actual flight tests in realistic confrontation environments, for example the collaborative decision-making ability of unmanned clusters. On the other hand, the test aircraft can collect real confrontation data, evaluate the performance of AI algorithms under uncertain conditions, identify potential flaws, and optimize the system. Moreover, the test aircraft can simulate combined virtual and real confrontation scenarios, test virtual deduction and physical attack situations, and provide support for theoretical development. Without verification on the test aircraft, the complexity of intelligent air confrontation will be difficult to understand fully, and technological iteration will lack a practical basis.

Therefore, as shown in Figure 6, the “Air Confrontation Evolution (ACE)” program and Alpha air confrontation system of the US DARPA, among other specialized research projects, all use the test aircraft platform as the core verification vehicle to promote the breakthrough and development of air confrontation intelligent technologies. This section analyzes three typical intelligent air confrontation projects to summarize the advantages of the test aircraft platform in conducting intelligent technology research.

The “Air Confrontation Evolution (ACE)” project of the US DARPA aims to enhance autonomy through steps such as developing intelligent algorithms, designing human–machine interfaces, and establishing full-scale flight test infrastructure. The project intends to improve autonomous capabilities, covering autonomous behavior in close-range confrontation as well as global behavior. It starts with computer-based simulation modeling, proceeds to demonstrations on unmanned aircraft, and ultimately achieves capability verification on modified fighter jets, so as to address current trust issues and gradually build the trust of confrontation personnel in autonomous systems. In the 2023 stage verification, the ACE project successfully achieved autonomous decision-making by unmanned aircraft formations in complex electromagnetic environments. The algorithm’s response time was 1/300 of the decision-making cycle of human pilots, demonstrating a significant efficiency advantage.

The “Shenying-1” air confrontation intelligent simulation system developed by the Military Operations and Planning Analysis Institute of the Chinese Academy of Military Sciences contrasts sharply with the methodology of the US ACE project and provides an alternative approach for the verification of intelligent air confrontation. The ACE project adopts a progressive approach of “simulation–unmanned aircraft verification–manned aircraft verification”, focusing on the rapid iteration of AI algorithms on test platforms. “Shenying-1”, by contrast, takes the core approach of “large-scale simulation first, then linkage with test platforms”: it establishes a database and rule library through 32 × 32 air confrontation simulations, first solves basic algorithm problems such as multi-target decision-making and missile evasion, and then transitions to test flight verification. The differences between the two methodologies indicate that the verification of intelligent air confrontation technology can follow two paths: test flight-driven and simulation-driven. The former is suitable for rapid algorithm iteration, while the latter is suitable for breakthroughs in basic problems of complex systems. This alternative perspective also enriches the methodology of the technical framework, making it adaptable to the path of the ACE project and also capable of guiding the implementation of “Shenying-1” type projects.

The Alpha air confrontation system jointly developed by the University of Cincinnati and the US Air Force, which employs the Genetic Fuzzy Tree (GFT) algorithm, possesses the capabilities of self-learning and self-evolution. In simulated air confrontation scenarios, this system defeated retired US Air Force Colonel Gene Lee with a 100% success rate, demonstrating the significant potential of artificial intelligence in air confrontation decision-making. During its simulated confrontation with Colonel Gene Lee, the system was able to obtain and organize all air confrontation data within 1 millisecond, with a response speed 250 times faster than that of pilots. Subsequently, this system was integrated into the F-16 modified verification aircraft and completed 12 rounds of test flights at Edwards Air Force Base. In these tests, it successfully achieved autonomous decision-making for beyond-visual-range air confrontation, with an accuracy rate for target allocation significantly higher than that of traditional algorithms.

The technological projects led by defense industrial enterprises are becoming a crucial link in the “academic research–military verification–industrial implementation” process. Their outcomes are highly consistent with the four major advantages of the test platform summarized in this article. The “Intelligent Air Confrontation Decision Support System” project jointly conducted by Palantir and the US Air Force focuses on developing an interpretable AI decision module; by visualizing tactical logic (such as real-time simulation of target allocation for drone clusters), it addresses the military’s concern about black-box AI decision-making. This project has been tested in the AACO project of the X-62A, increasing pilots’ trust in AI decisions by 40% and supporting the viewpoint in this article that “the human–machine collaborative trust mechanism needs to rely on flight test verification”. Anduril’s “Project Anvil”, in collaboration with the US Special Operations Command, focuses on “rapid deployment and system integration”. Through modular hardware design (such as plug-and-play sensor components), it enables AI algorithms to be adapted to different test platforms (such as the C-130 transport aircraft modification test platform) within 24 h. Its deployment efficiency has increased threefold compared to traditional military projects. This practice also supports the technical conclusion in this article that “the MOSA architecture is the basis for flexible system expansion”, providing industrial support for the “flexible carrier” advantage of the test platform [103].

3.2. The Remarkable Advantages of Experimental Aircraft Platforms in Intelligent Technology Research

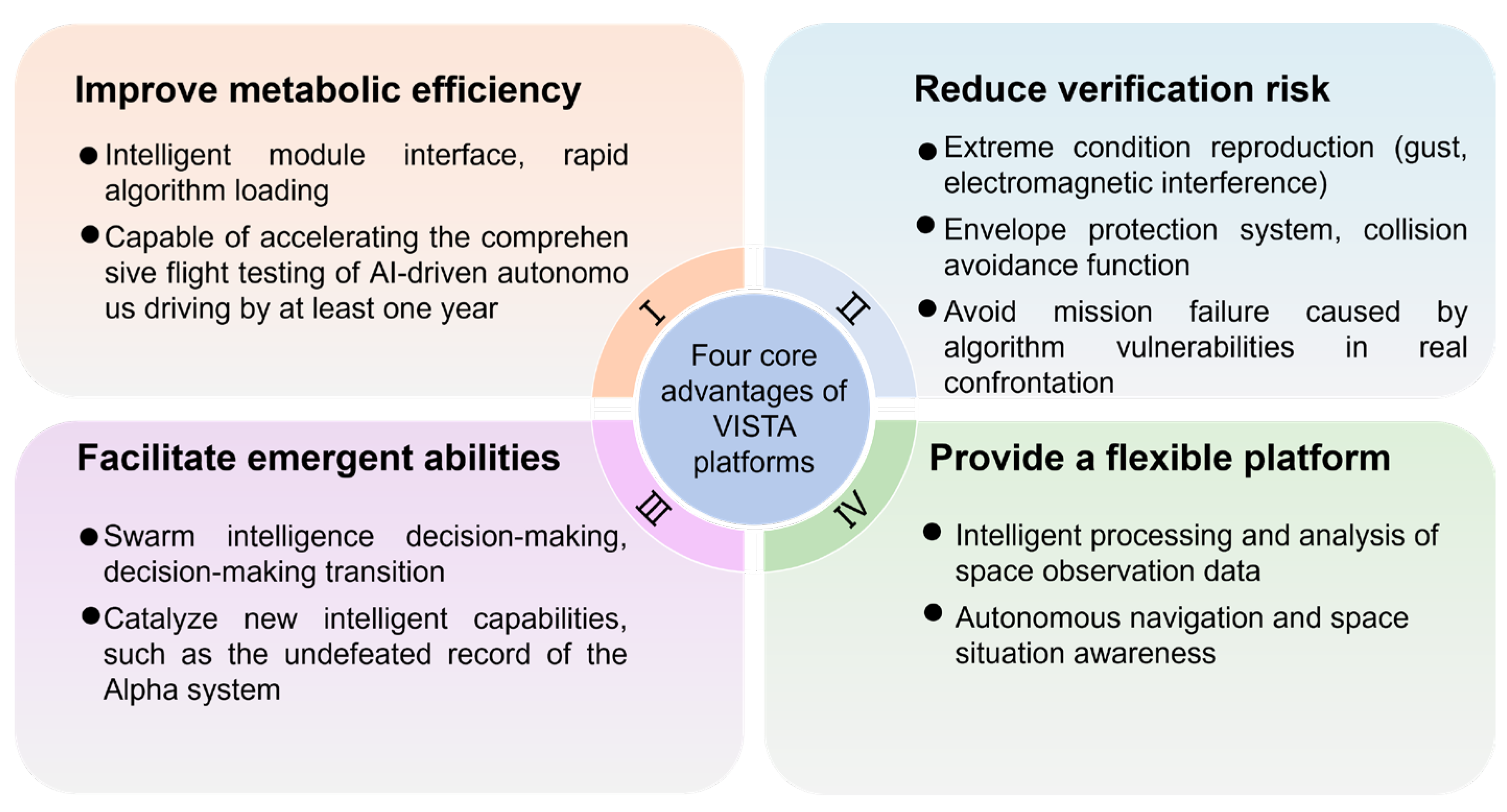

The three air confrontation projects above fully demonstrate the crucial role of the test aircraft platform in the verification of intelligent air confrontation algorithms. By closely approximating the real air confrontation environment, these platforms effectively facilitate the rapid transformation of theoretical algorithms into practical applications. The significant advantages of the test aircraft platform in intelligent technology research are summarized in Figure 7.

3.2.1. Improve the Efficiency of Intelligent Technology Iteration and Verification

The test platform is flexibly configured through intelligent module interfaces and supports rapid loading of algorithms, enabling the relative decoupling of intelligent algorithms from the aircraft platform. For example, in autonomous air confrontation decision-making driven by deep reinforcement learning (DRL), the X-62A integrates a DRL-based air confrontation AI system [69] which, after 4 billion simulation training sessions, has decision-making capabilities close to those of top human pilots. It can analyze multi-source information in real time, plan the optimal maneuver path, and maintain millimeter-level control accuracy in 5 g overload maneuvers. This system was the first to achieve close-range confrontation with human pilots in the ACE project, with a decision response speed reaching the microsecond level. At the same time, the MOSA architecture supports the decoupling of software and hardware. Over 21 flight tests, 100,000 changes to critical software were completed, forming a “data collection–training–deployment” loop. The algorithm iteration efficiency was ten times higher than that of traditional laboratory simulations, accelerating the AI autonomous flight test by at least one year (traditional laboratory simulations require monthly cycles, whereas the X-62A achieves cross-vehicle control logic adaptation within seconds) [104].
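The decoupling of algorithm and airframe described above can be pictured as a narrow, stable interface between the two. The following Python sketch is purely illustrative: the names `Observation`, `Command`, `DecisionAlgorithm`, `AlgorithmSlot`, and the two toy algorithms are hypothetical and are not taken from the X-62A software or from any MOSA standard.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Observation:
    """Hypothetical platform-independent state passed to any algorithm."""
    altitude_m: float
    speed_mps: float
    target_bearing_deg: float


@dataclass(frozen=True)
class Command:
    """Hypothetical platform-independent maneuver request."""
    roll_deg: float
    load_factor_g: float


class DecisionAlgorithm(Protocol):
    """Anything with a matching decide() method can be loaded."""
    def decide(self, obs: Observation) -> Command: ...


class TurnToTarget:
    """Toy rule-based algorithm: bank toward the target, limited to 60 deg."""
    def decide(self, obs: Observation) -> Command:
        roll = max(-60.0, min(60.0, obs.target_bearing_deg))
        return Command(roll_deg=roll, load_factor_g=2.0)


class HoldLevel:
    """Toy fallback algorithm: wings level at 1 g."""
    def decide(self, obs: Observation) -> Command:
        return Command(roll_deg=0.0, load_factor_g=1.0)


class AlgorithmSlot:
    """Platform side: holds whichever algorithm is currently loaded."""
    def __init__(self, algorithm: DecisionAlgorithm) -> None:
        self._algorithm = algorithm

    def load(self, algorithm: DecisionAlgorithm) -> None:
        # Swapping the algorithm requires no change to platform code.
        self._algorithm = algorithm

    def step(self, obs: Observation) -> Command:
        return self._algorithm.decide(obs)
```

Because the platform only depends on the `decide` signature, a new algorithm can be loaded between test points by calling `load`, which is the property the “data collection–training–deployment” loop relies on in this simplified picture.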

3.2.2. Reduce the Risks of Intelligent Technology Aerial Verification

The test platform can reduce risks through various intelligent technologies. Firstly, the test platform can replicate extreme conditions, such as wind gusts and electromagnetic interference, which makes it feasible to test the robustness of AI systems in a controlled environment. In the “Autonomous Air Confrontation Operations (AACO)” project [105], the X-62A exploited this advantage by simulating adversarial training of AI decision-making models and conducting beyond-visual-range air confrontation simulations in complex electromagnetic environments. This effectively prevented mission failures that might otherwise have occurred in actual confrontation because of algorithmic flaws. Additionally, the test platform provides air and ground collision avoidance functions and can take timely avoidance measures when potential risks are detected, further enhancing the safety of airborne verification.
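The idea of a safety function that overrides the AI when a collision risk is detected can be sketched as a simple run-time monitor. The example below is a minimal illustration under stated assumptions: the time-to-ground criterion, the 5 s margin, and the `"RECOVER"` command are hypothetical and do not describe the actual collision avoidance logic of any test aircraft.

```python
def time_to_ground_s(altitude_agl_m: float, vertical_speed_mps: float) -> float:
    """Seconds until ground contact at the current descent rate.

    Returns infinity when the aircraft is level or climbing.
    """
    if vertical_speed_mps >= 0.0:
        return float("inf")
    return altitude_agl_m / -vertical_speed_mps


def select_command(ai_command: str, altitude_agl_m: float,
                   vertical_speed_mps: float, min_time_s: float = 5.0) -> str:
    """Pass the AI command through unless the assumed time margin is violated."""
    if time_to_ground_s(altitude_agl_m, vertical_speed_mps) < min_time_s:
        return "RECOVER"  # hypothetical recovery maneuver takes priority
    return ai_command
```

For example, at 300 m above ground with a 100 m/s descent rate the margin is 3 s, so the monitor replaces the AI command with the recovery maneuver; at 1000 m the same descent rate leaves 10 s and the AI command passes through unchanged.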

3.2.3. Promote the Emergence of Intelligent Aviation Capabilities

The test aircraft platform serves as a verification platform for intelligent technologies, continuously driving the emergence of intelligent aviation capabilities. In this context, “emergence” refers to the generation of new and more advanced intelligent capabilities through technical verification and iteration on the test aircraft platform. These capabilities are not the simple sum of individual technologies or components, but the result of the collaborative effect of the entire system. For instance, the AI system of the X-62A was the first to achieve close-range air confrontation with human pilots, giving rise to new capabilities such as group intelligent decision-making and enabling intelligent agents to leap from basic tactical rules to beyond-visual-range decision-making. The autonomous navigation system technology of the X-37B has promoted the transformation of the US military’s air confrontation concept from “platform-centered warfare” to “intelligent collaborative warfare”, significantly enhancing space deterrence capability. The Alpha system passed the flight test on the F-16 validation aircraft. Its Genetic Fuzzy Tree (GFT) algorithm can process the full set of air confrontation data within 1 millisecond [106]. It not only achieves a 100% success rate in simulated confrontation, but can also autonomously allocate attack priorities and dynamically adjust evasion strategies in multi-target scenarios, forming an “Autonomous Gaming” capability that surpasses single tactical rules. The emergence of this capability rests on the high-fidelity verification environment and rapid iteration support provided by the test platform, which further demonstrates the critical role of the platform’s “promoting capability emergence” advantage in realizing “autonomous gaming”.

3.2.4. Provide Flexible Test Platforms

The test platform is equipped with a programmable simulation system, which has created a highly flexible verification environment. Its core advantage lies in the ability to dynamically reconfigure the verification scenarios, enabling precise reproduction of the flight characteristics of the world’s top confrontation aircraft and even undisclosed aircraft of other countries. This dynamic simulation capability covers all elements such as flight quality, human–machine interface, and weapon system logic, providing a universal verification base for intelligent algorithms.

Firstly, the modular design of the platform gives it excellent task flexibility, enabling a single aircraft to support the parallel verification of multiple tasks. For instance, the “Digital Commander” system of the X-62A achieves the mode transition from manned flight to unmanned wingman cluster control through dynamic task allocation during the same flight test, significantly enhancing the effectiveness of mixed formation operations. Secondly, the test aircraft platform can flexibly configure intelligent module interfaces, allowing different intelligent algorithms to be loaded and replaced rapidly. This high configurability enables researchers to verify the performance of various algorithms in a short period of time, accelerating technological iteration. For example, the X-62A platform integrates system autonomous control simulation (SACS) and the model following algorithm (MFA) [107,108], which has enabled the rapid testing and verification of various AI algorithms. Finally, the open system architecture of the X-62A platform can simulate the performance characteristics of different types of aircraft, providing validation scenarios for the adaptability and generalization ability of AI algorithms. This flexible configuration capability has significantly reduced the verification cost and greatly shortened the research and development cycle of new aircraft models. The Russian Tu-154M-LL (FACT) CCV platform uses its “full degree-of-freedom flight simulation” function to validate the control technologies of various transport aircraft and passenger planes. Its flexible mode of “simulating the control characteristics of multiple aircraft on a single platform” forms an alternative to the flexible path of the US X-62A, which “loads multiple algorithms in parallel”. The former focuses on “the flexibility of control characteristic simulation”, while the latter emphasizes “the flexibility of intelligent algorithm loading”. Both modes confirm the core advantage of the test platform as a “flexible carrier” and show that the definition of “flexible carrier” in the technical framework can cover different technical directions, enhancing the global applicability of the framework.

In conclusion, the test aircraft platform, leveraging its four core advantages (efficient iteration, controllable risks, emergent capabilities, and flexible configuration), has established a bridge from theoretical exploration to practical application of intelligent technologies. In the future, as AI integrates with cutting-edge technologies such as 5G and quantum computing, the test aircraft platform will evolve into a key pathway for the aviation sector to transition to the “intelligent autonomous” era, fostering technological breakthroughs such as air confrontation decision-making, cluster collaboration, and autonomous confrontation, and establishing a research and development chain of “artificial intelligence theory–test aircraft platform–practical application”.

The four major advantages of the test aircraft platform provide the core support for the implementation of intelligent air confrontation technology. However, these advantages need to be transformed into operational engineering paths through a systematic technical framework. This chapter, based on the existing test platforms in China, constructs a complete technical implementation framework of “demand analysis–platform construction–ground verification–test evaluation”, and clarifies the key technologies and connection logic of each link.

4. Technical Implementation Framework for the Deep Integration of Intelligent Technologies and Flight Testing

Given the current level of testing equipment in China, the conditions are sufficient to carry out the deep integration of intelligent technology and flight tests. Therefore, this paper aims to build an intelligent air confrontation test platform and a technical framework for the verification of artificial intelligence technology. This framework is based on the integrated air-ground test environment, with the intelligent test aircraft as the core carrier. It covers three key links: requirement analysis, platform construction and capability generation, and ground verification and technical assessment. It also integrates core technologies such as network security, data management, and human–computer collaboration, providing technical support for the engineering application of intelligent air confrontation technology.

This technical implementation framework centers on the core objective of “establishing a comprehensive verification chain for intelligent algorithms from laboratory R&D to actual flight testing.” Adhering to systems engineering principles, it adopts a three-tier progressive structure: “requirements-driven, platform-supported, and closed-loop verification.” The bottom layer (focused on analysis and test environment design) defines objectives and establishes foundational environments, clarifying the capability boundaries of intelligent air confrontation systems and multi-dimensional testing environment requirements to provide design basis for upper layers. The middle layer (Intelligent Testbed Platform Construction and Capability Generation Layer), serving as the core execution vehicle of the framework, translates bottom-layer requirements into four specific capabilities: perception, decision-making, control, and security. The top layer (Ground Verification and Test Evaluation Layer) validates through closed-loop testing whether the middle-layer platform capabilities meet the bottom-layer requirements, forming a complete logical chain of “requirements–construction–verification–optimization.” This three-tier architecture employs a Modular Open Systems Approach (MOSA), standardized interfaces, and high-speed data links for deep integration, ensuring seamless transmission of both technical and data flows.

4.1. Requirement Analysis and Test Environment Design Layer

4.1.1. Capability Requirement Analysis of Intelligent Air Confrontation System

To ensure that the constructed air-ground integrated test environment is compatible with different types of intelligent air confrontation systems, the capabilities and requirements of intelligent air confrontation systems are analyzed. The analysis framework is shown in Figure 8.

Firstly, in terms of system capability modeling and analysis, an intelligent air confrontation system capability ontology model is constructed, and the capability boundaries of different types of systems (such as autonomous air confrontation systems and human–machine collaborative systems) are defined.

The capability ontology model refers to the structured description of the core elements, capability dimensions, and interaction logic in the confrontation domain. It essentially constitutes a multi-level, dynamically evolving knowledge system. The core elements include confrontation units (such as manned aircraft, unmanned vehicles, and missiles), environmental elements (such as weather conditions and electromagnetic interference), and mission types (such as air superiority operations and ground strike operations). The capability dimensions include perception capabilities (such as target recognition and situation understanding), decision-making capabilities (such as tactical planning and path optimization), and execution capabilities (such as maneuver control and firepower strike). The interaction logic refers to the explicit relationships between core elements, between capability dimensions, and between core elements and capability dimensions, such as the impact of electromagnetic interference on aircraft, the support that perception results give to decision-making, and the influence of situational awareness capabilities on manned–unmanned collaborative confrontation units.
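One way to make this three-part description concrete is a small data structure that stores elements, capability dimensions, and interaction triples. The sketch below is an assumption-laden illustration: the class `CapabilityOntology`, its method names, and the relation labels `"degrades"` and `"supports"` are hypothetical; only the example elements and capabilities are taken from the text.

```python
from dataclasses import dataclass, field


@dataclass
class CapabilityOntology:
    # Core elements grouped by kind, using the examples given in the text.
    elements: dict[str, set[str]] = field(default_factory=dict)
    # Capability dimensions and their example capabilities.
    capabilities: dict[str, set[str]] = field(default_factory=dict)
    # Interaction logic stored as (source, relation, target) triples.
    relations: list[tuple[str, str, str]] = field(default_factory=list)

    def known_names(self) -> set[str]:
        """All group names and member names defined in the model."""
        names: set[str] = set()
        for group in (self.elements, self.capabilities):
            for kind, members in group.items():
                names.add(kind)
                names |= members
        return names

    def relate(self, source: str, relation: str, target: str) -> None:
        """Record an interaction, rejecting terms the model does not define."""
        unknown = {source, target} - self.known_names()
        if unknown:
            raise KeyError(f"undefined ontology terms: {sorted(unknown)}")
        self.relations.append((source, relation, target))

    def affected_by(self, source: str) -> list[str]:
        """Targets of every recorded interaction starting at `source`."""
        return [t for s, _, t in self.relations if s == source]


ontology = CapabilityOntology(
    elements={
        "confrontation_unit": {"manned_aircraft", "unmanned_vehicle", "missile"},
        "environment": {"weather", "electromagnetic_interference"},
        "mission": {"air_superiority", "ground_strike"},
    },
    capabilities={
        "perception": {"target_recognition", "situation_understanding"},
        "decision": {"tactical_planning", "path_optimization"},
        "execution": {"maneuver_control", "firepower_strike"},
    },
)
ontology.relate("electromagnetic_interference", "degrades", "manned_aircraft")
ontology.relate("perception", "supports", "decision")
```

Rejecting undefined terms in `relate` keeps the interaction logic consistent with the declared elements, which matters once the model evolves across several system types.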

The capability boundary refers to the range and limitations of the stable operational capabilities of an intelligent air confrontation system in specific scenarios. It is influenced by various factors such as technological maturity, environmental complexity, and task requirements, and defines the practical limits of the system. For instance, in terms of technological maturity, although autonomous air confrontation systems possess the ability for autonomous decision-making, they still require human supervision in complex game scenarios; in terms of environmental complexity, electronic interference and cyber attacks may reduce the efficiency of AI systems; in terms of task requirements, autonomous air confrontation systems excel at performing standardized tasks, but may perform inadequately in multi-task switching or cross-domain operations.

Furthermore, based on the system capability model, the test environment requirements are decomposed from various aspects such as functional requirements (such as target simulation, interference injection), equipment requirements (such as sensor types and performance), electrical requirements (such as power supply, interface standards), data requirements (such as data bandwidth, format), and network security requirements (such as network offensive and defensive capabilities, data encryption level), to form a structured requirement analysis result.

Finally, typical confrontation scenarios are selected, such as close-range confrontation and beyond-visual-range air confrontation, and benchmark intelligent air confrontation algorithm samples are developed. For instance, an autonomous decision-making framework for close-range air confrontation is constructed on the basis of the proximal policy optimization algorithm; using deep reinforcement learning, it achieves intelligent maneuvering decisions and tactical games in air confrontation scenarios ranging from beyond-visual-range to visual-range. These algorithm samples provide an empirical basis for requirement analysis.
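For readers unfamiliar with proximal policy optimization (PPO), its core is the clipped surrogate objective, which limits how far a single update can move the policy. The following sketch shows that standard objective in plain Python; the function name and the clipping value of 0.2 are illustrative assumptions and do not reflect the configuration of the benchmark samples described above.

```python
import math


def ppo_clip_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective over a batch (to be maximized).

    new_logp / old_logp: log-probabilities of the taken actions under the
    updated and the data-collecting policy; advantages: advantage estimates.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        total += min(ratio * adv, clipped * adv)  # pessimistic bound
    return total / len(advantages)
```

When the probability ratio leaves the interval [1 − ε, 1 + ε] in the direction that would increase the objective, the clipped term takes over and the gradient with respect to that sample vanishes, which is what stabilizes training of maneuver policies in this class of methods.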

4.1.2. Air-Ground Integrated Test Environment Architecture Design

In order to provide a universal air-ground integrated test environment for different intelligent air confrontation systems, an air-ground integrated test environment architecture with the intelligent test aircraft as the aerial node and simulators and digital simulations as the ground nodes is established, as shown in Figure 9.

Firstly, the principles of modularization, openness, and reconfigurability are adhered to, and the Modular Open System Approach (MOSA) is adopted to ensure the flexibility and scalability of the test environment. At the same time, network security and data management are integrated into the architectural design.

Secondly, based on typical air confrontation scenarios, a distributed layout is designed with the intelligent test aircraft as the airborne node and high-fidelity simulators and digital simulations (such as digital twin systems) as the ground nodes. The interconnection relationships among these nodes are also clarified. The airborne node provides a real flight platform and sensor data, while the ground nodes offer combined virtual and real confrontation scenarios and simulation analysis capabilities. High-speed data links provide information exchange between air and ground and enable closed-loop verification.

Furthermore, based on the decomposition results of the test environment requirements, a pyramid-shaped four-layer hierarchical interface architecture is constructed. From bottom to top, the four layers are the data interface, electrical interface, device interface, and function interface. They correspond, respectively, to the intelligent algorithm level, intelligent chip level, intelligent component level, and intelligent system level. Standardized interface specifications are defined for each layer to achieve plug-and-play and rapid integration of test resources, thereby meeting the compatibility requirements of multi-level and multi-dimensional test environments for intelligent systems, intelligent components, intelligent chips, and intelligent algorithms. At the same time, security interfaces are considered to ensure the security of data transmission.
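The layer-to-level correspondence stated above can be written down as a small lookup. In the sketch below, the enum and dictionary encode only what the text states; the rule in `required_layers`, that an item under test must satisfy its own layer and every layer beneath it in the pyramid, is an assumption made for illustration.

```python
from enum import IntEnum


class InterfaceLayer(IntEnum):
    """Pyramid layers, numbered from bottom (1) to top (4) as in the text."""
    DATA = 1
    ELECTRICAL = 2
    DEVICE = 3
    FUNCTION = 4


# Layer-to-level correspondence stated in the text.
LEVEL_OF_LAYER = {
    InterfaceLayer.DATA: "intelligent algorithm",
    InterfaceLayer.ELECTRICAL: "intelligent chip",
    InterfaceLayer.DEVICE: "intelligent component",
    InterfaceLayer.FUNCTION: "intelligent system",
}


def required_layers(level: str) -> list[InterfaceLayer]:
    """Interfaces an item at `level` must satisfy (assumed: own layer and below)."""
    top = next(layer for layer, name in LEVEL_OF_LAYER.items() if name == level)
    return [layer for layer in InterfaceLayer if layer <= top]
```

Under this assumption, an intelligent algorithm only needs to conform to the data interface, whereas a complete intelligent system must conform to all four layers.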

The requirements analysis and test environment design layer provides clear guidance for the construction of the intelligent test aircraft platform through two core outputs. The first is a capability requirement list which, based on the intelligent air confrontation system capability ontology model (defining dimensions such as perception, decision-making, and execution), clearly defines technical indicators in different scenarios; the platform construction layer then configures multimodal sensor arrays and a DRL + MCTS integrated decision-making algorithm accordingly. The second is a standardized interface specification, built on the pyramid-shaped four-layer interface (data interface, electrical interface, etc.), which directly guides the modular configuration of platform hardware. For example, the X-62A implements the MOSA architecture’s plug-and-play functionality based on this specification, flexibly integrating the MFA algorithm and the VSS system [109]. At the same time, the platform construction layer optimizes the requirements layer in the reverse direction through “capability feasibility feedback”: if a requirement (such as no loss of perception in extreme electromagnetic environments) exceeds the current technical level, the platform layer feeds this back to the requirements layer so that the indicators are adjusted and the requirements match engineering practice.

4.2. Intelligent Test Aircraft Platform Construction and Capability Generation Layer

This layer focuses on building the core capabilities of the intelligent test aircraft platform, endowing the test aircraft with generalized perception, decision-making, control, and safety assurance capabilities. The construction plan is shown in Figure 10.

4.2.1. Intelligent Perception Capability Construction

Firstly, to ensure that the test aircraft can meet the situational awareness requirements of different intelligent air confrontation systems, it is necessary to analyze the components, performance, operating conditions, and control methods of different airborne situational awareness systems, including but not limited to radar systems, infrared search and tracking systems, electronic reconnaissance equipment, and optical sensors. Based on this analysis, a sensor array suitable for different confrontation tasks or scenarios can be established. Subsequently, the spatial dimensions, heat dissipation, and energy requirements of each sensing system in the array are considered together with the pyramid-style four-layer hierarchical interface architecture constructed earlier, so that a suitable situational awareness system can be selected for the intelligent test aircraft and configured in a modular manner. This allows a rapid response to different sensing requirements, enables the test aircraft to flexibly configure and autonomously control the situational awareness system according to task requirements, and enhances the generality of its sensing capability. Furthermore, heterogeneous sensors such as radar, optoelectronic, and electronic support sensors can be integrated and a multi-source information fusion algorithm established, thereby enhancing the test aircraft’s ability to perceive complex electromagnetic environments.
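The text does not specify which multi-source information fusion algorithm is used. As a minimal illustration of the principle, the sketch below applies textbook inverse-variance weighting to scalar measurements of the same quantity (for example, target range) from several sensors; the function name and the assumption of independent, unbiased sensors are illustrative choices, not the method of any particular platform.

```python
def fuse_estimates(measurements):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. Assumes independent,
    unbiased sensors measuring the same scalar quantity.
    """
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total
```

More precise sensors receive larger weights, and the fused variance is never larger than that of the best individual sensor, which is the basic reason why combining radar, optoelectronic, and electronic support data can improve perception in a degraded environment.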

The Chinese J-10B/C thrust-vectoring verification aircraft, as a core domestic test aircraft platform, has built its perception capability in a way that is highly consistent with the technical logic of this section of the framework. Refitted with a domestic thrust-vectoring engine, the platform integrates multimodal sensors such as radar, optoelectronic, and electronic support sensors and, based on a domestic multi-source information fusion algorithm, achieves precise identification and tracking of airborne targets in complex electromagnetic environments. Its technical path is consistent with the framework’s requirement of “modular configuration of sensing arrays and optimization of fusion algorithms”. In addition, the flight control system of the J-10B/C has an adaptive adjustment function that can simulate the control characteristics of different generations of fighter aircraft, providing data support for optimizing the flight control algorithms of domestic stealth fighter aircraft (such as the J-20). This practice also verifies the design logic of the framework that “platform capabilities need to match the research and development needs of domestic equipment” and has become a typical case of the framework’s application on domestic test platforms.

Figure 10. Construction of intelligent perception capability.

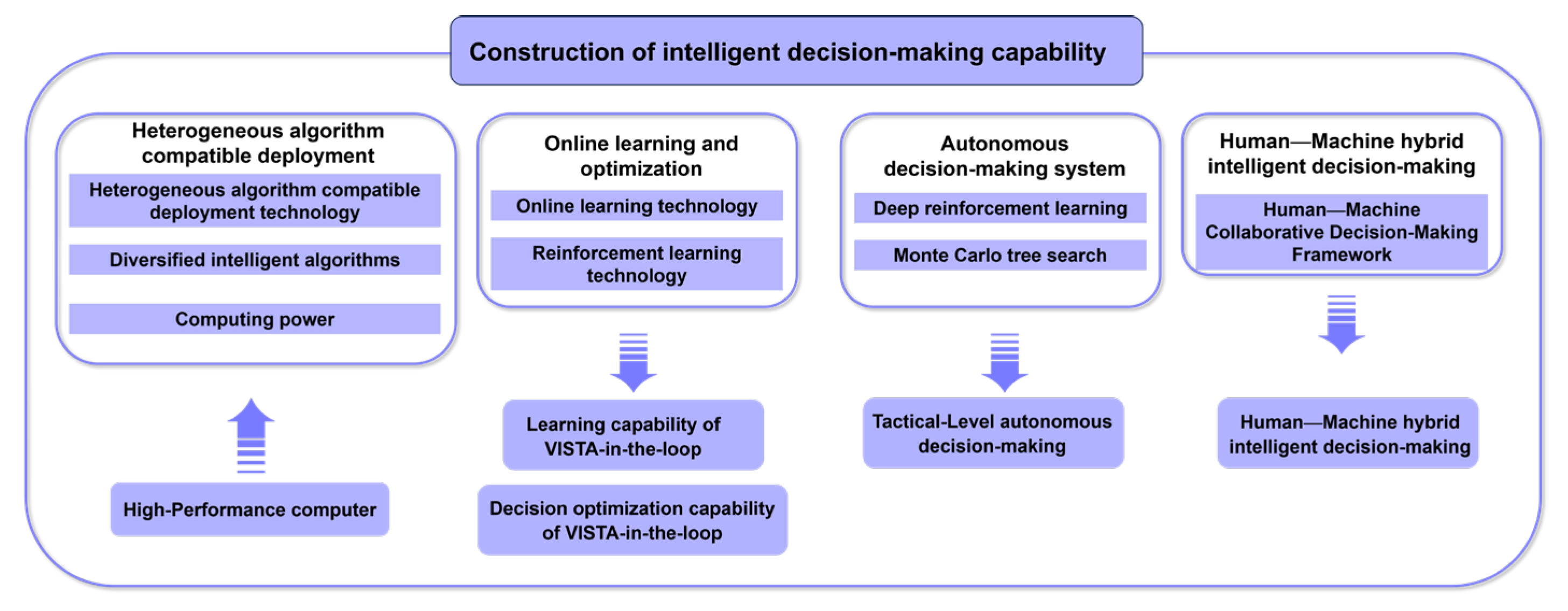

4.2.2. Intelligent Decision-Making Capability Construction

To build an intelligent decision-making system that meets the requirements of air confrontation, construction focuses on four aspects, as shown in Figure 11, taking into account the airborne application scenarios, computing characteristics, and computing power demands of intelligent air confrontation algorithms. Firstly, to support the compatible deployment of heterogeneous algorithms, high-performance hardware architectures and highly compatible software deployment environments suited to different learning frameworks (such as TensorFlow and PyTorch) [110] are analyzed, and a high-performance computing platform is established to run diverse intelligent algorithms efficiently. Secondly, building on this computing platform, online learning and reinforcement learning technologies are explored to give the test aircraft in-the-loop learning and decision optimization capabilities, making the intelligent algorithms more practical. Thirdly, to achieve full-process autonomy in “Autonomous Gaming”, the framework integrates a fusion of deep reinforcement learning (DRL) and Monte Carlo Tree Search (MCTS) at the decision-making capability layer [111]: DRL provides tactical innovation and dynamic confrontation capability, while MCTS improves the optimality of decisions across multiple scenarios. Finally, a human–machine collaborative decision-making framework is designed to realize human–machine hybrid intelligent decision-making and to enhance the decision-making ability of the test aircraft. This framework also reserves a transitional space: at the current stage, the “human supervision + AI-led decision-making” mode allows the reliability of “Autonomous Gaming” to be verified step by step, and the longer-term goal, reached through iterative optimization of the framework, is high-level autonomous gaming without human intervention.
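One common way to couple a learned policy with tree search is to let the policy’s action probabilities act as priors in the MCTS selection rule (the PUCT rule popularized by AlphaZero-style systems). The sketch below illustrates that idea only; the source does not state which fusion scheme the framework uses, and the `Node` class, the action names, and the constant `c_puct` are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    prior: float                      # probability assigned by the (assumed) DRL policy
    visits: int = 0
    value_sum: float = 0.0
    children: dict = field(default_factory=dict)   # action name -> Node

    def mean_value(self) -> float:
        # Average backed-up value; 0.0 for an unvisited node.
        return self.value_sum / self.visits if self.visits else 0.0


def puct_score(parent: Node, child: Node, c_puct: float = 1.5) -> float:
    # Exploitation term (mean value) plus a prior-weighted exploration bonus.
    exploration = c_puct * child.prior * math.sqrt(parent.visits) / (1 + child.visits)
    return child.mean_value() + exploration


def select_action(parent: Node, c_puct: float = 1.5) -> str:
    # Pick the child action with the highest PUCT score.
    return max(parent.children,
               key=lambda a: puct_score(parent, parent.children[a], c_puct))


def backup(path: list, value: float) -> None:
    # Propagate a simulated outcome along the visited path.
    # Single-perspective backup: no sign flip between tree levels.
    for node in path:
        node.visits += 1
        node.value_sum += value
```

In this arrangement the policy prior steers early search toward maneuvers the DRL agent already favors, while repeated backups let the search override the prior when simulated outcomes are poor.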

4.2.3. Intelligent Control Capability Construction

To meet the requirements of intelligent air confrontation algorithms for multi-modal control of the aircraft, the corresponding control capabilities are added to the intelligent test aircraft on the basis of its existing hardware architecture. Firstly, based on the control characteristics of different intelligent algorithms, the coupling between the command tracking modes (pitch angle, roll angle, pitch rate, roll rate, angle of attack, overload, and vertical speed control) and air confrontation maneuver trajectory planning is analyzed. A flight control law system with two modes, command response and trajectory tracking, is then established so that the aircraft can track the maneuver commands output by the intelligent decision-making system with high precision. In addition, a multi-modal control switching logic based on state monitoring is constructed. Transition-state energy management strategies and anomaly-handling mechanisms enable smooth switching between control modes such as autonomous air confrontation and manual intervention, ensuring flight safety. The final construction scheme is shown in Figure 12.

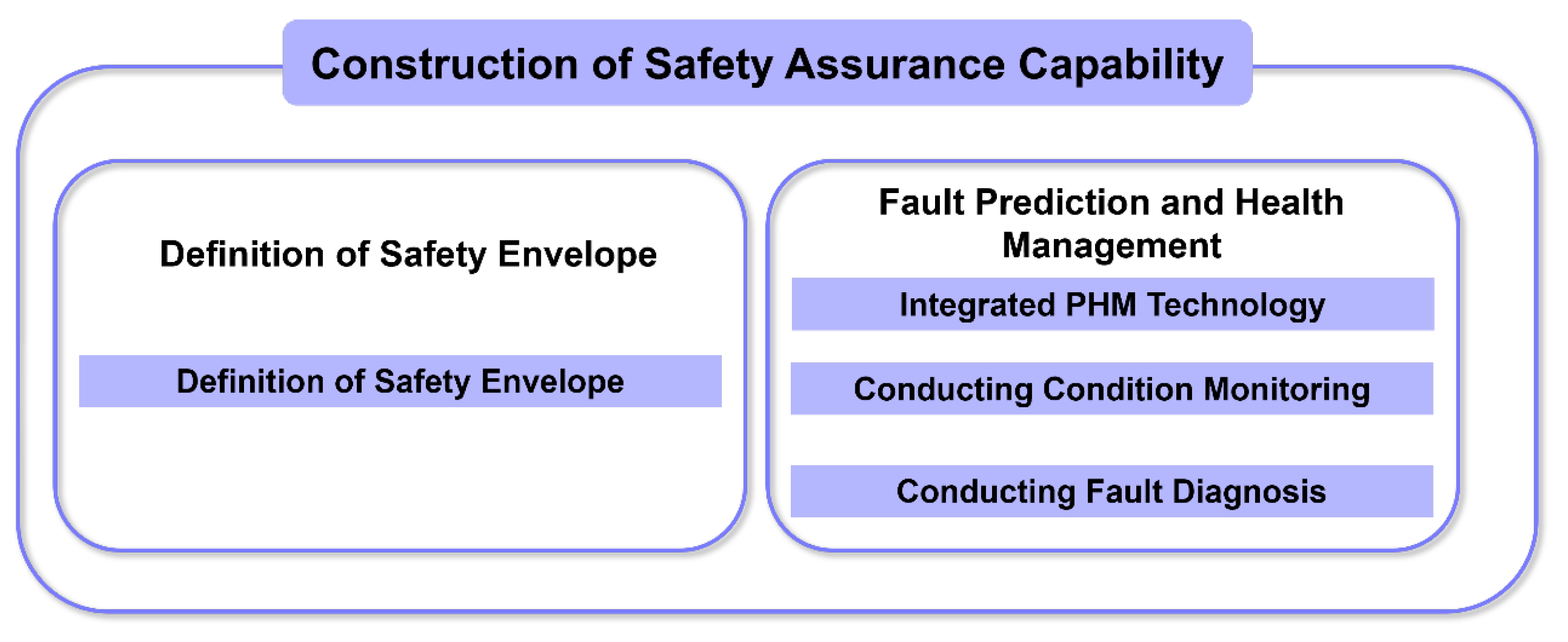
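The state-monitoring-based switching logic described above can be sketched as a small state machine. This is a simplified illustration under stated assumptions: the two modes, the boolean monitor inputs, and the linear command blend used to smooth the transition are all hypothetical, and the blend is a stand-in for, not an implementation of, the transition-state energy management strategy.

```python
from enum import Enum


class Mode(Enum):
    MANUAL = "manual"
    AUTONOMOUS = "autonomous"


def next_mode(current: Mode, pilot_override: bool, envelope_ok: bool,
              health_ok: bool, engage_requested: bool) -> Mode:
    # Manual intervention or any monitored anomaly always returns control to the pilot.
    if pilot_override or not envelope_ok or not health_ok:
        return Mode.MANUAL
    # Autonomous mode is entered only on explicit request from a healthy state.
    if current is Mode.MANUAL and engage_requested:
        return Mode.AUTONOMOUS
    return current


def blended_command(manual_cmd: float, auto_cmd: float, alpha: float) -> float:
    # Linear fade between command sources; alpha = 0 is fully manual, 1 fully autonomous.
    alpha = min(1.0, max(0.0, alpha))
    return (1.0 - alpha) * manual_cmd + alpha * auto_cmd
```

Ramping `alpha` over a short interval after a mode change avoids a step in the commanded value, which is the basic purpose of a smooth switching scheme.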

4.2.4. Safety Assurance Capability Construction

To ensure the safety of intelligent air confrontation system verification and reduce test risk, the intelligent test aircraft must be given safety assurance capabilities. Firstly, based on the characteristics of the variable-stability aircraft architecture, a safety envelope is established for the test aircraft. The safety envelope keeps the aircraft within safe flight parameter ranges during intelligent air confrontation, covering parameters such as flight speed, altitude, angle of attack, overload, and rate limits. Secondly, Prognostics and Health Management (PHM) technology is integrated to perform condition monitoring and fault diagnosis of key systems, including system self-test, redundancy management, fault monitoring, prediction, diagnosis, and mode switching [112]. The final construction scheme for safety assurance capabilities is shown in Figure 13.
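A safety envelope of this kind can be pictured as a set of per-parameter limits checked on every control cycle. The following is a minimal sketch; the parameter names and all numerical limits are hypothetical values chosen for illustration and are not taken from any real aircraft.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical limits for illustration only.
ENVELOPE = {
    "airspeed_mps": Limit(80.0, 600.0),
    "altitude_m": Limit(500.0, 15000.0),
    "aoa_deg": Limit(-10.0, 25.0),
    "load_factor_g": Limit(-3.0, 9.0),
    "roll_rate_dps": Limit(-240.0, 240.0),
}


def envelope_violations(state: dict) -> list:
    # Return the monitored parameters that are missing or outside their limits,
    # in the order they are declared in ENVELOPE.
    violations = []
    for name, limit in ENVELOPE.items():
        value = state.get(name)
        if value is None or not limit.contains(value):
            violations.append(name)
    return violations


def ai_control_permitted(state: dict) -> bool:
    # The intelligent algorithm keeps authority only while no limit is violated.
    return not envelope_violations(state)
```

Treating a missing parameter as a violation is a deliberately conservative choice: if the monitor cannot see a value, it does not assume the value is safe.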

Measured data from the X-62A and J-10B/C point to three typical failure modes. First, the MOSA architecture is prone to “interface compatibility failures”. For example, when the X-62A loaded the VSS system from Calspan, a voltage deviation on the electrical interface (28 V nominal, 32 V actual) interrupted data transmission; the cause was that the third-party module did not fully follow the MOSA interface specification. Second, multi-modal sensor fusion is prone to “data conflict failures”. Under strong electromagnetic interference on the J-10B/C, jumps in the radar data conflicted with the stable electro-optical data and distorted the fusion result; the cause was that the weight allocation algorithm had not been optimized for extreme environments. Third, the DRL decision algorithm is prone to “strategy deviation failures”. In the Autonomous Air Confrontation Operations (AACO) project of the X-62A, the training data did not cover the “simultaneous maneuver of multiple targets” scenario, so the decision algorithm selected the wrong avoidance path; the cause was that the scenario coverage of the training set was below 60%.
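To make the “data conflict” failure concrete, the sketch below fuses two range measurements by inverse-variance weighting and shows how inflating the variance of a sensor that jumps between frames reduces its influence. This is a generic textbook technique offered as an illustration, not the platform’s fusion algorithm; the function names, the jump threshold, and the penalty factor are assumptions.

```python
def fuse(measurements):
    # measurements: list of (value, variance) pairs.
    # Returns the inverse-variance weighted mean of the values.
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    return sum(w * value for w, (value, _) in zip(weights, measurements)) / total


def inflate_on_jump(value, previous, variance, max_step, penalty=100.0):
    # If a sensor changes by more than max_step between frames, treat it as
    # unreliable for this frame by inflating its variance (hypothetical rule).
    if abs(value - previous) > max_step:
        return variance * penalty
    return variance


# Radar range jumps from 1000 m to 1400 m; the electro-optical range stays near 1010 m.
naive = fuse([(1400.0, 25.0), (1010.0, 100.0)])
radar_var = inflate_on_jump(1400.0, 1000.0, 25.0, max_step=150.0)
gated = fuse([(1400.0, radar_var), (1010.0, 100.0)])
```

With fixed weights the fused value follows the jumping radar; once the radar variance is inflated, the estimate stays close to the stable sensor.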

The intelligent test aircraft platform construction layer imports the perception, decision-making, control, and safety capabilities it generates into the ground verification environment through the standardized interfaces defined in the demand layer. In hardware-in-the-loop (HIL) simulation, the platform’s DRL air confrontation decision-making algorithm is loaded into the simulation system and verified together with the sensor and dynamic models; in the VR scenario, the platform’s multi-modal control capabilities (switching between autonomous and manual modes) are tested under simulated electromagnetic interference and gusts. The ground verification layer in turn drives platform optimization through “test data feedback”. If verification finds that the target allocation accuracy in multi-target scenarios is only 80% (below the 95% standard of the demand layer), the incorrectly allocated cases and the algorithm parameters are fed back to the platform layer, and the search depth of the MCTS algorithm is adjusted. If the safety envelope gives false alarms at 8 g overload, the envelope threshold parameters to be optimized are fed back. Together these form a “construction–verification–optimization” loop that embodies the connection logic of “capability import–test feedback–platform optimization”.
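The feedback step of this loop can be sketched as a comparison of measured indicators against demand-layer requirements, with each shortfall routed to the platform parameter to be revisited. The indicator names and the mapping in `TUNING_TARGET` are hypothetical; only the 80% versus 95% example and the link to MCTS search depth come from the text.

```python
from dataclasses import dataclass

# Hypothetical mapping from a failed indicator to the platform parameter to revisit.
TUNING_TARGET = {
    "target_allocation_accuracy": "mcts_search_depth",
}


@dataclass(frozen=True)
class Feedback:
    indicator: str
    measured: float
    required: float
    tune: str


def collect_feedback(measured: dict, required: dict) -> list:
    # Compare each required indicator with its measured value and emit one
    # feedback item per shortfall; a missing measurement counts as 0.0.
    items = []
    for name, target in required.items():
        value = measured.get(name, 0.0)
        if value < target:
            items.append(Feedback(name, value, target,
                                  TUNING_TARGET.get(name, "unassigned")))
    return items


required = {"target_allocation_accuracy": 0.95}
first_round = collect_feedback({"target_allocation_accuracy": 0.80}, required)
```

An empty list signals that the verification round met every requirement, so the loop can terminate for those indicators.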

4.3. Ground Verification and Test Evaluation Layer

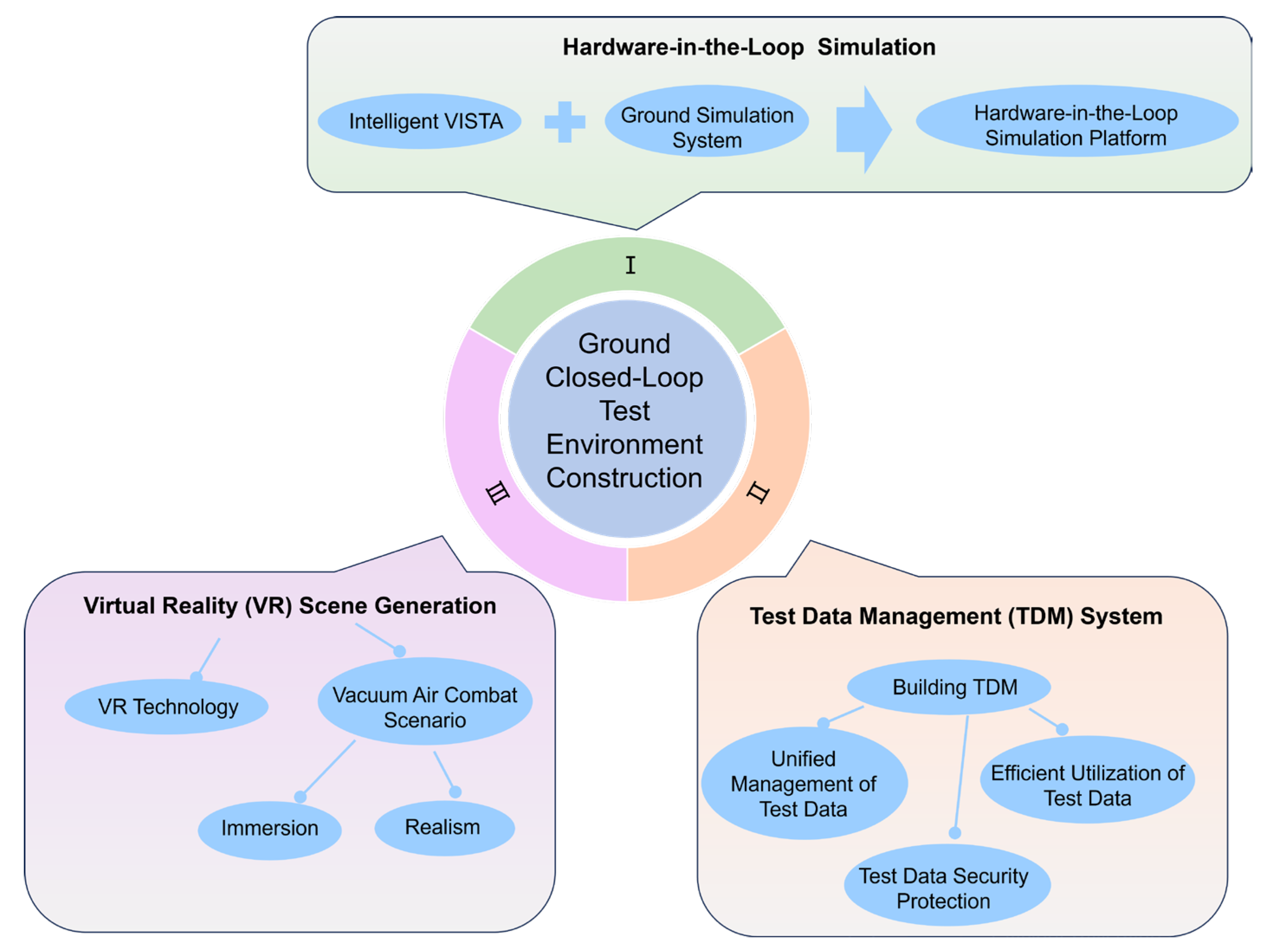

4.3.1. Ground Closed-Loop Test Environment Construction

This layer builds a ground closed-loop test environment for the intelligent air confrontation system with the aircraft in the loop. The intelligent confrontation algorithms established in the “Requirement Analysis and Test Environment Design Layer”, together with the intelligent decision-making algorithms, the safety envelope, and the Prognostics and Health Management (PHM) safety protection mechanisms established in the “Intelligent Test Aircraft Platform Construction and Capability Generation Layer”, are connected to the air-ground integrated test environment through a pyramid-style four-layer hierarchical interface. The sensor, dynamic, fire control, and weapon models required to run the intelligent air confrontation system are established through simulation, and a test monitoring program is developed. During construction, environment function verification tests are carried out in parallel to ensure that the ground closed-loop test environment operates normally. The environment comprises three parts: hardware-in-the-loop (HIL) simulation, virtual reality (VR) scene generation, and the test data management (TDM) system. HIL simulation integrates the intelligent test aircraft with the ground simulation system to form a hardware-in-the-loop simulation platform; VR scene generation uses VR technology to construct realistic air confrontation scenes, improving the immersion and fidelity of ground verification; and the TDM system provides unified management, efficient utilization, and security protection of test data. The final construction plan is shown in Figure 14.

China’s “Shenying-1” air confrontation intelligent simulation system, a core ground platform for intelligent air confrontation verification in China, is closely aligned in its functional design with the “ground verification layer” of this framework. The system realizes 32 × 32 air confrontation intelligent simulation, adopts genetic algorithms for the first time to solve the problem of selecting among multiple strike targets, and establishes air confrontation databases, case libraries, and rule libraries. It can be regarded as a concrete implementation of the framework’s “ground verification layer” in China. On the one hand, the “Shenying-1” simulation environment can simulate complex air confrontation scenarios and provide preliminary virtual verification for the AI algorithms (such as autonomous decision-making algorithms) of the J-10B/C and other aerial test platforms, which reduces test flight risk and corresponds to the framework’s core goal of “HIL simulation to reduce test flight costs”. On the other hand, the massive simulation data generated by the system can be linked with the test flight data of the aerial platforms and analyzed in a unified manner through the test data management (TDM) system to support the iterative optimization of AI algorithms. This “virtual simulation–test flight verification” linkage is a practical manifestation of the framework’s “space-ground-air integrated verification” logic in China.

4.3.2. Test Verification and Evaluation Methods

As shown in Figure 15, to verify the effectiveness of the intelligent test aircraft platform, a high-fidelity verification environment was constructed based on HIL simulation and VR scene generation technology. A test plan was designed around typical confrontation scenarios such as close-range confrontation. The plan first generated test cases covering functional, performance, and safety requirements for the different confrontation scenarios. Through the tactical action sequences in the tests, core functions such as perception, autonomous decision-making, and multi-modal control were verified and their execution effectiveness was evaluated; this effectiveness in turn reflects the effectiveness of the artificial intelligence algorithms supporting these capabilities. At the same time, abnormal conditions such as extreme overload and sensor failure were introduced to test safety boundary constraints such as flight envelope protection and emergency response. A standardized ground test outline was compiled from the test requirements, and the test cases were executed and data were collected using an automated testing framework. A quantitative evaluation system covering key indicators such as situational awareness range, command response accuracy, and decision timeliness was constructed and used to evaluate the test results. Finally, based on the first-round verification results, the platform was iteratively optimized, forming a “test–evaluation–improvement” closed-loop verification process.
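As a rough illustration of how such a quantitative evaluation might aggregate automated test results, the sketch below computes a pass rate per requirement category and a mean decision latency. The record fields (`category`, `passed`, `decision_latency_s`) and the sample values are hypothetical and do not represent the paper’s indicator definitions or data.

```python
from collections import defaultdict
from statistics import mean


def summarize(results):
    # results: list of dicts with keys "category", "passed", "decision_latency_s".
    by_category = defaultdict(list)
    for record in results:
        by_category[record["category"]].append(record["passed"])
    # Booleans sum as 0/1, so sum/len gives the fraction of passed cases.
    pass_rate = {cat: sum(flags) / len(flags) for cat, flags in by_category.items()}
    latency = mean(record["decision_latency_s"] for record in results)
    return {"pass_rate": pass_rate, "mean_decision_latency_s": latency}


# Made-up sample records for three test cases.
sample = [
    {"category": "function", "passed": True, "decision_latency_s": 0.25},
    {"category": "function", "passed": False, "decision_latency_s": 0.5},
    {"category": "safety", "passed": True, "decision_latency_s": 0.75},
]
report = summarize(sample)
```

Keeping the summary keyed by requirement category makes it straightforward to compare each round of the “test–evaluation–improvement” loop against the previous one.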

In the academic field, the “Eagle-1” system is an example of a “three-level verification” strategy. The first level is simulation verification, in which the decision accuracy of the DRL algorithm is tested over 1000 scenarios in a 32 × 32 air confrontation simulation environment (required to be ≥ 90%). The second level is hardware-in-the-loop (HIL) verification, in which the algorithm is loaded onto the flight control hardware of a simulated X-62A to verify the accuracy of its control commands. The third level is flight test verification, in which 12 flight tests are conducted on the J-10B/C platform and the target tracking errors in simulation and in flight are compared. This strategy can expose more than 80% of defects at the laboratory stage. In the industrial field, Lockheed Martin is representative: it designed a trust-building strategy of “decision log + logic visualization” for the AI decision system of the X-62A. The system records the input data, intermediate variables, and output commands of each AI decision in real time, forming an irreversible decision log. At the same time, the decision process is visualized through a “tactical logic map”, for example by showing the basis for “selecting the left side to avoid” (target threat degree 0.8, own overload margin 0.3). In the “Northern Sharp Sword” exercise in 2023, this strategy increased pilots’ trust in AI decisions to 75%, addressing the pain point of “insufficient trust between humans and machines”.
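One way to make a decision log tamper-evident is to chain entries by hash, so that altering any earlier record invalidates every later one. The sketch below shows this generic technique; it is an assumed interpretation of “irreversible”, not a description of the logging mechanism actually used on the X-62A, and the class name and field names are hypothetical.

```python
import hashlib
import json


class DecisionLog:
    GENESIS = "0" * 64   # placeholder hash preceding the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash every field except the stored hash itself, with stable key order.
        body = {k: v for k, v in record.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, inputs: dict, intermediates: dict, output: str) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {"inputs": inputs, "intermediates": intermediates,
                  "output": output, "prev_hash": prev_hash}
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record["hash"]

    def verify(self) -> bool:
        # Recompute each hash and check that every entry links to its predecessor.
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

For the example in the text, an entry could record `{"target_threat": 0.8}` as input, `{"overload_margin": 0.3}` as an intermediate variable, and `"evade_left"` as the output; the same record can then feed the logic visualization.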

The modular open system architecture (MOSA) and the test data management (TDM) system are the core links connecting the three layers. MOSA ensures the scalability of the test environment at the demand layer (supporting the addition of sensors and algorithms), decouples software from hardware at the platform layer (facilitating algorithm replacement and sensor upgrades), and supports the integration of multiple means such as HIL and VR at the verification layer. The TDM system uses a high-speed encrypted data link to aggregate the capability indicators from the demand layer, the test flight parameters from the platform layer, and the test results from the verification layer, achieving unified data management and analysis and supporting iteration at every layer. For example, the demand layer revises its capability indicators based on TDM historical data, and the platform layer optimizes control law parameters through data mining. In this way, MOSA and TDM provide the technical support for cross-layer connections and strengthen the overall framework.

4.4. Key Technologies of the Technical Framework System