Abstract

Automatic Dependent Surveillance-Broadcast (ADS-B) is a key component of the new-generation air traffic surveillance system. However, it is vulnerable to security threats because its messages are transmitted in plaintext and lack authentication mechanisms. Existing ADS-B anomaly detection methods still suffer from significant limitations, including low anomaly detection rates and limited adaptability. To address these issues, this paper proposes a novel ADS-B anomaly detection framework driven by large language models (LLMs). The approach uses pre-trained LLMs and a self-iterative prompt optimization loop, which integrates historical trajectories and multimodal features to refine expert-initialized prompts. The optimized prompts guide the LLM in identifying ADS-B anomalies. The main advantage of the proposed framework is that it adapts to new anomaly patterns through prompt updates rather than the retraining required by traditional models. Experimental results show that the proposed method achieves excellent performance on key metrics: an anomaly detection rate of 98.55%, a false alarm rate of 3.61%, a miss detection rate of 1.45%, and a recall of 96.39%. Compared with traditional detection methods, the method improves detection accuracy by more than 12% on average. Furthermore, experiments on multi-type anomaly detection tasks show that the framework exhibits strong adaptability and good generalization, providing effective technical support for the development of aviation data security protection systems.

1. Introduction

Air traffic management (ATM) systems, as critical infrastructure for safe and efficient airspace operations, depend on communication, navigation, and surveillance technologies to maintain orderly control of aircraft [1,2]. With the continuous increase in air traffic volume and the growing diversity of aircraft types, traditional ATM surveillance technologies, including Primary Surveillance Radar (PSR), Secondary Surveillance Radar (SSR), and multilateration systems, face significant challenges [3]. In contrast, ADS-B has been widely adopted in commercial aviation due to its superior surveillance accuracy and broader coverage [4].

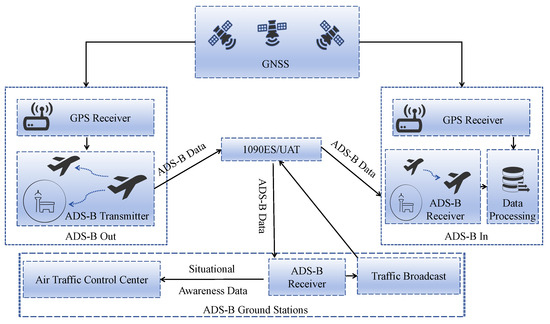

The working principle of ADS-B is illustrated in Figure 1. Aircraft obtain latitude and longitude information via satellite and heading data through onboard sensors; these data are then encapsulated into 112-bit ADS-B messages. The ADS-B Out equipment on the aircraft broadcasts the messages over the 1090ES or UAT communication channels. Aircraft equipped with ADS-B In equipment can receive ADS-B data, which can be used for functions such as air traffic control and collision avoidance. The data received by ground stations are processed by air traffic control (ATC) centers to support safe and orderly traffic flow [5]. ADS-B messages are transmitted in plaintext without effective data integrity checks or authentication mechanisms. As a result, they are highly vulnerable to radio frequency spoofing attacks, posing serious threats to system security [6].
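To make the 112-bit message layout concrete, the following minimal sketch splits a Mode S extended squitter frame into its standard fields: a 5-bit downlink format, a 3-bit capability field, a 24-bit ICAO address, a 56-bit message payload, and a 24-bit parity field. The function name `split_adsb_frame` and the sample frame are illustrative assumptions and are not taken from this paper. The parity field only detects transmission errors, so nothing in the frame authenticates the sender.

```python
def split_adsb_frame(hex_frame: str) -> dict:
    """Split a 112-bit ADS-B (Mode S extended squitter) frame into its fields.

    `hex_frame` is the frame as 28 hexadecimal characters (illustrative helper).
    """
    if len(hex_frame) != 28:
        raise ValueError("expected 28 hex characters (112 bits)")
    # Convert to a zero-padded bit string so fields can be sliced by position.
    bits = bin(int(hex_frame, 16))[2:].zfill(112)
    return {
        "df": int(bits[0:5], 2),            # downlink format (17 for ADS-B)
        "ca": int(bits[5:8], 2),            # transponder capability
        "icao": hex_frame[2:8].upper(),     # 24-bit aircraft address
        "type_code": int(bits[32:37], 2),   # first 5 bits of the payload
        "payload": bits[32:88],             # 56-bit message field
        "parity": hex_frame[22:28].upper(), # 24-bit parity (error detection only)
    }


# Sample frame chosen for illustration; any 28-character hex frame works.
frame = split_adsb_frame("8D4840D6202CC371C32CE0576098")
print(frame["df"], frame["icao"], frame["type_code"])
```

Because every field is readable and none is signed, a receiver cannot tell from the frame alone whether it came from the aircraft whose ICAO address it carries.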

Figure 1.

Schematic overview of the ADS-B system in ATM.

To address these threats, researchers have explored security solutions for ADS-B. These solutions are primarily categorized into two types: cryptographic and non-cryptographic methods. Cryptographic schemes maximize message security but suffer from drawbacks, such as the need to modify existing protocols, additional hardware requirements, and high deployment costs [7]. Non-cryptographic approaches primarily rely on anomaly detection techniques to identify security issues by detecting outliers in messages and, subsequently, use protective measures to ensure navigation safety [8,9,10,11,12]. Recently, machine-learning and deep-learning techniques have gained momentum [13,14]. For example, ref. [15] applied a Long Short-Term Memory (LSTM) encoder–decoder to ADS-B data and reduced detection errors, but false-alarm rates remain high. Ref. [16] combined a k-nearest neighbour proximity model with an autoencoder to flag hazardous airspace from radar flight data, integrating both real and simulated ADS-B inputs for visual decision support. Despite these advances, most models still depend on large, high-quality training sets, adapt poorly to new anomaly types, and offer limited interpretability [17,18].

Therefore, methods that leverage new-generation artificial intelligence, such as LLMs, to both enhance system security and reduce operational and adaptation costs have become a prominent research focus. This paper proposes an LLM-powered intelligent ADS-B anomaly detection framework that focuses on overcoming the bottlenecks of dependence on labeled data, limited model adaptability, and the black-box nature of decision-making. The capabilities of LLMs translate into three concrete advantages for ADS-B anomaly detection. (i) Data efficiency: powerful few-shot generalization allows LLMs to spot novel anomalies with only a handful of labeled examples, sharply reducing the need for large, curated datasets. (ii) Online adaptability: an agent-driven prompt-engineering loop lets the model update its behavior as the monitoring environment evolves, eliminating costly full retraining cycles. (iii) Transparent reasoning: LLMs can emit structured, human-readable rationales for each decision, providing audit trails that improve interpretability and foster effective human–machine collaboration.

The main contributions of this article are as follows:

- (1) We propose a hybrid sample generation method for ADS-B anomaly detection. This method combines real trajectories with five typical attack types to generate hybrid samples that cover a broad range of scenarios. Additionally, by leveraging this comprehensive data composition, it reduces annotation costs.

- (2) To achieve adaptive responses to unknown attacks, we design a prompt optimization mechanism that leverages reasoning-and-acting LLM agents. This mechanism enables online, adaptive prompt optimization without frequent retraining. As a result, it enables robust adaptation to unknown attacks and enhances the flexibility of the model in complex scenarios.

- (3) We develop an interpretable ADS-B anomaly decision mechanism. By integrating real-time analysis of trajectory and attack features, this mechanism reports the cause and location of each anomaly, together with handling suggestions, in real time. Thus, it improves transparency and efficiency in emergency response, addressing the “black-box” issue inherent in traditional detection models.

This paper is organized as follows: Section 2 reviews related work, focusing on security solutions for ADS-B vulnerabilities. Section 3 presents the proposed LLM-based ADS-B anomaly detection framework, including the design of prompt optimization. Section 4 provides experimental results, comparing the method with various existing ADS-B anomaly detection approaches. Section 5 discusses the limitations of current methods. Section 6 summarizes the contributions and suggests future directions for improving the performance of ADS-B anomaly detection.

2. Related Works

2.1. ADS-B Vulnerabilities

ADS-B was not designed with network security in mind and broadcasts data in plain text. As a result, ADS-B messages are vulnerable to various network attacks during transmission [19]. Moreover, such attacks can propagate through ATC networks, causing harm on a larger scale [20]. As summarized in Table 1, ADS-B vulnerabilities can be grouped into five canonical categories.

Table 1.

Classification of typical ADS-B attack patterns with implementation difficulty and impact assessment.

2.2. Security Solutions for ADS-B Vulnerabilities

Currently, security solutions for ADS-B data primarily encompass encryption, physical layer information analysis, multilateration, and machine learning-based anomaly detection [28]. The first three methods are regarded as traditional security approaches.

2.2.1. Traditional Security Solutions

Encryption-based hardening relies on either symmetric or asymmetric cryptography. Symmetric algorithms use a single secret key for both encryption and decryption, delivering high throughput with minimal computational load [29]. For instance, ref. [30] introduced ADS-Bsec, an integrated scheme that adds authentication, integrity checks, and multi-technology collaboration to the ADS-B workflow. Asymmetric algorithms encrypt messages with the recipient’s public key and decrypt them with the matching private key [31]. Asymmetric encryption requires more computational resources but offers strong resistance to brute-force attacks. However, large-scale key management and the need to alter the legacy ADS-B protocol have, so far, impeded the practical deployment of either encryption approach in air-traffic control environments.

Physical-layer defenses leverage radio-frequency artifacts, such as the inverse-square decay of received signal strength with range and the characteristic inter-message timing of a given transponder, to flag forged broadcasts [32]. While these cues can expose blatant spoofing, sophisticated low-power tampering that faithfully mimics genuine waveforms may still slip through.

Multilateration (MLAT) provides a complementary safeguard. It estimates the position of an aircraft from the time differences of arrival of its messages at multiple ground stations and then cross-checks that estimate against the coordinates encoded in the ADS-B packet [33]. This approach is accurate but requires a dense, tightly synchronized receiver network, which restricts practical deployment to airspace with robust ground infrastructure.
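The cross-check step can be illustrated with a minimal sketch that compares an independently estimated position with the position reported in the ADS-B message, using the great-circle (haversine) distance. The function names and the 1000 m tolerance are hypothetical choices for illustration; the sketch assumes the MLAT estimate is already available and does not implement the time-difference-of-arrival solver itself.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def position_is_consistent(reported, mlat_estimate, tolerance_m=1000.0):
    """Return True when the reported and estimated positions agree within tolerance.

    `tolerance_m` is an assumed value; a real deployment would derive it
    from the accuracy of the receiver network.
    """
    return haversine_m(*reported, *mlat_estimate) <= tolerance_m


# A report about 111 m from the estimate passes; one about 11 km away does not.
print(position_is_consistent((50.0, 8.0), (50.001, 8.0)))
print(position_is_consistent((50.0, 8.0), (50.1, 8.0)))
```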

2.2.2. Machine Learning-Based Solutions

Advanced machine learning has emerged as the primary tool for safeguarding ADS-B systems against anomalies [34]. Machine learning methods usually detect ADS-B anomaly data based on prediction errors and reconstruction errors. Ref. [35] employed five supervised learning methods, including support vector machines, k-nearest neighbors, artificial neural networks, decision trees, and logistic regression, to detect ADS-B signal interference attacks. Experimental results demonstrated that these supervised learning methods could successfully classify normal ADS-B signals and ADS-B interference signals. However, these methods assume access to balanced and richly labeled datasets, which is rarely the case in real-world scenarios where anomalies are both infrequent and largely unlabeled [36].

Consequently, research has increasingly shifted toward unsupervised deep learning approaches. Ref. [37] proposed an LSTM-based anomaly detection method for ADS-B data. This approach used LSTM to predict ADS-B data and set thresholds based on the difference between predicted and actual values to perform anomaly detection. The results demonstrated that this method can successfully detect attacks such as slow velocity offset and slow altitude offset. Ref. [38] employed an autoencoder to model normal traffic patterns, detecting anomalies based on reconstruction error across multivariate flight parameters. Ref. [22] enhanced anomaly sensitivity to complex, low-frequency attacks by integrating a GAN-based discriminator with an autoencoder–generator architecture. Ref. [11] introduced a hierarchical temporal memory model capable of online adaptation to streaming data, though its effectiveness in capturing very long-term dependencies remains limited. Despite the success of these methods, deep learning models still face several challenges, such as the need for frequent retraining to adapt to new anomaly patterns and the lack of interpretability in anomaly detection.

2.2.3. Advances in LLMs

LLMs have rapidly become core components of modern Natural Language Processing (NLP) systems, thanks to their rich semantic representations, multimodal reasoning, and strong contextual understanding [39]. State-of-the-art models, such as Gemini [40], DeepSeek [41], and Qwen [42], already match or surpass task-specific baselines across a wide range of benchmarks. Moreover, LLMs have recently gained attention for anomaly detection due to their powerful contextual understanding and adaptability [43]. Ref. [44] proposed a framework combining LLMs and variational autoencoders for real-time analysis of aeronautical communication data to detect potential safety risks and to alert ATC and flight crews, thereby enhancing aviation safety. Ref. [45] proposed a Transformer-based multi-agent model called MA-BERT. By incorporating an agent-aware attention mechanism and a pretraining–fine-tuning framework, MA-BERT significantly improves trajectory prediction and estimated time of arrival accuracy in ATM while substantially reducing training time and data requirements. Ref. [46] explored the potential of LLMs for flight trajectory reconstruction. By fine-tuning a LLaMA 3.1-8B model to handle noisy and missing ADS-B data, it introduced a novel evaluation metric called “containment accuracy” and demonstrated significant improvements over traditional methods. Ref. [47] employed the GPT-4 model to detect and interpret anomalies in ATC communications. Unlike traditional models, LLMs excel in few-shot and even unsupervised settings, enabling effective detection in scenarios with scarce or imbalanced labels [48]. Their ability to process both structured and unstructured data allows for a more nuanced understanding of flight sequences and potential anomalies. In this study, we investigate the application of LLMs for ADS-B anomaly detection, aiming to improve detection accuracy and adaptability.

3. Methodology

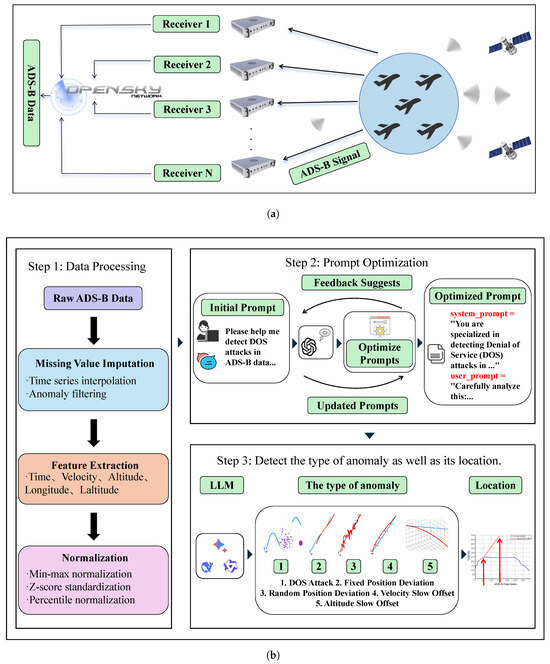

Figure 2 illustrates the overall LLM-based ADS-B anomaly detection framework developed in this study. First, as shown in Figure 2a, the raw ADS-B data is broadcast and collected through the OpenSky receiver network. Then, as shown in Figure 2b, human experts preprocess the collected raw ADS-B data and inject the initial prompts into the system. Starting from these initial prompts, the LLMs run a self-iterative optimization loop in which they generate logical correction suggestions grounded in historical trajectories and multimodal features. Through this process, the LLMs autonomously optimize and evaluate the prompt strategy. Expert human review and intervention are triggered only when the output fails to pass the assessment, enabling a dynamically evolving prompt strategy. Finally, the optimized prompts are input into various LLMs to identify five categories of ADS-B data anomalies.

Figure 2.

Schematic illustration and framework of the proposed ADS-B anomaly detection system. (a) Schematic illustration of ADS-B signal broadcasting and data aggregation within the OpenSky receiver network. (b) Architectural framework of the LLM-driven ADS-B anomaly detection system with self-iterative prompt optimization. The framework undergoes three main steps: (1) data processing, including raw ADS-B data preprocessing, missing value imputation, feature extraction, and normalization; (2) prompt optimization, where feedback suggests improvements to generate updated prompts; and (3) anomaly detection, which identifies the type of anomaly and its location. This framework utilizes iterative prompt optimization to enhance the detection accuracy of ADS-B anomalies.

3.1. Data Processing

In the OpenSky Network data, occasional ADS-B point losses occur when aircraft traverse areas with sparse ground-station coverage or move beyond the effective reception range. In the raw dataset collected for this study, the proportion of missing points per trajectory averaged approximately 3%. To preserve trajectory continuity and avoid confusing natural reception gaps with Denial of Service (DoS) attacks, the missing points were reconstructed using linear interpolation. The data preprocessing workflow primarily comprises three core steps: missing value imputation, feature extraction, and data normalization.

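Feature Extraction: Each data point is represented as an n-dimensional feature vector

$$\mathbf{x}_j = \left[t_j,\ \lambda_j,\ \varphi_j,\ h_j,\ \theta_j,\ v_j\right]^{\top} \in \mathbb{R}^{n},$$

where $n = 6$ in this study, corresponding to the selected features: time $t$, longitude $\lambda$, latitude $\varphi$, altitude $h$, heading $\theta$, and velocity $v$. The entire ADS-B trajectory of length $l$ is, thus, represented as

$$X = \{\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_l\}.$$

Missing Value Imputation: This study employs linear interpolation to fill in missing data. First, the slope between the time points before and after the missing value is calculated as

$$k^{(i)} = \frac{x^{(i)}_{j+1} - x^{(i)}_{j-1}}{t_{j+1} - t_{j-1}},$$

where $x^{(i)}_j$ denotes the value of the i-th feature at time step j. Then the missing value at time step j is obtained as

$$x^{(i)}_j = x^{(i)}_{j-1} + k^{(i)}\left(t_j - t_{j-1}\right).$$

Normalization: Min–max normalization is applied feature-wise:

$$\tilde{x}^{(i)}_j = \frac{x^{(i)}_j - x^{(i)}_{\min}}{x^{(i)}_{\max} - x^{(i)}_{\min}},$$

where $x^{(i)}_{\max}$ and $x^{(i)}_{\min}$ denote the maximum and minimum values of the i-th feature across the sequence.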
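The imputation and scaling steps described above can be sketched for a single feature column as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: missing samples are marked with `None`, gaps at the very start or end of a trajectory are left unfilled, a constant column is mapped to zeros, and the function names are hypothetical.

```python
def interpolate_missing(times, values):
    """Fill None entries by linear interpolation between the nearest valid neighbours."""
    filled = list(values)
    known = [i for i, v in enumerate(values) if v is not None]
    for j, v in enumerate(values):
        if v is not None:
            continue
        before = [i for i in known if i < j]
        after = [i for i in known if i > j]
        if not before or not after:
            continue  # leading/trailing gaps cannot be interpolated; left as None
        a, b = before[-1], after[0]
        # Slope between the valid points before and after the gap.
        slope = (values[b] - values[a]) / (times[b] - times[a])
        filled[j] = values[a] + slope * (times[j] - times[a])
    return filled


def min_max_normalize(values):
    """Scale one feature column to [0, 1] using its own minimum and maximum."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: avoid division by zero
    return [(v - lo) / (hi - lo) for v in values]


times = [0, 10, 20, 30, 40]                       # seconds
altitude = [1000.0, None, 1200.0, None, 1400.0]   # two dropped samples
filled = interpolate_missing(times, altitude)
scaled = min_max_normalize(filled)
print(filled)
print(scaled)
```

In practice the same two functions would be applied to each feature column of the trajectory in turn, with imputation performed before normalization so that the minimum and maximum are computed on complete data.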

3.2. Prompt Optimization

Prompt engineering is a crucial technique for effective interaction between humans and LLMs. The better the design of the prompts, the better the performance of LLMs in executing specific downstream tasks [49]. The effectiveness of prompts is influenced by multiple factors, including the model used, its training data, model configuration, choice of wording, style and tone, structure, and context. Therefore, prompt design is not a one-time effort but an iterative optimization process [50]. Inappropriate prompts may lead to vague or inaccurate responses, hindering the model from generating meaningful outputs. Within the context of NLP and LLMs, prompts serve as inputs provided to the model for generating responses or predictions.

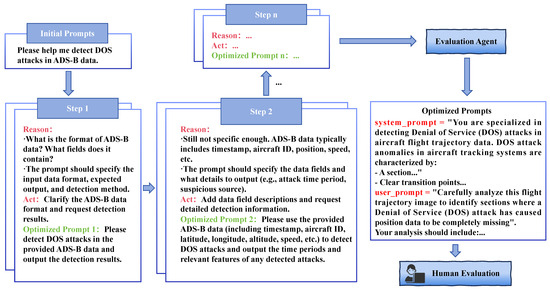

The React Agent outperforms traditional agents by integrating both reasoning and action capabilities within LLMs, rather than relying on only one [51]. This cooperative cycle allows for more flexible and effective decision-making. Building on this, our study proposes a dynamic and interactive method for optimizing prompts in ADS-B anomaly detection. With React Agent, prompts are continuously trained and improved to enhance detection accuracy.

Figure 3 depicts the prompt-optimization loop. Prompts are optimized in a multimodal, context-driven closed loop. In the initialization phase, human experts seed an editable prompt buffer $P$ with ADS-B anomaly-detection prompts. Then, at each time step $t$, the system forms the context:

$$c_t = \left(o_{1:t},\ r_{1:t-1},\ a_{1:t-1}\right),$$

where $o$ are observations (data features), $r$ are reward signals, and $a$ are past actions.

Figure 3.

Workflow diagram of the React-Agent-based prompt optimization loop for LLM adaptation.

Given the context $c_t$, the LLM first produces an analysis rationale $z_t$ and then an action $a_t$, followed by a self-assessment of confidence $\kappa_t$:

$$\left(z_t,\ a_t,\ \kappa_t\right) = \mathrm{LLM}\left(c_t,\ P_t\right).$$

Executing $a_t$ yields a candidate optimized prompt $\hat{P}_{t+1}$. When the confidence fails an evaluation agent’s check, a human-in-the-loop gate is triggered:

$$g_t = G\left(\hat{P}_{t+1},\ \kappa_t\right) \in \{0, 1\},$$

where $G$ denotes a gating function (implemented by an evaluation agent or human supervisor) that decides whether the candidate prompt requires correction.

If $g_t = 0$, $\hat{P}_{t+1}$ is accepted. If $g_t = 1$, experts supply corrected pairs $(z_t^{\ast}, a_t^{\ast})$. The final rationale/action written to the trajectory is

$$\left(\bar{z}_t,\ \bar{a}_t\right) = \begin{cases} \left(z_t,\ a_t\right), & g_t = 0, \\ \left(z_t^{\ast},\ a_t^{\ast}\right), & g_t = 1. \end{cases}$$
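The control flow of this loop can be sketched as follows. The sketch is a simplified illustration under several assumptions: the LLM call, the expert correction, and the reward are passed in as plain callables (`propose`, `expert_fix`, `score`, all hypothetical names), the action is collapsed into directly returning a candidate prompt, and the gate is a fixed confidence threshold `min_confidence` rather than a separate evaluation agent.

```python
from typing import Callable, List, Tuple

Proposal = Tuple[str, str, float]  # (rationale, candidate prompt, confidence)


def optimize_prompt(
    seed_prompt: str,
    propose: Callable[[str, List[dict]], Proposal],
    expert_fix: Callable[[str, str], Tuple[str, str]],
    score: Callable[[str], float],
    steps: int = 3,
    min_confidence: float = 0.8,
) -> Tuple[str, List[dict]]:
    """Run a gated prompt-refinement loop and return the final prompt and its log."""
    prompt, history = seed_prompt, []
    for _ in range(steps):
        # The model sees the current prompt plus the logged history as context.
        rationale, candidate, confidence = propose(prompt, history)
        reviewed = confidence < min_confidence  # gate: low confidence needs review
        if reviewed:
            rationale, candidate = expert_fix(rationale, candidate)
        history.append({
            "rationale": rationale,
            "prompt": candidate,
            "confidence": confidence,
            "reviewed": reviewed,
            "reward": score(candidate),
        })
        prompt = candidate
    return prompt, history


# Deterministic stand-ins for the LLM and the human expert (illustration only).
def fake_propose(prompt, history):
    step = len(history)
    confidence = 0.5 if step == 1 else 0.9
    return f"rationale {step}", f"{prompt} [rule {step}]", confidence


def fake_expert_fix(rationale, candidate):
    return f"expert: {rationale}", f"{candidate} [reviewed]"


final_prompt, log = optimize_prompt(
    "Detect ADS-B anomalies.", fake_propose, fake_expert_fix, score=lambda p: float(len(p))
)
print(final_prompt)
print([entry["reviewed"] for entry in log])
```

Keeping the gate as a separate boolean in the log makes it easy to audit how often human intervention was needed, which is the quantity the loop is designed to keep low.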

3.3. Anomaly Detection

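Let the raw ADS-B time series be $X = \{\mathbf{x}_1, \ldots, \mathbf{x}_l\}$ (length $l$), and let $I$ denote an auxiliary image. After feature scaling, we obtain the normalized sequence:

$$\tilde{X} = \{\tilde{\mathbf{x}}_1, \tilde{\mathbf{x}}_2, \ldots, \tilde{\mathbf{x}}_l\},$$

where each $\tilde{\mathbf{x}}_j$ is the scaled n-dimensional feature vector at time step j.

Text and image encoders produce embeddings:

$$\mathbf{e}^{\mathrm{txt}}_j = E_{\mathrm{txt}}\left(\tilde{\mathbf{x}}_j\right), \qquad \mathbf{e}^{\mathrm{img}} = E_{\mathrm{img}}\left(I\right),$$

which are concatenated for each time step:

$$\mathbf{u}_j = \left[\mathbf{e}^{\mathrm{txt}}_j ;\ \mathbf{e}^{\mathrm{img}}\right].$$

To ensure a logically consistent mapping, anomaly decisions are made per time step using a sliding context window of width $w$. With the finalized prompt $P^{\ast}$, the LLM predicts a categorical label (six classes, where 0 denotes normal and 1–5 correspond to anomaly types):

$$\hat{y}_j = \mathrm{LLM}\left(P^{\ast},\ W_j\right) \in \{0, 1, \ldots, 5\},$$

where $W_j$ is the width-$w$ context window of embeddings $\mathbf{u}$ associated with time step j.

Collecting the per-step outputs yields the sequence of predicted labels:

$$\hat{Y} = \{\hat{y}_1, \hat{y}_2, \ldots, \hat{y}_l\}.$$

Finally, contiguous runs of non-normal states ($\hat{y}_j \neq 0$) are grouped to form anomaly intervals $[s_k, e_k]$. This per-step formulation preserves adaptability and interpretability, as each decision is tied to a localized context window $W_j$.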
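The final grouping step can be sketched as a single pass over the per-step labels. The function name is hypothetical, intervals are returned as inclusive index pairs, and runs are merged whenever consecutive labels are non-zero regardless of anomaly type, which is one possible reading of the grouping rule.

```python
def group_anomaly_intervals(labels):
    """Group contiguous non-zero labels into inclusive (start, end) index pairs."""
    intervals, start = [], None
    for j, label in enumerate(labels):
        if label != 0 and start is None:
            start = j                          # a new anomalous run begins
        elif label == 0 and start is not None:
            intervals.append((start, j - 1))   # the run ended at the previous step
            start = None
    if start is not None:
        intervals.append((start, len(labels) - 1))  # run extends to the last step
    return intervals


predicted = [0, 0, 3, 3, 3, 0, 1, 1, 0, 0, 5]
print(group_anomaly_intervals(predicted))
```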

3.4. Overall Algorithm

To provide a clear overview of the proposed methodology, Algorithm 1 summarizes the complete workflow of the React-Agent-based training and testing framework.

Algorithm 1.

React-Agent Training and Testing Framework.

4. Experiments

4.1. Dataset Construction

The OpenSky Network is an open-source aviation data platform providing global ADS-B data [52]. Although capable of recording ADS-B messages at higher rates, its sampling rate varies across regions and flights. To ensure temporal consistency and comparability, trajectories were resampled to a fixed 10 s interval, a setting widely adopted in trajectory anomaly studies for preserving essential spatiotemporal patterns while reducing computational overhead. For this study, ADS-B data from 500 flights covering all flight phases were collected, with trajectories containing 200–800 data points. Due to the scarcity of real-world attack data, five types of anomalies were synthetically injected into real ADS-B trajectories: random position deviation, fixed position deviation, slow altitude drift, slow speed drift, and DoS attacks. Table 2 summarizes the specific construction methods for these attack types.

Table 2.

Data construction methods for five ADS-B anomaly types based on real flight scenarios.

In this study, the injection points were chosen to represent different flight phases while balancing coverage and experimental feasibility. Injection points in the range 51–150 cover the post-takeoff and early cruise phases, avoiding ground noise and capturing stable yet dynamic states. Later injection points (151–250) are used for slow drift scenarios so that the accumulated drift becomes observable. These ranges were chosen to reflect representative operating conditions rather than a fixed, singular environment. The offsets and drifts were determined based on real ADS-B data distributions and prior studies to ensure the scenarios remain realistic, controllable, and widely applicable.
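The injection procedure can be illustrated with the following sketch, which applies one of the five anomaly types to a chosen index range of a trajectory. All magnitudes (0.1° random offset, 0.05° fixed offset, 5 units of altitude and 0.5 units of speed per step) are placeholder assumptions chosen for illustration and are not the values used in Table 2; the function name and dictionary keys are likewise hypothetical.

```python
import random


def inject_anomaly(track, kind, start, end, seed=0):
    """Return a copy of `track` with `kind` injected on indices start..end (inclusive)."""
    rng = random.Random(seed)  # seeded for reproducible random deviations
    attacked = []
    for j, point in enumerate(track):
        p = dict(point)  # copy so the original trajectory is not modified
        if start <= j <= end:
            k = j - start + 1  # number of steps since the attack began
            if kind == "random_position":
                p["lat"] += rng.uniform(-0.1, 0.1)
                p["lon"] += rng.uniform(-0.1, 0.1)
            elif kind == "fixed_position":
                p["lat"] += 0.05
                p["lon"] += 0.05
            elif kind == "slow_altitude":
                p["alt"] += 5.0 * k   # offset grows linearly with time
            elif kind == "slow_speed":
                p["vel"] += 0.5 * k
            elif kind == "dos":
                continue              # messages in the window are dropped
            else:
                raise ValueError(f"unknown anomaly type: {kind}")
        attacked.append(p)
    return attacked


# Synthetic level-flight trajectory sampled every 10 s (illustration only).
track = [{"t": 10 * j, "lat": 50.0, "lon": 8.0, "alt": 10000.0, "vel": 230.0}
         for j in range(300)]
drift = inject_anomaly(track, "slow_altitude", 151, 250)
dos = inject_anomaly(track, "dos", 51, 150)
print(drift[151]["alt"], drift[250]["alt"], len(dos))
```

Because the attack window is known at injection time, per-point labels for evaluation can be derived directly from `start` and `end` without manual annotation.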

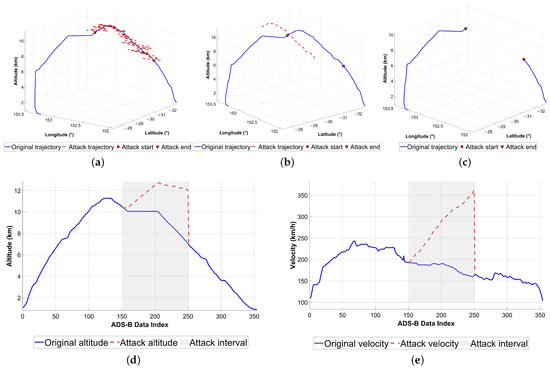

A single flight trajectory was randomly selected, comprising 339 ADS-B data points. The specific methods for constructing attack data are illustrated in Figure 4.

Figure 4.

Example of attack data: (a) Random position deviation, (b) Fixed position deviation, (c) DoS attack, (d) Altitude slow offset, (e) Velocity slow offset.

4.2. Evaluation Metrics

This study evaluates the performance of the anomaly detection model using Detection Rate (DE), Recall (RC), Accuracy (ACC), Miss Detection Rate (MDR), and False Alarm Rate (FAR), all of which are calculated based on the confusion matrix. The confusion matrix of the anomaly detection model is presented in Table 3, where TP represents the number of non-attack values detected as normal, FN represents the number of non-attack values detected as anomalies, FP represents the number of attack values detected as normal, and TN represents the number of attack values detected as anomalies.

Table 3.

Confusion matrix framework for evaluating ADS-B anomaly detection model performance.

The calculation methods for the evaluation metrics used in this study are presented in Equation (17). The DE denotes the probability of correctly detecting attack values. The RC refers to the probability that non-attack values are not mistakenly classified as anomalous. The ACC represents the probability that all values are correctly identified. The MDR indicates the probability that attack values are misclassified as normal. The FAR denotes the probability that non-attack values are incorrectly identified as anomalies. The formulas are as follows:

$$\begin{aligned} \mathrm{DE} &= \frac{TN}{TN + FP}, & \mathrm{RC} &= \frac{TP}{TP + FN}, & \mathrm{ACC} &= \frac{TP + TN}{TP + FN + FP + TN}, \\ \mathrm{MDR} &= \frac{FP}{TN + FP}, & \mathrm{FAR} &= \frac{FN}{TP + FN}. \end{aligned} \tag{17}$$
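Because this study treats non-attack (normal) values as the positive class, the roles of the four confusion-matrix counts differ from the more common attack-as-positive convention. The following minimal sketch computes the five metrics from the verbal definitions above; the function name and the example counts are hypothetical.

```python
def detection_metrics(tp, fn, fp, tn):
    """Compute DE, RC, ACC, MDR, and FAR with normal values as the positive class.

    tp: normal detected as normal      fn: normal detected as anomaly
    fp: attack detected as normal      tn: attack detected as anomaly
    """
    return {
        "DE": tn / (tn + fp),                    # attacks correctly detected
        "RC": tp / (tp + fn),                    # normal values kept as normal
        "ACC": (tp + tn) / (tp + fn + fp + tn),  # all values correctly identified
        "MDR": fp / (tn + fp),                   # attacks missed
        "FAR": fn / (tp + fn),                   # normal values falsely flagged
    }


# Hypothetical counts, chosen only to illustrate the arithmetic.
metrics = detection_metrics(tp=900, fn=100, fp=20, tn=980)
print(metrics)
```

Under these definitions DE and MDR sum to one, as do RC and FAR, which matches the reported pairs (98.55% with 1.45%, and 96.39% with 3.61%).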

4.3. Model Selection and Configuration

Three mainstream LLMs serve as the foundational models for ADS-B data anomaly detection: Gemini-2.0, DeepSeek-V3, and Qwen-2.5-7B. To ensure experimental fairness and result stability, the temperature parameter of all models was uniformly set to zero, thereby eliminating uncertainties caused by random sampling. Additionally, Gemini-2.0 supports multimodal inputs, allowing for the simultaneous use of flight trajectory images and numerical data, whereas DeepSeek-V3 and Qwen-2.5-7B utilize only numerical data inputs, enabling a comparison of the performance gains attributable to multimodal fusion.

To enable a clear comparison of the characteristics of the selected LLMs and their suitability for this study, Table 4 summarizes the key information of the chosen LLMs.

Table 4.

Comparison of Model Characteristics and Experimental Suitability.

4.4. Experimental Results

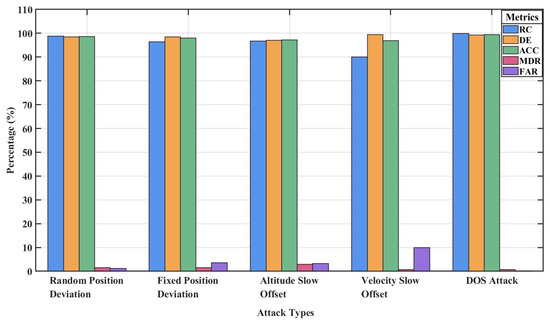

This section focuses on the performance of Gemini-2.0 in five types of ADS-B anomaly detection. The experimental dataset comprises 500 instances for each of the five anomaly types, allowing for a detailed assessment of fine-grained localization performance. Table 5 and Figure 5 present the detection results of Gemini-2.0 in different anomaly categories.

Table 5.

Detailed performance metrics of the Gemini-2.0 model in anomaly interval detection across multiple attack categories.

Figure 5.

Gemini-2.0 results for anomaly interval detection.

As shown in Figure 5, Gemini-2.0 performs exceptionally well in detecting DoS attack anomalies and two types of position deviation anomalies, with RC exceeding 96% and FAR below 4%. The DoS attack, characterized by a complete disappearance of data in a segment of the flight path, presents a pronounced contrast that enables the model to easily capture such anomalies with almost no missed detections or false alarms.

For random and fixed position deviation anomalies, the changes in coordinates are abrupt and prominent. The multimodal architecture of Gemini-2.0 integrates image and textual data, enhancing sensitivity to changes in spatiotemporal data. Consequently, it can accurately identify significant deviations from normal flight patterns, offering strong robustness and high localization precision.

Gemini-2.0 performs worst on velocity slow offset anomalies, with an RC of 90.10% and a FAR of 9.90%, followed by altitude slow offset anomalies. The high FAR for velocity slow offset is mainly due to the model’s inability to distinguish attacks from normal flight phases, especially during speed fluctuations in takeoff or landing. Although we explicitly instructed the model to differentiate between anomalous and normal flight phases during prompt optimization, the results remained unsatisfactory. The model encounters similar issues in detecting altitude slow offset anomalies when anomaly features partially overlap with normal operations, resulting in fuzzy decision boundaries. Multimodal data provides limited benefits in such scenarios.

Overall, the detection accuracy of the model increases with the severity and salience of the anomaly. This positive correlation indicates that the generalization of LLMs remains limited in low signal-to-noise ratio scenarios.

4.5. Comparative Experiment

4.5.1. Performance Comparison of Anomaly Intervals

To identify anomaly intervals, three representative ADS-B anomaly detection methods were used alongside three mainstream LLMs. These methods include Isolation Forest (Iforest), a Gated Recurrent Unit-based model (GRU), and a hybrid CNN-LSTM model. Iforest detects outliers by recursively partitioning the feature space at random; points in sparse regions are isolated in fewer splits and therefore receive higher anomaly scores. It uses 200 trees for general anomalies and 1500 for DoS anomalies. The GRU model utilizes a bidirectional GRU layer with 32 units, along with multi-head attention, and incorporates dropout and L1-L2 regularization [27]. Training is performed using the Adam optimizer with early stopping and dynamic learning rate adjustment. The hybrid CNN-LSTM model has two branches, where the CNN extracts local features and the LSTM captures temporal dependencies, followed by fully connected layers [15]. All trained baselines use input sequences of 50 time steps and adaptive learning rate strategies, and their detection thresholds are determined from validation set performance.

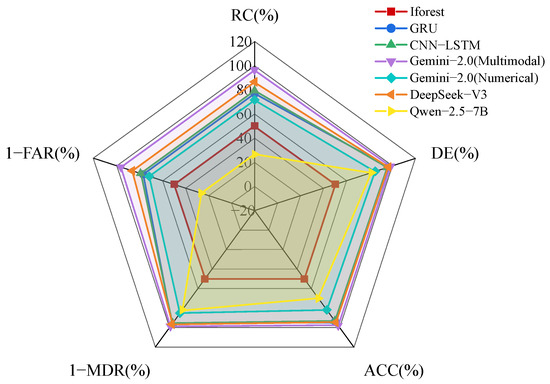

Based on the performance metrics presented in Table 6 and Figure 6, Gemini-2.0 (Multimodal) clearly outperforms the other models across all evaluation metrics. It achieves the highest RC of 96.39%, well above GRU (76.95%) and CNN-LSTM (79.31%). It also substantially exceeds the unimodal Gemini-2.0 (Numerical) variant, which achieves an RC of only 71.63%. At the other extreme, Qwen-2.5-7B exhibits critical failures in anomaly detection, with an RC of only 26.51% and a FAR of 73.49%.

Table 6.

The comparative results of different models in detecting anomalous intervals are presented, with the best values highlighted in red and italicized and the second-best values highlighted in blue and underlined.

Figure 6.

Comparative analysis of model performance in anomaly interval detection using multiple evaluation metrics.

DeepSeek-V3 ranks second overall, with stable metrics and an RC of 86.57%. However, its FAR (13.43%) remains significantly higher than that of Gemini-2.0 (Multimodal), which demonstrates its limitations in identifying nuanced anomalies. Traditional deep learning models (GRU and CNN-LSTM) achieve moderate detection capabilities. However, their high FAR and suboptimal RC reveal persistent challenges in reliable anomaly identification. Meanwhile, Gemini-2.0 (Numerical) suffers severe performance degradation, with a FAR of 28.37% and an MDR of 15.03%, underscoring the importance of multimodal fusion.

The performance of Iforest is characterized by a high degree of variability, thus rendering it unsuitable for practical deployment. These findings indicate that effective anomaly detection in aviation necessitates architectures capable of synthesizing multimodal data. In contrast, domain-agnostic models such as Qwen-2.5-7B or isolated algorithms like Iforest appear to lack the capacity to capture the contextual nuances inherent in flight operations. The exceptional outcomes of Gemini-2.0 (Multimodal) underscore its aptitude for safety-critical aviation anomaly detection operations.

4.5.2. Detection Performance by Anomaly Category

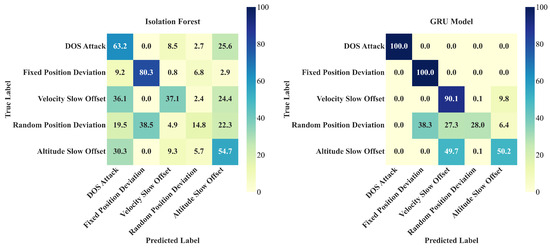

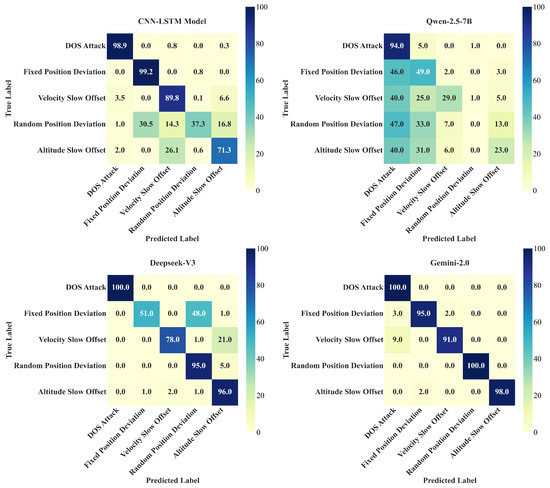

After evaluating the models on anomaly interval identification, we also performed a systematic comparison on mixed anomaly samples. The models included three LLMs (Gemini-2.0, DeepSeek-V3, and Qwen-2.5-7B), two sequential models (GRU and CNN-LSTM), and the classic Iforest algorithm.

Five categories of anomaly datasets were used. Each category contained 100 independent samples to comprehensively assess detection capabilities across diverse anomaly types. The GRU and CNN-LSTM models adopt bidirectional recurrent architectures with input sequences of length 50 and six original features. Detection performance for minority classes was enhanced via feature augmentation and class-weight balancing. Both models were trained using the Adam optimizer (learning rate 0.0005 and batch size 32) with early stopping and learning rate decay to prevent overfitting. For Iforest, 200 trees were used, with the anomaly ratio baseline dynamically adjusted to the class distribution. Feature extraction combined multi-scale sliding windows and multiple statistical feature concatenations, and final multi-class discrimination was performed using a voting mechanism.

As shown in Figure 7, the Gemini-2.0 model shows significant advantages in detecting anomalies across categories. It achieves 100% ACC in identifying random positional deviations. It also attains 98% ACC in detecting altitude slow offset. These results validate the capability of the multimodal architecture to disentangle spatiotemporal features. In contrast, DeepSeek-V3 performs robustly on altitude slow offsets with an ACC of 96%. It handles random positional deviations at 95% ACC. However, DeepSeek-V3 shows limitations in velocity slow offset detection, achieving only 78% ACC. Specifically, 17% of the velocity slow offset cases are incorrectly labeled as fixed positional deviation. This pattern indicates difficulty distinguishing gradual velocity changes from spatial discontinuities.

Figure 7.

Confusion matrix-based evaluation of model accuracy in anomaly category classification.

Among traditional models, GRU achieves a perfect 100% ACC for fixed positional deviations. However, GRU performs poorly in altitude slow offset detection, achieving only 49.7% ACC. Nearly half of the altitude slow offset samples are confused with velocity slow offset anomalies. This suggests recurrent neural networks struggle with cross-dimensional feature interactions. The CNN-LSTM model shows 71.3% ACC for altitude slow offset detection. This performance reveals limitations in modeling vertical gradient patterns through hybrid convolutional–recurrent architectures.

Qwen-2.5-7B exhibits clear domain adaptation difficulties. Only DoS attacks reach 94% ACC, while all other anomaly types suffer severe misclassification; for example, 73% of altitude slow offset samples are labeled as positional deviations or velocity slow offsets. This reflects how poorly general-purpose LLMs are attuned to aerospace physical features. The Iforest algorithm performs poorly across all categories. For example, 60% of DoS attacks are misidentified as random positional deviations. This suggests that traditional isolation mechanisms are insufficient to capture the inherent spatiotemporal correlations of aviation data.
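The per-category ACC values and misclassification shares quoted above are read row by row from a confusion matrix. The sketch below shows that calculation, assuming that ACC for a category means the share of its samples that receive the correct label. The three-class matrix is made-up toy data, not the values behind Figure 7, and the function names are hypothetical.

```python
def per_class_accuracy(confusion, labels):
    """Row-normalised diagonal: share of each true class predicted correctly."""
    result = {}
    for i, label in enumerate(labels):
        total = sum(confusion[i])
        result[label] = confusion[i][i] / total if total else 0.0
    return result


def top_confusion(confusion, labels, true_label):
    """Return (predicted_label, share) for the largest off-diagonal entry of a row.

    If several wrong labels tie, the one with the highest index is returned.
    """
    i = labels.index(true_label)
    total = sum(confusion[i])
    off_diagonal = [(count, j) for j, count in enumerate(confusion[i]) if j != i]
    count, j = max(off_diagonal)
    return labels[j], (count / total if total else 0.0)


# Toy matrix (rows = true class, columns = predicted class); purely illustrative.
labels = ["A", "B", "C"]
confusion = [
    [90, 6, 4],
    [10, 80, 10],
    [0, 25, 75],
]
acc = per_class_accuracy(confusion, labels)
worst = top_confusion(confusion, labels, "C")
```

With 100 samples per category, as in this experiment, each row sums to 100, so the raw counts can be read directly as percentages.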

4.6. Interpretability Enhancement

In addition to quantitative detection metrics, LLMs generate detailed explanatory evidence during anomaly detection, enhancing the interpretability of model results.

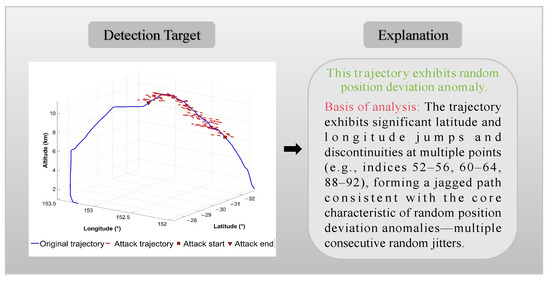

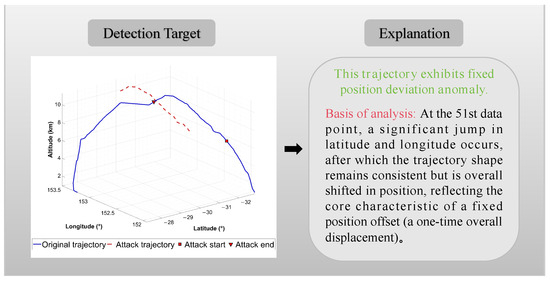

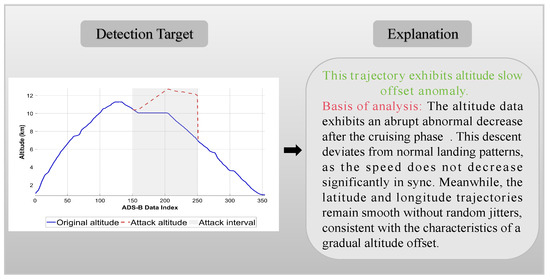

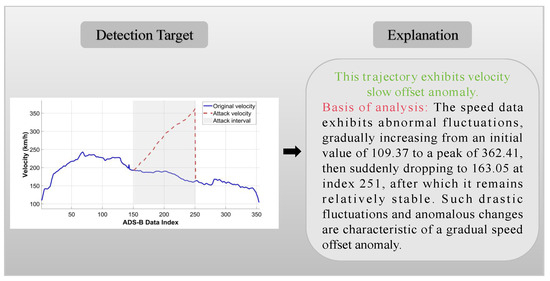

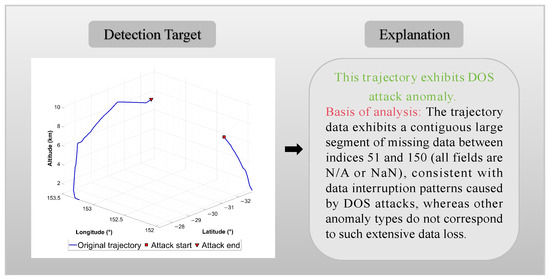

As shown in Figures 8–12, the outputs of the LLMs not only specify the type of anomaly but also provide anomaly analysis descriptions based on trajectory features.

Figure 8.

LLM-driven explanation of ADS-B anomaly detection: random position deviation anomaly.

Figure 9.

LLM-driven explanation of ADS-B anomaly detection: fixed position deviation anomaly.

Figure 10.

LLM-driven explanation of ADS-B anomaly detection: altitude slow offset anomaly.

Figure 11.

LLM-driven explanation of ADS-B anomaly detection: velocity slow offset anomaly.

Figure 12.

LLM-driven explanation of ADS-B anomaly detection: DoS attack anomaly.

Figure 8 shows that the LLMs identify random position deviation anomalies by detecting abrupt jumps in trajectory latitude and longitude together with sudden changes in direction and velocity. The model's detection report identifies multiple anomalous jump points in the trajectory (e.g., coordinate index ranges 51–63 and 78–87), accompanied by structural changes in heading and velocity patterns. These behavioral characteristics conform to the definition of “random position deviation.” On this basis, the model provides further anomaly pattern matching information, indicating that the deviation is a randomly generated trajectory anomaly.

As shown in Figure 9, the LLMs detect fixed position deviation anomalies by analyzing changes in trajectory latitude and longitude. At the 51st data point, a significant jump in latitude and longitude occurs. Subsequently, although the overall trajectory shape remains coherent, it is displaced from its normal position. The model identifies this distinct jump and the persistent deviation that follows, confirming the anomaly as a fixed position offset.
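The contrast between the explanation in Figure 8 (many jump points) and the one in Figure 9 (a single jump followed by a coherent but displaced track) can be illustrated with a simple rule-based check. The sketch below is not the LLM's reasoning process; it is a hypothetical cross-check on one coordinate series, and the function names and the `factor` threshold are assumptions.

```python
from statistics import median


def outsized_steps(values, factor=8.0):
    """Indices whose step from the previous point exceeds factor x the median step."""
    steps = [abs(b - a) for a, b in zip(values, values[1:])]
    typical = median(steps)
    if typical == 0:
        return []
    return [i + 1 for i, step in enumerate(steps) if step > factor * typical]


def classify_jumps(values, factor=8.0):
    """Label a series as 'none', 'fixed' (one jump), or 'random' (several jumps)."""
    jumps = outsized_steps(values, factor)
    if not jumps:
        return "none", jumps
    if len(jumps) == 1:
        return "fixed", jumps  # one discontinuity, then the shape stays coherent
    return "random", jumps


# Synthetic latitude-like series with a constant step of 0.01 per sample.
base = [0.01 * i for i in range(100)]

# Fixed offset: every point from index 50 (the 51st data point) is shifted.
shifted = [v + 0.5 if i >= 50 else v for i, v in enumerate(base)]

# Scattered deviations: only isolated points are displaced.
scattered = list(base)
for k in (50, 52, 54):
    scattered[k] += 0.5

fixed_result = classify_jumps(shifted)
random_result = classify_jumps(scattered)
```

A persistent offset produces exactly one outsized step because the trajectory never returns to its original position, whereas isolated displacements produce a step out and a step back at each affected point.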

As shown in Figure 10, the LLMs identify altitude slow offset anomalies by examining altitude data trends. The altitude exhibits an abnormal, gradual decline, inconsistent with normal landing patterns. Unlike typical landings, the velocity does not decrease synchronously. Additionally, the latitude and longitude trajectories remain smooth without random jitter. These characteristics collectively align with the features of a gradual altitude offset anomaly.
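The criterion described for Figure 10, a steady altitude decline that is not matched by a decrease in velocity, can be expressed as a comparison of two trend slopes. The following is a minimal sketch under assumed thresholds; `alt_slope_limit` and `speed_slope_limit` are hypothetical values in units per sample, and the synthetic series are not taken from the experimental data.

```python
def slope(values):
    """Least-squares slope of a series against its sample index (needs >= 2 points)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    numerator = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    denominator = sum((i - x_mean) ** 2 for i in range(n))
    return numerator / denominator


def altitude_drifts_without_slowdown(altitude, speed,
                                     alt_slope_limit=-1.0,
                                     speed_slope_limit=-0.05):
    """True when altitude trends down while speed does not trend down with it."""
    return slope(altitude) < alt_slope_limit and slope(speed) > speed_slope_limit


# Synthetic example: altitude falls by 5 units per sample.
altitude = [10000.0 - 5.0 * i for i in range(100)]
steady_speed = [230.0] * 100                          # speed unchanged
landing_speed = [230.0 - 0.5 * i for i in range(100)]  # speed falls as in a landing

suspicious = altitude_drifts_without_slowdown(altitude, steady_speed)
landing_like = altitude_drifts_without_slowdown(altitude, landing_speed)
```

In this toy case the first pair is flagged and the second is not, which mirrors the reasoning that a descent accompanied by a synchronous speed reduction resembles a normal landing.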

As shown in Figure 11, the LLMs detect velocity slow offset anomalies by monitoring fluctuations in speed data. The speed data exhibit abrupt abnormal changes: rising from 109.37 to a peak of 362.41, then suddenly dropping to 163.05 at index 251, after which it remains relatively stable. The model tracks the full sequence of abnormal velocity increase and subsequent stabilization, classifying it as a velocity slow offset anomaly.

As illustrated in Figure 12, the LLMs detect DoS attack anomalies by examining the integrity of trajectory data. Between indices 51 and 150, a large continuous segment of trajectory data is missing. This pattern aligns with data interruptions caused by DoS attacks, as other anomaly types do not lead to such extensive data loss. The model uses this pronounced data gap to identify DoS attack anomalies, providing a clear basis for detecting trajectory issues related to malicious attacks. Across these cases, the detailed anomaly output moves the decision beyond a black-box determination and gives it traceable physical significance.
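The data-gap criterion used for the DoS case in Figure 12 can be sketched as a search for long runs of missing sample indices. The function name `missing_runs`, the `min_gap` threshold, and the assumption that the quoted index bounds are inclusive are all illustrative choices rather than details from the paper.

```python
def missing_runs(received_indices, expected_length, min_gap=10):
    """Return inclusive (start, end) runs of missing indices at least min_gap long."""
    received = set(received_indices)
    runs = []
    start = None
    # One extra iteration past the end flushes a run that reaches the last index.
    for i in range(expected_length + 1):
        missing = i < expected_length and i not in received
        if missing and start is None:
            start = i
        elif not missing and start is not None:
            if i - start >= min_gap:
                runs.append((start, i - 1))
            start = None
    return runs


# Synthetic trajectory of 300 samples with indices 51..150 never received.
received = [i for i in range(300) if not 51 <= i <= 150]
gaps = missing_runs(received, expected_length=300)
```

The `min_gap` threshold separates an extended outage from the occasional dropped message that is normal for a broadcast link.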

5. Discussion

Although many well-established ADS-B anomaly detection methods based on deep learning and machine learning have been proposed, these approaches typically require periodic model retraining to adapt to newly emerging anomalous patterns. With the emergence of LLMs, how to apply them to ADS-B anomaly detection has become a key question. Our results indicate that the LLM-based ADS-B anomaly detection method proposed in this paper, which is driven by prompt-based semantic reasoning, identifies ADS-B anomalies with high accuracy. However, its performance is still limited by several factors.

Detection accuracy is sensitive to the flight phase. During the takeoff and landing phases, climb rates, descent rates, heading changes, and speed fluctuations are all large, so LLMs are more likely to misinterpret normal dynamic variations as anomalies, which risks degrading performance. This effect is especially prominent for slow offset attacks on altitude and velocity.

If the attack behavior is highly covert, LLMs have difficulty assessing the legitimacy of ADS-B data. In practice, however, the attacker's purpose is to interfere with ATC situational awareness, which requires the attack to have a significant effect. The construction of the attack data in this paper is therefore reasonable.

Although LLMs demonstrate strong capabilities in generating human-readable explanations for ADS-B anomalies, their interpretability faces limitations, including risks of hallucination. For example, when detecting velocity slow offsets, LLMs sometimes incorrectly attributed speed fluctuations to sudden altitude changes that were not present in the actual data. This mistake arose from the model relying heavily on learned associations between velocity and altitude in general aviation contexts.

In terms of computational cost, the primary overhead of the proposed method lies in prompt optimization and LLM inference. Compared with conventional machine learning approaches, the proposed method generally imposes a lower computational burden during feature extraction and inference, owing to its reduced reliance on complex feature engineering. Nonetheless, LLMs still present challenges with respect to inference speed, memory footprint, and the ability to batch-process trajectories at large scale. Furthermore, closed-source LLMs, such as Gemini-2.0, are typically deployed in the cloud, requiring users to access their capabilities via APIs. This reliance on cloud services constrains model availability, since it depends on server and API stability, and it sacrifices flexibility and customizability. The proposed method is not tied to any single model architecture and can be extended to other LLMs, such as DeepSeek and Qwen. However, substantial performance differences exist across models, so selecting an appropriate model for the ADS-B anomaly detection task is particularly critical.

As shown in Table 7, LLMs, machine learning, and deep learning methods each play a critical role in ADS-B anomaly detection. Each class of model has distinct strengths and limitations. When deciding whether to use LLMs, conventional machine learning, or deep learning for a given task, it is essential to understand the key differences among model types and their practical implications. This evaluation should not only assess technical performance and resource consumption but also consider factors such as maintainability.

Table 7.

Comparative analysis of the strengths and weaknesses of LLMs, machine learning, and deep learning models for ADS-B anomaly detection.

6. Conclusions

This study proposed an LLM-driven ADS-B anomaly detection framework based on prompt-based semantic reasoning. By incorporating a reasoning–action closed-loop optimization mechanism, the framework dynamically adjusts prompts and decision logic, enabling adaptation to unseen attacks without retraining. An interpretable decision mechanism embeds trajectory and attack features into the semantic context, ensuring anomaly labels are accompanied by explicit reasoning on causes, locations, and suggested responses, thereby overcoming the “black-box” limitation. Multimodal integration further enhances separability between normal and abnormal flight patterns. Experiments on 500 real trajectories with five attack types show that the framework achieved an RC of 96.39%, significantly outperforming LSTM and Iforest, confirming its adaptability, transparency, and effectiveness in complex airspace environments.

Future research should focus on domain-specific fine-tuning and optimization of LLMs for ATM systems, integrating advanced techniques to enhance model adaptability to ADS-B data. At the same time, further improvements in the interpretability and transparency of anomaly detection are needed to reduce misattribution and the risk of hallucinations. In addition, computational overhead should be reduced through lightweight model design and inference optimization, thereby improving large-scale trajectory processing capabilities while maintaining practical efficiency and feasibility. Although this study demonstrates the potential of LLMs to produce intuitive and user-friendly explanations, developing methods to best assist air traffic controllers in understanding the underlying causes of ADS-B anomalies remains an important area for further investigation.

Author Contributions

Conceptualization, S.L.; investigation, Z.Z.; writing—original draft, S.L.; writing—review and editing, B.W., Z.Z., Y.Y. and Y.G.; supervision, B.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work is funded by the National Natural Science Foundation of China under Grant 62472437.

Data Availability Statement

The data supporting this study’s findings are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ADS-B | Automatic Dependent Surveillance-Broadcast |
| LLMs | Large Language Models |
| ATM | Air Traffic Management |
| PSR | Primary Surveillance Radar |
| SSR | Secondary Surveillance Radar |
| ATC | Air Traffic Control |
| GNSS | Global Navigation Satellite System |
| LSTM | Long Short-Term Memory |
| NLP | Natural Language Processing |
| MLAT | Multilateration |
| DoS | Denial of Service |
| DE | Detection Rate |
| RC | Recall |
| ACC | Accuracy |
| MDR | Miss Detection Rate |
| FAR | False Alarm Rate |
| Iforest | Isolation Forest |
| GRU | Gated Recurrent Unit-based model |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).