1. Introduction

Machine learning (ML) is currently one of the most actively developing research areas. Significant advances have been made in techniques based on artificial neural networks (ANN) and reinforcement learning (RL). Among the applications in which ML methods are increasingly used, problems related to dynamical systems have received much attention. In particular, in the last two decades, efforts have been made to use artificial neural network technology both for modeling and identification of dynamical systems [1,2,3,4] and for their control [5,6,7,8]. Reinforcement learning methods [9,10,11], especially the variant known as Approximate Dynamic Programming (ADP) [12,13,14,15], are also actively investigated for the same purposes. The variants of the ADP approach are a very useful tool for synthesizing feedback control laws [16], including those satisfying the requirements of optimality and adaptivity [17,18].

One of the areas in which the application of machine learning technologies is being actively explored is aircraft of various kinds and purposes. This is driven by the complexity and variety of the tasks assigned to aircraft. The complicating factor is incomplete and inaccurate knowledge of the properties of the object under study and of the conditions in which it operates. This situation is typical for objects of this kind and prompts the expansion of the tools used to solve the required tasks. In addition, objects of this class are used in multiple modes, and the quality with which they solve their tasks is evaluated against multiple criteria.

For aircraft, as one of the most important kinds of dynamical systems, control has to be ensured under significant and diverse uncertainties in the values of their parameters and characteristics, flight modes, and environmental influences [19,20,21,22]. In addition, various abnormal situations may occur during flight, in particular equipment failures and structural damage, which must be counteracted by reconfiguring the aircraft control system [23,24,25,26]. Thus, it is necessary to operate under conditions of incomplete and inaccurate knowledge of the properties of both the aircraft and the environment in which it operates. Moreover, the situation in which the aircraft finds itself at any given time may change in a significant and unpredictable way due to the occurrence of abnormal situations. The aircraft control system must be able to operate efficiently under these conditions by promptly changing the parameters and/or structure of the control laws used. Adaptive control methods make it possible to satisfy this requirement [22,27,28,29,30].

However, the online adjustment of the control law represents only one of the functions of an adaptive control system, although it is the most important one. The reason is that most adaptive control schemes include a model of the control object, which plays a crucial role in adjusting the control law. Obtaining such a model from data on the behavior of the control object constitutes the content of the classical dynamical system identification problem [31,32]. The well-known manual on system identification [31] says, “Inferring models from observations and studying their properties is really what science is about”. To a large extent this is true, but we should not forget that models are created for certain purposes, namely, to analyze the behavior of the modeled systems and, in the case of the controlled systems we are interested in, also to synthesize control laws for them. It is needs of this kind that largely determine progress in the field of system modeling.

As noted above, the properties of a control object may be inaccurately known when forming its model, or they may change for some reason already in the process of its functioning. In such a case, in adaptive systems using the object model, it is necessary to be able to promptly restore the adequacy of the model to the control object. In other words, in such systems, not only the control law but also the object model should have the property of adaptability.

Obtaining a model of an object for use as part of an adaptive control system is closely related to three classes of problems arising in the development of aircraft of various kinds. These classes include analysis of aircraft behavior as dynamical systems [33,34,35], synthesis of control algorithms for them [36,37,38], and identification of their unknown or imprecisely known characteristics [39,40,41]. Mathematical and computer models of controlled dynamical systems play a critical role in solving problems in all three classes. In most cases, when solving these problems, the aircraft is considered a rigid body with six degrees of freedom. The traditional class of mathematical models in this situation is systems of ordinary differential equations. These models, with appropriate numerical methods [42,43,44,45], are widely used in solving problems of synthesis and analysis of controlled motion of aircraft of various classes.

The methods of formation and use of the above-mentioned models of traditional type have been developed in detail and successfully used for solving a wide range of problems. However, for modern and advanced complex technical systems, including aircraft of different classes, some problems arise, the solution of which cannot be provided by traditional techniques. These problems are caused, among other things, by the needs of adaptive control systems, i.e., the need to promptly adjust the control law and the model of the control object to maintain their adequacy to the changing situation.

Additionally, the problem of creating an adaptive control system for aircraft behavior is complicated by the fact that such a system becomes truly effective when working with a nonlinear model of the dynamical system under consideration. As experience shows [2,32], one of the most efficient approaches to modeling nonlinear systems is the use of methods and tools of artificial neural networks (ANN). Neural network modeling allows sufficiently accurate and computationally efficient ANN models to be built. This approach can be considered an alternative to methods of modeling dynamical systems based on differential equations. It provides, among other things, the possibility of obtaining adaptive models. Traditional neural network models, in particular models of the NARX and NARMAX classes [2,32,46], which are recurrent neural networks, are the most commonly used for modeling controlled dynamical systems. These models are purely empirical; that is, they are black-box models based solely on experimental data about the behavior of the object. However, in problems of the complexity level typical for aerospace engineering, it is often impossible to achieve with this kind of empirical model the level of accuracy required for solving aircraft motion control problems. In addition, due to their structural organization, such models do not allow solving the problem of identifying the characteristics of dynamical systems (e.g., aerodynamic characteristics of aircraft), which is a serious disadvantage of this class of models.

One of the most important reasons for the low efficiency of traditional ANN models in tasks related to complicated technical systems is that a black-box model has to cover all the details of the behavior of the system under consideration. For this purpose, it is necessary to build an ANN model of high enough dimensionality, i.e., with numerous adjustable parameters. At the same time, it is known from the experience of ANN modeling that the higher the dimensionality of the ANN model, the larger the amount of training data required for its adjustment [46]. As a result, with the amount of experimental data that can actually be obtained for complex technical systems, it is not possible to train such models to a given level of accuracy. This, in turn, does not allow us to obtain efficient adaptive control systems.

Obviously, as the complexity of the modeling problem to be solved grows, an ANN model of greater dimensionality will be required. For this reason, it is necessary to increase the number of adjustable parameters in it to ensure the complexity of the model is adequate for this problem. As an index of the complexity of an ANN model, we will take the number of connections in it, i.e., the number of adjustable parameters of the model. This index will be further referred to as the model dimensionality. Similarly, the number of examples in the training set will be called the dimensionality of the training set. The complexity of the model depends on the following factors: (1) the number of state and control variables in the model, i.e., the sum of the dimensionality of the state space and control space of the dynamical system under consideration; (2) the ranges of change for the state and control variables; and (3) the number of value samples for each of the state and control variables. But, as noted above, the increase in the dimensionality of the ANN model causes an increase in the required amount of training data. Because of this, there is a complexity threshold for the recurrent ANN model of empirical type above which it is not possible to train the network to a level acceptable in terms of modeling accuracy.
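The notion of model dimensionality introduced above can be illustrated with a minimal sketch. It assumes a fully connected feedforward network and counts its connection weights, optionally with biases; the function name and the layer sizes are hypothetical and chosen only for illustration.

```python
def mlp_dimensionality(layer_sizes, with_biases=True):
    """Count adjustable parameters of a fully connected feedforward network.

    layer_sizes lists the number of units per layer, from input to output.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out      # connection weights between adjacent layers
        if with_biases:
            total += n_out         # one bias per unit of the receiving layer
    return total


# Hypothetical networks: a small one and a larger one with more inputs and outputs.
small = mlp_dimensionality([5, 10, 1])    # 5*10 + 10 + 10*1 + 1
large = mlp_dimensionality([20, 40, 3])   # 20*40 + 40 + 40*3 + 3
print(small, large)
```

Even this modest growth in the number of inputs, hidden units, and outputs raises the parameter count by more than an order of magnitude, which is the mechanism behind the growing demand for training data.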

It is well known that Richard Bellman introduced the notion of the curse of dimensionality [47,48], which characterizes the catastrophic growth in the complexity of solving dynamic programming problems as the dimensionality of the problem increases. A very similar phenomenon, also a kind of curse of dimensionality, takes place in modeling controlled dynamical systems using recurrent neural networks of the empirical type.

To overcome the above-mentioned difficulties, which are typical for traditional models, both in terms of differential equations and ANN models, it is suggested to use a combined approach. Its basis is ANN modeling because only in this variant is it possible to obtain adaptive models. Theoretical knowledge about the modeling object, existing in the form of ordinary differential equations (for example, traditional models of aircraft motion), is introduced in a special way into the ANN model of hybrid type (semi-empirical ANN model, gray box model). In this case, a part of the ANN model is formed on the basis of the available theoretical knowledge and does not require further adjustment (training). Only those elements that contain uncertainties, for example, the aerodynamic characteristics of the aircraft, are subject to adjustment and/or structural correction in the process of training the ANN model.

This approach results in semi-empirical ANN models that allow us to solve problems that are inaccessible to traditional ANN methods [49,50,51,52,53,54,55]. The semi-empirical approach radically reduces the dimensionality of the ANN model, so that the required accuracy can be achieved using training sets that are insufficient in size for traditional ANN models. In addition, it makes it possible to identify the characteristics of the dynamical system described by nonlinear functions of many variables, e.g., coefficients of aerodynamic forces and moments.

The semi-empirical approach to ANN modeling of aircraft allows us to effectively overcome the above-mentioned model complexity threshold. As our experience shows [56,57,58,59,60,61], it can be used to successfully solve problems that are many times more complex than what is possible with empirical ANN models. The area of effective use of such models is the identification of aircraft characteristics from the available experimental data describing its behavior. However, these results are achieved at a very high cost. Namely, the computational time required by semi-empirical models completely excludes the possibility of their online adjustment, i.e., adaptation. Without such a possibility, these models cannot be used directly as part of adaptive control systems. It is necessary to create some algorithms for estimating adjustments, which must be used together with a model that is not adaptive. This is a severe limitation for semi-empirical ANN models.

Another point to be noted is that recurrent neural networks (RNNs) are used in both the empirical and semi-empirical variants. Due to its dynamic nature, an RNN is very challenging to train [46,62,63,64,65,66]. Therefore, we should look for variants of neural network-based adaptive control schemes in which we can use only feedforward networks, which are much easier to train than recurrent networks.

So, the hybrid (semi-empirical) model allows us to solve the problem of model accuracy based on the available training data. However, this model is too complex and time-consuming to train due to the dynamic (recurrent) nature of the network used. One of the options to reduce the impact of these limitations is also to build a hybrid ANN model, but with a hybridization of a quite different nature compared with semi-empirical models. Namely, semi-empirical models combine elements based on both theoretical and empirical data on the behavior of the object under consideration. An alternative variant of the hybrid ANN model, which will be discussed below, is based on the combination of feedforward and recurrent layers as modules. In such a variant, it is possible to involve the currently well-developed methods and tools of deep neural networks and deep learning in solving the problem [67,68,69,70]. Here, the possibility of implementing algorithms for online adjustment of models appears, i.e., such models are potentially suitable for use as part of adaptive control systems.

The above-mentioned approaches to adaptive control are based on working directly with a nonlinear model of the control object. This causes considerable difficulties, which, as noted above, are due to the dynamic nature of recurrent networks. In the case of hybrid models of the second kind, the problem is somewhat mitigated since only part of the ANN model is recurrent; the difficulties remain, but they are less severe.

In our opinion, there is currently no reasonable alternative to machine learning methods and tools for creating adaptive systems for complex systems, in particular aircraft. However, as just mentioned, it is very difficult to realize object models (as well as control laws) as dynamic networks, and we would like to have more efficient methods at our disposal. In this connection, we need to search for such variants to work with a nonlinear control object, which would allow us to limit ourselves to using only feedforward networks. The training of such networks is many times easier than the learning of recurrent networks.

This requirement is satisfied by two approaches that are potentially suitable for developing adaptive systems.

The first of them is based on the linearization of the control object. This approach is traditionally used to analyze the perturbed motion of an aircraft and synthesize control laws for it. In this case, linearized models obtained by Taylor series expansion of the right-hand sides of the original nonlinear differential equations are used [33,34,35,36]. This approach severely reduces the capabilities of the resulting adaptive systems.

An alternative variant is based on online linearization of the control object. In this option, the most promising is the use of a nonlinear transformation in the feedback (feedback linearization), which is selected in such a way that the dynamical system closed by such feedback becomes linear [71,72,73]. The traditional linearization used in flight dynamics problems [33,34,35] yields a model that has the required accuracy only in a single operational mode of the nonlinear system and in a small neighborhood of this mode. In contrast, the feedback linearization approach allows obtaining an accurate model in the whole region of the operating modes of the object under consideration. For aircraft control, this approach is often referred to as nonlinear dynamic inversion (NDI) [74,75,76,77,78,79,80]. The adaptability of the system based on the NDI approach is provided by adjusting the nonlinear transformation in the negative feedback enclosing the nonlinear control object. A valuable property of the NDI approach is that this transformation is a nonlinear function of several variables. This means that it is not necessary to use a recurrent neural network to represent a nonlinear dynamic model [81,82,83,84,85,86]. A feedforward network that efficiently handles the representation of functional transformation in feedback is sufficient.
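The idea of feedback linearization can be illustrated with a minimal sketch for a scalar system $\dot{x} = f(x) + g(x)u$. Choosing $u = (v - f(x))/g(x)$ turns the closed loop into $\dot{x} = v$, which is linear in the new input $v$. The functions `f` and `g`, the gain `k`, and the Euler integration step below are hypothetical choices made only for illustration. Here the inverting transformation is computed from a known model, whereas in an adaptive scheme it would be represented by a trainable feedforward network.

```python
import math


def f(x):
    # Hypothetical nonlinear drift term of the plant.
    return -x ** 3 + math.sin(x)


def g(x):
    # Hypothetical control effectiveness; always >= 1, so division is safe.
    return 2.0 + math.cos(x)


def ndi_control(x, v):
    """Inverting feedback: makes the closed loop behave as x_dot = v."""
    return (v - f(x)) / g(x)


def simulate(x0, x_ref, k=2.0, dt=0.001, t_end=5.0):
    """Euler simulation of the plant under NDI plus a linear outer loop."""
    x = x0
    for _ in range(round(t_end / dt)):
        v = -k * (x - x_ref)           # linear control law for the linearized loop
        u = ndi_control(x, v)          # nonlinear transformation in the feedback
        x += dt * (f(x) + g(x) * u)    # nonlinear plant dynamics
    return x


x_final = simulate(x0=1.5, x_ref=0.5)
print(x_final)
```

Because the inversion cancels the nonlinearity over the whole state range, the same linear gain `k` works for any initial state, not only near one operating point.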

Another approach that avoids the use of recurrent networks is based on reinforcement learning [87]. The research area called adaptive/approximate dynamic programming (ADP) is the most actively developed in reinforcement learning for control problems in dynamical systems [12,14,16,88,89]. The ADP approach involves primarily a class of methods based on the concept of adaptive critic design (ACD) [9,90,91,92]. Reinforcement learning methods, especially as applied to adaptive control problems for complex engineering systems, are very effective. This effectiveness is due to the joint use of reinforcement learning principles and feedforward neural networks. In addition, the ACD system’s learning algorithms for adaptive control problems are based on the techniques of optimal control, in particular, dynamic programming. In other words, this approach is also a kind of hybrid approach that combines the ideas and techniques of reinforcement learning, artificial neural networks, and optimal control.

The joint use of NDI and ADP-based approaches also opens up a wide range of possibilities. The attractive thing in such a case is that we can stay within the framework of well-developed linear control theory methods. Combining these methods with approximate dynamic programming techniques ensures the adaptability of the resulting systems. However, the traditional approach to obtaining a linearized representation of the system, based on the use of Taylor series expansion, significantly limits the applicability domain of the obtained control laws. NDI methods allow for the elimination of this limitation as they provide accurate linearization of the model for the whole range of operating modes of the system under consideration. The combined use of the model of a nonlinear control object linearized by NDI with ADP methods allows us to obtain an adaptive control system, which is especially valuable when the dynamics of the control object during its operation change unpredictably due to failures and/or damage. Promising options for ADP + NDI combinations include systems based on such variants of the ACD scheme as SNAC (Single Network Adaptive Critic) [93,94,95,96,97,98,99,100] and LQR Agent [101,102,103,104,105,106]. These variants, although they can be applied directly to nonlinear systems, are more efficient for a linear control object. The required linearization for both of these variants is conducted on an NDI basis. The first of these variants, i.e., SNAC, is a modification of the ACD scheme in which the critic is implemented as a feedforward network and the actor as an optimization algorithm. This distinguishes SNAC from the standard variant of ACD, in which the actor is also implemented as a feedforward network. The second variant, i.e., the LQR Agent, is a generalization of the conventional Linear Quadratic Regulator (LQR) problem of synthesizing an optimal regulator for a linear system under a quadratic criterion. While in the standard LQR method the desired control law gain coefficients are derived from the solution of the Riccati equation, in the case of the LQR Agent they are found using machine learning algorithms. We investigated the capabilities of both SNAC [100] and LQR Agent [106], including their combinations with NDI. The results demonstrate the potential of both of these approaches, but more research is needed to obtain results suitable for real-world applications.
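For reference, the classical route mentioned above, in which the LQR gain follows from the Riccati equation, can be sketched for the simplest scalar case. The sketch assumes a plant $\dot{x} = ax + bu$ and the criterion $J = \int_0^\infty (q x^2 + r u^2)\,dt$; the function name and the numerical values are hypothetical. It shows only the standard LQR baseline, not the LQR Agent, in which the gain is found by learning.

```python
import math


def scalar_lqr(a, b, q, r):
    """Solve the scalar algebraic Riccati equation 2*a*p - (b**2 / r)*p**2 + q = 0.

    Returns the stabilizing (positive) root p and the gain K of the law u = -K*x.
    """
    p = r * (a + math.sqrt(a * a + b * b * q / r)) / (b * b)
    gain = b * p / r
    return p, gain


# Hypothetical unstable first-order plant with unit weights in the criterion.
a, b, q, r = 1.0, 1.0, 1.0, 1.0
p, gain = scalar_lqr(a, b, q, r)

residual = 2 * a * p - (b * b / r) * p * p + q   # should vanish up to rounding
closed_loop_pole = a - b * gain                  # negative means a stable closed loop
print(p, gain, residual, closed_loop_pole)
```

For these values the closed-loop pole equals $-\sqrt{a^2 + b^2 q / r} = -\sqrt{2}$, so the open-loop unstable plant is stabilized by the optimal gain.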

In the following sections, the issues mentioned in the introduction are discussed in more detail.

In Section 2, three classes of problems are formulated for an aircraft interpreted as a controllable dynamical system: analysis of system behavior, synthesis of control laws for them, and system identification. In all these classes of problems, the model of the object under consideration plays a key role. A general formulation of the modeling problem for controlled dynamical systems is given, and its specific features are analyzed. Further, in this section, the problem of training set generation for ANN modeling of dynamical systems is discussed. The results of solving this problem are critical for all three classes of problems mentioned above.

Section 3 presents a variant of ANN modeling of controlled dynamical systems based on the use of ANN models of the empirical type. These are models of the black-box type. All traditional neural networks belong to this kind, as they are built exclusively on the basis of experimental (empirical) data. This approach can be quite useful and, in some cases, the only possible one when there is nothing but empirical data about the object at our disposal. But this circumstance is also a source of limitation for the capabilities of this class of models. These issues are discussed using the example of the modeling problem for the longitudinal angular motion of an airplane. It is shown that for ANN models of empirical type, this problem is in most cases a marginal one in complexity level. At the same time, real aircraft modeling and control problems are much more complicated, which limits the applicability of empirical models.

Section 4 discusses an approach to solving the problem of overcoming the complexity threshold identified in the previous section. This approach is based on the transition from empirical ANN models to semi-empirical ones, i.e., from black-box to gray-box models. Semi-empirical ANN models are hybrid, as they use both empirical data on the behavior of the object and theoretical knowledge about its nature. After considering the procedure for the formation of such models, the analysis of their capabilities, including in comparison with empirical ANN models, is carried out using two examples. One of them deals with a simple dynamical system, which allows us to demonstrate the formation of a semi-empirical ANN model as well as give a comparison between the capabilities of this model and the empirical ANN model. In the second example, we again use the longitudinal angular motion problem for the same purposes. This example allows us to demonstrate another important property of the semi-empirical approach. Namely, this approach allows us to solve not only the problem of system identification (i.e., obtaining a model of the object under consideration) but also the problem of identifying the characteristics of this system. In other words, if some nonlinear functions are unknown to us in the problem to be solved, the semi-empirical approach makes it possible to reconstruct them. For an aircraft, such characteristics are usually related to the aerodynamic forces and moments acting on it. It is shown that the semi-empirical approach solves this problem with high accuracy, despite a number of complicating circumstances.

In Section 5, we return again, although at a different level, to ANN models of the empirical type. This return is because, despite the obvious advantages of semi-empirical models, they also have a severe disadvantage. Namely, the process of forming such models requires a massive consumption of computational resources. For this reason, online adjustment of such models, which is necessary for their use in adaptive systems, is practically impossible. An alternative option to both empirical ANN models of the traditional kind and semi-empirical ANN models is the construction of another variant of the hybrid ANN model. In this variant, the hybrid nature of the model consists not in the combination of empirical and theoretical elements resulting in a recurrent network of a special kind but in the combination of elements of feedforward networks and recurrent networks. This variant opens up the possibility of using deep learning technology to build models of aircraft motion, as well as models for other types of controlled dynamic systems. This approach is illustrated again by the example of longitudinal angular motion. First, we form an ANN model for this example and then a neurocontroller based on the same approach. The possibility of fault-tolerant control using the proposed tools is also demonstrated.

Among the approaches that can successfully cope with adaptive control and modeling problems for nonlinear dynamical systems, including aircraft, two more options based on nonlinear dynamic inversion and reinforcement learning were mentioned above. These options are not considered in this paper. The issues related to the NDI approach and the ADP approach for the considered problem domain require a separate presentation due to the vastness of both of these topics. The authors intend to prepare relevant publications on these issues, which are clearly important for the control of aircraft behavior as nonlinear dynamical systems under uncertainty conditions.

In addition to the two critical elements of an adaptive system discussed in this paper, i.e., the control law and the model of the control object, there is also a third element that is critical to the performance of such systems. This element should provide the first two with information about the current situation, i.e., about the current state of the control object and the environment in which it operates. At the same time, the concept of environment should be interpreted broadly. It is not only the state of the atmosphere and gravity field that immediately affect the aircraft.

In such tasks as formation flying, air traffic control, etc., it is important to have information about the state and behavior of objects around our aircraft. Information about the current situation in which our aircraft finds itself is commonly referred to as situational awareness. Because of the importance of this topic, we have made a special study of situational awareness and the ways of its formation. This challenge is relevant for both piloted and unmanned vehicles, and it is much more difficult for unmanned vehicles than for piloted ones. This is due to the fact that, in the case of a piloted aircraft, part of the formation of situational awareness can be delegated to crew members. In the case of UAVs, as with any other unmanned vehicle, everything must be accomplished by the on-board tools of the control object under consideration. This is why our study was focused on the case of an unmanned aerial vehicle. The results of this study were published in our article [107].

3. Empirical Neural Network Models of Controllable Dynamic Systems

As is known, the result of solving the system identification problem in its traditional formulation is a black-box model of the object under consideration. Methods for solving problems of this kind are described, for example, in the well-known manual [31], as well as in two detailed reviews [113,114]. Since the second half of the 1980s, neural network technologies have been actively used for system identification (see, e.g., [115,116,117]). The state of the art of the field is covered in the comprehensive monograph [32], where new approaches based on neural networks, fuzzy, and neuro-fuzzy technologies are also considered, along with methods that are quite traditional for system identification.

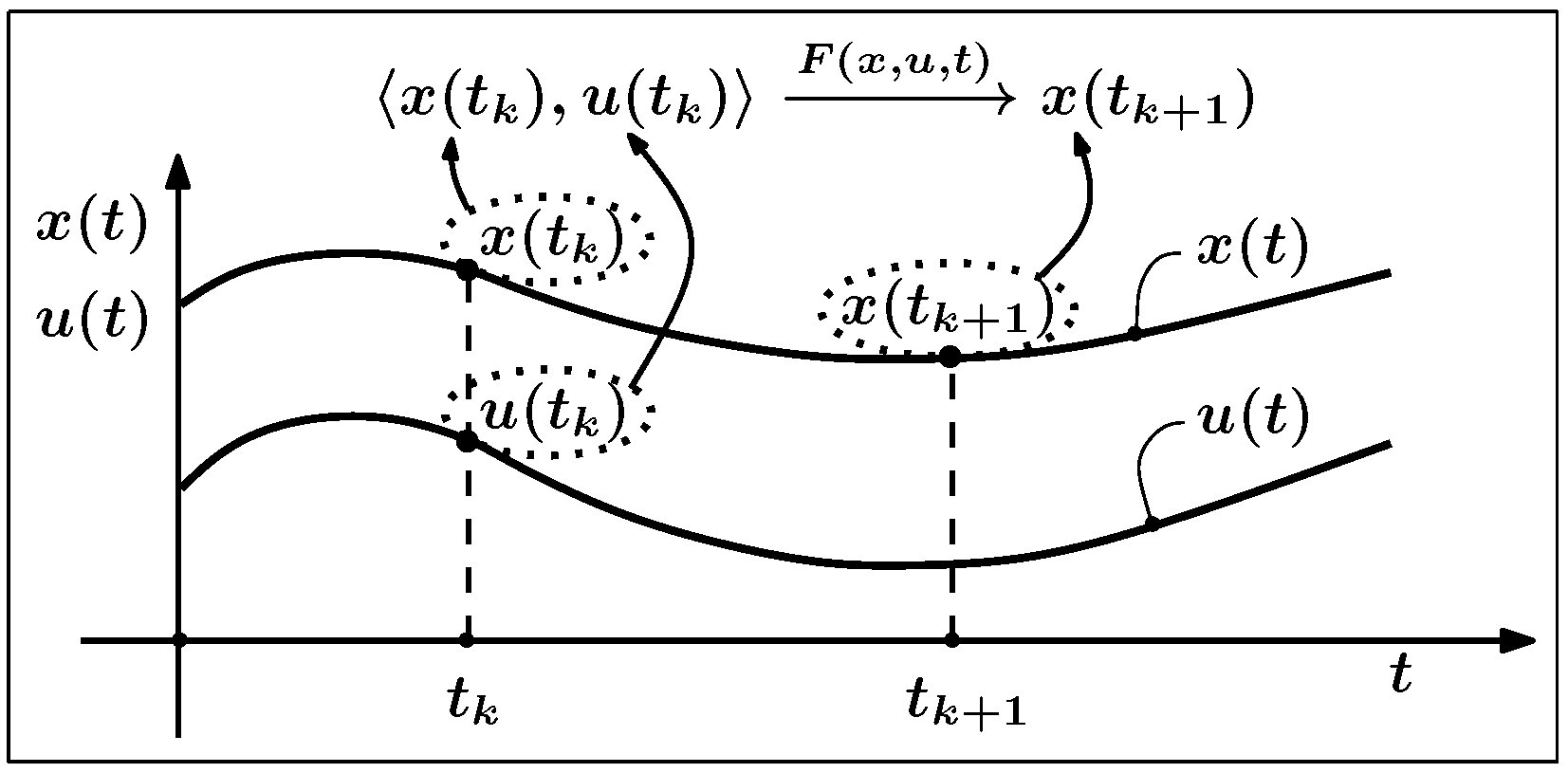

This problem is relatively easy to solve for uncontrolled dynamical systems. Namely, for systems of the kind $\dot{x} = f(x, t)$, where $x$ is the state vector, with an unchanged vector function $f(\cdot)$, the state of the system $x(t_{k+1})$ at time instant $t_{k+1}$ is completely determined by the state $x(t_k)$ at the previous time instant $t_k$. Let us denote such a transition of the system from one state to another as $x(t_k) \to x(t_{k+1})$. For a controlled system $\dot{x} = f(x, u, t)$, the $k$-th training example takes the form $\langle x(t_k), u(t_k) \rangle \to x(t_{k+1})$. Now the value of $x(t_{k+1})$, to which the system will move, is determined not only by the value of $x(t_k)$, but also by what values all $m$ components of the vector of control variables $u = (u_1, \ldots, u_m)$ take. It should be kept in mind that, first, each of these components can vary over a rather wide range. Second, to ensure the informativity of the training set, it is necessary for each $x(t_k)$ not only to vary each of the components of the vector $u$, but also to look through all possible combinations of the values of these components. This circumstance dramatically increases the required training set size.

For example, in an airplane motion control problem using three channels (pitch, yaw, roll), the ranges of deflection angles of the controls (elevator, rudder, and ailerons) can be taken as grad. Let the sample of values for each of these controls include 10 elements. Then, in the considered case of three-channel control, we will have to generate $10^3 = 1000$ examples for each time instant and its corresponding current state instead of the single example required for an uncontrolled system. Even in relatively short-duration problems involving short-period angular motion of an airplane, the typical time interval is 20 s. For a maneuverable aircraft, in order to capture the peculiarities of its motion dynamics, the usual value of the time step is s, i.e., a set of examples showing the reaction of the system under study to various control actions is required to be obtained in this case for time instants. In real problems, especially for maneuverable aircraft, the above assumption will be too coarse; we need to at least double the sampling (to 20 values per control), which leads to the need to generate $20^3 = 8000$ examples for each time instant.
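The counting argument of this example can be reproduced with a short sketch. The numbers of samples per control (10 and 20), the three control channels, and the 20 s interval come from the text; the time step of 0.01 s used below is only an assumed value for illustration, and the function names are hypothetical.

```python
def examples_per_instant(samples_per_control, n_controls):
    """Number of control combinations to try for one state at one time instant."""
    return samples_per_control ** n_controls


def total_examples(samples_per_control, n_controls, duration_s, time_step_s):
    """Control combinations accumulated over all time instants of one trajectory."""
    n_instants = round(duration_s / time_step_s)
    return n_instants * examples_per_instant(samples_per_control, n_controls)


coarse = examples_per_instant(10, 3)   # 10 values for each of 3 controls
fine = examples_per_instant(20, 3)     # sampling doubled to 20 values per control

# ASSUMPTION: 0.01 s time step, chosen for illustration only.
coarse_total = total_examples(10, 3, duration_s=20.0, time_step_s=0.01)
fine_total = total_examples(20, 3, duration_s=20.0, time_step_s=0.01)
print(coarse, fine, coarse_total, fine_total)
```

Doubling the number of samples per control multiplies the count by $2^3 = 8$, and this is before any variation of the state variables themselves is taken into account.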

It should be noted that we have so far proceeded from a simplified formulation of the problem, in which the uncontrolled system $\dot{x} = f(x, t)$ realizes a single trajectory determined only by the initial conditions $x(t_0)$. As just shown, for the controlled system $\dot{x} = f(x, u, t)$ under the same conditions, we must additionally take into account the control variability, which increases the required number of examples by three to four orders of magnitude compared with the uncontrolled system. If we also take into account that the state variables $x$ vary over a wide range as well, this gap increases even more. It is for this reason that we have to use the indirect approach to training set generation for controlled dynamical systems discussed above in Section 2.2.

For the reasons noted above, for the implementation of adaptive systems dealing with nonlinear control objects, it is advisable to use neural network models of such objects. In this section, we consider the use of this approach for the case when the control object is an aircraft and analyze the possibilities and limitations of this approach.

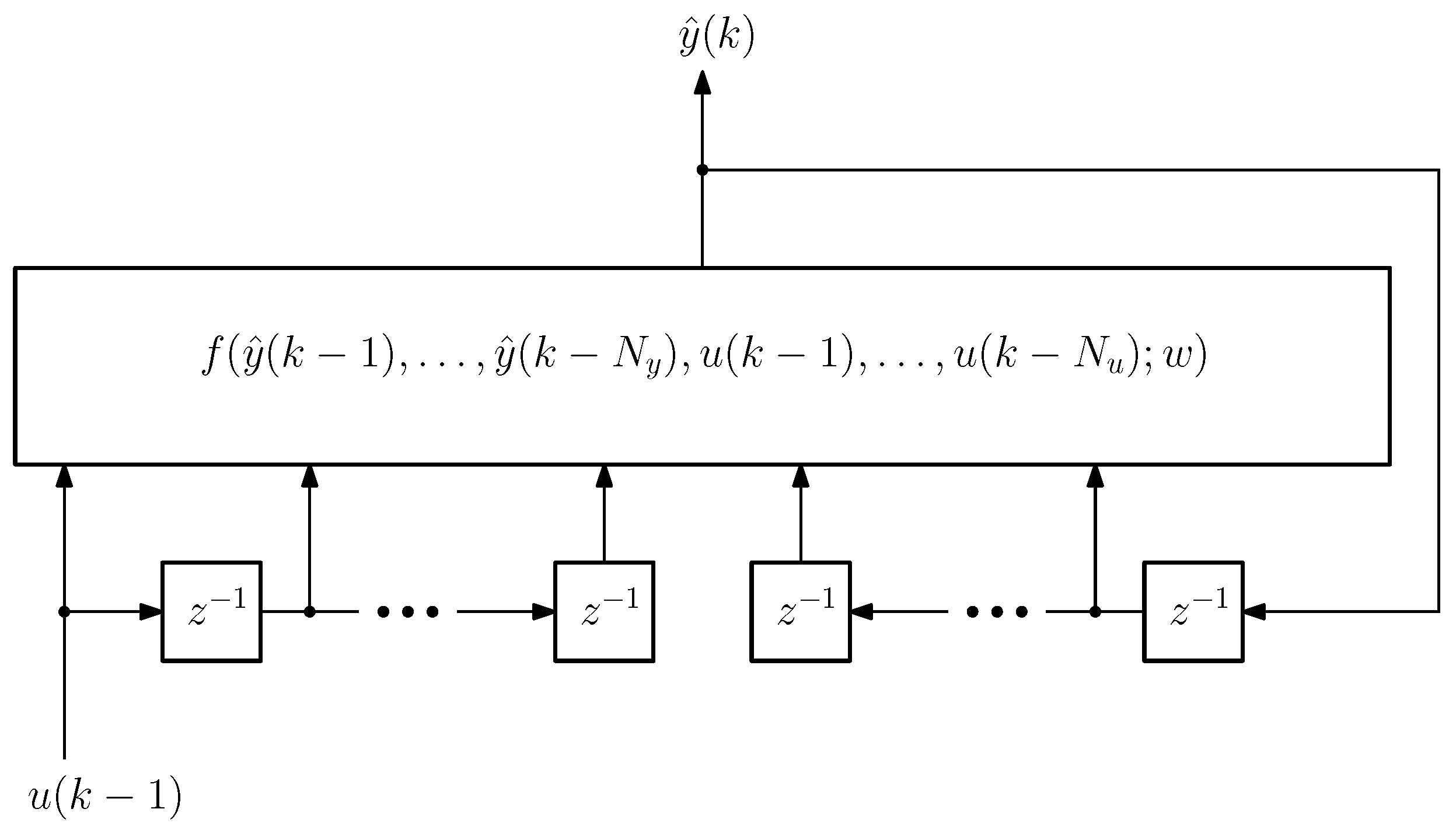

3.1. General Structure of ANN Model for Aircraft Motion Based on Multilayer Neural Network

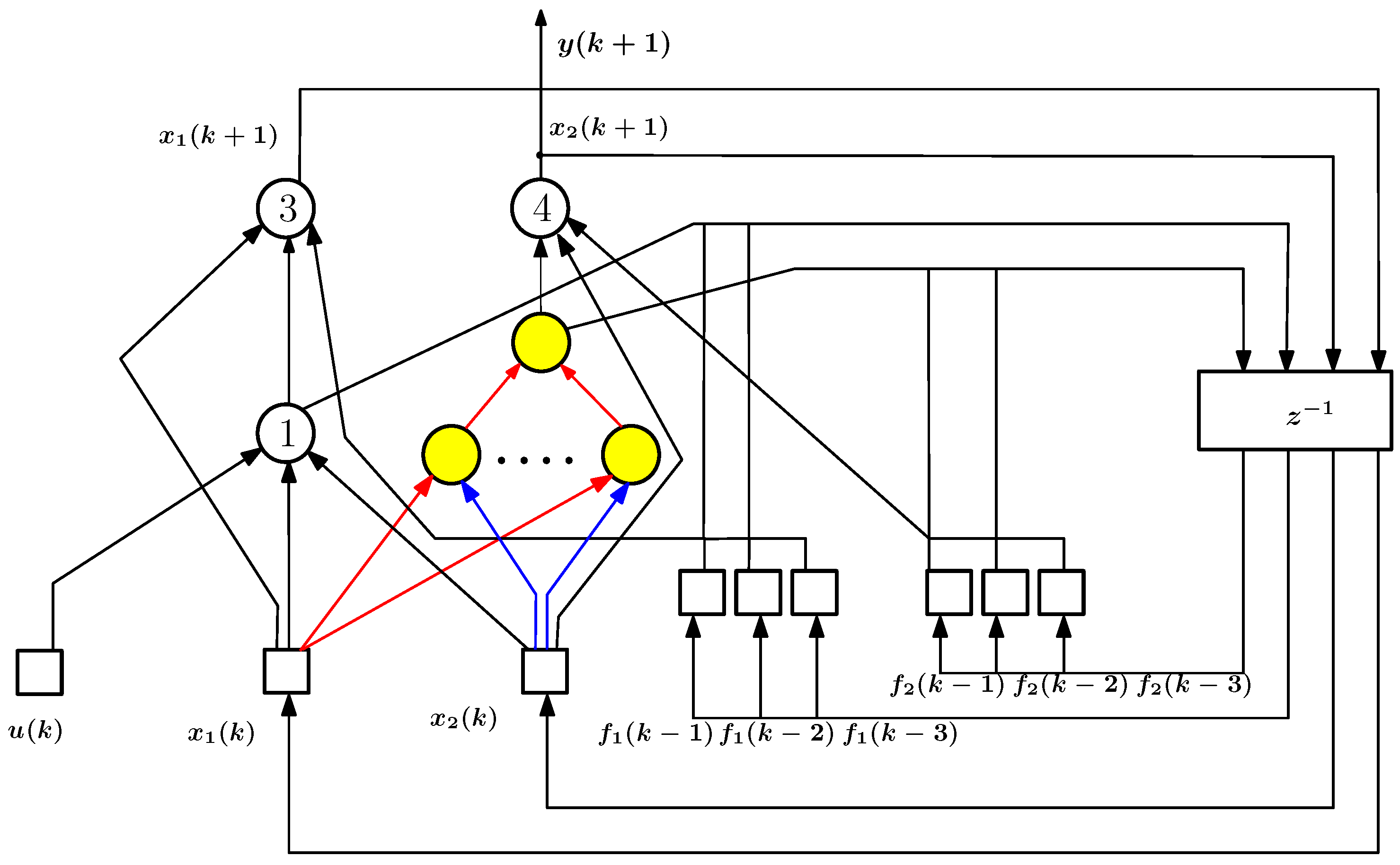

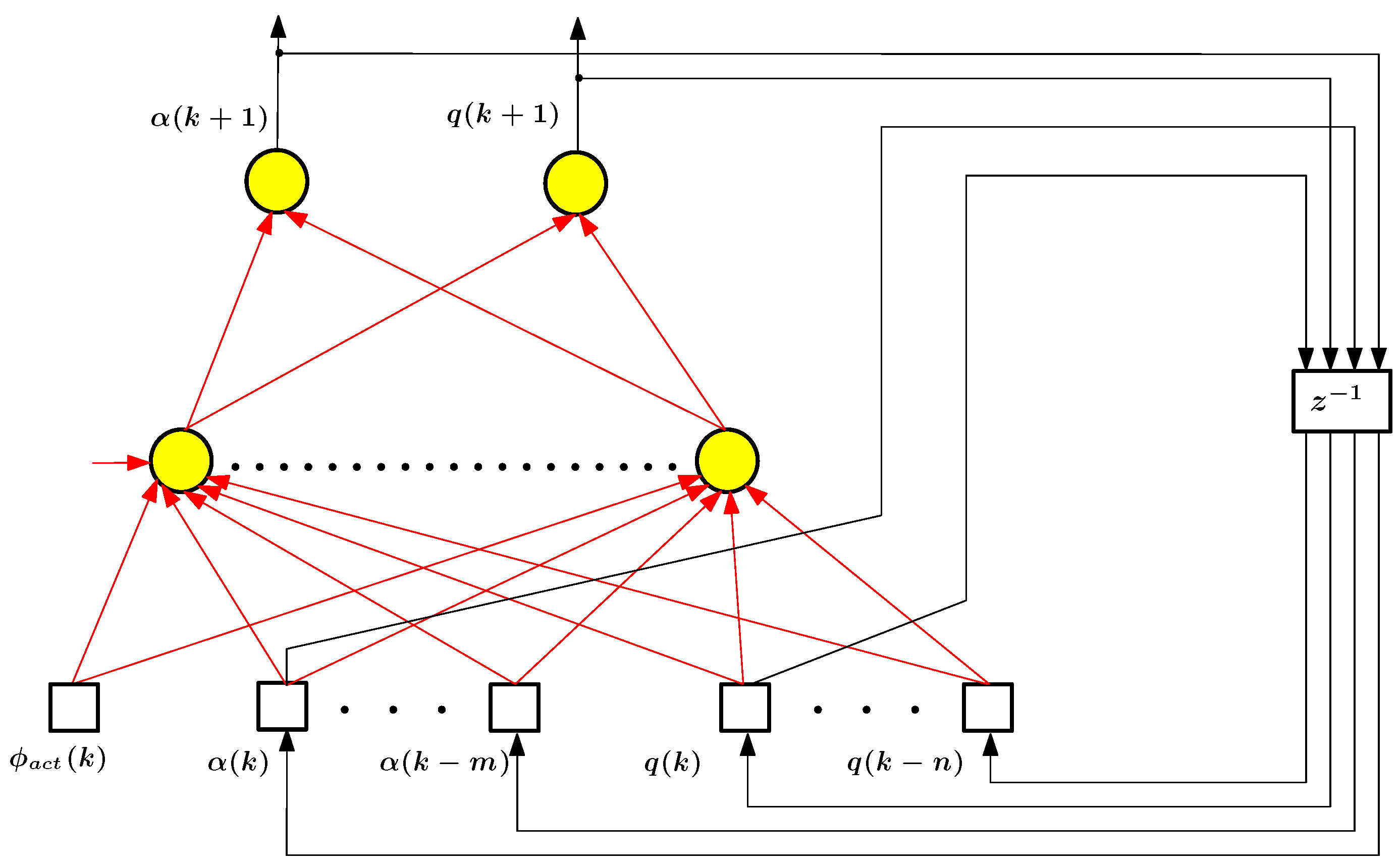

Aircraft flight dynamics is described in general by a nonlinear model. In the neural network setting, the NARX (Nonlinear AutoRegressive with eXogenous inputs) network is often used as such a model. An extension of the NARX model is NARMAX (Nonlinear AutoRegressive Moving Average with eXogenous inputs), which allows random external influences on the system to be taken into account explicitly when training the network. Both of these models [2,32,46,63] are recurrent neural networks in the considered case. Next, as an example of neural network-based nonlinear system identification, we will consider the NARX model, whose structural scheme is shown in Figure 5.

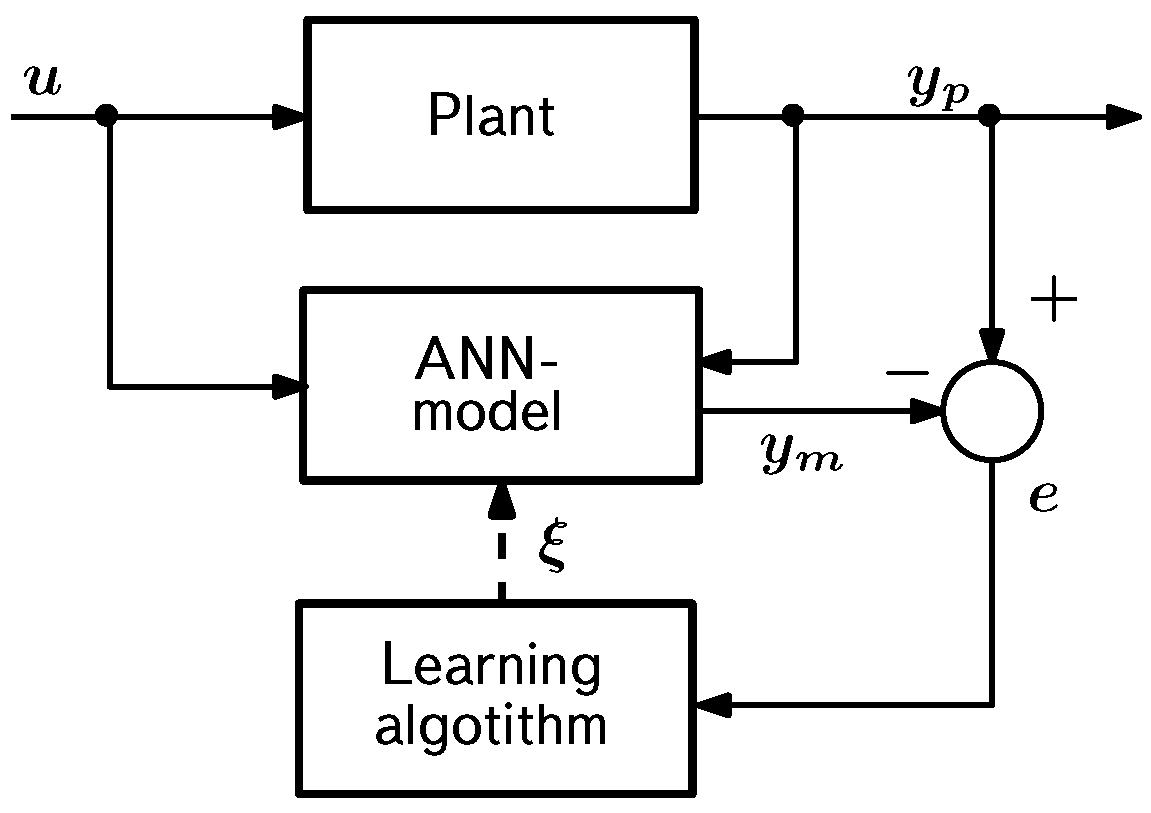

The general scheme for solving the identification problem is shown in

Figure 6, which demonstrates that the same control signal

u is fed to the input of both the control object (plant) and the formed ANN model of the object (NARX in our case). The object responds to this control signal by producing an output value

, which is compared with the ANN model output

. In addition, the object output

is also used as additional information in the ANN model generation process. The result of comparing

and

equals

. This is the error with which the ANN model reproduces the behavior of the object at a given instant of time and at given values of adjustable parameters, i.e., synaptic weights of the neural network. The learning algorithm takes the

error as input and generates corrective actions

that change the weights to reduce the

error.

The NARX model, which we use to solve the identification problem, operating in discrete time, realizes a dynamic mapping described by a difference equation of the following kind:

where the value of the output signal

for a given time instant

k is computed based on the values

of this signal for a sequence of previous time instants, as well as the values of the input (control) signal

external to the NARX model. In the general case, the length of the prehistory by outputs and controls may not coincide, i.e.,

. In (

29),

w represents the set of adjustable parameters of the NARX model (synaptic weights). A convenient way to implement the NARX model is to use a multilayer feedforward network of the multilayer perceptron type to approximate the mapping

in the relation (

29) and time delay lines (TDL) to obtain the values of

and

. In this case, the NARX network contains one hidden layer, as well as TDL elements for input and output signals. The hidden layer neurons are sigmoidal (linear input part and hyperbolic tangent as an activation function); the output layer neurons are linear, i.e., in addition to the linear input part, they also contain a linear activation function. Variable parameters in training the network are weights of its connections; the RTRL (Real-Time Recurrent Learning) algorithm was used for training. The hyperparameters in the NARX network training task are the number of neurons in the hidden layer, the number of cells in the TDL (delays), and the maximum allowable number of iterations of the training process. The number of neurons in the hidden layer and the number of delays are adjusted experimentally. In particular, the results shown in

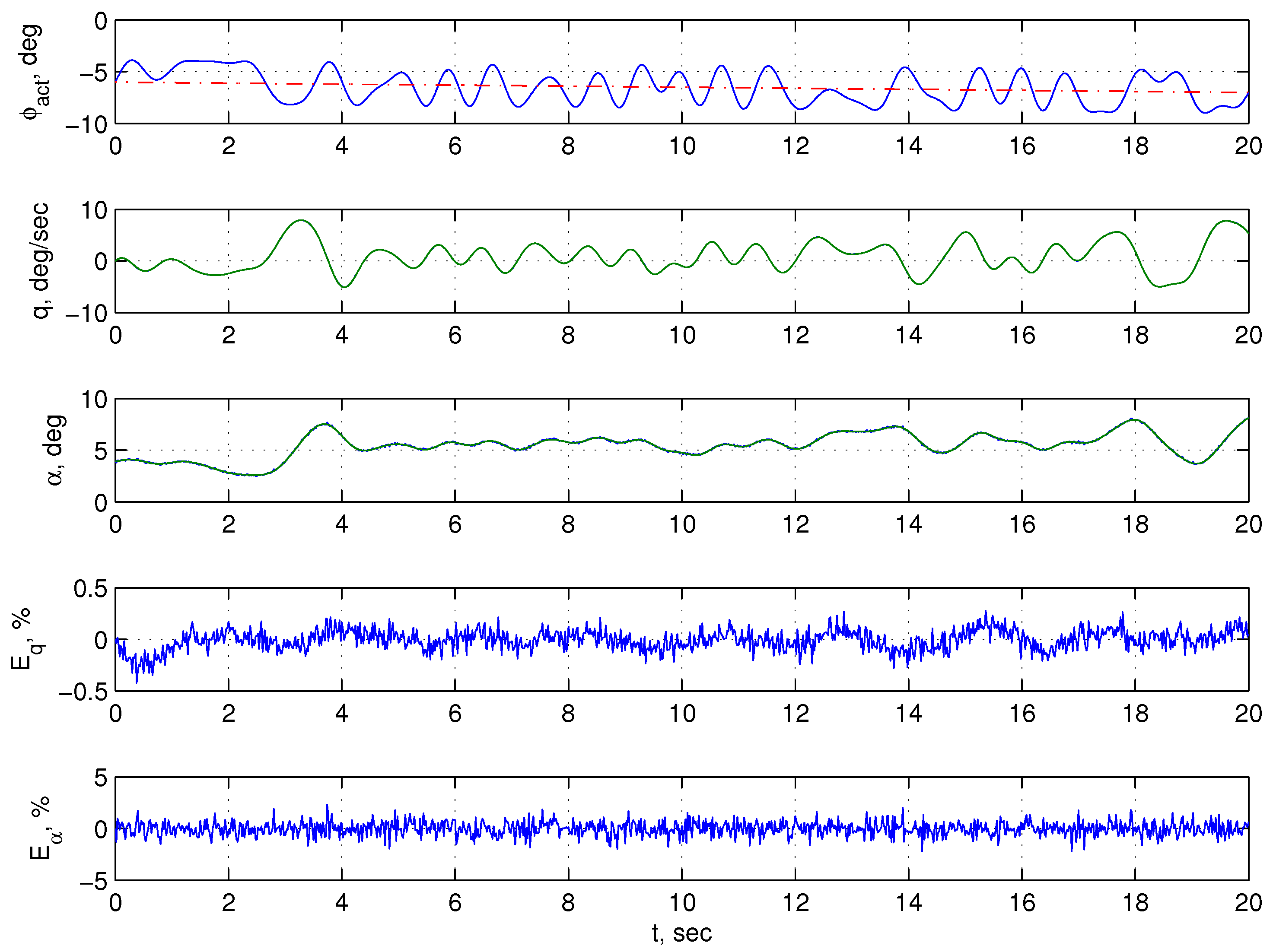

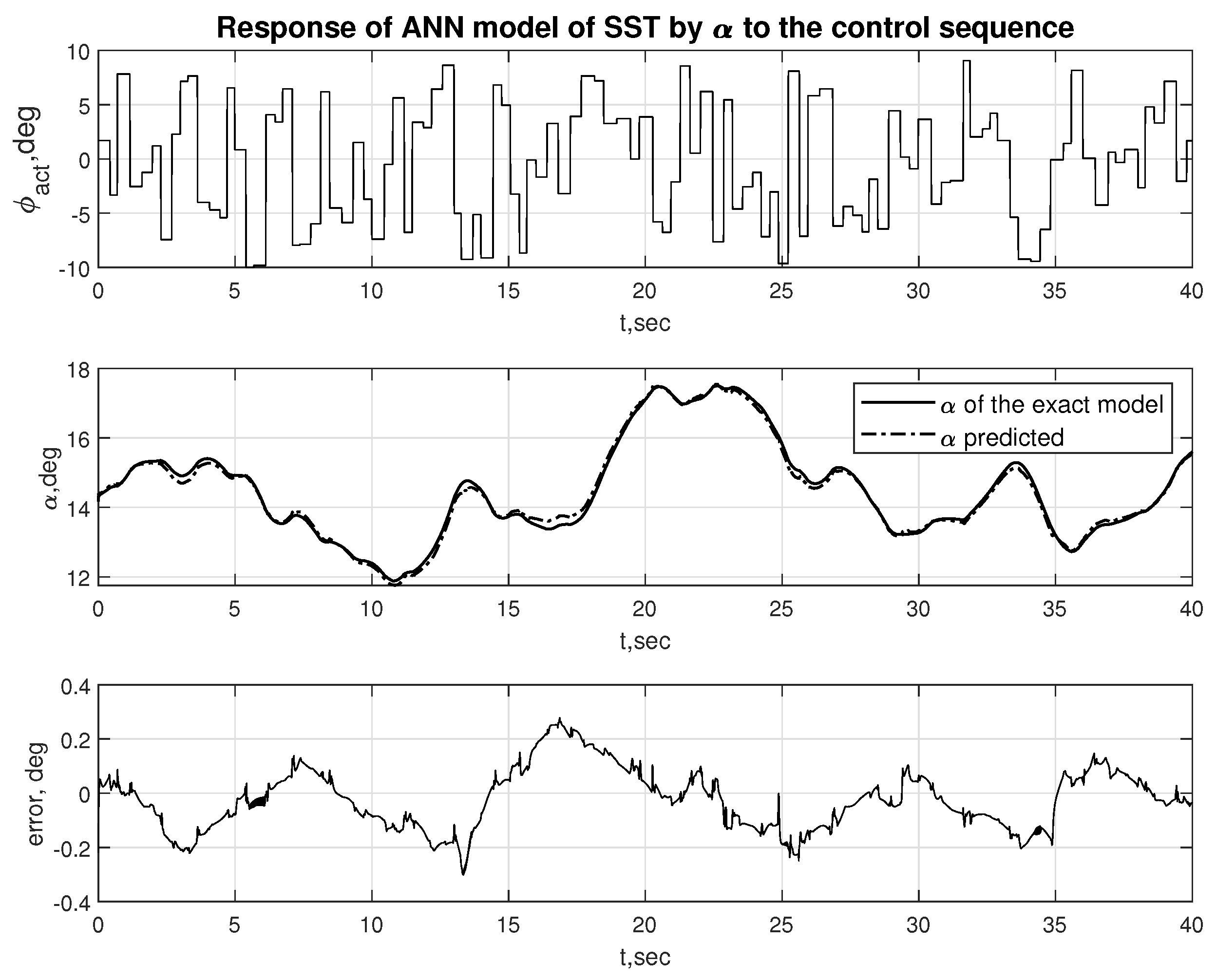

Figure 7 are obtained for 15 neurons in the hidden layer (the range of variation of this parameter from 10 to 20 was investigated) and for two delays in the TDL element (the range of variation of this parameter from 1 to 10 was investigated). Each iteration of the training process, called a training epoch, consists of running through the network all examples from the training set, which contains 2000 examples (time interval of 40 s, sampling step 0.02 s).
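The network structure described above can be sketched as follows. This is a minimal illustration, assuming scalar input and output signals, randomly initialized weights, and no training; the class name `NarxSketch` and its methods are hypothetical and do not reproduce the implementation used to obtain the reported results.

```python
import math
import random


class NarxSketch:
    """One-hidden-layer NARX model with tapped delay lines (TDL)."""

    def __init__(self, n_hidden=15, n_delays=2, seed=0):
        rng = random.Random(seed)
        n_in = 2 * n_delays  # delayed outputs plus delayed controls
        self.n_delays = n_delays
        self.w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                         for _ in range(n_hidden)]
        self.b_hidden = [0.0] * n_hidden
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b_out = 0.0

    def step(self, y_past, u_past):
        """Predict y(k) from the TDL contents y(k-1..k-n) and u(k-1..k-n)."""
        x = list(y_past) + list(u_past)
        # Hidden layer: linear input part followed by hyperbolic tangent.
        hidden = [math.tanh(sum(w * v for w, v in zip(row, x)) + b)
                  for row, b in zip(self.w_hidden, self.b_hidden)]
        # Output layer: linear neuron.
        return sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out

    def simulate(self, u_seq, y_init):
        """Run the model with its own predictions fed back through the TDL."""
        n = self.n_delays
        y = list(y_init)  # the first n outputs are given
        for k in range(n, len(u_seq)):
            y_past = y[k - n:k][::-1]      # most recent value first
            u_past = u_seq[k - n:k][::-1]
            y.append(self.step(y_past, u_past))
        return y


model = NarxSketch(n_hidden=15, n_delays=2)
u_seq = [0.0] * 5 + [1.0] * 5
y_hat = model.simulate(u_seq, y_init=[0.0, 0.0])
```

In `simulate` the model output is fed back, while `step` can equally be called with measured plant outputs in the delay line; which of the two modes is used during training is a design choice not fixed by this sketch.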

The training of the considered ANN model is performed in the standard way [

62,

63] as a process of minimizing the sum of squares of errors

over the whole training sequence of length

N:

Here,

represents the output values of the modeled object (they are taken from the training set), and

is the output estimate obtained by the ANN model for the current value of the set of its adjustable parameters

w.

3.2. Example of an Empirical Neural Network Model of Aircraft Motion

As an example, let us consider one of the problems of modeling the motion of controlled dynamical systems that we have solved, namely, the problem of controlling the longitudinal angular motion of a supersonic transport plane (SST) [

118,

119]. In flight dynamics, such motion is described by a mathematical model of the following form [

33,

36]:

where

is the angle of attack, deg;

q is the pitch angular velocity, deg/s;

is the deflection angle of the elevator, deg;

is the lift coefficient;

is the pitching moment coefficient;

m is the mass of the aircraft, kg;

V is the airspeed, m/s;

is the airplane dynamic pressure;

is the mass air density, kg/m³;

g is the acceleration of gravity, m/s²;

S is the wing area of the aircraft, m²;

c is the mean aerodynamic chord, m;

is the pitching moment of inertia, kg·m². Dimensionless coefficients

and

are nonlinear functions of angle of attack;

T and

are the time constant and relative damping factor for the elevator actuator; and

is the command signal value for the elevator actuator limited by

.

In the model (

31), variables

,

q,

, and

are aircraft states; variable

is aircraft control.

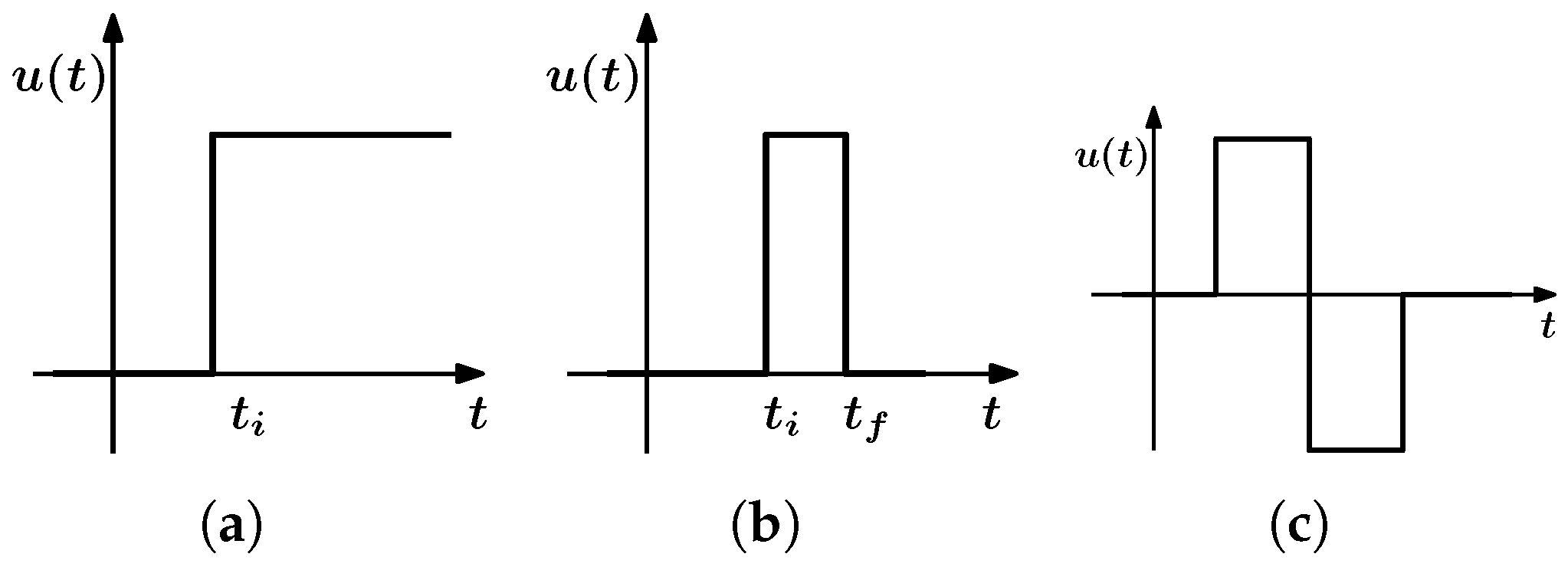

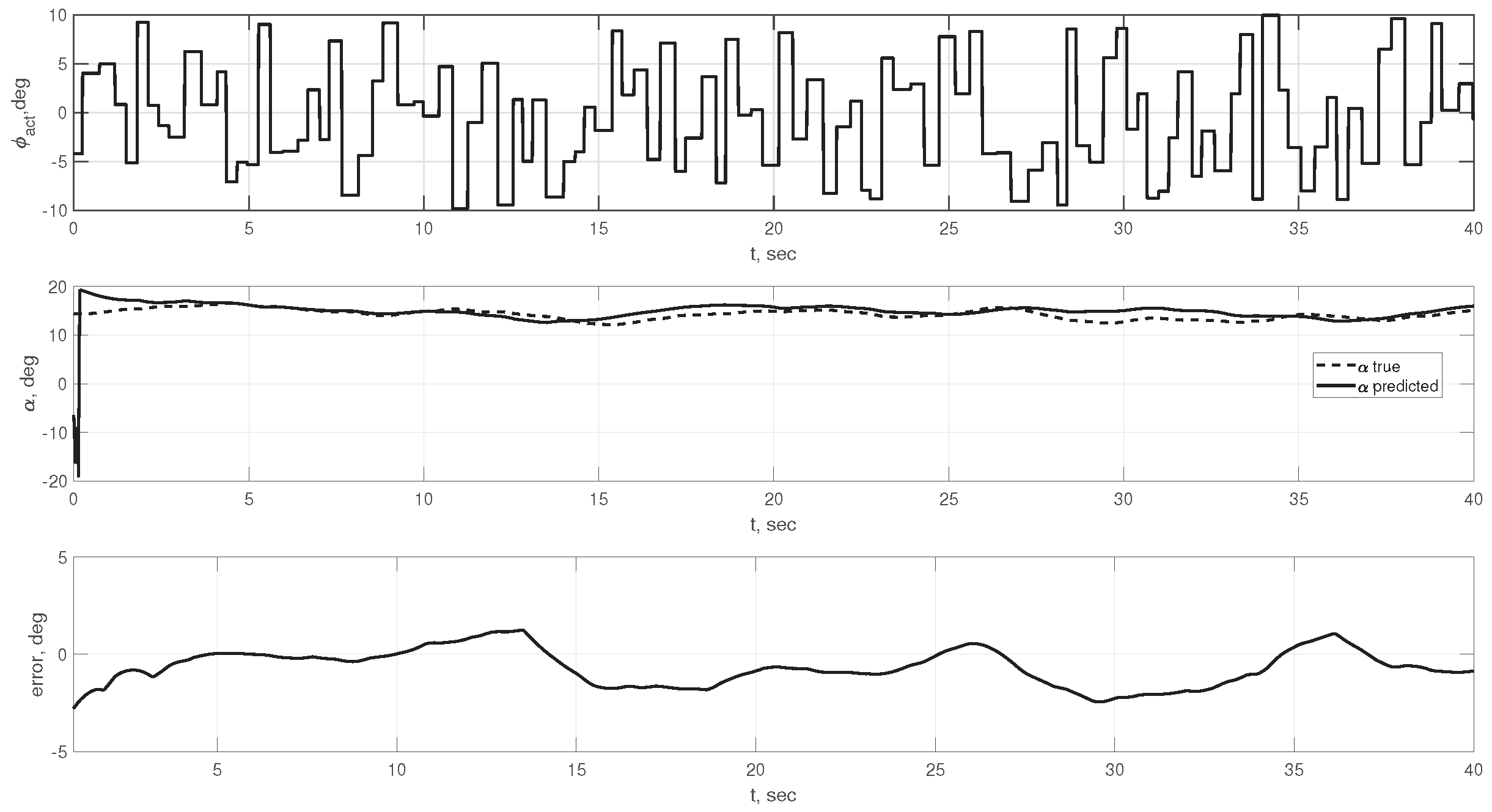

The source data for training of the considered ANN model were generated using a random step signal

fed to the input of the dynamical system. This signal is a sequence of steps with the following parameter values: elevator deflection angle in the range from

to 10 degrees, each held for a time from 0.25 to 0.5 s. The time step was chosen to be 0.01 s. The total length of the sequence was 40 s. An example of such a signal is presented in the upper part of

Figure 7.
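A random step signal of this kind can be generated along the following lines. This is a sketch under stated assumptions: the lower amplitude bound `amp_min` is set to -10 degrees purely for illustration, the levels and hold times are drawn uniformly, and the function name `random_step_signal` is hypothetical.

```python
import random


def random_step_signal(t_end=40.0, dt=0.01, amp_min=-10.0, amp_max=10.0,
                       hold_min=0.25, hold_max=0.5, seed=0):
    """Piecewise-constant command signal with random levels and hold times.

    amp_min is an assumed value; the other defaults follow the text.
    """
    rng = random.Random(seed)
    n_total = round(t_end / dt)
    signal = []
    while len(signal) < n_total:
        level = rng.uniform(amp_min, amp_max)  # elevator command, deg
        hold = max(1, round(rng.uniform(hold_min, hold_max) / dt))
        signal.extend([level] * hold)          # keep the level constant
    return signal[:n_total]


u_cmd = random_step_signal()
print(len(u_cmd))  # prints: 4000
```

Fixing the seed makes the excitation reproducible, which is convenient when several network variants are to be compared on the same data.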

The system under study responds to the input signal

by changing its state

. The pair

in this case is the source information for generating a set of training examples. Each of the examples

p included in the training set

P should show the reaction of the system to some combination

. By such a response we mean the state

to which the system (

31) will move from the state

at the value

of the control action (see also

Figure 2):

Thus, the example

p from the training set

P will include two parts, namely, the input (this is the pair

) and the output (this is the response of the system

); see also

Figure 2.
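The construction of training examples described above amounts to pairing each state and control value with the state at the next time instant. A minimal sketch, assuming the state and control histories are stored as equally long lists (the function name `build_training_set` is hypothetical):

```python
def build_training_set(states, controls):
    """Pair each input (x(t_k), u(t_k)) with the response x(t_{k+1})."""
    if len(states) != len(controls):
        raise ValueError("states and controls must have the same length")
    return [((states[k], controls[k]), states[k + 1])
            for k in range(len(states) - 1)]


# Toy data: three recorded states and the controls applied at each instant.
examples = build_training_set([0.0, 1.0, 3.0], [1.0, 2.0, 0.0])
print(examples)  # prints: [((0.0, 1.0), 1.0), ((1.0, 2.0), 3.0)]
```

A history of N samples yields N - 1 examples, because the last recorded state has no successor.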

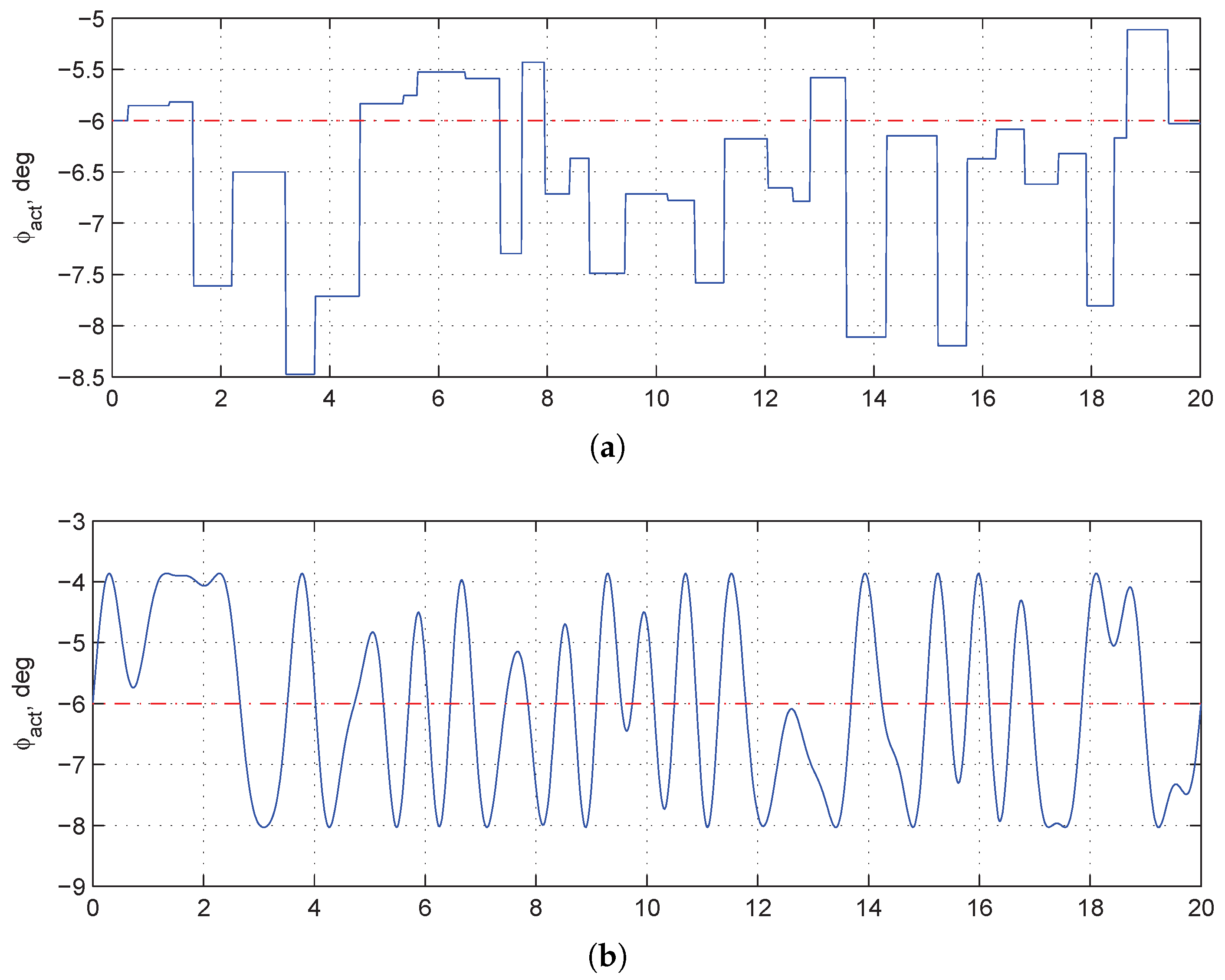

To implement the scheme described above, a number of NARX network variants with different numbers of hidden-layer neurons, delays, and training epochs were tried. The results for one of these variants are shown in

Figure 7.

As can be seen from

Figure 7, the modeling error reaches unacceptable values at some time instants. Different combinations of values for the network and training process parameters did not improve the results. In particular, increasing the delay parameter (i.e., the depth of the prehistory taken into account in (

29)) required a sharp increase in the training time. Within the given constraints on computational resources, this requirement could not be met.

It should be noted here that such intensive elevator operation, i.e., sharp and frequent changes in the command signal

of the elevator actuator, is very unlikely in real practice. In other words, we obtain training data “with a large margin” for aircraft operation modes, i.e., under conditions much more severe than they will be in real flight. Nevertheless, these data allow us to understand the limits of the capabilities of ANN models of the black-box type for the considered class of modeling tasks. Our experience in building and using such models for aircraft of various classes shows [

120,

121,

122,

123] that the threshold complexity of the flight dynamics problem for such models in most cases is the longitudinal angular motion problem (

31) just considered. In more complicated problems, as a rule, it is not possible to obtain acceptable generalization properties from these models.

Within the considered approach, we formed and used ANN models of the black-box type for aircraft of different classes [

120,

121,

122,

123,

124]. As noted above, the threshold complexity for such models is in most cases the longitudinal angular motion problem (

31); in more complicated problems, they rarely achieve acceptable generalization, i.e., reasonable accuracy on test data. The same holds for dynamical systems of other classes with a similar level of complexity, which is determined by the total number of state and control variables, the ranges of variation of these variables, and the number of samples in each of these ranges.

As noted in the introduction, one possible way to overcome this limitation is to move from purely neural network recurrent models of the black-box type to hybrid ANN models of the gray-box type. These issues are discussed in the next section, which compares empirical and semi-empirical ANN models of controllable dynamical systems. In the same section, two more examples of ANN models of the black-box type are discussed. The results obtained for them are compared with the results for semi-empirical ANN models for the same controlled objects.

4. Semi-Empirical Models of Controlled Dynamical Systems

In this section, as in the previous one, we consider the problem of mathematical and computer modeling of nonlinear controllable dynamical systems operating under numerous and diverse uncertainties, including uncertainties associated with insufficient and inaccurate knowledge about the modeling object and its operating conditions.

As shown earlier, empirical ANN models are seriously limited in the complexity of the problems they can solve. This is due to the high dimensionality of such models, i.e., the large number of connections in them, which calls for numerous training examples. The problem therefore arises of reducing the dimensionality of the model without degrading its flexibility. One possible way to solve this problem is to move to semi-empirical networks that combine the capabilities of theoretical and neural network modeling.

This area already has a long history [

49,

50,

51,

52,

53,

54,

55]. Its premises are as follows. Sometimes the available data on the behavior of the object under study are not sufficient to obtain a model of the required accuracy. At the same time, we often also have theoretical knowledge about this object, which in the case of a dynamical system takes the form of a system of differential equations. When forming an empirical model, we do not use this knowledge at all. When this approach fails to meet the accuracy requirements, a natural question arises: can the available theoretical knowledge about the object be brought in to meet them? The answer to this question is hybrid models of the gray-box type. Such models can be obtained for both uncontrolled and controlled dynamical systems.

In particular, a field called physics-informed neural networks (PINN) is being actively developed [

125,

126,

127,

128,

129,

130,

131,

132,

133]. This field is mainly focused on modeling uncontrolled dynamical systems for which the available theoretical knowledge is expressed in the form of ordinary differential equations or partial differential equations. Even if the system under consideration contains control variables, it is generally assumed that their form is specified. This approach, despite all its advantages, is of limited use for obtaining models of controllable dynamic systems oriented to the needs of adaptive control systems.

We are developing an alternative variant of the gray box models [

56,

57,

58,

59,

60,

61,

123,

134,

135,

136], which we call semi-empirical because of their hybrid nature. They are based on an empirical black-box model (a recurrent neural network), which ensures the model’s adaptability. At the same time, knowledge about the modeled object, in the form of a system of ordinary differential equations, is embedded in such a network. This approach, as will be demonstrated below, allows us to drastically reduce the size of the resulting model and substantially raise the complexity threshold of the modeling and identification problems that can be solved.

4.1. Semi-Empirical Model Development

The formation of semi-empirical ANN models consists of the following steps [

56,

57,

58]:

1. Obtaining a theoretical continuous-time model for the dynamical system under study and collecting available experimental data on the behavior of this system;
2. Accuracy assessment for the theoretical model of the dynamical system on the available data; if its accuracy is insufficient, hypothesizing about the reasons for it and possible ways to eliminate them;
3. Transformation of the source continuous-time model into a discrete-time model;
4. Formation of neural network representation for the obtained discrete-time model;
5. Training of the neural network model;
6. Evaluation of the accuracy for the trained neural network model;
7. Correction, in case of insufficient accuracy, of the neural network model by making structural changes in it.

Let us assume that the source theoretical model of the object under consideration has already been obtained in one way or another. A typical situation is when such a model is a system of differential equations. In particular, this is the case in the flight dynamics of aircraft. Since this is a continuous-time model and its implementation is carried out in a digital environment, the first step is to discretize the source model. This operation is necessary to obtain a dynamical system with discrete time, for which the corresponding recurrent neural network is built. The choice of the discretization method [

42,

43,

44,

45] plays an important role since the consequences of this choice affect the stability of the resulting discrete-time model. The algorithmic basis for discretization of continuous-time models is numerical methods for solving ordinary differential equations (ODE) combined with experience in solving various kinds of such problems. The transition to discrete time in the problem (

2) can be based on almost any of the known methods of numerical integration for ODE systems [

42,

43,

44,

45]. As an example, to compare semi-empirical models with empirical ones, we will use two explicit difference schemes, namely the 1st order Euler scheme and the 4th order Adams scheme:

In (

32) and (

33), the following notations are used:

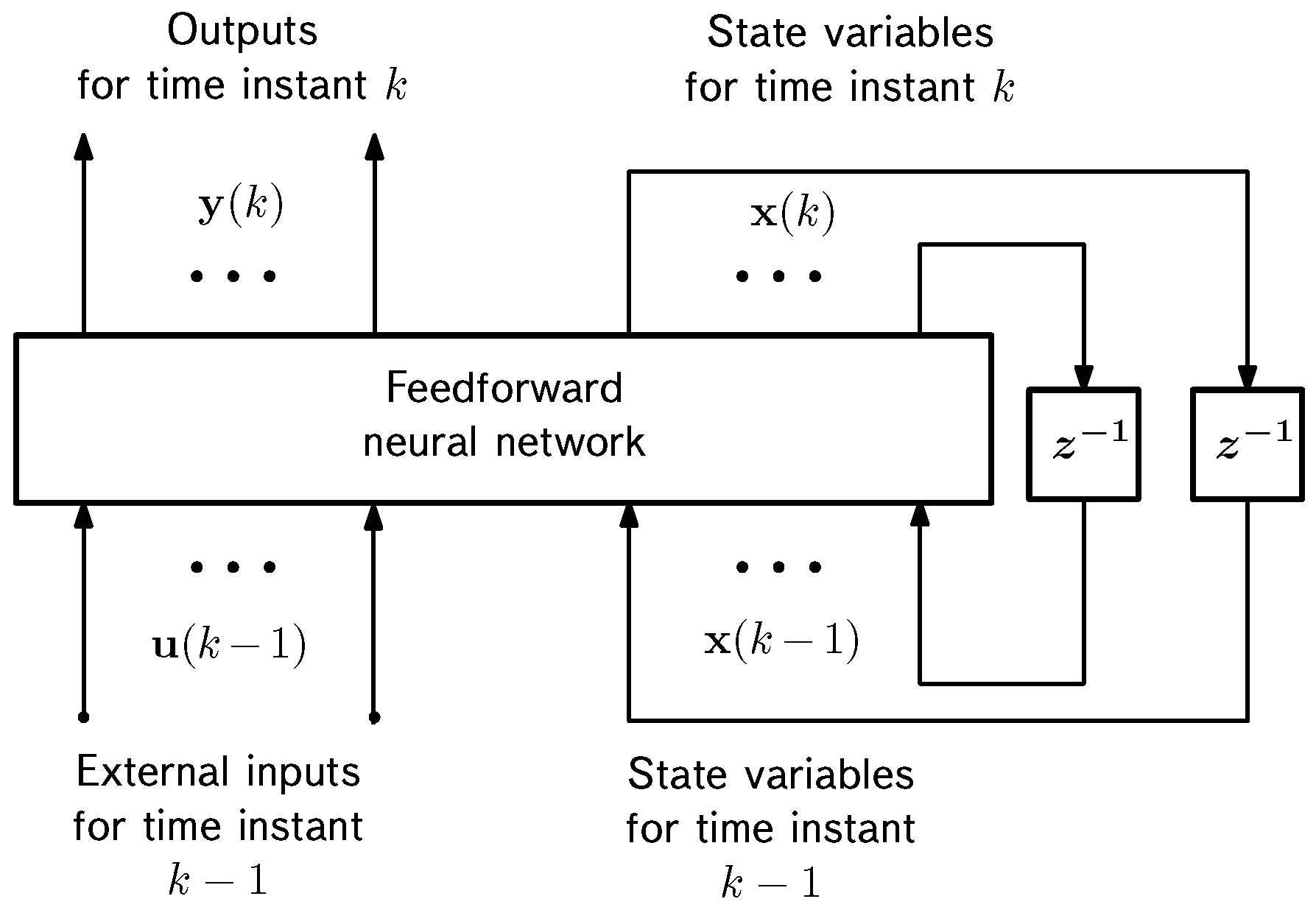

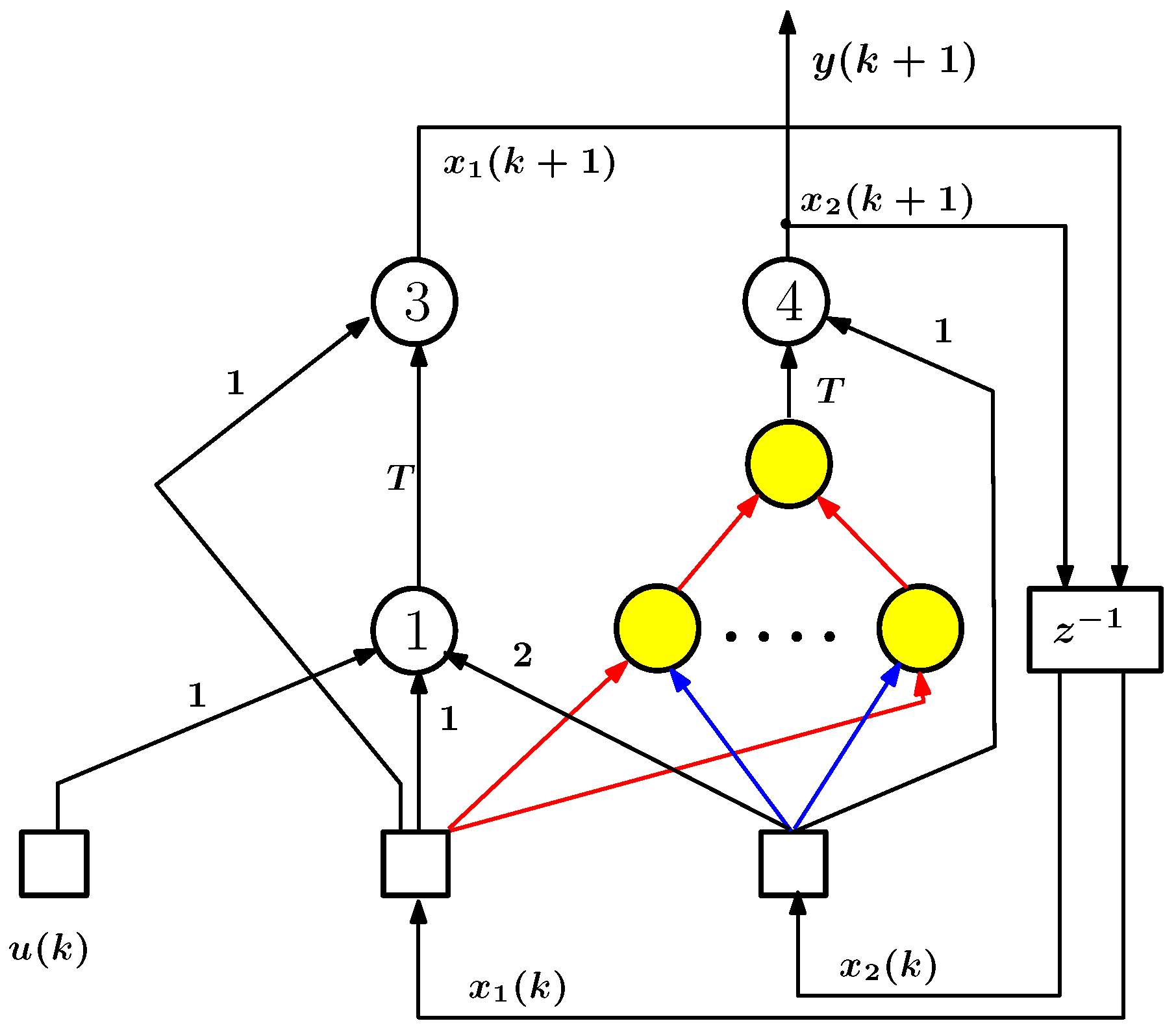
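The two difference schemes can be illustrated with a short sketch for a scalar state. It assumes that the fourth-order Adams scheme is the standard four-step Adams–Bashforth formula with coefficients 55, -59, 37, and -9 over 24, and it starts the multistep scheme with Euler steps, which is a simplification; the function names are hypothetical.

```python
import math


def euler_step(f, x, u, h):
    """First-order explicit Euler step."""
    return x + h * f(x, u)


def adams4_step(f_hist, x, h):
    """Four-step Adams-Bashforth step; f_hist = [f_k, f_{k-1}, f_{k-2}, f_{k-3}]."""
    f0, f1, f2, f3 = f_hist
    return x + h / 24.0 * (55.0 * f0 - 59.0 * f1 + 37.0 * f2 - 9.0 * f3)


def integrate(f, x0, u_seq, h, scheme="euler"):
    """Integrate dx/dt = f(x, u) over the control sequence u_seq."""
    xs = [x0]
    f_hist = []
    for u in u_seq:
        x = xs[-1]
        f_hist = [f(x, u)] + f_hist[:3]  # keep the four latest right-hand sides
        if scheme == "adams" and len(f_hist) == 4:
            xs.append(adams4_step(f_hist, x, h))
        else:
            # Euler is also used to start up the multistep scheme.
            xs.append(euler_step(f, x, u, h))
    return xs


def decay(x, u):
    """Test system dx/dt = -x, whose exact solution is exp(-t)."""
    return -x


h, n = 0.01, 100
exact = math.exp(-h * n)
err_euler = abs(integrate(decay, 1.0, [0.0] * n, h, "euler")[-1] - exact)
err_adams = abs(integrate(decay, 1.0, [0.0] * n, h, "adams")[-1] - exact)
print(err_euler, err_adams)
```

On this test system the Adams variant gives a noticeably smaller error than Euler for the same step, at the cost of storing three earlier right-hand-side values, which in the network representation corresponds to additional delay elements.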

It is well known that any mathematical model or algorithm can be represented as a network structure. In the case under consideration, which involves ordinary differential equations and algorithms for their numerical integration, this structure has a recurrent form similar to that of recurrent neural networks. Different source continuous-time models will lead to a variety of recurrent networks. This raises the problem of unifying such networks, whose solution avoids the need to build a separate training algorithm for each network. Such a solution was proposed in [

51,

53]. It is based on reducing the original model to some “canonical” form. This problem can be solved for any recurrent neural network, and its final canonical form will be the minimum complexity model in the state space. The canonical form of the recurrent neural network is given in

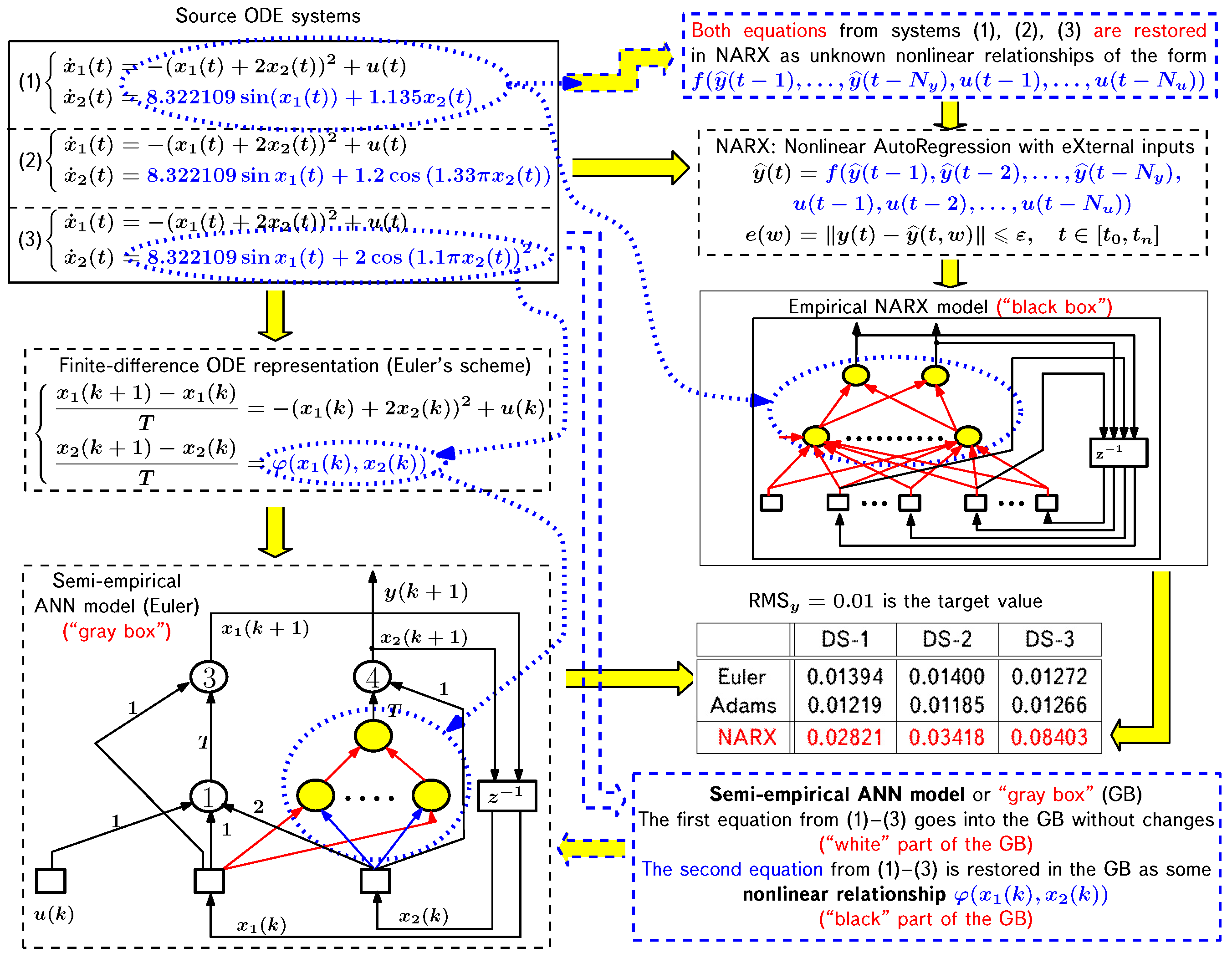

Figure 8, which shows that the core of this representation is a feedforward neural network, and all feedbacks available in the model are external to the core of this model and contain only unit delays.

4.2. Semi-Empirical Model of a Simple Dynamical System

In order to compare the capabilities of the empirical and semi-empirical models, we consider two examples. The first one involves a dynamical system described by the following equations:

The dynamical system defined by these relations uses part of the example given in [

52] as a prototype. This example is not a model of any particular system; it is purely formal (in [

52] it is referred to as “a didactic example”). The purpose of the system (34), (35) is to illustrate the procedure of semi-empirical model formation, as well as its superiority over a purely empirical neural network model in the form of the NARX network.

In problems (

34) and (

35), the adopted discretization schemes allow us to obtain the canonical representation of the network either immediately (for the explicit Euler scheme (

32),

Figure 9) or after minor adjustments to the initial non-canonical version (for the explicit Adams scheme (

33),

Figure 10). Some graphical simplification is introduced in

Figure 10 in order not to clutter this figure with redundant elements. Namely, the simplified form shows the formation of the Adams scheme from the values of

,

,

, and

, which are vector functions of the right-hand sides of the equations of motion. Detailed information about the order of formation of this scheme can be obtained from the relation (

33) describing it.

Equations (

34) and (

35) will be used to obtain training and test data in the manner described above. Once these data are obtained, they are used to form a model of the system under consideration, which will be either empirical (black box) or semi-empirical (gray box). In the case of the black-box type model (this is the NARX network), it is assumed that the kinds of both relations (

34) and (

35) are unknown to us (see

Figure 11). For the gray-box model, we will assume that only the kind of relation (

35) is unknown, while Equation (

34) represents the available theoretical knowledge about the dynamical system under consideration. In such a situation, we deal with a source model of this kind:

where

is the unknown relationship that needs to be reconstructed during the training of the gray-box model using the available experimental data on the behavior of the dynamical system under consideration. As mentioned above, the results of the integration of Equations (

34) and (

35) with given initial conditions, integration step, and control function

are used as these data.

To obtain a gray-box neural network model, including Equation (

34) and the relation corresponding to the unknown second equation in the model under consideration, two steps are required. The first step consists of going from the original continuous-time model, i.e., the differential equations, to the discrete-time model, i.e., the difference equations. For this purpose, we will use two explicit difference schemes, namely the 1st order Euler scheme (

32) and the 4th order Adams scheme (

33).

We consider a system (

36) on a time interval

with a sampling step

s and initial conditions

. The state vector is partially observable:

, with additive white noise with root-mean-square (RMS) deviation

affecting the system output

.

Ideally, when the neural network model reproduces the original system of differential equations (

34) and (

35), the modeling error will be completely determined by the noise affecting the system output. It follows that the comparison of the modeling error with the noise RMS allows us to estimate the degree of success in solving the modeling problem of the system under consideration, and the given value of the noise RMS can be taken as the target value of the modeling error.

Training was carried out in MATLAB for Layered Digital Dynamic Networks (LDDN) using the algorithm common to this class of networks [

63]. The Levenberg–Marquardt algorithm is used to optimize the network parameters, and the Jacobian matrix is calculated using the RTRL (Real-Time Recurrent Learning) algorithm [

46]. The RMS prediction error of the model on the training sample is used as an optimization criterion:

where

N is the number of training set elements,

is the vector of target values of the observed variable (according to the model (

34) and (

35)), and

is the vector of outputs of the neural network model.
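The criterion can be sketched as follows, assuming it has the usual form of the square root of the mean squared norm of the difference between target and model output vectors; the function name `rms_error` is hypothetical.

```python
import math


def rms_error(targets, outputs):
    """Root-mean-square prediction error over N vector-valued samples."""
    n = len(targets)
    total = 0.0
    for y, y_hat in zip(targets, outputs):
        # Squared Euclidean norm of the error at one time instant.
        total += sum((a - b) ** 2 for a, b in zip(y, y_hat))
    return math.sqrt(total / n)


# Two scalar samples with errors 3 and 4 give sqrt((9 + 16) / 2).
print(rms_error([[0.0], [0.0]], [[3.0], [4.0]]))
```

Because the ideal model leaves only the measurement noise as error, the value returned by such a function is naturally judged against the noise RMS taken as the target.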

In the case under consideration, the kind of relation (

35) shows that the recoverable right-hand side of the second equation of this model must be nonlinear and have both state variables,

and

, as arguments. In the general case, however, these facts are not known to us. Therefore, we need to make a sequence of hypotheses concerning the kind of right-hand side of the second equation in (

36). These hypotheses should be ordered by the complexity of the resulting relation: on which variables it depends and whether this relation will be linear or nonlinear on the selected variables. The reasonableness of this approach is determined by the fact that as the complexity of this relationship increases, the number of connections in the neural network that will reproduce it also increases. According to the well-known result [

46,

63], the dimensionality of the network (i.e., the number of connections in it) and the dimensionality of the training set (the number of examples in it) are strictly related. Consequently, using more complicated relations for the right-hand side of the second equation in (

36) will require a larger number of training examples and thus more time to train the model. It is for this reason that one should introduce a sequence of hypotheses regarding the kind of relationship to be recovered and then experimentally determine the lowest-complexity relationship that provides the required modeling accuracy.

In the considered example, we omit all intermediate steps and define the reconstructed relation as a nonlinear function of two variables,

, which depends on both state variables

and

but does not depend on the control variable

. The structural diagram of the network corresponding to this variant of the ANN model for the Euler discretization scheme is shown in

Figure 9; similarly, it can be obtained in the case of the Adams scheme (

Figure 10). The accuracy of the obtained model (denoted as DS-1 in

Figure 11), estimated by the relation (

37), is 0.01394 for the Euler method and 0.01219 for the Adams method, with the target value of this index equal to 0.01.
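The structure of such a gray-box model with Euler discretization can be sketched as follows. The sketch only illustrates the idea of combining a frozen theoretical part with a trainable module: the function `known_rhs` is a hypothetical stand-in for the known first equation, and the class `TinyMLP` with three hidden neurons is an arbitrary untrained example, not the network used to obtain the reported accuracy.

```python
import math
import random


class TinyMLP:
    """Feedforward net with one tanh hidden layer and a linear output."""

    def __init__(self, n_in, n_hidden, seed=0, scale=0.1):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-scale, scale) for _ in range(n_in)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-scale, scale) for _ in range(n_hidden)]
        self.b2 = 0.0

    def __call__(self, *inputs):
        hidden = [math.tanh(sum(w * v for w, v in zip(row, inputs)) + b)
                  for row, b in zip(self.w1, self.b1)]
        return sum(w * h for w, h in zip(self.w2, hidden)) + self.b2

    def n_params(self):
        """Number of adjustable parameters (weights and biases)."""
        return sum(len(r) for r in self.w1) + len(self.b1) + len(self.w2) + 1


def known_rhs(x1, x2, u):
    """Hypothetical known first equation; it has no trainable parameters."""
    return -x1 + x2 + u


def graybox_euler_step(x, u, h, net):
    """One Euler step: theory for the first state, network for the second."""
    x1, x2 = x
    dx1 = known_rhs(x1, x2, u)  # frozen theoretical part
    dx2 = net(x1, x2)           # unknown right-hand side, to be trained
    return (x1 + h * dx1, x2 + h * dx2)


net = TinyMLP(n_in=2, n_hidden=3)
x = (0.0, 0.0)
for u in [1.0] * 5:
    x = graybox_euler_step(x, u, 0.01, net)
print(net.n_params())  # prints: 13
```

Only the parameters of the small module are adjusted during training, which is the source of the reduction in model size compared with a black-box network that has to represent both equations.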

To compare with the traditional approach, we also trained a NARX model. Its lowest RMS error in this example, 0.02821, was achieved by a network with three neurons in the hidden layer and five delays in the feedback. This comparison shows a clear advantage of the semi-empirical model over the empirical one in achievable accuracy: even with the Euler scheme, the error is 0.01394 versus 0.02821 for NARX. With the Adams scheme, the error is lower still, 0.01219, and it may be reduced further by using more efficient difference schemes.

An analysis similar to the one described above was also performed for two more variants of the considered system (

34) and (

35), denoted as DS-2 and DS-3 in

Figure 11. First, instead of Equation (

35), this system uses its complicated version with a mixture of harmonics on the right-hand side:

In the second variant, Equation (

35) is replaced by a relation that contains a more complex mixture of harmonics than in (

38):

The results of calculations for these variants, including for the NARX model, are presented in

Figure 11.

A comparison of the experimental results for all three examples allows us to draw the following conclusions.

The kind of difference scheme used influences the accuracy of modeling. In this regard, the problem of choosing an adequate (the best in the ideal case) discretization scheme for a particular applied problem to be solved arises.

In order to eliminate this kind of influence of the difference scheme on the obtained result, we have proposed and implemented an alternative variant of the formation and training of the semi-empirical model [

60]. The essence of this variant is as follows.

Section 4.1 demonstrates the sequence of steps performed during semi-empirical modeling. It shows that the transformation of the original continuous-time model into a discrete-time model precedes the formation of a neural network representation for the resulting discrete-time model, as well as its training. This means that if the difference scheme used for discretization is unsuccessful, it must be replaced by another one. It is not excluded that this operation will have to be repeated many times to obtain the desired result. Each such replacement must be accompanied by training of the resulting semi-empirical model, and this requires a significant computational resource consumption. In an alternative variant, training is performed for the source model in continuous time, and only after that is its discretization, necessary for operation in a digital environment, performed. In this case, the algorithm for training the model in continuous time is based on the method of sensitivity functions, long known in automatic control theory [

137]. Of course, training a continuous-time model is more time-consuming than training a discrete-time model, but it has to be carried out only once rather than repeatedly. The final choice between the discrete and continuous training options depends on how many times the difference scheme is expected to be reselected in the first of these two options.

The second important conclusion is related to the comparison of results for empirical and semi-empirical models. It can be seen that semi-empirical models are many times more accurate than purely empirical ones. At the same time, the gap in modeling accuracy increases with the complexity of the behavior of the system under consideration. In this regard, in those cases where the accuracy obtained from the empirical model is insufficient and there is theoretical knowledge about the modeling object, the transition to a model of a semi-empirical type has a significant effect.

4.3. Semi-Empirical Model of Longitudinal Angular Motion of an Aircraft

The basic idea linking the examples discussed below is as follows. We want to solve more than the problem of system identification, in which we have experimental data on the inputs to the system and its reactions to them, and the model that converts inputs to outputs is a black box. We are more interested in the very important task of identifying the system characteristics, which for an aircraft are, first of all, its aerodynamic characteristics. If we are able to solve this problem, then, by substituting the obtained relations into the equations of motion, we obtain a model of the aircraft as a control object as a whole. To solve this problem within the framework of the semi-empirical approach, we form a hybrid model of motion based on one of the traditional models of flight dynamics in the form of a system of ordinary differential equations. We transform it into a network structure that includes, as adjustable elements, feedforward subnets implementing the nonlinear functions that describe the corresponding dimensionless coefficients of aerodynamic forces and moments. All other elements of this network structure, i.e., those outside the subnets for the aerodynamic coefficients, receive the numerical values of their connection weights from the source motion model in the form of ODEs, after which these weights are frozen. Thus, we obtain a gray-box model, which, unlike black-box models, has significantly fewer adjustable parameters, and this greatly simplifies the training of the model being formed.

The second of two examples that demonstrate the potential of a semi-empirical approach to modeling and identifying controllable dynamical systems involves the longitudinal angular motion of an airplane. We have obtained results that allow us to assess how effective this approach would be in comparison with empirical ANN models (NARX-type recurrent networks).

The theoretical model of aircraft motion is usually a system of first-order ordinary differential equations in normal form [

33,

34,

35]. For example, to describe the full angular motion of an aircraft with pitch, yaw, and roll control, a system of 14 such equations is used, which in general form looks as follows:

where

and

are state variables, and

and

are controls. Here,

,

, and

are roll, yaw and pitch angles;

p,

r, and

q are roll, yaw, and pitch angular rates;

and

are angle of attack and sideslip;

,

,

,

,

, and

are angles of deflection and angular rates for rudder, elevator, and ailerons;

,

, and

are command signals of actuators for elevator, rudder and ailerons.

The right-hand sides of the equations in the model (

40) contain the following dimensionless coefficients of aerodynamic forces and moments:

It is the incomplete and inaccurate knowledge of the nonlinear functions (

41) that is the main source of uncertainties that make it very difficult to obtain motion models of the kind (

40).

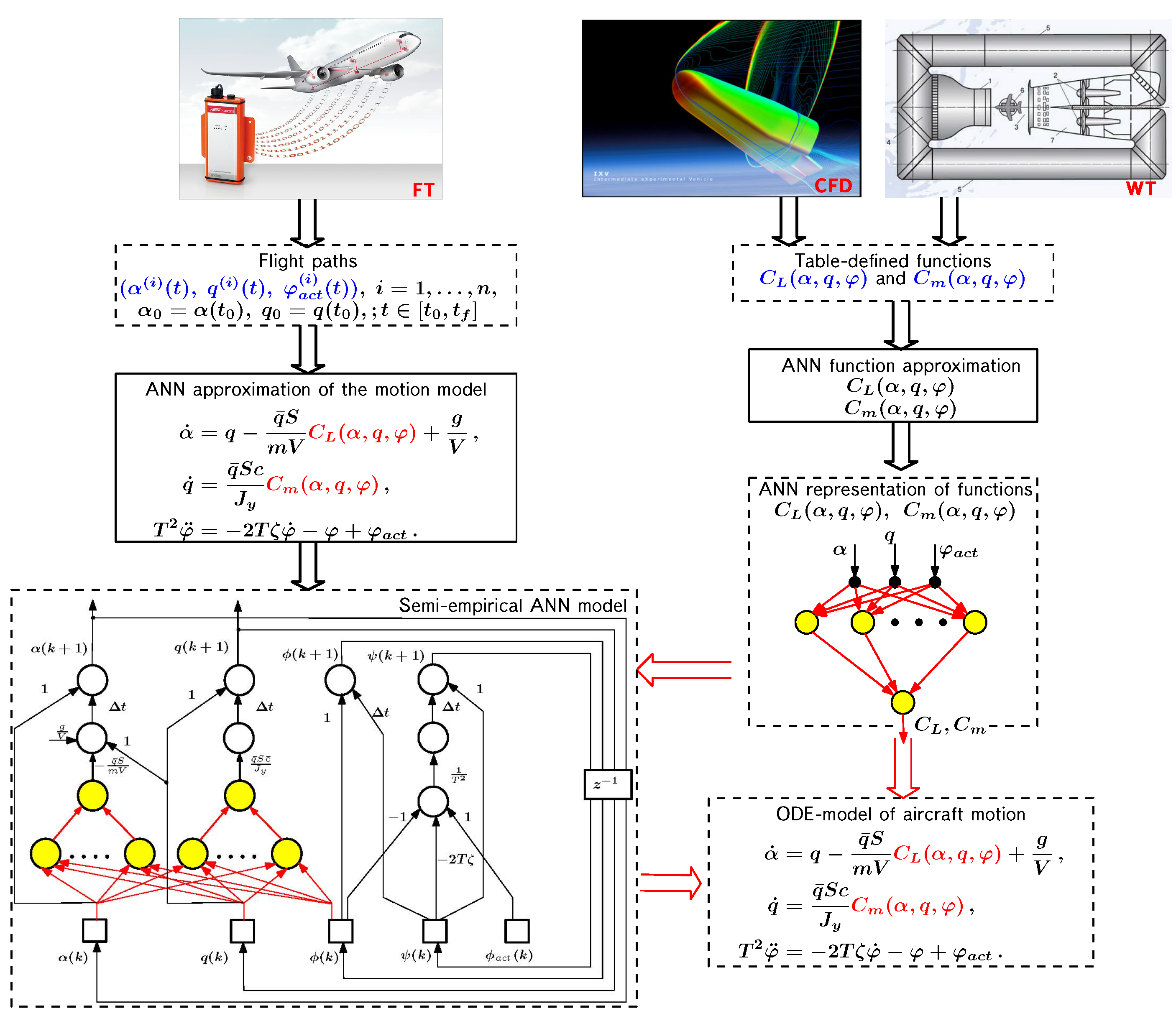

There are two approaches to the formation of dependencies (

41): direct and indirect. Let us illustrate the relationship between these two approaches with the example of the modeling problem for longitudinal angular motion of an airplane and identification of the lift force and pitching moment coefficients (see

Figure 12).

In the previous example, the model (

34), (

35) served as the source of training and test data for obtaining neural network models. Similarly, we will now use as such a source the model (

31) traditional for aircraft flight dynamics [

33,

34,

35], which we introduced earlier in the section on empirical ANN models of motion. As an example of a specific modeling object, we considered a maneuverable F-16 aircraft, the source data for which were taken from [

111]. A computational experiment with the model (

31) was conducted for a time interval

s with a sampling step

s for the partially observed state vector

, with additive white noise with standard deviation

affecting the output of the

system.

This motion model can be used in its original form, i.e., as a system of ordinary differential equations, or as a semi-empirical neural network model built based on these equations. In the first case, it is required to somehow specify the functions

and

, which are included in the right-hand sides of the equations (

31). In the second case, these functions are included as separate modules in a gray-box type neural network. These functions can be represented in various ways, including in the form of feedforward neural networks. The direct and indirect approaches mentioned above differ in the kind of available source data and the way of obtaining the required functions.

In the direct approach, we have data obtained from wind tunnel tests or computational fluid dynamics methods. The results obtained by these techniques take the form of tabulated functions (

41) that serve as a source of both training and test data. In this case, a separate feedforward neural network is formed for each of the aerodynamic coefficients. These networks, as separate functions, can then be inserted into the appropriate places in a model (

40) or similar, after which this model can be used in the traditional way as a system of differential equations. An example of such a substitution can be seen in

Figure 12.

In the indirect approach, as seen in

Figure 12, the input data are state and control variables obtained as functions of time during a flight or computer experiment (as in the previous example). Thus, unlike the direct approach, there are no tabulated functions (

41) at our disposal. We have only data characterizing the behavior of our object under given conditions, which is influenced by the values of aerodynamic forces and moments. If all other factors affecting the behavior of the aircraft are fixed, then in this case it is possible to set and solve the problem of identification for aerodynamic coefficients from the above-listed experimental data. It has already been mentioned above that neural network models of the semi-empirical type can solve this problem.

Figure 12 shows how such a model looks as applied to the longitudinal angular motion of an aircraft.

It should be emphasized that purely empirical models, in particular recurrent networks of the traditional kind, solve only the problem of system identification, i.e., they yield a model of the control object as a whole. Equally important, however, is the problem of identifying the characteristics of the object under consideration, for example, the coefficients of aerodynamic forces and moments in the case of an aircraft. Black-box models cannot solve problems of this kind. Gray-box models, i.e., semi-empirical models, can: they solve both the problem of system identification and the problem of characteristic identification.

This fact is of great importance, since the task of identifying the aerodynamic characteristics of an aircraft from experimental data is one of the most important for flight dynamics and control. The approach to solving this problem based on semi-empirical neural network modeling differs significantly from the traditional one.

Namely, the traditional approach is based on the linearization of functions (

41) using their Taylor series expansion with terms not higher than the first order. In this case, the quantities to be recovered in the identification problem are the expansion coefficients, which include the derivatives

,

etc. Moreover, this operation is performed “at a point”, i.e., for a particular combination of arguments of these functions and not for the whole area of their definition.
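As an illustration of what linearization "at a point" means, consider a coefficient that depends on two arguments. The notation below is assumed for this sketch and is not taken from the original equations: $C_m$ denotes the pitching moment coefficient, $\alpha$ the angle of attack, $\delta_e$ the elevator deflection, and $(\alpha_0, \delta_{e0})$ the chosen reference point.

$$
C_m(\alpha, \delta_e) \approx C_m(\alpha_0, \delta_{e0})
+ \left.\frac{\partial C_m}{\partial \alpha}\right|_{(\alpha_0,\,\delta_{e0})} (\alpha - \alpha_0)
+ \left.\frac{\partial C_m}{\partial \delta_e}\right|_{(\alpha_0,\,\delta_{e0})} (\delta_e - \delta_{e0})
$$

The identified quantities are the constant term and the two partial derivatives, all of which are valid only in a neighborhood of the reference point.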

At the same time, the semi-empirical approach recovers nonlinear functions of several arguments—

and

q—as holistic objects in the whole range of changes in the values of their arguments. As can be seen from

Figure 12, these functions are localized inside the semi-empirical model (they are highlighted in

Figure 12). Each of them has its own feedforward network. The training algorithm of this model selects the values of the connection weights of these networks. At the same time, the weights of other connections obtained from its theoretical part are frozen. After the training process is completed, the ANN modules realizing the functions (

41) can be extracted from the semi-empirical model and, if necessary, placed as ordinary functions in the model represented by the differential equations of motion. In particular, as shown in

Figure 12, the ANN modules for the functions

and

can be used in the right-hand sides of the equations describing the longitudinal angular motion of the airplane.

In some cases, we need the values of the partial derivatives of the restored functions. For example, the behavior of the partial derivative of the pitching moment coefficient with respect to the angle of attack provides useful information for flight dynamics and control specialists.

If necessary, the partial derivatives of these functions, which are available as ANN modules, can be computed using an algorithm similar to the error backpropagation algorithm used to train the ANN model as a whole.
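A minimal sketch of such a derivative computation, in Python with NumPy, is given below. The network size, the random weights, and the names `module` and `module_gradient` are assumptions chosen only for illustration. The backward sweep applies the chain rule from the output to the inputs, and a finite-difference check is included for comparison.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical ANN module: 2 inputs (e.g., alpha and delta_e), 6 tanh neurons, 1 output.
W1 = rng.normal(size=(2, 6))
b1 = rng.normal(size=6)
W2 = rng.normal(size=(6, 1))
b2 = rng.normal(size=1)

def module(x):
    """Forward pass: scalar output for an input vector x of shape (2,)."""
    return (np.tanh(x @ W1 + b1) @ W2 + b2)[0]

def module_gradient(x):
    """Partial derivatives of the output w.r.t. each input, by a backward sweep."""
    h = np.tanh(x @ W1 + b1)
    # d(out)/d(h) = W2[:, 0]; through tanh: multiply by (1 - h^2); to inputs: W1 @ (...)
    return W1 @ ((1.0 - h**2) * W2[:, 0])

x0 = np.array([0.1, -0.05])
grad = module_gradient(x0)

# Central finite differences as an independent check.
eps = 1e-6
fd = np.array([(module(x0 + eps * e) - module(x0 - eps * e)) / (2 * eps)
               for e in np.eye(2)])
```

Unlike training, the sweep differentiates with respect to the inputs rather than the weights, so the weights stay fixed and only one backward pass per output is needed.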

Applying to (

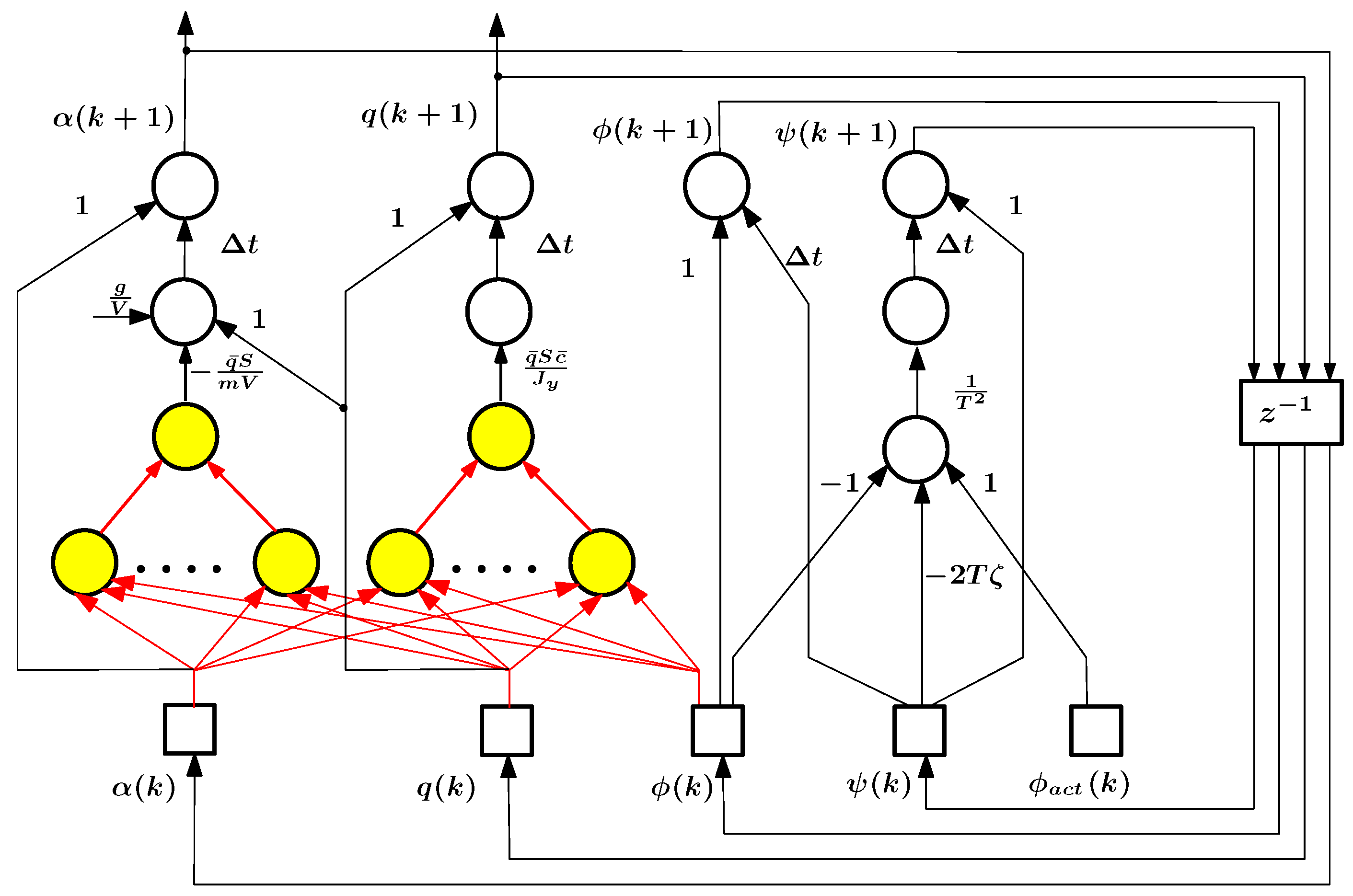

31) the above-mentioned procedure of forming a semi-empirical ANN model leads to the structure shown in

Figure 13 (it is based on the Euler discretization scheme). A similar structure for the case of a fully empirical model (NARX) corresponding to the same problem (

31) is shown in

Figure 14. For the case of the Adams scheme, a semi-empirical model can be constructed in a manner similar to that shown in

Figure 11 for the previous example.
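The idea of an Euler-discretized semi-empirical model can be sketched in Python with NumPy as follows. This is a toy two-state pitch model, not the system (31): the state layout, the constants `k_lift` and `k_moment`, and the linear stand-ins for the coefficient functions are all hypothetical. The point is only the structure, in which the theoretical part is fixed and the unknown functions enter as replaceable modules.

```python
import numpy as np

def euler_step(x, u, dt, c_lift, c_moment, k_lift=0.5, k_moment=4.0):
    """One Euler step of a toy pitch model with state x = [alpha, q] and control u."""
    alpha, q = x
    alpha_dot = q - k_lift * c_lift(alpha, u)   # fixed theoretical structure
    q_dot = k_moment * c_moment(alpha, u)       # fixed theoretical structure
    return np.array([alpha + dt * alpha_dot, q + dt * q_dot])

def simulate(x0, controls, dt, c_lift, c_moment):
    """Unroll the discrete-time model over a control sequence."""
    traj = [np.asarray(x0, dtype=float)]
    for u in controls:
        traj.append(euler_step(traj[-1], u, dt, c_lift, c_moment))
    return np.array(traj)

# Stand-ins for the modules that a semi-empirical model would learn.
c_lift = lambda a, d: 4.0 * a + 0.4 * d
c_moment = lambda a, d: -0.6 * a - 1.0 * d

x1 = euler_step(np.array([0.1, 0.0]), 0.0, 0.01, c_lift, c_moment)
traj = simulate([0.1, 0.0], np.zeros(100), 0.01, c_lift, c_moment)
```

In a semi-empirical model, `c_lift` and `c_moment` would be feedforward networks with trainable weights, while the arithmetic inside `euler_step` corresponds to the frozen connections derived from theory.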

As mentioned earlier, one of the critical issues in forming empirical and semi-empirical ANN models is obtaining a training set that adequately represents the behavior of the modeled system. Such a set is formed by designing an appropriate test control action for the modeled object and evaluating the object’s response to this action.

As in the previous example, we will use the RMS of additive noise acting on the system output as the target value of the modeling error.
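The reason the noise RMS is a natural target can be seen from a small numerical sketch in Python with NumPy. The signal, the noise level `sigma`, and the helper `rmse` are assumptions made for illustration. Even a model that reproduces the noise-free output exactly has an error, measured against noisy observations, equal to the noise RMS, so this level cannot be improved upon.

```python
import numpy as np

rng = np.random.default_rng(0)

sigma = 0.02                                   # hypothetical RMS of the additive output noise
t = np.linspace(0.0, 20.0, 20001)
true_output = 0.1 * np.sin(0.5 * t)            # noise-free system output (stand-in)
measured = true_output + rng.normal(0.0, sigma, t.size)

def rmse(pred, ref):
    """Root-mean-square error between two equally sized arrays."""
    return float(np.sqrt(np.mean((pred - ref) ** 2)))

# Error of a perfect model measured against the noisy observations.
noise_floor = rmse(true_output, measured)
```

A model whose test error approaches `sigma` is therefore as accurate as the measurements allow.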

The model under consideration is trained on the sample

, obtained using the source model (

31), steady-state straight-line horizontal flight as the reference mode, and a polyharmonic excitation signal. Training was performed in MATLAB for networks in the form of LDDN (Layered Digital Dynamic Networks) using the Levenberg–Marquardt algorithm and the MSE criterion. The Jacobian matrix was calculated using the RTRL (Real-Time Recurrent Learning) algorithm [

46].
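A polyharmonic excitation signal is a sum of sinusoids at harmonically related frequencies. The sketch below, in Python with NumPy, shows one way to generate such a signal. The number of harmonics, the amplitudes, the period, and the randomly drawn phases are placeholders chosen for illustration and do not reproduce the signal design used in the experiments.

```python
import numpy as np

def polyharmonic(t, period, amplitudes, phases):
    """Sum of sinusoids with frequencies k / period, k = 1..len(amplitudes)."""
    amplitudes = np.asarray(amplitudes, dtype=float)[:, None]
    phases = np.asarray(phases, dtype=float)[:, None]
    k = np.arange(1, amplitudes.shape[0] + 1)[:, None]
    return np.sum(amplitudes * np.sin(2.0 * np.pi * k * t[None, :] / period + phases),
                  axis=0)

rng = np.random.default_rng(0)
period = 20.0                                        # seconds, hypothetical
t = np.linspace(0.0, period, 2000, endpoint=False)   # one full period
amplitudes = np.full(5, 0.01)                        # radians, hypothetical
phases = rng.uniform(0.0, 2.0 * np.pi, 5)            # illustrative only

u = polyharmonic(t, period, amplitudes, phases)      # added to the trim control value
```

Because the signal contains only whole harmonics of the base period, it has zero mean over that period and therefore perturbs the reference flight mode symmetrically.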

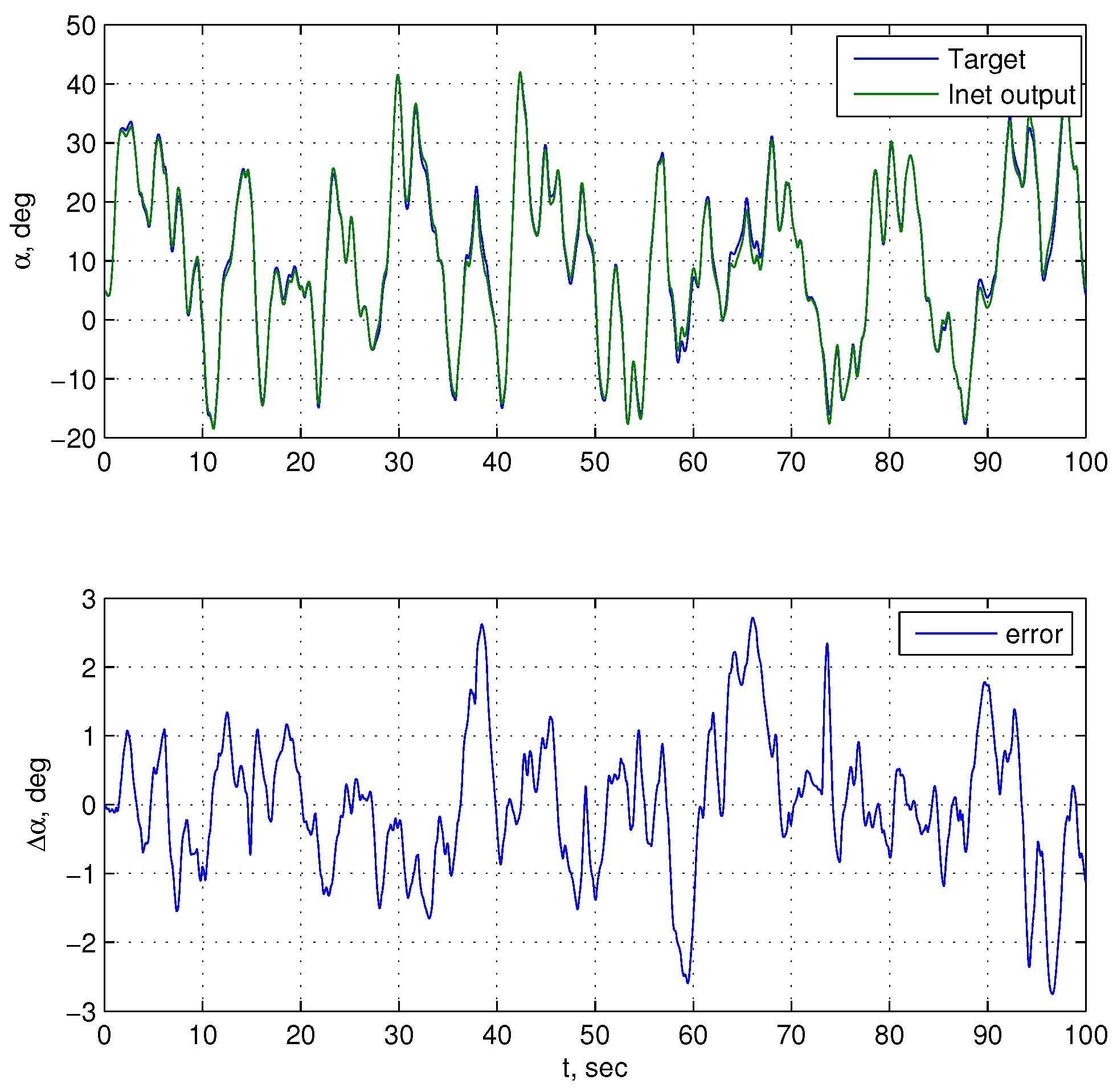

As follows from the results shown in

Figure 15, the generated semi-empirical model has a very high accuracy. The pitch rate error is within

%, and the angle of attack error is within

%. Since the uncertainty factors in the source model are only the relationships for the aerodynamic force and moment coefficients, these results indicate a successful solution not only to the problem of system identification but also to the problem of characteristic identification, i.e., the lift force and pitching moment coefficients in the problem under consideration.

Let us compare the results obtained for the semi-empirical and empirical motion models corresponding to the system (

31). For the empirical ANN model of NARX type, the results are presented in

Figure 16. A comparison of the results for these two variants of ANN models of longitudinal angular motion of the airplane allows us to draw the following conclusions. The values of the current modeling error in angle of attack lie within

deg for the semi-empirical model and within

deg for the NARX model. Typical RMS error values are

deg,

deg/s for the semi-empirical model, and

deg,

deg/s for the empirical model. Thus, as in the previous example, the semi-empirical model has an undeniable advantage in accuracy over the empirical model.

As noted above, the source of uncertainty in the motion model (

31) is the functions

and

which describe the dependence of the lift coefficient and pitching moment coefficient on the angle of attack

and elevator deflection angle

. These dependencies have a complicated character, especially for a maneuverable aircraft. As an example, the dependence

for an F-16 airplane, plotted using data from [

111], is shown in

Figure 17a. We see that the value of the coefficient

is subject to frequent and significant changes when the parameters

and

are varied over wide ranges. Even more significantly, the derivative of this coefficient, especially

, repeatedly changes sign. This matters because, for values of the angle of attack at which this derivative is positive, the control object is unstable. It follows that a nonlinear function of several arguments,

, must be reconstructed with very high accuracy so that incorrect values of its derivative are avoided as far as possible. The semi-empirical approach allows us to cope with this problem, as can be seen from

Figure 17b for the considered example.
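The role of the derivative sign can be illustrated with a short Python sketch using NumPy. The cubic dependence below is synthetic and is not the F-16 data. The sketch differentiates a tabulated coefficient numerically with respect to the angle of attack and locates the angles at which the derivative changes sign, i.e., the boundaries of the regions where the derivative is positive.

```python
import numpy as np

# Synthetic pitching moment coefficient at a fixed elevator deflection (not F-16 data).
alpha = np.linspace(-0.1, 0.8, 181)          # angle of attack, radians
cm = -0.3 * alpha + 0.9 * alpha**3

# Numerical derivative of the tabulated function with respect to alpha.
dcm_dalpha = np.gradient(cm, alpha)

# Grid points where the derivative is positive.
positive_region = alpha[dcm_dalpha > 0]

# Angles at which the derivative changes sign.
crossings = alpha[1:][np.diff(np.sign(dcm_dalpha)) != 0]
```

Small errors in the reconstructed function near such a crossing can flip the sign of the derivative, which is why the accuracy requirement on the function itself is so strict.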

The above results for the problem (

31) clearly demonstrate the capabilities of the semi-empirical approach. It provides a model of aircraft motion that is considerably more accurate than can be achieved by the empirical approach. In addition, unlike the empirical approach, it makes it possible to identify the characteristics of the object under consideration. In the case considered, these are the aerodynamic coefficients

and

as nonlinear functions of several variables.

As already noted above, modeling the controlled longitudinal angular motion of an airplane lies at the threshold of complexity that the empirical approach can handle. The semi-empirical approach forms a model of this kind without significant difficulty while ensuring, as already noted, a much higher level of modeling accuracy.

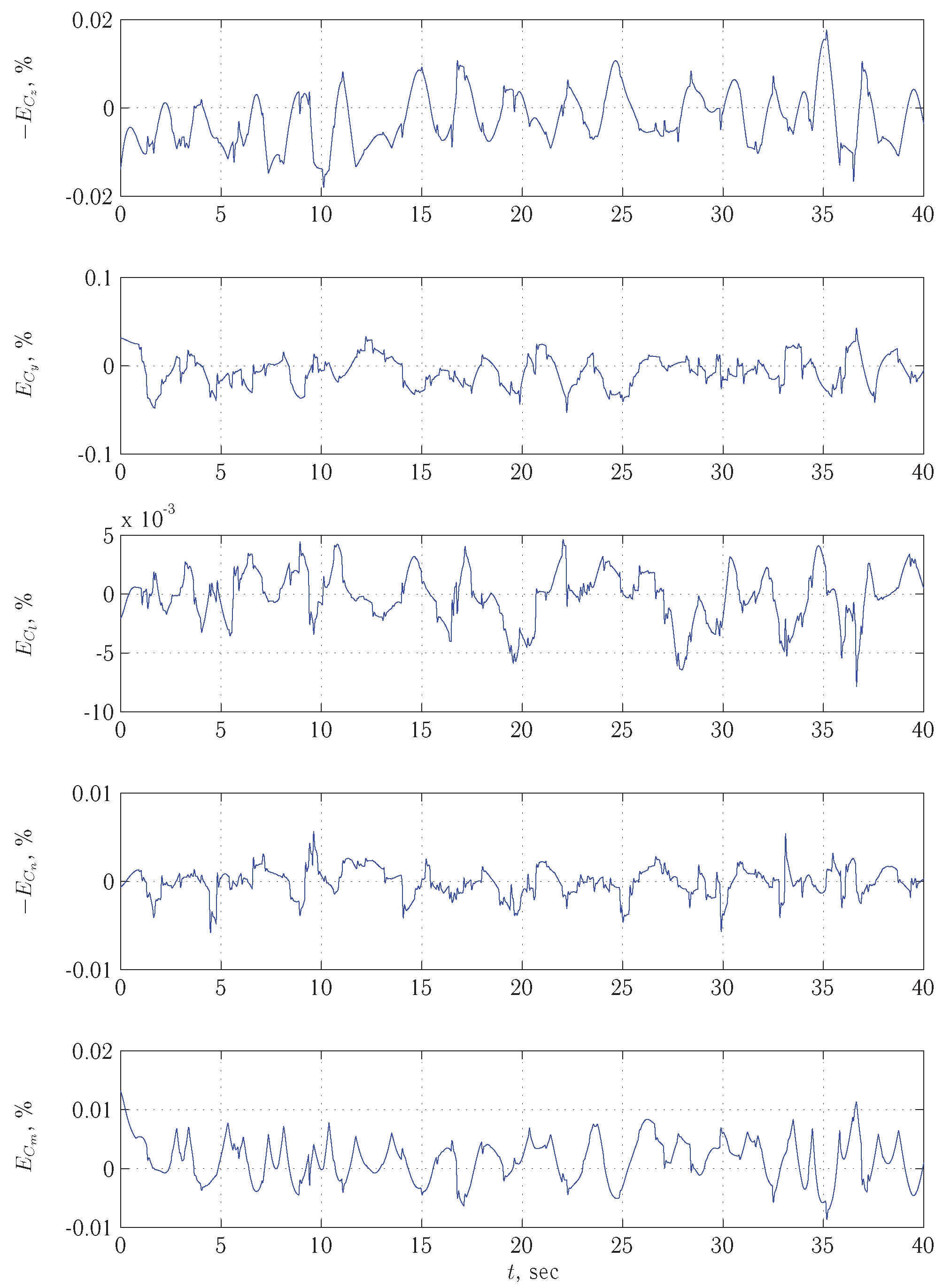

Our experience shows [

134,

135,

136] that this approach can be used to successfully solve identification problems for objects much more complicated than (

31). In particular, the problem (

40), (

41) has fourteen state variables instead of the four in (

31), as well as three control variables instead of one. In problems of this kind, it was possible to obtain very high accuracy both for the model of the control object as a whole and for the functions describing all six coefficients of aerodynamic forces and moments. However, to ensure such a result, it was necessary to significantly increase the number of examples in the training set. If in problems of the kind (

31) the typical number of examples was

, then in problems of the kind (

40), (

41), and similar problems, about

is required.

The accuracy of the model to be formed is determined by how precisely the nonlinear functions describing the aerodynamic characteristics of the aircraft are reconstructed, i.e., how effectively the problem of identifying these characteristics is solved. To obtain an answer to this question, we can extract from the semi-empirical model the ANN modules corresponding to the restored functions for

,

,

,

, and

, and then compare the values they produce with the experimentally available data from [

111]. In doing so, we can obtain the mean square error values for the reproduction of each of the functions

,

,

,

, and

by the corresponding ANN module. In the experiments performed, these values are as follows:

,

,

,

,

. This is an integral estimate of the accuracy for the mentioned dependencies. In addition, the dynamics of changes in the current values of the reproduction error for

,

,

, and

in the process of testing the model are also interesting. These data, shown in

Figure 18, indicate that the error level changes insignificantly over time; no significant changes that could adversely affect the adequacy of the semi-empirical ANN model are detected.

It has to be noted that the semi-empirical approach to modeling and identification of controlled dynamical systems requires considerable computational resources. Traditional approaches to such problems are much more economical. However, in our opinion, the higher computational cost of our approach is justified by the advantages it provides. Namely, as noted above, the main sources of uncertainty in models of aircraft motion are the aerodynamic characteristics, described by dimensionless coefficients of aerodynamic forces and moments. Each of these coefficients is a nonlinear function of several variables. The traditional approach to the problem of the identification of aerodynamic characteristics (see [

39,

40,

41]) is based on linearization of these functions by means of their Taylor series expansion. This operation is performed “at a point”, i.e., for one particular combination of values of the arguments of the recovered function, rather than for the entire domain of its definition. The main advantage of the semi-empirical approach is that it lets us reconstruct these nonlinear functions of many variables from experimental data with high accuracy, as holistic objects over the whole range of their arguments. In other words, first, there is no need to linearize these functions, and second, the obtained solution is no longer bound to a single specific combination of argument values.

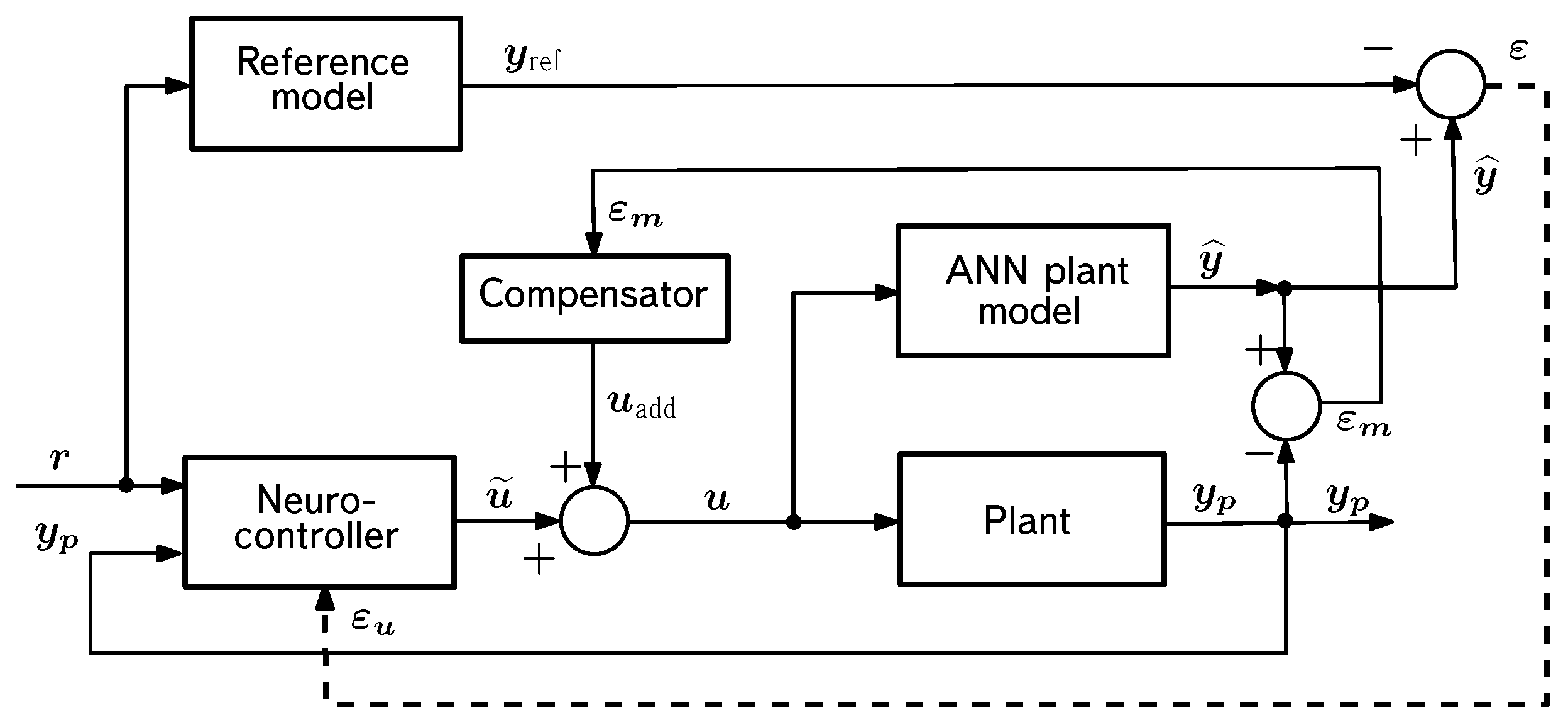

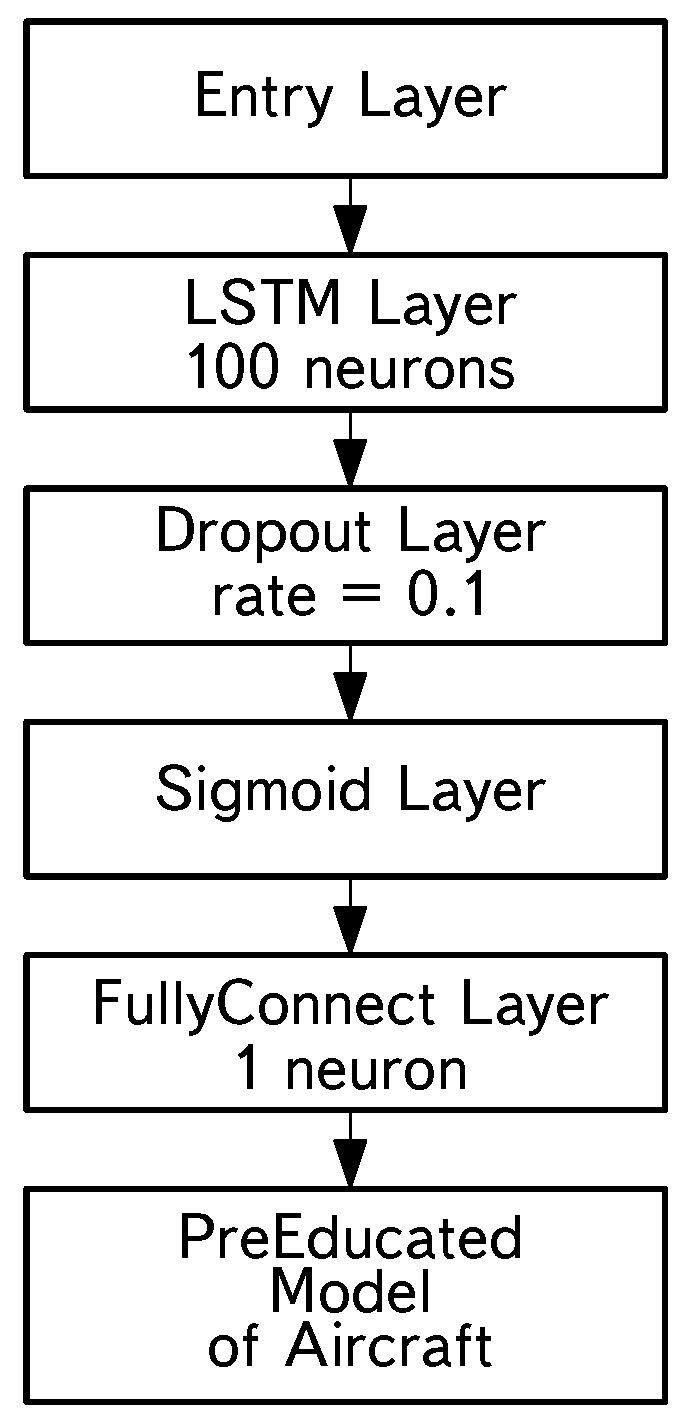

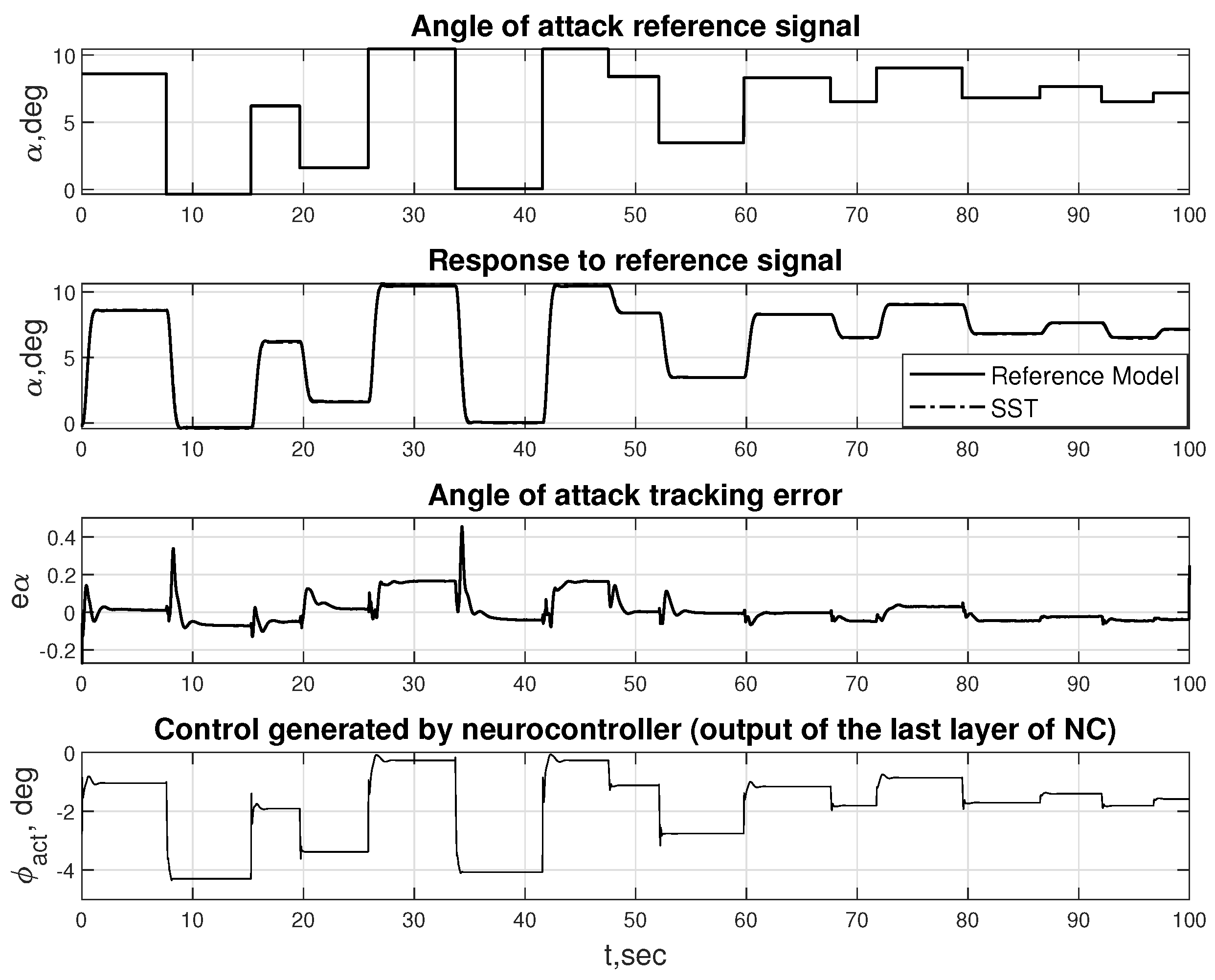

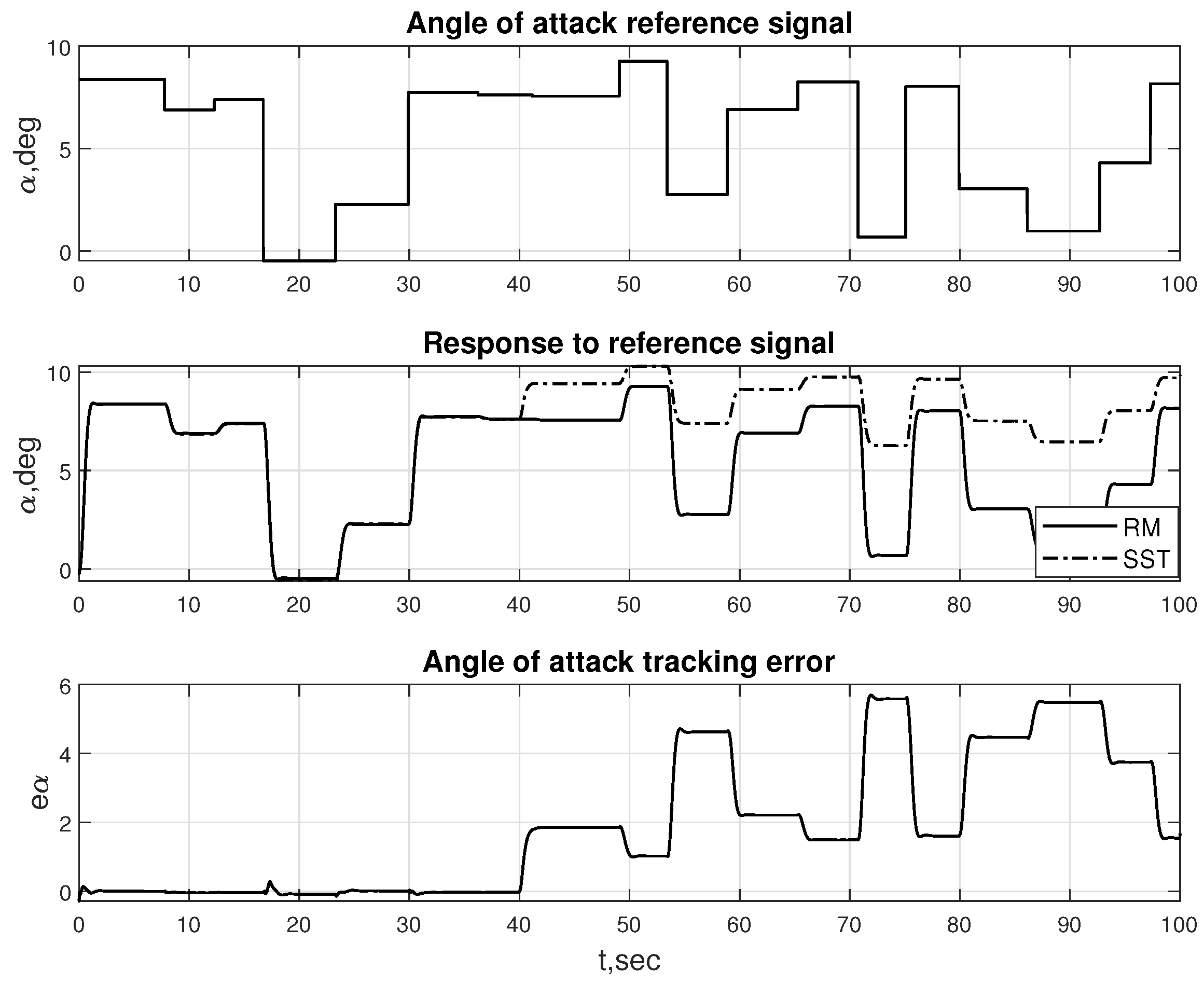

5. Deep Learning Techniques for Modeling and Controlling Aircraft Motion

Thus, semi-empirical ANN models of aircraft motion allow us to overcome the complexity threshold that exists for empirical ANN models. However, the price to be paid for this achievement is unacceptably high in some cases, namely when the ANN model must be corrected promptly to restore its adequacy to an object whose properties have changed. For such applications, semi-empirical ANN models are unsuitable. As shown in the previous section, they can be applied successfully to tasks where online adjustment of the model is not required. One of the most important problems of this kind is the identification of the aerodynamic characteristics of aircraft, represented as nonlinear functions of many variables, with high accuracy over the whole domain of definition of these functions.