Distributed and Scalable Cooperative Formation of Unmanned Ground Vehicles Using Deep Reinforcement Learning

Abstract

1. Introduction

- Most existing work focuses on one or a few simple formation shapes and does not consider scalability across formations, so the formation scheme must be redesigned whenever the number of UGVs or the formation shape changes.

- Most formation algorithms based on deep reinforcement learning have limited scalability: any change in the formation requires redesigning the network and rerunning the training process. Scalability of the formation is therefore of great importance. With a distributed control method, the trained network can be applied directly to a newly added UGV, which makes the formation easier to adjust.

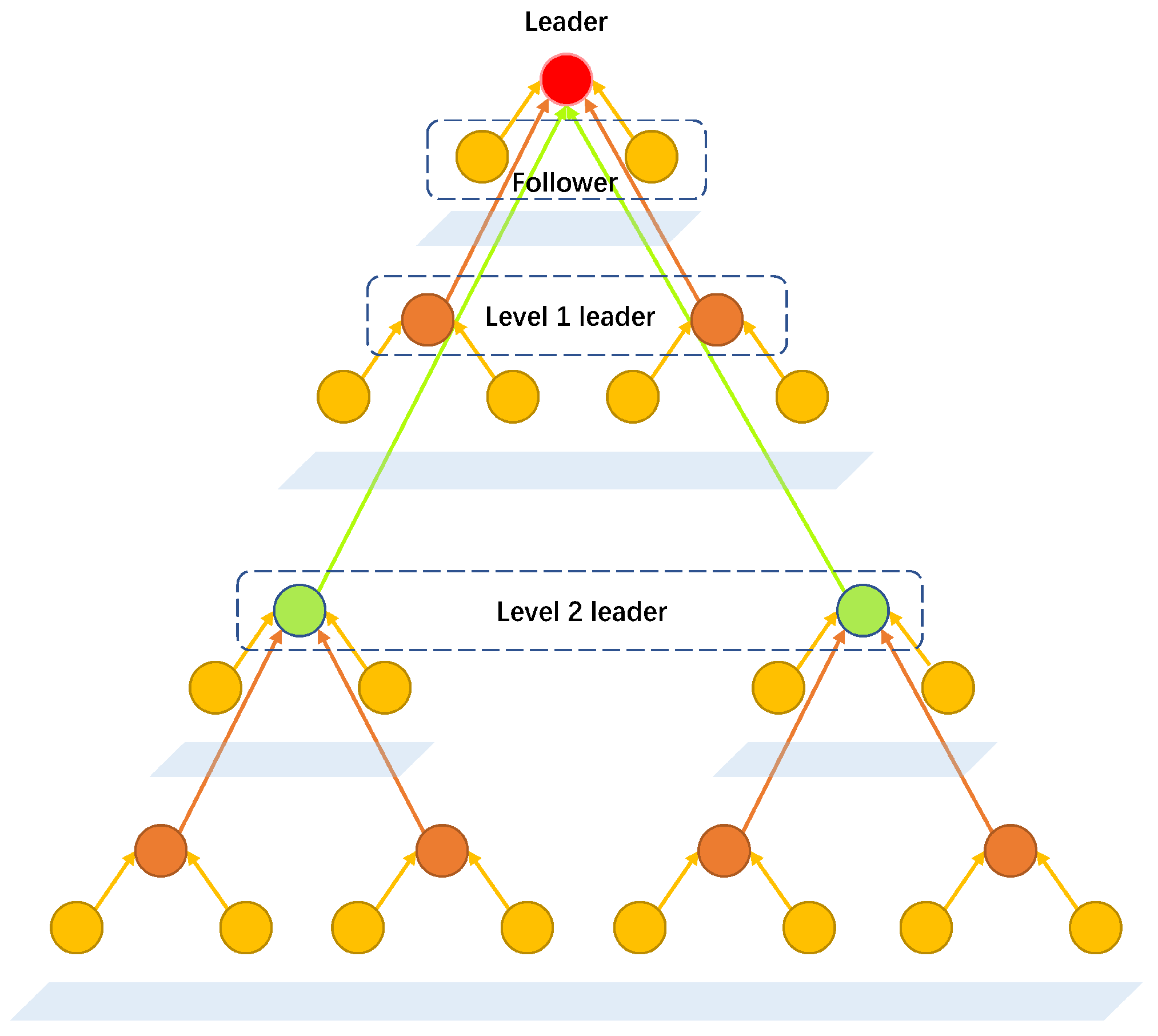

- A multi-UGV layered and scalable formation framework is proposed, using distributed deep reinforcement learning for navigation, obstacle avoidance, and formation tasks.

- To achieve coordination and scalability of the formation, a new Markov decision process (MDP) is designed for UGVs with the follower attribute, so that UGVs of the same type can reuse the learned control policy, which makes expanding the formation easier.

- Simulation results demonstrate that the proposed formation algorithm generalizes well to formations with different numbers of vehicles and different shapes.

2. Preliminaries

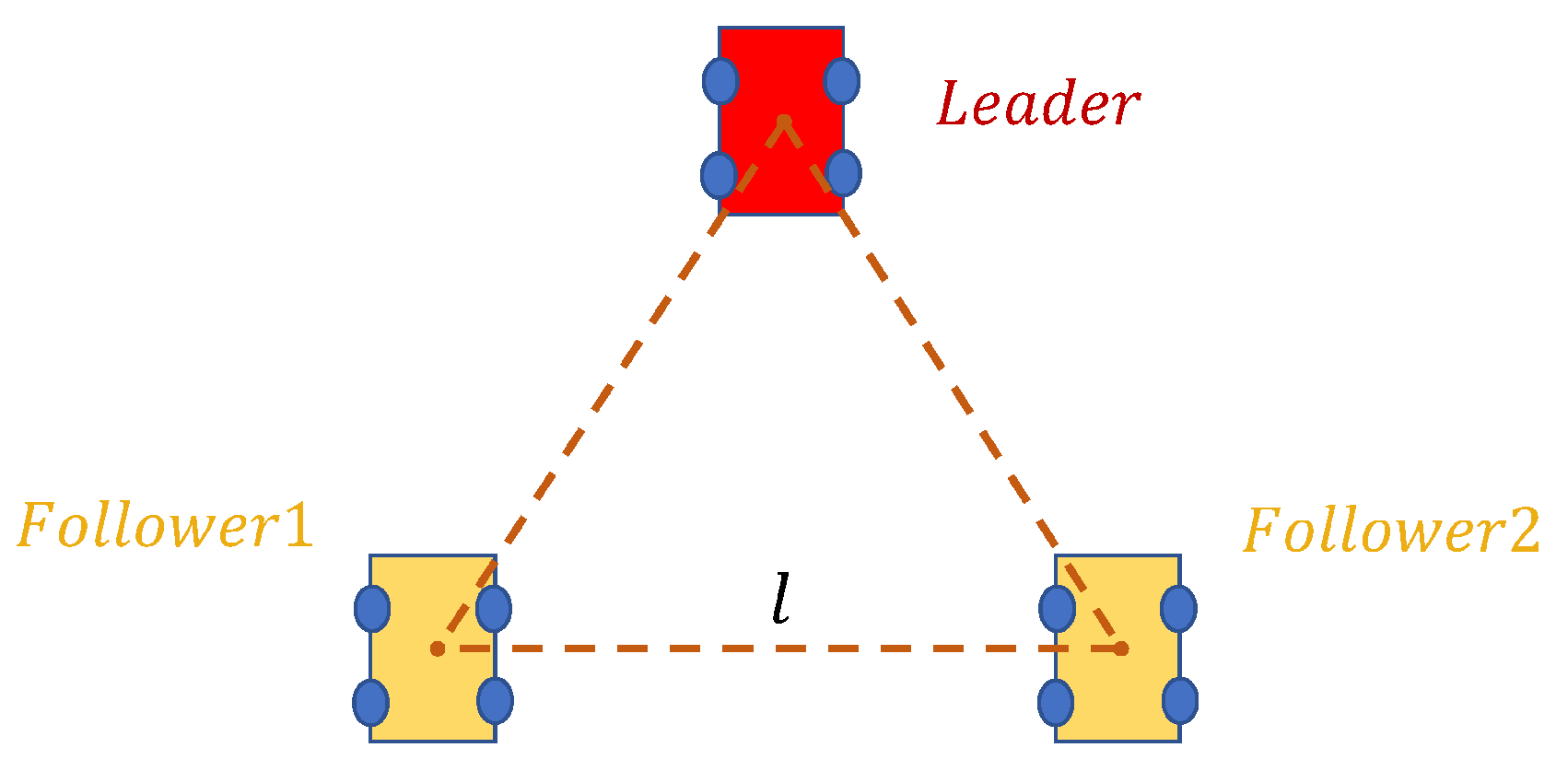

2.1. Problem Formulation

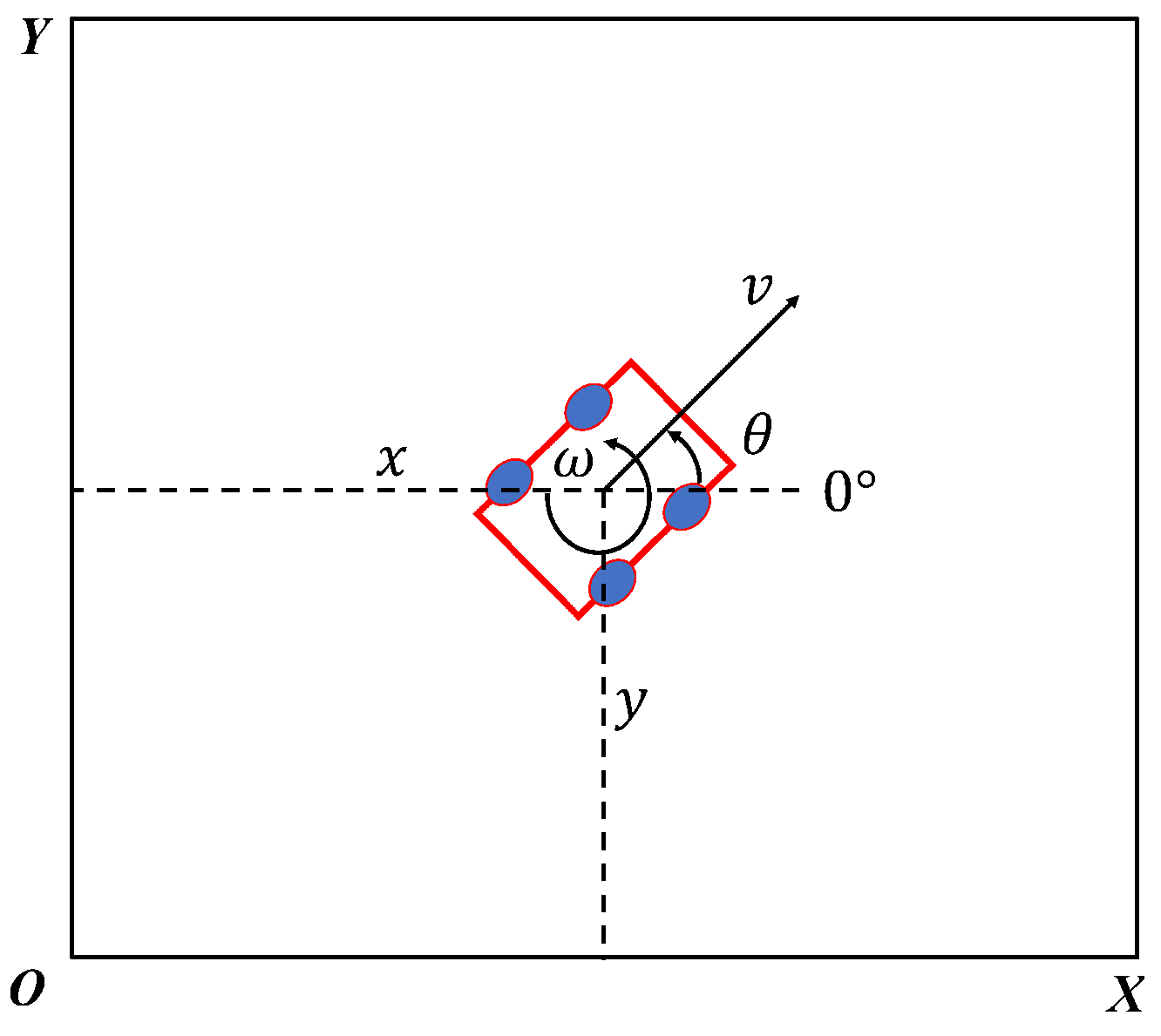

2.2. UGV Model

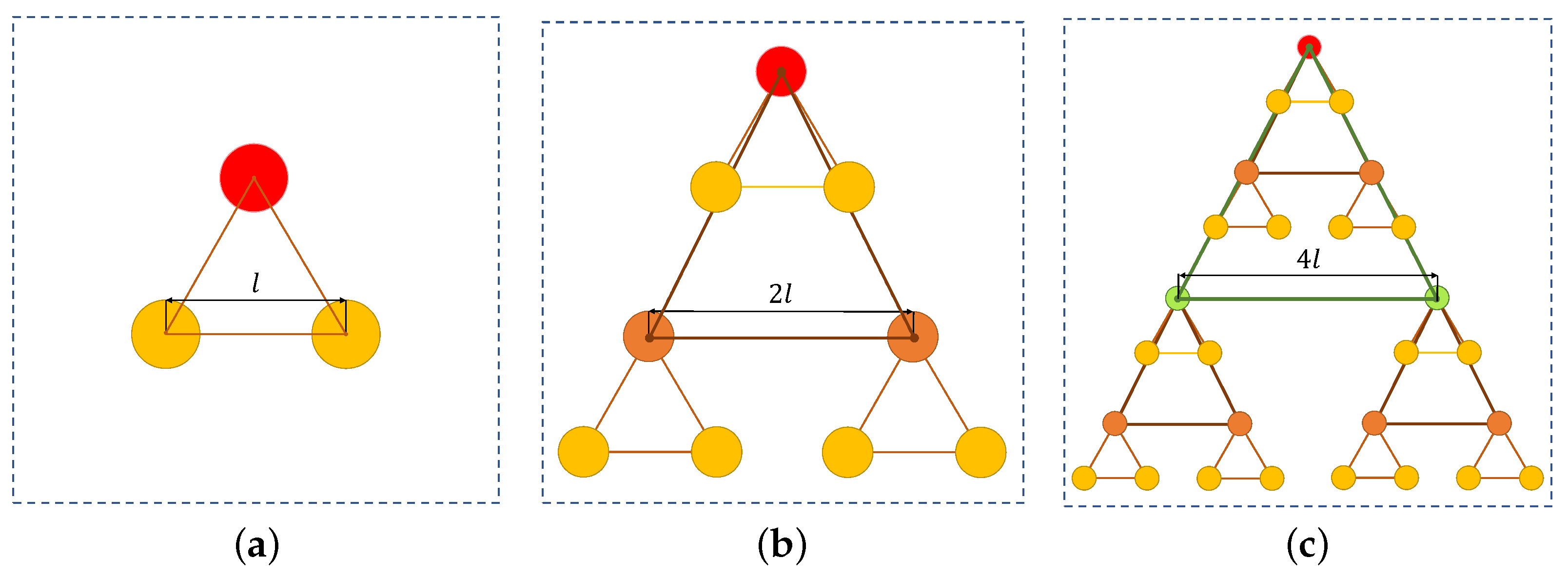

2.3. A Layered Formation Structure

2.4. Fundamentals in Reinforcement Learning

2.4.1. Markov Decision Process

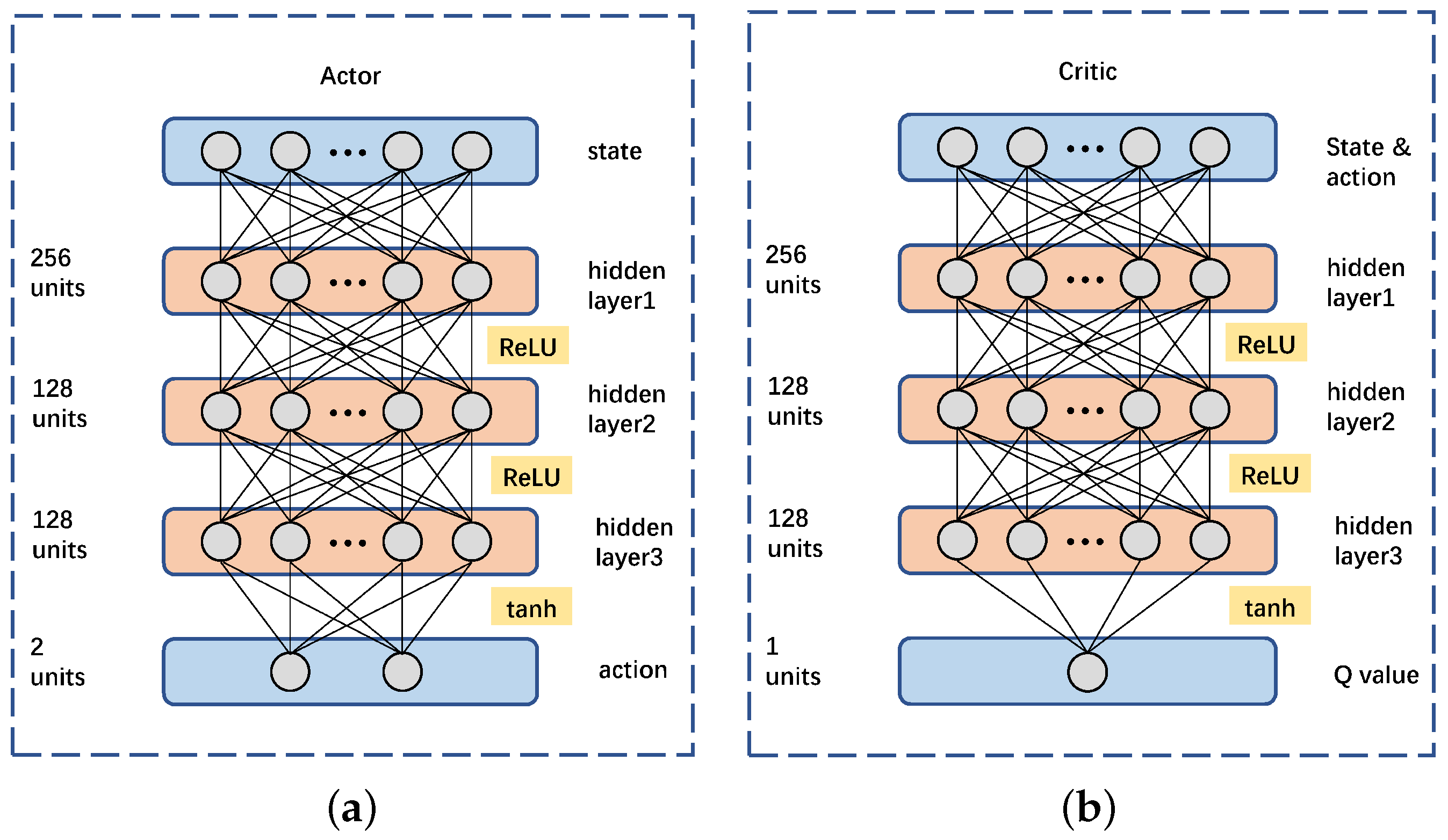

2.4.2. Deep Deterministic Policy Gradient

3. Deep-Reinforcement-Learning-Based Distributed and Scalable Cooperative Formation Control Algorithm

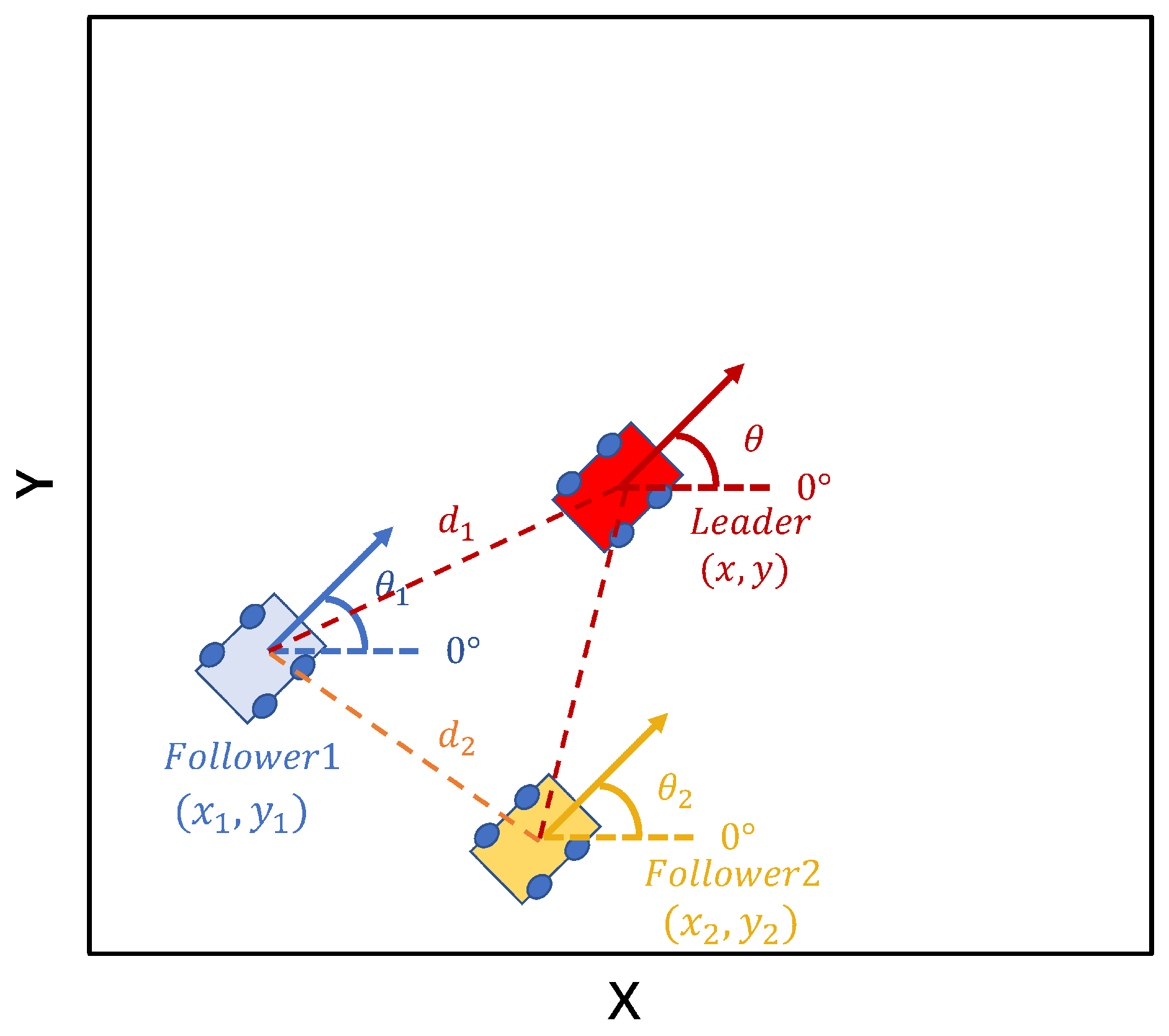

3.1. State Space

3.2. Action Space

3.3. Reward Functions

- No collision: if there is no collision with obstacles in the next state, no penalty is given.

- Collision: if the next state collides with an obstacle, the UGV receives a large negative reward as a penalty, which discourages it from selecting similar actions in that state.

- Collision along the route: unlike the continuous real world, discrete time steps create a special case in which the next state of the UGV does not collide with an obstacle, yet the line segment connecting the current and next states overlaps the obstacle. Such collisions are easily overlooked, but checking for them is necessary, so they are also penalized as collisions along the route.

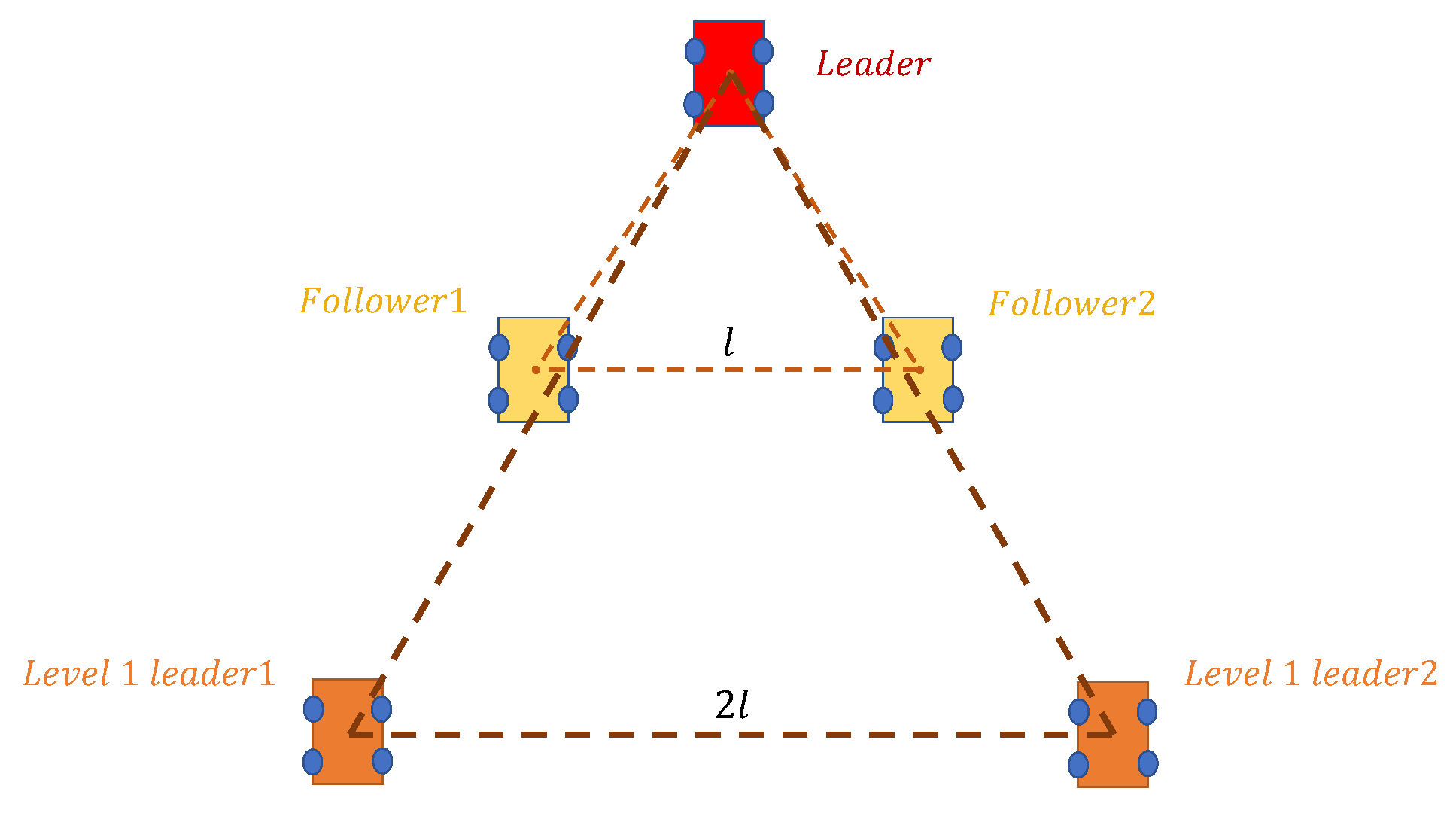

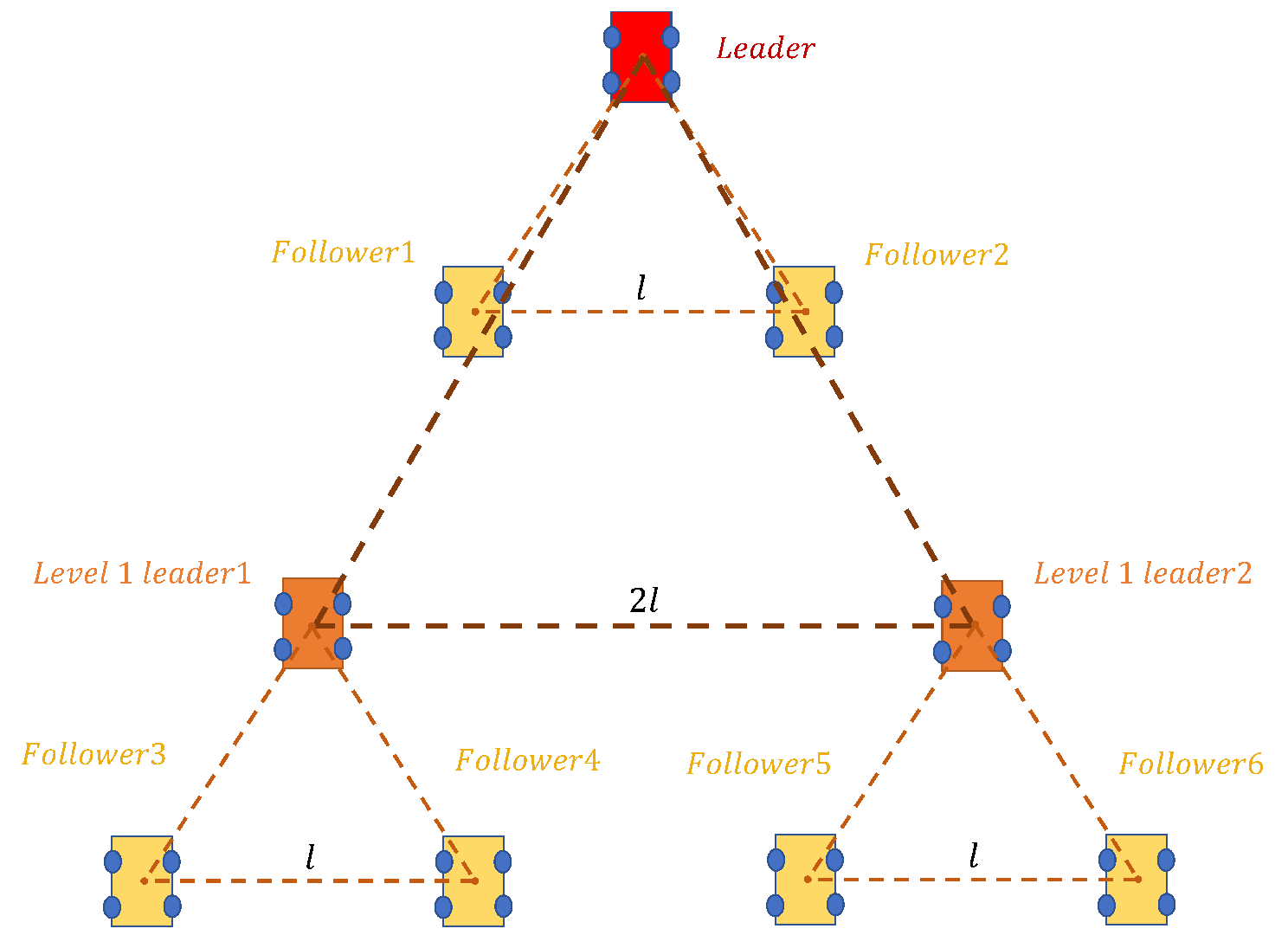

3.4. Formation Expansion Method

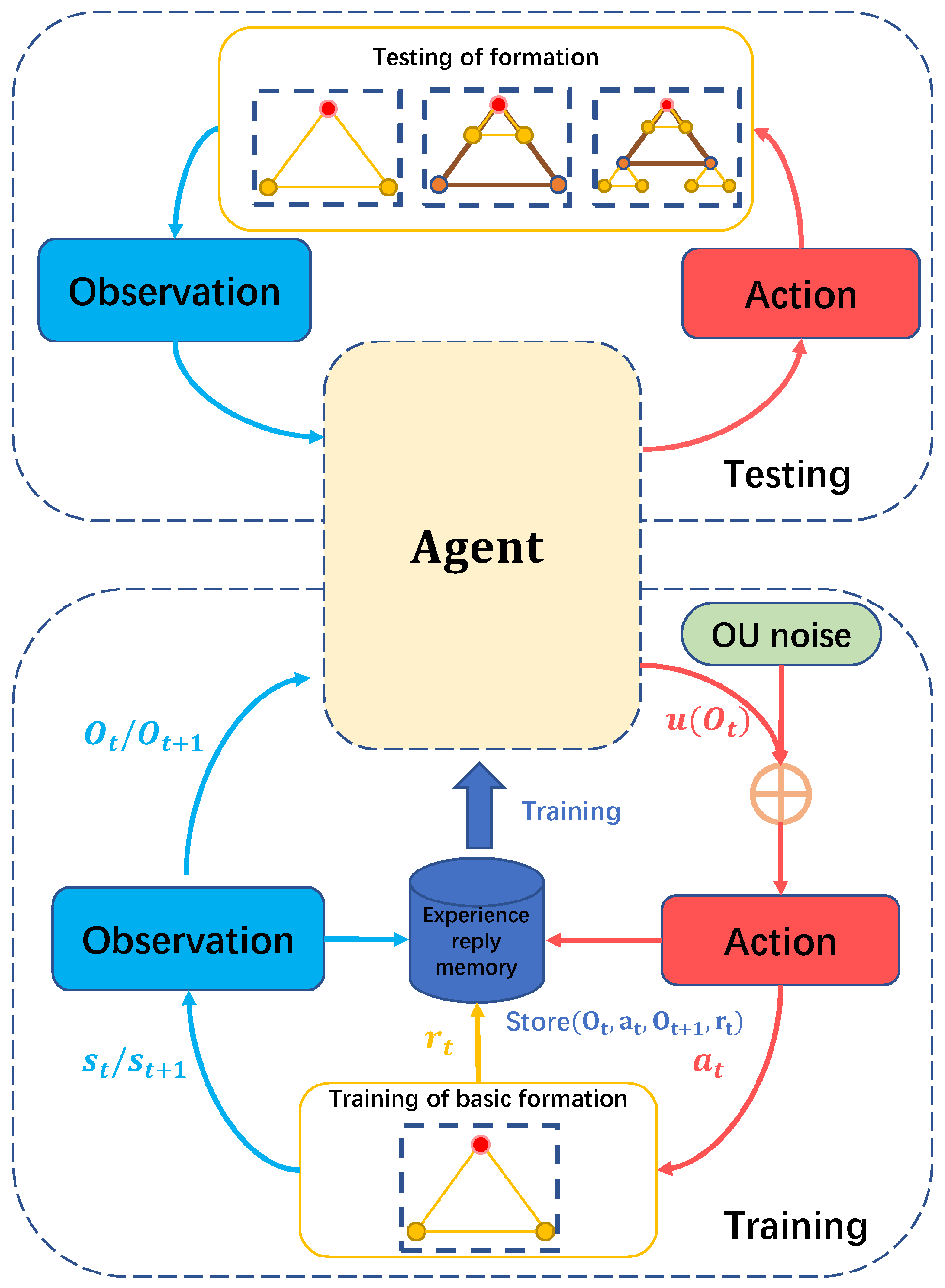

3.5. Cooperative Formation for Distributed Training and Execution Using Deep Deterministic Policy Gradient

Algorithm 1 DDPG for Distributed and Scalable Cooperative Formation.

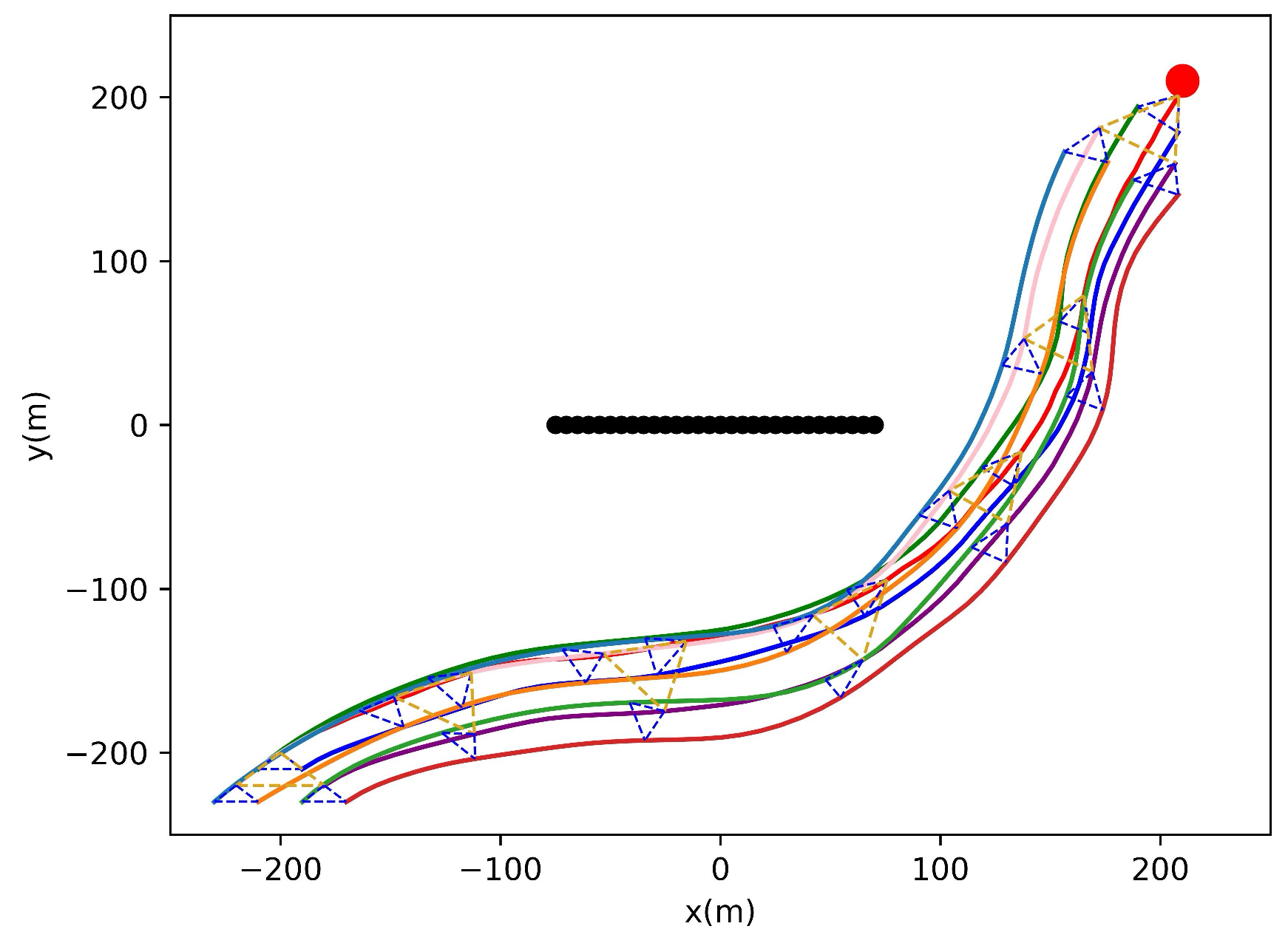
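
For reference, the generic DDPG updates of Lillicrap et al. are summarized below, with critic $Q(s,a\mid\theta^{Q})$, actor $\mu(s\mid\theta^{\mu})$, their target copies $Q'$ and $\mu'$, and a minibatch of $N$ transitions $(s_i, a_i, r_i, s_{i+1})$. This is the standard algorithm, not a transcription of Algorithm 1, which may add formation-specific steps that are not recoverable from this text. With the training hyperparameters listed in the parameter table, $\gamma = 0.95$, $\tau = 0.005$ and $N = 256$.

$$
\begin{aligned}
y_i &= r_i + \gamma\, Q'\!\left(s_{i+1}, \mu'(s_{i+1}\mid\theta^{\mu'}) \,\middle|\, \theta^{Q'}\right),\\
L(\theta^{Q}) &= \frac{1}{N}\sum_{i=1}^{N}\left(y_i - Q(s_i, a_i\mid\theta^{Q})\right)^2,\\
\nabla_{\theta^{\mu}} J &\approx \frac{1}{N}\sum_{i=1}^{N}\nabla_{a} Q(s, a\mid\theta^{Q})\Big|_{s=s_i,\,a=\mu(s_i)}\,\nabla_{\theta^{\mu}}\mu(s\mid\theta^{\mu})\Big|_{s=s_i},\\
\theta^{Q'} &\leftarrow \tau\,\theta^{Q} + (1-\tau)\,\theta^{Q'},\qquad
\theta^{\mu'} \leftarrow \tau\,\theta^{\mu} + (1-\tau)\,\theta^{\mu'}.
\end{aligned}
$$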

4. Simulation Results and Analysis

- Training and testing the performance of the cooperative formation control algorithm under the distributed deep reinforcement learning architecture on a basic formation in Section 4.1.

- Testing the scalability of the proposed algorithm on expanded formations in Section 4.2.

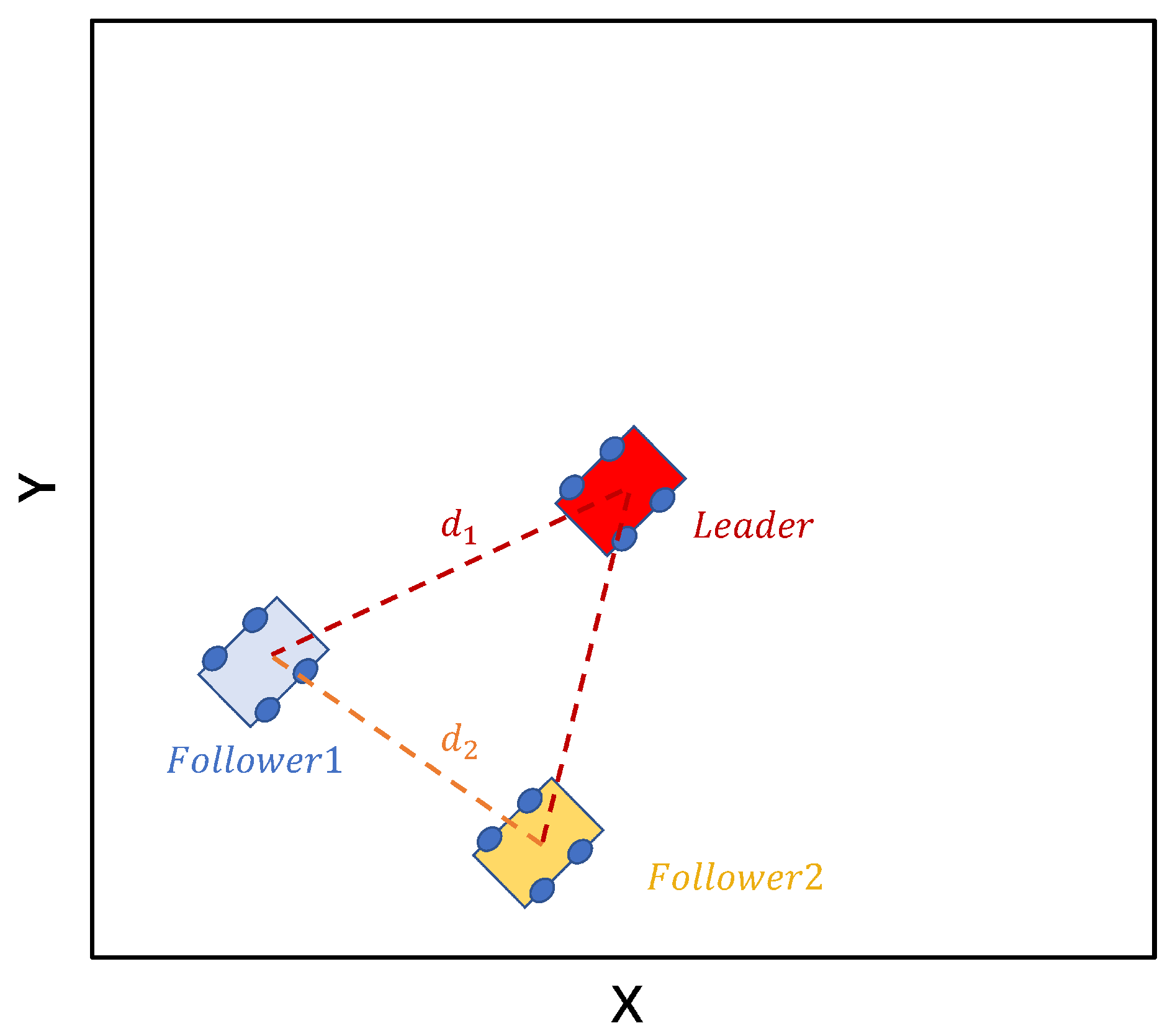

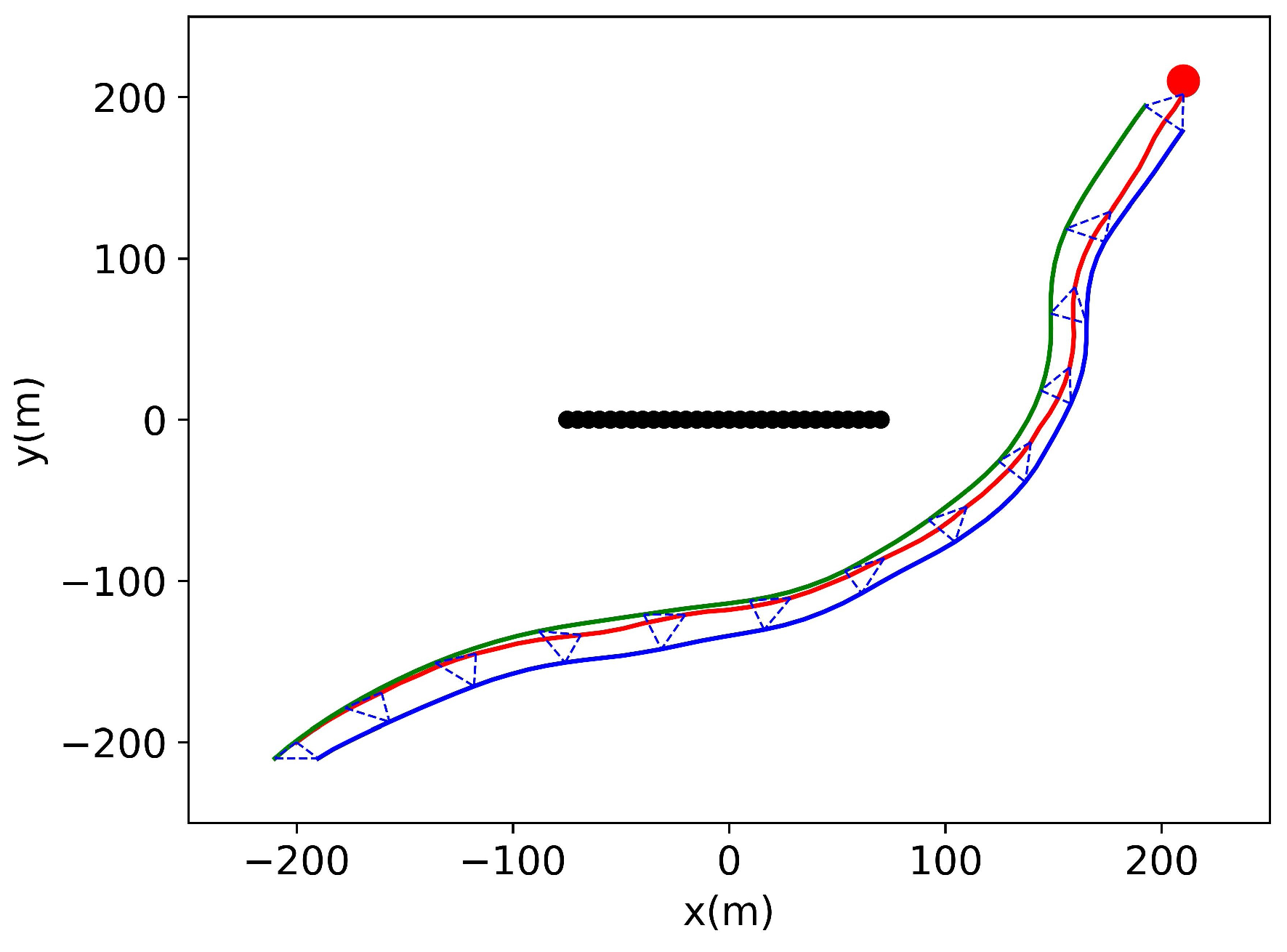

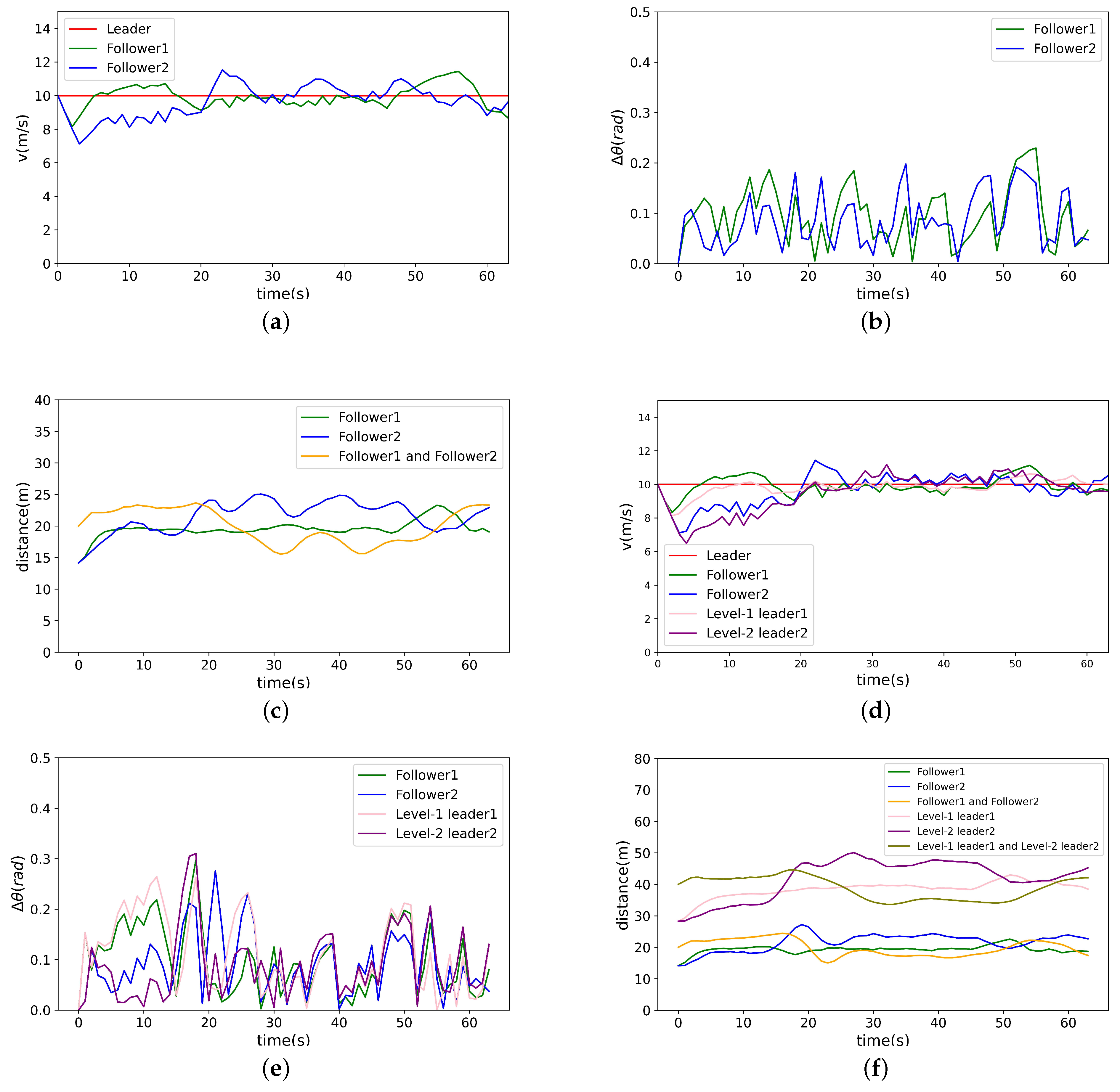

4.1. Training and Testing of Basic Formation

4.2. Testing of Expanded Formation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| ID | Parameter | Value |
|---|---|---|
| 1 | Actor learning rate | 0.001 |
| 2 | Critic learning rate | 0.002 |
| 3 | Discount rate | 0.95 |
| 4 | Soft update | 0.005 |
| 5 | Memory size | 100,000 |
| 6 | Batch size | 256 |
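
The memory, discount and soft-update entries above map onto three small pieces of a DDPG training loop. The sketch below plugs in the tabulated values; the names `ReplayBuffer`, `td_target` and `soft_update` are hypothetical, the terminal-state mask in `td_target` is a common implementation choice assumed here, and parameters are plain Python lists instead of network tensors.

```python
import random
from collections import deque

# Values taken from the hyperparameter table.
ACTOR_LR = 0.001      # would be passed to the actor optimizer (unused in this sketch)
CRITIC_LR = 0.002     # would be passed to the critic optimizer (unused in this sketch)
GAMMA = 0.95          # discount rate
TAU = 0.005           # soft-update coefficient
MEMORY_SIZE = 100_000
BATCH_SIZE = 256


class ReplayBuffer:
    """FIFO experience memory: the oldest transitions are dropped when full."""

    def __init__(self, capacity=MEMORY_SIZE):
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size=BATCH_SIZE):
        # Uniform sampling without replacement from the stored transitions.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def td_target(reward, next_q, done, gamma=GAMMA):
    """Critic target y = r + gamma * Q'(s', mu'(s')); bootstrapping stops at terminal states."""
    return reward + gamma * next_q * (0.0 if done else 1.0)


def soft_update(target_params, online_params, tau=TAU):
    """Polyak averaging: theta_target <- tau * theta + (1 - tau) * theta_target."""
    return [tau * w + (1.0 - tau) * w_t
            for w_t, w in zip(target_params, online_params)]
```

With $\tau = 0.005$, a target parameter moves only 0.5% of the way towards its online counterpart per update, which keeps the critic targets slowly varying.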

| ID | Parameter | Value |
|---|---|---|
| 1 | Map range | 500 m × 500 m |
| 2 | Initial position of the leader | (−200,−200) m |
| 3 | Initial position of follower 1 | (−210,−210) m |
| 4 | Initial position of follower 2 | (−190,−210) m |
| 5 | Position of the target | (210,210) m |
| 6 | Initial speed | 10 m/s |
| 7 | Initial heading | |
| 8 | Angular velocity range of the leader | /s |
| 9 | Angular velocity range of the followers | /s |
| 10 | Acceleration range of the followers | [−1, 1] m/s² |
| 11 | Required formation distance of the followers | 20 m |
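
The environment table lists an angular velocity range for the leader but both angular velocity and acceleration ranges for the followers. A minimal kinematic sketch consistent with that split is shown below. It assumes a unicycle-type model with a semi-implicit Euler step and a leader that steers at constant speed; the model, the time step `DT` and the angular velocity bounds `OMEGA_RANGE` are all hypothetical, because the UGV model and the numeric angular velocity ranges are not recoverable from this text. Only the [−1, 1] m/s² acceleration range and the 10 m/s initial speed come from the table.

```python
import math
from dataclasses import dataclass

# Follower acceleration bounds from the environment table (m/s^2).
FOLLOWER_ACC_RANGE = (-1.0, 1.0)
# HYPOTHETICAL angular velocity bounds (rad/s): the tabulated values are missing.
OMEGA_RANGE = (-math.radians(30.0), math.radians(30.0))
DT = 0.1  # HYPOTHETICAL control period (s)


def clip(value, lo, hi):
    """Saturate a control command to the interval [lo, hi]."""
    return max(lo, min(hi, value))


@dataclass
class UGVState:
    x: float    # position (m)
    y: float    # position (m)
    psi: float  # heading (rad), measured from the +x axis
    v: float    # forward speed (m/s)


def step(state, omega, acc, dt=DT):
    """Semi-implicit Euler step of an ASSUMED unicycle model: the clipped
    commands update speed and heading first, which then advance the position."""
    omega = clip(omega, *OMEGA_RANGE)
    acc = clip(acc, *FOLLOWER_ACC_RANGE)
    v = state.v + acc * dt
    psi = state.psi + omega * dt
    return UGVState(state.x + v * math.cos(psi) * dt,
                    state.y + v * math.sin(psi) * dt,
                    psi, v)


def leader_step(state, omega, dt=DT):
    """ASSUMPTION: the leader only steers, so its speed stays constant."""
    return step(state, omega, 0.0, dt)
```

Under this reading, the leader's action is the scalar angular velocity, while each follower's action is the pair (acceleration, angular velocity).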

| UGV ID | Initial Position | UGV Type | Formation Distance |
|---|---|---|---|
| Leader | (−200,−200) m | Leader | - |
| Follower 1 | (−210,−210) m | Left | 20 m |
| Follower 2 | (−190,−210) m | Right | 20 m |
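
In the basic formation, each follower is characterized only by its type (Left or Right) and its formation distance. The sketch below turns those two attributes into a desired slot and a formation-keeping error. The slot geometry is an assumption: followers are placed at ±135° from the leader's heading, which reproduces the symmetric initial layout in the table if the leader initially heads along +y. The actual heading and formation geometry are not given in this text, and `desired_position` and `formation_error` are hypothetical helpers.

```python
import math

# HYPOTHETICAL slot bearing: followers sit behind their leader at +/-135 degrees
# from its heading (Left = +135 deg, Right = -135 deg).
SLOT_ANGLE = math.radians(135.0)


def desired_position(leader_xy, leader_psi, ugv_type, distance):
    """Slot that a 'Left' or 'Right' follower should occupy relative to its leader."""
    if ugv_type == "Left":
        angle = leader_psi + SLOT_ANGLE
    elif ugv_type == "Right":
        angle = leader_psi - SLOT_ANGLE
    else:
        raise ValueError(f"unknown follower type: {ugv_type!r}")
    lx, ly = leader_xy
    return (lx + distance * math.cos(angle), ly + distance * math.sin(angle))


def formation_error(follower_xy, leader_xy, leader_psi, ugv_type, distance):
    """Euclidean distance (m) between a follower and its desired slot."""
    dx, dy = desired_position(leader_xy, leader_psi, ugv_type, distance)
    return math.hypot(follower_xy[0] - dx, follower_xy[1] - dy)
```

Under these assumptions both followers start about 14.1 m from the leader rather than the required 20 m, so each begins with the same slot error of 20 − 10√2 ≈ 5.9 m.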

| UGV ID | Initial Position | UGV Type | Formation Distance |
|---|---|---|---|
| Leader | (−200,−200) m | Leader | - |
| Follower 1 | (−210,−210) m | Left | 20 m |
| Follower 2 | (−190,−210) m | Right | 20 m |
| Level 1 leader 1 | (−220,−220) m | Left | 40 m |
| Level 1 leader 2 | (−180,−220) m | Right | 40 m |

| UGV ID | Initial Position | UGV Type | Formation Distance |
|---|---|---|---|
| Leader | (−200,−200) m | Leader | - |
| Follower 1 | (−210,−210) m | Left | 20 m |
| Follower 2 | (−190,−210) m | Right | 20 m |
| Level 1 leader 1 | (−220,−220) m | Left | 40 m |
| Level 1 leader 2 | (−180,−220) m | Right | 40 m |
| Follower 3 | (−230,−230) m | Left | 20 m |
| Follower 4 | (−210,−230) m | Right | 20 m |
| Follower 5 | (−190,−230) m | Left | 20 m |
| Follower 6 | (−170,−230) m | Right | 20 m |
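
The expanded formation is hierarchical: the level 1 leaders are Left/Right UGVs of the main leader at 40 m and in turn lead their own Left/Right followers at 20 m. The sketch below resolves every desired slot level by level and groups UGVs by type, which is where policy reuse would come in: every Left (or Right) UGV queries the same trained policy, provided the formation distance is part of its observation, which this text does not confirm. The leaders of Followers 3 to 6 are inferred from their initial positions, the ±135° slot bearing and the shared heading are simplifications, and all names are hypothetical.

```python
import math
from collections import defaultdict

SLOT_ANGLE = math.radians(135.0)  # HYPOTHETICAL slot bearing relative to the leader's heading

# (UGV ID, own leader, UGV type, formation distance in m) from the expanded-formation table.
# The leaders of Followers 3 to 6 are HYPOTHETICAL, inferred from initial positions.
FORMATION = [
    ("Leader", None, "Leader", 0.0),
    ("Follower 1", "Leader", "Left", 20.0),
    ("Follower 2", "Leader", "Right", 20.0),
    ("Level 1 leader 1", "Leader", "Left", 40.0),
    ("Level 1 leader 2", "Leader", "Right", 40.0),
    ("Follower 3", "Level 1 leader 1", "Left", 20.0),
    ("Follower 4", "Level 1 leader 1", "Right", 20.0),
    ("Follower 5", "Level 1 leader 2", "Left", 20.0),
    ("Follower 6", "Level 1 leader 2", "Right", 20.0),
]


def slot(parent_xy, psi, ugv_type, distance):
    """Desired position of a Left/Right UGV relative to its own leader."""
    sign = 1.0 if ugv_type == "Left" else -1.0
    angle = psi + sign * SLOT_ANGLE
    return (parent_xy[0] + distance * math.cos(angle),
            parent_xy[1] + distance * math.sin(angle))


def expand(formation, leader_xy, psi):
    """Place each UGV once its own leader is placed.
    SIMPLIFICATION: every UGV is assumed to share the main leader's heading psi."""
    positions = {}
    pending = list(formation)
    while pending:
        ready = [e for e in pending if e[1] is None or e[1] in positions]
        if not ready:
            raise ValueError("unknown or cyclic leader reference in formation")
        for ugv, parent, ugv_type, distance in ready:
            positions[ugv] = (leader_xy if parent is None
                              else slot(positions[parent], psi, ugv_type, distance))
        pending = [e for e in pending if e[0] not in positions]
    return positions


def vehicles_by_type(formation):
    """Group UGVs by type; each group would share one trained control policy."""
    groups = defaultdict(list)
    for ugv, _, ugv_type, _ in formation:
        groups[ugv_type].append(ugv)
    return dict(groups)
```

With the leader at (−200, −200) heading along +y, this yields three rows behind the leader (Followers 1 and 2; the two level 1 leaders; Followers 3 to 6), with Follower 4 directly behind Follower 1 and Follower 5 directly behind Follower 2. Adding a vehicle only means appending one row to `FORMATION`.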

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, S.; Wang, T.; Tang, Y.; Hu, Y.; Xin, G.; Zhou, D. Distributed and Scalable Cooperative Formation of Unmanned Ground Vehicles Using Deep Reinforcement Learning. Aerospace 2023, 10, 96. https://doi.org/10.3390/aerospace10020096
