Perspectives for Very High-Resolution Climate Simulations with Nested Models: Illustration of Potential in Simulating St. Lawrence River Valley Channelling Winds with the Fifth-Generation Canadian Regional Climate Model

Abstract

1. Introduction

2. Experimental Design

2.1. The Model Description

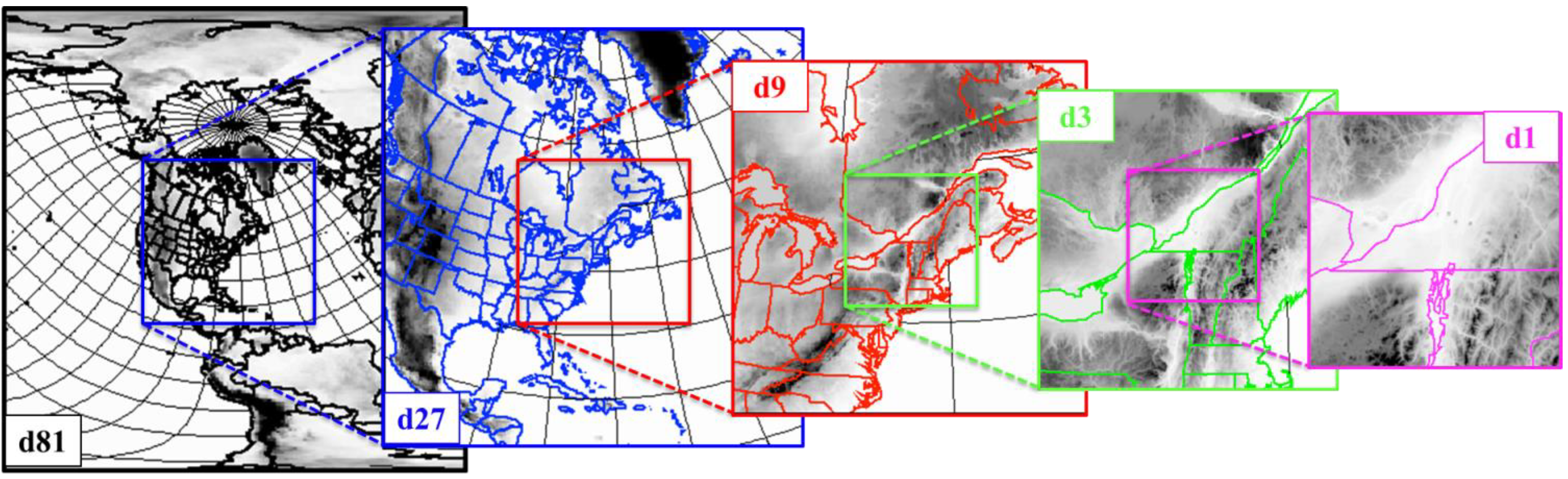

2.2. The Cascade Description

3. Results

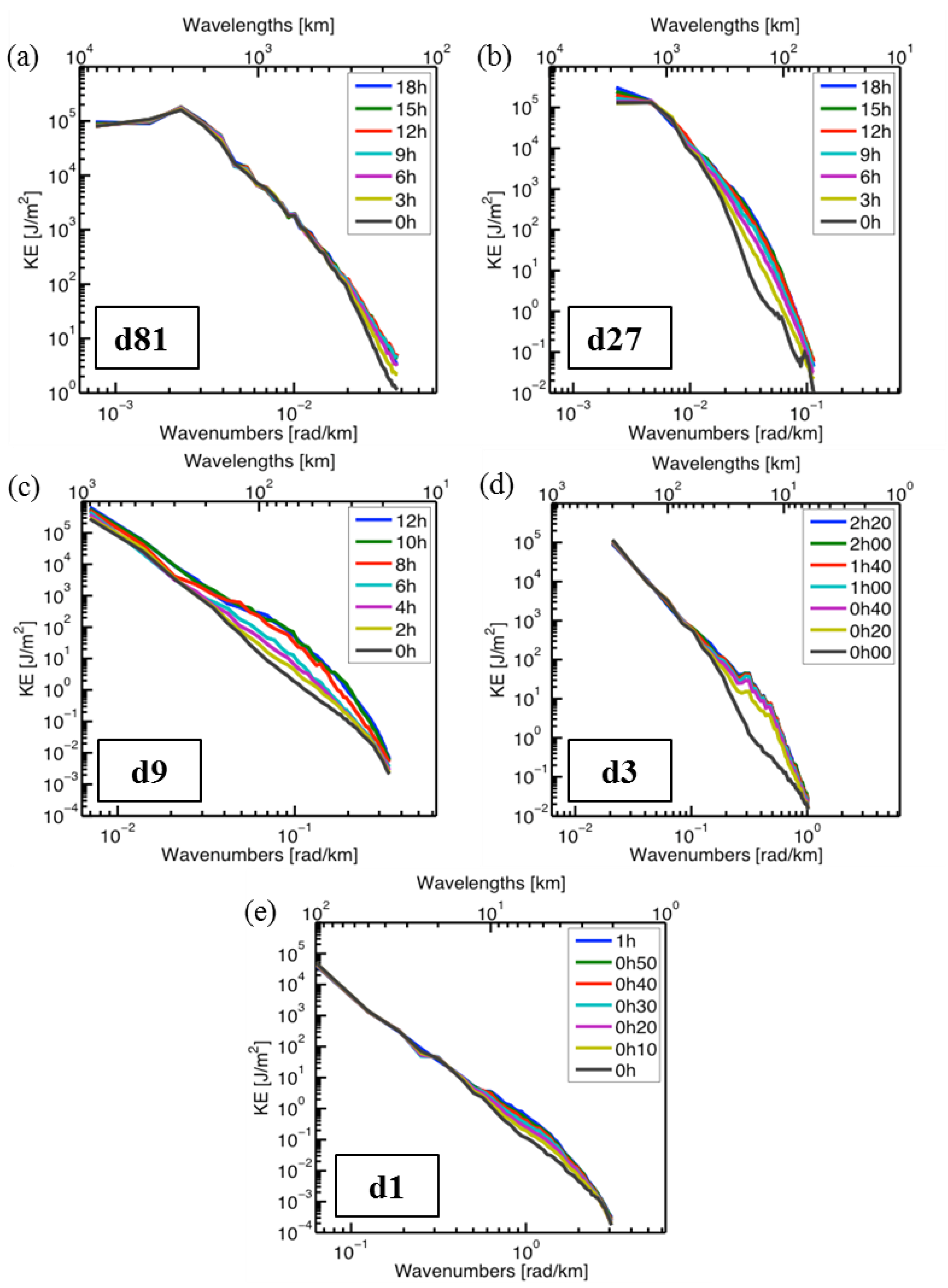

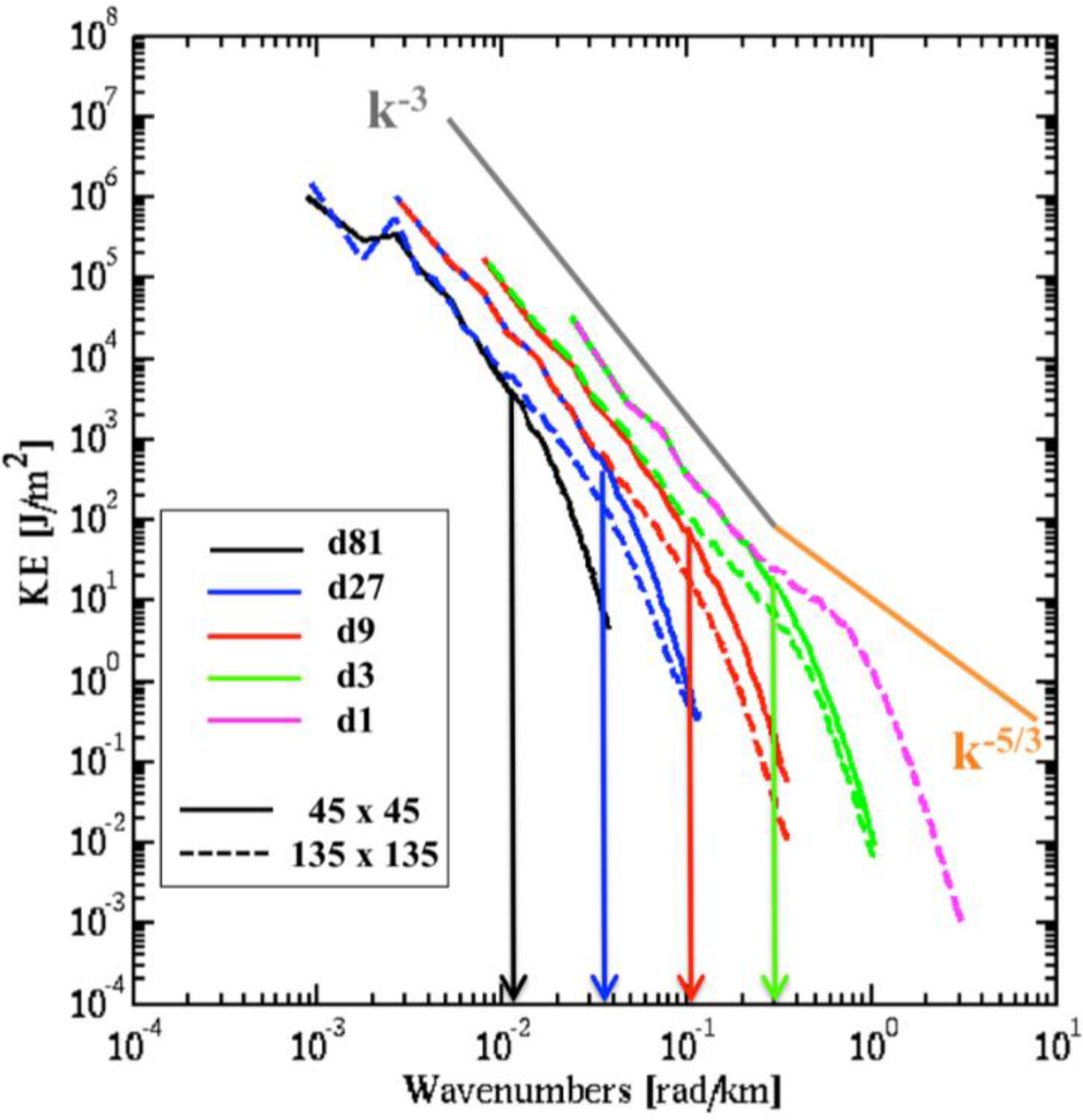

3.1. Kinetic Energy Spectra

3.1.1. Spin-Up Time

3.1.2. Effective Resolution

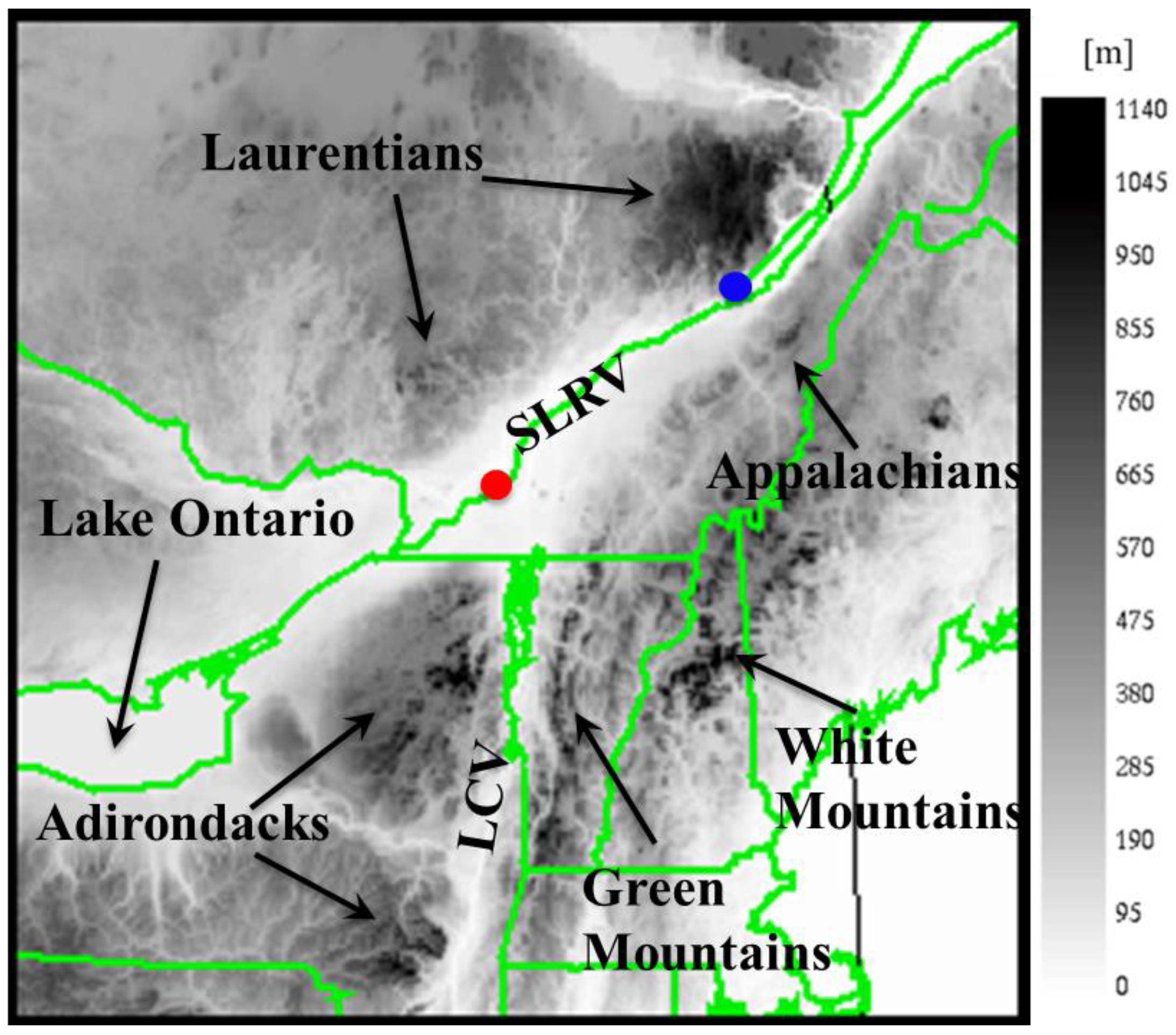

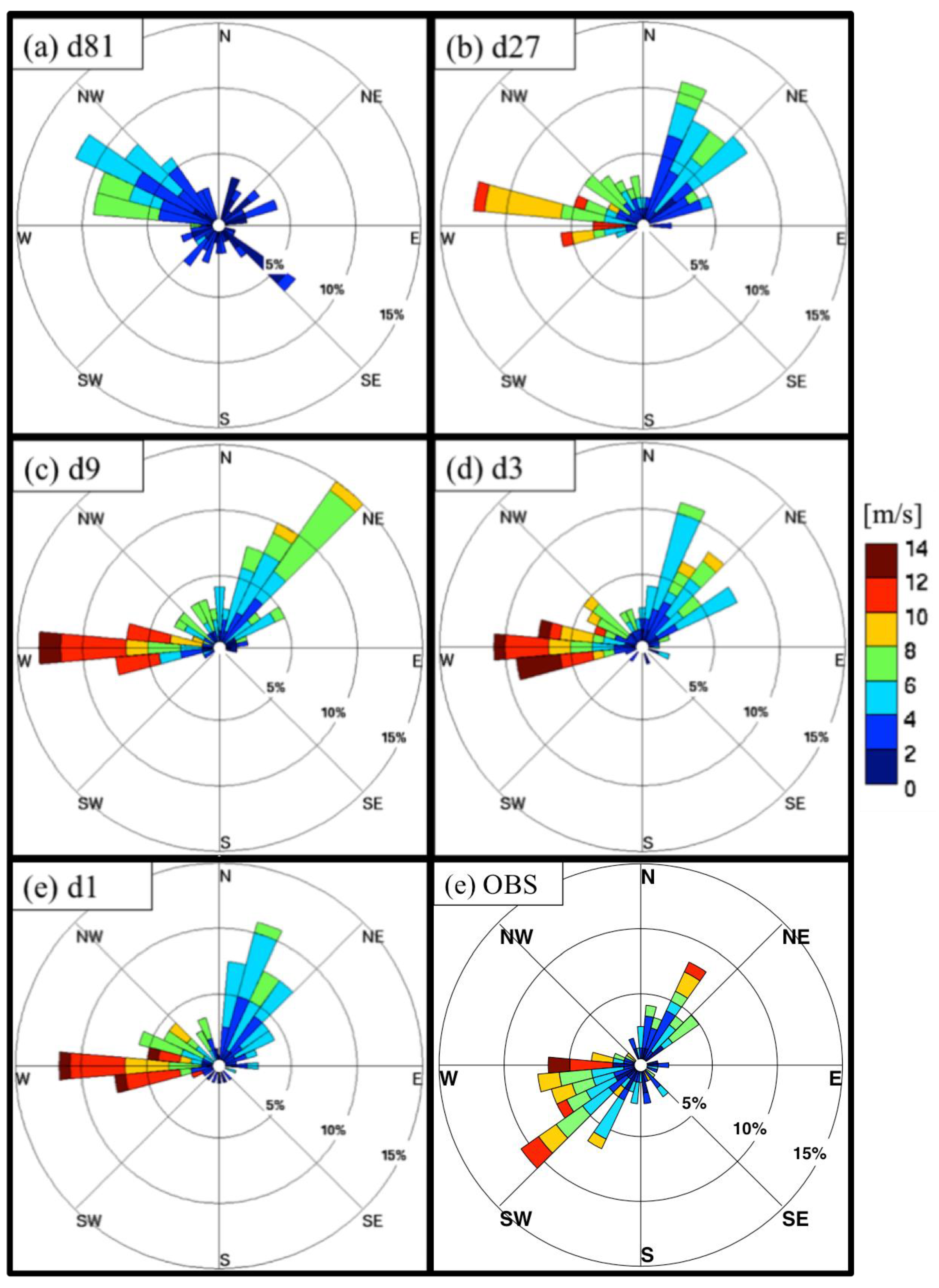

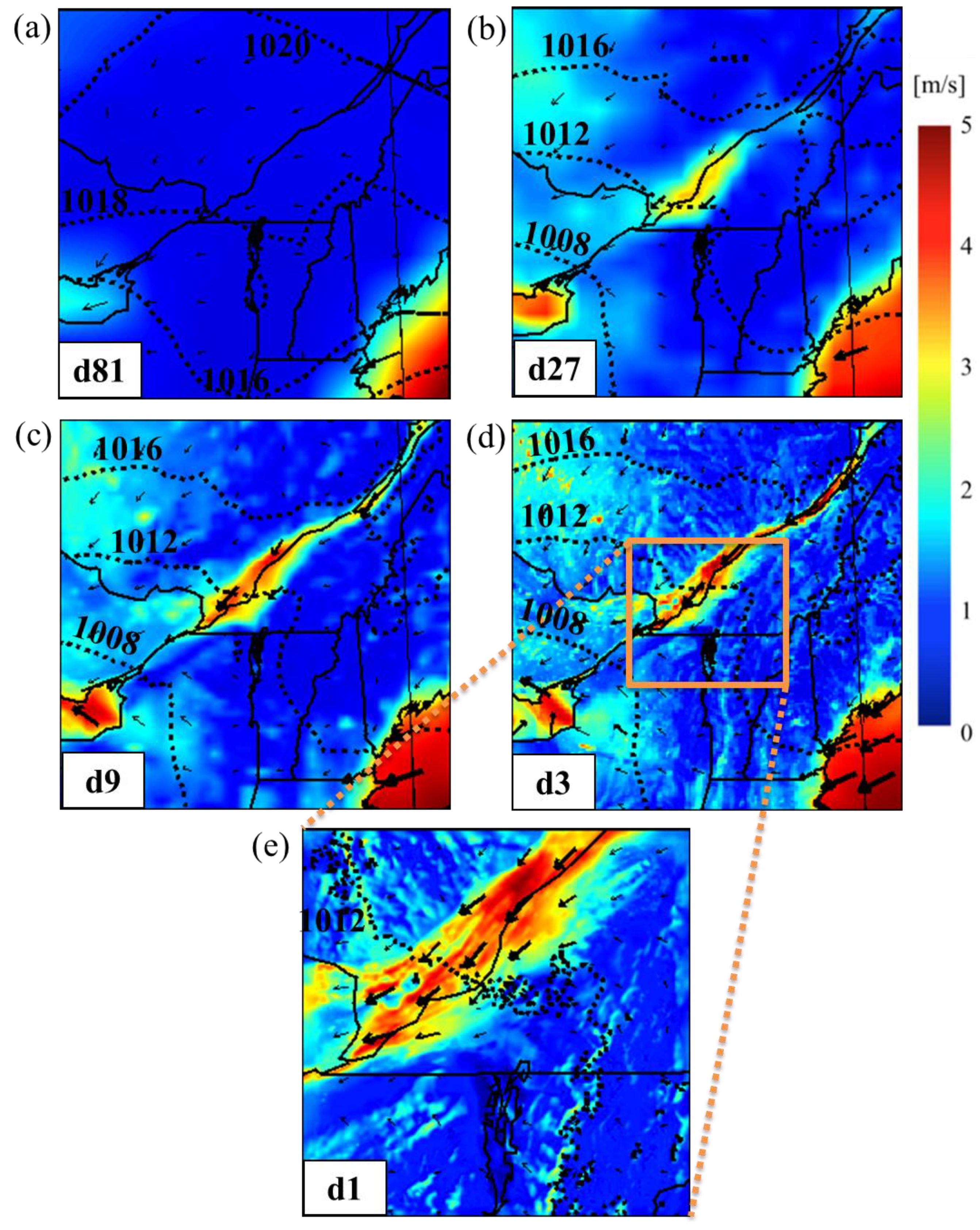

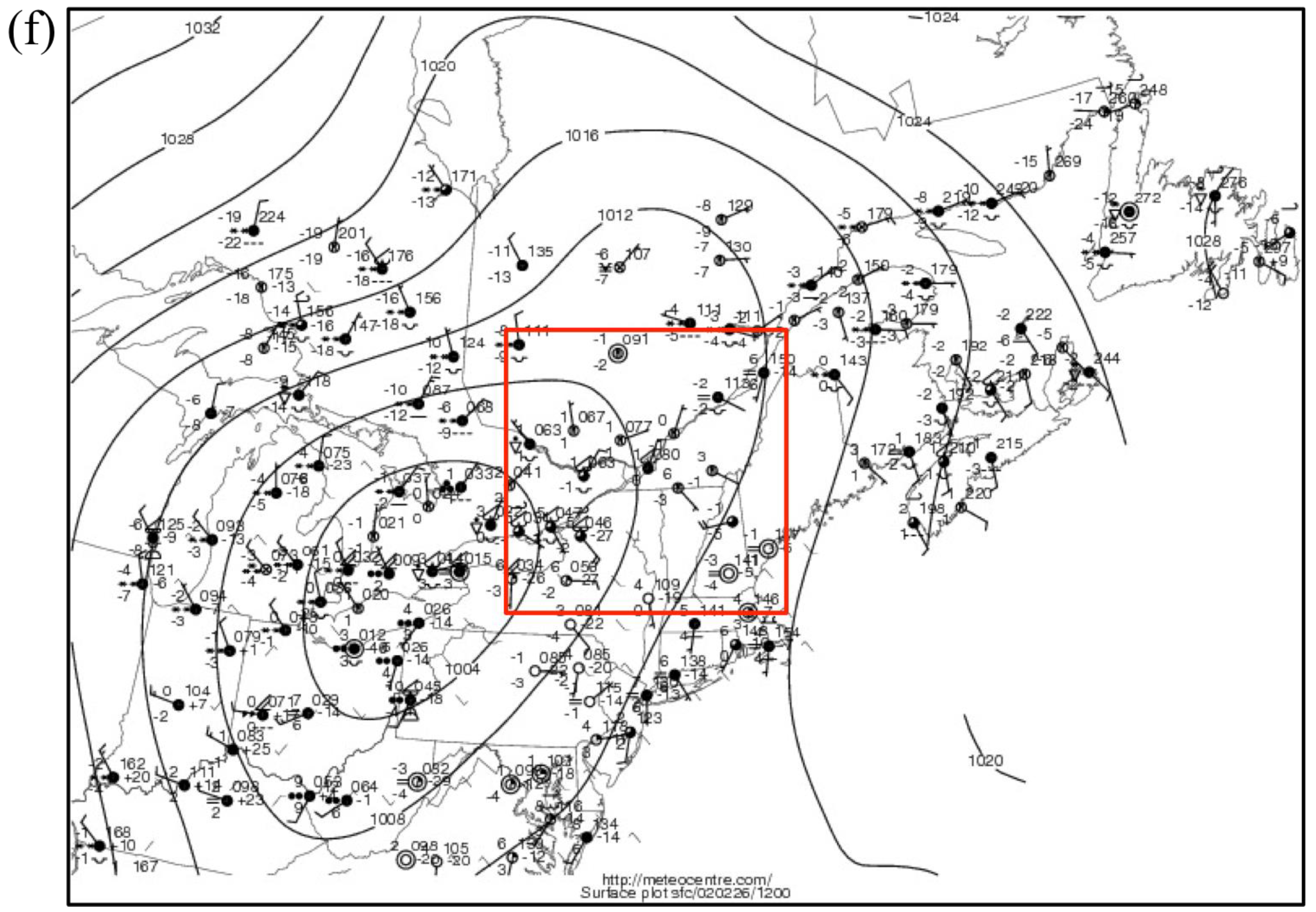

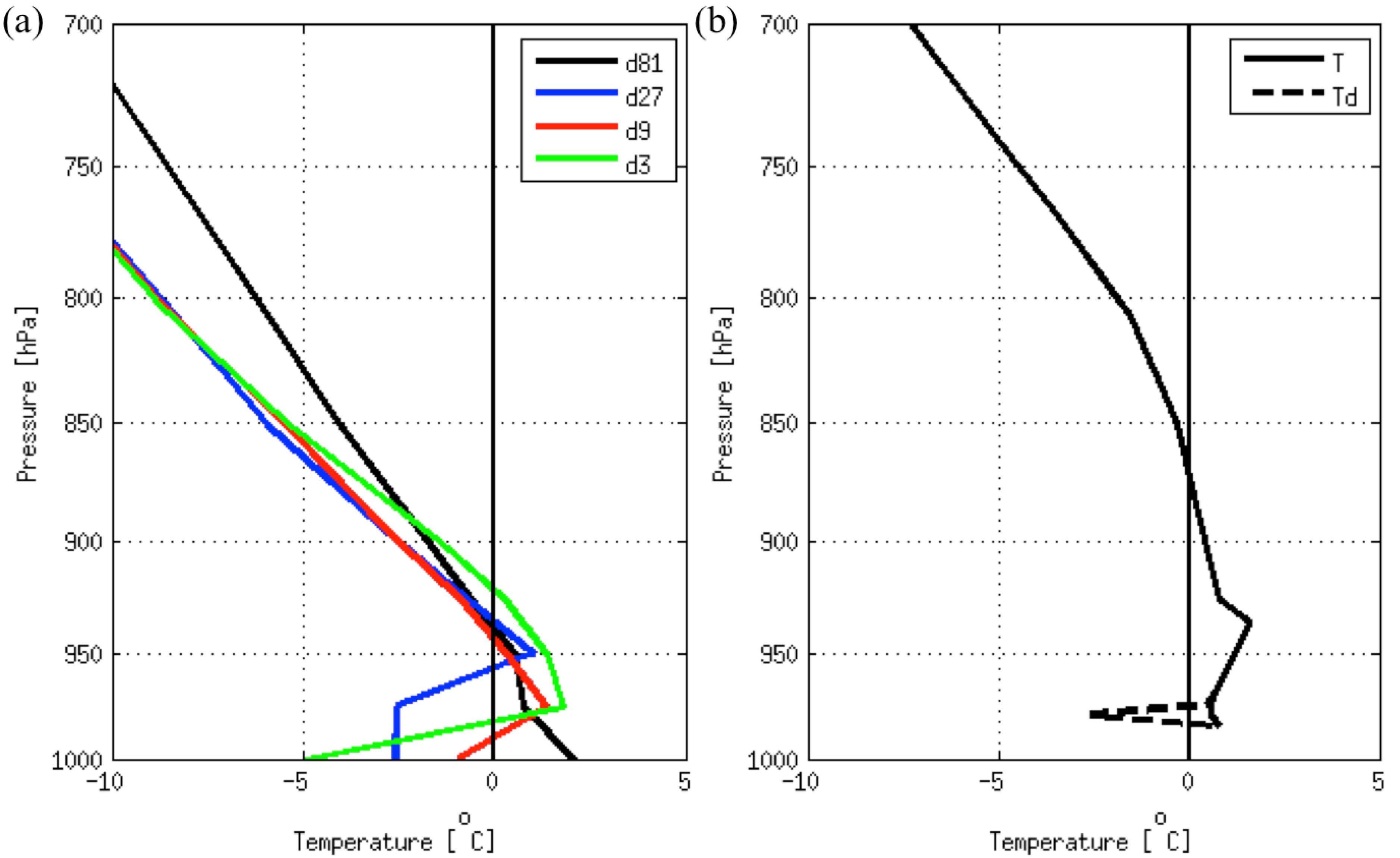

3.2. St. Lawrence River Valley Features

4. Application to a Regional Climate Modeling Approach

5. Summaries and Conclusions

- The high-resolution simulations displayed marked improvements over the coarser ones, because the mountain ranges in the vicinity of the SLRV are simply not resolved at coarser resolution. Compared to the coarser-resolution simulation (d81), the CRCM5 topography was better defined in the d3 and d1 simulations, and the heights of some of the highest peaks were doubled at the finer resolutions.

- Kinetic energy spectra showed that small scales are better resolved in the finer-resolution simulations and that the effective resolution wavelength is about seven times the grid spacing of each simulation in the cascade.

- The high-resolution simulations succeeded in reproducing the known propensity of low-level winds to blow along the SLRV, despite the modest height of the bordering Laurentian and Appalachian mountain ranges. These valley winds were simply not resolved in the coarser-resolution simulations. For example, the wind direction at a given grid point shifted by 180° depending on the resolution.

- The vertical temperature structure is also affected by the model's horizontal resolution. For example, a simulation with a mesh of 81 km would lead to rain at the surface, whereas the 3‑km one would be associated with freezing rain. In particular, no refreezing layer or temperature inversion is found at low levels in the simulation with a grid spacing of 81 km. Furthermore, the depth and temperature of the melting layer, which are directly linked to the type of precipitation, vary significantly across model resolutions.

- A pragmatic theoretical cost argument has been developed, suggesting a climatological framework in which the cascade method is used to study specific high-impact weather events with very high-resolution regional climate modeling.
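As a rough illustration of the effective-resolution rule of thumb stated in the conclusions above (an effective resolution wavelength of about seven times the grid spacing), the following minimal Python sketch tabulates that wavelength for the grids named in this section. The function and variable names are hypothetical, the factor of 7 is only the approximate value quoted in the text, and the 1-km spacing for d1 is an assumption inferred from the naming of d81 and d3. Any intermediate grids of the cascade are not listed in this section and are therefore omitted.

```python
# Approximate ratio of effective resolution wavelength to grid spacing,
# taken from the "about seven times" statement in the conclusions.
EFFECTIVE_RESOLUTION_FACTOR = 7.0


def effective_wavelength_km(grid_spacing_km, factor=EFFECTIVE_RESOLUTION_FACTOR):
    """Return the approximate effective resolution wavelength (km)."""
    if grid_spacing_km <= 0:
        raise ValueError("grid spacing must be positive")
    return factor * grid_spacing_km


# Grid spacings (km) of the simulations named in the text.
# d1 = 1 km is an assumption based on the d81 / d3 naming pattern.
cascade_km = {"d81": 81.0, "d3": 3.0, "d1": 1.0}

effective = {name: effective_wavelength_km(dx) for name, dx in cascade_km.items()}

for name, dx in cascade_km.items():
    print(f"{name}: grid spacing {dx:g} km, "
          f"effective wavelength about {effective[name]:g} km")
```

Under these assumptions, features with wavelengths shorter than roughly 567 km are not well represented on the 81-km grid, whereas the 3-km and 1-km grids reach down to about 21 km and 7 km, respectively. This is consistent with the valley-scale flow appearing only in the finer simulations.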

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Cholette, M.; Laprise, R.; Thériault, J.M. Perspectives for Very High-Resolution Climate Simulations with Nested Models: Illustration of Potential in Simulating St. Lawrence River Valley Channelling Winds with the Fifth-Generation Canadian Regional Climate Model. Climate 2015, 3, 283-307. https://doi.org/10.3390/cli3020283
