1. Introduction

Recently, with the development of machine-to-machine communication technologies such as Ethernet, Wi-Fi, Bluetooth, and ZigBee, people can experience various user scenarios that require seamless connections between devices [1]. These technologies have enabled different kinds of devices to interact with each other, thereby supporting remote monitoring and control of smart objects or devices [1,2]. In addition, the recent growth of sensors and actuators, together with a mature network infrastructure, has laid the foundation of the Internet of Things (IoT). In the era of IoT, devices can exchange data, analyze their environment, make decisions, and perform various operations without users’ explicit instructions.

To realize the ultimate goal of IoT, where different types of devices communicate with each other to generate and provide intelligent services, standardization for interoperability between devices is considered one of the main success factors of the IoT ecosystem. IoT standards [3,4] define how IoT devices should interact with each other to discover and manage devices, collect and share information, and perform physical actuations.

The usability of a system, that is, how easily users can interact with it, is also an important factor in expanding an IoT ecosystem. However, methods to improve the usability of IoT platforms/systems have not been intensively studied. Recent commercial IoT platforms/systems such as IBM Watson IoT Platform [5], Thing+ [6], Amazon Web Services (AWS) IoT [7], OpenHAB [8], Samsung SmartThings [9], and the ARTIK platform [10] provide interfaces to manage, monitor, and control sets of buildings, locations, and devices through their mobile or web-based applications.

Figure 1a depicts a web-based interface supported by OpenHAB [8] that shows a list of installed devices. The list in Figure 1a shows that approximately 20 devices are installed in “Home”, some of which are sensors and the rest actuators. However, owing to the inefficient design of the web application interface, it is not intuitive for users to recognize the name, type, and installed location of each IoT device.

Figure 1b shows a mobile interface provided by SmartThings [9], which presents the installed devices in a simple list. Even with a mobile application, the usability of an IoT platform is likely to decrease drastically as the number of installed devices increases, because users cannot easily choose the IoT device they want to observe and manipulate.

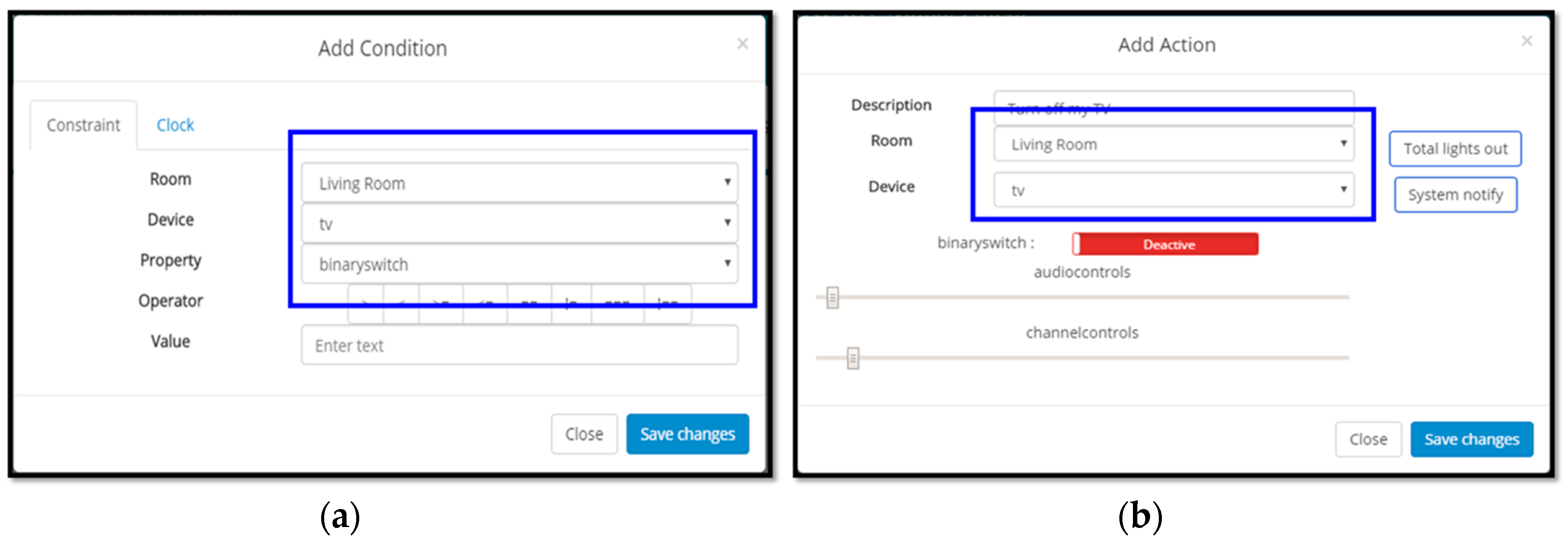

Furthermore, recent advances in the IoT ecosystem enable interoperability among users, processes, data, and devices, which has increased the demand for more complex IoT scenarios such as “Turn off all lights when nobody is at home” or “If a room is cold, turn on the air conditioner to warm it up”. To handle such complex queries, several commercial IoT platforms and previous studies have tried to develop efficient rule engines or data structures for IoT environments [11,12,13,14] and to provide rule-based application services through their web or mobile interfaces [7,9]. However, these previous works focused on introducing functionality and did not consider usability when designing interfaces for composing IoT rules among smart objects such as sensors, actuators, and applications. With list- or grid-type interfaces, it remains difficult to find and select an appropriate object for the condition and action components of an IoT rule when the number of installed devices is large.
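To make the condition and action components of an IoT rule concrete, the following Python sketch encodes the example rule “Turn off all lights when nobody is at home” as a condition/action pair and evaluates it against a snapshot of device states. It is a minimal illustration under stated assumptions, not the rule syntax of any platform cited above: the device IDs, the `type`, `present`, and `on` attributes, and the `Rule`/`evaluate` helpers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical home state: device ID -> attribute dictionary.
State = Dict[str, Dict[str, object]]


@dataclass
class Rule:
    name: str
    condition: Callable[[State], bool]  # the "if" component of the rule
    action: Callable[[State], None]     # the "then" component of the rule


def nobody_home(state: State) -> bool:
    # True when no presence sensor reports a person.
    return not any(attrs.get("present", False)
                   for attrs in state.values() if attrs.get("type") == "presence")


def turn_off_lights(state: State) -> None:
    # Switch off every device whose type is "light".
    for attrs in state.values():
        if attrs.get("type") == "light":
            attrs["on"] = False


def evaluate(rules: List[Rule], state: State) -> List[str]:
    """Apply every rule whose condition holds; return the names of fired rules."""
    fired = []
    for rule in rules:
        if rule.condition(state):
            rule.action(state)
            fired.append(rule.name)
    return fired


if __name__ == "__main__":
    home: State = {
        "presence-1": {"type": "presence", "present": False},
        "light-living": {"type": "light", "on": True},
        "light-kitchen": {"type": "light", "on": True},
    }
    rules = [Rule("lights-off-when-empty", nobody_home, turn_off_lights)]
    print(evaluate(rules, home))                         # ['lights-off-when-empty']
    print([d for d, a in home.items() if a.get("on")])   # []
```

The sketch evaluates rules once over a static state; a real rule engine would also re-evaluate rules when sensors report new data and resolve conflicts between rules. The usability problem discussed above lies upstream of this logic: the user must first locate the right presence sensor and lights in a long list before such a rule can be composed.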

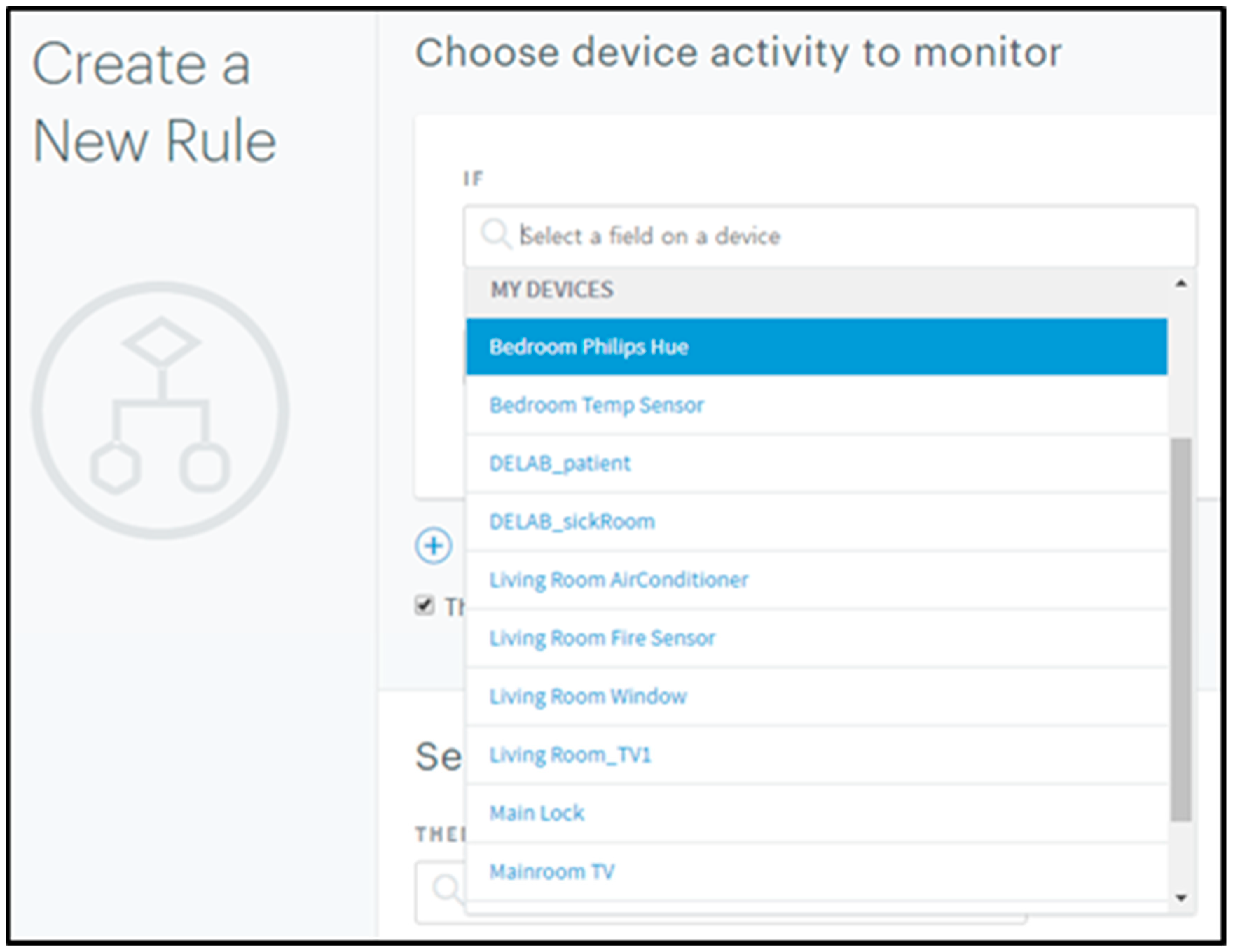

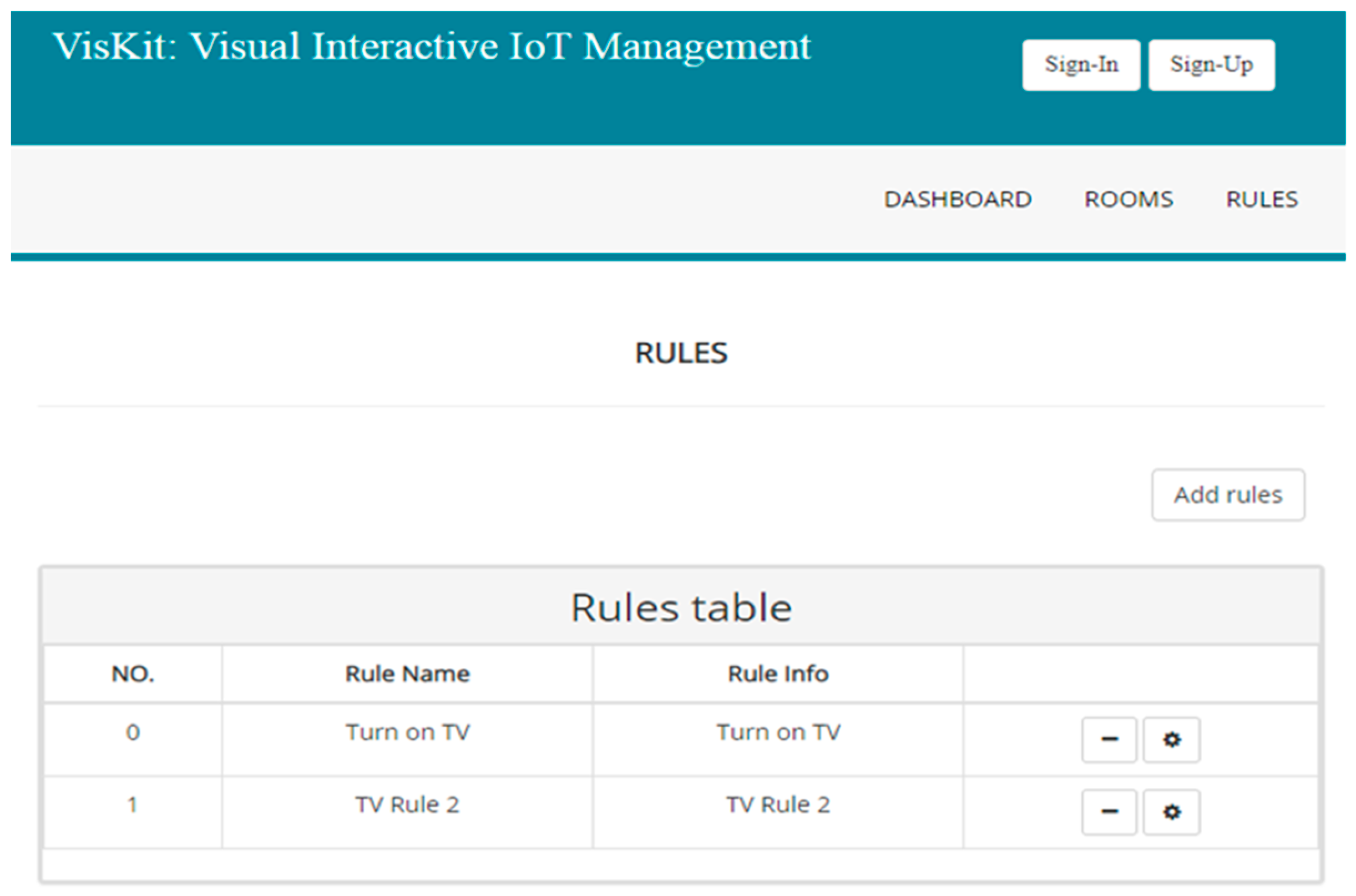

Figure 2 shows the web interface for rule composition in ARTIK Cloud, which supports only a simple list-based view. In this design, users need to scroll up and down to locate a target device, which is inconvenient and time-consuming.

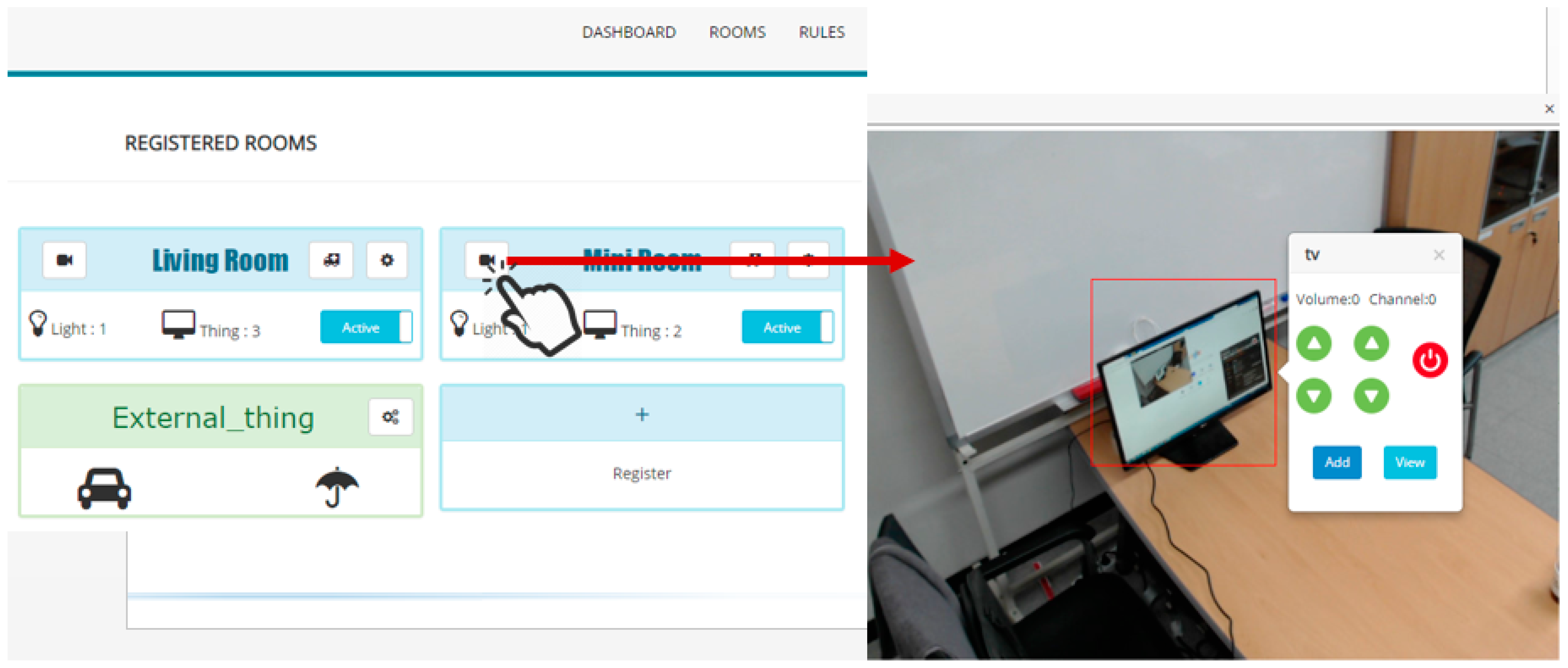

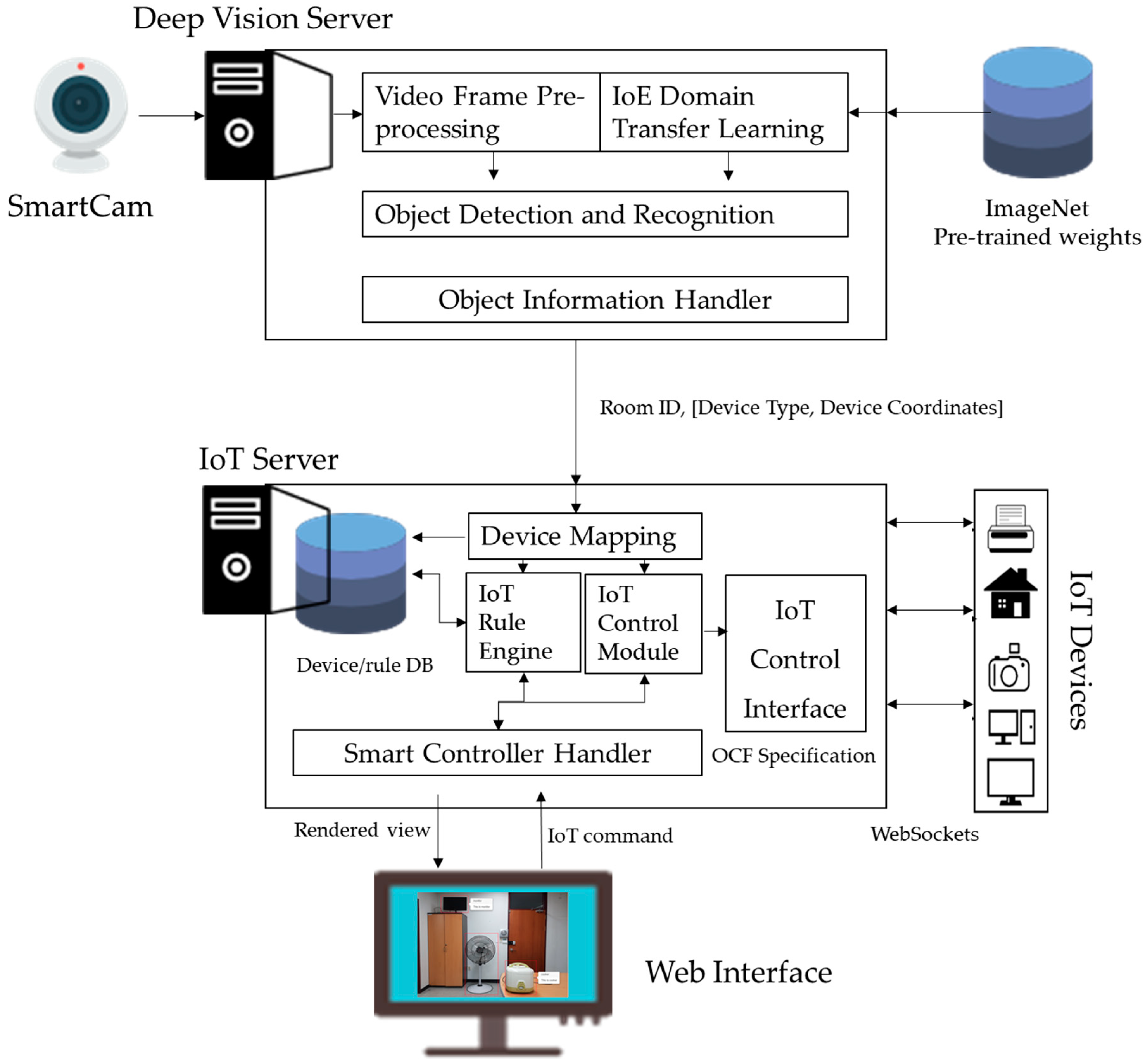

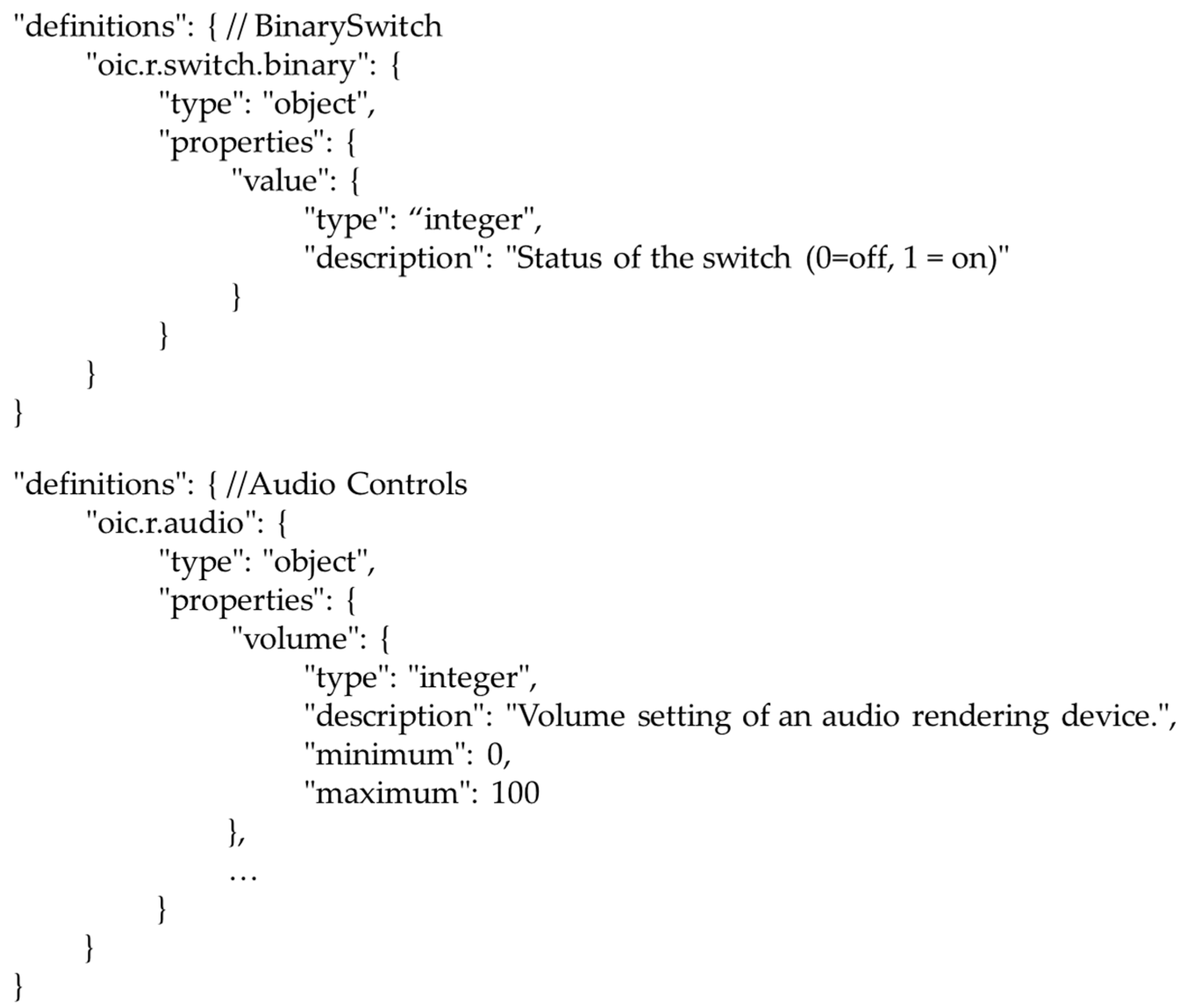

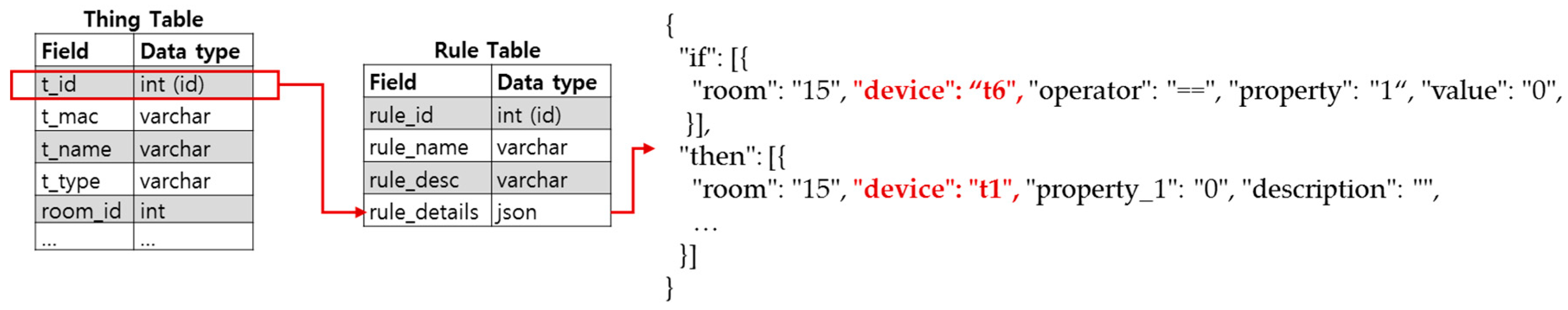

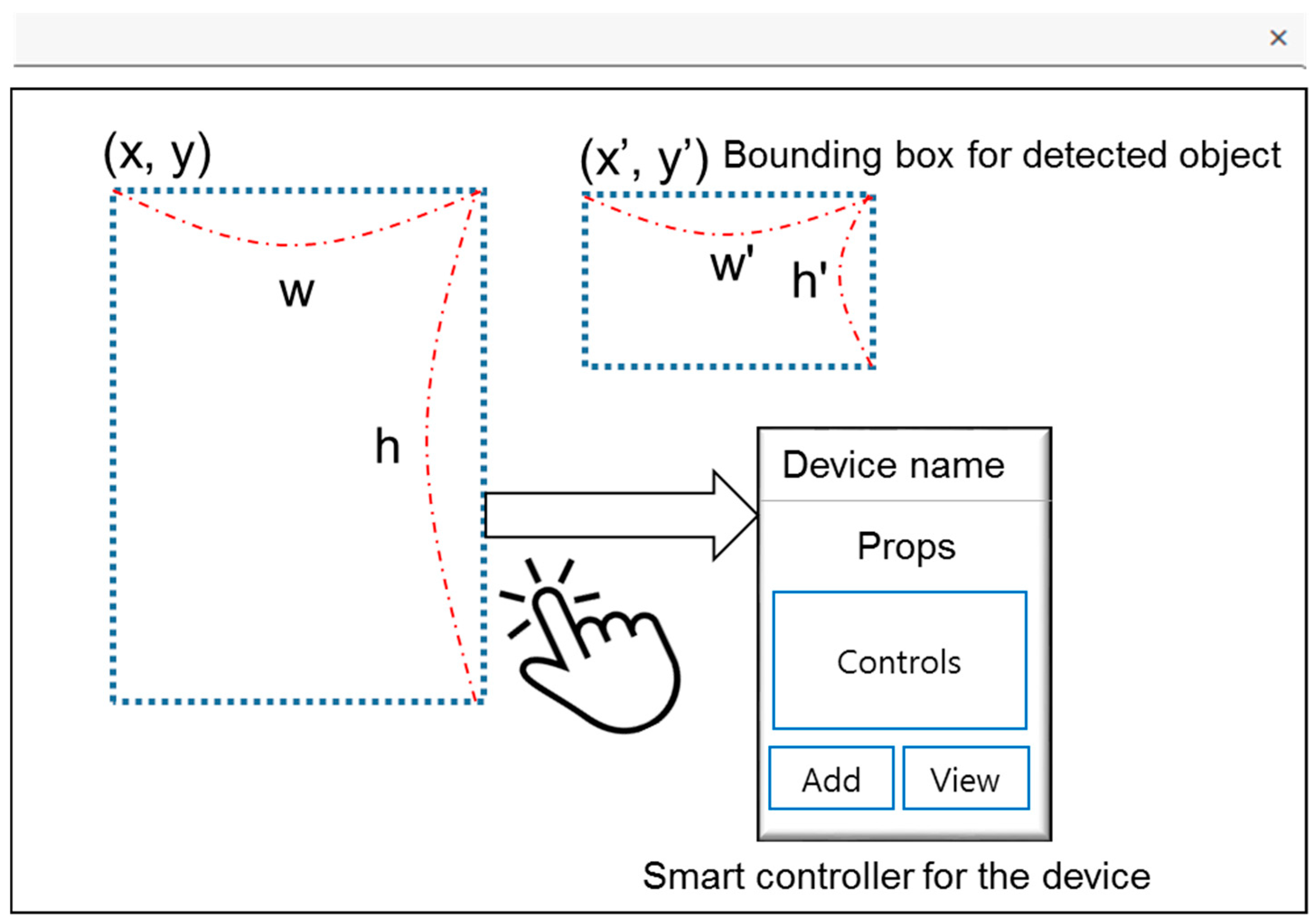

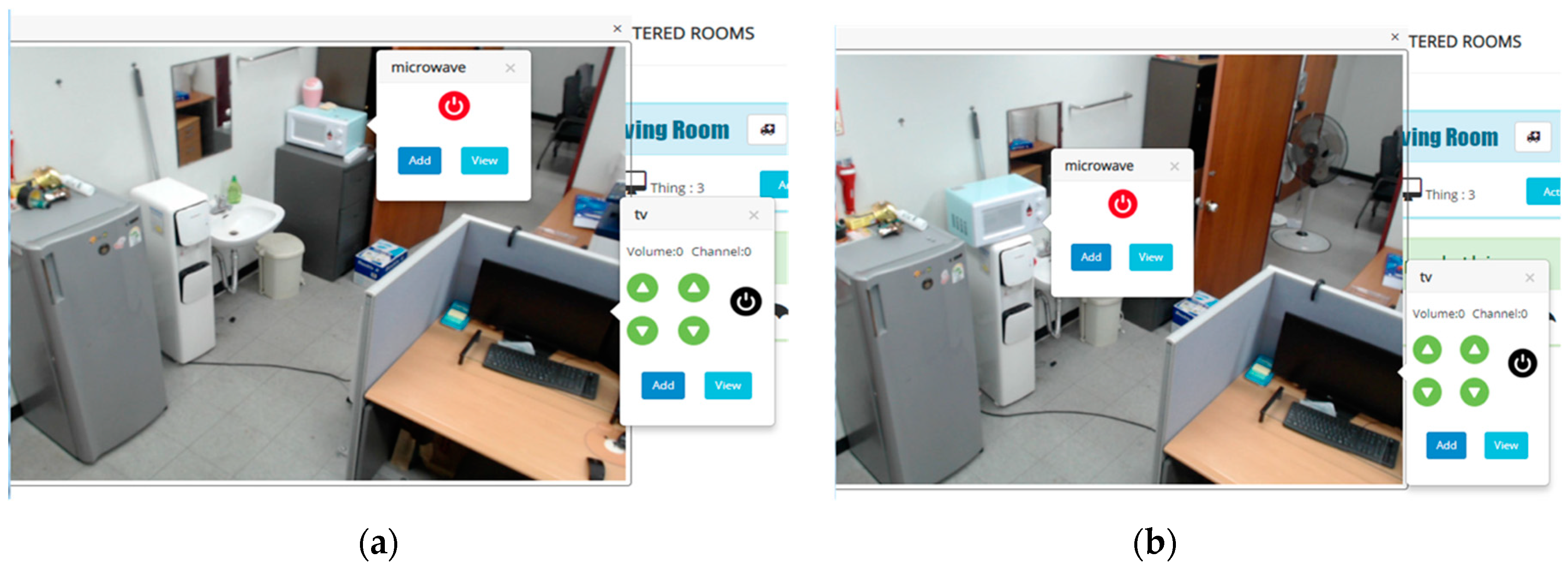

To address the limitations above, we introduce an interactive IoT management system with deep object detection techniques. The proposed system is equipped with a set of smart cameras that monitor the indoor status of a room in real time. With the proposed system, users can not only access video streams to observe the indoor status but also directly control the smart devices found in the video streams. Specifically, the proposed system automatically analyzes the video streams to detect smart devices and recognize their categories. When a user touches a detected object in the video stream, a context menu (i.e., a smart controller) for the object opens to provide various IoT-related services such as monitoring, control, and rule composition. Through the proposed system, users can locate the target IoT device more intuitively and quickly.
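The touch interaction described above amounts to a hit test between the touch coordinates and the bounding boxes reported by the object detector. The Python sketch below shows one way to perform it under assumed conventions: the `Detection` structure, the category names, the 0.5 confidence threshold, and the `CONTROLLERS` menus are hypothetical and are not taken from the proposed system’s implementation. When boxes overlap, the sketch picks the smallest box, on the assumption that the smaller object is the more specific target.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Detection:
    label: str                               # category predicted by the detector
    confidence: float                        # detector score in [0, 1]
    box: Tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max) in pixels

    def contains(self, x: float, y: float) -> bool:
        x0, y0, x1, y1 = self.box
        return x0 <= x <= x1 and y0 <= y <= y1

    def area(self) -> float:
        x0, y0, x1, y1 = self.box
        return max(0.0, x1 - x0) * max(0.0, y1 - y0)


# Hypothetical smart-controller menus, keyed by device category.
CONTROLLERS: Dict[str, List[str]] = {
    "tv": ["power", "volume", "channel", "rules"],
    "microwave": ["power", "timer", "rules"],
    "refrigerator": ["temperature", "door status", "rules"],
}


def pick_controller(detections: List[Detection], x: float, y: float,
                    min_conf: float = 0.5) -> Optional[Tuple[Detection, List[str]]]:
    """Return the touched detection and its menu, or None if nothing controllable was hit."""
    hits = [d for d in detections
            if d.confidence >= min_conf and d.label in CONTROLLERS and d.contains(x, y)]
    if not hits:
        return None
    # When several boxes contain the touch point, prefer the smallest one.
    target = min(hits, key=lambda d: d.area())
    return target, CONTROLLERS[target.label]


if __name__ == "__main__":
    frame = [Detection("tv", 0.92, (100, 50, 400, 250)),
             Detection("microwave", 0.81, (300, 200, 380, 260))]
    print(pick_controller(frame, 350, 230)[1])  # ['power', 'timer', 'rules']
```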

The rest of this paper is organized as follows. Section 2 briefly reviews previous studies. Section 3 provides the details of the proposed interactive IoT management system with deep object detection methods. In Section 4, the prototype implementation and the results of user studies are discussed. Finally, Section 5 provides the conclusions and future research directions.

2. Related Work

Various products and studies have provided IoT applications and services with cloud support. In this section, we briefly review these previous works and compare them with the proposed work from various perspectives.

Recently, global companies such as Amazon, IBM, and Samsung have converged on a strategy of offering IoT services, platforms, and hardware modules [15]. AWS IoT [7] supports interaction between devices and AWS services through a cloud-based architecture. Connected devices can communicate with each other using standard data protocols such as Message Queuing Telemetry Transport (MQTT), HTTP, or WebSockets. In addition, AWS IoT provides a rule engine interface for building applications that act on data generated by IoT devices. IBM Watson IoT [5] also provides a cloud-based IoT management system with various analytics services (e.g., real-time data visualization). Messaging protocols such as MQTT and HTTP are supported for communication among users, IoT devices, applications, and data stores. IBM Watson IoT also provides a rule composition system to set conditions and actions for IoT devices or applications. The Samsung ARTIK platform [10] provides ARTIK IoT hardware modules as well as a cloud service that offers interoperability between devices, applications, and services. To ease the development of IoT applications and services, ARTIK supports various connectivity protocols (e.g., Ethernet, Wi-Fi, ZigBee, and Thread) and data protocols such as REST/HTTP, MQTT, and WebSockets. Similar to AWS IoT and IBM Watson IoT, Samsung ARTIK Cloud provides an IoT rule engine interface to add and edit rules that invoke actions when their conditions are met. In particular, ARTIK Cloud provides a device simulator feature that makes developing and testing new IoT services easier and more straightforward.
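Because MQTT recurs across these platforms, it may help to recall how an MQTT broker decides which subscribers receive a published message. The sketch below implements, from scratch, the topic-filter matching rules of the MQTT 3.1.1 specification: “/” separates levels, “+” matches exactly one level, “#” matches the remaining levels including the parent level, and filters beginning with a wildcard do not match topics starting with “$”. It is illustrative only and is not code from AWS IoT, IBM Watson IoT, or ARTIK; the topic names in the usage example are hypothetical.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription topic filter."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Filters starting with a wildcard must not match topics beginning with '$' (e.g. $SYS/...).
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            # '#' is only valid as the last level; it matches the parent level and everything below.
            return i == len(f_levels) - 1
        if i >= len(t_levels):
            return False  # the filter has more levels than the topic
        if level != "+" and level != t_levels[i]:
            return False  # literal level mismatch ('+' matches any single level)
    # Without '#', the filter and the topic must have the same number of levels.
    return len(f_levels) == len(t_levels)


if __name__ == "__main__":
    print(topic_matches("home/+/temperature", "home/livingroom/temperature"))  # True
    print(topic_matches("home/#", "home"))                                     # True
    print(topic_matches("home/+", "home/kitchen/light"))                       # False
    print(topic_matches("#", "$SYS/broker/uptime"))                            # False
```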

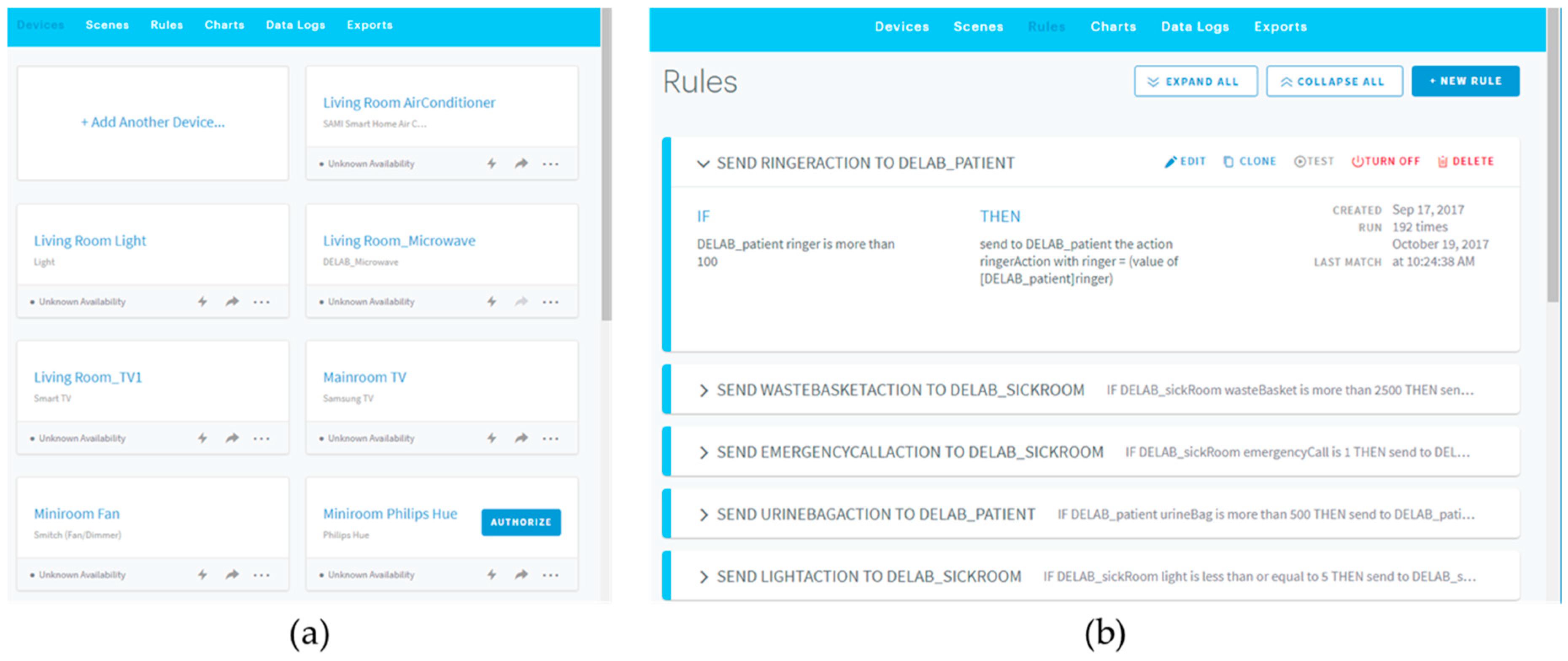

Recent IoT platforms, including ARTIK, AWS IoT, and IBM Watson IoT, provide GUI-based web applications for device and rule management, as presented in Figure 3. As stated in the Introduction, however, the current web-based GUI applications of commercial IoT platforms do not consider usability; hence, managing IoT devices and rules with them is neither intuitive nor efficient. For example, the device management interface of the ARTIK Cloud web application, shown in Figure 3a, provides only a long list of installed devices, each of which displays a name, a type, and a few buttons. As the number of installed devices grows, users must spend much more time locating their target devices.

Figure 3b presents the rule management web interface of ARTIK Cloud. Similar to the device management interface, the rule management interface provides a list of generated rules, each of which displays a name and descriptions of its rule components based on the ARTIK Cloud rule syntax. Consequently, users have to spend more time as the number and variety of devices increase.

Recent academic studies have proposed various models, methods, and architectures to define and integrate IoT components for the success of IoT ecosystems. Garcia et al. [16] defined a theoretical framework for smart objects along different dimensions of intelligence, classifying each IoT device by its level of intelligence, location of intelligence, and integration level of intelligence. The authors of [16] claimed that, along the location-of-intelligence dimension, smart objects whose intelligence is provided through the network are the most important device type, since such objects can become ever more intelligent through the network. Molano et al. [17] analyzed and reviewed the relationships among IoT, social networking services, cloud computing, and Industry 4.0. The authors of [17] presented a five-layer architecture for an IoT platform comprising sensing, database, network, data response, and user layers. They also introduced a meta-model of system integration that defines the responsibilities of and relationships among sensors, actuators, rules, and social networks. The system proposed in this paper consists of the core components identified in [17] (i.e., sensors, actuators, rules, etc.) and assigns an appropriate role to each component according to the meta-model presented in [17]. Refs. [18,19] presented efficient and interactive device and service management models for cloud-based IoT systems. Dinh et al. [18] proposed an interactive model for the sensor-cloud that efficiently provides on-demand sensing services to multiple applications with different requirements. The sensor-cloud model was designed to optimize the resource consumption of physical sensors as well as the bandwidth consumption of sensing traffic. Cirani et al. [19] introduced the virtual IoT Hub replica, a cloud-based entity that replicates all the features of an actual IoT Hub and that external clients can query to access resources. Their experimental results demonstrated the scalability and feasibility of this architecture for managing resources in IoT scenarios.

The aforementioned studies [16,17,18,19], however, focused on the efficient management of and interaction among smart objects (i.e., sensors and actuators) rather than on the interaction between users and IoT services. The platforms and systems presented in [20,21,22,23,24] suggest various approaches to providing IoT services that take the interaction between users and devices into account. Refs. [20,21] proposed IoT-based smart home automation and monitoring systems, in which sensor and actuator nodes built on microcomputers such as Raspberry Pi and Arduino are installed in a home. The collected data are transmitted to a cloud server and subsequently processed according to the user’s requests. However, these works also suffer from the limitations of simple list-style interfaces. Memedi et al. [22] presented an IoT-based system for better management of Parkinson’s disease that gives patients insights into symptom and medication information through mobile interfaces. In particular, the mobile interface was designed according to usability heuristics for the elderly, such as text size, color and contrast, navigation and location, mouse use, and page organization. Similarly, Wu et al. [23] and Hong et al. [24] proposed GUI-based applications to monitor the status of sensor nodes in the safety and health domain. However, [23] does not support manual or rule-based actuation; therefore, its usefulness is limited. Moreover, since [24] relies on ARTIK Cloud as its backend, it inherits the problems and limitations of the ARTIK Cloud interface described above. On the other hand, Zhao et al. [25] and Heikkinen et al. [26] proposed methodologies and architectural frameworks to build and support time-critical applications in a cloud environment.

Even though various studies have addressed the integration of IoT services, applications, and devices, improving the usability of system interfaces with a focus on the interaction between users and systems remains challenging. The remainder of this paper describes how the proposed work addresses this issue through (1) the integration of an IoT platform server with deep learning-based object detection and (2) a web-based interface with smart controllers.

5. Discussion and Conclusions

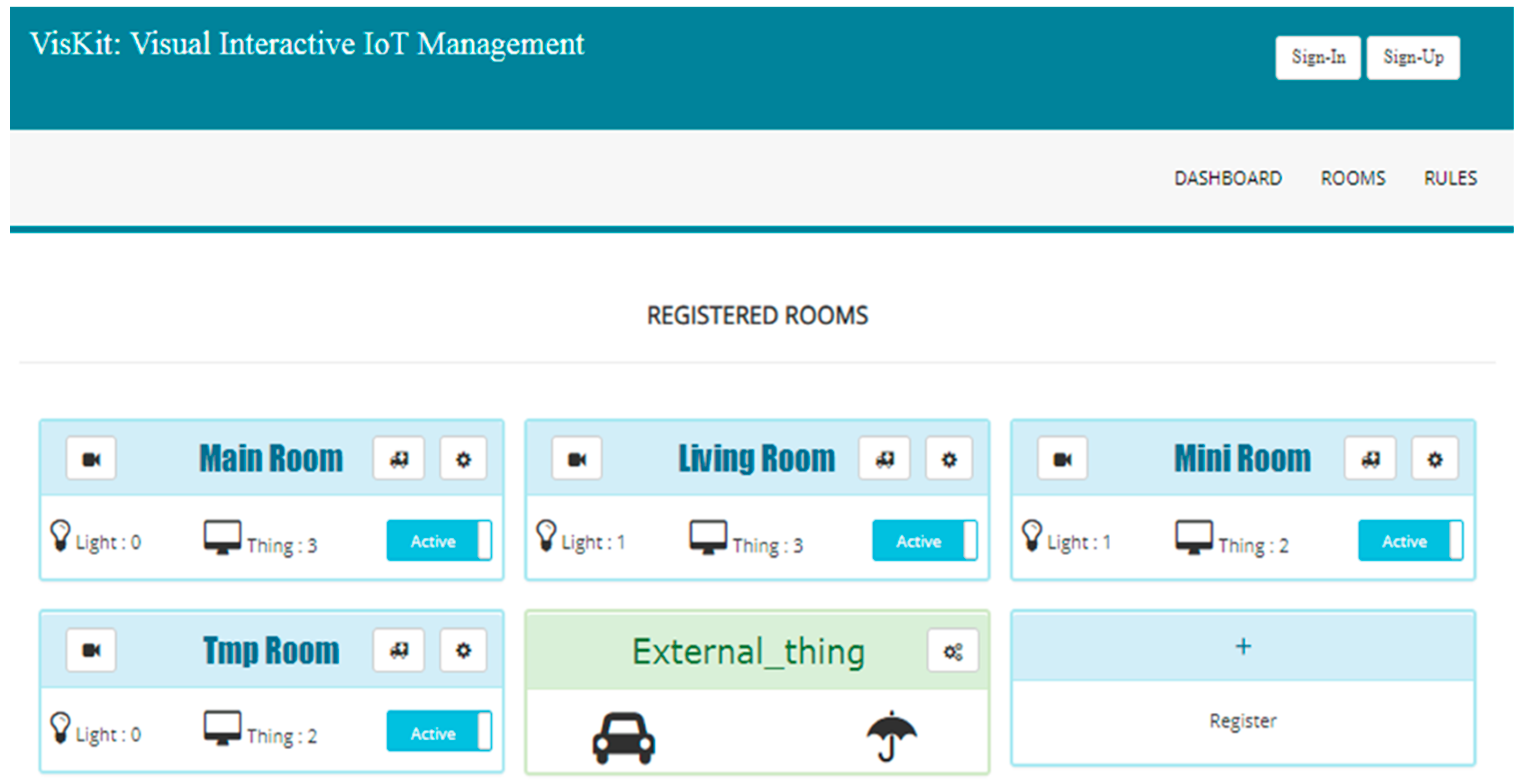

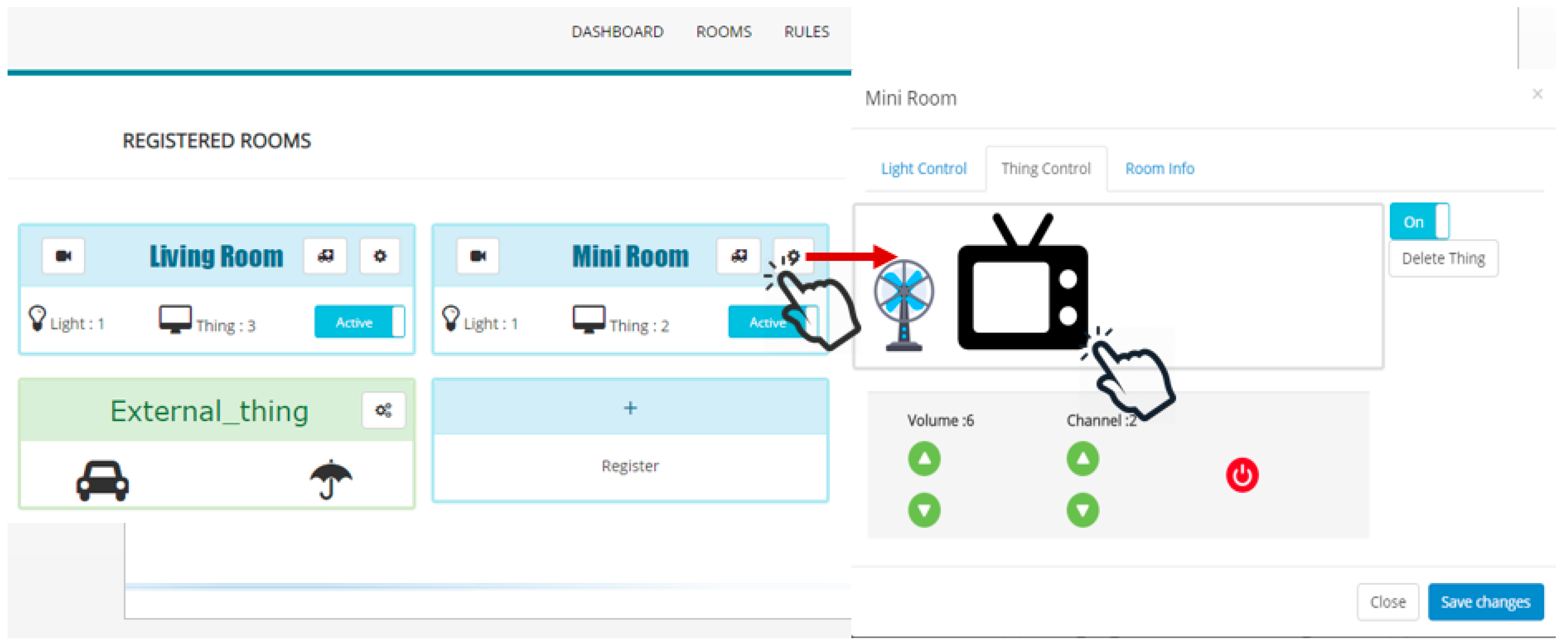

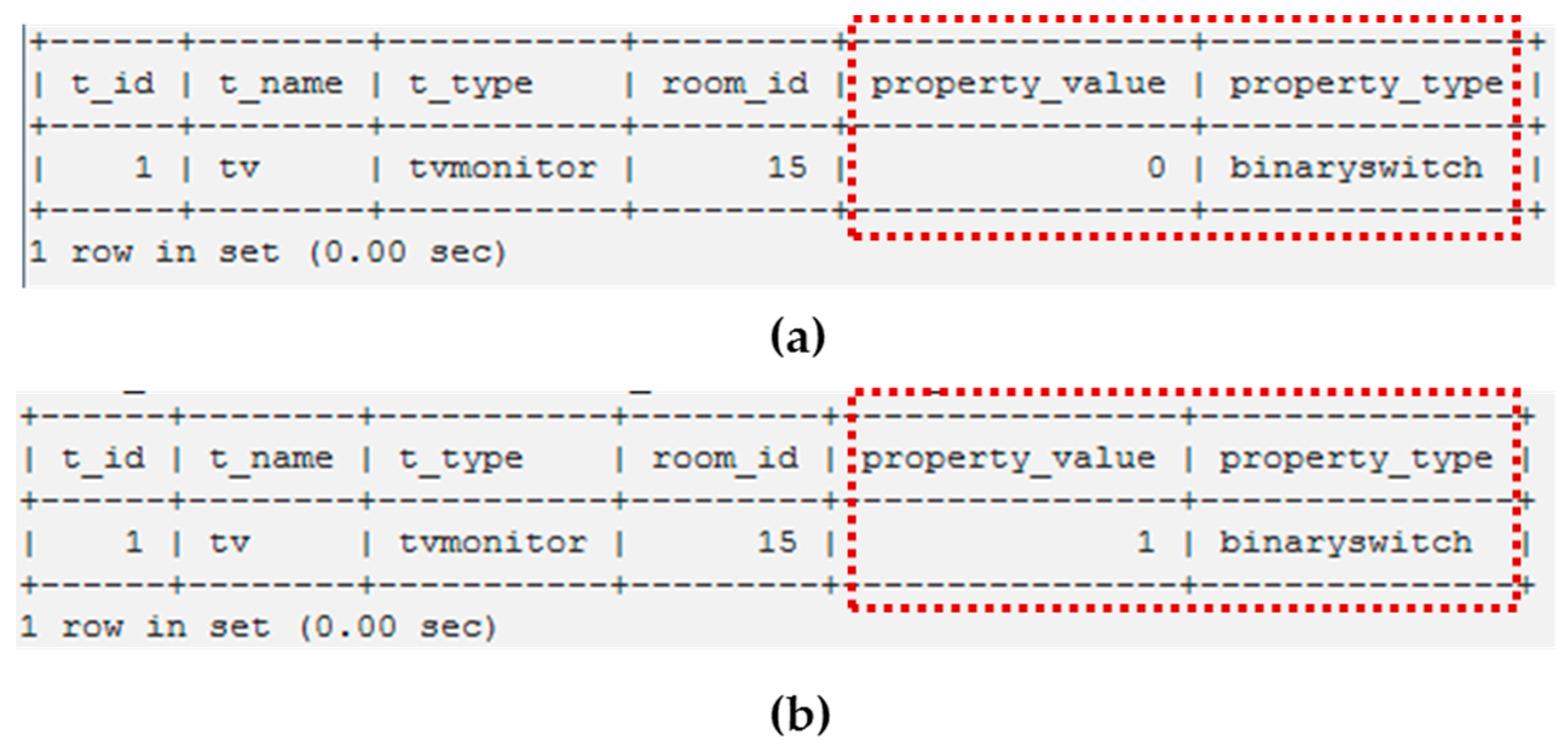

In this paper, we proposed an interactive visual interface for intuitive and efficient IoT device management. The proposed system captures the indoor status of a room and recognizes IoT devices to provide smart controllers on the video stream. Through the web interface, users can not only monitor and control the IoT devices installed in the room but also manage the IoT rules of the selected devices.

The main strengths of this work can be summarized as follows. First, the proposed system supports both monitoring the status of sensor and actuator devices installed in a room and handling actuations under various conditions. In addition to physical devices, we plan to expand the scope of the system to support external services and applications such as social network services, third-party IoT cloud solutions, and IFTTT applets. Second, the main functionalities of the proposed system are provided through a graphical user interface for improved usability. As stated in the Introduction, a GUI-based presentation can overcome the limitations of traditional text-based grid- or list-style interfaces. Third, through the integration of the IoT platform server with a deep learning-based object detection approach, the smart controllers are configured according to each device type. The prototype implementation was shown to track an IoT device with the deep object detection method, so that the smart controllers are correctly activated even if the device’s location changes. Finally, as demonstrated by the experimental results, the video view with smart controller interfaces improved the performance of the system in terms of both efficiency and user satisfaction. The quantitative experimental results showed that the proposed system with smart controllers achieved an average performance gain of 40% in terms of efficiency. The results of the user survey also confirmed that the proposed system is more usable than competing solutions.
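The text above states that the prototype keeps smart controllers attached to a device after the device is moved, but it does not detail the tracking step. As one simple possibility, and explicitly a swapped-in technique rather than the authors’ method, the sketch below re-associates known device IDs with new detections of the same category between consecutive frames by greedy intersection-over-union (IoU) matching. The device IDs, labels, and the 0.3 IoU threshold are assumptions.

```python
from typing import Dict, List, Set, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def update_tracks(tracks: Dict[str, Tuple[str, Box]],
                  detections: List[Tuple[str, Box]],
                  min_iou: float = 0.3) -> Dict[str, Tuple[str, Box]]:
    """Greedily re-attach device IDs to new detections of the same label."""
    pairs: List[Tuple[float, str, int]] = []
    for dev_id, (label, old_box) in tracks.items():
        for j, (det_label, det_box) in enumerate(detections):
            if det_label == label:
                score = iou(old_box, det_box)
                if score >= min_iou:
                    pairs.append((score, dev_id, j))
    pairs.sort(reverse=True)  # consider the best overlaps first
    updated = dict(tracks)    # unmatched devices keep their last known box
    used_ids: Set[str] = set()
    used_dets: Set[int] = set()
    for _, dev_id, j in pairs:
        if dev_id in used_ids or j in used_dets:
            continue
        updated[dev_id] = detections[j]
        used_ids.add(dev_id)
        used_dets.add(j)
    return updated


if __name__ == "__main__":
    tracks = {"tv-01": ("tv", (100, 100, 300, 250))}
    tracks = update_tracks(tracks, [("tv", (120, 110, 320, 260))])
    print(tracks["tv-01"])  # ('tv', (120, 110, 320, 260))
```

Greedy IoU matching only works when a device moves a little between frames; a larger jump (e.g., a device carried to another corner while out of view) would need an appearance model or the location-based re-identification discussed under the limitations.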

However, the proposed system still has the following limitations. First, the proposed architecture requires the installation of a smart camera to provide video streaming and smart controllers, which is not feasible in certain environments. Typical examples are low-light environments, where general computer vision algorithms do not work, and environments with limited network bandwidth, where real-time video streaming is impossible. To address these issues, we plan to study the extension of existing deep learning-based object detection approaches to low-light environments and the use of compressed video streaming strategies. Second, as shown in Figure 6 and Figure 17, the current video view covers only a limited angle. Therefore, in the worst case, multiple cameras may be required to fully cover a single room. As future work, we plan to design and implement a smart light module with an embedded 360-degree smart camera to provide better functionality. An improved deep object detection and classification method for handling 360-degree video streams will also be studied. Third, owing to the lack of a large dataset suitable for the IoT domain, the current system supports only a limited number of device types, such as TV/monitor, microwave, and refrigerator. To support more device types, the YOLO framework needs to be trained and fine-tuned with more image samples of IoT devices. Finally, if multiple devices of the same type are installed in a single room, the system cannot identify the ID of each device, which results in the failure of IoT interaction. To handle such complex environments, we plan to record and track the location of each device.
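The planned remedy for same-type devices, recording and tracking each device’s location, could take the following form: given the recorded image locations of registered devices, assign each new detection of that type to the device ID whose recorded location is closest, minimizing the total distance over all assignments. The sketch below is hypothetical (the ID names and coordinates are invented, and box centers stand in for device locations) and uses a brute-force search over permutations, which is practical only for a handful of same-type devices; a Hungarian-algorithm solver would be the usual choice at a larger scale.

```python
import math
from itertools import permutations
from typing import Dict, Iterator, List, Tuple

Point = Tuple[float, float]  # recorded or detected box center, in pixels


def _candidate_pairings(ids: List[str], n_det: int) -> Iterator[List[Tuple[int, str]]]:
    """Yield every one-to-one pairing of detection indices with device IDs."""
    if n_det <= len(ids):
        for perm in permutations(ids, n_det):
            yield list(enumerate(perm))
    else:
        # More detections than registered devices: some detections stay unassigned.
        for perm in permutations(range(n_det), len(ids)):
            yield list(zip(perm, ids))


def assign_ids(registered: Dict[str, Point], detected: List[Point]) -> Dict[int, str]:
    """Map detection index -> device ID, minimizing total distance to recorded locations."""
    best_cost, best = math.inf, []
    for pairs in _candidate_pairings(list(registered), len(detected)):
        cost = sum(math.dist(detected[i], registered[dev]) for i, dev in pairs)
        if cost < best_cost:
            best_cost, best = cost, pairs
    return dict(best)


if __name__ == "__main__":
    registered = {"tv-1": (100.0, 300.0), "tv-2": (500.0, 320.0)}
    print(assign_ids(registered, [(510.0, 310.0), (95.0, 305.0)]))  # {0: 'tv-2', 1: 'tv-1'}
```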