VeMisNet: Enhanced Feature Engineering for Deep Learning-Based Misbehavior Detection in Vehicular Ad Hoc Networks

Abstract

1. Introduction

2. Related Work and Background

2.1. VANET Fundamentals and Security Landscape

2.1.1. Communication Architecture and Protocols

2.1.2. Comprehensive VANET Threat Taxonomy

2.1.3. Attack Impact Analysis and Consequences

2.1.4. Security Requirements and Challenges

2.2. Traditional Machine Learning in VANET Security

2.3. Deep Learning Evolution in VANET Security

2.4. VeReMi Dataset Standardization

2.5. Research Gaps and Contribution

2.5.1. Identified Research Gaps

2.5.2. VeMisNet Contributions

- (i) Communication-aware feature set: Fourteen novel spatiotemporal descriptors (e.g., DSRC-range neighbor density and inter-message timing) that have not appeared in previous VANET literature.

- (ii) Unified large-scale benchmark: First evaluation on 20 attack classes and up to 2 M records under a strict 80/20 split, creating a reproducible baseline for future work.

- (iii) End-to-end deployment study: Real-time performance evaluation with throughput analysis and latency benchmarking to assess deployment readiness of academic models.

2.5.3. Critical Self-Reflection and Approach Limitations

2.6. Comparative Analysis and Positioning

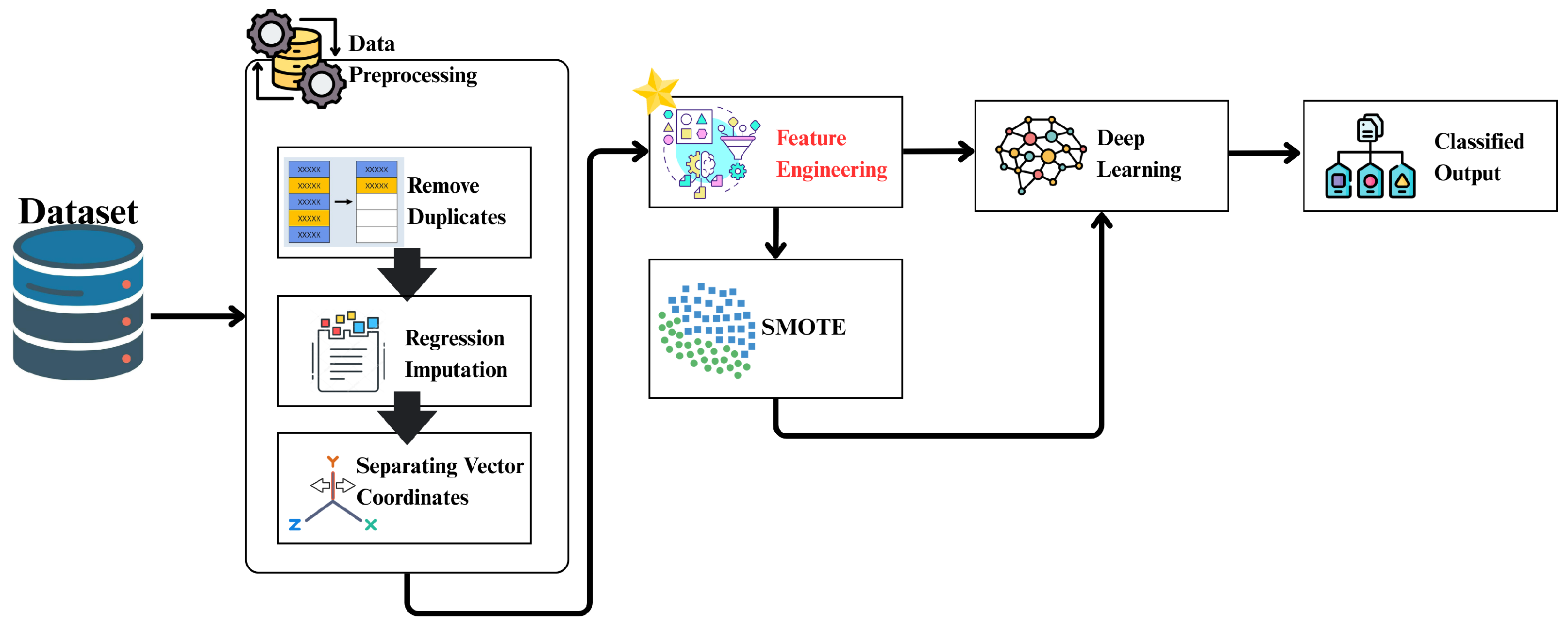

3. System Model and Methodology

3.1. VeMisNet Framework Overview

3.2. Dataset Description

3.3. Feature Engineering Methodology

3.3.1. Spatiotemporal Feature Engineering

3.3.2. UMAP-Based Feature Selection

3.3.3. Communication Pattern Features

- Packet Transmission Rate:

- Inter-Message Timing:

- Kinematic Differences: Relative changes in position, speed, and heading between sender–receiver pairs
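The three communication pattern features above can be illustrated with a minimal sketch. The formal definitions of packet transmission rate and inter-message timing are not reproduced in this text, so the definitions below (messages per second over the observed span, and gaps between consecutive send times) are assumptions, as are the function names and the dictionary keys `pos`, `speed`, and `heading`.

```python
import numpy as np

def inter_message_timing(send_times):
    """Gaps (seconds) between consecutive messages of one sender (assumed definition)."""
    t = np.sort(np.asarray(send_times, dtype=float))
    return np.diff(t)

def packet_rate(send_times):
    """Messages per second over the sender's observed span (assumed definition)."""
    t = np.sort(np.asarray(send_times, dtype=float))
    span = t[-1] - t[0] if len(t) > 1 else 0.0
    return (len(t) - 1) / span if span > 0 else 0.0

def kinematic_differences(sender, receiver):
    """Relative position, speed, and heading for one sender-receiver pair.

    Both arguments are hypothetical dicts with keys 'pos' (x, y in metres),
    'speed' (m/s), and 'heading' (degrees).
    """
    dx, dy = np.subtract(sender["pos"], receiver["pos"])
    # Wrap the heading difference into [-180, 180) so 350 vs 10 gives -20, not 340.
    d_heading = (sender["heading"] - receiver["heading"] + 180.0) % 360.0 - 180.0
    return {
        "distance": float(np.hypot(dx, dy)),
        "d_speed": sender["speed"] - receiver["speed"],
        "d_heading": d_heading,
    }
```

For a sender beaconing every 0.1 s, `inter_message_timing` returns gaps of roughly 0.1 s and `packet_rate` returns roughly 10 messages per second; irregular gaps or unusually high rates are the kind of pattern these features are meant to expose.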

3.4. Class Imbalance Analysis and Handling

3.5. Evaluation Methodology

4. Experimental Results

4.1. Experimental Configuration

4.1.1. Dataset Configuration

- Rationale: Provides sufficient statistical power while maintaining computational tractability.

- Composition: 20 attack categories plus legitimate traffic (59.6% legitimate, 40.4% attacks).

- Train/Test Split: 80/20 stratified split maintained across all experiments.

- Sequence Length: 10 time steps for temporal modeling.
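The split and sequence settings listed above can be sketched as follows. This is an illustration only: the text does not state whether sequences are built with a sliding window or whether the split is applied before windowing, so both choices here are assumptions, and the function names are hypothetical.

```python
import numpy as np

def stratified_split(labels, test_frac=0.2, seed=0):
    """Return (train_idx, test_idx) keeping each class's share in both parts."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = int(round(test_frac * len(idx)))
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return np.array(train_idx), np.array(test_idx)

def make_sequences(features, seq_len=10):
    """Slide a window of seq_len steps over one vehicle's time-ordered rows.

    The sliding-window choice is an assumption; the source only gives the length.
    """
    features = np.asarray(features, dtype=float)
    n = len(features) - seq_len + 1
    if n <= 0:
        return np.empty((0, seq_len, features.shape[1]))
    return np.stack([features[i:i + seq_len] for i in range(n)])
```

With 60 legitimate and 40 attack labels, the split yields 80 training and 20 test indices while preserving the 60/40 ratio in each part; 25 rows of 14 features yield 16 sequences of shape (10, 14).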

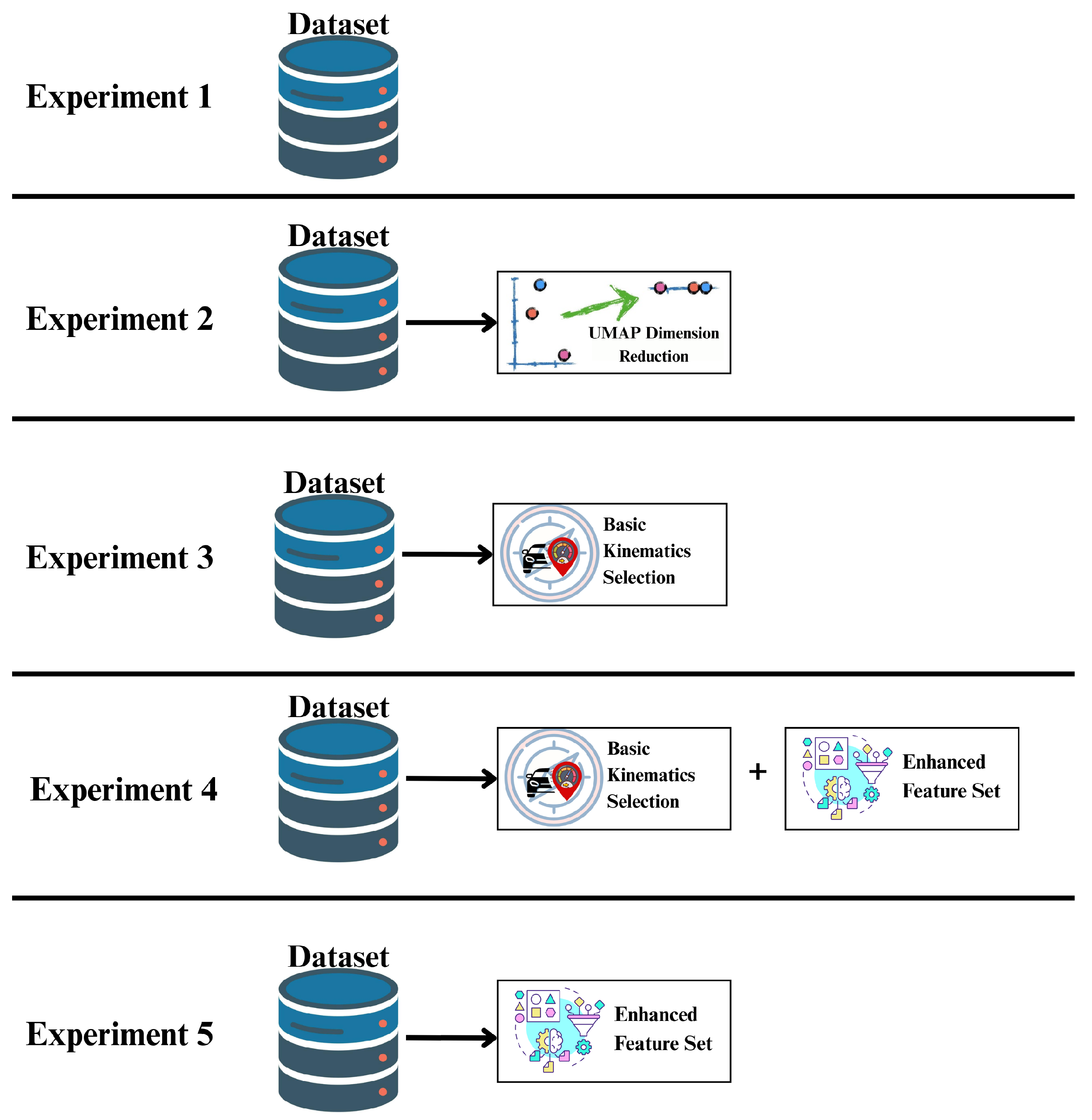

4.1.2. Feature Set Configurations

- Original Features: Raw VeReMi features, including temporal, kinematic, and noise components.

- UMAP-Selected Features: Progressive feature selection from 5 to 25 features based on UMAP dimensionality reduction, tested across all dataset sizes for both binary and multi-class classification tasks.

- Basic Kinematic Features: Fundamental vehicular attributes, including position, speed, acceleration, and heading, evaluated as baseline across all models, dataset sizes, and classification tasks.

- Enhanced Feature Set: Combination of basic kinematic features augmented with newly engineered spatiotemporal features, resulting in a comprehensive 14-feature set tested across all experimental configurations.

- Comprehensive Feature Set Evaluation: Combination of raw VeReMi features (temporal, kinematic, and noise components) augmented with newly engineered domain-informed features, including the following:

- Neighborhood density within DSRC ranges (100 m, 200 m, 300 m);

- Inter-message timing patterns;

- Kinematic consistency metrics;

- Communication frequency analysis.
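As an illustration of the first engineered feature in the list above, neighborhood density at the three DSRC ranges can be computed from a snapshot of vehicle positions. This is a sketch under stated assumptions: positions are planar (x, y) coordinates in metres, all vehicles are observed at the same instant, and the function name is hypothetical.

```python
import numpy as np

def neighbor_density(positions, radii=(100.0, 200.0, 300.0)):
    """Count, for each vehicle, how many other vehicles lie within each radius.

    positions: array-like of shape (n_vehicles, 2), metres.
    Returns a dict mapping radius -> integer array of length n_vehicles.
    Uses a full pairwise distance matrix, so memory grows as O(n^2).
    """
    pos = np.asarray(positions, dtype=float)
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)  # a vehicle is not its own neighbour
    return {r: (dist <= r).sum(axis=1) for r in radii}
```

For large snapshots a spatial index would be a more practical choice than the dense distance matrix used here.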

4.1.3. Model Configuration

- Architecture: Single recurrent layer with 64 hidden units;

- Optimizer: Adam (learning rate = 0.001);

- Loss Function: Categorical crossentropy for multi-class, binary crossentropy for binary classification;

- Models Evaluated: LSTM, GRU, and Bidirectional LSTM.
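To give a rough sense of relative model size under the configuration above, the sketch below counts the trainable parameters of a single recurrent layer using the textbook gate formulas. The input width of 14 matches the enhanced feature set; the output layer is not counted, and some library implementations add extra bias terms (for GRU in particular), so the numbers are indicative rather than exact for any specific framework.

```python
def recurrent_params(kind, n_features, hidden=64):
    """Parameter count of one recurrent layer (textbook formulation).

    Each gate has an input matrix, a recurrent matrix, and a bias vector.
    LSTM has 4 gates, GRU has 3, and a bidirectional LSTM holds two LSTMs.
    """
    per_gate = hidden * (hidden + n_features) + hidden
    gates = {"lstm": 4, "gru": 3, "bilstm": 8}[kind]
    return gates * per_gate

for kind in ("lstm", "gru", "bilstm"):
    print(kind, recurrent_params(kind, n_features=14))
```

Under these assumptions the GRU layer is about three quarters the size of the LSTM layer, and the bidirectional LSTM is twice the size of the unidirectional one.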

4.1.4. Evaluation Methodology

- Primary: Accuracy, F1-score, Matthews Correlation Coefficient;

- Class Balance: Balanced Accuracy, per-class Precision/Recall;

- Statistical: 95% confidence intervals via bootstrap resampling.

- Inference latency (milliseconds per sample);

- Training efficiency (time to convergence);

- Memory utilization and throughput.
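The performance metrics named above can be sketched in plain NumPy. The bootstrap variant is not specified in this text, so the percentile bootstrap below is an assumption, the Matthews Correlation Coefficient is shown for the binary case only, and all function names are hypothetical.

```python
import numpy as np

def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall over the classes present in y_true."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    recalls = [np.mean(y_pred[y_true == c] == c) for c in np.unique(y_true)]
    return float(np.mean(recalls))

def mcc_binary(y_true, y_pred):
    """Matthews Correlation Coefficient for labels in {0, 1}."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    denom = ((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)) ** 0.5
    return (tp * tn - fp * fn) / denom if denom > 0 else 0.0

def bootstrap_ci(y_true, y_pred, metric, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval (assumed variant) for any metric(y_true, y_pred)."""
    rng = np.random.default_rng(seed)
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    n = len(y_true)
    stats = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample with replacement
        stats.append(metric(y_true[idx], y_pred[idx]))
    lo, hi = np.percentile(stats, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return float(lo), float(hi)
```

Balanced accuracy is the metric that exposes majority-class bias: a model can score high plain accuracy by predicting mostly legitimate traffic while its mean per-class recall stays low.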

4.1.5. Evaluation Framework

- Scalability: Performance consistency across dataset sizes (100 K to 2 M);

- Feature Engineering Impact: Effectiveness of engineered vs. basic features;

- Architecture Comparison: Relative performance of LSTM, GRU, and Bi-LSTM;

- Classification Complexity: Binary vs. multi-class detection capabilities.

4.1.6. Evaluation Metrics

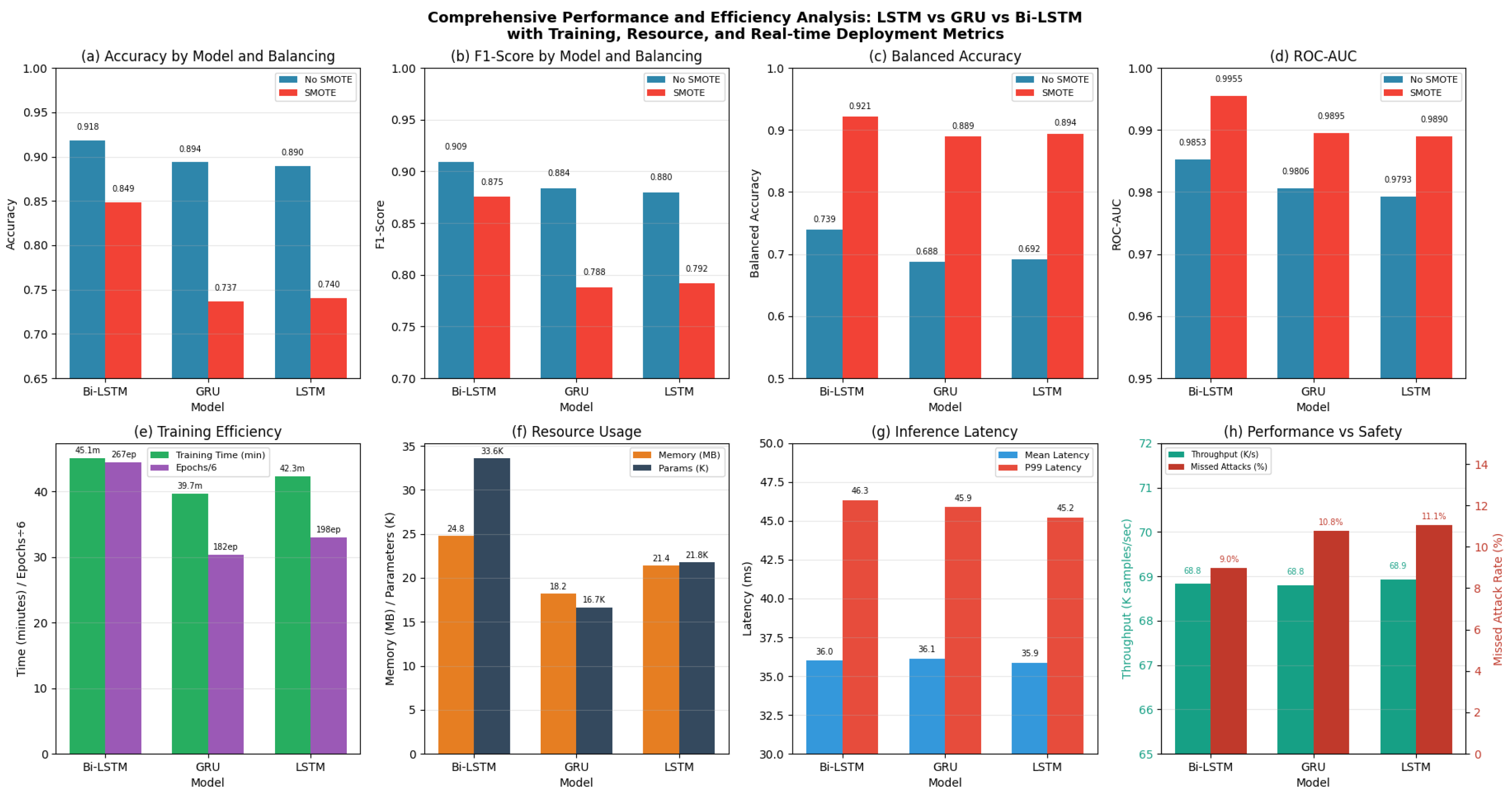

4.2. Performance Evaluation Results

4.2.1. Binary Classification Results

4.2.2. Multi-Class Classification Results

Original Features

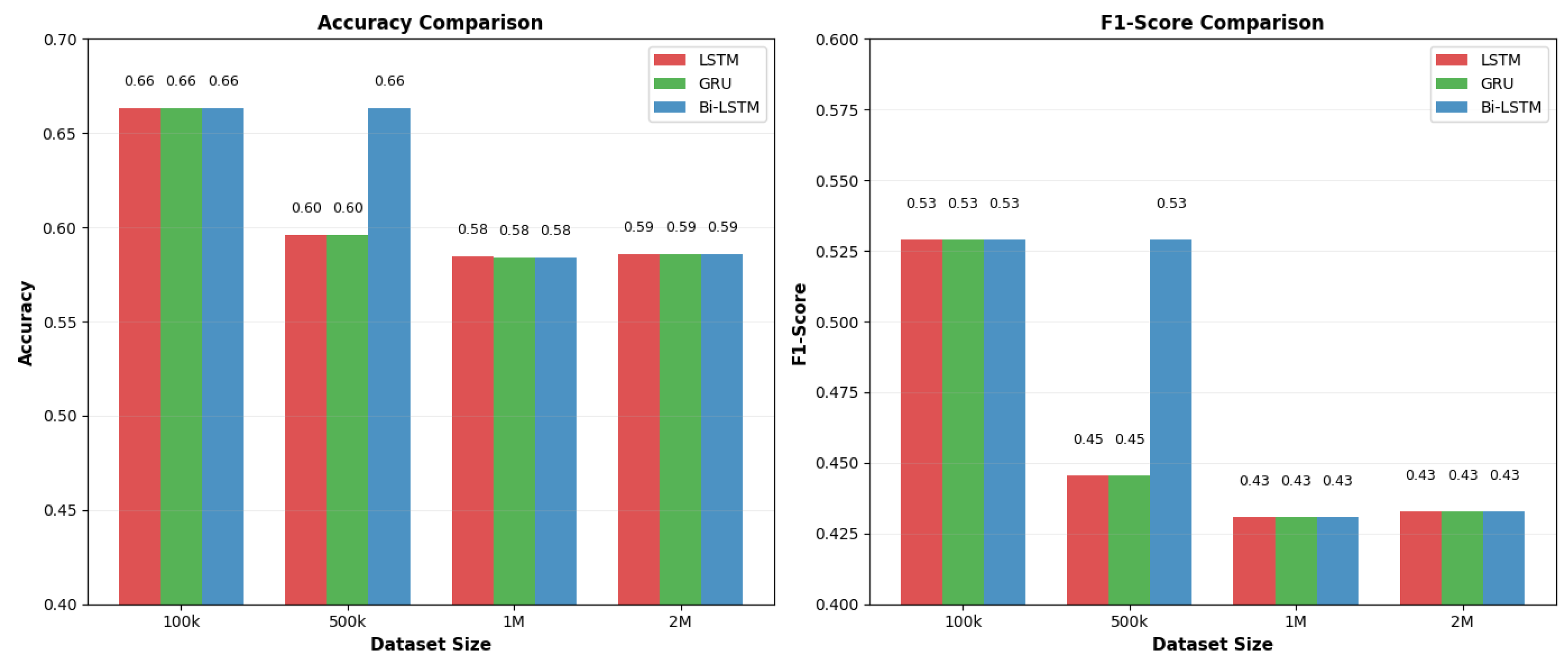

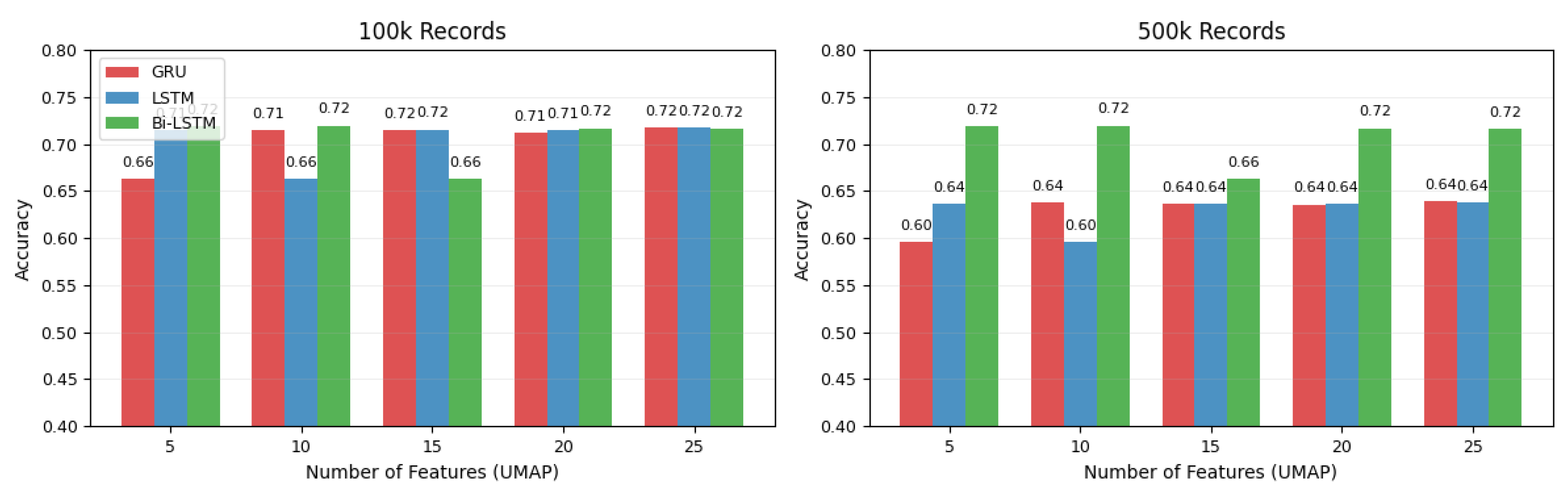

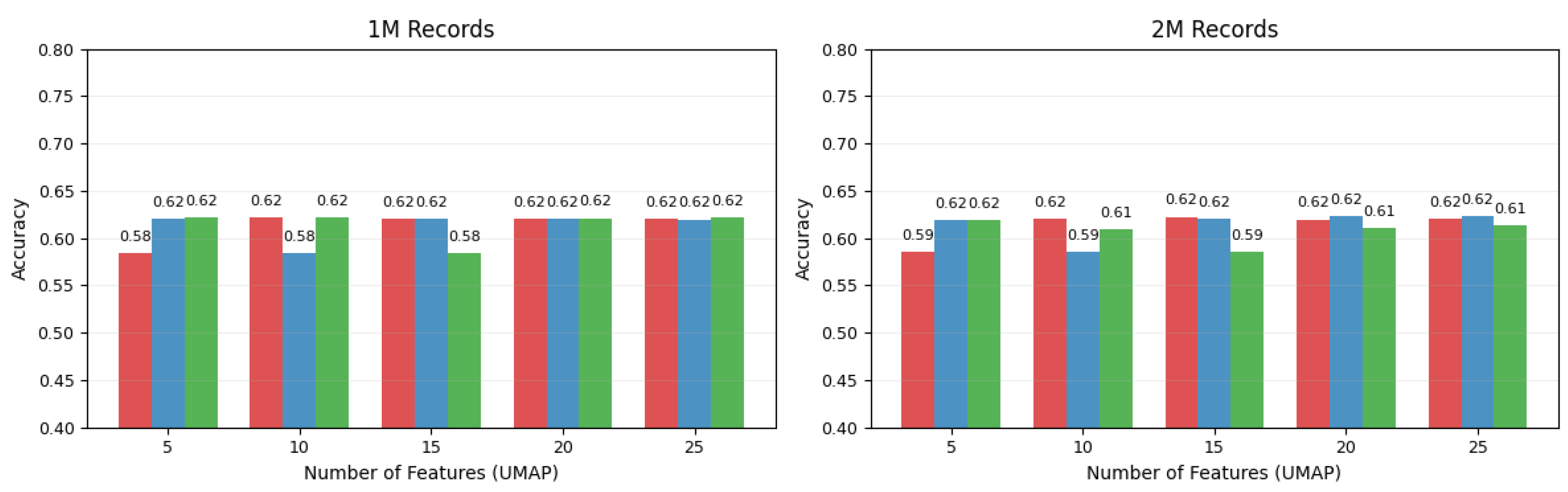

UMAP-Selected Features

Basic Kinematic Features

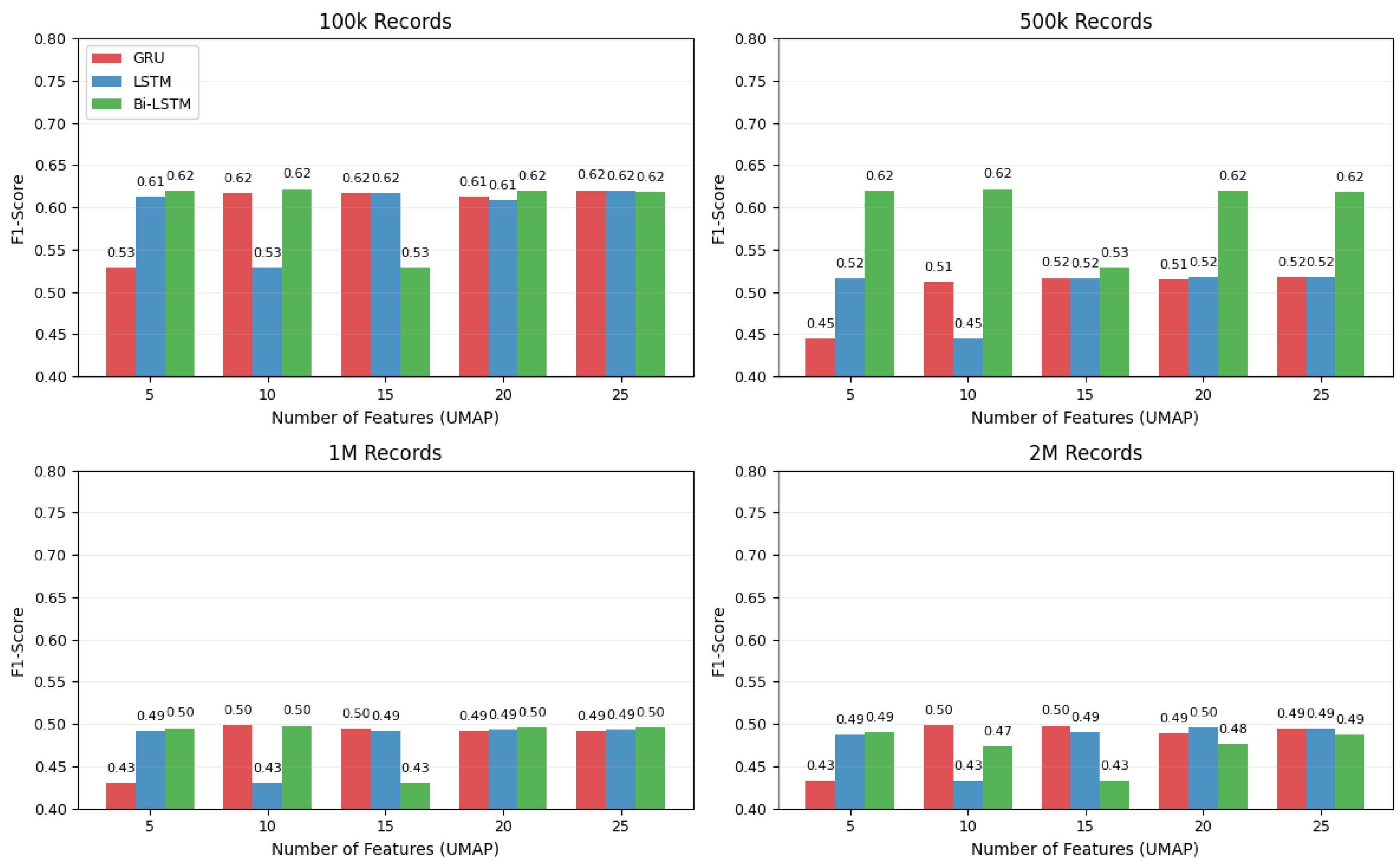

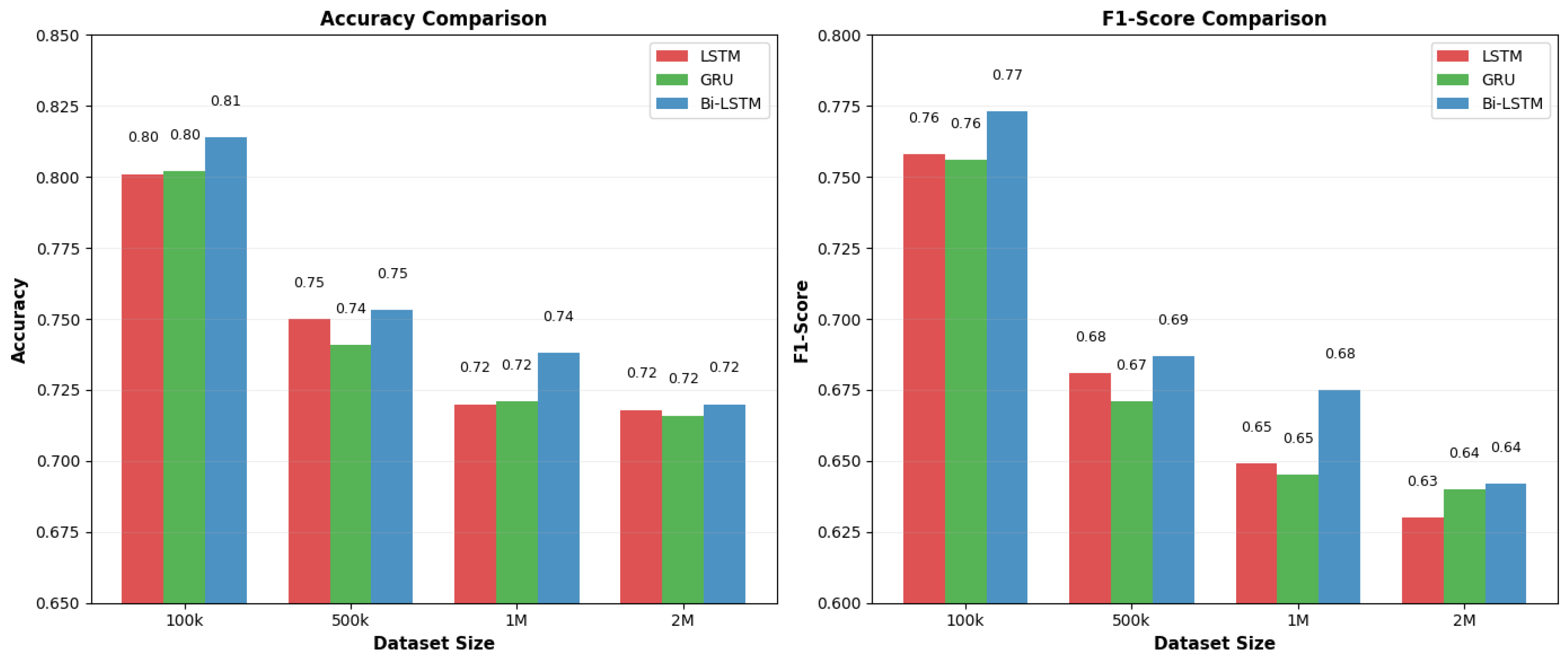

Enhanced Feature Set (Basic Kinematic + Engineered)

Comprehensive Feature Set Evaluation (Original + Engineered Features)

4.3. Comprehensive Per-Class Performance Analysis and Class Imbalance Mitigation

4.3.1. Theoretical Foundation and Methodology

4.3.2. Architectural Performance Under Class Imbalance

4.3.3. SMOTE Transformation Effects and Recovery Analysis

4.3.4. Enhanced Feature Set Impact and Comprehensive Analysis

- Without SMOTE: Models achieve high overall accuracy (85.84–90.00%) but exhibit poor balanced accuracy (57.80–67.77%), indicating severe bias toward majority classes even with enhanced features.

- With SMOTE: While overall accuracy moderately decreases (74.04–84.85%), balanced accuracy substantially improves (88.95–92.10%), demonstrating more equitable performance across all attack categories.

- Optimal Configuration: Bidirectional LSTM with SMOTE achieves the best balance, maintaining competitive overall accuracy (84.85%) while achieving excellent balanced accuracy (92.10%) and Cohen’s Kappa (0.7655).

- Minority Class Enhancement: SMOTE consistently improves detection capabilities for underrepresented attack types, with particularly significant gains for Sybil (+0.50), DDoS (+0.53), Position (+0.52), Speed (+0.53), and Replay (+0.53) attacks.

- Detection Difficulty Correlation: An inverse relationship exists between class size and detection complexity, with smaller attack classes presenting inherently greater detection challenges. EventualStop attacks also present detection challenges, achieving 0.584 F1-score even with optimal configuration, suggesting that gradual behavioral changes are inherently more difficult to distinguish from normal traffic variations.

- Architectural Superiority: Bidirectional LSTM demonstrates consistent superiority across most attack categories, effectively leveraging both forward and backward temporal contexts, with average F1-score improvements of 8–15% compared to unidirectional architectures.
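The oversampling idea behind the SMOTE results above can be sketched in a few lines: each synthetic sample is placed at a random point on the segment between a minority-class sample and one of its k nearest minority neighbors. This is a simplified illustration, not the implementation used in the study; how SMOTE was applied to 10-step sequences is not described in this text, and the function name and defaults are assumptions.

```python
import numpy as np

def smote_sketch(X_min, n_new, k=5, seed=0):
    """Generate n_new synthetic rows from minority-class rows X_min.

    X_min: array-like of shape (n_samples, n_features), one class only.
    """
    rng = np.random.default_rng(seed)
    X = np.asarray(X_min, dtype=float)
    n = len(X)
    k = min(k, n - 1)
    if k < 1:
        raise ValueError("need at least two minority samples")
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)              # exclude each point itself
    neighbors = np.argsort(dist, axis=1)[:, :k]  # k nearest per sample
    base = rng.integers(0, n, size=n_new)        # which sample to start from
    picked = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))                 # interpolation factor in [0, 1)
    return X[base] + gap * (X[picked] - X[base])
```

As a general precaution, synthetic samples of this kind are usually generated from the training split only, so that the test split continues to reflect the original class distribution.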

4.3.5. Resource Utilization and Practical Considerations

- Memory Efficiency: SMOTE application increases memory requirements due to synthetic sample generation, with Bidirectional LSTM showing moderate increases compared to unidirectional architectures.

- Training Time Impact: Enhanced dataset sizes from SMOTE result in longer training periods, but the performance gains justify the computational overhead in security-critical applications.

- Deployment Trade-offs: The slight reduction in overall precision with SMOTE is acceptable in security-sensitive environments, where false positives are preferable to missed attacks.

4.3.6. Scientific Justification and Comprehensive Synthesis

- Rare Attack Detection: Critical security threats often manifest as minority classes in real-world traffic data. The systematic recovery of critical attack detection capabilities, from complete failure to detection rates exceeding 95% for most attack types, validates both the technical approach and its practical significance.

- Balanced Coverage: Equitable detection performance across all attack types ensures comprehensive security coverage, with the transformation from selective to comprehensive detection representing a fundamental advancement in VANET security capability.

- Generalization Enhancement: Synthetic augmentation improves model generalization to previously unseen attack variants, as evidenced by improved performance across diverse attack categories.

- Operational Reliability: Consistent performance across diverse attack scenarios enhances real-world deployment viability, with Bidirectional LSTM consistently demonstrating superior performance across the majority of attack categories.

5. Conclusions and Future Directions

- Original VeReMi features: Bi-LSTM achieved accuracy at 500 K samples, but degraded to at 2 M, with missed attack rates increasing from to .

- UMAP-selected subsets: Performance plateaued near accuracy, confirming that unsupervised dimensionality reduction alone cannot capture VANET-specific spatiotemporal patterns.

- Basic kinematic features: Baseline results reached only accuracy at 100 K, falling to F1 at 2 M, exposing severe scalability limitations.

- Enhanced 14-feature set: Integration of engineered spatiotemporal features improved Bi-LSTM performance to 91.80% accuracy and F1 = 0.9093 at 500 K, with moderate scalability loss (down to accuracy at 2 M). False alarms dropped to , with only attacks missed.

- Comprehensive feature set: Combining raw and engineered features achieved the highest stability across scales, sustaining accuracy even at 2 M samples and maintaining inference latency under 41 ms.

5.1. Future Research Directions

5.1.1. Immediate Research Priorities

5.1.2. Medium-Term Research Objectives

5.1.3. Long-Term Research Vision

5.1.4. Research Implementation Strategy

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Comprehensive Literature Comparison

| Study | Year | Approach | Dataset | Scale | Accuracy | F1-Score | Speed (Samples/s) | Attack Types | Key Innovation |

|---|---|---|---|---|---|---|---|---|---|

| Traditional Machine Learning | |||||||||

| Grover et al. [16] | 2011 | Random Forest | Custom | 50 K | 93–99% | 95% | 20,000 | Multiple | Ensemble methods |

| So et al. [17] | 2018 | ML + Plausibility | Custom | 100 K | 95% | 93% | 18,500 | Position | Domain integration |

| Zhang et al. [19] | 2018 | SVM + DST | Custom | 75 K | 94.2% | 92% | 16,200 | Message attacks | Dual-model approach |

| Sharma & Jaekel [18] | 2021 | ML Ensemble | VeReMi | 500 K | 98.5% | 97% | 15,800 | 5 types | Temporal BSMs |

| Sonker et al. [1] | 2021 | Random Forest | VeReMi | 200 K | 97.6% | 96% | 17,300 | 5 types | Multi-class focus |

| Kumar et al. [25] | 2022 | XGBoost + Temporal | VeReMi | 800 K | 96.2% | 95% | 16,500 | 16 types | Temporal features |

| Patel et al. [37] | 2024 | ML Ensemble | VeReMi Ext | 1.5 M | 94.7% | 93% | 14,200 | 20 types | Advanced ensemble |

| Deep Learning Approaches | |||||||||

| Kamel et al. [20] | 2019 | LSTM | VeReMi | 300 K | 89.5% | 87% | 9200 | Multi-class | Temporal modeling |

| Alladi et al. [38] | 2021 | CNN-LSTM | Custom | 250 K | 92.3% | 90% | 7800 | Multiple | Hybrid architecture |

| Alladi et al. [22] | 2021 | DeepADV | VeReMi | 400 K | 94.1% | 92% | 6500 | Comprehensive | Multi-architecture |

| Liu et al. [39] | 2023 | Graph NN | Custom + VeReMi | 900 K | 94.5% | 93% | 5800 | 15 types | Graph-based modeling |

| Chen et al. [26] | 2024 | Attention-LSTM | VeReMi Ext | 1 M | 93.1% | 91% | 9100 | 20 types | Attention mechanism |

| Yuce et al. [28] | 2024 | Spatiotemporal GNN | Converted MBD (IoV) | — | 99.92% | — | 4800 | Multiple | GNN + dataset-to-graph mapping |

| Transformer-Based Architectures | |||||||||

| Wang et al. [40] | 2024 | Transformer | VeReMi Ext | 1.2 M | 93.8% | 92% | 7200 | 20 types | Self-attention |

| Khan et al. [27] | 2025 | Transformer + SHAP | VeReMi Ext | 3.19 M | 96.15% (MC), 98.28% (Bin) | — | 7200 | All attacks (VeReMi Ext) | XAI with transformer |

| Federated Learning Approaches | |||||||||

| Gurjar et al. [24] | 2025 | Fed. CNN-LSTM | VeReMi Ext | 1.2 M | 93.2% | 90% | 5500 | Multiple | Scalable federation |

| Campos et al. [23] | 2024 | Federated DL | VeReMi Ext | 800 K | 91.7% | 89% | 6200 | Distributed | Privacy-preserving |

| Hybrid Approaches | |||||||||

| Kim et al. [41] | 2023 | SVM + GRU | VeReMi | 700 K | 94.3% | 93% | 12,500 | 16 types | ML + DL ensemble |

| Rodriguez et al. [42] | 2024 | RF + LSTM | VeReMi Ext | 1.3 M | 95.1% | 94% | 11,000 | 20 types | Hybrid architecture |

| Present Work | 2025 | Bi-LSTM + Eng. Features | VeReMi Ext | 100 K–2 M | 99.05–99.63% | >99% | 41.76 ms (latency) | All attacks (VeReMi Ext) | Domain-engineered features + scalability analysis |

Appendix B. Comprehensive Per-Class Performance Analysis

| Attack Type | Acc (No SMOTE) | Prec (No SMOTE) | Rec (No SMOTE) | F1 (No SMOTE) | Acc (SMOTE) | Prec (SMOTE) | Rec (SMOTE) | F1 (SMOTE) |

|---|---|---|---|---|---|---|---|---|

| Bi-LSTM Architecture | ||||||||

| Legitimate | 0.797 | 0.745 | 0.996 | 0.853 | 0.484 | 0.941 | 0.224 | 0.362 |

| ConstPos | 0.991 | 0.827 | 0.492 | 0.617 | 0.994 | 0.795 | 0.957 | 0.868 |

| ConstPosOffset | 0.989 | 0.815 | 0.266 | 0.401 | 0.978 | 0.351 | 0.862 | 0.499 |

| RandomPos | 0.990 | 0.847 | 0.394 | 0.538 | 0.984 | 0.305 | 0.628 | 0.411 |

| RandomPosOffset | 0.990 | 0.773 | 0.125 | 0.215 | 0.918 | 0.055 | 0.587 | 0.101 |

| ConstSpeed | 0.994 | 0.777 | 0.690 | 0.731 | 0.999 | 0.969 | 0.954 | 0.962 |

| ConstSpeedOffset | 0.993 | 0.696 | 0.696 | 0.696 | 0.991 | 0.444 | 0.787 | 0.567 |

| RandomSpeed | 0.992 | 0.673 | 0.650 | 0.661 | 0.996 | 0.814 | 0.913 | 0.861 |

| RandomSpeedOffset | 0.992 | 0.766 | 0.397 | 0.523 | 0.975 | 0.245 | 0.758 | 0.370 |

| EventualStop | 0.992 | 0.870 | 0.326 | 0.474 | 0.967 | 0.178 | 0.857 | 0.295 |

| Disruptive | 0.986 | 0.000 | 0.000 | 0.000 | 0.899 | 0.065 | 0.542 | 0.116 |

| DataReplay | 0.989 | 0.619 | 0.013 | 0.025 | 0.912 | 0.062 | 0.486 | 0.110 |

| StaleMessages | 0.995 | 0.957 | 0.614 | 0.748 | 0.957 | 0.196 | 0.883 | 0.320 |

| DoS | 0.954 | 0.000 | 0.000 | 0.000 | 0.951 | 0.419 | 0.634 | 0.504 |

| DoSRandom | 0.957 | 0.512 | 0.945 | 0.664 | 0.972 | 0.595 | 0.392 | 0.473 |

| DoSDisruptive | 0.966 | 0.287 | 0.026 | 0.047 | 0.927 | 0.193 | 0.443 | 0.269 |

| GridSybil | 0.966 | 0.850 | 0.588 | 0.695 | 0.953 | 0.476 | 0.705 | 0.568 |

| DataReplaySybil | 0.990 | 0.000 | 0.000 | 0.000 | 0.956 | 0.086 | 0.480 | 0.146 |

| DoSRandomSybil | 0.971 | 0.490 | 0.053 | 0.096 | 0.971 | 0.501 | 0.663 | 0.571 |

| DoSDisruptiveSybil | 0.969 | 0.119 | 0.006 | 0.011 | 0.961 | 0.370 | 0.491 | 0.422 |
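The F1 column in these per-class tables is the harmonic mean of the precision and recall columns, which can be checked directly from any row. The short sketch below reproduces two Bi-LSTM entries from the table above; the helper name is hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# ConstPos, No SMOTE: Prec 0.827, Rec 0.492
print(round(f1_score(0.827, 0.492), 3))
# Legitimate, SMOTE: Prec 0.941, Rec 0.224
print(round(f1_score(0.941, 0.224), 3))
```

Because the harmonic mean is dominated by the smaller of the two values, rows with high precision but very low recall (or the reverse) end up with a low F1 even though per-class accuracy stays near 0.99.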

| Attack Type | Acc (No SMOTE) | Prec (No SMOTE) | Rec (No SMOTE) | F1 (No SMOTE) | Acc (SMOTE) | Prec (SMOTE) | Rec (SMOTE) | F1 (SMOTE) |

|---|---|---|---|---|---|---|---|---|

| GRU Architecture | ||||||||

| Legitimate | 0.774 | 0.726 | 0.993 | 0.838 | 0.461 | 0.919 | 0.192 | 0.317 |

| ConstPos | 0.989 | 0.747 | 0.366 | 0.492 | 0.989 | 0.658 | 0.941 | 0.774 |

| ConstPosOffset | 0.988 | 0.849 | 0.137 | 0.236 | 0.982 | 0.388 | 0.748 | 0.511 |

| RandomPos | 0.987 | 0.748 | 0.203 | 0.319 | 0.979 | 0.252 | 0.686 | 0.369 |

| RandomPosOffset | 0.989 | 0.889 | 0.007 | 0.015 | 0.907 | 0.044 | 0.520 | 0.081 |

| ConstSpeed | 0.994 | 0.739 | 0.709 | 0.724 | 0.999 | 0.940 | 0.954 | 0.947 |

| ConstSpeedOffset | 0.991 | 0.633 | 0.542 | 0.584 | 0.994 | 0.559 | 0.827 | 0.667 |

| RandomSpeed | 0.992 | 0.674 | 0.606 | 0.638 | 0.996 | 0.769 | 0.896 | 0.827 |

| RandomSpeedOffset | 0.990 | 0.677 | 0.342 | 0.454 | 0.975 | 0.243 | 0.758 | 0.368 |

| EventualStop | 0.991 | 0.801 | 0.200 | 0.320 | 0.950 | 0.122 | 0.844 | 0.213 |

| Disruptive | 0.986 | 0.000 | 0.000 | 0.000 | 0.910 | 0.064 | 0.466 | 0.113 |

| DataReplay | 0.989 | 0.000 | 0.000 | 0.000 | 0.920 | 0.065 | 0.458 | 0.114 |

| StaleMessages | 0.995 | 0.957 | 0.614 | 0.748 | 0.957 | 0.198 | 0.874 | 0.322 |

| DoS | 0.954 | 0.000 | 0.000 | 0.000 | 0.942 | 0.338 | 0.492 | 0.400 |

| DoSRandom | 0.955 | 0.499 | 0.932 | 0.650 | 0.972 | 0.554 | 0.678 | 0.610 |

| DoSDisruptive | 0.967 | 0.347 | 0.005 | 0.011 | 0.912 | 0.116 | 0.289 | 0.166 |

| GridSybil | 0.952 | 0.714 | 0.456 | 0.556 | 0.950 | 0.455 | 0.707 | 0.553 |

| DataReplaySybil | 0.990 | 0.000 | 0.000 | 0.000 | 0.948 | 0.069 | 0.453 | 0.120 |

| DoSRandomSybil | 0.971 | 0.579 | 0.008 | 0.016 | 0.972 | 0.535 | 0.355 | 0.427 |

| DoSDisruptiveSybil | 0.968 | 0.087 | 0.006 | 0.010 | 0.957 | 0.315 | 0.412 | 0.357 |

| Attack Type | Acc (No SMOTE) | Prec (No SMOTE) | Rec (No SMOTE) | F1 (No SMOTE) | Acc (SMOTE) | Prec (SMOTE) | Rec (SMOTE) | F1 (SMOTE) |

|---|---|---|---|---|---|---|---|---|

| LSTM Architecture | ||||||||

| Legitimate | 0.778 | 0.728 | 0.997 | 0.842 | 0.445 | 0.939 | 0.160 | 0.273 |

| ConstPos | 0.990 | 0.862 | 0.401 | 0.547 | 0.993 | 0.746 | 0.946 | 0.834 |

| ConstPosOffset | 0.987 | 0.959 | 0.054 | 0.102 | 0.981 | 0.385 | 0.772 | 0.514 |

| RandomPos | 0.988 | 0.750 | 0.339 | 0.467 | 0.978 | 0.253 | 0.756 | 0.379 |

| RandomPosOffset | 0.989 | 0.846 | 0.010 | 0.020 | 0.930 | 0.059 | 0.533 | 0.107 |

| ConstSpeed | 0.993 | 0.738 | 0.628 | 0.679 | 0.998 | 0.904 | 0.931 | 0.917 |

| ConstSpeedOffset | 0.991 | 0.652 | 0.497 | 0.564 | 0.986 | 0.322 | 0.747 | 0.450 |

| RandomSpeed | 0.991 | 0.676 | 0.571 | 0.619 | 0.995 | 0.750 | 0.835 | 0.790 |

| RandomSpeedOffset | 0.991 | 0.803 | 0.300 | 0.437 | 0.973 | 0.213 | 0.692 | 0.326 |

| EventualStop | 0.991 | 0.764 | 0.279 | 0.408 | 0.964 | 0.166 | 0.870 | 0.279 |

| Disruptive | 0.986 | 0.000 | 0.000 | 0.000 | 0.891 | 0.055 | 0.483 | 0.098 |

| DataReplay | 0.989 | 0.000 | 0.000 | 0.000 | 0.891 | 0.048 | 0.467 | 0.087 |

| StaleMessages | 0.995 | 0.957 | 0.614 | 0.748 | 0.957 | 0.196 | 0.874 | 0.320 |

| DoS | 0.954 | 0.000 | 0.000 | 0.000 | 0.936 | 0.314 | 0.540 | 0.397 |

| DoSRandom | 0.953 | 0.486 | 0.941 | 0.641 | 0.967 | 0.500 | 0.376 | 0.429 |

| DoSDisruptive | 0.967 | 0.120 | 0.003 | 0.006 | 0.924 | 0.147 | 0.313 | 0.200 |

| GridSybil | 0.962 | 0.835 | 0.518 | 0.639 | 0.945 | 0.420 | 0.688 | 0.521 |

| DataReplaySybil | 0.990 | 0.000 | 0.000 | 0.000 | 0.942 | 0.061 | 0.440 | 0.106 |

| DoSRandomSybil | 0.971 | 0.527 | 0.011 | 0.021 | 0.968 | 0.462 | 0.520 | 0.489 |

| DoSDisruptiveSybil | 0.969 | 0.082 | 0.004 | 0.008 | 0.965 | 0.411 | 0.498 | 0.451 |

| Attack Type | Acc (No SMOTE) | Prec (No SMOTE) | Rec (No SMOTE) | F1 (No SMOTE) | Acc (SMOTE) | Prec (SMOTE) | Rec (SMOTE) | F1 (SMOTE) |

|---|---|---|---|---|---|---|---|---|

| Bi-LSTM Architecture | ||||||||

| Legitimate | 0.953 | 0.928 | 0.999 | 0.962 | 0.869 | 0.995 | 0.803 | 0.889 |

| ConstPos | 0.997 | 0.850 | 0.992 | 0.916 | 0.999 | 0.979 | 0.995 | 0.987 |

| ConstPosOffset | 0.987 | 1.000 | 0.075 | 0.139 | 0.988 | 0.519 | 0.984 | 0.680 |

| RandomPos | 0.995 | 0.828 | 0.862 | 0.845 | 0.997 | 0.809 | 0.884 | 0.844 |

| RandomPosOffset | 0.989 | 0.917 | 0.010 | 0.020 | 0.965 | 0.171 | 0.907 | 0.288 |

| ConstSpeed | 0.997 | 0.845 | 0.904 | 0.873 | 0.999 | 0.984 | 0.954 | 0.969 |

| ConstSpeedOffset | 0.994 | 0.753 | 0.761 | 0.757 | 0.993 | 0.534 | 0.947 | 0.683 |

| RandomSpeed | 0.995 | 0.840 | 0.740 | 0.787 | 0.997 | 0.789 | 0.974 | 0.872 |

| RandomSpeedOffset | 0.993 | 0.901 | 0.441 | 0.593 | 0.965 | 0.193 | 0.835 | 0.314 |

| EventualStop | 0.996 | 0.900 | 0.762 | 0.826 | 0.989 | 0.417 | 0.974 | 0.584 |

| Disruptive | 0.990 | 0.651 | 0.565 | 0.605 | 0.991 | 0.575 | 0.941 | 0.714 |

| DataReplay | 0.990 | 0.601 | 0.275 | 0.377 | 0.981 | 0.361 | 0.888 | 0.514 |

| StaleMessages | 0.997 | 0.961 | 0.831 | 0.891 | 0.998 | 0.870 | 0.964 | 0.915 |

| DoS | 0.996 | 0.932 | 0.982 | 0.957 | 0.998 | 0.976 | 0.981 | 0.979 |

| DoSRandom | 0.987 | 0.879 | 0.820 | 0.848 | 0.991 | 0.880 | 0.849 | 0.864 |

| DoSDisruptive | 0.985 | 0.797 | 0.741 | 0.768 | 0.997 | 0.968 | 0.942 | 0.955 |

| GridSybil | 0.993 | 0.966 | 0.919 | 0.942 | 0.998 | 0.993 | 0.969 | 0.981 |

| DataReplaySybil | 0.991 | 0.634 | 0.243 | 0.351 | 0.993 | 0.526 | 0.800 | 0.635 |

| DoSRandomSybil | 0.988 | 0.725 | 0.931 | 0.815 | 0.991 | 0.827 | 0.875 | 0.850 |

| DoSDisruptiveSybil | 0.985 | 0.777 | 0.699 | 0.736 | 0.997 | 0.930 | 0.957 | 0.943 |

| Attack Type | Acc (No SMOTE) | Prec (No SMOTE) | Rec (No SMOTE) | F1 (No SMOTE) | Acc (SMOTE) | Prec (SMOTE) | Rec (SMOTE) | F1 (SMOTE) |

|---|---|---|---|---|---|---|---|---|

| GRU Architecture | ||||||||

| Legitimate | 0.948 | 0.919 | 1.000 | 0.958 | 0.766 | 0.991 | 0.647 | 0.783 |

| ConstPos | 0.997 | 0.843 | 0.966 | 0.900 | 0.999 | 0.953 | 0.984 | 0.968 |

| ConstPosOffset | 0.989 | 0.990 | 0.221 | 0.361 | 0.961 | 0.247 | 0.984 | 0.395 |

| RandomPos | 0.994 | 0.794 | 0.852 | 0.822 | 0.997 | 0.812 | 0.907 | 0.857 |

| RandomPosOffset | 0.989 | 0.000 | 0.000 | 0.000 | 0.931 | 0.095 | 0.907 | 0.172 |

| ConstSpeed | 0.996 | 0.765 | 0.938 | 0.843 | 1.000 | 0.977 | 0.992 | 0.985 |

| ConstSpeedOffset | 0.994 | 0.780 | 0.635 | 0.700 | 0.994 | 0.554 | 0.893 | 0.684 |

| RandomSpeed | 0.995 | 0.883 | 0.636 | 0.740 | 0.997 | 0.800 | 0.974 | 0.878 |

| RandomSpeedOffset | 0.990 | 0.872 | 0.152 | 0.259 | 0.955 | 0.151 | 0.802 | 0.254 |

| EventualStop | 0.996 | 0.897 | 0.750 | 0.817 | 0.974 | 0.230 | 0.948 | 0.371 |

| Disruptive | 0.989 | 0.652 | 0.468 | 0.545 | 0.986 | 0.451 | 0.780 | 0.571 |

| DataReplay | 0.990 | 0.638 | 0.092 | 0.160 | 0.974 | 0.278 | 0.813 | 0.414 |

| StaleMessages | 0.998 | 0.955 | 0.877 | 0.914 | 0.995 | 0.728 | 0.964 | 0.829 |

| DoS | 0.993 | 0.877 | 0.979 | 0.925 | 0.996 | 0.947 | 0.955 | 0.951 |

| DoSRandom | 0.985 | 0.829 | 0.834 | 0.832 | 0.991 | 0.866 | 0.855 | 0.861 |

| DoSDisruptive | 0.977 | 0.639 | 0.698 | 0.667 | 0.992 | 0.863 | 0.890 | 0.876 |

| GridSybil | 0.988 | 0.936 | 0.877 | 0.905 | 0.996 | 0.982 | 0.914 | 0.947 |

| DataReplaySybil | 0.990 | 0.455 | 0.303 | 0.364 | 0.985 | 0.318 | 0.813 | 0.457 |

| DoSRandomSybil | 0.987 | 0.724 | 0.883 | 0.796 | 0.991 | 0.842 | 0.857 | 0.849 |

| DoSDisruptiveSybil | 0.978 | 0.736 | 0.438 | 0.549 | 0.994 | 0.894 | 0.910 | 0.902 |

| Attack Type | Acc (No SMOTE) | Prec (No SMOTE) | Rec (No SMOTE) | F1 (No SMOTE) | Acc (SMOTE) | Prec (SMOTE) | Rec (SMOTE) | F1 (SMOTE) |

|---|---|---|---|---|---|---|---|---|

| LSTM Architecture | ||||||||

| Legitimate | 0.944 | 0.914 | 1.000 | 0.955 | 0.766 | 0.993 | 0.647 | 0.783 |

| ConstPos | 0.995 | 0.755 | 0.953 | 0.843 | 0.998 | 0.943 | 0.978 | 0.960 |

| ConstPosOffset | 0.986 | 0.000 | 0.000 | 0.000 | 0.967 | 0.276 | 0.943 | 0.427 |

| RandomPos | 0.993 | 0.783 | 0.789 | 0.786 | 0.997 | 0.806 | 0.919 | 0.859 |

| RandomPosOffset | 0.989 | 1.000 | 0.006 | 0.011 | 0.930 | 0.095 | 0.933 | 0.173 |

| ConstSpeed | 0.994 | 0.749 | 0.778 | 0.763 | 0.999 | 0.955 | 0.962 | 0.958 |

| ConstSpeedOffset | 0.993 | 0.714 | 0.645 | 0.678 | 0.989 | 0.422 | 0.973 | 0.589 |

| RandomSpeed | 0.993 | 0.763 | 0.641 | 0.696 | 0.996 | 0.774 | 0.922 | 0.841 |

| RandomSpeedOffset | 0.990 | 0.879 | 0.208 | 0.336 | 0.950 | 0.140 | 0.835 | 0.240 |

| EventualStop | 0.995 | 0.869 | 0.673 | 0.759 | 0.978 | 0.261 | 0.948 | 0.409 |

| Disruptive | 0.988 | 0.568 | 0.517 | 0.542 | 0.987 | 0.490 | 0.839 | 0.619 |

| DataReplay | 0.989 | 0.548 | 0.105 | 0.176 | 0.972 | 0.244 | 0.710 | 0.363 |

| StaleMessages | 0.997 | 0.964 | 0.763 | 0.852 | 0.997 | 0.815 | 0.991 | 0.894 |

| DoS | 0.992 | 0.870 | 0.975 | 0.920 | 0.997 | 0.958 | 0.965 | 0.961 |

| DoSRandom | 0.972 | 0.681 | 0.706 | 0.693 | 0.992 | 0.876 | 0.884 | 0.880 |

| DoSDisruptive | 0.977 | 0.668 | 0.598 | 0.631 | 0.994 | 0.907 | 0.907 | 0.907 |

| GridSybil | 0.987 | 0.935 | 0.859 | 0.895 | 0.998 | 0.978 | 0.966 | 0.972 |

| DataReplaySybil | 0.990 | 0.484 | 0.163 | 0.244 | 0.984 | 0.305 | 0.773 | 0.438 |

| DoSRandomSybil | 0.975 | 0.549 | 0.648 | 0.594 | 0.992 | 0.875 | 0.853 | 0.864 |

| DoSDisruptiveSybil | 0.977 | 0.644 | 0.533 | 0.583 | 0.995 | 0.912 | 0.932 | 0.922 |

| Model | Balancing | Acc. | F1 | Balanced Accuracy | Cohen’s Kappa | ROC-AUC |

|---|---|---|---|---|---|---|

| LSTM | No SMOTE | 0.8896 | 0.8798 | 0.6915 | 0.8243 | 0.9793 |

| GRU | No SMOTE | 0.8937 | 0.8837 | 0.6876 | 0.8305 | 0.9806 |

| Bi-LSTM | No SMOTE | 0.9180 | 0.9093 | 0.7391 | 0.8673 | 0.9853 |

| LSTM | SMOTE | 0.7404 | 0.7918 | 0.8941 | 0.6351 | 0.9890 |

| GRU | SMOTE | 0.7370 | 0.7881 | 0.8895 | 0.6299 | 0.9895 |

| Bi-LSTM | SMOTE | 0.8485 | 0.8755 | 0.9210 | 0.7655 | 0.9955 |
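The Cohen’s Kappa column in the summary table above measures agreement between predictions and ground truth after discounting the agreement expected by chance. A minimal sketch of the standard formula follows; the function name is hypothetical and the example labels are illustrative, not data from the study.

```python
import numpy as np

def cohens_kappa(y_true, y_pred):
    """kappa = (p_o - p_e) / (1 - p_e) for arbitrary class labels."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    classes = np.union1d(y_true, y_pred)
    p_o = np.mean(y_true == y_pred)  # observed agreement
    # Chance agreement: product of marginal frequencies, summed over classes.
    p_e = sum(np.mean(y_true == c) * np.mean(y_pred == c) for c in classes)
    return float((p_o - p_e) / (1 - p_e)) if p_e < 1 else 0.0

print(cohens_kappa([0, 0, 1, 1], [0, 0, 1, 0]))
```

Because chance agreement depends on the class marginals, Kappa can fall when SMOTE shifts predictions away from the majority class even while balanced accuracy rises, which is the pattern visible in the table.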

References

- [1] Sonker, A.; Gupta, R.K. A new procedure for misbehavior detection in vehicular ad-hoc networks using machine learning. Int. J. Electr. Comput. Eng. 2021, 11, 2535–2547.

- [2] Son, L.H. Dealing with the new user cold-start problem in recommender systems: A comparative review. Inf. Syst. 2016, 58, 87–104.

- [3] Xu, X.; Wang, Y.; Wang, P. Comprehensive Review on Misbehavior Detection for Vehicular Ad Hoc Networks. J. Adv. Transp. 2022, 2022, 4725805.

- [4] Nobahari, A.; Bakhshayeshi Avval, D.; Akhbari, A.; Nobahary, S. Investigation of Different Mechanisms to Detect Misbehaving Nodes in Vehicle Ad-Hoc Networks (VANETs). Secur. Commun. Networks 2023, 2023, 4020275.

- [5] Dineshkumar, R.; Siddhanti, P.; Kodati, S.; Shnain, A.H.; Malathy, V. Misbehavior Detection for Position Falsification Attacks in VANETs Using Ensemble Machine Learning. In Proceedings of the 2024 Second International Conference on Data Science and Information System (ICDSIS), IEEE, Hassan, India, 17–18 May 2024; pp. 1–5.

- [6] Saudagar, S.; Ranawat, R. An amalgamated novel IDS model for misbehaviour detection using VeReMiNet. Comput. Stand. Interfaces 2024, 88, 103783.

- [7] Federal Communications Commission. Amendment of Parts 2 and 90 of the Commission’s Rules to Allocate the 5.850–5.925 GHz Band to the Mobile Service for Dedicated Short Range Communications of Intelligent Transportation Systems; Report and Order FCC 99-305; Federal Communications Commission: Washington, DC, USA, 1999; ET Docket No. 98-95.

- [8] Anwar, W.; Franchi, N.; Fettweis, G. Physical Layer Evaluation of V2X Communications Technologies: 5G NR-V2X, LTE-V2X, IEEE 802.11bd, and IEEE 802.11p. In Proceedings of the IEEE 90th Vehicular Technology Conference (VTC2019-Fall), Honolulu, HI, USA, 22–25 September 2019; pp. 1–7.

- [9] Kenney, J.B. Dedicated Short-Range Communications (DSRC) Standards in the United States. Proc. IEEE 2011, 99, 1162–1182.

- [10] Standard J2735; Dedicated Short Range Communications (DSRC) Message Set Dictionary. SAE International: Warrendale, PA, USA, 2016.

- [11] Arif, M.; Wang, G.; Bhuiyan, M.Z.A.; Wang, T.; Chen, J. A Survey on Security Attacks in VANETs: Communication, Applications and Challenges. Veh. Commun. 2019, 19, 100179.

- [12] Alzahrani, M.; Idris, M.Y.; Ghaleb, F.A.; Budiarto, R. An Improved Robust Misbehavior Detection Scheme for Vehicular Ad Hoc Network. IEEE Access 2022, 10, 111241–111253.

- [13] Lyamin, N.; Vinel, A.; Jonsson, M.; Loo, J. Real-Time Detection of Denial-of-Service Attacks in IEEE 802.11p Vehicular Networks. IEEE Commun. Lett. 2014, 18, 110–113.

- [14] Hammi, B.; Idir, Y.M.; Zeadally, S.; Khatoun, R.; Nebhen, J. Is it Really Easy to Detect Sybil Attacks in C-ITS Environments: A Position Paper. IEEE Trans. Intell. Transp. Syst. 2022, 23, 15069–15078.

- [15] Boualouache, A.; Engel, T. A Survey on Machine Learning-based Misbehavior Detection Systems for 5G and Beyond Vehicular Networks. IEEE Commun. Surv. Tutorials 2023, 25, 1128–1172.

- [16] Grover, J.; Prajapati, N.K.; Laxmi, V.; Gaur, M.S. Machine Learning Approach for Multiple Misbehavior Detection in VANET. In Proceedings of the International Conference on Advances in Computing and Communications, Kochi, India, 22–24 July 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 644–653.

- [17] So, S.; Sharma, P.; Petit, J. Integrating Plausibility Checks and Machine Learning for Misbehavior Detection in VANET. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), IEEE, Orlando, FL, USA, 17–20 December 2018; pp. 564–571.

- [18] Sharma, P.; Jaekel, A. Machine Learning Based Misbehaviour Detection in VANET Using Consecutive BSM Approach. IEEE Open J. Veh. Technol. 2021, 3, 1–14.

- [19] Zhang, C.; Chen, K.; Zeng, X.; Xue, X. Misbehavior Detection Based on Support Vector Machine and Dempster-Shafer Theory of Evidence in VANETs. IEEE Access 2018, 6, 59860–59870.

- [20] Kamel, J.; Haidar, F.; Jemaa, I.B.; Kaiser, A.; Lonc, B.; Urien, P. A Misbehavior Authority System for Sybil Attack Detection in C-ITS. In Proceedings of the 2019 IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), IEEE, New York City, NY, USA, 10–12 October 2019; pp. 1117–1123.

- [21] Alladi, T.; Kohli, V.; Chamola, V.; Yu, F.R. Securing the Internet of Vehicles: A Deep Learning-Based Classification Framework. IEEE Netw. Lett. 2021, 3, 94–97.

- [22] Alladi, T.; Gera, B.; Agrawal, A.; Chamola, V.; Yu, F.R. DeepADV: A deep neural network framework for anomaly detection in VANETs. IEEE Trans. Veh. Technol. 2021, 70, 12013–12023.

- [23] Campos, E.M.; Hernandez-Ramos, J.L.; Vidal, A.G.; Baldini, G.; Skarmeta, A. Misbehavior Detection in Intelligent Transportation Systems Based on Federated Learning. Internet Things 2024, 25, 101127.

- [24] Gurjar, D.; Grover, J.; Kheterpal, V.; Vasilakos, A. Federated Learning-Based Misbehavior Classification System for VANET Intrusion Detection. J. Intell. Inf. Syst. 2025, 63, 807–830.

- [25] Kumar, A.; Sharma, P.; Singh, R. Enhanced VANET Security Using XGBoost with Temporal Feature Engineering. Comput. Networks 2022, 215, 109183.

- [26] Chen, X.; Wang, J.; Liu, F. Attention-Enhanced LSTM for Real-Time VANET Misbehavior Detection. Comput. Commun. 2024, 198, 45–56.

- [27] Khan, W.; Ahmad, J.; Alasbali, N.; Al Mazroa, A.; Alshehri, M.S.; Khan, M.S. A novel transformer-based explainable AI approach using SHAP for intrusion detection in vehicular ad hoc networks. Comput. Networks 2025, 270, 111575.

- [28] Yuce, M.F.; Erturk, M.A.; Aydin, M.A. Misbehavior detection with spatio-temporal graph neural networks. Comput. Electr. Eng. 2024, 116, 109198.

- [29] van der Heijden, R.W.; Lukaseder, T.; Kargl, F. VeReMi: A Dataset for Comparable Evaluation of Misbehavior Detection in VANETs. In Proceedings of the International Conference on Security and Privacy in Communication Systems, Singapore, 8–10 August 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 318–337.

- [30] Kamel, J.; Wolf, M.; van der Heijden, R.W.; Kaiser, A.; Urien, P.; Kargl, F. VeReMi Extension: A Dataset for Comparable Evaluation of Misbehavior Detection in VANETs. In Proceedings of the ICC 2020-IEEE International Conference on Communications, Dublin, Ireland, 7–11 June 2020; pp. 1–6.

- [31] Kamel, J.; Ansari, M.R.; Petit, J.; Kaiser, A.; Jemaa, I.B.; Urien, P. Simulation Framework for Misbehavior Detection in Vehicular Networks. IEEE Trans. Veh. Technol. 2020, 69, 6631–6643.

- [32] Codeca, L.; Frank, R.; Faye, S.; Engel, T. Luxembourg SUMO Traffic (LuST) Scenario: Traffic Demand Evaluation. IEEE Intell. Transp. Syst. Mag. 2017, 9, 52–63.

- [33] Lv, C.; Lam, C.C.; Cao, Y.; Wang, Y.; Kaiwartya, O.; Wu, C. Leveraging Geographic Information and Social Indicators for Misbehavior Detection in VANETs. IEEE Trans. Consum. Electron. 2024, 70, 4411–4424.

- [34] Valentini, E.P.; Rocha Filho, G.P.; De Grande, R.E.; Ranieri, C.M.; Júnior, L.A.P.; Meneguette, R.I. A novel mechanism for misbehavior detection in vehicular networks. IEEE Access 2023, 11, 68113–68126.

- [35] Naqvi, I.; Chaudhary, A.; Kumar, A. A Neuro-Genetic Security Framework for Misbehavior Detection in VANETs. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 410–418.

- [36] Sangwan, A.; Sangwan, A.; Singh, R.P. A Classification of Misbehavior Detection Schemes for VANETs: A Survey. Wirel. Pers. Commun. 2023, 129, 285–322.

- [37] Patel, N.; Gupta, R.; Agarwal, S. Advanced Ensemble Methods for Large-Scale VANET Misbehavior Detection. IEEE Trans. Intell. Transp. Syst. 2024, 25, 2847–2859.

- [38] Alladi, T.; Agrawal, A.; Gera, B.; Chamola, V.; Sikdar, B.; Guizani, M. Deep neural networks for securing IoT enabled vehicular ad-hoc networks. In Proceedings of the ICC 2021-IEEE International Conference on Communications, IEEE, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6.

- [39] Liu, W.; Zhang, M.; Chen, L. Graph Neural Networks for Vehicular Behavior Analysis in VANETs. IEEE Trans. Veh. Technol. 2023, 72, 9876–9887.

- [40] Wang, H.; Li, Q.; Zhou, Y. Transformer-Based Architectures for VANET Security: A Comprehensive Study. IEEE Netw. 2024, 38, 112–119.

- [41] Kim, S.H.; Park, M.J.; Lee, D.W. Hybrid SVM-GRU Architecture for Enhanced VANET Intrusion Detection. J. Netw. Comput. Appl. 2023, 203, 103401.

- [42] Rodriguez, C.; Martinez, E.; Gonzalez, P. RF-LSTM Hybrid Framework for Large-Scale VANET Misbehavior Detection. IEEE Trans. Netw. Serv. Manag. 2024, 21, 2234–2245.

| Study | Year | Approach | Dataset | Accuracy | F1-Score | Speed (Samples/s) |
|---|---|---|---|---|---|---|
| Grover et al. [16] | 2011 | Random Forest | Custom | 93–95% | 0.92 | 20,000 |
| Sharma & Jaekel [18] | 2021 | ML Ensemble | VeReMi | 98.5% | 0.97 | 15,800 |
| Kumar et al. [25] | 2022 | XGBoost + Temporal | VeReMi | 96.2% | 0.95 | 16,500 |
| Kamel et al. [20] | 2019 | LSTM | VeReMi | 89.5% | 0.87 | 9200 |
| Chen et al. [26] | 2024 | Attention-LSTM | VeReMi Ext | 93.1% | 0.91 | 9100 |
| Khan et al. [27] | 2025 | Transformer + SHAP | VeReMi Ext | 96.2% | – | 7200 |
| Yüce et al. [28] | 2024 | Spatiotemporal GNN | Converted MBD | 99.9% | – | 4800 |

| Identified Gap in Literature | VeMisNet Contribution |
|---|---|
| Systematic Architecture Comparison | |
| Inconsistent DL evaluation protocols | Unified LSTM/GRU/Bi-LSTM comparison |
| Different datasets and feature sets | Identical setup with 14 optimized features |
| Domain-Informed Feature Engineering | |
| Algorithm emphasis over feature design | 14 spatiotemporal DSRC-aware features |
| Limited communication pattern analysis | Inter-message timing and neighbor density |
| Large-Scale Validation | |
| Evaluation limited to <1 M samples | Testing from 100 K to 2 M records |
| Unknown scalability characteristics | Consistent performance across scales |
| Class Imbalance Treatment | |
| Inadequate handling of imbalanced data | Post-split SMOTE with validation |
| Poor minority attack detection | 40–50% improvement in rare classes |
| Deployment Readiness | |
| Theoretical focus without practical analysis | Real-time inference: 8437–13,683 samples/s |
| No deployment guidelines provided | Sub-100 ms response capability |

| Label | Attack Name | Category |
|---|---|---|
| 0 | Legitimate Vehicle | – |
| 1–4 | Constant Position | Position Falsification |
| | Constant Position Offset | |
| | Random Position | |
| | Random Position Offset | |
| 5–8 | Constant Speed | Speed Manipulation |
| | Constant Speed Offset | |
| | Random Speed | |
| | Random Speed Offset | |
| 9–12 | Eventual Stop | Freeze/Replay Attacks |
| | Disruptive | |
| | Data Replay | |
| | Stale Messages | |
| 13–15 | Denial of Service (DoS) | DoS Variants |
| | DoS Random | |
| | DoS Disruptive | |
| 16–19 | Grid Sybil | Sybil-Based Attacks |
| | Data Replay Sybil | |
| | DoS Random Sybil | |
| | DoS Disruptive Sybil | |
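The label ranges in the table can be turned into a small lookup, which is handy when grouping per-class results by attack family. The following is a minimal sketch, not the authors' code: the ranges and category names are copied from the table, while `LABEL_RANGES`, `category_for_label`, and the name "Legitimate" for label 0 are illustrative assumptions.

```python
# Label ranges and category names copied from the attack table.
# The container and function names are hypothetical, chosen for illustration.
LABEL_RANGES = [
    (0, 0, "Legitimate"),  # assumed name; the table lists no category for label 0
    (1, 4, "Position Falsification"),
    (5, 8, "Speed Manipulation"),
    (9, 12, "Freeze/Replay Attacks"),
    (13, 15, "DoS Variants"),
    (16, 19, "Sybil-Based Attacks"),
]


def category_for_label(label: int) -> str:
    """Return the attack category whose inclusive label range contains `label`."""
    for low, high, category in LABEL_RANGES:
        if low <= label <= high:
            return category
    raise ValueError(f"unknown label: {label}")


if __name__ == "__main__":
    for label in (0, 3, 11, 14, 19):
        print(label, category_for_label(label))
```

Keeping the ranges in one list means a change to the labelling scheme only has to be made in one place.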

| Feature Count | Category | Selected Features |
|---|---|---|
| 5 | Temporal and ID | Receive Time, Receiver Label, Receiver ID, Module ID, Send Time |
| 10 | Temporal and ID | Previous 5 features |
| | Communication | + Sender ID, Sender Pseudo, Message ID |
| | Spatial | + Position |
| 15 | Temporal and ID | Previous 5 features |
| | Communication | + Sender ID, Sender Pseudo, Message ID |
| | Spatial | + Position, Position Noise |
| | Kinematic | + Speed, Speed Noise |
| 20 | Temporal and ID | Previous 5 features |
| | Communication | + Sender ID, Sender Pseudo, Message ID |
| | Spatial | + Position, Position Noise |
| | Kinematic | + Speed, Speed Noise |
| | Dynamic | + Acceleration, Acceleration Noise |
| 25 | Temporal and ID | Previous 5 features |
| | Communication | + Sender ID, Sender Pseudo, Message ID |
| | Spatial | + Position, Position Noise |
| | Kinematic | + Speed, Speed Noise |
| | Dynamic | + Acceleration, Acceleration Noise |
| | Directional | + Heading, Heading Noise |

| Class Category | Sample Count | Percentage | Imbalance Ratio | Detection Difficulty |
|---|---|---|---|---|
| Legitimate Vehicles | 297,822 | 59.6% | 1:1 (baseline) | Low |
| Sybil Attacks | 65,649 | 13.1% | 4.5:1 | Moderate |
| DoS Attacks | 60,213 | 12.0% | 4.9:1 | Moderate |
| Position Attacks | 27,653 | 5.5% | 10.8:1 | High |
| Speed Attacks | 24,038 | 4.8% | 12.4:1 | Very High |
| Replay Attacks | 23,625 | 4.7% | 12.7:1 | Very High |
| Total | 499,000 | 100.0% | - | - |
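The share and imbalance-ratio columns can be derived from the sample counts alone. The sketch below assumes the ratio is defined as the majority (legitimate) count divided by the class count; the sample counts are copied from the table, and the function name `imbalance_summary` is hypothetical. Values computed from raw counts may differ in the last digit from the rounded figures in the table.

```python
# Sample counts copied from the class distribution table.
counts = {
    "Legitimate Vehicles": 297_822,
    "Sybil Attacks": 65_649,
    "DoS Attacks": 60_213,
    "Position Attacks": 27_653,
    "Speed Attacks": 24_038,
    "Replay Attacks": 23_625,
}


def imbalance_summary(class_counts):
    """Return (total, per-class dict) with share in percent and majority:class ratio."""
    total = sum(class_counts.values())
    majority = max(class_counts.values())
    summary = {}
    for name, n in class_counts.items():
        summary[name] = {
            "share_pct": 100.0 * n / total,
            # Assumed definition: majority-class count divided by this class count.
            "ratio_to_majority": majority / n,
        }
    return total, summary


total, summary = imbalance_summary(counts)

if __name__ == "__main__":
    print(f"total samples: {total}")
    for name, row in summary.items():
        print(f"{name:20s} {row['share_pct']:5.1f}%  {row['ratio_to_majority']:.1f}:1")
```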

| Metric | Formula |
|---|---|
| Accuracy | |
| Balanced Accuracy | |
| Precision | |
| Recall | |
| F1-Score | |
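For reference, the conventional definitions of these metrics are sketched below in terms of true/false positives and negatives. This assumes the paper uses the standard textbook forms; the symbol $K$ (number of classes) and the per-class subscript $k$ are notation introduced here, and balanced accuracy is taken as the unweighted mean of per-class recall. In a multi-class setting, precision, recall, and F1 are computed per class and then averaged. Support-weighted recall is mathematically equal to overall accuracy.

```latex
\begin{align}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall} &= \frac{TP}{TP + FN} \\
\text{F1-Score} &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \\
\text{Balanced Accuracy} &= \frac{1}{K} \sum_{k=1}^{K} \frac{TP_k}{TP_k + FN_k}
\end{align}
```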

| Records | Metric | LSTM | GRU | Bi-LSTM |
|---|---|---|---|---|
| 100 k | Accuracy | 0.8373 | 0.8439 | 0.8432 |
| | F1-Score | 0.8280 | 0.8346 | 0.8352 |
| | Recall | 0.8373 | 0.8439 | 0.8432 |
| | Precision | 0.8432 | 0.8516 | 0.8476 |
| 500 k | Accuracy | 0.8053 | 0.8067 | 0.8163 |
| | F1-Score | 0.7956 | 0.7974 | 0.8065 |
| | Recall | 0.8053 | 0.8067 | 0.8163 |
| | Precision | 0.8211 | 0.8216 | 0.8362 |
| 1 M | Accuracy | 0.8342 | 0.7976 | 0.8046 |
| | F1-Score | 0.8268 | 0.7888 | 0.7959 |
| | Recall | 0.8342 | 0.7976 | 0.8046 |
| | Precision | 0.8353 | 0.8046 | 0.8204 |
| 2 M | Accuracy | 0.8016 | 0.7982 | 0.8062 |
| | F1-Score | 0.7909 | 0.7878 | 0.7959 |
| | Recall | 0.8016 | 0.7982 | 0.8062 |
| | Precision | 0.8234 | 0.8178 | 0.8283 |

| Records | Metric | LSTM | GRU | Bi-LSTM |
|---|---|---|---|---|
| 500 k | Accuracy | 0.9355 (0.9344, 0.9366) | 0.9359 (0.9344, 0.9373) | 0.9702 (0.9692, 0.9712) |
| | F1-Score | 0.9321 (0.9308, 0.9334) | 0.9330 (0.9314, 0.9346) | 0.9694 (0.9684, 0.9705) |
| | Precision | 0.9332 (0.9319, 0.9345) | 0.9331 (0.9314, 0.9347) | 0.9694 (0.9684, 0.9705) |
| 1 M | Accuracy | 0.9022 (0.9011, 0.9032) | 0.9043 (0.9032, 0.9054) | 0.9449 (0.9438, 0.9459) |
| | F1-Score | 0.8969 (0.8957, 0.8981) | 0.8992 (0.8980, 0.9005) | 0.9434 (0.9422, 0.9444) |
| | Precision | 0.8975 (0.8963, 0.8987) | 0.8995 (0.8983, 0.9007) | 0.9437 (0.9426, 0.9448) |
| 2 M | Accuracy | 0.8872 (0.8856, 0.8888) | 0.8903 (0.8887, 0.8919) | 0.9272 (0.9258, 0.9286) |
| | F1-Score | 0.8830 (0.8813, 0.8847) | 0.8865 (0.8848, 0.8882) | 0.9255 (0.9240, 0.9270) |
| | Precision | 0.8845 (0.8828, 0.8862) | 0.8875 (0.8858, 0.8892) | 0.9260 (0.9245, 0.9275) |

| Records | Metric | LSTM | GRU | Bi-LSTM |
|---|---|---|---|---|
| 500 k | Training Time (min) | 136.6 | 158.9 | 158.3 |
| | Mean Latency (ms) | 39.31 | 40.41 | 39.68 |
| | Missed Attack Rate (%) | 5.04 | 5.16 | 2.32 |
| 1 M | Training Time (min) | 255.2 | 208.8 | 259.2 |
| | Mean Latency (ms) | 38.26 | 39.83 | 38.83 |
| | Missed Attack Rate (%) | 7.35 | 7.35 | 3.82 |
| 2 M | Training Time (min) | 357.0 | 282.0 | 389.0 |
| | Mean Latency (ms) | 37.5 | 39.0 | 38.0 |
| | Missed Attack Rate (%) | 8.08 | 7.94 | 4.97 |

| Scaling Transition | Accuracy Change (%): LSTM | Accuracy Change (%): GRU | Accuracy Change (%): Bi-LSTM | Missed Attack Rate Change: LSTM | Missed Attack Rate Change: GRU | Missed Attack Rate Change: Bi-LSTM |
|---|---|---|---|---|---|---|
| 500 k → 1 M | −3.33 | −3.16 | −2.53 | +2.31% | +2.19% | +1.50% |
| 1 M → 2 M | −1.50 | −1.40 | −1.77 | +0.73% | +0.59% | +1.15% |
| Total (500 k → 2 M) | −4.83 | −4.56 | −4.30 | +3.04% | +2.78% | +2.65% |
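The scaling-transition entries are plain differences, in percentage points, between values reported at two dataset sizes. As a minimal sketch, the accuracy changes can be recomputed from the 500 k, 1 M, and 2 M accuracies listed with confidence intervals two tables earlier; the dictionary layout and the helper name `change_pp` are illustrative assumptions.

```python
# Accuracy values (fractions in [0, 1]) copied from the accuracy table
# that reports 500 k / 1 M / 2 M results with confidence intervals.
accuracy = {
    "LSTM": {"500 k": 0.9355, "1 M": 0.9022, "2 M": 0.8872},
    "GRU": {"500 k": 0.9359, "1 M": 0.9043, "2 M": 0.8903},
    "Bi-LSTM": {"500 k": 0.9702, "1 M": 0.9449, "2 M": 0.9272},
}


def change_pp(values, start, end):
    """Difference between two fractional scores, in percentage points."""
    return round((values[end] - values[start]) * 100, 2)


if __name__ == "__main__":
    for model, values in accuracy.items():
        step1 = change_pp(values, "500 k", "1 M")
        step2 = change_pp(values, "1 M", "2 M")
        total = change_pp(values, "500 k", "2 M")
        print(f"{model:8s} {step1:+.2f}  {step2:+.2f}  total {total:+.2f}")
```

The same subtraction applied to the missed attack rates in the preceding table reproduces the right-hand columns.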

| Dataset Size | Features Count | GRU Acc | GRU F1 | GRU Prec | LSTM Acc | LSTM F1 | LSTM Prec | Bi-LSTM Acc | Bi-LSTM F1 | Bi-LSTM Prec |
|---|---|---|---|---|---|---|---|---|---|---|
| 100 k | 5 | 0.6633 | 0.5290 | 0.4400 | 0.6633 | 0.5290 | 0.4400 | 0.6633 | 0.5291 | 0.4400 |
| | 10 | 0.7125 | 0.6128 | 0.5480 | 0.7179 | 0.6195 | 0.5521 | 0.7189 | 0.6201 | 0.5583 |
| | 15 | 0.7150 | 0.6164 | 0.5558 | 0.7155 | 0.6171 | 0.5527 | 0.7169 | 0.6194 | 0.5560 |
| | 20 | 0.7180 | 0.6203 | 0.5559 | 0.7146 | 0.6129 | 0.5524 | 0.7197 | 0.6217 | 0.5786 |
| | 25 | 0.7151 | 0.6163 | 0.5567 | 0.7145 | 0.6085 | 0.5372 | 0.7160 | 0.6187 | 0.5563 |
| 500 k | 5 | 0.5962 | 0.4454 | 0.3554 | 0.5962 | 0.4454 | 0.3555 | 0.6633 | 0.5290 | 0.4400 |
| | 10 | 0.6357 | 0.5147 | 0.4560 | 0.6374 | 0.5170 | 0.4620 | 0.7189 | 0.6201 | 0.5583 |
| | 15 | 0.6376 | 0.5118 | 0.4608 | 0.6361 | 0.5157 | 0.4590 | 0.7169 | 0.6194 | 0.5560 |
| | 20 | 0.6389 | 0.5181 | 0.4646 | 0.6362 | 0.5159 | 0.4613 | 0.7197 | 0.6217 | 0.5786 |
| | 25 | 0.6366 | 0.5169 | 0.4627 | 0.6368 | 0.5171 | 0.4672 | 0.7160 | 0.6187 | 0.5563 |
| 1 M | 5 | 0.5841 | 0.4308 | 0.3413 | 0.5845 | 0.4308 | 0.3412 | 0.5841 | 0.4308 | 0.3412 |
| | 10 | 0.6202 | 0.4914 | 0.4546 | 0.6198 | 0.4929 | 0.4370 | 0.6216 | 0.4954 | 0.4519 |
| | 15 | 0.6217 | 0.4984 | 0.4392 | 0.6202 | 0.4922 | 0.4391 | 0.6212 | 0.4963 | 0.4371 |
| | 20 | 0.6204 | 0.4919 | 0.4359 | 0.6201 | 0.4917 | 0.4382 | 0.6220 | 0.4981 | 0.4369 |
| | 25 | 0.6203 | 0.4952 | 0.4376 | 0.6200 | 0.4938 | 0.4401 | 0.6215 | 0.4958 | 0.4459 |
| 2 M | 5 | 0.5858 | 0.4328 | 0.3431 | 0.5858 | 0.4328 | 0.3431 | 0.5858 | 0.4328 | 0.3431 |
| | 10 | 0.6198 | 0.4888 | 0.4402 | 0.6227 | 0.4950 | 0.4358 | 0.6195 | 0.4903 | 0.4330 |
| | 15 | 0.6210 | 0.4991 | 0.4316 | 0.6202 | 0.4905 | 0.4347 | 0.6105 | 0.4764 | 0.4147 |
| | 20 | 0.6206 | 0.4942 | 0.4277 | 0.6196 | 0.4881 | 0.4439 | 0.6090 | 0.4731 | 0.4097 |
| | 25 | 0.6226 | 0.4981 | 0.4341 | 0.6230 | 0.4957 | 0.4348 | 0.6133 | 0.4878 | 0.4267 |

| Records | Metric | LSTM | GRU | Bi-LSTM |
|---|---|---|---|---|
| 100 k | Accuracy | 0.8012 | 0.8023 | 0.8139 |
| | F1-Score | 0.7562 | 0.7562 | 0.7735 |
| | Precision | 0.7672 | 0.7522 | 0.7831 |
| 500 k | Accuracy | 0.7502 | 0.7406 | 0.7530 |
| | F1-Score | 0.6838 | 0.6710 | 0.6873 |
| | Precision | 0.6878 | 0.6476 | 0.7031 |
| 1 M | Accuracy | 0.7203 | 0.7212 | 0.7378 |
| | F1-Score | 0.6477 | 0.6489 | 0.6730 |
| | Precision | 0.6684 | 0.6683 | 0.6911 |
| 2 M | Accuracy | 0.7192 | 0.7157 | 0.7202 |
| | F1-Score | 0.6430 | 0.6399 | 0.6442 |
| | Precision | 0.6361 | 0.6157 | 0.6475 |

| Records | Metric | LSTM | GRU | Bi-LSTM |
|---|---|---|---|---|
| 500 k | Accuracy | 0.8896 (0.8877, 0.8915) | 0.8937 (0.8918, 0.8955) | 0.9180 (0.9163, 0.9197) |
| | F1-Score | 0.8798 (0.8777, 0.8819) | 0.8837 (0.8816, 0.8858) | 0.9093 (0.9074, 0.9113) |
| | Precision | 0.8845 (0.8824, 0.8866) | 0.8886 (0.8865, 0.8907) | 0.9148 (0.9131, 0.9165) |
| 1 M | Accuracy | 0.8686 (0.8671, 0.8700) | 0.8588 (0.8572, 0.8603) | 0.8896 (0.8883, 0.8911) |
| | F1-Score | 0.8501 (0.8484, 0.8517) | 0.8443 (0.8425, 0.8460) | 0.8780 (0.8766, 0.8797) |
| | Precision | 0.8498 (0.8480, 0.8516) | 0.8509 (0.8491, 0.8527) | 0.8845 (0.8830, 0.8861) |
| 2 M | Accuracy | 0.8476 (0.8458, 0.8494) | 0.8239 (0.8220, 0.8258) | 0.8612 (0.8596, 0.8628) |
| | F1-Score | 0.8204 (0.8184, 0.8224) | 0.8049 (0.8028, 0.8070) | 0.8467 (0.8450, 0.8484) |
| | Precision | 0.8151 (0.8130, 0.8172) | 0.8132 (0.8111, 0.8153) | 0.8542 (0.8525, 0.8559) |

| Records | Metric | LSTM | GRU | Bi-LSTM |
|---|---|---|---|---|
| 500 k | Training Time (min) | 42.3 | 39.7 | 45.1 |
| | Mean Latency (ms) | 35.87 | 36.14 | 35.99 |
| | Missed Attack Rate (%) | 11.05 | 10.77 | 8.98 |
| 1 M | Training Time (min) | 85.2 | 78.6 | 91.8 |
| | Mean Latency (ms) | 34.82 | 35.89 | 35.86 |
| | Missed Attack Rate (%) | 14.19 | 13.13 | 12.01 |
| 2 M | Training Time (min) | 178.5 | 162.3 | 195.2 |
| | Mean Latency (ms) | 34.5 | 35.7 | 35.8 |
| | Missed Attack Rate (%) | 16.85 | 16.42 | 14.53 |

| Scaling Transition | Accuracy Change (%): LSTM | Accuracy Change (%): GRU | Accuracy Change (%): Bi-LSTM | Missed Attack Rate Change: LSTM | Missed Attack Rate Change: GRU | Missed Attack Rate Change: Bi-LSTM |
|---|---|---|---|---|---|---|
| 500 k → 1 M | −2.10 | −3.49 | −2.84 | +3.14% | +2.36% | +3.03% |
| 1 M → 2 M | −2.10 | −3.49 | −2.84 | +2.66% | +3.29% | +2.52% |
| Total (500 k → 2 M) | −4.20 | −6.98 | −5.68 | +5.80% | +5.65% | +5.55% |

| Records | Metric | LSTM | GRU | Bi-LSTM |
|---|---|---|---|---|
| 500 k | Recall | 0.8896 (0.8877, 0.8915) | 0.8937 (0.8918, 0.8955) | 0.9180 (0.9163, 0.9197) |
| | MCC | 0.8267 (0.8238, 0.8296) | 0.8328 (0.8299, 0.8357) | 0.8688 (0.8661, 0.8715) |
| | Cohen’s κ | 0.8243 (0.8213, 0.8273) | 0.8305 (0.8275, 0.8335) | 0.8673 (0.8645, 0.8700) |
| | Balanced Accuracy | 0.6915 (0.6869, 0.6963) | 0.6876 (0.6829, 0.6924) | 0.7391 (0.7349, 0.7435) |
| | ROC-AUC (Macro) | 0.9793 | 0.9806 | 0.9853 |
| | ECE | 0.0089 | 0.0081 | 0.0064 |
| | NPV (%) | 99.58 | 99.62 | 99.67 |
| 1 M | Recall | 0.8686 (0.8671, 0.8700) | 0.8588 (0.8572, 0.8603) | 0.8896 (0.8883, 0.8911) |
| | MCC | 0.7914 (0.7892, 0.7936) | 0.7758 (0.7734, 0.7781) | 0.8259 (0.8240, 0.8280) |
| | Cohen’s κ | 0.7878 (0.7856, 0.7900) | 0.7729 (0.7705, 0.7753) | 0.8234 (0.8214, 0.8255) |
| | Balanced Accuracy | 0.6346 (0.6317, 0.6375) | 0.6301 (0.6272, 0.6332) | 0.7021 (0.6992, 0.7051) |
| | ROC-AUC (Macro) | 0.9675 | 0.9708 | 0.9792 |
| | ECE | 0.0072 | 0.0073 | 0.0054 |
| | NPV (%) | 98.82 | 98.87 | 98.98 |
| 2 M | Recall | 0.8476 (0.8458, 0.8494) | 0.8239 (0.8220, 0.8258) | 0.8612 (0.8596, 0.8628) |
| | MCC | 0.7561 (0.7536, 0.7586) | 0.7167 (0.7140, 0.7194) | 0.7830 (0.7808, 0.7852) |
| | Cohen’s κ | 0.7514 (0.7489, 0.7539) | 0.7153 (0.7126, 0.7180) | 0.7795 (0.7773, 0.7817) |
| | Balanced Accuracy | 0.5777 (0.5745, 0.5809) | 0.5737 (0.5704, 0.5770) | 0.6651 (0.6619, 0.6683) |
| | ROC-AUC (Macro) | 0.9557 | 0.9583 | 0.9701 |
| | ECE | 0.0095 | 0.0092 | 0.0071 |
| | NPV (%) | 98.05 | 98.12 | 98.29 |
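Balanced accuracy, Cohen's κ, and MCC all sit well below the recall figures in the table because they correct for class frequency or chance agreement. The sketch below illustrates this on a small, entirely hypothetical 3-class confusion matrix (rows are true classes, columns are predictions). It uses the standard multi-class definitions and is not the authors' evaluation code; all names are illustrative.

```python
import math

# Hypothetical confusion matrix: one dominant class and two rare ones.
# Rows = true class, columns = predicted class. Not data from the paper.
cm = [
    [950, 30, 20],
    [20, 25, 5],
    [15, 5, 10],
]


def _totals(cm):
    """Return (n_samples, true-class counts, predicted-class counts, n_correct)."""
    k = len(cm)
    true_counts = [sum(cm[i]) for i in range(k)]
    pred_counts = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    correct = sum(cm[i][i] for i in range(k))
    return sum(true_counts), true_counts, pred_counts, correct


def accuracy(cm):
    total, _, _, correct = _totals(cm)
    return correct / total


def balanced_accuracy(cm):
    """Unweighted mean of per-class recall."""
    recalls = [cm[i][i] / sum(cm[i]) for i in range(len(cm))]
    return sum(recalls) / len(recalls)


def cohen_kappa(cm):
    """Agreement beyond what the class marginals would give by chance."""
    total, true_counts, pred_counts, correct = _totals(cm)
    p_observed = correct / total
    p_expected = sum(t * p for t, p in zip(true_counts, pred_counts)) / total**2
    return (p_observed - p_expected) / (1 - p_expected)


def mcc(cm):
    """Multi-class Matthews correlation coefficient (Gorodkin's generalization)."""
    total, true_counts, pred_counts, correct = _totals(cm)
    numerator = correct * total - sum(t * p for t, p in zip(true_counts, pred_counts))
    denominator = math.sqrt(
        (total**2 - sum(p * p for p in pred_counts))
        * (total**2 - sum(t * t for t in true_counts))
    )
    return numerator / denominator


if __name__ == "__main__":
    print(f"accuracy          {accuracy(cm):.4f}")
    print(f"balanced accuracy {balanced_accuracy(cm):.4f}")
    print(f"Cohen's kappa     {cohen_kappa(cm):.4f}")
    print(f"MCC               {mcc(cm):.4f}")
```

On this toy matrix the overall accuracy is dominated by the majority class, while balanced accuracy is pulled down by the weak recall on the two rare classes. The table shows the same qualitative pattern.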

| Approach | Features | Acc (%) | F1 (%) | Rec (%) | Prec (%) | Improvement |
|---|---|---|---|---|---|---|
| UMAP Features | 10–20 | 71.97 | 62.17 | 71.97 | 57.86 | Baseline |
| Basic Kinematic | 8 | 81.40 | 77.30 | 81.40 | 78.10 | +9.42 (+13.06%) |
| Newly Added | 14 | 91.80 | 90.93 | 91.80 | 91.48 | +19.78 (+27.48%) |

| Records | Metric | LSTM | GRU | Bi-LSTM |
|---|---|---|---|---|
| 500 k | Accuracy | 0.9966 (0.9963, 0.9969) | 0.9963 (0.9960, 0.9966) | 0.9981 (0.9978, 0.9984) |
| | F1-Score | 0.9966 (0.9963, 0.9969) | 0.9963 (0.9960, 0.9966) | 0.9981 (0.9978, 0.9984) |
| | Precision | 0.9966 (0.9963, 0.9969) | 0.9963 (0.9960, 0.9966) | 0.9981 (0.9978, 0.9984) |
| 1 M | Accuracy | 0.9935 (0.9932, 0.9938) | 0.9927 (0.9924, 0.9930) | 0.9970 (0.9967, 0.9973) |
| | F1-Score | 0.9935 (0.9932, 0.9938) | 0.9926 (0.9923, 0.9929) | 0.9970 (0.9967, 0.9973) |
| | Precision | 0.9935 (0.9932, 0.9938) | 0.9927 (0.9924, 0.9930) | 0.9970 (0.9967, 0.9973) |
| 2 M | Accuracy | 0.9904 (0.9900, 0.9908) | 0.9891 (0.9887, 0.9895) | 0.9959 (0.9955, 0.9963) |
| | F1-Score | 0.9904 (0.9900, 0.9908) | 0.9891 (0.9887, 0.9895) | 0.9959 (0.9955, 0.9963) |
| | Precision | 0.9904 (0.9900, 0.9908) | 0.9891 (0.9887, 0.9895) | 0.9959 (0.9955, 0.9963) |

| Records | Metric | LSTM | GRU | Bi-LSTM |
|---|---|---|---|---|
| 500 k | Training Time (min) | 83.7 | 83.8 | 71.2 |
| | Mean Latency (ms) | 42.32 | 40.89 | 41.76 |
| | Missed Attack Rate (%) | 0.37 | 0.42 | 0.19 |
| 1 M | Training Time (min) | 251.8 | 207.6 | 230.0 |
| | Mean Latency (ms) | 37.92 | 39.59 | 40.90 |
| | Missed Attack Rate (%) | 0.73 | 0.82 | 0.33 |
| 2 M | Training Time (min) | 420.0 | 330.0 | 380.0 |
| | Mean Latency (ms) | 37.5 | 39.2 | 40.5 |
| | Missed Attack Rate (%) | 1.09 | 1.22 | 0.47 |

| Scaling Transition | Accuracy Change (%): LSTM | Accuracy Change (%): GRU | Accuracy Change (%): Bi-LSTM | Missed Attack Rate Change: LSTM | Missed Attack Rate Change: GRU | Missed Attack Rate Change: Bi-LSTM |
|---|---|---|---|---|---|---|
| 500 k → 1 M | −0.31 | −0.36 | −0.11 | +0.36% | +0.40% | +0.14% |
| 1 M → 2 M | −0.31 | −0.36 | −0.11 | +0.36% | +0.40% | +0.14% |
| Total (500 k → 2 M) | −0.62 | −0.72 | −0.22 | +0.72% | +0.80% | +0.28% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Youness, N.; Mostafa, A.; Sobh, M.A.; Bahaa, A.M.; Nagaty, K. VeMisNet: Enhanced Feature Engineering for Deep Learning-Based Misbehavior Detection in Vehicular Ad Hoc Networks. J. Sens. Actuator Netw. 2025, 14, 100. https://doi.org/10.3390/jsan14050100
