AI-Based Pedestrian Detection and Avoidance at Night Using Multiple Sensors

Abstract

1. Introduction

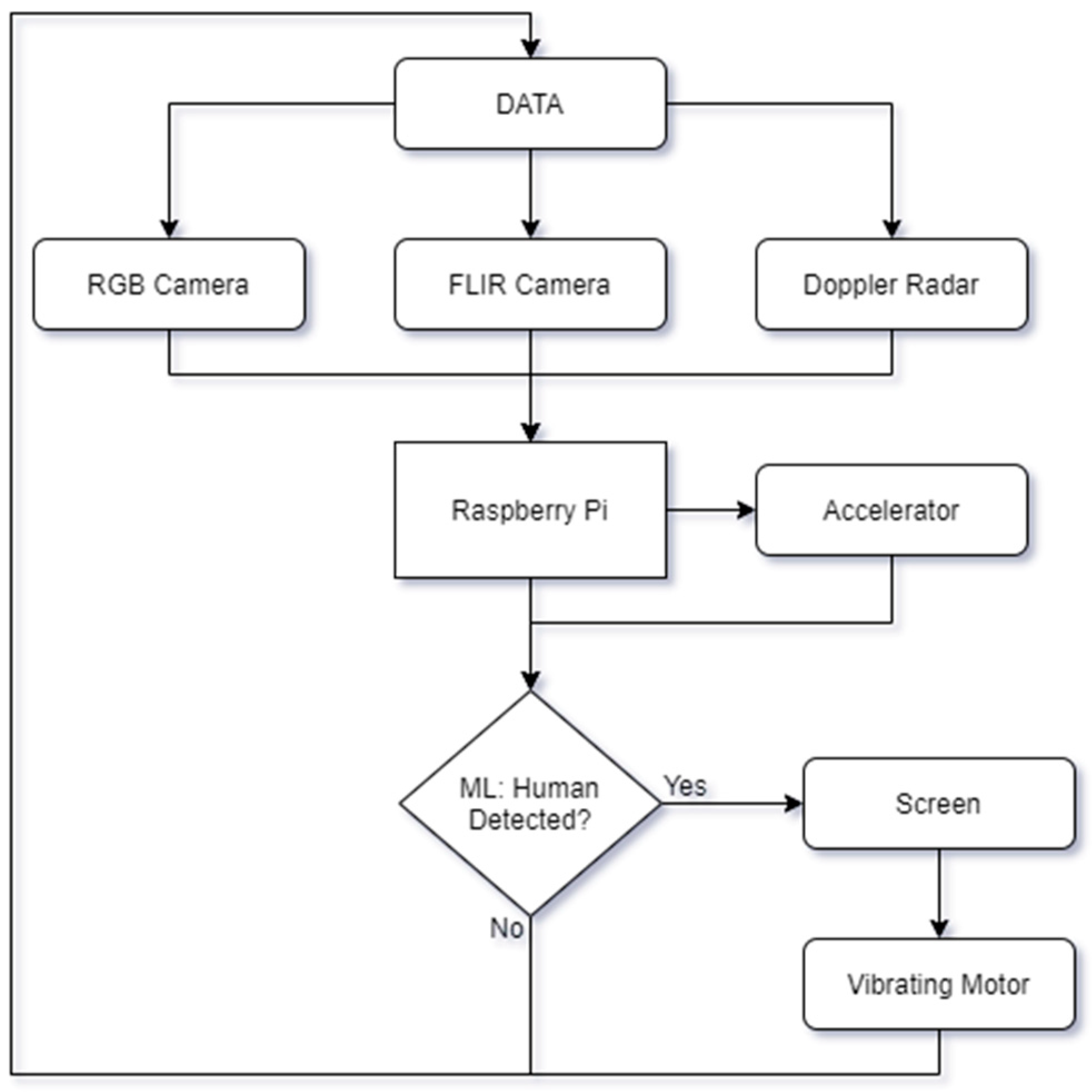

2. System Overview

2.1. System Design

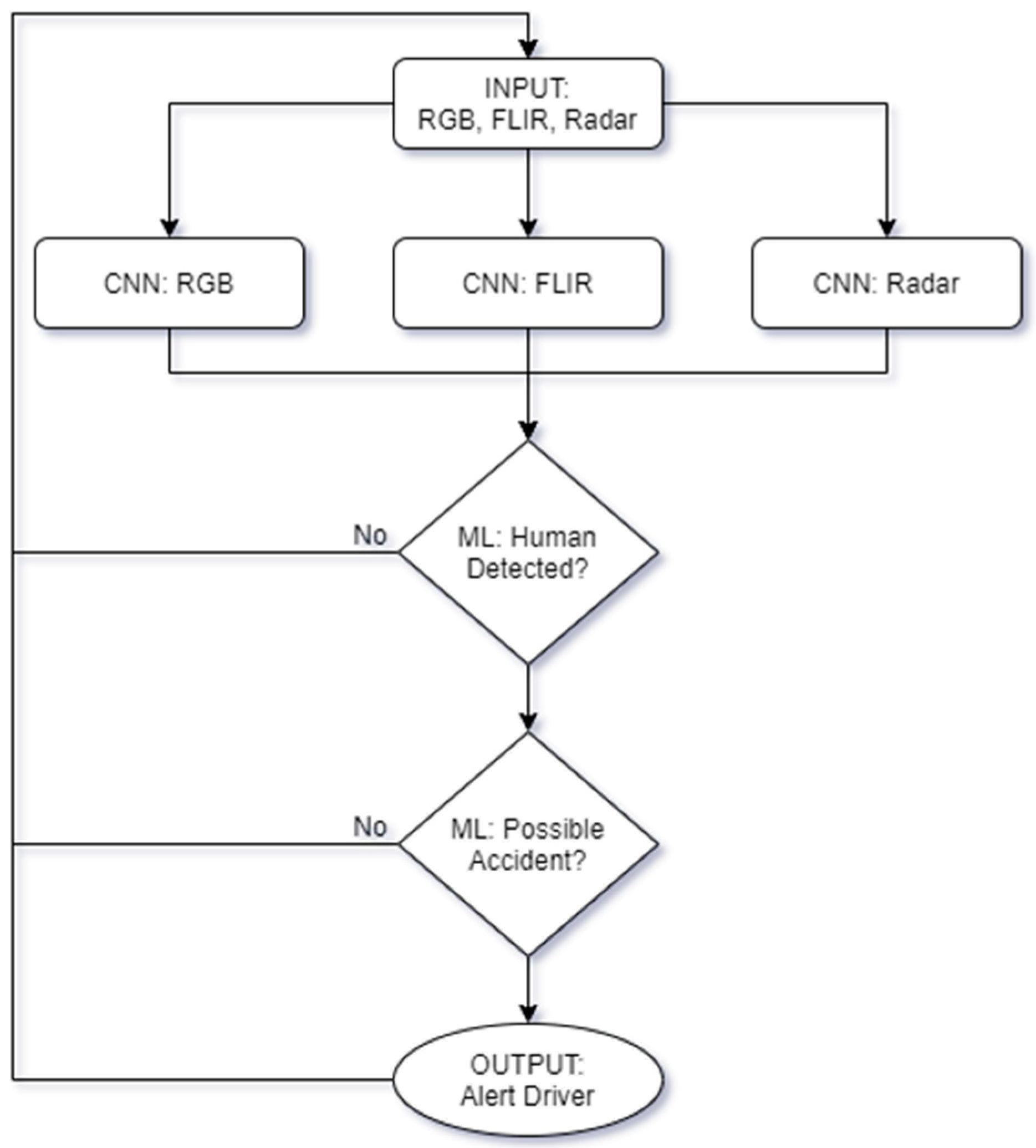

2.2. Multi-Sensor Data Fusion Algorithm

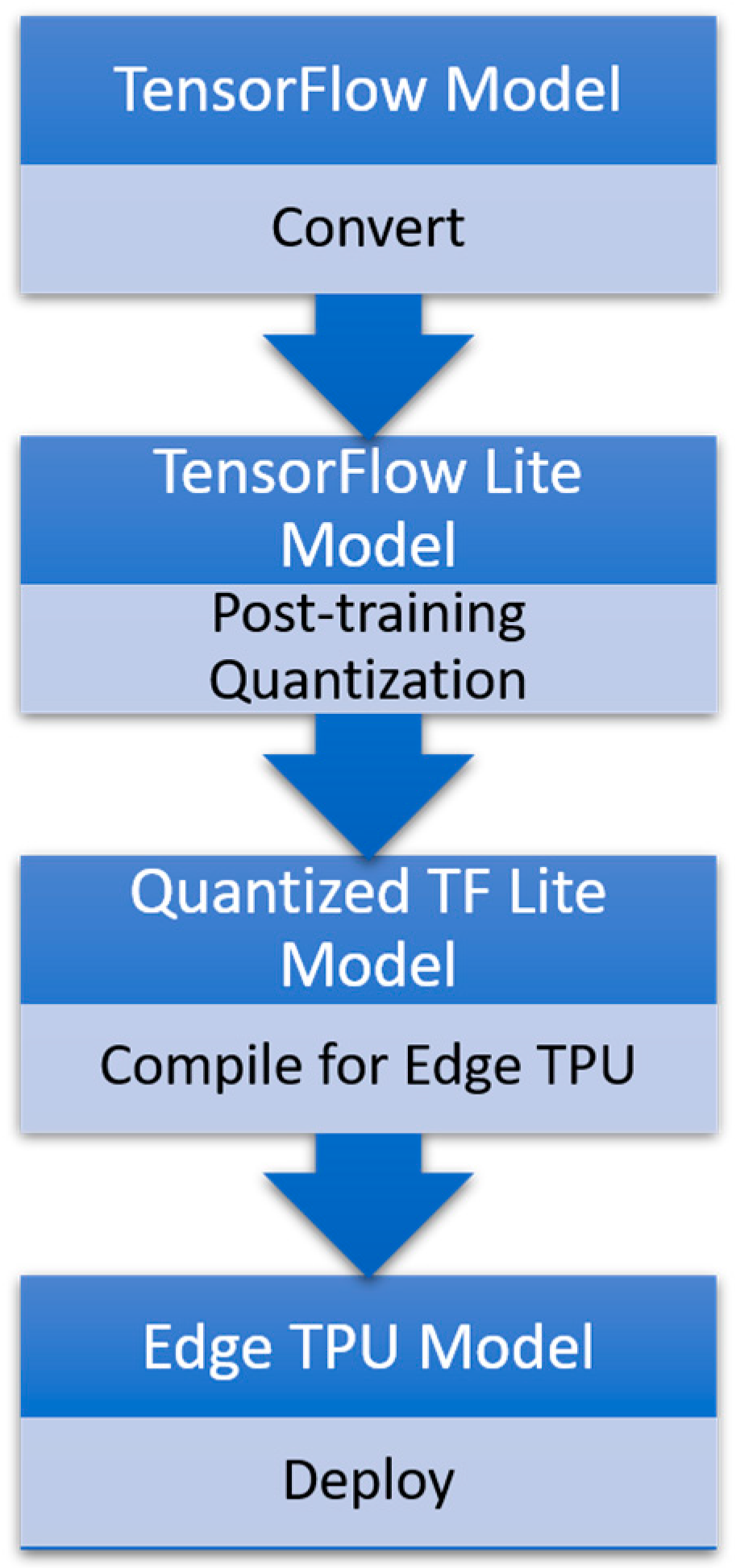
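The sketch below shows one common form of decision-level (late) fusion: per-sensor pedestrian confidences from the RGB camera, IR camera and radar are combined into a single score. It is a minimal Python sketch and is not the authors' fusion algorithm. The `SensorScores` container, the `fuse` and `pedestrian_detected` helpers, the per-sensor weights and the 0.5 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical per-sensor weights (assumption, not from the paper); a real
# system would tune these on validation data, e.g. trusting IR more at night.
DEFAULT_WEIGHTS: Dict[str, float] = {"rgb": 0.2, "ir": 0.5, "radar": 0.3}


@dataclass
class SensorScores:
    """Pedestrian confidence in [0, 1] per sensor; None if the sensor gave no output."""
    rgb: Optional[float] = None
    ir: Optional[float] = None
    radar: Optional[float] = None


def fuse(scores: SensorScores, weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted average of the available sensor confidences (late fusion)."""
    weights = DEFAULT_WEIGHTS if weights is None else weights
    total, weight_sum = 0.0, 0.0
    for name, w in weights.items():
        s = getattr(scores, name)
        if s is None:
            continue  # skip sensors that produced nothing for this frame
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"{name} score out of range: {s}")
        total += w * s
        weight_sum += w
    # Renormalize over the sensors that actually reported.
    return total / weight_sum if weight_sum > 0 else 0.0


def pedestrian_detected(scores: SensorScores, threshold: float = 0.5) -> bool:
    """Hypothetical decision rule: flag a pedestrian when the fused score meets the threshold."""
    return fuse(scores) >= threshold
```

Renormalizing over the reporting sensors lets the fused score degrade gracefully when one modality drops out, for example an RGB detector that returns nothing in darkness. With the assumed weights, `SensorScores(rgb=0.1, ir=0.9, radar=0.8)` fuses to 0.2·0.1 + 0.5·0.9 + 0.3·0.8 = 0.71.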

2.3. Deep Convolutional Neural Network Design

3. Pedestrian Detection Using a Video Camera

3.1. RGB Camera

3.2. Data Collection Using the RGB Camera

3.3. Performance Results

3.4. Limitations

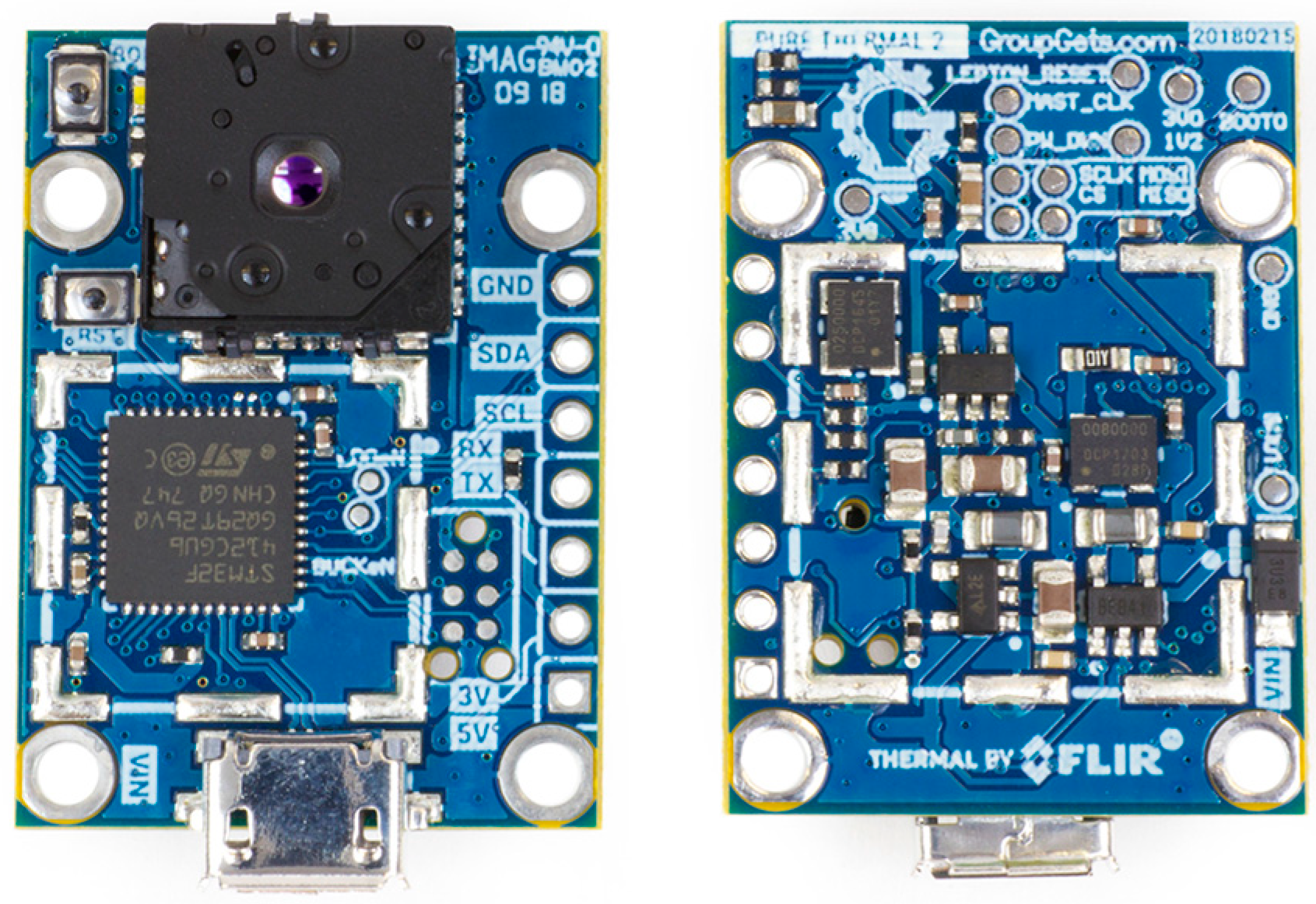

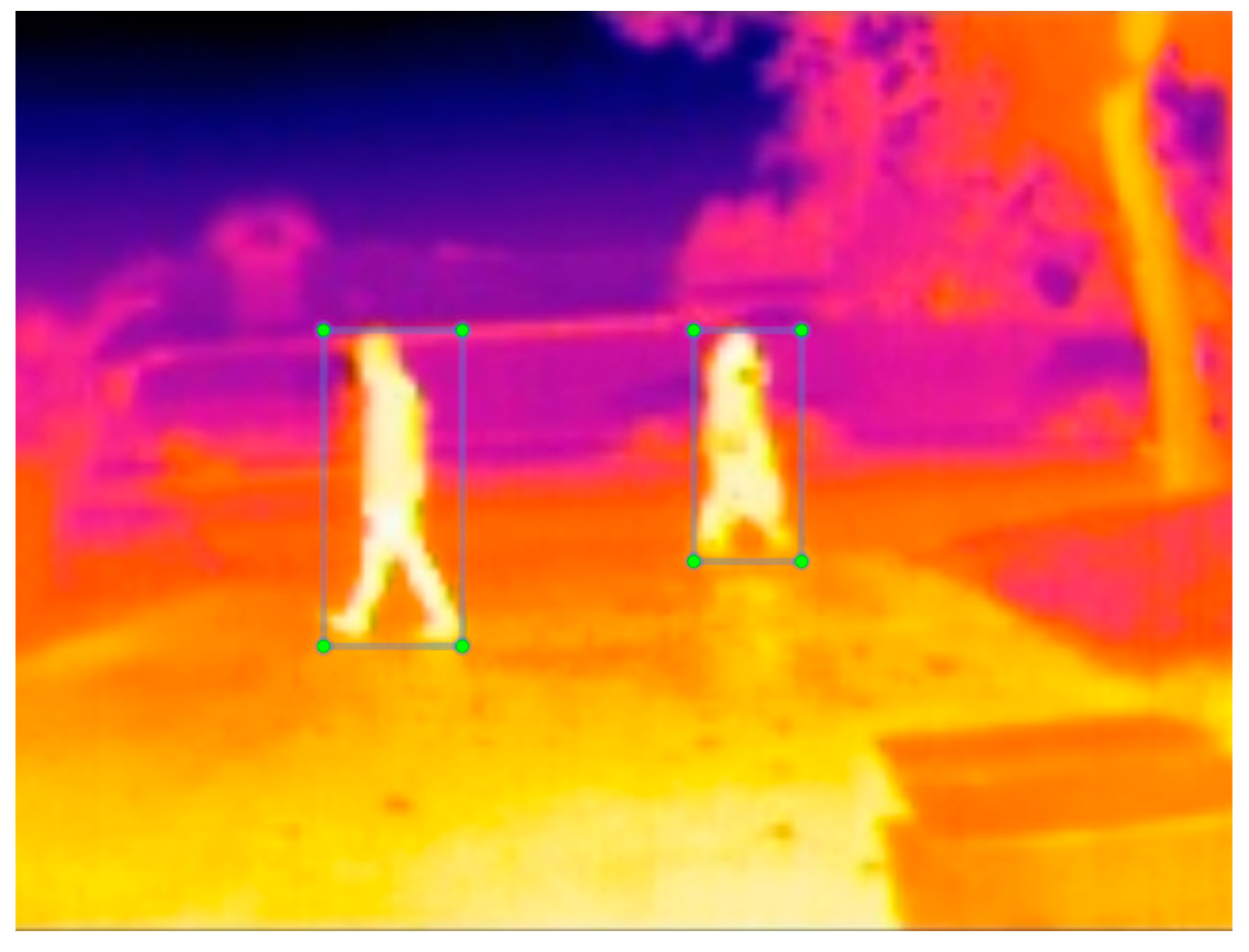

4. Pedestrian Detection Using an IR Camera

4.1. Infrared

4.2. Data Collection Using the Infrared Camera

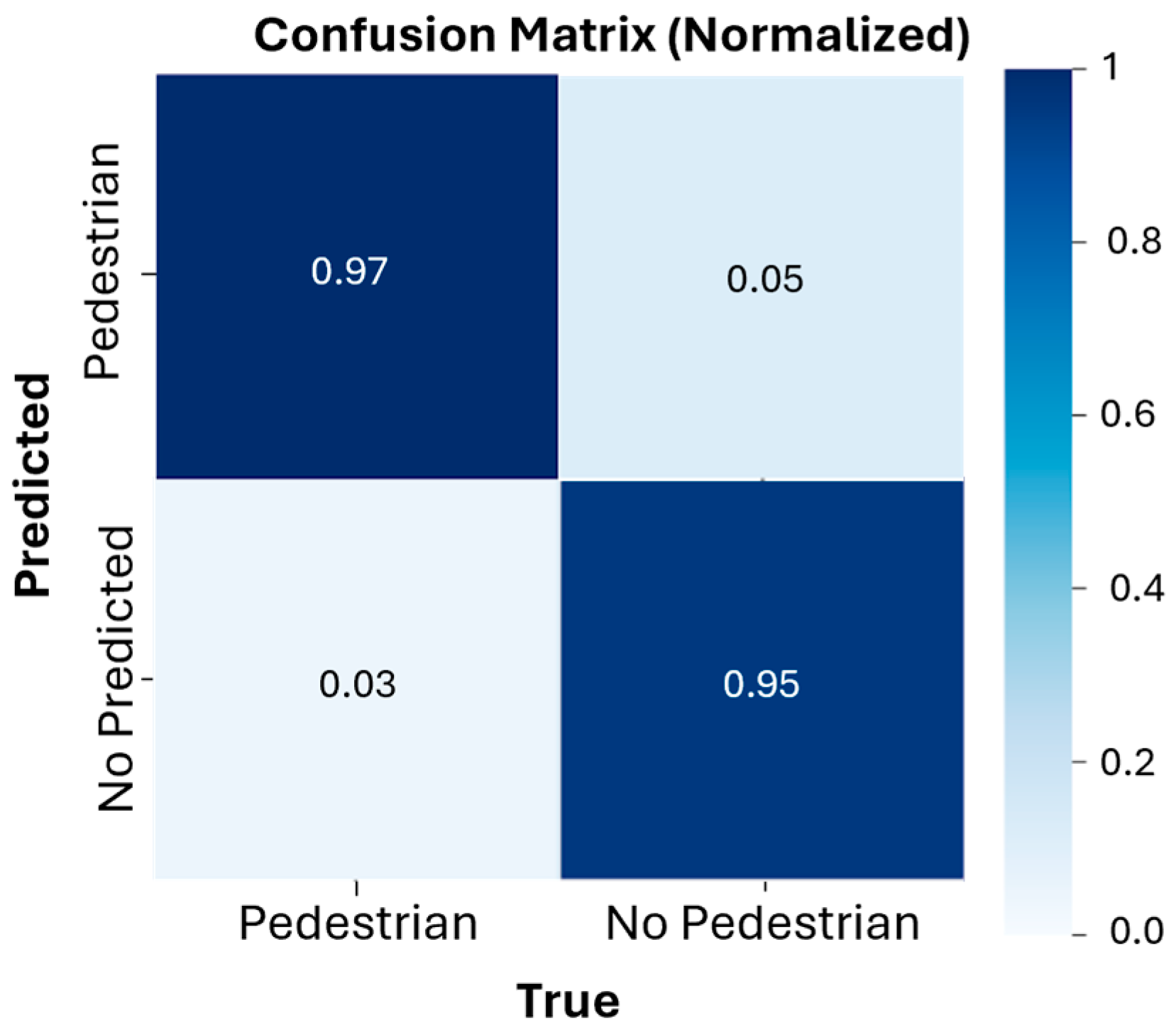

4.3. Performance Results

4.4. Limitations

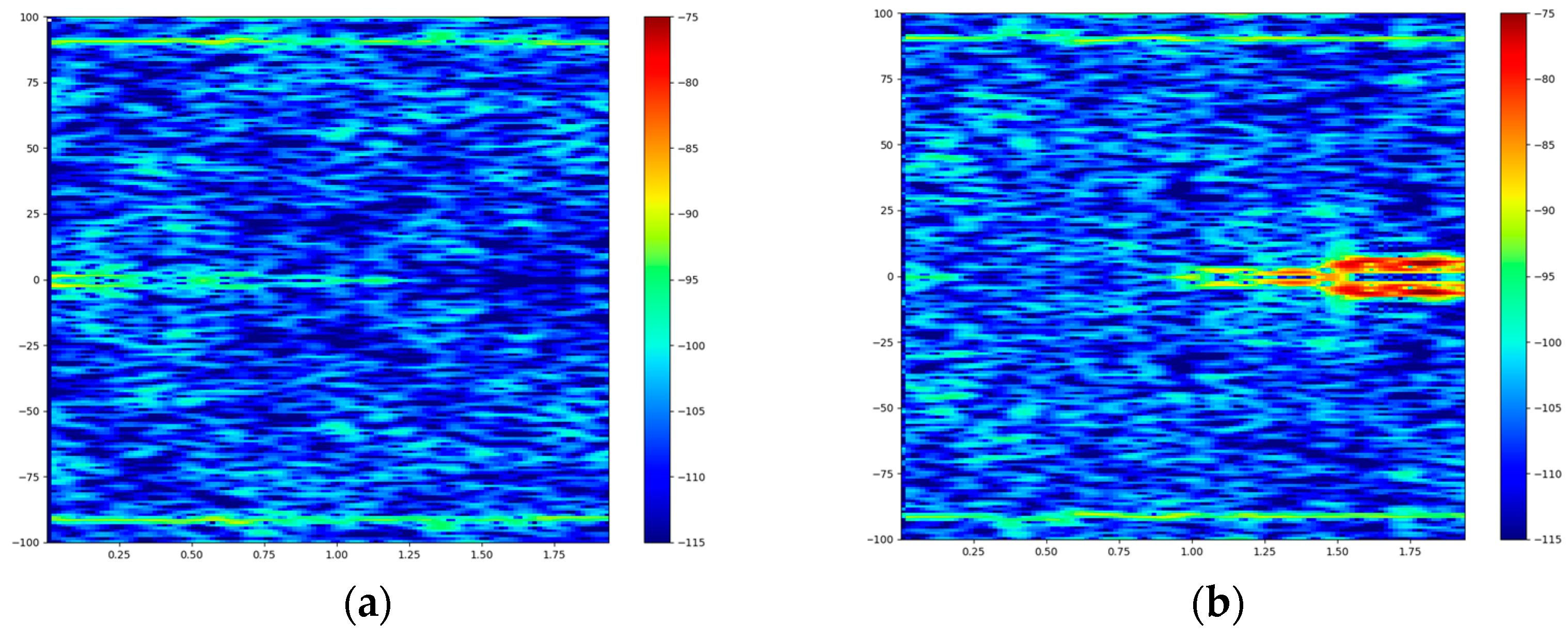

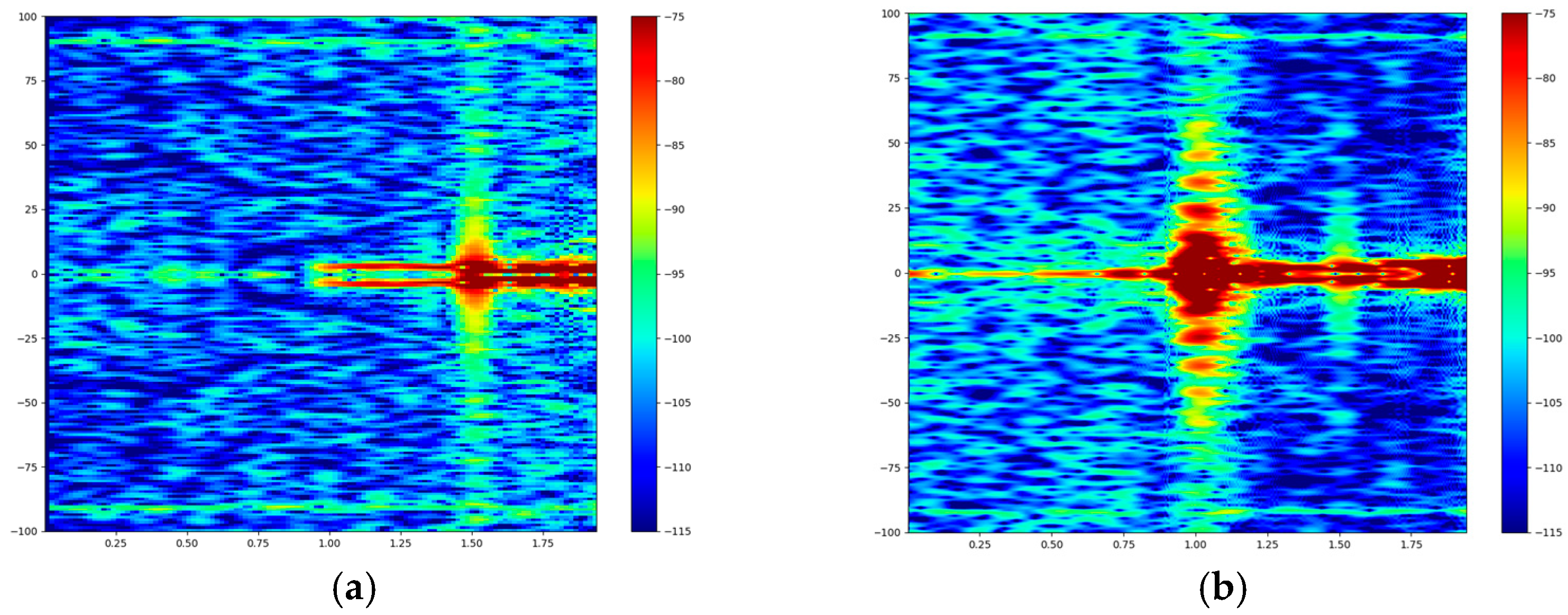

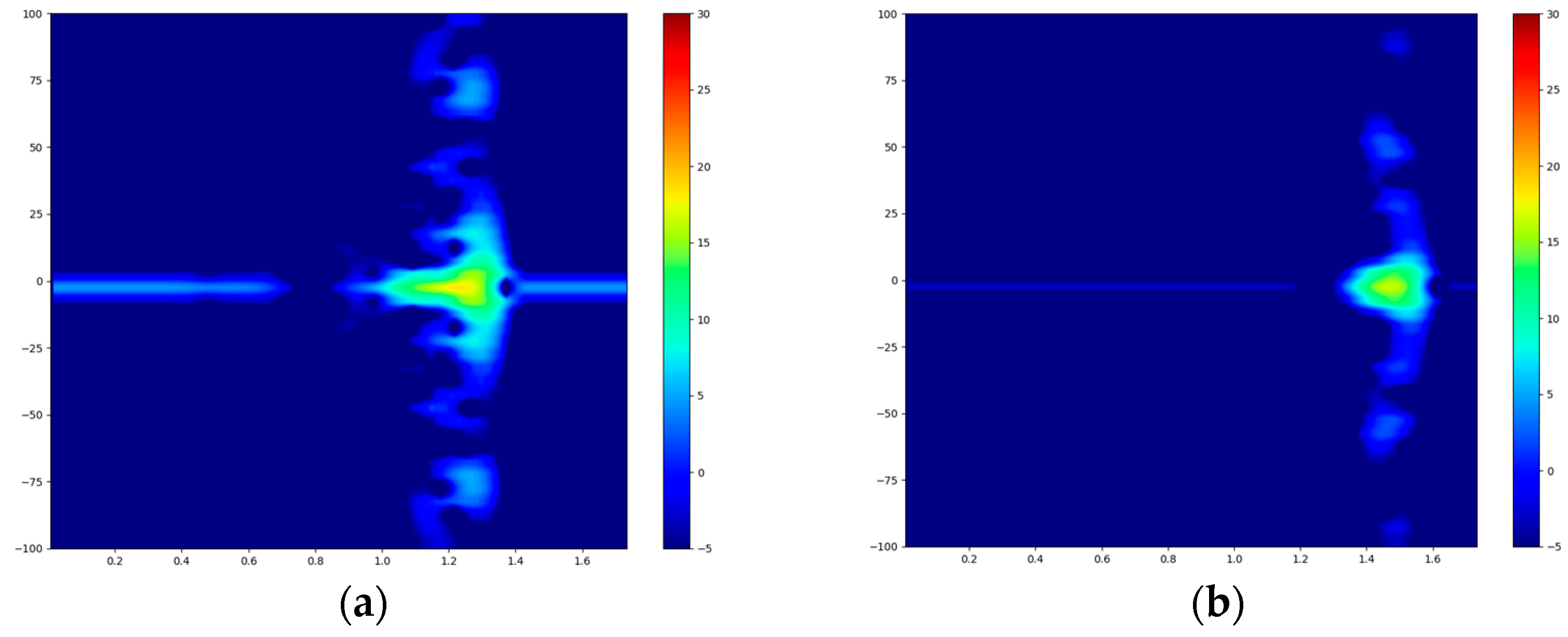

5. Pedestrian Detection Using a Micro-Doppler Radar

5.1. Micro-Doppler Radar Setup

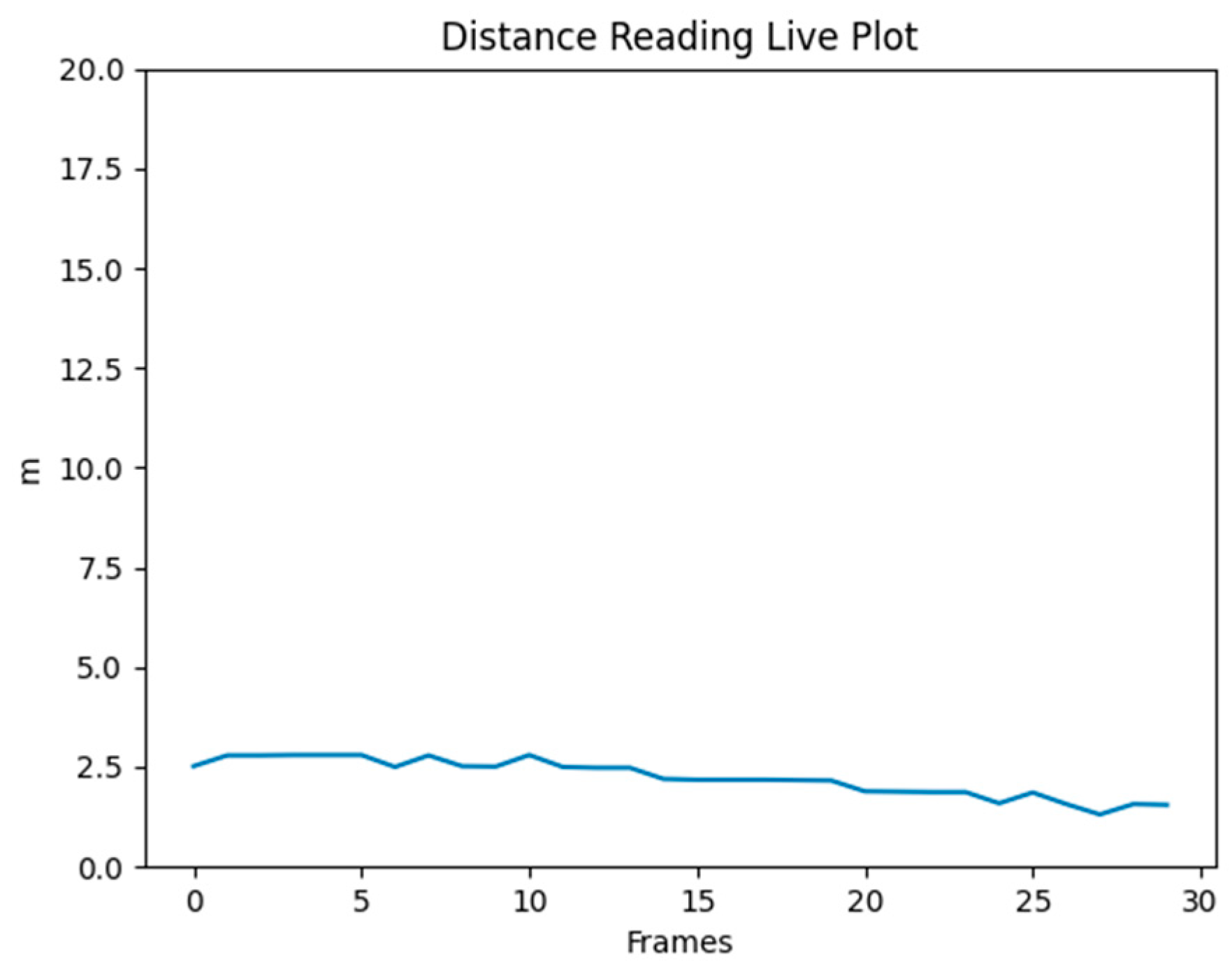
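Micro-Doppler signatures are commonly visualized as time-frequency spectrograms computed with a short-time Fourier transform (STFT). The following is a minimal NumPy sketch that assumes complex baseband radar samples and a synthetic walker: a constant torso Doppler plus a weaker limb return whose Doppler oscillates with the gait cycle. The sampling rate, window length, hop size and Doppler values are illustrative assumptions. They are not the radar parameters or processing chain used in this study.

```python
import numpy as np


def stft_spectrogram(x: np.ndarray, win_len: int = 128, hop: int = 32) -> np.ndarray:
    """Magnitude spectrogram in dB of complex samples via a Hann-windowed STFT."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack([x[i * hop : i * hop + win_len] * window for i in range(n_frames)])
    # FFT each frame; fftshift places zero Doppler in the middle of the frequency axis.
    spec = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    return 20 * np.log10(np.abs(spec) + 1e-12)  # shape: (n_frames, win_len)


# Synthetic walker (all values are illustrative assumptions).
fs = 2000.0                    # assumed slow-time sampling rate, Hz
t = np.arange(4000) / fs       # 2 s of samples
torso = np.exp(1j * 2 * np.pi * 100.0 * t)  # constant 100 Hz body Doppler
# Limb return: instantaneous Doppler 100 + 60*sin(2*pi*1.5*t) Hz (1.5 Hz gait cycle).
limb = 0.5 * np.exp(1j * (2 * np.pi * 100.0 * t - 40.0 * np.cos(3 * np.pi * t)))
spec_db = stft_spectrogram(torso + limb)
freqs = np.fft.fftshift(np.fft.fftfreq(128, d=1 / fs))  # Doppler axis in Hz
```

In the resulting spectrogram, the torso appears as a steady line near 100 Hz. The limb traces a sinusoid between roughly 40 Hz and 160 Hz, which is the periodic pattern a classifier can learn to associate with a walking person.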

5.2. Experimental Results Using the Radar Sensor

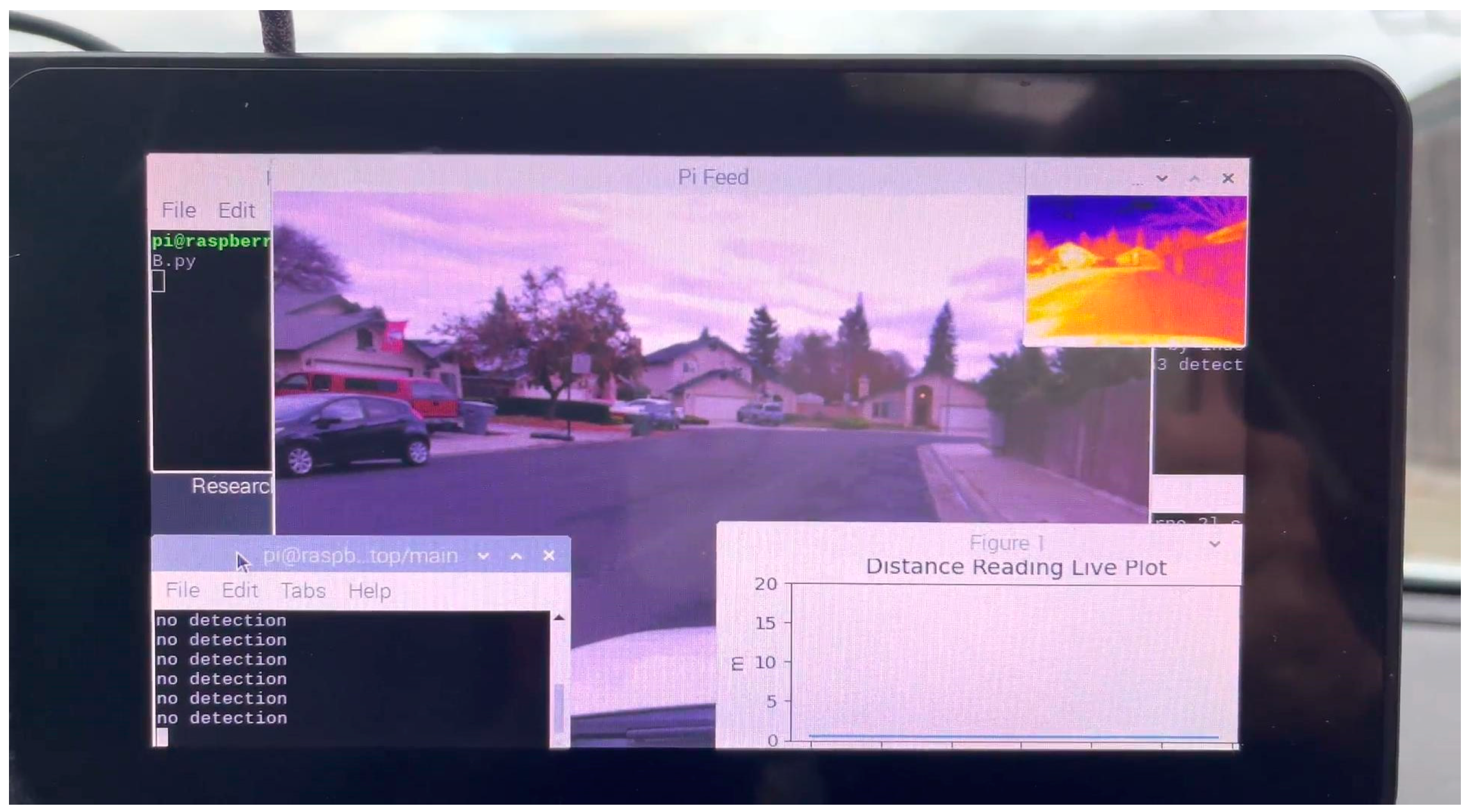

6. Prototype Experimentation

6.1. System Setup in a Vehicle

6.2. Testbed Experimentation in a Vehicle

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- National Highway Traffic Safety Administration (NHTSA) United States. Early Estimate of Motor Vehicle Traffic Fatalities for the First 9 Months (Jan–Sep) of 2020. Available online: https://www.nhtsa.gov/risky-driving/drowsy-driving (accessed on 5 June 2024).

- Feese, J. Governors Highway Safety Association, New Projection: 2019 Pedestrian Fatalities Highest Since 1988. February 2020. Available online: https://www.ghsa.org/resources/news-releases/pedestrians20 (accessed on 5 June 2024).

- Galvin, G. The 10 Worst States for Pedestrian Traffic Deaths. February 2022. Available online: https://www.usnews.com/news/healthiest-communities/slideshows/10-worst-states-for-pedestrian-traffic-deaths?slide=3 (accessed on 5 June 2024).

- Romero, S. Pedestrian Deaths Spike in U.S. as Reckless Driving Surges. 14 February 2022. Available online: https://www.nytimes.com/2022/02/14/us/pedestrian-deaths-pandemic.html (accessed on 5 June 2024).

- Valera, D. Your Central Valley News, 64 Percent of Fresno’s Deadly Collisions are Vehicle vs. Pedestrian Ones, Police Say. 21 October 2018. Available online: https://www.yourcentralvalley.com/news/64-percent-of-fresnos-deadly-collisions-are-vehicle-vs-pedestrian-ones-police-say/ (accessed on 5 June 2024).

- Yanagisawa, M.; Swanson, E.D.; Azeredo, P.; Najm, W. Estimation of Potential Safety Benefits for Pedestrian Crash Avoidance Systems; National Highway Traffic Safety Administration: Washington, DC, USA, 2017.

- Tubaishat, M.; Zhuang, P.; Qi, Q.; Shang, Y. Wireless sensor networks in intelligent transportation systems. Wirel. Commun. Mob. Comput. 2009, 9, 287–302.

- El-Faouzi, N.E.; Leung, H.; Kurian, A. Data fusion in intelligent transportation systems: Progress and challenges—A survey. Inf. Fusion 2011, 12, 4–10.

- Lim, H.S.; Lee, J.E.; Park, H.M.; Lee, S. Stationary Target Identification in a Traffic Monitoring Radar System. Appl. Sci. 2020, 10, 5838.

- Cao, Z.; Bıyık, E.; Wang, W.Z.; Raventos, A.; Gaidon, A.; Rosman, G.; Sadigh, D. Reinforcement learning based control of imitative policies for near-accident driving. arXiv 2020, arXiv:2007.00178.

- Datondji, S.R.; Dupuis, Y.; Subirats, P.; Vasseur, P. A survey of vision-based traffic monitoring of road intersections. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2681–2698.

- Al Sobbahi, R.; Tekli, J. Comparing deep learning models for low-light natural scene image enhancement and their impact on object detection and classification: Overview, empirical evaluation, and challenges. Signal Process. Image Commun. 2022, 109, 116848.

- Xiao, Y.; Zhou, K.; Cui, G.; Jia, L.; Fang, Z.; Yang, X.; Xia, Q. Deep learning for occluded and multiscale pedestrian detection: A review. IET Image Process. 2021, 15, 286–301.

- González, A.; Fang, Z.; Socarras, Y.; Serrat, J.; Vázquez, D.; Xu, J.; López, A.M. Pedestrian Detection at Day/Night Time with Visible and FIR Cameras: A Comparison. Sensors 2016, 16, 820.

- Jain, D.K.; Zhao, X.; González-Almagro, G.; Gan, C.; Kotecha, K. Multimodal pedestrian detection using metaheuristics with deep convolutional neural network in crowded scenes. Inf. Fusion 2023, 95, 401–414.

- Fukui, H.; Yamashita, T.; Yamauchi, Y.; Fujiyoshi, H.; Murase, H. Pedestrian detection based on deep convolutional neural network with ensemble inference network. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium (IV), Seoul, Republic of Korea, 21 June–1 July 2015; pp. 223–228.

- Luo, Y.; Remillard, J.; Hoetzer, D. Pedestrian detection in near-infrared night vision system. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 51–58.

- Han, T.Y.; Song, B.C. Night vision pedestrian detection based on adaptive preprocessing using near-infrared camera. In Proceedings of the 2016 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Seoul, Republic of Korea, 26–28 October 2016; pp. 1–3.

- Fu, X. Pedestrian detection based on improved interface difference and double threshold with parallel structure. In Proceedings of the 2019 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Shanghai, China, 21–24 November 2019; pp. 133–138.

- Kulhandjian, H.; Ramachandran, N.; Kulhandjian, M.; D’Amours, C. Human Activity Classification in Underwater using sonar and Deep Learning. In Proceedings of the ACM Conference on Underwater Networks and Systems (WUWNet), Atlanta, GA, USA, 23–25 October 2019.

- Kulhandjian, H.; Sharma, P.; Kulhandjian, M.; D’Amours, C. Sign Language Gesture Recognition using Doppler Radar and Deep Learning. In Proceedings of the IEEE GLOBECOM Workshop on Machine Learning for Wireless Communications, Waikoloa, HI, USA, 9–13 December 2019.

- Kulhandjian, H.; Davis, A.; Leong, L.; Bendot, M.; Kulhandjian, M. AI-based Human Detection and Localization in Heavy Smoke using Radar and IR Camera. In Proceedings of the IEEE Radar Conference (RadarConf23), San Antonio, TX, USA, 1–5 May 2023.

- Kulhandjian, H.; Barron, J.; Tamiyasu, M.; Thompson, M.; Kulhandjian, M. Pedestrian Detection and Avoidance at Night Using Multiple Sensors and Machine Learning. In Proceedings of the IEEE International Conference on Computing, Networking and Communications (ICNC), Honolulu, HI, USA, 20–23 February 2023.

- Kulhandjian, H.; Martinez, N.; Kulhandjian, M. Drowsy Driver Detection using Deep Learning and Multi-Sensor Data Fusion. In Proceedings of the IEEE Vehicle Power and Propulsion Conference (IEEE VPPC 2022), Merced, CA, USA, 1–4 November 2022.

- FLIR. Flir Lepton Engineering Datasheet. 2018. Available online: https://cdn.sparkfun.com/assets/e/9/6/0/f/EngineeringDatasheet-16465-FLiR_Lepton_8760_-_Thermal_Imaging_Module.pdf (accessed on 5 June 2024).

- Teledyne FLIR Thermal Dataset for Algorithm Training. Available online: https://www.flir.com/oem/adas/adas-dataset-form/ (accessed on 5 June 2024).

- Tzutalin. LabelImg. GitHub Code. 2015. Available online: https://github.com/tzutalin/labelImg (accessed on 5 June 2024).

| Article | Features | Pedestrian Detection | Prototype | Sensors (RGB, IR, Radar) | Data Fusion | Low-Light Condition |
|---|---|---|---|---|---|---|
| Lim et al. [9] | Discriminating stationary targets in traffic monitoring radar systems. | | | Radar | | |
| Cao et al. [10] | Hierarchical reinforcement and imitation learning (H-ReIL). | | | RGB | | |
| Al Sobbahi and Tekli [12] | Low-light image enhancement and object detection. | | | RGB | | ✓ |
| Xiao et al. [13] | Concentrates on occlusion and multi-scale pedestrian detection challenges. | ✓ | | RGB | | |
| González et al. [14] | Assesses the accuracy gain of various pedestrian detection models. | ✓ | | RGB, Far IR | | ✓ |
| Jain et al. [15] | Multimodal pedestrian detection for crowded scenes. | ✓ | | RGB | | ✓ |
| Fukui et al. [16] | Vision-based pedestrian detection framework based on a CNN. | ✓ | | RGB | | ✓ |
| Luo et al. [17] | Pedestrian detection utilizing active and passive night vision. | ✓ | | Near IR | | ✓ |
| Han and Song [18] | Night-vision pedestrian detection system for automatic emergency braking via infrared cameras. | ✓ | | Near IR | | ✓ |
| Fu [19] | Pedestrian detection based on the three-frame difference method. | ✓ | | RGB | | |
| This article | Thermal, visible-image and radar fusion with deep learning techniques for low-light pedestrian detection. | ✓ | ✓ | RGB, IR, Radar | ✓ | ✓ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kulhandjian, H.; Barron, J.; Tamiyasu, M.; Thompson, M.; Kulhandjian, M. AI-Based Pedestrian Detection and Avoidance at Night Using Multiple Sensors. J. Sens. Actuator Netw. 2024, 13, 34. https://doi.org/10.3390/jsan13030034

Kulhandjian H, Barron J, Tamiyasu M, Thompson M, Kulhandjian M. AI-Based Pedestrian Detection and Avoidance at Night Using Multiple Sensors. Journal of Sensor and Actuator Networks. 2024; 13(3):34. https://doi.org/10.3390/jsan13030034

Chicago/Turabian Style: Kulhandjian, Hovannes, Jeremiah Barron, Megan Tamiyasu, Mateo Thompson, and Michel Kulhandjian. 2024. "AI-Based Pedestrian Detection and Avoidance at Night Using Multiple Sensors" Journal of Sensor and Actuator Networks 13, no. 3: 34. https://doi.org/10.3390/jsan13030034

APA Style: Kulhandjian, H., Barron, J., Tamiyasu, M., Thompson, M., & Kulhandjian, M. (2024). AI-Based Pedestrian Detection and Avoidance at Night Using Multiple Sensors. Journal of Sensor and Actuator Networks, 13(3), 34. https://doi.org/10.3390/jsan13030034