Self-Supervised Learning and Multi-Sensor Fusion for Alpine Wetland Vegetation Mapping: Bayinbuluke, China

Abstract

1. Introduction

2. Results

2.1. Implementation Details

We minimize the loss in Equation (4):

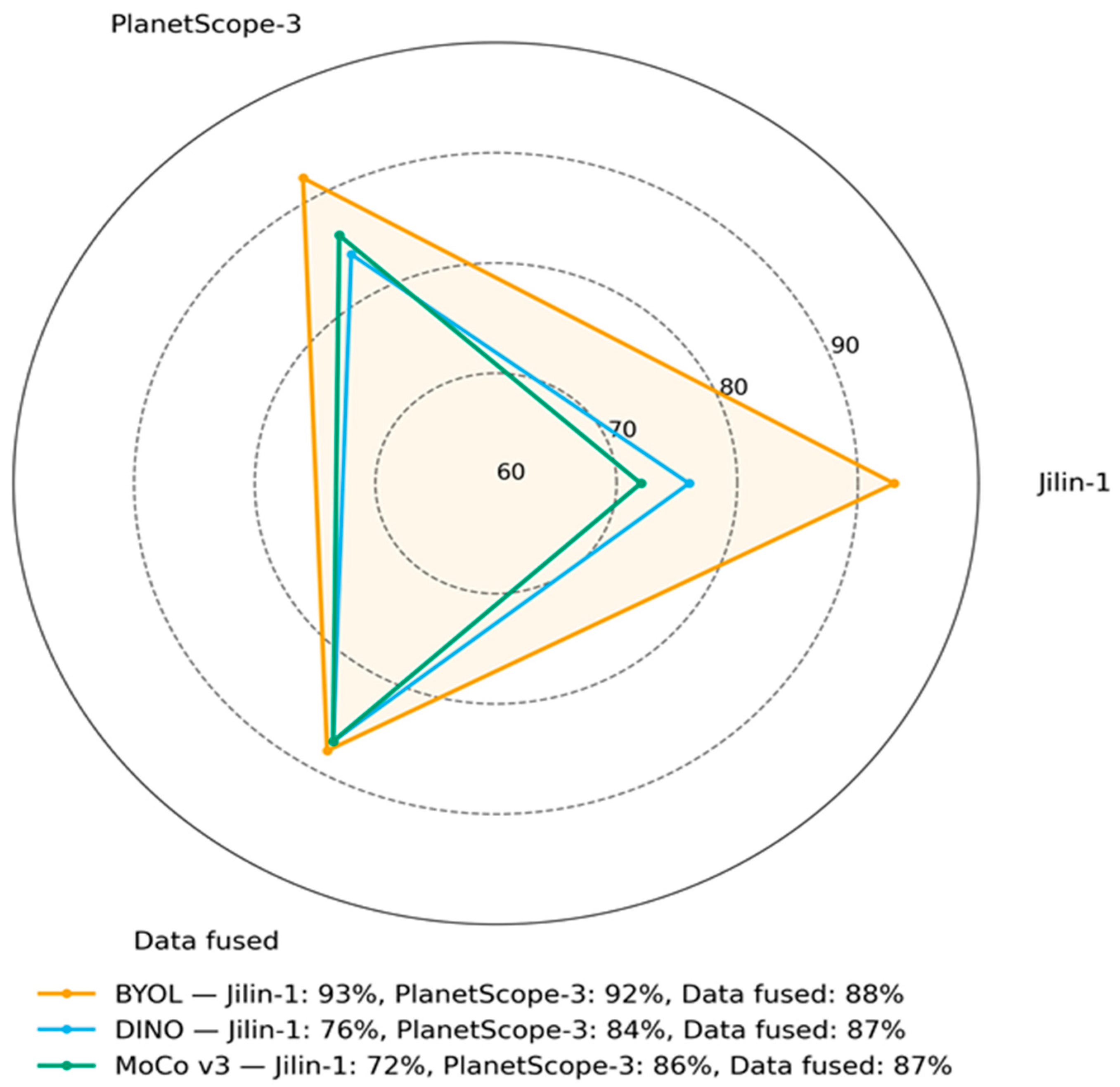
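Equation (4) is available only as an image, so its exact form cannot be recovered from this text. Assuming it is the BYOL objective (BYOL being one of the three SSL methods compared in this study), the loss is the normalized mean-squared error between the online network's prediction for one augmented view and the target network's projection of the other view. For unit vectors this equals 2 − 2·cos(q, z), and it is summed over both view orderings. The framework-free sketch below illustrates only that assumption; the function names are hypothetical, and in a real training loop the target branch would be detached from the gradient.

```python
import math
from typing import Sequence


def l2_normalize(v: Sequence[float], eps: float = 1e-12) -> list[float]:
    """Scale a vector to unit Euclidean length (eps guards against zero vectors)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / max(norm, eps) for x in v]


def byol_pair_loss(prediction: Sequence[float], target: Sequence[float]) -> float:
    """Squared distance between unit-normalized prediction q and target z.

    Equivalent to 2 - 2 * cos(q, z). In an autodiff framework the target would
    be treated as a constant (stop-gradient); plain Python has no gradients.
    """
    q = l2_normalize(prediction)
    z = l2_normalize(target)
    return sum((a - b) ** 2 for a, b in zip(q, z))


def byol_symmetric_loss(q1, z2, q2, z1) -> float:
    """Symmetrized loss: view-1 prediction vs view-2 target, plus the reverse."""
    return byol_pair_loss(q1, z2) + byol_pair_loss(q2, z1)


if __name__ == "__main__":
    # Same direction -> 0.0; orthogonal directions -> 2.0 for a single term.
    print(byol_pair_loss([1.0, 0.0], [3.0, 0.0]))  # 0.0
    print(byol_pair_loss([1.0, 0.0], [0.0, 2.0]))  # 2.0
```

Because both vectors are normalized, the loss depends only on their angle, not their magnitude, which is why scaling the target by 3 in the first call leaves the loss at zero.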

2.2. Comparing with Other SSL Methods

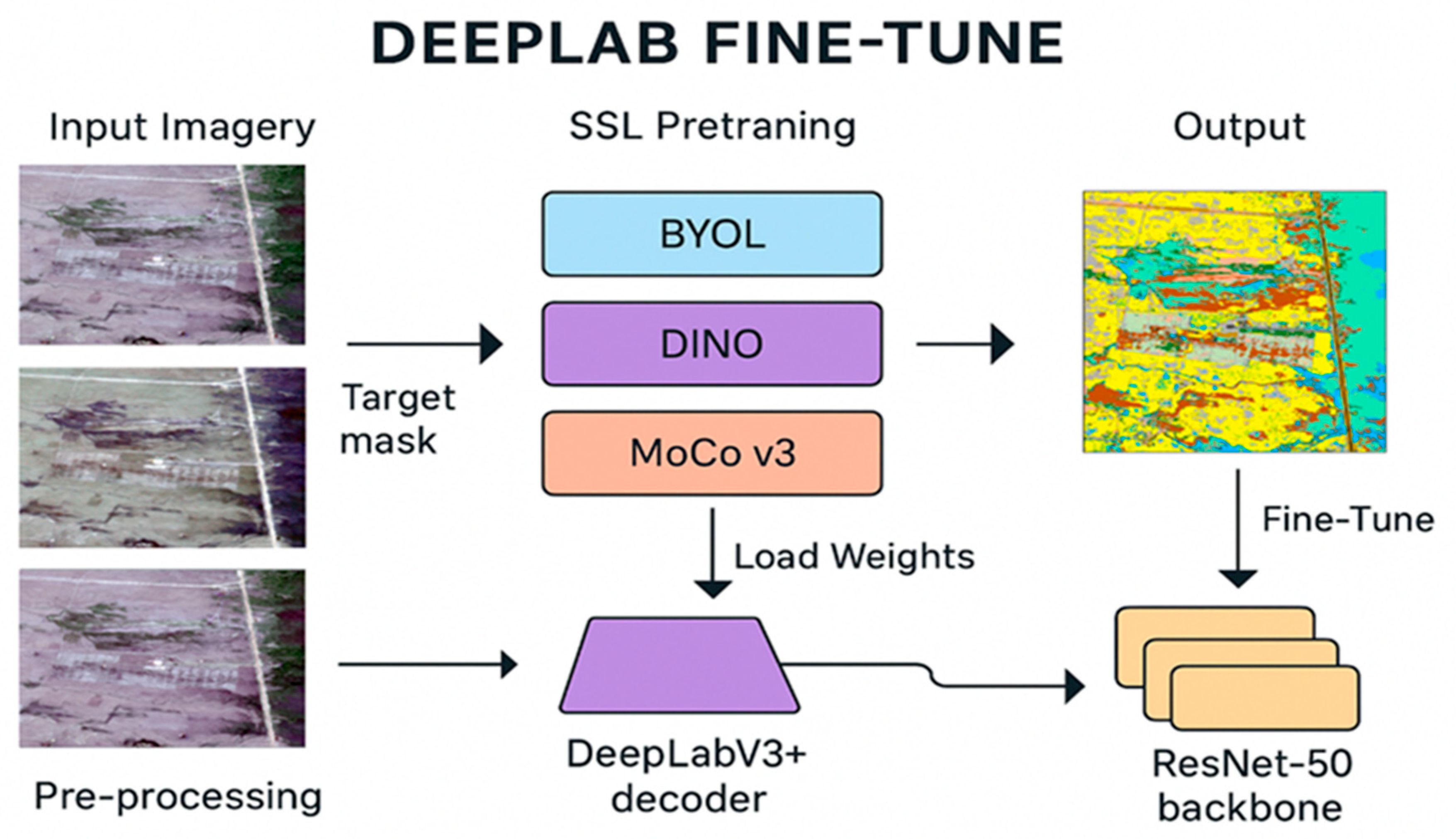

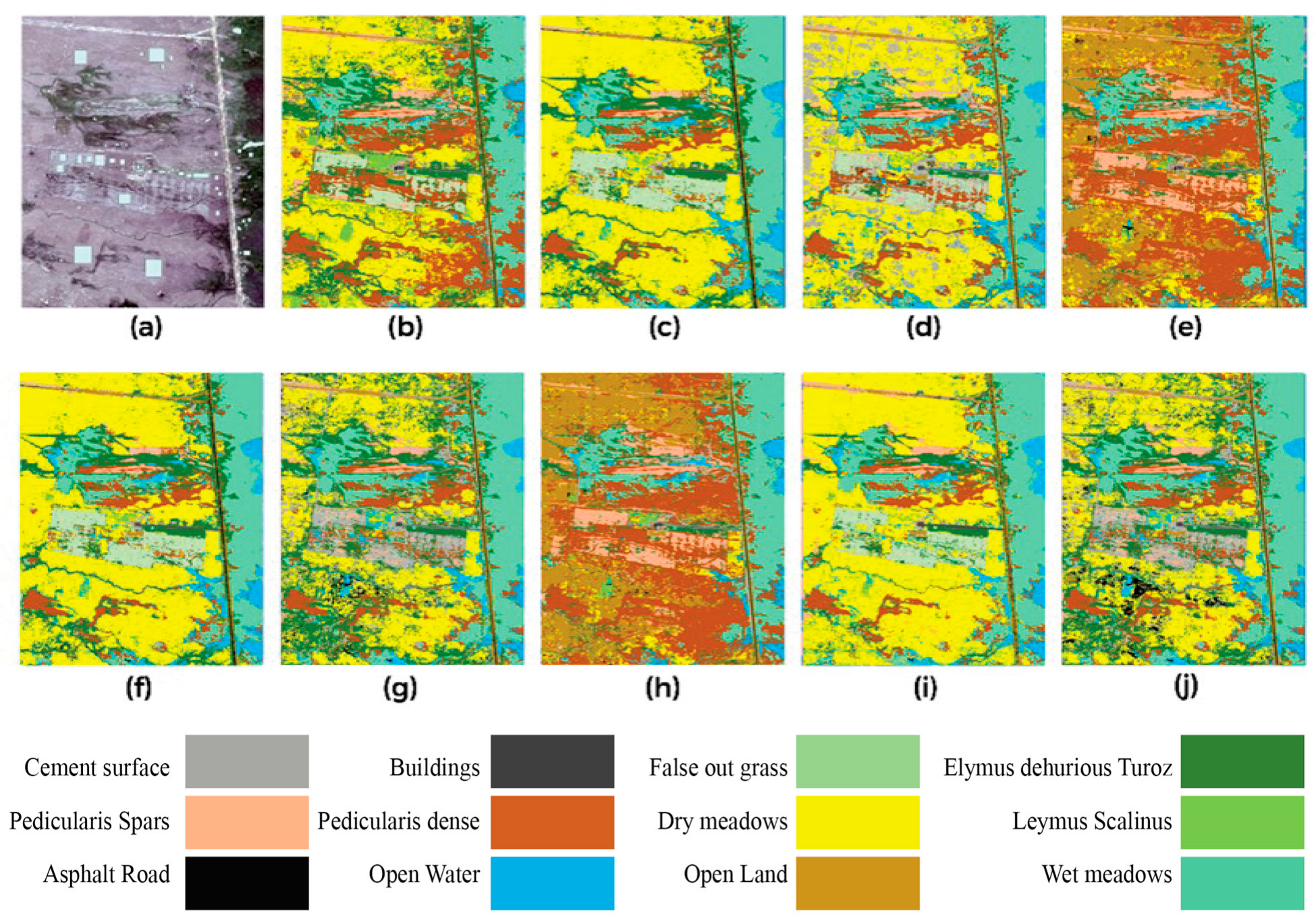

2.3. Fine-Tuning SSL Models with DeepLabV3

3. Discussion

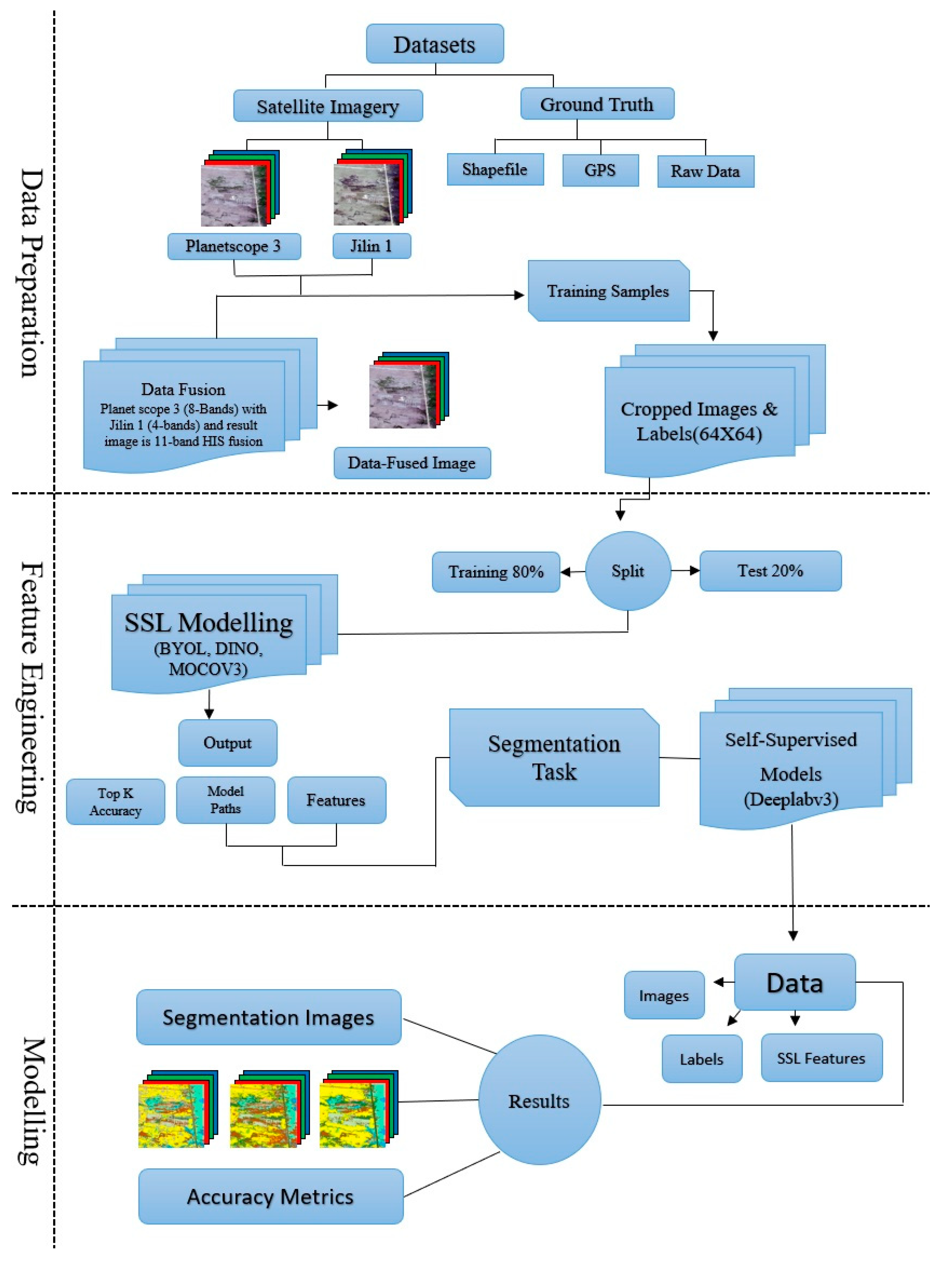

4. Material and Methods

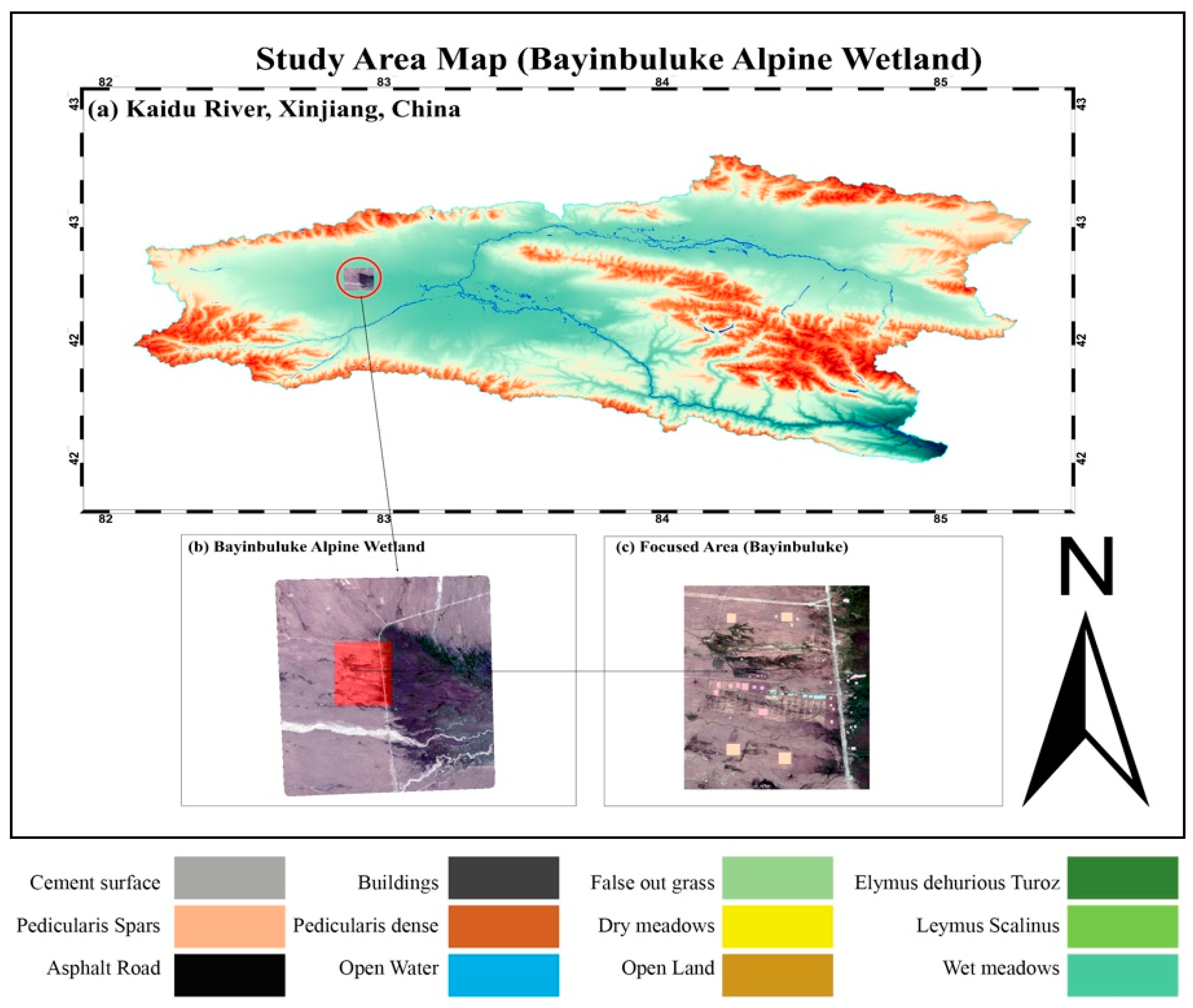

4.1. Study Area

4.2. Data and Processing

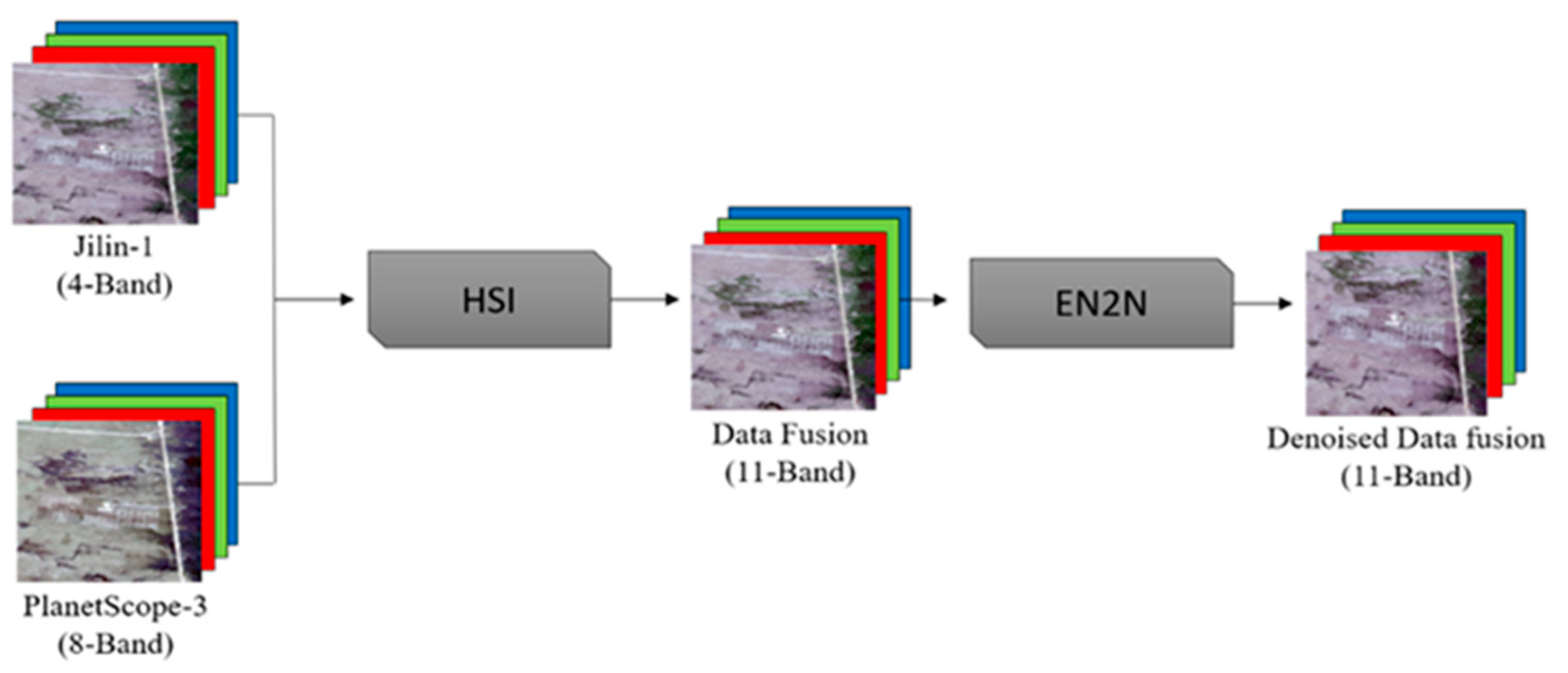

4.2.1. Satellite Imagery

4.2.2. Topographic Information

4.2.3. Proximity Information

4.2.4. Reference Data

4.2.5. Methodology Workflow

4.2.6. Computational Environment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | BYOL (OA) | BYOL (mIoU) | MoCoV3 (OA) | MoCoV3 (mIoU) | DINO (OA) | DINO (mIoU) |
|---|---|---|---|---|---|---|
| Jilin-1 | 93% | 64% | 72% | 58% | 76% | 56% |
| PlanetScope-3 | 92% | 63% | 86% | 61% | 84% | 65% |
| Data fusion | 88% | 64% | 87% | 63% | 87% | 57% |
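The table reports overall accuracy (OA), the fraction of correctly labeled pixels, and mean intersection over union (mIoU), where each class's IoU is TP / (TP + FP + FN) and the per-class values are averaged. A generic sketch of both metrics computed from a confusion matrix follows; it is not the authors' evaluation code, and skipping classes absent from both the reference and the prediction is an assumed convention.

```python
from typing import Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> list[list[int]]:
    """Rows are reference classes, columns are predicted classes."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def overall_accuracy(cm: list[list[int]]) -> float:
    """Correct pixels (the diagonal) divided by all pixels."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total if total else 0.0


def mean_iou(cm: list[list[int]]) -> float:
    """Average of TP / (TP + FP + FN) over classes present in either map."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # reference c, predicted other
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, reference other
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both reference and prediction
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    ref = [0, 0, 1, 1, 2, 2]
    pred = [0, 1, 1, 1, 2, 0]
    cm = confusion_matrix(ref, pred, n_classes=3)
    print(f"OA = {overall_accuracy(cm):.3f}, mIoU = {mean_iou(cm):.3f}")
    # prints "OA = 0.667, mIoU = 0.500"
```

Because OA counts pixels while mIoU weights every class equally, a model can reach a high OA by classifying frequent classes well while its mIoU stays lower, which is consistent with the gap between the OA and mIoU columns above.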

| Data | Description | Application Data |
|---|---|---|
| Satellite Imagery | High-resolution optical imagery acquired in June 2024 from PlanetScope-3 and Jilin-1 for wetland classification. | PlanetScope-3 (9-band image), Jilin-1 (4-band image), or a data-fused image (PlanetScope-3 (8-band) + Jilin-1 (4-band) = 11-band fused image). |
| Topographic Information | High-altitude alpine basin (~2400 m elevation) with flat valley floors surrounded by the Tianshan Mountains; predominantly marshes and meadows. | Alpine basin (~2400 m) with flat valley floors surrounded by the Tianshan Mountains; water accumulation provides the setting for marshes and alpine meadows. |
| Proximity Information | Proximity data from field-collected GPS points and OpenStreetMap (OSM), including wetland boundaries, water bodies, and nearby landscape features. | Used to study spatial relationships between wetlands, water bodies, and surrounding land features; supports habitat mapping and hydrological modeling. |
| Reference Data | Reference data were generated from field-collected GPS points and OpenStreetMap (OSM) vectors. Polygons for 13 land cover and vegetation classes were manually digitized and cross-verified against high-resolution imagery; these served as ground truth for supervised learning. | Supports training and validation of deep learning models for land cover classification and segmentation; ensures label accuracy and spatial alignment across image patches. |
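The fused input is described only as a combination of PlanetScope-3 and Jilin-1 bands. A common way to build such an input, assumed here because the procedure is not given, is layer stacking: resample both images onto a shared pixel grid (for example with `rasterio.warp.reproject`), normalize each band, and concatenate along the band axis. The sketch below covers only the last two steps on toy arrays; per-band min-max scaling and the function names are illustrative choices, not the authors' method.

```python
import numpy as np


def minmax_per_band(img: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Rescale each band of a (bands, H, W) array to [0, 1] independently."""
    img = img.astype(np.float32)
    lo = img.min(axis=(1, 2), keepdims=True)
    hi = img.max(axis=(1, 2), keepdims=True)
    # eps avoids division by zero for constant bands (they map to 0).
    return (img - lo) / np.maximum(hi - lo, eps)


def stack_bands(sensor_a: np.ndarray, sensor_b: np.ndarray) -> np.ndarray:
    """Concatenate two co-registered (bands, H, W) images along the band axis.

    Assumes both inputs already share the same pixel grid; this function only
    checks the spatial shape and does not resample or reproject.
    """
    if sensor_a.shape[1:] != sensor_b.shape[1:]:
        raise ValueError(f"grid mismatch: {sensor_a.shape[1:]} vs {sensor_b.shape[1:]}")
    return np.concatenate([minmax_per_band(sensor_a), minmax_per_band(sensor_b)], axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = rng.integers(0, 10000, size=(3, 4, 4))  # toy stand-in for one sensor
    b = rng.integers(0, 1024, size=(2, 4, 4))   # toy stand-in for the other
    fused = stack_bands(a, b)
    print(fused.shape)  # (5, 4, 4)
```

Normalizing each sensor's bands separately keeps differing radiometric ranges (for example 12-bit versus 16-bit digital numbers) from dominating the stacked input; whether the study used reflectance products or another scaling is not stated.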

| ID | Class Name | Image Samples |
|---|---|---|
| 1 | Cement surface | 526 |
| 2 | Buildings | 668 |
| 3 | False oat grass | 985 |
| 4 | Elymus dahuricus Turcz. | 531 |
| 5 | Pedicularis (sparse) | 568 |
| 6 | Pedicularis (dense) | 1026 |
| 7 | Dry meadows | 2522 |
| 8 | Leymus secalinus | 200 |
| 9 | Asphalt road | 567 |
| 10 | Open water | 269 |
| 11 | Open land | 334 |
| 12 | Wet meadows | 1383 |
| Total | | 8892 |
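The class counts are strongly imbalanced: dry meadows (ID 7) have 2522 samples while class 8 has only 200, a ratio of roughly 12.6 to 1. The source does not state whether class weighting was used. One common remedy, shown purely as an illustration, is inverse-frequency weighting of the training loss, normalized so that the weights average to 1. The counts are copied from the table; the function name is hypothetical.

```python
# Sample counts per class ID, copied from the table above.
CLASS_COUNTS = {
    1: 526, 2: 668, 3: 985, 4: 531, 5: 568, 6: 1026,
    7: 2522, 8: 200, 9: 567, 10: 269, 11: 334, 12: 1383,
}


def inverse_frequency_weights(counts: dict[int, int]) -> dict[int, float]:
    """Weight each class by 1/count, rescaled so the weights average to 1.0."""
    inv = {cid: 1.0 / n for cid, n in counts.items()}
    scale = len(inv) / sum(inv.values())
    return {cid: w * scale for cid, w in inv.items()}


if __name__ == "__main__":
    weights = inverse_frequency_weights(CLASS_COUNTS)
    # List classes from most to least up-weighted.
    for cid, w in sorted(weights.items(), key=lambda kv: -kv[1]):
        print(f"class {cid:2d}: weight {w:.2f}")
```

In PyTorch, these values, ordered by zero-based class index (ID 1 mapped to index 0), could be passed as the `weight` argument of `torch.nn.CrossEntropyLoss`, so errors on rare classes cost more than errors on dominant ones.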

| Category | Specification |
|---|---|
| Hardware | Intel CPU, 64 GB RAM, NVIDIA GeForce RTX 4060 (8 GB VRAM) |
| Operating System | Ubuntu 22.04 LTS |
| Python | 3.12.11 (Conda-Forge, MSC v.1943 64-bit) |
| PyTorch | 2.4.1+cu124 |
| CUDA | 12.4 |
| GPU Device | NVIDIA GeForce RTX 4060 |
| Libraries | Torchvision 0.19.1+cu124, NumPy 1.26.4, Matplotlib 3.10.6, Lightly 1.5.20, scikit-learn 1.7.2, Rasterio 1.4.3, etc. |
| Software | ArcGIS Pro |
| SSL Training | 100 epochs, batch size = 64, learning rate = 1 × 10⁻⁴, weight decay = 1 × 10⁻⁴ |
| Training Time | Pretraining: ~1 h 45 min (±5 min); linear evaluation: ~45 min (±5 min); fine-tuning: ~2 h (±10 min) |
| Patch Inference | 64 × 64 patches, <1 s (real time) |
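Inference is reported on 64 × 64 patches. The sketch below tiles a (bands, H, W) scene into non-overlapping 64 × 64 patches and stitches per-patch outputs back into a full map. It assumes that edge pixels which do not fill a whole patch are handled by zero-padding at the bottom and right, a choice the source does not specify; `tile` and `untile` are hypothetical names.

```python
import numpy as np


def tile(image: np.ndarray, size: int = 64) -> tuple[np.ndarray, tuple[int, int]]:
    """Split a (bands, H, W) array into non-overlapping (bands, size, size) patches.

    The scene is zero-padded at the bottom/right so H and W become multiples of
    `size`. Returns patches of shape (n_rows * n_cols, bands, size, size), in
    row-major order, plus the padded (H, W) needed to stitch results back.
    """
    b, h, w = image.shape
    pad_h, pad_w = -h % size, -w % size
    padded = np.pad(image, ((0, 0), (0, pad_h), (0, pad_w)))  # constant 0 padding
    ph, pw = padded.shape[1:]
    patches = (
        padded.reshape(b, ph // size, size, pw // size, size)
        .transpose(1, 3, 0, 2, 4)          # -> (rows, cols, bands, size, size)
        .reshape(-1, b, size, size)
    )
    return patches, (ph, pw)


def untile(patches: np.ndarray, padded_hw: tuple[int, int], out_hw: tuple[int, int]) -> np.ndarray:
    """Inverse of `tile` for per-patch outputs shaped (n, channels, size, size)."""
    _, c, size, _ = patches.shape
    ph, pw = padded_hw
    grid = patches.reshape(ph // size, pw // size, c, size, size)
    full = grid.transpose(2, 0, 3, 1, 4).reshape(c, ph, pw)
    return full[:, : out_hw[0], : out_hw[1]]  # crop away the padding
```

For segmentation, each patch's output would be a class-score map of shape (n_classes, 64, 64); stacking those maps and passing them to `untile` with the original scene size yields a full-scene prediction.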

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zaka, M.M.; Samat, A.; Abuduwaili, J.; Zhu, E.; Akhtar, A.; Li, W. Self-Supervised Learning and Multi-Sensor Fusion for Alpine Wetland Vegetation Mapping: Bayinbuluke, China. Plants 2025, 14, 3153. https://doi.org/10.3390/plants14203153
