Lightweight GAN for Restoring Blurred Images to Enhance Citrus Detection

Abstract

1. Introduction

2. Materials and Methods

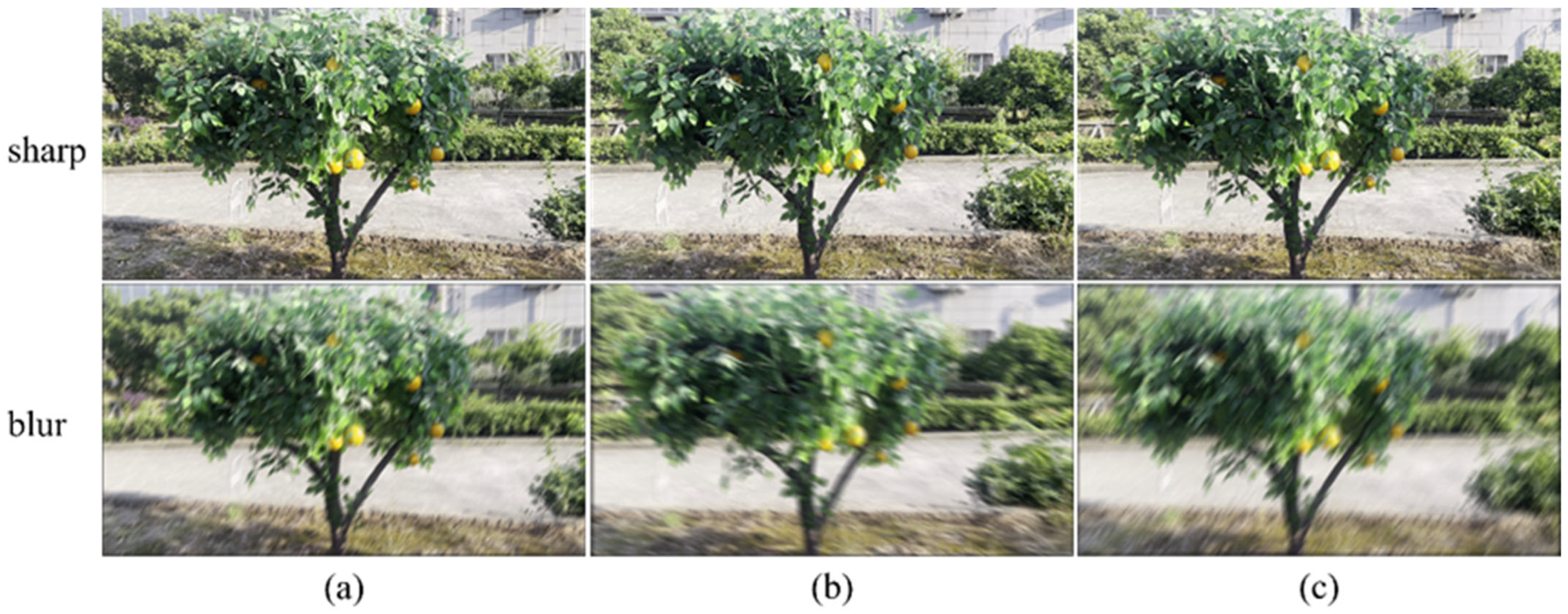

2.1. Construction of the Citrus Image Dataset

2.1.1. Sharp Image Acquisition

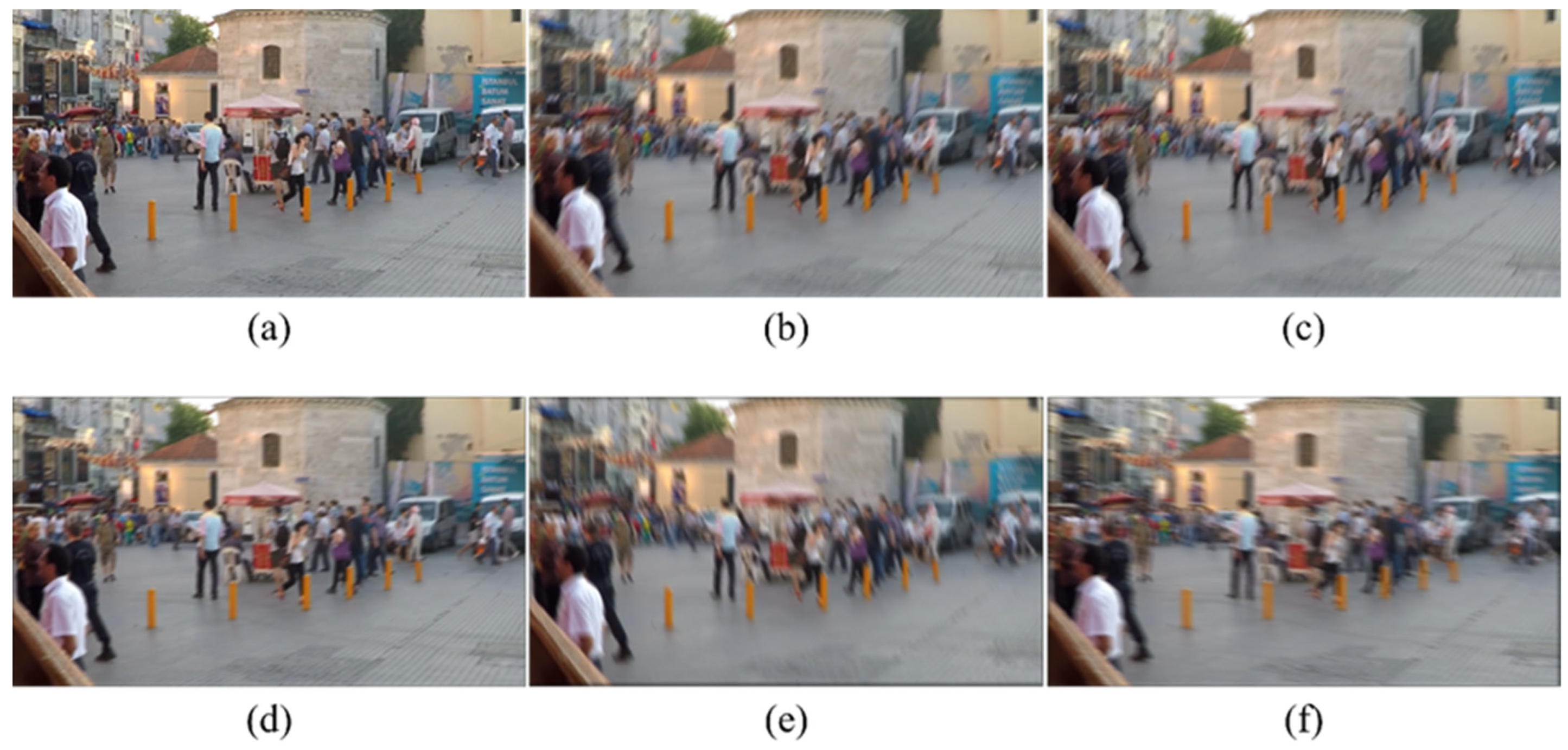

2.1.2. Dataset Synthesis and Pairing

2.2. Deblurring Network Model

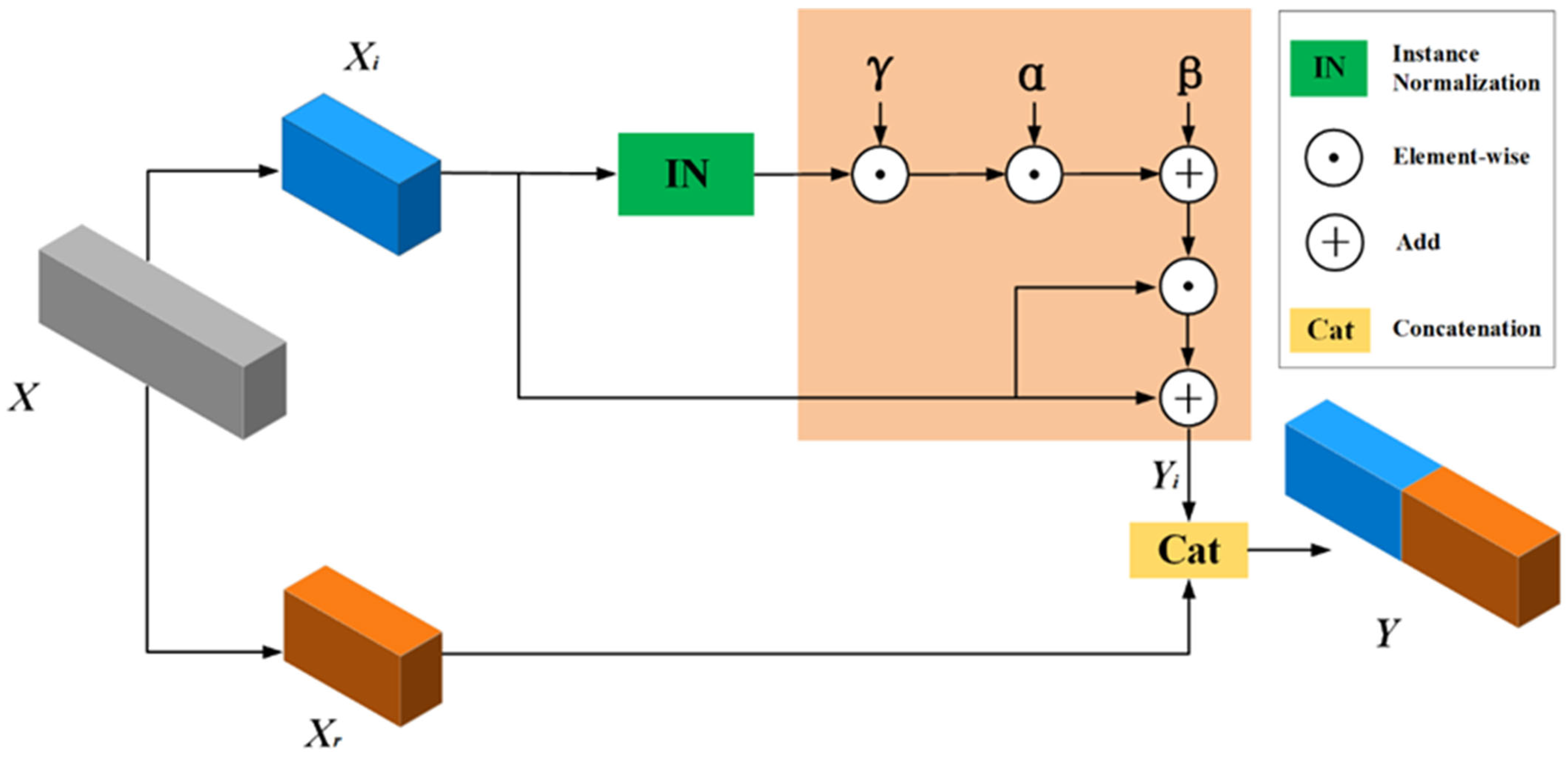

2.2.1. AGG Module

2.2.2. Double-Scale Discriminator

2.3. Loss Function

2.4. Training Parameters

2.5. Image Quality Assessment

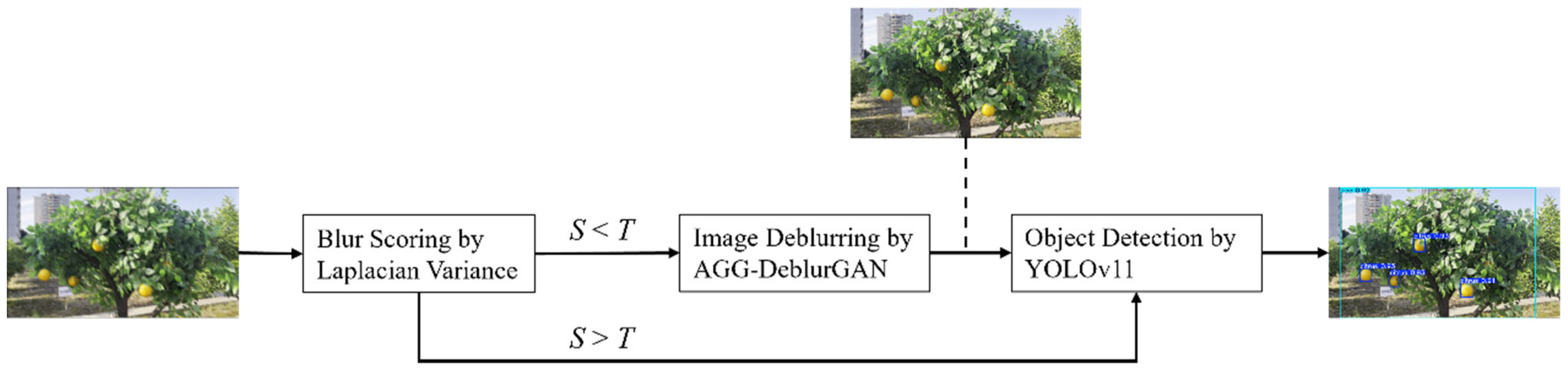

2.6. Dynamic Path Scheduling Mechanism

3. Results

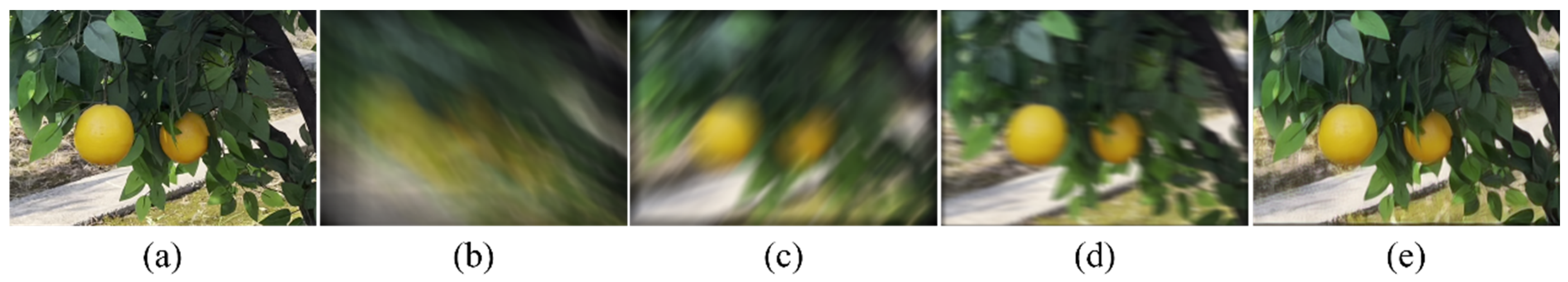

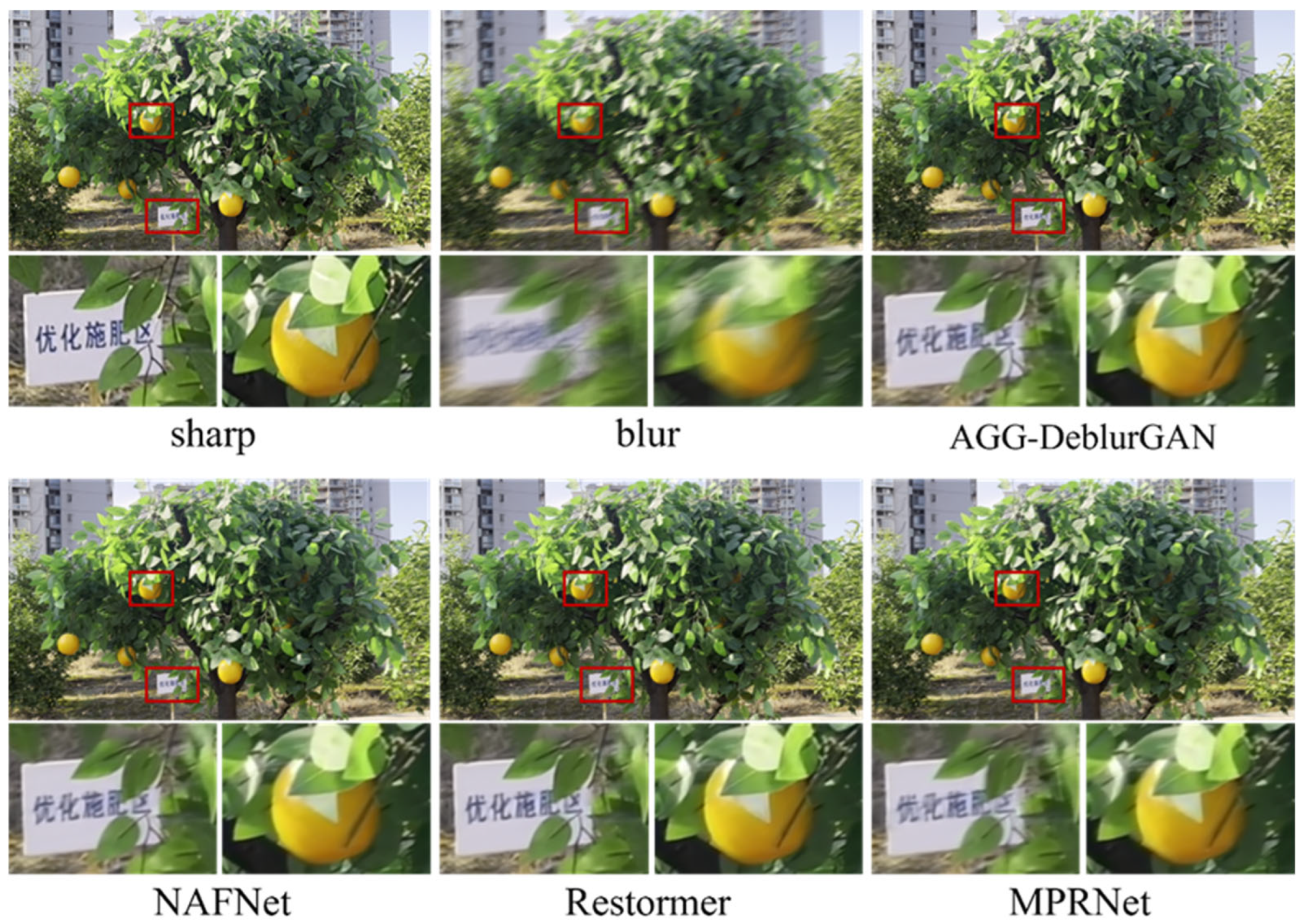

3.1. Image Deblurring

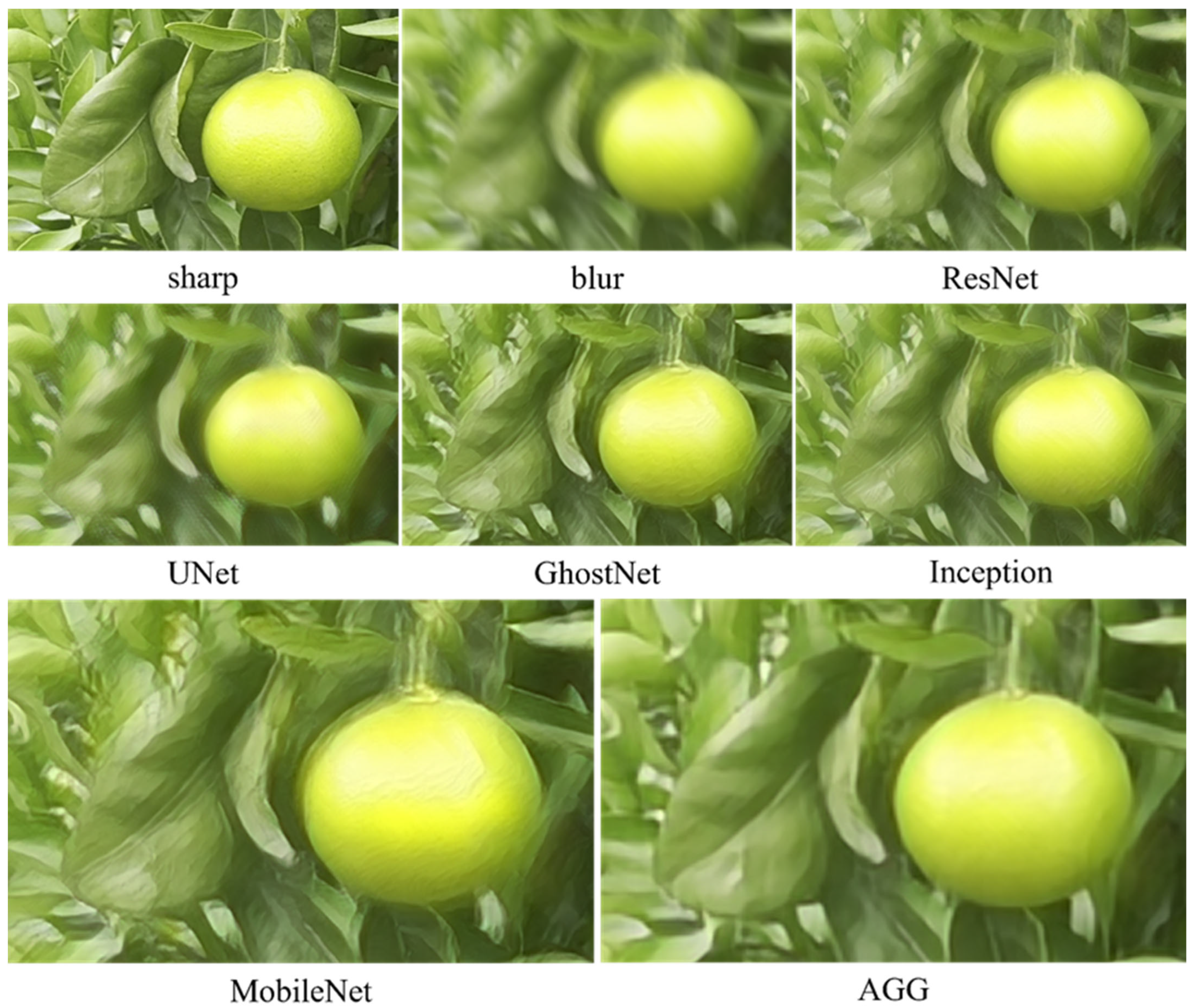

3.2. Ablation Study

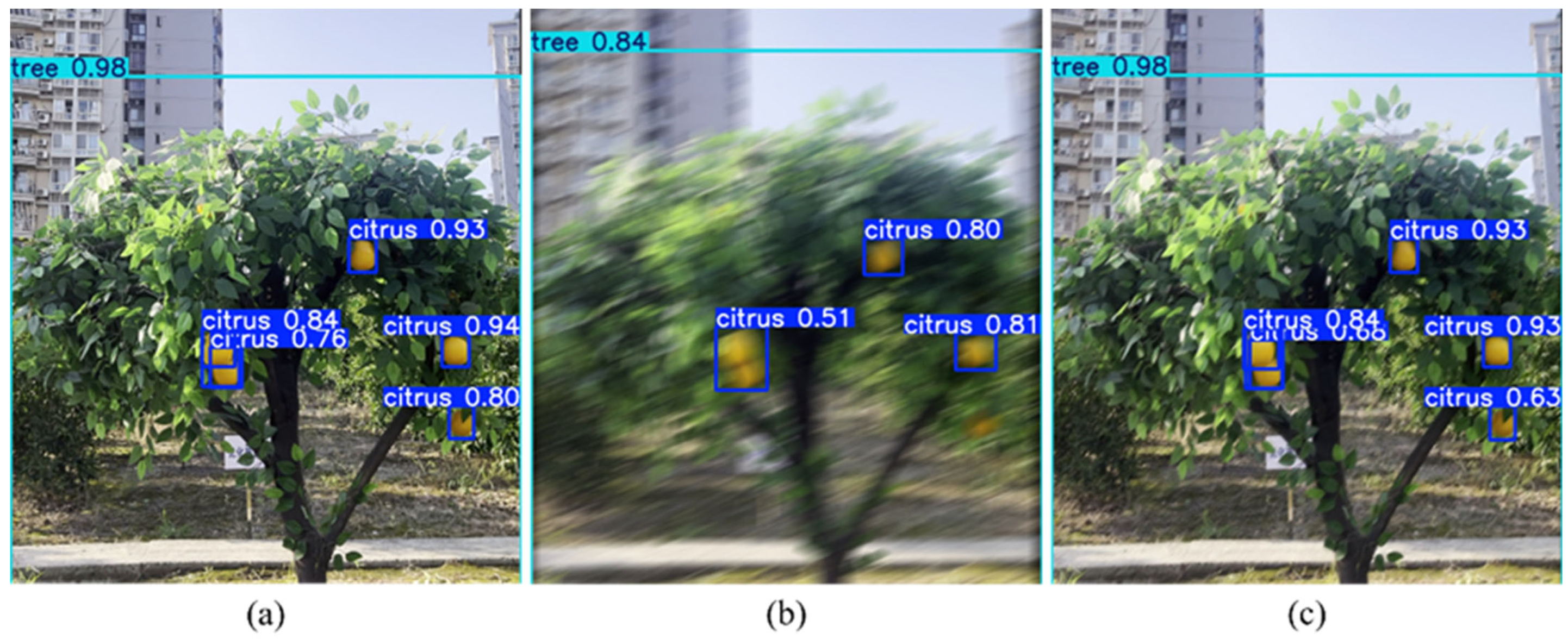

3.3. Citrus Tree Object Detection

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Lu, Y.; Chen, D.; Olaniyi, E.; Huang, Y. Generative adversarial networks (GANs) for image augmentation in agriculture: A systematic review. Comput. Electron. Agric. 2022, 200, 107208.

2. Tian, Q.; Zhang, H.; Bian, L.; Zhou, L.; Shen, Z.; Ge, Y. Field-based phenotyping for poplar seedlings biomass evaluation based on zero-shot segmentation with multimodal UAV images. Comput. Electron. Agric. 2025, 236, 110462.

3. Wen, F.; Wu, H.; Zhang, X.; Shuai, Y.; Huang, J.P.; Li, X.; Huang, J.Y. Accurate recognition and segmentation of northern corn leaf blight in drone RGB Images: A CycleGAN-augmented YOLOv5-Mobile-Seg lightweight network approach. Comput. Electron. Agric. 2025, 236, 110433.

4. Diao, Z.; Guo, P.; Zhang, B.; Zhang, D.; Yan, J.; He, Z.; Zhao, S.; Zhao, C.; Zhang, J. Navigation line extraction algorithm for corn spraying robot based on improved YOLOv8s network. Comput. Electron. Agric. 2023, 212, 108049.

5. Xing, Z.; Wang, Y.; Qu, A.; Yang, C. MFENet: Multi-scale feature extraction network for images deblurring and segmentation of swinging wolfberry branch. Comput. Electron. Agric. 2023, 215, 108413.

6. Yu, H.; Li, D.; Chen, Y. A state-of-the-art review of image motion deblurring techniques in precision agriculture. Heliyon 2023, 9, e17332.

7. Zhan, Z.; Yang, X.; Li, Y.; Pang, C. Video deblurring via motion compensation and adaptive information fusion. Neurocomputing 2019, 341, 88–98.

8. Lin, X.; Huang, Y.; Ren, H.; Liu, Z.; Zhou, Y.; Fu, H.; Cheng, B. ClearSight: Human Vision-Inspired Solutions for Event-Based Motion Deblurring. arXiv 2025.

9. Wen, Y.; Chen, J.; Sheng, B.; Chen, Z.; Li, P.; Tan, P.; Lee, T.-Y. Structure-Aware Motion Deblurring Using Multi-Adversarial Optimized CycleGAN. IEEE Trans. Image Process. 2021, 30, 6142–6155.

10. Song, T.; Li, L.; Wu, J.; Dong, W.; Cheng, D. Quality-aware blind image motion deblurring. Pattern Recognit. 2024, 153, 110568.

11. Sun, L.; Gong, B.; Liu, J.; Gao, D. Visual object tracking based on adaptive deblurring integrating motion blur perception. J. Vis. Commun. Image Represent. 2025, 107, 104388.

12. Zhang, K.; Ren, W.; Luo, W.; Lai, W.-S.; Stenger, B.; Yang, M.-H.; Li, H. Deep Image Deblurring: A Survey. Int. J. Comput. Vis. 2022, 130, 2103–2130.

13. Isola, P.; Zhu, J.; Zhou, T.; Efros, A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5967–5976.

14. Kupyn, O.; Budzan, V.; Mykhailych, M.; Mishkin, D.; Matas, J. DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8183–8192.

15. Kupyn, O.; Martyniuk, T.; Wu, J.; Wang, Z. DeblurGAN-v2: Deblurring (Orders-of-Magnitude) Faster and Better. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8877–8886.

16. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.-H. Restormer: Efficient Transformer for High-Resolution Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

17. Shah, M.; Kumar, P. Improved handling of motion blur for grape detection after deblurring. In Proceedings of the 2021 8th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 26–27 August 2021; pp. 949–954.

18. Batchuluun, G.; Hong, J.S.; Wahid, A.; Park, K.R. Plant Image Classification with Nonlinear Motion Deblurring Based on Deep Learning. Mathematics 2023, 11, 4011.

19. Yun, C.; Kim, Y.H.; Lee, S.J.; Im, S.J.; Park, K.R. WRA-Net: Wide Receptive Field Attention Network for Motion Deblurring in Crop and Weed Image. Plant Phenomics 2023, 5, 0031.

20. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features from Cheap Operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

21. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

22. Wang, Q.; Wang, H.; Zang, L.; Jiang, Y.; Wang, X.; Liu, Q.; Huang, D.; Hu, B. Gated normalization unit for image restoration. Pattern Anal. Appl. 2025, 28, 16.

23. Ding, K.; Ma, K.; Wang, S.; Simoncelli, E.P. Comparison of Full-Reference Image Quality Models for Optimization of Image Processing Systems. Int. J. Comput. Vis. 2021, 129, 1258–1281.

24. Ding, K.; Ma, K.; Wang, S.; Simoncelli, E.P. Image Quality Assessment: Unifying Structure and Texture Similarity. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2567–2581.

25. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.-H.; Shao, L. Multi-Stage Progressive Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

26. Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple Baselines for Image Restoration. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

27. Liu, Y.; Haridevan, A.; Schofield, H.; Shan, J. Application of Ghost-DeblurGAN to Fiducial Marker Detection. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 6827–6832.

28. Chen, L.; Lu, X.; Zhang, J.; Chu, X.; Chen, C. HINet: Half Instance Normalization Network for Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

29. Cai, S.; Pan, W.; Liu, H.; Zeng, X.; Sun, Z. Orchard Obstacle Detection Based on D2-YOLO Deblurring Recognition Network. Trans. Chin. Soc. Agric. Mach. 2023, 54, 284–292.

| Metric | Full name | Type |
|---|---|---|
| DISTS | Deep Image Structure and Texture Similarity | Perceptual features |
| LPIPS | Learned Perceptual Image Patch Similarity | Perceptual features |
| VSI | Visual Saliency-Induced Index | Perceptual features |
| MS-SSIM | Multi-Scale Structural Similarity | Structural similarity |
| FSIM | Feature Similarity Index | Structural similarity |
| GMSD | Gradient Magnitude Similarity Deviation | Structural similarity |
| NLPD | Normalized Laplacian Pyramid Distance | Statistical modeling |

(a)

| Networks | DISTS | LPIPS | VSI | MS-SSIM |
|---|---|---|---|---|
| Test-blur | 0.723 ± 0.012 | 0.638 ± 0.011 | 0.868 ± 0.010 | 0.472 ± 0.032 |
| ResNet | 0.848 ± 0.005 | 0.740 ± 0.004 | 0.961 ± 0.003 | 0.817 ± 0.010 |
| Inception | 0.854 ± 0.006 | 0.729 ± 0.005 | 0.956 ± 0.003 | 0.802 ± 0.011 |
| UNet | 0.853 ± 0.005 | 0.740 ± 0.005 | 0.959 ± 0.003 | 0.812 ± 0.014 |
| GhostNet | 0.881 ± 0.004 | 0.724 ± 0.004 | 0.960 ± 0.003 | 0.810 ± 0.008 |
| MobileNet | 0.884 ± 0.004 | 0.729 ± 0.004 | 0.961 ± 0.003 | 0.809 ± 0.009 |
| AGG | 0.911 ± 0.003 | 0.766 ± 0.004 | 0.971 ± 0.002 | 0.863 ± 0.008 |

(b)

| Networks | FSIM | GMSD | NLPD | Score |
|---|---|---|---|---|
| Test-blur | 0.679 ± 0.018 | 0.783 ± 0.008 | 0.546 ± 0.010 | 0.673 ± 0.012 |
| ResNet | 0.891 ± 0.006 | 0.847 ± 0.002 | 0.673 ± 0.006 | 0.825 ± 0.003 |
| Inception | 0.881 ± 0.006 | 0.836 ± 0.003 | 0.654 ± 0.007 | 0.816 ± 0.006 |
| UNet | 0.885 ± 0.007 | 0.843 ± 0.004 | 0.665 ± 0.008 | 0.822 ± 0.006 |
| GhostNet | 0.889 ± 0.005 | 0.837 ± 0.002 | 0.655 ± 0.005 | 0.822 ± 0.004 |
| MobileNet | 0.891 ± 0.005 | 0.838 ± 0.002 | 0.654 ± 0.006 | 0.824 ± 0.003 |
| AGG | 0.914 ± 0.004 | 0.860 ± 0.003 | 0.697 ± 0.007 | 0.855 ± 0.004 |
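
In every row of tables (a) and (b), the Score column matches, to three decimals, the unweighted mean of the seven metric columns; for AGG, (0.911 + 0.766 + 0.971 + 0.863 + 0.914 + 0.860 + 0.697) / 7 ≈ 0.855. The sketch below reproduces that aggregation from the tabulated means. Treating Score as a plain average is an inference from the numbers rather than a definition stated in the text, and the helper `composite_score` is hypothetical.

```python
# Minimal sketch: composite IQA score as the unweighted mean of seven metrics.
# Assumption: Score = simple average, inferred from the tabulated values.
from statistics import fmean

METRICS = ("DISTS", "LPIPS", "VSI", "MS-SSIM", "FSIM", "GMSD", "NLPD")

# Per-network mean values copied from tables (a) and (b); the ± terms are omitted.
TABLE = {
    "Test-blur": (0.723, 0.638, 0.868, 0.472, 0.679, 0.783, 0.546),
    "ResNet":    (0.848, 0.740, 0.961, 0.817, 0.891, 0.847, 0.673),
    "Inception": (0.854, 0.729, 0.956, 0.802, 0.881, 0.836, 0.654),
    "UNet":      (0.853, 0.740, 0.959, 0.812, 0.885, 0.843, 0.665),
    "GhostNet":  (0.881, 0.724, 0.960, 0.810, 0.889, 0.837, 0.655),
    "MobileNet": (0.884, 0.729, 0.961, 0.809, 0.891, 0.838, 0.654),
    "AGG":       (0.911, 0.766, 0.971, 0.863, 0.914, 0.860, 0.697),
}


def composite_score(values):
    """Return the unweighted mean of the seven per-metric scores (hypothetical helper)."""
    if len(values) != len(METRICS):
        raise ValueError(f"expected {len(METRICS)} metric values, got {len(values)}")
    return fmean(values)


if __name__ == "__main__":
    for name, vals in TABLE.items():
        print(f"{name:<10} {composite_score(vals):.3f}")
```

Every value printed by this loop agrees with the Score column, which suggests the seven metrics were weighted equally.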

| Networks | PSNR (dB) | SSIM | IQA | Params (M) | FLOPs (G) | Memory (MB) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|
| Test-blur | 18.07 | 0.404 | 0.673 | / | / | / | / |
| ResNet | 21.10 | 0.602 | 0.825 | 7.84 | 32.71 | 346.09 | 384 ± 1.95 |
| Inception | 20.48 | 0.572 | 0.816 | 35.84 | 17.57 | 214.38 | 205 ± 2.81 |
| UNet | 21.07 | 0.603 | 0.822 | 51.29 | 21.40 | 280.99 | 293 ± 1.98 |
| GhostNet | 21.12 | 0.609 | 0.822 | 1.52 | 1.38 | 75.31 | 73 ± 1.28 |
| MobileNet | 21.34 | 0.626 | 0.824 | 2.11 | 2.74 | 119.92 | 85 ± 1.19 |
| AGG | 22.35 | 0.687 | 0.855 | 1.53 | 1.38 | 75.32 | 58 ± 1.08 |
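
PSNR is reported above in dB but is not defined in the surviving text. For reference, a minimal sketch of the standard definition, PSNR = 10 · log10(MAX² / MSE), follows. It assumes 8-bit pixel values (MAX = 255) passed as flattened sequences. The text does not state whether the authors evaluated on RGB or luminance, or how they averaged over images, so `psnr` is a hypothetical helper and not their evaluation code.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], restored: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized, flattened images.

    Assumes 8-bit data by default (max_value = 255); pass 1.0 for images scaled to [0, 1].
    """
    if len(reference) != len(restored) or len(reference) == 0:
        raise ValueError("images must be non-empty and have the same number of pixels")
    # Mean squared error over all pixels (and channels, if interleaved in the sequence).
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# A uniform error of one grey level gives 20 * log10(255), about 48.13 dB.
print(f"{psnr([10, 20, 30], [11, 21, 31]):.2f}")
```

Because PSNR is logarithmic in MSE, AGG's 4.28 dB gain over the blurred test inputs (22.35 versus 18.07 dB) corresponds to a mean squared error about 10^0.428 ≈ 2.7 times smaller.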

| Method | PSNR (dB) | SSIM | IQA (95% CI) | Params (M) | Inference Time (ms) |
|---|---|---|---|---|---|
| DeblurGAN-v2 [15] | 21.10 | 0.601 | 0.825 ± 0.003 | 28.84 | 384 ± 1.95 |
| MPRNet [25] | 22.29 | 0.673 | 0.844 ± 0.012 | 20.1 | 1998 ± 3.71 |
| Restormer [16] | 23.21 | 0.727 | 0.872 ± 0.008 | 16.13 | 1199 ± 3.79 |
| NAFNet [26] | 22.64 | 0.703 | 0.860 ± 0.010 | 67.89 | 780 ± 3.27 |
| AGG-DeblurGAN | 22.35 | 0.687 | 0.855 ± 0.004 | 5.24 | 58 ± 1.08 |
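
This comparison trades restoration quality against speed. The sketch below expresses that trade-off directly: for each baseline, it computes the PSNR difference from AGG-DeblurGAN and how many times slower the baseline's mean inference is. It uses only the tabulated means and ignores the ± spreads. The function name `tradeoff` is hypothetical.

```python
# Rows copied from the comparison table: (PSNR in dB, mean inference time in ms).
RESULTS = {
    "DeblurGAN-v2": (21.10, 384),
    "MPRNet": (22.29, 1998),
    "Restormer": (23.21, 1199),
    "NAFNet": (22.64, 780),
    "AGG-DeblurGAN": (22.35, 58),
}


def tradeoff(baseline, reference="AGG-DeblurGAN"):
    """Return (PSNR of baseline minus reference in dB, baseline time / reference time)."""
    b_psnr, b_ms = RESULTS[baseline]
    r_psnr, r_ms = RESULTS[reference]
    return round(b_psnr - r_psnr, 2), round(b_ms / r_ms, 1)


for name in RESULTS:
    if name != "AGG-DeblurGAN":
        gain, slowdown = tradeoff(name)
        print(f"{name:<13} PSNR {gain:+.2f} dB, {slowdown:.1f}x slower")
```

For example, Restormer's 0.86 dB PSNR advantage comes at roughly 20.7 times the inference time of AGG-DeblurGAN, and NAFNet's 0.29 dB advantage at roughly 13.4 times.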

| Model | PSNR (dB) | SSIM | IQA (95% CI) | Params (M) | Inference Time (ms) |
|---|---|---|---|---|---|
| AGG-DeblurGAN | 22.35 | 0.687 | 0.855 ± 0.004 | 5.24 | 58 ± 1.08 |
| Remove adversarial loss | 20.81 | 0.599 | 0.807 ± 0.005 | 5.24 | 58 ± 1.07 |
| Remove SE module | 22.06 | 0.674 | 0.849 ± 0.005 | 5.23 | 54 ± 1.67 |
| Remove GHIN module | 21.15 | 0.611 | 0.819 ± 0.006 | 5.24 | 53 ± 1.10 |
| Remove gating mechanism | 21.45 | 0.633 | 0.823 ± 0.004 | 5.24 | 53 ± 1.12 |
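
Each ablation row can be read as a per-component contribution by subtracting the variant's scores from the full model's. The sketch below does that bookkeeping with the tabulated values only, then ranks the removed components by PSNR loss. On these numbers, SSIM and IQA give the same order: adversarial loss, GHIN module, gating mechanism, then SE module. Attributing each drop to a single component assumes the rest of the network is unchanged, and the helper `drop` is hypothetical.

```python
# Full-model scores and single-component ablations: (PSNR in dB, SSIM, IQA).
FULL = (22.35, 0.687, 0.855)
ABLATIONS = {
    "adversarial loss": (20.81, 0.599, 0.807),
    "SE module": (22.06, 0.674, 0.849),
    "GHIN module": (21.15, 0.611, 0.819),
    "gating mechanism": (21.45, 0.633, 0.823),
}


def drop(ablated, full=FULL):
    """Score lost when a component is removed (full minus ablated), per metric."""
    return tuple(round(f - a, 3) for f, a in zip(full, ablated))


# Largest PSNR loss first.
ranking = sorted(ABLATIONS, key=lambda name: drop(ABLATIONS[name])[0], reverse=True)

for name in ranking:
    d_psnr, d_ssim, d_iqa = drop(ABLATIONS[name])
    print(f"without {name:<17} -{d_psnr:.2f} dB PSNR, -{d_ssim:.3f} SSIM, -{d_iqa:.3f} IQA")
```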

| Source | Class | mAP@0.5 | mAP@0.5:0.95 | Precision | Recall | F1 | FNR | mConf |
|---|---|---|---|---|---|---|---|---|
| Sharp | citrus | 0.925 | 0.630 | 0.868 | 0.842 | 0.855 | 0.158 | 0.787 |
| | tree | 0.995 | 0.868 | 0.982 | 1.000 | 0.987 | 0.000 | 0.928 |
| Blur | citrus | 0.673 | 0.324 | 0.855 | 0.454 | 0.593 | 0.546 | 0.675 |
| | tree | 0.967 | 0.741 | 0.955 | 0.900 | 0.927 | 0.100 | 0.847 |
| Restore | citrus | 0.898 | 0.604 | 0.861 | 0.803 | 0.831 | 0.197 | 0.767 |
| | tree | 0.995 | 0.842 | 0.975 | 1.000 | 0.981 | 0.000 | 0.922 |
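
The citrus rows match the usual definitions F1 = 2PR / (P + R) and FNR = 1 − Recall. The sketch below recomputes those two columns for the citrus class from the tabulated precision and recall. It also adds a hypothetical helper, `recovered_fraction`, that measures how much of the blur-induced loss in a metric restoration wins back. That ratio is a derived figure and is not reported in the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def false_negative_rate(recall):
    """Share of ground-truth objects the detector misses: FNR = 1 - recall."""
    return 1.0 - recall


def recovered_fraction(sharp, blur, restored):
    """Fraction of the sharp-to-blur drop in a metric recovered by restoration (hypothetical)."""
    return (restored - blur) / (sharp - blur)


# (precision, recall) for the citrus class, copied from the table.
CITRUS = {
    "Sharp": (0.868, 0.842),
    "Blur": (0.855, 0.454),
    "Restore": (0.861, 0.803),
}

for source, (p, r) in CITRUS.items():
    print(f"{source:<8} F1={f1_score(p, r):.3f} FNR={false_negative_rate(r):.3f}")

# Citrus mAP@0.5: sharp 0.925, blur 0.673, restored 0.898.
print(f"recovered: {recovered_fraction(0.925, 0.673, 0.898):.3f}")
```

For citrus mAP@0.5, the last line gives (0.898 − 0.673) / (0.925 − 0.673) ≈ 0.89. In other words, restoration recovers roughly 89% of the drop caused by blur.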

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, Y.; Li, H.; Yang, Y.; Li, C.; Wang, L.; Wang, P. Lightweight GAN for Restoring Blurred Images to Enhance Citrus Detection. Plants 2025, 14, 3085. https://doi.org/10.3390/plants14193085

Huang Y, Li H, Yang Y, Li C, Wang L, Wang P. Lightweight GAN for Restoring Blurred Images to Enhance Citrus Detection. Plants. 2025; 14(19):3085. https://doi.org/10.3390/plants14193085

Chicago/Turabian Style: Huang, Yuyu, Hui Li, Yuheng Yang, Chengsong Li, Lihong Wang, and Pei Wang. 2025. "Lightweight GAN for Restoring Blurred Images to Enhance Citrus Detection" Plants 14, no. 19: 3085. https://doi.org/10.3390/plants14193085

APA Style: Huang, Y., Li, H., Yang, Y., Li, C., Wang, L., & Wang, P. (2025). Lightweight GAN for Restoring Blurred Images to Enhance Citrus Detection. Plants, 14(19), 3085. https://doi.org/10.3390/plants14193085