1. Introduction

With the continuous growth of the global population and the escalating challenges to agricultural production posed by climate change, food security has become a global issue of common concern to all countries. As one of the world’s three major staple foods, maize occupies a core position in the global food supply system due to its wide adaptability, high yield potential, and diverse applications (including food, feed, and industrial raw materials, among others). It plays an irreplaceable role in ensuring global food security, supporting sustainable agricultural development, and stabilizing economic and social operations [

1,

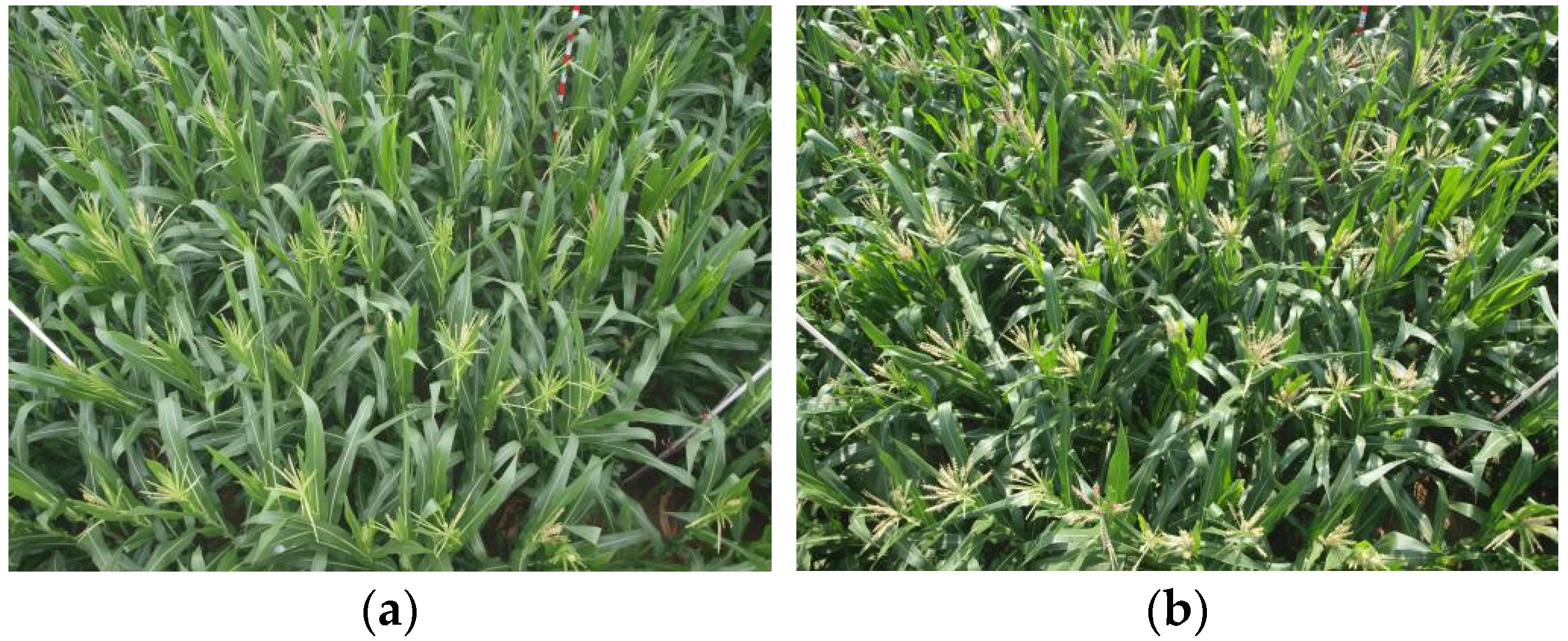

2]. The industry of maize seed production stands as the linchpin of maize cultivation, with maize being a monoecious species that undergoes cross-pollination. In its natural state, when the pollen from a feminized tassel alights upon the same ear, self-pollination ensues, yielding inbred seeds that drastically compromise seed purity and weakens the vigor of heterosis—the phenomenon wherein the initial hybrid progeny derived from genetically distinct parents exhibits enhanced attributes in growth, vitality, resilience to stress, yield, and quality—subsequently precipitating a decrement in both the yield and quality of maize, and posing a threat to farmers’ livelihoods. Thus, the meticulous identification and excision of residual tassels post-detasseling of the pistillate parent, are instrumental in preserving seed purity [

3]. However, traditional manual detection methods suffer from inherent limitations such as low efficiency and high subjectivity, failing to meet the demands of large-scale modern agricultural production, thus urgently requiring breakthroughs in intelligent detection technologies.

Unmanned Aerial Vehicles (UAVs) equipped with visible light sensors have become an important technical means for crop phenotypic information collection due to their advantages of strong mobility, high cost-effectiveness, and efficient operation [4,5,6,7]. Deep learning technology, with its advantages of high efficiency and non-contact operation, has provided a new approach for automated tassel detection, and relevant research has made positive progress.

In existing studies, scholars have carried out a series of explorations focusing on model lightweighting, accuracy optimization, and scenario adaptability. Yu et al. [8] constructed segmentation models such as PSPNet and DeepLabV3+ using RGB images from experimental bases and verified the universality of U-Net for maize tassel segmentation, but did not address scale differences in complex fields. Liang et al. [9] compared the performance of Faster R-CNN, SSD, and other models on UAV images and optimized onboard deployment efficiency through SSD_mobilenet; however, their self-built dataset was affected by weather, leading to insufficient model robustness. Zhang et al. [10] replaced the backbone network of YOLOv4 with GhostNet and optimized the activation function, balancing accuracy, speed, and parameter count, but did not design dedicated modules for the dynamic morphological characteristics of tassels. Ma et al. [11] improved YOLOv7-tiny by introducing modules such as SPD-Conv and ECA-Net, raising detection accuracy while keeping the model lightweight, yet its adaptability to tassel scales across multiple growth stages remains limited. Du et al. [12] proposed a method combining UAV remote sensing and deep learning to detect maize ears at different stages, with an average precision of 94.5%, but the detection performance fluctuates significantly under strong light conditions. Song et al. [13] proposed the SEYOLOX-tiny model, which raised the mean average precision to 95.0% by introducing an attention mechanism, but its detection performance for small targets still needs optimization. These studies all aim to improve the accuracy or efficiency of maize tassel detection and provide important references for this research. However, their improvements mostly target a single dimension (such as backbone network replacement or the introduction of a single attention mechanism) and do not form a systematic solution for complex field scenarios.

Despite the significant progress made by existing methods, tassel detection in multi-temporal UAV images still faces three core challenges that current research has not fully addressed. First, tassel morphology evolves dynamically with growth stage and, coupled with differences in UAV flight height, produces an extremely large span of target scales. Existing studies mostly rely on single-scale feature extraction networks (e.g., [10,11]), which adapt poorly to scale changes across the full growth period. Second, the complex field environment, including leaf occlusion, uneven illumination, and soil background interference, easily leads to false and missed detections. Although some studies have introduced attention mechanisms to enhance feature expression (e.g., [12,13]), they have not solved the problem of information loss in cross-dimensional feature interaction. Third, computing power at the UAV edge is limited, and the parameter count and computational complexity of traditional models restrict real-time detection performance. Existing lightweight schemes (e.g., [9]) mostly improve efficiency by simplifying network structures, which tends to sacrifice accuracy and makes it difficult to balance the dual demands of “accuracy and efficiency”. Therefore, although similar studies have explored intelligent detection methods for maize tassels, there is still an urgent need for detection models that are both lightweight and highly accurate. Such models must meet the practical demand for real-time monitoring of the tasseling stage in smart agriculture and address, through multi-dimensional collaborative optimization, the challenges of accurately identifying complex germplasm resources and processing images in real time.

Based on this, aiming at the limitations of existing methods, this study proposes an OTB-YOLO model integrating multi-module collaborative improvements. Through the enhancement of backbone network features, optimization of neck attention mechanisms, and innovation of multi-scale fusion strategies, it systematically addresses the problems of poor scale adaptability, insufficient robustness in complex environments, and the difficulty in balancing lightweight and accuracy, providing technical support for automated monitoring of tassels in large-scale seed production bases.

3. Network Model and Improvements

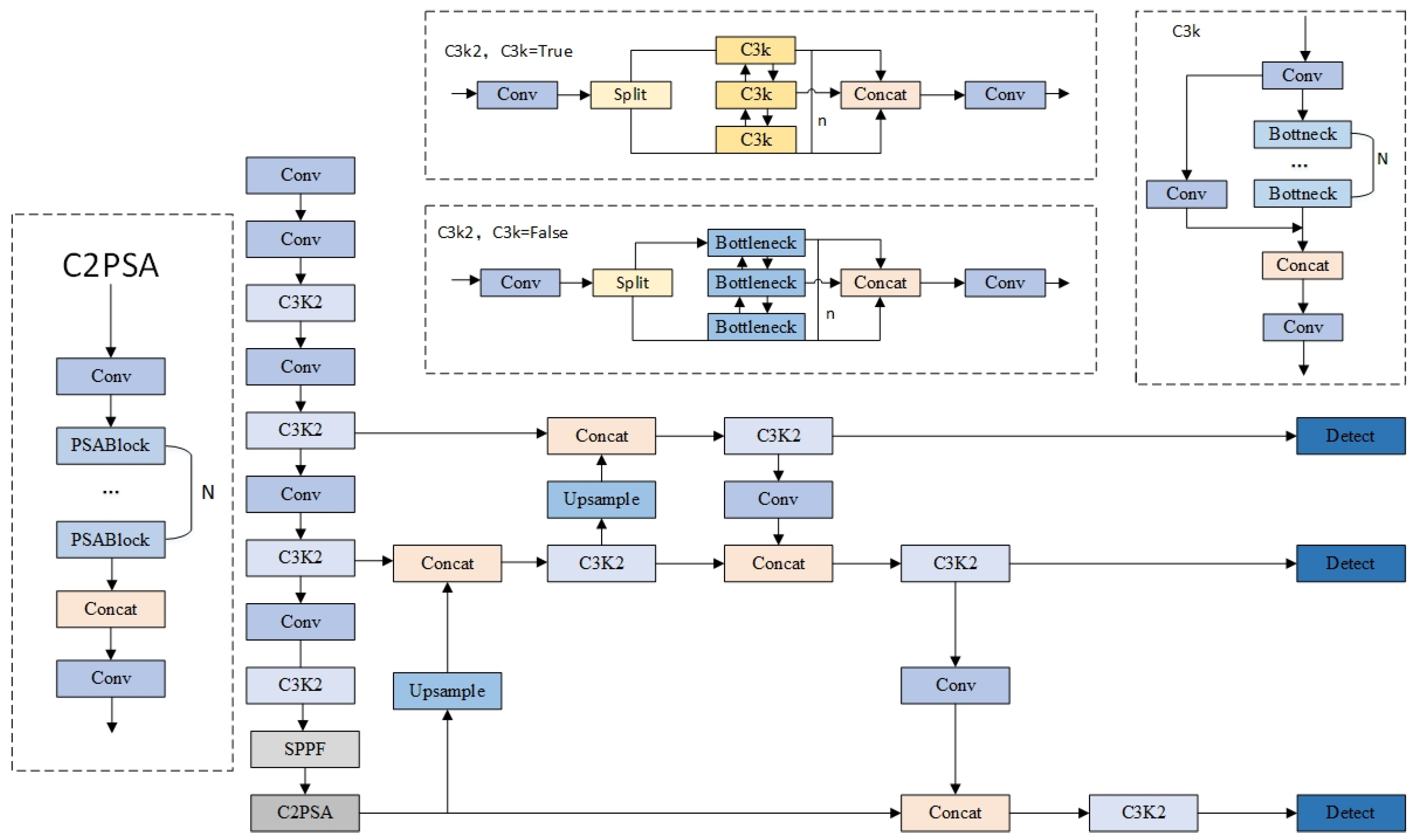

3.1. YOLOv11 Network Architecture

YOLOv11, an efficient general-purpose object detection framework developed and open-sourced by Ultralytics [20], features a network architecture comprising three key components: the backbone network, the neck network, and the detection head. Its fundamental structure is illustrated in Figure 2, with core improvements embodied in the design of the following modules:

The backbone network employs the C3K2 module as a replacement for the traditional convolution structure. This module integrates the advantages of standard convolution and group convolution and can be configured to use either C3k units with different convolution kernel sizes or standard bottleneck structures. It maintains feature extraction efficiency while allowing flexible architecture configuration. In the neck network, the C2PSA module is introduced after the SPPF (Spatial Pyramid Pooling Fast) layer. Based on the Pyramid Squeeze Attention mechanism, this module enhances the model’s ability to capture key features through multi-scale feature compression and channel attention weighting. For the detection head, a lightweight prediction layer is constructed using depthwise separable convolution (DWConv). By separating spatial filtering from channel combination, it significantly reduces computational complexity while maintaining detection accuracy. In addition, network depth and width are systematically optimized to balance computational efficiency and feature expression capability.
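The saving from separating spatial filtering and channel combination can be illustrated with a simple parameter count. The following is a minimal sketch rather than the YOLOv11 implementation: the function names and the layer sizes (64 input channels, 128 output channels, a 3 × 3 kernel) are assumptions chosen only for illustration, and bias terms are ignored.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * c_in      # one k x k spatial filter per input channel
    pointwise = c_in * c_out      # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes, not taken from the paper.
c_in, c_out, k = 64, 128, 3
std = standard_conv_params(c_in, c_out, k)
dws = depthwise_separable_params(c_in, c_out, k)
ratio = dws / std

print(std, dws, f"{ratio:.3f}")  # prints: 73728 8768 0.119
```

For these assumed sizes the separable layer needs roughly 12% of the weights of the standard layer, which matches the general ratio 1/c_out + 1/k².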

3.2. OTB-YOLO Maize Tassel Detection Model

Leveraging the advantages of the YOLOv11 algorithm, this study proposes a lightweight detection method integrating improved attention mechanisms, namely OTB-YOLO (where “OTB” is derived from the initials of the three core improved modules: ODConv, Triplet Attention, and BiFPN), targeting the specific characteristics of maize tassel detection scenarios (e.g., complex field background interference, diverse tassel morphologies with large scale variations, and the need for lightweight deployment on UAVs). The core innovation of this method lies in achieving a “dual improvement in accuracy and efficiency” through modular improvements: it not only addresses the insufficient capture of tassel features by traditional models but also meets the deployment requirements of UAVs through computational cost optimization. Specifically, the creative structural improvements are as follows:

To tackle the issue that tassel features are easily disturbed in complex field environments, enhanced feature extraction in the backbone is achieved through an independently designed fusion scheme of ODConv (Omni-Dimensional Convolution) and C3K2 modules. The ODConv module is embedded into the C3K2 modules at the last three critical levels of the backbone, strengthening the model’s feature extraction capability while reducing computational costs. For an optimized attention mechanism in the neck, aiming to solve the problem of losing fine morphological information of tassels during feature dimension reduction in traditional attention mechanisms, this study proposes introducing the Triplet Attention mechanism after the feature fusion nodes in the upsampling path of the neck. It enhances the expression of key features (e.g., tassel morphological details) through cross-dimensional interaction, avoiding information loss caused by dimension reduction in traditional attention mechanisms. Regarding the innovative multi-scale feature fusion strategy, considering the significant scale variations in tassel targets captured by UAVs (from small tassels in the seedling stage to large tassels in the mature stage), this study proposes introducing the BiFPN (Bidirectional Feature Pyramid Network) at the feature fusion nodes of both the upsampling and downsampling paths in the neck. It optimizes multi-scale feature fusion through bidirectional cross-layer connections, thereby enhancing the detection robustness of tassel targets at different resolutions.

The network structure of the improved OTB-YOLO maize tassel detection model is illustrated in Figure 3.

3.2.1. ODConv

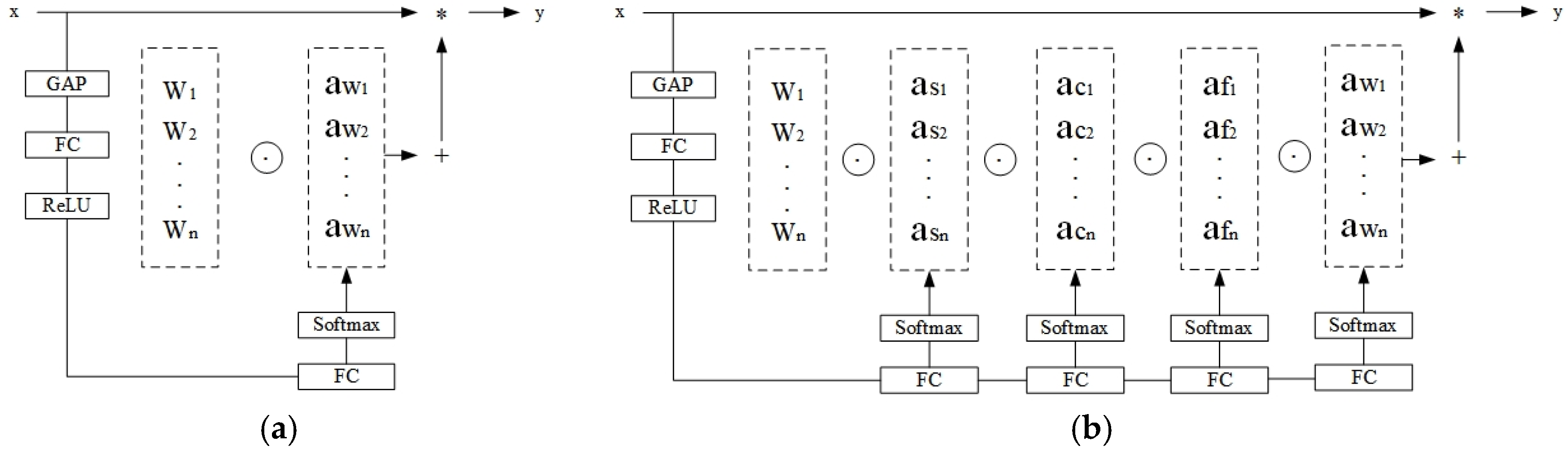

The core technical advantage of ODConv [21] lies in its multi-dimensional dynamic attention mechanism. Whereas traditional dynamic convolution learns attention only over the number of convolution kernels, ODConv additionally learns attention along three further dimensions of the kernel space: spatial size, number of input channels, and number of output channels. Figure 4 shows the structural difference between the traditional convolution block and ODConv. This multi-dimensional dynamic characteristic enables ODConv to adapt more finely to feature changes in maize tassels. Specifically, in dense tassel areas, ODConv can dynamically reweight the spatial positions of the convolution kernel as well as its input and output channels to highlight key features, thereby helping to avoid missed and false detections.

Additionally, ODConv uses a parallel strategy to simultaneously learn attention in different dimensions. This parallel architecture not only improves computational efficiency but also ensures the synergistic effect between features in each dimension. In the maize tassel detection task, this strategy allows the network to process multi-dimensional information such as the spatial position, morphological features, and spectral characteristics of tassels in parallel. Through this parallel learning mechanism, ODConv achieves the comprehensive capture of tassel features, while reducing computational redundancy and further optimizing the lightweight characteristics of the model, making it more suitable for deployment on UAVs and other computing-constrained platforms to meet real-time detection requirements.
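The four attentions described above can be summarized in one expression. The notation below follows the general ODConv formulation and is introduced here for illustration; the symbols are not defined elsewhere in this paper. With $n$ candidate kernels $W_1, \dots, W_n$, input $x$, and output $y$:

$$
y = \left( \alpha_{w1} \odot \alpha_{f1} \odot \alpha_{c1} \odot \alpha_{s1} \odot W_1 + \cdots + \alpha_{wn} \odot \alpha_{fn} \odot \alpha_{cn} \odot \alpha_{sn} \odot W_n \right) * x
$$

Here $\alpha_{wi}$ is a scalar attention for the whole kernel $W_i$, $\alpha_{si}$ weights its $k \times k$ spatial positions, $\alpha_{ci}$ weights its input channels, $\alpha_{fi}$ weights its output channels, $\odot$ denotes multiplication along the corresponding dimension, and $*$ denotes convolution. The four attentions are computed in parallel from the same input, which is the parallel strategy referred to above.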

Therefore, this study adopts the ODConv dynamic convolution technology. By utilizing the multi-dimensional attention mechanism and parallel strategy, it can comprehensively capture the features of maize tassels, thereby improving the accuracy of detection and counting. Through systematic experimental verification, it was finally determined to deploy the C3K2-ODConv module in the last three key feature extraction layers of the backbone and the P4 (medium scale) and P5 (large scale) detection layers of the neck network. This optimization scheme dynamically adjusts convolution kernel parameters and intelligently allocates computing resources, significantly improving the efficiency of feature extraction and downsampling while effectively maintaining the lightweight characteristics of the model, achieving the best balance between detection accuracy and computational efficiency.

3.2.2. Triplet Attention Mechanism

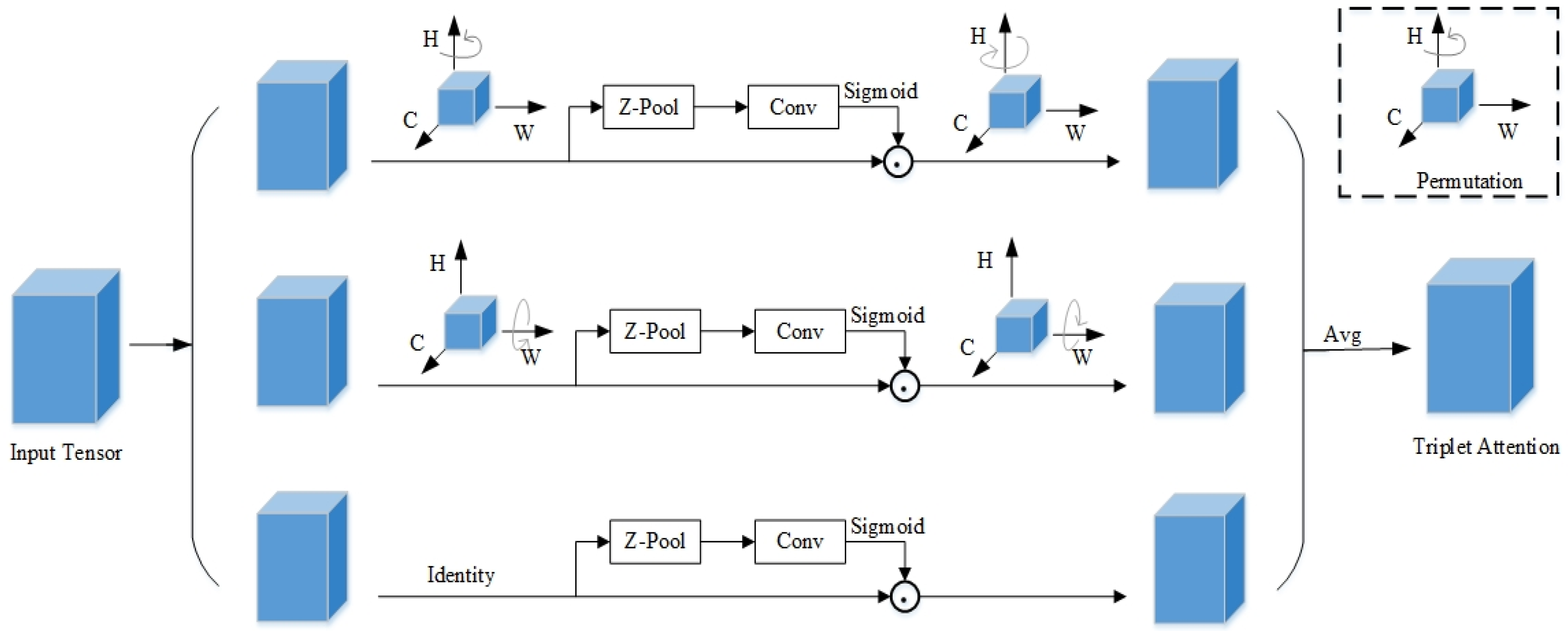

The Triplet Attention mechanism [22] achieves cross-dimensional feature interaction through a three-branch parallel architecture. Figure 5 shows the implementation flowchart of Triplet Attention, demonstrating how the three branches process the input tensor and are finally combined into the triplet attention output. The process comprises four key steps. First, tensor permutation converts the input features into interactive representations of different dimension pairs: channel-height, height-width, and width-channel. Next, each branch compresses global features through Z-pooling, captures local details with a k × k convolution, and generates attention weights using the Sigmoid function. Then, the attention weights are applied to the transformed features through a Hadamard product. Finally, the original dimensions are restored by the inverse transformation, and the branch outputs are fused with weights to obtain the triplet attention output.
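The four steps can be sketched on a small nested-list tensor `x[c][h][w]`. This is an illustrative sketch, not the module used in OTB-YOLO: pooling over one axis stands in for the explicit permutation, a fixed equal-weight mix of the two pooled maps stands in for the learned k × k convolution, and the three branches are fused by a plain average. All function names are hypothetical.

```python
from math import exp


def sigmoid(v):
    return 1.0 / (1.0 + exp(-v))


def z_pool(x, axis):
    """Z-pooling: stack the max and the mean of x[c][h][w] taken over `axis`."""
    dims = [len(x), len(x[0]), len(x[0][0])]
    kept = [d for d in range(3) if d != axis]
    max_map, mean_map = [], []
    for i in range(dims[kept[0]]):
        max_row, mean_row = [], []
        for j in range(dims[kept[1]]):
            vals = []
            for k in range(dims[axis]):
                idx = [0, 0, 0]
                idx[kept[0]], idx[kept[1]], idx[axis] = i, j, k
                vals.append(x[idx[0]][idx[1]][idx[2]])
            max_row.append(max(vals))
            mean_row.append(sum(vals) / len(vals))
        max_map.append(max_row)
        mean_map.append(mean_row)
    return [max_map, mean_map]


def branch(x, axis):
    """One branch: pool over `axis`, build a sigmoid gate, rescale the input."""
    max_map, mean_map = z_pool(x, axis)
    kept = [d for d in range(3) if d != axis]
    C, H, W = len(x), len(x[0]), len(x[0][0])
    out = [[[0.0] * W for _ in range(H)] for _ in range(C)]
    for c in range(C):
        for h in range(H):
            for w in range(W):
                pos = (c, h, w)
                i, j = pos[kept[0]], pos[kept[1]]
                # Assumption: equal-weight mix replaces the learned k x k convolution.
                gate = sigmoid(0.5 * (max_map[i][j] + mean_map[i][j]))
                out[c][h][w] = x[c][h][w] * gate  # Hadamard product with the gate
    return out


def triplet_attention(x):
    """Average the three branches (pooling over C, H, and W respectively)."""
    branches = [branch(x, axis) for axis in (0, 1, 2)]
    C, H, W = len(x), len(x[0]), len(x[0][0])
    return [[[sum(b[c][h][w] for b in branches) / 3.0 for w in range(W)]
             for h in range(H)] for c in range(C)]


x = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]  # toy 2 x 2 x 2 input
y = triplet_attention(x)
```

Pooling over the channel axis leaves a height-width map, pooling over the height axis leaves a channel-width map, and pooling over the width axis leaves a channel-height map, which corresponds to the three interaction pairs listed above. The output has the same shape as the input.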

This structure establishes interaction between the channel and spatial dimensions through tensor rotation and permutation operations, thereby comprehensively extracting the morphological features of the detection target. In addition, the mechanism achieves a lightweight design by sharing convolution kernel parameters and reusing features between branches, reducing the model’s computational burden. In the maize tassel detection task, this study deploys the Triplet Attention module in the small target detection layer. Through its three-branch structure, the module captures cross-dimensional interaction information in the input and efficiently calculates attention weights, constructing the interdependence between input channels and spatial positions and enhancing the model’s ability to capture multi-scale and multi-angle features of maize tassels. It focuses precisely on the key features of tassels and reduces missed detections caused by complex backgrounds or lighting changes, making it particularly suitable for UAV images in which small tassels are easily missed and tassels are unevenly distributed.

3.2.3. BiFPN Bidirectional Feature Pyramid Network

The BiFPN (Bidirectional Feature Pyramid Network) [23] realizes efficient feature transfer and information interaction through a multi-scale feature fusion mechanism, and its network structure is shown in Figure 6. This structure introduces bidirectional cross-scale connections on the basis of the traditional feature pyramid. First, it performs top-down feature transfer on the input features to fuse high-level semantic information; subsequently, it integrates low-level detail features through the bottom-up path; finally, it realizes dynamic fusion of multi-scale features through learnable feature weights.

Aiming at the problem of feature extraction for targets of different scales at different growth stages in maize tassel detection, this study introduces the BiFPN module into the neck network to improve the model’s ability to fuse tassel detail and global features through constructing a bidirectional feature fusion mechanism. BiFPN breaks through the limitation of unidirectional feature transfer and realizes efficient interaction of features at different scales by constructing bidirectional connection paths from top to bottom and from bottom to top. In the maize tassel detection scenario, this mechanism allows the detailed edge information of tassels captured by shallow networks (such as branch texture and tassel axis morphology) to be fully fused with the semantic features extracted by deep networks (such as overall contour and spatial distribution), effectively solving the problems of missed detection of small tassels and false detection of large tassels. At the same time, BiFPN reduces computational complexity by deleting redundant single-input nodes, adding direct connection edges between same-level nodes, and modularly reusing bidirectional paths, strengthening the transfer efficiency of cross-scale features.
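The fusion with learnable feature weights can be sketched as follows. The text above does not state which normalization is used, so this sketch assumes the fast normalized fusion of the original BiFPN design, in which each weight is kept non-negative and divided by the sum of all weights. The function name, the toy feature vectors, and the weight values are hypothetical.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse equally sized feature maps using non-negative, normalized weights."""
    # ReLU keeps every learnable weight non-negative.
    w = [max(0.0, wi) for wi in weights]
    total = sum(w) + eps  # eps avoids division by zero
    fused = [0.0] * len(features[0])
    for wi, feat in zip(w, features):
        for idx, value in enumerate(feat):
            fused[idx] += wi * value / total
    return fused


# Hypothetical flattened feature maps, already resized to a common resolution.
shallow = [1.0, 2.0, 3.0]  # low-level detail features
deep = [3.0, 2.0, 1.0]     # high-level semantic features

# Assumed weights: the deep branch contributes three times as much.
fused = fast_normalized_fusion([shallow, deep], weights=[1.0, 3.0])
```

Because the weights are learned during training, each fusion node can decide how much the shallow detail features and the deep semantic features should contribute at a given scale.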

5. Discussion

To address issues such as feature interference, detail loss, and poor scale adaptability in maize tassel detection from UAV images, this study proposes the OTB-YOLO model based on improved YOLOv11. Three core optimizations are implemented to enhance performance: embedding omni-dimensional dynamic convolution (ODConv) into the C3K2 modules of the backbone to strengthen feature extraction while reducing computational redundancy; introducing a Triplet Attention module in the neck to preserve key tassel features through cross-dimensional interaction; and introducing a bidirectional feature pyramid network (BiFPN) to optimize multi-scale feature fusion.

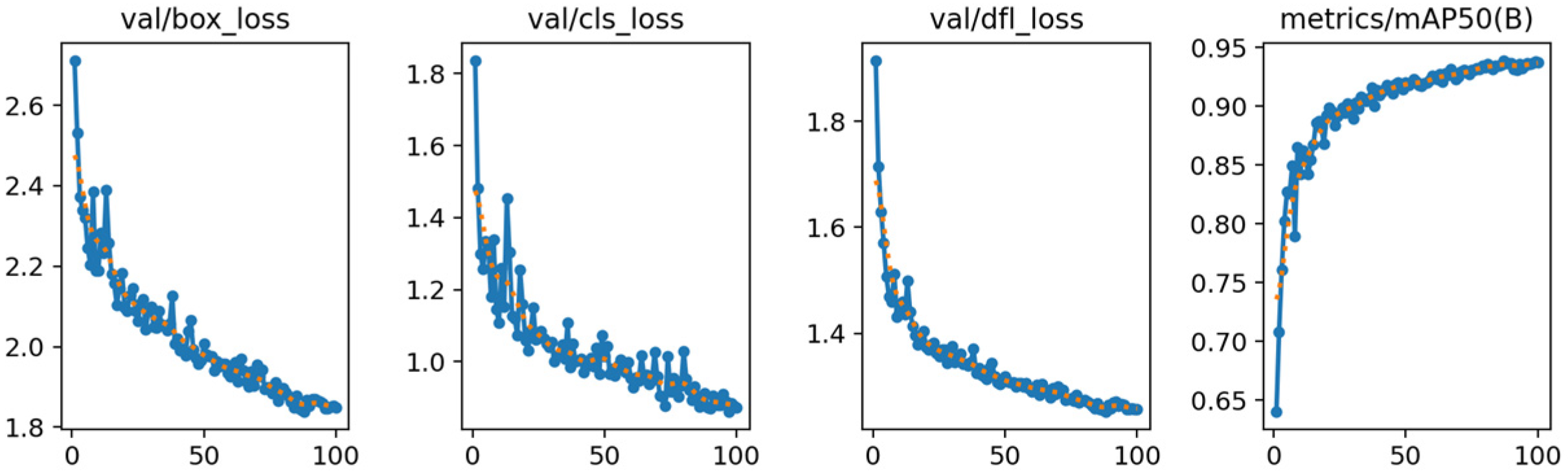

Ablation experiments validate the synergistic effectiveness of the improved modules: the model achieves a precision of 95.6% (+3.2%), a recall of 92.1% (+2.5%), and an mAP@0.5 of 96.6% (+3.1%), while maintaining lightweight characteristics with 3.4 M parameters and 6.0 GFLOPs. Specifically, ODConv dynamically adapts to morphological changes in maize tassels, compensating for the lack of dedicated dynamic feature modules in Zhang et al.’s [10] model; the Triplet Attention module enhances the expression of key features through cross-dimensional interaction, effectively alleviating the poor robustness of Liang et al.’s [9] model caused by environmental interference; BiFPN improves the efficiency of multi-scale fusion via bidirectional cross-layer connections, breaking through the limitation of Ma et al.’s [11] model in scale adaptation for maize tassels across multiple growth stages. Together, these modules achieve a balanced optimization of “precision-efficiency” in complex field scenarios.

Comparative experiments with mainstream models such as Faster-RCNN, SSD, RetinaNet, YOLOv5, and YOLOv8 further confirm the superiority of OTB-YOLO. Its detection accuracy (precision: 95.6%, recall: 92.1%, mAP@0.5: 96.6%) significantly outperforms the comparison models, and its lightweight characteristics (3.4 M parameters, 6.0 GFLOPs) are superior to most similar algorithms. Compared with Song et al. ’s [

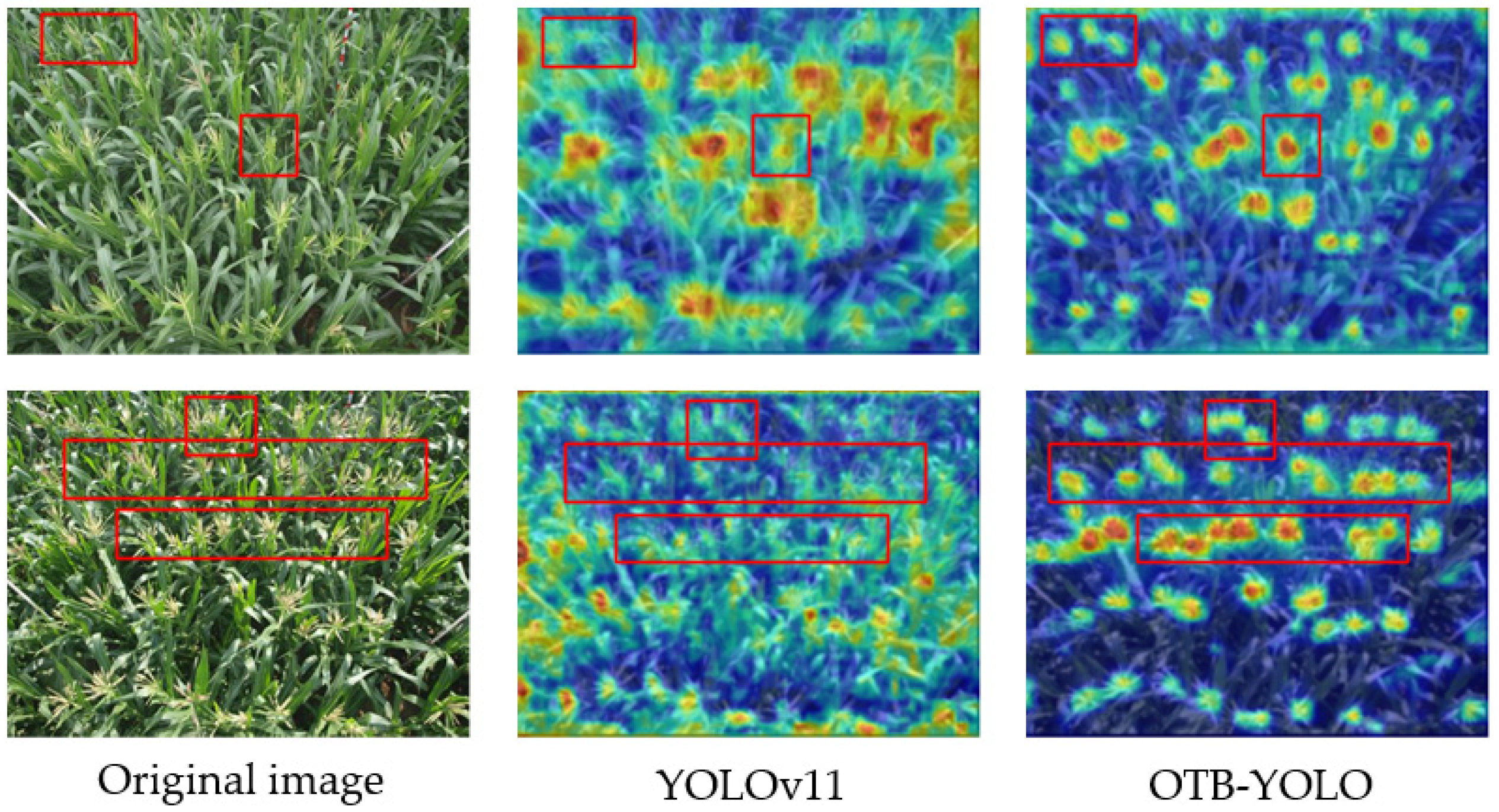

13] SEYOLOX-tiny model (mAP@0.5 of 95.0%), OTB-YOLO not only achieves a 1.6 percentage point improvement in accuracy but also optimizes the detection performance for small-sized tassels through the enhanced extraction of small-scale features by BiFPN, thereby addressing the drawback of its high missed detection rate for small targets. Grad-CAM visualization analysis intuitively shows that the model exhibits more precise attention distribution on key regions of maize tassels, such as tassel axes and branches, providing a mechanistic explanation for its performance advantages.

6. Conclusions

This study successfully develops the OTB-YOLO model by improving YOLOv11, effectively addressing key challenges in maize tassel detection from UAV images, including feature interference, detail loss, and poor scale adaptability. The integration of three core optimizations—omni-dimensional dynamic convolution (ODConv), Triplet Attention module, and bidirectional feature pyramid network (BiFPN)—synergistically enhances detection performance while maintaining lightweight properties (3.4 M parameters, 6.0 GFLOPs). Experimental results validate that OTB-YOLO achieves superior accuracy metrics (precision: 95.6%, recall: 92.1%, mAP@0.5: 96.6%) compared to existing models and effectively mitigates limitations of prior studies, such as poor dynamic feature adaptation and high small-target missed detection rates.

Future research will focus on exploring multi-modal data fusion strategies (e.g., thermal infrared) to further improve the model’s detection robustness in complex environments. Ultimately, the goal is to construct an intelligent monitoring platform covering the entire growth cycle of maize, providing reliable technical support for precision agricultural field management.