1. Introduction

Spatially explicit characterization of land cover and land use, as well as their changes over time, is key to long-term agricultural, environmental, and natural resource planning and management. One land-use characteristic that is becoming increasingly relevant for a wide range of policy questions is the extent of single-cropping versus multi-cropping systems, where multi-cropping refers to growing and harvesting multiple crops in the same field in the same year. For example, government-subsidized insurance for farmers who practice multi-cropping has been proposed as a way to increase production and keep food prices down [1]. Additionally, climate change has the potential to disrupt single-cropping systems in both positive and negative ways. On the positive side, longer growing seasons under warming can allow cooler regions that historically could support only single-cropping to transition to multi-cropping systems [2], increasing total food production and making farming more profitable. On the negative side, such a switch can increase crop irrigation water demands, exacerbating water security concerns [3]. It may also increase fertilizer use, exacerbating nutrient loading in water bodies and degrading soil health more generally [4,5]. Spatio-temporal information on the prevalence of multi-cropping is therefore important for understanding these implications, but such data are lacking. Even in the United States (US), multi-cropping practices are surveyed for only a few grain commodity crops [6], and this information is not available for areas with high crop diversity.

Over the last decade, several studies have used satellite imagery to map cropping intensities, where intensities of 1, 2, and 3 indicate single-, double-, and triple-cropping, respectively. While earlier work used coarse-spatial-resolution imagery at resolutions from 0.5 km to several kilometers [7,8,9,10], recent work has focused on Landsat or Sentinel data at finer spatial resolutions of 10 m to 30 m [11,12,13,14]. With the exception of [14], a global spatially explicit product building on the methodology of [11], existing works have primarily focused on geographic regions with high current multi-cropping extent, such as parts of China, India, and Indonesia. Regions such as the irrigated Western US, where multi-cropping has historically been less prevalent but has the potential to increase under a changing climate, have been largely ignored. Additionally, crop diversity in the irrigated Western US is very high (hundreds of crops) compared to the handful of crop types typically considered in existing studies. It is unclear whether the methodology used in existing works will translate well into monitoring tools for areas of high crop diversity.

The typical methodologies used to quantify cropping intensities are rule-based. They use a vegetation index (VI) time-series, apply threshold rules to identify peaks and troughs or the start and end of the season, and count the number of crop cycles. Note that by time-series, we mean the sequence of VI values over a given year. Because these VI thresholds can vary by crop and region, some modifications have been proposed to generalize the process. These include standardization practices that replace the direct use of a VI time-series with standardized values [11,14]. Additional enhancements to disregard spurious growth cycles are sometimes considered. For example, spectral indices indicative of bare soil, such as the Land Surface Water Index (LSWI), are used to double-check the start and end of the season [10,12], especially when water-logged rice cropping systems are involved. A minimum crop-cycle-length constraint is also applied in some cases [11,14]. A key advantage of these methods is that no training dataset is needed.
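To make the rule-based logic concrete, the sketch below counts crop cycles as prominent peaks in a regularly spaced, smoothed NDVI series, using SciPy's `find_peaks` and converting a minimum cycle length into a minimum peak separation. It is a generic illustration, not the procedure of any cited study; the prominence (0.2), the 10-day spacing, and the 40-day minimum separation are assumed values.

```python
import numpy as np
from scipy.signal import find_peaks


def count_crop_cycles(ndvi, step_days=10, min_prominence=0.2, min_cycle_days=40):
    """Count crop cycles as prominent NDVI peaks (illustrative thresholds).

    ndvi: 1-D array of regularly spaced, smoothed NDVI values for one year.
    step_days: spacing between consecutive observations (assumed 10 days).
    min_prominence: how far a peak must rise above its surrounding troughs.
    min_cycle_days: peaks closer together than this count as one cycle.
    """
    ndvi = np.asarray(ndvi, dtype=float)
    # Convert the minimum cycle length from days to a number of samples.
    min_distance = max(1, int(np.ceil(min_cycle_days / step_days)))
    peaks, _ = find_peaks(ndvi, prominence=min_prominence, distance=min_distance)
    return len(peaks)
```

A cycle count of 1 would then correspond to single-cropping and 2 to double-cropping; the weakness discussed above is that one prominence threshold must suit every crop.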

While machine learning (ML) models, which require ground-truth datasets, are ubiquitous in applications such as crop mapping, their use in cropping-intensity mapping is relatively rare and recent [15,16,17,18,19,20]. However, these methods could help capture important nuances in high-crop-diversity environments, where vegetation thresholds and cycle lengths vary significantly by crop, soil characteristics, and farming practices (e.g., herbicide applications). Advantages of ML models include the following: (i) they tend to become more accurate as the training set grows, (ii) they are more robust to rare or erroneous cases, and (iii) they learn mostly from frequent instances. The challenge in exploring ML methods is the lack of ground-truth data (spatially explicit labeled cropping intensity) across a range of cropping systems for training the models. While all labeling exercises are time- and labor-intensive, some applications, such as distinguishing single- from multi-cropping, are prohibitively so. For example, researchers familiar with the topic can label images containing irrigation canals or apples on a tree by inspecting each image once, with relatively minimal training. In contrast, labeling fields as single- or multi-cropped requires hiring enough personnel to visit fields multiple times within a season, which quickly becomes time- and cost-prohibitive.

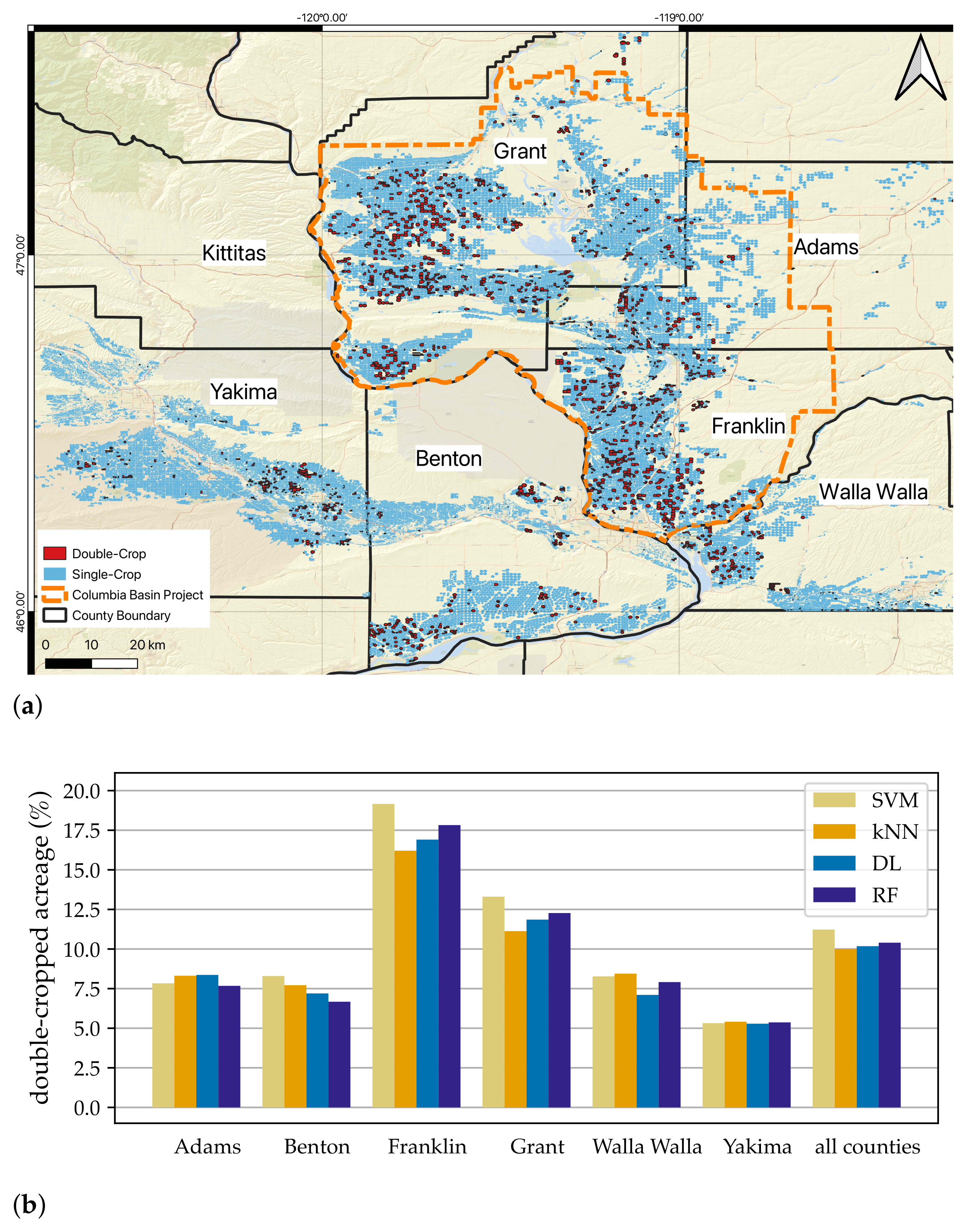

Our objective is to address the gap in field-scale single- and multi-cropped land-use data availability and monitoring capability in regions with high crop diversity. We address this by (a) labeling a ground-truth dataset in a relatively low-cost and scalable manner, (b) developing and evaluating ML classification methods for single- and multi-cropped fields that are crop-agnostic and at a field scale, and (c) comparing the performance of the ML models with the simpler rule-based method that is typically used. We consider a suite of ML models, but a novelty is the consideration of the time-series signature as an image and undertaking a deep learning image classification. Our hypothesis was that, given the fact that the shape of the time-series curve contains highly relevant information, image classification should work well. By

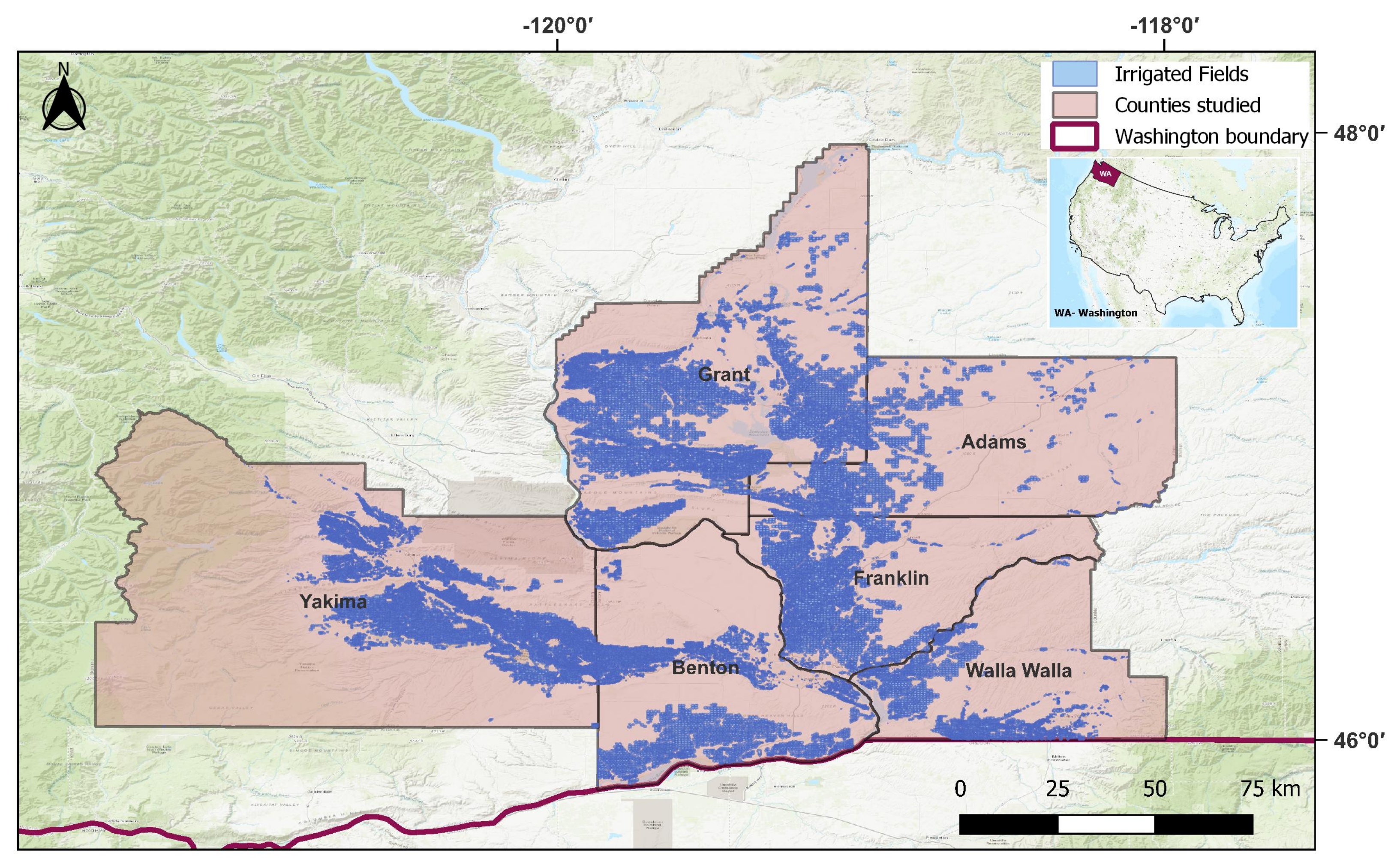

low cost, we mean the relatively lower cost in terms of time and labor needs compared to drive-by/windshield surveys, which are prohibitively expensive for our use case and not scalable to large areas. Finally, we will apply our best-performing method to the irrigated agricultural extent of eastern Washington State in the Pacific Northwest US (

Figure 1) as a case study to predict all fields as single- or double-cropped. Analysis of the predictions will help understand the capability of using this as a long-term monitoring tool for multi-cropping extent. In this regional context, multi-cropping is essentially double-cropping, and the rest of the paper uses this more specific terminology.

Our study area is located in the Upper Columbia River basin and the Yakima River basin of Washington State. The region has an arid climate, with hot, dry summers and moderately cold, dry winters, and significant spatial variability in climatic and topographic characteristics. Average annual precipitation ranges from 164 mm to 992 mm, and ground elevation ranges from 81 m to 3739 m. The region grows a wide variety of crops, most of which are high-value agricultural produce. This region is a good test-bed for several reasons. Although double-cropping is practiced, single-cropping dominates, which makes identifying double-cropped fields challenging. The region lies at northern latitudes, and as the climate warms and the growing season lengthens, conditions could become more conducive to double-cropping. Additionally, the region relies on surface water for irrigation, and increased double-cropping raises important field-scale water management challenges that require spatially explicit information. These relate not only to the magnitude of the additional irrigation water that might be needed but also to its timing. Water law in the Western US states generally specifies that water rights are associated with a specific place and season of use; a change in the season of use, such as that implied by a second crop, places a regulatory burden on the water-rights holder (farmer or irrigation district) and the managing agency (e.g., Washington State Department of Ecology) to modify the water-rights specifications accordingly. This is a time- and cost-intensive process, as changes need to be verified under the stipulations of Western Water Law and the Prior Appropriation Doctrine [21,22].

While recent cropping-intensity studies have leveraged data from the Sentinel mission, which provides a 10 m spatial resolution with a 5-day revisit [23], we intentionally use data from the Landsat mission [24,25], which provides a 30 m spatial resolution and an 8-day revisit when two concurrent Landsat missions are combined. This allows future use of the Landsat time-series going back several decades, which is invaluable for understanding the trends, drivers, and impacts of double-cropping adoption. If the focus is only on the current double-cropping extent, one might instead use a harmonized Landsat and Sentinel product (or compare the performance of Landsat alone against such products).

For a low-cost labeling process that does not involve multiple drive-by surveys within a season, we leverage satellite imagery and the knowledge of regional experts who are familiar with the cropping systems and fields. While this labeled dataset may not be as accurate as one from drive-by surveys, we expect it to provide an adequate, policy-relevant level of accuracy for identifying single- versus double-cropped extent. We develop and evaluate four ML models and compare them with a traditional rule-based method that does not require ground truth, in order to assess the value of the labeling process. This comparison is important for applying the method to other crop-diverse regions.

The double-cropping monitoring products that result from this research should have broad global relevance, as cropping intensity is a global issue connected to food security and water-resource management, and there is interest in developing global-scale products [14]. Such products can inform many policy questions, for example, those related to meeting several of the UN 2030 Sustainable Development Goals [26], for which remote sensing applications are expected to be important [27]. Additionally, insights from this case study can be applied more broadly to developing land-use datasets where building ground-truth datasets is prohibitively expensive, a challenge faced across the globe.

2. Methods and Data

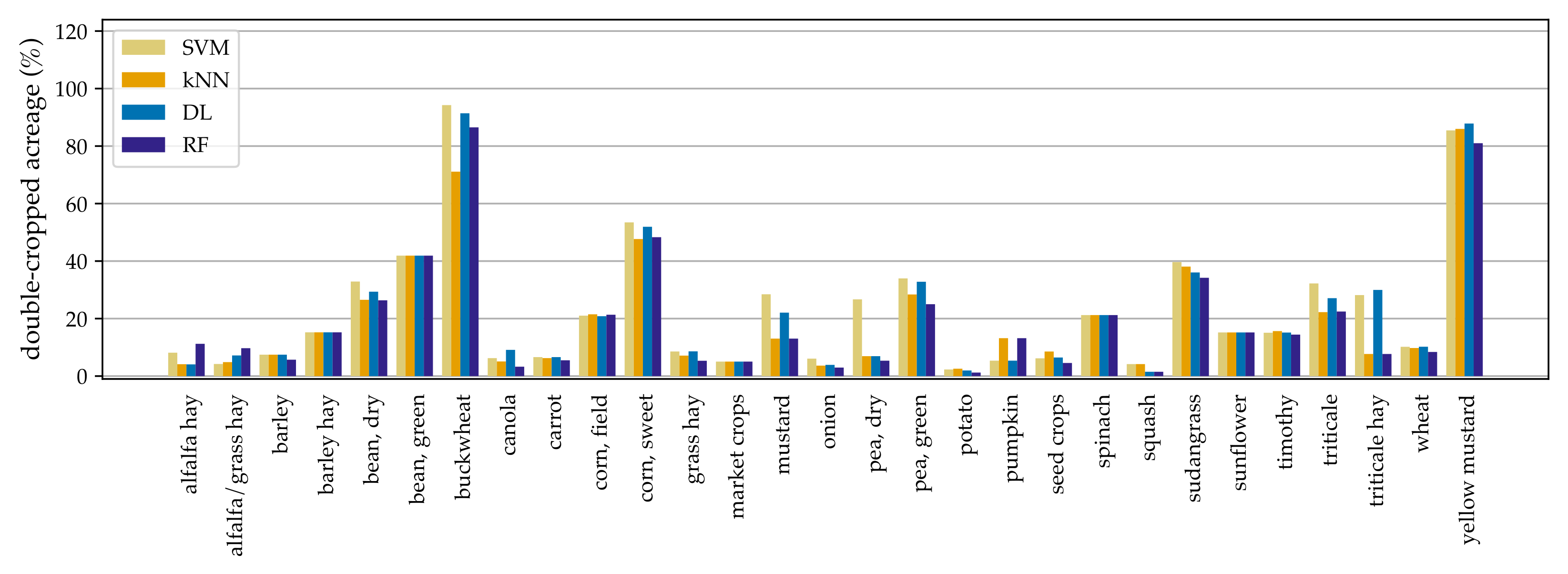

The overall workflow components described in this study are summarized in Figure 2. We first compute the field-scale smoothed NDVI time-series. These data, along with some additional information, are provided to the expert labeling process that creates the labeled ground-truth dataset. The additional information includes the field’s location on Google Maps (so the experts know which field they are labeling), the date of data recording, the irrigation type, and the field’s NDVI in the years before and after the target year, which gives a better picture of farming practices such as fall planting in the prior year or crop rotation. Classification models are then trained on the NDVI data and evaluated on the test set. Crop-specific summaries are also created for the test set. Finally, the models are applied over the full study area to obtain annual spatially explicit maps of double-cropping.

Our analysis is conducted at the field level rather than the pixel level, because the field is the decision scale and because pixel-level analysis has challenges related to spatial correlation and within-field differences in class across pixels [28]. Our approach therefore falls under the class of object-based analysis.

2.1. Input Datasets

The input data consist of a time-series of a commonly used VI, the Normalized Difference Vegetation Index (NDVI) [29,30], generated from Landsat 7 and 8 multi-spectral observations at a 30 m spatial resolution from 2015 to 2018 (Table 1). We did not harmonize the Landsat 7 and 8 data. While [31] demonstrated a bias of about 5% between these datasets, we are interested in the overall shape of the curve, and such biases would be smoothed out by the data processing described below. With two concurrent missions, each with a 16-day revisit and staggered relative to each other, we obtain a combined temporal resolution of ∼8 days, which is important for capturing the quick succession of harvest and planting cycles in double-cropped systems. A field-scale NDVI time-series is obtained by averaging values across all pixels within a field. More details of the time-series construction are provided in Section 2.3. We also explored the Enhanced Vegetation Index (EVI), which addresses saturation issues with NDVI, but found no improvement in performance, so we do not report results with this index in this paper.
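A minimal NumPy sketch of the field-scale averaging and of combining the two Landsat series. It assumes that per-pixel NDVI for one field on one date and a boolean cloud flag per pixel are already available, and that dates are day-of-year integers; the helper names `field_mean_ndvi` and `merge_sensors` are hypothetical. Dates with no clear pixel yield NaN and are dropped when the two sensors' series are interleaved.

```python
import numpy as np


def field_mean_ndvi(pixel_ndvi, cloudy):
    """Average NDVI over the clear pixels of one field on one date.

    pixel_ndvi: NDVI values of the pixels inside the field boundary.
    cloudy: booleans of the same length, True where a pixel is flagged.
    Returns NaN if no clear pixel remains.
    """
    values = np.asarray(pixel_ndvi, dtype=float)
    clear = ~np.asarray(cloudy, dtype=bool)
    if not clear.any():
        return float("nan")
    return float(values[clear].mean())


def merge_sensors(dates_a, values_a, dates_b, values_b):
    """Interleave two sensors' field-mean series by date, dropping NaN dates."""
    dates = np.concatenate([np.asarray(dates_a), np.asarray(dates_b)])
    values = np.concatenate([np.asarray(values_a, dtype=float),
                             np.asarray(values_b, dtype=float)])
    keep = ~np.isnan(values)
    order = np.argsort(dates[keep], kind="stable")
    return dates[keep][order], values[keep][order]
```

For example, Landsat 7 dates 1, 17, 33 and Landsat 8 dates 9, 25, 41 interleave into an 8-day sequence, which is the combined revisit described above.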

Given that our model development and application are intended for the field scale, we use the Washington State Department of Agriculture’s (WSDA) Agricultural Landuse Geodatabase [32] to identify fields for our ground-truth data and broader mapping. This dataset was created via drive-by surveys by WSDA covering approximately a third of the state each year; that is, each section of the study area (Figure 1) was surveyed at least once over a three-year period. Each year’s dataset includes the field boundary, crop type, irrigation system, and survey date. The recorded crop type is only the main crop observed at the time of the annual drive-by survey. Information on double-cropping is therefore not present in this dataset, as it would require multiple drive-by passes of the same field each year.

To create a ground-truth set, we consider only fields that were surveyed in the year under consideration, so that the field boundaries and crop types are accurate (see Section 2.2 for the labeling process). We used data from 2015 to 2018, which resulted in 44,850 fields. Fields smaller than 10 acres were removed from the analysis. Small fields can suffer from edge effects that result in a noisy VI signal, and field-scale VIs averaged from only a handful of pixels can be biased; a 1-acre field, for example, contains fewer than 5 Landsat pixels. In our study area, field sizes tend to be larger because smallholder farms are largely absent; the excluded fields make up less than 8% of the total area considered and, according to our experts, are rarely double-cropped. Their exclusion should therefore have minimal impact on our analysis. Smallholder farms are common in other parts of the world, however, and when transferring the proposed methods to those regions, the choice of input data source may become critical. There, high-spatial-resolution satellite products, such as Sentinel-2 or commercial satellite imagery, will become important for developing the VI time-series, at the cost of not being able to build cropping-intensity time-series that extend back decades, as Landsat-based products can. We also filtered out crop types with minimal irrigated acreage (less than 2874.57 acres, or 11.63 km²) in the study area. We selected 10% of the fields from each crop for labeling and added fields by crop as necessary to ensure at least 50 fields per crop (where available) in the labeled ground-truth dataset. The fields were sampled from each crop individually using stratified sampling, following the best practices suggested by Stehman and Foody [28].
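The per-crop sampling rule (10% of each crop's fields, topped up to at least 50 where available) can be sketched with pandas as follows. Assumptions: one row per field in a DataFrame with a `crop` column, the 10% share is rounded up, and a fixed random seed is used; the function name is hypothetical, and this simple per-crop random draw does not reproduce every detail of the stratified design recommended by Stehman and Foody [28].

```python
import math

import pandas as pd


def sample_fields_for_labeling(fields, frac=0.10, min_per_crop=50, seed=0):
    """Draw frac of each crop's fields, but at least min_per_crop if available.

    fields: DataFrame with one row per field and a 'crop' column.
    Crops with fewer than min_per_crop fields contribute all of their fields.
    """
    samples = []
    for _, group in fields.groupby("crop", sort=True):
        target = max(math.ceil(frac * len(group)), min_per_crop)
        n = min(target, len(group))  # cannot sample more fields than exist
        samples.append(group.sample(n=n, random_state=seed))
    return pd.concat(samples)
```

As a unit check on the acreage filter above, 2874.57 acres × 4046.86 m² per acre ≈ 11.63 × 10⁶ m², i.e., 11.63 km².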

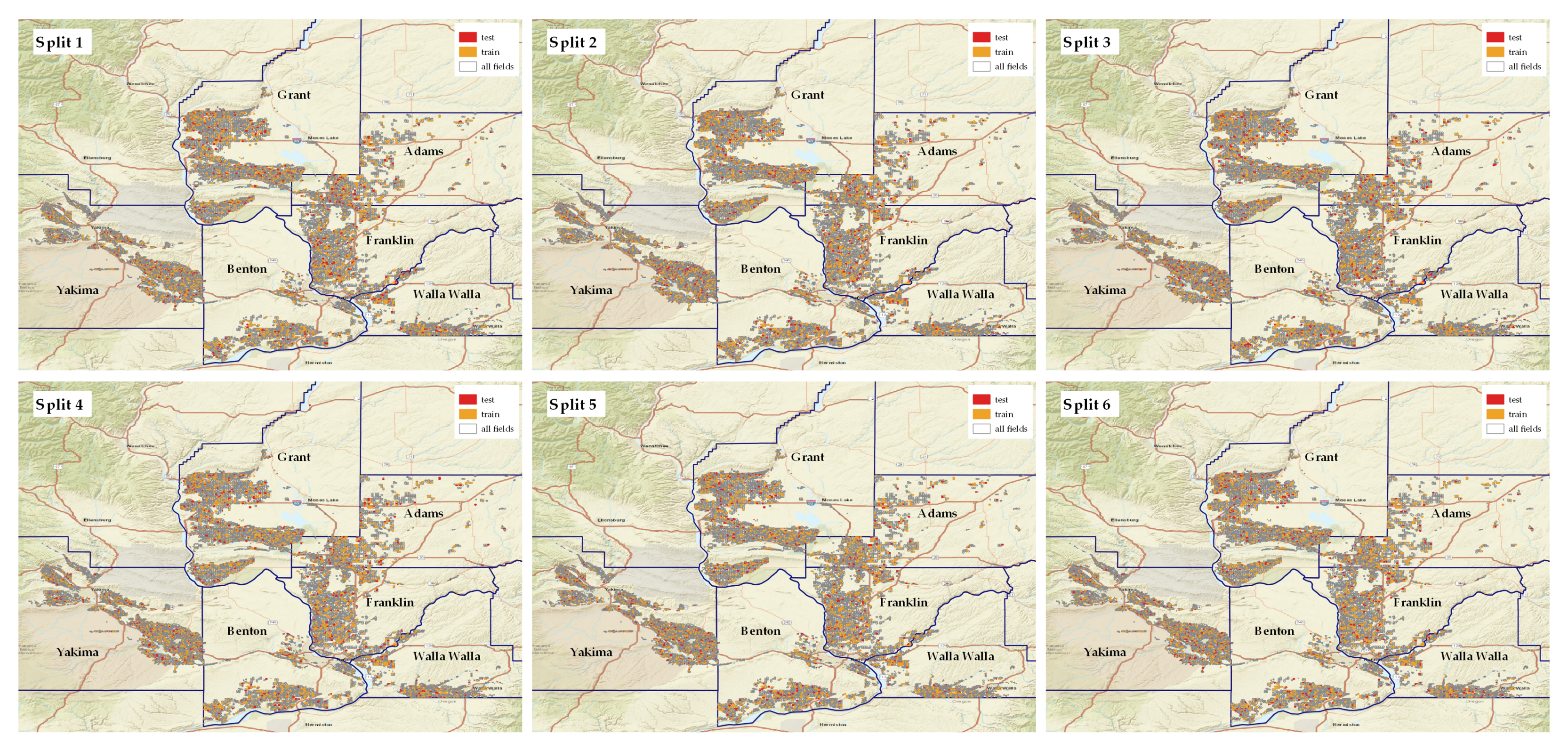

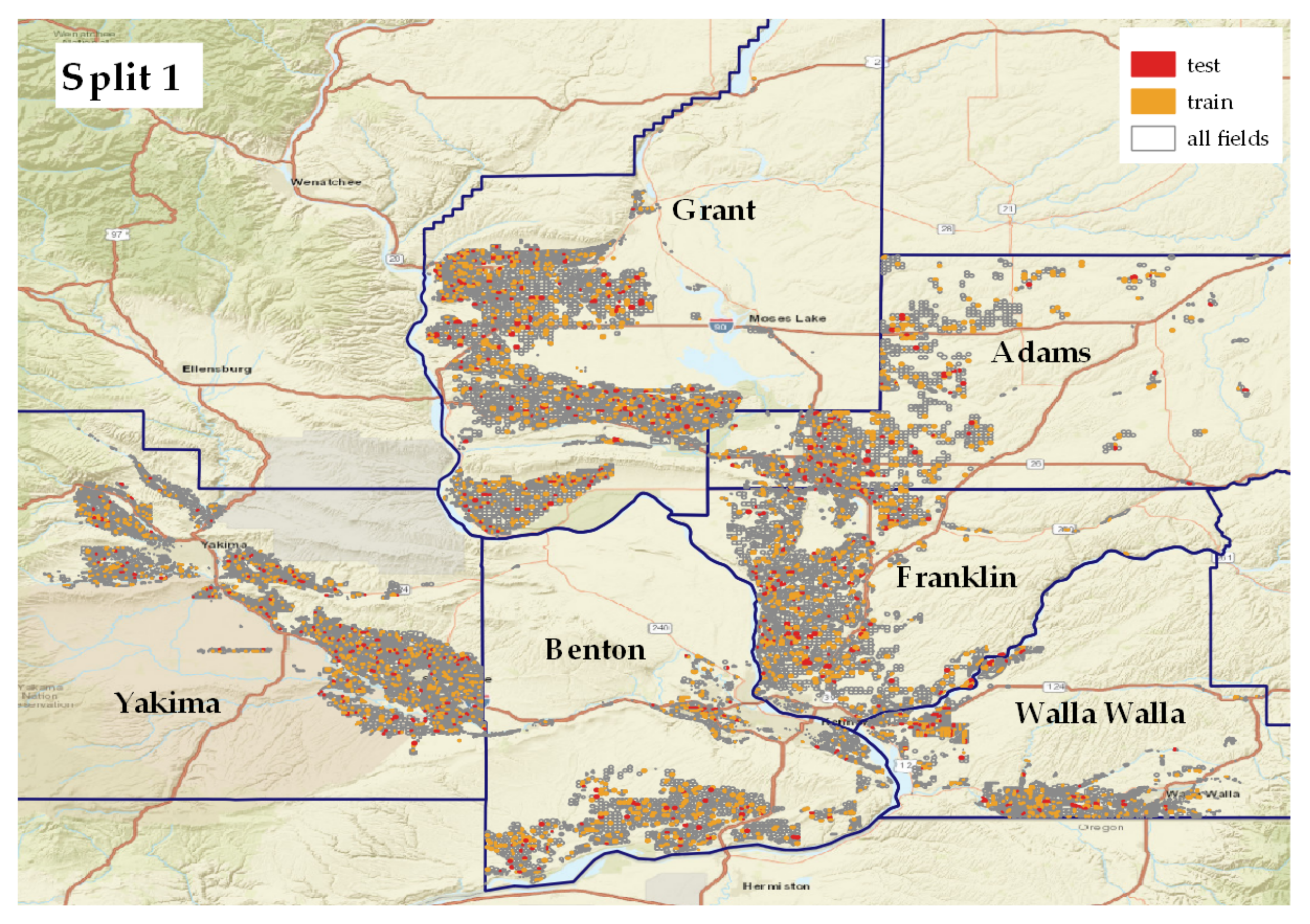

Figure 3 shows the distribution of the ground-truth set. The yellow dots are the fields used for training, and the red dots are the fields in the test set. The fields are distributed throughout the study region. For the final model application, we covered the entire irrigated extent in our counties of interest (Figure 1).

2.2. Expert Labeling Process

We collaborated with three individuals with extensive knowledge of cropping patterns in the study region, gained through considerable time on the ground directly observing production. These individuals are professionals with university extension and governmental agricultural agencies: McGuire and Waters are county extension professionals from our region of interest, and Beale initiated and currently manages WSDA’s agricultural land-use mapping efforts. All of the experts are highly familiar with the fields, cropping practices, and growers in the region. Such experts are present in many agriculturally intensive production regions, which makes our approach repeatable in other areas. Using multiple labelers aligns with the recommendation of [28] that “protocols should be in place to monitor and evaluate the quality of the reference data and results. An example of quality assurance would be to evaluate consistency of reference class labels obtained by different interpreters”, and is practiced by other researchers (e.g., [18]).

We followed a three-step process for interacting with the expert panel. The first step was a meeting that allowed for open-ended discussion to identify the crops and regions most associated with double-cropping, the timing of planting and harvesting of the first and second crops, and potential complications such as cover crops or hay crops that are harvested multiple times in the growing season. Such open-ended meetings are valuable for narrowing the scope of the analysis, although care is needed so that existing, possibly incorrect perceptions do not influence the analysis. In our case, we took a nuanced position on cover crops. While cover crops are generally not considered a double-crop, since they are not harvested, we made an exception for the yellow-mustard cover crop and categorized it as a double-crop, because our interest is in the water footprint. Other cover crops are planted very late in the season and require minimal or no irrigation, whereas yellow mustard is planted early enough to require significant irrigation. A research question focused on the total production of food and fiber, rather than on resource-use intensity, may result in a different categorization. It is also important to recognize that it may not be possible to differentiate a double-crop from a mustard cover crop, given the expected similarities in their VI time-series signatures.

To provide additional context on the types of issues that may be discussed in this phase, the following are examples of topics raised. Certain crops (e.g., buckwheat, beans, peas, sweet corn, seed crops, sudangrass, triticale in some regions, and alfalfa) are more prevalent in double-cropping systems, so knowing the crop type helps the labeling process. Certain irrigation systems (e.g., flood) are not typically seen in double-cropped fields, so the irrigation system is also useful information for labeling. Additionally, double-cropping is prevalent in some areas and among some growers, and a mapped location of the field helps the expert label it by drawing on local knowledge. In a double-cropping system, the second crop is typically planted by early August at the latest; any indication of a second planting much later than that is either a fall-planted crop for the following year or a cover crop, which should be considered a single-crop system for our purposes.

The second phase of the expert panel interaction was asynchronous labeling. For each field, we provided the VI time-series, the crop type, the irrigation system, and a Google Maps link to the field’s location in a Google Form. The form offered five label choices: “single-crop”, “double-crop”, “mustard crop”, “either double- or mustard crop”, and “unsure”. Before finalizing the ground-truth set to be sent to the expert panel, four other team members labeled easy-to-categorize fields, including fields that were clearly single-cropped based on a clean VI time-series as well as perennial tree crops and berries, which we know cannot be double-cropped, and checked for agreement across team members. The remaining observations, which constitute the more difficult cases, were labeled asynchronously by the experts, with each field labeled by at least two experts.

We flagged the fields on which the experts disagreed and resolved the discrepancies during a final synchronous meeting. Some challenging discrepancies could not be resolved; these 15 fields were left as unsure and omitted from our dataset. The final labeled dataset consists of 3160 fields across four years (2015–2018) and covers 63 crops (Table 2) and five counties (Table 1). Note that the labeling process was guided by more information than the VI time-series alone, whereas the VI time-series is the only training input to the models.
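The agreement check before the synchronous meeting can be sketched as below: fields with at least two identical labels are accepted, and all other fields are flagged for discussion. The data layout (a list of `(field_id, expert, label)` tuples) and the function name are hypothetical choices for illustration.

```python
from collections import defaultdict


def split_by_agreement(labels):
    """Separate fields with unanimous expert labels from those needing review.

    labels: iterable of (field_id, expert, label) tuples.
    Returns (agreed, flagged): agreed maps field_id -> label; flagged maps
    field_id -> sorted list of the distinct labels given.
    """
    by_field = defaultdict(list)
    for field_id, _expert, label in labels:
        by_field[field_id].append(label)
    agreed, flagged = {}, {}
    for field_id, given in by_field.items():
        distinct = set(given)
        if len(given) >= 2 and len(distinct) == 1:
            agreed[field_id] = given[0]
        else:
            # Disagreement, or fewer than two labels: resolve in the meeting.
            flagged[field_id] = sorted(distinct)
    return agreed, flagged
```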

2.3. Vegetation Index Time-Series

Crops have distinct spectral reflectance signatures related to their growth and phenological stages. In remote sensing applications, these are typically captured via VIs, which reflect the growth condition, phenology, and canopy cover of plants as the season progresses. While many VIs exist, a commonly used one in agricultural contexts is the NDVI [11,13,33], which is calculated from surface reflectances in the red and near-infrared (NIR) bands:

$$\mathrm{NDVI} = \frac{\rho_{\mathrm{NIR}} - \rho_{\mathrm{red}}}{\rho_{\mathrm{NIR}} + \rho_{\mathrm{red}}}, \tag{1}$$

where $\rho_{\mathrm{NIR}}$ and $\rho_{\mathrm{red}}$ are the NIR and red reflectances. NDVI steadily increases after planting and decreases with senescence leading up to harvest. We used Google Earth Engine (GEE), a geospatial processing platform that provides access to a large suite of publicly available satellite imagery, to obtain field-scale NDVI time-series. We used Landsat Level 2, Collection 2, Tier 1 products (LE07/C02/T1_L2 [34] and LC08/C02/T1_L2 [35]) with atmospherically corrected surface reflectance bands.
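A NumPy sketch of Equation (1) applied to raw Landsat Collection 2 Level-2 surface reflectance values. It assumes the integer band values are rescaled with the Collection 2 surface-reflectance scale factor (0.0000275) and offset (−0.2), and that the red/NIR bands are SR_B3/SR_B4 for Landsat 7 and SR_B4/SR_B5 for Landsat 8. Because of the additive offset, the rescaling does not cancel in the ratio and must be applied first. The function name is illustrative.

```python
import numpy as np

# Landsat Collection 2 Level-2 surface reflectance rescaling (assumed here).
SR_SCALE = 0.0000275
SR_OFFSET = -0.2

# Red and near-infrared band names per sensor.
BANDS = {"LE07": ("SR_B3", "SR_B4"), "LC08": ("SR_B4", "SR_B5")}


def ndvi_from_dn(red_dn, nir_dn):
    """Compute NDVI (Equation (1)) from raw Collection 2 Level-2 integer values."""
    red = np.asarray(red_dn, dtype=float) * SR_SCALE + SR_OFFSET
    nir = np.asarray(nir_dn, dtype=float) * SR_SCALE + SR_OFFSET
    denom = nir + red
    # Pixels with a zero denominator get NaN instead of raising warnings.
    with np.errstate(divide="ignore", invalid="ignore"):
        ndvi = np.where(denom != 0, (nir - red) / denom, np.nan)
    return ndvi
```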

Satellite images are negatively affected by adverse conditions such as clouds or snow, which typically lead to lower NDVI values. As part of data collection from GEE, we remove cloudy pixels and then compute the average field-scale NDVI using the remaining clear pixels. Averaging across pixels is standard practice [36,37]. After fetching the data, we denoise the time-series to smooth out bumps and kinks that could throw off the detection of crop growth cycles. There are numerous smoothing methods, including weighted moving averages, Fourier and wavelet techniques, asymmetric Gaussian function fitting, and double logistic techniques; for comparisons of these methods, see [38,39,40,41,42]. Their performance depends on the data, the vegetation type, the data source, and the task at hand [39,40]. We use the Savitzky-Golay (SG) filter [39,43], a commonly used method that fits a polynomial regression within a moving window. The level of smoothness can be adjusted by varying the polynomial degree and the window size.

NDVI varies between −1 and 1; positive values indicate greenness, and negative values represent a lack of vegetation.

The following steps are performed at the field scale for denoising:

- 1.

Correct big jumps. Crop growth, and consequently the temporal pattern of greenness, cannot increase abruptly within a short period of time. Therefore, if NDVI increases too quickly from time t to t+1, a correction is required. In such cases, we assume that NDVI at time t is negatively affected, and we replace it via linear interpolation. We capped the allowed NDVI growth per day at a fixed threshold, chosen so that going from planting to peak canopy with high NDVI takes at least a month.

- 2.

Set negative NDVIs to zero. Negative NDVI values indicate a lack of vegetation, and in our scenario their magnitude is irrelevant. Negative NDVIs with large magnitudes adversely affect the NDVI-ratio classification method, so negative values are set to zero. For example, suppose there is a single erroneous NDVI value that is negative and large in magnitude, e.g., −0.9, while all other values are positive. The NDVI-ratio method, which works with normalized NDVI values, will then have a large denominator, and this single point will affect the standardized values of all other points. In this scenario, the NDVI-ratio at the trough is pushed up, which leads to missing a mid-year harvest and re-planting.

- 3.

Regularization. In this step, we regularize the time-series so that the data points are equidistant. In every 10-day period, we pick the maximum NDVI as representative of those 10 days. The maximum is chosen because NDVI is negatively affected by poor atmospheric conditions [44,45]. We use a 10-day regularization window, as is common in the literature (e.g., [44,46,47]).

- 4.

Smooth the time-series. As the last step, the SG filter is applied to smooth the time-series further. SG filtering is a local method that fits the data with a polynomial using least squares. The parameters used in this study are a polynomial degree of 3 and a moving window size of 7; i.e., a third-degree polynomial is fitted to seven consecutive data points.
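The four denoising steps above can be sketched with NumPy and SciPy's `savgol_filter`. This is a minimal illustration under stated assumptions: dates are day-of-year integers, the per-day growth cap `max_daily_increase=0.02` is a placeholder rather than the value used in the study, 10-day bins start on day 1, and empty bins are filled by linear interpolation before smoothing. Only the SG parameters (degree 3, window 7) come from the text.

```python
import numpy as np
from scipy.signal import savgol_filter


def correct_big_jumps(days, ndvi, max_daily_increase):
    """Step 1: if NDVI rises too fast from t to t+1, interpolate NDVI(t)."""
    days = np.asarray(days, dtype=float)
    ndvi = np.asarray(ndvi, dtype=float).copy()
    for t in range(1, len(ndvi) - 1):
        rate = (ndvi[t + 1] - ndvi[t]) / (days[t + 1] - days[t])
        if rate > max_daily_increase:
            # Treat NDVI(t) as depressed; interpolate between t-1 and t+1.
            w = (days[t] - days[t - 1]) / (days[t + 1] - days[t - 1])
            ndvi[t] = (1 - w) * ndvi[t - 1] + w * ndvi[t + 1]
    return ndvi


def regularize_max(days, ndvi, window=10, n_days=365):
    """Step 3: keep the maximum NDVI in each window-day bin; fill empty bins."""
    days = np.asarray(days)
    ndvi = np.asarray(ndvi, dtype=float)
    n_bins = int(np.ceil(n_days / window))
    bins = (days - 1) // window
    out = np.full(n_bins, np.nan)
    for b in range(n_bins):
        in_bin = ndvi[bins == b]
        if in_bin.size:
            out[b] = in_bin.max()
    # Assumed gap filling: linear interpolation, edges held constant.
    ok = ~np.isnan(out)
    idx = np.arange(n_bins)
    out[~ok] = np.interp(idx[~ok], idx[ok], out[ok])
    return out


def denoise(days, ndvi, max_daily_increase=0.02):
    """Apply steps 1-4 to one field-year (max_daily_increase is a placeholder)."""
    x = correct_big_jumps(days, ndvi, max_daily_increase)
    x = np.maximum(x, 0.0)  # Step 2: negative NDVI -> 0
    x = regularize_max(days, x)  # Step 3: 10-day maxima
    return savgol_filter(x, window_length=7, polyorder=3)  # Step 4: SG filter
```

Step 1 makes a single pass, so a run of several consecutive depressed values is only partially corrected; a production version might iterate until no jump exceeds the cap.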

2.4. Models

With the labeled data and VI time-series, we develop and evaluate five models to classify fields as single- or double-cropped: the NDVI-ratio method used in prior related works [11,14] and four ML models, namely random forest (RF), support vector machine (SVM), k-nearest neighbors (kNN), and deep learning (DL).

Train and test datasets. A split stratified by crop (see Section 2.1) is applied to the ground-truth set six times to obtain six sets, each containing 80% training and 20% testing subsets. Within the 80% training data, 5-fold cross-validation was used to train the ML models.
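A minimal NumPy sketch of the six repeated crop-stratified 80/20 splits. It assumes one crop label per labeled field and draws 20% of each crop (rounded to the nearest integer) for testing in every repetition; the function name, rounding rule, and seed handling are hypothetical, and the study's exact splitting code may differ.

```python
import numpy as np


def stratified_splits(crops, n_splits=6, test_frac=0.2, seed=0):
    """Yield (train_idx, test_idx) pairs, sampling test_frac of each crop."""
    crops = np.asarray(crops)
    rng = np.random.default_rng(seed)
    for _ in range(n_splits):
        test_parts = []
        for crop in np.unique(crops):
            idx = np.flatnonzero(crops == crop)
            n_test = int(round(test_frac * idx.size))
            test_parts.append(rng.choice(idx, size=n_test, replace=False))
        test_idx = np.sort(np.concatenate(test_parts))
        # Every field not drawn for testing goes to the training subset.
        train_idx = np.setdiff1d(np.arange(crops.size), test_idx)
        yield train_idx, test_idx
```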

NDVI-ratio method. One widely used approach for detecting the start of season (SOS) and end of season (EOS) is the so-called NDVI-ratio method of White et al. [48]. The NDVI-ratio is defined by

$$\mathrm{NDVI}_{\mathrm{ratio}} = \frac{\mathrm{NDVI} - \mathrm{NDVI}_{\min}}{\mathrm{NDVI}_{\max} - \mathrm{NDVI}_{\min}},$$

where $\mathrm{NDVI}$ is the NDVI at a given time, and $\mathrm{NDVI}_{\min}$ and $\mathrm{NDVI}_{\max}$ are the minimum and maximum of NDVI over a year, respectively. When this ratio crosses a given threshold [11,14], an SOS or EOS event has occurred close to the crossing. One pair of (SOS, EOS) events in a season indicates single-cropping, and two pairs indicate double-cropping. The following rules are applied in the NDVI-ratio method:

- 1.

If the range of NDVI from May through October (inclusive) is less than or equal to 0.3, the field is labeled as single-cropped. This rule was motivated by the low, flat VI time-series exhibited by orchards during visual inspection of the time-series plots.

- 2.

Determine SOS and EOS by the NDVI-ratio method.

- 3.

If an SOS is detected with no corresponding EOS in a given year, we nullify the SOS; this occurs, for example, for winter wheat. Similarly, if we detect one EOS during the year with no corresponding SOS, we drop the EOS and count the year as a single cropping cycle; an example is winter wheat planted in the previous year.

- 4.

A growing cycle cannot be less than 40 days.
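A simplified sketch of rules 1 to 4 for one field-year of regularly spaced NDVI. Assumptions: the crossing threshold (0.5 here) is a placeholder, since the value used in [11,14] is not restated above; upward crossings are taken as SOS and downward crossings as EOS, each dated at the first sample past the crossing; and May to October is approximated by day-of-year 121 to 304 (a non-leap year). The pairing logic is deliberately simple and is not the authors' implementation.

```python
import numpy as np


def ndvi_ratio_label(days, ndvi, threshold=0.5, min_cycle_days=40,
                     flat_range=0.3, window=(121, 304)):
    """Label one field-year 'single' or 'double' with simplified NDVI-ratio rules."""
    days = np.asarray(days, dtype=float)
    ndvi = np.asarray(ndvi, dtype=float)

    # Rule 1: a low, flat May-October curve (e.g., orchards) is single-cropped.
    in_window = (days >= window[0]) & (days <= window[1])
    season = ndvi[in_window]
    if season.size == 0 or season.max() - season.min() <= flat_range:
        return "single"

    # Rule 2: NDVI-ratio and threshold crossings (up = SOS, down = EOS).
    lo, hi = ndvi.min(), ndvi.max()
    ratio = (ndvi - lo) / (hi - lo)
    above = ratio >= threshold
    events = []
    for t in range(1, len(ratio)):
        if above[t] and not above[t - 1]:
            events.append(("SOS", days[t]))
        elif above[t - 1] and not above[t]:
            events.append(("EOS", days[t]))

    # Rules 3-4: pair each SOS with the next EOS; unpaired events are dropped,
    # and cycles shorter than min_cycle_days are not counted.
    cycles, start = 0, None
    for kind, day in events:
        if kind == "SOS":
            start = day
        elif start is not None:
            if day - start >= min_cycle_days:
                cycles += 1
            start = None
    return "double" if cycles >= 2 else "single"
```

A year with zero or one complete cycle falls through to "single", which mirrors the handling of unpaired SOS and EOS events in rule 3.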

Machine learning models. We build three statistical learning models (SVM, RF, and kNN) as well as a DL model for classification. Because the kNN model computes the distance between two vectors (NDVI at multiple points in time), the vectors need to be comparable. Planting dates may vary by field, so the distance measure should account for time shifts. This is accomplished by using dynamic time warping (DTW) as the distance measure for the kNN model. DTW was introduced by Itakura [49] in a speech recognition application and is now used in a variety of applications, including agriculture [15,50]. It measures the distance between two time-series while accounting for delays that may exist in one of them; it warps time to match the two series so that their peaks and valleys are aligned.
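To illustrate how DTW tolerates shifted planting dates, the sketch below implements the classic dynamic-programming DTW recursion with an absolute-difference cost and a plain majority-vote kNN on top of it. This is a hand-written stand-in, not the dtaidistance implementation used in the study (whose default cost and options may differ); the function names and `k=3` are illustrative.

```python
import numpy as np


def dtw_distance(a, b):
    """Classic dynamic time warping distance with absolute-difference cost."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: diagonal match, step in a, step in b.
            cost[i, j] = d + min(cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1])
    return cost[n, m]


def knn_dtw_predict(train_series, train_labels, query, k=3):
    """Majority vote among the k training series closest to query under DTW."""
    dists = np.array([dtw_distance(s, query) for s in train_series])
    nearest = np.argsort(dists, kind="stable")[:k]
    labels, counts = np.unique(np.asarray(train_labels)[nearest], return_counts=True)
    return labels[np.argmax(counts)]
```

For example, `[0, 0, 1, 2, 1, 0, 0]` and `[0, 1, 2, 1, 0, 0, 0]` (the same curve shifted by one step) have a DTW distance of 0, whereas their point-by-point Euclidean distance is not zero.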

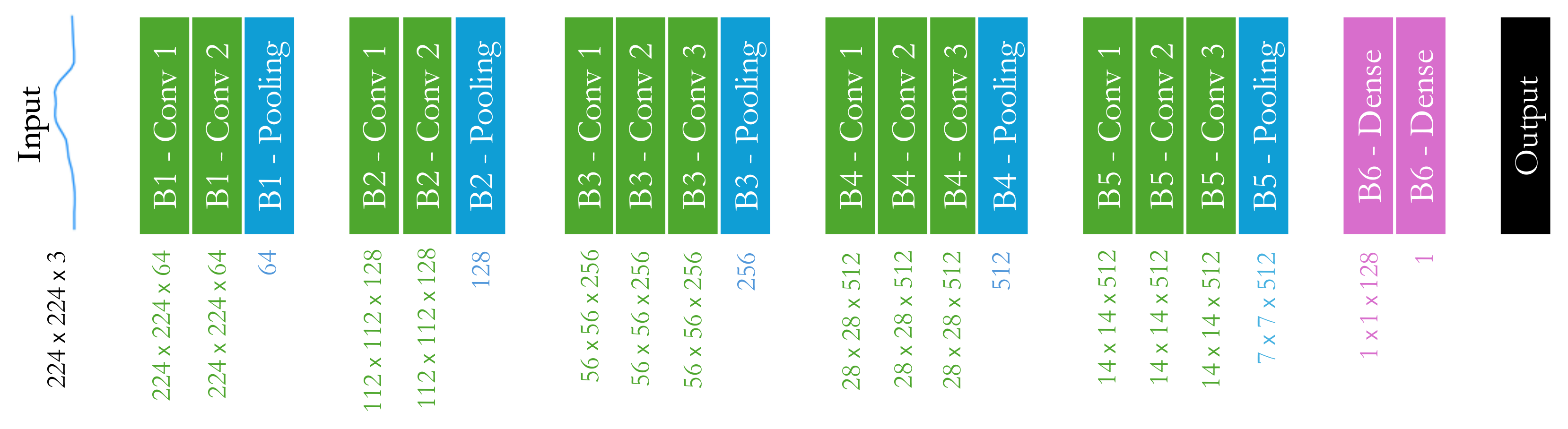

For DL, we use the pre-trained VGG16 model provided by Keras and train only the last layer, to reduce overfitting. While the SVM, RF, and kNN models take a vector of NDVI values as input, the DL model takes images of NDVI time-series as input, so our DL approach falls under the category of image classification. Although time-series data are not usually analyzed in this manner [51], this representation can capture nuances in the shape of the time-series that other models may miss, potentially resulting in better performance.
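The key preprocessing idea, turning a time-series into an image, can be sketched as a simple rasterizer that draws the NDVI curve as a white line on a black 224 × 224 × 3 canvas (224 × 224 is the default VGG16 input size in Keras). The rendering choices here (line color, no axes, fixed 0 to 1 NDVI range, densified sampling to reduce gaps between plotted points) are hypothetical; the study's actual plotting settings are not described in this section.

```python
import numpy as np


def series_to_image(ndvi, size=224, ndvi_range=(0.0, 1.0)):
    """Rasterize an NDVI curve (at least two points) into a (size, size, 3) image."""
    lo, hi = ndvi_range
    y = np.clip(np.asarray(ndvi, dtype=float), lo, hi)
    # Densify the curve to reduce gaps between consecutive plotted points.
    x_dense = np.linspace(0, len(y) - 1, size * 4)
    y_dense = np.interp(x_dense, np.arange(len(y)), y)
    cols = np.round(x_dense / (len(y) - 1) * (size - 1)).astype(int)
    rows = np.round((hi - y_dense) / (hi - lo) * (size - 1)).astype(int)
    img = np.zeros((size, size, 3), dtype=np.uint8)
    img[rows, cols, :] = 255  # NDVI = hi maps to the top row, lo to the bottom
    return img
```

Such arrays could then be passed through `tf.keras.applications.vgg16.preprocess_input` and fed to a frozen `VGG16(include_top=False, weights="imagenet")` base with a small trainable classification head, which is one way to realize the "train only the last layer" setup described above.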

Because there are fewer double-cropped than single-cropped fields, we oversampled the double-cropped instances (the minority class) to address the class imbalance in the dataset. Oversampling was performed by first identifying the number of majority-class instances in the training set and then sampling (with replacement) from the minority class until its size reached a specified fraction of the majority class. The only tunable parameter in this process is the size ratio (the size of the minority class relative to that of the majority class). In our case, the optimal choice was 50%; oversampling beyond 50% led to lower performance. The underlying problem is not the imbalance itself but that each class invades the feature space of the other [52,53], and excessive oversampling lowered performance in our case. Note that there is no oversampling in the NDVI-ratio method, since it involves no training and its rules are pre-defined and applied to each field independently of other fields. In ML, however, all data points play a role in determining the shape of the classifier, so oversampling is an attempt to tilt the weight in favor of the minority class.
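A minimal sketch of the oversampling rule with the 50% size ratio. It assumes integer or string class labels in `y`, keeps all original minority rows, and draws only the additional rows with replacement; whether the original pipeline retained the original minority rows in exactly this way is an assumption, and the function name is hypothetical.

```python
import numpy as np


def oversample_minority(X, y, minority_label=1, ratio=0.5, seed=0):
    """Grow the minority class (with replacement) to ratio * majority size.

    Original rows are kept; only the extra minority rows are resampled.
    """
    X = np.asarray(X)
    y = np.asarray(y)
    rng = np.random.default_rng(seed)
    minority = np.flatnonzero(y == minority_label)
    majority = np.flatnonzero(y != minority_label)
    target = int(round(ratio * majority.size))
    extra = max(0, target - minority.size)
    if extra == 0 or minority.size == 0:
        return X, y
    added = rng.choice(minority, size=extra, replace=True)
    keep = np.concatenate([np.arange(y.size), added])
    return X[keep], y[keep]
```

Applied only to the training portion of each split, this leaves the test sets untouched, so the reported accuracies are computed on the original class balance.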

The training process was optimized using 5-fold cross-validation; details of the search space (the parameter values considered) are in Appendix A.1. The RF, SVM, and kNN models were implemented using the Python (v3.9.16) scikit-learn package (v1.2.1). For the DTW metric used in kNN, dtaidistance v2.3.9 was used. For DL, we used TensorFlow (v2.9.1) and Keras (v2.9.0). Data processing and visualization were performed using multiple Python packages. Scripts are accessible at https://github.com/HNoorazar/NASA_WWAO_DoubleCropping/tree/main.
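As an illustration of 5-fold cross-validated tuning with overall accuracy as the selection criterion, the sketch below uses scikit-learn's `GridSearchCV` with a `StratifiedKFold` splitter for the RF model. The search grid shown is hypothetical and much smaller than a realistic one; the grid actually used is listed in Appendix A.1.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Hypothetical search space for illustration only (see Appendix A.1).
PARAM_GRID = {"n_estimators": [50, 100], "max_depth": [None, 5]}


def tune_random_forest(X_train, y_train, seed=0):
    """5-fold cross-validated grid search that selects by overall accuracy."""
    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=seed)
    search = GridSearchCV(
        RandomForestClassifier(random_state=seed),
        param_grid=PARAM_GRID,
        scoring="accuracy",  # overall accuracy (OA)
        cv=cv,
    )
    search.fit(X_train, y_train)  # refits the best setting on all training data
    return search.best_estimator_, search.best_params_
```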

Accuracy assessment. Three accuracy metrics are used and presented in this study. Overall accuracy (OA) is used during training and for optimizing the parameters and hyperparameters. For the testing phase, we report count-based overall, user’s, and producer’s accuracies (OA, UA, PA), as well as their standard errors (SEs), following the methods described in [54], which account for the sampling strategy (e.g., stratification).

Unlike OA, UA and PA provide information on the errors associated with each specific class. The PA helps identify consistent under-representation of a class on a map (omission errors), while the UA indicates whether other classes are consistently mislabeled as that class (commission errors). For the double-cropped class, the PA is the fraction of truly double-cropped fields that were predicted as double-cropped, and the UA is the fraction of fields predicted as double-cropped that were actually double-cropped.
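The count-based definitions above reduce to simple ratios of confusion-matrix counts for the double-cropped class: PA = TP / (TP + FN) and UA = TP / (TP + FP). The sketch below computes these unweighted values; it does not apply the stratification weights or the standard errors of the estimators in [54], and the function name and label strings are illustrative.

```python
import numpy as np


def accuracy_metrics(y_true, y_pred, positive="double"):
    """Unweighted count-based OA, UA and PA for the positive class."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = np.sum((y_pred == positive) & (y_true == positive))
    fp = np.sum((y_pred == positive) & (y_true != positive))
    fn = np.sum((y_pred != positive) & (y_true == positive))
    oa = np.mean(y_true == y_pred)
    ua = tp / (tp + fp) if tp + fp else float("nan")  # share of predicted doubles that are correct
    pa = tp / (tp + fn) if tp + fn else float("nan")  # share of true doubles that are found
    return float(oa), float(ua), float(pa)
```

For instance, with 10 truly double-cropped fields of which 8 are detected, and 4 single-cropped fields wrongly predicted as double-cropped out of 90, PA = 8/10 = 0.8, UA = 8/12 ≈ 0.67, and OA = 94/100 = 0.94.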

Given that we are interested in double-cropped areas, we also report the proportional area-based confusion matrix; however, we are unable to report SEs for it, given that there is no available methodology to address this for object-based classification [28, 55].
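A proportional area-based confusion matrix can be sketched by weighting each labeled field by its area instead of counting it once. The tuple layout `(predicted, reference, area)` and the function name are hypothetical; area units cancel out because every cell is divided by the total labeled area.

```python
def area_confusion_matrix(fields, classes):
    """Proportional area-based confusion matrix (hypothetical helper).

    fields: iterable of (predicted_class, reference_class, area) tuples.
    Returns p where p[i][j] is the share of the total labeled area that is
    mapped as classes[i] and whose reference class is classes[j].
    All cells sum to 1.
    """
    index = {c: k for k, c in enumerate(classes)}
    p = [[0.0] * len(classes) for _ in classes]
    total = 0.0
    for predicted, reference, area in fields:
        p[index[predicted]][index[reference]] += area
        total += area
    return [[cell / total for cell in row] for row in p]
```

Unlike the count-based matrix, a single large misclassified field shifts these proportions more than several small ones.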

4. Discussion

Two lines of evidence indicate that our approach to identifying double- versus single-cropped fields works well and could be extended to other regions with high crop diversity. The first is the high OA and PA of our DL model on the test sets. The second is the qualitative evidence that we capture the overall double-cropped crop types and spatial extent in line with experts’ expectations. Additionally, the DL model has fewer instances of mislabeling double-cropped fields as single-cropped and therefore a higher PA and lower standard errors for the double-cropped class. For some applications, PA might be more important. For example, a policy maker interested in capturing the double-cropping extent to estimate the related water footprint will likely prefer a higher PA.

While the adjusted NDVI-ratio method (with augmented auxiliary algorithms) performed well in identifying double- and triple-cropped fields in another work [11], its performance was poor in our case, with very low user’s accuracy (46–59%) and over-prediction of the double-cropped class. Note that this is not a direct comparison because (a) the geographic area is different; (b) Liu et al. [11] performed a pixel-level analysis, while ours is object-based at the field scale; (c) we exclude cover crops and focus on harvested crops, which is consistent with the definition of multi-cropping from a food-security perspective, and NDVI-ratio methods are unlikely to be able to make this distinction unless a season definition is included; and (d) our methods have a training process that allows the model to learn more nuances in the shapes of the VI time series than a simpler rule-based method can. Therefore, while the difference in performance cannot be attributed solely to the diversity of crops in our region, that diversity likely contributed to it. Each crop has different canopy cover and VI characteristics, likely rendering a single threshold value inadequate for capturing this diversity.
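The threshold argument can be illustrated with a deliberately simplified, hypothetical rule that counts growing cycles as separate runs of NDVI above a fixed fraction of the annual maximum. This is not the NDVI-ratio method of [11] or [14]. It only shows how one fixed threshold behaves differently for crops with different canopy characteristics, and the series in the usage note are synthetic toy values.

```python
def count_cycles(ndvi, ratio=0.5):
    """Hypothetical single-threshold rule, for illustration only.

    Counts growing cycles as separate runs of the series above a threshold
    set at `ratio` times the series' annual maximum.
    """
    threshold = ratio * max(ndvi)
    cycles, above = 0, False
    for value in ndvi:
        if value > threshold and not above:
            cycles += 1  # an upward crossing starts a new cycle
        above = value > threshold
    return cycles
```

For the toy series `[0.1, 0.8, 0.1, 0.7, 0.1]`, `count_cycles` returns 2. For `[0.1, 0.8, 0.2, 0.35, 0.1]`, where the second crop has a sparser canopy, it returns 1 with the default `ratio=0.5` but 2 with `ratio=0.4`, so whether the field is called double-cropped hinges on the chosen threshold.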

A more direct comparison is possible with a recent global, spatially explicit cropping-intensity product at 30 m resolution [14]. We overlaid the global product with our study region and identified the overlapping area for comparison. Given the significant missing data in the global product for one of our counties of interest (Yakima County), we excluded that county from the comparison. We aggregated the pixel-level cropping intensity from [14] to our field level to obtain the dominant intensity class of each field. The resulting multi-cropped extent (primarily double-cropped) was ≈1015.76k, compared to our best DL model’s estimate of ≈437.06k. Therefore, while the global product from [14] is expected to be a conservative estimate in general, we find that it may be significantly overestimating the cropping intensity in our irrigated study area, and perhaps across the entire irrigated extent of the western US. We hypothesize that this could be due to several reasons. First, the methodology of [14] is based on an NDVI-ratio process, which, as we demonstrated, does not work well and overestimates double-cropping in our highly diverse cropping environment. Second, our comparison highlighted that the product in [14] seems to have erroneously classified a significant acreage of perennial crops (e.g., tree fruit, berries, alfalfa) as double-cropped instead of single-cropped. This highlights an opportunity to integrate ML methods, which can be trained to capture additional nuance and regional insights, into global products to enhance their utility, especially in regions such as ours that are not well covered or tested in the cropping-intensity mapping literature.
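The field-level aggregation step can be sketched as a majority vote over the pixels inside each field, followed by summing the area of fields whose dominant intensity is two or more. The input layouts, the tie-breaking rule (the first intensity encountered wins), and the function names are assumptions for illustration; in practice, pixels flagged as missing data would need to be dropped before the vote.

```python
from collections import Counter, defaultdict


def field_dominant_intensity(pixels):
    """Majority-vote aggregation (hypothetical helper).

    pixels: iterable of (field_id, intensity) pairs for valid pixels inside
    each field. Returns {field_id: most frequent intensity}. Ties go to the
    intensity encountered first, as Counter.most_common orders equal counts.
    """
    per_field = defaultdict(Counter)
    for field_id, intensity in pixels:
        per_field[field_id][intensity] += 1
    return {f: counts.most_common(1)[0][0] for f, counts in per_field.items()}


def multi_cropped_area(dominant, field_area):
    """Sum the area of fields whose dominant intensity is 2 or more."""
    return sum(field_area[f] for f, k in dominant.items() if k >= 2)
```

A field-level vote like this discards within-field mixtures, which suits an object-based comparison but means a field with many double-cropped pixels can still count as single-cropped if those pixels are a minority.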

We acknowledge that operationalizing this approach as a global product is more complex than applying rule-based methods, whose simplicity is a strength for broad global-scale applications. However, the benefits of trained models can be substantial in complex contexts. Operationalizing an ML-based global product would require (a) further exploration of ML approaches to cropping-intensity mapping, (b) a concerted effort to curate a global-scale public training dataset, accessible to researchers across the world, that includes relevant metadata about regional cropping systems and crop cycles, and (c) significant coordination to bring in local expertise for labeling. This is not an easy task; in the meantime, as more reliable regional datasets are developed, they could be integrated, where available, into global products that are based on more scalable rule-based methods.

The expert labeling process we describe here is much less burdensome than extensive drive-by surveys for land-use labeling. The challenge is in identifying the right set of local experts for the application under consideration. At least in the agricultural context of the US, the Land Grant University County Extension system has an established network of local experts that are familiar with the area, the fields, the growers, and their practices and are an excellent source of knowledge that should be leveraged more in developing labeled datasets that can aid remote sensing applications. While the precision of a drive-by survey may not be achievable, useful information for informing policy can be developed as demonstrated in this work.

One aspect we have not thoroughly evaluated is the temporal scalability of the results. Typically, this would be evaluated with a leave-one-year-out experiment. However, in our case, the dataset spans different regions in different years, making it difficult to isolate temporal scalability from spatial variability. The relatively limited size of our ground-truth dataset poses further constraints. That said, we do not anticipate major issues with generalizing the model across years unless there are extreme climatic anomalies, such as unusually warm or cold years, that significantly alter crop phenology. Such shifts could affect key assumptions in our ground-truth labeling, particularly regarding the planting dates that distinguish double-cropping from cover-cropping. In addition, under long-term climate trends, non-stationarity may gradually alter phenology. However, such shifts typically occur over several decades and generally result in changes on the order of weeks, which affect our assumptions less. Given this, we believe the model should remain applicable across the past few decades and into the near future, but temporal scalability issues should be borne in mind in applications.
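A leave-one-year-out experiment amounts to grouped cross-validation with the survey year as the group label. The sketch below generates such splits in plain Python (scikit-learn's `LeaveOneGroupOut` offers equivalent splits); the function name is hypothetical. As noted above, in this dataset each held-out year would also largely hold out a region, so these splits would mix temporal and spatial effects.

```python
def leave_one_group_out(groups):
    """Yield (held_out_group, train_indices, test_indices) for each distinct
    group label, e.g. the survey year of every labeled field
    (hypothetical helper)."""
    for held_out in sorted(set(groups)):
        train = [i for i, g in enumerate(groups) if g != held_out]
        test = [i for i, g in enumerate(groups) if g == held_out]
        yield held_out, train, test
```

Each split trains on all other years and tests on the held-out one, so no field from the test year leaks into training.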

This method has a number of potential extensions and applications. The first is extending the geographic scope of this work. While the model we have developed is publicly shared and can be directly applied, some additional labeling and retraining would likely be necessary, especially for regions where the cropping intensity can be larger than two crops in a year. Even in this scenario, our DL model can be used for transfer-learning approaches, which can shorten the training time. The models can be continually retrained and refined as new labeled data are created. While our ground-truth data collection and model development efforts focused on irrigated areas, the method is generic and should work for dryland regions as well, though this will need to be tested. Additionally, while our model development and application were at a field scale, which is the scale of decisions, the method can be directly applied at a pixel scale as well in areas where field boundary data are not easily available.

The second is to apply the proposed method to develop a historical time series of double-cropped extent. This would allow a trend analysis that could be used to identify drivers of increased or decreased double-cropping, including changing climatic patterns and agricultural commodity market conditions [58]. Further analysis would be needed to isolate the climatic drivers of trends, but the methods and dataset developed here are an important first step in generating data that will facilitate these analyses. Climatic relationships, once established, can be extrapolated in a climate-change context to quantify the potential for increased double-cropping in the future. When coupled with a cross-sectional analysis of historical double-cropping trends across diverse regions, climatic thresholds at which double-cropping becomes infeasible (e.g., because the summers are too hot to double-crop) can also be identified. This will provide a realistic upper bound on the potential for increased double-cropping.
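As a minimal illustration of the trend analysis, the ordinary least-squares slope of double-cropped extent against year can be computed as below. The function is hypothetical, and a real analysis would also need significance testing and attention to autocorrelation and confounding drivers.

```python
def linear_trend(years, values):
    """Ordinary least-squares slope of values against years, i.e. the
    average change in double-cropped extent per year (hypothetical helper).
    Requires at least two distinct years."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    return sxy / sxx
```

For toy inputs `[2000, 2001, 2002]` and `[10.0, 12.0, 14.0]`, the slope is 2.0 units per year.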

Finally, from a water-footprint perspective, this spatially explicit dataset can be a valuable input to crop models (e.g., VIC-CropSyst [59]) to quantify irrigation demands under current and future conditions of double-cropping adoption. Existing studies on climate-change impacts on irrigation demands do not account for double-cropping, and this addition will address an important missing component in the climate-change-impacts literature. This can inform the planning efforts of various stakeholders, including irrigation districts planning for infrastructure management based on season length, water rights management agencies planning for change requests, and water management agencies planning to minimize scarcity issues or adjust reservoir operations.

There are several other policy questions and trade-offs that can be informed by utilizing such spatially explicit datasets (along with other data and models). For example, how much of the growing global food demand can multi-cropping meet? Are the environmental outcomes of increased food production through intensification on existing land better or worse than the outcomes of expanding agricultural land? What are the impacts of fertilizer inputs on the land, or on carbon sequestration potential? As stewards of our natural resources, it is important for us to address the broad range of trade-offs that accompany any land-use change trends and equip ourselves with datasets and monitoring platforms that help address them.