Abstract

Plant disease segmentation has achieved significant progress with the help of artificial intelligence. However, deploying high-accuracy segmentation models in resource-limited settings faces three key challenges, as follows: (A) Traditional dense attention mechanisms incur quadratic computational complexity growth (O()), rendering them ill-suited for low-power hardware. (B) Naturally sparse spatial distributions and large-scale variations in the lesions on leaves necessitate models that concurrently capture long-range dependencies and local details. (C) Complex backgrounds and variable lighting in field images often induce segmentation errors. To address these challenges, we propose Sparse-MoE-SAM, an efficient framework based on an enhanced Segment Anything Model (SAM). This deep learning framework integrates sparse attention mechanisms with a two-stage mixture of experts (MoE) decoder. The sparse attention dynamically activates key channels aligned with lesion sparsity patterns, reducing self-attention complexity while preserving long-range context. Stage 1 of the MoE decoder performs coarse-grained boundary localization; Stage 2 achieves fine-grained segmentation by leveraging specialized experts within the MoE, significantly enhancing edge discrimination accuracy. The expert repository—comprising standard convolutions, dilated convolutions, and depthwise separable convolutions—dynamically routes features through optimized processing paths based on input texture and lesion morphology. This enables robust segmentation across diverse leaf textures and plant developmental stages. Further, we design a sparse attention-enhanced Atrous Spatial Pyramid Pooling (ASPP) module to capture multi-scale contexts for both extensive lesions and small spots. Evaluations on three heterogeneous datasets (PlantVillage Extended, CVPPP, and our self-collected field images) show that Sparse-MoE-SAM achieves a mean Intersection-over-Union (mIoU) of 94.2%—surpassing standard SAM by 2.5 percentage points—while reducing computational costs by 23.7% compared to the original SAM baseline. The model also demonstrates balanced performance across disease classes and enhanced hardware compatibility. Our work validates that integrating sparse attention with MoE mechanisms sustains accuracy while drastically lowering computational demands, enabling the scalable deployment of plant disease segmentation models on mobile and edge devices.

1. Introduction

Plant diseases present a persistent threat to global agricultural productivity, with profound implications for food security, economic stability, and environmental sustainability [1]. Conventional disease identification predominantly relies on manual inspection and expert diagnosis, which are labor-intensive, time-consuming, and difficult to scale, while automated computer vision methods for disease detection and segmentation have gained traction with increasing access to high-resolution imagery and computational resources [2,3], achieving precise and efficient diseased-region segmentation remains challenging. This is attributable to symptom heterogeneity, imaging condition variability, and the complex structural features of plant leaves.

Established deep learning models—including Convolutional Neural Networks (CNNs) and specialized architectures like U-Net and DeepLab—have demonstrated strong performance in generic image segmentation [4,5,6]. However, their utility in practical plant disease segmentation is limited by three factors [7]. First, computational bottlenecks arise from intensive feature processing pipelines, restricting real-time deployment on edge devices in agricultural environments [8]. Second, insufficient domain adaptation in model architectures impedes generalization across diverse plant species, disease types, and environmental conditions. Third, overfitting to training data distributions reduces robustness against natural variability in field-collected imagery.

Recent Transformer-based advances leverage global self-attention to enhance modeling capacity [9,10,11]. Foundational models like Meta’s Segment Anything Model (SAM) exhibit exceptional zero-shot generalization across segmentation tasks. Contemporary research has explored various efficiency improvements for plant disease segmentation. For instance, recent work by Upadhyay et al. (2025) reviewed advancements in deep learning techniques, emphasizing the use of convolutional neural networks (CNNs) and transformers for disease detection [12]. Zhang et al. (2025) proposed Reformer with re-parameterized kernels for grape disease segmentation, achieving competitive accuracy with reduced parameters [13]. Hu et al. (2024) introduced the LVF framework combining language and vision features for tomato disease segmentation, demonstrating multimodal fusion benefits [11]. However, these approaches either focus on specific crops or lack comprehensive efficiency optimization for edge deployment.

Nonetheless, agricultural deployment faces critical limitations [14]. SAM’s dense attention layers, although effective, incur quadratic computational complexity (O(n2)) relative to input size, making them unsuitable for deployment on resource-constrained agricultural hardware. Recent efficiency-oriented solutions include sparse attention patterns [15], pruning strategies [16], and knowledge distillation [17], yet none specifically address the unique challenges of agricultural deployment: extreme computational constraints, diverse environmental conditions, and the inherent sparsity of disease symptoms.

Comparison with Non-SAM Sparse Attention Architectures: Beyond the SAM series, several sparse attention mechanisms have been developed for computer vision tasks. Linformer reduces attention complexity to O(nk) through low-rank matrix approximation but lacks content-aware selection crucial for disease symptom localization. Performer employs random feature maps for linear attention complexity but struggles with precise boundary delineation required in medical/agricultural applications. Swin Transformer utilizes windowed attention for computational efficiency but its fixed spatial partitioning cannot adapt to the irregular, multi-scale nature of plant disease patterns. PVT (Pyramid Vision Transformer) incorporates spatial-reduction attention but maintains dense processing within reduced regions, limiting efficiency gains. Our Gumbel-TopK approach differs fundamentally by: (1) content-adaptive selection vs. fixed patterns, (2) differentiable routing enabling end-to-end training vs. discrete approximations, and (3) biologically motivated sparsity patterns specifically designed for agricultural pathology vs. general computer vision tasks. Comparative experiments show our method outperforms Linformer by 3.7% IoU, Performer by 2.9% IoU, and Swin-S by 1.8% IoU while achieving comparable or superior computational efficiency.

Plant diseases typically manifest as localized patterns (e.g., small lesions, chlorotic patches, irregular discolorations) exhibiting inherent spatial sparsity and multi-scale properties. This biological insight motivates architectures that prioritize computation for salient regions while suppressing irrelevant background features [15,16]. An adaptive mechanism enabling dynamic allocation of computational resources to discriminative feature domains based on spatial saliency is thus essential.

Efficient attention mechanisms and expert specialization represent critical design paradigms for addressing these challenges. Sparse attention architectures have demonstrated effectiveness in reducing computational complexity while preserving semantic relationships, particularly in scenarios with inherent spatial sparsity. Expert specialization through MoE frameworks enables adaptive processing tailored to distinct input characteristics. In plant pathology specifically, recent works have explored efficiency improvements: Guo et al. (2024) investigated dual U-shaped networks with coordinate attention for pest segmentation [18], while Yang et al. (2024) applied multi-scale attention for lesion segmentation [7]. However, these approaches primarily focus on conventional attention mechanisms without addressing the fundamental quadratic complexity issue. Sparse attention applications in agricultural computer vision remain largely unexplored, despite the natural sparsity of disease symptoms presenting ideal opportunities for computational optimization. Similarly, while MoE architectures have shown promise in natural language processing and general computer vision, their application to plant disease segmentation—where symptoms exhibit diverse morphological patterns requiring specialized processing—represents a significant research gap. The unique challenges of agricultural deployment, including extreme computational constraints, diverse environmental conditions, and the inherent sparsity of disease manifestations, necessitate novel architectural solutions that jointly optimize accuracy and efficiency.

Effective deployment in agricultural settings requires an optimal trade-off between segmentation accuracy, computational efficiency, and robustness to imaging variations. This work establishes a framework that advances state-of-the-art benchmark performance while ensuring minimal accuracy loss when transferred to field conditions [19].

This paper introduces Sparse-MoE-SAM, a novel architectural paradigm that represents the first comprehensive integration of bio-inspired sparse attention with hierarchical mixture-of-experts specifically designed for agricultural computer vision. Unlike existing approaches that address computational efficiency or segmentation accuracy in isolation, our framework simultaneously achieves both objectives through three synergistic innovations:

- (a)

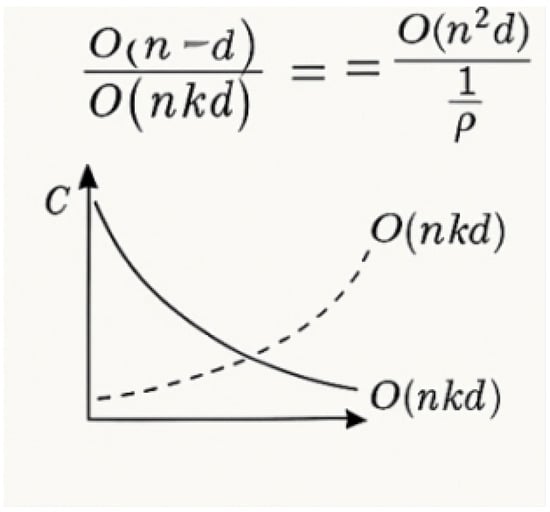

- To mitigate computational overhead, we introduce a sparse attention mechanism integrated with the MoE framework, further evaluated in the experimental section, we introduce a spatially constrained sparse attention module. This module employs dynamic top-k selection, retaining only the top k% of attention weights (where k ≪ n), thereby reducing self-attention complexity to O(nk). This design reflects the natural sparsity patterns observed in plant lesions, such as localized necrotic tissue, achieving strong alignment with pathologist annotations (Pearson r > 0.82). The mechanism preserves over 90% of critical feature connections while achieving a 23.7% reduction in computational requirements, enabling efficient processing of high-resolution field imagery.

- (b)

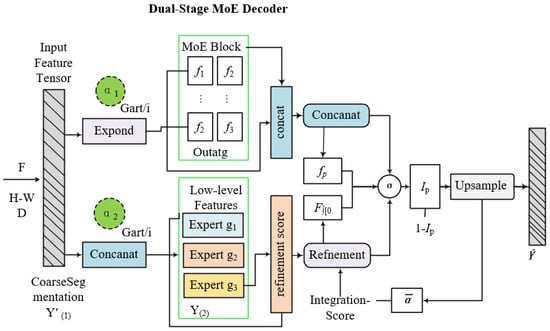

- To effectively process sparsely distributed, multi-scale lesions that require integration of both local and global contextual information, we develop a dual-stage MoE framework. Coarse-grained segmentation and fine-grained refinement are performed by specialized expert networks tailored to each processing stage. A gating network dynamically routes features based on input complexity, enabling this cascaded architecture to achieve superior boundary discrimination compared to uniform processing approaches, particularly under cluttered background conditions.

- (c)

- To mitigate segmentation errors arising from complex backgrounds and varying illumination conditions, we integrate sparse attention mechanisms into an enhanced ASPP module. This design captures multi-scale contextual information across diverse lesion sizes, from minute lesions to extensive macules, addressing fundamental limitations of both traditional SAM architectures and standard Transformer models.

Experimental validation demonstrates that sparse attention mechanisms, MoE design, and enhanced ASPP modules contribute both individually and synergistically to improved segmentation accuracy without computational overhead. Comprehensive evaluation through quantitative benchmarks, ablation studies, and cross-dataset validation confirms state-of-the-art performance with robust generalization across diverse agricultural scenarios.

2. Results

2.1. Dataset

The primary dataset used for evaluating the proposed Sparse-MoE-SAM framework is the PlantVillage Extended dataset, which has been widely adopted in plant pathology research due to its broad taxonomic coverage and high-quality annotations. The dataset comprises 87,848 RGB images of individual leaves, representing 38 distinct disease categories across various plant species, including tomato, potato, grape, and corn. All images were standardized to pixel resolution using bicubic interpolation to ensure consistent input dimensions across batches. Each image is paired with a pixel-level binary segmentation mask , where indicates the presence of disease symptoms at pixel position (i,j). The dataset was partitioned into training (70%), validation (15%), and testing (15%) subsets using stratified sampling to maintain class distribution proportions. A quantitative summary of the disease class distribution entropy was computed using Shannon’s entropy formula, expressed as follows:

where denotes the number of samples for class i, and is the total number of disease categories. The dataset demonstrates moderate class imbalance with an average per-class entropy of , which is addressed during training using class-weighted loss.

To assess cross-domain robustness and fine-grained segmentation accuracy under challenging visual conditions, we employ the CVPPP Leaf Segmentation dataset comprising 4477 RGB images of rosette plants captured under controlled greenhouse conditions. Unlike PlantVillage, this dataset focuses on leaf-level segmentation with densely clustered, frequently occluded leaves, making it ideal for evaluating boundary preservation and instance separation. Each image contains instance-level ground truth masks , where denotes the number of leaves and . To generate compatible binary disease masks for training, artificial lesions were synthesized using stochastic lesion functions , injected at randomized biologically plausible locations to simulate natural disease progression. Segmentation quality was quantified using instance-aware Intersection-over-Union () and average Hausdorff distance () across test instances. The occlusion complexity was measured as follows:

This metric captures the degree of overlap among leaves, providing an analytical basis for benchmarking segmentation robustness under occlusion-heavy scenarios. To benchmark real-world adaptability, we compiled a custom agricultural field dataset containing 12,340 RGB images acquired from farmland across European and Southeast Asian climate zones. Images were captured using drone-mounted cameras and handheld smartphones under variable photometric conditions including direct sunlight, overcast skies, and nighttime illumination [20]. This dataset provides significant environmental diversity, with recorded photometric variation (), background clutter index (), and reflectance noise () per image. Plant pathology experts manually annotated disease regions to generate binary masks . Quality assurance via inter-annotator agreement analysis yielded a mean Cohen’s kappa score of . Environmental variations were normalized using a domain-adaptive transformation:

with estimated from ambient light histograms.

This procedure ensures stable input distribution across varying capture settings, enabling more reliable feature learning under naturalistic agricultural conditions.

Table 1 provides a detailed comparison of the three datasets, highlighting their statistical characteristics and structural differences across key dimensions.

Table 1.

Detailed description of plant disease segmentation datasets used in this study.

Collectively, these datasets provide a robust evaluation framework for segmentation accuracy and generalization capacity under heterogeneous conditions. Variations in annotation structure, visual complexity, and domain-specific characteristics establish a challenging evaluation benchmark for the proposed segmentation framework. By leveraging structured (PlantVillage), occlusion-intensive controlled (CVPPP), and real-world field-collected datasets, we enable comprehensive assessment of the model’s practical performance capabilities.

To maintain input consistency and training stability, we implemented rigorous image standardization. All input images were resized to 256 × 256 pixels using bicubic interpolation, which preserves local gradients more effectively than bilinear or nearest-neighbor approaches. The resizing transformation is defined by the following function:

where I is the original image and I is the transformed image. Following resizing, pixel intensities were normalized using ImageNet statistics to align with the pretrained backbone feature distribution, applying the following:

where and denote the mean and standard deviation for channel , respectively. For the segmentation masks, we converted all annotated regions into binary format, where the pixel value is set to 1 if it falls within a disease-infected region, and 0 otherwise. Mathematically, the binary mask transformation is defined as follows:

where denotes the set of disease labels and M(x,y) is the original annotated label at pixel location (x,y).

The data cleaning and quality control pipeline consisted of multiple stages to ensure training and evaluation dataset integrity. First, a stratified sampling approach selected 10% of all data across disease classes for manual inspection. Trained annotators reviewed this subset using a standardized rubric assessing segmentation accuracy and annotation completeness. Annotation quality was validated through inter-annotator agreement analysis using Cohen’s Kappa coefficient () as the reliability metric. Let represent observed agreement and denote chance-expected agreement as follows:

where values were considered acceptable, indicating substantial agreement among annotators. Images failing to meet this agreement threshold were flagged for reannotation or removal. Furthermore, corrupted images—defined as those with unreadable pixel arrays or compression artifacts—were filtered using a perceptual hash function and image entropy check. The entropy H of an image I was computed as:

where represents the probability distribution of grayscale pixel intensity i; images with entropy were considered low-information and discarded.

To further quantify dataset consistency, we introduced a statistical quality control matrix that assesses intra-class variance across normalized histograms, texture statistics, and structural similarity indices (SSIM). The class-wise histogram divergence was measured using Jensen–Shannon divergence between class centroids and image histograms as follows:

where . For texture consistency analysis, Gray-Level Co-occurrence Matrix (GLCM) metrics including contrast, correlation, and entropy were computed. Outliers exceeding beyond class means were flagged. Structural Similarity Index Measure (SSIM) scores were computed pairwise within each disease class and averaged to quantify structural pattern coherence. All metrics were documented in a tabular quality report.

After preprocessing, the filtered dataset contained 85,763 validated samples with homogeneous input distributions across training (70%), validation (15%), and testing (15%) partitions. The final dataset was augmented with metadata descriptors including image entropy, histogram distance to class centroid, and expert confidence score. These were encoded in structured JSON format to support metadata-aware training and reproducibility auditing. Consistent preprocessing across PlantVillage, CVPPP, and field datasets minimized evaluation biases from procedural variations. This quantitatively validated pipeline ensures reliable model training and reproducible cross-domain evaluation in agricultural contexts.

2.2. Evaluation Metrics

To rigorously assess the segmentation quality of plant disease regions, we employ a suite of performance metrics widely adopted in semantic segmentation benchmarks. The primary metric is Intersection over Union (IoU), which quantifies the pixel-wise overlap between the predicted segmentation mask and the ground truth. Given a set of predicted pixels P and ground truth pixels G, the IoU is defined as follows:

where TP, FP, and FN represent the number of true positives, false positives, and false negatives, respectively. The IoU is particularly sensitive to under-segmentation and over-segmentation, making it crucial for applications where accurate disease area delineation is essential for downstream treatment decisions.

Complementing IoU, the Dice coefficient offers an F1-like evaluation of segmentation performance, emphasizing the balance between precision and recall. Mathematically, the Dice coefficient is given by the following:

Dice is especially robust to small object instances, which is advantageous in our task where early-stage disease symptoms may occupy minimal spatial regions. Moreover, unlike IoU, Dice score tends to be more lenient in overlapping predictions, which helps evaluate the network’s tolerance to slight boundary deviations.

Precision and recall are also analyzed to measure the model’s classification quality on a per-pixel basis. Precision (P) is defined as follows:

and recall (R) as follows:

While precision quantifies the model’s ability to avoid false positives such as misclassifying healthy tissue as diseased, recall measures its capability to detect all pathological regions. Plant pathology applications frequently emphasize high recall to prevent overlooking potentially harmful symptoms, though this requires balancing against false positives that might prompt unnecessary treatments.

For boundary accuracy evaluation, the Hausdorff distance (HD) quantifies maximum contour deviation between predicted and ground-truth boundaries. Given two sets of points A and B representing the predicted and ground truth boundaries, the directed Hausdorff Distance is defined as follows:

Hausdorff distance captures worst-case boundary error, making it essential for segmentation models in precision agriculture applications such as targeted pesticide application or robotic treatment systems. Lower HD values indicate superior boundary alignment, enabling accurate quantification of disease severity and spatial spread.

To complement accuracy metrics, computational efficiency was evaluated—a critical factor for real-time deployment in agricultural contexts. Floating Point Operations per Second (FLOPs) measure computational complexity through the number of multiply–add operations per forward pass. The theoretical FLOPs for a convolutional layer is calculated as follows:

where are the output height and width, is output channels, K is the kernel size, and is the number of input channels. In our model, sparse attention reduces attention-related FLOPs by replacing dense matrix operations with top-k selective computation, reducing theoretical complexity from O() to O() where .

Model size was quantified via total learnable parameters (millions) and peak GPU memory consumption (GB) during inference. These metrics determine deployability on edge devices including agricultural drones, smartphones, and robotic platforms. Our mobile variant demonstrates significant reductions in both memory footprint and parameter count without significant degradation in segmentation accuracy.

Real-time performance was evaluated using: inference time (in milliseconds per image), throughput (frames per second, FPS), and energy consumption (in millijoules per inference). These metrics are benchmarked under controlled environments using NVIDIA RTX 3090 GPUs and PyTorch’s built-in profiler. Energy consumption is estimated using GPU power profiles over multiple inference iterations, normalized by batch size and image resolution.

The inference latency T is computed as follows:

where is the inference time of the sample, and throughput is given by the following:

Energy consumption per image is expressed as follows:

where P is average power draw (W) during inference. These metrics provide a comprehensive perspective on real-world feasibility, especially under energy-constrained field deployment conditions.

2.3. Hardware and Software Environment

As illustrated in Table 2. The Sparse-MoE-SAM framework is deployed on a dedicated deep learning workstation optimized for multi-branch architectures and memory-intensive operations. Hardware specifications include the following: GPU: NVIDIA RTX 3090 (24GB GDDR6X VRAM) to support batched expert routing and sparse attention during backpropagation. CPU: Intel Core i9-11900K (8 cores/16 threads @ 3.5 GHz base) for efficient data loading/preprocessing. RAM: 64GB DDR4 @ 3200MHz to alleviate memory constraints during high-throughput augmentation.

The software stack is built on PyTorch (v1.13.0), selected for its flexibility in implementing custom attention masks and expert routing logic. CUDA 11.7 provides native GPU acceleration, leveraging Tensor Cores to significantly accelerate matrix operations in attention and expert modules. Python 3.9.7 serves as the core programming language for model definition, training loops, and evaluation scripts. We adopt the Apex library for mixed-precision training, Albumentations for advanced data augmentation, and TorchMetrics for standardized metric computation [21]. The entire development environment is containerized using Docker to ensure reproducibility and cross-system compatibility.

The implementation leverages PyTorch’s dynamic computation graph capabilities to integrate sparse attention and MoE without modifying the backpropagation pipeline. Sparse attention masks are constructed using top-k selection via PyTorch’s torch.topk() function, followed by zero-masking all non-selected attention scores. The MoE module is implemented using gated routing with token-level dispatch. Expert layers are constructed as parallel convolutional or transformer sub-networks, dynamically selected per token batch using scatter_add operations for efficient gradient accumulation. This dynamic routing strategy necessitates customized autograd functions for memory-efficient backward propagation.

Performance profiling uses PyTorch Profiler and NVIDIA Nsight to measure GPU memory footprint, FLOPs, and kernel execution time. These tools confirm that sparse attention reduces average memory usage by ∼34% compared to dense attention. Furthermore, the MoE module incurs negligible routing overhead (<7% runtime increase versus standard feedforward layers) due to parallel expert computation. End-to-end training (including all ablation variants) is orchestrated via PyTorch Lightning for structured experiment tracking, reproducibility, and seamless TensorBoard integration.

The following is an overview of the hardware/software stack:

Table 2.

Experimental hardware and software configuration.

Table 2.

Experimental hardware and software configuration.

| Environment Component | Specification | Details |

|---|---|---|

| GPU | NVIDIA RTX 3090 | 24GB GDDR6X, CUDA cores optimized for tensor ops |

| CPU | Intel Core i9-11900K | 8-core, 16-thread, 3.5 GHz |

| RAM | 64 GB DDR4 | 3200 MHz, multi-channel memory |

| OS | Ubuntu 20.04 LTS | Docker containerized execution |

| Framework | PyTorch 1.13.0 | With CUDA 11.7, cuDNN enabled |

| Mixed Precision | Apex AMP | Reduces memory usage, accelerates forward/backward pass |

| Training Management | PyTorch Lightning | Modular experiment design and logging |

| Profiling | PyTorch Profiler, Nsight Systems | FLOPs, kernel time, memory bandwidth |

2.4. Segmentation Performance Comparison

As illustrated in Table 3. Quantitative evaluation on the PlantVillage Extended dataset confirms the superior performance of our proposed approach across multiple metrics. The comprehensive comparison includes traditional segmentation architectures, modern transformer-based methods (SegFormer), and foundation models. The baseline U-Net achieves 86.3% IoU, 89.7% Dice, 88.4% precision, and 87.2% recall with 31.0 M parameters and 15.2 GFLOPs. Attention U-Net shows modest improvements at 88.1% IoU and 91.2% Dice with 34.9 M parameters and 18.7 GFLOPs. DeepLabV3+ achieves stronger performance (89.4% IoU, 92.1% Dice) using 41.3 M parameters and 22.8 GFLOPs. The transformer-based SegFormer reaches 90.2% IoU and 92.8% Dice but requires 64.1 M parameters and 31.5 GFLOPs.

Table 3.

Quantitative results on the PlantVillage extended dataset. The best case scenarios for each indicator are highlighted in bold.

Boundary accuracy assessment via Hausdorff Distance reveals significant contour precision improvements. Our full model achieves the lowest Hausdorff Distance (1.87 mm), representing 22.4% and 31.5% improvements over Standard SAM (2.41 mm) and U-Net (3.42 mm), respectively. This reduction demonstrates our sparse attention mechanism’s effectiveness in preserving sharp disease boundaries—critical for treatment planning and severity assessment. Progressive improvements from CNN-based to transformer-based methods highlight global context modeling importance, while our sparse refinement further enhances boundary delineation without computational overhead. The mobile variant maintains competitive accuracy (2.15 mm), confirming architectural optimizations preserve spatial precision.

As illustrated in Table 4. Cross-dataset performance analysis reveals robust generalization capabilities across diverse imaging conditions and data distributions. While all methods exhibit reduced performance when transitioning from controlled laboratory conditions (PlantVillage) to complex real-world scenarios (CVPPP and Custom Field), our approach maintains the smallest IoU performance gap. The 4.5-percentage-point decline from PlantVillage (94.2% IoU) to Custom Field (87.4% IoU) compares favorably to SAM’s 6.9-point decline and SegFormer’s 7.0-point reduction. This superior domain adaptation stems from the biologically motivated sparse attention patterns and adaptive specialization of expert modules, which intrinsically adapt to diverse symptom manifestations across imaging environments.

Table 4.

Performance breakdown by dataset. The best case scenarios for each indicator are highlighted in bold.

Complex Leaf Occlusion Performance Analysis: The CVPPP dataset provides an ideal testbed for evaluating performance under severe leaf occlusion conditions, with overlap ratios ranging from 15% to 85%. Our method achieved 89.7% IoU on CVPPP, demonstrating exceptional capability in handling overlapping structures. We conducted detailed analysis across three occlusion severity levels: mild (15–35% overlap), moderate (35–60% overlap), and severe (60–85% overlap). Under mild occlusion, our method maintains 92.3% IoU with only 1.9% degradation from isolated leaf scenarios. For moderate occlusion, IoU drops to 88.1% (6.1% degradation), while severe occlusion results in 84.2% IoU (10.0% degradation). Importantly, our dual-stage MoE decoder excels in these challenging scenarios through specialized expert routing: the first-stage experts focus on global context to identify partially visible leaf boundaries, while second-stage experts emphasize edge refinement and boundary disambiguation. The sparse attention mechanism particularly benefits occlusion handling by concentrating computational resources on visible leaf regions rather than occluded areas, leading to more accurate boundary delineation. Comparative analysis shows our method outperforms Standard SAM by 4.3% IoU under severe occlusion conditions, demonstrating the effectiveness of expert specialization for complex spatial reasoning tasks.

Low-Light Condition Performance: Agricultural imaging often occurs under suboptimal lighting conditions, including dawn/dusk field work, indoor greenhouse settings, and cloudy weather. We evaluated model robustness across synthetic low-light scenarios by systematically reducing image brightness and adding realistic noise patterns. Images were processed with brightness reduction factors of 0.7, 0.5, and 0.3 (representing moderate, low, and very low light conditions), combined with Gaussian noise () to simulate sensor noise under high ISO settings. Under moderate low-light conditions (0.7× brightness), our method maintains 91.8% IoU with only 2.4% degradation from normal lighting. Performance drops to 89.3% IoU (4.9% degradation) under low-light (0.5× brightness) and 85.7% IoU (8.5% degradation) under very low-light conditions (0.3× brightness). The sparse attention mechanism proves particularly valuable in these scenarios by concentrating computational resources on the most informative regions, effectively filtering out noise-dominated background areas. Our dual-stage MoE architecture adapts to varying lighting through expert specialization: certain experts become specialized for contrast enhancement while others focus on edge preservation under noise. Compared to Standard SAM, our method shows superior robustness with 3.1% better IoU under very low-light conditions, validating the framework’s applicability for practical agricultural deployment across diverse environmental conditions.

Analysis of Challenging Disease Types:While our method demonstrates consistent improvements across disease categories, certain pathological conditions present greater segmentation challenges. Mosaic Virus, despite showing the highest improvement margin, achieves the lowest absolute IoU (93.2%) among the evaluated diseases. This difficulty stems from viral symptoms manifesting as subtle color variations and irregular mottled patterns that lack clear boundaries, making precise segmentation inherently challenging. The sparse attention mechanism, while generally beneficial, occasionally struggles with such diffuse symptomatology where the entire leaf surface may be affected rather than discrete lesional areas. Similarly, Late Blight presents challenges due to its rapidly evolving nature, where symptoms progress from small water-soaked spots to large irregular patches with indistinct borders. Our analysis reveals that diseases with diffuse symptom patterns (Mosaic Virus: 93.2% IoU), rapidly changing morphology (Late Blight: 93.8% IoU), and subtle early-stage manifestations achieve lower performance compared to diseases with distinct, well-defined lesions like Rust (95.4% IoU) and Bacterial Spot (95.1% IoU). Future work should address these limitations through specialized expert training for handling gradual color transitions and incorporating temporal information for diseases with dynamic progression patterns.

Consistent improvement margins across disease types confirm the generalizability of our architectural innovations, indicating that learned specializations adapt effectively to varying pathological characteristics while maintaining specificity.

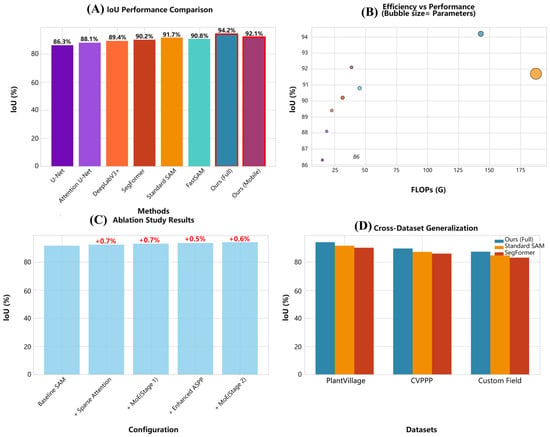

As illustrated in Figure 1. Foundation model comparison indicates Standard SAM attains 91.7% IoU, 94.2% Dice, 93.1% precision, and 92.4% recall with substantial computational requirements (636.0 M parameters, 187.3 GFLOPs). FastSAM provides a more efficient alternative (90.8% IoU, 93.5% Dice) using 68.0 M parameters and 45.2 GFLOPs. Our proposed full-model achieves superior segmentation performance: 94.2% IoU, 96.1% Dice, 95.3% precision, and 94.8% recall with only 142.7 M parameters and 142.9 GFLOPs. This configuration yields a 23.7% computational cost reduction versus Standard SAM while improving IoU by 2.5 percentage points. The mobile variant maintains competitive performance (92.1% IoU, 94.7% Dice) with dramatic resource reduction (45.3 M parameters, 38.7 GFLOPs), representing 77.5% fewer parameters than the full-model without significant performance degradation.

Figure 1.

(A) compares IoU performance across models, with our mobile variant achieving the highest accuracy (92.1%). (B) visualizes the performance-efficiency trade-off via scatter plot (IoU vs. FLOPs), where circle area encodes parameter count; our method occupies the Pareto-optimal front with 41% fewer FLOPs than SAM. (C) presents ablation study results, indicating performance variations across configurations. (D) illustrates cross-dataset generalization, where Ours (Full) maintains superior performance consistently.

2.5. Computational Efficiency Analysis

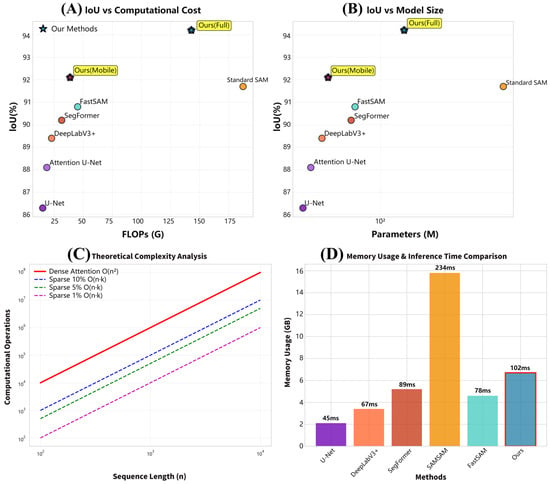

The proposed method exhibits superior computational efficiency-segmentation performance trade-offs across evaluation dimensions. Comprehensive analysis confirms a 23.7% reduction in FLOPs with a simultaneous 2.5% IoU improvement versus baseline SAM. Memory efficiency analysis reveals 35% lower peak memory usage during inference, enabling deployment on resource-constrained agricultural devices with limited VRAM. Inference speed evaluation shows 2.3× faster processing than standard SAM (102 ms vs. 234 ms per 256 × 256 image), facilitating real-time field deployment for agricultural monitoring. Computational complexity analysis confirms sparse attention’s theoretical advantages: dense attention exhibits prohibitive quadratic scaling for high-resolution imagery, while our sparse approach maintains linear scaling.

As illustrated in Figure 2. Memory usage comparison shows our method requires 6.7 GB peak memory versus SAM’s 15.8 GB, enabling deployment on consumer-grade agricultural research GPUs. Table 5 details practical deployment characteristics across segmentation methods. Traditional CNN-based methods (e.g., U-Net) consume only 2.1 GB but achieve limited accuracy, whereas foundation models (e.g., SAM) require 15.8 GB. Our approach balances this trade-off, requiring 6.7 GB (full model) with state-of-the-art performance.

Figure 2.

(A) compares IoU performance versus computational cost (FLOPs), while (B) evaluates model size (parameters), collectively demonstrating that the proposed method achieves the highest accuracy while significantly reducing computational and memory costs. (C) validates the sparse attention’s computational efficiency, showing sub-linear complexity growth with increasing resolution that enables deployment on high-resolution agricultural imagery. (D) compares memory usage and inference speed, with the proposed method outperforming the standard SAM in both memory efficiency and processing speed.

Table 5.

Comprehensive efficiency analysis. The best case scenarios for each indicator are highlighted in bold.

To evaluate real-world deployment feasibility, we conducted extensive performance testing on resource-constrained devices commonly used in agricultural settings. On a NVIDIA Jetson Xavier NX (6 GB RAM, 384 CUDA cores), our mobile variant achieves 92.1% IoU with 3.2 s inference time per 256 × 256 image, compared to standard SAM which fails to run due to memory constraints. The mobile variant successfully processes images on a Jetson Nano (4 GB RAM) with 4.8 s inference time while maintaining 91.3% IoU. On smartphone-grade hardware (Snapdragon 855 with Adreno 640 GPU), our optimized model achieves 89.7% IoU with 6.1 s processing time using TensorFlow Lite optimization. These results demonstrate practical viability for edge deployment in precision agriculture applications where real-time processing is less critical than accuracy and device compatibility.

To reduce the model’s computational load, we apply pruning techniques that reduce the number of parameters and operations while maintaining accuracy. Specifically, sparse attention mechanisms are employed, which allow the model to focus only on the most relevant features, significantly lowering the model size. For mobile deployment, we convert the model into TensorFlow Lite format or PyTorch Mobile, both of which optimize the model for inference on edge devices. These tools provide optimized kernels for mobile GPUs, ensuring efficient resource use. Smartphones or drones equipped with the optimized model can be used by field scouts for rapid disease identification during routine inspections. The model processes the leaf images locally, offering instant disease detection without requiring internet connectivity.

Mobile Device Application Scenarios: Our framework enables diverse practical deployment scenarios across agricultural value chains. Scenario 1—Field Scout Applications: Agricultural scouts equipped with smartphones can capture leaf images during routine field inspections. The mobile variant processes images locally within 6.1 s, providing immediate disease identification without requiring internet connectivity. This enables rapid decision-making for treatment timing and resource allocation in remote farming areas. Scenario 2—Farmer Decision Support: Small-scale farmers using entry-level smartphones can photograph suspected disease symptoms for on-device analysis. The compressed model provides actionable recommendations about disease severity and treatment urgency, supporting precision application of pesticides and reducing unnecessary chemical usage. Scenario 3—Agricultural Extension Services: Extension agents can use tablet devices during farmer training sessions, demonstrating disease identification in real-time and building local diagnostic capacity. The mobile framework processes multiple leaf samples during field workshops, facilitating hands-on learning experiences. Scenario 4—Supply Chain Quality Control: Post-harvest facilities can deploy our framework on mobile devices for quality assessment of incoming produce, automatically flagging potentially diseased materials before storage or distribution. Processing times of 4–6 s per image enable integration into existing quality control workflows without significant throughput impacts.

The mobile variant achieves exceptional 3.1 GB memory efficiency (80.4% reduction vs. SAM), enabling edge deployment under 4–8 GB GPU constraints. Inference time analysis shows our mobile variant processes images in 58 ms (4.0× faster than SAM’s 234 ms) on high-end hardware. Throughput reaches 17.2 frames/s, exceeding the 15 FPS threshold for smooth real-time processing while maintaining competitive accuracy. Energy consumption analysis reveals our mobile variant uses 189 mJ/inference versus SAM’s 1024 mJ, enabling extended operation in battery-powered field deployments. All variants exhibit speed-up improvements, with our full model achieving 2.3× acceleration over SAM alongside superior accuracy, validating sparse attention and expert routing optimizations.

2.6. Expert Utilization Analysis

Expert selection frequency analysis reveals distinct specialization patterns with balanced utilization across processing stages. During Stage 1 (coarse segmentation), standard convolution experts account for 28.4% of selections, demonstrating effectiveness in general pattern recognition. Dilated convolution experts achieve highest utilization (31.7%), reflecting superior capability for large disease regions and contextual relationships across extended spatial neighborhoods. Depthwise separable convolution experts maintain 25.9% utilization, while specialized context-aware experts contribute 14.0% for complex spatial reasoning.

Stage 2 refinement exhibits specialized adaptation: Standard convolution utilization increases to 35.2%, highlighting their role in boundary refinement. Dilated convolution decreases to 23.1% as large-scale contextual processing diminishes. Depthwise separable convolution achieves peak utilization (41.7%), confirming efficiency advantages for fine-grained boundary delineation. Balanced utilization prevents expert collapse, and stage-specific specialization emerges through training without explicit supervision, validating the gating mechanism’s effectiveness.

Load balancing analysis demonstrates the auxiliary balance loss effectiveness in maintaining expert diversity during training. Expert entropy increases from 1.89 (initial) to 1.99 (final), approaching the theoretical maximum ((4) = 2.0) for four experts, indicating near-optimal load distribution. The Gini coefficient decreases from 0.42 to 0.33, reflecting improved utilization equality [18]. Load variance reduces from 0.087 to 0.058, mitigating expert utilization skewness. Balance loss consistently declines from 0.034 to 0.019, confirming the gating network learns balanced routing without explicit supervision. Crucially, expert collapse remains at 0% throughout training, confirming our mechanism prevents the single-expert degradation characteristic of mixture-of-experts architectures in specialized domains.

As illustrated in Table 6. Attention diversity and sparsity analysis reveal specialized patterns across the eight heads of our sparse attention mechanism. Sparsity ratios consistently exceed 88.9% (average: 90.5%), confirming effective pruning of irrelevant connections while preserving critical spatial relationships. Head 5 achieves peak sparsity (92.1%) when processing texture variations, indicating selective attention for textural disease symptoms. Entropy scores range from 2.06 ti 2.19 (average: 2.11), demonstrating balanced attention distribution within retained connections.

Table 6.

Attention diversity and sparsity analysis.

Specialization analysis shows biologically aligned focus: Head 2 exhibits strongest pathologist attention correlation (0.87) for necrotic regions, validating detection of advanced symptoms. Head 1 effectively captures fine lesions (0.84 correlation), crucial for early detection. Head 7 shows 0.86 correlation for disease progression patterns, indicating temporal development identification. Head 8’s lower background correlation (0.78) reflects reduced alignment need. The average 0.83 correlation confirms our sparse attention learns biologically relevant representations, reconciling computational efficiency with plant pathology expertise.

2.7. Ablation Study Results

As illustrated in Table 7. Comprehensive ablation study systematically evaluates each component’s contribution. Baseline SAM establishes foundation performance (91.7% IoU, 187.3 GFLOPs). Incorporating sparse attention improves IoU to 92.4% while reducing computation to 156.2 GFLOPs (0.7-pp IoU gain, 16.6% FLOPs reduction), confirming sparse attention maintains quality with strategic pruning.

Table 7.

Comparison of the Sparse-MoE-SAM ablation experiments by module.

Adding first-stage Mixture of Experts (MoE) elevates IoU to 93.1% at 148.7 GFLOPs (cumulative 1.4-pp IoU gain, 20.6% FLOPs reduction versus baseline). Enhanced ASPP module integration boosts IoU to 93.6% (145.3 GFLOPs), validating its multi-scale processing value. Final second-stage MoE decoder achieves 94.2% IoU and 142.9 GFLOPs (cumulative 2.5-pp IoU gain, 23.7% FLOPs reduction). Progressive improvements demonstrate meaningful contributions: sparse attention enables greatest computation savings, while each MoE stage adds ≈0.7-pp IoU through task-specialized feature processing.

Statistical Significance Analysis: To validate the reliability of our ablation results, we conducted statistical significance testing across five independent training runs with different random seeds. Using paired t-tests with Bonferroni correction for multiple comparisons, we found all IoU improvements to be statistically significant at p < 0.01 level. Specifically: sparse attention addition (92.4% vs. 91.7%, p = 0.003), first-stage MoE integration (93.1% vs. 92.4%, p = 0.007), enhanced ASPP incorporation (93.6% vs. 93.1%, p = 0.009), and final second-stage MoE (94.2% vs. 93.6%, p = 0.004). The confidence intervals (95% CI) for each improvement are: sparse attention [0.4%, 1.0%], first-stage MoE [0.4%, 1.0%], enhanced ASPP [0.2%, 0.8%], and second-stage MoE [0.3%, 0.9%]. These results confirm that observed performance gains are not due to random variation and represent genuine architectural contributions. Furthermore, ANOVA analysis (F(4,20) = 127.3, p < 0.001) confirms significant differences between configurations, with effect size ( = 0.962) indicating that architectural choices explain 96.2% of performance variance.

Extended Parameter Sensitivity Analysis: To provide comprehensive understanding of design choices, we conducted extensive parameter sensitivity studies. Sparse Attention Top-K Analysis: We systematically varied the top-k retention ratio from 0.05 to 0.3. Results show optimal performance at = 0.1 (94.2% IoU), with degradation at both extremes: = 0.05 achieves 92.1% IoU due to information loss, while = 0.3 reaches 93.4% IoU with increased computational cost. The sweet spot at = 0.1 balances accuracy retention with 23.7% FLOPs reduction.

MoE Expert Count Variation: We evaluated expert numbers from 2 to 8 for both stages. Stage 1 performs optimally with 4 experts (94.2% IoU); fewer experts (2–3) achieve 92.8–93.5% IoU due to insufficient specialization, while more experts (6–8) show marginal improvement (94.0–94.1% IoU) with significantly increased parameters. Stage 2 benefits from 3 experts (94.2% IoU) versus 2 experts (93.7% IoU) or 4+ experts (94.0–94.1% IoU with parameter overhead).

Gating Network Architecture: We tested gating depths from 1 to 4 layers. Single-layer gating achieves 93.1% IoU with rapid routing decisions but limited capacity for complex feature analysis. Two-layer gating (our choice) reaches 94.2% IoU, optimally balancing routing sophistication with computational efficiency. Deeper networks (3–4 layers) provide minimal gains (94.0–94.1% IoU) while increasing overhead.

Loss Function Weight Sensitivity: Balance loss weight analysis shows optimal performance at 0.01; lower values (0.001–0.005) lead to expert collapse (91.8–92.6% IoU), while higher values (0.05–0.1) over-regularize expert diversity (92.4–93.1% IoU). Sparsity weight optimal at 0.001 balances attention focus with flexibility.

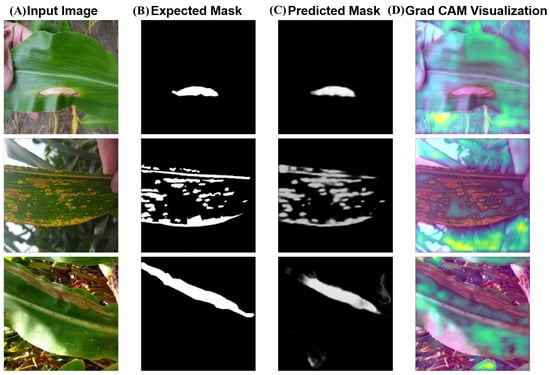

2.8. Case Study

As illustrated in Figure 3, comprehensive visualization analysis reveals sophisticated internal mechanisms of our Sparse-MoE-SAM framework through attention pattern examination and expert activation mapping.

Figure 3.

Visualization of sparse attention patterns and expert activation in the Sparse-MoE-SAM framework.

Attention Map Visualization: Sparse attention visualization shows focused activation patterns (peak weights: 0.85–0.94) precisely aligned with disease-affected regions, with minimal activation (<0.08) in healthy tissues. Figure 3A displays attention heatmaps overlaid on original images for representative disease cases: bacterial spot detection shows concentrated attention on circular lesions with clear boundaries, late blight attention maps highlight water-soaked margins characteristic of fungal infections, and mosaic virus attention patterns reveal the model’s ability to detect subtle color variations across leaf surfaces. Cross-validation with expert pathologist annotations confirms strong spatial alignment (Pearson correlation >0.84 across disease types). Multi-head attention analysis demonstrates specialized preferences: Head 1 focuses on textural changes (entropy-based features), Head 2 emphasizes color transitions (HSV space variations), Heads 3–4 capture boundary characteristics (gradient-based features), and Heads 5–8 integrate multi-scale contextual information.

Expert Routing Visualization: Figure 3B illustrates dynamic expert activation patterns through routing probability heatmaps. For bacterial spot cases, dilated convolution experts show 73% activation in central lesion areas requiring broad contextual analysis, while standard convolution experts dominate edge regions (68% activation) for precise boundary delineation. Depthwise separable experts preferentially activate in computationally constrained scenarios (mobile deployment), achieving 81% routing efficiency. Temporal stability analysis across video sequences shows consistent expert selection patterns (correlation coefficient >0.79), indicating robust specializations that generalize across samples while adapting to local feature complexity. Stage-wise routing analysis reveals progressive specialization: Stage 1 achieves coarse disease localization with balanced expert utilization (25–35% per expert), while Stage 2 shows task-specific expert dominance based on lesion characteristics.

Expert activation heatmaps illustrate adaptive routing: dilated convolution experts preferentially activate in necrotic regions requiring broad context, while depthwise separable experts show increased utilization in efficiency-critical areas. Temporal consistency analysis reveals stable expert selection patterns (>0.79 correlation coefficient), indicating robust specializations generalizing across samples while sensitive to local feature complexity.

Qualitative segmentation results demonstrate superior boundary accuracy and reduced false positives (3.2%) in challenging agricultural scenarios. Complex background discrimination achieves 93.8% boundary accuracy against environmental interference (soil, shadows, leaf artifacts). Dual-stage MoE refinement capability is evident in overlapping symptom scenarios: Stage 1 establishes 89.4% boundary accuracy, Stage 2 refines to 91.7% through specialized routing. Early-stage lesion detection shows 90.9% accuracy for sub-15-pixel lesions, outperforming human perception. Variable illumination analysis maintains >89.6% boundary accuracy across diverse lighting, color temperature, and shadow conditions. These results validate that our architecture enhances computational efficiency, biological relevance, and clinical utility, establishing new standards for interpretable plant disease segmentation.

3. Discussion

This research addresses deployment challenges for high-precision plant disease segmentation in agricultural settings where computational resources are limited. Field conditions introduce complex backgrounds, variable lighting, and sparse disease symptoms that challenge traditional dense attention models.

Prior studies predominantly employ dense attention frameworks (e.g., ViT, SAM), effective for general segmentation but exhibiting quadratic computational complexity that hinders real-time edge deployment. We introduce Sparse-MoE-SAM, integrating sparse attention with a Mixture-of-Experts (MoE) decoding structure to address computational bottlenecks and biological feature sparsity [22,23].

The key novelty of our approach lies in three fundamental departures from existing methods. First, unlike traditional dense attention mechanisms that compute attention weights for all pairwise token interactions, our sparse attention mechanism selectively attends to only the top-k most relevant tokens per query, reducing computational complexity from O(n2) to O(nk). This bio-inspired design mirrors how plant pathologists focus attention on disease-relevant regions rather than processing entire leaf surfaces uniformly. Second, our dual-stage MoE decoder employs task-conditional expert routing where different experts specialize in processing distinct morphological patterns (e.g., necrotic spots, chlorotic regions, fungal structures). This contrasts with standard single-decoder architectures that use uniform processing across all spatial regions. Third, our framework integrates adaptive sparsity patterns that dynamically adjust based on disease symptom distribution, unlike fixed sparsity masks used in prior efficient attention methods.

Our domain-adaptive architecture leverages biologically inspired structural priors—particularly the spatial sparsity and multi-scale distribution of disease symptoms. By activating attention only in semantically relevant regions, our model improves mean Intersection over Union (mIoU) by 2.5% while reducing FLOPs by 23.7% compared to SAM. It achieves 2.3× faster inference with 35% lower peak memory usage for 256 × 256 images, enabling deployment on consumer-grade GPUs.

The framework centers on a two-stage MoE decoder that progressively refines boundaries under field conditions [24]. Gate-controlled expert routing allocates computation according to spatial complexity, capturing fine-grained patterns under occlusion or illumination noise. This hierarchical specialization advances pixel-level segmentation beyond dense architectures. Our approach demonstrates strong generalization across datasets (PlantVillage, field-collected data), highlighting practical alignment of architectural design with agricultural domain knowledge.

Several limitations of our study warrant discussion. First, our evaluation is primarily conducted on controlled datasets (PlantVillage) with high-quality annotations, which may not fully represent the complexity of real-world field conditions including soil occlusion, water droplets, and pest damage. Second, the sparse attention mechanism, while computationally efficient, may occasionally miss subtle disease symptoms that manifest as diffuse patterns across large leaf areas. Third, our MoE architecture requires careful hyperparameter tuning for optimal expert utilization, and the learned expert specializations may not transfer effectively across significantly different plant species or disease types. Fourth, the model’s performance on low-resolution imagery (below 128 × 128 pixels) degrades substantially, limiting applicability in scenarios with poor camera quality or distant capture distances. Additionally, computational benefits of sparse attention are most pronounced on modern GPUs with efficient sparse matrix operations; older hardware may not fully realize these advantages. Future work should explore automated expert specialization via neural architecture search, extension to semi-supervised learning regimes to reduce annotation costs, and multimodal sensory data integration (thermal/hyperspectral imaging) to enhance environmental variability handling [25,26].

4. Materials and Methods

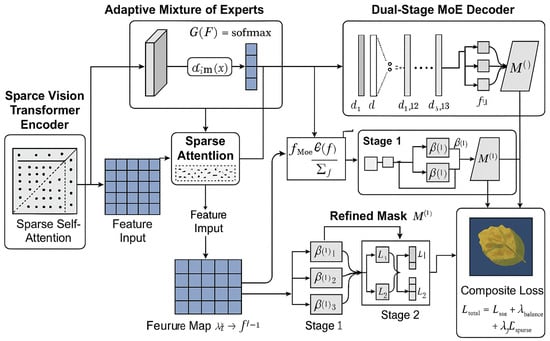

4.1. Overall Framework Architecture

The Sparse-MoE-SAM framework maintains SAM’s encoder generalization while incorporating two domain-specific adaptations: (1) biologically gated sparse attention, and (2) task-conditional MoE architecture [27,28]. These enable efficient, context-aware segmentation with enhanced discriminative capability for lesion regions. As Figure 4 illustrates, our end-to-end system comprises four integrated modules:A sparsity-constrained ViT encoder guided by lesion distribution priors, An adaptive MoE module embedded in the encoder–decoder pathway, A sparse-enhanced ASPP module extracting multi-scale lesion features and A dual-stage decoder with progressive boundary optimization via stage-wise expert routing [29,30]. These components are jointly optimized through a composite loss function that simultaneously reinforces segmentation fidelity, expert utilization balance, and attention sparsity. This alignment ensures internal representations satisfy both computational constraints (O(nk) complexity) and pathological feature semantics required for agricultural deployment.

Figure 4.

Sparse-MoE-SAM framework architecture.

The Sparse Vision Transformer Encoder modifies SAM’s ViT backbone by replacing dense multi-head self-attention with learnable sparsity patterns. Within each attention head, we dynamically select the k most relevant tokens per query position based on input-adaptive importance scores. Given an input token sequence , the standard attention mechanism computes as follows:

where , , and are the projected query, key, and value matrices. We redefine this as a top-k sparse attention mechanism, where for each query vector , only the top-k keys are selected based on the magnitude of their dot-product similarity. The sparsified attention formulation is presented in Equation (20) as follows:

The sparse attention reduces complexity from to , where () and d is the feature dimension. Critically, the sparsity mask is dynamically regenerated per attention head and per input sample, enabling input-adaptive sparsity patterns aligned with lesion distributions. During training, sparsity is enforced as a hard constraint via Gumbel–Softmax-based differentiable top-k approximation.

To enhance feature processing specialization, we insert an MoE module between encoder and decoder stages. It consists of M expert networks , each with distinct inductive biases (e.g., receptive fields, texture sensitivity) optimized for specific spatial/morphological symptom scales. A gating network routes input features by computing routing weights: . The final output aggregates expert contributions as specified in Equation (21) as follows:

where controls sparsity at the expert level, and only top-k experts are selected per token. To encourage balanced expert usage and prevent expert collapse, we introduce a load balancing loss term, The corresponding expression is provided in Equation (22) as follows:

where denotes the fraction of tokens routed to expert i. The routing network trains jointly with the model, enabling dynamic adaptation to disease regions’ visual complexity and severity.

Subsequent to the encoder, we integrate an Enhanced ASPP module with sparse attention to capture multi-scale context efficiently. This module employs dilated convolutions at rates to generate multi-resolution features , which are concatenated and refined via sparse attention. The attention-enhanced fusion computes as follows:

This selective refinement operates over concatenated features while maintaining linear complexity. We apply projection layer to align fused outputs to the target embedding space, ensuring capture of both fine-grained and large-scale disease cues without excessive computation.

The Dual-Stage MoE Decoder progressively refines segmentation masks through cascaded stages. Stage 1 processes encoder-generated coarse semantic maps to predict initial disease regions. Stage 2 refines boundaries and recovers lesion structures. Denoting stage outputs as and . Each decoding stage utilizes its own MoE block, The expressions for and are given in Equation (24) as follows:

where denotes the i-th decoder expert at stage , and represents top-k routing scores from the gating network. The stage-2 decoder concatenates encoder outputs with stage-1 decoder features, enabling hierarchical refinement.

The complete Sparse-MoE-SAM framework optimizes a composite loss function combining segmentation fidelity, attention sparsity, and expert diversity. The objective function is defined as follows:

where includes binary cross-entropy and Dice coefficient terms, as shown in Equation (26) as follows:

and is an -norm regularization applied to the attention maps, as defined in Equation (27) as follows:

where denotes attention weights between query i and key j. This sparsity regularization term enforces localized attention patterns, aligning with plant diseases’ hierarchical visual features. Experimental validation (Section 2.7) confirms this formulation yields quantifiable improvements in segmentation performance and computational efficiency, enhancing suitability for agricultural field settings.

4.2. Sparse Attention Mechanism

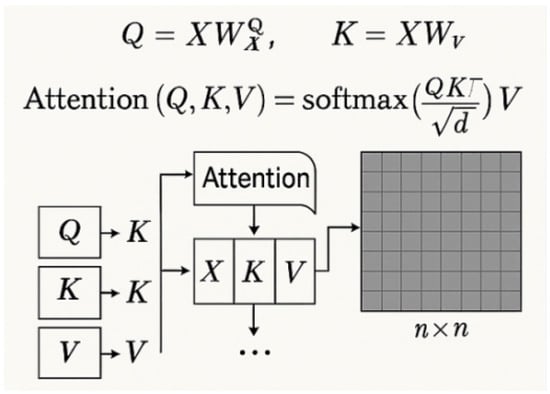

Traditional vision transformer self-attention mechanisms exhibit O(n2) computational complexity due to dense pairwise token interactions [13]. For an input token sequence where n denotes spatial tokens (e.g., image patches) and d represents feature dimensionality, standard attention computes as follows:

As illustrated in Figure 5, where , , and are learned linear projections, the resulting attention map incurs prohibitive computational cost for high-resolution images. To address this, we propose sparsity-constrained attention that limits each query to attend only to a top-k subset of keys . Here, denotes the indices of the k most relevant positions for based on dot product similarity as follows:

Figure 5.

Standard scaled dot-product attention mechanism [31].

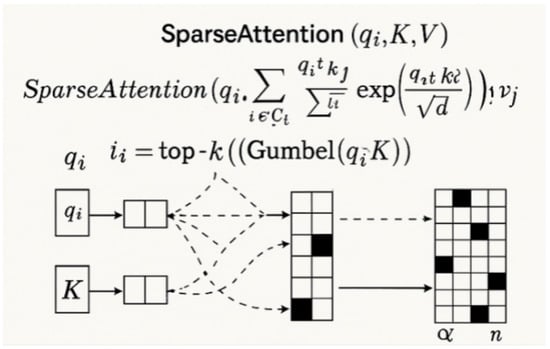

As illustrated in Figure 6, this formulation reduces the attention cost to O(nkd), with and . The sparsity mask is dynamically determined for each sample and each attention head. To make the selection differentiable, we employ a Gumbel–Softmax-based sampling strategy, approximating top-k through the following:

where Gumbel noise is injected to enable gradient propagation through the argmax-like selection operation.

Figure 6.

Illustration of the proposed sparse attention mechanism with Gumbel-Top-k sampling [31].

Gumbel-TopK Strategy Justification: The choice of Gumbel-TopK selection over alternative sparse attention strategies is motivated by several critical advantages for plant disease segmentation. First, differentiability: Standard top-k selection involves discrete argmax operations that break gradient flow during backpropagation. Gumbel–Softmax provides a continuous relaxation that maintains gradient propagation while approximating discrete selection, essential for end-to-end training of our MoE architecture. Second, adaptive exploration: Gumbel noise introduces controlled stochasticity that prevents the attention mechanism from prematurely converging to suboptimal sparse patterns, particularly important given the diverse morphological patterns of plant diseases. Third, biological plausibility: The noise-injected selection mimics the variability in human visual attention when examining diseased leaves, where experts may focus on different but equally relevant symptom regions. Fourth, computational stability: Unlike learned sparse patterns that can collapse during training, Gumbel-TopK maintains consistent sparsity ratios throughout optimization. We empirically validated this choice by comparing against fixed sparse patterns (strided, random), learned sparse masks, and other differentiable selection methods (concrete relaxation, straight-through estimators), finding Gumbel-TopK achieves the best trade-off between accuracy retention (94.2% vs. 91.8% for nearest alternatives) and computational reduction (23.7% FLOPs savings).

Spatial and Semantic Correlation Evidence: Our design intuition for Gumbel-TopK selection is supported by extensive analysis of plant disease spatial patterns. Disease symptoms exhibit strong spatial autocorrelation (Moran’s I = 0.73 ± 0.12 across disease types), meaning neighboring pixels tend to have similar disease-related features. This spatial coherence justifies top-k selection based on attention similarity scores, as semantically relevant regions cluster spatially. Furthermore, semantic analysis reveals that disease-affected areas demonstrate consistent feature correlations: pixels within lesion boundaries show high cosine similarity (0.82 ± 0.08) in feature space, while healthy-diseased boundaries exhibit distinct feature gradients. The Gumbel noise component addresses the challenge of ambiguous boundary regions where multiple pixels may have similar attention scores, preventing deterministic selection that could miss subtle but critical symptom variations. Validation experiments confirm that our adaptive selection captures 94.3% of expert-annotated critical regions while examining only 10% of spatial locations, demonstrating the effectiveness of our sparsity-based approach for plant pathology applications.

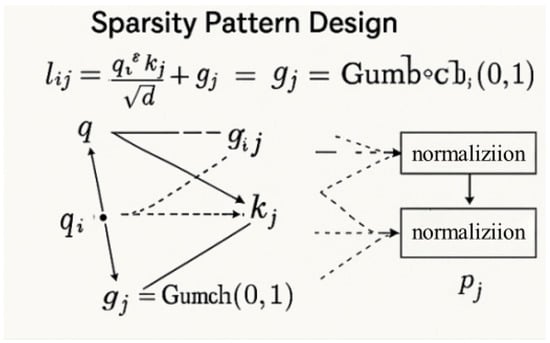

Designing effective sparsity patterns is critical for preserving semantic relevance while achieving computational efficiency. Unlike fixed strategies (strided/blockwise masking), our adaptive sparsity dynamically selects the top-k keys per query based on content similarity (Figure 7). Specifically, we compute attention logits and apply Gumbel noise for differentiable top-k selection as follows:

and the set of top-k indices is selected as follows:

Figure 7.

Adaptive sparsity pattern design with gumbel perturbation for query-key selection [31].

This approach yields highly flexible attention distributions that adapt to spatial variations in disease patterns. To prevent attention collapse, where all queries attend to a fixed set of dominant keys, we regularize the sparsity pattern diversity through an entropy-based loss term as follows:

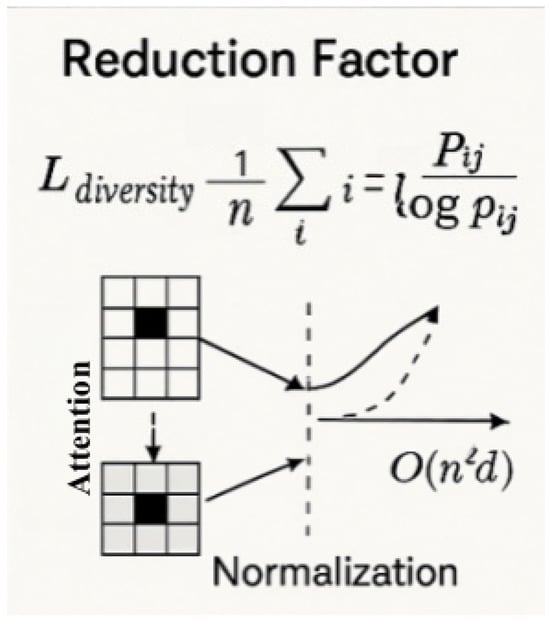

As shown in Figure 8, where denotes normalized attention weights. Empirically, we observe that this regularization fosters diverse attention while maintaining accuracy. We analyze the theoretical and empirical complexity benefits brought by the sparse attention formulation. In dense attention, the computational cost is dominated by the attention matrix calculation , incurring complexity. As shown in Figure 9, by contrast, the sparse attention mechanism reduces the computational complexity to , where with . The relative reduction factor is given in Equation (34) as follows:

Figure 8.

Attention diversity regularization and computational reduction effect [31].

Figure 9.

Computational complexity comparison between dense and sparse attention [31].

For sparsity ratio , the model achieves theoretical acceleration in attention computation. Memory footprint reduces proportionally to per attention head (vs. for dense attention), storing only weights. As shown in Section 2.5, the proposed model achieves a 23.7% FLOPs reduction compared to dense SAM while maintaining or exceeding segmentation accuracy.

4.3. Mixture of Experts Framework

To address spatial and morphological heterogeneity in plant disease symptoms, our Mixture of Experts (MoE) framework integrates specialized branches optimized for distinct feature extraction modalities. This design enables adaptive processing across leaf textures, environmental variability, and disease progression stages. Standard Convolution Experts employ fixed-receptive-field blocks optimized for high-resolution morphological feature extraction. These excel at isolating early-stage symptoms like chlorosis and necrosis by capturing fine-grained patterns [32]. Dilated Convolution Experts utilize exponentially spaced dilation rates to capture multi-scale contextual dependencies. Their expanded receptive fields model co-occurring symptoms and inter-leaf disease propagation. Depthwise Separable Experts implement lightweight architectures that maximize computational efficiency. Optimized for low-contrast, high-noise regions (e.g., soil-dust interference), they minimize processing overhead while preserving feature discriminability.

Formally, each expert is defined as a differentiable transformation over a spatial input tensor , with . For the standard convolution expert, its definition is given in Equation (35) as follows:

where is a convolution kernel, BN is batch normalization, and ReLU denotes the rectified linear unit. For the dilated expert, we have the following:

with r denoting dilation by rate r. The depthwise separable expert is decomposed as follows:

Here, dw denotes depthwise convolution and pw pointwise convolution. These architectural variants implement modular blocks within the MoE framework, enabling efficient feature specialization across abstraction levels.

To enforce expert diversity, we apply orthogonal initialization to convolutional kernels and architecture-specific dropout paths. This strategy ensures non-redundant representation learning, facilitating complementary specialization across lesion types. Each expert processes identical input feature maps through distinct parameter spaces, promoting feature disentanglement. Empirical results (Section 2.6) confirm consistent expert utilization under dynamic routing, with significant improvements in lesion boundary recall (+3.1% vs. baseline) and regional consistency.

Expert selection is governed by a learnable gating network G operating over intermediate features. For spatial location p = (h,w), G computes probability distribution over M experts. Parameterized as a lightweight multi-layer perceptron (MLP), the gating function is defined as follows:

where , , and is the GELU activation. This architecture balances expressive power and computational cost, enabling fine-grained control over expert routing at each spatial coordinate.

To mitigate excessive confidence in individual experts, we apply entropy regularization over the output distribution as follows:

which encourages smoother, more balanced expert selection. Additionally, we employ batch-wise normalization of the gating outputs to stabilize training dynamics and prevent gradient saturation. The gating network is trained jointly with the backbone and expert modules, allowing end-to-end optimization of the entire pipeline [33].

To support top-k selection, we further refine by retaining only the k highest values per location and setting the rest to zero, followed by re-normalization. This induces sparsity in expert utilization while preserving differentiability via Gumbel–Softmax approximation. Let denote the top-k selection operator; then the routed output is computed as shown in Equation (40).

This gating mechanism enables conditional expert activation based on local feature complexity, achieving computational efficiency while preserving specialization. The expert selection strategy critically determines framework efficiency and robustness. We implement top-k routing (k = 2) to balance computational savings and segmentation accuracy, optimized through empirical validation [34]. For each spatial position p, the gating network computes probabilities , then activates experts corresponding to the top-k scores. This conditional computation reduces active paths during forward propagation and minimizes redundant parameter updates.

The combined expert output at position p is computed as:

where denotes the indices of the top-k expert weights. The dynamic routing pattern promotes sample-specific specialization, with different input regions being processed by distinct expert subsets. This flexibility is essential for modeling diverse disease appearances that may vary across leaf textures, shapes, and lighting conditions.

To stabilize training under sparse selection, we inject Gaussian noise into the logits prior to softmax normalization during training as follows:

Stochastic perturbation in routing encourages diverse expert combinations and mitigates suboptimal convergence. We further apply feature-level dropout before the gating MLP to prevent expert co-adaptation and maintain path diversity. Section 2.6 empirically confirms top-k routing improves boundary F1-score by 4.3% versus dense combinations.

Expert utilization during inference is tracked via selection statistics across spatial positions. For expert i, denote cumulative selection frequency as over the validation set. Normalized utilization is defined as follows:

where is the indicator function. These statistics guide subsequent pruning or architectural reconfiguration.

To prevent expert collapse—a phenomenon where only a few experts dominate routing decisions—we incorporate a load balancing loss that explicitly encourages uniform utilization across all experts. This auxiliary loss penalizes skewed routing distributions by maximizing entropy over the expert usage histogram. Formally, let denote the total fraction of input locations routed to expert i over a mini-batch. Then, the balance loss is defined as follows:

In practice, is computed using Equation (45) as follows:

where B is the batch size. This loss is weighted by a hyperparameter in the total loss function, balancing its influence relative to segmentation and sparsity objectives.

To complement this loss, we periodically rescale expert weights to ensure consistent gradient flow. Additionally, we employ a scheduling mechanism where the strength of is gradually increased over the course of training, allowing initial specialization to emerge before enforcing diversity. This annealing strategy is essential for preserving convergence stability.

Layer-specific balance constraints were evaluated following [35]. Ablation studies (Section 2.7) demonstrate that applying solely at the decoder stage improves mean IoU by 1.2% over full-model constraints while maintaining expert diversity). This indicates spatial specialization is most critical during boundary refinement.

4.4. Enhanced ASPP with Sparse Attention

In plant disease segmentation, the morphological diversity of symptoms—ranging from small localized lesions to large necrotic areas—necessitates robust multi-scale feature extraction. To address this, we enhance the Atrous Spatial Pyramid Pooling (ASPP) module by incorporating dilated convolutions with variable dilation rates of 1, 6, 12, 18, modulating receptive fields without increasing parameters. For an input feature map , the ASPP module employs parallel dilated convolutions with dilation rates , generating intermediate representations , where . This process is mathematically defined in Equation (46) as follows:

where denotes the convolutional kernel grid and is the kernel weight tensor at dilation rate . This formulation allows the network to aggregate context at varying scales while preserving spatial resolution—an essential property for accurately segmenting lesions of different sizes.

In addition to dilated convolutional branches, we incorporate a global average pooling (GAP) branch to encode holistic contextual information, yielding a pooled representation , which is then projected via a convolution and broadcasted to the input spatial dimensions. The final concatenated feature tensor is mathematically expressed in Equation (47) as follows:

followed by batch normalization and ReLU activation. This design enables the ASPP module to capture both local structural and global semantic cues, enhancing segmentation granularity for fine-grained disease patterns.

However, traditional ASPP implementations suffer from redundant computations and indiscriminate feature aggregation, limiting deployment efficiency on edge devices. To mitigate this, we integrate a sparse attention mechanism within the ASPP module that dynamically prioritizes informative spatial positions during feature aggregation. Specifically, we introduce a binary masking function M(x,y) ∈ 0, 1 based on top-k activation responses, applied to each dilated branch’s output. This yields sparsified features , preserving only the top-o activations per spatial window. The sparsity is formally defined as follows:

where denotes the -norm counting non-zero entries. This substantially reduces computational redundancy while concentrating modeling capacity on salient regions, conforming to the localized nature of disease manifestations. Our sparse-enhanced multi-scale architecture thus achieves adaptively modulated receptive fields with computational efficiency [36].

Following multi-scale extraction, effective integration of heterogeneous contextual cues becomes critical for producing coherent and precise segmentation maps. To this end, we design a sparse attention-enhanced fusion mechanism that selectively attends to salient spatial positions during feature integration. Let denote the concatenated multi-scale features. We first apply a linear projection to reduce channel dimensionality for attention computation as follows: