Detection of Pine Wilt Disease in UAV Remote Sensing Images Based on SLMW-Net

Abstract

1. Introduction

- (a) A high-resolution dataset, ARen, is developed for trees infected by the pine wood nematode. It consists of 750 annotated UAV images for training and testing and covers a wide range of forest conditions, including bare soil, red broadleaf species, dead trees, and various background disturbances, so that complex environmental factors are well represented.

- (b) A Self-Learning Feature Extraction Module (SFEM) is proposed, consisting of a convolution block and a learnable normalization layer. The normalization sharpens the discriminative representation of pine wilt disease features while retaining the original local details of the input. The module extracts the characteristics of nematode-infected pine trees efficiently, even under complex ground conditions and significant vegetation interference.

- (c) A MicroFeature Attention Mechanism (MFAM) is introduced that combines Grouped Attention with a Gated Feed-Forward network. It addresses the limitations of traditional attention mechanisms in detecting very fine disease characteristics, improving both the capture of pine wilt disease features and the overall accuracy of the feature representation, and thereby the detection accuracy.

- (d) A Weighted and Linearly Scaled IoU Loss (WLIoU Loss) is designed to improve training, tailored specifically to pine trees infected with pine wilt disease. By regulating multiple factors, it mitigates class imbalance and the difficulty of detecting hard samples, and it strengthens the weighting of positive samples through stretching and truncation mechanisms, thereby addressing the issue of biased prediction boxes.
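
The "stretching and truncation" idea in contribution (d) can be illustrated with a minimal sketch. This is not the WLIoU formula, which this section does not give. It assumes a generic scheme in which an IoU value is stretched linearly from a chosen interval onto [0, 1], truncated outside that interval, and then turned into a weighted loss. The names `rescale_iou` and `scaled_iou_loss`, and the parameters `lower`, `upper`, and `weight`, are hypothetical.

```python
def rescale_iou(iou: float, lower: float = 0.0, upper: float = 0.95) -> float:
    """Stretch IoU linearly from [lower, upper] onto [0, 1], truncating outside.

    Assumption: a generic linear-scaling scheme used for illustration only,
    not the formula of the WLIoU Loss.
    """
    if not 0.0 <= lower < upper <= 1.0:
        raise ValueError("expected 0 <= lower < upper <= 1")
    stretched = (iou - lower) / (upper - lower)
    # Truncation: values below `lower` map to 0, values above `upper` map to 1.
    return min(1.0, max(0.0, stretched))


def scaled_iou_loss(iou: float, weight: float = 1.0,
                    lower: float = 0.0, upper: float = 0.95) -> float:
    """Weighted loss on the rescaled IoU; `weight` is a hypothetical per-sample weight."""
    return weight * (1.0 - rescale_iou(iou, lower, upper))


if __name__ == "__main__":
    for value in (0.2, 0.6, 0.95):
        print(value, scaled_iou_loss(value, weight=2.0, lower=0.3, upper=0.9))
```

With `lower=0.3` and `upper=0.9`, boxes below 0.3 IoU all receive the maximum loss and boxes above 0.9 receive none, so the gradient signal is concentrated on the samples in between. Where the interval should sit is a tuning choice that this sketch leaves open.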

2. Datasets and Methods

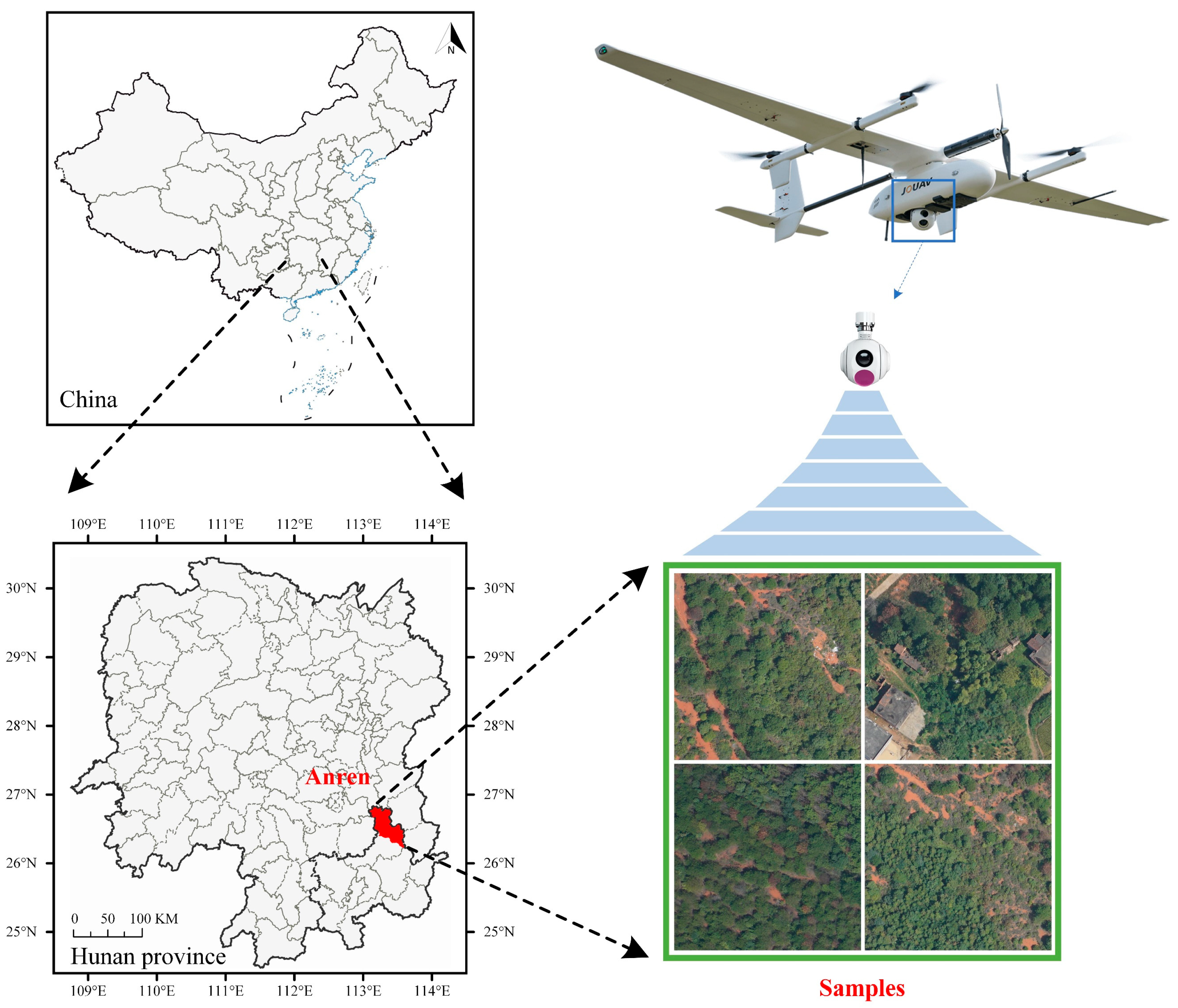

2.1. Data Acquisition

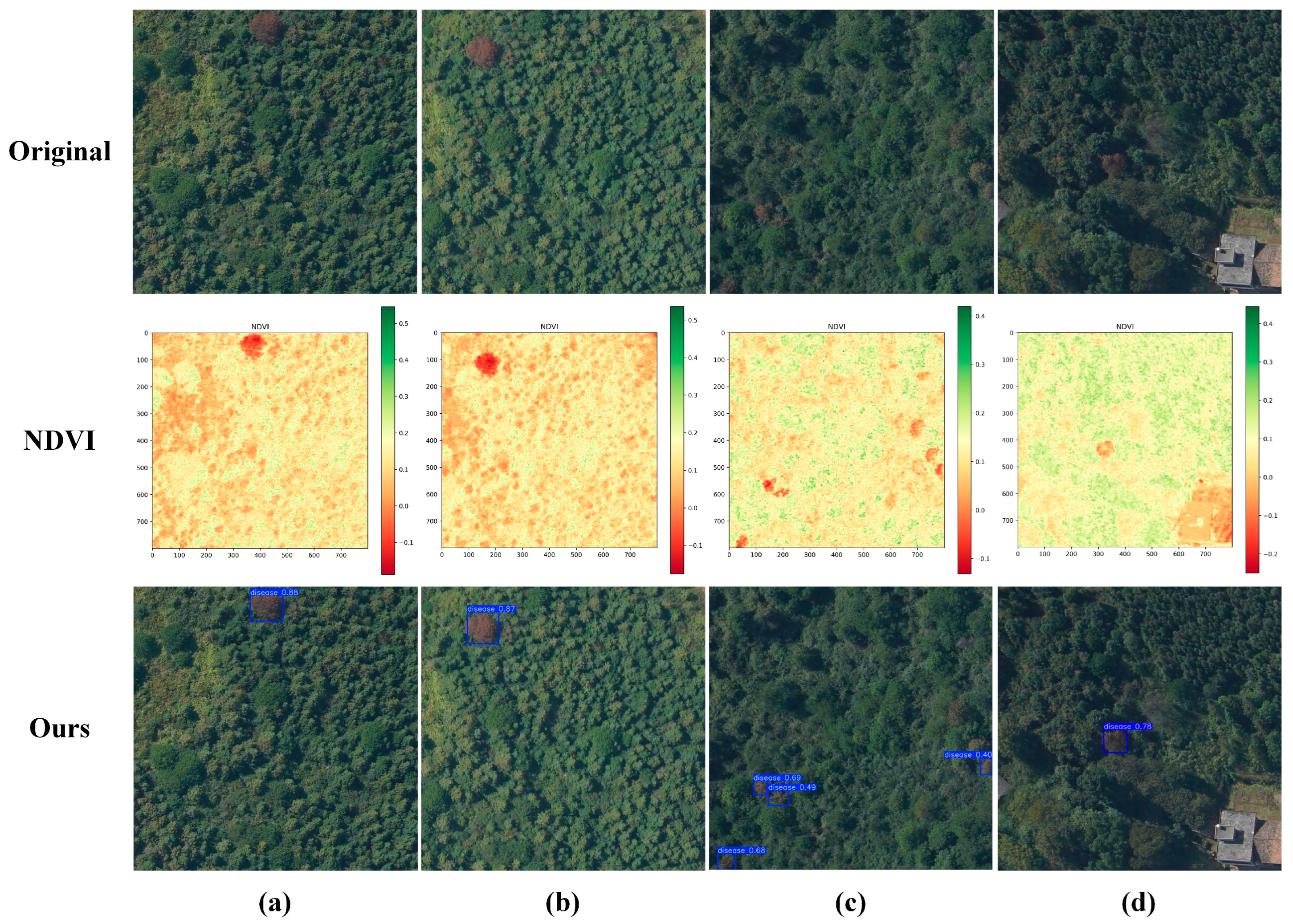

2.2. Data Processing

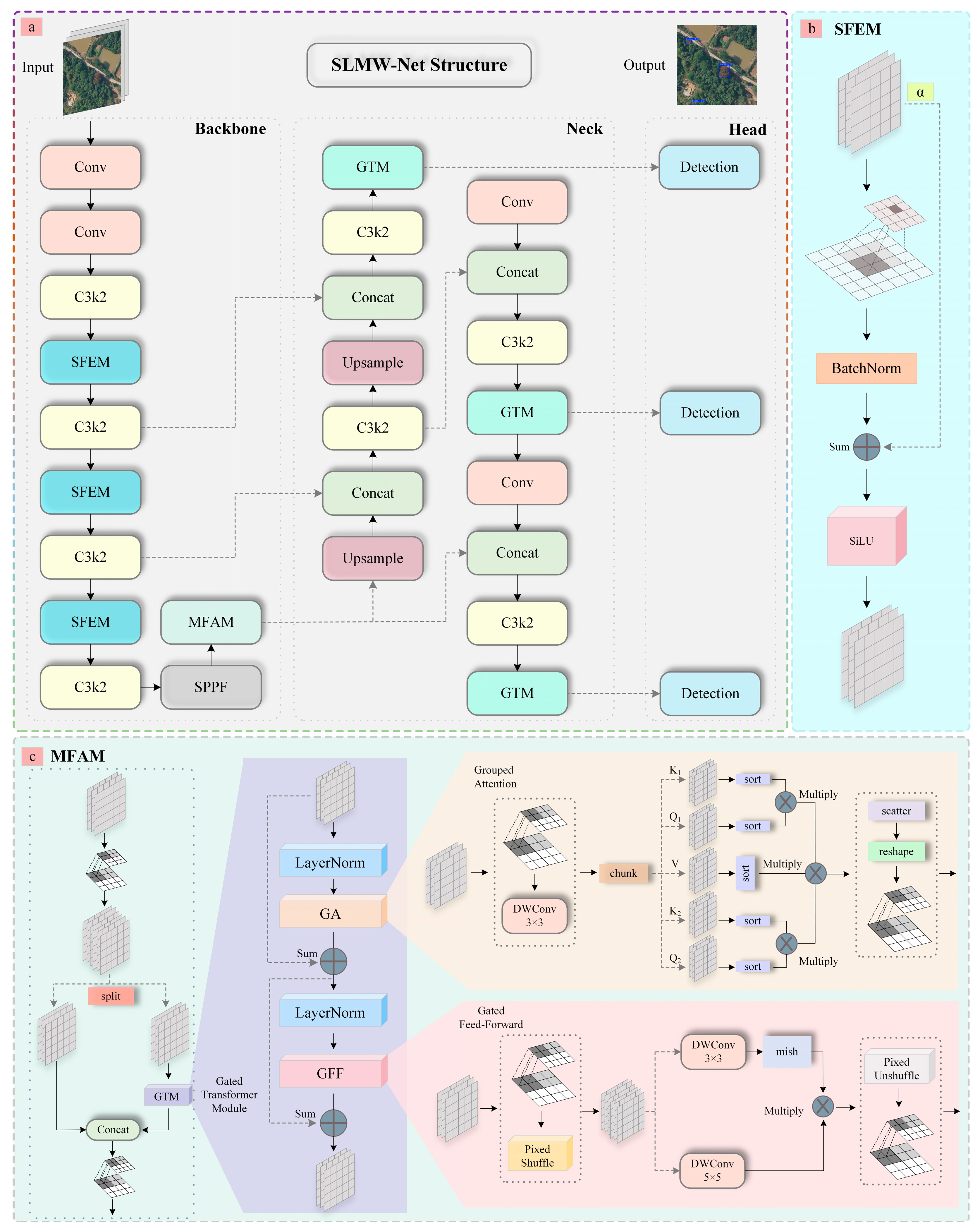

2.3. SLMW-Net

2.3.1. Self-Learning Feature Extraction Module (SFEM)

2.3.2. MicroFeature Attention Mechanism (MFAM)

2.3.3. Weighted and Linearly Scaled IoU Loss (WLIoU Loss)

3. Results

3.1. Experimental Environment and Training Details

3.2. Evaluation Indicators

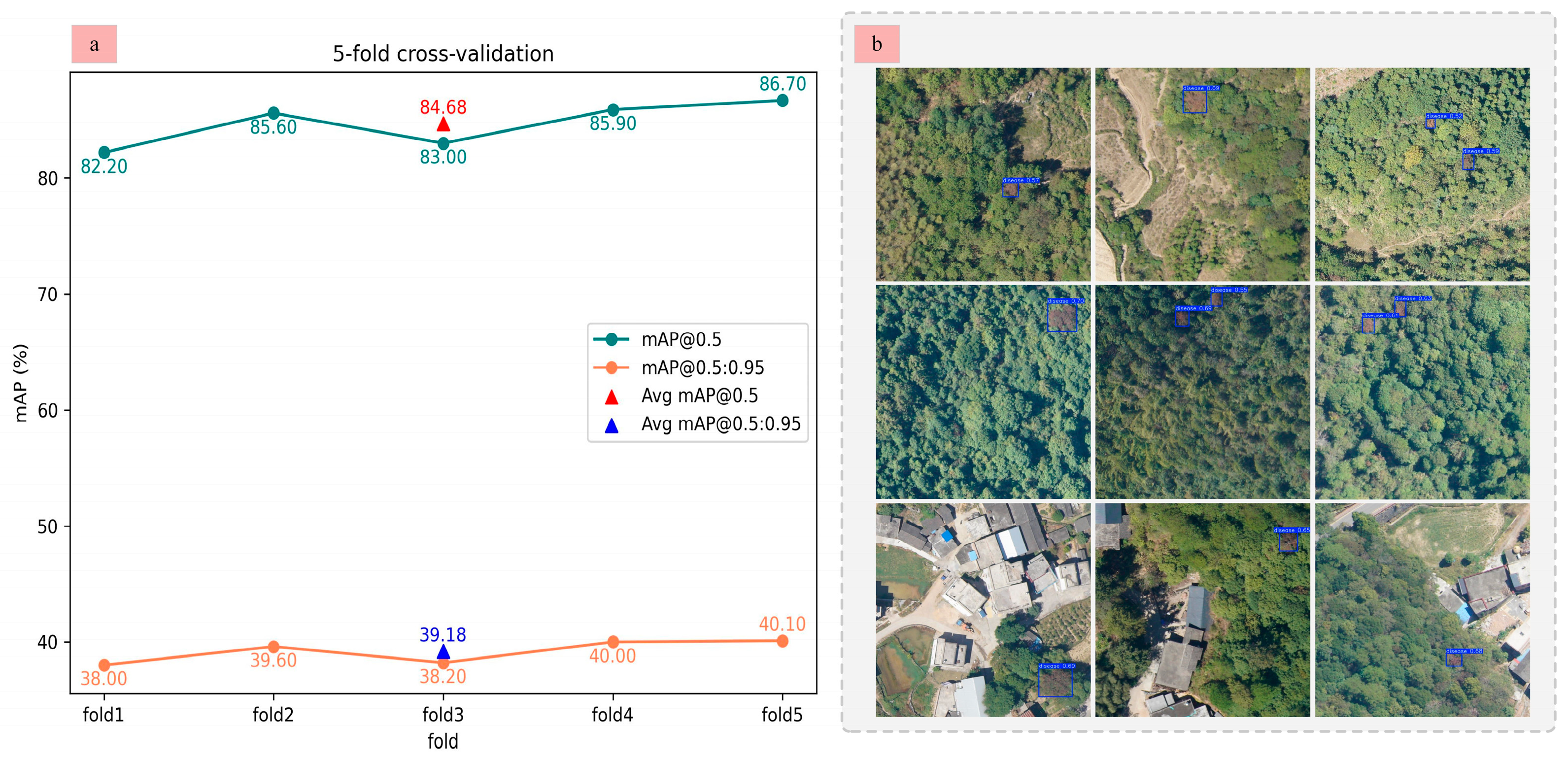

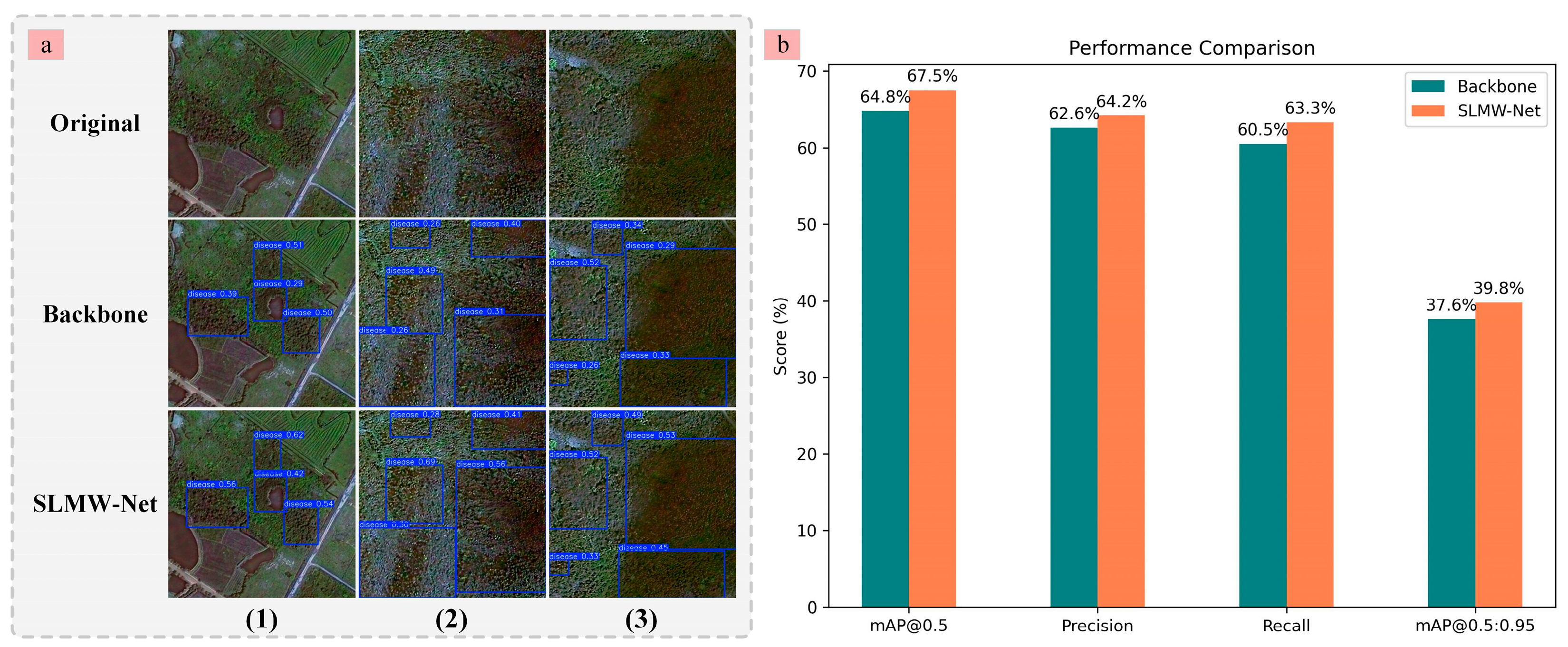

3.3. Model Performance Analysis

3.4. Module Effectiveness Experiment

3.4.1. Effectiveness of SFEM

3.4.2. Effectiveness of MFAM

3.4.3. Effectiveness of WLIoU Loss

3.5. Ablation Experiment

3.6. Comparison with State-of-the-Art Methods

3.6.1. SLMW-Net Performance on ARen Dataset

3.6.2. SLMW-Net Performance on Roboflow Dataset

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Category | Component | Specification |
|---|---|---|
| Hardware | CPU | AMD EPYC 9754 128-Core Processor |
| | RAM | 1 TB |
| | GPU | NVIDIA GeForce RTX 4090 D |
| Software | OS | Linux |
| | Python | 3.8.10 |
| | CUDA Toolkit | 11.8 |
| | cuDNN | 8.7 |
| | PyTorch | 2.0.0 |
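
When reproducing experiments, it helps to record the local environment in the same terms as the table above. The following is a minimal sketch, assuming PyTorch may or may not be installed. The function name `environment_report` is hypothetical, and the PyTorch attributes are queried only if the import succeeds.

```python
import platform


def environment_report() -> dict:
    """Collect OS, Python and (if available) PyTorch/CUDA/cuDNN/GPU information."""
    report = {
        "os": platform.system(),
        "python": platform.python_version(),
    }
    try:
        import torch  # optional dependency; the report degrades gracefully without it
    except ImportError:
        report["pytorch"] = None
        return report

    report["pytorch"] = torch.__version__
    report["cuda"] = torch.version.cuda              # None on CPU-only builds
    report["cudnn"] = torch.backends.cudnn.version()  # integer such as 8700, or None
    report["gpu"] = (
        torch.cuda.get_device_name(0) if torch.cuda.is_available() else None
    )
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```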

| Methods | mAP@0.5 (%) | Precision (%) | Recall (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|
| FFC | 84.2 | 77.3 | 77.7 | 38.5 |
| PyConv | 83.9 | 76.8 | 75.6 | 38.2 |
| MSCA | 82.6 | 75.5 | 73.0 | 37.6 |
| SFEM | 84.7 | 77.8 | 78.9 | 38.8 |

| Methods | mAP@0.5 (%) | Precision (%) | Recall (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|
| CBAM | 83.1 | 75.9 | 74.8 | 37.2 |
| SimAM | 83.6 | 77.8 | 76.2 | 37.8 |
| Star Blocks | 84.2 | 76.4 | 75.3 | 38.3 |
| MFAM | 84.9 | 78.9 | 76.3 | 38.9 |

| Methods | mAP@0.5 (%) | Precision (%) | Recall (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|
| CIoU | 83.6 | 77.3 | 78.7 | 37.6 |
| GIoU | 83.2 | 76.2 | 77.1 | 37.4 |
| WLIoU Loss | 84.6 | 78.6 | 78.9 | 38.8 |
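
For readers unfamiliar with the baselines in the table above, the sketch below computes plain IoU and Generalized IoU (GIoU) for two axis-aligned boxes, following the standard GIoU definition. It does not reproduce the WLIoU Loss, whose formula is not given in this section. The function name `iou_and_giou` and the `(x1, y1, x2, y2)` box convention are assumptions made for the example.

```python
def iou_and_giou(box_a, box_b):
    """Return (IoU, GIoU) for two boxes given as (x1, y1, x2, y2), x1 < x2, y1 < y2."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection rectangle; width/height clamp to zero when boxes do not overlap.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    iou = inter / union

    # Smallest axis-aligned box enclosing both inputs.
    enclose = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))

    # GIoU subtracts the fraction of the enclosing box not covered by the union.
    giou = iou - (enclose - union) / enclose
    return iou, giou


if __name__ == "__main__":
    print(iou_and_giou((0, 0, 2, 2), (1, 1, 3, 3)))  # partially overlapping boxes
    print(iou_and_giou((0, 0, 1, 1), (2, 0, 3, 1)))  # disjoint boxes: IoU is 0, GIoU < 0
```

The corresponding regression loss is `1 - giou`. Unlike `1 - iou`, it still varies with box position when the boxes do not overlap.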

| SFEM (A) | MFAM (B) | WLIoU Loss (C) | mAP@0.5 (%) | Precision (%) | Recall (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|---|
| no | no | no | 83.9 | 76.9 | 76.3 | 38.4 |
| yes | no | no | 84.7 | 77.8 | 78.9 | 38.8 |
| no | yes | no | 84.9 | 78.9 | 76.3 | 38.9 |
| no | no | yes | 84.6 | 78.6 | 78.9 | 38.8 |
| yes | yes | no | 85.2 | 78.7 | 78.2 | 39.6 |
| yes | no | yes | 85.4 | 79.2 | 79.4 | 39.5 |
| no | yes | yes | 85.5 | 80.4 | 78.8 | 39.1 |
| yes | yes | yes | 86.7 | 80.5 | 80.6 | 40.1 |
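
The ablation table is easier to read as gains over the baseline row. The sketch below copies the table values and subtracts the all-"no" row from every configuration. Each key is a triple of flags for the three module columns, read here as SFEM, MFAM, and WLIoU Loss. That reading is an assumption, based on the single-module rows matching the per-module comparison tables. The names `ABLATION`, `METRICS`, and `gains_over_baseline` are hypothetical.

```python
METRICS = ("mAP@0.5", "Precision", "Recall", "mAP@0.5:0.95")

# (SFEM, MFAM, WLIoU Loss) -> metric values in percent, copied from the table.
ABLATION = {
    (False, False, False): (83.9, 76.9, 76.3, 38.4),
    (True, False, False): (84.7, 77.8, 78.9, 38.8),
    (False, True, False): (84.9, 78.9, 76.3, 38.9),
    (False, False, True): (84.6, 78.6, 78.9, 38.8),
    (True, True, False): (85.2, 78.7, 78.2, 39.6),
    (True, False, True): (85.4, 79.2, 79.4, 39.5),
    (False, True, True): (85.5, 80.4, 78.8, 39.1),
    (True, True, True): (86.7, 80.5, 80.6, 40.1),
}


def gains_over_baseline(rows):
    """Percentage-point gain of every configuration over the no-module baseline."""
    base = rows[(False, False, False)]
    return {
        config: tuple(round(value - ref, 1) for value, ref in zip(values, base))
        for config, values in rows.items()
    }


if __name__ == "__main__":
    for config, gains in gains_over_baseline(ABLATION).items():
        label = "+".join(
            name for name, on in zip(("SFEM", "MFAM", "WLIoU"), config) if on
        ) or "baseline"
        print(f"{label:<18}", dict(zip(METRICS, gains)))
```

On mAP@0.5, the three single-module gains add up to 2.5 points, while the full model gains 2.8 points, so by this arithmetic the modules do not appear to be redundant with one another.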

| Methods | mAP@0.5 (%) | Precision (%) | Recall (%) | mAP@0.5:0.95 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| Faster R-CNN | 59.5 | 58.5 | 61.3 | 23.2 | 28.3 | 492.9 |
| YOLOv3 | 82.2 | 75.7 | 75.7 | 39.3 | 61.5 | 155.3 |
| YOLOv5s | 83.4 | 76.4 | 77.7 | 37.3 | 7.0 | 15.9 |
| YOLOv7 | 79.7 | 74.8 | 74.9 | 37.4 | 37.2 | 105.1 |
| YOLOv8n | 83.7 | 78.0 | 74.0 | 38.3 | 3.0 | 8.2 |
| YOLOv9m | 83.4 | 79.5 | 74.6 | 39.9 | 20.2 | 77.5 |
| YOLOv10n | 80.9 | 78.9 | 71.8 | 37.7 | 2.7 | 8.4 |
| SLMW-Net | 86.7 | 80.5 | 80.6 | 40.1 | 3.9 | 8.8 |
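
Precision and recall in the table above can be combined into a single F1 score per detector. F1 is not reported in the table. It is derived here with the standard harmonic-mean formula purely as a reading aid, and the names `RESULTS` and `f1_score` are hypothetical.

```python
# name -> (precision %, recall %), copied from the table above.
RESULTS = {
    "Faster R-CNN": (58.5, 61.3),
    "YOLOv3": (75.7, 75.7),
    "YOLOv5s": (76.4, 77.7),
    "YOLOv7": (74.8, 74.9),
    "YOLOv8n": (78.0, 74.0),
    "YOLOv9m": (79.5, 74.6),
    "YOLOv10n": (78.9, 71.8),
    "SLMW-Net": (80.5, 80.6),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same unit as the inputs)."""
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    ranked = sorted(RESULTS, key=lambda name: f1_score(*RESULTS[name]), reverse=True)
    for name in ranked:
        print(f"{name:<13} F1 = {f1_score(*RESULTS[name]):.2f}")
```

Because F1 penalizes a gap between precision and recall, detectors such as YOLOv10n (78.9 precision, 71.8 recall) rank lower than their precision alone would suggest.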

| Methods | mAP@0.5 (%) | Precision (%) | Recall (%) | mAP@0.5:0.95 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| Faster R-CNN | 71.0 | 54.4 | 73.8 | 31.2 | 28.3 | 492.9 |
| YOLOv3 | 81.6 | 78.0 | 75.9 | 37.4 | 61.5 | 155.3 |
| YOLOv5s | 83.7 | 79.4 | 75.5 | 38.4 | 7.0 | 15.9 |
| YOLOv7 | 84.6 | 78.9 | 79.8 | 38.0 | 37.2 | 105.1 |
| YOLOv8n | 85.1 | 80.6 | 78.2 | 39.3 | 3.0 | 8.2 |
| YOLOv9m | 84.0 | 77.7 | 77.1 | 39.4 | 20.2 | 77.5 |
| YOLOv10n | 82.3 | 77.4 | 75.2 | 38.2 | 2.7 | 8.4 |
| SLMW-Net | 85.3 | 81.3 | 80.7 | 40.4 | 3.9 | 8.8 |
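
A comparison table like the one above is often summarized as the margin over the strongest baseline on each metric, rather than over any single competitor. The sketch below does that for the four accuracy columns. The values are copied from the table, and the names `BASELINES`, `PROPOSED`, and `margin_over_best_baseline` are hypothetical.

```python
METRICS = ("mAP@0.5", "Precision", "Recall", "mAP@0.5:0.95")

# Accuracy columns (percent) for the baseline detectors in the table above.
BASELINES = {
    "Faster R-CNN": (71.0, 54.4, 73.8, 31.2),
    "YOLOv3": (81.6, 78.0, 75.9, 37.4),
    "YOLOv5s": (83.7, 79.4, 75.5, 38.4),
    "YOLOv7": (84.6, 78.9, 79.8, 38.0),
    "YOLOv8n": (85.1, 80.6, 78.2, 39.3),
    "YOLOv9m": (84.0, 77.7, 77.1, 39.4),
    "YOLOv10n": (82.3, 77.4, 75.2, 38.2),
}
PROPOSED = (85.3, 81.3, 80.7, 40.4)  # SLMW-Net row


def margin_over_best_baseline(proposed, baselines):
    """For each metric, return (best baseline name, margin in percentage points)."""
    summary = {}
    for index, metric in enumerate(METRICS):
        best = max(baselines, key=lambda name: baselines[name][index])
        summary[metric] = (best, round(proposed[index] - baselines[best][index], 1))
    return summary


if __name__ == "__main__":
    for metric, (name, margin) in margin_over_best_baseline(PROPOSED, BASELINES).items():
        print(f"{metric:<13} best baseline: {name:<9} margin: {margin:+.1f}")
```

The strongest baseline differs by metric (YOLOv8n, YOLOv7, and YOLOv9m each lead on at least one column), which is why a per-metric comparison is more informative here than a comparison against one fixed model.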

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yuan, X.; Zhou, G.; Yan, Y.; Yan, X. Detection of Pine Wilt Disease in UAV Remote Sensing Images Based on SLMW-Net. Plants 2025, 14, 2490. https://doi.org/10.3390/plants14162490