Research on Lettuce Canopy Image Processing Method Based on Hyperspectral Imaging Technology

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Design

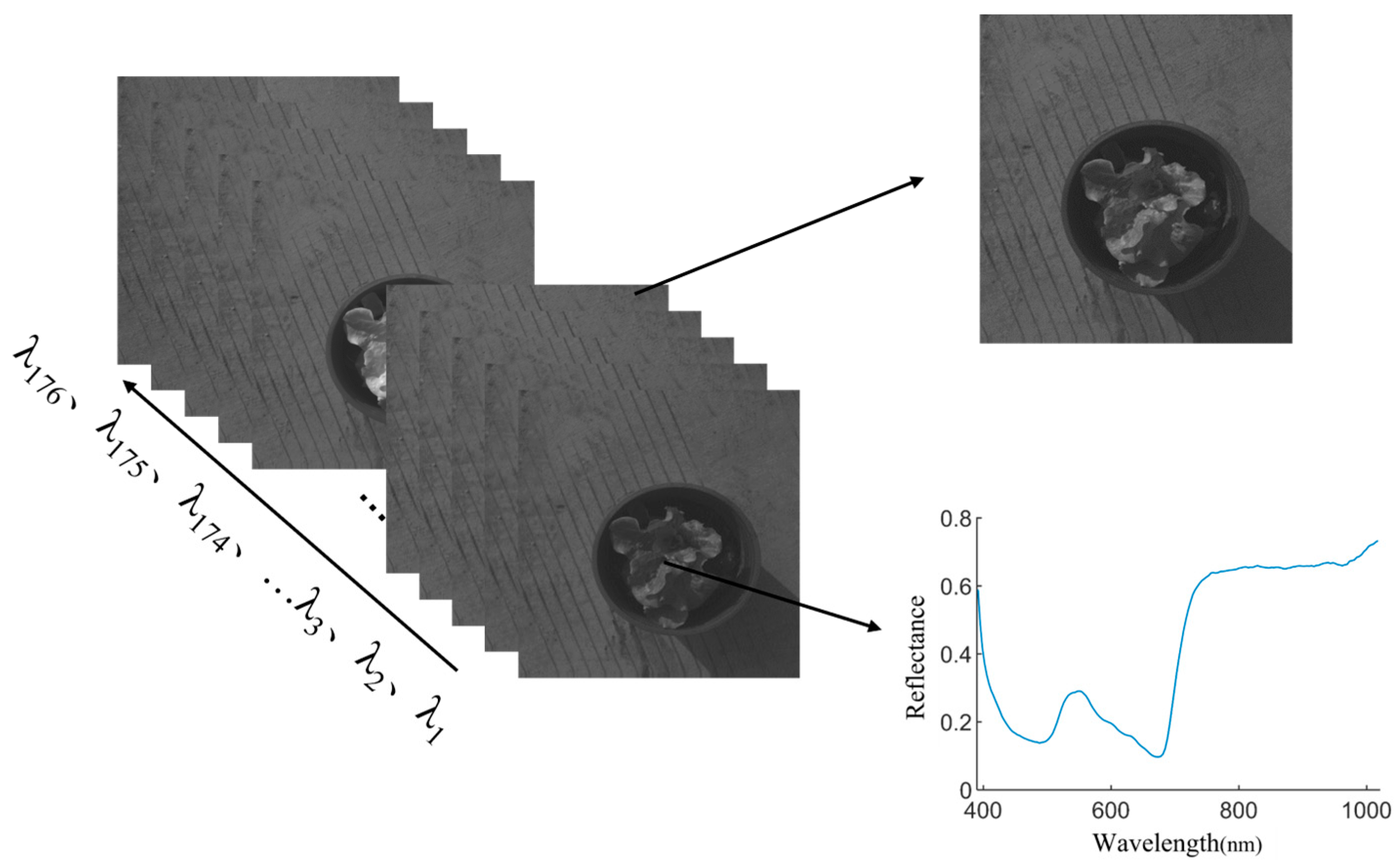

2.2. Hyperspectral Image Acquisition

2.3. Lettuce Canopy Region Segmentation Based on Feature Images

2.3.1. Image Enhancement

2.3.2. Image Fusion

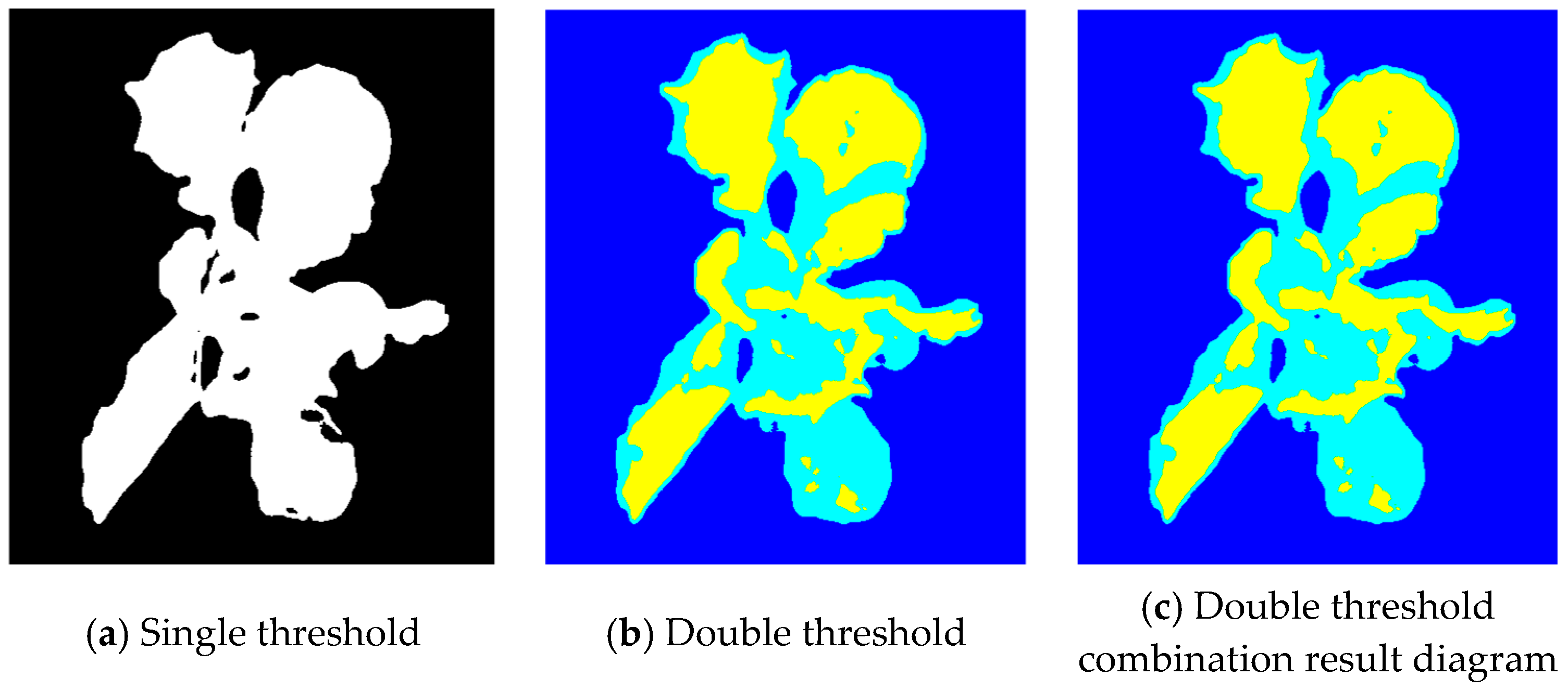

2.3.3. Image Segmentation

2.3.4. Segmentation Accuracy Evaluation Method

3. Results and Discussion

3.1. Selection of the Optimal Segmentation Wavelengths

3.2. Image Enhancement and Fusion

3.3. Lettuce Canopy Region Segmentation in Hyperspectral Images

4. Discussion

5. Conclusions

(1) Using the spectral ratio method, the wavelength pairs with the largest differences between lettuce leaves and each of the three background types were 553.8 nm and 731.3 nm, 550.3 nm and 742.1 nm, and 553.8 nm and 702.5 nm, respectively. Wavelengths with similar characteristics were then removed on the basis of band correlation, leaving 553.8 nm, 702.5 nm and 731.3 nm as the characteristic wavelengths with the largest difference between the background and leaf spectra.

(2) The characteristic wavelength images were smoothed with a median filter to remove local noise, and the filtered images were then fused using the band algorithm. In the fused image, the average gray levels of the three backgrounds were 0.0681, 0.0890 and 0.0701, whereas those of normal and shadowed leaves were 0.3983 and 0.2135, respectively. This clear gray-level separation between background and leaves improved the accuracy of the subsequent segmentation. For comparison with this fusion method, the three characteristic wavelength images were also processed by principal component analysis (PCA).

(3) The three characteristic wavelength images, the fused image and the first principal component (PC1) image obtained by PCA were segmented with single- and double-threshold methods, and the results were evaluated by the area overlap measure (AOM) and the misclassification error (ME). Only for the 553.8 nm image did single-threshold segmentation give a higher AOM and a lower ME than double-threshold segmentation; for all other images, double-threshold segmentation performed better. The PC1 image gave the lowest AOM and the highest ME under single-threshold segmentation, and its segmentation results were poor overall. Double-threshold segmentation of the multi-wavelength fusion image gave the best results, with average AOM and ME values of 0.9526 and 0.0477 and corresponding variances of 0.0111 and 0.0110, indicating accurate segmentation of lettuce canopy images.
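The two-step wavelength selection in (1) can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the spectral ratio is taken as mean leaf reflectance divided by mean background reflectance, candidate wavelengths are the highest local maxima of that ratio, and the band-correlation step is read as greedily discarding any candidate whose image has a Pearson |r| of at least a hypothetical threshold of 0.95 with a band already kept. The names `ratio_peaks`, `drop_correlated` and `pearson` are illustrative.

```python
def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def ratio_peaks(wavelengths, leaf, background, k=2):
    """Return the k wavelengths at the highest interior local maxima of
    the leaf/background reflectance ratio (assumed form of the spectral ratio)."""
    ratio = [l / b for l, b in zip(leaf, background)]
    peaks = [i for i in range(1, len(ratio) - 1)
             if ratio[i] > ratio[i - 1] and ratio[i] >= ratio[i + 1]]
    peaks.sort(key=lambda i: ratio[i], reverse=True)
    return [wavelengths[i] for i in peaks[:k]]


def drop_correlated(candidates, band_pixels, threshold=0.95):
    """Greedily keep candidate wavelengths whose images are not strongly
    correlated with any band already kept (hypothetical threshold).

    band_pixels maps each wavelength to a flat list of pixel values.
    """
    kept = []
    for wl in candidates:
        if wl in kept:  # the same wavelength selected for several backgrounds
            continue
        if all(abs(pearson(band_pixels[wl], band_pixels[k])) < threshold
               for k in kept):
            kept.append(wl)
    return kept
```

For example, if the 550.3 nm and 742.1 nm images are nearly linear copies of the 553.8 nm and 731.3 nm images, and the 702.5 nm image is not strongly correlated with either, passing the candidates 553.8, 731.3, 550.3, 742.1, 553.8 and 702.5 nm keeps 553.8, 731.3 and 702.5 nm. The greedy result depends on the candidate order and on the threshold.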
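The median smoothing and the PCA comparison in (2) can be illustrated with the pure-Python sketch below. It assumes a 3 × 3 median window with replicated borders (the window size is not stated in this excerpt) and obtains PC1 by power iteration on the band covariance matrix. The fusion formula of the band algorithm is not given here, so it is deliberately left out; `median_filter3` and `pc1_scores` are hypothetical names.

```python
from statistics import median


def median_filter3(img):
    """3 x 3 median filter on a 2-D list; borders use clamped (replicated) indices."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [img[min(max(r + dr, 0), h - 1)][min(max(c + dc, 0), w - 1)]
                      for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
            row.append(median(window))
        out.append(row)
    return out


def pc1_scores(bands, iters=100):
    """Project each pixel's band vector onto the first principal component.

    bands is a list of flattened band images (one list of pixel values per band).
    Power iteration starts from an all-ones vector, which assumes that vector is
    not orthogonal to the leading eigenvector. The sign of PC1 is arbitrary.
    """
    n_bands, n = len(bands), len(bands[0])
    means = [sum(b) / n for b in bands]
    centered = [[v - m for v in b] for b, m in zip(bands, means)]
    cov = [[sum(x * y for x, y in zip(centered[i], centered[j])) / (n - 1)
            for j in range(n_bands)] for i in range(n_bands)]
    vec = [1.0] * n_bands
    for _ in range(iters):
        nxt = [sum(cov[i][j] * vec[j] for j in range(n_bands)) for i in range(n_bands)]
        norm = sum(v * v for v in nxt) ** 0.5
        vec = [v / norm for v in nxt]
    return [sum(vec[k] * centered[k][p] for k in range(n_bands)) for p in range(n)]
```

A single bright noise pixel surrounded by background is replaced by the background value, which is the kind of local noise the median step targets. In practice a library routine such as SciPy's median filter or scikit-learn's PCA would replace these loops.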
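This excerpt does not define the single- and double-threshold rules, so the sketch below is an assumption. Single-threshold segmentation labels a pixel as leaf when its gray value exceeds `t`. Double-threshold segmentation is implemented here as hysteresis thresholding (the scheme used in the Canny detector): pixels at or above `t_high` seed the mask, and pixels at or above `t_low` are added only when 4-connected to a seed. This lets dimmer shadowed-leaf pixels join bright leaf regions while isolated dim pixels stay background. All threshold values are hypothetical.

```python
from collections import deque


def single_threshold(img, t):
    """Leaf mask: True where the gray value exceeds t."""
    return [[v > t for v in row] for row in img]


def double_threshold(img, t_low, t_high):
    """Hysteresis thresholding: strong pixels (>= t_high) seed the mask and
    weak pixels (>= t_low) are kept only if 4-connected to a seed."""
    h, w = len(img), len(img[0])
    mask = [[False] * w for _ in range(h)]
    queue = deque()
    for r in range(h):
        for c in range(w):
            if img[r][c] >= t_high:
                mask[r][c] = True
                queue.append((r, c))
    # Breadth-first growth from the strong seeds into connected weak pixels.
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w and not mask[nr][nc]
                    and img[nr][nc] >= t_low):
                mask[nr][nc] = True
                queue.append((nr, nc))
    return mask
```

With gray levels close to the reported fused values (background about 0.07, shadowed leaf about 0.21, normal leaf about 0.40) and hypothetical thresholds `t_low=0.15`, `t_high=0.30`, a shadowed pixel touching a normal-leaf pixel is kept, while an isolated shadow-level pixel is rejected; a single threshold cannot make that distinction.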

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Segmentation Object | Segmentation Method | AOM Maximum | AOM Minimum | AOM Average | AOM Variance | ME Maximum | ME Minimum | ME Average | ME Variance |
|---|---|---|---|---|---|---|---|---|---|
| 553.8 nm Image | Single Threshold | 0.9250 | 0.8500 | 0.8875 | 0.0229 | 0.1501 | 0.0777 | 0.1132 | 0.0230 |
| 553.8 nm Image | Double Threshold | 0.9513 | 0.6375 | 0.8753 | 0.0892 | 0.5658 | 0.0492 | 0.1529 | 0.1447 |
| 702.5 nm Image | Single Threshold | 0.9278 | 0.8653 | 0.9043 | 0.0210 | 0.1347 | 0.0723 | 0.0961 | 0.0208 |
| 702.5 nm Image | Double Threshold | 0.9658 | 0.7119 | 0.9304 | 0.0695 | 0.4023 | 0.0346 | 0.0798 | 0.1021 |
| 731.3 nm Image | Single Threshold | 0.9368 | 0.8315 | 0.8962 | 0.0320 | 0.1685 | 0.0633 | 0.1038 | 0.0320 |
| 731.3 nm Image | Double Threshold | 0.9668 | 0.9187 | 0.9464 | 0.0159 | 0.0813 | 0.0336 | 0.0538 | 0.0158 |
| Fusion Image | Single Threshold | 0.9392 | 0.8456 | 0.9032 | 0.0277 | 0.154 | 0.069 | 0.0970 | 0.0276 |
| Fusion Image | Double Threshold | 0.9687 | 0.9322 | 0.9526 | 0.0111 | 0.067 | 0.031 | 0.0477 | 0.0110 |
| PC1 Image | Single Threshold | 0.8950 | 0.7439 | 0.8209 | 0.0442 | 0.256 | 0.105 | 0.1792 | 0.0442 |
| PC1 Image | Double Threshold | 0.9502 | 0.8466 | 0.8954 | 0.0317 | 0.153 | 0.049 | 0.1047 | 0.0316 |
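The AOM and ME values in the table compare each segmented mask with a reference mask. Their exact formulas are not given in this excerpt; the sketch below assumes common definitions, with AOM as the intersection over union of predicted and reference leaf pixels and ME as the fraction of all pixels assigned to the wrong class (the misclassification error described by Sezgin and Sankur). Masks are 2-D lists of booleans, and the function names are illustrative.

```python
def aom(pred, ref):
    """Area overlap measure, assumed here to be |P ∩ R| / |P ∪ R| over leaf pixels."""
    inter = union = 0
    for prow, rrow in zip(pred, ref):
        for p, r in zip(prow, rrow):
            inter += bool(p and r)
            union += bool(p or r)
    # Both masks empty: treat as perfect agreement (a convention chosen here).
    return inter / union if union else 1.0


def misclassification_error(pred, ref):
    """Fraction of all pixels whose leaf/background label differs from the reference."""
    total = wrong = 0
    for prow, rrow in zip(pred, ref):
        for p, r in zip(prow, rrow):
            total += 1
            wrong += bool(p) != bool(r)
    return wrong / total
```

Under these definitions a higher AOM and a lower ME both indicate better agreement with the reference, which matches how the two measures are read in the conclusions. Per-image scores can then be summarized by their maximum, minimum, average and spread, as in the table columns.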

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, C.; Jiang, Y.; Zhu, X. Research on Lettuce Canopy Image Processing Method Based on Hyperspectral Imaging Technology. Plants 2024, 13, 3403. https://doi.org/10.3390/plants13233403
