1. Monitoring Urban Environments through Satellite Data

Considering the extreme impact of urban areas on the surrounding environments in terms of mass, energy, and resource fluxes [

1], combined with the overall growth of the world’s population, the constant monitoring of urban environments is the key for a sustainable urban development. In this regard, the 2030 Agenda for the Sustainable Development established 17 Sustainable Development Goals (SDGs) [

2,

3,

4], among which Goal 11—Sustainable Cities and Communities—aims at tackling the main societal challenges associated with urban growth [

5,

6,

7] .

In this regard, the role of Earth Observation and space technology is recognized by the United Nations as a significant support for the achievement of the SDGs [

8]. As such, remotely sensed data constitute a significant resource for mapping anthropized areas [

9,

10,

11,

12]. In fact, the built environment can be identified and studied with different sensors, by analyzing its reflectance, thermal and microwave properties, and night light [

13].

The most common approaches for urban monitoring applications involve the use of imagery data originated from multispectral sensors (from the visible to the near and short-wave infrared wavelengths). Such approaches include, for example, pixel- and object-based classifications [

14,

15,

16,

17,

18,

19,

20,

21] and the use of spectral indices [

12,

22,

23,

24,

25,

26]. On the other hand, some authors have used the imagery data of SAR (synthetic-aperture radar) satellite systems, by considering the microwave properties of urban environments [

27,

28,

29,

30,

31,

32,

33], whereas some others have explored the thermal infrared properties of urban areas (and land surface temperature) [

34,

35], and nighttime light satellite images [

36].

Nonetheless, the accurate mapping of urban areas still remains challenging, due to limitations in the spatial, spectral, and temporal resolution of remote sensing systems that often fail to obtain an univocal response [

13]. An additional challenge is represented by the wide heterogeneity of anthropic structures in terms of size, shape, material composition, and fragmentation which might result in strong spectral similarities with other land cover surfaces, such as bare soil and non-vegetated areas [

13,

14,

37].

The general contest of this work focuses on urban and land surface monitoring from the point of view of local governments and public administrations, such as the Veneto Region. Within the latter, many different land cover and land use maps are currently being produced. These maps are produced within multi-annual time intervals, and for different purposes and objectives, thus not always constantly updated. Furthermore, they often rely on photogrammetric surveys related to specific case studies limited to restricted target areas. On the other hand, the use of satellite data can provide constantly updated information and overcome any spatial restriction.

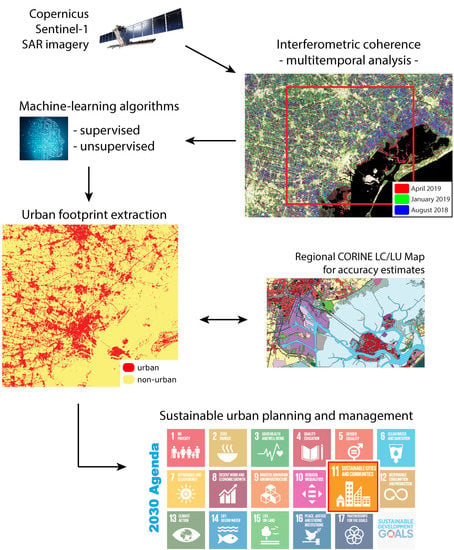

In order to guarantee the reusability of our approach on larger spatial and time scales, we adopted Open Data policies that involve the use of non-commercial satellite data. More specifically, we used imagery data provided by the Copernicus satellites, and in particular focusing on Sentinel-1 SAR (synthetic-aperture radar) imagery. This satellite system provides free data with global coverage at a weekly revisit time [

38,

39,

40].

Unlike optical sensors, SAR systems can provide imagery data without being affected by cloud-cover, seasonality, and daytime variations, and can identify urban structures, as well. In fact, vertical structures such as buildings and other anthropic constructions act as corner reflectors, generating a particularly strong radar-echo signal (backscattered) that allows their easy identification. The sole exceptions are very large roads, central squares, and wide runways (larger than 40–50 m, considering we adopted an average Sentinel-1 geometric resolution of 15 m—see

Section 2.3), which are likely to act as mirror-like reflectors. Due to the low frequency of such wide infrastructures in our study area and considering the scale of our analysis (see

Section 2), we included them within the urban environment. On the contrary, smooth surfaces such as water bodies and rough irregular surfaces such as vegetated areas, respectively, act as mirror-like and diffuse reflectors, resulting in null or low backscattered intensity [

41].

Therefore, due to their “double-bounce” effect, urban structures appear brighter in SAR images than all other surface types [

13,

41]. Moreover, the information provided by interferometric coherence allows better identification of urban areas, significantly reducing the number of outliers and anomalous values, with respect to the single backscattered images. Indeed, urban structures usually display high coherence due to their regular geometric properties and relatively constant physical parameters over time.

We focus on the analysis of an automatic and semi-automatic process to provide the extraction of urban features over non-urban areas, taking into account the use of machine-learning algorithms. In this work, we refer to “urban footprint” as the percentage of pixels classified as “urban” over the total number of pixels on a specified study area. In order to perform reliable accuracy assessments, we compared such results—obtained using 2018–2019 Sentinel data—with the land cover map of the Veneto Region, produced in 2015, as the most detailed and updated source of information for urban environment in our study area.

Accordingly, our goal is to test a methodology for local governments and public administrations that provides accurate and constantly updated information of urban footprint all over the Veneto Region, which is essential for strategic urban planning, resource management, and decision-making processes. This methodology may be a useful tool to support the production of geographic indicators able to address the targets of Goal 11 in the 2030 Agenda [

6,

7], by feeding and updating the Sustainable Development (SD) indicators associated with such targets [

5,

6,

7]. In particular, this research intends to prepare a data workflow to help feed the SD indicators 11.3.1 “Ratio of land consumption rate to population growth rate” and 11.7.1 “Average share of the built-up area of cities that is open space for public use for all, by sex, age and persons with disabilities”. These indicators are included in targets 11.3 and 11.7, which aim, in a more general context, at enhancing sustainable urbanization through sustainable urban planning and management, and providing universal access to safe, inclusive, and accessible, green and public spaces [

2].

Furthermore, local authorities and public administration constantly update and feed indicators for strategic land-monitoring and sustainable urban development [

5,

6,

7], at both regional and national scales. In the case of Veneto Region in Italy, the constant update of such information is useful to feed not only regional SD indicators [

42], but also the national statistical set [

43,

44], and the European monitoring framework.

2. Materials and Methods

2.1. RUS Copernicus: A New Frontier for Satellite Imagery Analysis

The ever-increasing availability of satellite imagery along with its growing complexity generates the need of a powerful computational environment able to analyze these data. In this regard, a consortium led by CS Group (Communication and Systèmes) developed the Research and User Support (RUS) Service, in response to a call made in 2016 by the European Commission [

45]. It aims at developing an online free-access platform to promote the uptake of Copernicus data and support its applications. This service is available upon registration and under request to all citizens within the European Union (both public and private sector organizations and entities) [

46].

During the development of this work, we requested and obtained five Level-A Virtual Machines with powerful computing environment (four CPU cores and 16 Gb RAM each), a set of open-source GIS software (such as ESA’s SNAP and QGIS), and shared cloud memory, allowing simultaneous processing and analysis of the same type of data. These VMs were fundamental to effectively run all the processes used in this work.

2.2. Sentinel-1 SAR Imagery

Sentinel-1 mission consists of two polar-orbiting satellites, carrying a single C-band synthetic-aperture radar (SAR) instrument operating in four exclusive acquisition modes, with different resolution (down to 5 m) and coverage (up to 400 km) [

38,

39,

40].

In particular, in this work we made use of the Interferometric Wide swath (IW) mode products at Level-1 Single Look Complex (SLC). The IW swath mode acquires data with a 250 km swath at 5 × 20 m spatial resolution (for SLC products). The wide-swath coverage is achieved by employing the Terrain Observation by Progressive Scans (TOPS) acquisition mode, which acquires images by recording different subsets of echoes of the SAR aperture, which are called “bursts” [

47]. Sentinel-1 IW SLC products contain a total of three (single polarization) or six (dual polarization) sub-swath images. For example, the images we examined consist of three sub-swaths (named IW1, IW2, and IW3), each including both polarization (VV and VH). Furthermore, each sub-swath image consists of a series of bursts.

Within this work, we perform multi-temporal analysis, collecting a series of images from July 2018 to April 2019 that are displayed in

Table 1. In particular, in order to achieve interferometry with exact repeated coverage, we took into account only images derived from the same satellite sensor (Sentinel-1A), therefore within a bi-weekly revisit time.

Due to the low rate of urbanization in recent years in Italy (in terms of increase of built-up structures), the changes in urban footprint observed within the last couple of years can be neglected if considering the spatial resolution of the Sentinel-1 SAR sensors. Therefore, we can consider urban footprint as a constant value for all the Sentinel-1 images acquired within a single-year time frame.

All Sentinel mission data we used are available at the ESA Copernicus Open Access Hub [

48].

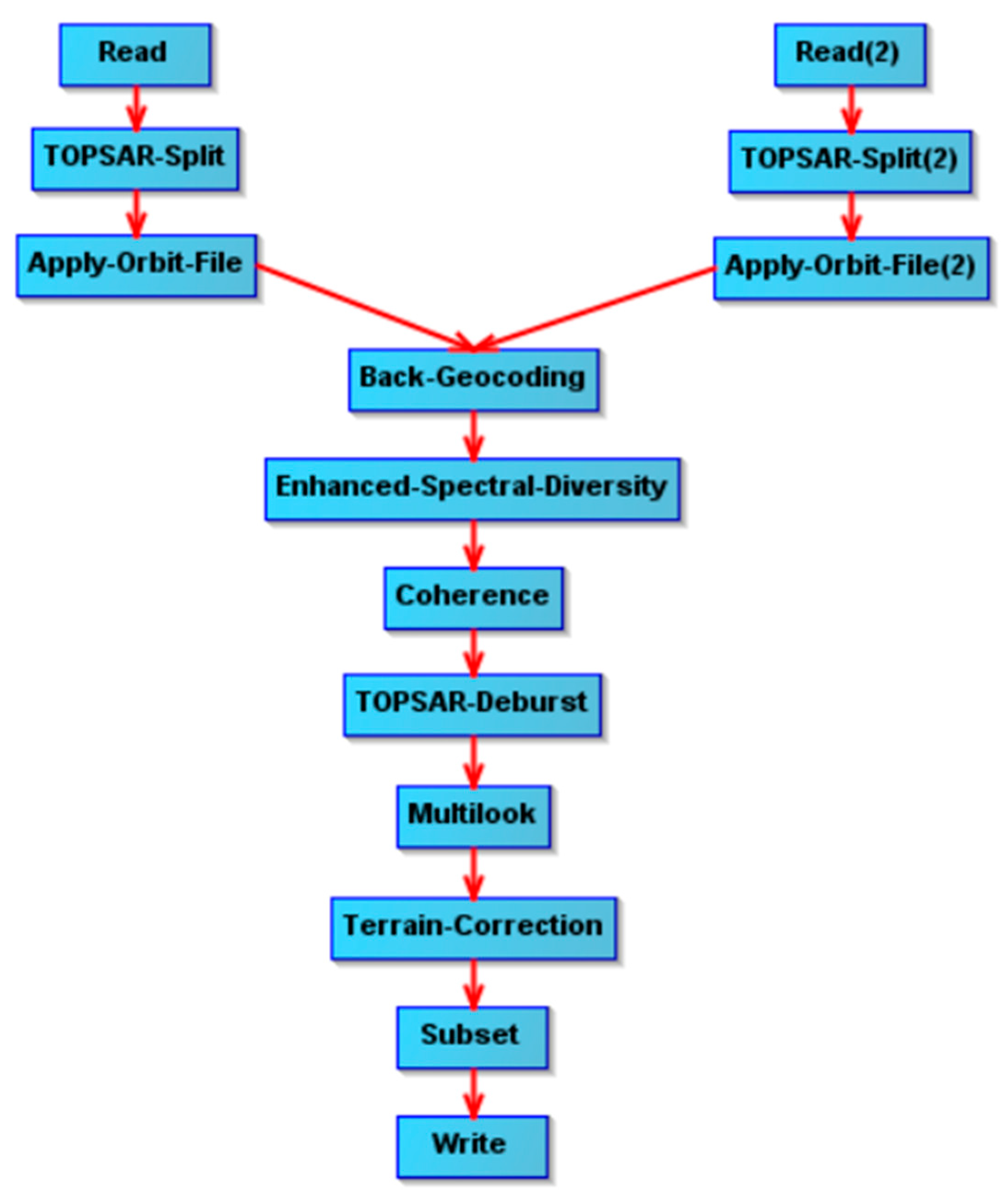

2.3. Preprocessing Interferometric Coherence

The use and interpretation of SAR imagery require a series of complex pre-processing procedures, which we ran on ESA’s SNAP software within the RUS environment. Such procedures refer to standard preprocessing commonly applied to Sentinel-1 products to derive interferometric coherence [

49]. In particular, we followed the approach described by RUS guidelines available in [

50,

51], which we summarize in

Figure 1.

On all SAR input images we took into account both polarizations (VH and VV), so that the output coherence image is composed by two different raster files, related indeed to the different polarizations. As for the processing workflow, we identified one of the three sub-swaths from the original product, subsequently selecting only those bursts that cover our study areas. Furthermore, we computed the coherence estimation setting the range window size to the default value of 20 pixels. Lastly, for the terrain correction we used the Range-Doppler terrain correction, which involves the use of a digital elevation mode (DTM) automatically downloaded from the Sentinel-1 repository, choosing WGS84-UTM 32N as projected coordinate system and selecting an average output resolution of ≈15 m.

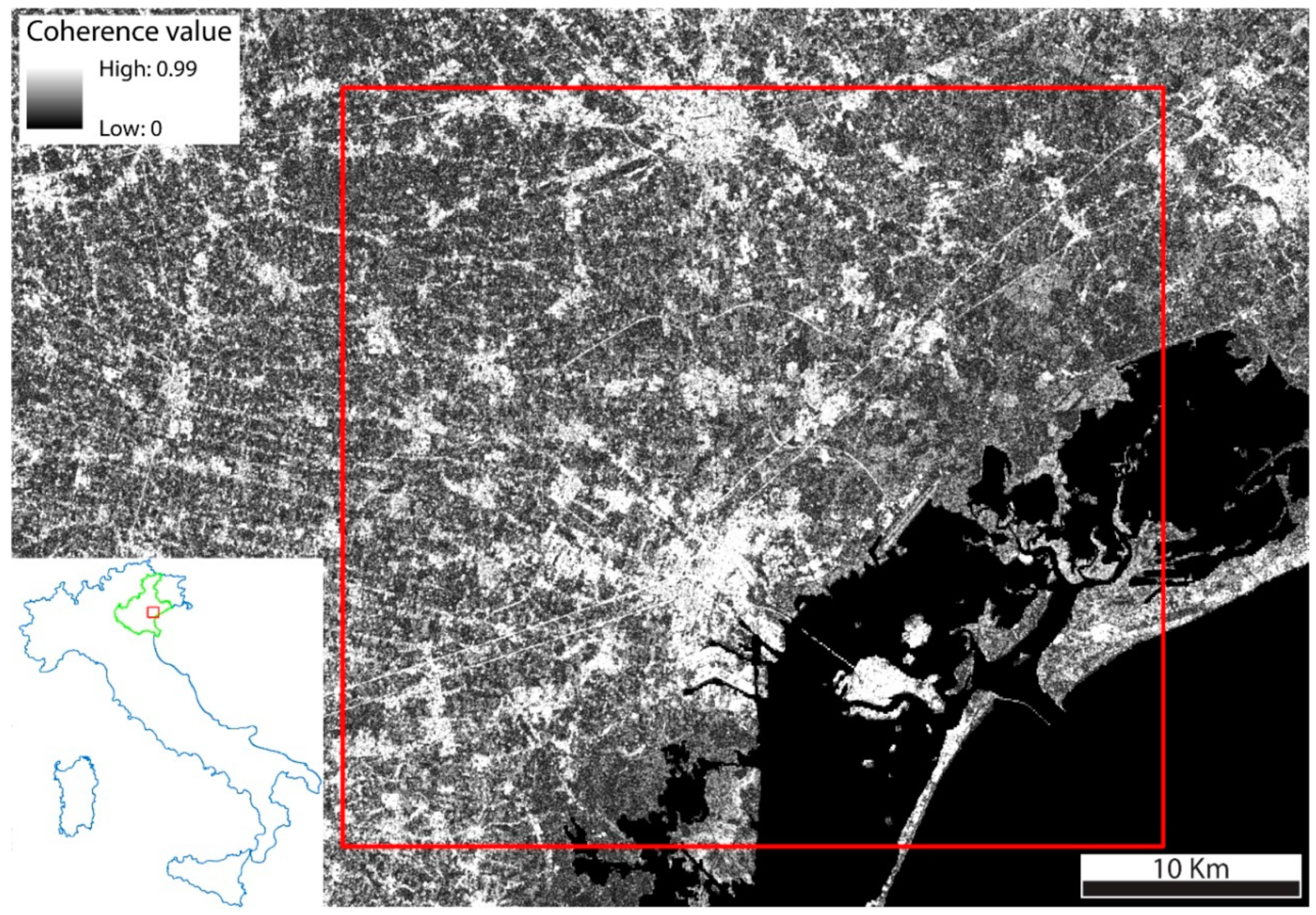

An example of output interferometric coherence image is displayed in

Figure 2.

2.4. Supervised and Unsupervised Classification Applied to Interferometric Coherence

The output coherence image consists of two different bands, reporting interferometric coherence values (from 0 to 1) for two polarizations (VH and VV). Within this type of raster, it is possible to extract urban footprint by applying supervised (and unsupervised) classification algorithms.

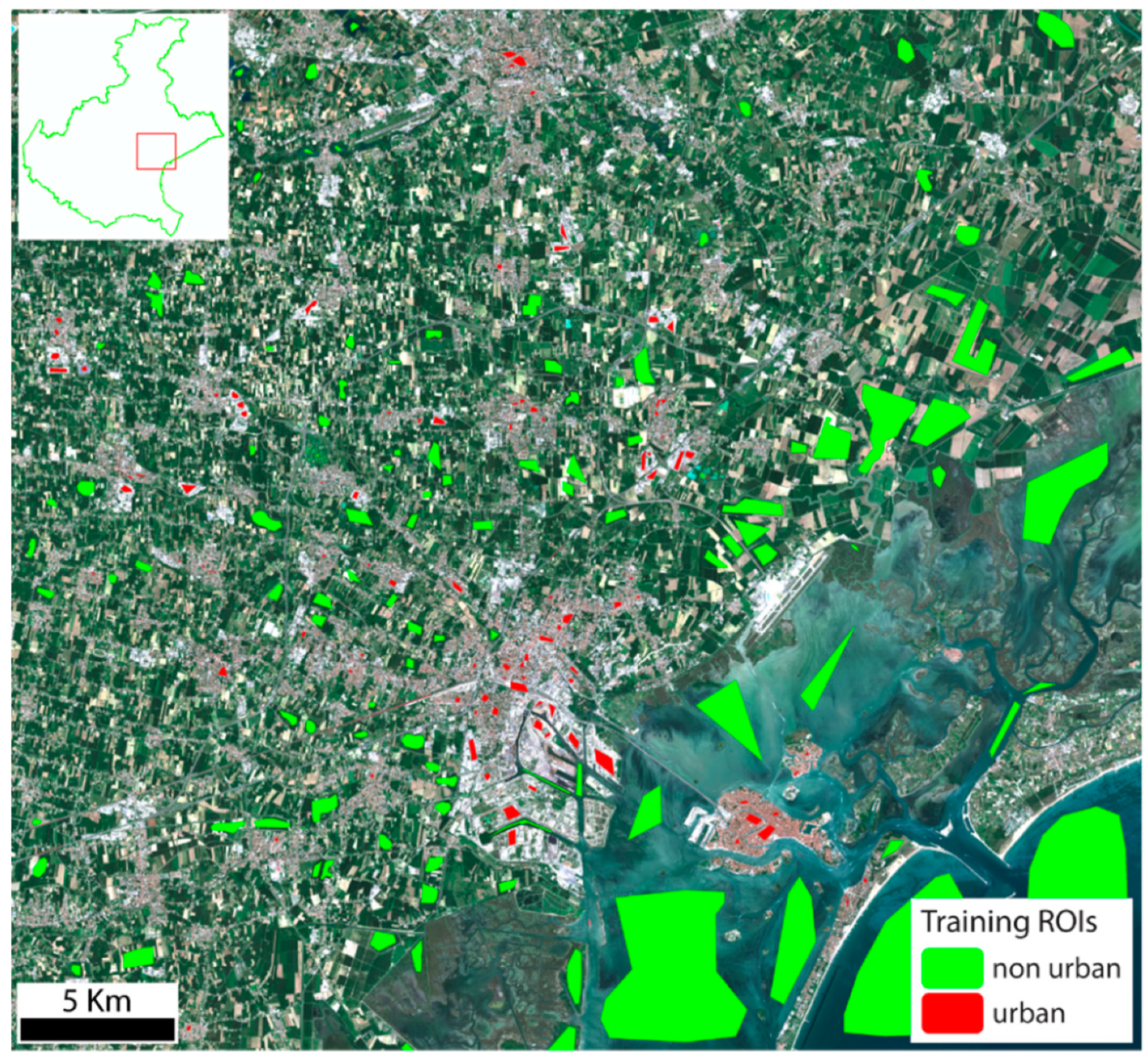

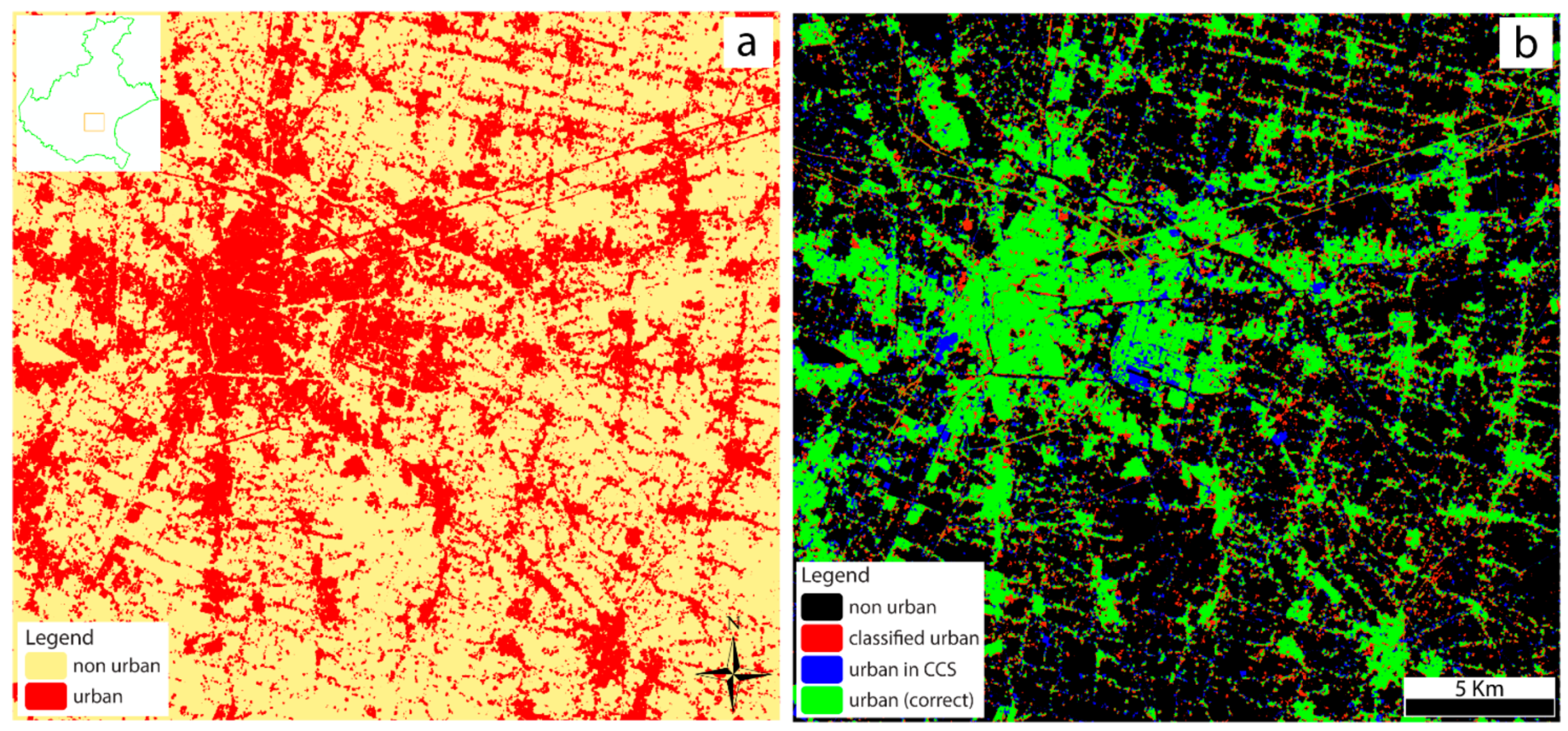

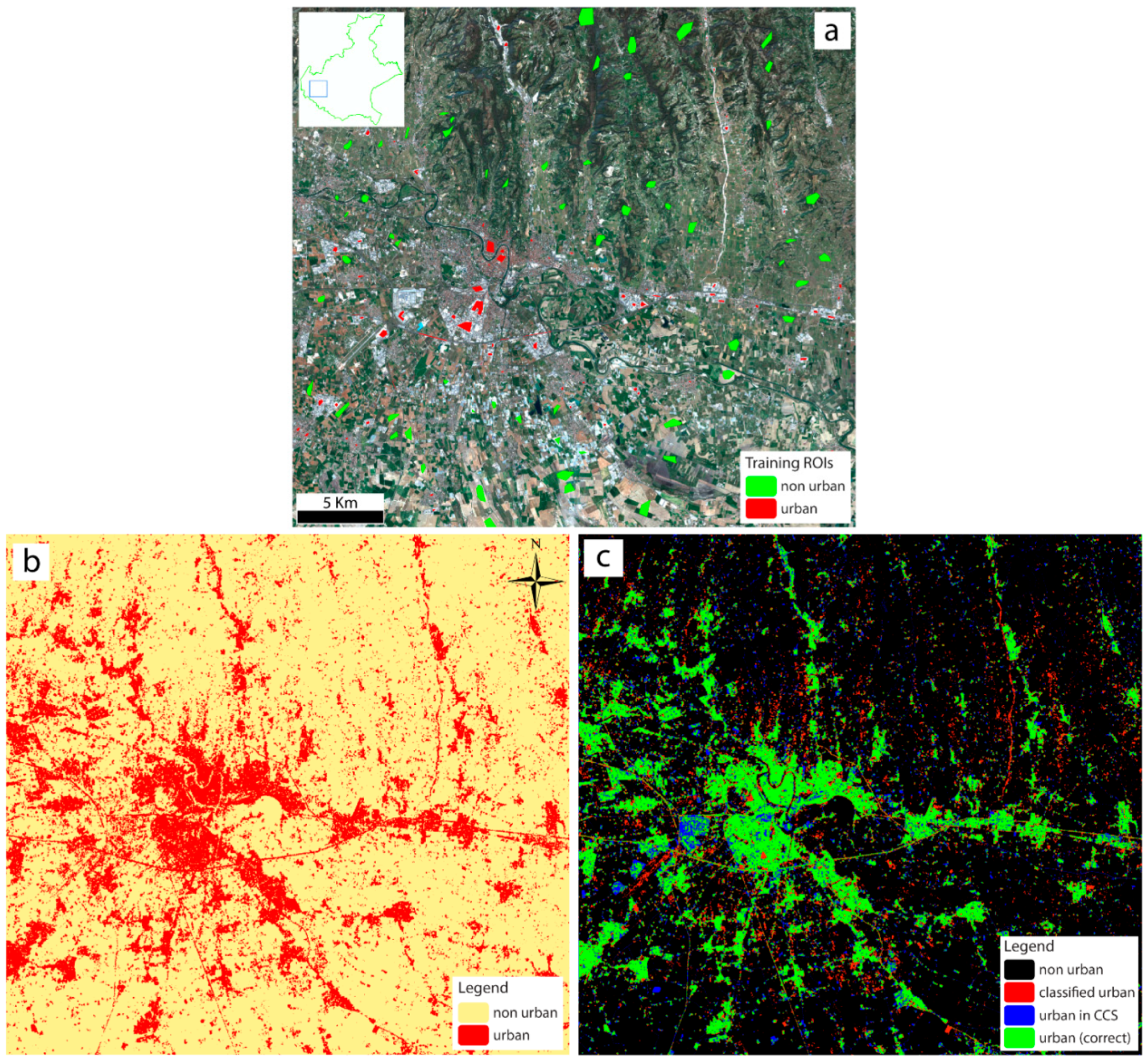

In the case of supervised classification, for training inputs we digitized the ROIs (regions of interest) over a Sentinel-2 image (

Table 1) covering the same target area (

Figure 3). Since we were interested in distinguishing only two different classes, i.e., urban and non-urban areas, when digitizing the ROIs, we aggregated all non-urban land cover types into the same class (such as sparse vegetation, forest, bare soil, and cultivated soil) (

Figure 3). For further statistical analysis and high-resolution image validation of ROIs, the reader is referred to

Table S1 and

Figure S1, respectively, within the

supplementary material. We then used such ROIs as input training sites for the classification algorithms applied to the SAR images. The Sentinel-2 images we used for this purpose are listed in

Table 2. These Sentinel-2 images were processed in SNAP by extracting the bands on the visible wavelength range (bands 2, 3, and 4) and exporting the selected spatial subset into a GeoTIFF.

As for the supervised classification algorithms, we made use of the maximum likelihood (ML) [

52] and the random forest (RF) classifiers [

53,

54], both available in SNAP. Specifically, the ML classifier in SNAP does not require setting of a threshold value for classifying pixels, thus no pixel will remain unclassified. On the other hand, for the RF classifier we specified the maximum number of decision trees, which we set to 500 as best value in order to obtain a good noise removal and a homogenous response [

55]. We firstly applied supervised classification algorithms to a single coherence image (

Figure 2), made by two distinct raster files, which indeed are related to the different polarizations of the same SAR image (VH and VV). Subsequently, we considered the use of multiple coherence images, taken within a longer period of time (see next section).

Ultimately, we also made use of unsupervised classification algorithms, in particular the K-means clustering, which does not require the digitalization of ROIs, but only the number of classes (or “clusters”) set for the classification map. In fact, the distinct backscattered intensity and interferometric coherence values of urban environments allow their sharp distinction from all other land cover types, regardless of the level of anthropization, weather conditions, or environmental parameters. For this reason, by setting only two classes for the K-means algorithm, it is likely that those classes will be automatically attributed to urban and non-urban pixels. We performed the unsupervised classification using the K-means algorithm available in SNAP. As in the case of the ML classifier, no threshold value is required for the K-means algorithm in SNAP, and we left the default value of 30 as number of iterations (which we found was a value high enough to identify the two cluster centers and successfully separate the two classes).

2.5. Multitemporal Analysis of Interferometric Coherence

Following the guidelines available in the RUS web material [

50,

51], we repeated the processing workflow of interferometric coherence, described in the previous section, to a series of Sentinel-1 images taken from April 2019 to July 2018 (

Table 1), always related to the province of Venice and its surroundings. Subsequently, we produced a series of interferometric coherence images, every time processing two IW SLC images (taking into account both polarizations—VH and VV). Then, we stacked all coherence images into a single raster file using the

Stack tool in SNAP. A sample result is displayed in

Figure 4. By doing so, urban areas are likely to be identified more rigorously, progressively reducing the error and noise in the images and increasing the overall accuracy.

Lastly, we applied the same classification algorithms described in the previous section.

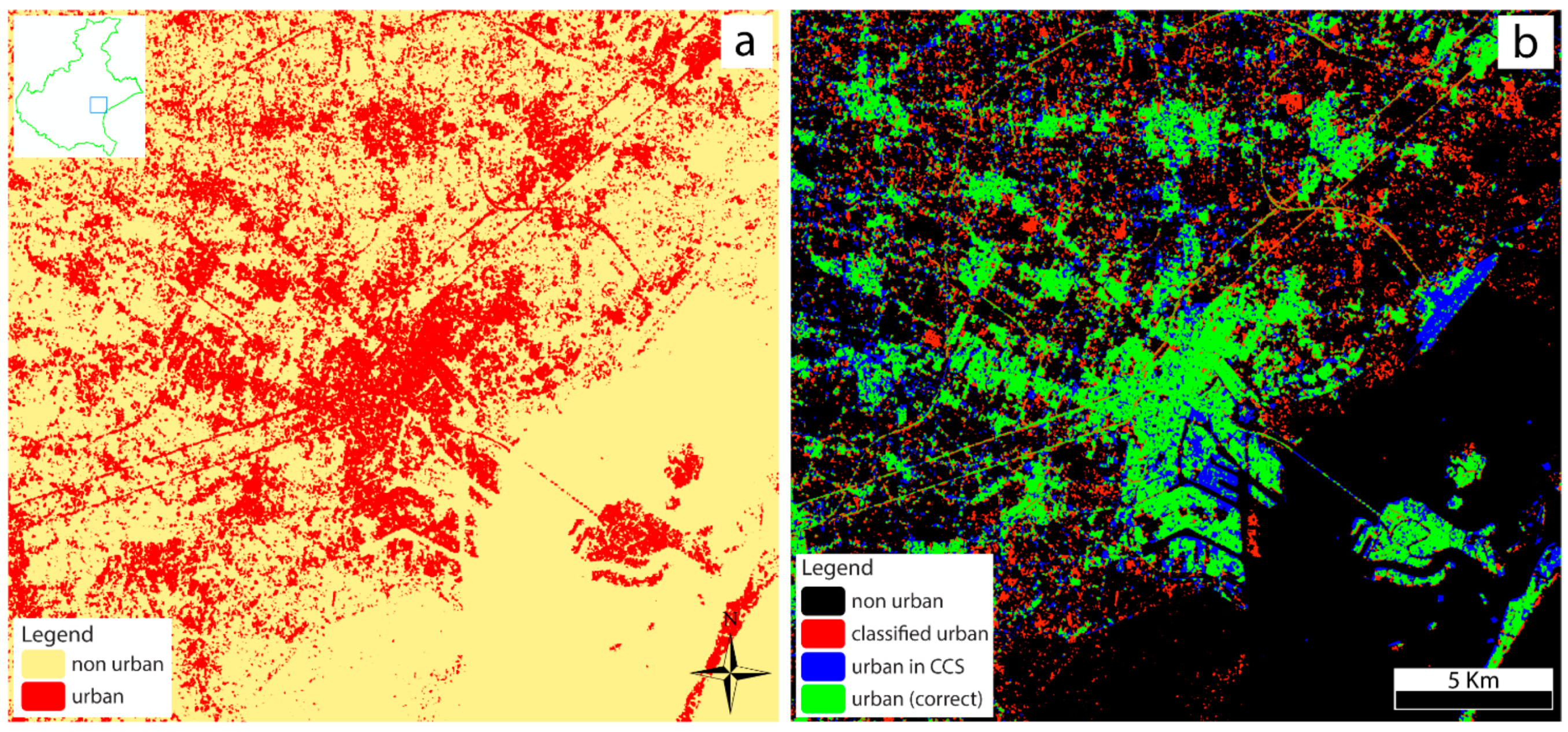

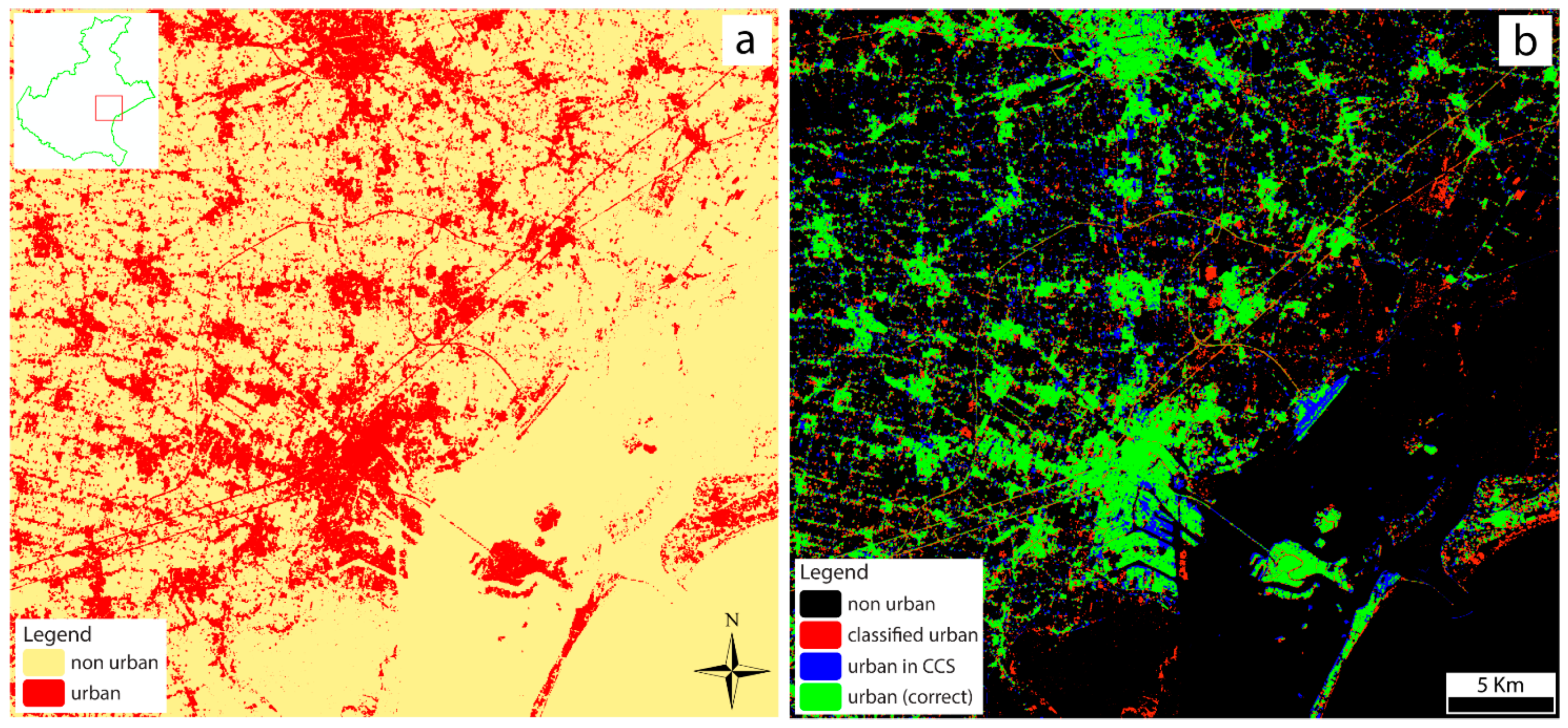

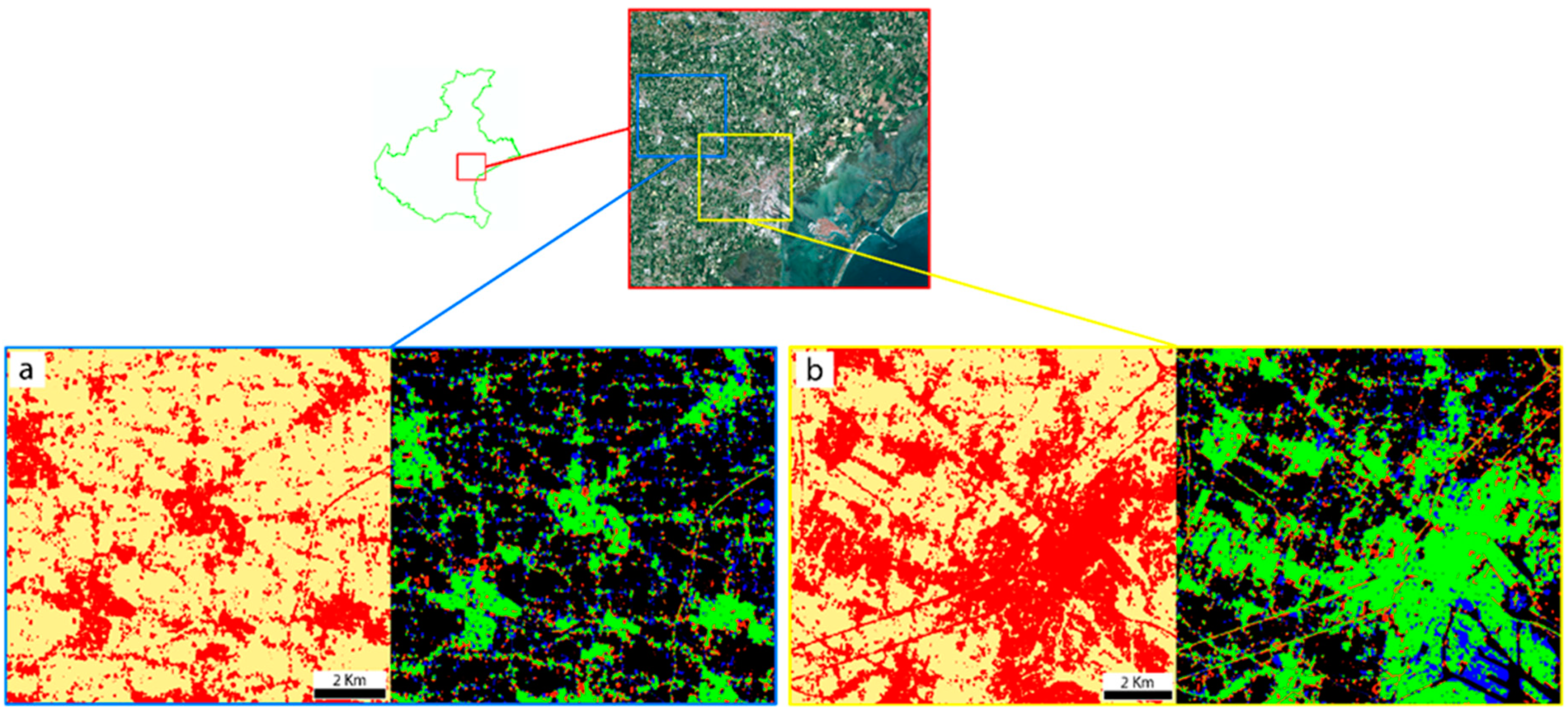

2.6. Testing Multitemporal Coherence on Other Test Areas

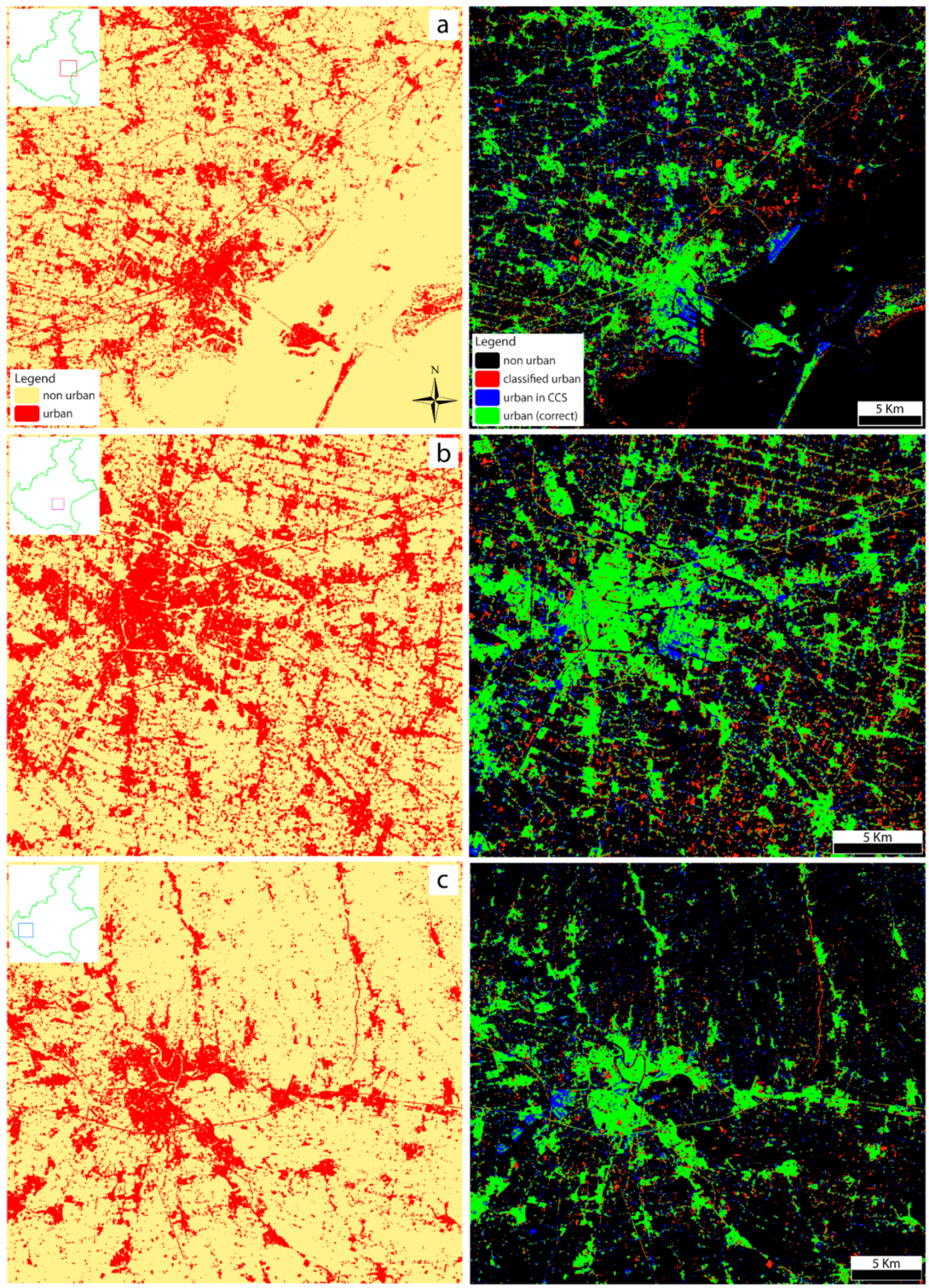

After testing this approach on the first study area (i.e., the Venice area), in order to verify the consistency and reliability of the method, we extended the same analysis on other test areas.

Firstly, we made use of the same ROIs digitized for the Venice region, but restricting the target area on which we applied the supervised classification algorithms. In this case, we tested the efficiency of our approach to extract urban footprint on smaller areas (at local scales), and over highly anthropized regions (see

Section 3.3). We then repeated the same procedures on a large urbanized area around the city of Padua. Lastly, we tested the approach on a different environment, considering areas of similar size, taking into account the heterogeneous province of Verona, in the western boundary of the Veneto region, where dense residential and industrial areas alternate with sparsely populated (and thus less urbanized) mountain environments. We followed the same approach that involves the digitalization of ROIs over a Sentinel-2 image (

Figure 3), which we used as input training sites for the supervised classification algorithms applied to the multitemporal set of interferometric coherence images.

We also applied unsupervised classification to these other test areas.

2.7. Land Use/Land Cover Map of the Veneto Region as Reference for Accuracy Assessments

When dealing with automatic processes that involve feature extraction and image classification, in order to estimate the reliability of any adopted approach, an accuracy assessment is always required. Within the Veneto Region, in particular, different sources related to urban environments were available, such as the land cover Map, the geo-topographic database, and cadastral data from the Land Registry (the Italian “Catasto”). These sources provide detailed multi-layer cartographic information of urban features and urban areas, with geometric resolution up to centimeters. However, we took into consideration only the land cover map of the Veneto Region (which we will refer to as “Carta di Copertura del Suolo”—CCS). Produced by Corvallis S.p.A. in 2015 through photointerpretation and ground truth information, as an update of previous versions, this map is the most reliable and up to date data source for the analysis of urban environment and the extraction of urban footprint. The map is available online as shapefile [

55], i.e., as a vector file, with land cover codes included in the attribute table. These codes refer to the CORINE land cover classification (e.g., [

56,

57,

58]). For further details on the CORINE land cover nomenclature, the reader is referred to

Table S2 and

Figure S2 in the

supplementary material.

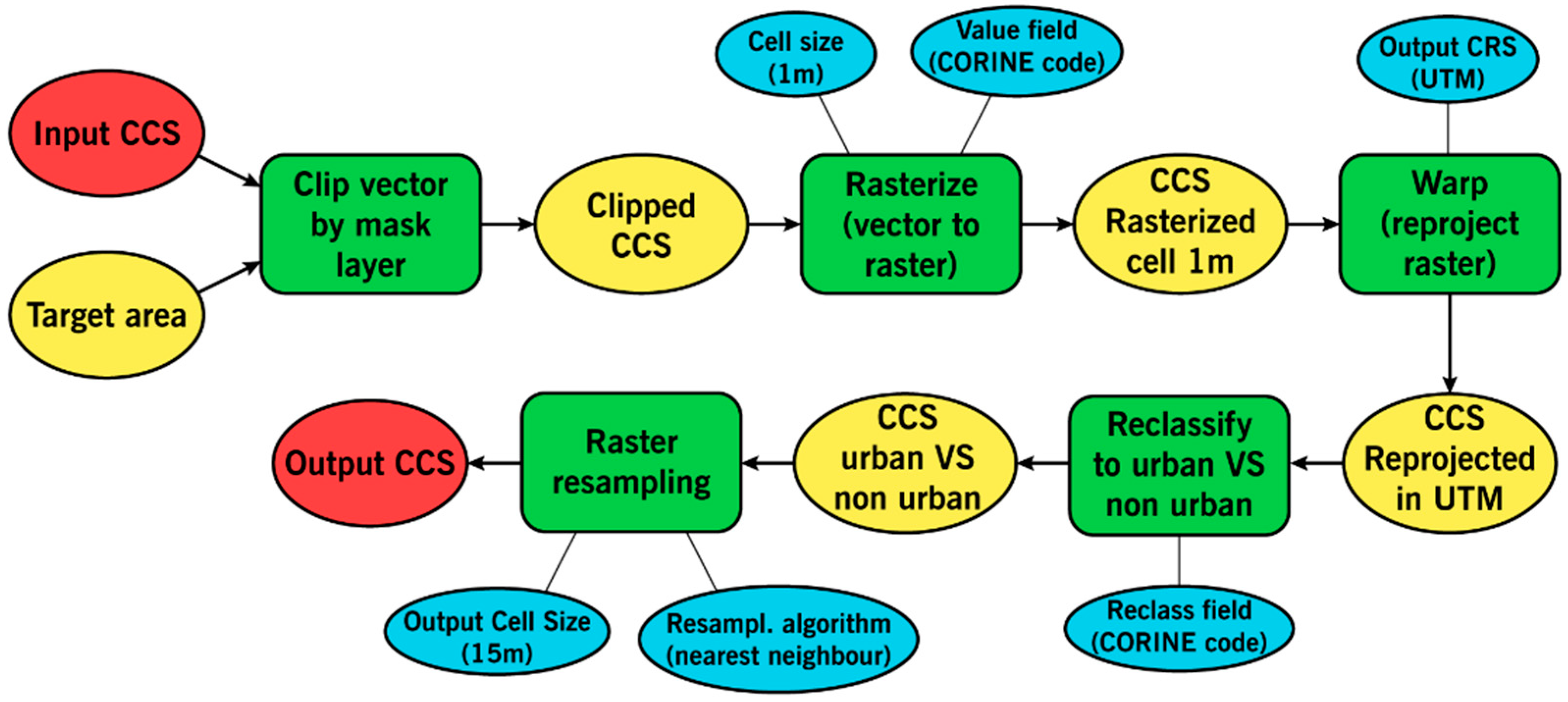

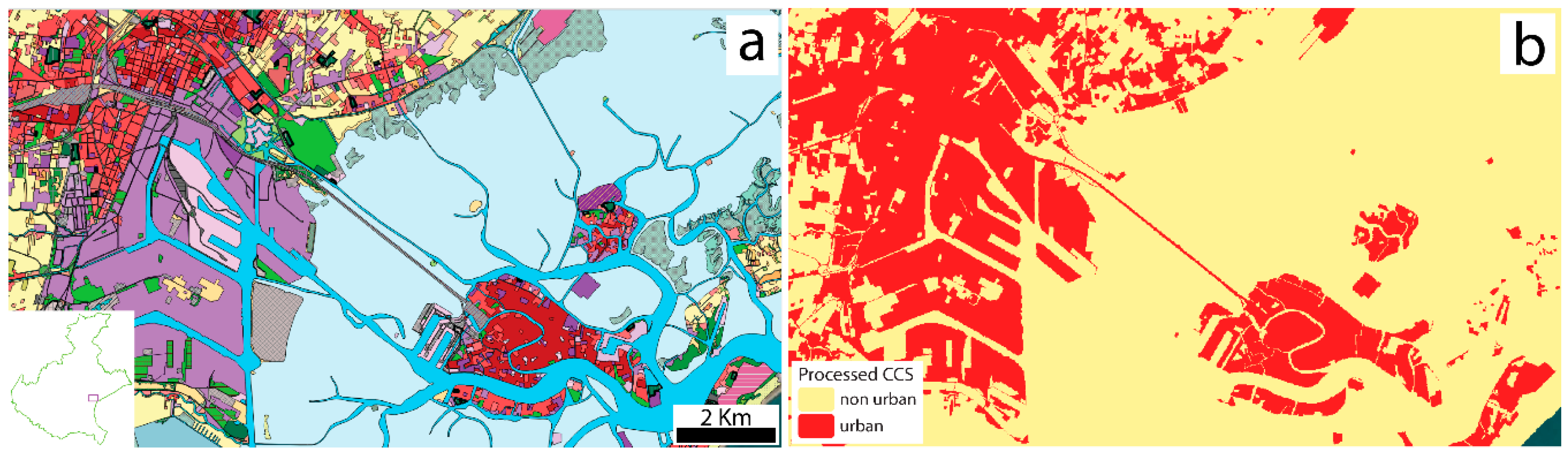

In order to perform accuracy assessments, we processed the vector input file of the CCS into a new raster file, with the same pixel characteristics of the classification images (i.e., urban footprint) obtained from Sentinel-1 SAR imagery. The processing workflow is summarized in

Figure 5, whereas an example of the input and output map is displayed in

Figure 6.

Once clipped into the study area, we converted the vector CCS into a raster image, attributing to the pixel values the codes of CORINE land cover classification. During the rasterization process, we set the initial pixel size of 1 m in order to avoid incorrect aggregation of different classes and preserve the high nominal resolution of the vector input file. Furthermore, we re-projected the CCS, originally projected in the Italian “Fuso 12” coordinate system (EPSG code 6876), into WGS84-UTM Zone 32N (EPSG 32632) as the Sentinel images.

The classification images produced from Sentinel-1 imagery consist of discrete rasters, with all pixels classified into either “urban” or “non-urban” values (with values 1 and 0, respectively). Therefore, once rasterized, we reclassified the CCS into “urban” and “non-urban” pixels, following the CORINE classification codes. In particular, we set as “urban” only the pixels included in Classes 1.1 and 1.2, referred to artificial surfaces, such as urban fabric and industrial, commercial, and transport units. On the other hand, we excluded Classes 1.3 and 1.4, respectively, “Mine, dump, construction sites” and “Artificial, non-agricultural vegetated areas”, since they are likely to be identified as non-urban surfaces on SAR images. We classified all other Classes 2, 3, and 4 (agricultural areas, forest and seminatural areas, and wetlands, respectively) as “non-urban”. Lastly, in order to perform the accuracy assessments, we resampled the output raster to the output Sentinel-1 image resolution, which is 15 m. The entire conversion process was run in QGIS. An example of a processed test area of the CCS is displayed in

Figure 6b. Finally, once the CCS was properly pre-processed to match all the characteristics of the Sentinel-1 classification images, accuracy assessments were performed by means of the Semiautomatic Classification Plugin (SCP) implemented in QGIS. This plugin produces an error matrix indicating all statistical parameters, such as overall accuracy, user and producer accuracy, and Kappa hat coefficient (or Cohen’s kappa coefficient) (e.g., see [

41]).

4. Conclusions

In this work, we aimed at developing an automatic (or semi-automatic) process able to map and monitor urban environment, and in particular to extract the spatial extent of urban features over non-urban areas (i.e., urban footprint). During the entire project development we had access to a series of Virtual Machines as powerful computational environment provided by the Copernicus RUS service [

45,

46].

When dealing with urban mapping, different data sources can be exploited in several different ways. In this work, we investigated the use of Sentinel-1 SAR imagery and in particular IW Level-1 SLC products. The high backscattered intensity of urban areas, acting as corner reflectors, proved to be highly effective when mapping urban environments. In particular, interferometric coherence highlights all surfaces with constantly high backscattered intensity, fixed shape, and constant physical parameters through time. Such surfaces are indeed urban areas.

We stacked a series of coherence image related to a multitemporal series of images (from April 2019 to July 2018) in order to increase the accuracy of the classified coherence images. We performed a supervised classification using the random forest and maximum likelihood classifiers and then performed accuracy assessments, considering the properly preprocessed CCS as a reference. The classified images resulted in a high overall accuracy (up to 90%). Moreover, we obtained a percentage of urban footprint significantly precise compared to the values extracted from the CCS, with errors lower than 1%–2%. We repeated the same procedure on many other test areas, analyzing restricted areas around Venice, and other large areas around Padova and Verona (the latter including mountain environments, as well) obtaining similar accuracy values. Finally, we attempted a completely automatic approach in terms of processing workflow, which involves the use of unsupervised classification (with no need of digitization of training sites). We applied the K-means algorithm to the interferometric coherence stack image, exploiting the separability of urban environments with respect to all other surface types. Surprisingly, we obtained the same high accuracy values and, in some cases, even better results compared to the supervised classification approach.

By repeating these procedures within constant time intervals, it is possible to monitor the development of urban environment through time potentially in any region of the world. Considering our results, this methodology should provide reliable accuracy values within time intervals of 6–12 months. Moreover, since our methodology relies on open source tools and software, and globally available free data, we especially encourage the use of similar approaches for developing countries with emerging economies, considering more dynamic urban areas, as well.

As such, in the context of the 2030 Agenda for the Sustainable Development [

2,

3,

4], in particular for Goal 11—Sustainable City and Communities, satellite imagery and Earth Observation technologies can play an important role for extracting and updating data to feed SD indicators. More specifically, we refer to targets 11.3 and 11.7, and in particular SD indicators 11.3.1 and 11.7.1 [

5,

6,

7] (see

Section 1 for details). Therefore, from the perspective of local governments and public authorities, we propose an organized dataflow coming from Sentinel-1 SAR data, in order to provide not only a helpful tool for a sustainable city development and urban planning at local and regional scales, but also help feeding such indicators for the national statistical set [

43,

44] and the European monitoring framework.

That being said, some limitations concerning Sentinel data still remain, mostly related to the limited geometric resolution of the Sentinel satellites with respect to other commercial satellites. Moreover, the first Copernicus Sentinel mission (Sentinel-1A) was launched in 2014, limiting so far the possibility of performing multitemporal analysis in the previous decades (using Sentinel data only). Nonetheless, the ever-increasing availability of high-resolution optical and radar satellite sensor, along with the growing awareness of open data policies, will definitely encourage the use of satellite images to tackle environmental changes and will promote new applications and technologies, in all fields, including urban applications.