Constructing Geospatial Concept Graphs from Tagged Images for Geo-Aware Fine-Grained Image Recognition

Abstract

1. Introduction

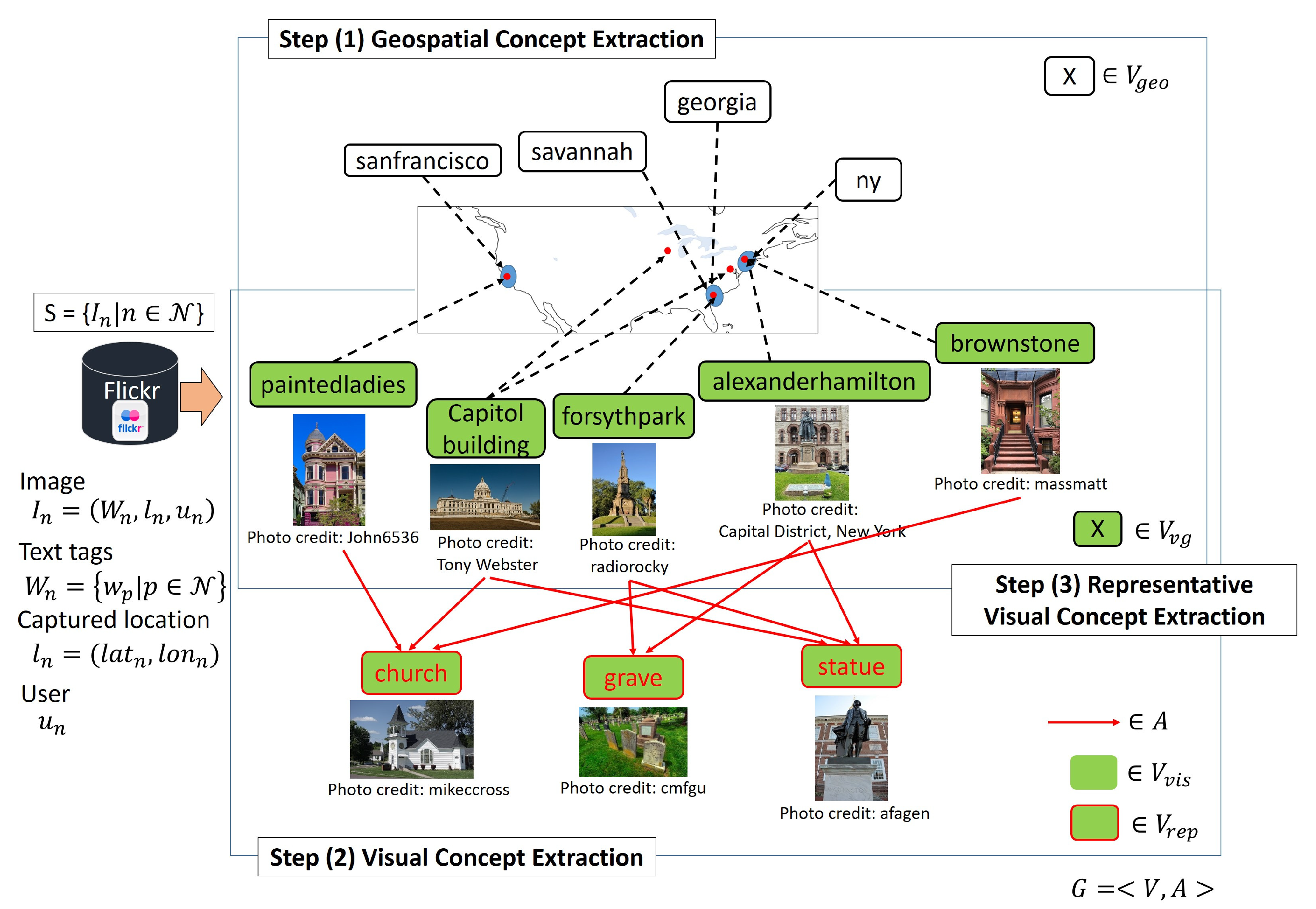

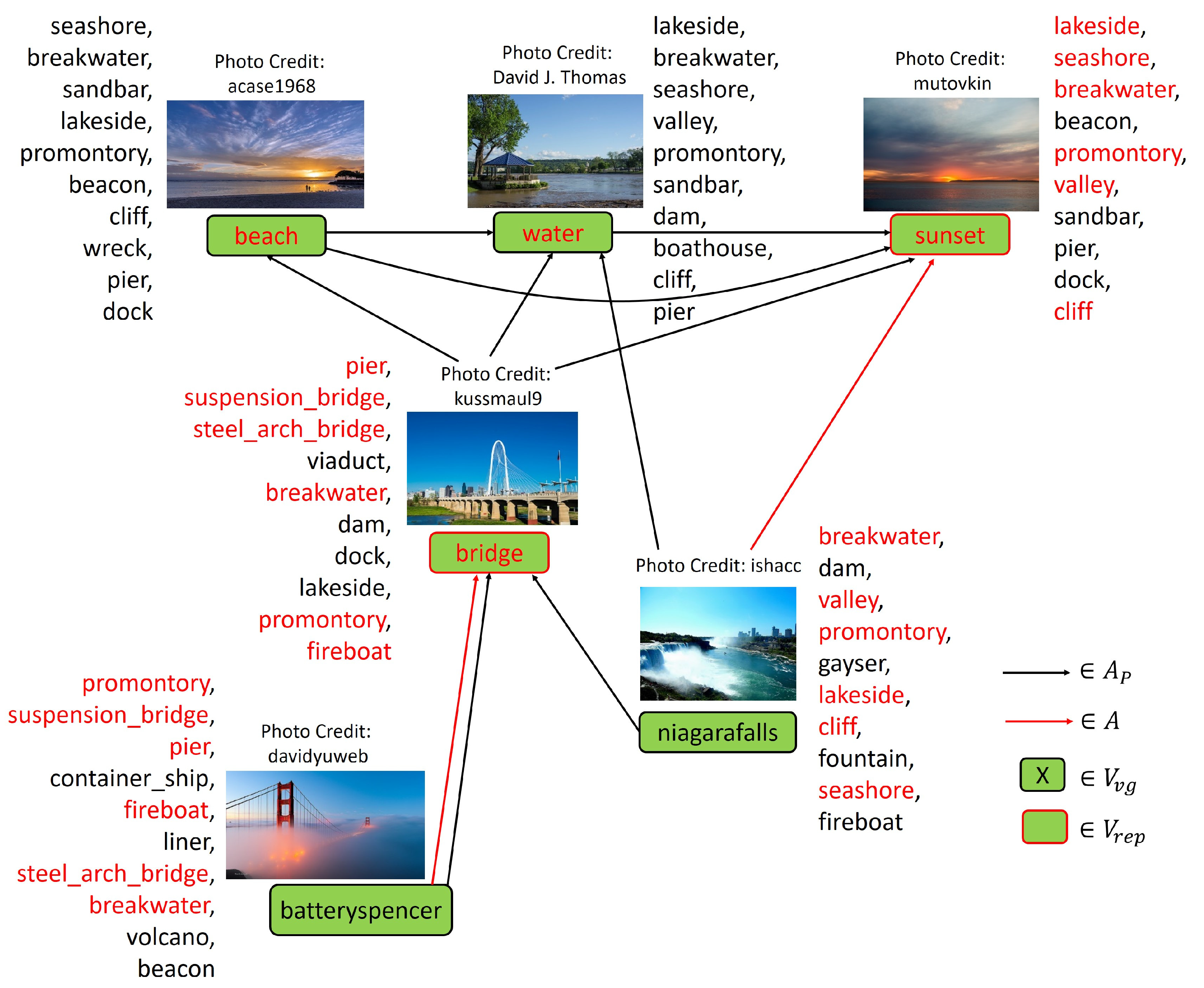

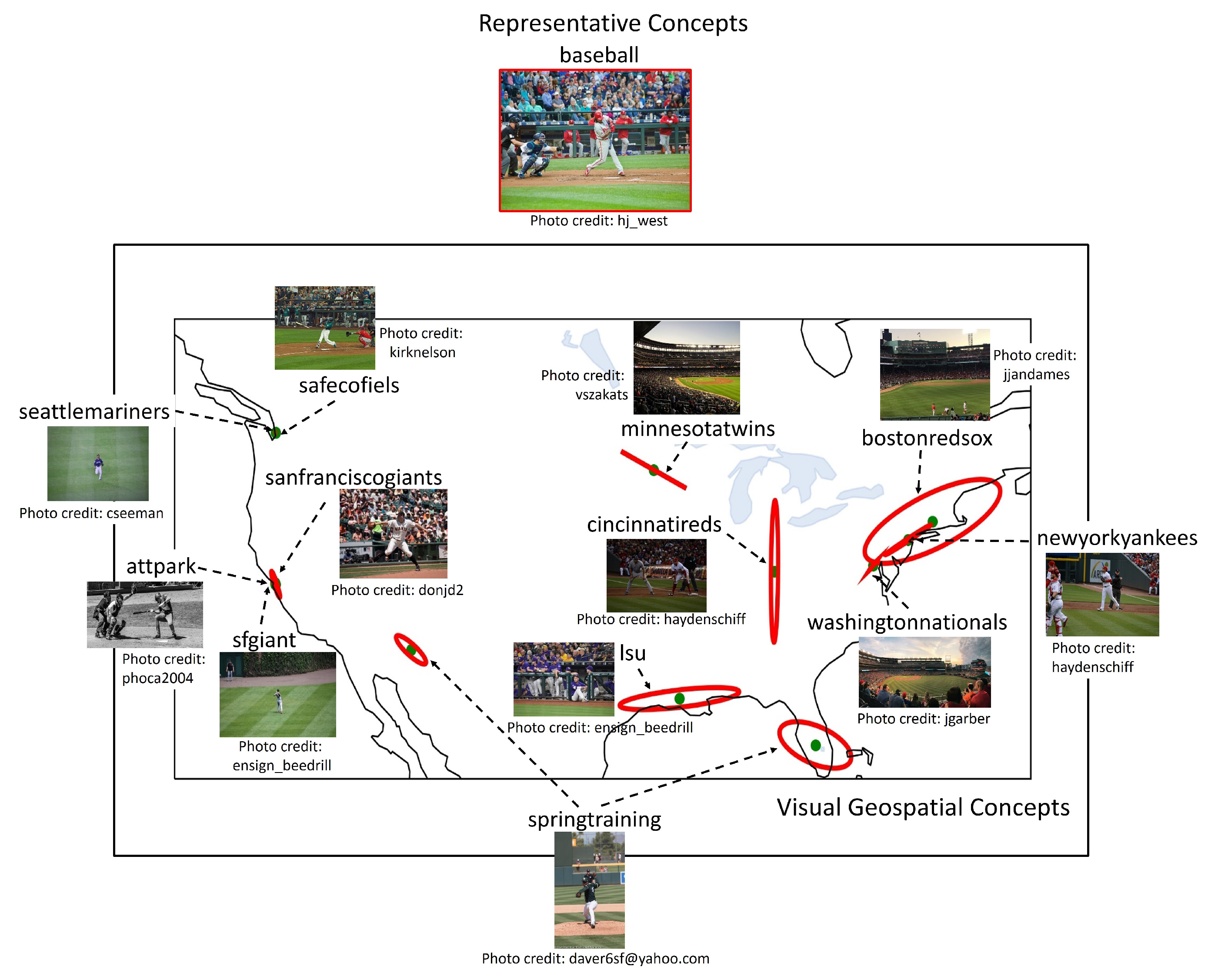

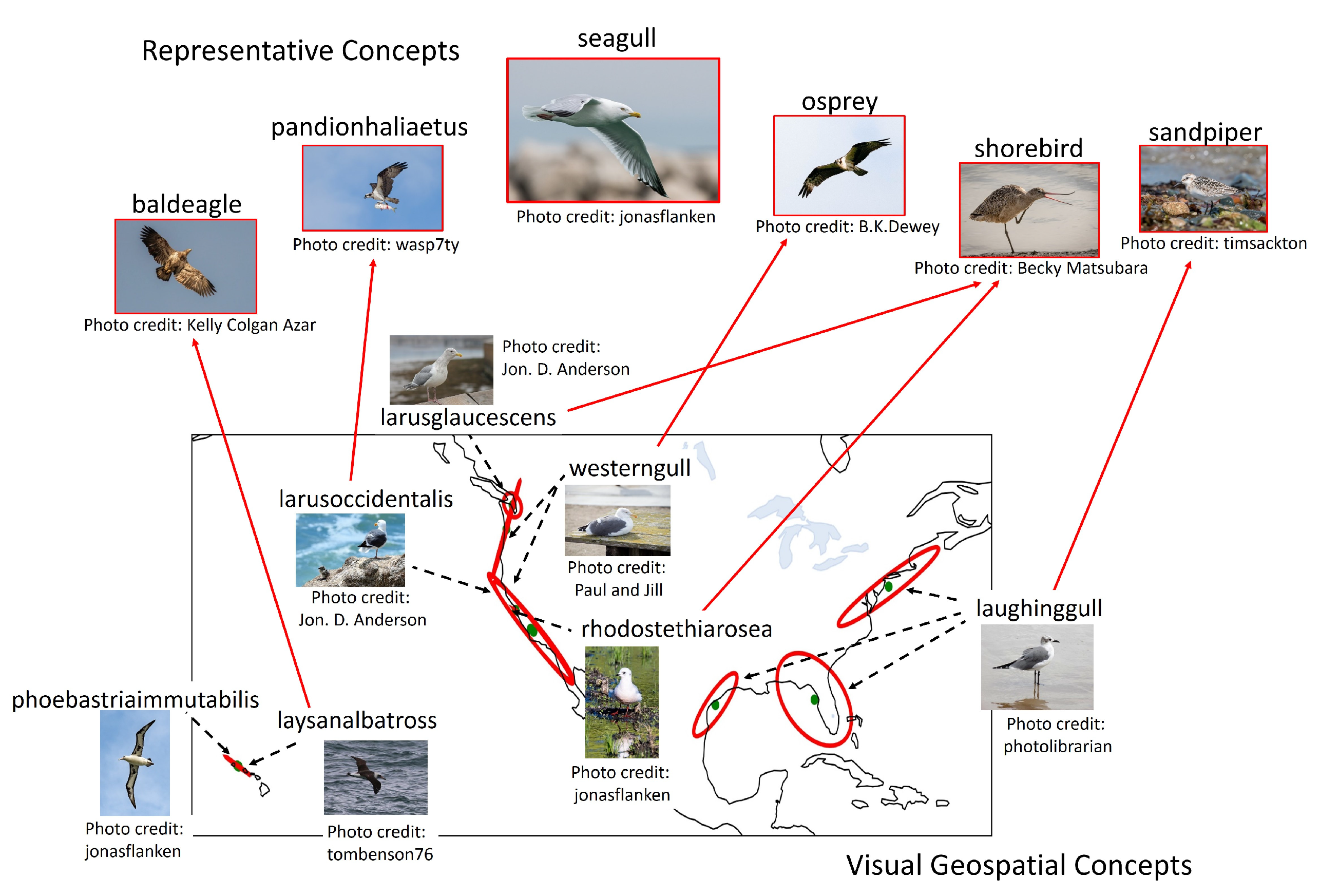

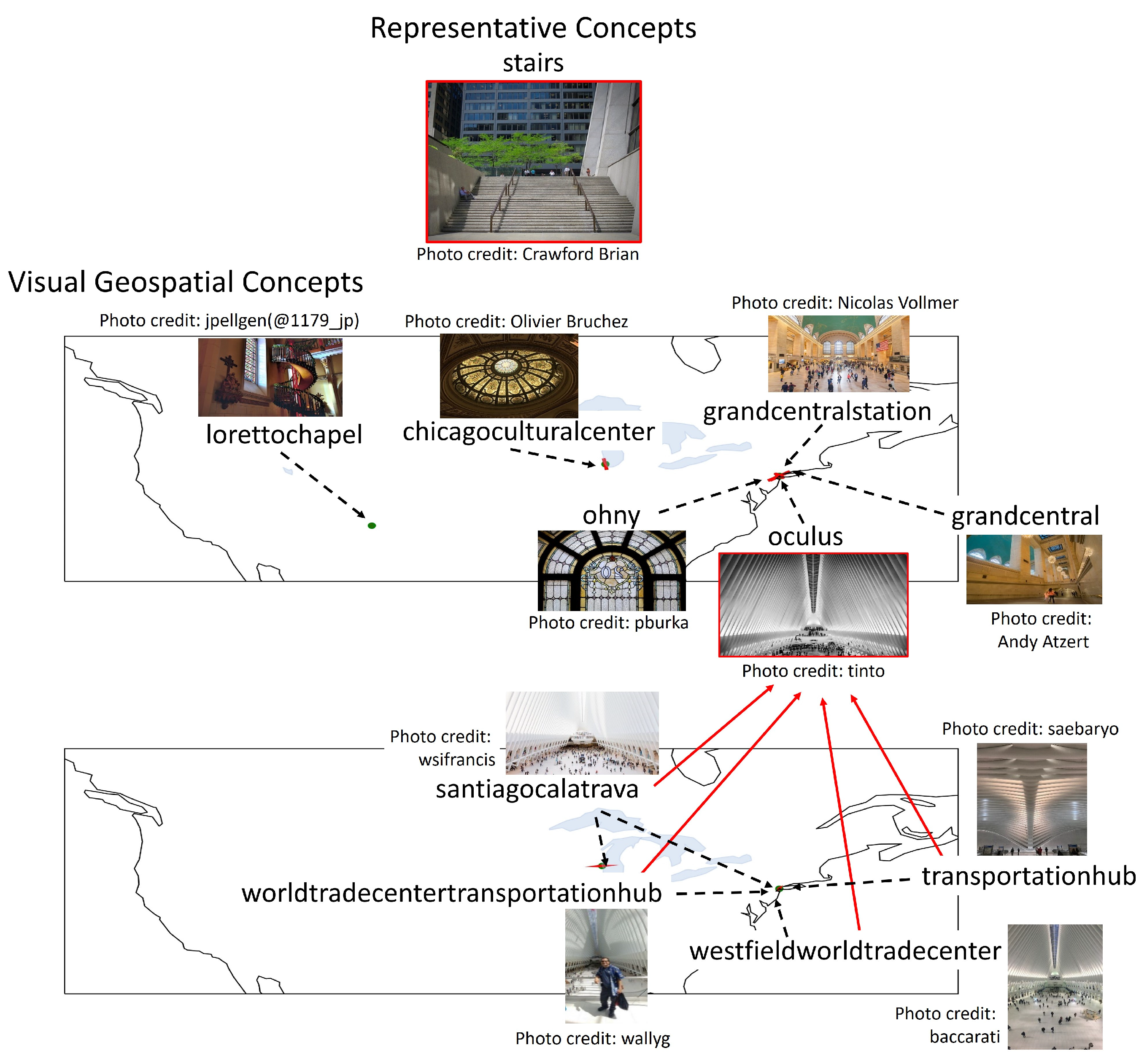

- Our method automatically extracts fine-grained geospatial concepts from various domains, together with their geospatial and visual features. Further, representative visual concepts are automatically determined for each geospatial concept, so that reliable visual features extracted from representative concepts with many example images can be shared to recognize their subordinate geospatial concepts. The extracted knowledge is represented as a graph whose nodes represent concepts and whose edges represent the relations between them.

- Existing work has verified that the accuracy of fine-grained image recognition can be improved by using the geographic location where an image was captured. However, the recognizable concepts are limited to domains whose visual and geospatial features can be obtained from manually prepared databases. Moreover, it is not known which fine-grained geospatial concepts in the real world should or can be recognized. By using Flickr images, our work can increase the diversity of concepts and domains to which geo-aware fine-grained image recognition can be applied, without manual labor and while reflecting the interests of the general public.
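To make the graph representation described in the first point concrete, the following is a minimal Python sketch of one possible in-memory structure, assuming concept nodes keyed by tag and directed edges from a geospatial concept to its representative visual concepts. The class names `ConceptNode` and `ConceptGraph`, the feature fields, and the example tags are hypothetical illustrations, not the authors' data model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class ConceptNode:
    """A concept (Flickr tag) with optional geospatial and visual features (hypothetical layout)."""
    tag: str
    geo_feature: Optional[Dict[int, float]] = None  # e.g., tag distribution over sub-areas
    visual_feature: Optional[List[float]] = None    # e.g., mean image embedding

    def is_visual_geospatial(self) -> bool:
        # A tag with both kinds of features plays the role of a visual geospatial concept.
        return self.geo_feature is not None and self.visual_feature is not None


@dataclass
class ConceptGraph:
    nodes: Dict[str, ConceptNode] = field(default_factory=dict)
    # Directed edges: geospatial concept -> its representative visual concepts.
    edges: Dict[str, Set[str]] = field(default_factory=dict)

    def add_node(self, node: ConceptNode) -> None:
        self.nodes[node.tag] = node

    def add_representative(self, concept: str, representative: str) -> None:
        # Both endpoints must already exist as nodes.
        if concept not in self.nodes or representative not in self.nodes:
            raise KeyError("both concepts must be added as nodes first")
        self.edges.setdefault(concept, set()).add(representative)

    def representatives(self, concept: str) -> List[str]:
        return sorted(self.edges.get(concept, set()))


# Usage with hypothetical tags and features.
graph = ConceptGraph()
graph.add_node(ConceptNode("goldengatebridge", geo_feature={0: 1.0}, visual_feature=[1.0, 0.1]))
graph.add_node(ConceptNode("bridge", visual_feature=[0.9, 0.2]))
graph.add_representative("goldengatebridge", "bridge")
print(graph.representatives("goldengatebridge"))  # ['bridge']
```

Keeping representatives as explicit edges means a recognizer can look up, for any subordinate geospatial concept, which better-populated visual concepts to borrow features from.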

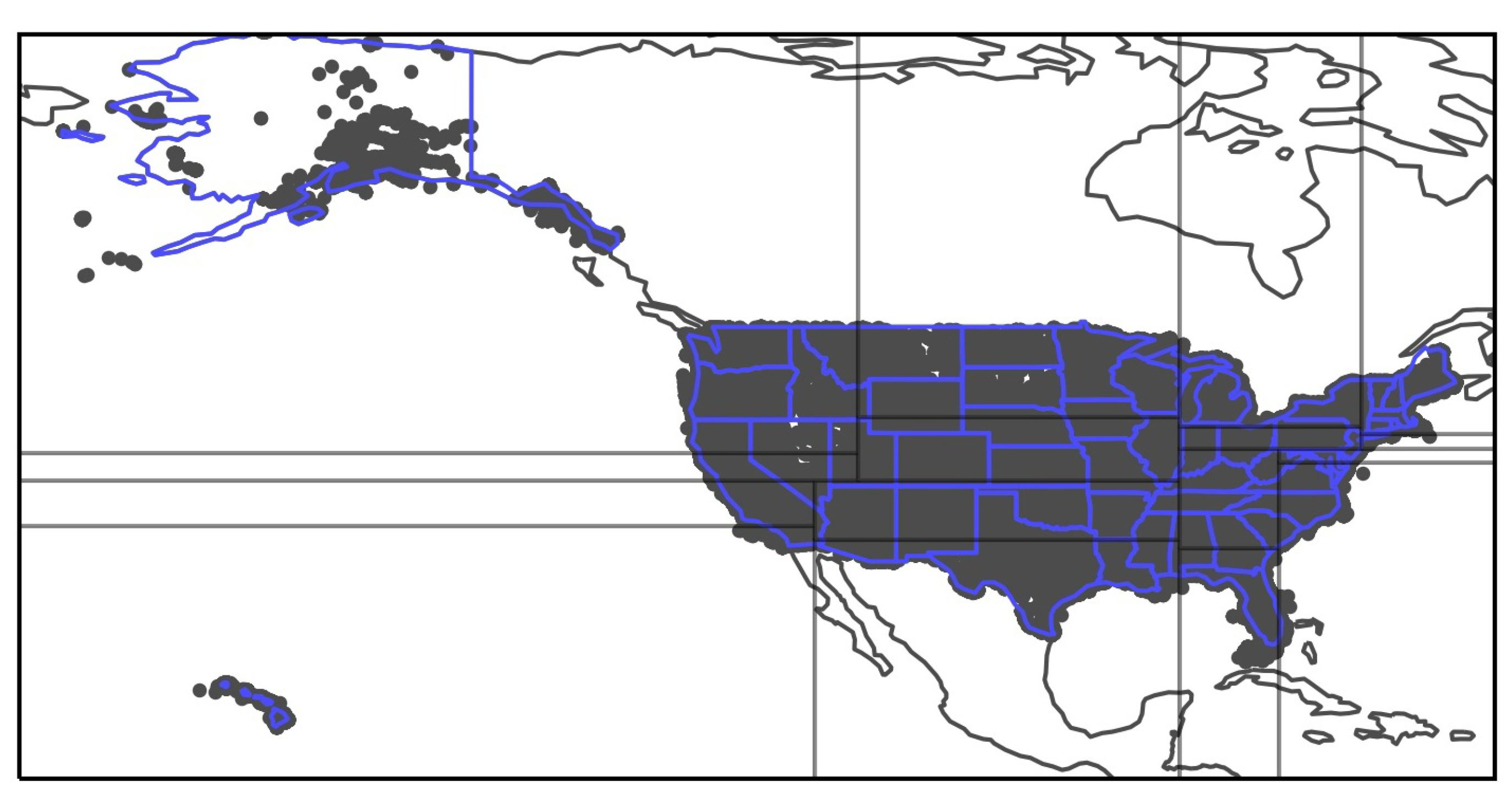

- The geospatial concept graph constructed from Flickr images posted in the U.S. during one year is evaluated by geo-aware image recognition of Flickr images posted in a different year. The results show the potential of prior information obtained from Flickr images for automatic geo-aware fine-grained recognition, for example, of images captured by GPS-equipped smartphones.
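As an illustration of how such prior information could be used at recognition time, the sketch below combines a visual classifier's class probabilities with a location-conditioned prior by Bayes' rule, assuming that image appearance x and location l are conditionally independent given the class y, so that p(y | x, l) ∝ p(y | x) p(y | l) / p(y). This standard Bayesian fusion is shown as an assumption; it is not necessarily how the paper combines the two sources. The function name and example probabilities are hypothetical.

```python
from typing import Dict


def geo_aware_posterior(
    visual_probs: Dict[str, float],  # p(y | image) from a visual classifier
    geo_prior: Dict[str, float],     # p(y | location), e.g., estimated from geotagged photos
    class_prior: Dict[str, float],   # p(y), assumed strictly positive for every class
) -> Dict[str, float]:
    """Fuse visual and location evidence: p(y | x, l) ∝ p(y | x) p(y | l) / p(y)."""
    scores = {
        c: p * geo_prior.get(c, 0.0) / class_prior[c]
        for c, p in visual_probs.items()
    }
    total = sum(scores.values())
    if total == 0.0:
        # No class is plausible at this location; fall back to the visual prediction.
        return dict(visual_probs)
    return {c: s / total for c, s in scores.items()}


# Hypothetical example: a photo taken in San Francisco that looks slightly more like Brooklyn Bridge.
visual = {"goldengatebridge": 0.4, "brooklynbridge": 0.6}
prior_sf = {"goldengatebridge": 0.9, "brooklynbridge": 0.1}
uniform = {"goldengatebridge": 0.5, "brooklynbridge": 0.5}
posterior = geo_aware_posterior(visual, prior_sf, uniform)
print(max(posterior, key=posterior.get))  # goldengatebridge
```

Here the location prior overturns a weak visual preference, which is the kind of effect geo-aware recognition aims for.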

2. Related Work

3. Proposed Method

- Step (1) Geospatial Concept Extraction

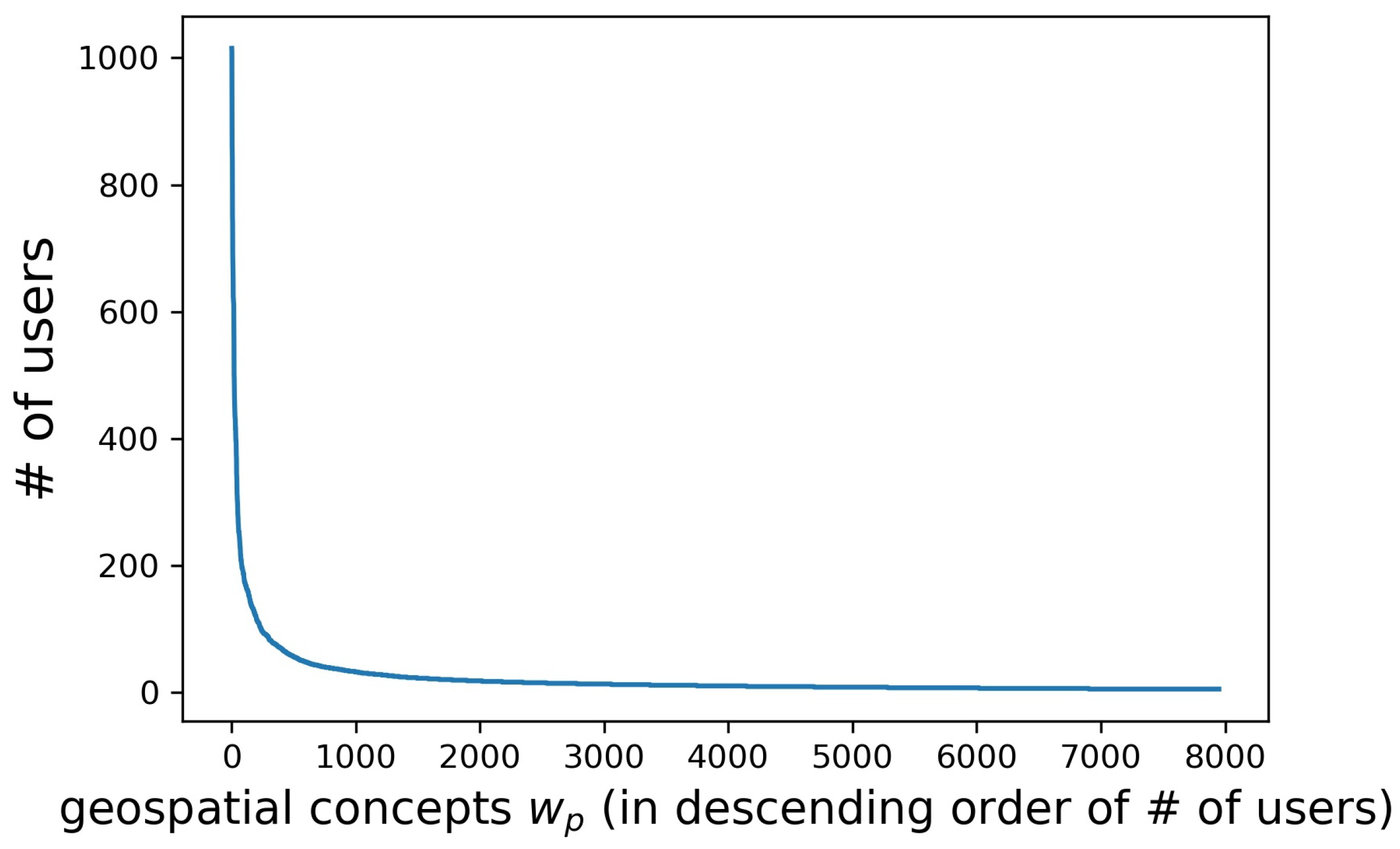

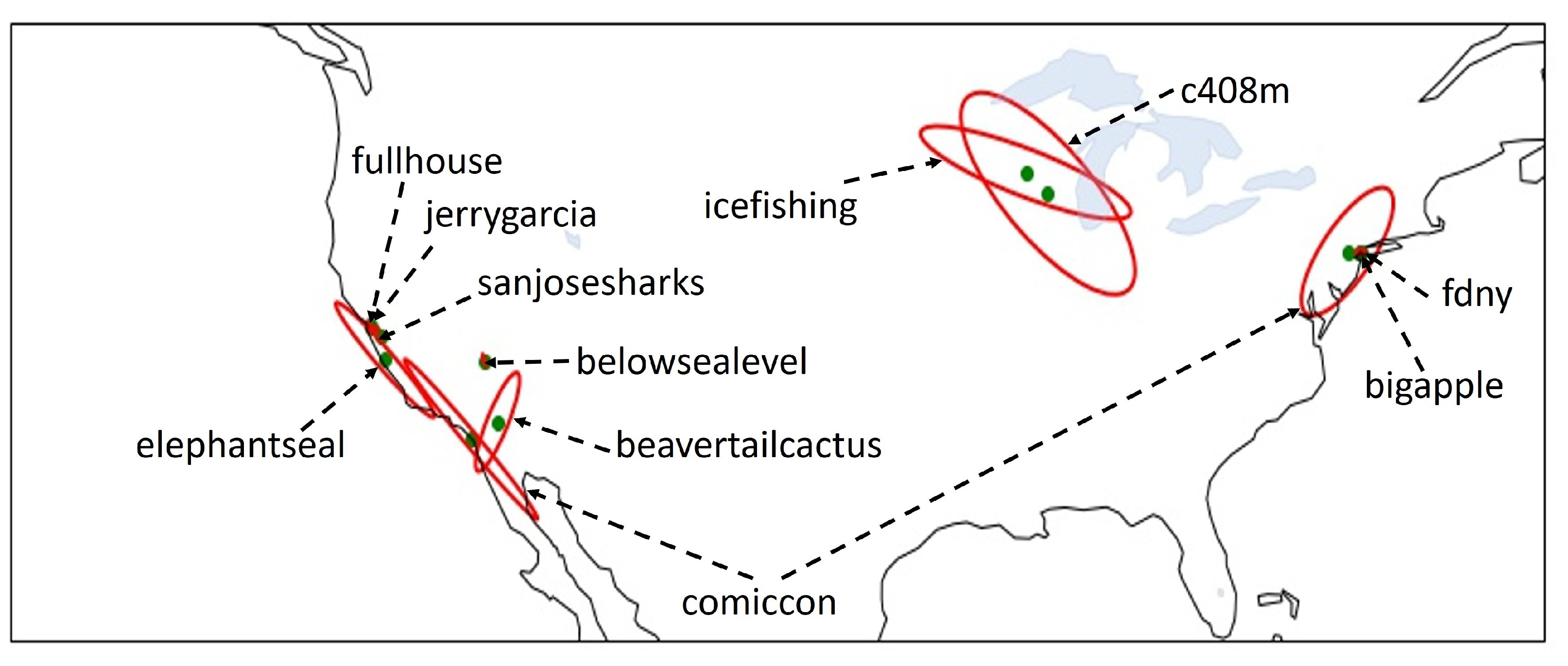

- Tags used only in specific locations are extracted as geospatial concepts with their geospatial features.
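One way to operationalize "used only in specific locations" is sketched below, assuming the target region is split into a grid of J sub-areas and that a tag counts as a geospatial concept when its photos are concentrated in few sub-areas, measured by a normalized entropy below a threshold. The grid partition, the entropy criterion, the thresholds, and all function names are hypothetical choices for illustration; the paper's own criterion may differ. The tag's distribution over sub-areas then serves as its geospatial feature.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List, Tuple

BBox = Tuple[float, float, float, float]  # (lat_min, lat_max, lon_min, lon_max)


def sub_area_index(lat: float, lon: float, bbox: BBox, grid: int) -> int:
    """Map a coordinate to one of J = grid * grid equal-sized cells of the bounding box."""
    lat_min, lat_max, lon_min, lon_max = bbox
    row = int((lat - lat_min) / (lat_max - lat_min) * grid)
    col = int((lon - lon_min) / (lon_max - lon_min) * grid)
    # Clamp so that points on the upper/right border fall into the last cell.
    row = max(0, min(row, grid - 1))
    col = max(0, min(col, grid - 1))
    return row * grid + col


def normalized_entropy(counts: Counter, num_areas: int) -> float:
    """Entropy of the tag's sub-area distribution, scaled to [0, 1]."""
    total = sum(counts.values())
    h = -sum((n / total) * math.log(n / total) for n in counts.values() if n > 0)
    return h / math.log(num_areas)


def extract_geospatial_tags(
    photos: Iterable[Tuple[float, float, List[str]]],  # (lat, lon, tags) per photo
    bbox: BBox,
    grid: int,
    max_entropy: float = 0.5,
    min_count: int = 3,
) -> Dict[str, Dict[int, float]]:
    if grid < 2:
        raise ValueError("need at least 2 x 2 sub-areas")
    per_tag: Dict[str, Counter] = {}
    for lat, lon, tags in photos:
        area = sub_area_index(lat, lon, bbox, grid)
        for tag in set(tags):  # count each tag once per photo
            per_tag.setdefault(tag, Counter())[area] += 1
    concepts = {}
    for tag, counts in per_tag.items():
        total = sum(counts.values())
        if total < min_count:
            continue  # too few photos to judge locality
        if normalized_entropy(counts, grid * grid) <= max_entropy:
            # Geospatial feature: the tag's distribution over sub-areas.
            concepts[tag] = {area: n / total for area, n in counts.items()}
    return concepts


photos = [
    (1, 1, ["landmarkx", "sunset"]), (1, 1, ["landmarkx"]), (1, 1, ["landmarkx"]),
    (1, 9, ["sunset"]), (9, 1, ["sunset"]), (9, 9, ["sunset"]),
]
print(extract_geospatial_tags(photos, (0, 10, 0, 10), grid=2))  # {'landmarkx': {0: 1.0}}
```

The hypothetical tag "landmarkx" appears in a single sub-area and is kept, while "sunset" is spread evenly over all four sub-areas and is rejected.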

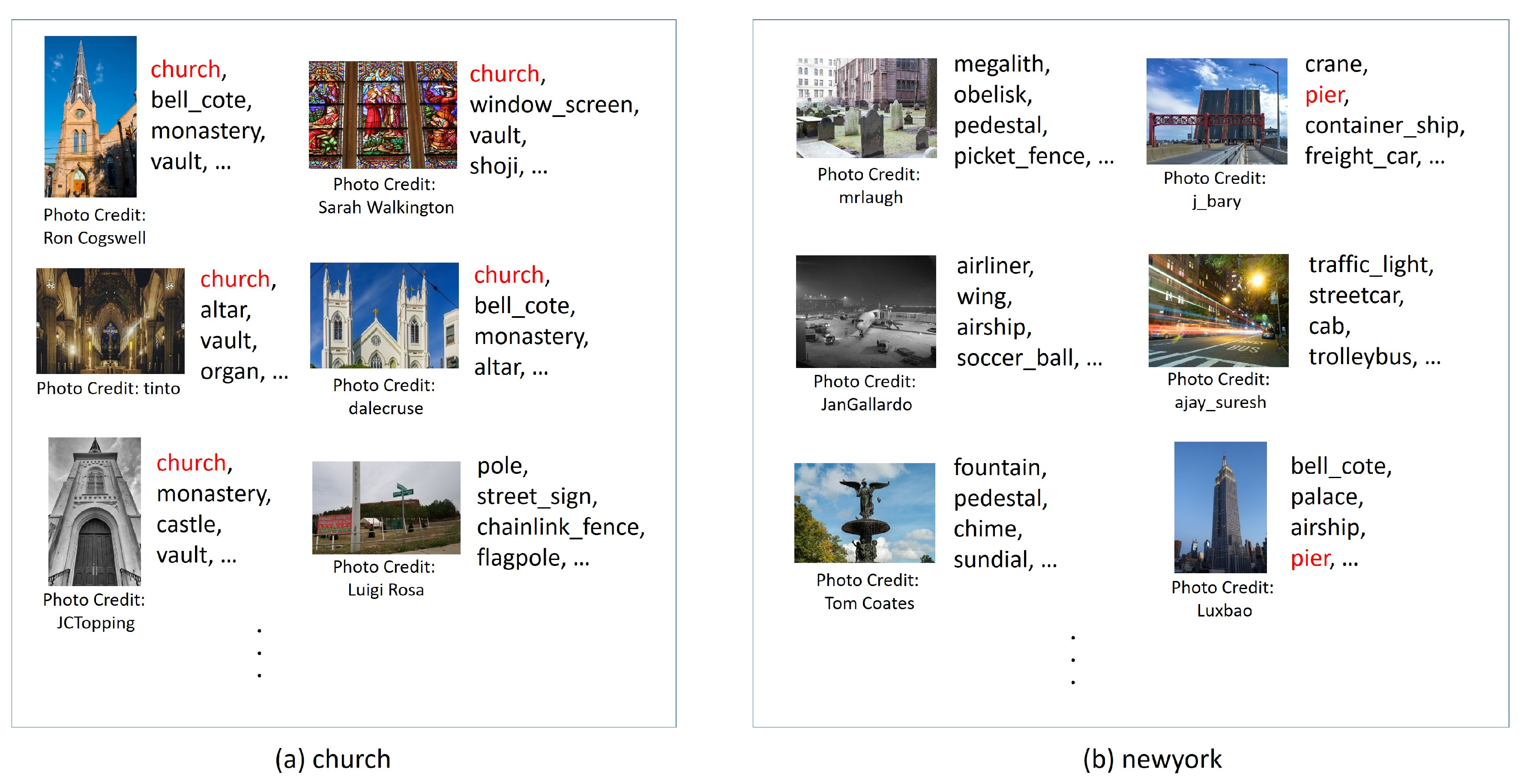

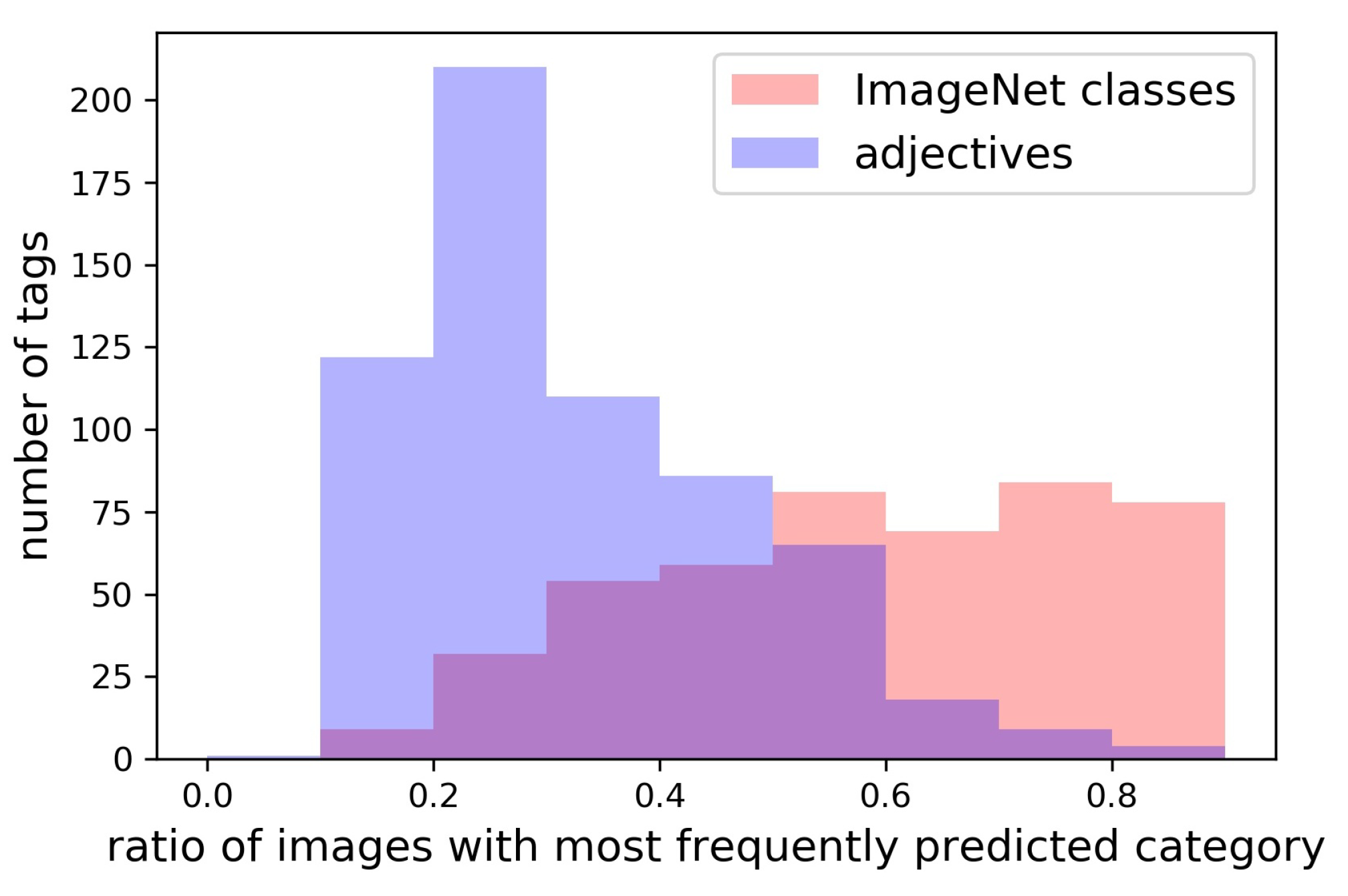

- Step (2) Visual Concept Extraction

- Tags assigned to images with visually uniform appearance are extracted as visual concepts with their visual features.

- Step (3) Representative Visual Concept Extraction

- Tags extracted as both geospatial and visual concepts are regarded as visual geospatial concepts, which have both geospatial and visual features. For each visual geospatial concept, its representative visual concepts are selected from the visual concepts based on their co-occurrence frequency and visual similarity. As a result, the visual geospatial concepts and their representative visual concepts are extracted.
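The visual-uniformity test in Step (2) can be sketched as follows, assuming each image is already represented by a feature vector (for example, from a pretrained CNN) and measuring uniformity as the mean pairwise cosine similarity among a tag's images. The thresholds, the mean-vector visual feature, and the function names are hypothetical; the paper's actual measure may differ.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def visual_uniformity(features: List[Sequence[float]]) -> float:
    """Mean pairwise cosine similarity of a tag's image features."""
    pairs = list(combinations(features, 2))
    if not pairs:
        return 0.0
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


def extract_visual_concepts(
    tag_to_features: Dict[str, List[Sequence[float]]],
    min_uniformity: float = 0.8,
    min_images: int = 2,
) -> Dict[str, List[float]]:
    concepts = {}
    for tag, feats in tag_to_features.items():
        if len(feats) < min_images:
            continue
        if visual_uniformity(feats) >= min_uniformity:
            dim = len(feats[0])
            # Visual feature of the concept: mean of its image features.
            concepts[tag] = [sum(f[i] for f in feats) / len(feats) for i in range(dim)]
    return concepts


features = {
    "bridge": [[1.0, 0.0], [0.9, 0.1], [1.0, 0.05]],  # similar-looking images
    "party": [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]],   # visually diverse images
}
print(sorted(extract_visual_concepts(features)))  # ['bridge']
```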

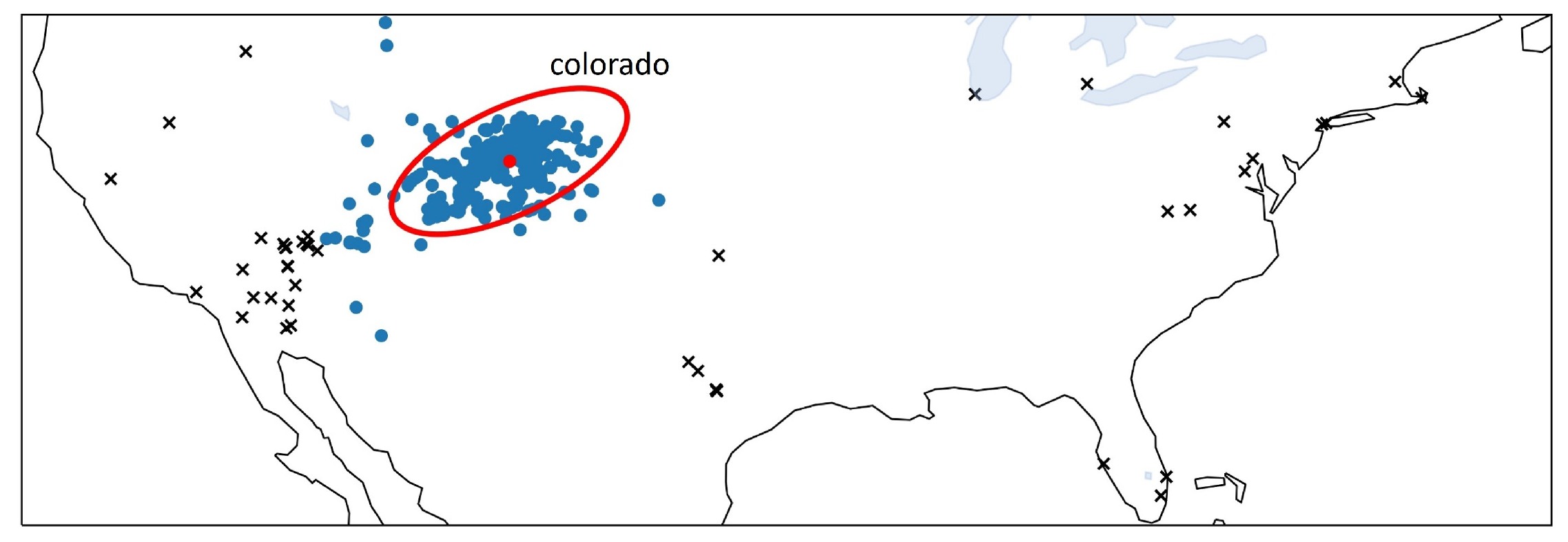
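Step (3) can likewise be sketched, assuming co-occurrence is measured as the fraction of a visual geospatial concept's images that also carry the candidate tag, visual similarity as the cosine similarity of the two concepts' visual features, and candidates are kept when both exceed hypothetical thresholds and ranked by their product. The scoring rule, thresholds, and names are illustrative assumptions, not the paper's exact formulation.

```python
import math
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def select_representatives(
    concept: str,
    image_tags: List[List[str]],                  # tags of each image in the collection
    visual_features: Dict[str, Sequence[float]],  # visual concepts and their features
    min_cooccurrence: float = 0.3,
    min_similarity: float = 0.5,
) -> List[str]:
    with_concept = [set(tags) for tags in image_tags if concept in tags]
    if not with_concept or concept not in visual_features:
        return []
    scored = []
    for cand, feat in visual_features.items():
        if cand == concept:
            continue
        # Fraction of the concept's images that are also tagged with the candidate.
        co = sum(1 for tags in with_concept if cand in tags) / len(with_concept)
        sim = cosine(visual_features[concept], feat)
        if co >= min_cooccurrence and sim >= min_similarity:
            scored.append((co * sim, cand))
    # Highest combined score first.
    return [cand for _, cand in sorted(scored, reverse=True)]


image_tags = [
    ["goldengatebridge", "bridge", "sanfrancisco"],
    ["goldengatebridge", "bridge"],
    ["goldengatebridge", "fog"],
    ["bridge"],
]
visual_features = {
    "goldengatebridge": [1.0, 0.1],
    "bridge": [0.9, 0.2],
    "fog": [0.0, 1.0],
}
print(select_representatives("goldengatebridge", image_tags, visual_features))  # ['bridge']
```

In this toy example, "fog" co-occurs often enough but looks dissimilar, so only the more general and visually similar "bridge" is kept as a representative.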

3.1. Geospatial Concept Extraction

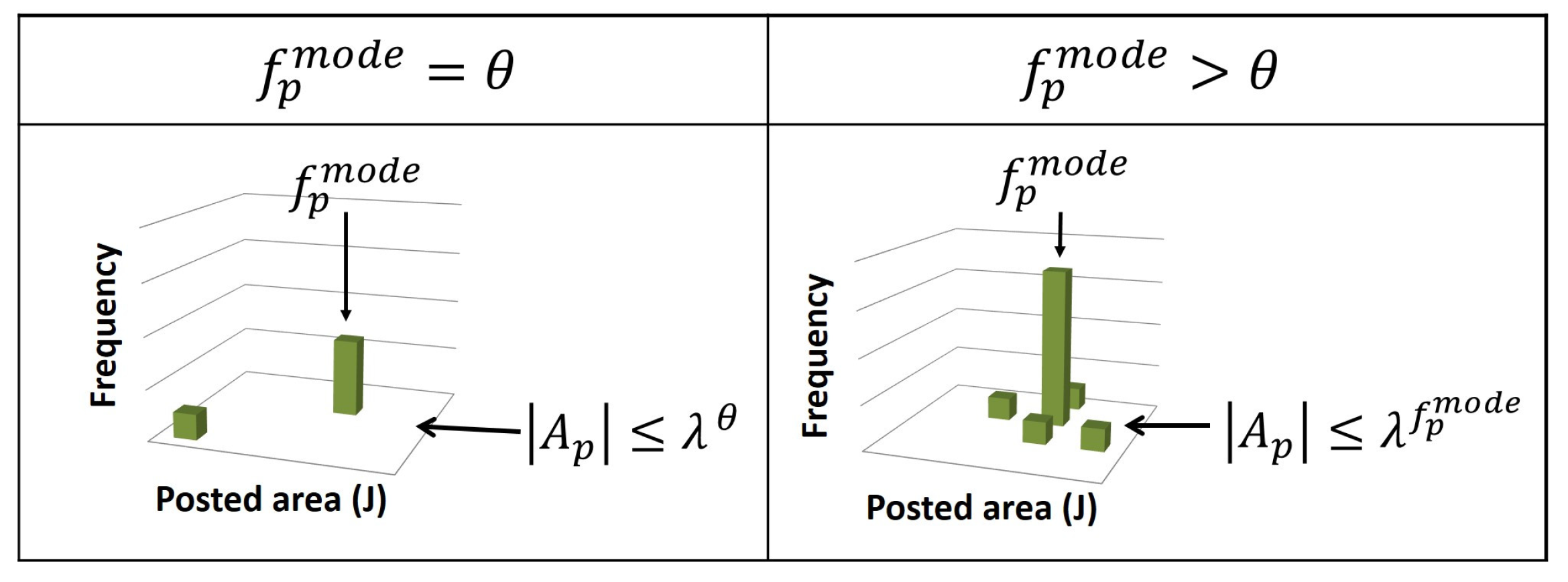

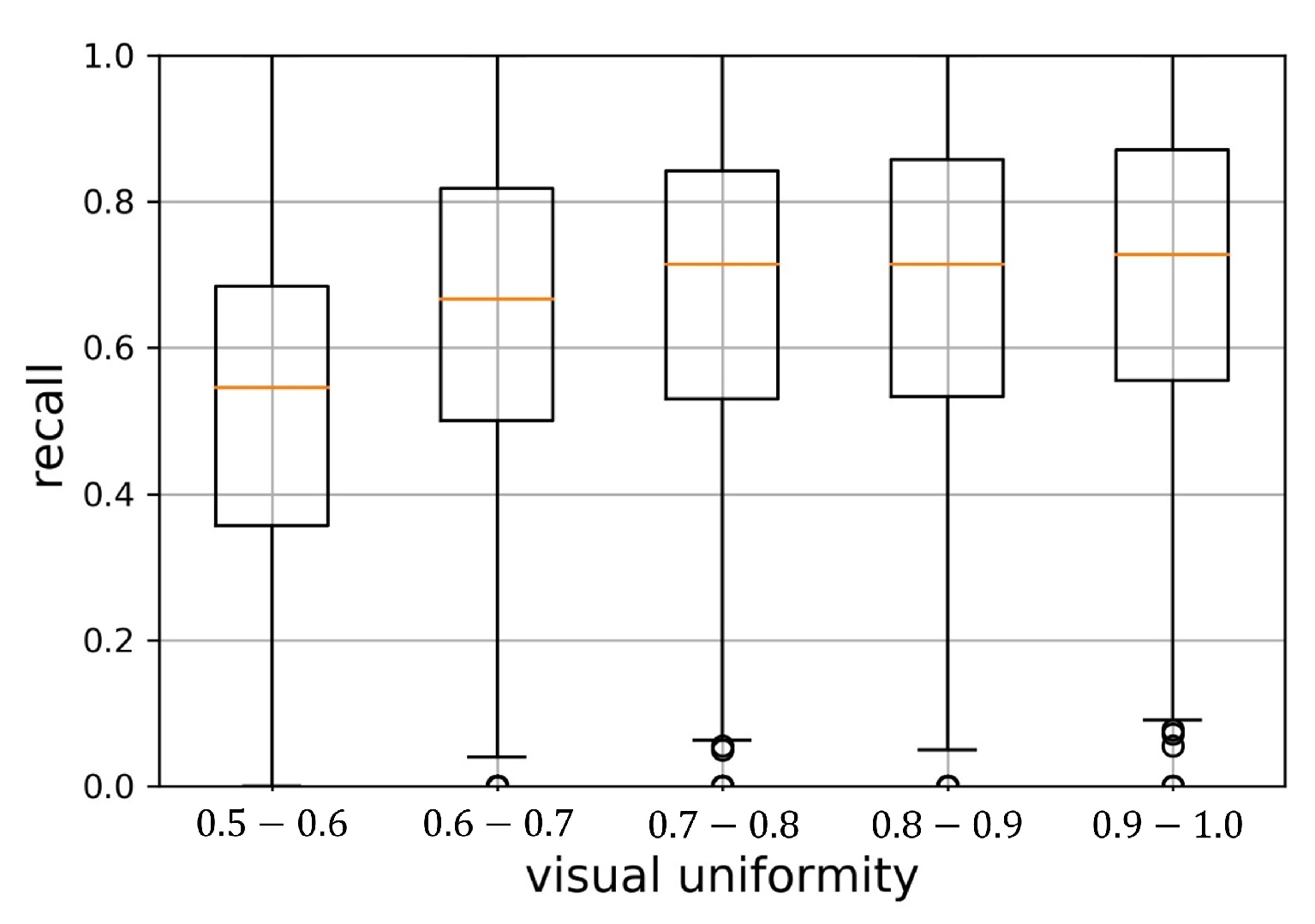

3.2. Visual Concept Extraction

3.3. Representative Visual Concept Extraction

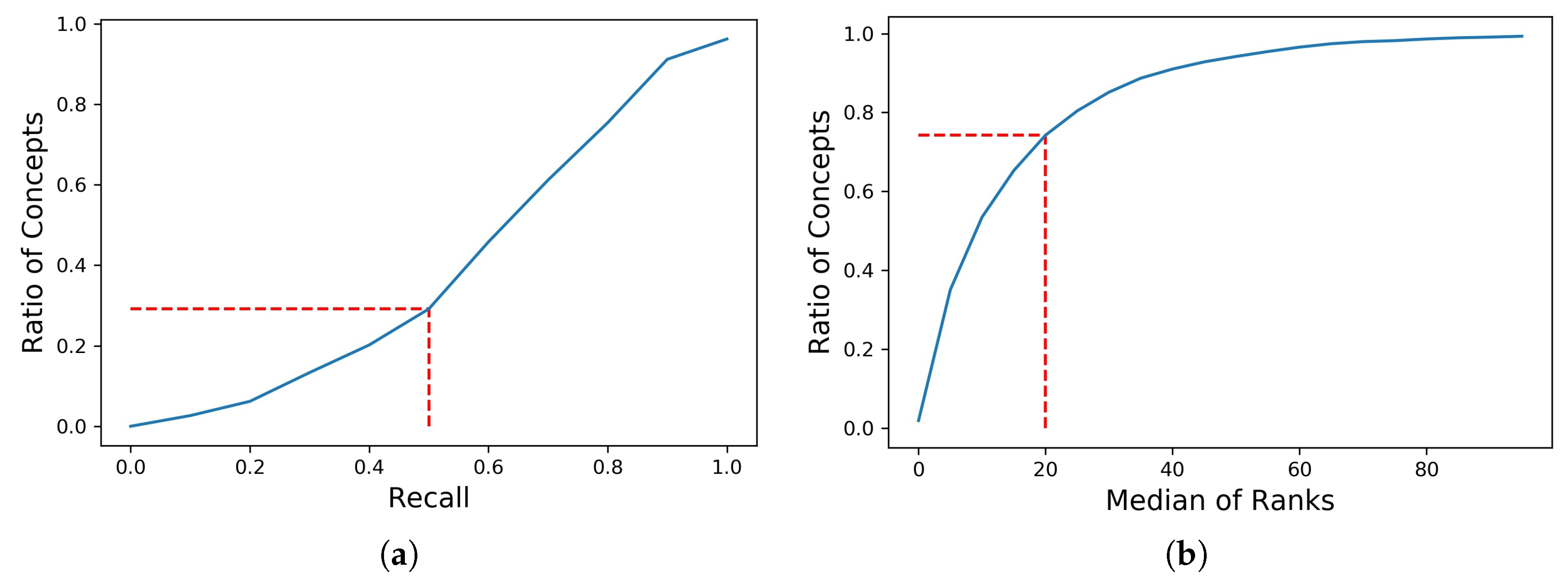

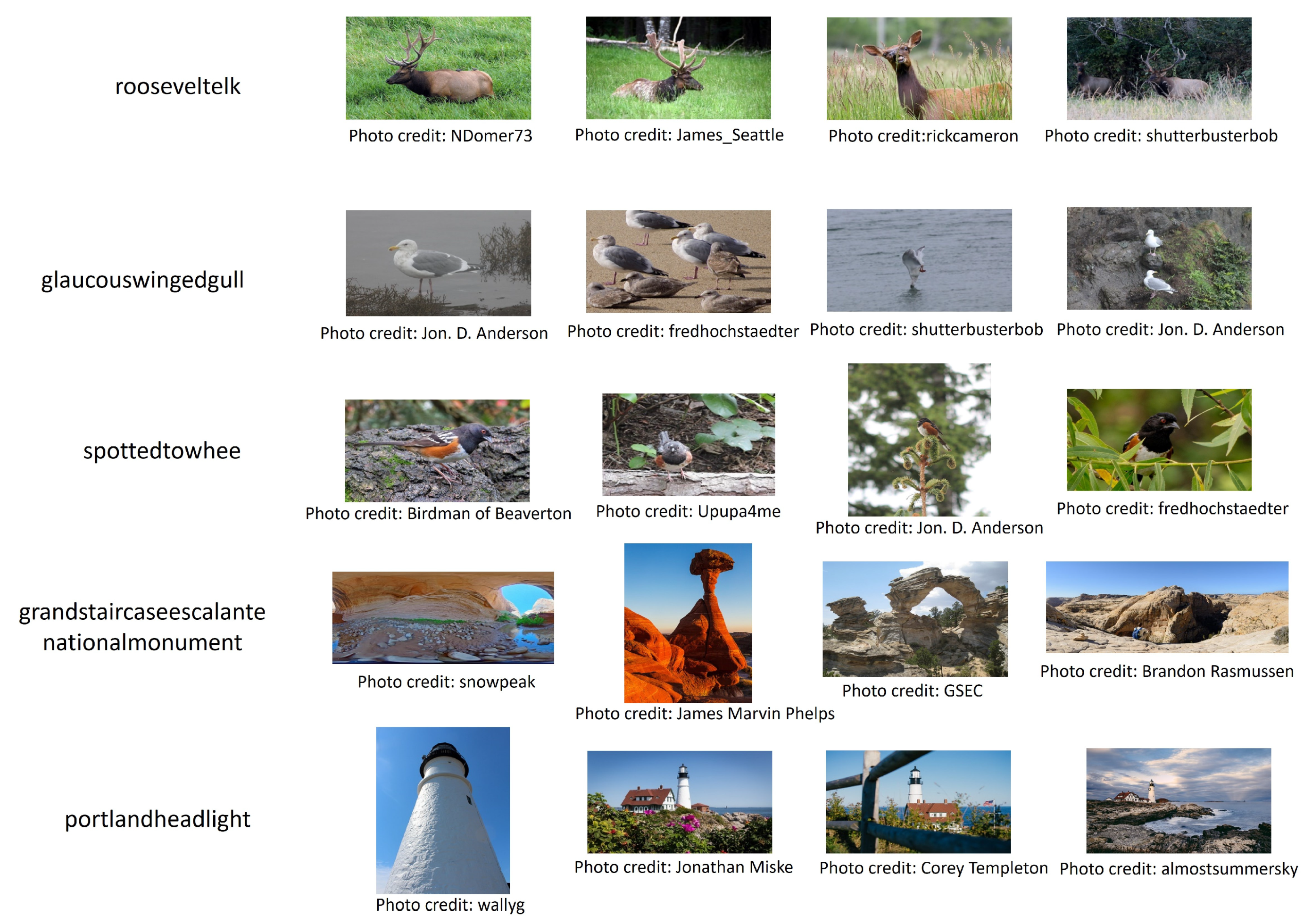

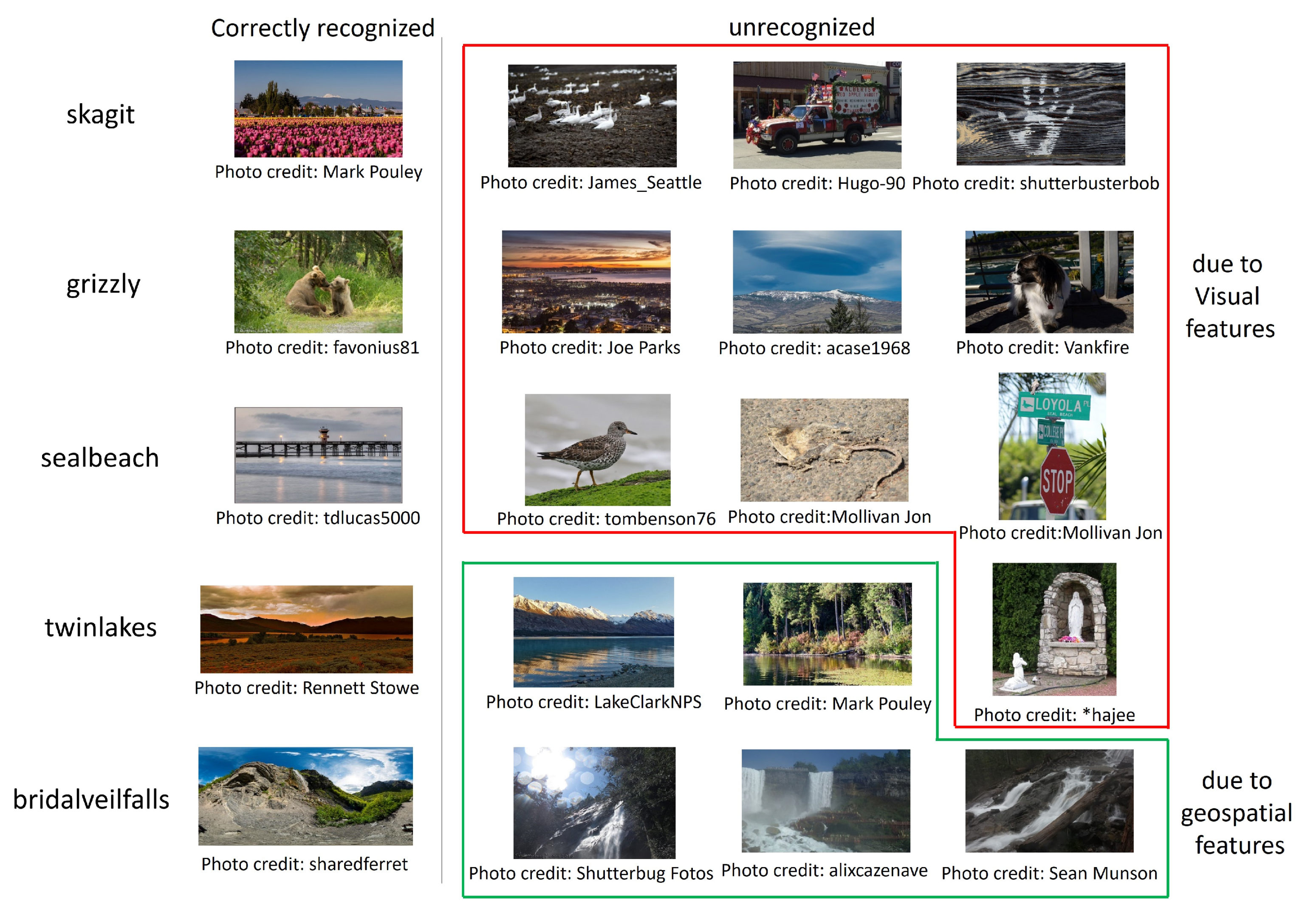

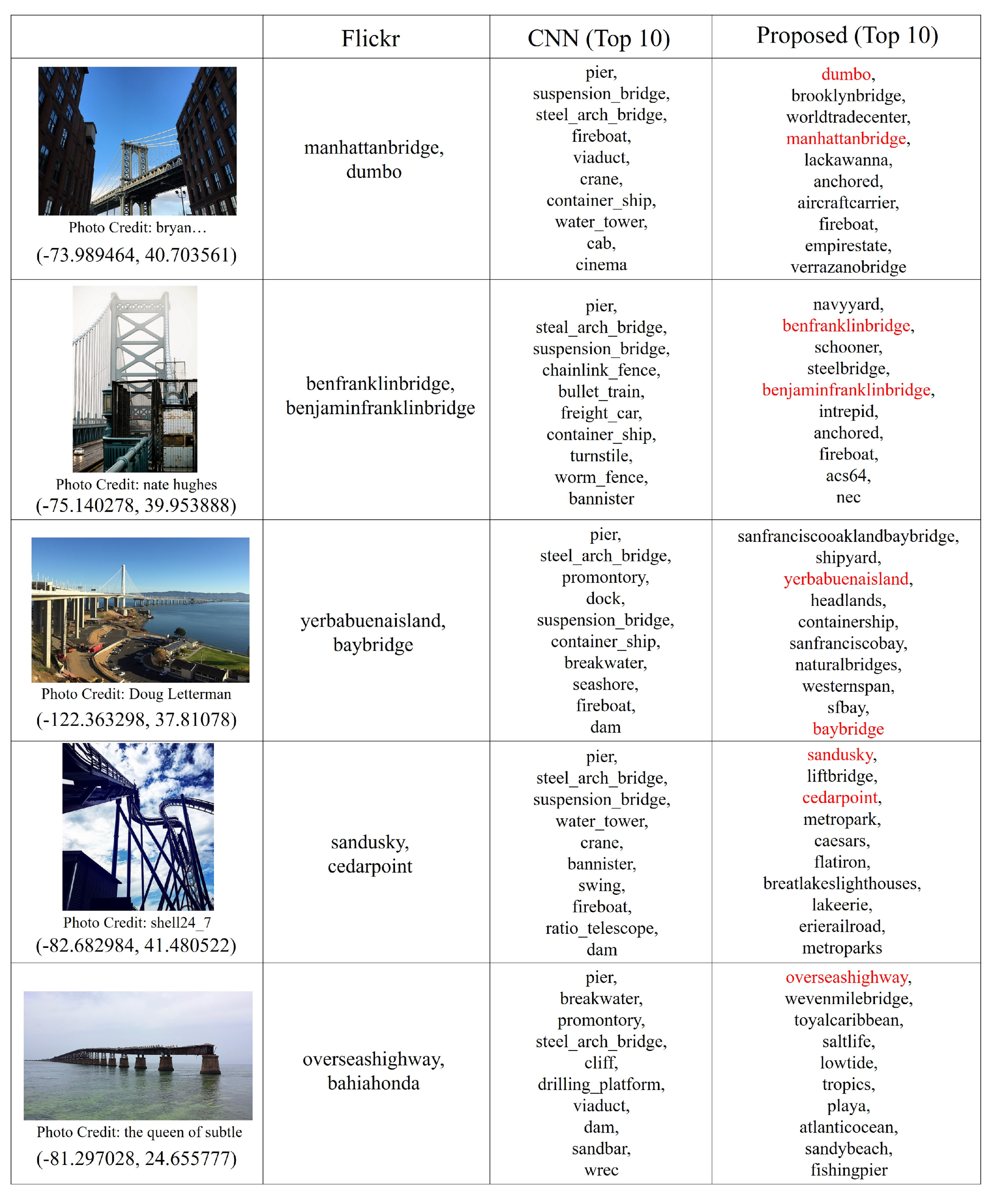

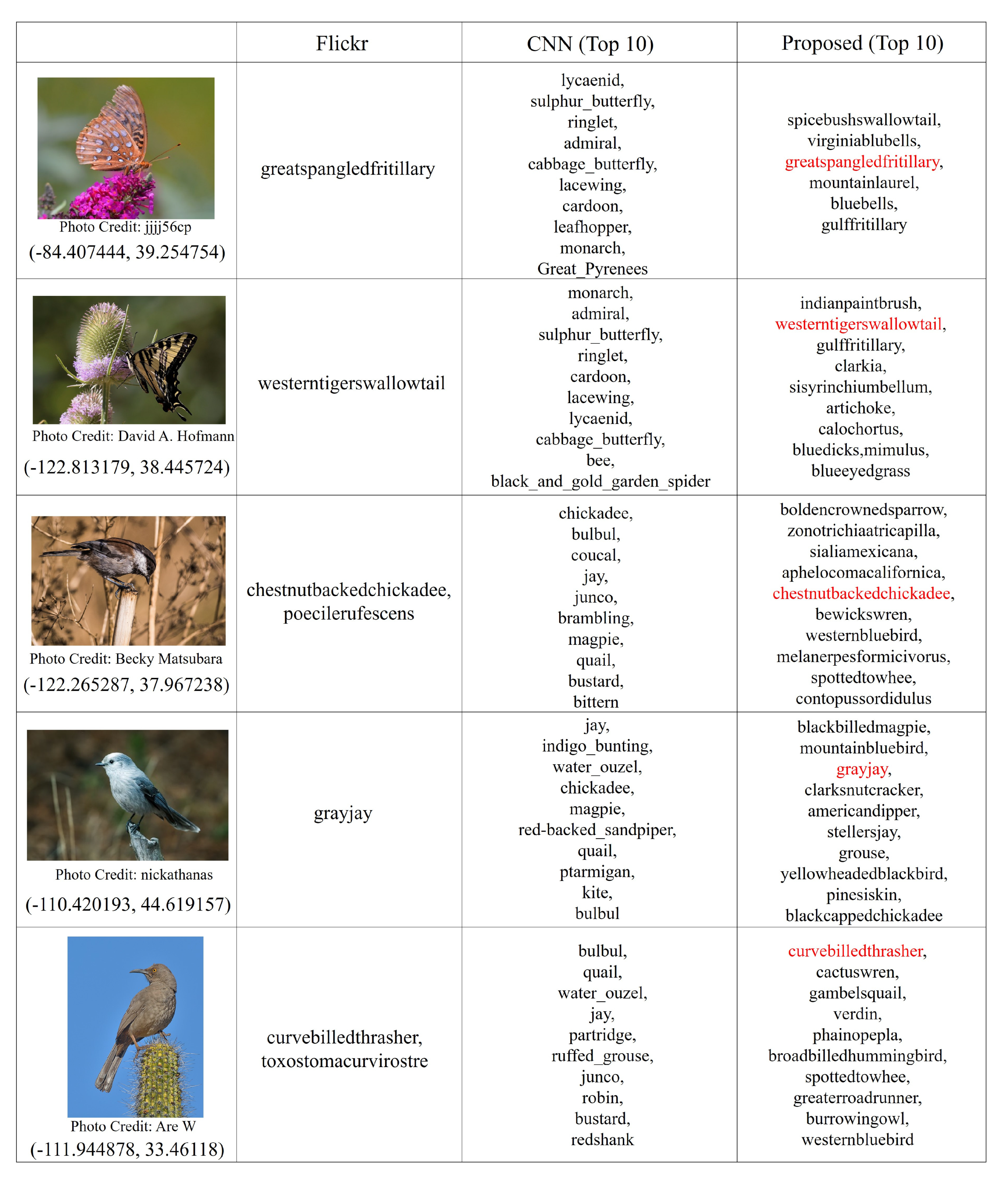

4. Experiments

4.1. Geospatial Concept Graph Construction from Flickr Images

4.2. Evaluation by Geo-Aware Image Recognition

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Wei, X.S.; Wu, J.; Cui, Q. Deep learning for fine-grained image analysis: A survey. arXiv 2019, arXiv:1907.03069.

- Berg, T.; Liu, J.; Woo Lee, S.; Alexander, M.L.; Jacobs, D.W.; Belhumeur, P.N. Birdsnap: Large-scale fine-grained visual categorization of birds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 2011–2018.

- Mac Aodha, O.; Cole, E.; Perona, P. Presence-only geographical priors for fine-grained image classification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9596–9606.

- Chu, G.; Potetz, B.; Wang, W.; Howard, A.; Song, Y.; Brucher, F.; Leung, T.; Adam, H. Geo-aware networks for fine-grained recognition. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Seoul, Korea, 27 October–2 November 2019.

- Van Horn, G.; Mac Aodha, O.; Song, Y.; Cui, Y.; Sun, C.; Shepard, A.; Adam, H.; Perona, P.; Belongie, S. The iNaturalist species classification and detection dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8769–8778.

- Flickr. Available online: https://www.flickr.com/ (accessed on 26 May 2020).

- Sun, C.; Gan, C.; Nevatia, R. Automatic Concept Discovery from Parallel Text and Visual Corpora. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 2596–2604.

- Chen, X.; Shrivastava, A.; Gupta, A. NEIL: Extracting visual knowledge from web data. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 1409–1416.

- Learning everything about anything: webly-supervised visual concept learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 3270–3277.

- Golge, E.; Duygulu, P. ConceptMap: mining noisy web data for concept learning. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 439–455.

- Qiu, S.; Wang, X.; Tang, X. Visual semantic complex network for web images. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 3623–3630.

- Tsai, D.; Jing, Y.; Liu, Y.; Rowley, H.A.; Ioffe, S.; Rehg, J.M. Large-scale image annotation using visual synset. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 611–618.

- Zhou, B.; Jagadeesh, V.; Piramuthu, R. ConceptLearner: discovering visual concepts from weakly labeled image collections. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1492–1500.

- Moxley, E.; Kleban, J.; Manjunath, B. SpiritTagger: a geo-aware tag suggestion tool mined from Flickr. In Proceedings of the ACM International Conference on Multimedia Information Retrieval, Vancouver, BC, Canada, 30–31 October 2008; pp. 24–30.

- Silva, A.; Martins, B. Tag recommendation for georeferenced photos. In Proceedings of the ACM International Workshop on Location-Based Social Networks, San Diego, CA, USA, 30 November 2011; pp. 57–64.

- Liao, S.; Li, X.; Shen, H.T.; Yang, Y.; Du, X. Tag features for geo-aware image classification. IEEE Trans. Multimed. 2015, 17, 1058–1067.

- Cui, Y.; Song, Y.; Sun, C.; Howard, A.; Belongie, S. Large scale fine-grained categorization and domain-specific transfer learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4109–4118.

- Zhu, X.; Anguelov, D.; Ramanan, D. Capturing long-tail distributions of object subcategories. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 915–922.

- Deng, J.; Ding, N.; Jia, Y.; Frome, A.; Murphy, K.; Bengio, S.; Li, Y.; Neven, H.; Adam, H. Large-scale object classification using label relation graphs. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 48–64.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Chollet, F. Xception: deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: a large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Zhou, B.; Lapedriza, A.; Xiao, J.; Torralba, A.; Oliva, A. Learning deep features for scene recognition using places database. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 487–495.

- Marino, K.; Salakhutdinov, R.; Gupta, A. The more you know: using knowledge graphs for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Malisiewicz, T.; Efros, A. Beyond categories: the visual memex model for reasoning about object relationships. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 7–10 December 2009; pp. 1222–1230.

- Fang, Y.; Kuan, K.; Lin, J.; Tan, C.; Chandrasekhar, V. Object detection meets knowledge graphs. In Proceedings of the International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 1661–1667.

- Zhang, D.; Cui, M.; Yang, Y.; Yang, P.; Xie, C.; Liu, D.; Yu, B.; Cheng, Z. Knowledge graph-based image classification refinement. IEEE Access 2019, 7, 57678–57690.

- WordNet. Available online: https://wordnet.princeton.edu/ (accessed on 26 May 2020).

- DBpedia. Available online: https://wiki.dbpedia.org/ (accessed on 26 May 2020).

- Russell, B.C.; Torralba, A.; Murphy, K.P.; Freeman, W.T. LabelMe: a database and web-based tool for image annotation. Int. J. Comput. Vis. 2008, 77, 157–173.

- Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.J.; Shamma, D.A.; et al. Visual genome: connecting language and vision using crowdsourced dense image annotations. Int. J. Comput. Vis. 2017, 123, 32–73.

- Chen, T.; Lin, L.; Chen, R.; Wu, Y.; Luo, X. Knowledge-embedded representation learning for fine-grained image recognition. arXiv preprint 2018, arXiv:1807.00505.

- Xu, H.; Qi, G.; Li, J.; Wang, M.; Xu, K.; Gao, H. Fine-grained image classification by visual-semantic embedding. In Proceedings of the International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 1043–1049.

- Krause, J.; Sapp, B.; Howard, A.; Zhou, H.; Toshev, A.; Duerig, T. The unreasonable effectiveness of noisy data for fine-grained recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 301–320.

- Papadopoulos, S.; Zigkolis, C.; Kompatsiaris, Y.; Vakali, A. Cluster-based landmark and event detection for tagged photo collections. IEEE Multimed. 2010, 52–63.

- Zheng, Y.T.; Zhao, M.; Song, Y.; Adam, H.; Buddemeier, U.; Bissacco, A.; Brucher, F.; Chua, T.S.; Neven, H. Tour the world: building a web-scale landmark recognition engine. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1085–1092.

- Crandall, D.J.; Backstrom, L.; Huttenlocher, D.; Kleinberg, J. Mapping the world’s photos. In Proceedings of the International Conference on World Wide Web, Madrid, Spain, 20–24 April 2009; pp. 761–770.

- Tang, K.; Paluri, M.; Fei-Fei, L.; Fergus, R.; Bourdev, L. Improving image classification with location context. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 1008–1016.

- Rattenbury, T.; Naaman, M. Methods for extracting place semantics from Flickr tags. ACM Trans. Web 2009, 3, 1–30.

- Zheng, X.; Han, J.; Sun, A. A survey of location prediction on Twitter. IEEE Trans. Knowl. Data Eng. 2018, 30, 1652–1671.

- Lim, J.; Nitta, N.; Nakamura, K.; Babaguchi, N. Constructing geographic dictionary from streaming geotagged tweets. ISPRS Int. J. Geo-Inf. 2019, 8, 216.

- Roller, S.; Speriosu, M.; Rallapalli, S.; Wing, B.; Baldridge, J. Supervised text-based geolocation using language models on an adaptive grid. In Proceedings of the Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, Jeju Island, Korea, 12–14 July 2012; pp. 1500–1510.

- Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

- GeoNames. Available online: http://www.geonames.org/ (accessed on 26 May 2020).

- Stopwords ISO. Available online: https://github.com/stopwords-iso/stopwords-iso (accessed on 26 May 2020).

| | ♯ of Sub-Areas J | 8 | 16 | 32 | 64 | 128 | 256 |
|---|---|---|---|---|---|---|---|
| | ♯ of candidate place names | 7312 | 6710 | 6236 | 5859 | 5528 | 5229 |
| | ♯ of candidate stop words | 148 | 118 | 86 | 69 | 53 | 38 |
| 4 | ♯ of extracted place names | 4687 | 4529 | 4443 | 4380 | 4313 | 4215 |
| 4 | ♯ of extracted stop words | 2 | 1 | 1 | 2 | 3 | 2 |
| 5 | ♯ of extracted place names | 4935 | 4671 | 4545 | 4477 | 4388 | 4270 |
| 5 | ♯ of extracted stop words | 3 | 1 | 1 | 3 | 3 | 2 |
| 6 | ♯ of extracted place names | 5237 | 4790 | 4616 | 4536 | 4435 | 4322 |
| 6 | ♯ of extracted stop words | 11 | 2 | 2 | 3 | 3 | 3 |
| 7 | ♯ of extracted place names | 5561 | 4915 | 4710 | 4604 | 4499 | 4357 |
| 7 | ♯ of extracted stop words | 33 | 2 | 2 | 3 | 3 | 4 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Nitta, N.; Nakamura, K.; Babaguchi, N. Constructing Geospatial Concept Graphs from Tagged Images for Geo-Aware Fine-Grained Image Recognition. ISPRS Int. J. Geo-Inf. 2020, 9, 354. https://doi.org/10.3390/ijgi9060354
