Watershed Segmentation Algorithm Based on Luv Color Space Region Merging for Extracting Slope Hazard Boundaries

Abstract

1. Introduction

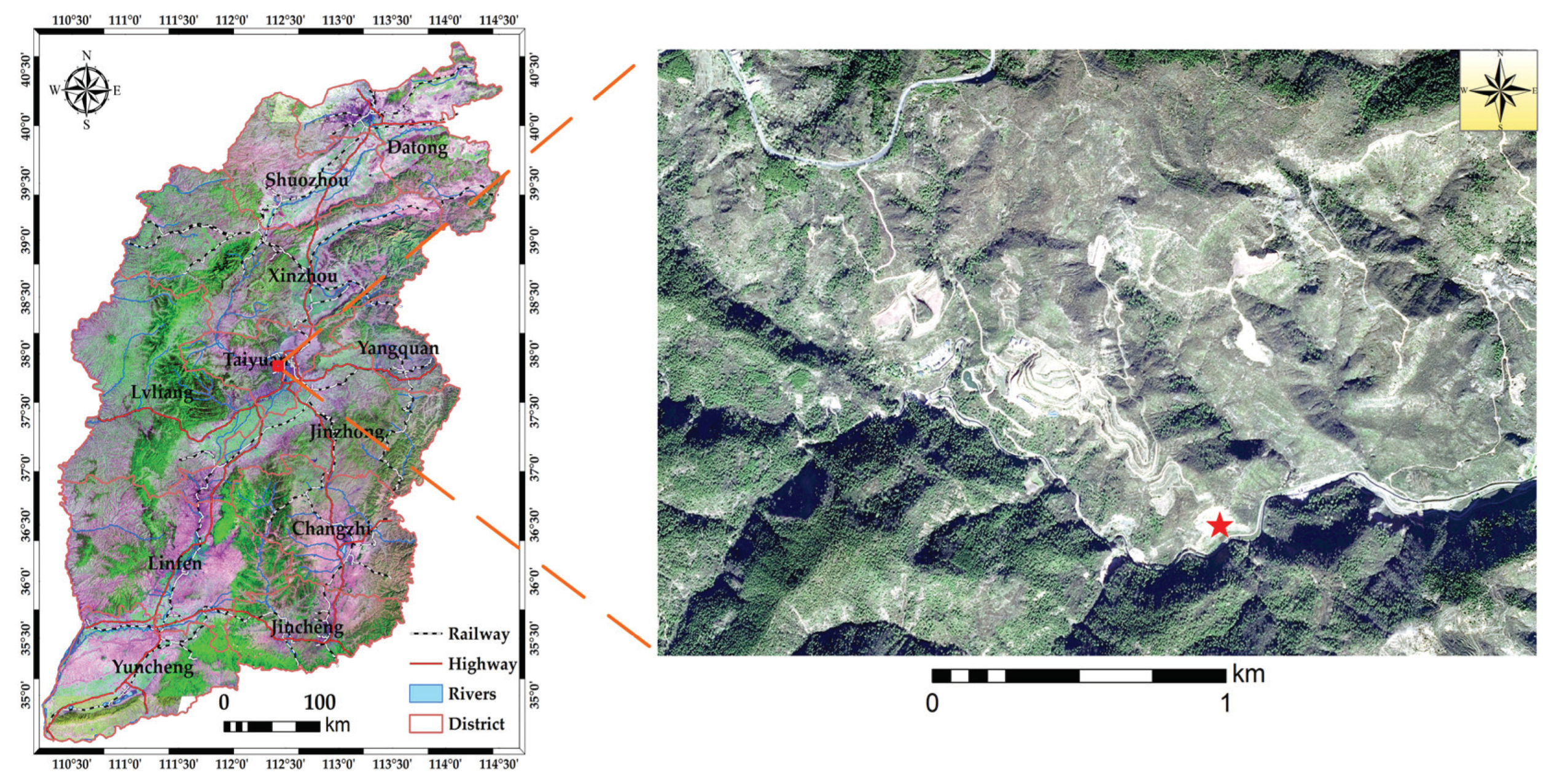

2. Study Area and Data Preprocessing

2.1. Study Area

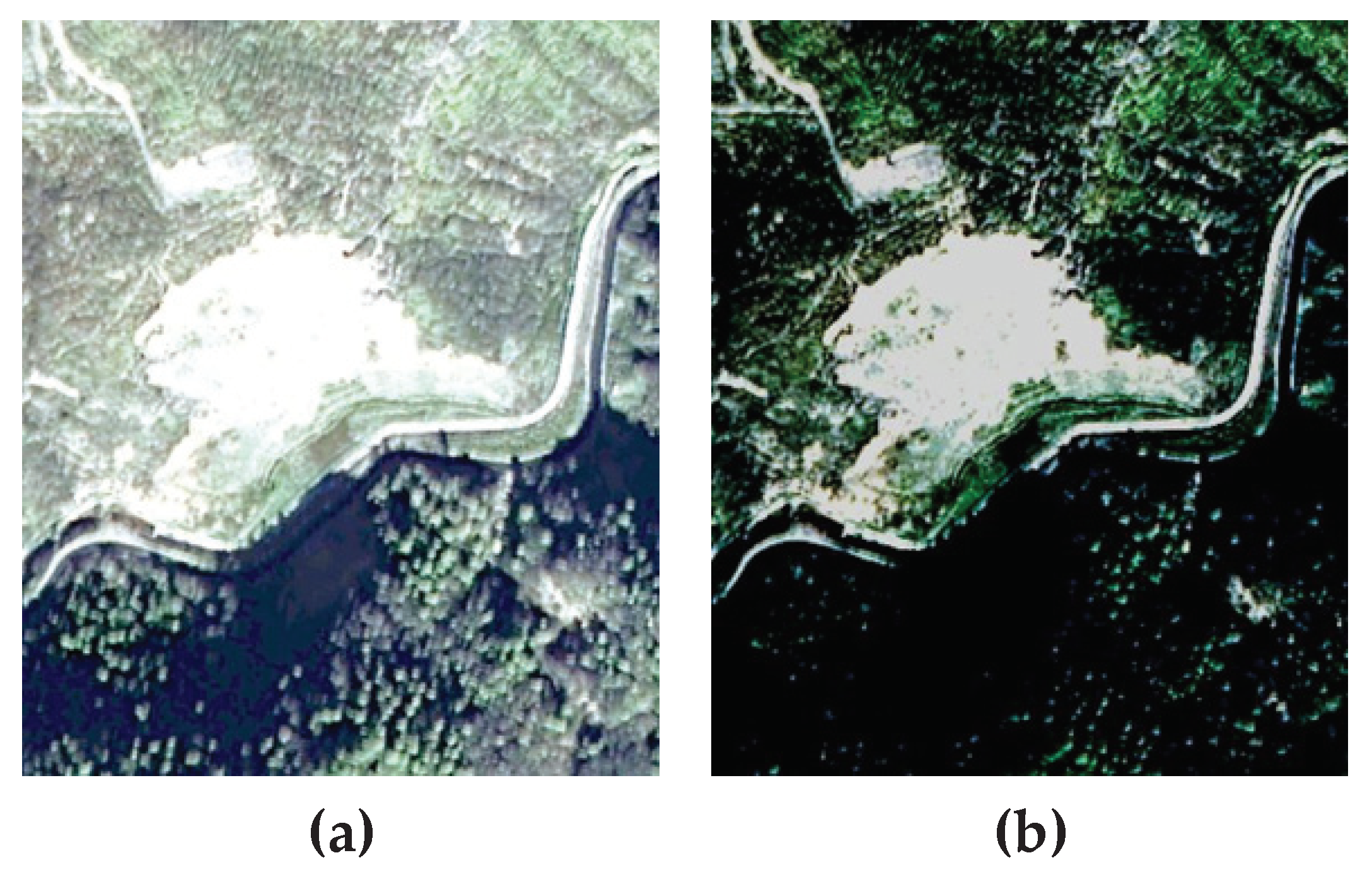

2.2. Data Sources and Preprocessing

3. Methodology

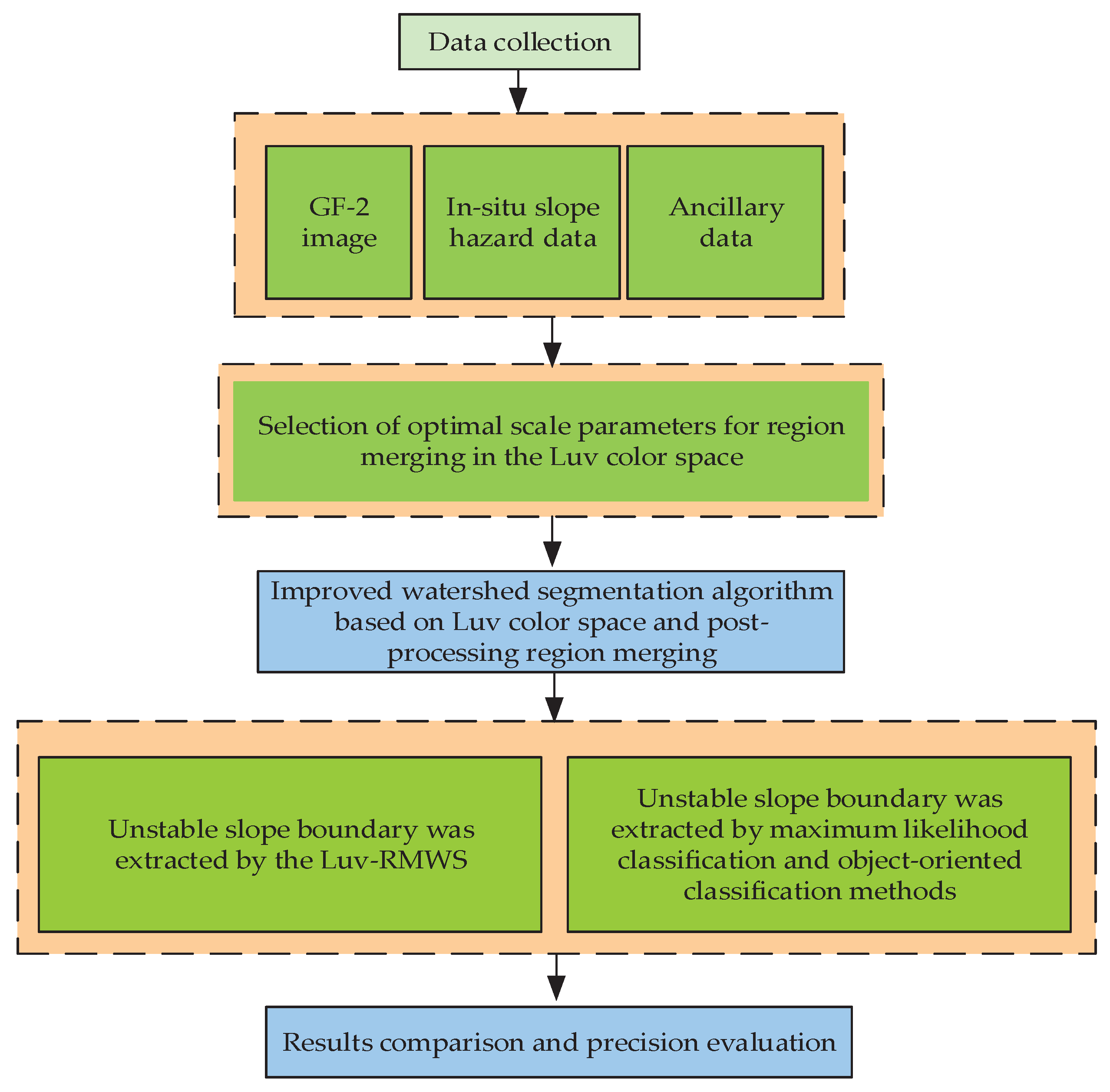

3.1. Technical Procedure
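The watershed transform at the core of this procedure can be illustrated with a marker-based flooding sketch. The Python code below uses a priority-queue flood in the style of Meyer's algorithm, which may differ from the watershed implementation used in this study. The names `watershed`, `gradient`, and `markers` are hypothetical, and seeds are assumed to be supplied. In an unmarked watershed every regional minimum of the gradient acts as a seed, which typically over-segments the image and motivates a subsequent region-merging step.

```python
import heapq


def watershed(gradient, markers):
    """Flood a gradient image from seed markers (Meyer-style priority flood).

    gradient: 2D list of numbers (e.g. a colour-gradient magnitude)
    markers:  2D list of ints, 0 = unlabelled, >0 = seed/basin label
    Returns a new 2D label grid; markers is not modified.
    """
    h, w = len(gradient), len(gradient[0])
    labels = [row[:] for row in markers]
    heap = []
    order = 0  # insertion counter: FIFO among equal gradient values

    def push_neighbours(i, j):
        nonlocal order
        for ni, nj in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
            if 0 <= ni < h and 0 <= nj < w and labels[ni][nj] == 0:
                heapq.heappush(heap, (gradient[ni][nj], order, ni, nj, labels[i][j]))
                order += 1

    # Start flooding from the border of every seed
    for i in range(h):
        for j in range(w):
            if labels[i][j] > 0:
                push_neighbours(i, j)

    # Always extend the basin whose frontier pixel has the lowest gradient
    while heap:
        _, _, i, j, lab = heapq.heappop(heap)
        if labels[i][j]:
            continue  # already claimed by another basin
        labels[i][j] = lab
        push_neighbours(i, j)
    return labels
```

Ties at ridge pixels are resolved by insertion order and no explicit watershed-line pixels are produced; an implementation that needs closed boundaries would label the dividing lines separately.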

3.2. Luv Color Space and Transformation
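For reference, the standard CIE 1976 L*u*v* conversion from 8-bit sRGB pixels can be sketched in Python as below. It assumes sRGB input and takes the reference white as the XYZ of sRGB white under the IEC 61966-2-1 matrix (i.e. D65). The matrix and white point actually used in this study are not stated here, so this is an illustration rather than the authors' implementation.

```python
# sRGB (D65) -> CIE XYZ matrix from IEC 61966-2-1
M = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)
# Reference white = XYZ of sRGB white (row sums), i.e. D65 as encoded by M
XN, YN, ZN = (sum(row) for row in M)

EPS = (6 / 29) ** 3    # CIE threshold, about 0.008856
KAPPA = (29 / 3) ** 3  # about 903.3


def srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer curve for one channel in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def rgb_to_luv(r, g, b):
    """Convert an 8-bit sRGB pixel to (L*, u*, v*)."""
    rgb = [srgb_to_linear(v / 255.0) for v in (r, g, b)]
    x, y, z = (sum(m * c for m, c in zip(row, rgb)) for row in M)

    # Lightness, with the linear segment near black
    yr = y / YN
    l_star = 116.0 * yr ** (1.0 / 3.0) - 16.0 if yr > EPS else KAPPA * yr

    denom = x + 15.0 * y + 3.0 * z
    if denom == 0.0:  # pure black: chromaticity undefined, report zeros
        return 0.0, 0.0, 0.0
    dn = XN + 15.0 * YN + 3.0 * ZN
    # u*, v* measure the offset of (u', v') from the white point, scaled by L*
    u_star = 13.0 * l_star * (4.0 * x / denom - 4.0 * XN / dn)
    v_star = 13.0 * l_star * (9.0 * y / denom - 9.0 * YN / dn)
    return l_star, u_star, v_star
```

White maps to L* = 100 with u* = v* = 0, and black to (0, 0, 0). Because equal Euclidean distances in L*u*v* are intended to be roughly equal perceived colour differences, the space is a natural setting for colour-based region similarity.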

3.3. Region Merging Similarity Measure Based on Luv Color Space
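A minimal sketch of colour-based region merging in Luv space follows. It assumes that the similarity of two adjacent regions is the Euclidean colour difference ΔE*uv between their mean L*u*v* values, and that the most similar adjacent pair is merged first until no pair falls below a threshold. The function names and the `threshold` argument are hypothetical; the similarity measure defined in this section and its scale parameters are not reproduced here.

```python
import math
from collections import defaultdict


def delta_e_uv(a, b):
    """CIE 1976 colour difference: Euclidean distance in L*u*v*."""
    return math.dist(a, b)


def merge_regions(labels, luv, threshold):
    """Greedy merging of adjacent regions by mean-colour similarity.

    labels: 2D list of region ids (e.g. watershed output)
    luv:    2D list of (L*, u*, v*) tuples, same shape as labels
    threshold: hypothetical maximum Delta E*uv allowed for a merge
    Returns {original region id: merged region id}.
    """
    h, w = len(labels), len(labels[0])
    count = defaultdict(int)                      # pixels per region
    total = defaultdict(lambda: [0.0, 0.0, 0.0])  # colour sums per region
    adj = defaultdict(set)                        # 4-connected adjacency
    for i in range(h):
        for j in range(w):
            r = labels[i][j]
            count[r] += 1
            for k in range(3):
                total[r][k] += luv[i][j][k]
            for ni, nj in ((i + 1, j), (i, j + 1)):
                if ni < h and nj < w and labels[ni][nj] != r:
                    adj[r].add(labels[ni][nj])
                    adj[labels[ni][nj]].add(r)

    def mean(r):
        return tuple(t / count[r] for t in total[r])

    parent = {r: r for r in count}
    while True:
        # Most similar adjacent pair below the threshold, if any
        best = None
        for a in adj:
            for b in adj[a]:
                if a < b:
                    d = delta_e_uv(mean(a), mean(b))
                    if d < threshold and (best is None or d < best[0]):
                        best = (d, a, b)
        if best is None:
            break
        _, a, b = best
        # Absorb b into a: pool pixel counts and colour sums, rewire edges
        count[a] += count.pop(b)
        for k in range(3):
            total[a][k] += total[b][k]
        del total[b]
        for n in adj.pop(b):
            adj[n].discard(b)
            if n != a:
                adj[n].add(a)
                adj[a].add(n)
        parent[b] = a

    def find(r):
        while parent[r] != r:
            r = parent[r]
        return r

    return {r: find(r) for r in parent}
```

Recomputing the merged mean after every merge keeps the comparison faithful to the current regions, but the global search for the best pair is quadratic per step; with tens of thousands of initial patches a priority queue over region pairs would be the practical choice.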

3.3.1. Optimal Segmentation Scale Parameters

3.3.2. Optimal Merging Scale Parameters

4. Comparative Methods and Accuracy Evaluation

4.1. Maximum Likelihood Classification

4.2. Object-Oriented Classification

4.3. Accuracy Evaluation Criteria

5. Results

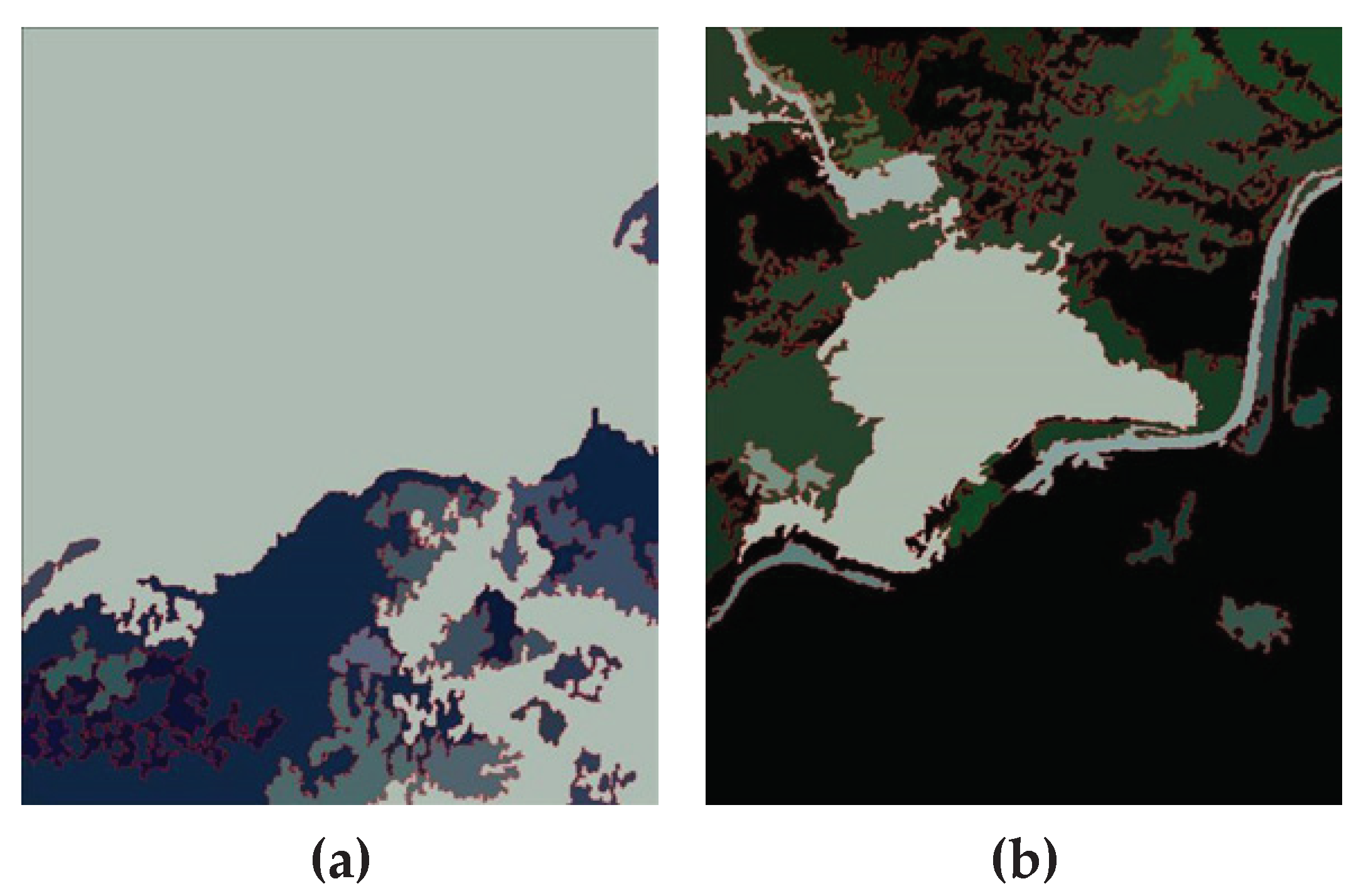

5.1. Segmentation Results Based on the Experimental Image

5.2. Selection of Optimal Scale Parameters

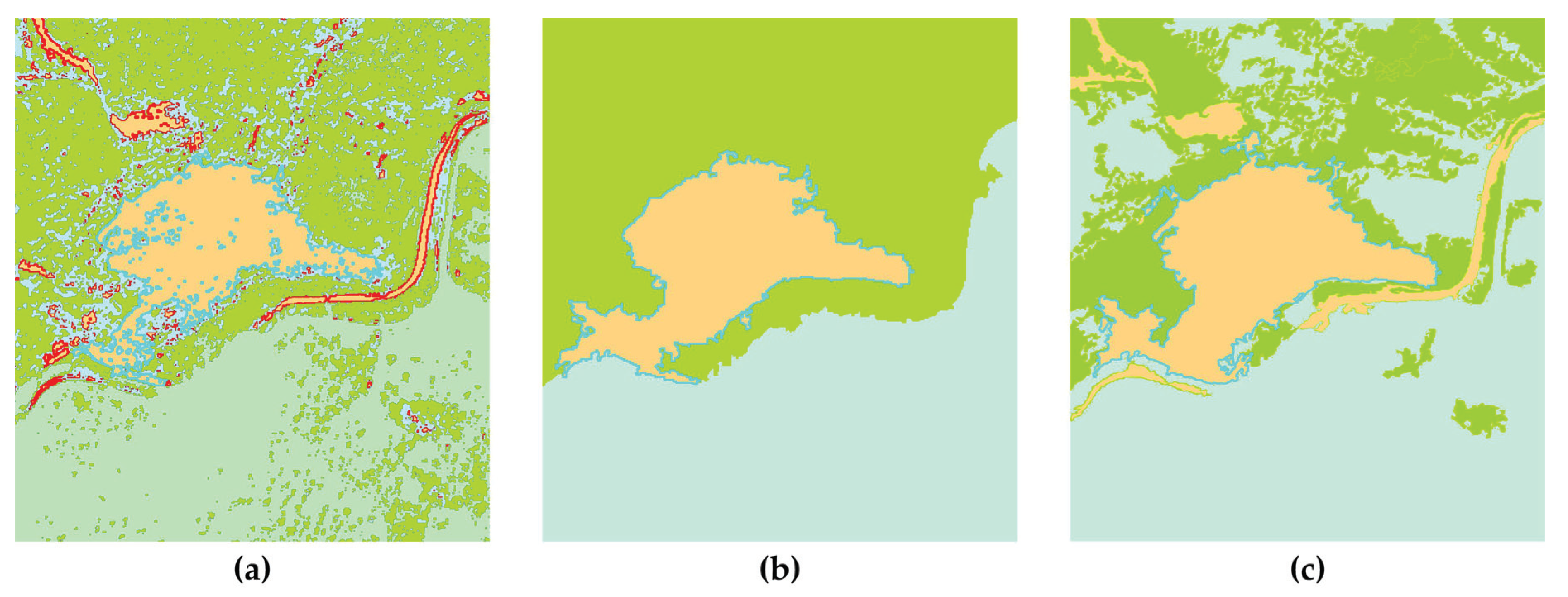

5.3. Extraction of Slope Hazard Boundary

5.4. Processing Time

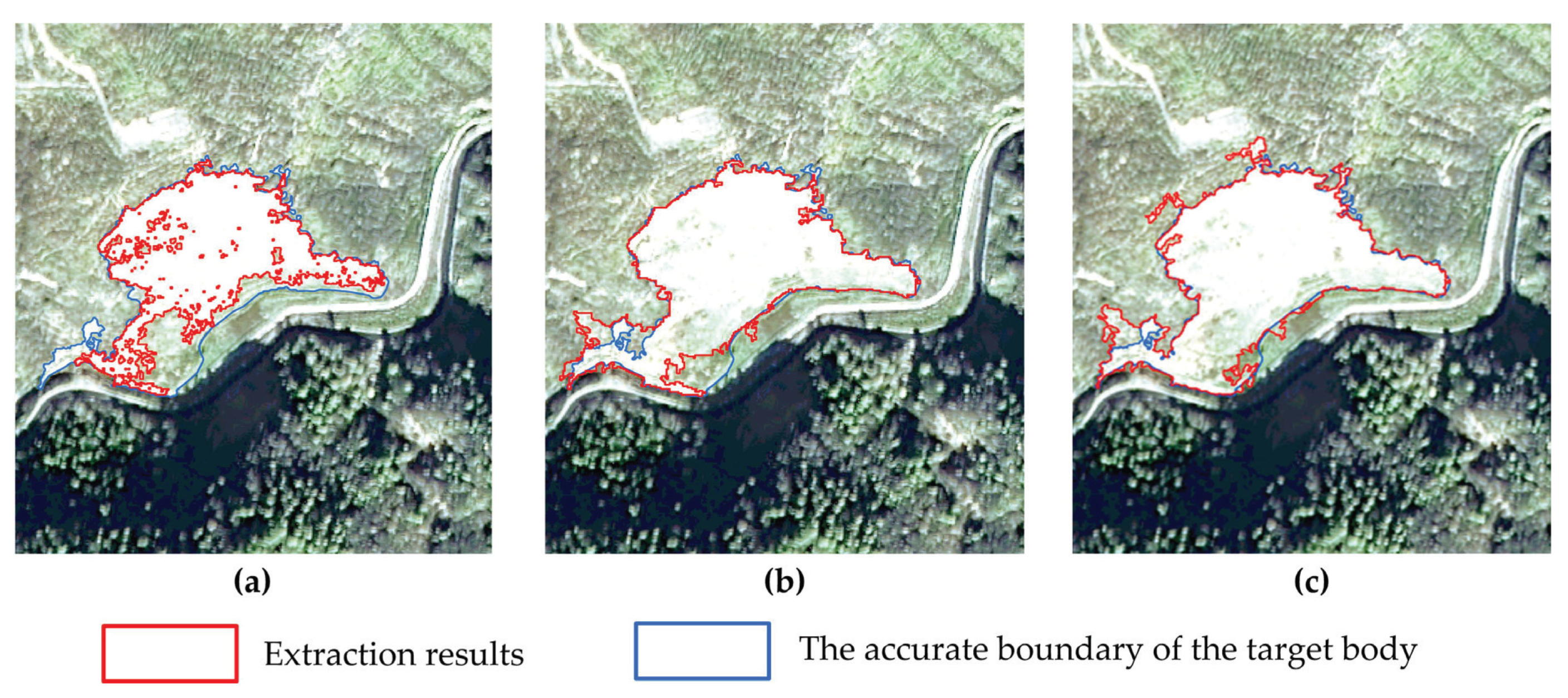

5.5. Extraction Accuracy

6. Discussion

6.1. Analysis of Image Segmentation before and after Contrast Enhancement

6.2. Analysis of Optimal Scale Parameters

6.3. Analysis of Extraction Effect

6.4. Analysis of the Proposed Method

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Indicator | Equation | Note |
|---|---|---|
| δA | | A smaller δA indicates higher segmentation accuracy; a larger δA indicates lower accuracy. |
| δP | | A smaller δP indicates higher segmentation accuracy; a larger δP indicates lower accuracy. |

| Image Category | Patches after Watershed Segmentation | Watershed Segmentation Time (ms) | Patches after Region Merging | Region Merging Time (ms) | C | D |
|---|---|---|---|---|---|---|
| Original image | 103,939 | 1248 | 22 | 74,896 | 500 | 400 |
| Enhanced image | 62,716 | 1076 | 33 | 38,626 | 500 | 400 |
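The effect of contrast enhancement reported in the table above can be quantified with a few lines of arithmetic. The Python snippet below only recomputes relative reductions from the tabulated values and introduces no new data; the dictionary keys are illustrative names.

```python
# Figures copied from the table above (times in ms)
ORIGINAL = {"patches": 103_939, "watershed_ms": 1248, "merge_ms": 74_896}
ENHANCED = {"patches": 62_716, "watershed_ms": 1076, "merge_ms": 38_626}


def reduction_pct(before, after):
    """Relative reduction in percent; positive means smaller after enhancement."""
    return 100.0 * (before - after) / before


reductions = {k: reduction_pct(ORIGINAL[k], ENHANCED[k]) for k in ORIGINAL}
for key, pct in reductions.items():
    print(f"{key}: {pct:.1f}% lower on the enhanced image")
```

On these figures, enhancement reduces the number of initial watershed patches by about 39.7%, watershed time by about 13.8%, and region-merging time by about 48.4%, while the number of patches remaining after merging rises from 22 to 33.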

| Method | Running Time | Experimental Platform | Note |
|---|---|---|---|
| MLC | 381.000 s | ArcGIS | The whole process, including sample training, classification, and post-processing such as merging small fragmented patches, required more than 1 h. |
| OOC | 11.470 s | eCognition | Automatic image segmentation and merging; segmentation took 11.470 s and produced 3 patches. |
| Luv-RMWS | 39.702 s | RSImage-WS | Automatic image segmentation and merging. Watershed segmentation took 1.076 s and produced 62,716 patches; region merging then took 38.626 s and reduced them to 33 patches. |
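The Luv-RMWS running time in the table above is the sum of its two stages, and these stage times match the enhanced-image row of the preceding table (1076 ms and 38,626 ms). The snippet below checks that sum and expresses the reported times as ratios; it uses only the tabulated values, and the constant names are illustrative.

```python
# Running times copied from the table above, in seconds
WATERSHED_S = 1.076
MERGE_S = 38.626
MLC_S = 381.000  # reported running time; the whole MLC workflow took > 1 h
OOC_S = 11.470

total_s = WATERSHED_S + MERGE_S
print(f"Luv-RMWS total: {total_s:.3f} s")
print(f"MLC takes {MLC_S / total_s:.1f}x as long as Luv-RMWS")
print(f"Luv-RMWS takes {total_s / OOC_S:.1f}x as long as OOC")
```

The two stages add up to 39.702 s; MLC's reported time is about 9.6 times that, and OOC is about 3.5 times faster than Luv-RMWS. Since the three methods ran on different platforms (ArcGIS, eCognition, RSImage-WS), these ratios are indicative rather than a controlled benchmark.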

| Indicator | MLC | OOC | Luv-RMWS |
|---|---|---|---|
| | 29.02% | 8.71% | 4.92% |
| | 3.82% | 1.90% | 1.60% |
| | 96.18% | 98.10% | 98.40% |
| | 99.85% | 94.06% | 92.90% |
| | 71.03% | 91.29% | 95.08% |
| | 80.93% | 91.56% | 93.05% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, M.; Xue, Y.; Ge, Y.; Zhao, J. Watershed Segmentation Algorithm Based on Luv Color Space Region Merging for Extracting Slope Hazard Boundaries. ISPRS Int. J. Geo-Inf. 2020, 9, 246. https://doi.org/10.3390/ijgi9040246
