Impact of UAV Surveying Parameters on Mixed Urban Landuse Surface Modelling

Abstract

1. Introduction

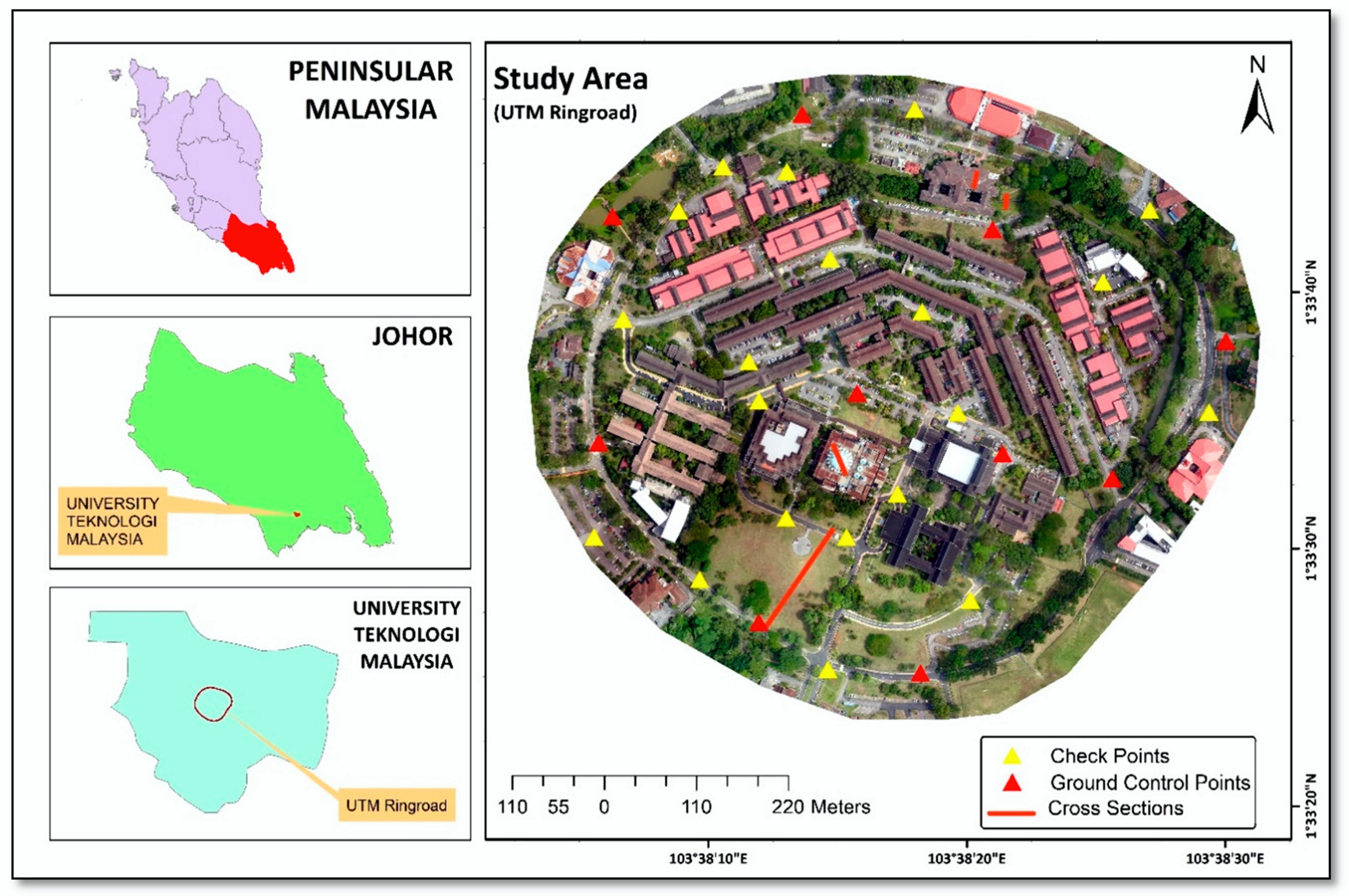

2. Study Site

3. Data Acquisition

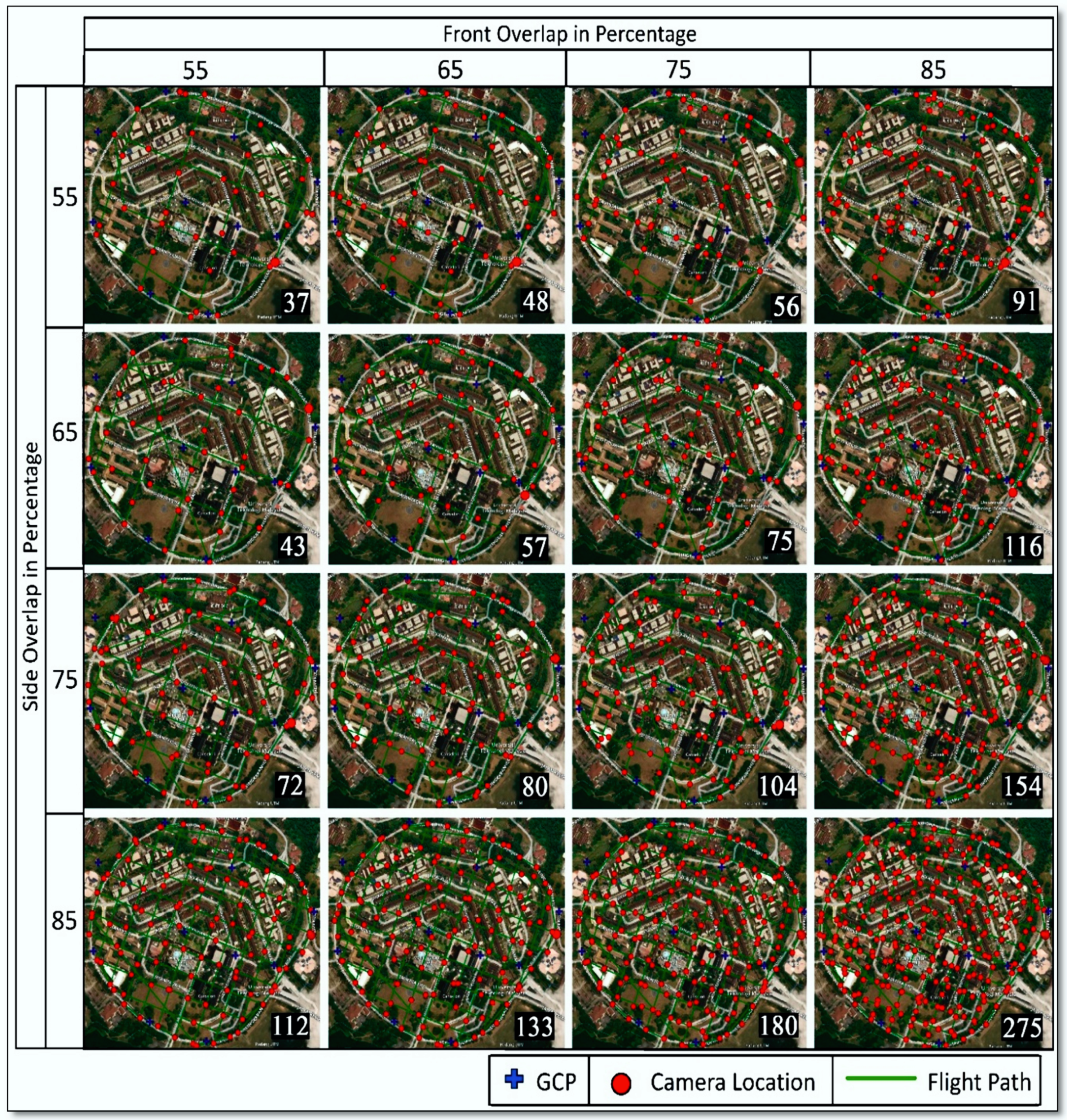

3.1. UAV Flight Planning and Images Acquisition

3.2. Base Data Acquisition

4. Data Processing

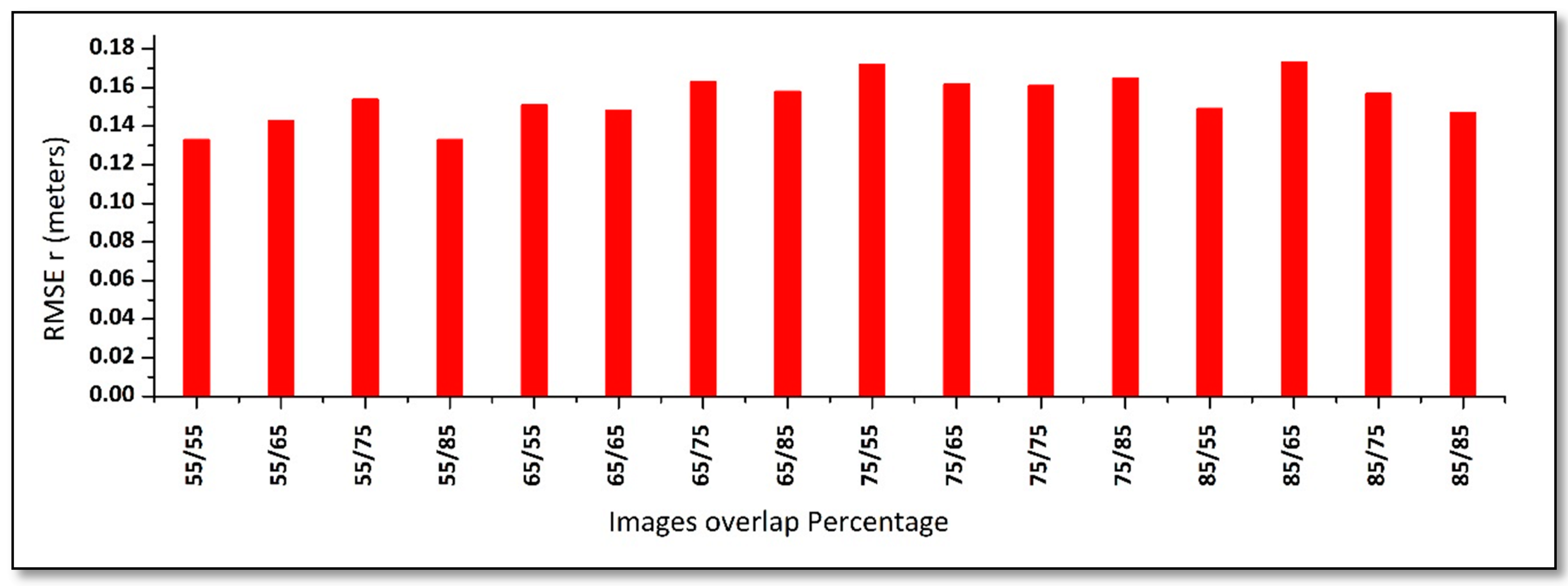

5. Analysis

6. Conclusions and Recommendations

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Flight Parameters | Specifications |
|---|---|
| Flight Height | 300 m |
| Flight Speed | 15 m/s |
| Flight Mode | Autonomous |
| Camera | 20 MP |
| Focal Length | 24 mm equivalent |
| GSD | 8.36–8.45 |
| Aspect Ratio | 4:3 (4864 × 3648) |
| UAV Survey Time | 10.00 am–12 noon |
| Image Format | .jpg |
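
The GSD row depends on flight height, focal length, and pixel size. As a rough illustration only (not the authors' computation), the standard pinhole relation GSD = H × p / f can be sketched in Python. The flight height is taken from the table; the physical focal length and pixel pitch are assumed values for a hypothetical 1-inch, 20 MP sensor, because the table lists only the 35 mm equivalent focal length and does not state the GSD units.

```python
def ground_sampling_distance_cm(flight_height_m, focal_length_mm, pixel_pitch_um):
    """Nadir GSD in cm/pixel from the pinhole relation GSD = H * p / f."""
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    return flight_height_m * pixel_pitch_m / focal_length_m * 100.0


# Flight height comes from the table. The other two values are ASSUMPTIONS
# for a hypothetical 1-inch 20 MP sensor and are not stated in the source.
FLIGHT_HEIGHT_M = 300.0
ASSUMED_FOCAL_LENGTH_MM = 8.8   # physical focal length, not the 35 mm equivalent
ASSUMED_PIXEL_PITCH_UM = 2.41   # physical pixel size on the sensor

gsd_cm = ground_sampling_distance_cm(
    FLIGHT_HEIGHT_M, ASSUMED_FOCAL_LENGTH_MM, ASSUMED_PIXEL_PITCH_UM
)
print(f"Estimated GSD: {gsd_cm:.2f} cm/pixel")
```

Under these assumptions the estimate lands in the same range as the GSD row if that row is read as cm/pixel. Because GSD scales linearly with flight height, halving the height halves the GSD.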

| Side Overlap (%) | Forward Overlap (%) | No. of Photos Used | Median of Tie Points per Calibrated Image | No. of Points in 3D Point Cloud | Time (3D Point Cloud Generation) | Time (DSM Generation) | RMSE (m) as per Pix4D |
|---|---|---|---|---|---|---|---|
| 55 | 55 | 37 | 8247 | 5,919,925 | 11 m:48 s | 11 m:58 s | 0.124 |
| 55 | 65 | 48 | 11,126 | 7,332,416 | 22 m:13 s | 13 m:29 s | 0.119 |
| 55 | 75 | 56 | 13,694 | 7,437,675 | 22 m:22 s | 13 m:33 s | 0.086 |
| 55 | 85 | 91 | 19,672 | 10,832,272 | 01 h:21 m:05 s | 25 m:11 s | 0.135 |
| 65 | 55 | 43 | 10,147 | 6,346,402 | 20 m:45 s | 13 m:41 s | 0.081 |
| 65 | 65 | 57 | 11,895 | 7,895,433 | 26 m:51 s | 14 m:40 s | 0.106 |
| 65 | 75 | 75 | 15,431 | 9,340,526 | 37 m:38 s | 29 m:16 s | 0.099 |
| 65 | 85 | 116 | 18,142 | 12,523,802 | 01 h:20 m:26 s | 24 m:21 s | 0.126 |
| 75 | 55 | 72 | 12,261 | 9,136,794 | 43 m:50 s | 16 m:57 s | 0.132 |
| 75 | 65 | 80 | 13,670 | 9,668,803 | 01 h:08 m:40 s | 34 m:22 s | 0.128 |
| 75 | 75 | 104 | 14,502 | 11,216,781 | 52 m:45 s | 22 m:45 s | 0.161 |
| 75 | 85 | 154 | 17,552 | 14,643,387 | 01 h:45 m:12 s | 31 m:23 s | 0.144 |
| 85 | 55 | 112 | 13,718 | 12,339,138 | 01 h:00 m:45 s | 23 m:23 s | 0.096 |
| 85 | 65 | 133 | 13,837 | 13,606,580 | 01 h:11 m:53 s | 22 m:39 s | 0.147 |
| 85 | 75 | 180 | 16,586 | 16,187,016 | 03 h:14 m:01 s | 26 m:01 s | 0.133 |
| 85 | 85 | 275 | 17,831 | 24,256,442 | 09 h:04 m:23 s | 51 m:23 s | 0.157 |
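
The overlap table above can be queried directly to compare accuracy against processing cost. The following is a minimal Python sketch, not part of the original workflow: the row values are copied from the table, while the names `ROWS`, `to_seconds`, `best`, `slowest`, and `mean_rmse_by_side` are hypothetical helpers introduced here for illustration.

```python
import re
from statistics import mean

# (side overlap %, forward overlap %, photos used,
#  3D point cloud generation time, RMSE in m), copied from the table above.
ROWS = [
    (55, 55, 37, "11 m:48 s", 0.124), (55, 65, 48, "22 m:13 s", 0.119),
    (55, 75, 56, "22 m:22 s", 0.086), (55, 85, 91, "01 h:21 m:05 s", 0.135),
    (65, 55, 43, "20 m:45 s", 0.081), (65, 65, 57, "26 m:51 s", 0.106),
    (65, 75, 75, "37 m:38 s", 0.099), (65, 85, 116, "01 h:20 m:26 s", 0.126),
    (75, 55, 72, "43 m:50 s", 0.132), (75, 65, 80, "01 h:08 m:40 s", 0.128),
    (75, 75, 104, "52 m:45 s", 0.161), (75, 85, 154, "01 h:45 m:12 s", 0.144),
    (85, 55, 112, "01 h:00 m:45 s", 0.096), (85, 65, 133, "01 h:11 m:53 s", 0.147),
    (85, 75, 180, "03 h:14 m:01 s", 0.133), (85, 85, 275, "09 h:04 m:23 s", 0.157),
]


def to_seconds(text):
    """Convert a duration such as '01 h:21 m:05 s' or '11m:58s' to seconds."""
    units = {"h": 3600, "m": 60, "s": 1}
    return sum(int(value) * units[unit]
               for value, unit in re.findall(r"(\d+)\s*([hms])", text))


# Overlap combination with the lowest reported RMSE.
best = min(ROWS, key=lambda row: row[4])
# Overlap combination with the longest point cloud generation time.
slowest = max(ROWS, key=lambda row: to_seconds(row[3]))
# Mean RMSE over the four forward overlaps flown at each side overlap.
mean_rmse_by_side = {
    side: mean(row[4] for row in ROWS if row[0] == side)
    for side in sorted({row[0] for row in ROWS})
}

print(f"Lowest RMSE: {best[4]} m at side {best[0]}%, forward {best[1]}%")
print(f"Slowest run: side {slowest[0]}%, forward {slowest[1]}% "
      f"({to_seconds(slowest[3])} s)")
for side, value in mean_rmse_by_side.items():
    print(f"Side overlap {side}%: mean RMSE {value:.3f} m")
```

The duration parser accepts both spaced and unspaced unit suffixes, so it tolerates the mixed time formatting that can appear in exported processing reports.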

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chaudhry, M.H.; Ahmad, A.; Gulzar, Q. Impact of UAV Surveying Parameters on Mixed Urban Landuse Surface Modelling. ISPRS Int. J. Geo-Inf. 2020, 9, 656. https://doi.org/10.3390/ijgi9110656
