1. Introduction

The Internet has become a major platform for disseminating and sharing 3D model content, and 3D graphics have become an increasingly important part of the web experience. This is mainly due to the rise of browser-based rendering technology for real-time 3D graphics, which has been under development since the mid-nineties. Most notably, the adoption of WebGL [1] has enabled plug-in free access to increasingly powerful graphics hardware across multiple desktop and mobile computing platforms. Various commercial and non-commercial approaches to publishing and managing 3D content on the web have also been developed [2,3,4].

WebGL is a JavaScript application programming interface (API) that enables interactive 3D graphics with advanced rendering techniques, such as physically based rendering, within a browser. It is based on OpenGL ES (Embedded Systems) [5] and natively supported by most modern desktop and mobile web browsers. High-level JavaScript libraries such as Three.js [6] and Babylon.js [7] are designed to make WebGL more accessible and to help in application development. In practice, the 3D model formats supported in WebGL depend on these high-level libraries. While no standard format exists for web-based 3D content creation, several authors have utilized glTF (GL Transmission Format) [8,9,10]. Several pipelines have been suggested for creating and optimizing 3D content for the web [11,12]. Key advantages of web-based 3D compared to desktop applications are cross-platform availability and straightforward deployment without separate installation. These advantages and the increasing user readiness have accelerated web-based 3D application development in many fields, e.g., data visualization, digital content creation, gaming, education, e-commerce, geoinformation and cultural heritage [2].

In recent years, many web-based and plug-in free 3D model publishing platforms have been created and have become increasingly popular, hosting millions of models for billions of potential users. For example, Sketchfab (Sketchfab SAS, Paris, France) [13], Google Poly (Google LLC, Mountain View, CA, USA) [14] and Facebook 3D Posts (Facebook Inc., Menlo Park, CA, USA) [15] have helped in popularizing the creation and publishing of 3D models for non-expert users. Perhaps the most notable example is Sketchfab, a powerful platform for sharing and managing 3D content with modern features such as support for virtual reality (VR)/augmented reality (AR) viewing, interactive animations and annotations, physically based rendering (PBR), a 3D model marketplace and a selection of exporters and APIs [16]. Sketchfab offers several pricing plans from free to enterprise level. Some have considered Sketchfab the de facto standard for publishing 3D content on the web [4]. Google Poly is a free web service for sharing and discovering 3D objects and scenes. It was built to help in AR and VR development and offers several APIs, e.g., for application development in game engines. Facebook 3D Posts is a free feature that allows users to share and display 3D models in Facebook posts. All these platforms are based on WebGL and emphasize high visual quality rather than accurate geometric representation.

The challenge in utilizing these web-based 3D publishing platforms is that they are subject to several technical constraints, including the memory limits of web browsers, the varying GPU performance of the device used and the need to limit file sizes to retain tolerable download times. In addition, 3D assets must be converted to a supported format beforehand. The detailed requirements vary from platform to platform. For example, Sketchfab supports over 50 3D file formats, including common formats like OBJ, FBX and GLTF/GLB [17]. Sketchfab recommends that models contain up to 500,000 polygons and a maximum of 10 texture images [18]. Facebook requires models in GLB format with a maximum file size of 3 MB [19]. Google Poly accepts OBJ and GLTF/GLB files up to 100 MB in size and textures at a maximum of 8196 × 8196 pixels [20]. This implies that the platforms cannot be used to view arbitrary 3D models; instead, the platform-specific requirements have to be taken into account in the content creation phase.
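The platform requirements listed above lend themselves to a simple pre-upload check. The following is a minimal sketch, not part of the workflow described in this paper: the `Limits` and `Model` types, the platform keys and the `violations` function are hypothetical names introduced for illustration, and only the numeric limits quoted in the text are encoded. The Sketchfab format list is deliberately reduced to the three formats named above, and its polygon and texture counts are recommendations rather than hard limits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Limits:
    """Constraints for one platform; None means 'not stated in the text'."""
    formats: frozenset
    max_polygons: Optional[int] = None
    max_textures: Optional[int] = None
    max_file_mb: Optional[float] = None
    max_texture_px: Optional[int] = None


@dataclass(frozen=True)
class Model:
    """Hypothetical summary of an exported model (not a real library type)."""
    file_format: str
    polygons: int
    textures: int
    file_mb: float
    texture_px: int  # longest texture edge in pixels


# Values taken from the paragraph above; everything else is left as None.
PLATFORMS = {
    "sketchfab": Limits(frozenset({"obj", "fbx", "gltf", "glb"}),
                        max_polygons=500_000, max_textures=10),
    "facebook_3d_posts": Limits(frozenset({"glb"}), max_file_mb=3),
    "google_poly": Limits(frozenset({"obj", "gltf", "glb"}),
                          max_file_mb=100, max_texture_px=8196),
}


def violations(model: Model, platform: str) -> list:
    """Return human-readable descriptions of every limit the model exceeds."""
    lim = PLATFORMS[platform]
    problems = []
    if model.file_format.lower() not in lim.formats:
        problems.append(f"format '{model.file_format}' not accepted")
    if lim.max_polygons is not None and model.polygons > lim.max_polygons:
        problems.append(f"{model.polygons} polygons > {lim.max_polygons}")
    if lim.max_textures is not None and model.textures > lim.max_textures:
        problems.append(f"{model.textures} textures > {lim.max_textures}")
    if lim.max_file_mb is not None and model.file_mb > lim.max_file_mb:
        problems.append(f"{model.file_mb} MB > {lim.max_file_mb} MB")
    if lim.max_texture_px is not None and model.texture_px > lim.max_texture_px:
        problems.append(f"texture edge {model.texture_px} px > {lim.max_texture_px} px")
    return problems


if __name__ == "__main__":
    candidate = Model("glb", 500_000, 4, 40.0, 8192)
    for name in PLATFORMS:
        print(name, violations(candidate, name) or "ok")
```

A model that satisfies one platform can still fail another: the example candidate is within the Sketchfab and Google Poly limits but exceeds the 3 MB file size limit of Facebook 3D Posts.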

Traditionally, 3D content has been produced more or less manually by 3D artists, designers or other professionals relying on diverse workflows and various 3D modeling solutions, e.g., 3ds Max (Autodesk Inc., Mill Valley, CA, USA) [21], Maya (Autodesk Inc., Mill Valley, CA, USA) [22], Blender (Blender Foundation, Amsterdam, Netherlands) [23] and ZBrush (Pixologic Inc., Los Angeles, CA, USA) [24], or CAD software, e.g., AutoCAD (Autodesk Inc., Mill Valley, CA, USA) [25], Microstation (Bentley Systems Inc., Exton, PA, USA) [26], Rhinoceros (Robert McNeel & Associates, Seattle, WA, USA) [27] and SketchUp (Trimble Inc., Sunnyvale, CA, USA) [28]. The creation of web-compatible 3D content has often been considered inefficient and costly and is seen as a barrier to entry for both developers and end users [2].

The utilization of 3D measurement methods, primarily laser scanning and photogrammetry, has the potential to drastically increase the efficiency of 3D content production in use cases where 3D documentation of real-life objects or environments is required. This reality-based 3D data is used in numerous applications in many fields such as cultural heritage [29], 3D city modeling [30], construction [31,32], gaming [33] and cultural production [34].

The potential of reality-based 3D data collection technology has also been increasingly noted and promoted in the 3D graphics and gaming communities as a way to automate content creation processes (e.g., [35,36]). Furthermore, the global trend towards virtual and augmented realities (VR and AR) has increased the demand for creating high-quality and detailed photorealistic 3D content based on real-life objects and environments. In addition to the detailed 3D geometry, the quality of the texture data also plays a crucial role in these photorealistic experiences, which often have unprecedentedly high levels of detail. Despite development efforts, the lack of compelling content is considered one of the key challenges in the adoption of VR and AR technologies [37].

Both laser scanning and photogrammetry have become increasingly available and have advanced significantly over the last few decades thanks to more powerful computing and the automation of various aspects of 3D reconstruction. Laser scanners produce increasingly detailed and accurate 3D point clouds of their surroundings by using laser-based range finding [38]. Compared to camera-based methods, laser scanning is an active sensing technique that is less dependent on the lighting conditions of the scene. However, laser scanning lacks color information, which is required by many applications that rely on photorealism. This is usually solved by utilizing integrated digital cameras to colorize the point cloud data. Photogrammetry is a technique based on deriving 3D measurements from 2D image data. With photogrammetry, the model geometry and the color information used in model texturing can be derived from the same set of images. In the 21st century, photogrammetry has benefited greatly from advances in approaches such as structure-from-motion (e.g., [39]), dense image matching (e.g., [40]) and meshing (e.g., [41]). In its current state, it is an increasingly affordable and highly portable measuring technique capable of recording extremely dense colored 3D point cloud and textured 3D model data sets.

This development has spawned many open source and commercial software solutions for creating reality-based 3D mesh models automatically or semi-automatically from photogrammetric imagery, e.g., 3DF Zephyr (3Dflow srl., Udine, Italy) [42], RealityCapture (Capturing Reality s.r.o., Bratislava, Slovakia) [43], Metashape (Agisoft LLC, St. Petersburg, Russia) [44], Meshroom [45], COLMAP [46] and Pix4D (Pix4D S.A., Lausanne, Switzerland) [47], or from arbitrary point cloud data, e.g., SEQUOIA (Amazon Web Services Inc., Seattle, WA, USA) [48] and CloudCompare [49]. The resulting models can be used further in 3D modeling software suites, 3D game engines or web-based 3D model publishing platforms. The emergence of these solutions has also made the creation of reality-based 3D content increasingly available for non-expert users [50].

A number of papers have been published on the integration of laser scanning and photogrammetry on many levels [51,52]. Generally, this integration is considered the ideal approach since no single technique can deliver adequate results in all measuring conditions [29,53,54,55]. Differences and complementary benefits between photogrammetry and laser scanning have been discussed by [52,55,56,57,58]. Evaluation of modeling results has typically focused on analyzing the geometric quality of the resulting hybrid model. Assessing texture quality has gained surprisingly little attention (e.g., [58]). Furthermore, the actual hybrid model has rarely been compared to the modeling approaches that rely on single methods.

Over the years, integration of laser scanning and photogrammetry has been developed for diverse use cases, for example for reconstructing the details of building façades [59,60], improving the extraction of building outlines [61] or improving the accuracy [62], registration [55] and visual quality [63] of 3D data. Many approaches require user interaction that is time consuming and labor intensive, or are suitable only for specific use cases and data, e.g., simple buildings with planar façades [64].

In many cases related to 3D modeling, the integration of these two techniques is merely seen as colorizing the point cloud data [65], texturing laser-derived 3D models [58,66] or merging separately generated point cloud, image or model data [54,67,68,69,70,71] at the end of the modeling pipeline, where the weaknesses of each data source become more difficult to overcome [51,72].

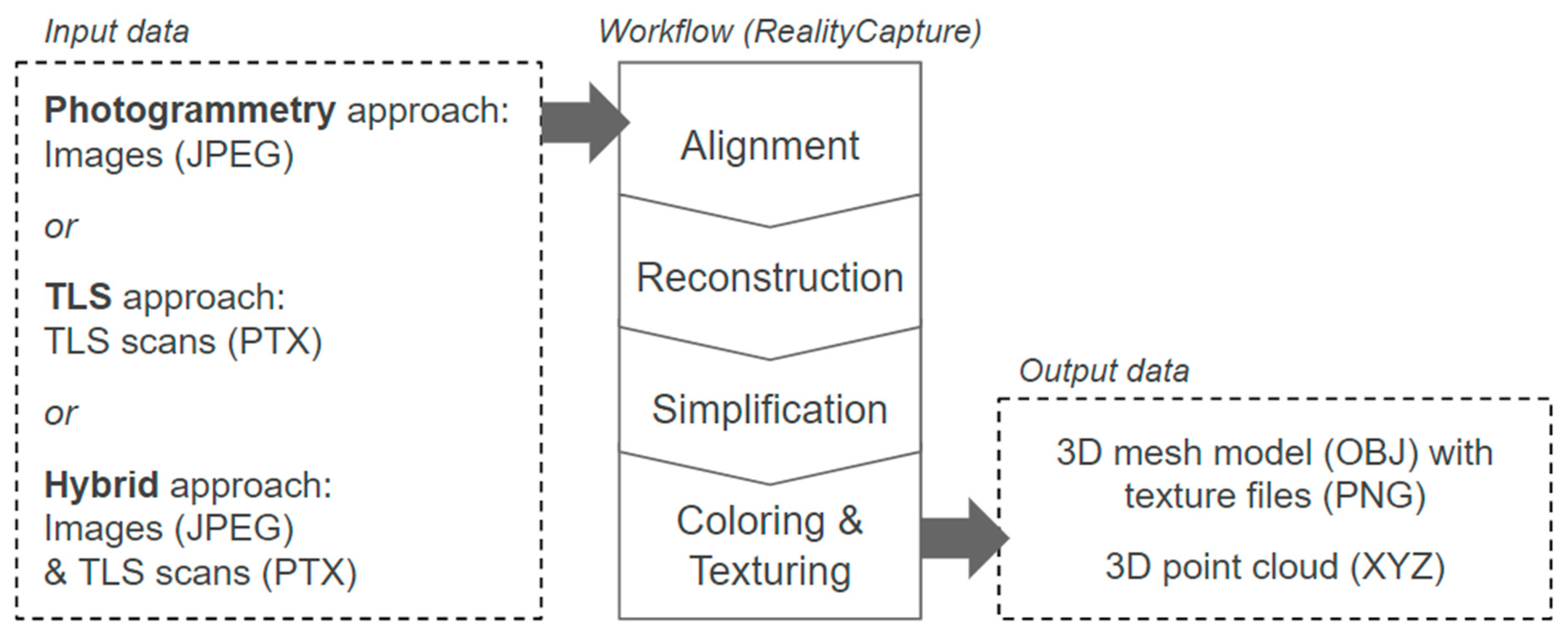

Despite this being an active research topic, very few data integration solutions exist outside the academic world that are applicable and available for use in real-life projects. Some approaches that rely on widely available solutions have been described by [69,70,71]. In many of these cases, the integration of laser scanning and photogrammetry has been achieved by simply merging separately generated point cloud data sets, often with the purpose of acquiring 3D data from multiple perspectives to ensure sufficient coverage [70,71]. Among the freely or commercially available solutions (see Table 1), RealityCapture is the most suitable for integrating laser scanning and photogrammetry in a highly automated 3D reconstruction and texturing process. Laser-scanned point clouds can also be imported into 3DF Zephyr. However, in 3DF Zephyr, laser scans have to be interactively registered as part of a separate process after the creation of a photogrammetric dense point cloud. An example of this type of approach is presented in [70]. RealityCapture allows laser-scanned point clouds to be imported in earlier phases of the 3D reconstruction pipeline, so the process benefits from the inherent dimensional accuracy of laser scanning.

Web-based 3D technologies have been applied for visualization and application development in various cases where the data originates from various 3D measurement methods (e.g., [73]). Related to web applications, 3D measurement methods have also been utilized for producing 3D data for environmental models [74], 3D city models [75,76], whole body laser scans [77] or indoor models [34]. The evaluation of the geometric quality of various 3D measurement methods is a mainstay in the research literature (e.g., [78]). In some cases, this evaluation has been done in projects aiming for web-based 3D (e.g., [34]). Related to web applications and reality-based models, the need for 3D model optimization and automation has been stressed by [79], but the workflow for automatic optimization is rarely presented in this context. Overall, very little literature exists demonstrating the integration of laser scanning and photogrammetry in a complete workflow aiming to achieve photorealistic and web-compatible 3D models. Furthermore, previous studies have not assessed both the geometric quality and the texture quality of models produced with the different data collection methods, especially in the context of web-compatibility and automation.

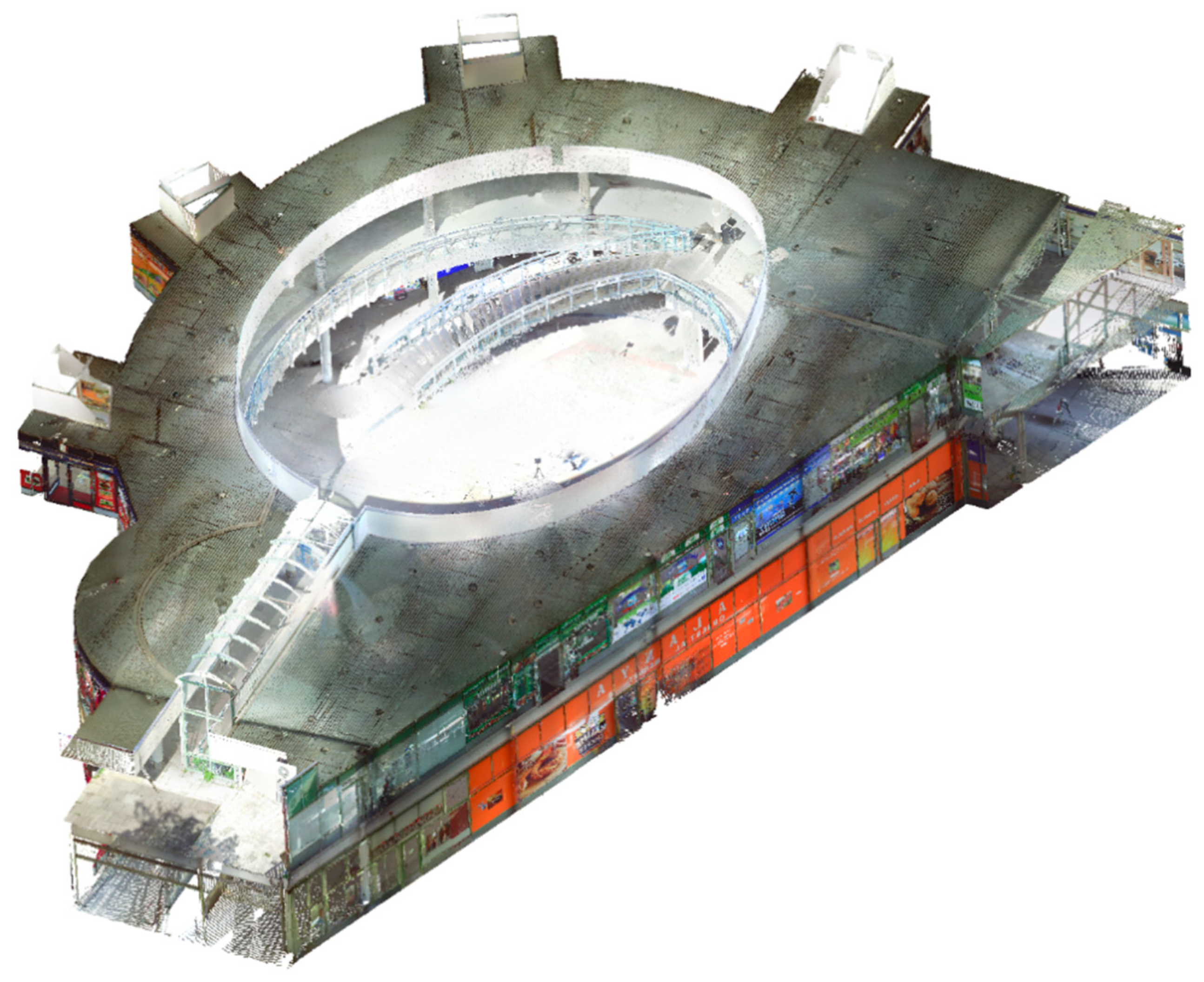

Our aim is to demonstrate a highly automated and integrated content creation process for providing reality-based photorealistic 3D models for the web. 3D reconstruction results based on close-range photogrammetry, terrestrial laser scanning (TLS) and their combination are compared considering both the quality of the model geometry and texturing. In addition, the visual quality of the compared modeling approaches is evaluated through an expert survey. Our approach is a novel combination of web-applicability, multi-sensor integration, high-level automation and photorealism, using state-of-the-art tools. The approach is applied in a real-life project called “Puhos 3D”, an interdisciplinary joint project between Aalto University and the Finnish national public service broadcasting company Yle, with the main goal of exploring the use of reality-based 3D models in journalistic web-based storytelling [80].

4. Discussion

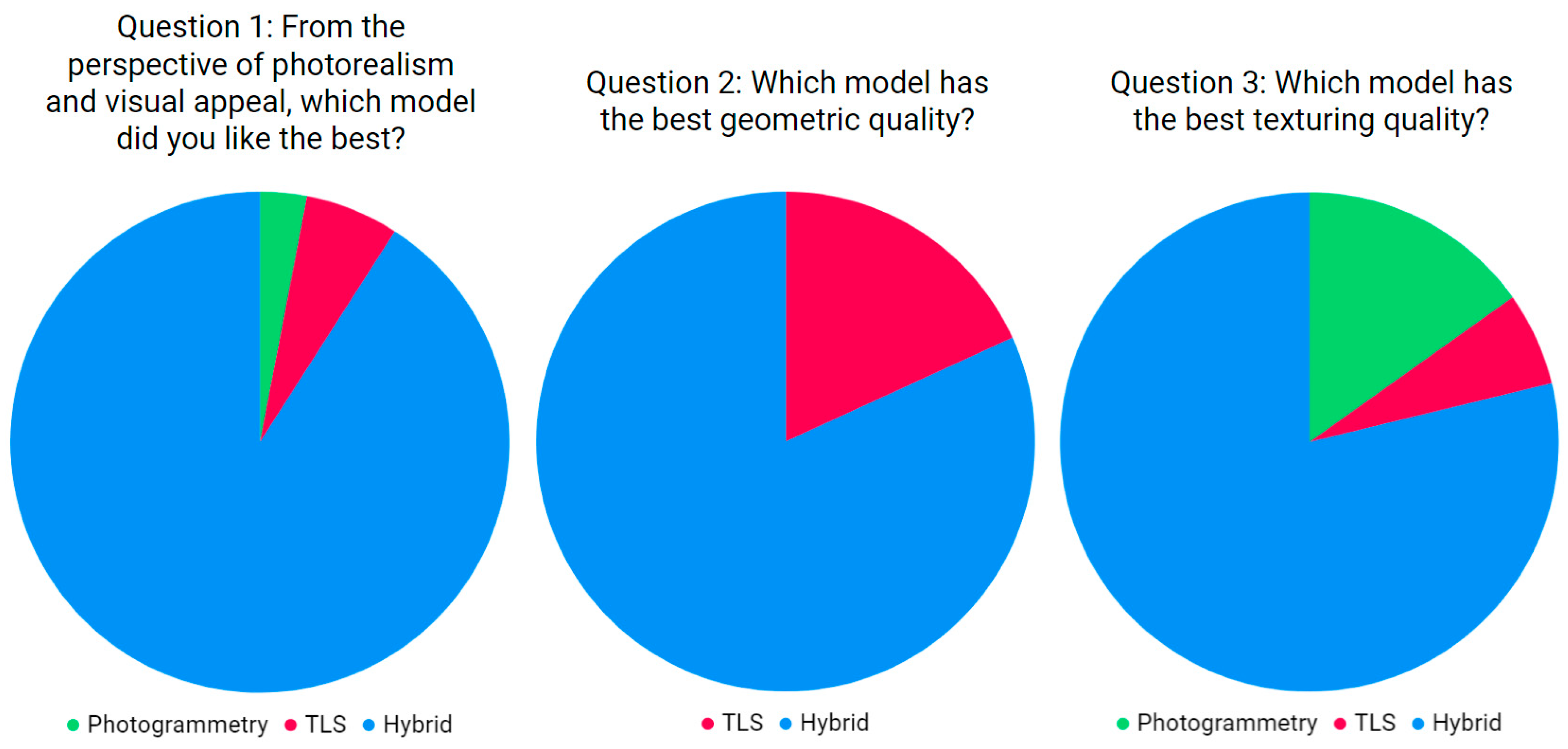

We compared three 3D reconstruction approaches: close-range photogrammetry, terrestrial laser scanning and their combination using available state-of-the-art tools in a real-life project setting. We presented an approach that is a novel combination of web-applicability, multi-sensor integration, high-level automation and photorealism. Furthermore, we assessed the visual quality of web-based 3D content with an expert evaluation.

Despite the recent developments, web-compatibility remains a key challenge in the creation of reality-based 3D models. All the compared approaches produced vast amounts of data and the models had to be heavily decimated in order to meet the limitations of browser-based WebGL applications. For example, the polygon count of the hybrid model had to be decimated to 0.07% of its full size of almost 694 million polygons to achieve the target of 500,000 polygons. This means that some details are inevitably lost in the process. Even though web-compatible models can be created almost fully automatically, the results are still far from optimal.
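The decimation figure quoted above follows directly from the two polygon counts. As a minimal check, assuming a full size of 694 million polygons (the text says "almost 694 million", so this is an upper-bound approximation) and the 500,000-polygon target, the retained share can be computed as follows. The function name `decimation_ratio` is introduced here for illustration only.

```python
def decimation_ratio(full_polygons: int, target_polygons: int) -> float:
    """Fraction of polygons kept when reducing a mesh to a target count."""
    if full_polygons <= 0 or target_polygons <= 0:
        raise ValueError("polygon counts must be positive")
    # A mesh already below the target needs no decimation.
    return min(1.0, target_polygons / full_polygons)


FULL = 694_000_000  # approximation of "almost 694 million" from the text
TARGET = 500_000    # target polygon count stated in the text

ratio = decimation_ratio(FULL, TARGET)
print(f"kept: {ratio:.2%}")                     # kept: 0.07%
print(f"removed: {FULL - TARGET:,} polygons")   # removed: 693,500,000 polygons
```

In other words, roughly one polygon in every 1400 survives, which illustrates why fine geometric detail cannot be preserved at this budget.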

The emphasis on photorealism and visual aesthetics places high demands on the visual quality of the models. Both the geometry and the textures need to be as free from errors and visible artifacts as possible. In practice, achieving the desired high level of visual quality requires some manual editing and optimization of the model geometry (e.g., cleaning and fixing errors, UV-mapping, retopologizing), the textures (e.g., de-lighting, cleaning and fixing errors) or both. In short, the higher the visual quality requirements are, the more difficult the work becomes to automate. This is especially so if a high degree of photorealism and detail has to be attained on a browser-based platform with limited resources.

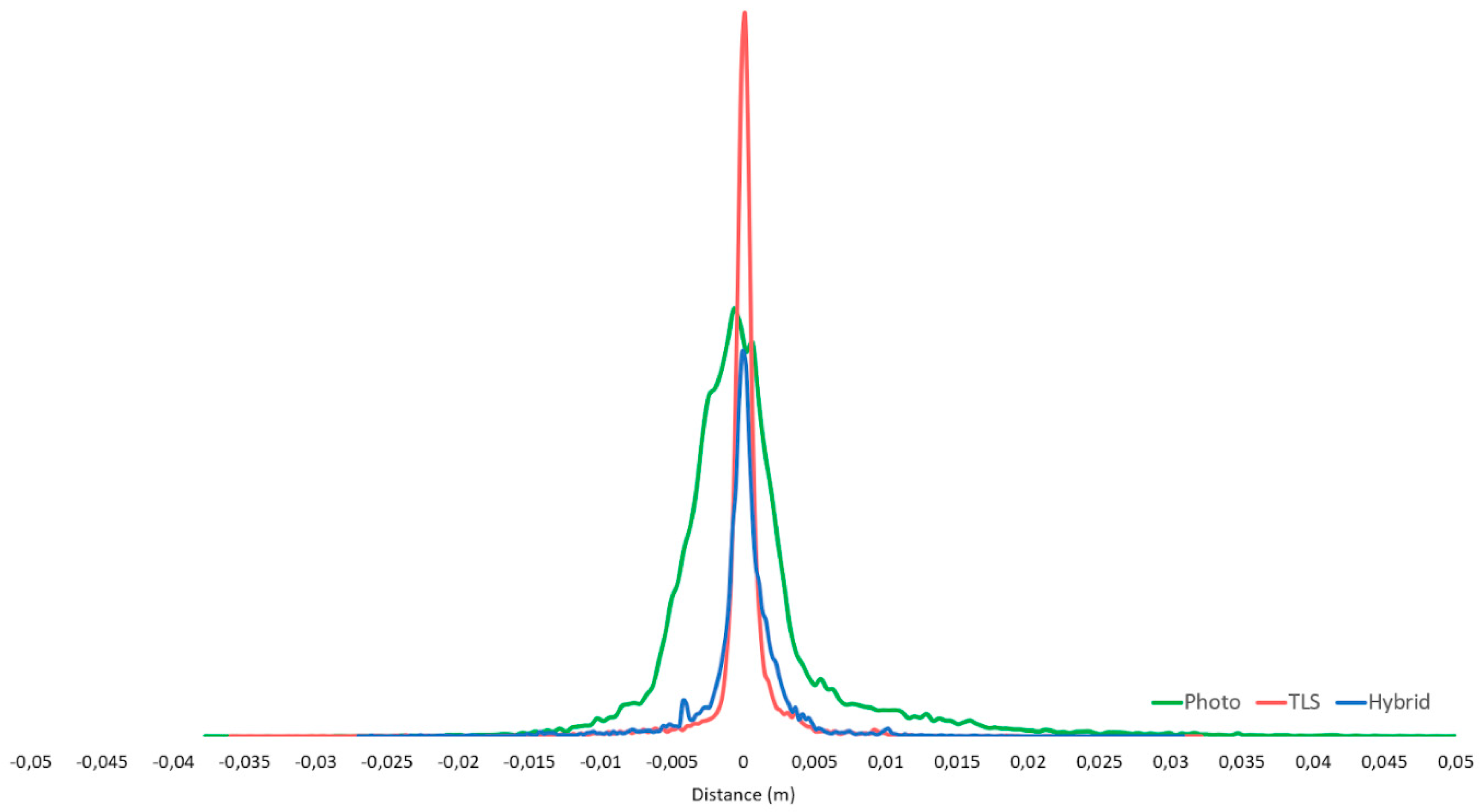

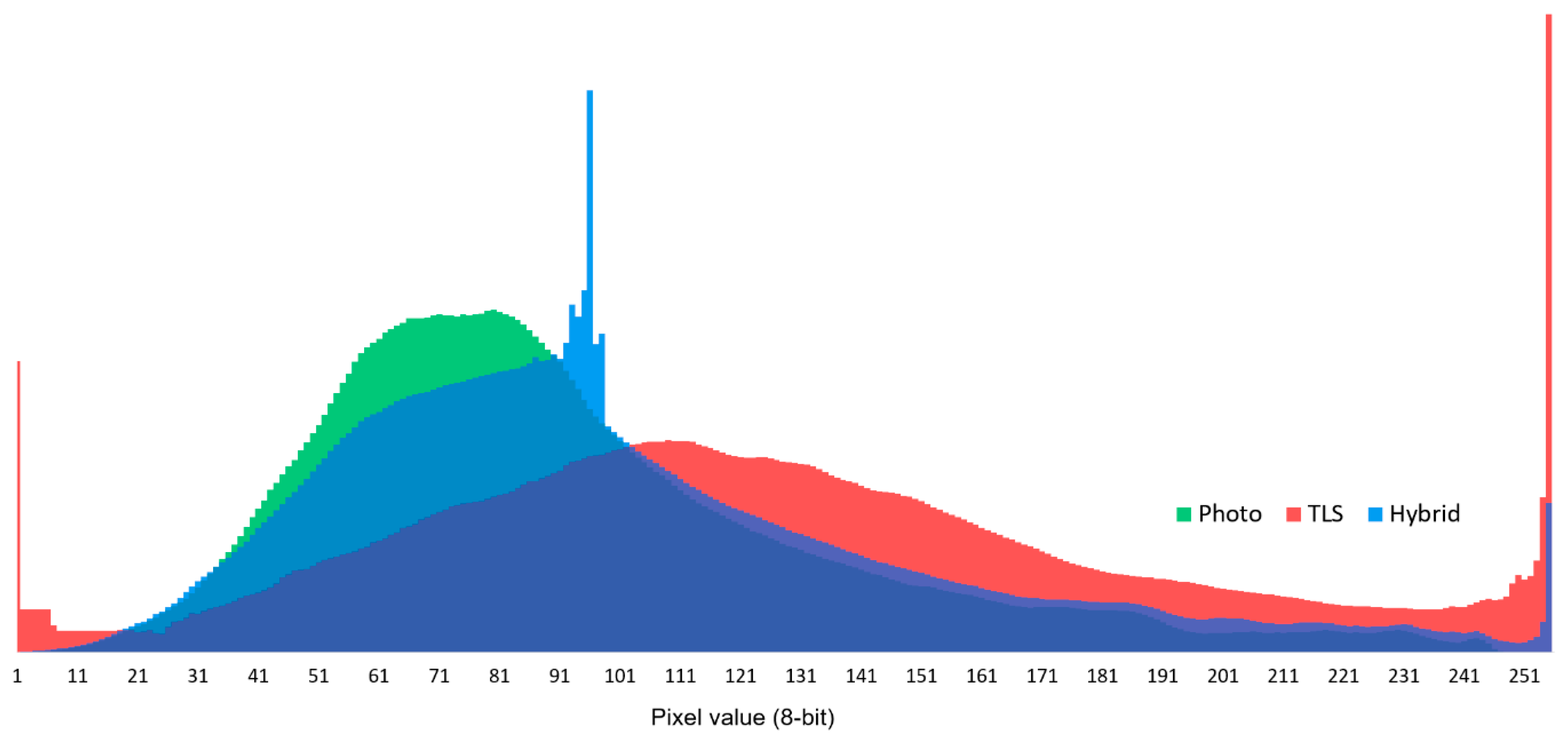

The integrated hybrid approach appeared to be a good compromise compared to approaches relying solely on terrestrial laser scanning or photogrammetry. These results were also well in line with previous research. The hybrid model improved on the geometric quality of the photogrammetric model and on the texture quality of the TLS-based model. However, there was a clear tradeoff in computing performance and data volume. As a further downside, the addition of laser scanning naturally comes with significant added cost and manual labor compared to highly affordable and more automated photogrammetry. Despite development, laser scanning is still far from being consumer friendly.

Using photogrammetry alone appeared to be the most affordable, accessible and portable option, with superior texturing quality compared to laser scanning. However, it lacks the benefits of laser scanning, such as direct metric scale determination, better performance on weakly textured surfaces and independence from the illumination in the scene. According to the analyses, the photogrammetry-based model clearly had the weakest geometric quality, which deteriorated especially in the shadowy areas outwards from the center of the scene. Notably, not all images were automatically registered by RealityCapture, and the total of 306 aligned images can be considered a lightweight image data set. The results would likely have improved with a larger number of images.

The computing time for the TLS-based model was significantly shorter than that of the photogrammetry or hybrid approaches. However, it was difficult to assess the complete workflow. The pre-processing steps were excluded from the analysis because we could consider only the parts of the process that were automated and mutually overlapping. In practice, the registration and filtering of the TLS data can require a significant amount of manual work, thus potentially being by far the most time-consuming step in the whole processing chain. This is the case particularly when modeling heavily crowded public spaces such as the Puhos shopping mall in our case.

In terms of texturing, the inclusion of photogrammetry clearly improved the texture quality. The analyses showed that the TLS-based model suffered greatly from both underexposure and overexposure. This was mainly due to the weaker quality of the built-in camera in the laser scanner (see Figure 11). Utilization of high dynamic range (HDR) imaging, a common feature in many modern TLS scanners, would have improved the texturing quality but would most likely also have made the data collection significantly slower and therefore increased the problems with, for example, moving shadows in the scene. Additionally, the possibilities for editing the raw images are limited with TLS when it comes to aspects such as adjusting the tonal scales or the white balance of the images prior to coloring the point cloud data. Moreover, in all three approaches the lights and the shadows in the scene are baked into the textures and reflect the specific lighting conditions at the time when the data was acquired. In many use cases, an additional de-lighting process would be required to allow the 3D model to be used in any lighting scenario.
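One simple way to quantify underexposure and overexposure in a texture is to count the share of pixels whose luminance falls near the ends of the 8-bit range. The sketch below is an illustrative assumption, not the analysis method used in this study: the function names, the Rec. 601 luma weights and the `dark` and `bright` thresholds are choices made for the example.

```python
def luminance(r: int, g: int, b: int) -> float:
    """Approximate perceived brightness of an 8-bit RGB pixel (Rec. 601 luma weights)."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def exposure_fractions(pixels, dark: float = 5.0, bright: float = 250.0):
    """Return (underexposed, overexposed) fractions for an iterable of RGB tuples.

    The thresholds are illustrative assumptions: a pixel counts as underexposed
    when its luminance is <= dark and as overexposed when it is >= bright.
    """
    total = under = over = 0
    for r, g, b in pixels:
        y = luminance(r, g, b)
        total += 1
        if y <= dark:
            under += 1
        elif y >= bright:
            over += 1
    if total == 0:
        raise ValueError("no pixels given")
    return under / total, over / total


if __name__ == "__main__":
    # Tiny synthetic "texture": one black, one white and two mid-gray pixels.
    sample = [(0, 0, 0), (255, 255, 255), (128, 128, 128), (128, 128, 128)]
    under, over = exposure_fractions(sample)
    print(f"underexposed: {under:.0%}, overexposed: {over:.0%}")
```

Applied to the texture atlases of two models, such fractions would give a rough, comparable indicator of clipped shadows and highlights, although they say nothing about color balance or sharpness.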

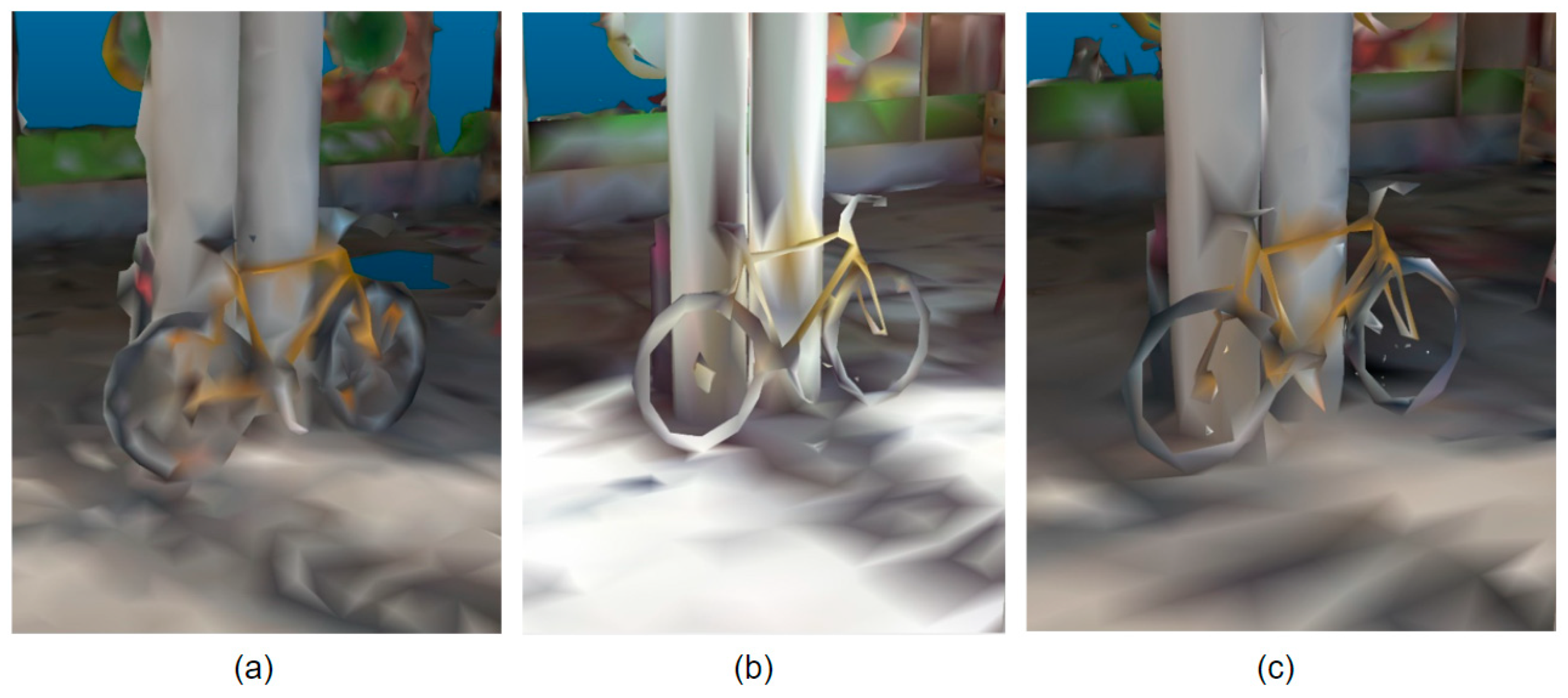

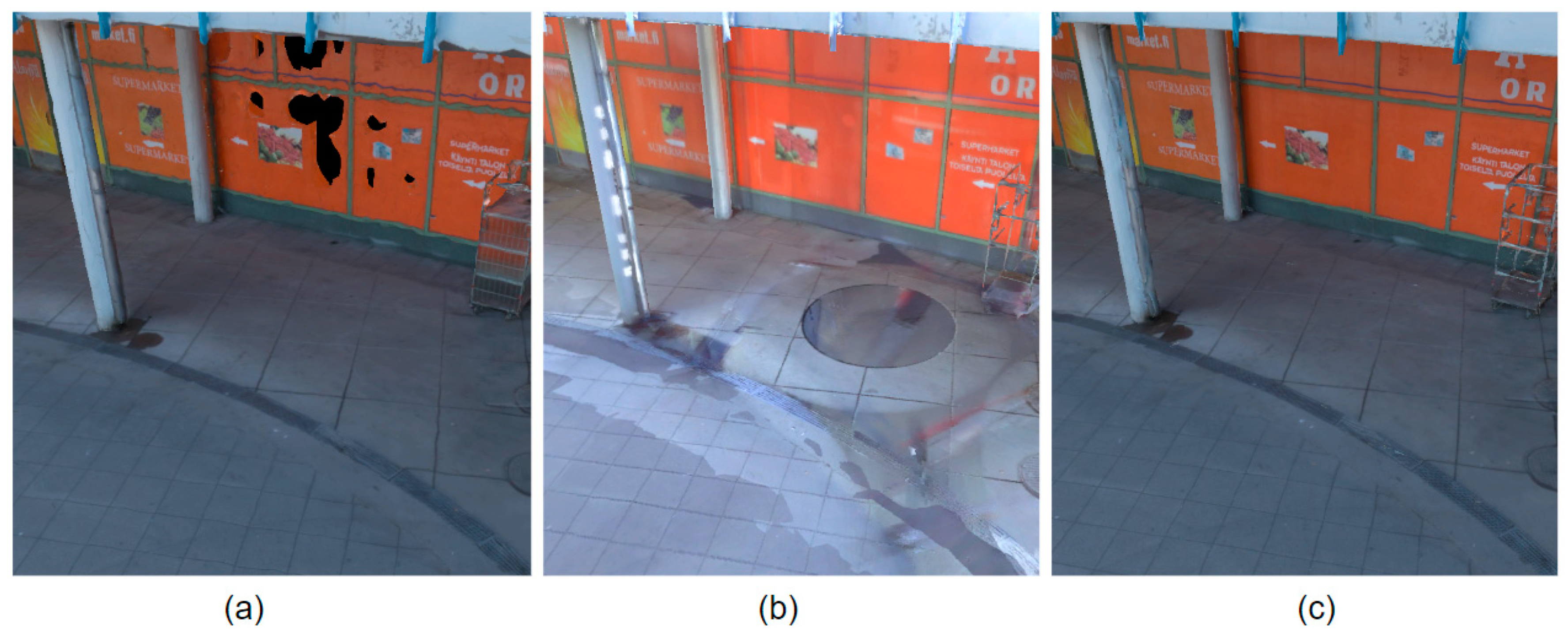

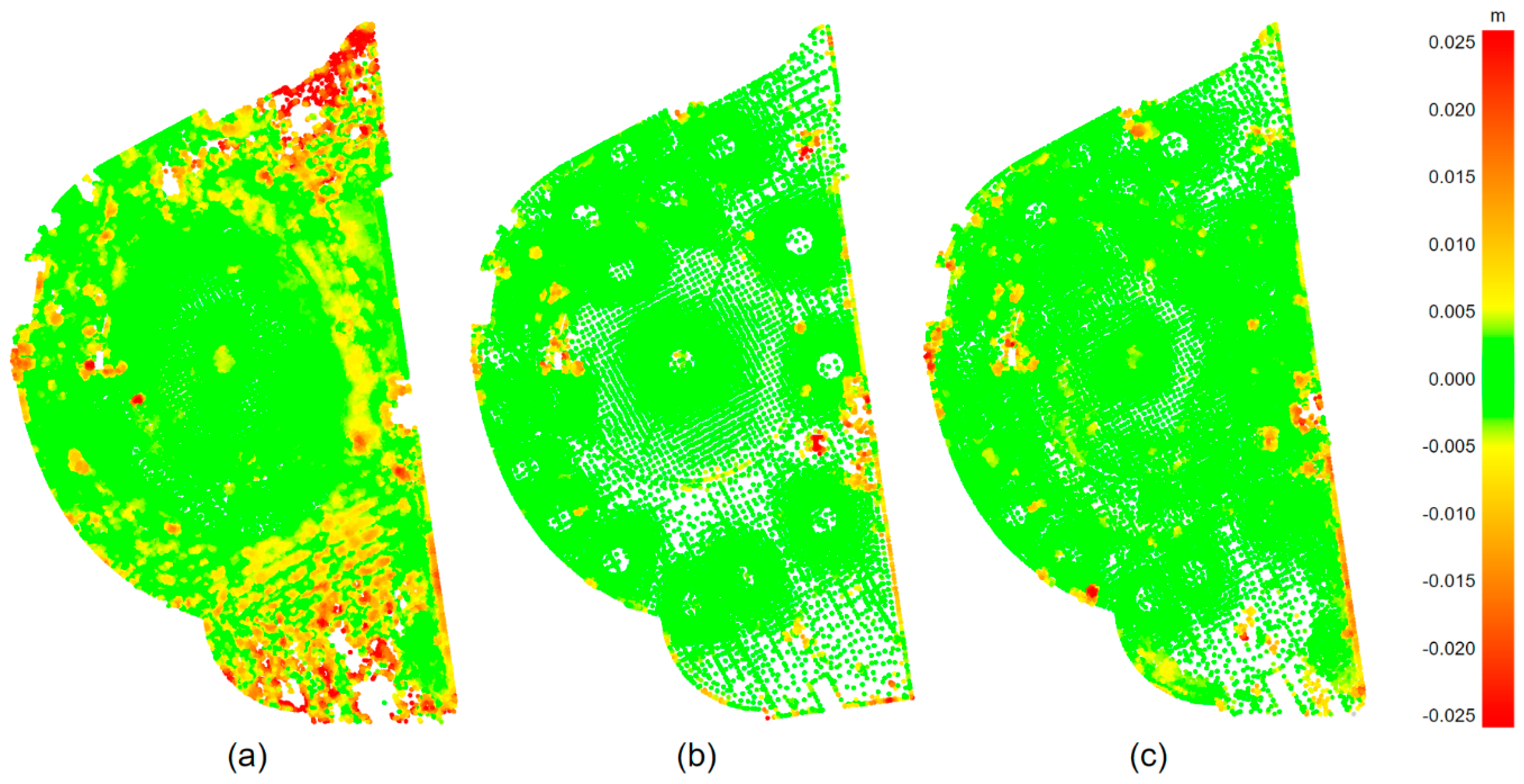

The results from the expert evaluation were even more favorable towards the hybrid approach than our numeric quality analyses. The quality of the geometry and texturing appear to go hand in hand. Good geometry appears to improve the visual appeal of the texturing and good texturing positively affects the visual appeal of the geometry. Furthermore, it appears that the people evaluating the visual quality are prone to focus on coarse errors and artifacts in the models. In our case these were elements such as holes in the photogrammetric model or texture artifacts in the TLS-based model (see Figure 6). These types of errors are often inherited from quality issues in the raw data (e.g., weak sensor quality, weak data overlap, changes in the environment during data collection) and are thus very challenging to fix automatically at later stages of the modeling process.

Limitations in our approach included the real-life characteristics of our case study. Data acquisition was limited by uncontrollable and suboptimal weather conditions, a fixed time frame and the consistently large numbers of people in this public space. However, these limitations reflected a realistic project situation where some factors are always beyond control. It is also worth noting that our emphasis was on photorealistic web visualization, where the accuracy, precision and reliability of the models were not prioritized. A more robust ground reference would have been needed if the use case had been an application such as structural planning. Furthermore, our focus on complete automation meant compromising on the quality of the models. The results would have been improved if manual editing steps such as point cloud processing or model and texture editing had been included. Alternatively, the 3D reconstruction phase could have been accomplished with 3DF Zephyr, but this would have resulted in a reduced level of integration and increased manual work, as in [70]. Separate processing of laser scans and photogrammetric reconstruction could have been applied with mesh generation tools to produce somewhat similar results, but with significantly more manual work, as in [87]. A different web platform with support for streaming 3D models with multiple levels of detail (LODs) might have allowed the use of larger and more detailed models. However, such platforms were unavailable as a free service.

Further research directions include comparing the results of an automated 3D reconstruction process with a traditionally created reality-based 3D model that has been manually optimized for the web. Future development of web-based real-time rendering and streaming of 3D graphics will enable larger and larger data sets and reduce the need to heavily decimate mesh model data sets. Additionally, the development of point-based rendering may advance the direct use of 3D point cloud data, streamlining the modeling processes by minimizing the actual need for any modeling.

With the rapid development of mobile data acquisition methods, namely SLAM (simultaneous localization and mapping), integration will increasingly be handled at the sensor level. This tighter integration could enable further automation and quality control at the root of potential problems. Currently, many available SLAM-based 3D mapping systems utilize laser scanners but lack the tight integration of photogrammetry, e.g., for producing textured 3D models. Moreover, more advanced integration of laser scanning and photogrammetry could potentially advance semantic modeling, where objects in the scene could be segmented automatically into separate 3D model objects. This would be beneficial in numerous application development cases that currently rely on segmenting the scene manually into meaningful objects.

In addition to color, the type of reflection is an important attribute of a surface texture. 3D models with physically based rendering (PBR) of lighting would benefit from reality-based information on the surface reflection type, i.e., which proportion of the light is reflected diffusely and which is reflected specularly from the surface. However, there is no agile and fast method for capturing the reflection type in the area of measurement. Hence, developing such a method would accelerate the adoption of reality-based PBR 3D models, since models with high integrity could be produced with less manual labor.