Abstract

With the wide use of web technologies, service-oriented architecture (SOA), and cloud computing, more and more geographical information systems are delivered as geographic information services (GIServices). Under these circumstances, the quality of geographic information services (QoGIS) has emerged as an important research topic in geoinformatics. However, the field is not easy to grasp: QoGIS has no formal standards, and it concerns not only server-side performance and capabilities but also the quality of experience (QoE) during user interaction with GIServices. In this paper, we compare quality of service (QoS) and QoGIS research to understand what is unique about QoGIS. We propose a conceptual framework to organize and interpret QoGIS research from the perspectives of quality modeling, acquisition, and application, and we discuss the status, limitations, and future directions of this area. Overall, our analysis shows that new quality metrics will evolve from existing metrics to meet the needs of concrete QoGIS applications, and that user preferences need to be considered in quality modeling for GIServices. We discuss three approaches for providing QoGIS information and find that user feedback mining is an important supplementary source of quality information. Gaps between QoS and QoGIS research suggest that GIService performance enhancement must consider not only the unique features of spatial data models and algorithms, but also system architecture, deployment, and users' spatiotemporal access behaviors. Advanced service selection algorithms must be introduced to tackle the quality optimization problems of geoprocessing workflow planning. Moreover, a QoGIS-aware GIService framework must be established to facilitate and ensure GIService discovery and interaction. We believe this bibliographic review provides a helpful guide for GIS researchers.

1. Introduction

Quality issues significantly affect overall user experience when interacting with GIServices in a distributed computing environment, especially in the era of spatial cloud computing [1]. According to the definitions in [2,3,4,5], geographic information services (GIServices) refer to any applications with graphical user interfaces (GUIs) or application programming interfaces (APIs) that serve end-users' various needs for manipulating, analyzing, and visualizing geographical data through the Internet in client-server (C/S) or browser-server (B/S) mode. Well-known examples, in the form of mobile-based, desktop-based, or web-based applications, include Google Maps and location-based services (https://map.google.com), ArcGIS Online (https://www.esri.com/en-us/arcgis/products/arcgis-online/overview), geospatial data portals such as Data.gov (https://www.data.gov/), and Volunteered Geographic Information (VGI) platforms such as OpenStreetMap (OSM) (https://www.openstreetmap.org). Compared with traditional desktop-based geographical information systems, GIServices run in a more complicated environment, with mutable network conditions, loosely coupled software architectures, and massive, ever-changing concurrent requests from end-users, which makes guaranteeing quality of service (QoS) and user experience crucial. QoS is defined as the totality of the characteristics of a service that bear on its ability to satisfy stated or implied non-functional needs, and is often understood as service-level quality. Quality of GIServices (QoGIS) research brings QoS research and QoS-related studies, such as usability testing, into the GIS field and extends them to support more precise GIService description, discovery, evaluation, and improvement. With the benefit of quality description and discovery mechanisms, end-users can find desired high-quality GIServices much more easily in geospatial cyberinfrastructures (GCIs) and geoportals [6,7,8,9].
Quality evaluation and improvement methods can also help developers and system architects build better GIServices. Thus, QoGIS research can help improve both GIServices and end-user satisfaction.

QoGIS research, however, spans a wide variety of methodologies, techniques, and application scenarios in scattered fields. This diversity creates problems for researchers because there is no established terminology for QoGIS. Many terms used to partially describe QoGIS, such as performance [10], capability [11], scalability [12], and availability [13], come from the software quality perspective of QoS research. As a particular type of application, GIServices share, process, and portray geospatial data. Different data services may offer geospatial datasets of different quality, while geoprocessing services yield different analysis results owing to differences in algorithm implementations. Therefore, terms such as location accuracy, topological completeness, spatial consistency, semantic accuracy, metadata completeness, and standard compliance, drawn from spatial data quality and metadata quality standards such as ISO 19157 [14], ISO 19115 [15], and the FGDC specifications [16,17], are also important components of QoGIS research. Besides software quality and data quality, user experience is also an important quality measure, especially for GIServices with GUIs and high demands on human-computer interaction (HCI) and geo-visualization. Therefore, terms such as usability, readability, immersion, and illustration are also linked to QoGIS research. The Open Geospatial Consortium (OGC) has set up a Quality of Service and Experience Domain Working Group (QoSE DWG) [18], which provides a forum for discussing QoS and quality of experience issues of GIServices, with the aim of delivering timely and accurate geospatial information to end users by placing quality in a user-centered scenario.
INSPIRE, the spatial information infrastructure of the European Union, aims to provide spatial information services for the public sector, and also sets out user guides, roadmaps, and QoS assurance mechanisms to help end-users explore spatial information and applications [19]. In summary, this body of work outlines QoGIS concepts from many aspects of GIService quality, but the topics and terminology have become very broad. This semantic confusion and the divergent reference sources make it difficult to identify literature specifically related to QoGIS. Moreover, there is no recognized conceptual framework to organize QoGIS concepts and research issues. Researchers from the GIS field and other interdisciplinary areas, therefore, cannot clearly grasp the big picture of QoGIS research, which impedes further study and advancement in their respective domains.

To deal with this problem, we turn to the more developed area of QoS research, which has relatively mature theories and technologies. QoS metrics, such as response time, stability, and accuracy, are conceptually linked to QoGIS and have been applied in QoGIS research [20]. For example, the responsiveness of online map services now approaches that of desktop applications, partly owing to tile caching [8] and to globalized auto-scaling and load-balancing algorithms [9] that exploit users' spatiotemporal visitation patterns [21]. Analyzing the relevance and differences between QoGIS and QoS can therefore provide methods to describe, predict, and guide the development of QoGIS. To compare the two fields systematically, we use topic cluster analysis based on document co-citation analysis (DCA) [22]. The resulting QoGIS and QoS clusters were grouped into three categories, and on that basis we built a conceptual framework for QoGIS research. Using this framework, we analyze the relevance and differences between QoGIS and QoS. The framework provides a basis for a logical interpretation of existing work and points to possible future trends in QoGIS.

The paper is organized as follows: Section 2 describes how the research was conducted and introduces the proposed QoGIS conceptual framework. Section 3 discusses the relations between QoGIS and QoS. Section 4 discusses emerging hot topics in QoGIS and the potential challenges in modeling, acquisition, and application. Section 5 draws conclusions.

2. Research Design and the Proposed QoGIS Conceptual Framework

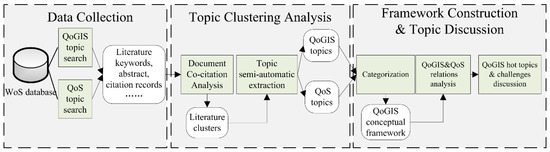

Our research workflow contains three parts, as illustrated in Figure 1: data collection, topic clustering analysis, and conceptual framework construction. Data collection retrieves relevant articles, metadata, and citation records for topic analysis. Topic clustering analysis quantitatively identifies active areas in QoGIS and QoS research. Based on a semantic categorization of these research topics, we propose a conceptual framework for QoGIS, within which we discuss the relations between QoGIS and QoS as well as QoGIS hot topics and challenges.

Figure 1.

Research workflow.

2.1. Data Collection

The Web of Science (WoS) database used in our work is one of the most comprehensive databases for scientific research [23]. It contains the Science Citation Index Expanded (SCIE) and the Social Sciences Citation Index (SSCI), which include the most influential journals in the GIS field, e.g., International Journal of Geographical Information Science (IJGIS), Transactions in GIS (TGIS), and Computers and Geosciences [24]. Since important ideas often emerge in conference papers, we also searched the Conference Proceedings Citation Index – Science (CPCI-S), the Conference Proceedings Citation Index – Social Science and Humanities (CPCI-SSH), and the Emerging Sources Citation Index (ESCI) of WoS. The search was conducted on 24 September 2018 with no restriction on publication year. As mentioned in the introduction, QoGIS has no standard, widely recognized terminology, so we extended the QoGIS topic search with the terms “geographic spatial service OR OGC web service OR web map service OR location-based service OR WebGIS” and “quality OR performance”, and further refined the categories to “geography” and “remote sensing”, as detailed in Appendix A. The QoS topic search used “web service AND QoS” (Appendix B). We chose this query because QoS is a widely recognized term in both academic and industrial studies, and searching for “QoS” also retrieves related terms such as performance, availability, throughput, and reliability. With these search constraints, we obtained 242 QoGIS records and 1002 QoS records.

2.2. Analysis Method

Bibliometric document co-citation analysis (DCA) using CiteSpace [25] was applied to identify clusters of publications in both QoS and QoGIS research. DCA treats each article as a node in a citation network and traces the cited references to compute the centrality of every node [22]. Comparing these centralities identifies the most representative and important clusters. Keywords were then extracted from the clusters using a semi-automatic process. Term frequency-inverse document frequency (TF-IDF) was used to extract representative words, yielding a list of words for each cluster. The word with the highest TF-IDF value was selected as the representative of its cluster, and the words from all clusters together were regarded as the important topics. We then manually removed irrelevant words or replaced them with more relevant QoGIS terms, because the QoGIS literature is relatively small and words extracted from a small amount of text can be irrelevant to the topics. In this step, we referred to terms defined in highly cited research papers and standards, as well as in documents and initiatives from authoritative organizations and working groups.
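The cluster-labeling step can be illustrated with a minimal sketch. It assumes, for simplicity, that the titles and abstracts of each cluster are pooled into one document and that TF-IDF is computed across clusters; CiteSpace's own labeling implementation and options may differ, and the function name `top_term_per_cluster`, the tokenizer, and the sample data are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Simplifying assumption: lowercase, alphabetic tokens only, no stemming.
    return re.findall(r"[a-z]+", text.lower())


def top_term_per_cluster(clusters: dict[str, list[str]],
                         stopwords: frozenset = frozenset()) -> dict[str, str]:
    """Return the highest-TF-IDF term for each cluster (hypothetical helper)."""
    # Pool all texts of a cluster into one bag of words (one "document").
    counts = {
        cid: Counter(t for doc in docs for t in tokenize(doc) if t not in stopwords)
        for cid, docs in clusters.items()
    }
    n_clusters = len(counts)
    # Document frequency: the number of clusters in which each term occurs.
    df = Counter(term for bag in counts.values() for term in bag)
    labels = {}
    for cid, bag in counts.items():
        total = sum(bag.values())
        scores = {t: (k / total) * math.log(n_clusters / df[t]) for t, k in bag.items()}
        # Iterate alphabetically so that ties resolve deterministically.
        labels[cid] = max(sorted(scores), key=scores.get)
    return labels


clusters = {
    "c1": ["tile caching for web map services", "web map tile caching performance"],
    "c2": ["QoS aware web service selection", "web service selection with QoS constraints"],
}
print(top_term_per_cluster(clusters, frozenset({"for", "with", "aware"})))
```

A term that occurs in every cluster (here "web") gets an IDF of zero and can never be chosen, which is why shared vocabulary drops out; with a small corpus the winning terms can still be off-topic, which is what the manual curation step addresses.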

2.3. QoGIS Conceptual Framework

Through DCA clustering and our semi-automatic topic extraction process, we defined a list of topics related to QoGIS and QoS. These topics, however, are scattered across different sub-domains, which obscures the commonalities between QoGIS and QoS research and solutions. To fully explore this scattered research, a systematic conceptual framework of QoGIS research topics is needed. We classified both QoS and QoGIS topics into three categories, as detailed in Appendix C, and assembled the existing QoGIS topics within the proposed conceptual framework to better understand and interpret existing research and solutions relevant to QoGIS.

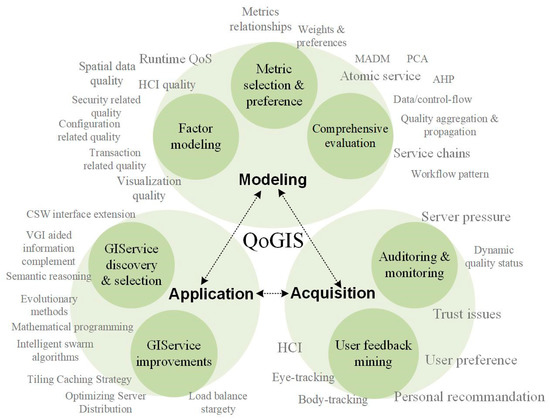

As illustrated in Figure 2, this conceptual framework groups QoGIS topics into three categories: modeling, acquisition, and application. Modeling covers the basic research on quality description and evaluation. Acquisition refers to mechanisms for collecting empirical quality measurements and end-user feedback. Application focuses on QoGIS-based GIService discovery and selection, and on client-side and server-side improvement. The three categories form the main stages of the quality information value-adding process and are highly interdependent. Acquisition provides the data input and basic preprocessing, modeling is the processing and analysis stage, and application is the output and final target. Modeling and acquisition underpin each other: quality factor modeling defines what to acquire, while acquisition provides quality data and measurements for quality evaluation. Together, modeling and acquisition supply the methods and data for application. For example, in QoGIS-based GIService discovery, end-users can use quality information for more focused discovery and selection of GIServices only when that information is provided and the evaluation method is carefully designed. In turn, QoGIS-supported improvement of GIService applications is an important driver that promotes the iterative development of modeling methods and guides the implementation and improvement of acquisition. For example, once the responsiveness and time efficiency of a web map are assured by QoGIS improvement technologies such as auto-scaling and load balancing, the emphasis of quality modeling and acquisition can shift to HCI and geo-visualization quality factors, such as the readability and aesthetics of the map content.
According to the conceptual framework, we discuss the relations between QoGIS and QoS and analyze hot topics and challenges in QoGIS in the following sections.

Figure 2.

Conceptual framework for QoGIS research.

3. Relations between QoGIS and QoS

Many QoGIS research topics are derived from QoS. Understanding the relationship between QoGIS and QoS can therefore provide clues to potential solutions in QoGIS: QoS topics not yet investigated in QoGIS could guide future QoGIS research, while the remaining differences point to the unique characteristics and significance of QoGIS. Although QoGIS and QoS research share the ultimate aim of satisfying end users' non-functional needs, GIServices have different application scenarios, user demands, and unique characteristics of spatial data organization, processing, and representation, which may raise domain-specific research questions. To discuss the relevance and differences between QoGIS and QoS thoroughly, we compare them from two perspectives based on the topics extracted in Appendix C: (1) research methods and technical routes, which reflect the similarities and differences between GIServices and common web services as well as the unique features of spatial data; and (2) study objects and emphases, which relate to user demands and application scenarios.

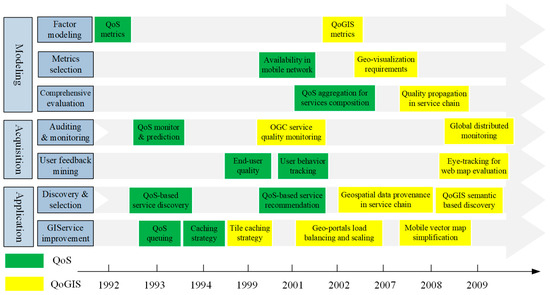

3.1. Relevance between QoGIS and QoS

A comparison of QoGIS and QoS topics shows that QoGIS has been strongly influenced by QoS. QoGIS was originally introduced to the geospatial domain to address runtime quality issues of GIServices and geospatial cyberinfrastructures, and can to some extent be regarded as a specific scenario of QoS [20,21]. Hence, QoS and QoGIS share many topics, including quality factor models, quality evaluation mechanisms, quality monitoring and acquisition, and quality-driven service selection and improvement. As the timeline in Figure 3 shows, QoGIS lags behind QoS research but often follows a similar path. Note that the detected topics end around 2009 because DCA builds the network and detects significant topics from citation counts; newer topics may exist in the literature, but they need time to accumulate enough citations to be detected by topic clustering.

Figure 3.

Timeline showing the relationship between QoGIS and QoS topics.

In the modeling aspect, comprehensive QoS metrics were proposed to objectively measure the quality status of online networks [26]. Similar topics, such as QoGIS metrics [27], appeared in QoGIS research approximately a decade later. QoS metrics provide important references for the quality evaluation of GIServices, and further QoGIS metrics have been built on them to describe the quality of geospatial data retrieved or generated by a GIService. Quality aggregation and propagation for service composition are focal topics in both QoGIS and QoS, because as the number of available services grows, more complex business processes can be realized by reusing existing atomic services.

In the acquisition aspect, service runtime status monitoring is likewise indispensable for GIService quality evaluation, since network dynamics greatly affect user experience. End-user quality [28] and user behavior tracking [29] were proposed to describe and measure user experience and satisfaction, and eye-tracking for web map evaluation [30] was proposed to address the end-user quality of maps and spatial data. User behavior tracking research in QoS provides insight and theoretical foundations for QoGIS. For example, the time users tolerate while waiting for a web page response can be used to evaluate the responsiveness of web maps, and eye-tracking methods from web UI design can benefit map content evaluation in cartography.
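As one illustration of turning a tolerance time into a responsiveness score, the sketch below uses an Apdex-style index (the open Application Performance Index convention), which is our choice of example rather than a method from the cited works. The threshold `T` and the sample response times are hypothetical; a request counts as satisfied if it completes within `T`, tolerating if it completes within `4T`, and frustrated otherwise.

```python
def apdex(response_times_s: list[float], threshold_s: float) -> float:
    """Apdex-style score in [0, 1]: (satisfied + tolerating / 2) / total."""
    if not response_times_s:
        raise ValueError("at least one response time sample is required")
    satisfied = sum(1 for t in response_times_s if t <= threshold_s)
    tolerating = sum(1 for t in response_times_s if threshold_s < t <= 4 * threshold_s)
    # Frustrated samples (slower than 4T) contribute nothing to the numerator.
    return (satisfied + tolerating / 2) / len(response_times_s)


# Hypothetical web map request latencies in seconds, with T = 1 s.
samples = [0.3, 0.8, 1.5, 2.5, 5.0]
print(apdex(samples, threshold_s=1.0))  # → 0.6
```

Two requests are satisfied, two are tolerated, and one is frustrated, giving (2 + 2/2) / 5. The choice of `T` encodes the user tolerance time and would itself need to be grounded in user studies.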

In the application aspect, the tile caching strategy [31] was developed from an earlier caching strategy [32]. Tile caching and asynchronous JavaScript and XML (AJAX) enabled the implementation of tiled map services. Tile caching takes the spatial data structure and user access patterns into consideration, and refined spatial indices have accordingly been proposed to split and allocate spatial data [33]. With the wide use of computing technologies such as cloud computing and volunteer computing in the geospatial domain, cutting-edge quality improvement methods, such as auto-scaling and global elastic deployment, have also been adopted in GIServices [9].
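A tile cache keyed by the `(zoom, x, y)` pyramid address can be sketched as below. It assumes a least-recently-used (LRU) eviction policy as a simple proxy for user access patterns; the cited strategies [31,33] may use different policies and spatial indices, and the `TileCache` class and `render` callback are hypothetical.

```python
from collections import OrderedDict
from typing import Callable, Tuple

TileKey = Tuple[int, int, int]  # (zoom, x, y) address in the tile pyramid


class TileCache:
    """Hypothetical LRU cache for rendered map tiles."""

    def __init__(self, capacity: int, render: Callable[[TileKey], bytes]):
        self.capacity = capacity
        self.render = render  # called on a cache miss to produce the tile
        self._tiles: "OrderedDict[TileKey, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: TileKey) -> bytes:
        if key in self._tiles:
            self._tiles.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._tiles[key]
        self.misses += 1
        tile = self.render(key)
        self._tiles[key] = tile
        if len(self._tiles) > self.capacity:
            self._tiles.popitem(last=False)  # evict the least recently used tile
        return tile


cache = TileCache(capacity=2, render=lambda k: f"tile {k}".encode())
for key in [(0, 0, 0), (1, 0, 0), (0, 0, 0), (1, 1, 0), (1, 0, 0)]:
    cache.get(key)
print(cache.hits, cache.misses)  # → 1 4
```

The repeated request for the zoom-0 tile is served from memory, while a capacity of two forces an eviction each time a third distinct tile arrives. A production cache would more likely weight popular map regions, which is where spatiotemporal access patterns come in.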

Many QoGIS topics overlap with QoS topics because the two fields draw on the same technical sources. As a special type of application, GIServices share many quality demands and issues with applications in other domains, and many problems encountered in QoS also arise in QoGIS, so the two fields are closely related. It is therefore reasonable to expect that QoGIS will come to cover topics that have been widely investigated in QoS but not yet fully studied in QoGIS, such as security issues and transaction quality [34]. These are discussed further in Section 4.1.1.

3.2. Differences between QoGIS and QoS

QoGIS has its own emphasis because GIServices have unique application scenarios, user demands, and characteristics of spatiotemporal data processing and analysis. Differences between QoGIS and QoS therefore arise from their different research contexts and targets, even under identical service conditions, and understanding the factors that make QoGIS unique clarifies these differences. In this section, we discuss the differences within the conceptual framework through four well-recognized unique characteristics of GIServices: spatial data structures [35], geo-visualization demands [36], HCI in geospatial analysis [37], and geographic context.

Regarding quality modeling, both QoS and QoGIS research address data completeness and data accuracy in web services or GIServices, but QoGIS focuses more on the topological completeness and locational accuracy of geospatial data. Both also address usability, but QoS deals more with service-execution metrics such as response time and reliability, whereas QoGIS also addresses client-side usability metrics, such as the friendliness of GIService user interfaces and the intuitiveness of geo-visualization, because quality of experience (QoE) during user interaction with GIServices is a critical quality concern. Friendliness matters because many GIS operations are relatively complex and difficult for new users to follow, yet most participants in public participation applications are non-experts; to encourage their participation, QoGIS should consider friendliness, or fitness for use. Geo-visualization also demands special quality metrics, such as frame rate, allowed viewing angles, resolution, and user ratings for evaluating the immersive, intuitive experiences central to the success of virtual geo-environments, which are rarely discussed among QoS metrics.

In terms of quality information acquisition, spatial data also create domain-specific problems for QoGIS research. For example, information acquired from the access logs of web map applications reveals spatiotemporal distribution patterns of geographic objects and events in the physical world. These geographic patterns carry humanistic and social contexts, which are important for identifying group user preferences and helping users locate matching GIServices [38]. Both QoS and QoGIS must take HCI into consideration when acquiring end-user feedback, but their emphases in feedback analysis and extraction differ considerably. QoS focuses on more general HCI issues, such as whether the titles or figures prepared for GUIs attract end-users' attention appropriately. QoGIS must incorporate the geospatial context of the GUIs, such as the areas of interest (AOIs) in the map content on which users frequently focus, or whether map symbol styles are appropriate for the map representation. AOIs can be acquired through eye-tracking or other user behavior tracking equipment, and QoGIS can be evaluated by comparing the actual AOIs with the areas designers wish to emphasize. Additionally, feedback from novice and expert GIS users serves different purposes: feedback from novices can help build applications suited to users without much expert knowledge, while feedback from experts is useful for further refining the functionality and HCI experience of GIServices. Thus, users' knowledge or career background must be considered as a variable when analyzing HCI data to evaluate service quality accurately.
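One simple way to compare the actual AOI with the area a designer intended to emphasize is an overlap ratio. The sketch below assumes both areas are approximated by axis-aligned rectangles in screen pixels and uses intersection over union (IoU) as the comparison measure; the bounding-box approximation, the `Box` type, and the fixation coordinates are hypothetical, and real eye-tracking analyses typically use richer fixation clustering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in screen pixel coordinates."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    def area(self) -> float:
        # Degenerate or empty boxes have zero area.
        return max(0.0, self.xmax - self.xmin) * max(0.0, self.ymax - self.ymin)


def aoi_from_fixations(points: list[tuple[float, float]]) -> Box:
    """Approximate the observed AOI by the bounding box of fixation points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return Box(min(xs), min(ys), max(xs), max(ys))


def iou(a: Box, b: Box) -> float:
    """Intersection over union: 1.0 for identical boxes, 0.0 for disjoint ones."""
    inter = Box(max(a.xmin, b.xmin), max(a.ymin, b.ymin),
                min(a.xmax, b.xmax), min(a.ymax, b.ymax)).area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


observed = aoi_from_fixations([(100, 100), (300, 100), (200, 250)])
intended = Box(200, 100, 400, 300)  # area the map designer wanted to emphasize
print(round(iou(observed, intended), 3))  # → 0.273
```

A low IoU suggests that users' attention is drifting away from the map content the designer meant to highlight. Grouping the scores by novice and expert participants would reflect the point above that user background should enter the analysis.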

Both QoS and QoGIS can be used to solve application performance and user experience problems, but QoGIS research pays more attention to problems and solutions linked to unique GIService characteristics. Taking availability enhancement as an example, in addition to classical QoS stability research, QoGIS pays more attention to spatial data simplification, optimized transmission, and caching strategies that exploit the features of spatial data models to alleviate network and server-side burdens. Data transmission and information representation quality are also handled differently: QoS addresses data transmission efficiency and security, while the representation and accuracy of spatial datasets are major concerns in QoGIS. QoGIS research provides a foundation and guidance for developing more suitable strategies for presenting spatial data efficiently; for example, the pyramid tiling strategy of the web map tile service [39] was designed for presenting two-dimensional spatial information. When constructing level-of-detail (LOD) metrics for different map tile levels, geo-visualization must conform to cartographic and map generalization rules.
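To make the pyramid structure concrete, the sketch below maps a longitude/latitude to a tile address. It assumes the widely used Web Mercator tiling scheme in which zoom level z has 2^z × 2^z tiles with the origin at the top-left corner (the common XYZ convention); WMTS tile matrix sets can define other origins, extents, and scale sets, so this is an illustration rather than the general WMTS rule. The function name is hypothetical.

```python
import math


def lonlat_to_tile(lon_deg: float, lat_deg: float, zoom: int) -> tuple[int, int]:
    """Return the (x, y) index of the Web Mercator tile containing a point."""
    n = 2 ** zoom  # tiles per axis; the level holds n * n = 4**zoom tiles
    lat_rad = math.radians(lat_deg)
    x = math.floor((lon_deg + 180.0) / 360.0 * n)
    # Mercator y, normalized so that 0 is the top (north) edge and n the bottom edge.
    y = math.floor((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp points on the outer edges (e.g. lon = 180) into the valid index range.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


for z in range(3):
    print(z, 4 ** z, lonlat_to_tile(10.0, 50.0, z))
```

Each zoom step quadruples the number of tiles, which is why the content rendered at each level must be generalized according to cartographic rules rather than simply resampled.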

In summary, the unique requirements of spatial data organization, geo-visualization, and HCI make QoGIS research necessary and distinct from QoS research. Analyzing the relevance and differences between QoS and QoGIS provides a comparative, evolving view of the overall picture of QoGIS research, so that its details can be explored logically and comprehensively.

4. Hot Topics and Challenges in QoGIS

4.1. QoGIS Modeling and Description

4.1.1. Establishment and Evolution of the QoGIS Factor Model

QoGIS metrics are evolving: new QoGIS metrics are emerging and existing ones are being extended. In the early stages, QoGIS research followed the path set by QoS research, so most quality-related issues were expressed in terms of the performance of online web GIS systems. QoS metrics were incorporated into QoGIS applications, covering server-side management, service runtime measurements, and service result quality evaluation. As GIServices matured and became widely adopted, generic expressions of “system performance” were gradually replaced by QoGIS metrics that build on those proposed in QoS studies [34,40] and target the unique characteristics of GIServices [41]. Research papers, international standards, directives from organizations such as OGC [14,15] and INSPIRE [19], working groups, industrial research reports, and other initiatives define quality factors from different angles [18]. These metrics include HCI and usability quality, spatial data quality, and geo-visualization requirements. In the future, with the expanding commercialization of GIServices, QoGIS may also adopt metrics adapted from those initially developed for e-business services. Based on an understanding of these trends, we propose a QoGIS metrics model organized into three quality types, i.e., shared quality factors, GIService-specific factors, and e-business-emphasized factors, as shown in Table 1. Appendix D summarizes a catalogue of quality metrics with examples of GIServices of good and poor quality.

Table 1.

QoGIS metrics model.

Table 1 lists shared quality factors, such as the runtime QoS of web services, that also apply to QoGIS. Meeting runtime QoS requirements is challenging because geoprocessing in GIServices is often both data-intensive and computation-intensive. QoGIS research therefore focuses on extending scalability and robustness by utilizing new computing technologies and storage models for geospatial datasets. For example, new computational topological models can be developed to optimize the efficiency of spatial data queries [46].

GIService-specific factors, including spatial data and metadata quality, geo-visualization, and HCI quality, have gained attention with emerging technologies and applications. For example, in Volunteered Geographic Information (VGI) [43], virtual geo-environments [44], and Public Participation GIS (PPGIS) [45], new QoGIS metrics were developed to overcome quality issues specific to those domains. The emergence of VGI has made QoGIS research pay more attention to spatial data quality, because VGI contributors are often non-experts and quality problems may therefore arise in the spatial data they submit [47]. Virtual geo-environments are a new application scenario that requires new quality metrics to describe and evaluate geo-visualization effects. PPGIS brings new considerations to QoGIS research, such as usability or fitness for use, which are important when acquiring lay knowledge created by non-expert users who contribute to public decision-making processes.

Future GIService studies may also consider quality factors critical to e-business services, which incorporate transaction-, management-, configuration-, and security-related features [48]. These topics are seldom discussed in QoGIS, most likely because most GIServices, except online map services and basic location-based services, are not as widely commercialized as other e-business services, such as weather forecasting, payment, and advertising services. However, as geoprocessing services become more frequently used, transaction-related quality issues could cause serious problems. Configuration- and management-related quality will also need consideration, since service operation, maintenance, and access authorization have become significant issues. Security-related QoS is becoming a focus in geospatial data services, especially for classified government and military geospatial datasets and in relation to personal privacy. Vector and raster map watermarking [49] partially addresses these security-related quality issues; however, it is seldom discussed in the context of GIServices.

These metrics can help measure quality both quantitatively and qualitatively. However, different metrics appeal to different types of users, such as end-users, service providers, developers, and system architects. Developers and system architects often care about internal quality metrics that are not explicitly visible in the application interface, from the perspective of software and system architecture. In contrast, end-users and service providers care more about metrics that directly affect user experience, such as response time and visualization quality. Moreover, the importance of these metrics varies with the application and end-user requirements. Therefore, application types and end-user preferences must be considered in final in-use quality evaluations.

4.1.2. Quality Metric Selection in Applications Types and End-User Preferences

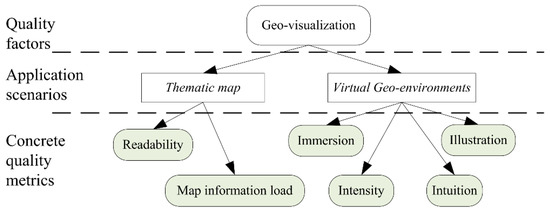

Application types must be considered when selecting quality metrics. Because quality demands vary with application type, the same quality factor may be measured differently in different application scenarios and thus become domain-specific. For example, thematic mapping and virtual geo-environments have different geo-visualization requirements, as illustrated in Figure 4. Geo-visualization in thematic mapping applications must conform to cartographic conventions and rules [50], and thematic maps demand readability and appropriate information loads. In virtual geo-environments, by contrast, the interactive user experience [51] is emphasized, so intuitive, intense, and immersive experiences are demanded. HCI requirements likewise vary across applications. In PPGIS, for example, HCI usability is an important quality metric, as better usability motivates users to participate in editing, collaborating on, and sharing their local geospatial knowledge. In emergency response services, the timeliness of HCI is more critical to success. These varied meanings and requirements of quality result from differences in application scenarios.

Figure 4.

The geo-visualization quality in different application scenarios.

End-user preferences must be considered in quality evaluation, because end-users of the same application have different quality preferences. For example, the quality of a positioning service is often evaluated by response time and location accuracy. When end-users need to choose one positioning service from a large number of similar services, an evaluation result that combines both response time and location accuracy must be generated, weighted by each user's preferences. In summary, application types and end-user preferences must be considered in the quality evaluation of GIServices. Additionally, quality evaluation methods for both atomic services and service chains need to be developed for GIService selection and optimization.
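A preference-weighted evaluation of this kind can be sketched with simple additive weighting (SAW), a basic MADM technique chosen here for illustration. The sketch assumes min-max normalization, user-supplied weights, and that lower values are better for both criteria (response time in seconds and location error in meters); the service names, numbers, and weights are hypothetical.

```python
def rank_services(services: dict[str, dict[str, float]],
                  weights: dict[str, float],
                  lower_is_better: set[str]) -> list[tuple[str, float]]:
    """Rank services by a weighted sum of min-max normalized criteria (SAW)."""
    scores = {name: 0.0 for name in services}
    for criterion, weight in weights.items():
        values = [s[criterion] for s in services.values()]
        lo, hi = min(values), max(values)
        for name, s in services.items():
            if hi == lo:
                norm = 1.0  # all services tie on this criterion
            elif criterion in lower_is_better:
                norm = (hi - s[criterion]) / (hi - lo)  # lowest value maps to 1
            else:
                norm = (s[criterion] - lo) / (hi - lo)  # highest value maps to 1
            scores[name] += weight * norm
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


positioning = {
    "svc_a": {"response_time_s": 0.2, "error_m": 15.0},
    "svc_b": {"response_time_s": 0.8, "error_m": 3.0},
    "svc_c": {"response_time_s": 0.5, "error_m": 8.0},
}
lower = {"response_time_s", "error_m"}
speed_first = rank_services(positioning, {"response_time_s": 0.8, "error_m": 0.2}, lower)
accuracy_first = rank_services(positioning, {"response_time_s": 0.2, "error_m": 0.8}, lower)
print([name for name, _ in speed_first])     # → ['svc_a', 'svc_c', 'svc_b']
print([name for name, _ in accuracy_first])  # → ['svc_b', 'svc_c', 'svc_a']
```

The same candidate set yields opposite rankings for a speed-oriented user and an accuracy-oriented user, which is exactly why a single fixed quality score is insufficient for service selection.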

4.1.3. Quality Evaluation of Atomic Service and Service Chain

To evaluate the quality of an atomic service, multiple quality dimensions must be combined in an evaluation model. Operations research methods, such as multiple attribute decision making (MADM) [52] and the analytic hierarchy process (AHP) [53], provide solutions for integrating quality dimensions. However, when dealing with the quality of a service chain, the control-flow and data-flow structures need to be considered before calculating the aggregated (or total) quality score.
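As an illustration of how AHP can derive weights for quality dimensions, the sketch below approximates the priority vector with the normalized row geometric means of a pairwise comparison matrix and then checks Saaty's consistency ratio. The three quality dimensions and all judgment values are hypothetical, and the geometric-mean approximation is used in place of an exact principal-eigenvector computation.

```python
import math

# Saaty's random consistency index for matrices of size n (n = 1..5).
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12}


def ahp_weights(matrix):
    """Approximate AHP priority weights via normalized row geometric means."""
    n = len(matrix)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def consistency_ratio(matrix, weights):
    """Estimate lambda_max and return CR = CI / RI (CR < 0.1 is conventionally acceptable)."""
    n = len(matrix)
    aw = [sum(a * w for a, w in zip(row, weights)) for row in matrix]
    lambda_max = sum(x / w for x, w in zip(aw, weights)) / n
    ci = (lambda_max - n) / (n - 1)
    ri = RANDOM_INDEX[n]
    return ci / ri if ri else 0.0


# Hypothetical judgments over: response time, availability, positional accuracy.
# judgments[i][j] states how much more important dimension i is than dimension j.
judgments = [
    [1.0, 3.0, 5.0],
    [1 / 3.0, 1.0, 2.0],
    [1 / 5.0, 1 / 2.0, 1.0],
]
w = ahp_weights(judgments)
print([round(x, 3) for x in w])
print(round(consistency_ratio(judgments, w), 3))
```

The resulting weights could then feed a weighted-sum score of the kind used in MADM.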

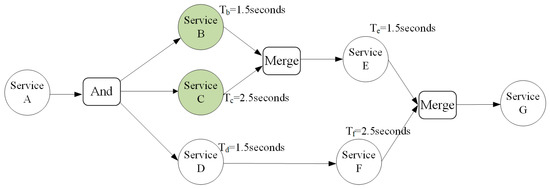

Quality metrics aggregate differently under different control-flow or data-flow structures. For example, the total response time of a service chain with a sequential control-flow structure is the sum of the response times of all the atomic services (so-called sub-tasks or service components), while the total availability is calculated by multiplying the availabilities of all the atomic services in the chain. Quality metric computation can be even more complicated in service chains with nested control-flow structures. As illustrated in Figure 5, the total response time from service A to service E depends on the longer of the response times of service B and service C, while the total response time from service A to service G depends on the longer of the two paths, from service A to service E and from service A to service F. In short, the response time of a service chain is determined by the critical path of its directed control-flow graph. Therefore, if only the quality status of atomic services is considered and the workflow structure is ignored, overall optimization of a service chain cannot be guaranteed [54], especially when multiple quality metrics need to be balanced.
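The critical-path rule can be sketched by modeling a service chain as a directed acyclic graph and computing the earliest finish time of each service in topological order. The topology and response times below are hypothetical (they do not reproduce Figure 5), every join is assumed to wait for all of its incoming branches, and availability is aggregated as a plain product under an assumption of independent failures.

```python
import math
from graphlib import TopologicalSorter  # Python 3.9+


def chain_response_time(response_time, predecessors):
    """Critical-path response time of a service chain modeled as a DAG.

    response_time: {service: seconds}
    predecessors:  {service: set of services that must finish before it starts}
    Every join waits for all incoming branches, so a parallel ("And") split
    contributes only its slowest branch.
    """
    finish = {}
    for svc in TopologicalSorter(predecessors).static_order():
        start = max((finish[p] for p in predecessors.get(svc, ())), default=0.0)
        finish[svc] = start + response_time[svc]
    return max(finish.values())


def chain_availability(availability):
    """Chain availability when every service must succeed (independent failures assumed)."""
    return math.prod(availability.values())


# Hypothetical chain: A fans out to B, C (parallel) and D; B and C join at E;
# D feeds F; E and F join at G.
predecessors = {"B": {"A"}, "C": {"A"}, "D": {"A"}, "E": {"B", "C"}, "F": {"D"}, "G": {"E", "F"}}
response_time = {"A": 1.0, "B": 2.0, "C": 3.5, "D": 1.0, "E": 0.5, "F": 2.0, "G": 1.0}

print(chain_response_time(response_time, predecessors))  # 6.0 (path A-C-E-G)
print(sum(response_time.values()))                       # 11.0 if run strictly in sequence
print(round(chain_availability({s: 0.99 for s in response_time}), 4))
```

Summing all response times would overestimate this chain by almost a factor of two, which illustrates why the workflow structure must be known before aggregating.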

Figure 5.

The aggregation of response times in an exemplary service chain (Note: “And” stands for parallel routing, while “Merge” stands for asynchronous join [55]).

QoGIS research on the quality evaluation of atomic services and service chains appeared later than the corresponding QoS research, because GIServices and GIService chaining techniques developed relatively late in the geospatial domain. Research on quality evaluation becomes active only when a large number of GIServices are available online for public use and chaining. At present, quality evaluation methods and technologies for atomic GIServices and GIService chains are still evolving and are far from mature application. For example, studies on the aggregation of GIService-specific quality factors, such as logical consistency and positional accuracy, in a GIService chain consisting of multiple geoprocessing steps are still lacking.

Quality modeling must consider the purpose of the application, which affects the final quality evaluation. Metric modeling and evaluation methods provide important methodologies; the quality acquisition process, however, presents its own challenges.

4.2. QoGIS Acquisition and Mining

QoGIS acquisition refers to the process of obtaining subjective or objective measurements of quality metrics. Service providers, third-party monitoring, and user feedback are the three major data sources for QoGIS acquisition. For service advertisement, a service provider may publish its quality statement in service metadata, on its website, or through a service broker. Third-party monitoring collects runtime QoS information actively and in a stakeholder-neutral manner. User feedback is an important approach for obtaining evaluations of user experience and satisfaction with the participation of end-users. These quality acquisition methods have different characteristics. As illustrated in Table 2, service providers can provide comprehensive quality information containing both runtime QoS and comments from self-assessment. Third-party monitoring brokers provide runtime QoS metrics such as response time and availability. User feedback provides user preferences, satisfaction rankings, and comments; because these are often not directly related to quality information, further mining is needed to extract useful quality information from them.

Table 2.

A comparison of multiple QoGIS acquisition sources.

In Table 2, we compare the three major QoGIS acquisition sources from the following perspectives: quality type, data volume, metric dimension, technique difficulty, acquisition cost, trustworthiness, and timeliness. Quality type can be quantitative runtime QoS measurements, the HCI quality factors illustrated in Table 1, or comprehensive quality, which may contain both quantitative metrics and qualitative descriptions from user comments and provider self-assessments and covers all the quality factor types in Table 1. The data volume collected from different acquisition sources varies. The data volume from third-party monitoring can be very large if monitoring is frequent and long-term, while the data volume from questionnaires can be small or medium, depending on the sample size of the survey. Metric dimension measures the richness of the quality metrics that can be extracted from the quality description. User comments may cover many quality metrics, since they are collected from massive numbers of end-users with different backgrounds and requirements, and there are no restrictions on their content. For monitoring data, the dimensions are limited to certain pre-defined runtime QoS metrics. Technique difficulty and labor burden describe the cost of obtaining and analyzing the acquired quality description from the technical and labor perspectives, respectively. Trustworthiness describes whether the obtained quality information is reliable. Timeliness of quality information is also important, because service quality and user experience may change for many reasons.

4.2.1. Service Provider Offering Versus Third-Party Monitoring

The most direct method of acquiring comprehensive quality information about a service is to consult the service provider. However, relying on this information is risky for users, since providers may tend to overstate the quality of their products [56,57]. Therefore, a more impartial data source is needed to assess the quality of GIServices, and third-party monitoring can fill this gap.

Third-party service monitoring brokers can provide highly trustworthy quality information from neutral organizations through active, periodic, or even real-time monitoring. For example, the response time of a WFS or WMS can be obtained by invoking mandatory or optional service operations [58]. However, frequent quality monitoring can increase the pressure on the server [31]. To acquire long-term, fine-grained monitoring data that covers all time spans without placing too much concurrent access burden on the GIService server, monitoring must incorporate dedicated design strategies [59,60]. In addition, researchers have found that service performance is related to the geographic distance between visitors and deployed services [61,62]. Thus, single-site monitoring cannot obtain QoGIS information that is useful for users all over the world; instead, globally distributed monitors must be set up at different locations around the world.
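A single third-party probe of the kind described above can be sketched as timing an OGC GetCapabilities request and recording failures, with results kept per monitoring site so that globally distributed monitors can be compared. The monitor labels, the endpoint URL, the timeout, and the jittered one-probe-per-period schedule are illustrative assumptions rather than the strategies of [59,60]; stub fetch functions replace the network so the example runs offline.

```python
import random
import time
import urllib.parse
import urllib.request
from dataclasses import dataclass


@dataclass
class ProbeResult:
    monitor: str      # label of the monitoring site, e.g. "eu-west" (hypothetical)
    ok: bool
    elapsed_s: float


def get_capabilities_url(endpoint, service="WMS"):
    """Build an OGC key-value-pair GetCapabilities request for the given endpoint."""
    query = urllib.parse.urlencode({"SERVICE": service, "REQUEST": "GetCapabilities"})
    return f"{endpoint}{'&' if '?' in endpoint else '?'}{query}"


def http_fetch(url, timeout_s):
    """Real network fetch; replaced by a stub in the offline demo below."""
    with urllib.request.urlopen(url, timeout=timeout_s) as resp:
        return resp.read()


def probe(endpoint, monitor, fetch=http_fetch, timeout_s=10.0):
    """Time one GetCapabilities call; any exception (timeout, HTTP error) is a failure."""
    start = time.perf_counter()
    try:
        fetch(get_capabilities_url(endpoint), timeout_s)
        ok = True
    except Exception:
        ok = False
    return ProbeResult(monitor, ok, time.perf_counter() - start)


def probe_times(start_s, period_s, count, rng):
    """One probe per period at a random offset inside it: low server load, but over
    many periods every time of day gets sampled."""
    return [start_s + i * period_s + rng.uniform(0, period_s) for i in range(count)]


def summarize(results):
    """Per-monitor availability and mean response time of successful probes."""
    summary = {}
    for monitor in sorted({r.monitor for r in results}):
        rs = [r for r in results if r.monitor == monitor]
        ok_times = [r.elapsed_s for r in rs if r.ok]
        summary[monitor] = {
            "availability": len(ok_times) / len(rs),
            "mean_response_s": sum(ok_times) / len(ok_times) if ok_times else None,
        }
    return summary


# Offline demo with stub fetchers instead of a real WMS endpoint.
def stub_ok(url, timeout_s):
    return b"<WMS_Capabilities/>"


def stub_timeout(url, timeout_s):
    raise TimeoutError("no response")


endpoint = "https://example.org/wms"  # placeholder endpoint
results = [
    probe(endpoint, "eu-west", fetch=stub_ok),
    probe(endpoint, "eu-west", fetch=stub_timeout),
    probe(endpoint, "asia-east", fetch=stub_ok),
]
report = summarize(results)
print(report)
```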

Although third-party monitoring provides more trustworthy quality information, it still has limitations: the quality metrics are not comprehensive enough, and covering all spatial and temporal quality states while limiting server pressure remain open problems. User feedback can be a good supplementary solution to these problems.

4.2.2. User Feedback Mining

User feedback derives from users' visiting behavior. In general, four data sources can provide user feedback: questionnaires, user comments, server logs, and usability testing, as illustrated in Table 3. These data sources contain subjective user views that may provide rich quality-related information, such as how much users like the deployed services, which can be used to investigate user satisfaction and preferences. However, user feedback also creates problems: unstructured text cannot be used directly in quality acquisition, so text mining is required.

Table 3.

Four exemplary quality data sources of user feedback.

Questionnaires and user comments are the most natural ways to collect user feedback. However, surveys have the disadvantage that users may be misled or may hide their true feelings, consciously or unconsciously [63]. User comments may be more reliable because they are produced spontaneously by users. However, user comments often contain irrelevant or noisy information that contributes nothing to quality information acquisition.
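One very simple form of such text mining is lexicon-based aspect tagging: each comment is matched against keyword lists for quality aspects, and comments that match no aspect are treated as irrelevant. The aspect names, keyword lists, and comments below are hypothetical; practical systems would more likely use trained classifiers or topic models.

```python
import re
from collections import Counter

# Hypothetical lexicon: quality aspect -> indicative keywords.
ASPECT_KEYWORDS = {
    "response_time": {"slow", "fast", "lag", "loading", "timeout"},
    "availability": {"down", "offline", "unavailable", "error"},
    "data_accuracy": {"wrong", "outdated", "missing", "accurate", "offset"},
    "usability": {"confusing", "easy", "intuitive", "hard"},
}


def tag_aspects(comment):
    """Quality aspects whose keywords appear in the comment (case-insensitive)."""
    tokens = set(re.findall(r"[a-z]+", comment.lower()))
    return {aspect for aspect, words in ASPECT_KEYWORDS.items() if tokens & words}


def mine(comments):
    """Count aspect mentions; comments matching no aspect are counted as irrelevant."""
    counts, irrelevant = Counter(), 0
    for comment in comments:
        aspects = tag_aspects(comment)
        if aspects:
            counts.update(aspects)
        else:
            irrelevant += 1
    return counts, irrelevant


comments = [
    "Map tiles are so slow, loading takes forever",
    "Anyone know a good pizza place nearby?",
    "The road layer is outdated and some streets are missing",
    "Service was down all morning",
]
counts, irrelevant = mine(comments)
print(dict(counts), irrelevant)
```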

Similar to user comments, server log mining is statistically meaningful for finding the spatial-temporal patterns of large groups of users and for recommending more suitable services to end-users. Understanding the spatial-temporal patterns of group behavior requires many visits to the deployed service, because different kinds of users have different visiting patterns. In addition to spatial-temporal patterns, mining can also help identify user preferences for data and service functions of interest through machine learning techniques, such as collaborative filtering [64]. However, acquiring and analyzing large amounts of user data can be expensive. Moreover, this data source cannot adequately reflect the specific knowledge background of end-users [63].
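The spatial-temporal side of server log mining can be illustrated by counting requests per client region and hour of day and picking each region's peak hour. The log format is hypothetical and assumes the client region has already been resolved (for example, by IP geolocation).

```python
from collections import Counter, defaultdict
from datetime import datetime


def parse(line):
    """Parse a hypothetical log line '<ISO timestamp> <client region> <service>'."""
    ts, region, service = line.split()
    return datetime.fromisoformat(ts), region, service


def hourly_profile(lines):
    """Count requests per client region and hour of day."""
    profile = defaultdict(Counter)
    for line in lines:
        ts, region, _ = parse(line)
        profile[region][ts.hour] += 1
    return profile


def peak_hours(profile):
    """Busiest hour of day for each region."""
    return {region: hours.most_common(1)[0][0] for region, hours in profile.items()}


log = [
    "2018-05-01T08:15:02 EU wms-landcover",
    "2018-05-01T08:40:11 EU wms-landcover",
    "2018-05-01T14:05:00 EU wfs-roads",
    "2018-05-01T21:30:45 AS wms-landcover",
    "2018-05-02T21:10:00 AS wms-landcover",
    "2018-05-02T03:00:00 AS wfs-roads",
]
print(peak_hours(hourly_profile(log)))  # {'EU': 8, 'AS': 21}
```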

User-tracking-based usability testing [65] is one way of acquiring more detailed user feedback. Lab testing needs fewer users (20 users can be enough [66]), and experiments can be set up with users from specific backgrounds. For example, if a GIService is aimed at novices, the participants should be users without professional knowledge of the field. Many tools are available for user testing, such as eye-tracking equipment [67] developed for such experiments [65]. Changes in body language and facial expression can also be used to estimate user reactions when using services. These methods have been successfully used to design web UIs for a better user experience [68]. More importantly, several attempts have been made to improve the user experience of GIServices, covering map navigation [69], interactive online mapping designs [20], and feedback-based GIService retrieval [70]. However, the theory and application of usability testing in QoGIS research are still evolving, since this interdisciplinary research links with many disciplines, such as cognitive science and psychology. Meanwhile, its technical difficulty and cost are relatively high.

Overall, each of these acquisition methods has problems, including uncertain reputation, server-side pressure, and mining efficiency. However, their advantages and disadvantages can be complementary. Integrating the acquisition methods can provide more comprehensive and reliable quality information for real-world applications, e.g., location-based services and VGI applications. Combining new acquisition methods and different data sources is an emerging trend. The commercialization of GIServices will bring more end-users and thus demand more intelligent mining methods for investigating user satisfaction and preferences, similar to those used for commodity recommendations.

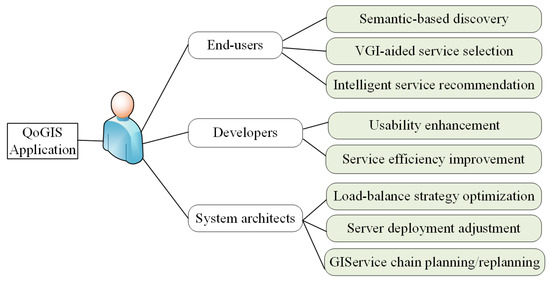

4.3. QoGIS-Aware Application and GIService Optimization

As illustrated in Figure 6, QoGIS can benefit a wide range of users. From the end-user perspective, a QoGIS evaluation model can help users select among similar GIServices. From the developer or system architect perspective, QoGIS research provides methodologies for improving GIServices, which helps service providers benefit in the market. User-centered design is a future direction for GIService development and is raising new demands for both developers and system architects in the field.

Figure 6.

Typical application scenarios of QoGIS for different roles of users.

4.3.1. GIService Discovery and Selection

As GIServices develop, providers around the world will deliver more and more GIServices with similar functionalities. According to one study, over 40,000 WMSs are deployed all over the world [62]. Thus, it is challenging for end-users to find the best-quality GIServices among such a large number of similar candidates [70]. Most Spatial Data Infrastructures (SDIs) and geoportals, such as the GEOSS Clearinghouse, Data.gov, and INSPIRE, do not support quality-aware service discovery [71]. The challenges include providing quality-enabled metadata registry models for quality description, GIService retrieval methods, and standards for these GIService resources. Quality semantics [3,72] can be considered a promising solution for service discovery: they organize different quality facets hierarchically and describe complex end-user QoGIS requirements in a form that computers can process, thus enabling more intelligent and efficient service discovery.

To support quality optimization of GIService chains, sophisticated GIService selection methods must be developed. Mathematical programming approaches, such as linear programming (LP), can optimize a GIService chain with a limited number of atomic services and corresponding service instances (i.e., the service candidates of an atomic service) [73]. As the GIService chain grows, these methods become less efficient because of computational complexity: the number of candidate combinations grows exponentially with the number of atomic services. Furthermore, exact methods like LP give only one optimized solution at the planning stage, which may become invalid or be easily violated when the values of some quality metrics change slightly, since service runtime and network environments are usually mutable. Evolutionary methods [74], such as genetic algorithms, can easily be parallelized to improve efficiency, while swarm intelligence algorithms provide strong search capabilities and robustness in dynamic situations [75]. However, few of these methods have been used for GIService chain optimization [76]. Considering the complex control/data-flow structures and high-dimensional quality metrics of GIService chains, more research on quality evaluation and selection must be done in the QoGIS field.
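To make the selection problem concrete, the sketch below compares exhaustive search with a small genetic algorithm on a hypothetical sequential chain of four abstract tasks, each with three candidate instances. It maximizes chain availability subject to an end-to-end response-time limit. The candidate values, the limit, and the GA settings (truncation selection, one-point crossover, per-gene mutation) are assumptions, and the GA is not guaranteed to reach the optimum.

```python
import itertools
import math
import random

# Hypothetical candidates: per abstract task, (response_time_s, availability) per instance.
CANDIDATES = [
    [(1.0, 0.95), (2.0, 0.99), (0.5, 0.90)],
    [(3.0, 0.99), (1.5, 0.97), (1.0, 0.92)],
    [(2.0, 0.98), (0.8, 0.94), (1.2, 0.99)],
    [(0.7, 0.96), (1.1, 0.995), (0.4, 0.93)],
]
MAX_RESPONSE_S = 5.0  # hypothetical end-to-end limit for the sequential chain


def evaluate(plan):
    """(total response time, availability) of a sequential chain; plan[t] = instance index."""
    chosen = [CANDIDATES[t][i] for t, i in enumerate(plan)]
    return sum(rt for rt, _ in chosen), math.prod(av for _, av in chosen)


def fitness(plan):
    """Feasible plans score their availability (> 0); infeasible ones score the
    negative deadline overrun, so every feasible plan beats every infeasible one."""
    rt, av = evaluate(plan)
    return av if rt <= MAX_RESPONSE_S else -(rt - MAX_RESPONSE_S)


def brute_force():
    """Exact search over all combinations; the count multiplies with every added task."""
    plans = itertools.product(*(range(len(c)) for c in CANDIDATES))
    return max(plans, key=fitness)


def genetic_search(pop_size=20, generations=60, mutation_rate=0.2, seed=0):
    rng = random.Random(seed)
    n_tasks = len(CANDIDATES)
    pop = [[rng.randrange(len(c)) for c in CANDIDATES] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 2]              # truncation selection keeps the elite
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_tasks)         # one-point crossover
            child = a[:cut] + b[cut:]
            for t in range(n_tasks):                # per-gene mutation
                if rng.random() < mutation_rate:
                    child[t] = rng.randrange(len(CANDIDATES[t]))
            children.append(child)
        pop = parents + children
    return tuple(max(pop, key=fitness))


best = brute_force()
ga = genetic_search()
print("combinations:", math.prod(len(c) for c in CANDIDATES))  # 81
print("exact:", best, evaluate(best))
print("GA:   ", ga, evaluate(ga))
```

Here the exhaustive search only has to check 3^4 = 81 plans, but that number is multiplied by the candidate count of every additional task, which is where heuristic search becomes attractive.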

Developments in crowdsourcing technologies, such as VGI and PPGIS, allow end-users to help improve the data quality of GIServices through public participation [77]. End-users can help improve the completeness and accuracy of spatial data and metadata by submitting updates and corrections, as in OSM [78] and Geo-wiki [79]. With the local knowledge [80] of end-users, mistakes in spatial data and metadata can be identified and corrected more efficiently, and the availability of spatially related information is enhanced [81]. However, crowdsourcing technologies also create problems. Local residents may know their home towns or areas well but may not be familiar with cartographic rules, which can make the submitted information unsuitable for use or even wrong [82]. Thus, making full use of volunteered information demands strict quality auditing and inspection. Research on evaluating and improving the quality of VGI data is currently underway [83].

QoGIS can also help service discovery by incorporating end-user background information into recommendation models. Recommending potentially desired GIServices based on previous selection context or user profiles can lead to efficient service discovery. For example, users selecting an imagery data service may also need an imagery processing service. Some studies use methods such as collaborative filtering to find services that users may need and offer them as recommendations [84]. User profiles, e.g., of climate researchers, geologists, or urban planners, can also help users locate potential GIServices. A possible trend in this area is to build semantic links between user preferences and user profiles.
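Item-based collaborative filtering can be sketched from usage histories alone: services are compared by the cosine similarity of the sets of users who invoked them, and unseen services are scored by their similarity to the services a user already used. The user IDs and service names are hypothetical, and this is one common CF variant rather than the method of [84].

```python
import math
from collections import defaultdict

# Hypothetical usage history: the GIServices each user has invoked.
HISTORY = {
    "u1": {"imagery-data", "imagery-processing", "basemap"},
    "u2": {"imagery-data", "imagery-processing"},
    "u3": {"imagery-data", "geocoding"},
    "u4": {"basemap", "geocoding", "routing"},
    "u5": {"imagery-data"},
}


def users_by_service(history):
    """Invert user -> services into service -> set of users."""
    users_of = defaultdict(set)
    for user, services in history.items():
        for svc in services:
            users_of[svc].add(user)
    return users_of


def cosine(a, b):
    """Cosine similarity of two services represented as sets of users."""
    return len(a & b) / math.sqrt(len(a) * len(b)) if a and b else 0.0


def recommend(user, history, k=2):
    """Rank services the user has not used by summed similarity to those they have."""
    users_of = users_by_service(history)
    seen = history[user]
    scores = {
        svc: sum(cosine(users_of[svc], users_of[s]) for s in seen)
        for svc in users_of if svc not in seen
    }
    return sorted(scores, key=lambda svc: (-scores[svc], svc))[:k]


print(recommend("u5", HISTORY))  # ['imagery-processing', 'basemap']
```

A user who has only used the imagery data service is pointed to the imagery processing service first, mirroring the example in the text.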

4.3.2. GIService Improvements

Many methods studied from the developer and system architect perspectives yield GIServices with improved QoGIS. Poor usability can be a serious impediment to user participation, especially when collecting public views for public-involved applications such as location selection for public infrastructure. Developers use usability testing to adjust and improve the user interface design and HCI workflow of a GIService [85]. GIServices often process large-volume geographic datasets, which can be time-consuming, so asynchronous invocation and status tracking mechanisms have been proposed to improve usability and the visibility of the processing status in the execution stage of a GIService chain [86].
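The asynchronous pattern mentioned above can be sketched as a front end that returns a job identifier immediately and lets clients poll a status that includes progress. This is loosely in the spirit of asynchronous execution in OGC WPS but does not implement that standard; the class, the `buffer_features` stand-in process, and the status fields are hypothetical.

```python
import time
import uuid
from concurrent.futures import ThreadPoolExecutor


class AsyncProcessingService:
    """Hypothetical asynchronous geoprocessing front end.

    execute() returns a job id at once; clients poll status() instead of keeping a
    connection open while a long-running process works through a large dataset.
    """

    def __init__(self, workers=2):
        self._pool = ThreadPoolExecutor(max_workers=workers)
        self._jobs = {}
        self._progress = {}

    def execute(self, process, *args):
        job_id = str(uuid.uuid4())
        self._progress[job_id] = 0

        def report(percent):  # passed to the process so it can publish progress
            self._progress[job_id] = percent

        self._jobs[job_id] = self._pool.submit(process, report, *args)
        return job_id

    def status(self, job_id):
        future = self._jobs[job_id]
        if not future.done():
            return {"status": "running", "progress": self._progress[job_id]}
        if future.exception() is not None:
            return {"status": "failed", "error": str(future.exception())}
        return {"status": "succeeded", "result": future.result()}

    def close(self):
        self._pool.shutdown(wait=True)


def buffer_features(report, features, distance_m):
    """Stand-in for a slow geoprocessing step that reports its progress."""
    out = []
    for i, feature in enumerate(features, start=1):
        time.sleep(0.01)  # simulate work
        out.append(f"{feature}+buffer({distance_m})")
        report(int(100 * i / len(features)))
    return out


service = AsyncProcessingService()
job = service.execute(buffer_features, ["road-1", "road-2", "road-3"], 50)
while (state := service.status(job))["status"] == "running":
    time.sleep(0.005)  # a real client would poll far less often
service.close()
print(state)
```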

Developers also optimize map tile caching strategies [87] by mining user visiting patterns from server-side logs, and adjust spatial indexing to fit parallel computing environments [88]. Once performance is assured, other quality issues of the geospatial data content can be considered, such as the accuracy of positioning datasets or the topological completeness of the spatial relations in volunteered geospatial information [83].
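A minimal version of log-driven tile caching maps each logged request location to its Web Mercator XYZ tile (the widely used slippy-map tiling scheme) and pre-seeds the cache with the most frequently requested tiles. The request coordinates are hypothetical, and frequency-based pre-seeding is only one simple strategy, not necessarily the one in [87].

```python
import math
from collections import Counter


def tile_key(lon, lat, zoom):
    """(zoom, x, y) of the Web Mercator XYZ tile containing a WGS84 point."""
    n = 2 ** zoom
    lat_rad = math.radians(lat)
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    return zoom, max(0, min(n - 1, x)), max(0, min(n - 1, y))


def tiles_to_preseed(request_points, zoom, k):
    """The k most frequently requested tiles at one zoom level."""
    hits = Counter(tile_key(lon, lat, zoom) for lon, lat in request_points)
    return [tile for tile, _ in hits.most_common(k)]


# Hypothetical request centres extracted from a server log.
requests = [(114.3, 30.6)] * 3 + [(-0.1, 51.5)]
print(tile_key(0.0, 0.0, 1))  # (1, 1, 1)
print(tiles_to_preseed(requests, zoom=10, k=1))
```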

System architects focus on a higher level of service improvement, using group visiting patterns to handle the server pressure caused by ever-changing user visiting behavior. To find these visiting patterns, researchers mine and analyze server logs with time series decomposition methods [89]. Based on the patterns, system architects can design load-balancing and auto-scaling strategies that scale out to more computing nodes during high-concurrency periods and release computing resources during low-traffic periods.
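The link from visiting patterns to auto-scaling can be sketched as estimating a daily seasonal profile from hourly request counts and converting each hour's expected load into a node count. The per-node capacity, headroom factor, and node bounds are hypothetical, and averaging by hour of day is a simplification of the time series decomposition methods cited in [89].

```python
import math
from statistics import mean


def hourly_seasonal_profile(hourly_counts):
    """Mean request count for each hour of day over whole days of hourly data.

    Only the daily seasonal component is estimated; trend and residual are ignored.
    """
    if len(hourly_counts) % 24:
        raise ValueError("expected whole days of hourly counts starting at 00:00")
    return [mean(hourly_counts[h::24]) for h in range(24)]


def nodes_needed(requests_per_hour, capacity_per_node, headroom=1.2, min_nodes=1, max_nodes=20):
    """Nodes to run for one hour: expected load plus headroom, clamped to bounds."""
    raw = math.ceil(requests_per_hour * headroom / capacity_per_node)
    return max(min_nodes, min(max_nodes, raw))


def scaling_plan(hourly_counts, capacity_per_node):
    """Per-hour node counts derived from the seasonal profile."""
    return [nodes_needed(load, capacity_per_node)
            for load in hourly_seasonal_profile(hourly_counts)]


# Two hypothetical days: quiet nights, busy office hours (09:00-17:59).
day1 = [1000 if 9 <= h < 18 else 100 for h in range(24)]
day2 = [1200 if 9 <= h < 18 else 100 for h in range(24)]
plan = scaling_plan(day1 + day2, capacity_per_node=300)
print(plan[3], plan[10])  # 1 5
```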

System architects also need to consider the geographical distribution of servers [90,91], because the response time of deployed services is often related to the network distance and bandwidth between servers and clients. In general, geographically closer services provide better performance with quicker response times [92,93]. This rule applies to most web-based applications but is even more critical for data-intensive GIServices because of the volume of data that must be transmitted.
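A rough way to exploit geographic proximity is to route each client to the replica with the smallest great-circle (haversine) distance. The replica names and locations are hypothetical, and geodesic distance is only a proxy: actual network distance, bandwidth, and latency can differ, so production systems usually measure them directly.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lon1, lat1, lon2, lat2):
    """Great-circle distance between two WGS84 points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_replica(client, replicas):
    """client: (lon, lat); replicas: {name: (lon, lat)}. Returns the closest replica name."""
    return min(replicas, key=lambda name: haversine_km(*client, *replicas[name]))


# Hypothetical replica sites of one GIService.
replicas = {"beijing": (116.4, 39.9), "frankfurt": (8.7, 50.1), "virginia": (-77.5, 39.0)}
print(nearest_replica((114.3, 30.6), replicas))   # beijing (client in Wuhan)
print(nearest_replica((2.35, 48.86), replicas))   # frankfurt (client in Paris)
```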

Algorithms have been proposed to improve service efficiency in theory; however, technological innovations must also be introduced in practice. For example, user concurrency prediction suggests how to adjust the server-side computing nodes, but configuring these nodes manually, as is traditionally done, can be very time-consuming. New engineering techniques, such as cloud computing, provide elastic mechanisms for dynamically setting up and adjusting servers [9]. Future challenges may lie in tackling real-world applications with emerging information technologies.

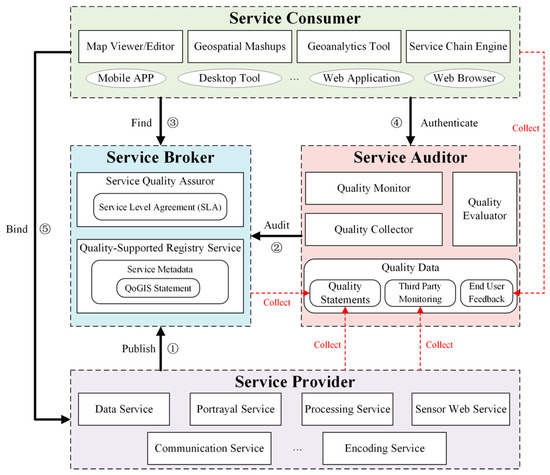

4.3.3. QoGIS-Aware GIService Framework

In order to better support GIService discovery and interaction, establishing a quality-aware GIService framework should be an important research agenda. In the basic web service architecture model [93] of SOA, interoperability is achieved through the collaboration of three types of software agents, or so-called associates (service consumer, service provider, and discovery broker), via three operations among them: publish, find, and bind. The discovery broker bridges service consumers and providers by maintaining a service catalogue for service metadata management and querying. Service providers publish their service metadata to the discovery broker; service consumers then find the services they need through the broker and bind with the service providers accordingly. This basic architecture is widely used in SOA, and Universal Description Discovery and Integration (UDDI) and the e-business Registry Information Model (ebRIM) are widely recognized standards for service discovery. By adopting this architecture, OGC proposes its OpenGIS Web Services (OWS) Service Framework (OSF) in the OGC Reference Model (ORM) [94] for GIService interoperability. OGC also defines the Catalogue Service for the Web (CSW) standard for GIService discovery. However, quality description, auditing, and assurance are missing from this architecture, so service quality and its reliability cannot be guaranteed.

To address the aforementioned issues, we propose a QoGIS-aware GIService framework that extends the OSF framework, as illustrated in Figure 7. Drawing on the OASIS web service quality model [95], we introduce quality auditing, authentication, and assurance mechanisms into the framework by adding one associate, a service auditor, and by combining the roles of quality assurer and discovery broker into an extended service broker. The service auditor is responsible for quality auditing and authentication. It collects, monitors, and evaluates quality information from service providers, built-in or third-party monitors, and user feedback. When a service provider registers a new GIService with a quality statement in the service broker, the auditing process is triggered. Because quality is mutable, the auditor also updates its quality evaluations periodically. After finding matching services in the broker, the consumer can verify their quality statements and obtain neutral third-party quality authentication for service selection. Hence, quality description and auditing are introduced into the service discovery process, and the interaction mode changes from “publish-find-bind” to “publish-audit-find-authenticate-bind”. Additionally, the extended service broker provides runtime assurance during interaction through a service level agreement (SLA) negotiated between the service provider and the consumer, on which compensation and penalty mechanisms can be established.
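The “publish-audit-find-authenticate-bind” interaction can be sketched in a few classes: publishing triggers an audit that measures the service independently, the broker returns only services whose audited quality is consistent with the provider's claim, and binding is refused for unauthenticated services. All class names, tolerances, and measurement values are hypothetical; periodic re-auditing and SLA-based runtime assurance are omitted.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class QualityStatement:
    response_time_s: float
    availability: float


@dataclass
class ServiceRecord:
    name: str
    endpoint: str
    claimed: QualityStatement                   # provider's self-declared quality
    audited: Optional[QualityStatement] = None  # filled in by the auditor


class ServiceAuditor:
    """Hypothetical auditor: measures a service independently and checks the claim."""

    def __init__(self, measure: Callable[[str], QualityStatement],
                 rt_tolerance: float = 0.1, av_tolerance: float = 0.01):
        self._measure = measure  # e.g. backed by third-party monitors
        self.rt_tolerance = rt_tolerance
        self.av_tolerance = av_tolerance

    def audit(self, record: ServiceRecord) -> ServiceRecord:
        record.audited = self._measure(record.endpoint)
        return record

    def authenticate(self, record: ServiceRecord) -> bool:
        """Accept the claim only if the measured quality is close to what was declared."""
        a, c = record.audited, record.claimed
        if a is None:
            return False
        return (a.response_time_s <= c.response_time_s * (1 + self.rt_tolerance)
                and a.availability >= c.availability - self.av_tolerance)


class ExtendedBroker:
    """Catalogue plus auditing: publish -> audit -> find -> authenticate -> bind."""

    def __init__(self, auditor: ServiceAuditor):
        self._auditor = auditor
        self._catalogue: Dict[str, ServiceRecord] = {}

    def publish(self, record: ServiceRecord) -> None:
        self._catalogue[record.name] = self._auditor.audit(record)  # audit on registration

    def find(self, max_response_s: float, min_availability: float) -> List[str]:
        """Names of authenticated services whose audited quality meets the request."""
        return sorted(
            r.name for r in self._catalogue.values()
            if self._auditor.authenticate(r)
            and r.audited.response_time_s <= max_response_s
            and r.audited.availability >= min_availability
        )

    def bind(self, name: str) -> str:
        record = self._catalogue[name]
        if not self._auditor.authenticate(record):
            raise PermissionError(f"{name}: quality statement not authenticated")
        return record.endpoint


# Demo with stubbed measurements instead of live monitoring.
measured = {
    "https://a.example/wms": QualityStatement(response_time_s=0.8, availability=0.995),
    "https://b.example/wms": QualityStatement(response_time_s=3.0, availability=0.90),
}
broker = ExtendedBroker(ServiceAuditor(measured.__getitem__))
broker.publish(ServiceRecord("wms-a", "https://a.example/wms", QualityStatement(0.75, 0.99)))
broker.publish(ServiceRecord("wms-b", "https://b.example/wms", QualityStatement(0.5, 0.999)))  # overstated
print(broker.find(max_response_s=1.0, min_availability=0.95))  # ['wms-a']
```

The provider of `wms-b` overstates its quality, so it never passes authentication and is neither returned by `find` nor bindable.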

Figure 7.

QoGIS-aware GIService framework.

The three categories of the QoGIS conceptual framework need to be investigated thoroughly to implement such a QoGIS-aware framework. In modeling, a quality-supported metadata model is needed to incorporate quality descriptions into basic service metadata, and a comprehensive quality evaluation method is required to synthesize all quality facets from multiple quality sources. In acquisition, quality data collection and mining still face the challenges of irrelevant and fraudulent data, timeliness, and monitoring burden. In applications, quality-enabled search algorithms aware of user preferences, quality-supporting registry services, and search API standards are needed for both atomic GIService search and GIService chaining applications. The standards and models for quality auditing, authentication, and assurance still need to be elaborated.

5. Conclusions

This paper presents a bibliographic review of QoGIS research. We adopt topic clustering analysis to compare the research focuses of QoGIS and QoS, and propose a QoGIS conceptual framework to interpret QoGIS research directions. This comparative analysis provides an evolutionary view of the overall research trends in QoGIS. A discussion of hot topics based on the proposed conceptual framework shows which important problems are likely to shape future research in the field.

QoGIS is related to QoS but also has its own uniqueness. First, QoGIS is influenced by QoS in many ways, as QoGIS research trails QoS research; thus, QoS serves as a reference for future QoGIS research. Second, QoGIS is unique given the nature of geospatial application requirements. Both the relevance and the differences help identify the hot research topics and potential challenges in the QoGIS field, as follows:

(1) In modeling, QoGIS focuses on quality metrics such as spatial data quality, HCI issues, geo-visualization, and geographic context, but may also need to study e-commerce-related quality issues, e.g., transactions and security. In addition to metrics, quality modeling needs sophisticated evaluation methods that consider the impacts of application purpose and end-users. Quality aggregation in GIService chains needs further investigation to address the propagation of GIService-specific quality factors under complicated data/control-flow structures.

(2) In acquisition, service providers, monitoring brokers, and user feedback all provide solutions for quality information acquisition, but they have different advantages and shortcomings. Quality information from service providers is easy to acquire but often involves trust issues. Monitoring brokers are more reliable but often create server-side pressure on the GIServices. User feedback reveals the level of end-user satisfaction and relieves the server pressure caused by monitoring, but often requires more mining techniques, such as recognition of spatial-temporal QoGIS fluctuation patterns [77]. In the future, combining different data sources may provide deeper and more comprehensive information about GIService quality. Commercialization of GIServices will create new demands, so GIService quality measurement and assessment must become more intelligent, with scalable methods for mining quality information from user comments, user profiles [96], or other user feedback [60]. Innovations in HCI, such as virtual geo-environments, augmented reality, and mixed reality, may present new challenges for user log mining and usability testing.

(3) In applications, QoGIS information can benefit end-users, developers, and system architects. QoGIS combined with semantic enhancement may yield promising solutions for helping end-users find desired GIServices among large numbers of similar GIServices. The quality of data and metadata in the spatial domain may benefit from innovative methods originating in the VGI and public participation domains [72]. Developers can improve usability based on user feedback and enhance service performance by designing strategies specifically fitted to spatial data storage and visualization requirements. System architects must also consider applying spatial-temporal access patterns and engineering methods derived from emerging information technologies, such as distributed load balancing and auto-scaling, to solve quality issues. Moreover, more research must be conducted to establish a QoGIS-aware GIService framework that ensures quality throughout the entire GIService discovery and interaction process.

Nevertheless, this work has some limitations in its search strategies, because not all of the literature related to the topics of “QoGIS” and “QoS” can be retrieved with the topic keywords used. The current search strategy in the WoS databases and most other academic databases still relies on basic keyword-level matching. Thus, we need to address the problem of literature completeness in the future using more semantically enhanced solutions, such as ontology-based searching or phrase semantic modeling. Additionally, the DCA method focuses more on topics in historical trajectories than on the most recent works, which limits the ability of citation-based DCA to capture recent work. In the future, we could also consider other indicators, such as the 180-day usage count provided by the WoS database, or the altmetrics [97] recently developed by scientometric researchers using mention data from social media.

Author Contributions

Conceptualization: Z.G., H.W. and K.H.; methodology: K.H. and Z.G.; formal analysis: K.H. and Z.G.; writing—original draft preparation: K.H. and Z.G.; writing—review and editing: Z.G., X.C., K.H., and S.C.M.; visualization: K.H. and Z.G.; supervision: H.W.; project administration: Z.G. and H.W.

Funding

This paper is supported by National Natural Science Foundation of China (No. 41501434 and No. 41371372) and National Key R&D Program of China (No. 2017YFB0503704 and No. 2018YFC0809806).

Acknowledgments

Thanks to Honghan Zheng and Dehua Peng for their help with experiments and manuscript formatting.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Appendix A. QoGIS Search Statement

(TS = (geographic spatial service OR OGC web service OR web map service OR location based service OR WebGIS) AND TS = (quality OR performance) AND SU = (geography OR remote sensing)) AND LANGUAGE: (English)

Indexes = SCI-EXPANDED, SSCI Timespan = 1985-2018

Note: TS stands for Topic, SU stands for Research Area

Appendix B. QoS Search Statement

(TS = (QoS AND web service)) AND LANGUAGE: (English)

Indexes = SCI-EXPANDED, SSCI Timespan = 1985-2018

Note: TS stands for Topic, SU stands for Research Area

Appendix C. QoGIS and QoS Topics

Table A1.

QoGIS and QoS Topics Generated by Topic Clustering Analysis.

| QoGIS Topics | QoS Topics | Category |
|---|---|---|
| Quality propagation in service chain; Spatial data quality metrics; Web map interaction and usability; Geo-visualization requirements; Data compression in mobile environments; VGI spatial data quality; Virtual geo-environment visualization quality; GIService quality semantic | QoS metrics; Reputation and trust issues; Availability in Mobile network; Security and privacy; Online video QoS; Fuzzy theory for quality evaluation; QoS aggregation for service composition | Modeling |
| OGC service quality monitoring; Global distributed monitoring; User grading on geospatial data quality; User behavior tracking | QoS monitor and prediction; QoS queueing theory; End-user quality; Quality of experiences; Caching strategy | Acquisition |
| Tiling cache strategy; Quality based geospatial resource discovery; Geoportal load balancing; Cloud-based scalable geospatial services; Geospatial service chain modeling; Map service recommendation; Optimization of big geospatial data access | QoS semantic; QoS enhancement; QoS based discovery; Scalability improvements; Service level agreements; QoS based service composition; Clonal service selection; Web service recommendation | Application |

Appendix D. Catalogue of QoGIS Quality Metric

Table A2.

Catalogue of QoGIS Quality Metric with GIService Examples with Good or Bad Quality.

| QoGIS Factor | QoGIS Metric | Examples (GIServices with Exemplary “Good” vs. “Bad” Quality) |
|---|---|---|
| Runtime QoS | scalability | WMTS: auto-scales to serve concurrent access efficiently in both time and computing resources vs. fails to adapt elastically to ever-changing concurrent access |
| | response time | WMS: returns the capabilities document or map layers within one second vs. layer loading times out |
| | throughput | WPS: able to process a 1 GB image or thousands of requests per second vs. 2 KB per second or access denied |
| | reliability | WMS: 0.001% failure rate over one month of operation vs. 40% failure rate |
| | availability | WMS: available 99.9% of the time over 24 hours vs. unavailable 50% of the time over 24 hours |
| | robustness | WPS: accepts fuzzy input through an error-tolerant mechanism vs. accepts only strictly formatted input parameters |
| Spatial data and metadata quality | completeness | WFS: all geospatial features are contained in the dataset vs. 20% of features are missing |
| | logical consistency | Navigation service: over 99.999% of the spatial relations in the road network are correct vs. most spatial relations in the road network are wrong |
| | positional accuracy | Navigation service: 0.1 m positioning error for pedestrian navigation on a street vs. 200 m positioning error |
| | thematic accuracy | WFS: the land-cover data has high classification accuracy and fulfils user needs vs. low classification accuracy that does not meet user requirements |
| | temporal accuracy | Navigation service: the road network is updated daily vs. once per year |
| | conformance | WFS: the metadata format complies with OGC and ISO metadata standards vs. the metadata is not standard-compliant |
| | timeliness | WCS: the metadata description is kept consistent with changes to the corresponding coverages vs. the metadata never changes when the coverage changes |
| Geo-visualization quality | immersion | Virtual geo-environment such as Google Earth VR: the visualization is life-like vs. users find it very different from the real world |
| | intensity | Virtual geo-environment: close to real-world experience vs. far from real-world experience |
| | illustration | Virtual geo-environment: annotations help users understand the virtual environment efficiently vs. no readily comprehensible annotations or reminders |
| | intuition | Virtual geo-environment: the visualization conforms to users' cognitive habits vs. the visualization is hard to recognize from daily experience |
| | quantity of information | WMS: an appropriate number of features on the map with distinguishable object sizes vs. too many features that are too small to perceive |
| | aesthetics | WMS: attractive map styles and symbols vs. poor color schemes |
| | spatial cognition | Navigation service: well designed to help users locate places vs. hard to find a location and the route to it |
| HCI quality | usability | Geoportal such as Data.gov: the GUI and interaction process are easy for novices vs. the system is hard to use |
| | readability | Geoportal: well-designed layout for grasping information vs. poor layout |
| | effectiveness | Geoportal: the expected geospatial resources are easy to find vs. hard to find |
| | efficiency | Geoportal: interaction is smooth and time-efficient vs. querying and query-result presentation are very slow |
| | satisfaction | Geoportal: a well-designed GUI and simple interaction process make discovery very convenient vs. poor GUI design that is difficult to use |
| Transaction support QoS | ACID (atomicity, consistency, isolation, durability) | WFS supporting transaction operations: ACID properties are ensured during the creation, update, and deletion of a feature vs. inconsistencies occur during updates |
| Configuration and management QoS | regulatory | CSW: the operating status of the service can be monitored and controlled vs. the operating status is hard to trace and control |
| | configuration standard compliance | WMS: supports the OGC map style configuration standard for configuring and changing map styles vs. does not comply with configuration standards |
| | exception handling | WPS: has an error diagnosis and handling mechanism that can recover service execution efficiently vs. rough or no exception descriptions in log files for problem analysis |
| Security QoS | authentication | Geoportal: user authentication for private data access vs. no authentication |
| | authorization | Geoportal: an authorization mechanism grants different users different rights to manipulate data vs. no authorization |
| | confidentiality | Geoportal: the data are protected from unauthorized users and hackers vs. anyone can access the data |
| | accountability | Geoportal: the system can take actions toward users based on their behaviors and roles vs. no actions are taken in response to access behaviors |
| | traceability and auditability | Geoportal: user access is traced and carefully audited vs. no tracing or auditing of user access |
| | data encryption | Geo-data transmission service: strong encryption prevents the data from being decrypted by unauthorized parties during network transmission vs. the encryption is easy to break |
| | non-repudiation | Geoportal: whoever accessed the data or invoked an online geoprocessing task cannot deny having done so vs. the originator of the access cannot be identified |
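The runtime QoS metrics in Table A2 (availability, reliability, response time, throughput) are usually estimated from monitoring records of a service endpoint. The Python below is a minimal sketch of one common way to do this, not a method prescribed by this review or by any QoGIS standard. It assumes a hypothetical log format: a list of heartbeat booleans sampled at a fixed interval (for availability) and a list of `Request` records (for the other metrics). The names `Request`, `availability`, `allowed_downtime_s`, and `request_metrics` are illustrative only. As a worked example of the availability row, a 99.9% target over 24 hours tolerates at most (1 - 0.999) × 86,400 s = 86.4 s of downtime.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class Request:
    """One logged request to a GIService (hypothetical log format)."""
    succeeded: bool         # True if a valid response was returned
    response_time_s: float  # seconds until the response arrived
    bytes_received: int     # payload size of the response


def availability(heartbeats: list[bool]) -> float:
    """Share of fixed-interval heartbeat probes that found the service up."""
    if not heartbeats:
        raise ValueError("no heartbeat probes")
    return sum(heartbeats) / len(heartbeats)


def allowed_downtime_s(target: float, window_s: float) -> float:
    """Maximum downtime (seconds) compatible with an availability target."""
    return (1.0 - target) * window_s


def request_metrics(log: list[Request], window_s: float) -> dict[str, float]:
    """Reliability, response time and throughput over an observation window."""
    if not log or window_s <= 0:
        raise ValueError("need a non-empty log and a positive window")
    ok = [r for r in log if r.succeeded]
    times = sorted(r.response_time_s for r in ok)
    if times:
        # Nearest-rank 95th percentile of successful response times.
        p95 = times[math.ceil(0.95 * len(times)) - 1]
        avg = mean(times)
    else:
        p95 = avg = float("nan")
    return {
        # Reliability expressed as the share of failed requests.
        "failure_rate": 1 - len(ok) / len(log),
        "mean_response_time_s": avg,
        "p95_response_time_s": p95,
        # Throughput counts only successfully served requests and bytes.
        "throughput_req_per_s": len(ok) / window_s,
        "throughput_bytes_per_s": sum(r.bytes_received for r in ok) / window_s,
    }


# Usage: four requests observed over a 2-second window, one of them failed.
log = [Request(True, 0.2, 1000), Request(True, 0.4, 3000),
       Request(False, 5.0, 0), Request(True, 0.6, 2000)]
metrics = request_metrics(log, window_s=2.0)
```

Heartbeat sampling is used for availability because it measures uptime independently of how many users happen to send requests, whereas the failure rate is computed over real requests. Other definitions (e.g., time-weighted uptime from outage intervals, or different percentile conventions) are equally defensible.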

References

- Yang, C.; Goodchild, M.; Huang, Q.; Nebert, D.; Raskin, R.; Bambacus, M.; Xu, Y.; Fay, D. Spatial cloud computing: How can the geospatial sciences use and help shape cloud computing? Int. J. Digit. Earth 2011, 4, 305–329. [Google Scholar] [CrossRef]

- Tao, C.; Wang, Q.K. GIServices-based 3D Internet GIS: GeoEye 3D. Acta Geod. Cartogr. Sin. 2002, 31, 17–21. [Google Scholar]

- Peng, Q.; You, L.; Dong, N. A location-aware GIServices quality prediction model via collaborative filtering. Int. J. Digit. Earth 2017, 11, 897–972. [Google Scholar] [CrossRef]

- Shen, S.; Zhang, T.; Wu, H.; Liu, Z. A Catalogue Service for Internet GIServices Supporting Active Service Evaluation and Real-Time Quality Monitoring. Trans. GIS 2012, 16, 745–761. [Google Scholar] [CrossRef]

- Yue, P.; Baumann, P.; Bugbee, K.; Jiang, L. Towards intelligent GIServices. Earth Sci. Inform. 2015, 8, 463–481. [Google Scholar] [CrossRef]

- Janowicz, K.; Schade, S.; Bröring, A.; Keßler, C.; Maué, P.; Stasch, C. Semantic enablement for spatial data infrastructures. Trans. GIS 2010, 14, 111–129. [Google Scholar] [CrossRef]

- Gui, Z.; Yang, C.; Xia, J.; Liu, K.; Xu, C.; Li, J.; Lostritto, P. A performance, semantic and service quality-enhanced distributed search engine for improving geospatial resource discovery. Int. J. Geogr. Inf. Sci. 2013, 27, 1109–1132. [Google Scholar] [CrossRef]

- Li, R.; Guo, R.; Xu, Z.; Feng, W. A prefetching model based on access popularity for geospatial data in a cluster-based caching system. Int. J. Geogr. Inf. Sci. 2012, 26, 1831–1844. [Google Scholar] [CrossRef]

- Xia, J.; Yang, C.; Liu, K.; Gui, Z.; Li, Z.; Huang, Q.; Li, R. Adopting Cloud Computing to Optimize Spatial Web Portals for Better Performance to Support Digital Earth and Other Global Geospatial Initiatives. Int. J. Digit. Earth 2015, 8, 451–475. [Google Scholar] [CrossRef]

- Kim, J.W.; Park, S.S.; Kim, C.S.; Lee, Y. The efficient web-based mobile GIS service system through reduction of digital map. In Proceedings of the International Conference on Computational Science and Its Applications, Assisi, Italy, 14–17 May 2004; Springer: Berlin, Germany, 2004. [Google Scholar]

- Kim, D.H.; Kim, M.S. Web GIS service component based on open environment. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24–28 June 2002; Volume 6, pp. 3346–3348. [Google Scholar]

- Blower, J.D. GIS in the cloud: Implementing a web map service on Google App Engine. In Proceedings of the 1st International Conference and Exhibition on Computing for Geospatial Research & Application, Washington, DC, USA, 21–23 June 2010; Volume 34. [Google Scholar]

- Rolfhamer, P.; Grabowska, K.; Ekdahl, K. Implementing a public web based GIS service for feedback of surveillance data on communicable diseases in Sweden. BMC Infect. Dis. 2004, 4, 1. [Google Scholar] [CrossRef] [PubMed]

- ISO 19157. Geographic Information: Data Quality. 2013. Available online: https://www.iso.org/standard/32575.html (accessed on 4 January 2019).

- ISO 19115. Geographic Information: Metadata. 2003. Available online: https://www.iso.org/standard/26020.html (accessed on 4 January 2019).

- Federal Geographic Data Committee (FGDC). Geospatial Metadata. Available online: https://www.fgdc.gov/metadata (accessed on 4 January 2019).

- Federal Geographic Data Committee (FGDC). Geospatial Standards. Available online: https://www.fgdc.gov/standards (accessed on 4 January 2019).

- Open Geospatial Consortium (OGC). Quality of Service and Experience Domain Working Group (QoSE DWG). Available online: https://external.opengeospatial.org/twiki_public/QualityOfService/WebHome (accessed on 4 January 2019).

- Infrastructure for Spatial Information in Europe (INSPIRE). Directives. Available online: http://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32009R0976&from=EN (accessed on 4 January 2019).

- Wu, H.; Zhang, H. QoGIS: Concept and research framework. Geomat. Inf. Sci. Wuhan Univ. 2007, 32, 385–388. [Google Scholar]

- Yang, C.; Wong, D.W.; Yang, R.; Kafatos, M.; Li, Q. Performance-improving techniques in web-based GIS. Int. J. Geogr. Inf. Sci. 2005, 19, 319–342. [Google Scholar] [CrossRef]

- Small, H. Co-citation in the scientific literature: A new measure of the relationship between two documents. J. Am. Soc. Inf. Sci. 1973, 24, 265–269. [Google Scholar] [CrossRef]

- Wei, F.; Grubesic, T.H.; Bishop, B.W. Exploring the GIS Knowledge Domain Using CiteSpace. Prof. Geogr. 2015, 67, 374–394. [Google Scholar] [CrossRef]

- Biljecki, F. A scientometric analysis of selected GIScience journals. Int. J. Geogr. Inf. Sci. 2016, 30, 1302–1335. [Google Scholar] [CrossRef]

- Chen, C. CiteSpace II: Detecting and visualizing emerging trends and transient patterns in scientific literature. J. Am. Soc. Inf. Sci. Technol. 2006, 57, 359–377. [Google Scholar] [CrossRef]

- Nagarajan, R.; Kurose, J.F.; Towsley, D. Local Allocation of End-to-End Quality-of-Service in High-Speed Networks. In Modelling and Performance Evaluation of ATM Technology, Proceedings of the IFIP TC6 Task Group/WG6.4 International Workshop on Performance of Communication Systems, Martinique, French Caribbean Island, 25–27 January 1993; Elsevier: Oxford, UK, 1993. [Google Scholar]

- Wu, H.; Zhang, H.; Liu, X.; Sun, X. Adaptive Architecture of Geospatial Information Service over the Internet with QOGIS Embeded. In Proceedings of the International Society of Photogrammetry and Remote Sensing (ISPRS) Workshop on Service and Application of Spatial Data Infrastructure XXXVI (4/W6), Hangzhou, China, 14–16 October 2006; pp. 53–57. [Google Scholar]

- Lee, C.; Lehoczky, J.; Rajkumar, R.; Siewiorek, D. On quality of service optimization with discrete QoS options. In Proceedings of the Fifth IEEE Real-Time Technology and Applications Symposium, Vancouver, BC, Canada, 4 June 1999; pp. 276–286. [Google Scholar]

- Satyanarayanan, M. Pervasive computing: Vision and challenges. IEEE Pers. Commun. 2001, 8, 10–17. [Google Scholar] [CrossRef]

- Çöltekin, A.; Heil, B.; Garlandini, S.; Fabrikant, S.I. Evaluating the effectiveness of interactive map interface designs: A case study integrating usability metrics with eye-movement analysis. Cartogr. Geogr. Inf. Sci. 2009, 36, 5–17. [Google Scholar] [CrossRef]

- Wei, Z.K.; Oh, Y.H.; Lee, J.D.; Kim, J.H.; Park, D.S.; Lee, Y.G.; Bae, H.Y. Efficient spatial data transmission in Web-based GIS. In Proceedings of the 2nd International Workshop on Web Information and Data Management, Kansas City, MO, USA, 2–6 November 1999; pp. 38–42. [Google Scholar]

- Smith, J.M.; Traw, C.B.S. Giving applications access to Gb/s networking. IEEE Netw. 1993, 7, 44–52. [Google Scholar] [CrossRef]

- Xia, J.; Yang, C.; Li, Q. Using spatiotemporal patterns to optimize Earth Observation Big Data access: Novel approaches of indexing, service modeling and cloud computing. Comput. Environ. Urban Syst. 2018, 72, 191–203. [Google Scholar] [CrossRef]

- Wang, Z. Internet QoS: Architectures and Mechanisms for Quality of Service; Morgan Kaufmann: Burlington, MA, USA, 2001. [Google Scholar]

- Li, D.; Zhang, J.; Wu, H. Spatial data quality and beyond. Int. J. Geogr. Inf. Sci. 2012, 26, 2277–2290. [Google Scholar] [CrossRef]

- Huang, M.; Maidment, D.R.; Tian, Y. Using SOA and RIAs for water data discovery and retrieval. Environ. Modell. Softw. 2011, 26, 1309–1324. [Google Scholar] [CrossRef]

- Brown, M.; Sharples, S.; Harding, J.; Parker, C.J.; Bearman, N.; Maguire, M.; Forrest, D.; Haklay, M.; Jackson, M. Usability of geographic information: Current challenges and future directions. Appl. Ergon. 2013, 44, 855–865. [Google Scholar] [CrossRef] [PubMed]

- Feng, J.; Liu, Y. Intelligent context-aware and adaptive interface for mobile LBS. Comput. Intell. Neurosci. 2015, 10, 489793. [Google Scholar] [CrossRef] [PubMed]

- Liu, Z.; Pierce, M.E.; Fox, G.C.; Devadasan, N. Implementing a caching and tiling map server: A web 2.0 case study. In Proceedings of the CTS 2007 IEEE International Symposium on Collaborative Technologies and Systems, Orlando, FL, USA, 25 May 2007; pp. 247–256. [Google Scholar]

- Jutila, U.; Koponen, M.; Ranta-aho, M.; Holder, P.; Ubhi, D.; Brou, C.; Aronsheim-grotsch, J.; Hall, J.; Smirnow, M.; Tschichholz, M. A Common Framework for QoS/Network Performance in a Multiprovider Environment; Project P806-GI; EURESCOM: Heidelberg, Germany, 1999. [Google Scholar]

- Wu, H.; Li, Z.; Zhang, H.; Yang, C.; Shen, S. Monitoring and evaluating the quality of Web Map Service resources for optimizing map composition over the internet to support decision making. Comput. Geosci. 2011, 37, 485–494. [Google Scholar] [CrossRef]

- Ran, S. A model for web services discovery with QoS. ACM SIGecom Exch. 2003, 4, 1–10. [Google Scholar] [CrossRef]

- Heipke, C. Crowdsourcing geospatial data. ISPRS J. Photogramm. Remote Sens. 2010, 65, 550–557. [Google Scholar] [CrossRef]

- Huang, B.; Jiang, B.; Li, H. An integration of GIS, virtual reality and the Internet for visualization, analysis and exploration of spatial data. Int. J. Geogr. Inf. Sci. 2001, 15, 439–456. [Google Scholar] [CrossRef]

- Haklay, M.; Tobón, C. Usability evaluation and PPGIS: Towards a user-centred design approach. Int. J. Geogr. Inf. Sci. 2003, 17, 577–592. [Google Scholar] [CrossRef]

- Liu, B.; Li, D.; Xia, Y.; Ruan, J.; Xu, L.; Wu, H. Combinational Reasoning of Quantitative Fuzzy Topological Relations for Simple Fuzzy Regions. PLoS ONE 2015, 10, e0117379. [Google Scholar] [CrossRef] [PubMed]

- Fan, H.; Zipf, A.; Fu, Q.; Neis, P. Quality assessment for building footprints data on OpenStreetMap. Int. J. Geogr. Inf. Sci. 2014, 28, 700–719. [Google Scholar] [CrossRef]

- Jones, S.; Wilikens, M.; Morris, P.; Masera, M. Trust requirements in e-business. Commun. ACM 2000, 43, 81–87. [Google Scholar] [CrossRef]

- Zheng, L.; You, F. A fragile digital watermark used to verify the integrity of vector map. In Proceedings of the 2009 International Conference on E-Business and Information System Security, Wuhan, China, 23–24 May 2009; pp. 1–4. [Google Scholar]

- Gradinar, A.I.; Huck, J.; Coulton, P.; Salinas, L. Beyond the blandscape: Utilizing aesthetics in digital cartography. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 1383–1388. [Google Scholar]