Method of Constructing Point Generalization Constraints Based on the Cloud Platform

Abstract

1. Introduction

2. Impact of the Cloud Platform on Point Generalization Constraints

2.1. The Traditional Point Generalization Constraints

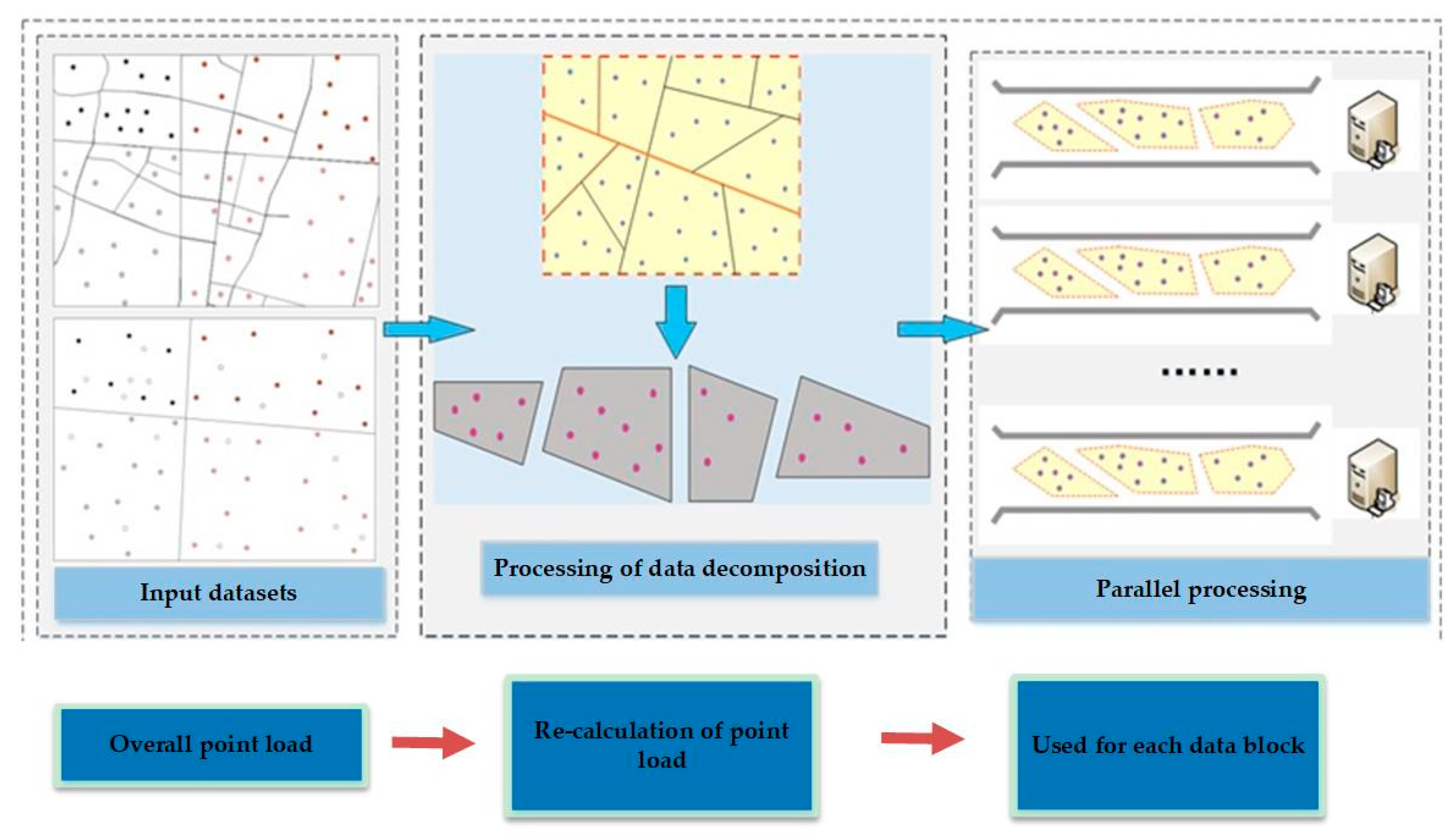

2.2. The Impact of Data Decomposition and Real-Time Visualization on the Point Generalization Constraints

3. Construction Method of Point Generalization Constraints Based on the Cloud Platform

3.1. Deficiencies of Töpfer’s Law in the Cloud Platform

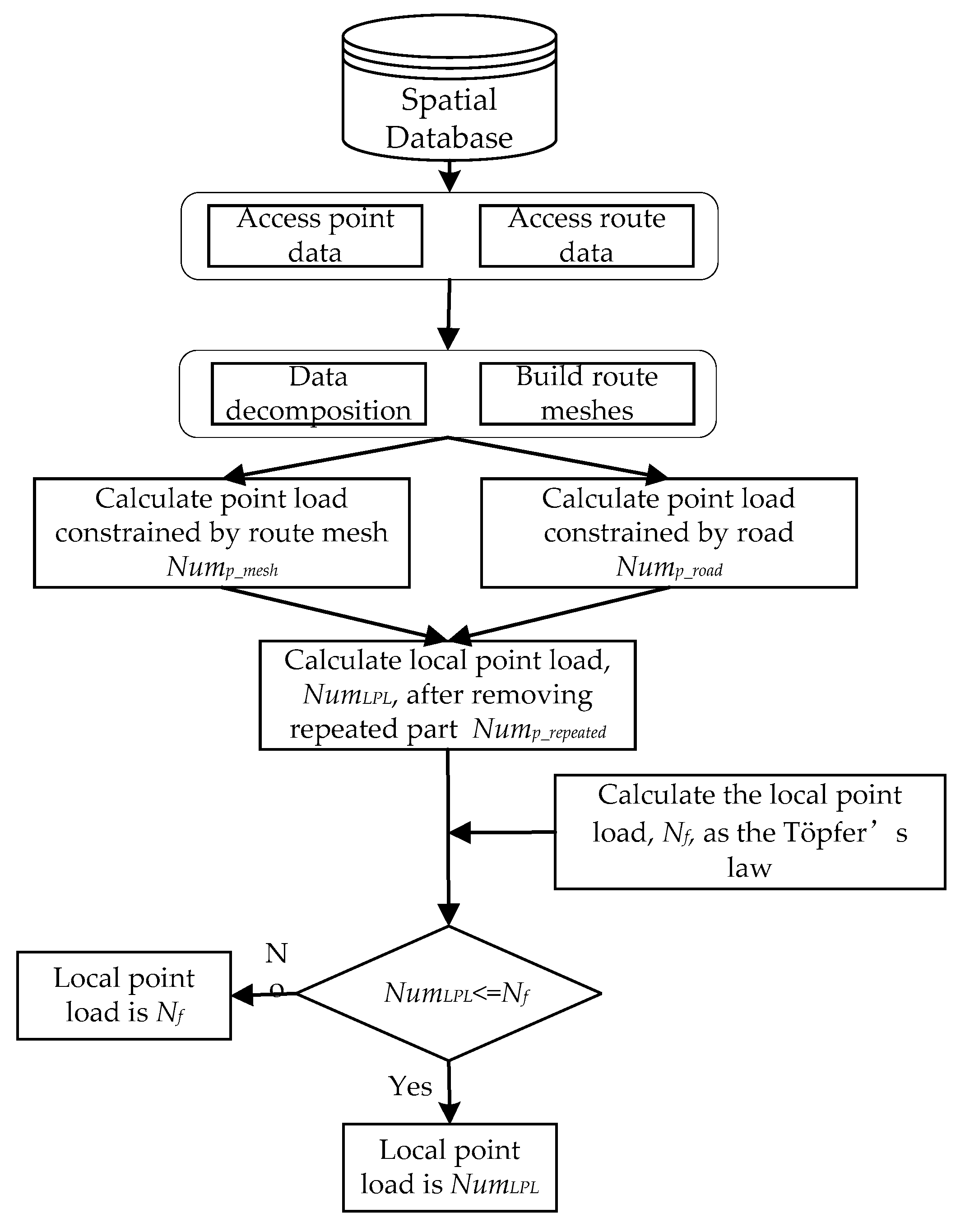
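As background for this section, the basic form of Töpfer's Radical Law estimates how many features to retain on a derived map from the number on the source map and the two scale denominators: n_f = n_a · sqrt(M_a / M_f). The sketch below illustrates only this basic form; the function and parameter names are hypothetical, and it is not the constraint construction method proposed in this paper.

```python
import math


def topfer_count(n_source, source_denominator, target_denominator):
    """Basic Radical Law: n_f = n_a * sqrt(M_a / M_f).

    n_source           -- number of features on the source map (n_a)
    source_denominator -- scale denominator of the source map (M_a)
    target_denominator -- scale denominator of the derived map (M_f)
    All names are illustrative, not taken from the paper.
    """
    if source_denominator <= 0 or target_denominator <= 0:
        raise ValueError("scale denominators must be positive")
    return n_source * math.sqrt(source_denominator / target_denominator)


# Going from 1:25,000 to 1:100,000 halves the feature count.
print(topfer_count(100, 25_000, 100_000))
```

Because the estimate depends only on the two scales and the global feature count, it says nothing about where the retained points should lie within an individual data block.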

3.2. The Capacity of Route Meshes—The Local Point Load

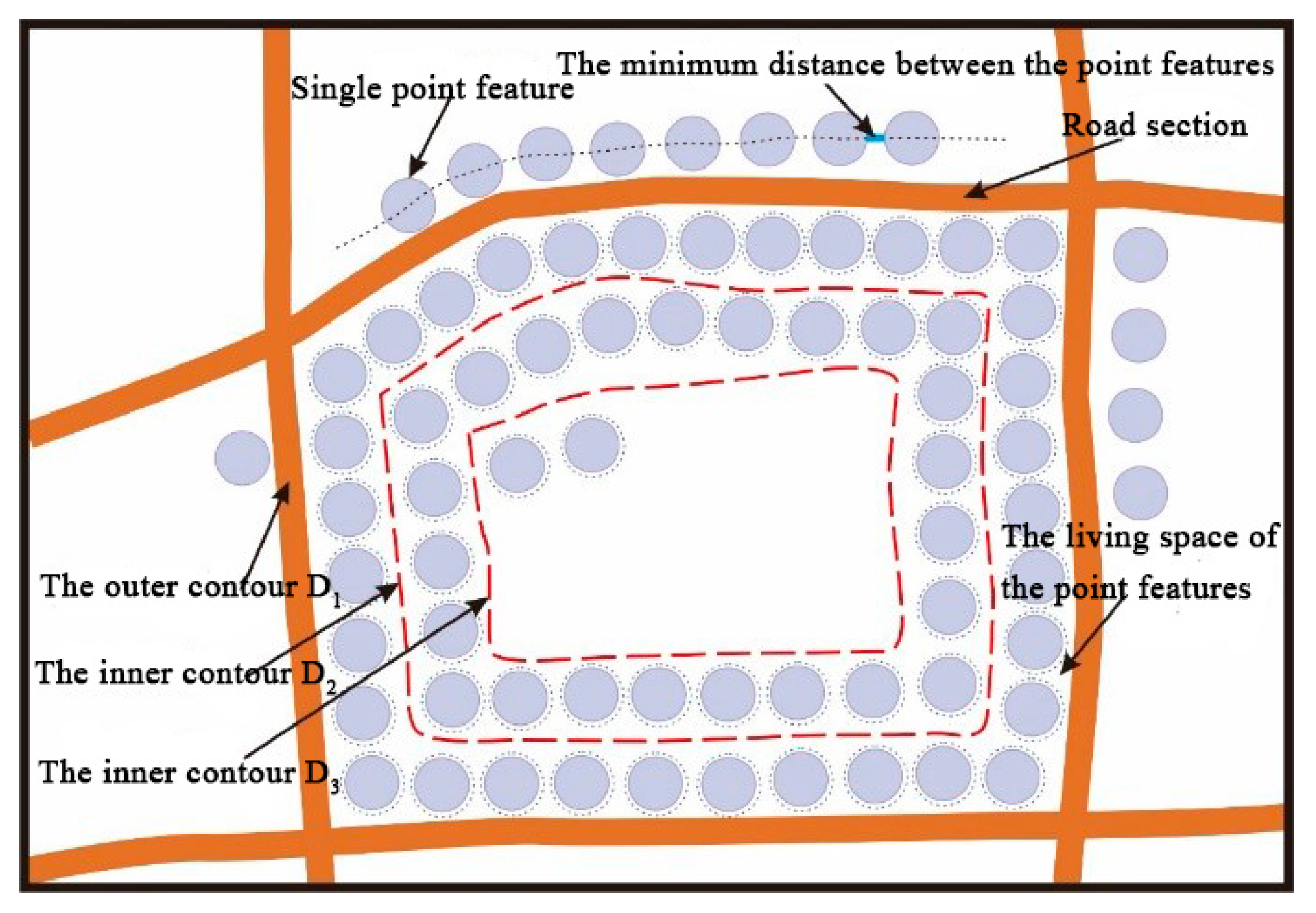

3.2.1. Point Load Calculation within the Mesh

3.2.2. Point Load Calculation Outside of the Mesh

3.2.3. The Local Point Load

4. Experiments and Discussion

4.1. Design of Experiment

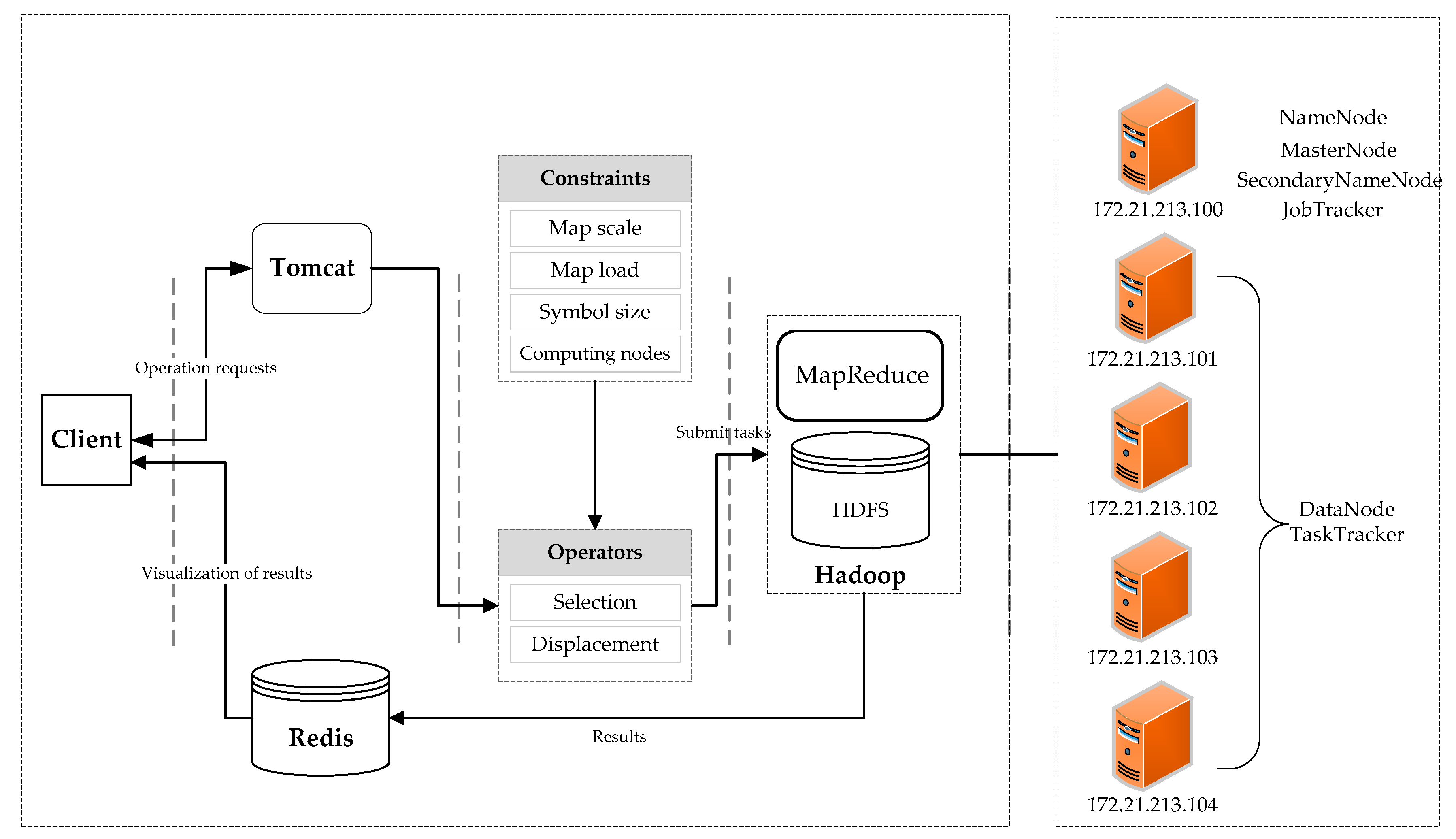

4.1.1. Experimental Platform

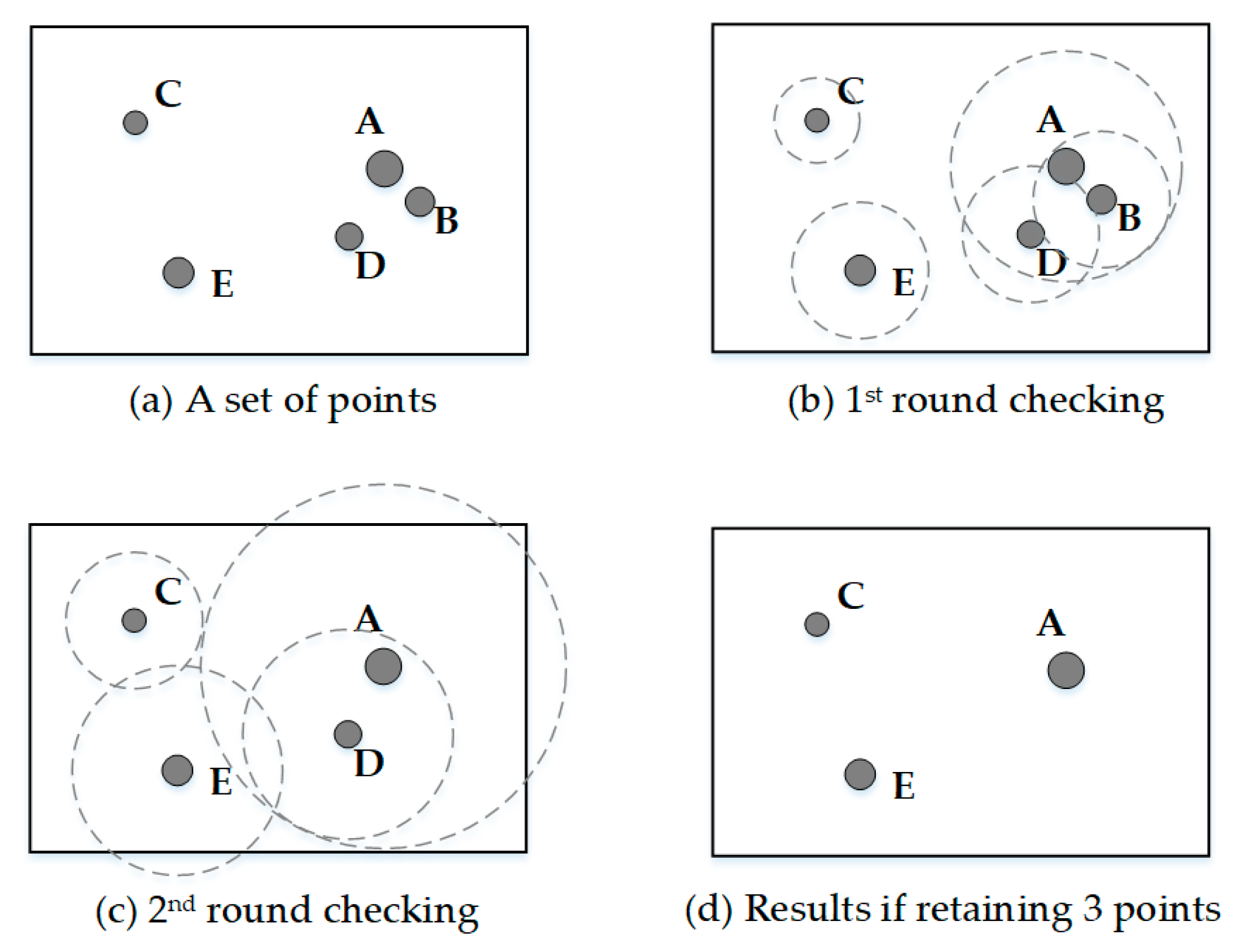

4.1.2. The Circle Growth Algorithm

4.1.3. Experimental Data

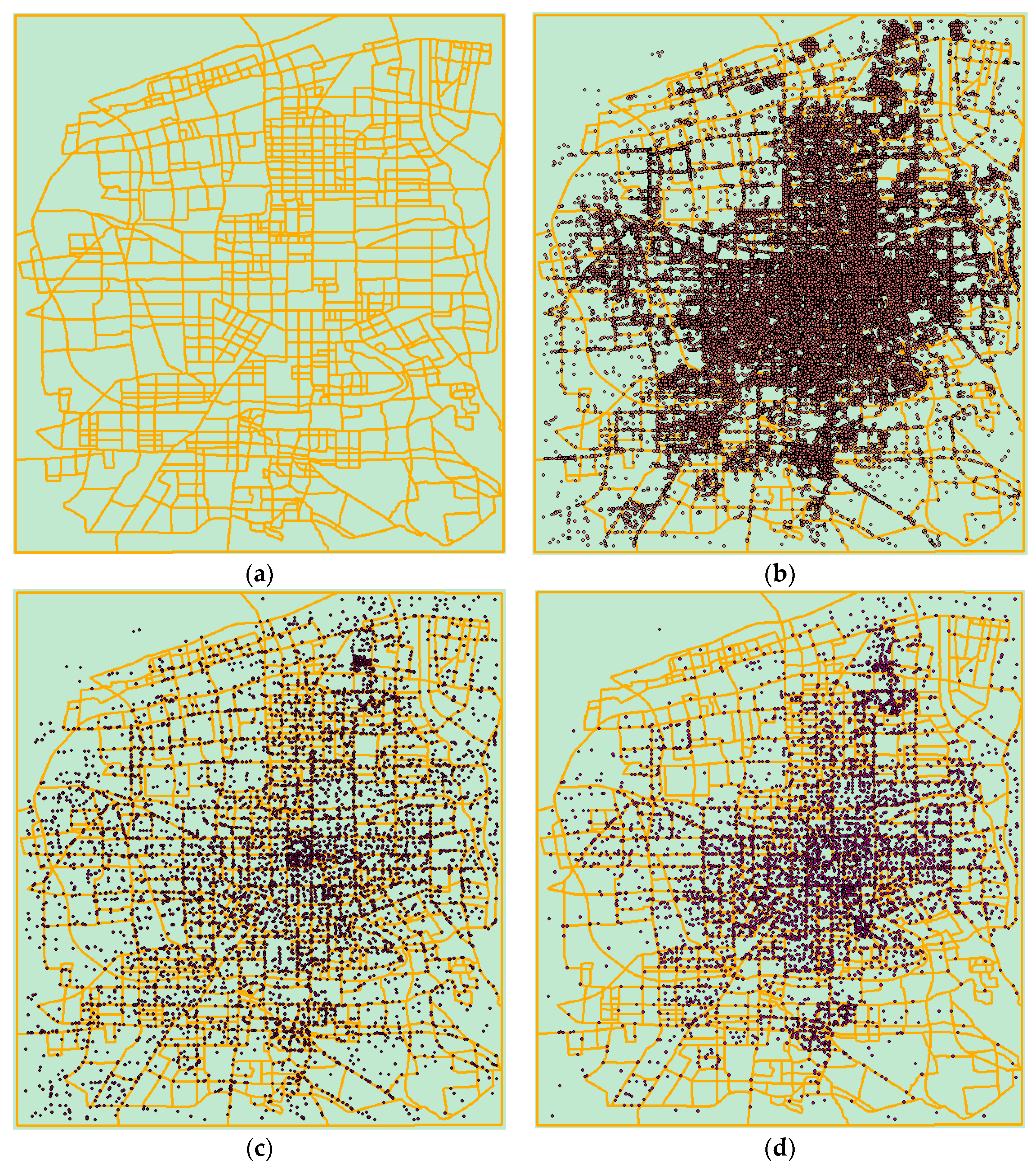

4.2. Experimental Results

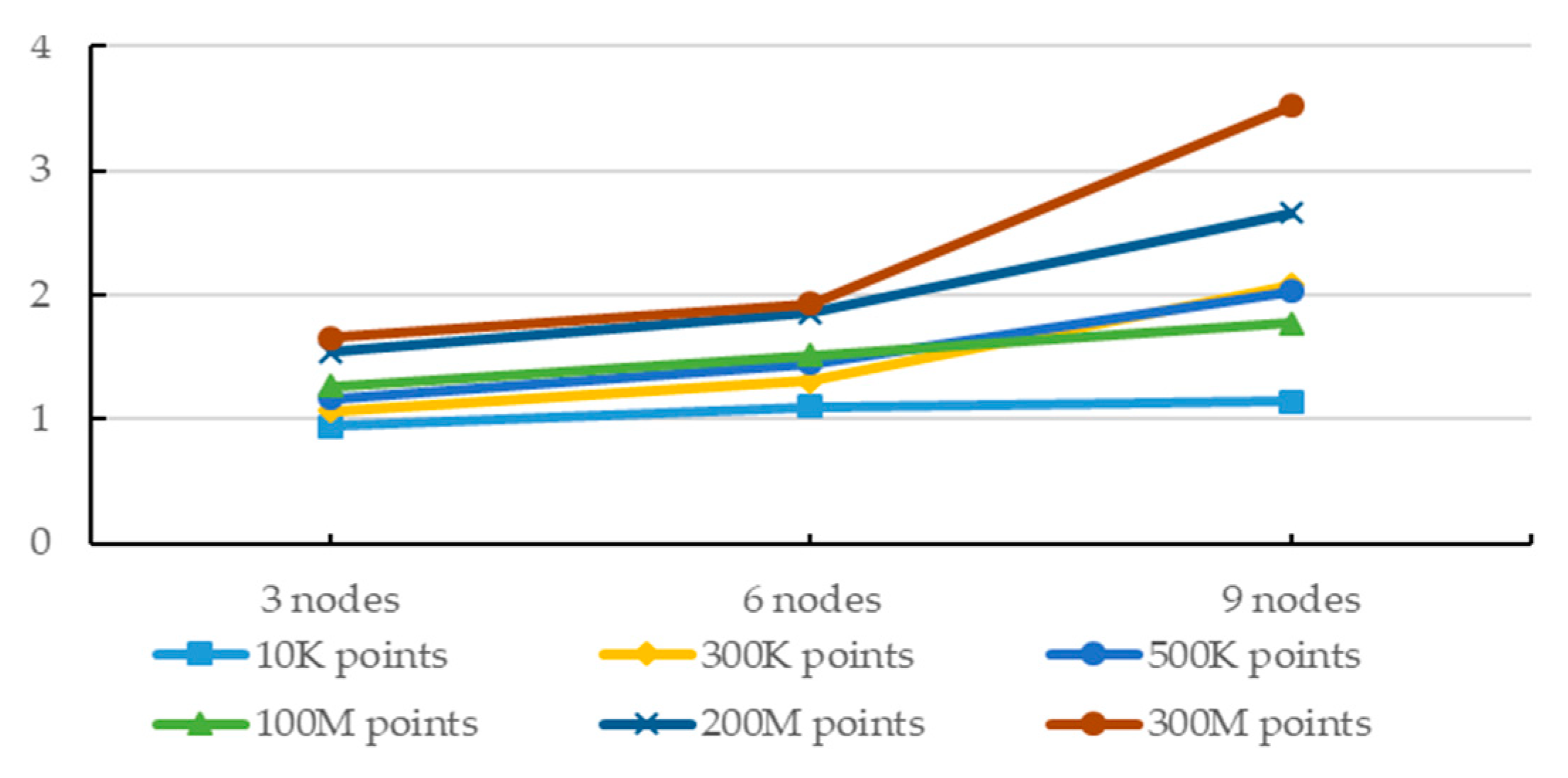

4.2.1. Results of the Algorithm Efficiency under the Control of the Constraints

4.2.2. Preservation of Spatial Pattern under the Control of the Constraints

4.3. Discussion

4.3.1. Efficiency Analysis

4.3.2. Quality Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Type of Constraints | Name of Constraints | Description |
|---|---|---|
| Geometric constraints | Minimum size | The minimum symbol size for point features at a given scale |
| Geometric constraints | Minimum distance of abutment | Graphic restrictions on point features at a certain scale |
| Topological constraints | Relations between points and routes | The spatial attachment between point features and route features |
| Topological constraints | Relations between points and route meshes | Route meshes contain the point group |
| Topological constraints | Adjacent point | The number of points in a group that has a contiguous relationship with a given point |
| Structural constraints | Reserving feature points | The number of important points reserved |
| Structural constraints | Number of clusters | Changes of points distribution characters |
| Structural constraints | Contour of clusters | Changes in the region of points distribution |
| Structural constraints | Point load | A measure of how many points can be held on the map |
| Structural constraints | Regional absolute density | Parameters describing point group metrics information |
| Structural constraints | Regional relative density | The point density changes after point generalization |

| Datasets | The Number of Features | Data Volume |
|---|---|---|
| Point data of Xinjiekou Nanjing | 12,006 | 9.91 MB |
| Road data of Xinjiekou Nanjing | 70 | 26.5 KB |
| Point data of Xi’an | 59,650 | 49.6 MB |
| Road data of Xi’an | 513 | 362 KB |
| Point data of Beijing | 207,710 | 171 MB |
| Road data of Beijing | 1,292 | 314 KB |
| Simulation point data of Xi’an_1 | 10,000 | 8.5 MB |
| Simulation point data of Xi’an_2 | 300,000 | 189 MB |
| Simulation point data of Xi’an_3 | 500,000 | 240 MB |
| Simulation point data of Xi’an_4 | 1,000,000 | 495 MB |
| Simulation point data of Xi’an_5 | 2,000,000 | 1331.2 MB |
| Simulation point data of Xi’an_6 | 3,000,000 | 5427.2 MB |

| Datasets | Nanjing | Xi’an | Beijing |
|---|---|---|---|
| Input data size | 9.91 MB | 49.6 MB | 171 MB |
| Output data size | 2.48 MB | 2.79 MB | 2.36 MB |
| Total data size | 12.39 MB | 52.39 MB | 173.36 MB |
| Processing time, stand-alone environment | 51″ | 3′30″ | 44′30″ |
| Processing time, cloud platform (1 node) | 32″ | 52″ | 4′11″ |
| Processing time, cloud platform (3 nodes) | 33″ | 45″ | 3′11″ |
| Processing time, cloud platform (6 nodes) | 29″ | 30″ | 2′14″ |
| Processing time, cloud platform (9 nodes) | 28″ | 21″ | 1′03″ |

| Simulation point dataset | Xi’an_1 | Xi’an_2 | Xi’an_3 | Xi’an_4 | Xi’an_5 | Xi’an_6 |
|---|---|---|---|---|---|---|
| Input data size | 8.5 MB | 189 MB | 240 MB | 495 MB | 1331.2 MB | 5427.2 MB |
| Number of point features | 10,000 | 300,000 | 500,000 | 1,000,000 | 2,000,000 | 3,000,000 |
| Processing time, cloud platform (1 node) | 33″ | 4′57″ | 9′53″ | 23′17″ | 1 h 10′46″ | 2 h 25′22″ |
| Processing time, cloud platform (3 nodes) | 35″ | 4′38″ | 8′31″ | 18′23″ | 45′55″ | 1 h 27′50″ |
| Processing time, cloud platform (6 nodes) | 30″ | 3′47″ | 6′50″ | 15′24″ | 38′12″ | 1 h 15′34″ |
| Processing time, cloud platform (9 nodes) | 29″ | 2′23″ | 4′53″ | 13′11″ | 26′36″ | 41′17″ |

| Number of Nodes | Nanjing ISpeedup | Nanjing ISpeedup/V | Xi’an ISpeedup | Xi’an ISpeedup/V | Beijing ISpeedup | Beijing ISpeedup/V |
|---|---|---|---|---|---|---|
| 1 node | 1.59375 | 0.128632 | 4.230769 | 0.080755 | 10.63745 | 0.06136 |
| 3 nodes | 1.545455 | 0.124734 | 4.888889 | 0.093317 | 13.97906 | 0.080636 |
| 6 nodes | 1.758621 | 0.141939 | 7.333333 | 0.139976 | 19.92537 | 0.114936 |
| 9 nodes | 1.821429 | 0.147008 | 10.47619 | 0.199965 | 42.38095 | 0.244468 |
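The surviving text does not define ISpeedup, but the Nanjing and Beijing columns are reproduced if ISpeedup is taken as the stand-alone processing time divided by the cloud processing time, and ISpeedup/V as that ratio divided by the total data size in MB. The sketch below works under that assumption; the function names are hypothetical. The Xi’an column matches only if the stand-alone time is taken as 220 s rather than the listed 3′30″, so it is left out of the example.

```python
import re


def to_seconds(text):
    """Parse a duration written as 51″ or 44′30″ (minutes′seconds″) into seconds."""
    match = re.fullmatch(r"(?:(\d+)′)?(?:(\d+)″)?", text.strip())
    if match is None or not any(match.groups()):
        raise ValueError(f"unrecognised duration: {text!r}")
    minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return minutes * 60 + seconds


def speedup(stand_alone, cloud):
    """Assumed definition: stand-alone time divided by cloud time."""
    return to_seconds(stand_alone) / to_seconds(cloud)


def speedup_per_mb(stand_alone, cloud, total_mb):
    """Assumed definition of ISpeedup/V: speedup divided by total data size in MB."""
    return speedup(stand_alone, cloud) / total_mb


# Nanjing, 1 node: stand-alone 51″, cloud 32″, total data size 12.39 MB.
print(speedup("51″", "32″"), speedup_per_mb("51″", "32″", 12.39))
# Beijing, 9 nodes: stand-alone 44′30″, cloud 1′03″, total data size 173.36 MB.
print(speedup("44′30″", "1′03″"), speedup_per_mb("44′30″", "1′03″", 173.36))
```

Normalizing by data volume makes the three datasets comparable: under this reading, the per-megabyte speedup at 9 nodes is largest for Beijing, the largest dataset.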

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, J.; Shen, J.; Yang, S.; Yu, Z.; Stanek, K.; Stampach, R. Method of Constructing Point Generalization Constraints Based on the Cloud Platform. ISPRS Int. J. Geo-Inf. 2018, 7, 235. https://doi.org/10.3390/ijgi7070235