Abstract

Location-based services have important economic and social value. Positioning accuracy and cost have a crucial impact on the quality, adoption, and market competitiveness of location services. Dead reckoning can provide accurate location information over short periods. However, it suffers from motion pattern diversity and cumulative error. To address these issues, we propose an indoor fusion localization method based on PCA-GWO-KELM-optimized gait recognition. In this method, 30-dimensional motion features for different motion patterns are extracted from inertial measurement units. Then, a PCA-GWO-KELM gait recognition algorithm is constructed to obtain the important features, in which the model parameters of the kernel extreme learning machine are optimized by the gray wolf optimization algorithm. Meanwhile, an adaptive upper threshold and an adaptive dynamic time threshold are constructed to avoid pseudo peaks and valleys. Finally, fusion localization is achieved by combining dead reckoning with acoustic localization. Comprehensive experiments were conducted using different devices in two different scenarios. Experimental results demonstrate that the proposed method can effectively recognize motion patterns and mitigate cumulative error, achieving higher localization performance and universality than state-of-the-art methods.

1. Introduction

Location-based services have significant commercial and economic value, and they have been widely used in navigation and guidance services in settings such as smart homes, logistics and warehousing, healthcare, public transportation, shopping malls, and exhibition halls. In outdoor environments, GPS can provide precise location information in all weather conditions [,]. However, GPS signals cannot penetrate indoor environments because of building obstructions []. Therefore, providing robust positioning in indoor environments has become a key challenge.

Researchers have developed a series of indoor positioning technologies, including infrared [], Bluetooth (BT) [], Wireless Fidelity (Wi-Fi) [], Radio Frequency Identification (RFID) [], and ultra-wideband (UWB) []. Among these, Wi-Fi, infrared, and BT perform well within a short distance range, whereas RFID and UWB incur high infrastructure installation costs. Dead reckoning (DR) offers high positioning accuracy over short periods at low cost, and it is not affected by external environmental interference []. In acoustic positioning, pseudo-ultrasound in the 16–20 kHz frequency range is not affected by human behavior and provides high positioning accuracy. Moreover, many indoor venues are already equipped with complete loudspeaker broadcast systems, which can be modified to broadcast positioning signals, minimizing deployment cost []. Therefore, DR and acoustic localization are promising techniques for indoor positioning.

Dead reckoning uses inertial measurement unit (IMU) data to estimate the target's position. It is a relative localization technique that estimates the target's position at the next moment from its current position []. Thio et al. [] proposed a gait detection method based on sine wave approximation of acceleration signals, which provides continuous, real-time full-step information in fractional form. Wu et al. [] used a generalized likelihood ratio multi-threshold detection algorithm for gait detection, which can adaptively adjust the thresholds. It estimates the acceleration and angular velocity of the inertial sensors by maximum likelihood and uses the generalized likelihood ratio to obtain the corresponding thresholds, thus counting the number of steps. Niu et al. [] combined Kalman filtering with dynamic thresholding to determine the number of pedestrian steps. However, as walking time increases, pedestrian dead reckoning (PDR) accumulates error. Researchers have proposed many solutions to this problem [,]. Zhao et al. [] presented a three-step constrained step size estimation model based on the Weinberg model []. Yu et al. [] designed an adaptive step-size estimation algorithm that adjusts the linear estimation equation using only one parameter to provide accurate step-size information. Huang et al. [] fused magnetometer, accelerometer, and gyroscope data for heading angle estimation.

Acoustic localization determines the target's position by extracting angle and time features from the acoustic signal. The main acoustic localization methods include Time of Arrival (TOA), Time Difference of Arrival (TDOA), and Angle of Arrival (AOA) []. Mandal et al. [] first proposed the Beep system, which uses a cell-phone-compatible low-frequency-band acoustic signal. Khyzhniak et al. [] used TDOA to process sound source signals acquired by microphones for two-dimensional localization. Urazghildiiev et al. [] applied maximum likelihood estimation to acoustic localization with combined AOA/TDOA estimation. Liu et al. [] proposed combining inertial measurement unit and acoustic sensor data to improve the indoor localization accuracy of smartphones. Wang et al. [] presented a fast and accurate joint CHAN-Taylor three-bit localization algorithm. Zhu et al. [] proposed a low-cost, easy-to-use system based on the TDOA algorithm that can localize objects using only a smartphone. Zhang et al. [] designed an asynchronous acoustic localization system based on a smartphone, which uses the acoustic signals collected by the phone to calculate the real-time TDOA between nodes.

In complex and changing indoor environments, a single positioning source often fails to achieve accurate positioning []. Acoustic localization can be performed with high accuracy using existing microphones and loudspeakers. However, it is subject to unexpected errors caused by environmental interference []. Wang et al. [] designed an adaptive robust unscented Kalman filter to deal effectively with unknown system noise and gross observation errors in acoustic navigation. Klee et al. [] constructed an extended Kalman filter model to directly update sound source position estimates, providing a fundamental framework for dynamic filtering in acoustic localization. Meanwhile, the diversity and complexity of target motion make it difficult to achieve accurate positioning with dead reckoning [,].

To address these issues, we propose a multiple motion pattern navigation and localization method. The proposed method effectively reduces the cumulative error of dead reckoning and the outliers of acoustic localization []. The core contributions of this paper are summarized as follows:

PCA-GWO-KELM motion pattern recognition: We propose the Principal Component Analysis and Gray Wolf Optimization with Kernel Extreme Learning Machine (PCA-GWO-KELM) motion mode recognition method. It extracts effective features from the 30 motion features of the original data and infers the importance of each feature for the motion posture, thus estimating the motion mode with higher accuracy. This effectively mitigates the positioning errors caused by varying motion patterns.

Dynamic adaptive double threshold gait detection: A double dynamic adaptive threshold method for peak and valley detection is proposed. It constructs an adaptive acceleration upper threshold and an adaptive dynamic time threshold, both of which adjust to different motion states and effectively filter out pseudo peaks. As a result, it enhances the accuracy of gait detection and reduces the cumulative error in localization.

Robust motion posture recognition fusion localization: A multiple-motion-mode recognition fusion localization system is proposed. Particle filtering is used to fuse acoustic and IMU-based positioning, addressing the challenges posed by different motion modes and low positioning accuracy. The system achieves accurate positioning across different motion postures and improves localization performance in different scenarios and motion modes.

2. Related Works

In recent years, researchers have conducted extensive research on recognizing various movement states. Luo et al. [] designed a deep learning-assisted PDR algorithm that uses a combined convolutional neural network and long short-term memory (CNN-LSTM) model to identify complex pedestrian movement patterns. Zhang et al. [] used a Wavelet-CNN to process microelectromechanical system (MEMS) sensor data and identify multiple complex human activities. Chen et al. [] employed the K-Nearest Neighbor (KNN) algorithm to recognize motion states including going upstairs, going downstairs, and walking horizontally; experimental results show that KNN achieves a recognition rate above 95% for all three states. Khalili et al. [] presented a classification algorithm combining a Support Vector Machine (SVM) and a Decision Tree (DT) to identify different movement patterns and walking speeds, with accuracies of 97.03% and 97.67% for females and males, respectively. Although these classification models can all recognize different motion patterns, their recognition accuracy depends mainly on the suitability of the motion features. High recognition accuracy therefore requires careful feature extraction and selection. Gu et al. [] proposed a deep learning method based on stacked denoising autoencoders, which automatically extracts data features, facilitating the recognition of motion patterns relevant to indoor positioning and reducing the workload of designing and selecting motion features. Klein et al. [] designed a new Gradient Boosting (GB) classifier for feature selection: 111 candidate features were evaluated, and 12 significant features were selected to recognize and classify four cell phone postures.

Multi-information fusion positioning techniques are commonly used to obtain positioning results with higher accuracy and robustness. Guo et al. [] proposed a low-cost, data-driven PDR localization algorithm with two-step signal detection and used an acoustic measurement compensation method to improve localization performance. Song et al. [] combined infrastructure-free acoustic localization with PDR and validated the plausibility of the PDR results through acoustic constraints. Wang et al. [] introduced a fusion localization system for large-area scenes; to eliminate the cumulative error of PDR, the system uses a Hamming distance-based acoustic localization algorithm to assist PDR with position updates. Wei et al. [] presented an efficient closed-loop mechanism based on Wi-Fi and PDR that achieves sub-meter localization accuracy. Wu et al. [] designed a robust localization accuracy enhancement method based on magnetic field matching and PDR. Naheem et al. [] introduced an indoor pedestrian tracking system with UWB and PDR fusion, which not only reduces the cumulative error of PDR but also mitigates the effect of indoor non-line-of-sight (NLOS) conditions on UWB. Jin et al. [] used particle filtering (PF) to fuse Bluetooth Low Energy (BLE) and PDR for localization. The method reduces the cumulative error of PDR and the fluctuation of BLE received signal strength, providing more stable and accurate localization results. Wu et al. [] proposed a novel indoor localization method fusing RFID, PDR, and geomagnetism.

Inspired by existing research, we propose an improved dead reckoning method based on motion pattern recognition and fused with CHAN-Taylor acoustic localization (MPR-IPDR-CT). The method uses the pedestrian's motion state as a priori information for the dead reckoning process to improve positioning reliability. Gait detection is then performed according to the multi-pattern recognition results, which improves positioning accuracy. Finally, refined estimates are obtained by fusing the acoustic estimates.

3. Materials and Methodology

An overview of the robust motion posture recognition localization method is introduced in Section 3.1. Section 3.2 describes the PCA-GWO-KELM model. Double dynamic adaptive threshold gait detection is discussed in Section 3.3. Section 3.4 depicts an adaptive step length estimation model. A method for heading estimation is introduced in Section 3.5. Finally, a robust motion posture recognition localization system is demonstrated in Section 3.6.

3.1. Overview

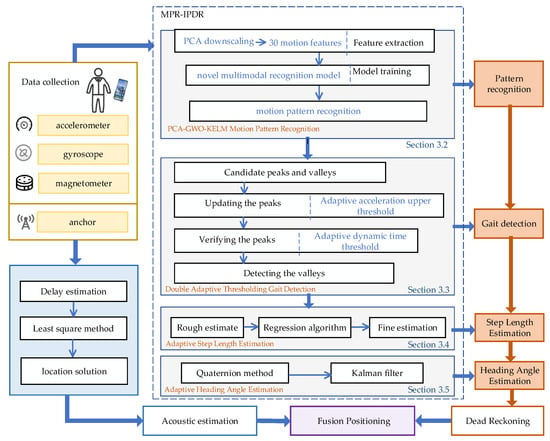

In this section, we introduce the localization and navigation method based on double-threshold gait detection and multi-pattern recognition, which includes the PCA-GWO-KELM motion pattern recognition model, double dynamic adaptive threshold gait detection, acoustic estimation, and motion posture recognition localization. The overall localization and navigation framework is shown in Figure 1.

Figure 1.

Framework of proposed MPR-IPDR-CT method.

The PCA-GWO-KELM motion pattern recognition method extracts important features from the 30 motion features of the raw data and infers the importance of each feature in the motion model to determine the target's motion state. A dual adaptive threshold gait detection method is proposed to count the steps of the moving target. In acoustic estimation, a smartphone receives the acoustic signals, and a two-step weighted least squares method is used to locate the target. Finally, MPR-IPDR and the acoustic estimates are fused by particle filtering.

3.2. PCA-GWO-KELM Motion Pattern Recognition

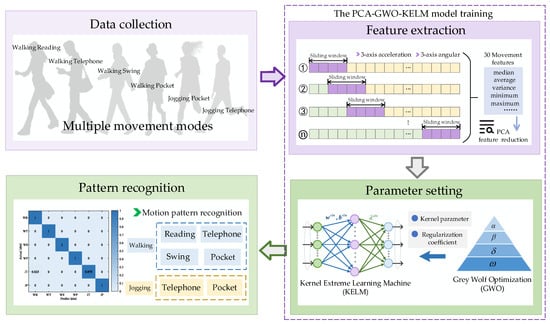

Motion characteristics are closely related to the complex and diverse motion postures of the target []. Therefore, accurately obtaining the target's motion characteristics plays a key role in navigation and positioning. The PCA-GWO-KELM motion posture recognition model is shown in Figure 2. It mainly consists of three stages: data acquisition, feature extraction, and motion mode recognition.

Figure 2.

Structure of multi-pattern recognition model.

Acceleration and angular velocity differ across motion states. This article therefore focuses on collecting three-axis acceleration and angular velocity data.

First, the original accelerometer and gyroscope data are standardized so that all variables have a mean of 0 and a standard deviation of 1, which puts them on an equal scale and eliminates the influence of different units. Because different features have different importance for the motion posture, thirty-dimensional motion features, such as the mean, variance, maximum, minimum, and energy, are obtained from the standardized accelerometer and gyroscope data and are denoted as . In this paper, the sliding window length is set to 2 s with a step of 0.5 s, so consecutive windows overlap by 75%. When the acceleration or angular velocity magnitude sequence meets the requirements, the sequence is intercepted, and the window is then slid along the sequence by the specified step. These steps are repeated to segment the IMU data and obtain more samples.
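The windowing and feature step can be sketched as follows. This is an illustrative sketch rather than the authors' code: it assumes a 50 Hz sampling rate (the rate reported in Section 4.1), six channels (three accelerometer and three gyroscope axes), and a hypothetical feature set of five statistics per channel (mean, variance, maximum, minimum, and energy taken as the mean squared value), which happens to give 5 × 6 = 30 features. With a 2 s window and a 0.5 s hop, consecutive windows share 1.5 s of data. The interception condition on the magnitude sequence is not specified in the text and is omitted here.

```python
import numpy as np

def standardize(data):
    """Z-score each column: mean 0, standard deviation 1 (zero-variance columns left unscaled)."""
    mu, sigma = data.mean(axis=0), data.std(axis=0)
    return (data - mu) / np.where(sigma > 0, sigma, 1.0)

def sliding_windows(imu, fs=50, win_s=2.0, hop_s=0.5):
    """Split an (n_samples, n_channels) array into overlapping windows.

    Returns an array of shape (n_windows, win_s * fs, n_channels).
    """
    win, hop = int(round(win_s * fs)), int(round(hop_s * fs))
    starts = range(0, imu.shape[0] - win + 1, hop)
    return np.stack([imu[s:s + win] for s in starts])

def window_features(windows):
    """Hypothetical 30-D feature vector: 5 statistics x 6 channels per window."""
    feats = [
        windows.mean(axis=1),
        windows.var(axis=1),
        windows.max(axis=1),
        windows.min(axis=1),
        (windows ** 2).mean(axis=1),  # "energy" taken here as the mean squared value
    ]
    return np.concatenate(feats, axis=1)  # (n_windows, 5 * n_channels)

# Usage on 10 s of synthetic 6-axis data (3-axis accelerometer + 3-axis gyroscope)
imu = np.random.default_rng(0).normal(size=(500, 6))
features = window_features(sliding_windows(standardize(imu)))
print(features.shape)  # (17, 30)
```

Ten seconds at 50 Hz gives (500 − 100) / 25 + 1 = 17 windows, each reduced to one 30-dimensional feature row.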

The variable needs to be standardized by the formula

where is the -th sample of the -th variable, is the mean of each variable , and is the standard deviation.

The covariance matrix of the standardized data is calculated. The element of the covariance matrix is given by

where is the variance of the -th variable, and is the covariance between the -th and -th variables. And is the number of principal components selected.

The next step is to find the eigenvalues and corresponding eigenvectors of the covariance matrix .

This is accomplished by solving the following equation:

The eigenvalues represent the amount of variance explained by the corresponding principal components, and the eigenvectors define the directions of the principal components in the original variable space. Each eigenvector is normalized to have a length of 1, that is, . is the number of variables in the original dataset, and subsequent operations such as calculating the covariance matrix are centered around these variables.

The principal components are ranked by the magnitudes of their corresponding eigenvalues. Usually, only the first principal components are retained, where is determined by the amount of variance explained. A common criterion is to choose based on the cumulative proportion of variance explained by the first principal components, which is given by

where is the -th element of the -th eigenvector .

The original data are projected onto the selected principal components. For each sample , its projection onto the -dimensional principal component space is expressed as , where for .

This projection maps the original high-dimensional data into a low-dimensional space while retaining most of the information in the original data. The reduced motion features are then used as the input to the GWO-optimized KELM for motion posture recognition. In this paper, the input layer dimension of the PCA-GWO-KELM algorithm equals the number of features after PCA dimensionality reduction and is set to 10. The hidden layer dimension is 60, and the output layer dimension is 6, matching the six motion modes.
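The PCA steps above (standardization, covariance matrix, eigen-decomposition, ranking by eigenvalue, and projection) can be sketched with NumPy as below. This is a minimal sketch, assuming a cumulative-variance threshold of 0.85 as an illustrative default; the text fixes the output dimension at 10, which corresponds to passing `n_components=10`. The function name `pca_reduce` is hypothetical.

```python
import numpy as np

def pca_reduce(X, var_threshold=0.85, n_components=None):
    """PCA on z-scored features via eigen-decomposition of the covariance matrix.

    var_threshold is an assumed cumulative-variance criterion; pass n_components
    (e.g. 10, as in the text) to fix the output dimension instead.
    """
    mu, sigma = X.mean(axis=0), X.std(axis=0, ddof=1)
    Z = (X - mu) / np.where(sigma > 0, sigma, 1.0)   # standardize each variable
    R = np.cov(Z, rowvar=False)                      # p x p covariance matrix
    eigvals, eigvecs = np.linalg.eigh(R)             # symmetric: real eigenpairs, unit-length vectors
    order = np.argsort(eigvals)[::-1]                # rank components by explained variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    ratio = np.cumsum(eigvals) / eigvals.sum()       # cumulative proportion of variance
    k = n_components or int(np.searchsorted(ratio, var_threshold) + 1)
    return Z @ eigvecs[:, :k], ratio[k - 1]          # projected scores and retained variance

# Usage: 30-D features reduced to the 10 components used in the text
rng = np.random.default_rng(0)
scores, kept = pca_reduce(rng.normal(size=(300, 30)), n_components=10)
print(scores.shape)  # (300, 10)
```

`np.linalg.eigh` is used because the covariance matrix is symmetric, which guarantees real eigenvalues and orthonormal eigenvectors.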

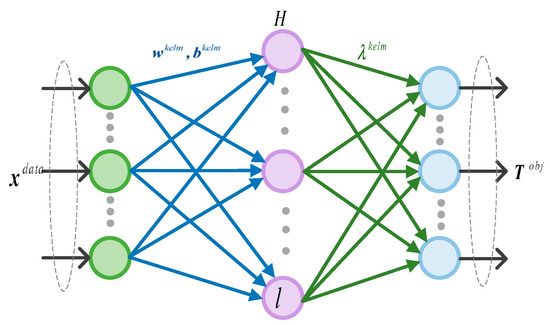

Figure 3 shows the kernel extreme learning machine (KELM) model. Suppose the input dataset is represented as follows:

Figure 3.

The network model of KELM.

The output of the hidden layer is expressed as

where represents the activation function of the hidden layer nodes, and , are the weight vectors and biases (thresholds) connecting the hidden and input layers, respectively, which can be expressed as

where l denotes the number of nodes in the hidden layer and s denotes the number of nodes in the input layer.

We design a loss function . Assuming the target matrix of the training data is denoted by , and the output of the KELM model can be represented by , the loss function is expressed as follows:

where is the target matrix of the training data, is the predicted output, and is the connection weight between the hidden layer and the output layer.

To prevent model overfitting and ensure a stable matrix inverse, a regularization coefficient C is introduced when optimizing the connection weight . The updated loss function is as follows:

where is the L2 regularization penalty.

Obtaining the optimal prediction therefore becomes the problem of finding the minimum of the loss function .

Expanding Equation (10), we obtain

Differentiating Equation (11) gives

Setting Equation (12) to zero yields the optimal solution:

Then, the output of KELM is shown as follows:

where is the kernel function in the KELM model, which is expressed as

From Equation (14), the kernel function parameter and the regularization coefficient C are the key factors affecting prediction performance. The gray wolf optimization (GWO) algorithm is used to optimize these two parameters because of its effectiveness and strong global search ability.
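The KELM training and prediction steps can be sketched as follows. This sketch assumes an RBF kernel K(a, b) = exp(−γ‖a − b‖²), because the kernel expression is not reproduced in this text, and it uses the standard closed-form KELM solution β = (I/C + Ω)⁻¹T with one-hot targets T and predictions f(x) = K(x, X)β. The class name `KELM` and the default values of `C` and `gamma` are illustrative; C and γ are exactly the two parameters that GWO is used to tune.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """Assumed RBF kernel K(a, b) = exp(-gamma * ||a - b||^2) for all row pairs."""
    d2 = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * np.maximum(d2, 0.0))  # clip tiny negative round-off

class KELM:
    """Kernel extreme learning machine classifier (closed-form output weights)."""

    def __init__(self, C=10.0, gamma=0.5):
        self.C, self.gamma = C, gamma  # the two parameters tuned by GWO

    def fit(self, X, y):
        self.X = X
        self.classes = np.unique(y)
        T = (y[:, None] == self.classes[None, :]).astype(float)  # one-hot target matrix
        omega = rbf_kernel(X, X, self.gamma)                     # kernel matrix Omega
        # Output weights: beta = (I / C + Omega)^{-1} T, solved without an explicit inverse
        self.beta = np.linalg.solve(np.eye(len(X)) / self.C + omega, T)
        return self

    def predict(self, Xq):
        scores = rbf_kernel(Xq, self.X, self.gamma) @ self.beta  # f(x) = K(x, X) beta
        return self.classes[np.argmax(scores, axis=1)]

# Usage on two well-separated synthetic clusters
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 0.3, size=(20, 2)), rng.normal(5, 0.3, size=(20, 2))])
y = np.array([0] * 20 + [1] * 20)
model = KELM().fit(X, y)
print(model.predict(np.array([[0.0, 0.0], [5.0, 5.0]])))  # [0 1]
```

Solving the linear system with `np.linalg.solve` is numerically preferable to forming the inverse; the I/C term is the same regularization that keeps this system well conditioned.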

First, a population of N gray wolves is randomly initialized within the search space. The position of each wolf i is initialized as follows:

where and are the lower and upper bounds of the j-th variable, respectively, and is a random number uniformly distributed in the range [0, 1].

The fitness of each wolf is expressed as

where N denotes the number of training samples, and is the predicted value of the model containing and .

The gray wolf algorithm is configured with a population size of 50 and a maximum of 60 iterations. The lower and upper bounds of the wolf pack search space are 1 and 20, respectively. Gray wolves encircle their prey during hunting, which is modeled by updating the position of each wolf toward the prey. The three best wolves, α, β, and δ, are identified, and the remaining wolves are ω wolves. The position of each gray wolf is updated as follows:

where , , and denote the distances between the α, β, and δ wolves and the target solution, respectively; , , and denote the positions of the α, β, and δ wolves at the t-th iteration; and denotes the position of the current target solution.

Then, three candidate positions , , and are calculated for each wolf from the positions of the α, β, and δ wolves:

where , and are coefficient vectors.

Finally, the position of the wolf at the next iteration is updated as the average of the three candidate positions:

As the search progresses, the wolves approach the optimal solution with increasing accuracy. The best position found at the end of the search gives the optimized kernel function parameter and regularization coefficient C of the KELM.
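The GWO procedure described above (random initialization in the search box, α/β/δ leaders, three candidate positions, and averaging) can be sketched as follows. This is the textbook GWO update with the control parameter a decreasing linearly from 2 to 0, offered as an assumption since the paper's exact coefficient schedule is not reproduced here. Defaults mirror the stated settings (50 wolves, 60 iterations, bounds 1 to 20). The toy quadratic objective stands in for the KELM fitness; `gwo` is a hypothetical helper name.

```python
import numpy as np

def gwo(fitness, lb, ub, n_wolves=50, n_iter=60, seed=0):
    """Minimize `fitness` over the box [lb, ub] with the standard Grey Wolf Optimizer."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, float), np.asarray(ub, float)
    dim = lb.size
    X = lb + rng.random((n_wolves, dim)) * (ub - lb)       # uniform random initialization
    leaders, leader_fit = np.empty((0, dim)), np.empty(0)  # alpha, beta, delta (best so far)
    for t in range(n_iter):
        fit = np.array([fitness(x) for x in X])
        pool = np.vstack([X, leaders])                     # keep best-so-far leaders
        pool_fit = np.concatenate([fit, leader_fit])
        top = np.argsort(pool_fit)[:3]
        leaders, leader_fit = pool[top].copy(), pool_fit[top]
        a = 2.0 - 2.0 * t / n_iter                         # decreases linearly from 2 to 0
        new_X = np.zeros_like(X)
        for leader in leaders:                             # candidates X1, X2, X3
            r1, r2 = rng.random(X.shape), rng.random(X.shape)
            A, C = 2 * a * r1 - a, 2 * r2                  # coefficient vectors
            D = np.abs(C * leader - X)                     # distance to this leader
            new_X += (leader - A * D) / 3.0                # average of the three candidates
        X = np.clip(new_X, lb, ub)                         # stay inside the search space
    return leaders[0], leader_fit[0]

# Usage on a toy 2-D objective whose minimum is at (5, 8)
target = np.array([5.0, 8.0])
best, score = gwo(lambda p: float(np.sum((p - target) ** 2)), lb=[1, 1], ub=[20, 20])
print(best.round(2), score)
```

To tune the KELM, the two coordinates of each wolf would be read as (C, γ), and the fitness would be a prediction error of the model trained with those values, for example `lambda p: error_rate(KELM(C=p[0], gamma=p[1]))` with a hypothetical `error_rate` helper; the paper's own fitness definition is not reproduced here.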

To validate the recognition accuracy of the proposed PCA-GWO-KELM model, experiments were carried out on two separate paths with six motion modes: walking and reading (WR), walking and telephoning (WT), walking and swinging (WS), walking and pocketing (WP), jogging and telephoning (JT), and jogging and pocketing (JP). WR mode refers to a pedestrian walking at normal speed with the phone held steadily in front of the chest. WT mode refers to a pedestrian walking at normal speed with the phone held to the ear to answer a call. WS mode refers to a pedestrian walking at normal speed while the phone swings at random. WP mode refers to a pedestrian walking at normal speed with the phone placed in a side pocket of the pants. JT mode refers to a pedestrian running while answering a call with the phone held to the ear. JP mode refers to a pedestrian running with the phone placed in a side pocket of the pants. The device used in this experiment is a Huawei Mate 60 Pro smartphone. The two experimental paths are straight indoor corridors with lengths of 24 m and 97.2 m, respectively.

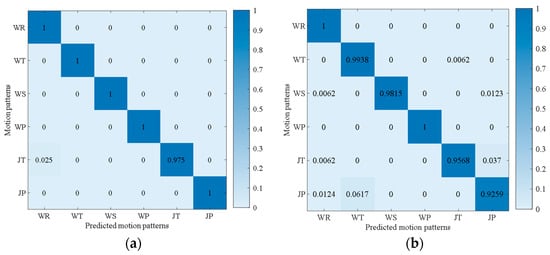

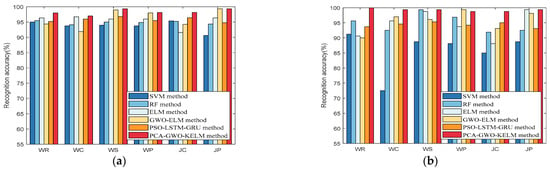

Figure 4 presents the accuracy of the proposed PCA-GWO-KELM model in recognizing multiple motion modes. On the 24 m path, the recognition accuracies for the WR, WT, WS, WP, JT, and JP modes are 100%, 100%, 100%, 100%, 97.50%, and 100%, respectively. On the 97.2 m path, the corresponding recognition rates are 100%, 99.38%, 98.15%, 100%, 95.68%, and 92.60%. The PCA-GWO-KELM model thus achieves high motion pattern recognition rates in both scenes.

Figure 4.

The recognition results of six motion patterns: (a) 24 m experimental path; (b) 97.2 m experimental path.

3.3. Double Adaptive Threshold Gait Detection

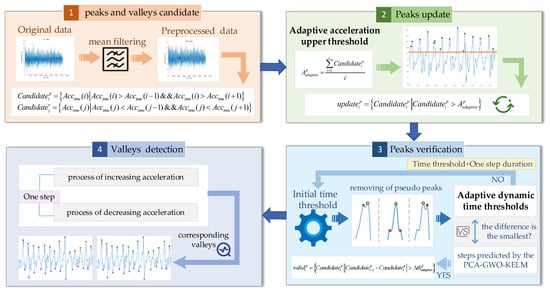

Accurate gait detection is essential for good positioning performance. We propose a double adaptive threshold gait detection method, shown in Figure 5, which consists of peak and valley candidate detection, peak update, peak verification, and valley detection.

Figure 5.

Framework of the double adaptive threshold gait detection method.

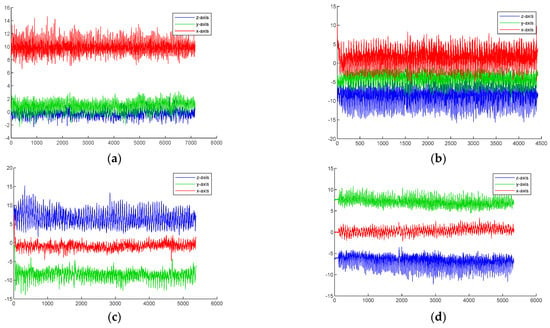

Three-dimensional acceleration reflects the motion posture under different movements. Figure 6 shows the motion acceleration in the reading, phone, swing, and pocket modes, where the red, green, and blue lines represent the X-, Y-, and Z-axis accelerations, respectively. In the reading and pocket modes, the acceleration changes along the Z- and X-axes are more significant, whereas in the swing and phone modes, the acceleration changes significantly along the X- and Y-axes. Therefore, different postures exhibit different motion characteristics.

Figure 6.

Waveforms of triaxial acceleration data for different mobile phone attitudes: (a) reading mode, (b) pocket mode, (c) swing mode, and (d) telephone mode.

In gait detection, candidate peaks of the motion acceleration are first pre-detected using a peak detection method. A peak must be greater than the acceleration values immediately before and after it. When a peak is detected, the pedestrian is considered to have taken a step, and the peak time is recorded, as shown in the following equation:

where is the set of candidate peaks at sampling point i, and is the combined acceleration.

To avoid pseudo peaks, the adaptive acceleration upper threshold is set as the average of the forward acceleration within a fixed time period, as shown in Equation (22).

where is the adaptive acceleration upper threshold, and c is the number of candidate peaks.

Candidate peaks larger than the adaptive acceleration upper threshold are retained, and is the set of updated candidate peaks.

The time interval between two adjacent peaks cannot be arbitrarily short. In this paper, an adaptive dynamic time threshold is set to verify valid peaks. First, the initial time threshold is set to the time interval of the first step, and the number of steps predicted with this threshold is recorded. This predicted step count is then compared with the true number of steps obtained from the PCA-GWO-KELM model. If the difference is at its minimum, the step count corresponding to the current threshold is output. Otherwise, the time threshold is doubled and the loop continues. The current acceleration peak is judged valid only if the time interval between two adjacent steps satisfies the following equation.

where is the set of peaks after verification of the adaptive dynamic time thresholds, and is an adaptive dynamic time threshold.

The flow of the double adaptive threshold gait detection method is depicted in Algorithm 1.

| Algorithm 1: The double adaptive threshold gait detection method |
|---|
| Input: Motion acceleration data. |
| Output: Target steps and peak amplitudes. |
| 1: Data preprocessing using mean filtering. |
| 2: Candidate peaks are selected using peak detection by Equation (21). |
| 3: Candidate peaks are counted, and the adaptive acceleration upper threshold is calculated by Equation (22). |
| 4: Update candidate peaks by Equation (23). |
| 5: Set the time interval of the first step as the initial time threshold. |
| 6: for each step do |
| 7: Record the difference between the step number corresponding to the current time threshold and the true step number. |
| 8: if the difference is minimal then |
| 9: Save the step number at this moment. |
| 10: else |
| 11: Double the time threshold. |
| 12: end if |
| 13: Save the value of each peak. |
| 14: end for |
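Algorithm 1 can be sketched in Python as follows. Several details are assumptions because the corresponding equations are not reproduced in this text: the adaptive upper threshold is taken as the mean of the candidate peak values, the time check keeps a peak only if it lies at least the threshold after the previously kept peak, and the threshold search evaluates the initial threshold and a fixed number of doublings, keeping the one whose step count is closest to the reference count. Mean filtering (step 1) is omitted, and `demo_signal` builds a hypothetical synthetic trace with 20 steps, low ripples between steps, and one spurious high peak 0.1 s after the sixth step.

```python
import numpy as np

def candidate_peaks(acc):
    """Pre-detection: samples strictly larger than both neighbours."""
    i = np.arange(1, len(acc) - 1)
    return i[(acc[i] > acc[i - 1]) & (acc[i] > acc[i + 1])]

def amplitude_filter(acc, peaks):
    """Keep peaks above an adaptive upper threshold (assumed: mean of candidate peak values)."""
    thr = acc[peaks].mean()
    return peaks[acc[peaks] > thr]

def time_filter(peaks, fs, min_interval_s):
    """Keep a peak only if it is at least min_interval_s after the last kept peak."""
    kept = []
    for p in peaks:
        if not kept or (p - kept[-1]) >= min_interval_s * fs:
            kept.append(p)
    return np.array(kept, dtype=int)

def search_time_threshold(acc, fs, ref_steps, n_doublings=4):
    """Start from the first step interval, double the threshold, and keep the
    threshold whose step count is closest to the reference step count."""
    peaks = amplitude_filter(acc, candidate_peaks(acc))
    thr = (peaks[1] - peaks[0]) / fs  # initial threshold = first step interval (s)
    best = None
    for _ in range(n_doublings + 1):
        n = len(time_filter(peaks, fs, thr))
        if best is None or abs(n - ref_steps) < abs(best[1] - ref_steps):
            best = (thr, n)
        thr *= 2.0
    return best

def demo_signal(n=520):
    """Hypothetical 50 Hz |a| trace: 20 steps every 0.5 s plus pseudo peaks."""
    acc = np.full(n, 9.8)
    for k in range(20):
        c = 12 + 25 * k
        acc[c - 2:c + 3] = [10.5, 11.5, 12.8, 11.5, 10.5]  # true step peak
        acc[c + 11:c + 14] = [10.0, 10.3, 10.0]           # low ripple between steps
    acc[141:144] = [11.0, 12.0, 11.0]                     # spurious peak 0.1 s after sample 137
    return acc

acc = demo_signal()
steps = time_filter(amplitude_filter(acc, candidate_peaks(acc)), fs=50, min_interval_s=0.3)
print(len(steps))                                     # 20
print(search_time_threshold(acc, fs=50, ref_steps=20))  # threshold 0.5 s, 20 steps
```

In this example the amplitude threshold removes the 20 low ripples, and the time threshold removes the spurious peak that the amplitude check alone would have accepted, which is the motivation for using both thresholds.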

3.4. Adaptive Step Length Estimation

In this paper, an adaptive step length estimation algorithm that accounts for the pedestrian's motion state is used. The algorithm first uses the previous two step lengths to obtain a rough estimate of the current step length. Eight feature variables are then extracted from the acceleration signal: peak-to-valley amplitude difference, walking frequency, variance, mean acceleration, peak median, valley median, signal area, and signal energy.

where is the rough estimate of the current step length, and and are the lengths of the previous step and the step before it, respectively. and are the maximum and minimum acceleration values of the current step, respectively, and , , and are weight factors.

Then, the eight feature variables are regularized and constrained using the least absolute shrinkage and selection operator (Lasso) method. The optimal step-length feature coefficients are obtained when Equation (26) reaches its minimum.

where is the total number of steps walked, is the number of feature variables, represents the feature variables, and denotes the regression coefficients. is the regularization factor, which controls the magnitude of the feature coefficients. is the fine estimate of the current step length.

Finally, the step length is obtained as

where AF is the step characteristic and B is the constant matrix corresponding to the regression coefficients.
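The Lasso regression step can be illustrated with a small cyclic coordinate-descent solver. This sketch assumes the standard Lasso objective (1/2n)‖L − Fw − b‖² + λ‖w‖₁ on standardized features, since the exact form of the paper's objective is not reproduced here; the resulting coefficients and intercept play the role of the regression coefficients applied to the step features. The names `lasso_cd` and `soft_threshold` and the synthetic data are hypothetical.

```python
import numpy as np

def soft_threshold(z, lam):
    """Proximal operator of lam * |w|: shrink z toward zero by lam."""
    return np.sign(z) * max(abs(z) - lam, 0.0)

def lasso_cd(F, L, lam=0.005, n_iter=500):
    """Fit L ~ F @ coef + intercept by minimizing
    (1/2n) * ||L - Z w - b||^2 + lam * ||w||_1 on standardized features Z."""
    mu, sd = F.mean(axis=0), F.std(axis=0)
    Z = (F - mu) / sd                 # each column: mean 0, mean(Z_j^2) == 1
    n, p = Z.shape
    w = np.zeros(p)
    b = L.mean()                      # intercept is unaffected by w (centered columns)
    r = L - b                         # residual for w = 0
    for _ in range(n_iter):
        for j in range(p):
            r += Z[:, j] * w[j]       # remove feature j's contribution
            rho = Z[:, j] @ r / n     # correlation of feature j with the partial residual
            w[j] = soft_threshold(rho, lam)
            r -= Z[:, j] * w[j]       # add the updated contribution back
    coef = w / sd                     # map back to the original feature scale
    return coef, b - mu @ coef

# Usage: 200 synthetic steps with 8 step features, only 3 of which matter
rng = np.random.default_rng(0)
F = rng.normal(size=(200, 8))
true = np.array([0.3, 0.0, 0.2, 0.0, 0.0, 0.1, 0.0, 0.0])
L = 0.5 + F @ true                    # hypothetical step lengths (m)
coef, intercept = lasso_cd(F, L)
step_length = F[:1] @ coef + intercept  # estimate for a new step's features
```

The L1 penalty drives the coefficients of uninformative features toward exactly zero, which is why Lasso doubles as a feature selector for the eight step features.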

3.5. Adaptive Heading Angle Estimation

A common heading estimation approach uses the accelerometer, magnetometer, and gyroscope built into the mobile phone to solve the attitude. The attitude is transformed from the carrier coordinate system to the navigation coordinate system, and the yaw angle in the navigation coordinate system is then used as the heading angle. We use the quaternion method to transform the gyroscope data between coordinate systems [].

where are the four components of the quaternion. The attitude angles of the mobile phone in the navigation coordinate system are solved by Equation (29) [].
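The quaternion-to-attitude conversion can be sketched as below. This sketch assumes a unit quaternion (w, x, y, z) and the common ZYX (yaw, pitch, roll) convention; smartphone frameworks may use different axis conventions, so the paper's attitude equation may differ in sign or axis ordering. The function name `quat_to_euler` is illustrative.

```python
import math

def quat_to_euler(w, x, y, z):
    """Unit quaternion -> (yaw, pitch, roll) in radians, ZYX convention (assumed)."""
    roll = math.atan2(2 * (w * x + y * z), 1 - 2 * (x * x + y * y))
    s = max(-1.0, min(1.0, 2 * (w * y - z * x)))  # clamp to guard against round-off
    pitch = math.asin(s)
    yaw = math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))
    return yaw, pitch, roll

# Usage: a 90 degree rotation about the vertical (z) axis
h = math.sqrt(0.5)
yaw, pitch, roll = quat_to_euler(h, 0.0, 0.0, h)
print(round(math.degrees(yaw), 6))  # 90.0
```

The clamp before `asin` matters in practice: accumulated round-off can push the argument slightly outside [−1, 1] near ±90° pitch, which would otherwise raise a domain error.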

where is the yaw angle, is the pitch angle, and is the roll angle. Then, the gyroscope data are fused with the accelerometer and magnetometer data through a Kalman filter (KF). In this paper, the fusion process takes the heading angle measured by the direction sensor as the system observation and the gyroscope data as the control input of the process model. Iterating the KF prediction and update steps continuously corrects the heading angle.
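The heading correction described above can be sketched as a scalar Kalman filter: the gyroscope yaw rate drives the prediction, and the direction-sensor heading is the observation. The process and measurement variances `q` and `r` are assumed values, not parameters from the paper, and angles are wrapped so that the filter behaves correctly across the ±π boundary. The function names are illustrative.

```python
import math

def wrap(angle):
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi

def heading_kf(gyro_z, compass, dt, q=1e-4, r=1e-2, psi0=0.0, p0=1.0):
    """Scalar KF: gyro yaw rate as control input, direction-sensor heading as
    observation. q and r are assumed process/measurement noise variances."""
    psi, p = psi0, p0
    out = []
    for omega, z in zip(gyro_z, compass):
        psi = wrap(psi + omega * dt)         # predict: integrate the gyro rate
        p += q
        k = p / (p + r)                      # Kalman gain
        psi = wrap(psi + k * wrap(z - psi))  # update with the wrapped innovation
        p *= (1 - k)
        out.append(psi)
    return out

# Usage: stationary phone (zero yaw rate), direction sensor reads 0.5 rad
est = heading_kf([0.0] * 200, [0.5] * 200, dt=0.02)
print(round(est[-1], 4))  # 0.5
```

Wrapping the innovation `z - psi` is the key design choice: without it, an estimate of −3.1 rad and an observation of 3.1 rad would appear 6.2 rad apart instead of about 0.08 rad.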

3.6. Multiple Pattern Recognition Positioning

Particle filtering (PF) is a recursive Bayesian filtering method for state estimation of dynamic systems that is suitable for nonlinear systems and non-Gaussian noise. Therefore, we use PF to fuse PDR and acoustic positioning. The proposed fusion positioning method performs the following steps:

Step 1: Initialize a particle swarm containing particles in the positioning region, each with a weight of 1, and denote the particle set at time t by . The positions of the initial particles are generated from the acoustic positioning results.

Step 2: After initialization, the position of each particle at time t + 1 is updated by Equation (30).

where and are the step length and heading angle of the pedestrian at t + 1, respectively. is Gaussian noise with zero mean and variance of 1.

Step 3: The acoustic positioning results are used as system observations, expressed as follows:

where and are the coordinates estimated by acoustic localization at t + 1, and is the observation noise. The particle weights are then updated from the acoustic positioning results as follows:

where is the covariance matrix of the acoustic estimate.

Step 4: The particle weights are normalized by Equation (33).

The particles are then resampled: particles with large weights are replicated multiple times, whereas particles with small weights are discarded, so that the total number of particles remains unchanged.

Step 5: Finally, fusion positioning estimation is performed. The pedestrian’s position at t + 1 can be calculated by Equation (34).
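Steps 1 to 5 can be sketched as a compact particle filter. Several details are assumptions because Equations (30) to (34) are not reproduced in this text: headings are measured from the x axis, so a step moves a particle by (L cos θ, L sin θ); the weight update is a Gaussian likelihood with the acoustic covariance R; resampling is systematic; and the estimate is the weighted mean before resampling. The default process noise standard deviation of 1 follows the zero-mean, unit-variance noise stated in Step 2. The function name `pf_fuse` and the straight-line test trajectory are hypothetical.

```python
import numpy as np

def pf_fuse(steps, headings, acoustic, R, n=500, q_std=1.0, seed=0):
    """PDR + acoustic particle filter sketch.

    steps, headings: per-step length (m) and heading (rad, from the x axis; assumed).
    acoustic: (T + 1, 2) acoustic fixes; row 0 initializes the particles.
    R: 2x2 covariance matrix of the acoustic estimate.
    """
    rng = np.random.default_rng(seed)
    acoustic = np.asarray(acoustic, float)
    R_inv = np.linalg.inv(R)
    # Step 1: particles drawn around the first acoustic fix
    P = acoustic[0] + rng.multivariate_normal(np.zeros(2), R, size=n)
    track = [P.mean(axis=0)]
    for length, th, z in zip(steps, headings, acoustic[1:]):
        # Step 2: PDR propagation with Gaussian process noise
        P = P + length * np.array([np.cos(th), np.sin(th)]) + rng.normal(0.0, q_std, size=(n, 2))
        # Step 3: Gaussian likelihood of the acoustic observation (log domain for stability)
        d = z - P
        log_w = -0.5 * np.einsum("ij,jk,ik->i", d, R_inv, d)
        w = np.exp(log_w - log_w.max())
        # Step 4: normalize weights
        w /= w.sum()
        # Step 5: fused estimate as the weighted mean of the particles
        track.append(w @ P)
        # Systematic resampling keeps the particle count constant
        c = np.cumsum(w)
        c[-1] = 1.0  # guard against round-off in the cumulative sum
        idx = np.searchsorted(c, (rng.random() + np.arange(n)) / n)
        P = P[idx]
    return np.array(track)

# Usage: walking straight along x, 10 steps of 0.7 m, noise-free acoustic fixes
truth = np.array([[0.7 * k, 0.0] for k in range(11)])
est = pf_fuse([0.7] * 10, [0.0] * 10, truth, R=0.25 * np.eye(2))
print(np.linalg.norm(est[-1] - truth[-1]))  # small position error (m)
```

Working with log-weights and subtracting the maximum before exponentiating avoids the case where every weight underflows to zero when an acoustic fix lies far from all particles.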

4. Experimental Results

In this section, the experimental setup is described in Section 4.1. Then, the recognition accuracy of the proposed PCA-GWO-KELM model is analyzed in Section 4.2. In Section 4.3, a double adaptive threshold gait detection method is discussed. Section 4.4 evaluates the positioning accuracy of MPR-IPDR. Finally, Section 4.5 assesses the positioning performance of the fusion method.

4.1. Experimental Setting

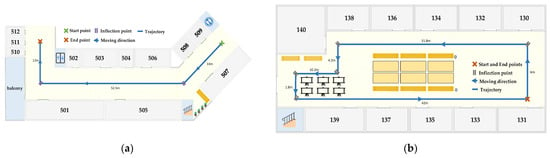

To demonstrate the localization performance of the proposed method, we conducted comprehensive experiments in two indoor architectural environments. As shown in Figure 7, the two experimental scenes are a public corridor and an office building with dimensions of approximately 68 × 20 × 3 m³ and 65 × 16 × 3 m³, respectively. Bold blue lines denote the planned movement trajectories of the target. In Figure 7a, the trajectory follows a narrow corridor with fire extinguishers and greenery and contains a 120° corner and a 90° corner. In Figure 7b, the trajectory is an irregular closed loop with six consecutive corners in an office building with some display signs.

Figure 7.

Schematic of experimental scenes: (a) scene 1; (b) scene 2.

In these experiments, we recruited a volunteer from a local university to collect acoustic signals and IMU data along the planned paths. A 17.5–19.5 kHz pseudo-ultrasonic signal is used as the acoustic source. A multi-device collaborative approach is used for data acquisition and processing. Vivo Y93s and Huawei Mate 60 Pro smartphones were used for data acquisition, stably recording pedestrian motion data at a sampling rate of 50 Hz. Data processing was performed on a Seven Rainbow iGame Sigma M500 desktop computer equipped with an Intel Core i7-11700K processor, 32 GB of DDR4 3200 MHz RAM, and an NVIDIA GeForce RTX 3060 graphics card.

4.2. PCA-GWO-KELM Motion Pattern Recognition Analyses

To validate the recognition accuracy of the proposed PCA-GWO-KELM model, we compare it with the SVM, RF, ELM, GWO-ELM, and PSO-LSTM-GRU models [] on the two test trajectories. The SVM uses a radial basis function (RBF) kernel with a penalty factor of C = 1.0 and is trained with the sequential minimal optimization (SMO) algorithm. The RF consists of 100 decision trees that use Gini impurity for feature partitioning. The ELM is a single-hidden-layer feed-forward neural network with 100 hidden nodes and a Sigmoid activation function. In GWO-ELM, the number of gray wolves is 30 and the maximum number of iterations is 50. For PSO-LSTM-GRU, the swarm size is 40 particles, and both the LSTM and GRU layers use 64 hidden units and are trained for 30 epochs. These models serve as baselines for evaluating the proposed PCA-GWO-KELM model.
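For reference, the ELM baseline configured above (100 hidden nodes, Sigmoid activation) can be sketched in a few lines of NumPy. This is an illustrative reimplementation, not the code used in the experiments; the class name `ELM`, the standard-normal initialization, and the pseudo-inverse solution are assumptions based on the textbook ELM formulation.

```python
import numpy as np


class ELM:
    """Single-hidden-layer extreme learning machine (illustrative baseline)."""

    def __init__(self, n_hidden=100, seed=None):
        self.n_hidden = n_hidden              # 100 hidden nodes, as in the baseline setup
        self.rng = np.random.default_rng(seed)

    @staticmethod
    def _sigmoid(z):
        return 1.0 / (1.0 + np.exp(-z))

    def _hidden(self, X):
        return self._sigmoid(X @ self.W + self.b)

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        self.classes_ = np.unique(y)
        # Input weights and biases are random and never trained; this is the
        # source of the run-to-run variation discussed for ELM.
        self.W = self.rng.standard_normal((X.shape[1], self.n_hidden))
        self.b = self.rng.standard_normal(self.n_hidden)
        T = (y[:, None] == self.classes_[None, :]).astype(float)  # one-hot targets
        # Output weights: least-squares solution via the Moore-Penrose pseudo-inverse
        self.beta = np.linalg.pinv(self._hidden(X)) @ T
        return self

    def predict(self, X):
        scores = self._hidden(np.asarray(X, dtype=float)) @ self.beta
        return self.classes_[np.argmax(scores, axis=1)]
```

Re-running `fit` with a different `seed` draws new hidden weights, which is a quick way to observe the accuracy spread of ELM across repeated tests.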

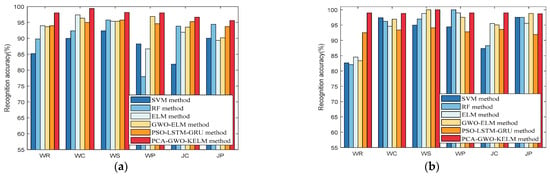

The tester collected multiple sets of IMU data for each of the six motion patterns, and the recognition accuracy of each pattern was obtained for every classification method. Figure 8 and Figure 9 show that, in both experimental environments, the proposed PCA-GWO-KELM model achieves the highest recognition accuracy for all six motion patterns compared with the SVM, RF, ELM, GWO-ELM, and PSO-LSTM-GRU models. Although PSO-LSTM-GRU uses swarm-driven tuning of deep recurrent layers, it still trails PCA-GWO-KELM by roughly 2–3 percentage points across devices and scenes, confirming the superior accuracy of PCA-GWO-KELM at a lower computational cost. We attribute this advantage to PCA extracting the most important data features and GWO optimizing the network parameters of KELM, which together improve the convergence speed and final prediction performance of the model.

Figure 8.

Recognition accuracy of SVM, RF, ELM, GWO-ELM, PSO-LSTM-GRU, and PCA-GWO-KELM models in scene 1 when using different devices: (a) Vivo Y93s smartphone in scene 1; (b) Mate60 Pro smartphone in scene 1.

Figure 9.

Recognition accuracy of SVM, RF, ELM, GWO-ELM, PSO-LSTM-GRU, and PCA-GWO-KELM models in scene 2 when using different devices: (a) Vivo Y93s smartphone in scene 2; (b) Mate60 Pro smartphone in scene 2.

Table 1 presents the average recognition accuracies over the six motion patterns for the PCA-GWO-KELM, SVM, RF, ELM, GWO-ELM, and PSO-LSTM-GRU models when using different devices in scene 1. For readability, the PCA-GWO-KELM results are shown in bold. The proposed PCA-GWO-KELM model achieves a higher average recognition accuracy than the other methods on both devices in scene 1: 97.63% with the Vivo Y93s and 99.07% with the Mate60 Pro. This is because PCA-GWO-KELM first applies PCA to reduce dimensionality while retaining the key features and then uses GWO to optimize the parameters, which improves feature utilization and model fitting. These results demonstrate that the PCA-GWO-KELM model provides better motion pattern information for estimating the target states.
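The two ingredients credited for this result, PCA dimensionality reduction followed by a kernel ELM, can be sketched as follows. The 95% retained-variance threshold, the RBF kernel, the default values of the regularization coefficient `C` and kernel width `gamma`, and all function and class names are hypothetical; in PCA-GWO-KELM, `C` and `gamma` would be the parameters tuned by GWO. The KELM output weights follow the standard closed form beta = (I/C + Omega)^-1 T, where Omega is the training kernel matrix and T holds one-hot labels.

```python
import numpy as np


def pca_fit(X, var_ratio=0.95):
    """Mean vector and principal axes (rows) that retain `var_ratio` of the variance."""
    mean = X.mean(axis=0)
    # Rows of Vt are principal directions; S**2 is proportional to explained variance
    _, S, Vt = np.linalg.svd(X - mean, full_matrices=False)
    explained = S**2 / np.sum(S**2)
    k = int(np.searchsorted(np.cumsum(explained), var_ratio)) + 1
    return mean, Vt[:k]


def rbf_kernel(A, B, gamma):
    """Gaussian kernel matrix K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))  # clip tiny negatives from round-off


class KELM:
    """Kernel ELM classifier with closed-form output weights beta = (I/C + Omega)^-1 T."""

    def __init__(self, C=100.0, gamma=0.1):
        self.C, self.gamma = C, gamma  # hypothetical defaults; GWO would tune these

    def fit(self, X, y):
        self.X_train = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        T = (y[:, None] == self.classes_[None, :]).astype(float)  # one-hot targets
        omega = rbf_kernel(self.X_train, self.X_train, self.gamma)
        n = self.X_train.shape[0]
        self.beta = np.linalg.solve(np.eye(n) / self.C + omega, T)
        return self

    def predict(self, X):
        K = rbf_kernel(np.asarray(X, dtype=float), self.X_train, self.gamma)
        return self.classes_[np.argmax(K @ self.beta, axis=1)]
```

A new feature vector `x` (for example, one of the 30-dimensional motion feature vectors) would be projected as `(x - mean) @ components.T` before being passed to `KELM.predict`. Unlike the ELM, the KELM has no random hidden layer, so repeated training on the same data gives the same model.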

Table 1.

Average recognition accuracies of SVM, RF, ELM, GWO-ELM, PSO-LSTM-GRU, and PCA-GWO-KELM models in scene 1 with two different devices.

Table 2 presents the average recognition accuracies over the six motion patterns for the PCA-GWO-KELM, SVM, RF, ELM, GWO-ELM, and PSO-LSTM-GRU models when using different devices in scene 2. Again, the proposed PCA-GWO-KELM model achieves a higher average recognition accuracy than the other methods on both devices, reaching 98.33% with the Vivo Y93s and 99.27% with the Mate60 Pro, the highest among the compared models. These results show that the proposed model generalizes across scenes and devices, achieves higher recognition accuracy, and provides better motion pattern information.

Table 2.

Average recognition accuracies of SVM, RF, ELM, GWO-ELM, PSO-LSTM-GRU, and PCA-GWO-KELM models in scene 2 with two different devices.

To verify the robustness of the PCA-GWO-KELM model, we repeated the recognition test five times consecutively. Table 3 lists the motion pattern recognition accuracy of the proposed method in each test, together with those of the SVM, RF, ELM, GWO-ELM, and PSO-LSTM-GRU models. SVM is stable but has relatively low accuracy. The maximum difference in recognition accuracy between tests reaches 5.74% for RF and 6.27% for ELM, whose random initialization of input weights and biases leads to poor performance stability. The maximum difference for the GWO-ELM model is 2.57%: although GWO optimizes the parameters of ELM, GWO itself is stochastic during initialization. PSO-LSTM-GRU is more stable than GWO-ELM, but its average accuracy is still lower than that of the proposed PCA-GWO-KELM model.

Table 3.

Pattern recognition accuracy of the SVM, RF, ELM, GWO-ELM, PSO-LSTM-GRU, and PCA-GWO-KELM models for 5 tests (%).

In addition to using GWO to optimize the kernel function parameter and regularization coefficient of the KELM, the PCA-GWO-KELM model applies PCA dimensionality reduction, which effectively improves recognition accuracy. The experimental results demonstrate that the PCA-GWO-KELM model achieves higher recognition accuracy than the other models while maintaining high stability.
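GWO itself can be sketched as below, using the pack size (30 wolves) and iteration budget (50) quoted for GWO-ELM. The update follows the standard GWO scheme in which the alpha, beta, and delta wolves guide the pack and the coefficient a decreases linearly from 2 to 0; keeping the three best positions found so far across iterations is a simplifying choice of this sketch. The function name `gwo_minimize` is hypothetical.

```python
import numpy as np


def gwo_minimize(fitness, lower, upper, n_wolves=30, n_iter=50, seed=None):
    """Grey wolf optimizer: minimize `fitness` over the box [lower, upper]."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, dtype=float), np.asarray(upper, dtype=float)
    dim = lower.size
    wolves = rng.uniform(lower, upper, size=(n_wolves, dim))
    scores = np.array([fitness(w) for w in wolves])
    best = np.argsort(scores)[:3]
    leaders, leader_scores = wolves[best].copy(), scores[best].copy()  # alpha, beta, delta

    for t in range(n_iter):
        a = 2.0 - 2.0 * t / n_iter  # decreases linearly from 2 toward 0
        moved = np.zeros_like(wolves)
        for leader in leaders:
            A = 2.0 * a * rng.random((n_wolves, dim)) - a
            C = 2.0 * rng.random((n_wolves, dim))
            D = np.abs(C * leader - wolves)   # distance to this leader
            moved += leader - A * D
        wolves = np.clip(moved / 3.0, lower, upper)  # average of the three pulls
        scores = np.array([fitness(w) for w in wolves])
        # Keep the three best positions seen so far as alpha, beta, delta
        pool = np.vstack([leaders, wolves])
        pool_scores = np.concatenate([leader_scores, scores])
        best = np.argsort(pool_scores)[:3]
        leaders, leader_scores = pool[best].copy(), pool_scores[best].copy()

    return leaders[0], leader_scores[0]
```

To tune a KELM, `fitness` could map a candidate vector p to the validation error of a model trained with C = 10**p[0] and gamma = 10**p[1]; that wiring, including the log-scale search, is an assumption. The random initial pack is why results can still vary slightly between runs.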

Table 4 shows the computational complexity of the different models in scene 1 and scene 2. In both scenes, the time consumption of the PCA-GWO-KELM model is significantly higher than that of the SVM, RF, ELM, and GWO-ELM models. Thus, the high recognition accuracy of PCA-GWO-KELM shown in the previous results comes at the cost of increased computation, reflecting a trade-off between accuracy and processing efficiency. The PSO-LSTM-GRU model imposes the heaviest computational burden, which limits its suitability for deployment on mobile devices.

Table 4.

Computational complexity of SVM, RF, ELM, GWO-ELM, PSO-LSTM-GRU, and PCA-GWO-KELM for different scenes.

4.3. Gait Detection Analyses

Several experiments were designed to validate the proposed double adaptive threshold gait detection algorithm. During the experiments, the tester walked along the planned routes carrying each of the two devices and collected multiple sets of IMU data.

Table 5 shows the step detection results for the different devices in the two scenes. The results confirm the effectiveness of the proposed double adaptive threshold step detection algorithm, because it not only adaptively eliminates peaks whose amplitudes differ markedly from those of normal steps but also adaptively adjusts the time threshold, bringing the detected step count closer to the true step count.
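The double adaptive threshold idea can be illustrated with the simplified detector below. It is a sketch under stated assumptions rather than the authors' algorithm: a candidate peak of the acceleration norm is accepted only if it exceeds a fixed lower bound (`min_peak`), stays below an adaptive upper threshold equal to `upper_ratio` times the mean of the last few accepted peaks, and occurs at least an adaptive time threshold after the previous step, taken as `time_ratio` times the recent mean step interval but never less than `min_interval`. All parameter names and default values are hypothetical; the 50 Hz default matches the sampling rate used in the experiments.

```python
import numpy as np


def detect_steps(acc_norm, fs=50.0, min_peak=10.5, upper_ratio=1.5,
                 time_ratio=0.6, min_interval=0.25, window=4):
    """Return sample indices of detected steps in an acceleration-norm signal (m/s^2).

    Hypothetical double adaptive threshold rule:
      * adaptive upper threshold: reject peaks above upper_ratio x mean of the last
        `window` accepted peaks (suppresses spikes and other pseudo peaks);
      * adaptive time threshold: reject peaks closer to the previous step than
        max(min_interval, time_ratio x mean of the last `window` step intervals).
    """
    acc = np.asarray(acc_norm, dtype=float)
    steps, peak_values = [], []
    for i in range(1, len(acc) - 1):
        a = acc[i]
        is_local_max = a > acc[i - 1] and a >= acc[i + 1]
        if not is_local_max or a < min_peak:
            continue
        if peak_values and a > upper_ratio * np.mean(peak_values[-window:]):
            continue  # too large compared with recent genuine steps
        if steps:
            if len(steps) >= 2:
                mean_interval = np.mean(np.diff(steps[-(window + 1):])) / fs
                t_min = max(min_interval, time_ratio * mean_interval)
            else:
                t_min = min_interval
            if (i - steps[-1]) / fs < t_min:
                continue  # too soon after the previous step
        steps.append(i)
        peak_values.append(a)
    return steps
```

Because the upper threshold tracks only accepted peaks, this sketch would reject every peak after an abrupt switch to a much more vigorous gait; a practical version would need to reset or relax the thresholds, for example when the motion pattern recognizer reports a transition.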

Table 5.

Step detection results of different devices in different scenes (%).

4.4. MPR-IPDR Positioning Analyses

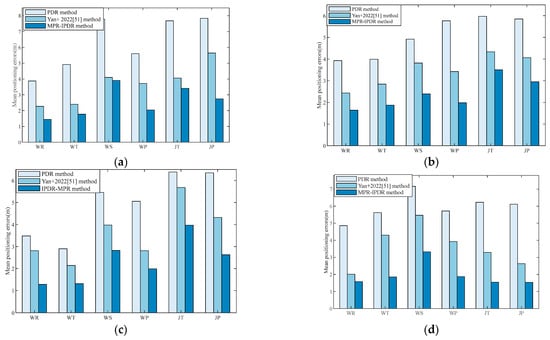

In this section, the positioning errors of the MPR-IPDR method are validated through comprehensive experiments. Figure 10 shows the mean positioning errors of six motion patterns for the traditional PDR, Yan+ 2022 [], and MPR-IPDR methods in the two scenes with the two devices. The mean positioning errors of the PDR and Yan+ 2022 [] methods are larger than those of MPR-IPDR. This is attributed to the complexity of pedestrian motion patterns during navigation, which limits the applicability of PDR in many indoor scenarios: if human motion states are not distinguished, large positioning deviations may occur. The MPR-IPDR method identifies the pedestrian’s motion pattern before performing indoor positioning. In addition, it employs the proposed double adaptive threshold step detection algorithm and an adaptive step length estimation method, which further reduce the cumulative error and improve localization robustness.
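A per-step PDR update with an adaptive step length can be sketched as follows. The Weinberg model L = k (a_max - a_min)^(1/4) is used here as one common step length estimator (Weinberg's application note appears in the reference list), but it is not necessarily the estimator used by MPR-IPDR, and the constant `k` is a hypothetical calibration value; in an MPR-based scheme it could, for instance, be chosen per recognized motion pattern. The heading convention (clockwise from north, x east, y north) and the function names are also assumptions.

```python
import math


def weinberg_step_length(acc_max, acc_min, k=0.45):
    """Weinberg-style step length: k * (a_max - a_min) ** 0.25.

    acc_max, acc_min: peak and valley of the acceleration within one step.
    k: hypothetical calibration constant; it must be fitted per user (and possibly per motion pattern).
    """
    return k * (acc_max - acc_min) ** 0.25


def pdr_update(x, y, step_length, heading_rad):
    """Dead-reckon one step; heading is measured clockwise from north (x east, y north)."""
    return (x + step_length * math.sin(heading_rad),
            y + step_length * math.cos(heading_rad))


# Example: three 0.7 m steps heading due east (pi/2 rad) from the origin
x, y = 0.0, 0.0
for _ in range(3):
    x, y = pdr_update(x, y, 0.7, math.pi / 2)
# x is now approximately 2.1 and y approximately 0
```

Each step adds its own heading and step length error to the running position, which is the cumulative error that the acoustic fixes are later used to bound.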

Figure 10.

Mean positioning errors of six motion patterns using PDR, Yan+ 2022 [], and MPR-IPDR methods: (a) using Vivo Y93s in scene 1, (b) using Vivo Y93s in scene 2, (c) using Mate60 Pro in scene 1, and (d) using Mate60 Pro in scene 2.

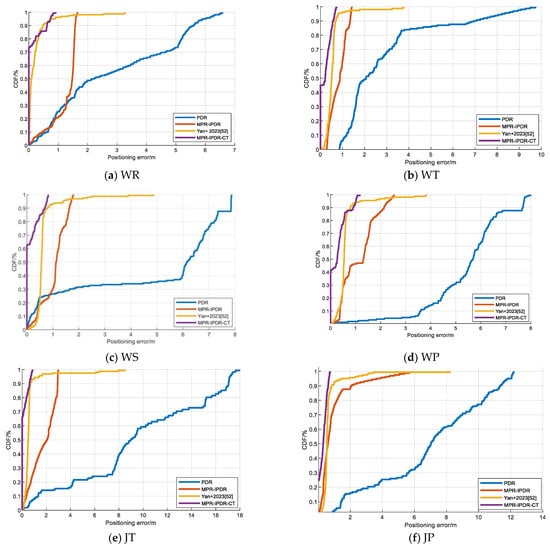

4.5. Fusion Positioning Analyses

We conducted extensive localization experiments in the two experimental scenes. Figure 11 shows the cumulative distribution functions (CDFs) of the positioning errors of the proposed MPR-IPDR-CT method and the PDR, MPR-IPDR, and Yan+ 2023 [] methods for the different motion modes in scene 1. In all six motion modes, the positioning error of the proposed MPR-IPDR-CT method is below 1.5 m with 90% probability. The MPR-IPDR-CT algorithm adapts gait detection, step length estimation, and heading estimation to the multi-modal recognition results and additionally fuses acoustic positioning to estimate the pedestrian position accurately. It thus overcomes the difficulty traditional PDR algorithms have in adapting to diverse combinations of pedestrian motion patterns, making it applicable to different indoor environments and a good solution for fusion positioning.
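Statements such as "below 1.5 m with 90% probability" are read off the empirical CDF of the per-epoch positioning errors. A minimal sketch, with hypothetical function names, is shown below; the 90% criterion corresponds to `error_at_probability(errors, 0.9) < 1.5`.

```python
import numpy as np


def empirical_cdf(errors):
    """Sorted errors and the empirical cumulative probability P(error <= e) at each."""
    e = np.sort(np.asarray(errors, dtype=float))
    p = np.arange(1, e.size + 1) / e.size
    return e, p


def error_at_probability(errors, prob=0.9):
    """Smallest observed error e with P(error <= e) >= prob, for 0 < prob <= 1."""
    e, p = empirical_cdf(errors)
    return e[np.searchsorted(p, prob)]  # first index where p >= prob
```

Plotting `p` against `e` as a step curve gives CDF plots of the kind shown in Figures 11 and 12; a curve that rises more steeply at small errors indicates a more accurate method.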

Figure 11.

The CDF of the positioning error among the PDR, MPR-PDR, Yan+ 2023 [], and the MPR-IPDR-CT methods with different motions in the first scene: (a) walking and reading, (b) walking and telephoning, (c) walking and swinging, (d) walking and pocketing, (e) jogging and telephoning, and (f) jogging and pocketing.

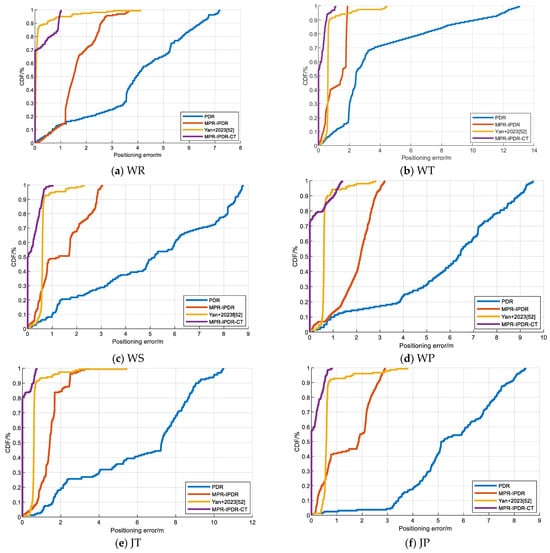

Figure 12 shows the CDFs of the positioning errors of the proposed MPR-IPDR-CT method and the PDR, MPR-IPDR, and Yan+ 2023 [] methods for the different motion modes in scene 2. For every motion mode, the CDF of the MPR-IPDR-CT method rises more steeply in the small-error range. The higher accuracy of MPR-IPDR-CT is most likely due to its combination of multi-motion pattern recognition, which accommodates different motion states, with acoustic localization, which improves positioning accuracy. This integrated approach better suppresses motion interference and positioning errors and thus achieves higher positioning accuracy under different motion modes.

Figure 12.

The CDF of the positioning error among the PDR, MPR-PDR, Yan+ 2023 [], and the MPR-IPDR-CT methods with different motions in the second scene: (a) walking and reading, (b) walking and telephoning, (c) walking and swinging, (d) walking and pocketing, (e) jogging and telephoning, and (f) jogging and pocketing.

Table 6 lists the mean and root mean square (RMS) errors of the PDR, MPR-PDR, Yan+ 2023 [], and MPR-IPDR-CT methods in scene 1. For readability, the MPR-IPDR-CT results are shown in bold. Owing to its accurate motion recognition, high adaptability to different motions, and high localization accuracy, the MPR-IPDR-CT method yields smaller mean and RMS errors than the PDR, MPR-PDR, and Yan+ 2023 [] methods for all six motion modes. The results also show that the positioning error of the proposed algorithm is significantly reduced with the assistance of the motion pattern recognition results, demonstrating that the algorithm effectively improves the positioning performance of PDR under complex conditions and has strong practicality and scalability.
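Mean and RMS errors of the kind reported in the localization error tables can be computed from time-aligned estimated and ground-truth positions as sketched below; the function name and the use of 2-D Euclidean error are assumptions.

```python
import numpy as np


def localization_errors(estimates, ground_truth):
    """Per-epoch Euclidean error, plus its mean and root mean square (RMS)."""
    est = np.asarray(estimates, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    err = np.linalg.norm(est - gt, axis=1)   # distance for each (x, y) epoch
    return err, err.mean(), np.sqrt(np.mean(err ** 2))
```

Because RMS weights large errors more heavily than the mean does, a large gap between the two values indicates occasional large deviations, for example at corners or during motion-pattern changes.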

Table 6.

Localization error of the PDR, MPR-PDR, Yan+ 2023 [], and MPR-IPDR-CT methods in the first scene (m).

Table 7 lists the mean and root mean square (RMS) errors of the PDR, MPR-PDR, Yan+ 2023 [], and MPR-IPDR-CT methods in scene 2. The MPR-IPDR-CT method achieves the lowest mean and RMS errors in every motion mode. This advantage stems from combining multi-motion pattern recognition and improved dead reckoning with acoustic localization, which effectively reduces the errors caused by complex motions and environmental disturbances and yields excellent localization performance in different scenes.

Table 7.

Localization error of the PDR, MPR-PDR, Yan+ 2023 [], and MPR-IPDR-CT methods in the second scene (m).

5. Conclusions

To address the large cumulative error and poor stability caused by relative localization and diverse motion modes, an MPR-IPDR-CT method is proposed. Within this approach, we propose an MPR-IPDR method that incorporates a multi-modal recognition model and a novel gait detection algorithm. The PCA-GWO-KELM-based multi-modal recognition model uses PCA to reduce the dimensionality of the motion features and GWO to optimize the network parameters of the KELM model. The motion pattern information output by this model helps PDR achieve accurate navigation and localization across multiple pedestrian motion states. The double adaptive threshold gait detection method uses an adaptive upper acceleration threshold and an adaptive dynamic time threshold, which filter out many pseudo peaks and improve the accuracy of gait detection. Finally, acoustic localization and PDR are fused with a particle filter (PF) to overcome the limitations of single localization techniques and further improve localization accuracy and stability. We conducted multiple experiments in different scenes with different devices. The results show that the proposed MPR-IPDR-CT method not only achieves excellent localization performance across scenes and devices but also generalizes well and is robust. In future work, we will extend the analysis to more complex pedestrian movement patterns, such as stair climbing and backward walking, to further enrich the diversity of the data. By continuously refining the experimental design and data acquisition scheme, we aim to improve the adaptability and reliability of the algorithm in complex indoor scenes.
In addition, the multipath effect in complex indoor environments may introduce measurement outliers that degrade acoustic localization accuracy. We will consider incorporating anti-interference algorithms to strengthen the suppression of multipath effects in acoustic localization and further improve the stability and accuracy of the fusion localization system.

Author Contributions

Conceptualization, Xiaoyu Ji, Xiaoyue Xu, and Suqing Yan; methodology, Xiaoyu Ji and Xiaoyue Xu; software, Xiaoyu Ji, Xiaoyue Xu, and Suqing Yan; validation, Xiaoyu Ji, Xiaoyue Xu, and Jianming Xiao; formal analysis, Xiaoyue Xu, Suqing Yan, and Qiang Fu; investigation, Jianming Xiao, Qiang Fu, and Kamarul Hawari Bin Ghazali; resources, Xiaoyu Ji, Jianming Xiao, and Kamarul Hawari Bin Ghazali; writing—original draft preparation, Xiaoyu Ji, Xiaoyue Xu, and Suqing Yan; writing—review and editing, Suqing Yan and Kamarul Hawari Bin Ghazali; funding acquisition, Suqing Yan, Xiaoyu Ji, and Qiang Fu. All authors have read and agreed to the published version of the manuscript.

Funding

This work is funded by Guangxi Science and Technology Project (GuikeZY23055048, GuikeAA23062038, GuikeAB23026120, GuikeZY22096026, GuikeAA24206043), Guangxi Science and Technology Base and Talent Special Project: Research and Application of Key Technologies for Precise Navigation (GuiKe AD25069103), Guangxi Natural Science Foundation (2024GXNSFBA010265), National Natural Science Foundation of China (U23A20280, 62161007, 62471153), Nanning Science Research and Technology Development Plan (20231029, 20231011), Guangxi Major Talent Project, Project of the Collaborative Innovation Center for BeiDou Location Service and Border and Coastal Defense Security Application, the Collaborative Innovation Center for BeiDou Location Service and Border and Coastal Defense Security Application, Graduate Student Innovation Project of Guilin University of Electronic Technology (2025YCXS038), and Innovation Project of Guangxi Graduate Education.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All test data mentioned in this paper will be made available on request to the corresponding author’s email with appropriate justification.

Conflicts of Interest

Jianming Xiao was employed by the company SGUET-Nanning E-Tech Research Institute Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Qi, L.; Liu, Y.; Yu, Y.; Chen, L.; Chen, R.Z. Current Status and Future Trends of Meter-Level Indoor Positioning Technology: A Review. Remote Sens. 2024, 16, 398.

- Wan, R.J.; Chen, Y.X.; Song, S.W.; Wang, Z.F. CSI-Based MIMO Indoor Positioning Using Attention-Aided Deep Learning. IEEE Commun. Lett. 2024, 28, 53–57.

- Lin, K.H.; Chen, H.M.; Li, G.J.; Huang, S.S. Analysis and Reduction of the Localization Error of the UWB Indoor Positioning System. In Proceedings of the 7th IEEE International Conference on Consumer Electronics—Taiwan (ICCE-Taiwan), Taoyuan, Taiwan, 28–30 September 2020.

- Ciezkowski, M.; Kociszewski, R. Fast 50 Hz Updated Static Infrared Positioning System Based on Triangulation Method. Sensors 2024, 24, 1389.

- Xiao, K.; Hao, F.Z.; Zhang, W.J.; Li, N.N.; Wang, Y.T. Research and Implementation of Indoor Positioning Algorithm Based on Bluetooth 5.1 AOA and AOD. Sensors 2024, 24, 4579.

- Li, S.H.; Tang, Z.; Kim, K.S.; Smith, J.S. On the Use and Construction of Wi-Fi Fingerprint Databases for Large-Scale Multi-Building and Multi-Floor Indoor Localization: A Case Study of the UJIIndoorLoc Database. Sensors 2024, 24, 3827.

- Ge, T. Indoor positioning design based on HCC in the background of mobile edge computing. Soft Comput. 2023, 28, 471.

- Liu, A.; Wang, J.G.; Lin, S.W.; Kong, X.Y. A Dynamic UKF-Based UWB/Wheel Odometry Tightly Coupled Approach for Indoor Positioning. Electronics 2024, 13, 1518.

- Asraf, O.; Shama, F.; Klein, I. PDRNet: A Deep-Learning Pedestrian Dead Reckoning Framework. IEEE Sens. J. 2022, 22, 4932–4939.

- Kan, R.X.; Wang, M.; Zhou, Z.; Zhang, P.; Qiu, H.B. Acoustic Signal NLOS Identification Method Based on Swarm Intelligence Optimization SVM for Indoor Acoustic Localization. Wirel. Commun. Mob. Comput. 2022, 2022, 5210388.

- Ehrlich, C.R.; Blankenbach, J. Indoor localization for pedestrians with real-time capability using multi-sensor smartphones. Geo-Spat. Inf. Sci. 2019, 22, 73–88.

- Thio, V.; Aparicio, J.; Anonsen, K.B.; Bekkeng, J.K.; Booij, W. Fusing of a Continuous Output PDR Algorithm With an Ultrasonic Positioning System. IEEE Sens. J. 2022, 22, 2464–2474.

- Wu, L.; Guo, S.L.; Han, L.; Baris, C.A. Indoor positioning method for pedestrian dead reckoning based on multi-source sensors. Measurement 2024, 229, 114416.

- Niu, C.L.; Chang, H. Research on Indoor Positioning on Inertial Navigation. In Proceedings of the 2nd IEEE International Conference on Computer and Communication Engineering Technology (CCET), Beijing, China, 16–18 August 2019; pp. 55–58.

- Diallo, A.; Konstantinidis, S.; Garbinato, B. A Pragmatic Trade-off Between Deployment Cost and Location Accuracy for Indoor Tracking in Real-Life Environments. In Proceedings of the 14th International Conference on Localization and GNSS (ICL-GNSS), Antwerp, Belgium, 25–27 June 2024.

- Zhao, G.L.; Wang, X.; Zhao, H.X.; Jiang, Z.H. An improved pedestrian dead reckoning algorithm based on smartphone built-in MEMS sensors. Aeu-Int. J. Electron. Commun. 2023, 168, 154674.

- Weinberg, H. Using the ADXL202 in Pedometer and Personal Navigation Applications. Analog. Devices 2009, 2, 1–6.

- Yu, C.; Shin, B.; Kang, C.G.; Lee, J.H.; Kyung, H.; Kim, T.; Lee, T. RF signal shape reconstruction technology on the 2D space for indoor localization. In Proceedings of the International Conference on Electronics, Information, and Communication (ICEIC), Jeju, Republic of Korea, 6–9 February 2022.

- Huang, L.; Li, H.; Li, W.K.; Wu, W.T.; Kang, X. Improvement of Pedestrian Dead Reckoning Algorithm for Indoor Positioning by using Step Length Estimation. In Proceedings of the ISPRS TC III and TC IV 14th GeoInformation for Disaster Management (Gi4DM), Beijing, China, 1–4 November 2022; pp. 19–24.

- Widdison, E.; Long, D.G. A Review of Linear Multilateration Techniques and Applications. IEEE Access 2024, 12, 26251–26266.

- Mandal, A.; Lopes, C.V.; Givargis, T. Beep: 3D Indoor Positioning Using Audible Sound. In Proceedings of the Second IEEE Consumer Communications and Networking Conference, Las Vegas, NV, USA, 6 January 2008.

- Khyzhniak, M.; Malanowski, M. Localization of an Acoustic Emission Source Based on Time Difference of Arrival. In Proceedings of the Signal Processing Symposium (SPSympo), Lodz, Poland, 20–23 September 2021; pp. 117–121.

- Urazghildiiev, I.R.; Hannay, D.E. Localizing Sources Using a Network of Synchronized Compact Arrays. IEEE J. Ocean. Eng. 2021, 46, 1302–1312.

- Liu, T.; Niu, X.J.; Kuang, J.; Cao, S.; Zhang, L.; Chen, X. Doppler Shift Mitigation in Acoustic Positioning Based on Pedestrian Dead Reckoning for Smartphone. IEEE Trans. Instrum. Meas. 2021, 70, 3010384.

- Wang, X.; Huang, Z.H.; Zheng, F.Q.; Tian, X.C. The Research of Indoor Three-Dimensional Positioning Algorithm Based on Ultra-Wideband Technology. In Proceedings of the 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; pp. 5144–5149.

- Zhu, H.Z.; Zhang, Y.X.; Liu, Z.F.; Wang, X.; Chang, S.; Chen, Y.Y. Localizing Acoustic Objects on a Single Phone. IEEE-Acm Trans. Netw. 2021, 29, 2170–2183.

- Zhang, P.; Wang, M.; Qiu, H.B.; Kan, R.X. Acoustic Localization System based on Smartphone without Time Synchronization. In Proceedings of the 5th International Conference on Information Science, Computer Technology and Transportation (ISCTT), Shenyang, China, 13–15 November 2020; pp. 475–481.

- Zhou, Z.J.; Feng, W.; Li, P.; Liu, Z.T.; Xu, X.; Yao, Y.B. A fusion method of pedestrian dead reckoning and pseudo indoor plan based on conditional random field. Measurement 2023, 207, 112417.

- Wang, J.T.; Xu, T.H.; Wang, Z.J. Adaptive Robust Unscented Kalman Filter for AUV Acoustic Navigation. Sensors 2020, 20, 60.

- Klee, U.; Gehrig, T.; McDonough, J. Kalman filters for time delay of arrival-based source localization. Eurasip J. Appl. Signal Process. 2006, 2006, 12378.

- Wang, B.Y.; Liu, X.L.; Yu, B.G.; Jia, R.C.; Gan, X.L. Pedestrian Dead Reckoning Based on Motion Mode Recognition Using a Smartphone. Sensors 2018, 18, 1811.

- Kang, W.; Han, Y. SmartPDR: Smartphone-Based Pedestrian Dead Reckoning for Indoor Localization. IEEE Sens. J. 2015, 15, 2906–2916.

- Yan, S.Q.; Xu, X.Y.; Luo, X.A.; Xiao, J.M.; Ji, Y.F.; Wang, R.R. A Positioning and Navigation Method Combining Multimotion Features Dead Reckoning with Acoustic Localization. Sensors 2023, 23, 9849.

- Luo, Y.R.; Guo, C.; Su, J.T.; Guo, W.F.; Zhang, Q. Learning-Based Complex Motion Patterns Recognition for Pedestrian Dead Reckoning. IEEE Sens. J. 2021, 21, 4280–4290.

- Zhang, L.; Huang, L.; Yi, Q.W.; Wang, X.J.; Zhang, D.W.; Zhang, G.H. Positioning Method of Pedestrian Dead Reckoning Based on Human Activity Recognition Assistance. In Proceedings of the 12th IEEE International Conference on Indoor Positioning and Indoor Navigation (IPIN), Beijing, China, 5–7 September 2022.

- Chen, X.T.; Xie, Y.X.; Zhou, Z.H.; He, Y.Y.; Wang, Q.L.; Chen, Z.M. An Indoor 3D Positioning Method Using Terrain Feature Matching for PDR Error Calibration. Electronics 2024, 13, 1468.

- Khalili, B.; Abbaspour, R.A.; Chehreghan, A.; Vesali, N. A Context-Aware Smartphone-Based 3D Indoor Positioning Using Pedestrian Dead Reckoning. Sensors 2022, 22, 9968.

- Gu, F.Q.; Khoshelham, K.; Valaee, S.; Shang, J.G.; Zhang, R. Locomotion Activity Recognition Using Stacked Denoising Autoencoders. IEEE Internet Things J. 2018, 5, 2085–2093.

- Klein, I.; Solaz, Y.; Ohayon, G. Pedestrian Dead Reckoning With Smartphone Mode Recognition. IEEE Sens. J. 2018, 18, 7577–7584.

- Guo, G.Y.; Chen, R.Z.; Yan, K.; Li, Z.; Qian, L.; Xu, S.H.; Niu, X.G.; Chen, L. Large-Scale Indoor Localization Solution for Pervasive Smartphones Using Corrected Acoustic Signals and Data-Driven PDR. IEEE Internet Things J. 2023, 10, 15338–15349.

- Song, X.Y.; Wang, M.; Qiu, H.B.; Luo, L.Y. Indoor Pedestrian Self-Positioning Based on Image Acoustic Source Impulse Using a Sensor-Rich Smartphone. Sensors 2018, 18, 4143.

- Wang, M.; Duan, N.; Zhou, Z.; Zheng, F.; Qiu, H.B.; Li, X.P.; Zhang, G.L. Indoor PDR Positioning Assisted by Acoustic Source Localization, and Pedestrian Movement Behavior Recognition, Using a Dual-Microphone Smartphone. Wirel. Commun. Mob. Comput. 2021, 2021, 9981802.

- Wei, B.; Gao, M.C.; Li, F.L.; Luo, C.W.; Wang, S.; Zhang, J. rWiFiSLAM: Effective WiFi Ranging Based SLAM System in Ambient Environments. IEEE Robot. Autom. Lett. 2024, 9, 5362–5369.

- Wu, Q.; Li, Z.K.; Shao, K.F. Location Accuracy Indicator Enhanced Method Based on MFM/PDR Integration Using Kalman Filter for Indoor Positioning. IEEE Sens. J. 2024, 24, 4831–4840.

- Naheem, K.; Kim, M.S. A Low-Cost Foot-Placed UWB and IMU Fusion-Based Indoor Pedestrian Tracking System for IoT Applications. Sensors 2022, 22, 8160.

- Jin, Z.; Li, Y.J.; Yang, Z.; Zhang, Y.F.; Cheng, Z. Real-Time Indoor Positioning Based on BLE Beacons and Pedestrian Dead Reckoning for Smartphones. Appl. Sci. 2023, 13, 4415.

- Wu, J.; Zhu, M.H.; Xiao, B.; Qiu, Y.Z. The Improved Fingerprint-Based Indoor Localization with RFID/PDR/MM Technologies. In Proceedings of the 24th IEEE International Conference on Parallel and Distributed Systems (ICPADS), Singapore, 11–13 December 2018; pp. 878–885.

- Martinelli, A.; Gao, H.; Groves, P.D.; Morosi, S. Probabilistic Context-Aware Step Length Estimation for Pedestrian Dead Reckoning. IEEE Sens. J. 2018, 18, 1600–1611.

- Kuipers, J.B. Quaternions and Rotation Sequences—A Primer with Applications to Orbits, Aerospace and Virtual Reality; Princeton University Press: Princeton, NJ, USA, 2002; p. 400.

- Nguyen, V.-H.; Do, T.C.; Ahn, K.-K. Implementing PSO-LSTM-GRU Hybrid Neural Networks for Enhanced Control and Energy Efficiency of Excavator Cylinder Displacement. Mathematics 2024, 12, 3185.

- Yan, S.; Wu, C.; Deng, H.; Luo, X.; Ji, Y.; Xiao, J. A Low-Cost and Efficient Indoor Fusion Localization Method. Sensors 2022, 22, 5505.

- Yan, S.Q.; Wu, C.P.; Luo, X.A.; Ji, Y.F.; Xiao, J.M. Multi-Information Fusion Indoor Localization Using Smartphones. Appl. Sci. 2023, 13, 3270.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).