DOCB: A Dynamic Online Cross-Batch Hard Exemplar Recall for Cross-View Geo-Localization

Abstract

1. Introduction

- A Dynamic Online Cross-Batch (DOCB) hard exemplar mining method is proposed. At each training iteration, it selects the hardest cross-batch negative exemplar for each anchor according to the current network state, so that the triplet loss receives more informative negatives and guides the network to learn more useful metric information.

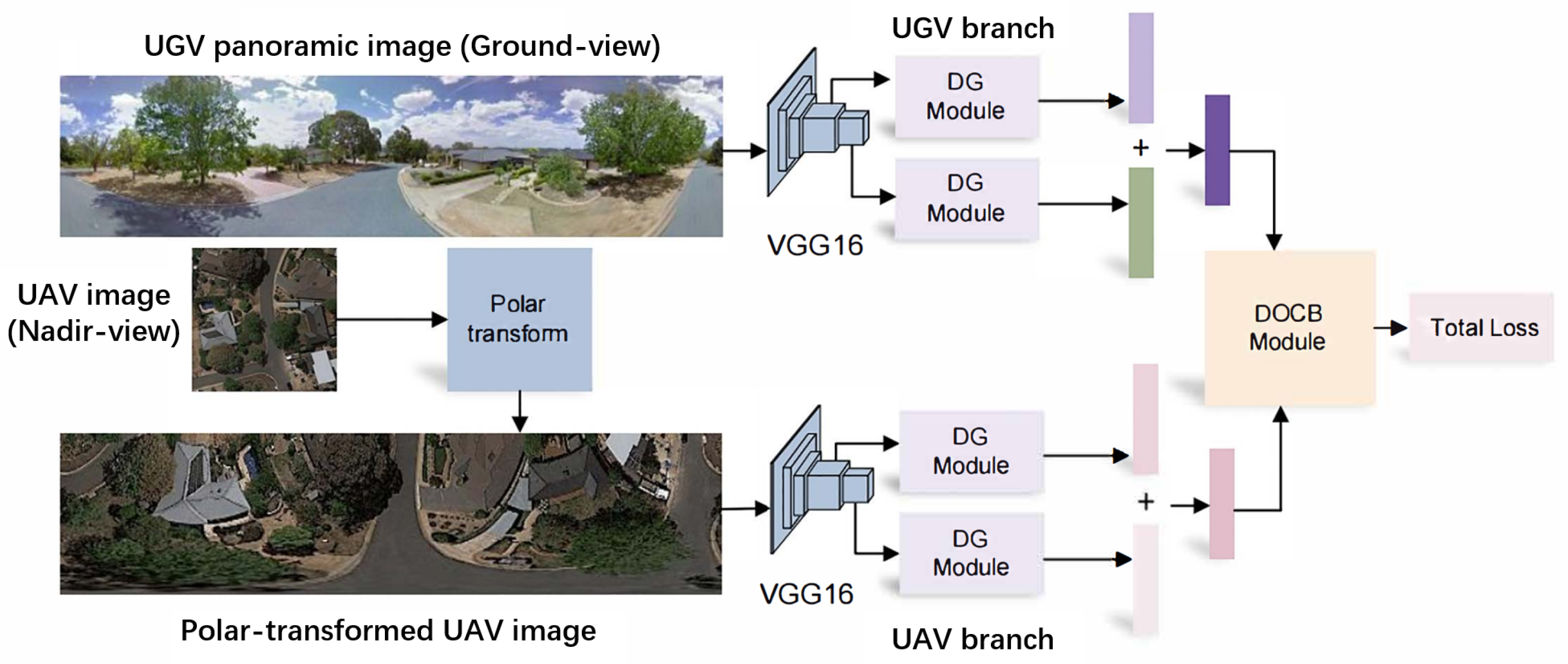

- A simple network architecture, MSFA (Multi-Scale Feature Aggregation), is proposed for cross-view image-based geo-localization. It produces a multi-scale aggregated feature by learning and fusing multiple local spatial embeddings.

- Experiments show that, with DOCB and MSFA, our method achieves a top-1 recall of 95.78% on CVUSA and 86.34% on CVACT_val. In addition, our model produces a shorter descriptor (length 512) and transfers better across datasets.
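
The idea behind the first contribution, picking for each anchor the hardest negative from outside the current batch and feeding it to a triplet loss, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the candidate pool `bank`, the function names, the squared Euclidean distance, and the plain margin-based triplet loss with `margin=0.2` are all assumptions made for illustration. In particular, the sketch takes the pool embeddings as given and does not model how DOCB keeps them consistent with the current network state.

```python
import numpy as np

def hardest_cross_batch_negatives(anchors, anchor_ids, bank, bank_ids):
    """For each anchor, find the closest pool entry that has a different location id.

    `bank` is a hypothetical pool of embeddings gathered from other batches.
    Returns (index into bank, squared distance) per anchor.
    """
    # Squared Euclidean distances, shape (num_anchors, bank_size).
    d = ((anchors[:, None, :] - bank[None, :, :]) ** 2).sum(axis=-1)
    # Entries sharing the anchor's id are positives, so exclude them.
    same = anchor_ids[:, None] == bank_ids[None, :]
    d = np.where(same, np.inf, d)
    return d.argmin(axis=1), d.min(axis=1)

def triplet_loss(anchors, positives, neg_dist_sq, margin=0.2):
    """Plain margin triplet loss (an assumed form, chosen for simplicity)."""
    pos_dist_sq = ((anchors - positives) ** 2).sum(axis=-1)
    return float(np.maximum(0.0, pos_dist_sq - neg_dist_sq + margin).mean())

if __name__ == "__main__":
    # Toy 2-D embeddings: two ground anchors and their matching aerial positives.
    anchors = np.array([[1.0, 0.0], [0.0, 1.0]])
    positives = np.array([[0.9, 0.1], [0.1, 0.9]])
    anchor_ids = np.array([0, 1])
    # Hypothetical cross-batch pool with its own location ids.
    bank = np.array([[1.0, 0.0], [0.8, 0.2], [0.0, 1.0], [-1.0, 0.0]])
    bank_ids = np.array([0, 2, 1, 3])

    idx, neg_d = hardest_cross_batch_negatives(anchors, anchor_ids, bank, bank_ids)
    print("hardest negative index per anchor:", idx.tolist())
    print("triplet loss:", triplet_loss(anchors, positives, neg_d))
```

In this toy example only the first anchor contributes to the loss, because its hardest negative lies within the margin of its positive. That is the kind of informative triplet hard exemplar mining is meant to surface.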

2. Related Work

2.1. Evolution of Feature Representation for Geo-Localization

2.2. Advances in Cross-View Geo-Localization Architectures

2.3. Progress in Metric Learning and Hard Exemplar Mining

3. The Proposed Method

3.1. Overview

3.2. Network Architecture

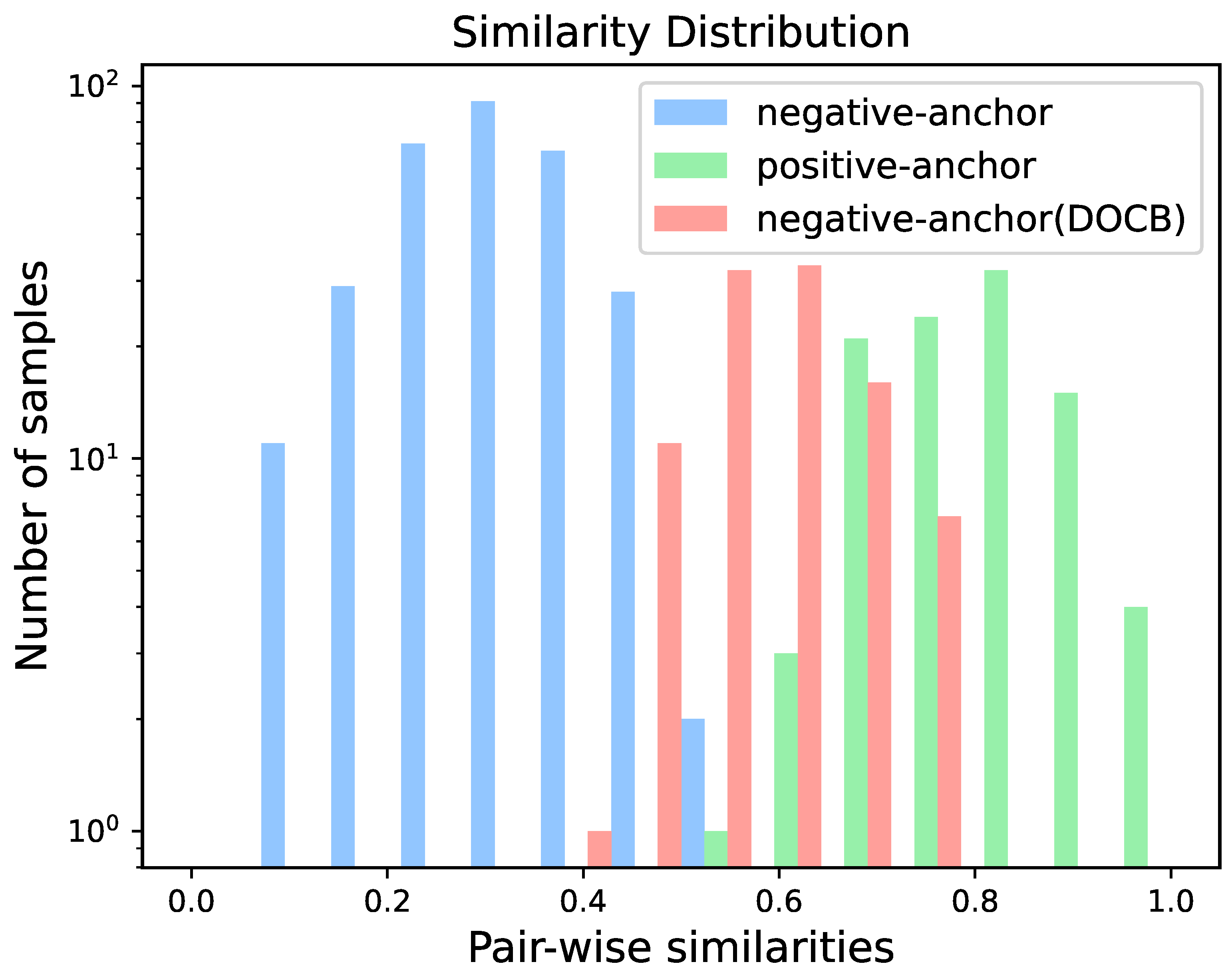

3.3. Dynamic Online Cross-Batch Hard Exemplar Mining Scheme

4. Experiments

4.1. Datasets

4.1.1. CVUSA

4.1.2. CVACT

4.2. Evaluation Protocol

4.3. Implementation Details

4.4. A Comparison with the State of the Art

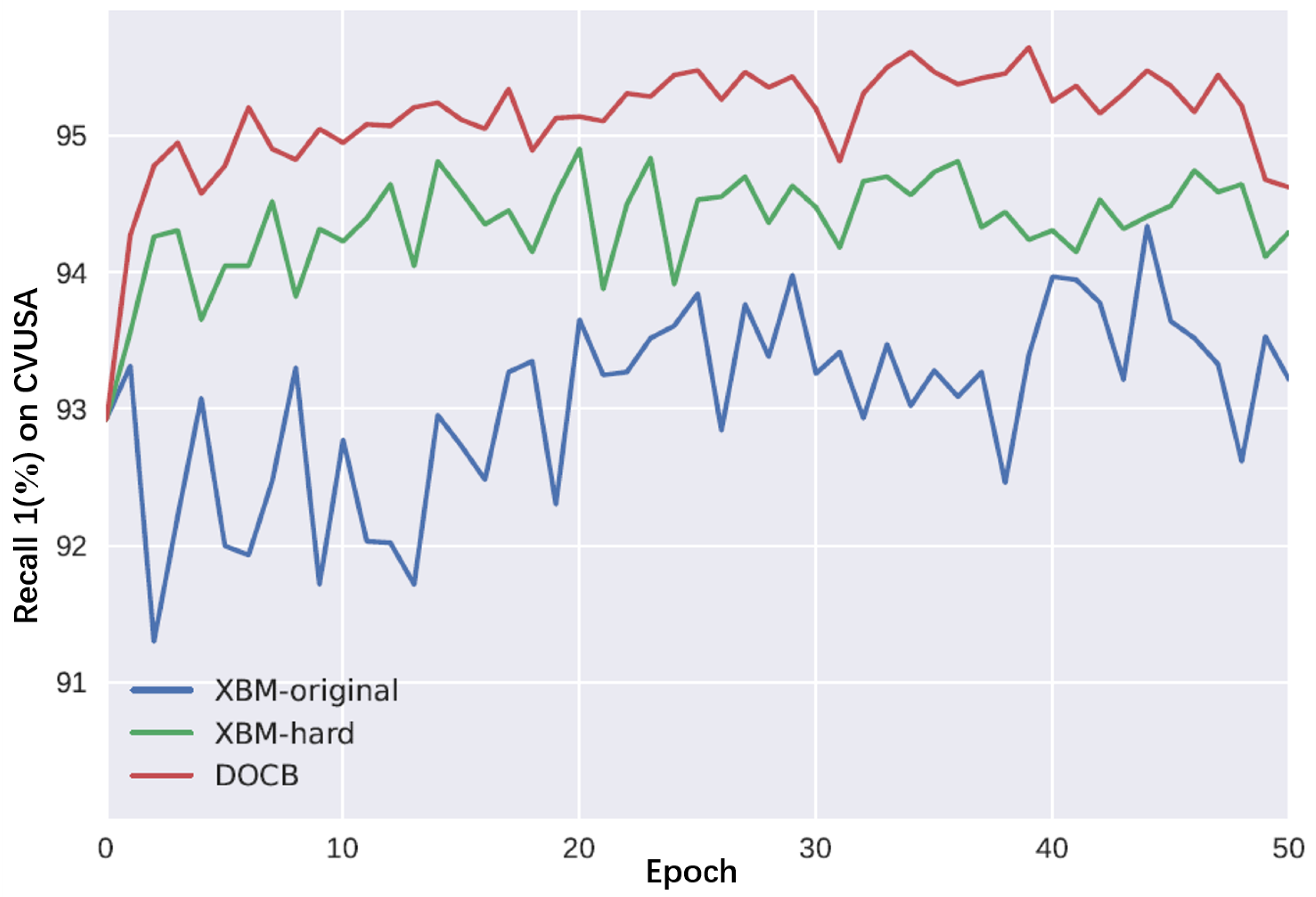

4.5. Comparison with Other Hard Exemplar Mining Methods

4.6. Computational Cost

4.7. Descriptor Length Comparison Experiment

4.8. Ablation Experiments

4.8.1. Effect of DG Module

4.8.2. Effect of Fusion Strategy

4.8.3. Effectiveness of DOCB

4.8.4. Effectiveness of Hyper-Parameter M

4.9. The Effect of Multi-Scale Aggregation and the Parallel Operation

4.10. Cross-Dataset Transferring Performance

4.11. Visualization of Retrieval Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| UGV | Unmanned Ground Vehicle |
| DOCB | Dynamic Online Cross-Batch |
| MSFA | Multi-Scale Feature Aggregation |

References

1. Shi, Y.; Yu, X.; Liu, L.; Zhang, T.; Li, H. Optimal feature transport for cross-view image geo-localization. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11990–11997.

2. Liu, L.; Li, H. Lending orientation to neural networks for cross-view geo-localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5624–5633.

3. Shi, Y.; Liu, L.; Yu, X.; Li, H. Spatial-aware feature aggregation for image based cross-view geo-localization. Adv. Neural Inf. Process. Syst. 2019, 32, 1–11.

4. Yang, H.; Lu, X.; Zhu, Y. Cross-view geo-localization with layer-to-layer transformer. Adv. Neural Inf. Process. Syst. 2021, 34, 29009–29020.

5. Wang, T.; Fan, S.; Liu, D.; Sun, C. Transformer-guided convolutional neural network for cross-view geolocalization. arXiv 2022, arXiv:2204.09967.

6. Zhu, S.; Shah, M.; Chen, C. Transgeo: Transformer is all you need for cross-view image geo-localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1162–1171.

7. Cai, S.; Guo, Y.; Khan, S.; Hu, J.; Wen, G. Ground-to-aerial image geo-localization with a hard exemplar reweighting triplet loss. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8391–8400.

8. Zhu, S.; Yang, T.; Chen, C. Revisiting street-to-aerial view image geo-localization and orientation estimation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 756–765.

9. Guo, Y.; Choi, M.; Li, K.; Boussaid, F.; Bennamoun, M. Soft exemplar highlighting for cross-view image-based geo-localization. IEEE Trans. Image Process. 2022, 31, 2094–2105.

10. Wang, X.; Zhang, H.; Huang, W.; Scott, M.R. Cross-batch memory for embedding learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 6388–6397.

11. Tan, Z.; Liu, A.; Wan, J.; Liu, H.; Lei, Z.; Guo, G.; Li, S.Z. Cross-batch hard example mining with pseudo large batch for id vs. spot face recognition. IEEE Trans. Image Process. 2022, 31, 3224–3235.

12. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

13. Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up robust features. In Proceedings of the Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Proceedings, Part I 9. Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

14. Sivic, J.; Zisserman, A. Video Google: Efficient visual search of videos. In Toward Category-Level Object Recognition; Springer: Berlin/Heidelberg, Germany, 2006; pp. 127–144.

15. Vo, N.; Jacobs, N.; Hays, J. Revisiting im2gps in the deep learning era. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2621–2630.

16. Torii, A.; Arandjelovic, R.; Sivic, J.; Okutomi, M.; Pajdla, T. 24/7 place recognition by view synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1808–1817.

17. Workman, S.; Jacobs, N. On the location dependence of convolutional neural network features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 70–78.

18. Zhou, B.; Lapedriza, A.; Khosla, A.; Oliva, A.; Torralba, A. Places: A 10 million image database for scene recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1452–1464.

19. Vo, N.N.; Hays, J. Localizing and orienting street views using overhead imagery. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 494–509.

20. Arandjelovic, R.; Gronat, P.; Torii, A.; Pajdla, T.; Sivic, J. NetVLAD: CNN architecture for weakly supervised place recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5297–5307.

21. Hu, S.; Feng, M.; Nguyen, R.M.; Lee, G.H. Cvm-net: Cross-view matching network for image-based ground-to-aerial geo-localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7258–7267.

22. Shi, Y.; Yu, X.; Campbell, D.; Li, H. Where am i looking at? Joint location and orientation estimation by cross-view matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 4064–4072.

23. Lu, X.; Luo, S.; Zhu, Y. It’s okay to be wrong: Cross-view geo-localization with step-adaptive iterative refinement. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4709313.

24. Sun, Y.; Ye, Y.; Kang, J.; Fernandez-Beltran, R.; Feng, S.; Li, X.; Luo, C.; Zhang, P.; Plaza, A. Cross-view object geo-localization in a local region with satellite imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4704716.

25. Zheng, Z.; Wei, Y.; Yang, Y. University-1652: A multi-view multi-source benchmark for drone-based geo-localization. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 1395–1403.

26. Dai, M.; Hu, J.; Zhuang, J.; Zheng, E. A transformer-based feature segmentation and region alignment method for UAV-view geo-localization. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4376–4389.

27. Zhu, R.; Yang, M.; Yin, L.; Wu, F.; Yang, Y. UAV’s status is worth considering: A fusion representations matching method for geo-localization. Sensors 2023, 23, 720.

28. Lv, H.; Zhu, H.; Zhu, R.; Wu, F.; Wang, C.; Cai, M.; Zhang, K. Direction-Guided Multi-Scale Feature Fusion Network for Geo-localization. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5622813.

29. Zhu, S.; Yang, T.; Chen, C. Vigor: Cross-view image geo-localization beyond one-to-one retrieval. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 3640–3649.

30. Wang, T.; Li, J.; Sun, C. DeHi: A decoupled hierarchical architecture for unaligned ground-to-aerial geo-localization. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 1927–1940.

31. Ge, F.; Zhang, Y.; Liu, Y.; Wang, G.; Coleman, S.; Kerr, D.; Wang, L. Multibranch joint representation learning based on information fusion strategy for cross-view geo-localization. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5909516.

32. Li, P.; Pan, P.; Liu, P.; Xu, M.; Yang, Y. Hierarchical temporal modeling with mutual distance matching for video based person re-identification. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 503–511.

33. Duan, Y.; Lu, J.; Feng, J.; Zhou, J. Deep localized metric learning. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 2644–2656.

34. Shi, Y.; Li, H. Beyond cross-view image retrieval: Highly accurate vehicle localization using satellite image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17010–17020.

35. Workman, S.; Souvenir, R.; Jacobs, N. Wide-area image geolocalization with aerial reference imagery. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3961–3969.

36. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255.

37. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

38. Wang, T.; Zheng, Z.; Yan, C.; Zhang, J.; Sun, Y.; Zheng, B.; Yang, Y. Each part matters: Local patterns facilitate cross-view geo-localization. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 867–879.

39. Zhang, X.; Meng, X.; Yin, H.; Wang, Y.; Yue, Y.; Xing, Y.; Zhang, Y. SSA-Net: Spatial scale attention network for image-based geo-localization. IEEE Geosci. Remote Sens. Lett. 2021, 19, 8022905.

40. Lin, J.; Zheng, Z.; Zhong, Z.; Luo, Z.; Li, S.; Yang, Y.; Sebe, N. Joint representation learning and keypoint detection for cross-view geo-localization. IEEE Trans. Image Process. 2022, 31, 3780–3792.

41. Toker, A.; Zhou, Q.; Maximov, M.; Leal-Taixé, L. Coming down to earth: Satellite-to-street view synthesis for geo-localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 6488–6497.

42. Tian, Y.; Deng, X.; Zhu, Y.; Newsam, S. Cross-time and orientation-invariant overhead image geolocalization using deep local features. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 2512–2520.

43. Li, J.; Yang, C.; Qi, B.; Zhu, M.; Wu, N. 4scig: A four-branch framework to reduce the interference of sky area in cross-view image geo-localization. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4703818.

44. Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 510–519.

| Model | CVUSA r@1 | CVUSA r@5 | CVUSA r@1% | CVACT_val r@1 | CVACT_val r@5 | CVACT_val r@1% | CVACT_test r@1 | CVACT_test r@5 | CVACT_test r@1% |
|---|---|---|---|---|---|---|---|---|---|
| CVM-Net [21] | 22.47 | 49.98 | 89.62 | 20.15 | 45.00 | 87.57 | 5.41 | 14.79 | 54.53 |
| Liu and Li [2] | 40.79 | 66.82 | 96.12 | 46.96 | 68.28 | 92.01 | 19.21 | 35.97 | 60.69 |
| CVFT [1] | 61.43 | 84.69 | 99.02 | 61.05 | 81.33 | 95.93 | 26.12 | 45.33 | 71.69 |
| LPN (PT) [38] | 85.79 | 95.38 | 99.41 | 79.99 | 90.63 | 97.03 | - | - | - |
| SAFA + LPN (PT) [38] | 92.83 | 98.00 | 99.78 | 83.66 | 94.14 | 98.41 | - | - | - |
| SAFA (PT) [3] | 89.84 | 96.93 | 99.64 | 81.03 | 92.8 | 98.17 | 55.50 | 79.94 | 94.49 |
| SAFA + 4SCIG [43] | 92.91 | 98.15 | 99.79 | 83.18 | 93.35 | 99.30 | - | - | - |
| DSM (PT) [22] | 91.96 | 97.50 | 99.67 | 82.49 | 92.44 | 97.32 | 35.63 | 60.07 | 84.75 |
| CDE (PT) [41] | 92.56 | 97.55 | 99.57 | 83.28 | 93.57 | 98.22 | 61.29 | 85.13 | 98.32 |
| LPN + USAM (PT) [40] | 91.22 | - | 99.67 | 82.02 | - | 98.18 | - | - | - |
| L2LTR (PT) [4] | 94.05 | 98.27 | 99.67 | 84.89 | 94.59 | 98.37 | 60.72 | 85.85 | 96.12 |
| L2LTR + 4SCIG [43] | 93.82 | 98.62 | 99.70 | 81.54 | 93.11 | 97.95 | - | - | - |
| TransGeo [6] | 94.08 | 98.36 | 99.04 | - | - | - | - | - | - |
| TransGeo + 4SCIG [43] | 91.10 | 98.74 | 99.81 | 83.73 | 94.41 | 98.41 | - | - | - |
| TransGCNN (PT) [5] | 94.15 | 98.21 | 99.79 | 84.92 | 94.46 | 98.36 | - | - | - |
| DeHi [30] | 94.34 | 98.63 | 99.82 | 84.96 | 94.48 | 98.58 | - | - | - |
| MSFA (PT) (Ours) | 94.38 | 98.32 | 99.71 | 85.53 | 94.39 | 98.37 | 63.04 | 86.12 | 96.10 |
| MSFA (PT) + DOCB (Ours) | 95.78 | 98.50 | 99.67 | 86.34 | 94.42 | 98.24 | 63.90 | 86.95 | 95.99 |
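
For readers unfamiliar with the r@1, r@5, and r@1% columns above, the following sketch shows one common way such recall figures are computed for retrieval. It is an illustration under stated assumptions rather than the evaluation code used in this work: the function names are hypothetical, similarity is taken as cosine similarity between descriptors, and the rounding rule for the top-1% cutoff (`max(1, int(N * percent / 100))`) is an assumption, since implementations differ on this detail.

```python
import numpy as np

def recall_at_k(query_emb, ref_emb, gt_index, k):
    """Fraction of queries whose true reference is among the k most similar references."""
    # Cosine similarity via dot products of L2-normalised descriptors.
    q = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    r = ref_emb / np.linalg.norm(ref_emb, axis=1, keepdims=True)
    sim = q @ r.T
    # Similarity of each query to its ground-truth reference.
    gt_sim = sim[np.arange(len(q)), gt_index]
    # Rank of the ground truth = number of references strictly more similar.
    rank = (sim > gt_sim[:, None]).sum(axis=1)
    return float((rank < k).mean())

def recall_at_top_percent(query_emb, ref_emb, gt_index, percent=1.0):
    """Recall within the top `percent` of the reference set (cutoff rounding is assumed)."""
    k = max(1, int(len(ref_emb) * percent / 100.0))
    return recall_at_k(query_emb, ref_emb, gt_index, k)

if __name__ == "__main__":
    # Toy example: three ground queries, three aerial references.
    queries = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
    refs = np.array([[1.0, 0.1], [1.0, 0.2], [0.0, 1.0]])
    gt = np.array([0, 2, 0])  # index of the matching reference for each query
    print("r@1:", recall_at_k(queries, refs, gt, k=1))
    print("r@2:", recall_at_k(queries, refs, gt, k=2))
```

In the toy data, the third query ranks a non-matching reference first, so it counts as a miss at k = 1 but as a hit at k = 2.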

| Method | Batch Size | r@1 |
|---|---|---|
| DSM [22] + SEH [9] | 30 | 94.46 |
| DSM [22] + SEH [9] | 90 | 94.91 |
| DSM [22] + SEH [9] | 120 | 95.11 |
| DSM [22] + DOCB | 32 | 95.02 |
| MSFA + XBM-hard | 32 | 94.90 |
| MSFA + Global mining [8] | 32 | 94.74 |
| MSFA + DOCB (Ours) | 32 | 95.78 |

| Model | Param (M) | GFLOPs | Inference Time (Batch) (ms) | r@1 |
|---|---|---|---|---|
| SAFA [3] | 29.50 | 42.24 | 110 | 89.84 |
| DSM [22] | 17.90 | - | - | 91.96 |
| L2LTR [4] | 195.90 | - | - | 94.05 |
| TransGCNN [5] | 87.80 | - | - | 94.15 |
| TransGeo [6] | 44.8 | 11.32 | 99 | 94.08 |
| MSFA (Ours) | 38.90 | 40.68 | 108 | 94.38 |
| MSFA + DOCB (Ours) | 38.90 | 40.68 | 108 | 95.39 |

| Model | Descriptor Length | r@1 |
|---|---|---|
| SAFA [3] | 4096 | 89.84 |
| DSM [22] | 4096 | 91.96 |
| L2LTR [4] | 768 | 94.05 |
| TransGeo [6] | 1000 | 94.08 |
| MSFA (Ours) | 512 | 94.38 |

| Model | r@1 (%) |
|---|---|
|  | 65.74 |
|  | 89.84 |
|  | 93.29 |

| Model | Fusion Strategy | r@1 |
|---|---|---|
|  | none | 93.29 |
|  | concatenate | 93.82 |
|  | summation | 93.92 |
|  | attention + summation | 94.12 |
|  | summation | 94.38 |

| Method | Batch Size | r@1 |
|---|---|---|
|  | 32 | 94.38 |
|  | 32 | 94.52 |
|  | 32 | 95.41 |
|  | 32 | 95.78 |

| Dataset | M | r@1 () | r@1 |
|---|---|---|---|
| CVUSA | 20 |  | 95.12 |
| CVUSA | 200 | 93.82 | 95.46 |
| CVUSA | 1000 |  | 95.78 |
| CVACT_val | 20 |  | 86.34 |
| CVACT_val | 200 | 85.15 | 86.26 |
| CVACT_val | 1000 |  | 85.22 |

| Model | Multi-Scale | Parallel | r@1 |
|---|---|---|---|
|  | No | No | 93.29 |
|  | Yes | No | 94.02 |
|  | No | Yes | 93.81 |
|  | Yes | Yes | 94.38 |

| Task | Model | r@1 | r@5 | r@1% |
|---|---|---|---|---|
| CVUSA→CVACT | SAFA [3] | 30.40 | 52.93 | 85.82 |
| CVUSA→CVACT | DSM [22] | 33.66 | 52.17 | 79.67 |
| CVUSA→CVACT | L2LTR [4] | 47.55 | 70.58 | 91.39 |
| CVUSA→CVACT | MSFA (Ours) | 53.67 | 75.02 | 93.26 |
| CVACT→CVUSA | SAFA [3] | 21.45 | 36.55 | 69.83 |
| CVACT→CVUSA | DSM [22] | 18.47 | 34.46 | 69.01 |
| CVACT→CVUSA | L2LTR [4] | 33.00 | 51.87 | 84.79 |
| CVACT→CVUSA | MSFA (Ours) | 44.17 | 63.47 | 89.33 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fan, W.; Tian, X.; Huang, L.; Zhang, X.; Wang, F. DOCB: A Dynamic Online Cross-Batch Hard Exemplar Recall for Cross-View Geo-Localization. ISPRS Int. J. Geo-Inf. 2025, 14, 418. https://doi.org/10.3390/ijgi14110418
