1. Introduction

General-purpose code is typically written in formal programming languages such as Python, C++, or Java, and is used for data processing, algorithm implementation, and multitask operations such as network communication [1]. Users translate logical expressions into executable instructions through programming, enabling the processing of diverse data types including text, structured data, and imagery. However, the rapid growth of high-resolution remote sensing imagery and crowdsourced spatiotemporal data has imposed greater demands on computational capacity and methodological customization in geospatial information analysis, particularly for tasks involving indexing, processing, and analysis of heterogeneous geospatial data. Geospatial data types are inherently complex, encompassing remote sensing imagery, vector layers, and point clouds. These data involve various geometric structures (points, lines, polygons, volumes) and semantic components such as attributes, metadata, and temporal information [2,3]. Spatial analysis commonly entails dozens of operations, including buffer generation, point clustering, spatial interpolation, spatiotemporal trajectory analysis, isopleth and isochrone generation, linear referencing, geometric merging and clipping, and topological relationship evaluation [4]. Effective geospatial data processing requires integrated consideration of spatial reference system definition and transformation, spatial indexing structures (e.g., R-trees, Quad-trees), validation of geometric and topological correctness, and the modeling and management of spatial databases [5]. Moreover, modern geospatial applications demand the integration of functionalities such as basemap services (e.g., OpenStreetMap, Mapbox), vector rendering, user interaction, heatmaps, trajectory animation, and three-dimensional visualization [6,7]. Consequently, the foundational libraries of conventional general-purpose languages are increasingly insufficient for addressing the complexities of contemporary geospatial analysis.

Although geospatial analysis platforms such as ArcGIS and QGIS have integrated a wide range of built-in functions, they primarily rely on graphical user interfaces (GUIs) for point-and-click operations. While these interfaces are user-friendly, they present limitations in terms of workflow customization, task reproducibility, and methodological dissemination [8,9]. In contrast, code-driven approaches offer greater flexibility and reusability. Users can automate complex tasks and enable cross-context reuse through scripting, thereby facilitating the efficient dissemination and sharing of GIS methodologies. Meanwhile, cloud-based platforms such as Google Earth Engine (GEE) provide JavaScript and Python APIs that support large-scale online analysis of remote sensing and spatiotemporal data [10]. However, the underlying computational logic of these platforms depends on pre-packaged functions and remote execution environments, which restrict fine-grained control over processing workflows. As a result, they struggle to meet demands for local deployment, custom operator definition, and data privacy, particularly in scenarios requiring enhanced security or functional extensibility. In recent years, the rise of WebGIS has been driving a paradigm shift in spatial analysis toward browser-based execution. In applications such as urban governance, disaster response, agricultural monitoring, ecological surveillance, and public participation, users increasingly expect an integrated, browser-based environment for data loading, analysis, and visualization that delivers a lightweight, WYSIWYG (what-you-see-is-what-you-get) experience [11]. This trend is propelling the evolution of geospatial computing from traditional desktop and server paradigms toward “computable WebGIS.” The browser is increasingly serving as a lightweight yet versatile computational terminal, opening new possibilities for frontend spatial modeling and visualization.

Against this backdrop, JavaScript has emerged as a key language for geospatial analysis and visualization, owing to its native browser execution capability and mature frontend ecosystem. Compared to languages such as Python (e.g., GeoPandas, Shapely, Rasterio), R, or MATLAB, JavaScript can run directly in the frontend, eliminating the need for environment setup (Python, for example, requires installing an interpreter and libraries). This makes it particularly well-suited for lightweight analysis workflows with low resource overhead and strong cross-platform compatibility [12]. For spatial tasks, the open-source community has developed a rich ecosystem of JavaScript-based geospatial tools, which can be broadly categorized into two types. The first category focuses on spatial analysis and computation. For instance, Turf.js offers operations such as buffering, merging, clipping, and distance measurement; JSTS supports topological geometry processing; and Geolib enables precise calculations of distances and angles. The second category centers on map visualization. Libraries such as Leaflet and OpenLayers provide functionalities for layer management, plugin integration, and interactive user interfaces, significantly accelerating the shift of GIS capabilities to the Web [13]. In this paper, we collectively refer to these tools as “JavaScript-based Geospatial Code”, which enables the execution of core geospatial analysis and visualization tasks without requiring backend services. These tools are particularly suitable for small-to-medium-scale data processing, prototype development, and educational demonstrations. While JavaScript may fall short of Python in terms of large-scale computation or deep learning integration, it offers distinct advantages in frontend spatial computing and method sharing due to its excellent platform compatibility, low development barrier, and high reusability [14].

Authoring JavaScript-based geospatial code requires not only general programming skills but also domain-specific knowledge of spatial data types, core library APIs, spatial computation logic, and visualization techniques—posing a significantly higher barrier to entry than conventional coding tasks [

15]. As GIS increasingly converges with fields such as remote sensing, urban studies, and environmental science, a growing number of non-expert users are eager to engage in geospatial analysis and application development. However, many of them encounter substantial difficulties in modeling and implementation, highlighting the urgent need for automated tools to lower learning and execution costs. In recent years, large language models (LLMs), empowered by massive code corpora and Transformer-based architectures, have achieved remarkable progress in natural language-driven code generation tasks. Models such as GPT-4o, DeepSeek, Claude, and LLaMA are capable of translating natural language prompts directly into executable program code [

16]. Domain-specific models such as DeepSeek Coder, Qwen 2.5-Coder, and Code LLaMA have further enhanced accuracy and robustness, offering feasible solutions for automated code generation in geospatial tasks [

17]. However, unlike general-purpose coding tasks, geospatial code generation entails elevated demands in terms of semantic understanding, library function invocation, and structural organization—challenges that are particularly pronounced in the JavaScript environment. In contrast to backend languages such as Python and Java, which are primarily used for building and managing server-side logic, the JavaScript ecosystem is characterized by fragmented function interfaces, inconsistent documentation standards, and strong dependence on specific browsers and operating systems. Moreover, it often requires the tight integration of map rendering, spatial computation, and user interaction logic, thereby imposing stricter demands on semantic precision and modular organization [

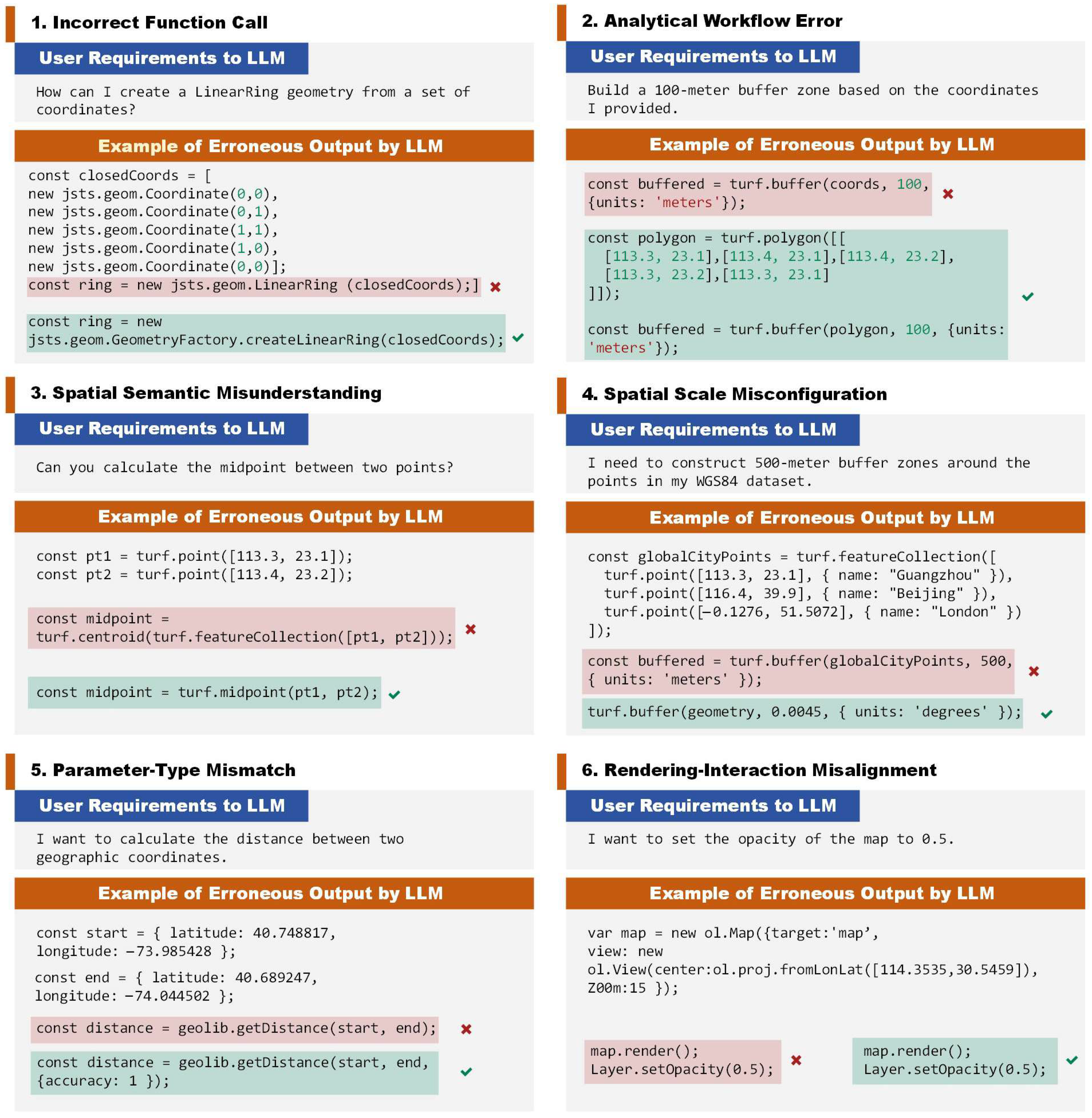

18]. Current mainstream LLMs frequently exhibit hallucination issues when generating JavaScript-based geospatial code. These manifest as incorrect function calls, spatial semantic misunderstandings, flawed analytical workflows, misaligned rendering–interaction logic, parameter-type mismatches, and spatial scale misconfigurations (

Figure 1), often resulting in erroneous or non-executable outputs.

This raises a critical question: how well do current LLMs actually perform in generating JavaScript-based geospatial code? Without systematic evaluation, direct application of these models may result in code with structural flaws, logical inconsistencies, or execution failures, ultimately compromising the reliability of geospatial analyses. Although LLMs have demonstrated strong performance on general code benchmarks such as HumanEval and MBPP [19], these benchmarks overlook the inherent complexity of geospatial tasks and the unique characteristics of the JavaScript frontend ecosystem, making them insufficient for assessing real-world usability and stability in spatial contexts. Existing studies have made preliminary attempts to evaluate geospatial code generation, yet significant limitations remain. For instance, GeoCode-Bench and GeoCode-Eval proposed by Wuhan University [20], and the GeoSpatial-Code-LLMs Dataset developed by Wrocław University of Science and Technology, primarily focus on Python or GEE-based code, with limited attention to JavaScript-centric scenarios. Specifically, GeoCode-Bench and GeoCode-Eval rely on manual scoring, resulting in high evaluation costs, subjectivity, and limited reproducibility. While the GeoSpatial-Code-LLMs Dataset introduces automation to some extent, it suffers from a small sample size (approximately 40 examples) and lacks coverage of multimodal data structures such as vectors and rasters. Moreover, AutoGEEval and AutoGEEval++ have established evaluation pipelines for the GEE platform, yet they fail to address browser-based execution environments or the distinctive requirements of JavaScript frontend tasks [21,22]. Therefore, there is an urgent need to develop an automated, end-to-end evaluation pipeline for systematically assessing the performance of mainstream LLMs in JavaScript-based geospatial code generation. Such a framework is essential for identifying model limitations and appropriate application scopes, guiding future optimization and fine-tuning efforts, and laying the theoretical and technical foundation for building low-barrier, high-efficiency geospatial code generation tools.

To address the aforementioned challenges, this study proposes and implements GeoJSEval, an LLM evaluation framework designed for unit-level function generation assessment. GeoJSEval establishes an automated and structured evaluation system for JavaScript-based geospatial code. As illustrated in Figure 2, GeoJSEval comprises three core components: the GeoJSEval-Bench test suite, the Submission Program, and the Judge Program. The GeoJSEval-Bench module (Figure 2a) is constructed based on the official documentation of five mainstream JavaScript geospatial libraries: Turf.js, JSTS, Geolib, Leaflet, and OpenLayers. The benchmark contains a total of 432 function-level tasks and 2071 structured test cases. All test cases were automatically generated using multi-round prompting strategies with Claude Sonnet 4 and subsequently verified and validated by domain experts to ensure correctness and executability. Each test case encompasses three major components (function semantics, execution configuration, and evaluation parsing) and includes seven key metadata fields: function_header, reference_code, parameters_list, output_type, eval_methods, output_path, and expected_answer. The benchmark spans 25 data types supported across the five libraries, including geometric objects, map elements, numerical values, and boolean types, which gives it high representativeness and diversity. These five libraries are widely adopted and well-established in both academic research and industrial practice. Their collective functionality reflects the mainstream capabilities of the JavaScript ecosystem in geospatial computation and visualization, providing a sufficiently comprehensive foundation for conducting rigorous benchmarking studies. The Submission Program (Figure 2b) uses the function header as a core prompt to guide the evaluated LLM in generating a complete function implementation. The system then injects predefined test parameters and executes the generated code, saving the output to a designated path for downstream evaluation. The Judge Program (Figure 2c) automatically selects the appropriate comparison strategy based on the output type, enabling systematic evaluation of code correctness and executability. GeoJSEval also integrates resource and performance monitoring modules to track token usage, response time, and code length, allowing the derivation of runtime metrics such as Inference Efficiency, Token Efficiency, and Code Efficiency. Additionally, the framework incorporates an error detection mechanism that automatically identifies common failure patterns, including syntax errors, type mismatches, attribute errors, and runtime exceptions, providing quantitative insights into model performance. In the experimental evaluation, we systematically assess a total of 20 state-of-the-art LLMs: 9 general non-reasoning models, 3 reasoning-augmented models, 5 code-centric models, and 1 geospatial-specialized model, GeoCode-GPT [20]. The results reveal significant performance disparities, structural limitations, and potential optimization directions in the ability of current LLMs to generate JavaScript geospatial code.
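The error detection step mentioned above can be pictured with a small sketch. It assumes a hypothetical helper `classifyRun` and a hypothetical mapping from JavaScript error classes to the four failure patterns (for example, treating `TypeError` as the JavaScript analogue of an attribute error); the framework's actual rules may differ.

```javascript
// Hypothetical labels: the mapping from JavaScript error classes to these
// categories is an assumption made for illustration only.
function classifyRun(code, name, args, expectedType) {
  let fn;
  try {
    // Compiling the generated source surfaces syntax errors before execution.
    fn = new Function(`${code}; return ${name};`)();
  } catch (err) {
    return err instanceof SyntaxError ? "syntax_error" : "runtime_exception";
  }
  let value;
  try {
    value = fn(...args);
  } catch (err) {
    // Reading a property of undefined/null raises TypeError in JavaScript;
    // this sketch counts it as an attribute error.
    return err instanceof TypeError ? "attribute_error" : "runtime_exception";
  }
  // The code ran; check the returned type against the expected output type.
  return typeof value === expectedType ? "ok" : "type_mismatch";
}

// Five toy "generated" snippets, one per outcome.
const runs = [
  ["function add(a, b) { return a + b; }", "add", [2, 3], "number"],
  ["function add(a, b { return a + b; }", "add", [2, 3], "number"],
  ["function center(m) { return m.view.center; }", "center", [{}], "object"],
  ["function count() { return '5'; }", "count", [], "number"],
  ["function fail() { throw new RangeError('bad radius'); }", "fail", [], "number"],
];

// Tally the labels to obtain an error-type distribution.
const tally = {};
for (const run of runs) {
  const label = classifyRun(...run);
  tally[label] = (tally[label] || 0) + 1;
}
console.log(tally);
```

Counting labels over all test cases gives the kind of error-type distribution that the evaluation reports per model.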

The main contributions of this study [23] are summarized as follows:

We propose GeoJSEval, the first automated evaluation framework for assessing LLMs in JavaScript-based geospatial code generation. The framework enables a fully automated, structured, and extensible end-to-end pipeline—from prompt input and code generation to execution, result comparison, and error diagnosis—addressing the current lack of standardized evaluation tools in this domain.

We construct an open-source GeoJSEval-Bench, a comprehensive benchmark consisting of 432 function-level test tasks and 2071 parameterized cases, covering 25 types of JavaScript-based geospatial data. The benchmark systematically addresses core spatial analysis functions, enabling robust assessment of LLM performance in geospatial code generation.

We conduct a systematic evaluation and comparative analysis of 20 representative LLMs across four model families. The evaluation quantifies performance across multiple dimensions—including execution pass rates, accuracy, resource consumption, and error type distribution—revealing critical bottlenecks in spatial semantic understanding and frontend ecosystem adaptation, and offering insights for future model optimization.

The remainder of this paper is organized as follows:

Section 2 reviews related work on geospatial code, code generation tasks, and LLM-based code evaluation.

Section 3 presents the task modeling, module design, construction method, and construction results of the GeoJSEval-Bench test suite.

Section 4 details the overall evaluation pipeline of the GeoJSEval framework, including the implementation of the submission and judge programs.

Section 5 describes the evaluated models, experimental setup, and the multidimensional evaluation metrics.

Section 6 presents a systematic analysis and multidimensional comparison of the evaluation results.

Section 7 concludes the study by summarizing key contributions, discussing limitations, and outlining future research directions.

3. GeoJSEval-Bench

This study presents GeoJSEval-Bench, a standardized benchmark suite designed for unit-level evaluation of geospatial function calls. It aims to assess the capability of LLMs in generating JavaScript-based geospatial code. The benchmark is constructed from the official API documentation of five representative open-source JavaScript geospatial libraries (Turf.js, JSTS, Geolib, Leaflet, and OpenLayers), covering core task modules ranging from spatial computation to map visualization. These libraries fall into two functional groups. The first group focuses on spatial analysis and geometric computation. Turf.js offers essential spatial analysis functions such as buffering, union, clipping, and distance calculation, effectively covering core geoprocessing operations in frontend environments. JSTS specializes in topologically correct geometric operations, supporting high-precision analysis for complex geometries. Geolib focuses on point-level operations in latitude–longitude space, providing precise distance calculation, azimuth determination, and coordinate transformation. It is particularly valuable in location-based services and trajectory analysis. The second group comprises libraries for map visualization and user interaction. Leaflet, a lightweight web mapping framework, offers core APIs for map loading, tile layer control, event handling, and marker rendering. It is widely used in developing small to medium-scale interactive web maps. In contrast, OpenLayers provides more comprehensive functionalities, including support for various map service protocols, coordinate projection transformations, vector styling, and customizable interaction logic, making it a key tool for building advanced WebGIS clients. Based on the function systems of these libraries, GeoJSEval-Bench contains 432 function-level test tasks, expanded into 2071 structured test cases and spanning 25 data types, including map objects, layer objects, strings, and numerical values. This section provides a detailed description of the unit-level task modeling, module design, construction methodology, and the final composition of the benchmark.

3.1. Task Modeling

The unit-level testing task ($T_{\text{unit}}$) proposed in GeoJSEval is designed to perform fine-grained evaluation of LLMs in terms of their ability to understand and generate function calls for each API function defined in five representative JavaScript geospatial libraries. This includes assessing their comprehension of function semantics, parameter structure, and input-output specifications. Specifically, each task evaluates whether a model can, given a known function header, accurately reconstruct the intended call semantics, generate a valid parameter structure, and synthesize a syntactically correct, semantically aligned, and executable JavaScript code snippet that effectively invokes the target function $f$. This process simulates a typical developer workflow (reading API documentation and writing invocation code) and comprehensively assesses the model’s capability chain from function comprehension to code construction and finally behavioral execution. Fundamentally, this task examines the model’s code generation ability at the finest operational granularity, a single function call, serving as a foundational measure of an LLM’s capacity for function-level behavioral modeling.

Let the set of functions provided in the official documentation of the five mainstream JavaScript geospatial libraries be denoted as:

$$F = \{f_1, f_2, \ldots, f_N\}$$

For each model under evaluation, the task is to generate, for every $f_i \in F$, a code snippet $c_i$ that conforms to JavaScript syntax specifications and can be successfully executed in a real runtime environment:

$$T_{\text{unit}}:\ \forall f_i \in F,\ \exists\, c_i \in \mathcal{C}_{\mathrm{JS}}\ \ \text{s.t.}\ \ \mathrm{Exec}(c_i, f_i)\ \text{completes without error}$$

where $\mathcal{C}_{\mathrm{JS}}$ is the set of syntactically valid JavaScript programs. GeoJSEval formalizes each function-level test task as the following function mapping process:

$$h_i \xrightarrow{\ M\ } c_i \xrightarrow{\ \mathrm{Exec}\ } y_i = \mathrm{Exec}(c_i, f_i), \qquad \mathrm{Judge}(y_i, a_i) \in \{0, 1\}$$

Specifically, $h_i$ denotes the function header, including the function name and parameter signature. $M$ refers to the large language model, which takes $h_i$ as input and produces a function call code snippet $c_i$. The symbol $f_i$ represents the target test function, and $y_i$ denotes the execution result of the generated code $c_i$ applied to $f_i$. The expected reference output is denoted by $a_i$. The module Exec represents the code execution engine, while Judge is the evaluation module responsible for determining whether $y_i$ and $a_i$ are consistent. Furthermore, GeoJSEval defines a task consistency function

$$\mathrm{Cons}(M, T) = \mathbb{1}\left[\forall\, t \in T:\ y_t = a_t\right]$$

to assess whether the model behavior aligns with expectations over a test set $T$. The equality operator “=” used in this comparison may represent strict equivalence, floating-point tolerance, or set-based inclusion, depending on the semantic consistency requirements of the task.
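The mapping from function header to model, execution, and judgment can be sketched in a few lines. This is an illustration under stated assumptions, not the framework's implementation: `mockModel` is a hypothetical stand-in for the evaluated LLM, `planarDistance` is an invented target function, and the three comparators are one possible reading of strict equivalence, floating-point tolerance, and set-based inclusion.

```javascript
// Hypothetical stand-in for the LLM: maps a function header to a code string.
// A real submission program would call a model API here.
function mockModel(header) {
  return `function ${header.name}(${header.params.join(", ")}) {
    return Math.hypot(x2 - x1, y2 - y1);
  }`;
}

// Exec: compile the generated source and call it with the injected parameters.
// Exceptions are captured so that one failing case does not abort the run.
function exec(code, name, args) {
  try {
    const fn = new Function(`${code}; return ${name};`)();
    return { ok: true, value: fn(...args) };
  } catch (err) {
    return { ok: false, error: err.name };
  }
}

// Three possible meanings of "=" in the Judge step (assumed definitions).
const comparators = {
  strict: (y, a) => JSON.stringify(y) === JSON.stringify(a),
  tolerance: (y, a, eps = 1e-6) => typeof y === "number" && Math.abs(y - a) <= eps,
  // Inclusion is read here as: every expected item appears in the output.
  subset: (y, a) => Array.isArray(y) && a.every((item) => y.includes(item)),
};

// Judge: a failed execution never counts as consistent.
function judge(result, expected, mode) {
  return result.ok && comparators[mode](result.value, expected);
}

// Consistency over a test set: 1 only if every case passes, otherwise 0.
function consistent(header, cases, mode) {
  const code = mockModel(header);
  const allPass = cases.every((t) => judge(exec(code, header.name, t.args), t.expected, mode));
  return allPass ? 1 : 0;
}

const header = { name: "planarDistance", params: ["x1", "y1", "x2", "y2"] };
const cases = [
  { args: [0, 0, 3, 4], expected: 5 },
  { args: [1, 1, 1, 1], expected: 0 },
];
const verdict = consistent(header, cases, "tolerance");
console.log(verdict);
```

Selecting the comparator per task keeps the pipeline itself unchanged while the notion of equality varies with the output type.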

3.2. Module Design

All unit-level test cases were generated based on reference data using Claude Sonnet 4, an advanced large language model developed by Anthropic, guided by predefined prompts. Each test case was manually reviewed and validated by domain experts to ensure correctness and executability (see Section 3.3 for details). Each complete test case is structured into three distinct modules: the Function Semantics Module, the Execution Configuration Module, and the Evaluation Parsing Module.
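To make the three-module structure concrete, the following sketch groups the seven metadata fields by module in a single record. All values are invented for illustration: `planarMidpoint` is a hypothetical function rather than one taken from the five libraries, and the path is a placeholder.

```javascript
// Illustrative test-case record; every value below is made up for this sketch.
const testCase = {
  // Function Semantics Module
  function_header:
    "/** Returns the planar midpoint of two [x, y] points. */\nfunction planarMidpoint(a, b) {",
  reference_code:
    "function planarMidpoint(a, b) { return [(a[0] + b[0]) / 2, (a[1] + b[1]) / 2]; }",

  // Execution Configuration Module
  parameters_list: [[0, 0], [2, 2]],
  output_type: "array",
  output_path: "outputs/planarMidpoint_case_001.json", // placeholder path

  // Evaluation Parsing Module
  eval_methods: null, // null marks direct evaluation
  expected_answer: [1, 1],
};

// The seven metadata fields named for every test case.
const REQUIRED_FIELDS = [
  "function_header",
  "reference_code",
  "parameters_list",
  "output_type",
  "eval_methods",
  "output_path",
  "expected_answer",
];

// Returns the names of any required fields that a record lacks.
function missingFields(record) {
  return REQUIRED_FIELDS.filter((key) => !(key in record));
}

console.log(missingFields(testCase));
```

A structural check of this kind is cheap to run before execution and catches incomplete records early.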

3.2.1. Function Semantics Module

In the task of automatic geospatial function generation, accurately understanding the invocation semantics and target behavior of a function is fundamental to enabling the model to generate executable code correctly. Serving as the semantic entry point of the entire evaluation pipeline, the Function Semantics Module aims to clarify two key aspects: (i) what the target function is intended to accomplish, and (ii) how it should be correctly implemented. This module comprises two components: the function_header, which provides the semantic constraints for input, and the reference_code, which serves as the behavioral reference for correct implementation.

The function declaration $h$ provides the structured input for each test task. It includes the function name, parameter types, and semantic descriptions. This information is combined with prompt templates to offer semantic guidance for the large language model, steering it to generate the complete function body. The structure of $h$ is formally defined as:

$$h = \left(\mathit{name},\ P,\ \tau,\ \text{“description”}\right), \qquad P = (p_1, \ldots, p_k)$$

Here, $\mathit{name}$ denotes the function name; $P$ represents the list of input parameters; $\tau(p_j)$ specifies the type information for each parameter $p_j$; and “description” provides a natural language description of the function’s purpose and usage scenario.

The reference code snippet $r$ provides the correct implementation of the target function under standard semantics. It is generated by Claude Sonnet 4 using a predefined prompt and is executed by domain experts to produce the ground-truth output. The behavior of this function should remain stable and reproducible across all valid inputs, that is:

$$\forall x \in X_{\text{valid}}:\ \mathrm{Exec}(r, x) = a_x \ \text{on every run}$$

The resulting output $a_x$ serves as the reference answer in the subsequent Evaluation Parsing Module. It is important to note that this reference implementation is completely hidden from the model during evaluation. The model generates the test function body solely based on the function declaration $h$, without access to $r$.
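As a rough picture of how a function declaration might be turned into a prompt, the sketch below renders a header object (name, parameter list, parameter types, description) as a JSDoc-style stub. The object layout, the `bufferPoint` example, and the prompt wording are assumptions for illustration; the reference code is deliberately not part of the prompt.

```javascript
// Hypothetical header record: name, parameters, types, and description.
const header = {
  name: "bufferPoint",
  params: ["point", "radius"],
  types: { point: "number[]", radius: "number" },
  description: "Returns a circular buffer polygon around a point.",
};

// Render the header as a JSDoc comment followed by an open function signature.
function renderHeader(h) {
  const lines = ["/**", ` * ${h.description}`];
  for (const p of h.params) {
    lines.push(` * @param {${h.types[p]}} ${p}`);
  }
  lines.push(" */", `function ${h.name}(${h.params.join(", ")}) {`);
  return lines.join("\n");
}

// Combine a fixed instruction (assumed wording) with the rendered header.
function buildPrompt(h) {
  return [
    "Complete the body of the following JavaScript function.",
    "Return only the code.",
    "",
    renderHeader(h),
  ].join("\n");
}

const prompt = buildPrompt(header);
console.log(prompt);
```

Because only the header reaches the model, any correct output has to come from the model's own knowledge of the library rather than from the hidden reference.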

3.2.2. Execution Configuration Module

GeoJSEval incorporates an Execution Configuration Module into each test case, which explicitly defines all prerequisites necessary for execution in a structured manner. This includes parameters_list, output_type, edge_test, and output_path. Together, these elements ensure the completeness and reproducibility of the test process.

The parameter list (parameters_list) explicitly specifies the concrete input values required for each test invocation. These structured parameters are injected as fixed inputs into both the reference implementation and the model-generated code, enabling the construction of a complete and executable function invocation and driving the generation of output results.

The output type (output_type) specifies the expected return type of the function and serves as a constraint on the format of the model-generated output. After executing the model-generated code and obtaining its output, the system first verifies whether the return type matches the expected type. If a type mismatch is detected, the downstream consistency evaluation is skipped to avoid false judgments or error propagation.

The edge test item indicates whether the boundary testing mechanism is enabled for this test case. If the value is not empty, the system will automatically generate a set of extreme or special boundary parameters based on the standard input values and inject them into the test process. This is done to assess the model’s robustness and generalization ability under extreme input conditions. The edge test set encompasses the following categories of boundary conditions: (1) Numerical boundaries, where input values approach their maximum or minimum limits, potentially introducing floating-point errors during computation. (2) Geometric-type boundaries, where the geometry of input objects reaches extreme cases, such as empty geometries or polygonal data degenerated into a single line. (3) Parameter-validity boundaries, where input parameters exceed predefined ranges—for instance, longitude values beyond the valid range of −180° to 180° are regarded as invalid.
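The three boundary categories can be illustrated with a short sketch. The helper names are hypothetical and the concrete boundary values are chosen for illustration; the latitude range of −90° to 90° is added here alongside the longitude range given above.

```javascript
// Parameter-validity check for a [longitude, latitude] pair.
function isValidLonLat([lon, lat]) {
  return (
    Number.isFinite(lon) && Number.isFinite(lat) &&
    lon >= -180 && lon <= 180 && lat >= -90 && lat <= 90
  );
}

// Derive boundary variants from a standard input point (hypothetical generator).
function edgeVariants([lon, lat]) {
  return [
    { kind: "numeric", value: [180, lat] },       // longitude at its upper limit
    { kind: "numeric", value: [-180, lat] },      // longitude at its lower limit
    { kind: "numeric", value: [lon, 90] },        // latitude at the pole
    { kind: "validity", value: [180.0001, lat] }, // just outside the longitude range
    { kind: "validity", value: [lon, -90.0001] }, // just outside the latitude range
  ];
}

// Shoelace formula: signed area of a closed ring of [x, y] vertices.
function ringArea(ring) {
  let sum = 0;
  for (let i = 0; i < ring.length - 1; i++) {
    sum += ring[i][0] * ring[i + 1][1] - ring[i + 1][0] * ring[i][1];
  }
  return sum / 2;
}

// Geometric-type boundaries: an empty polygon and a polygon collapsed onto a line.
const emptyPolygon = { type: "Polygon", coordinates: [] };
const collapsedPolygon = {
  type: "Polygon",
  coordinates: [[[0, 0], [1, 1], [2, 2], [0, 0]]],
};

const variants = edgeVariants([10, 20]);
const invalid = variants.filter((v) => !isValidLonLat(v.value));
console.log(invalid.length, ringArea(collapsedPolygon.coordinates[0]));
```

Inputs in the validity category are expected to be rejected or handled gracefully, so a robust implementation is one that fails predictably on them rather than returning a plausible-looking result.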

The output path specifies the storage location of the output results produced by the model during execution. The testing system will read the external result file produced by the model from the specified path.

3.2.3. Evaluation Parsing Module

The Evaluation Parsing Module defines the decision-making markers that the testing framework uses during the evaluation phase. It clarifies whether the results of the model-generated code are comparable, whether structural information needs to be extracted, and whether auxiliary functions are required for comparison. Serving as the input for the judging logic, this module consists of two components: expected_answer and eval_methods. Its core responsibility is to provide a structured, automated mechanism for evaluability annotation.

The expected answer (expected_answer) is the correct result obtained by executing the reference code snippet with the predefined parameters. It serves as the sole benchmark against which the accuracy of the model’s output is assessed during the evaluation phase. The generation process is detailed in Section 3.2.1, which covers the Function Semantics Module.

The evaluation method (eval_methods) indicates whether the function output can be directly evaluated. If direct evaluation is not possible, it specifies the auxiliary function(s) to be invoked. This field takes one of two forms: direct evaluation and indirect evaluation. Direct evaluation applies when the function output is a numerical value, boolean, text, or a parseable data structure (e.g., JSON, GeoJSON), which can be directly compared. For such functions, eval_methods is marked as null, indicating that no further processing is required. Indirect evaluation applies when the function output is a complex object (e.g., Map, Layer, Source), requiring the use of specific methods from external libraries to extract relevant features. In this case, eval_methods explicitly lists the required auxiliary functions for feature extraction. Based on the function’s role and return type, we select auxiliary functions from the platform’s official API that can extract indirect semantic features of complex objects (e.g., map center coordinates, layer visibility, feature count in a data source). This ensures accurate comparison under structural consistency and semantic alignment. This paper constructs an auxiliary function library for three typical complex objects in geospatial visualization platforms (Map, Layer, Source), as shown in Table 1. Depending on the complexity of the output structure of different evaluation functions, a single method or a combination of auxiliary methods may be chosen to ensure the accuracy of high-level semantic evaluations.
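The split between direct and indirect evaluation can be sketched as a small dispatch. This is an illustration under assumptions: `makeMockMap` builds a plain object that mimics a map exposing `getCenter()` and `getZoom()` accessors (as a Leaflet map does) rather than a real library instance, and the expected answer for the indirect case is assumed to store the features extracted from the reference object.

```javascript
// Recursive comparison with a numeric tolerance (assumed value).
function deepEqual(a, b, eps = 1e-9) {
  if (typeof a === "number" && typeof b === "number") return Math.abs(a - b) <= eps;
  if (a === null || b === null || typeof a !== "object" || typeof b !== "object") return a === b;
  if (Array.isArray(a) !== Array.isArray(b)) return false;
  const keysA = Object.keys(a);
  const keysB = Object.keys(b);
  return keysA.length === keysB.length && keysA.every((k) => deepEqual(a[k], b[k], eps));
}

// Indirect evaluation: call each listed auxiliary method and collect the results.
function extractFeatures(obj, methods) {
  return Object.fromEntries(methods.map((m) => [m, obj[m]()]));
}

// eval_methods === null  -> compare the output directly;
// otherwise              -> compare the extracted features.
function judgeOutput(output, expectedAnswer, evalMethods) {
  if (evalMethods === null) return deepEqual(output, expectedAnswer);
  return deepEqual(extractFeatures(output, evalMethods), expectedAnswer);
}

// Mock of a complex map object; not a real Leaflet or OpenLayers instance.
function makeMockMap(center, zoom) {
  return { getCenter: () => center, getZoom: () => zoom };
}

const expectedMapFeatures = { getCenter: { lat: 39.9, lng: 116.4 }, getZoom: 10 };
const generatedMap = makeMockMap({ lat: 39.9, lng: 116.4 }, 10);

console.log(judgeOutput(generatedMap, expectedMapFeatures, ["getCenter", "getZoom"]));
console.log(judgeOutput({ type: "Point", coordinates: [1, 2] }, { type: "Point", coordinates: [1, 2] }, null));
```

Comparing extracted features rather than whole objects avoids depending on internal state that differs between otherwise equivalent map instances.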

3.3. Construction Method

Based on the task modeling principles and modular design described above, GeoJSEval-Bench is constructed as a clearly structured and automatically executable unit test benchmark for the systematic evaluation of LLMs in geospatial function generation tasks. This benchmark is based on the official API documentation of five prominent JavaScript geospatial libraries (Turf.js, JSTS, Geolib, Leaflet, and OpenLayers), covering a total of 432 function interfaces. Each function page provides key information, including the function name, functional description, parameter list with type constraints, return value type, and semantic explanations. Some functions come with invocation examples, while others lack examples and require inference of the call structure based on available metadata. Figure A3 illustrates a typical function documentation page.

During the construction process, the research team first conducted a structured extraction of the API documentation for the five target libraries, standardizing the function page contents into a unified JSON format. Based on this structured representation, dedicated prompt templates were designed for each function to guide the large language model in reading the semantic structure and generating standardized test tasks. The template design carefully considers the differences across libraries in function naming conventions, parameter styles, and invocation semantics. Figure 3 presents a sample prompt for a function from the OpenLayers library. Each automatically generated test task is subject to a strict manual review process, conducted by five experts with geospatial development experience and proficiency in JavaScript. The review focuses on whether the task objectives are reasonable, descriptions are clear, parameters are standardized, and whether the code can execute stably and return logically correct results. For tasks exhibiting runtime errors, semantic ambiguity, or structural irregularities, the experts applied minor revisions based on professional judgment. For example, in a test task generated by the large language model for the buffer function in the Turf.js library, the return parameter was described as “Return the buffered region.” This description was insufficiently precise, as it did not specify the return data type. The experts revised it to “Return the buffered FeatureCollection | Feature<Polygon | MultiPolygon>.”, thereby clarifying that the output should be either a FeatureCollection or a Feature type, explicitly containing geometric types such as Polygon or MultiPolygon. The background information and selection criteria for the expert team are detailed in Table A1. All tasks that pass the review and execute successfully have their standard outputs saved in the specified output_path as the reference answer. During the testing phase, the judging program automatically reads the reference results from this path and compares them with the model-generated output, serving as the basis for evaluating accuracy.
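The file-based hand-off between execution and judging could look roughly like the sketch below, which targets Node.js. The directory layout, file names, and the helpers `saveResult` and `matchesReference` are assumptions for illustration, and the comparison shown is a plain JSON string match, which is sensitive to key order.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Write a result as JSON, creating parent directories as needed.
function saveResult(outputPath, value) {
  fs.mkdirSync(path.dirname(outputPath), { recursive: true });
  fs.writeFileSync(outputPath, JSON.stringify(value));
}

// Read the reference and the submission from disk and compare them.
// A missing submission file means the generated code produced no output.
function matchesReference(referencePath, submissionPath) {
  if (!fs.existsSync(submissionPath)) return false;
  const reference = JSON.parse(fs.readFileSync(referencePath, "utf8"));
  const submission = JSON.parse(fs.readFileSync(submissionPath, "utf8"));
  return JSON.stringify(reference) === JSON.stringify(submission);
}

// Use a temporary directory so the sketch leaves the working tree untouched.
const root = fs.mkdtempSync(path.join(os.tmpdir(), "geojseval-"));
const referencePath = path.join(root, "reference", "case_001.json");
const submissionPath = path.join(root, "submission", "case_001.json");

const point = { type: "Point", coordinates: [116.4, 39.9] };
saveResult(referencePath, point); // written once, after expert review
saveResult(submissionPath, point); // written by the generated code under test

console.log(matchesReference(referencePath, submissionPath));
```

Keeping reference answers on disk means the reference code only has to be executed once, after review, rather than on every evaluation run.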

3.4. Construction Results

GeoJSEval-Bench ultimately comprises 432 primary function-level test tasks, covering all well-defined and valid functions across the five target libraries. On this foundation, an additional 2071 structured unit test cases were generated by varying input parameters and invocation path configurations to systematically cover diverse use cases. The test tasks encompass a wide variety of data types, reflecting common data structures in geospatial development practice. A total of 25 types are covered, with the detailed list provided in Table 2. From an evaluability perspective, these data types fall into two broad groups. The first consists of basic types with clear structures and stable semantics, such as numeric variables, strings, arrays, and standard GeoJSON; these can be parsed directly into structured results and compared directly in evaluation. The second consists of more complex encapsulated object types, such as Map and Layer objects in Leaflet or OpenLayers, whose internal state cannot be accessed directly, so auxiliary methods are required to extract semantic information and align the structure. To illustrate the differences between these types and their corresponding evaluation strategies, Figure 4 shows a direct evaluation test case, while Figure 5 shows a test case for complex object types requiring indirect structural extraction. Only a few examples are provided here; additional detailed examples are available in Appendix A.
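The distinction between the two groups can be sketched as follows. This is an illustrative example only: MockMap is a hypothetical stand-in with hidden internal state, not the Leaflet or OpenLayers API, and the helper names (evaluateDirect, snapshotMap, evaluateIndirect) are assumptions rather than parts of GeoJSEval.

```javascript
// Hypothetical stand-in for an encapsulated map object (NOT Leaflet or OpenLayers).
// Its state lives in private fields, so it cannot be serialized directly.
class MockMap {
  #center;
  #zoom;
  #layers = [];

  constructor(center, zoom) {
    this.#center = center;
    this.#zoom = zoom;
  }

  addLayer(name) {
    this.#layers.push(name);
    return this;
  }

  getCenter() { return [...this.#center]; }
  getZoom() { return this.#zoom; }
  getLayerNames() { return [...this.#layers]; }
}

// Direct evaluation: basic types (numbers, strings, arrays, plain GeoJSON)
// can be serialized and compared as they are.
function evaluateDirect(reference, candidate) {
  return JSON.stringify(reference) === JSON.stringify(candidate);
}

// Indirect evaluation, step 1: use public accessor methods to extract
// a plain, comparable snapshot of the object's semantic state.
function snapshotMap(map) {
  return {
    center: map.getCenter(),
    zoom: map.getZoom(),
    layers: map.getLayerNames(),
  };
}

// Indirect evaluation, step 2: compare the aligned snapshots directly.
function evaluateIndirect(referenceMap, candidateMap) {
  return evaluateDirect(snapshotMap(referenceMap), snapshotMap(candidateMap));
}
```

Serializing a MockMap directly yields an empty object because private fields are not enumerable, so two maps with different states would compare as equal; extracting a snapshot through accessors avoids this false match.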

7. Conclusions

This study introduces GeoJSEval, the first automated evaluation framework for large language models (LLMs) specifically designed for JavaScript geospatial code generation tasks. It systematically examines the function understanding and code generation capabilities of mainstream LLMs across five core JavaScript geospatial libraries (Turf.js, JSTS, Geolib, Leaflet, and OpenLayers), covering two key capability areas: spatial analysis and map visualization. To this end, the standardized evaluation benchmark GeoJSEval-Bench was constructed, comprising 432 function-level test tasks and 2071 structured sub-cases that cover 25 types of multimodal data structures and reflect the diversity and complexity of geospatial function outputs. The evaluation tasks adopt a modular modeling strategy in which test cases are designed around three core modules: function semantics, execution configuration, and evaluation parsing. Automated evaluation and comparison of model outputs are achieved through the answering and judging programs. The evaluation covers dimensions such as code accuracy, resource consumption, operational efficiency, and error types, and encompasses 20 representative models across four categories: general language models, reasoning-augmented models, general code generation models, and geospatial-specific models. The evaluation results reveal the performance heterogeneity and capability boundaries of different models at the JavaScript geospatial library level. The result is a reproducible, extensible, and structured evaluation chain for geospatial code generation that serves application scenarios in GIS, spatial visualization, and frontend development.

7.1. Significance and Advantages

The significance of this study is primarily reflected in three aspects. First, GeoJSEval is the first large language model evaluation framework focusing on JavaScript geospatial code generation tasks. It covers the complete process of task modeling, modular test design, benchmark construction, implementation of answering and judging programs, and evaluation metric setting, significantly reducing manual intervention and creating a structured, reproducible, and scalable automated evaluation system. Compared to traditional methods relying on manual screening and subjective judgment, GeoJSEval achieves a programmatic and standardized evaluation loop, improving the logical consistency and credibility of the evaluation results. Second, GeoJSEval conducts the first systematic evaluation of JavaScript geospatial code generation capabilities, filling a gap in previous research, which had not modeled the structure and invocation semantics of mainstream library functions such as those in Turf.js and Leaflet. Through empirical analysis, the study reveals the performance heterogeneity and capability shortcomings of mainstream models in tasks such as spatial computation and map rendering, providing clear directions and methodological support for future model fine-tuning, semantic enhancement, and system integration. Finally, GeoJSEval-Bench gathers 432 real API functions, 25 types of multimodal data structures, and 2071 structured test cases, forming one of the most comprehensive resources for evaluating JavaScript geospatial code generation to date. This benchmark suite offers task diversity, structural coverage, and high reusability, making it widely applicable to geospatial agent development, multi-platform code evaluation, and toolchain construction.

7.2. Limitations and Future Work

Although GeoJSEval is the first function-level automated evaluation framework for JavaScript geospatial code and offers significant advantages in structural design and evaluation mechanisms, it still has several limitations that future work can address. First, in terms of evaluation coverage, although the current framework selects five representative mainstream libraries, it has not yet covered some specialized or poorly documented spatial computing and visualization libraries in the JavaScript ecosystem. Some functions were excluded due to complex encapsulation, lack of examples, or unclear semantics. In particular, APIs related to frontend interaction logic but not directly associated with map rendering have not been included in the evaluation, which affects the comprehensiveness of the assessment. Future versions should include community plugins, higher-level modules, and complex components to improve coverage of the JavaScript geospatial function ecosystem and increase evaluation depth. Second, with respect to task modeling granularity, the current evaluation focuses on single-function calls and does not yet address function composition, chained invocations, or cross-module collaboration, all of which are logical patterns commonly encountered in real-world development. Consequently, its applicability to complex geospatial workflows remains limited. In the future, a composite task set should be developed with a scenario-specific evaluation mechanism to better reflect how JavaScript geospatial analysis problems are solved in practice. Third, the evaluation dimensions can be expanded. For visualization objects (e.g., Map and Layer), evaluation currently relies primarily on structural field parsing and auxiliary function outputs. Although an automatic screenshot mechanism has been implemented, image feature-matching methods introduce uncontrollable noise and impose substantial computational overhead. As a result, metrics such as rendering latency and automated image comparison are not yet integrated into the scoring process, and screenshots are used only as references for manual verification. In the future, structure-aware image comparison algorithms can be introduced to incorporate visual behaviors such as rendering correctness and layer style overlay into the automated evaluation system. Finally, the current evaluation method primarily assesses the generated geospatial analysis code but does not assist LLMs in improving the quality of their outputs. Future research will incorporate a feedback loop mechanism, whereby evaluation results are fed back to the LLM iteratively to optimize code generation quality. This mechanism is intended to let LLMs refine their generated code over successive rounds, thereby improving accuracy and stability, particularly in complex and diverse geospatial tasks. Through such iterative feedback, we expect LLMs to better adapt to the evolving demands of geospatial analysis.