This chapter aims to provide a deeper understanding of the visualization process using Unreal Engine (UE). It first addresses the hardware and software requirements, based on the data from the Vltava project and the extent of the Vltava River valley, and describes the GIS data used, its origins, and the software and plugins involved. It then details the recreation of the 3D visualization of the Vltava River valley in UE, focusing on practices for importing and processing GIS data, techniques for creating realistic-looking models, performance optimization techniques for ensuring a seamless viewing experience, and possibilities for sharing or publishing the resulting visualizations. Lastly, it considers the workflow of 3D visualization in general.

2.2. Used Software

The main software used to recreate the visualization of the Vltava River valley was a game engine. Each game engine has strengths and features that cater to different development needs, allowing developers to choose the one that best suits their project requirements and expertise. For this purpose, only Unreal Engine and Unity were considered. Both are increasingly used outside the gaming industry for modeling, visualization, and VR, and the high availability of learning materials also plays a role. The other engines were considered unsuitable for this type of work since they focus primarily on games and do not support GIS data [20, 28, 29, 30]. Multiple comparative articles were consulted to establish the differences between Unity and Unreal Engine and to obtain enough information to decide which to use.

The first difference between these two game engines is their ease of use. Unity is renowned for its user-friendly interface, making it accessible to developers at all levels. Its intuitive visual editor and component-based system simplify the creation of game logic, allowing beginners to get started quickly. Furthermore, Unity benefits from a large community and an extensive asset store, providing tutorials, resources, support, and a wide range of pre-made assets, scripts, and plugins that can be easily integrated into projects. Unity also offers robust tools and features specifically designed for 2D game development, including sprite-based workflows, 2D physics, and a powerful animation system. However, Unity primarily focuses on traditional coding with C#, although it does provide a visual scripting system called Bolt. This means that developers who prefer visual scripting environments may still require a more robust programming background. Unreal Engine, in contrast, has a steeper learning curve, especially for beginners or developers without prior experience. Its advanced features and complex systems may require more time and effort to fully grasp. On the other hand, Unreal Engine’s Blueprints visual scripting system allows developers to create and compile valid logic without writing traditional code, enabling designers and artists to implement mechanics and prototype ideas. Unreal Engine also has a sizable community and asset store; compared with Unity, however, these are smaller due to the aforementioned higher bar of entry and, thus, a lower number of smaller and individual developers [15, 16, 20, 28, 29].

The second significant difference is in the capabilities of each engine. Unreal Engine is known for its stunning visual capabilities and advanced rendering features. It excels in producing high-fidelity graphics, realistic lighting, and impressive visual effects, making it the preferred choice for creating visually captivating games and immersive experiences. In addition, Unreal Engine’s real-time rendering capabilities have gained recognition in the film and animation industries by offering virtual production setups and real-time visual effects. However, Unreal Engine’s advanced rendering capabilities and graphical fidelity can demand higher system resources, necessitating the optimization of performance and frame rates. Furthermore, projects developed in Unreal Engine tend to have larger file sizes, impacting storage requirements and download times, particularly for projects targeting mobile platforms. Unreal Engine also offers built-in collaboration tools, allowing multiple developers to work on a project simultaneously and make real-time changes. Conversely, Unity excels in cross-platform development, supporting various platforms such as PCs, consoles, mobile devices, and web browsers. This compatibility allows developers to reach a broader audience and deploy games on multiple platforms relatively quickly. Although Unity has made improvements in advanced rendering and visual effects, it still may not offer the same level of visual fidelity as Unreal Engine. Unity also lacks built-in tools for real-time collaboration among team members, often requiring external solutions or version control systems [15, 16, 20, 28, 29].

To choose which engine would be used to recreate the visualization of the 1670 km² area of the Vltava River valley, all the abovementioned information, its sources, and public information about the game engines were taken into account. There were three reasons why Unreal Engine was chosen as the main game engine:

Practical: Unreal Engine was already used within the Vltava project in the past [1]. As such, multiple project members can help improve or build upon this UE visualization in the future, for instance by adding VR controls. Furthermore, plugins bought for the previous use of UE within the project can be reused in this case study, and vice versa.

Technical: Unreal Engine focuses more on realistic graphics, is better optimized for large worlds, and requires less programming knowledge.

Moral: In recent years, Unity’s leadership made multiple derogatory statements against its game developer community [31] and went forward with immoral retrospective installation fees, prioritizing monetization above everything else [32]. While these would not directly impact the Vltava project or this case study, and the retrospective fees have since been changed (not removed), using Unity would mean indifference to or outright support for such unacceptable behaviors.

UE has many versions (4.27–5.4), but this case study used version 5.2.1 because it is the newest version supported by the plugins used in this study; moreover, this version uses Lumen global illumination technology by default. Outside UE, GIS and 3D modeling software were also heavily used. The main GIS software used in this study was ArcGIS Pro and the 3D modeling software was CityEngine, both of which are ESRI products. These were used primarily because of the availability of licensing at the author’s institution and their previous use in the Vltava project. ArcGIS Pro version 2.8 was used to generate the input data, and the updated version 3.2.1 was used to edit the input data further. CityEngine 2021.1 was used to create rule packages for procedural modeling. Lastly, GIMP 2.10.36 was used to edit the DTM to be compatible with Unreal Engine. All the software and versions used are summarized in Table 2.

Lastly, Table 3 lists the plugins that were directly used while creating the 3D visualization of the Vltava River valley. Plugins that were tested but not used are mentioned in the text at the appropriate locations but are not part of this table.

2.4. Importing of GIS Data

The first step in creating visualizations in the UE is the importation of GIS data. This step may seem trivial, but it is the first step where problems occur because UE, by default, does not support standard GIS file formats. There are, however, multiple ways GIS data can be imported using external tools, plugins, and extensions. The most common ways to import GIS data are as follows:

- (1) Directly by dragging files into the Content Browser. This, however, only works for specific supported formats. It is comparable to adding data to a map from the Catalog pane in ArcGIS Pro (it may work simply by dragging files over, or it may require a proper import function, even for supported formats).

- (2) Using the built-in plugin Datasmith, the official way to import data from outside the UE ecosystem. It works in two ways: as an extension of external software, called the Datasmith Exporter, or as a plugin for UE, called the Datasmith Importer. The extension is mainly used with CAD and 3D modeling software to export models in formats that UE can work with, while the plugin is used to import files in the supported formats into UE.

- (3) Using third-party plugins, such as Landscaping or TerraForm PRO. These plugins mainly add functionality to import DTM data to create landscape actors and GIS vector lines to create landscape splines or blueprints. The disadvantage of such third-party plugins is that they are paid (bought through the Unreal Engine Marketplace) and may not support future versions of UE.

Since UE cannot import GIS file formats natively, and there seemed to be no Datasmith extension for GIS software, the third option was pursued: finding suitable plugins to import GIS data from the Vltava project into UE. The Unreal Engine Marketplace was used to find such plugins. This official marketplace is available from web browsers and the Epic Games Launcher. It allows the sharing and selling of assets, such as textures, models, animations, and code, that can be added to individual projects or installed in the engine.

Two plugins were tested: Landscaping and TerraForm PRO, both of which allow the import of vector data and DTM files. Landscaping is severely limited in terms of coordinate systems since it only works with UTM-style coordinates. This caused the first problem: all data had to be transformed into an appropriate coordinate system. Secondly, importing the DTM took a long time, up to several hours when importing the whole 1670 km² DTM (on the Poznań PC). Lastly, this plugin proved to be quite unstable when importing large vector datasets. TerraForm PRO offers the same functionality as Landscaping. The options and settings for importing DTM are comparable with those of the Landscaping plugin, with the advantage of not being limited to a specific CRS. Importing the DTM using the default settings takes roughly 15 min, which is a significant improvement over the Landscaping plugin; however, there seems to be a major problem with the resulting coordinates of the DTM. The imported DTM is moved to the engine’s coordinate origin (X = 0, Y = 0, Z = 0), which means that any data imported outside TerraForm PRO, for example, 3D models from SketchUp imported using Datasmith, will not match the imported landscape. There does not seem to be a way to avoid this behavior. This means that if data from different sources need to be imported using other methods, TerraForm PRO cannot be used. For these reasons, TerraForm PRO was rejected as a reliable way of importing GIS data for this study.

Fortunately, during the work in CityEngine, it was discovered that this software, also used in the Vltava project for procedural modeling, contains a built-in Datasmith exporter that is not mentioned in the official Datasmith documentation. This was significant because CityEngine is an ESRI product and can therefore import standard GIS file formats as well as ESRI-specific GIS formats. These can then be exported in the Datasmith file format (*.udatasmith). This method allows the export of DTM, 3D models, and shapes (vector data) into UE. Importing *.udatasmith files using the Datasmith Importer inside UE appears fairly stable compared to using plugins such as Landscaping, and it is reasonably fast. For example, importing 1670 km² of DTM with over 28,000 polygons took 2 min to complete. Furthermore, importing data through CityEngine does not cause the coordinate transformation present in the Landscaping and TerraForm PRO plugins, meaning it is possible to import and visualize data in their original coordinate system, S-JTSK/Krovak East North (EPSG:5514), used in Czechia. This should be the case for most orthogonal metric coordinate systems. However, limitations on the DTM pixel size were found later, during the iterative testing. While the DTM is not limited in maximum size per se, its resolution must match specific width and height values. To create a landscape in UE, the DTM raster must be divided into quads and components, effectively splitting the terrain into small pieces that UE works with. For the imported DTM to work without further problems (such as stretched terrain around the edges of the DTM) and to later match the terrain with raster masks, the resolution of the imported DTM must match the Overall Resolution, which is calculated from the number of quads and components and displayed in Landscape Mode while importing or creating a landscape. This results in the need to use irregular decimal pixel sizes for the DTM and, consequently, for the raster masks (meaning that a pixel will not have a 1 × 1 m resolution but, for example, 0.987 × 1.123 m).
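The relationship between the landscape layout and the required pixel size can be sketched as follows. This is a minimal illustration, assuming the commonly documented UE rule that the Overall Resolution along one axis equals the number of components multiplied by the quads per component, plus one; the function names and the 5000 × 4000 m tile are hypothetical and are not values from the Vltava project.

```python
def overall_resolution(quads_per_section: int, sections_per_axis: int,
                       components: int) -> int:
    """Vertices along one landscape axis (assumed rule: total quads + 1)."""
    quads_per_component = quads_per_section * sections_per_axis
    return components * quads_per_component + 1


def required_cell_size(extent_m: float, resolution_px: int) -> float:
    """Cell size in metres so that `resolution_px` pixels span `extent_m`."""
    return extent_m / resolution_px


# Hypothetical tile of 5000 m x 4000 m with 63 quads per section,
# 2 x 2 sections per component, and 40 x 32 components.
res_x = overall_resolution(63, 2, 40)
res_y = overall_resolution(63, 2, 32)
cell_x = required_cell_size(5000.0, res_x)
cell_y = required_cell_size(4000.0, res_y)

print(res_x, res_y)                      # 5041 4033
print(f"{cell_x:.4f} m x {cell_y:.4f} m")
```

Because the permitted resolutions are fixed by the quad and component counts, the cell size is derived from the tile extent rather than chosen freely, which is why it rarely comes out as a round number.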

After extensively testing all the methods and plugins mentioned above with data from the Vltava project, the approach using the Datasmith exporter within CityEngine was chosen for this study. This was because of the ease of use, speed, and stability of the import process when using CityEngine as a middleman for importing GIS data into UE. It is important to note that there are other ways to import GIS data, but the choice is heavily affected by the availability of licensing for CityEngine and ArcGIS Online. The options are CityEngine (licensed), ArcGIS Maps SDK for Unreal Engine to import maps and terrain directly from ArcGIS Online (licensed), and plugins such as TerraForm PRO (paid).

2.5. Preparation and Editing of GIS Data for Use in Unreal Engine

During the initial testing of UE, it was found that most of the imported GIS data would need to be edited further in GIS to be usable, or at least practical to work with, in UE when creating a 3D visualization of the Vltava valley.

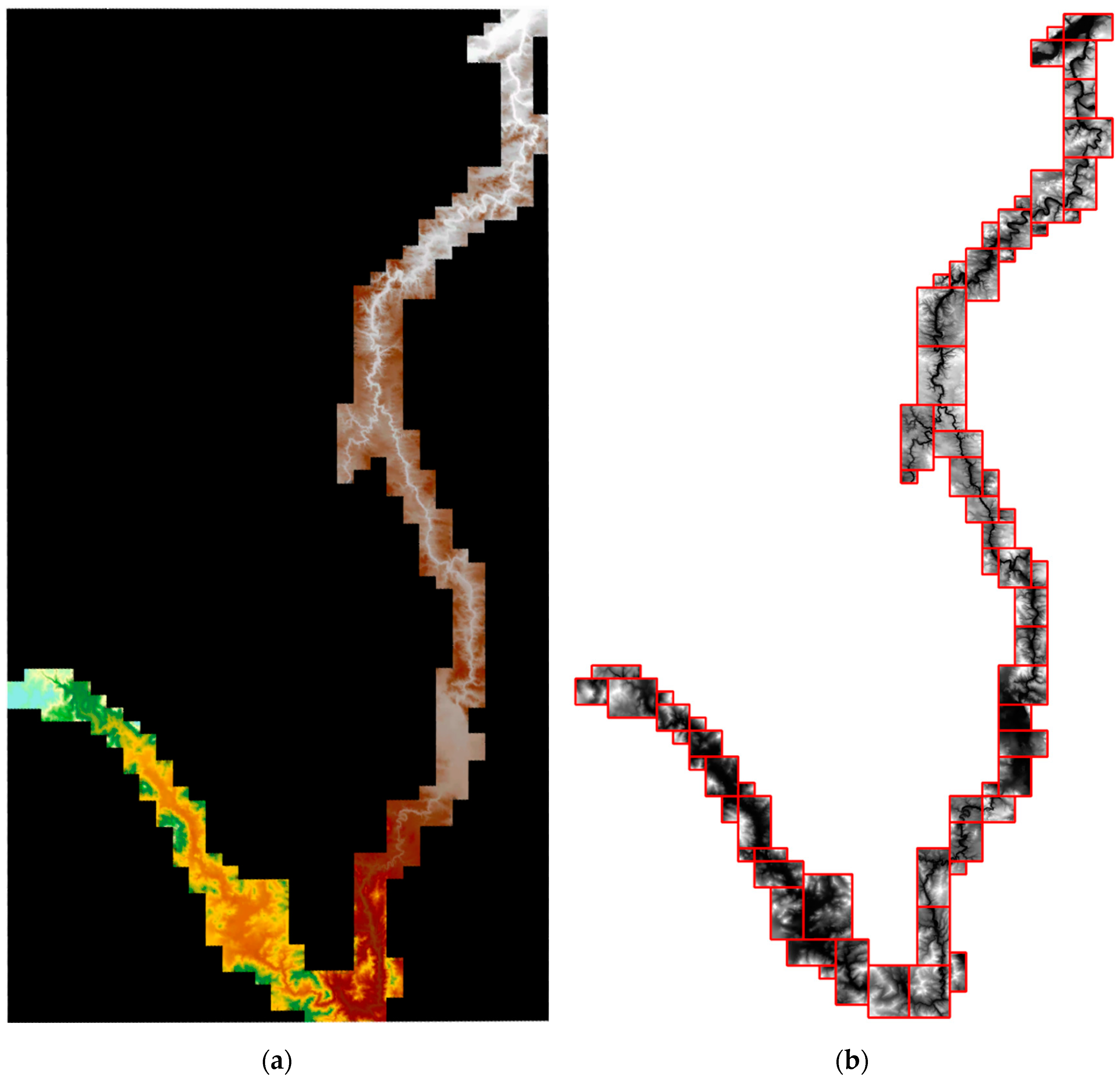

While the DTM raster file containing the height data of the terrain can be imported as a single file, reducing the amount of data is necessary to create a more stable and less memory-demanding visualization in UE. As displayed in Figure 2a, the original one-piece raster contains a large amount of no-data space represented by a black background. This black area is still composed of pixels with their own positions and values. The only difference is that the value is set to zero (or another arbitrary number), which GIS software identifies as no data and does not display or use in calculations. Owing to how raster data is stored, those areas therefore still occupy space even though they contain nothing usable for terrain visualization. UE and other non-GIS software, however, take those pixels at face value, resulting in one big rectangular terrain of over 12,700 km² (Figure 2a) compared to the 1670 km² of the Vltava valley shown in Figure 2b, with terrain being created in locations with no valuable data. UE then renders the whole 12,700 km² area, which significantly reduces performance and increases hardware demands.

Because of how raster files are saved, the way to avoid empty (black) areas is to split the original DTM raster into smaller rectangular rasters, each of which fully contains part of the area of interest. The DTM of the Vltava valley is primarily based on SMO-5 maps and follows the shape of the SMO-5 rectangular map sheet layout. The original SMO-5 sheet division was not retained since it would create significantly more individual rasters, which would compound the amount of work needed: for each terrain raster, there are an additional 14 raster masks of land use information (each land use has its own raster: woods, fields, gardens, meadows, pastures, etc.). The division of the original raster into smaller rectangular pieces, which resulted in 68 individual rasters, is shown in Figure 2b. To divide the DTM, an outline of the area containing the Vltava River valley was first created using the Raster Domain geoprocessing tool. This outline was then manually divided into 68 rectangles. The rectangles were designed to be as few as possible while remaining similar in size, which required some larger areas to be split further. The aim was to create rectangles covering the whole area without containing any areas outside the Vltava River valley. The final rectangles are shown in red in Figure 2b. The next step was to split the original DTM using the Split Raster geoprocessing tool. The resulting rasters were tested in UE, and through an iterative process of trial and error while partially building the visualization, the best parameters for the final rasters were found. First, it was found that there needs to be an overlap between the rasters to ensure the continuity of the terrain. However, the overlap should be as small as possible since, in the later stages of creating the visualization, procedurally created 3D objects such as trees may become too dense in the overlapping areas. Furthermore, due to the follow-up processes performed on top of the terrain, it was decided that the precision (pixel size) of the individual rasters should be increased from 5 m to 1 m.

While this precision increase may seem counterproductive in terms of reducing the memory demands of the visualization, removing the empty parts of the terrain removes more than 80% of the raster size and, together with the later use of World Partition, more than makes up for it. As shown in Table 4, while the upscaled DTM takes 25 times more memory due to the increased pixel count, after removing the “no data” background, the size is only 3.3 times bigger than the original. On average, the file sizes of the 68 DTM pieces are in the tens of MB.
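The factors quoted above can be cross-checked with simple arithmetic. The sketch below is only an approximation: it assumes that uncompressed raster size is proportional to pixel count and ignores the small overlaps between the 68 pieces; the variable names are illustrative.

```python
# Figures quoted in the text.
full_rectangle_km2 = 12_700   # bounding rectangle including no-data pixels
valley_km2 = 1_670            # actual area of interest
old_cell_m, new_cell_m = 5.0, 1.0

# Refining the cell size multiplies the pixel count along both axes.
upscale_factor = (old_cell_m / new_cell_m) ** 2

# Share of the bounding rectangle that holds real terrain data.
data_fraction = valley_km2 / full_rectangle_km2

# Size of the tiled 1 m DTM relative to the original single 5 m raster.
relative_size = upscale_factor * data_fraction

print(f"upscaling factor: {upscale_factor:.0f}x")           # 25x
print(f"no-data share removed: {1 - data_fraction:.0%}")    # 87%
print(f"tiled 1 m DTM vs original: {relative_size:.1f}x")   # 3.3x
```

Under these assumptions, the 25-fold increase in pixel count combined with keeping only about 13% of the rectangle reproduces the roughly 3.3-fold net increase reported in Table 4.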

The small size of the individual pieces is significant because, later in the process of creating the scene in UE, a feature called World Partition is used, which renders only objects near the camera/player position. This allows for the dynamic hiding (not rendering) of terrain, vegetation, buildings, and water bodies. However, content that is present throughout the scene as one object, such as clouds, sky spheres, or rivers (if not split), will remain rendered. This behavior can be further set for each individual object. As a result, only a few of the 68 DTM pieces are rendered at a time, leading to lower memory usage than the single original DTM with a resolution of 5 m. The final 68 DTMs allow further use of World Partition (tiling) in UE and, together with the outline polygon, are further used for editing and creating land use data.

Secondly, it was found that rasters used for importing terrain into UE through CityEngine should have a 32-bit float pixel type. This is significant because the rasters used as terrain masks for applying textures require a different pixel type (16-bit unsigned). If the terrain raster had only a 16-bit depth, the terrain created in UE would be made of flat pixels resembling stairs, producing an unsmoothed terrain model that would require either a smoothing pass over the entire landscape in UE or recreation of the imported raster in GIS.
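The stair effect can be illustrated with a toy elevation profile. This sketch assumes that an integer raster stores heights in whole metres, which is an assumption made for illustration only; the profile values and the function name are hypothetical.

```python
def quantize_to_int(heights_m):
    """Store heights as whole metres, as an integer raster would (assumed)."""
    return [int(h) for h in heights_m]


# Hypothetical gentle slope: 0.25 m rise per 1 m pixel.
slope_profile = [200.0 + 0.25 * i for i in range(8)]
stepped = quantize_to_int(slope_profile)

print(slope_profile)  # [200.0, 200.25, 200.5, 200.75, 201.0, 201.25, 201.5, 201.75]
print(stepped)        # [200, 200, 200, 200, 201, 201, 201, 201]
```

With 1 m pixels, four neighbouring cells collapse to the same height before the next 1 m jump, which is the flat, stepped surface described above; floating-point values keep the gradual rise.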

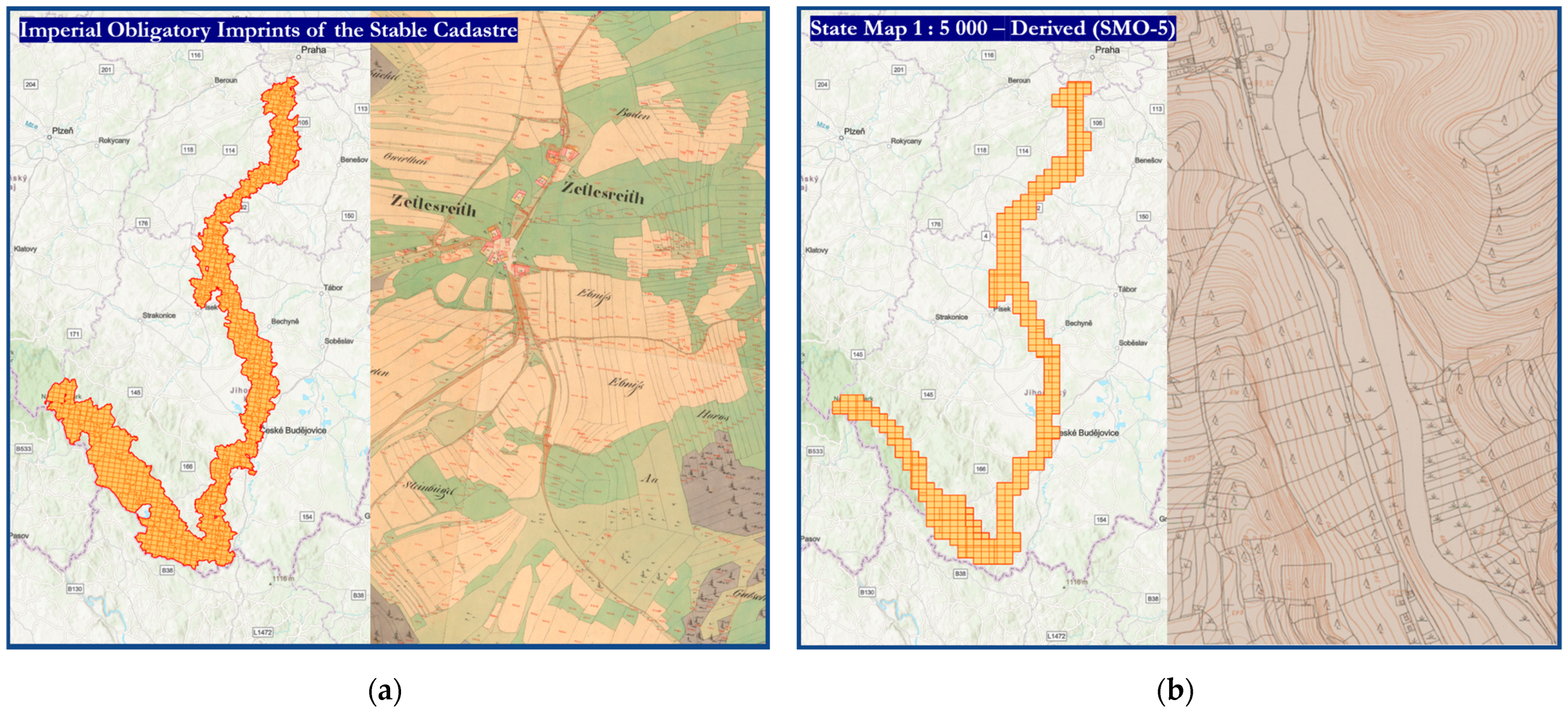

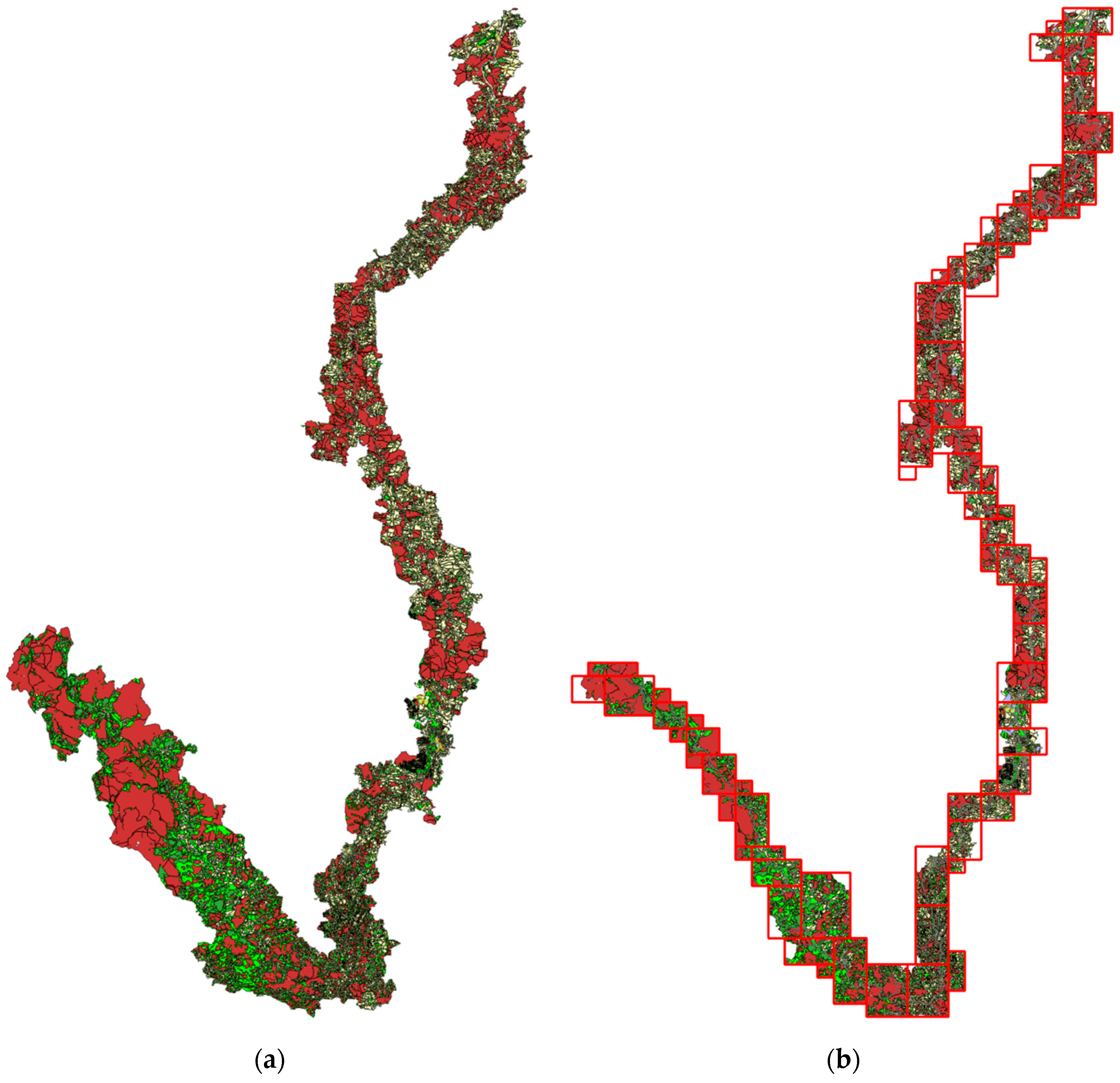

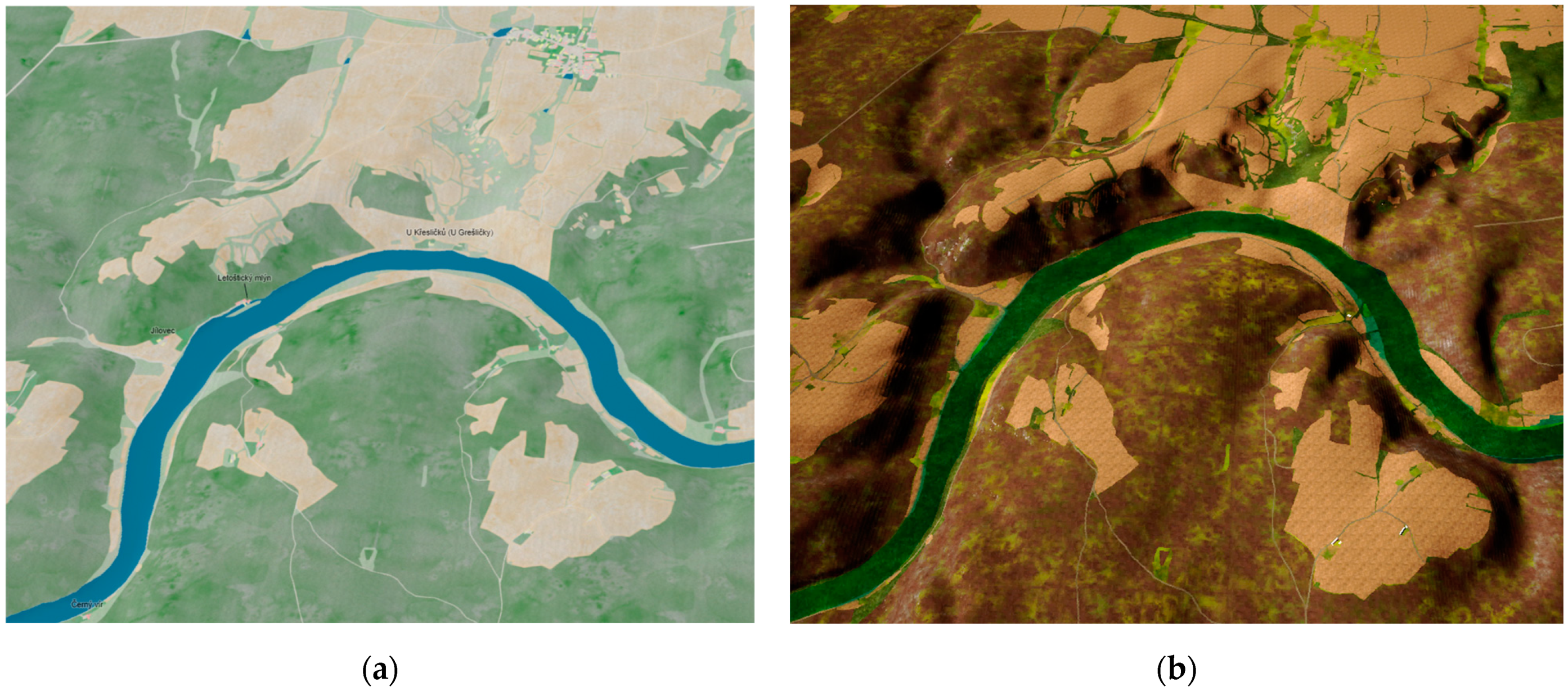

The second dataset was created by manual vectorization of scans of the Imperial obligatory imprints of the stable cadaster, which capture land use information between the years 1821 and 1840. In the original GIS visualization, the whole vectorization shown in Figure 3a was used, owing to the global height layer available in ArcGIS Online; this layer, however, is not available for use in UE. For this reason, the first change to the vectorization was to reduce its scope to match the area covered by the DTM, as shown in Figure 3b.

This was performed using the Clip geoprocessing tool with the DTM outline polygon shown in Figure 3b as the clipping feature layer. After reducing the land use layer to the area of the DTM, the whole vector layer had to be converted to raster. This is because, while UE can import and display vector data, it converts all polygons into 3D splines. This creates a vast number of actors, which causes performance issues. On top of that, they do not interact with the terrain in any way and cannot be used to visualize land use on the terrain. In comparison, in a 3D GIS scene, 2D layers are projected on top of the terrain, and with the help of Symbology, those layers can apply textures to parts of the terrain according to the land use information. In UE, however, the way to apply textures (in UE called materials, which on top of textures contain further details regarding physics and lighting-related attributes) is vastly different: a terrain (in UE called a landscape) can only have one single material assigned to it. However, this material can be customized using a Blueprint visual scripting system to create multiple layers with different attributes that can be assigned to various parts of the landscape. These layers are available as a paint option, allowing manual landscape painting. To use them on a larger scale, however, the layer areas must be imported in the form of raster masks. This means that the whole set of land use data had to be converted into rasters of the exact dimensions of the original landscape, and specific file formats with specific naming schemes had to be used to apply different textures to different areas, depending on the land use information.

Before converting land use data into raster form, it is essential to understand how the final raster is used in UE so that it can be tailored and changed beforehand. Typically, rasters in UE are used in two ways: to import the landscape itself or to apply textures (materials) using landscape attributes like slope or aspect in raster format. These are then used to apply materials and create seamless material blending between the individual mask layers. The individual pixel values of the mask are used either as a value of fact (for example, slope values) or as a weight for material blending. This visualization uses a process similar to material blending in the importing sense: land use rasters are applied in UE to individual landscape parts as raster masks. From this comes the first change made to the land use dataset: the aggregation of all separate areas of the same land use under one ID. The goal was to make all regions of the same land use look the same in UE. Since there were no plans for material blending and there is no need to differentiate between areas with the same land use, having different values for each polygon in the final raster is unnecessary and may cause problems in UE when applying materials. In addition, working with tens of thousands of polygons in GIS may cause instability, errors when using geoprocessing tools, and other problems. For these reasons, all polygons of the same land use were aggregated (merged). After the dataset was aggregated by land use type into fourteen groups using the Select by Attribute tool and merging, each land use class was converted to an individual raster using the Polygon to Raster geoprocessing tool, creating 14 rasters in total. However, some rasters, such as water bodies or buildings, were not used further since those were modeled in the final visualization in different ways. Another reason for the aggregation was that using the Polygon to Raster geoprocessing tool with a selection as part of the input features creates one raster for each selected polygon, resulting in thousands of rasters, which is unnecessary and unsuitable for further data processing.
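The aggregation and the one-raster-per-class idea can be sketched in plain Python. This is a stand-in for the Select by Attribute, merge, and Polygon to Raster steps, not ArcGIS code; the tiny grid, the polygon IDs, and the assumption that a fully covered cell is written as the maximum 16-bit value are all hypothetical.

```python
from collections import defaultdict

FULL_WEIGHT = 65535  # assumed "fully covered" value in a 16-bit unsigned mask


def dissolve_by_land_use(polygons):
    """Group polygon ids by land use class (one group per class)."""
    groups = defaultdict(list)
    for poly_id, land_use in polygons:
        groups[land_use].append(poly_id)
    return dict(groups)


def class_masks(class_grid):
    """Build one binary mask per class from a grid of class labels."""
    classes = sorted({cell for row in class_grid for cell in row})
    return {
        c: [[FULL_WEIGHT if cell == c else 0 for cell in row]
            for row in class_grid]
        for c in classes
    }


# Hypothetical polygons and a 2 x 3 rasterized land use grid.
polygons = [(1, "forest"), (2, "field"), (3, "forest"), (4, "meadow")]
grid = [["forest", "forest", "field"],
        ["meadow", "forest", "field"]]

groups = dissolve_by_land_use(polygons)
masks = class_masks(grid)

print(groups["forest"])   # [1, 3]
print(masks["field"])     # [[0, 0, 65535], [0, 0, 65535]]
```

Each class ends up with a single mask regardless of how many polygons it originally contained, which mirrors the reason for merging before rasterization.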

The use of the Snap Raster option needs to be highlighted, as it is mandatory at this point of the process. Without it, the output rasters would have different dimensions than the original DTM raster(s), because areas at the edges of the processing extent may be cut off (for example, when creating the forest raster, there may not be any forest in the border areas). This would create problems further down in the process, where the final land use rasters would be difficult, if not impossible, to align correctly with the landscape when imported into UE as raster masks.
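The idea behind snapping can be shown with a small calculation. This is a simplified illustration of aligning an extent to a reference grid, not the implementation used by ArcGIS; the function name, grid origin, and extents are hypothetical.

```python
import math


def snap_extent(xmin, ymin, xmax, ymax, origin_x, origin_y, cell):
    """Expand an extent outward so its edges fall on the reference grid."""
    snapped_xmin = origin_x + math.floor((xmin - origin_x) / cell) * cell
    snapped_ymin = origin_y + math.floor((ymin - origin_y) / cell) * cell
    snapped_xmax = origin_x + math.ceil((xmax - origin_x) / cell) * cell
    snapped_ymax = origin_y + math.ceil((ymax - origin_y) / cell) * cell
    return snapped_xmin, snapped_ymin, snapped_xmax, snapped_ymax


# Hypothetical DTM tile grid: origin at (0, 0) with 1 m cells.
dtm_origin = (0.0, 0.0)
cell = 1.0

# Hypothetical bounding box of the forest polygons inside that tile.
forest_extent = (10.4, 20.7, 55.2, 80.1)

snapped = snap_extent(*forest_extent, *dtm_origin, cell)
print(snapped)  # (10.0, 20.0, 56.0, 81.0)
```

In practice the grid definition would be taken from the DTM raster itself, so that every land use mask shares its cell boundaries.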

It should be noted that some geographic information is lost when converting vector data into raster masks. Although the loss can be reduced by creating rasters with smaller pixel sizes, it is unavoidable. With an area of 1670 km² multiplied by 14 different raster masks, going below a pixel size of 1 m is not feasible due to the sheer amount of storage space required. This means that details smaller than 1 m will be lost.

After creating the individual land use rasters displaying each land type (forests, fields, gardens, etc.) in this way for the whole Vltava River valley, they were each split to match the rectangular areas of the 68 DTM parts. The process for this further division was very similar to dividing the original DTM using the Split Raster geoprocessing tool. The difference here was the use of the Snap Raster option, where the rasters used for snapping were the individual 68 rasters, to ensure that the resulting land-use masking rasters would match the individual DTM rasters.

2.6. Work inside Unreal Engine

The first step in creating the visualization of the Vltava River valley in UE was to import the terrain rasters as a landscape. This was performed using the Datasmith Importer with CityEngine as a middleman. After the landscape was imported into UE and the datasets were prepared in GIS, further preparations on the UE side were necessary to import the land use data as raster masks. First, many settings needed to be changed to ensure maximal visual quality and the functionality of the processes used, both in the UE editor and within the final visualization. The most important settings are mentioned further in the text at the appropriate places. However, for ease of use and the ability to set everything beforehand, all Editor Preferences and Project Settings used are listed in Table 5 and Table 6. The remainder of this chapter describes the steps taken to prepare the remaining data for correct import and processing in UE and the steps performed within UE to create the final Vltava River visualization.

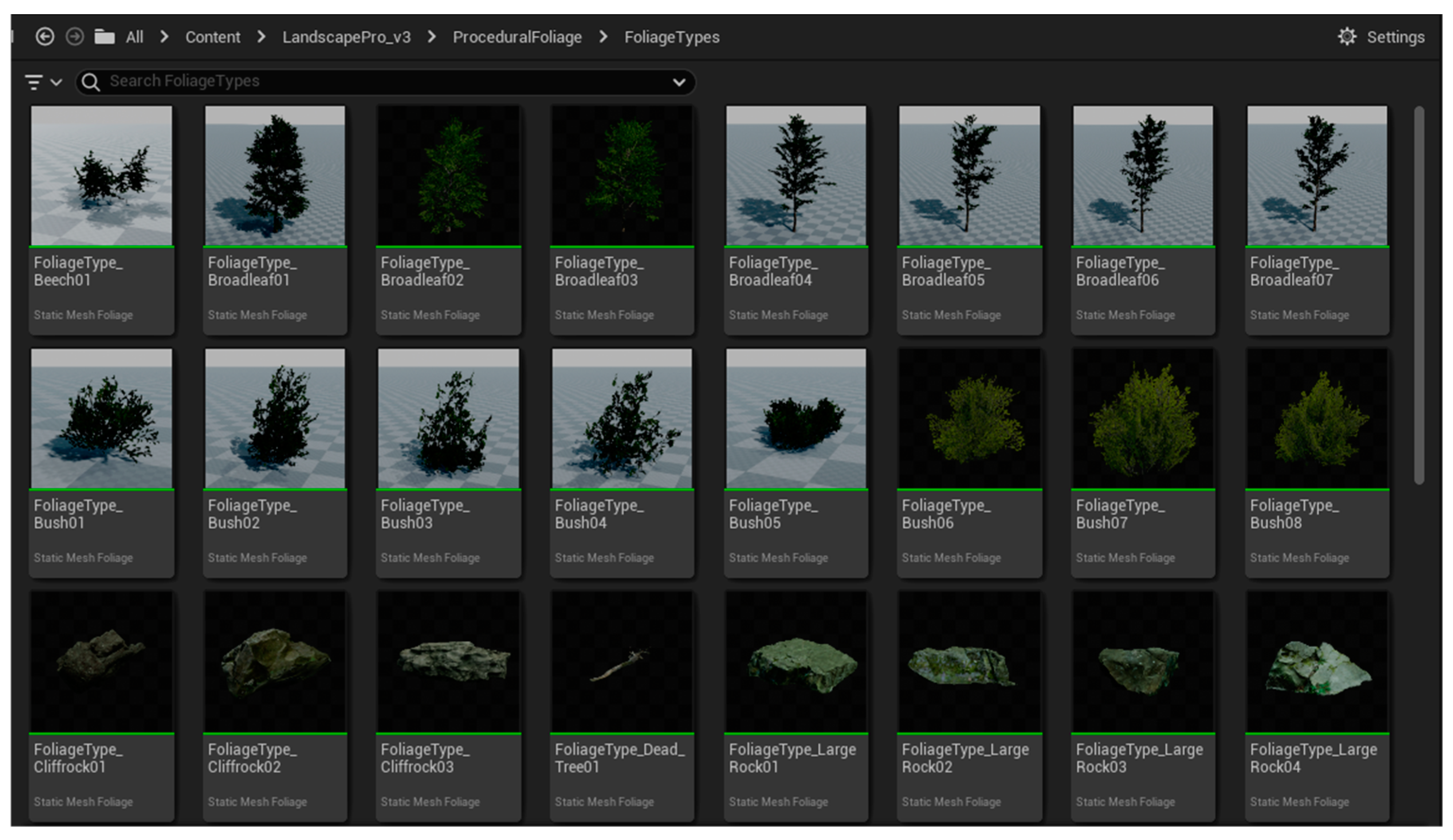

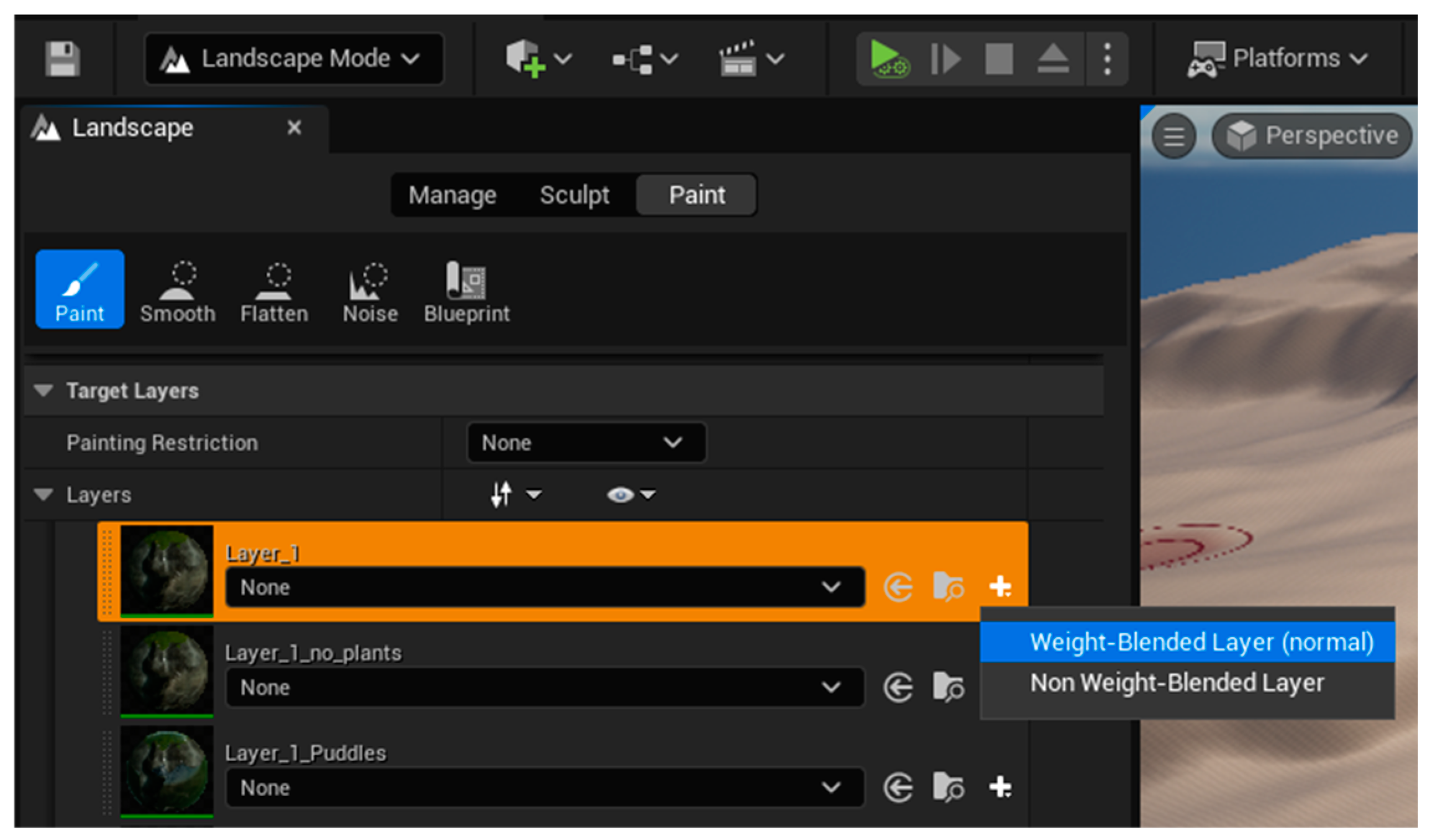

The first step is to display and use the land use data on top of the imported landscape. To do this, landscape layers within the landscape material had to be defined. For this purpose, a plugin named Landscape Pro 3 was used. This plugin contains already-prepared materials for landscape visualization and many textures, 3D models, actors, and other assets, as shown in Figure 4.

These were needed to visualize the land use information and, based on it, to procedurally generate grass, trees, rock formations, and other 3D models that fill up the landscape and make it look organic, realistic, and detailed. After enabling the Landscape Pro 3 plugin, its landscape material was applied to the imported landscape by dragging the material file from the Content Browser plugin directory LandscapePro_v3 > Environments > Landscape to the landscape material attribute inside the landscape actor Details panel. This material file contains the definitions of each material layer, where each layer is composed of parameters, textures, normals, masks, and functions. These layers are then blended to create the final landscape material. Furthermore, this material file contains a Grass Output section that, depending on which layers are blended, procedurally generates ground foliage and 3D objects on top of the landscape. After applying the material, Layer Info must be created manually. To do so, the UE editor needs to be switched to Landscape Mode, and then, under the Paint tab, Layer Info must be created for each layer using Create Layer Info (the + icon next to each layer), as shown in Figure 5.

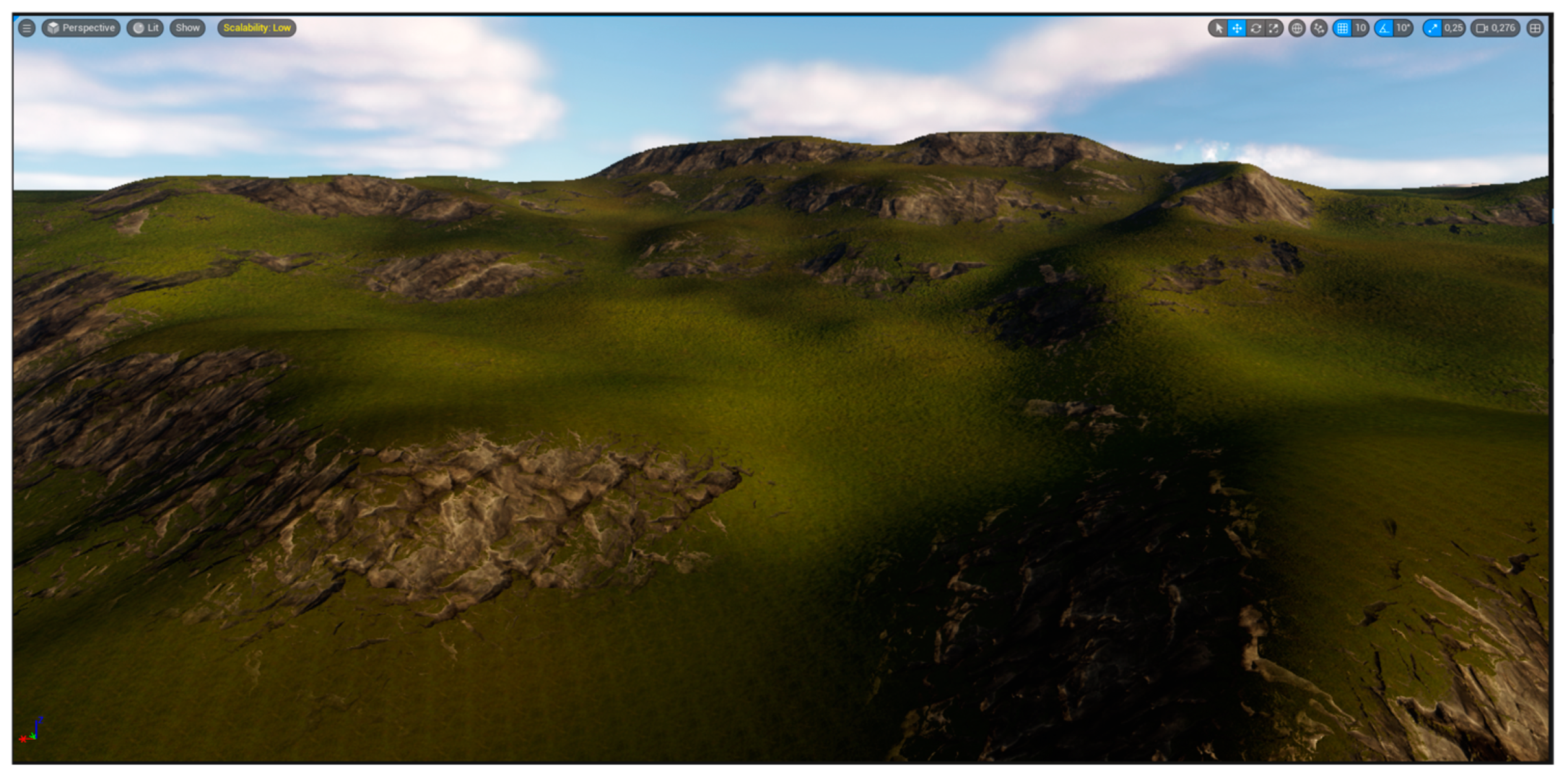

After the Layer Info is created, the base (first) layer is applied to the selected landscape. Textures are then applied with primary terrain attributes such as slope taken into account, so that either rock or grass textures are used, as shown in Figure 6, together with procedurally generated foliage matching the texture.

Unlike the base layer, all the other layers (from layer two upwards) are not applied automatically and must be applied manually or imported as raster masks. For this, each layer needs its own Layer Info. If a layer is missing Layer Info, the import feature is greyed out and no import is allowed. The import of layer masks is located under Landscape Mode in the Manage/Import tab. Each layer with Layer Info allows the import of raster files as raster masks. To be imported as layer masks, rasters must meet specific requirements that are not readily apparent. Once all these requirements are met, the rasters can be imported as material layer masks, after which the layers are applied automatically, adding textures and generating foliage and other objects. The requirements are as follows:

The file format of the imported rasters needs to be RAW or PNG

The rasters must have a color depth of 16 bit (greyscale) with unsigned values

All imported rasters need to have unique names within their directory

No auxiliary files can be present in the same folder as the imported raster

Rasters must contain an alpha channel or the file format may not be recognized
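Several of these requirements can be checked before attempting an import. The sketch below is a hypothetical pre-flight check for PNG masks that reads only the standard PNG header; the function names, file names, the sidecar-file heuristic, and the 505 × 505 landscape size are assumptions for illustration and are not part of UE or of the workflow described here.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
GREYSCALE, GREYSCALE_ALPHA = 0, 4  # PNG colour types


def read_png_header(data: bytes):
    """Return (width, height, bit_depth, colour_type) from PNG bytes."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    return struct.unpack(">IIBB", data[16:26])


def check_mask(data, landscape_size, folder_names, own_name,
               require_alpha=True):
    """Collect problems; an empty list means the mask passes the checks."""
    problems = []
    width, height, bit_depth, colour_type = read_png_header(data)
    if bit_depth != 16:
        problems.append(f"bit depth is {bit_depth}, expected 16")
    allowed = (GREYSCALE_ALPHA,) if require_alpha else (GREYSCALE, GREYSCALE_ALPHA)
    if colour_type not in allowed:
        problems.append("raster is not greyscale with the expected channels")
    if (width, height) != landscape_size:
        problems.append("dimensions differ from the landscape")
    # Heuristic: sidecar files share the raster's name stem.
    stem = own_name.rsplit(".", 1)[0]
    extras = [n for n in folder_names
              if n != own_name and n.startswith(stem + ".")]
    if extras:
        problems.append(f"auxiliary files present: {extras}")
    return problems


# Hypothetical header of a 505 x 505, 16-bit greyscale + alpha PNG.
good_header = (PNG_SIGNATURE + struct.pack(">I", 13) + b"IHDR"
               + struct.pack(">IIBBBBB", 505, 505, 16, GREYSCALE_ALPHA, 0, 0, 0))
print(check_mask(good_header, (505, 505), ["forest_01.png"], "forest_01.png"))
```

For a real file, the first 26 bytes read from disk would be passed as `data`, and `folder_names` would come from a directory listing.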

Another step in the creation of landscape visualization is to fill it with content. In this case, it is vegetation and its procedural generation. This step is tightly tied to the landscape materials and material layers. The plugin used for their creation also contains procedural generation capabilities. In this case, the procedural generation of vegetation is accomplished in two ways: globally generating ground vegetation and objects on the whole landscape, and locally generating vegetation based on individual land use layers coverage.

The global procedural generation that applies to all the material layers is defined directly within the material layer. It aims to generate vegetation that fills the entire landscape depending on the layers used. The first layer is the base (main) layer for the whole landscape. It does not require a location definition as the other layers do and is applied automatically after the material is assigned to the landscape. After the assignment, it applies textures and generates 3D vegetation on the landscape. The generated vegetation consists of small and tall grasses, flowers, bushes, wooden branches, and other ground foliage shown in Figure 7, which is fully animated.

Furthermore, vegetation placement considers the terrain slope and adjusts which and how dense vegetation will be generated. This process is fully automated and does not require any further actions. This base layer can be overlaid at specified locations with the other defined layers, such as layers representing forests, whose locations are imported as land-use raster masks. If the non-base material layers are applied over the base layer, a blend of those layers is created, resulting in the generation of foliage specific to the layers blend, as is defined in the Grass Output part of the material. This feature, however, is not used because of how the original land use data (vectorization created in GIS) was made. It does not contain any overlaps between individual entries, resulting in no possibility of such layers blending in UE outside the base grass layer.

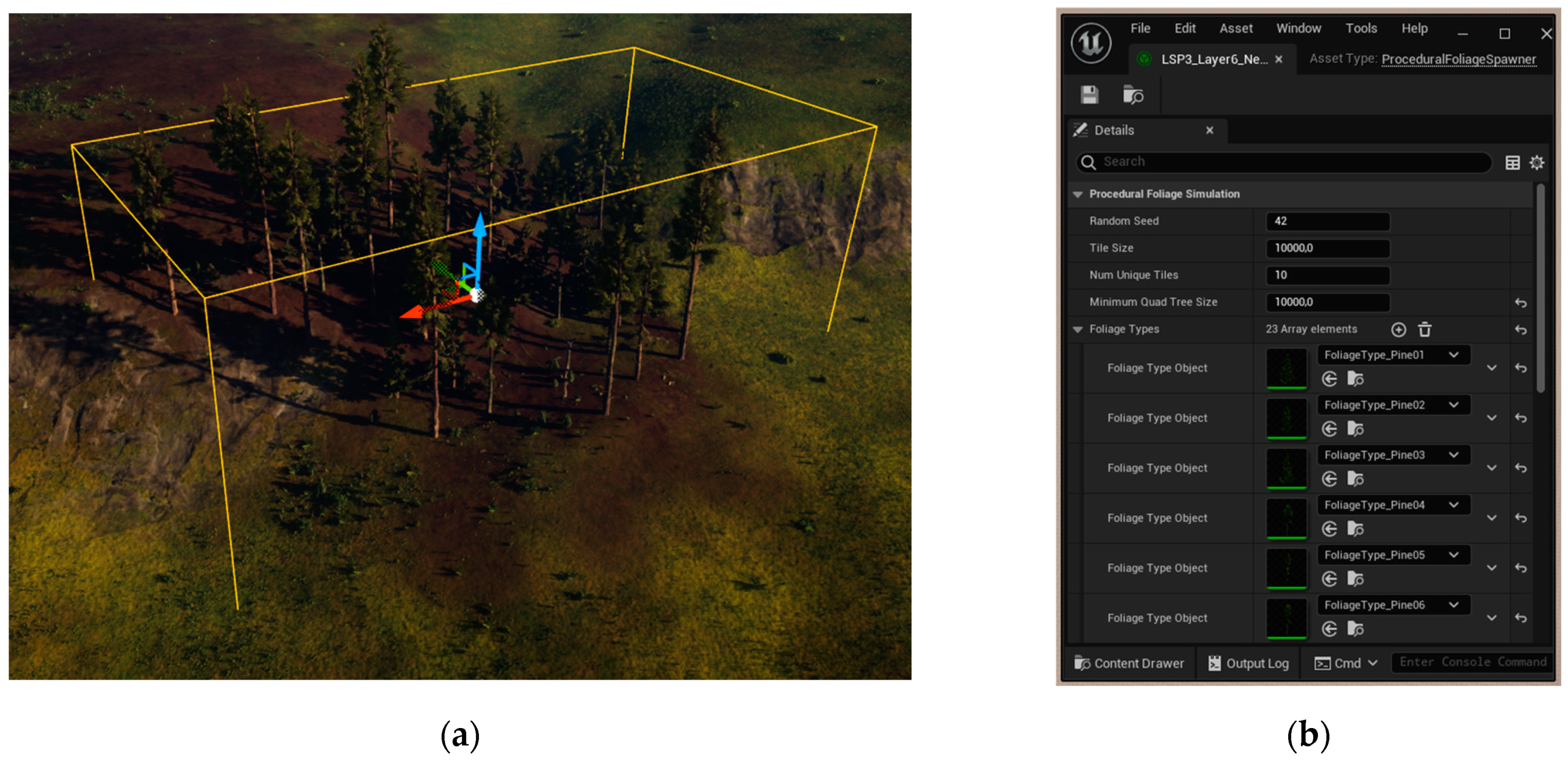

To procedurally generate larger objects, such as broadleaf and needle trees and rock formations, Procedural Foliage Spawner actors must be used. The spawner creates a bounding box, as shown in Figure 8a, that interacts with specific predefined material layers. As a result, procedural generation is performed only on top of the matching material layer and within the bounds of the bounding box. Furthermore, the spawner controls which objects will be generated, as shown in Figure 8b. However, for the spawners to work, the Procedural Foliage option must first be enabled in the Editor Preferences. Without it, the spawner actors cannot be added to the scene. A further adjustment was made in the Project Settings, where the anti-aliasing method was changed to Temporal Anti-Aliasing (TAA) to remove flickering and shimmering from the animated foliage, resulting in organic, realistic, and detailed foliage, as in Figure 9.

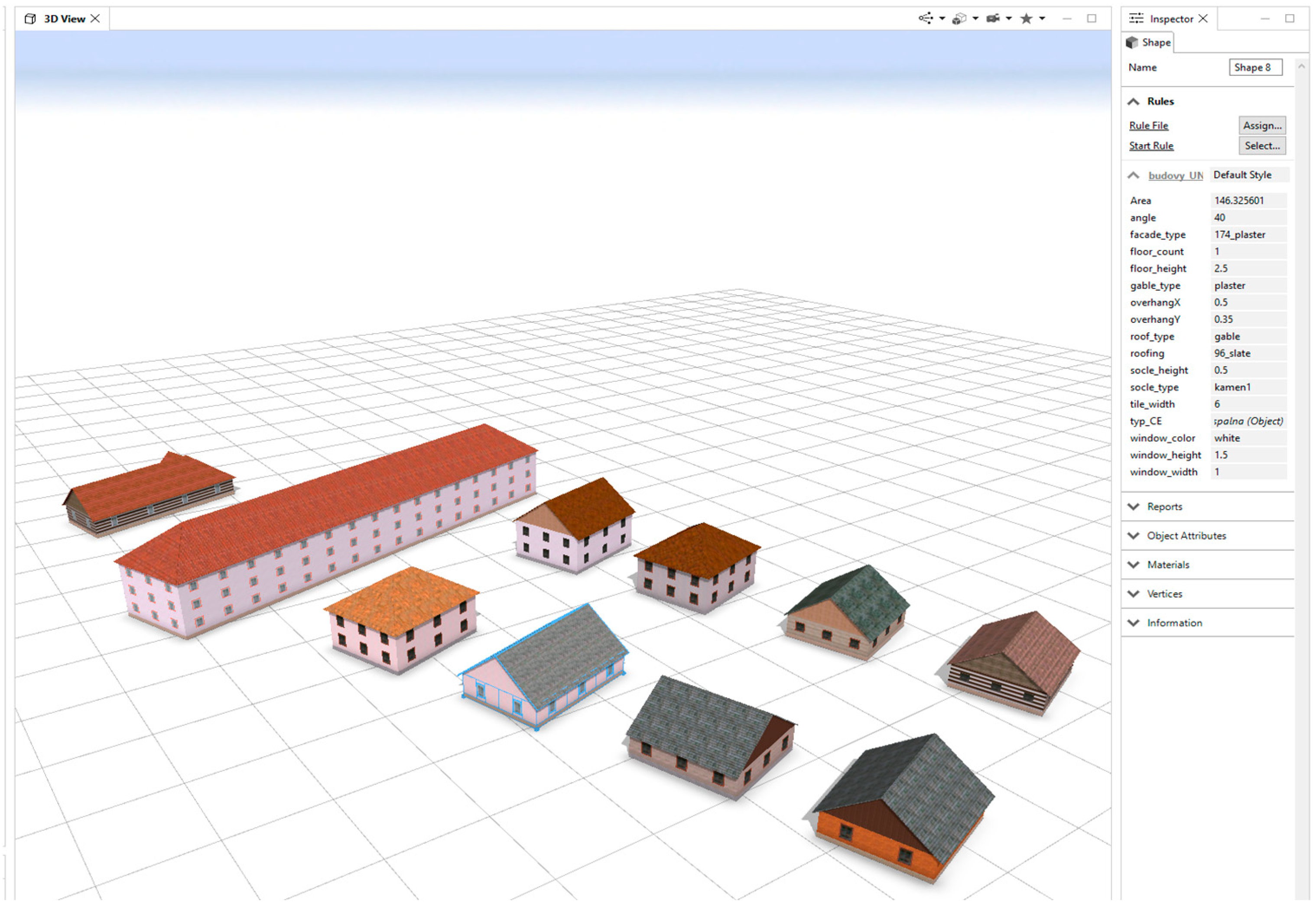

The primary method of creating the 3D models of over 28,000 buildings was procedural modeling performed in CityEngine, as shown in Figure 10. However, these models are not part of the input data for this case study, since at the time of their creation they were exported only in a format compatible with ArcGIS Online. Since one of this study’s goals is to recreate the visualization of the Vltava Valley, those 28,000 models must also be recreated. The easiest way to do so is to create them again in CityEngine and import them into UE, either in the *.udatasmith format or in any other format supported by UE (for example, glTF). However, because the case study aims to explore the use of GIS data within UE, another solution was used.

CityEngine is 3D modeling software that requires a license, but it has a freely downloadable software development kit, the CityEngine SDK. This C++ API allows the procedural modeling used within CityEngine to be implemented in other applications. Based on this SDK, a plugin for UE named Vitruvio was developed. Vitruvio is free for personal, educational, and non-commercial use; however, as input, it requires Rule Packages (RPK), which are created in CityEngine and therefore require a license [34, 35]. An RPK file includes assets and a CGA rule file that encodes an architectural style. With Vitruvio, the procedurally created buildings remain procedural and can be changed easily through a parametric interface (height, style, and appearance).

To use Vitruvio, polygons representing the footprints of the modeled buildings must be imported into UE. This is done using CityEngine and the Datasmith Importer, which creates an Actor in the project for each imported polygon. Once the polygons are imported, a new action menu option appears: Select all viable initial shapes in the hierarchy. This selects all Shapes within the selected Actors, which can then be converted into Vitruvio Actors with procedural modeling functionality. Before the conversion, the RPK file must be present within the project; to add it, the RPK file created in CityEngine is dragged into the Content Browser. Then, Convert to Vitruvio Actor is chosen from the context menu and the desired RPK file is selected. All shapes are converted to Vitruvio Actors, and each original polygon is modeled into the desired shape according to the RPK file, which in this case yields fully textured building models.

The 3D modeling of over 21,000 buildings was quick, taking a couple of minutes on both tested machines, which is comparable to procedural modeling performed in CityEngine. The process was also stable during testing, in which importing and procedural modeling were repeated on both machines. After the Shapes are converted into Vitruvio Actors, the attributes used for procedural modeling can be changed in the Vitruvio Actor details, in the Vitruvio Component section under Attributes. The implementation is similar to procedural modeling in ArcGIS Pro, which is available in the polygon symbology as an option under the fill symbol layer. Both methods use CityEngine RPK files to create models procedurally from the polygon footprint. In both cases, each model has its own attributes, created from the rule file that is part of the RPK, and these attributes can be changed to edit the look of individual models. Neither implementation (UE or ArcGIS Pro) allows creating or editing the original rule file or altering the original assets that are part of the RPK; this is possible only in CityEngine, in which the rule file was created. The UE version also offers additional options related to physics and materials that are unique to game engines.

The last step, unique to UE, is the conversion of Vitruvio Actors to Static Mesh Actors. This process preserves the changes made to the Vitruvio Actors and saves the procedurally modeled objects. Without this conversion, the Vitruvio Actors would exist only until the project is closed, after which the original shapes would need to be imported again. The conversion involves a process called cooking. UE stores content assets in particular formats that it uses internally, such as PNG for texture data or WAV for audio. However, this content needs to be converted into different formats because the target platform uses a proprietary format, does not support the format Unreal uses to store the asset, or a more memory- or performance-efficient format exists. The process of converting content from an internal format to a platform-specific format, together with compiling shaders, is called cooking. It can take longer with more complex assets, such as meshes with many polygons [36]. The conversion is started in the same way as the initial one: after all Vitruvio Actors are selected, the context menu offers a new option, Convert to static mesh actors. Unlike procedural modeling, this process can be unstable because of its very high hardware (memory) requirements, and it also takes considerably longer, with the conversions taking hours to finish. Possible solutions are to split the Actors into smaller batches processed one at a time, in this case 10,000 at a time, or to increase the available memory, either by installing more RAM or by significantly enlarging the system’s virtual memory through a larger page file in Windows.
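The batching strategy can be illustrated with a short Python sketch. It only shows how a selection of roughly 21,000 items splits into batches of 10,000; the actor names are placeholders, and the selection and conversion themselves are assumed to be done manually in the editor as described above.

```python
def batches(items, batch_size=10_000):
    """Yield consecutive slices of at most batch_size items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# Placeholder names standing in for the selected Vitruvio Actors.
actor_names = [f"VitruvioActor_{i}" for i in range(21_000)]

for number, batch in enumerate(batches(actor_names), start=1):
    # In the editor, one batch at a time would be selected and converted here.
    print(f"batch {number}: {len(batch)} actors")
```

Converting one batch at a time limits the number of meshes processed in a single run, which is the intent of the chunking described above.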

During model cooking, the CPU and memory are utilized heavily. While a faster CPU shortens the processing time, insufficient memory causes UE to crash. Resource Monitor reveals that UE alone commits over 170 GB of memory during cooking.

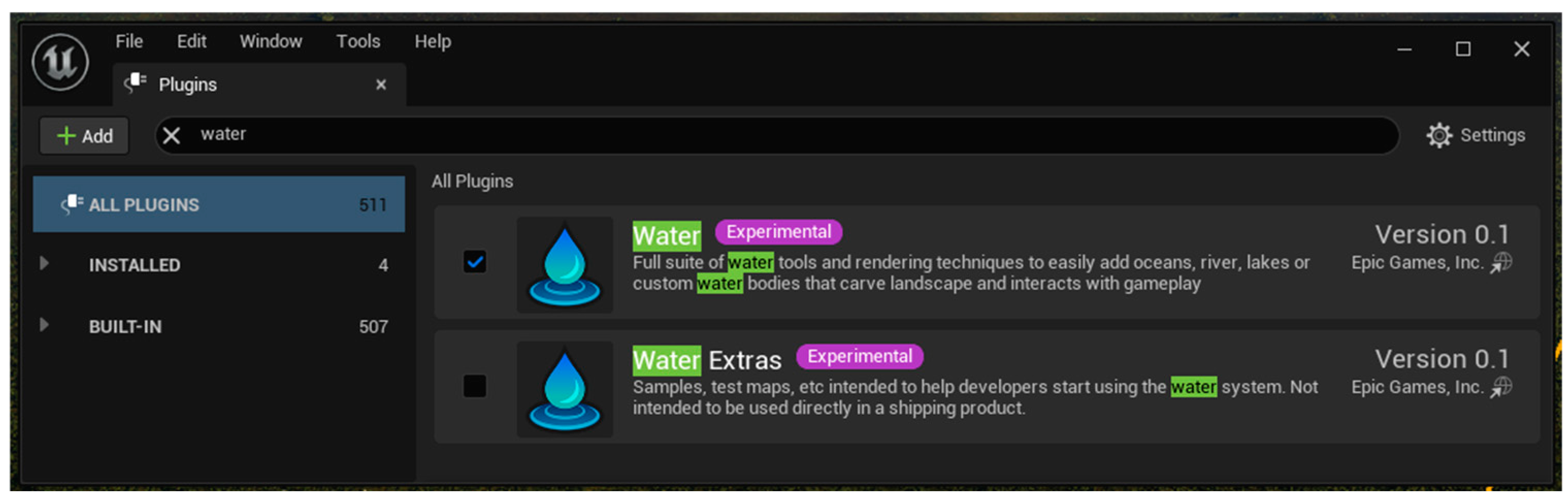

Another part of the visualization is creating water bodies. To add and work with water, a plugin named Water is used. This plugin is built into UE and is currently marked as experimental, as shown in Figure 11.

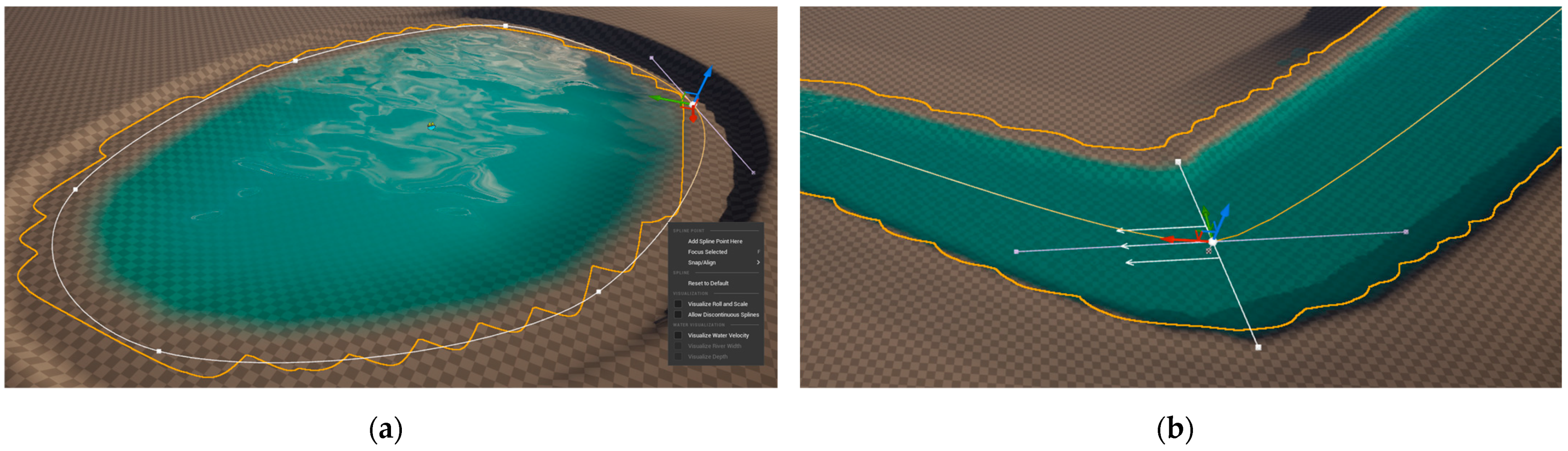

This plugin brings multiple types of water bodies to UE, but only two are used to visualize the Vltava Valley: Lake, for modeling smaller water bodies such as fish farms, water reservoirs, or ponds, and River, for modeling the Vltava River and smaller streams, as shown in Figure 12.

The simpler of the two is the Actor named Water Body Lake. This actor creates a lake defined by three spline points, with the possibility of adding more. The spline points are connected by arc-shaped curves whose attributes can be changed in the detail panel or through their graphical representations directly in the editor scene. The lake actor is more straightforward than the river actor because a lake has only one height value (the lake must be flat). Specific attributes, such as the speed of water flow, do not matter for small-scale water bodies, and there are significantly fewer attributes in general. By default, the lake actor contains the Affects Landscape option, which allows the lake to interact with and change the landscape on which it is situated. While this feature can be disabled, it must be used here, since the smaller water bodies for which Water Body Lake is used are not modeled within the DTM. In the original datasets, they are represented only by land-use vectorizations and consequently by texture (only their position is known).

The lack of lake bottoms in the DTM and their representation only in the vectorization create additional problems. Many water bodies in the land-use vectorizations have sharp angles, which are significantly harder to reproduce with spline points, so matching the position of the banks to the vectorization exactly is lengthy and complicated. Furthermore, the exact measurements of the bottoms of these water bodies (depth, angle, etc.) are unknown, at least in the datasets used for this modeling. This allows greater creative freedom in how the water bodies are created, but at the cost of historical accuracy and fidelity to the base GIS data, in this case the vectorization of old maps.

The actor named Water Body River is the more complex of the two. Like the lake actor, it creates a river defined by multiple spline points; however, these have many more associated attributes. First, each spline point has its own height (Z coordinate), which makes the manual creation of rivers more accurate at the cost of making the river actor harder to work with. Furthermore, each spline point has its own depth, river width, flow orientation, and flow strength/speed. The Water Body River actor also contains the Affects Landscape option; however, in the case of the Vltava River, the situation is reversed. Since the DTM used already includes the bottom of the river basin, there is no need to alter the terrain. Doing so could also lead to terrain continuity problems, as in specific spots it would be challenging to make the terrain changes made by the river actor unnoticeable and seamless with the original terrain.

Another reason for not using the Affects Landscape option is that the whole workflow of creating rivers is then significantly simplified. Since the water level is always lower than the surrounding terrain and the river bottom is already modeled within the DTM, the river's dimensions, such as width and depth, can be made purposefully larger than necessary so that the water fills the river basin. This removes the need to set up the depth, width, and terrain angles of every single spline point and reduces the process to creating the individual spline points and setting their locations, which is already lengthy given the shape and length of the river and the fact that all spline points are created and positioned manually.

For streams, however, the Affects Landscape option is enabled or disabled depending on the location of the individual stream and the terrain around it. Since these streams are substantially smaller than the Vltava River, using Affects Landscape can help highlight the stream in the terrain. This is especially useful where physics-abiding water in UE could not otherwise form such a stream because it lies on flat or sloped terrain. As with the lakes, the stream bed may not be modeled in the DTM. Where the stream runs, for example, through a ravine, this may not be a problem, but a stream running through a pasture or meadow may require the Affects Landscape option not only to make the stream stand out but also to model the water correctly.
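The simplified river workflow, in which only the location of each spline point is set and the remaining dimensions are deliberately oversized, can be sketched as a small data structure. This is a minimal Python illustration and not the Water plugin's API: the class, the field names, the default values, and the sample coordinates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RiverPoint:
    """One river spline point; the field names are illustrative only."""
    x: float
    y: float
    z: float           # water surface height at this point
    width: float
    depth: float
    flow_speed: float


def oversized_river_points(surface_points, width=500.0, depth=50.0, flow_speed=1.0):
    """Build spline points from (x, y, z) tuples.

    Width and depth are deliberately generous and shared by all points,
    so only the location of each point has to be chosen individually.
    """
    return [
        RiverPoint(x, y, z, width, depth, flow_speed)
        for x, y, z in surface_points
    ]


# Hypothetical water-surface samples along a centerline, descending downstream.
centerline = [(0.0, 0.0, 271.0), (120.0, 35.0, 270.6), (260.0, 40.0, 270.1)]
for point in oversized_river_points(centerline):
    print(point)
```

Because the terrain from the DTM hides any water that extends below or beyond the river bed, the shared oversized values stand in for per-point tuning.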

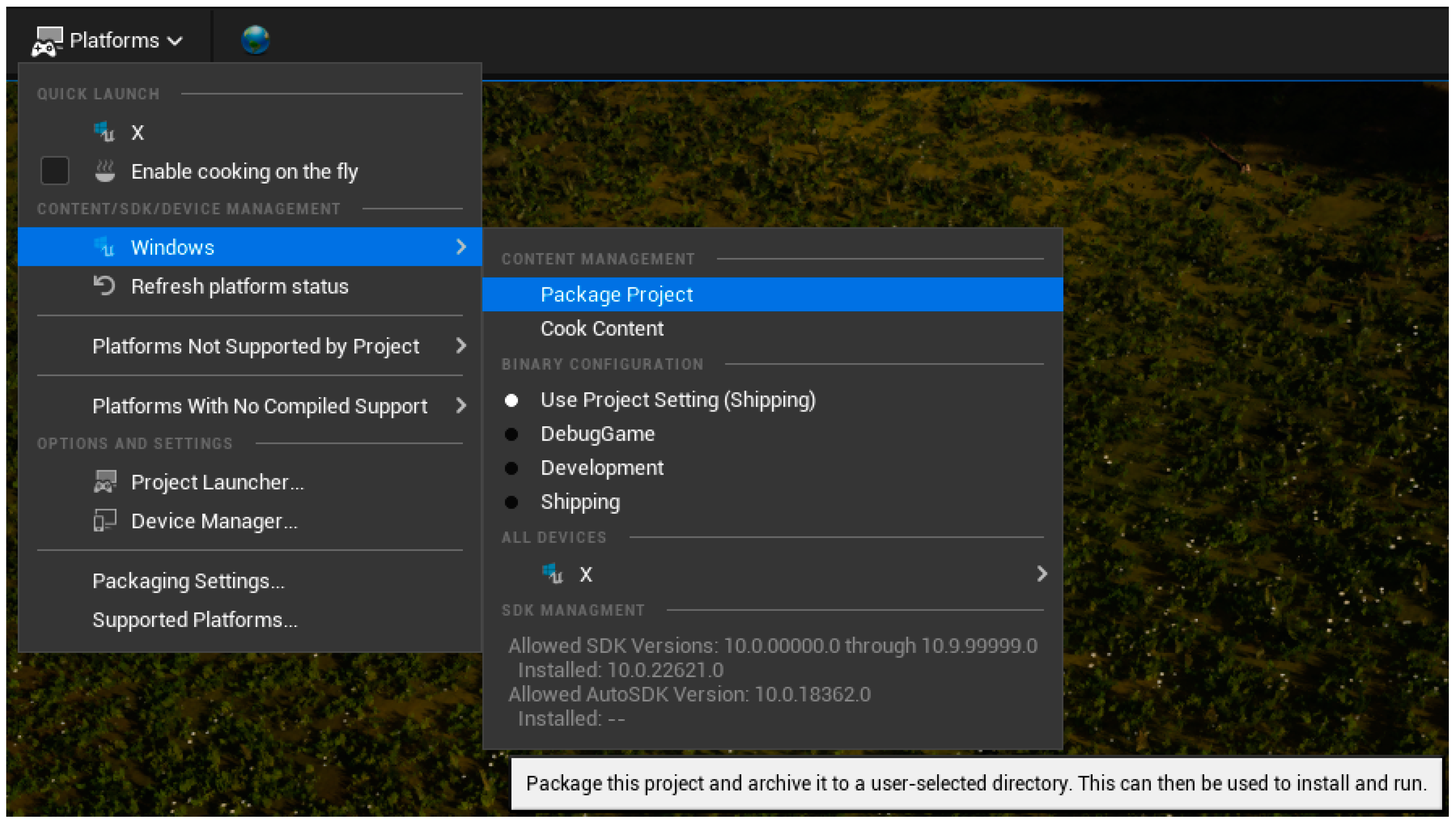

After the scene is filled with content, meaning it contains the finished landscape with material layers applied according to the land-use information, the procedurally generated vegetation on top of it, all the water bodies, and the procedurally modeled buildings, the last step is to package the whole scene. Packaging allows the scene to be opened via an executable file, so UE no longer needs to be installed. The first step in packaging the project is to fill out the information in the Project Settings under Project - Description, namely the Project Display Title (also used by the operating system), publisher information if applicable, the project version, and others. Next, it is crucial to choose the default maps (levels) to be opened, in the Project - Maps & Modes section. Lastly, the Build Configuration needs to be set to Shipping in the Project - Packaging section, which allows the final packaged project to be opened without UE. All other settings can be changed according to an individual project’s needs; for this study, they were left at their default values. Once the project is set up, packaging can be started from the main toolbar under the Platforms menu, as shown in Figure 13.

However, this step has considerable potential to cause errors and failures. The most straightforward errors to solve are those related to the required SDKs, which can be fixed by installing and updating .NET Core 3.1, .NET 9.0, the Windows SDK, and Visual Studio 2022. Another set of problems may arise from deleted and unused content within the project. The packaging process packages all levels, including any unused (testing) levels. These usually contain invalid references to deleted content, which causes packaging to fail. For this reason, it is essential to “clean up” the whole project before packaging, for example, using the Content Browser filter Not Used in Any Level.

After all these steps are completed and potential errors are resolved, the packaging process can be run. It is important to note that the entire process takes a significant amount of time and fully utilizes the CPU for compiling shaders and packing the project according to the options chosen in Project - Packaging within the Project Settings. When packaging finishes, the application executable and all packaged assets are created in the selected folder. The application opens the previously set default level of the visualization with basic movement and camera controls. Within the application, no further modifications to the visualization can be made (apart from programmed interactions); however, the project can still be worked on in UE, and a new version of the application can be packaged.
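In this study, packaging was started from the editor's Platforms menu. As an alternative that was not part of the study, the same step can be scripted through the Unreal Automation Tool (RunUAT) and its BuildCookRun command. The Python sketch below only assembles such a command line for a Windows Shipping build; the helper name and all three paths are placeholders, and the chosen set of flags is an assumption about what a comparable package would need.

```python
from pathlib import PureWindowsPath


def build_package_command(engine_dir, project_file, output_dir):
    """Return the argument list for a Win64 Shipping package via RunUAT."""
    run_uat = (
        PureWindowsPath(engine_dir) / "Engine" / "Build" / "BatchFiles" / "RunUAT.bat"
    )
    return [
        str(run_uat),
        "BuildCookRun",
        f"-project={project_file}",
        "-platform=Win64",
        "-clientconfig=Shipping",  # corresponds to the Shipping build configuration
        "-build",
        "-cook",                   # convert assets to platform-specific formats
        "-stage",
        "-pak",
        "-archive",
        f"-archivedirectory={output_dir}",
    ]


# All three paths are placeholders and must be adapted to the local machine.
command = build_package_command(
    r"C:\UnrealEngine",
    r"D:\Projects\Vltava\Vltava.uproject",
    r"D:\Builds\Vltava",
)
print(" ".join(command))

# To run it on a machine with UE installed:
# import subprocess
# subprocess.run(command, check=True)
```

A scripted build of this kind makes it easier to repeat packaging after the project has been cleaned up or changed.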