In this section, we analyze the experimental results of the two scenarios and compare HDRLM3D with other crowd simulation methods to demonstrate the effectiveness and advantages of our method.

3.2.1. Scenario I

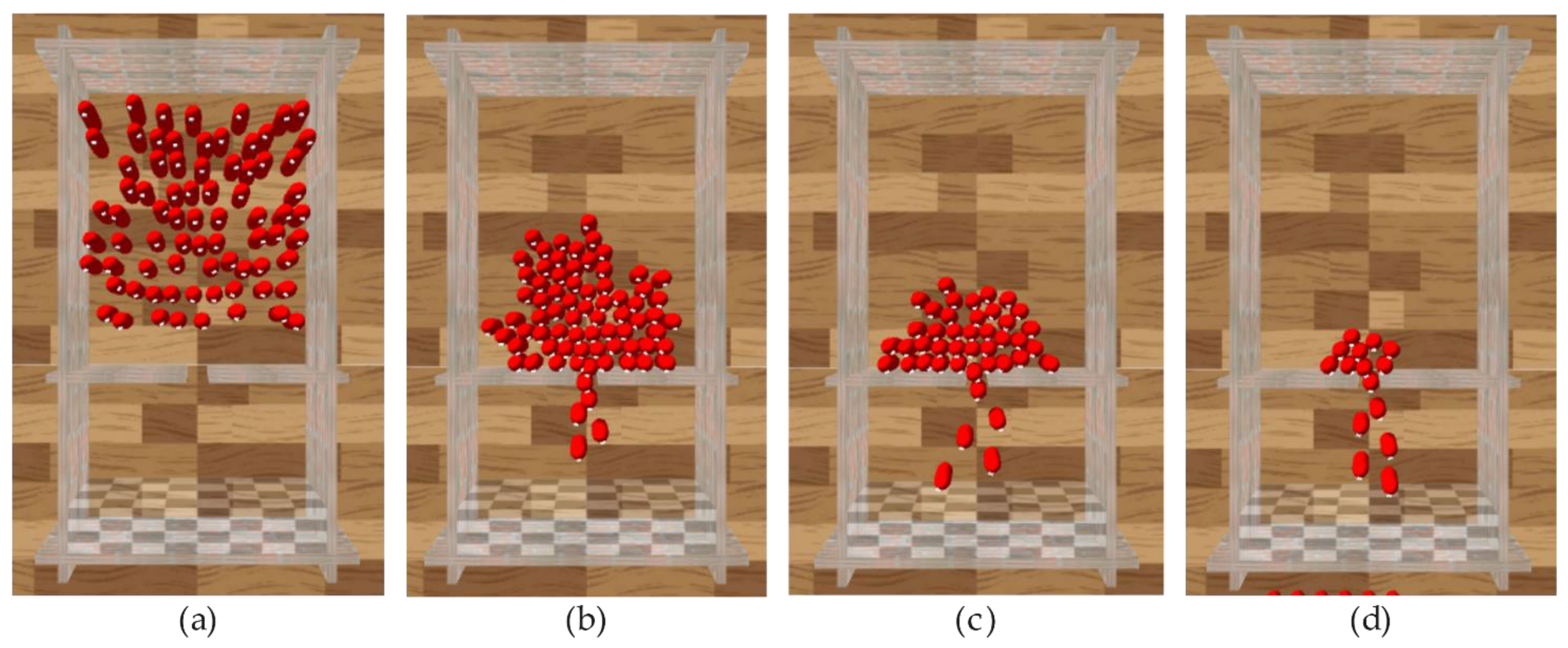

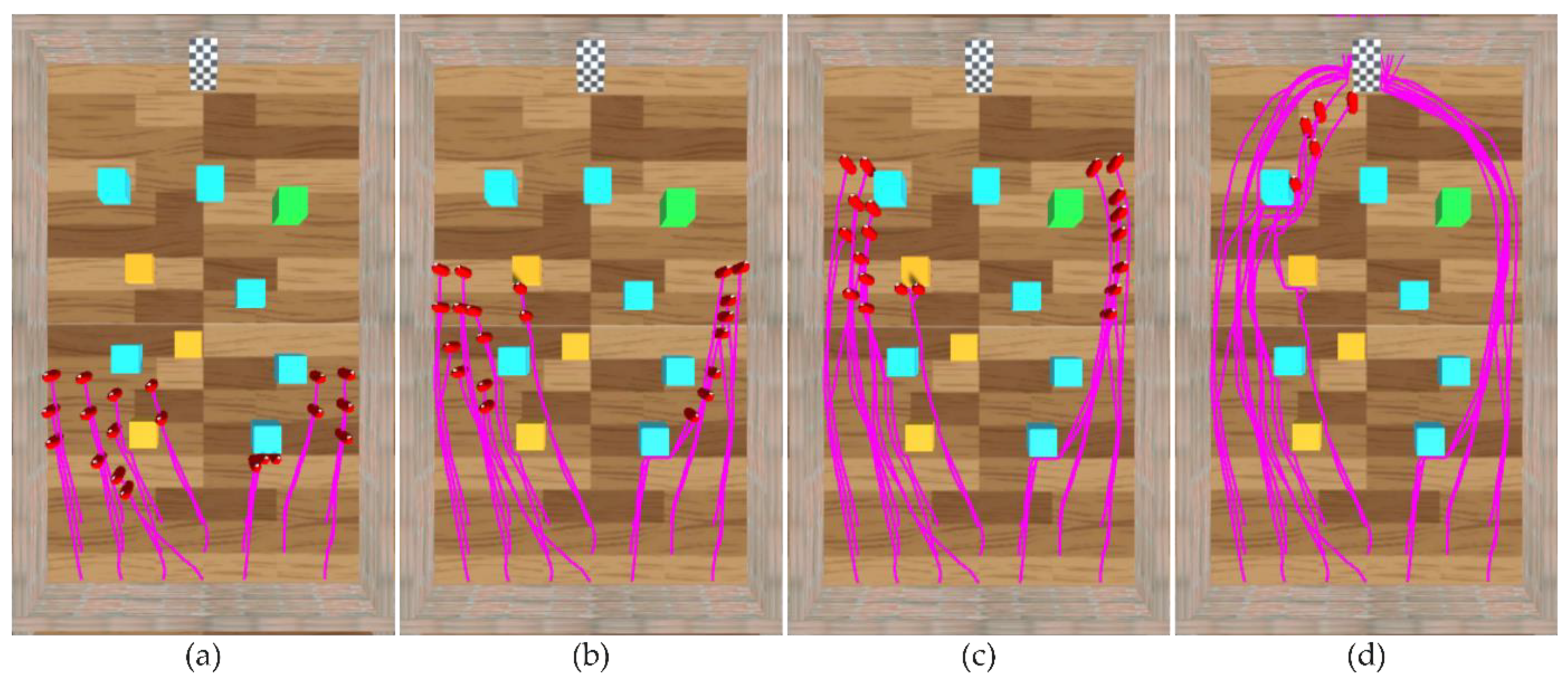

Figure 6 shows a temporal sequence of an experimental result in scenario I. During the experiment, all agents move toward the exit (Figure 6a). Because the exit is narrow, the agents cannot all pass through it at once when they arrive, which causes congestion at the exit (Figure 6b). The blocked agents keep gathering at the exit, and the crowd as a whole takes on the shape of an “arch” until the agents pass through (Figure 6c,d). This experimental result is consistent with the characteristics of the bottleneck effect.

- (1) Density map

As one of the basic quantities used to describe the characteristics of pedestrian flow, density intuitively reflects how pedestrians occupy physical space and can further reveal their movement patterns (such as areas of congestion and paths). Therefore, to verify the validity of the above experimental results, we use a density map to analyze the movement patterns of the agents. Moreover, the social force model (SFM) and optimal reciprocal collision avoidance (ORCA) [40] are classic crowd simulation methods that have been widely recognized and applied in the field. In scenario I, we compare HDRLM3D with SFM and ORCA to demonstrate the advantages of our method.

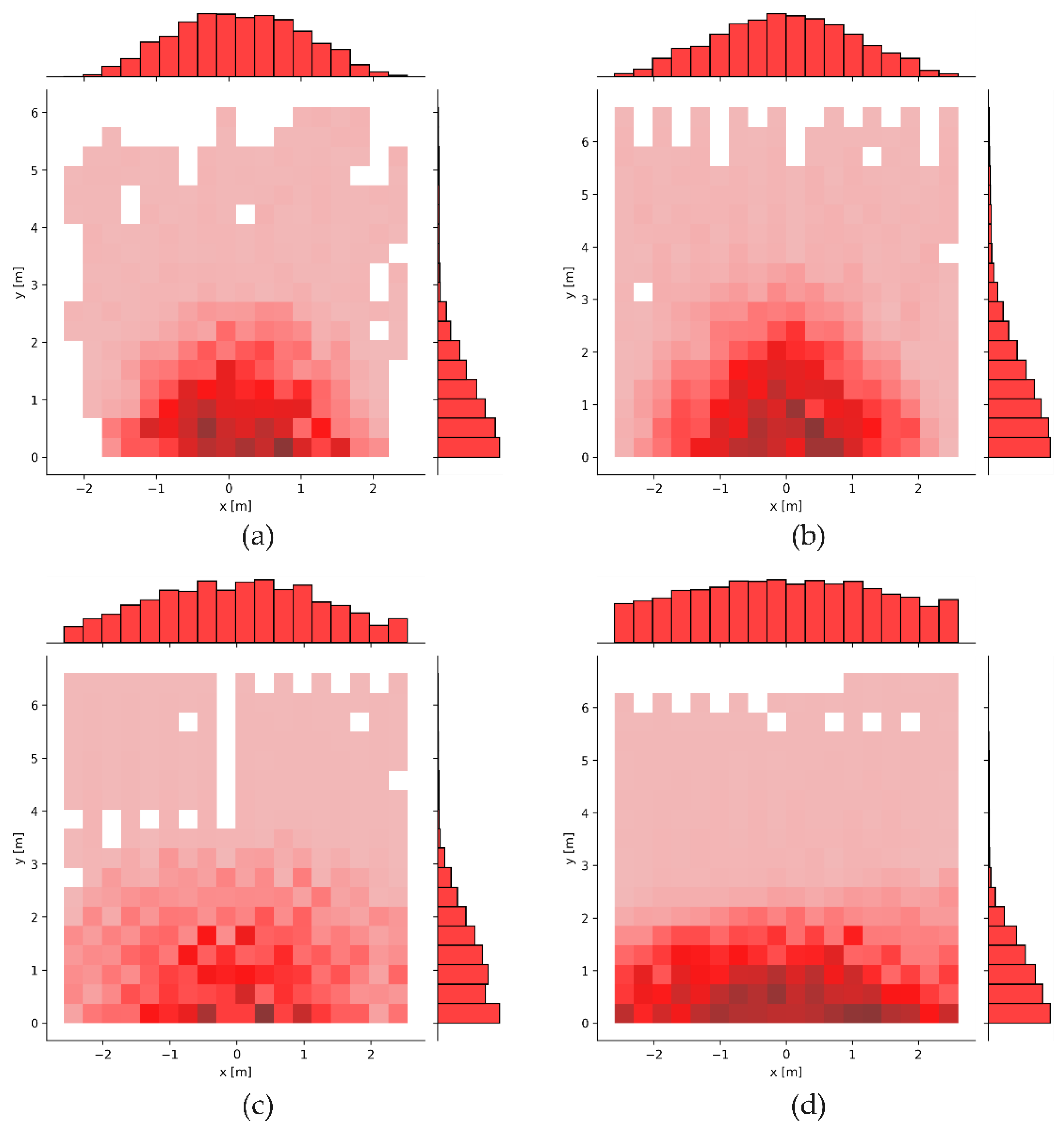

Figure 7a–d show the density maps generated by the real crowd experiment, HDRLM3D, SFM, and ORCA in scenario I, respectively. Taking the exit as the center, the densities gradually decrease from the center toward both ends along the x-axis and decrease continuously along the positive direction of the y-axis. These density maps intuitively reflect the congestion of agents (pedestrians) at the exit, which appears as an arch. Notably, the arches generated by SFM (Figure 7c) and ORCA (Figure 7d) are relatively flat compared to the arch generated by the real crowd experiment (Figure 7a), while the arch generated by HDRLM3D (Figure 7b) is similar to it.
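A density map of this kind can be approximated by binning recorded positions into a regular grid. The following is a minimal sketch, not the procedure used in the paper: the function name `density_map`, the `cell_size` parameter, and the sample positions are all hypothetical.

```python
from collections import Counter


def density_map(positions, cell_size, n_frames):
    """Time-averaged density (agents per unit area) for each grid cell.

    positions: (x, y) samples pooled over all agents and all frames.
    cell_size: side length of a square grid cell (assumed parameter).
    n_frames:  number of frames the samples were pooled from.
    """
    counts = Counter()
    for x, y in positions:
        # Floor division maps a coordinate to its integer cell index.
        cell = (int(x // cell_size), int(y // cell_size))
        counts[cell] += 1
    cell_area = cell_size * cell_size
    return {cell: n / (n_frames * cell_area) for cell, n in counts.items()}


# Made-up samples from two frames; not data from the experiments.
samples = [(0.1, 0.2), (0.3, 0.4), (1.2, 0.1), (0.2, 0.3)]
grid = density_map(samples, cell_size=1.0, n_frames=2)
```

Cells that never contain an agent are simply absent from the returned dictionary and can be treated as zero density when plotting.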

To further verify the above conclusions, we calculate the mean and standard deviation of the densities along the x-axis. As shown in Table 3, the mean values for the real crowd experiment, HDRLM3D, SFM, and ORCA indicate that the agents (pedestrians) are all congested around the exit. Their standard deviations indicate the flatness of the arches and are ordered as follows: real crowd experiment < HDRLM3D < SFM < ORCA. HDRLM3D also suffers from arch flattening, but its results are closer to those of the real crowd experiment than those of SFM and ORCA.
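The text does not spell out how the statistics along the x-axis are computed. One plausible reading, assumed here, is a density-weighted mean and standard deviation of the x-coordinate: the mean locates the center of congestion, and a larger standard deviation corresponds to a flatter arch. The function name `weighted_mean_std` and the two toy profiles are illustrative only.

```python
import math


def weighted_mean_std(xs, densities):
    """Density-weighted mean and standard deviation of x."""
    total = sum(densities)
    mean = sum(x * d for x, d in zip(xs, densities)) / total
    var = sum(d * (x - mean) ** 2 for x, d in zip(xs, densities)) / total
    return mean, math.sqrt(var)


# Toy density profiles along x, with the exit at x = 0 (not measured data).
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
peaked = [0.0, 1.0, 2.0, 1.0, 0.0]   # density concentrated near the exit
flat = [1.0, 1.0, 1.0, 1.0, 1.0]     # density spread evenly (flat arch)

mean_peaked, std_peaked = weighted_mean_std(xs, peaked)
mean_flat, std_flat = weighted_mean_std(xs, flat)
```

Both toy profiles have their mean at the exit, but the flat profile has the larger standard deviation, which matches the way the standard deviation is used above to rank arch flatness.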

- (2) Fundamental diagram and evacuation time

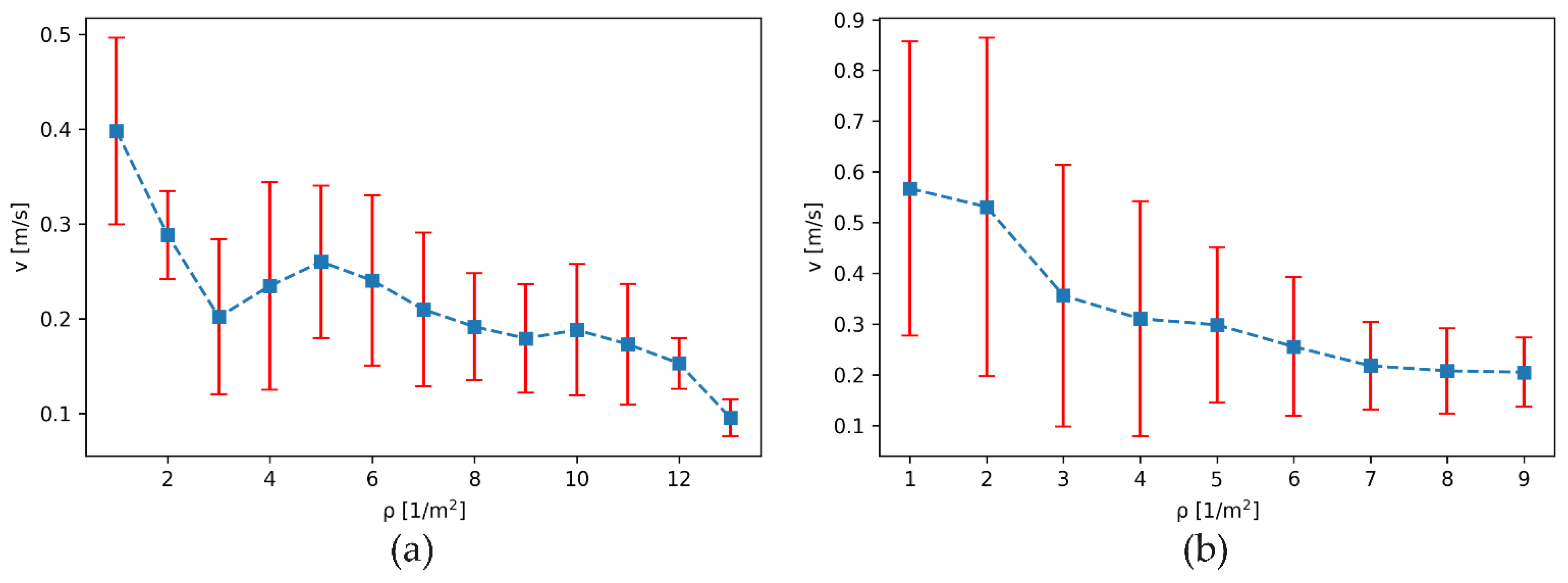

The fundamental diagram, which describes the relation between density and velocity, is an important test of whether a crowd simulation model is suitable for describing pedestrian streams [41]. Therefore, we set up a square measurement area in front of the exit to calculate the density and velocity of the agents (pedestrians). The fundamental diagrams generated by the real crowd experiment and HDRLM3D in scenario I are shown in Figure 8a,b, respectively. In both, density and velocity are negatively correlated overall; that is, the greater the density, the lower the velocity, and the velocity decreases more slowly as the density increases. This conclusion is consistent with the basic characteristics of crowd evacuation [42].
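Each point of a fundamental diagram pairs a density with a velocity measured in the same area at the same time. The sketch below shows one way to obtain such a point for a single frame, assuming each agent is given as a position and a velocity vector; the function name `density_and_speed`, the area parameters, and the sample frame are hypothetical.

```python
import math


def density_and_speed(agents, x_min, y_min, side):
    """Density and mean speed inside a square measurement area.

    agents: list of (x, y, vx, vy) tuples for one frame.
    x_min, y_min: lower-left corner of the square (assumed layout).
    side: side length of the square.
    """
    inside = [
        a for a in agents
        if x_min <= a[0] < x_min + side and y_min <= a[1] < y_min + side
    ]
    density = len(inside) / (side * side)
    if not inside:
        return density, None  # speed is undefined for an empty area
    speed = sum(math.hypot(vx, vy) for _, _, vx, vy in inside) / len(inside)
    return density, speed


# Made-up frame: two agents inside the area, one outside.
frame = [(0.5, 0.5, 3.0, 4.0), (1.5, 1.0, 0.0, 1.0), (5.0, 5.0, 1.0, 0.0)]
rho, v = density_and_speed(frame, x_min=0.0, y_min=0.0, side=2.0)
```

Repeating this for every frame and plotting the resulting (density, speed) pairs gives a scatter of the kind shown in a fundamental diagram.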

Moreover, our method exhibits some differences from the real crowd experiment. On the one hand, considering personnel safety and the evacuation atmosphere, it is difficult for real crowd experiments to simulate crowd evacuation in emergency situations, and the evacuation motivation of pedestrians is generally low. However, due to the time reward, the evacuation motivation of agents is stronger in HDRLM3D, so the agents’ velocity is slightly higher than the pedestrians’ velocity under the same density. On the other hand, agents cannot squeeze against each other in highly crowded situations due to their own colliders, so their maximum density is lower than the maximum density of pedestrians in the real crowd experiment.

Evacuation time is one of the indicators for evaluating the results of crowd evacuation, and it is also an important test of the performance of crowd simulation models. Table 4 lists the evacuation times required by the real crowd experiment, HDRLM3D, SFM, and ORCA. The evacuation time required by HDRLM3D is the closest to that of the real crowd experiment and is slightly shorter. This result is consistent with the analysis of the fundamental diagrams: because the velocity of the agents in HDRLM3D is slightly higher than that of pedestrians in the real crowd experiment, HDRLM3D requires less evacuation time. In SFM and ORCA, the highly crowded environment and extremely narrow exit tend to cause balanced forces or disordered behavior among the agents, so the evacuation times they require are much longer than that of the real crowd experiment.

3.2.2. Scenario II

- (1) Experimental results in scenario II

In the case of an unknown environment, that is, when agents cannot obtain environmental information through the GOLP and the reward function does not contain any environmental information, we use the method of [27] and HDRLM3D to train agents in scenario II, obtain the corresponding trained models, and then conduct comparative experiments.

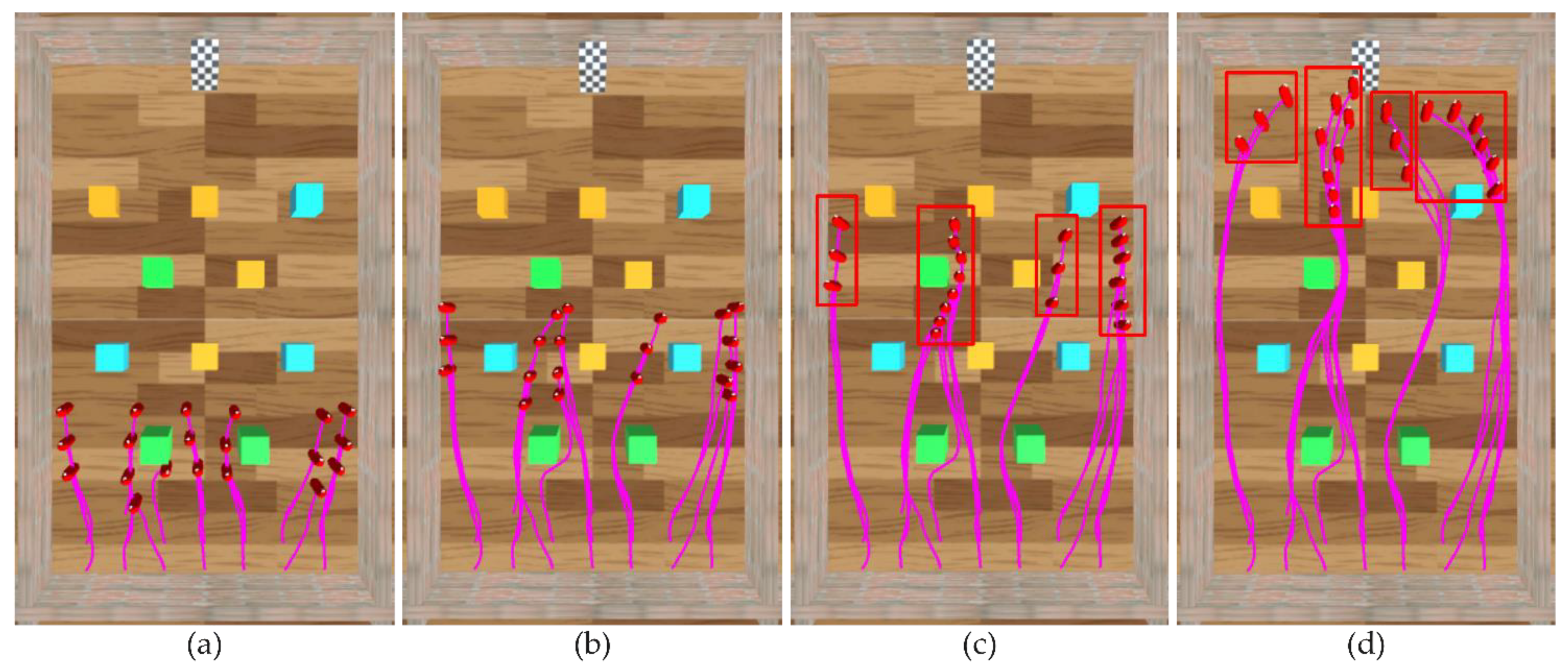

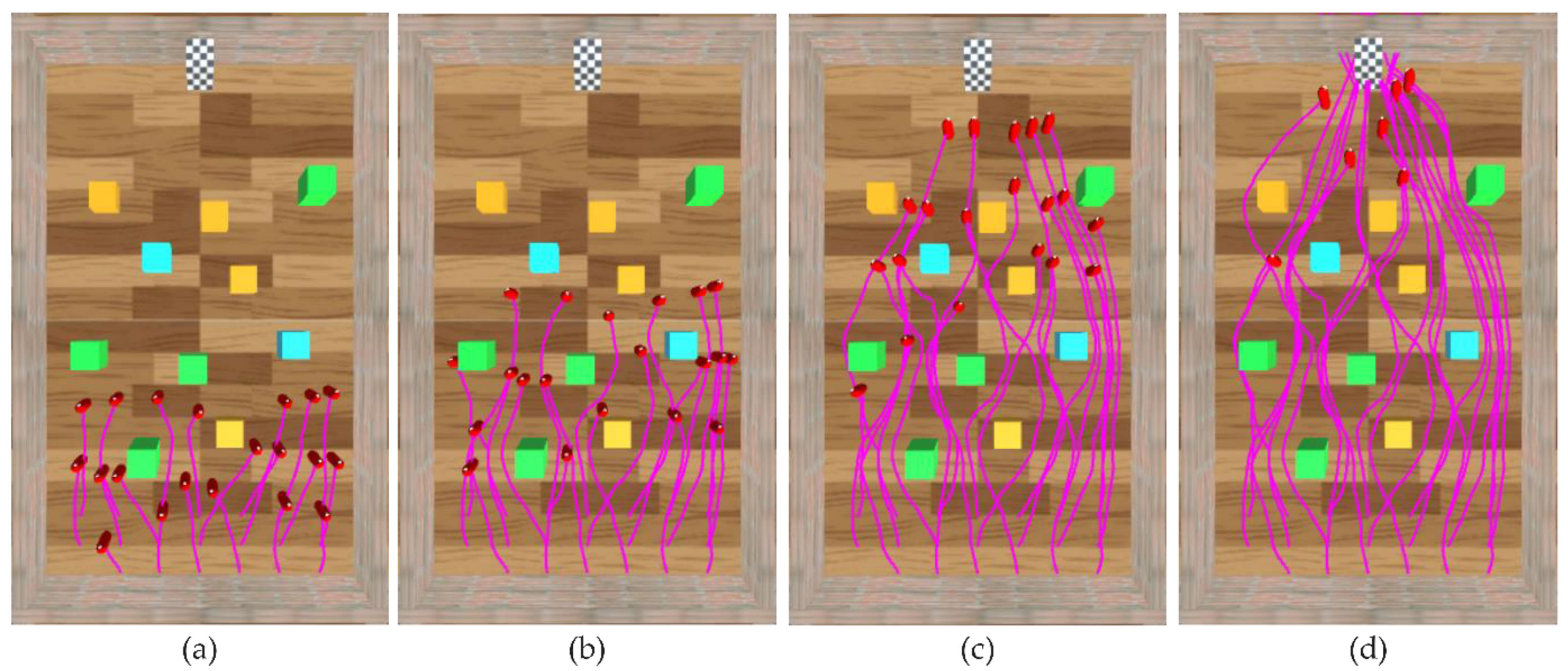

The method of [27] enables agents to avoid obstacles and navigate in unknown three-dimensional scenarios, but it does not consider the height of the environment in the construction of the perceptron and policy, so these environments can be abstractly regarded as two-dimensional scenarios. Figure 9 shows a temporal sequence of an experimental result generated by the method of [27] in scenario II. During the experiment, the agents move toward the target without colliding with obstacles (Figure 9a), gradually gather (Figure 9b) to form four groups (Figure 9c,d, where each red rectangle represents a group), and finally reach the target. As seen from the trajectories (pink curves in Figure 9), the agents form four fixed and unified routes during the experiment, and two of the routes are very close to the walls on both sides.

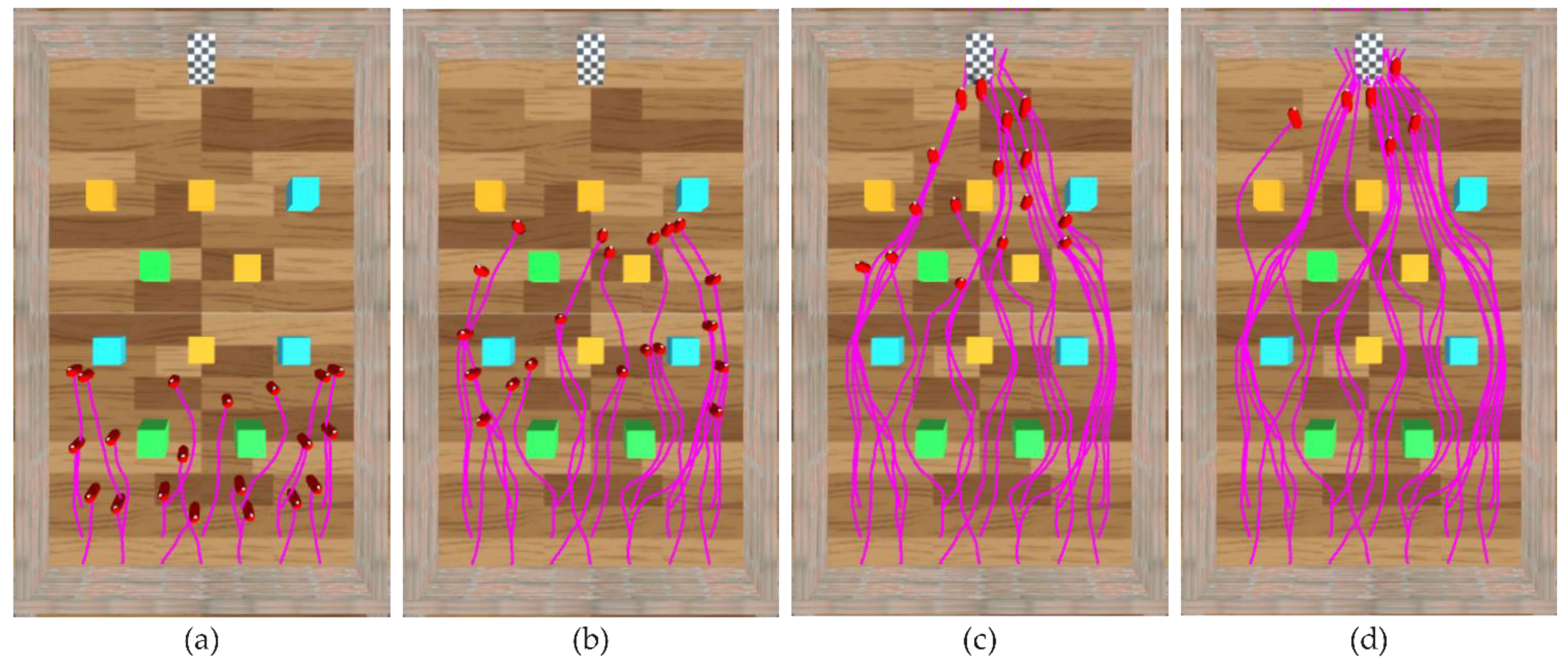

Figure 10 shows a temporal sequence of an experimental result generated by HDRLM3D in scenario II. During the experiment, the agents also move toward the target without colliding with obstacles (Figure 10a), but they do not form obvious groups (Figure 10b,c), and they finally reach the target (Figure 10d). As seen from the trajectories (pink curves in Figure 10), the agents also do not form fixed and unified routes. Compared with the experimental result in Figure 9, their routes are more random and scattered and stay far from the walls on both sides. This experimental result is intuitively more similar to the observation data of real crowds [36,43].

We further compare the performance of the two methods ([27] and HDRLM3D) in scenario II. We conduct one hundred experiments in scenario II using the two methods, count the total number of collisions between all agents and obstacles, and obtain the average number of collisions per agent per experiment. As shown in Table 5, the average number of collisions is low for both methods, which indicates that both have a strong obstacle avoidance ability in scenario II. Moreover, the average number of collisions for HDRLM3D is smaller than that for the method of [27], which indicates that HDRLM3D has a stronger obstacle avoidance ability in scenario II.
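The averaging described above can be written out explicitly: the total number of collisions over all experiments is divided by the number of experiments and the number of agents. This is a sketch under the assumption that every experiment uses the same number of agents; the function name `average_collisions` and the counts are placeholders, not values from Table 5.

```python
def average_collisions(collisions_per_experiment, n_agents):
    """Average number of collisions per agent per experiment.

    collisions_per_experiment: total agent-obstacle collisions in each run.
    n_agents: number of agents in every run (assumed constant).
    """
    n_experiments = len(collisions_per_experiment)
    if n_experiments == 0 or n_agents <= 0:
        raise ValueError("need at least one experiment and one agent")
    total = sum(collisions_per_experiment)
    return total / (n_experiments * n_agents)


# Placeholder counts for four runs with ten agents each.
runs = [4, 0, 2, 2]
avg = average_collisions(runs, n_agents=10)
```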

- (2) Experimental results in an adjusted scenario II

To further demonstrate the advantages of HDRLM3D in unknown three-dimensional scenarios, we randomly adjust the heights and positions of the obstacles in scenario II and use the models trained in scenario II without additional training to conduct comparative experiments.

Figure 11 shows a temporal sequence of an experimental result generated by the method of [27] in the adjusted scenario II. Similar to the experimental result in Figure 9, the agents still gradually gather to form relatively fixed and unified routes (pink curves in Figure 11), and more routes are close to the walls on both sides. Moreover, as shown in Figure 12, because this method does not consider the height of the environment, the agents cannot cope well with the changes in the heights and positions of obstacles, which leads to frequent collisions between the agents and obstacles in the adjusted scenario II (inside the red rectangles in Figure 12).

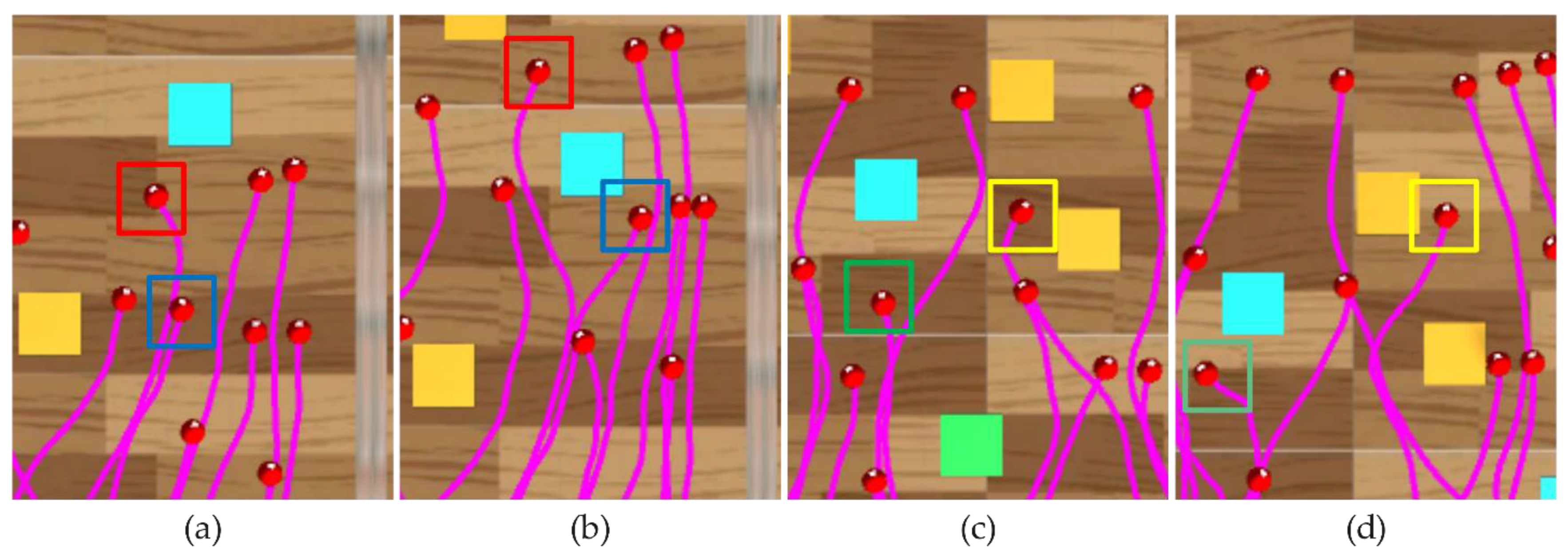

Figure 13 shows a temporal sequence of an experimental result generated by HDRLM3D in the adjusted scenario II. In this scenario, the agents can still reach the target without colliding with obstacles, and their routes (pink curves in Figure 13) are random and scattered and stay far from the walls on both sides. This experimental result is intuitively similar to the experimental result in Figure 10. As shown in Figure 14, because HDRLM3D considers the height of the environment, the agents (marked by rectangles of different colors in Figure 14) can cope well with the changes in the heights and positions of the obstacles, which prevents frequent collisions between the agents and obstacles.

We further compare the performance of the two methods in the adjusted scenario II. We again conduct one hundred experiments in this scenario using these methods and calculate the total and average numbers of collisions. As shown in Table 6, in the adjusted scenario II, the average number of collisions of HDRLM3D is much smaller than that of the method of [27], which indicates that HDRLM3D has a better obstacle avoidance ability in the adjusted scenario II. Moreover, compared to scenario II, the average number of collisions increases by a larger margin for the method of [27] than for HDRLM3D in the adjusted scenario II, which indicates that HDRLM3D is more robust.
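The robustness comparison rests on how much the average number of collisions grows when moving from scenario II to the adjusted scenario II. The sketch below expresses that growth as a relative increase in percent; whether the paper reports a relative or an absolute increase is an assumption here, and the function name `relative_increase` and the numbers are placeholders rather than values from Tables 5 and 6.

```python
def relative_increase(before, after):
    """Relative increase from `before` to `after`, in percent."""
    if before <= 0:
        raise ValueError("baseline must be positive for a relative increase")
    return (after - before) / before * 100.0


# Placeholder averages for two hypothetical methods A and B.
increase_a = relative_increase(before=0.5, after=0.75)
increase_b = relative_increase(before=0.5, after=2.0)
```

Under this measure, the method with the smaller increase is the one whose obstacle avoidance degrades less when the obstacles change.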