Utilizing Geospatial Data for Assessing Energy Security: Mapping Small Solar Home Systems Using Unmanned Aerial Vehicles and Deep Learning

Abstract

1. Introduction

Contributions of This Work

- The first publicly available dataset of UAV imagery of very small (less than 100 Watts) SHS (Section 3). We collected, annotated, and openly shared the first UAV-based very small solar panel dataset with precise ground sampling distance and flight altitude. It contains 423 images, 60 videos, and 2019 annotated solar panel instances, with annotations suitable for training object detection or segmentation models.

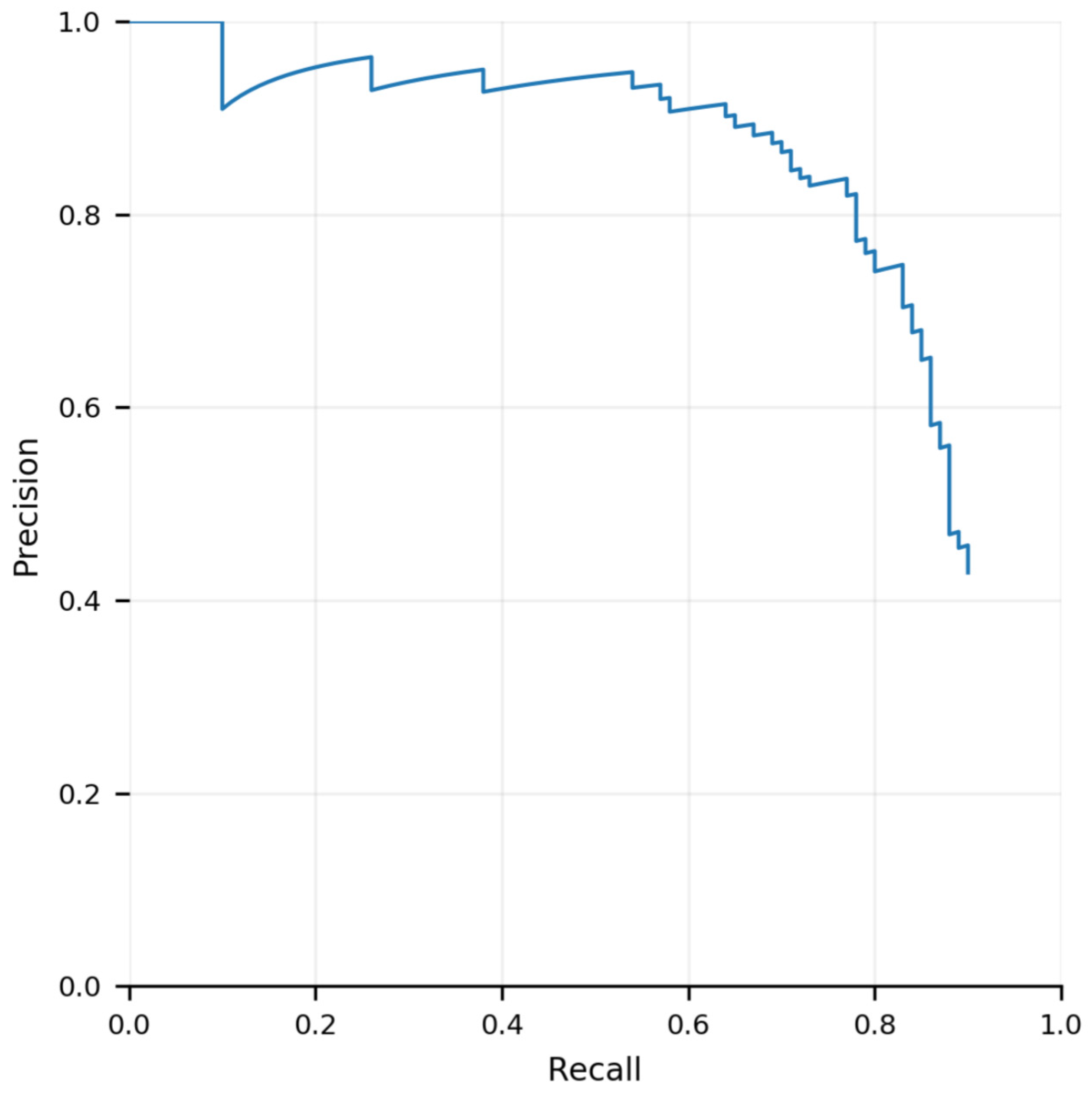

- Evaluating the robustness and detection performance of deep learning object detection for solar PV UAV data (Section 5). We evaluate SHS detection performance using a U-Net architecture with a pre-trained ResNet50 backbone. We control for data collection resolution (which scales with 1/altitude) by sampling every 10 m of altitude from 50 m to 120 m, and for panel size by using five diverse solar PV panel sizes.

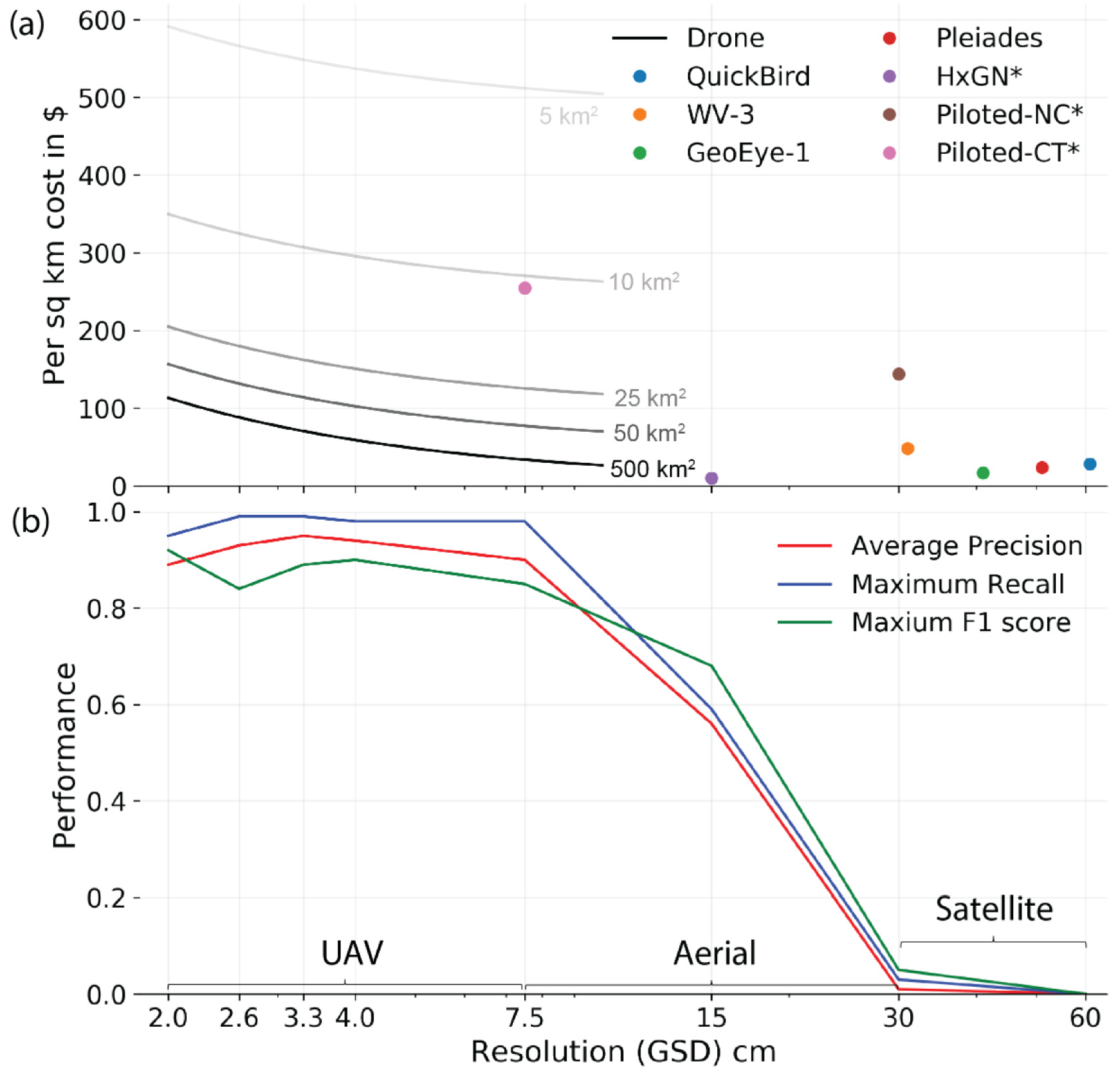

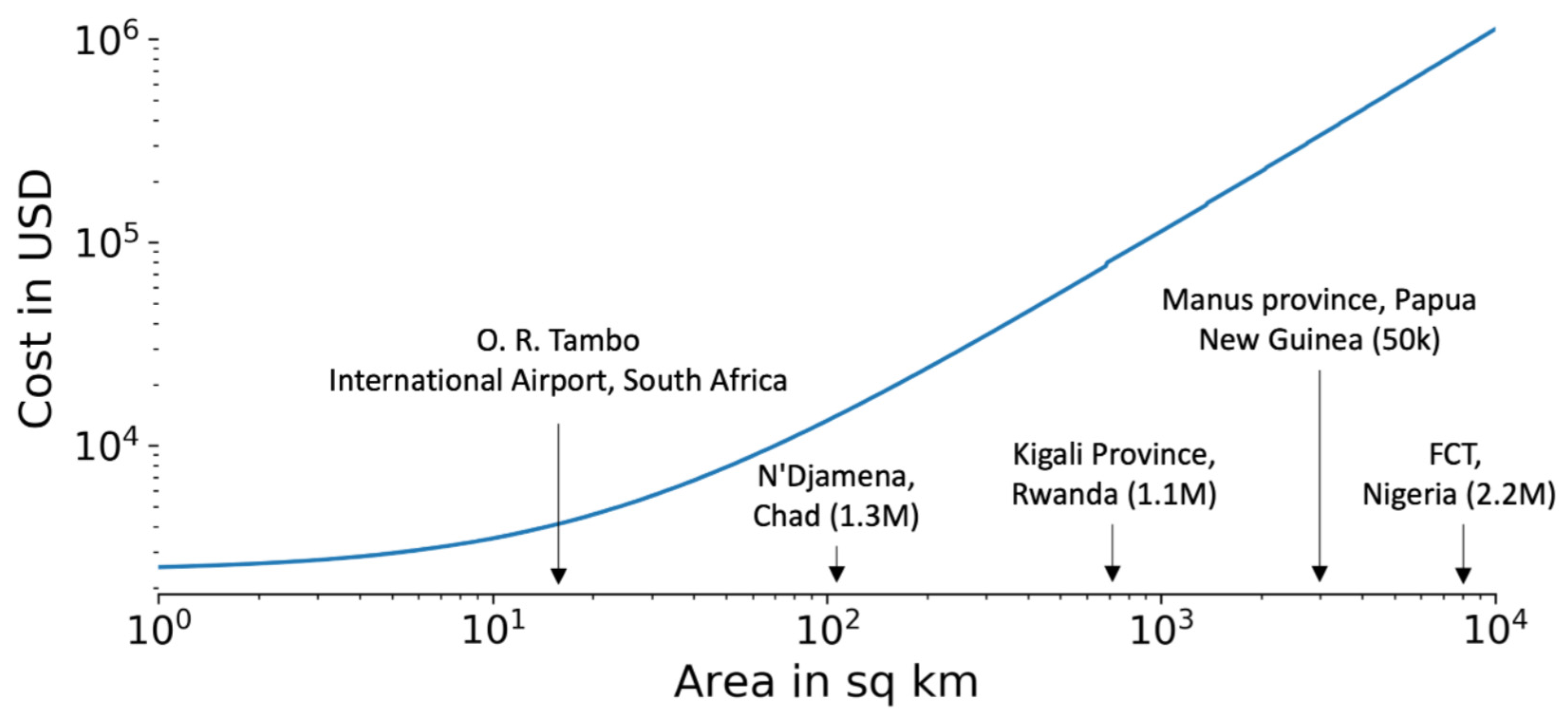

- Cost/benefit analysis of UAV- and satellite-based solar PV mapping (Section 6). We estimate a cost-performance curve that allows direct comparison of UAV- and satellite-based remote sensing data collection. We demonstrate that very small SHS are hardly detectable even in the highest-resolution commercially available satellite imagery, which therefore does not present a viable solution for assessing very small (less than 100 Watt) solar panel deployments.

- Case study in Rwanda illustrating the potential of drone-based solar panel detection for very small SHS installations (Section 7). By applying our models to drone data collected in the field in Rwanda, we demonstrate an example of the practical performance of using UAV imagery for solar panel detection. Comparing the results to our experiments with data collected under controlled conditions, we identify the two largest obstacles to improved performance: the resolution of the imagery and the diversity of the training data.

2. Related Work

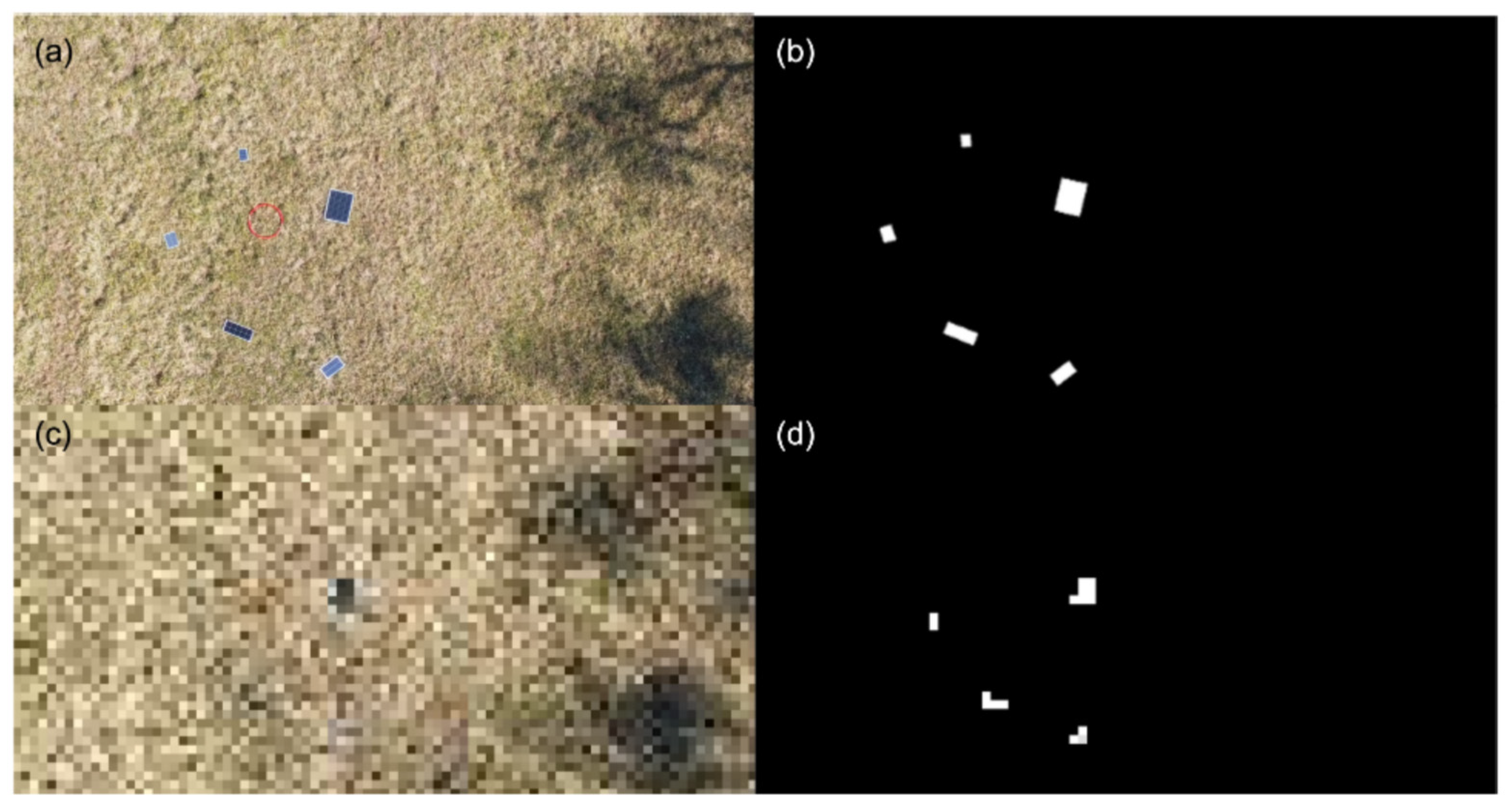

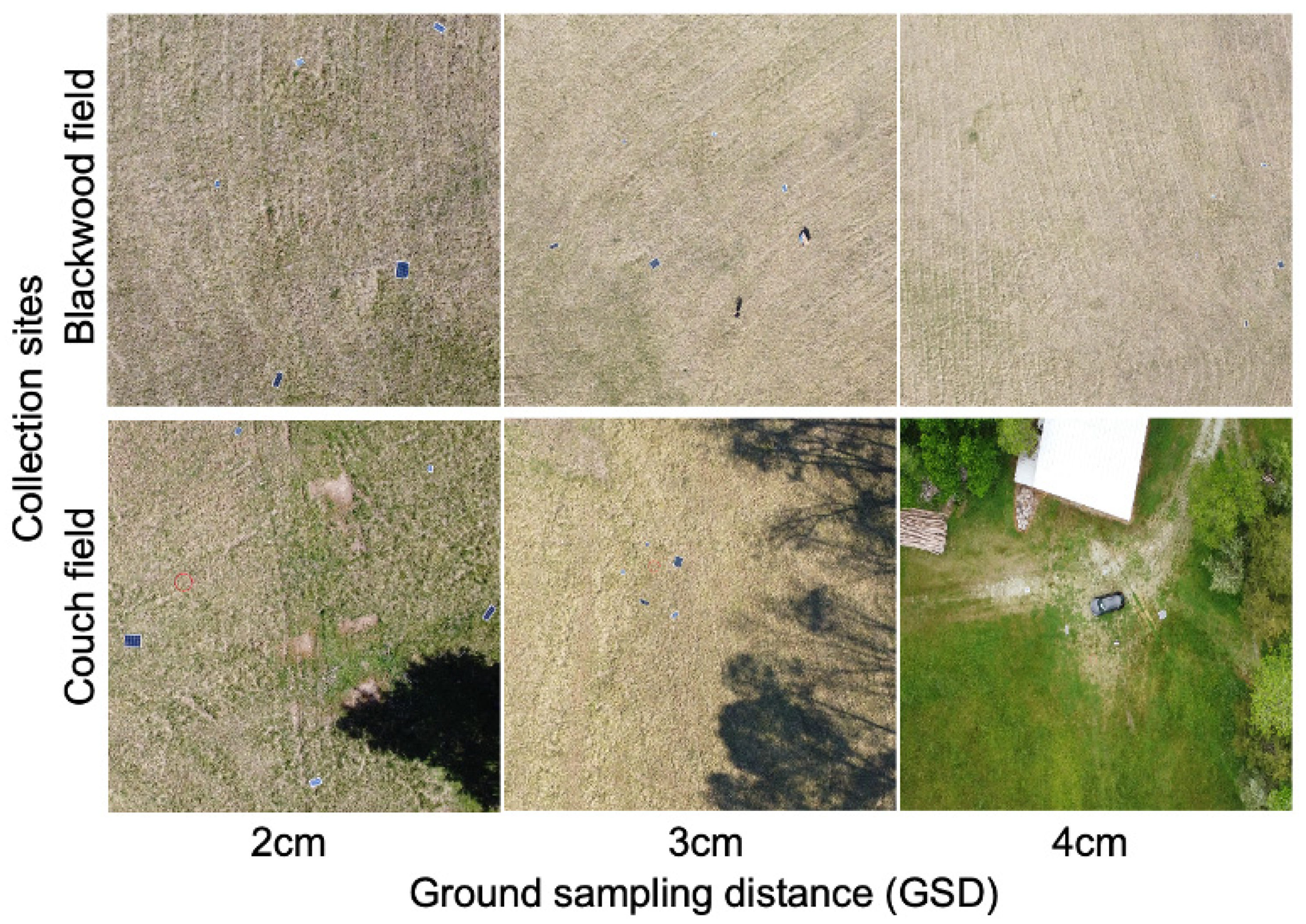

3. The SHS Drone Imagery Dataset

- Adequate ground sampling distance (GSD) range and granularity. Due to variations in factors such as hardware and elevation change, the GSD of drone imagery can vary significantly in practice. Therefore, we want our dataset to contain imagery with a range of GSDs (shown in Table 1) that is sufficient both to represent a variety of real-world conditions and to detect SHS.

- Diverse and representative solar panels. As solar panels can have different configurations that affect their visual appearance (polycrystalline or monocrystalline cells, size, aspect ratio), we chose our solar panels carefully so that they form a diverse and representative (in terms of power capacity) set (Table A1) of actual solar panels that would be deployed in developing countries.

- Fixed camera angle of 90 degrees and different flying speeds. To investigate the robustness of solar panel detection as well as data collection cost (which is correlated with flying speed), we want our dataset to include more than one flight speed.
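To make the GSD requirement concrete, the following minimal sketch estimates how many pixels each panel spans at each flight altitude. It uses the GSD values from Table 1 and the panel dimensions from Table A1. The function name `pixel_footprint` is hypothetical, and the estimate assumes a nadir view with no motion blur, panel tilt, or lens distortion, so it should be read as an upper bound on the usable footprint rather than a measured value.

```python
# GSD (cm/pixel) at each flight altitude (m), copied from Table 1.
GSD_CM_BY_ALTITUDE_M = {
    50: 1.7, 60: 2.1, 70: 2.5, 80: 2.8,
    90: 3.2, 100: 3.5, 110: 3.9, 120: 4.3,
}

# Panel (length, width) in mm keyed by rated power in W, copied from Table A1.
PANEL_DIMS_MM = {
    10: (345, 240),
    25: (520, 365),
    30: (624, 360.8),
    50: (830, 340),
    100: (910, 675),
}


def pixel_footprint(length_mm, width_mm, gsd_cm):
    """Return the approximate (length, width) of a panel in pixels.

    Idealized estimate: physical size divided by ground sampling distance.
    """
    gsd_mm = gsd_cm * 10.0  # convert cm/pixel to mm/pixel
    return length_mm / gsd_mm, width_mm / gsd_mm


if __name__ == "__main__":
    for altitude_m, gsd_cm in GSD_CM_BY_ALTITUDE_M.items():
        for power_w, (length_mm, width_mm) in PANEL_DIMS_MM.items():
            l_px, w_px = pixel_footprint(length_mm, width_mm, gsd_cm)
            print(f"{altitude_m:>3} m, {power_w:>3} W panel: "
                  f"{l_px:5.1f} x {w_px:5.1f} px")
```

Under these assumptions, the smallest (10 W) panel spans roughly 20 by 14 pixels at 50 m and roughly 8 by 6 pixels at 120 m. At a satellite resolution of 30 cm/pixel, the same panel would span about one pixel.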

Data Collection Process

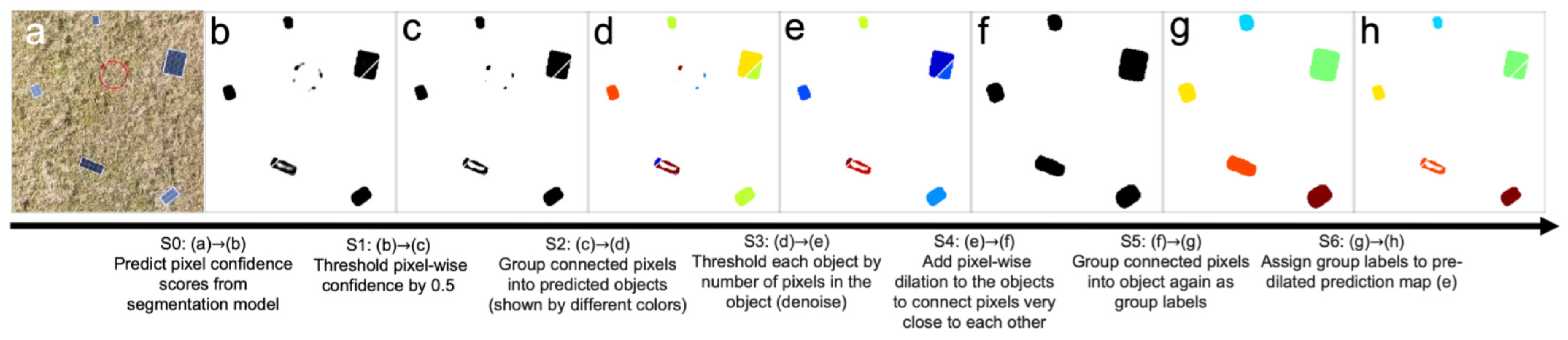

4. Post-Processing and Metrics

5. Experiment #1: Solar Panel Detection Performance Using UAV Imagery

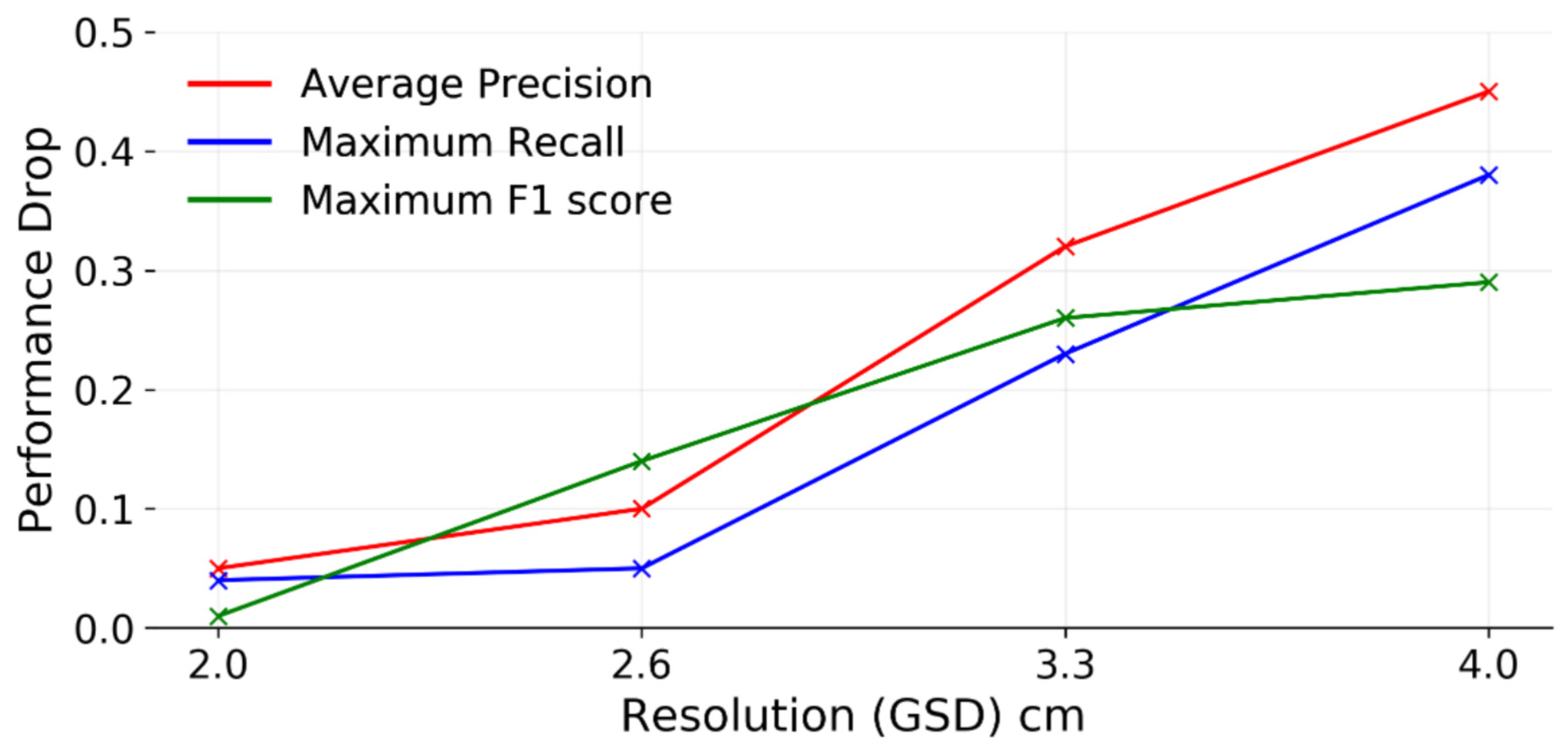

5.1. Detection Performance Comparison over Imagery Resolution

Results

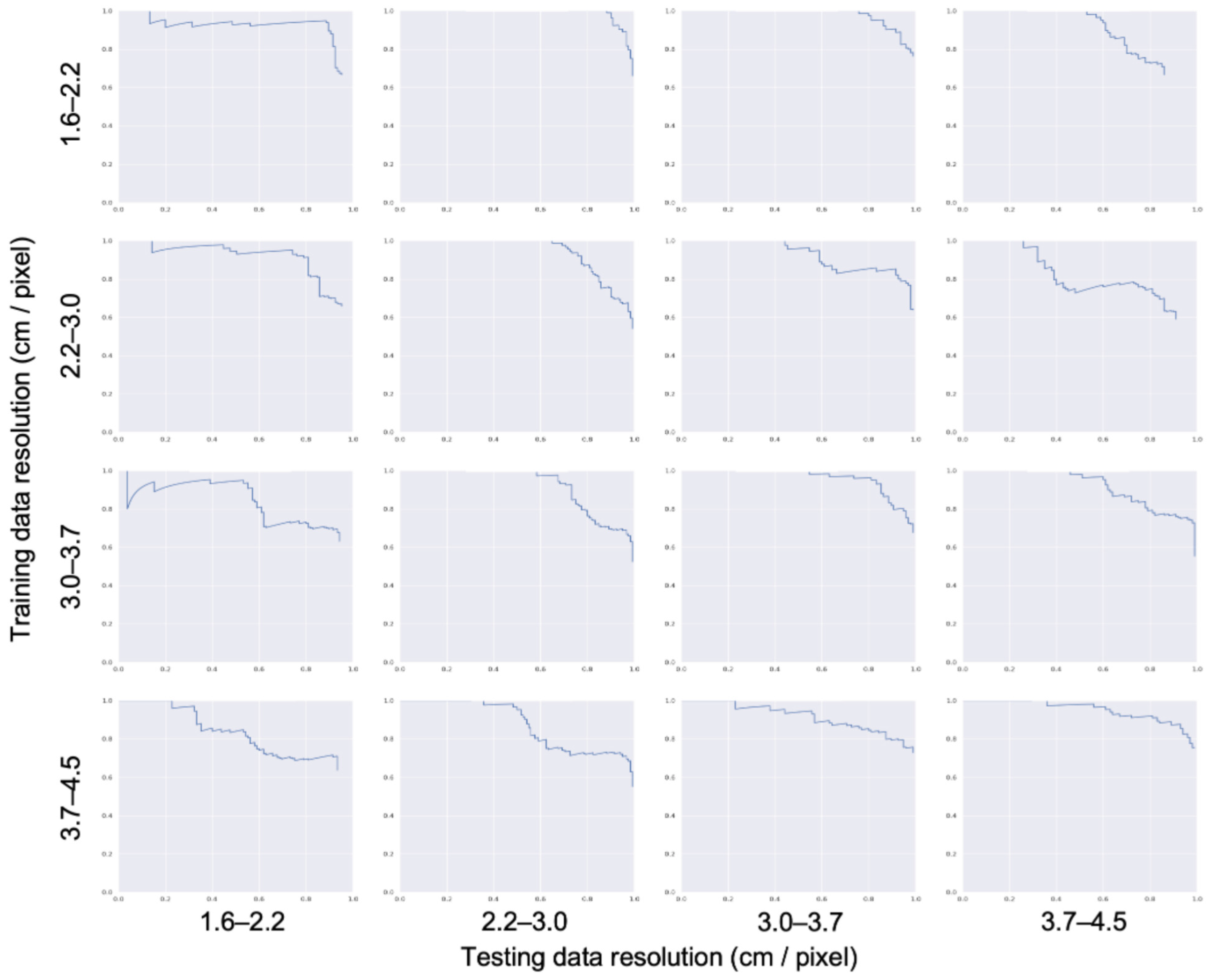

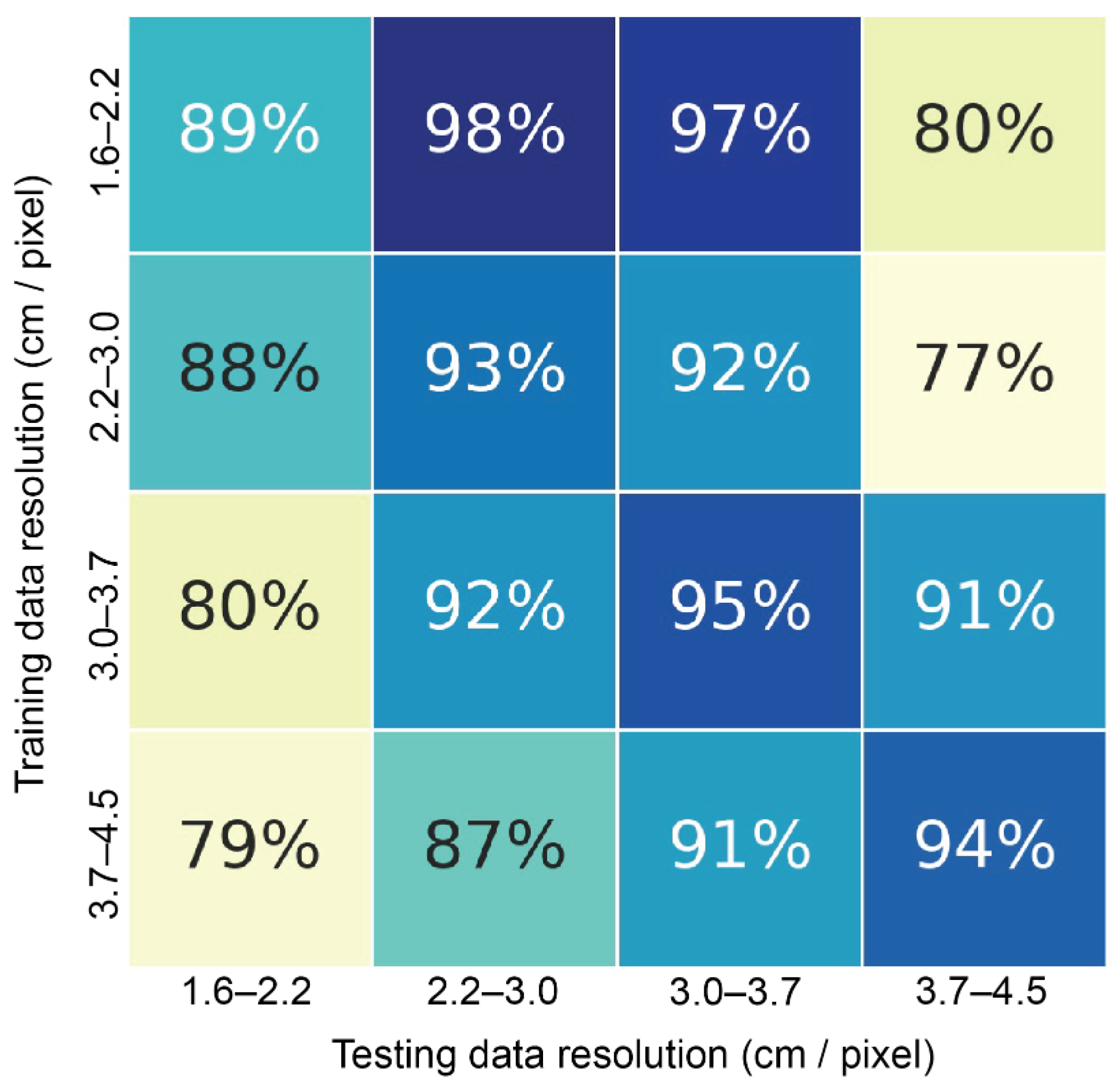

5.2. Detection Performance with Respect to Resolution Mismatch

5.2.1. Results

5.3. Solar Panel Detection Performance with Respect to Flight Speed

Results

6. Experiment #2: Cost Analysis of UAV-Based Solar Panel Detection and Comparison to Satellite Data

6.1. Cost Analysis: Methods

- The UAV is operated five days each week, six hours per day (assuming an eight-hour work day, and allowing two hours for local transportation and drone site setup).

- Each UAV operator rents one car and one UAV; the upfront cost of the UAV is amortized over the expected useful life of the UAV.

- Total UAV image collection time is capped at three months, but multiple pilots (each with their own UAV) may be hired if necessary to complete the collection.

- UAV lifetime is assumed to be 800 flight hours (estimate from consultation with a UAV manufacturer).

- A sufficient quantity of UAV batteries is purchased for operating for a full day.

- The probability of inclement weather is fixed at 20%, and no operation would be carried out under those conditions.
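The operating assumptions above translate into simple arithmetic, sketched here. This is an illustration rather than the authors' cost model: the function names are hypothetical, "three months" is approximated as 13 weeks, and inclement weather is treated as an expected 20% reduction in flight time rather than simulated day by day.

```python
import math

# Values taken from the assumptions listed above.
DAYS_PER_WEEK = 5
FLIGHT_HOURS_PER_DAY = 6
WEATHER_LOSS = 0.20          # probability that a day is lost to weather
UAV_LIFETIME_HOURS = 800

# ASSUMPTION: the three-month cap is approximated as 13 weeks.
MAX_WEEKS = 13


def expected_flight_hours_per_week():
    """Expected flight hours per pilot per week after weather losses."""
    return DAYS_PER_WEEK * FLIGHT_HOURS_PER_DAY * (1 - WEATHER_LOSS)


def pilots_needed(total_flight_hours):
    """Smallest number of pilots that finishes within the collection cap."""
    capacity_per_pilot = expected_flight_hours_per_week() * MAX_WEEKS
    return math.ceil(total_flight_hours / capacity_per_pilot)


def amortized_uav_cost(flight_hours, uav_price):
    """Share of the UAV purchase price attributed to the hours flown."""
    return uav_price * flight_hours / UAV_LIFETIME_HOURS


if __name__ == "__main__":
    print(expected_flight_hours_per_week())   # hours per pilot per week
    print(pilots_needed(1000))                # pilots for 1000 flight hours
    print(amortized_uav_cost(312, 27000))     # USD for 312 flight hours
```

With these numbers, one pilot is expected to fly about 24 hours per week, or about 312 hours over the capped collection period. The example price of $27,000 is the drone unit cost listed in the article's cost table.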

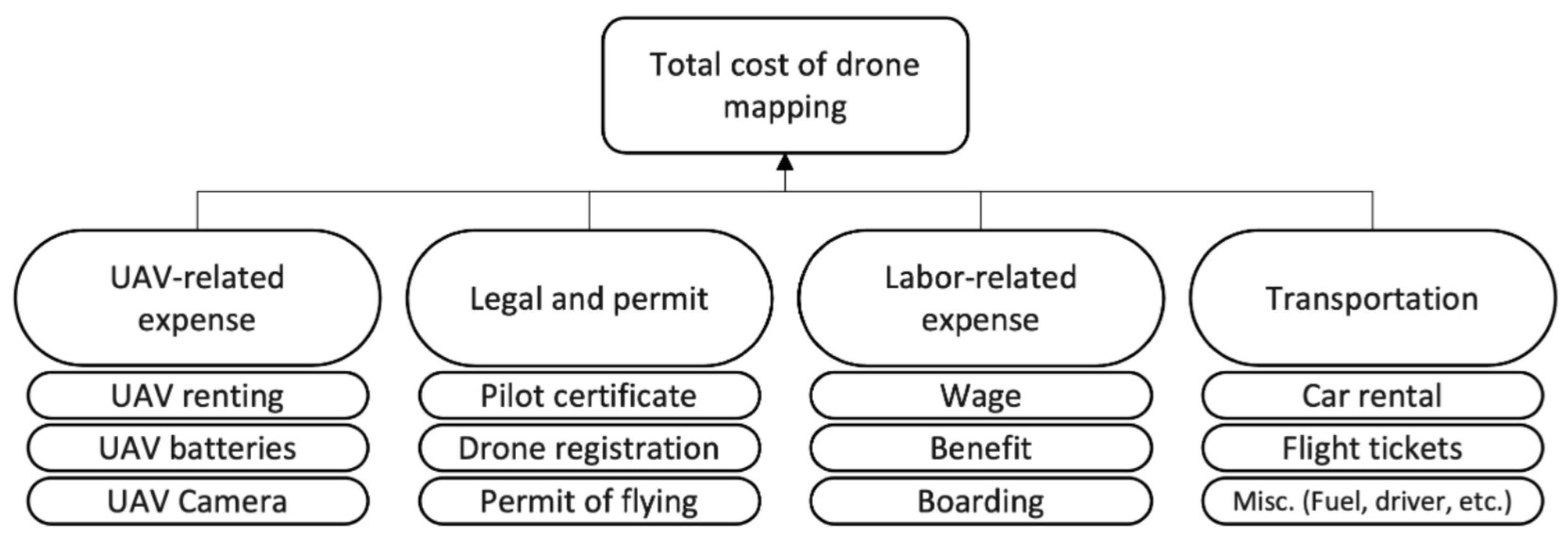

- Legal and permit: The legal and permitting cost of getting the credentials for flying in a certain country or region. As an example, in the US, although state laws may vary, at a federal level, flying for non-hobbyist purposes (class G airspace, below 120 m) requires the drone pilot to have a Part 107 permit, which requires payment of a fee as well as successful completion of a knowledge test. The legal and permitting costs are inherently location-dependent, and cost variation may be large.

- Transportation: The total transportation cost for the drone operator. For the purposes of our estimate here, we assume one drone pilot (thus, total data collection time is a linear function of area covered). Note that this category includes travel to and from the data collection location, which is assumed to include air travel, local car rental, car insurance, fuel costs, and (when the operational crew does not speak the local language) a translation service.

- Labor-related expenses: Umbrella category of all labor-related costs including wages and fringe benefits or overhead paid to the drone pilot, as well as boarding and hotel costs.

- Drone-related expenses: Umbrella category of all drone-related costs including purchase of the drone, batteries, and camera (if not included with the drone).
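The four cost categories above can be rolled up as in the following sketch. The unit costs are the US values from the article's cost table. The function `campaign_cost` and all of its inputs (number of pilots, months, hours, hotel nights, fuel) are hypothetical parameters chosen for illustration, not figures from the study. The sketch further assumes one drone per pilot, a campaign shorter than one year (a single registration fee per drone), no translator cost (as in the US case), and it leaves out data storage.

```python
# Unit costs in USD, copied from the article's cost table (US case).
PART_107_FEE = 150          # per pilot
PILOT_TRAINING = 300        # per pilot
DRONE_REGISTRATION = 5      # per drone per year
CAR_RENTAL = 1700           # per month
CAR_INSURANCE = 400         # per month
FUEL_PER_GALLON = 3
FLIGHT_TICKET = 2000        # per pilot
WAGE = 40                   # per hour per pilot
BENEFIT = 20                # per hour per pilot
HOTEL = 125                 # per night
DRONE_PRICE = 27000         # per drone
BATTERIES = 3000            # per drone
UAV_LIFETIME_HOURS = 800    # amortization base from the assumptions


def campaign_cost(pilots, months, flight_hours, paid_hours,
                  hotel_nights, fuel_gallons):
    """Return a per-category cost breakdown in USD.

    paid_hours and hotel_nights are per pilot; flight_hours and
    fuel_gallons are totals for the whole campaign.
    """
    legal = pilots * (PART_107_FEE + PILOT_TRAINING + DRONE_REGISTRATION)
    transportation = (
        pilots * (months * (CAR_RENTAL + CAR_INSURANCE) + FLIGHT_TICKET)
        + fuel_gallons * FUEL_PER_GALLON
    )
    labor = pilots * (paid_hours * (WAGE + BENEFIT) + hotel_nights * HOTEL)
    # Drone purchase is amortized over its useful life; batteries are not.
    drone = DRONE_PRICE * flight_hours / UAV_LIFETIME_HOURS + pilots * BATTERIES
    breakdown = {
        "legal_and_permit": legal,
        "transportation": transportation,
        "labor": labor,
        "drone": drone,
    }
    breakdown["total"] = sum(breakdown.values())
    return breakdown


if __name__ == "__main__":
    # Hypothetical campaign: 1 pilot, 3 months, 312 flight hours.
    print(campaign_cost(pilots=1, months=3, flight_hours=312,
                        paid_hours=520, hotel_nights=90, fuel_gallons=100))
```

Keeping each category as a separate entry makes it easy to swap in location-specific values, for example a non-zero translator cost or different permit fees outside the US.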

6.2. Cost Analysis: Result

7. Experiment #3: Case Study: Rwanda SHS Detection Using Drone Imagery

Case Study: Result

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SDG | Sustainable Development Goal |
| UAV | Unmanned Aerial Vehicle |
| US | United States of America |
| AP | Average Precision |
| IoU | Intersection over Union |
| CNN | Convolutional Neural Network |
| SHS | Solar Home System |
| GSD | Ground Sampling Distance |

Appendix A

Appendix A.1. Data Collection Pipeline and Detailed Specifications of Solar Panels Used

| Brand | Crystalline type | L (mm) | W (mm) | Aspect ratio | Area (dm²) | T (mm) | Power (W) | Voltage (V) |
|---|---|---|---|---|---|---|---|---|
| ECO | Poly | 520 | 365 | 1.43 | 19 | 18 | 25 | 18 |
| ECO | Mono | 830 | 340 | 2.45 | 28.3 | 30 | 50 | 5 |
| Rich solar | Poly | 624 | 360.8 | 1.73 | 22.6 | 25.4 | 30 | 12 |
| Newpowa | Poly | 345 | 240 | 1.44 | 8.3 | 18 | 10 | 12 |
| Newpowa | Poly | 910 | 675 | 1.35 | 61.5 | 30 | 100 | 12 |

Appendix A.2. Satellite View for Small SHS

Appendix A.3. Algorithm and Performance Details

- Pretraining: As labeled drone datasets, especially ones that include solar panels, are extremely scarce, we use satellite imagery containing solar panels (the same target as our task, but larger in size) to pre-train our network before fine-tuning it with the UAV imagery data we collected. This practice increased performance over fine-tuning from ImageNet pre-trained weights alone (IoU improved from 48% to 70%).

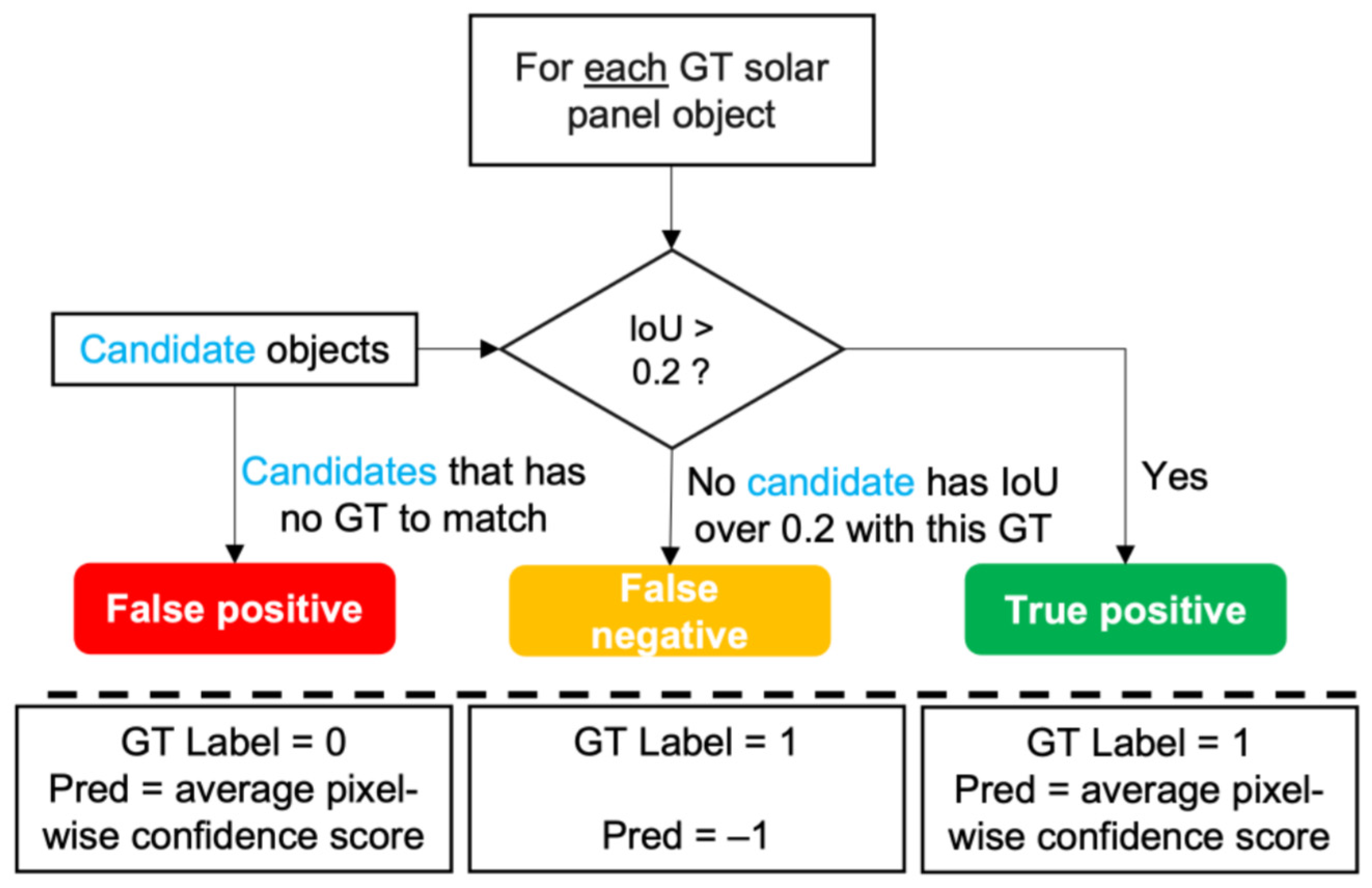

- Scoring pipeline: We illustrate the process of scoring in Figure A2. Note that in detection problems, the concept of true negatives is not defined. This is also why precision and recall (and therefore precision-recall curves), rather than ROC curves, are used for performance evaluation.

- Simulating satellite resolution imagery: In Section 5, we downsampled our UAV imagery to simulate satellite imagery resolution. To make sure the imagery has an effective resolution that is the same as satellite imagery, while keeping the same overall image dimensions so that our model has the same number of parameters, we follow the downsampling process with an up-sampling procedure using bi-linear interpolation (using OpenCV’s resizing function). The effective resolution remains at the satellite imagery level (30 cm/pixel), but the input size of each image into the convolutional neural network remains the same.

- Hyper-parameter tuning: Across the different resolutions of training data, we kept all hyperparameters constant except for the class weight of the positive class (due to the largely uneven distribution of solar panels and background imagery across changes in GSD). After tuning the other hyperparameters like learning rate and the model architecture once for all flight heights, we tuned the positive class weight individually for each of our image resolution groups due to the inherent difference in the ratio of number of solar panel pixels within each image.

- Precision-recall curves for Section 5.2.1: As only aggregate statistics were presented in Section 5.2.1, we present all relevant precision-recall curves here (Figure A3) for reference.
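The scoring idea described above, in which detections are counted as true positives, false positives, or false negatives with no true negatives, can be sketched as follows. This is not the pipeline of Figure A2. It is a minimal illustration that assumes objects are represented as sets of pixel coordinates, that predictions arrive sorted by descending confidence, and that a greedy one-to-one match at an IoU threshold of 0.5 decides correctness. The function names and the threshold are assumptions.

```python
def iou(pixels_a, pixels_b):
    """Intersection over union of two objects given as sets of (row, col)."""
    union = len(pixels_a | pixels_b)
    return len(pixels_a & pixels_b) / union if union else 0.0


def match_detections(predictions, ground_truths, iou_threshold=0.5):
    """Greedily match predictions to ground truth; return (tp, fp, fn).

    ASSUMPTION: predictions are sorted by descending confidence and each
    ground-truth object can be matched at most once.
    """
    unmatched = list(range(len(ground_truths)))
    tp = fp = 0
    for pred in predictions:
        best_idx, best_iou = None, 0.0
        for idx in unmatched:
            score = iou(pred, ground_truths[idx])
            if score > best_iou:
                best_idx, best_iou = idx, score
        if best_idx is not None and best_iou >= iou_threshold:
            tp += 1
            unmatched.remove(best_idx)
        else:
            fp += 1
    fn = len(unmatched)  # ground-truth objects that were never detected
    return tp, fp, fn


def precision_recall(tp, fp, fn):
    """Precision and recall; neither requires a true-negative count."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


if __name__ == "__main__":
    truths = [{(0, 0), (0, 1), (1, 0), (1, 1)}, {(5, 5), (5, 6)}]
    preds = [{(0, 0), (0, 1), (1, 0)}, {(9, 9)}]
    counts = match_detections(preds, truths)
    print(counts, precision_recall(*counts))
```

Sweeping the confidence cut-off that decides which predictions are passed to `match_detections` and recording the resulting precision and recall pairs yields one precision-recall curve.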

Appendix A.4. Household Density Estimation

Appendix A.5. Cost Estimation Calculations and Assumptions

Appendix A.6. Lodging Cost Ratio

References

1. United Nations. Goal 7 | Department of Economic and Social Affairs. Available online: https://sdgs.un.org/goals/goal7 (accessed on 1 September 2021).

2. Martin. Sustainable Development Goals Report. Available online: https://www.un.org/sustainabledevelopment/progress-report/ (accessed on 1 September 2021).

3. Bisaga, I.; Parikh, P.; Tomei, J.; To, L.S. Mapping synergies and trade-offs between energy and the sustainable development goals: A case study of off-grid solar energy in Rwanda. Energy Policy 2021, 149, 112028.

4. Bandi, V.; Sahrakorpi, T.; Paatero, J.; Lahdelma, R. Touching the invisible: Exploring the nexus of energy access, entrepreneurship, and solar homes systems in India. Energy Res. Soc. Sci. 2020, 69, 101767.

5. Watson, A.C.; Jacobson, M.D.; Cox, S.L. Renewable Energy Data, Analysis, and Decisions Viewed through a Case Study in Bangladesh; Technical Report; National Renewable Energy Lab. (NREL): Golden, CO, USA, 2019.

6. Malof, J.M.; Hou, R.; Collins, L.M.; Bradbury, K.; Newell, R. Automatic solar photovoltaic panel detection in satellite imagery. In Proceedings of the 2015 International Conference on Renewable Energy Research and Applications (ICRERA), Palermo, Italy, 22–25 November 2015; pp. 1428–1431.

7. Castello, R.; Roquette, S.; Esguerra, M.; Guerra, A.; Scartezzini, J.L. Deep learning in the built environment: Automatic detection of rooftop solar panels using Convolutional Neural Networks. J. Phys. Conf. Ser. 2019, 1343, 012034.

8. Bhatia, M.; Angelou, N. Beyond Connections; World Bank: Washington, DC, USA, 2015.

9. Malof, J.M.; Bradbury, K.; Collins, L.M.; Newell, R.G. Automatic detection of solar photovoltaic arrays in high resolution aerial imagery. Appl. Energy 2016, 183, 229–240.

10. Yu, J.; Wang, Z.; Majumdar, A.; Rajagopal, R. DeepSolar: A machine learning framework to efficiently construct a solar deployment database in the United States. Joule 2018, 2, 2605–2617.

11. Malof, J.; Collins, L.; Bradbury, K.; Newell, R. A deep convolutional neural network and a random forest classifier for solar photovoltaic array detection in aerial imagery. In Proceedings of the 2016 IEEE International Conference on Renewable Energy Research and Applications (ICRERA), Birmingham, UK, 20–23 November 2016; pp. 650–654.

12. Kruitwagen, L.; Story, K.; Friedrich, J.; Byers, L.; Skillman, S.; Hepburn, C. A global inventory of photovoltaic solar energy generating units. Nature 2021, 598, 604–610.

13. IRENA. Off-Grid Renewable Energy Statistics 2020; International Renewable Energy Agency: Abu Dhabi, United Arab Emirates, 2020.

14. What Is the Standard Size of a Solar Panel? Available online: https://www.thesolarnerd.com/blog/solar-panel-dimensions/ (accessed on 1 September 2021).

15. Liang, S.; Wang, J. (Eds.) Chapter 1 - A systematic view of remote sensing. In Advanced Remote Sensing, 2nd ed.; Academic Press: Cambridge, MA, USA, 2020; pp. 1–57.

16. Yuan, J.; Yang, H.H.L.; Omitaomu, O.A.; Bhaduri, B.L. Large-scale solar panel mapping from aerial images using deep convolutional networks. In Proceedings of the 2016 IEEE International Conference on Big Data (Big Data), Washington, DC, USA, 5–8 December 2016; pp. 2703–2708.

17. Bradbury, K.; Saboo, R.; Johnson, T.L.; Malof, J.M.; Devarajan, A.; Zhang, W.; Collins, L.M.; Newell, R.G. Distributed solar photovoltaic array location and extent dataset for remote sensing object identification. Sci. Data 2016, 3, 160106.

18. Ishii, T.; Simo-Serra, E.; Iizuka, S.; Mochizuki, Y.; Sugimoto, A.; Ishikawa, H.; Nakamura, R. Detection by classification of buildings in multispectral satellite imagery. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 3344–3349.

19. Malof, J.M.; Bradbury, K.; Collins, L.; Newell, R. Image features for pixel-wise detection of solar photovoltaic arrays in aerial imagery using a random forest classifier. In Proceedings of the 5th International Conference on Renewable Energy Research and Applications, Birmingham, UK, 20–23 November 2016; Volume 5, pp. 799–803.

20. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

21. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

22. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

23. Malof, J.M.; Li, B.; Huang, B.; Bradbury, K.; Stretslov, A. Mapping solar array location, size, and capacity using deep learning and overhead imagery. arXiv 2019, arXiv:1902.10895.

24. Camilo, J.; Wang, R.; Collins, L.M.; Bradbury, K.; Malof, J.M. Application of a semantic segmentation convolutional neural network for accurate automatic detection and mapping of solar photovoltaic arrays in aerial imagery. arXiv 2018, arXiv:1801.04018.

25. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

26. Hou, X.; Wang, B.; Hu, W.; Yin, L.; Wu, H. SolarNet: A Deep Learning Framework to Map Solar Power Plants in China From Satellite Imagery. arXiv 2019, arXiv:1912.03685.

27. Zhang, D.; Wu, F.; Li, X.; Luo, X.; Wang, J.; Yan, W.; Chen, Z.; Yang, Q. Aerial image analysis based on improved adaptive clustering for photovoltaic module inspection. In Proceedings of the 2017 International Smart Cities Conference (ISC2), Wuxi, China, 14–17 September 2017.

28. Ismail, H.; Chikte, R.; Bandyopadhyay, A.; Al Jasmi, N. Autonomous detection of PV panels using a drone. In Proceedings of the ASME 2019 International Mechanical Engineering Congress and Exposition, Salt Lake City, UT, USA, 11–14 November 2019; Volume 59414, p. V004T05A051.

29. Vega Díaz, J.J.; Vlaminck, M.; Lefkaditis, D.; Orjuela Vargas, S.A.; Luong, H. Solar panel detection within complex backgrounds using thermal images acquired by UAVs. Sensors 2020, 20, 6219.

30. Zheng, T.; Bergin, M.H.; Hu, S.; Miller, J.; Carlson, D.E. Estimating ground-level PM2.5 using micro-satellite images by a convolutional neural network and random forest approach. Atmos. Environ. 2020, 230, 117451.

31. Pierdicca, R.; Malinverni, E.S.; Piccinini, F.; Paolanti, M.; Felicetti, A.; Zingaretti, P. Deep convolutional neural network for automatic detection of damaged photovoltaic cells. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. - ISPRS Arch. 2018, 42, 893–900.

32. Xie, X.; Wei, X.; Wang, X.; Guo, X.; Li, J.; Cheng, Z. Photovoltaic panel anomaly detection system based on Unmanned Aerial Vehicle platform. IOP Conf. Ser. Mater. Sci. Eng. 2020, 768, 072061.

33. Herraiz, A.; Marugan, A.; Marquez, F. Optimal Productivity in Solar Power Plants Based on Machine Learning and Engineering Management. In Proceedings of the Twelfth International Conference on Management Science and Engineering Management, Melbourne, Australia, 1–4 August 2018; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 901–912.

34. Li, X.; Yang, Q.; Lou, Z.; Yan, W. Deep Learning Based Module Defect Analysis for Large-Scale Photovoltaic Farms. IEEE Trans. Energy Convers. 2019, 34, 520–529.

35. Ding, S.; Yang, Q.; Li, X.; Yan, W.; Ruan, W. Transfer Learning based Photovoltaic Module Defect Diagnosis using Aerial Images. In Proceedings of the 2018 International Conference on Power System Technology (POWERCON), Guangzhou, China, 6–8 November 2018; pp. 4245–4250.

36. Li, X.; Yang, Q.; Wang, J.; Chen, Z.; Yan, W. Intelligent fault pattern recognition of aerial photovoltaic module images based on deep learning technique. In Proceedings of the 9th International Multi-Conference on Complexity, Informatics and Cybernetics (IMCIC 2018), Orlando, FL, USA, 13–16 March 2018; Volume 1, pp. 22–27.

37. Li, X.; Li, W.; Yang, Q.; Yan, W.; Zomaya, A.Y. An Unmanned Inspection System for Multiple Defects Detection in Photovoltaic Plants. IEEE J. Photovolt. 2020, 10, 568–576.

38. Hanafy, W.A.; Pina, A.; Salem, S.A. Machine learning approach for photovoltaic panels cleanliness detection. In Proceedings of the 2019 15th International Computer Engineering Conference (ICENCO), Cairo, Egypt, 29–30 December 2019; p. 77.

39. Correa, S.; Shah, Z.; Taneja, J. This Little Light of Mine: Electricity Access Mapping Using Night-time Light Data. In Proceedings of the Twelfth ACM International Conference on Future Energy Systems, Online, 28 June–2 July 2021; pp. 254–258.

40. Falchetta, G.; Pachauri, S.; Parkinson, S.; Byers, E. A high-resolution gridded dataset to assess electrification in sub-Saharan Africa. Sci. Data 2019, 6, 110.

41. Min, B.; Gaba, K.M.; Sarr, O.F.; Agalassou, A. Detection of rural electrification in Africa using DMSP-OLS night lights imagery. Int. J. Remote Sens. 2013, 34, 8118–8141.

42. Chew, R.; Rineer, J.; Beach, R.; O’Neil, M.; Ujeneza, N.; Lapidus, D.; Miano, T.; Hegarty-Craver, M.; Polly, J.; Temple, D.S. Deep Neural Networks and Transfer Learning for Food Crop Identification in UAV Images. Drones 2020, 4, 7.

43. Drone Imagery Classification Training Dataset for Crop Types in Rwanda. 2021. Available online: https://doi.org/10.34911/rdnt.r4p1fr (accessed on 1 June 2021).

44. Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006; Volume 128.

45. Orych, A. Review of methods for determining the spatial resolution of UAV Sensors. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 391–395.

46. Şimşek, B.; Bilge, H.Ş. A Novel Motion Blur Resistant vSLAM Framework for Micro/Nano-UAVs. Drones 2021, 5, 121.

47. Federal Aviation Administration. Become a Drone Pilot; Federal Aviation Administration: Washington, DC, USA, 2021.

48. Federal Aviation Administration. How to Register Your Drone; Federal Aviation Administration: Washington, DC, USA, 2021.

49. U.S. Energy Information Administration. Gasoline and Diesel Fuel Update; U.S. Energy Information Administration: Washington, DC, USA, 2022.

50. U.S. Department of Labor, Bureau of Labor Statistics. News Release. Available online: https://www.bls.gov/news.release/pdf/ecec.pdf (accessed on 1 January 2022).

51. Geographic Information Coordinating Council, NC. Business Plan for Orthoimagery in North Carolina. Available online: https://files.nc.gov/ncdit/documents/files/OrthoImageryBusinessPlan-NC-20101029.pdf (accessed on 1 September 2021).

52. State of Connecticut. Connecticut’s Plan for The American Rescue Plan Act of 2021. Available online: https://portal.ct.gov/-/media/Office-of-the-Governor/News/2021/20210426-Governor-Lamont-ARPA-allocation-plan.pdf (accessed on 1 September 2021).

53. Lietz, H.; Lingani, M.; Sie, A.; Sauerborn, R.; Souares, A.; Tozan, Y. Measuring population health: Costs of alternative survey approaches in the Nouna Health and Demographic Surveillance System in rural Burkina Faso. Glob. Health Action 2015, 8, 28330.

54. Fuller, A.T.; Butler, E.K.; Tran, T.M.; Makumbi, F.; Luboga, S.; Muhumza, C.; Chipman, J.G.; Groen, R.S.; Gupta, S.; Kushner, A.L.; et al. Surgeons overseas assessment of surgical need (SOSAS) Uganda: Update for Household Survey. World J. Surg. 2015, 39, 2900–2907.

55. Gertler, P.J.; Martinez, S.; Premand, P.; Rawlings, L.B.; Vermeersch, C.M. Impact Evaluation in Practice; World Bank Publications: Washington, DC, USA, 2016.

56. Ivosevic, B.; Han, Y.G.; Cho, Y.; Kwon, O. The use of conservation drones in ecology and wildlife research. J. Ecol. Environ. 2015, 38, 113–118.

57. Rabta, B.; Wankmüller, C.; Reiner, G. A drone fleet model for last-mile distribution in disaster relief operations. Int. J. Disaster Risk Reduct. 2018, 28, 107–112.

58. Afghah, F.; Razi, A.; Chakareski, J.; Ashdown, J. Wildfire monitoring in remote areas using autonomous unmanned aerial vehicles. In Proceedings of the IEEE INFOCOM 2019 - IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Paris, France, 29 April–2 May 2019; pp. 835–840.

59. Floreano, D.; Wood, R.J. Science, technology and the future of small autonomous drones. Nature 2015, 521, 460–466.

60. Ramsankaran, R.; Navinkumar, P.; Dashora, A.; Kulkarni, A.V. UAV-based survey of glaciers in Himalayas: Challenges and recommendations. J. Indian Soc. Remote Sens. 2021, 49, 1171–1187.

61. Hassanalian, M.; Abdelkefi, A. Classifications, applications, and design challenges of drones: A review. Prog. Aerosp. Sci. 2017, 91, 99–131.

62. Azar, A.T.; Koubaa, A.; Ali Mohamed, N.; Ibrahim, H.A.; Ibrahim, Z.F.; Kazim, M.; Ammar, A.; Benjdira, B.; Khamis, A.M.; Hameed, I.A.; et al. Drone Deep Reinforcement Learning: A Review. Electronics 2021, 10, 999.

63. Washington, A.N. A Survey of Drone Use for Socially Relevant Problems: Lessons from Africa. Afr. J. Comput. ICT 2018, 11, 1–11.

64. The World Bank. Population Density (People per sq. km of Land Area) - Rwanda. Available online: https://data.worldbank.org/indicator/EN.POP.DNST?locations=RW (accessed on 1 March 2022).

65. National Institute of Statistics of Rwanda (NISR), Ministry of Health and ICF International. The Rwanda Demographic and Health Survey 2014–15. Available online: https://www.dhsprogram.com/pubs/pdf/SR229/SR229.pdf (accessed on 1 March 2022).

66. WorldPop and CIESIN, Columbia University. The Spatial Distribution of Population Density in 2020, Rwanda. Available online: https://dx.doi.org/10.5258/SOTON/WP00675 (accessed on 1 March 2022).

| Altitude | GSD | # Images | # Videos | # Annotated PV panels |
|---|---|---|---|---|
| 50 m | 1.7 cm | 58 | 6 | 248 |
| 60 m | 2.1 cm | 63 | 7 | 289 |
| 70 m | 2.5 cm | 47 | 8 | 227 |
| 80 m | 2.8 cm | 60 | 8 | 295 |
| 90 m | 3.2 cm | 44 | 8 | 214 |
| 100 m | 3.5 cm | 47 | 9 | 230 |
| 110 m | 3.9 cm | 56 | 4 | 278 |
| 120 m | 4.3 cm | 48 | 10 | 238 |

| Category | Item | Unit Cost | Unit |
|---|---|---|---|
| Legal and permit | Part 107 certificate | $150 [47] | /pilot |
| | Pilot training for exam | $300 | /pilot |
| | Drone registration fee | $5 [48] | /drone × year |
| Transportation | Car rental | $1700 | /month |
| | Car insurance | $400 | /month |
| | Fuel | $3 [49] | /gallon |
| | Flight ticket | $2000 | /pilot |
| | Driver/translator | $0 in US | /pilot |
| Labor related | Wage | $40 | /hour × pilot |
| | Benefit | $20 [50] | /hour × pilot |
| | Hotel | $125 | /night |
| Drone related | Drone | $27,000 | /drone |
| | Camera | $0 | /drone |
| | Battery | $3000 | /drone |
| Data storage | | $130 | /5 TB |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ren, S.; Malof, J.; Fetter, R.; Beach, R.; Rineer, J.; Bradbury, K. Utilizing Geospatial Data for Assessing Energy Security: Mapping Small Solar Home Systems Using Unmanned Aerial Vehicles and Deep Learning. ISPRS Int. J. Geo-Inf. 2022, 11, 222. https://doi.org/10.3390/ijgi11040222
