How Can Physiological Computing Benefit Human-Robot Interaction?

Abstract

1. Introduction

1.1. A Need for Physiology-Centered Research in Remote HRI

1.2. Interaction Modes and Autonomy Levels

- the frequency of human intervention;

- the type of control (i.e., manual vs. automatic);

- and the embedded capacities of the robots/artificial agents (i.e., to what extent they can achieve tasks autonomously).

2. Mental States of Interest for Human-Robot Interaction

2.1. Situation Awareness, Resource Engagement and Associated Mental States

2.1.1. Prime Mental States

2.1.2. Collateral Mental States

- Inattentional sensory impairments, such as inattentional blindness and inattentional deafness. These attentional phenomena consist of "missing" alarms when all attentional resources are engaged in another sensory modality. In the case of inattentional deafness, which is well studied in the aeronautical context, pilots under high workload miss auditory alarms when they are over-engaged in the visual modality (e.g., fixated on the runway) [58,59].

- Automation surprise, in which the operator is surprised by the behavior of the automation [60]. Although the cases reported in the aeronautical domain generally last several minutes, one subtype of automation surprise is the confusion that follows a brief unexpected event, such as a specific alarm. To bring the overall system back to its nominal state, it is important to detect this state in the operator, whether the confusion arises from a failure of the artificial agents or of the human ones. It might also be elicited by a general attentional disengagement of the operator, who is then unable to process system outputs correctly and is confused by any negative feedback. In any case, this state might lead the operator to make poor decisions, and it should be detected and taken into account in order to avoid system failure.

2.2. Physiological Features

2.2.1. Temporal Features

2.2.2. Spectral Features

2.2.3. Spatial Features

3. Operator Mental State Assessment

3.1. Preprocessing

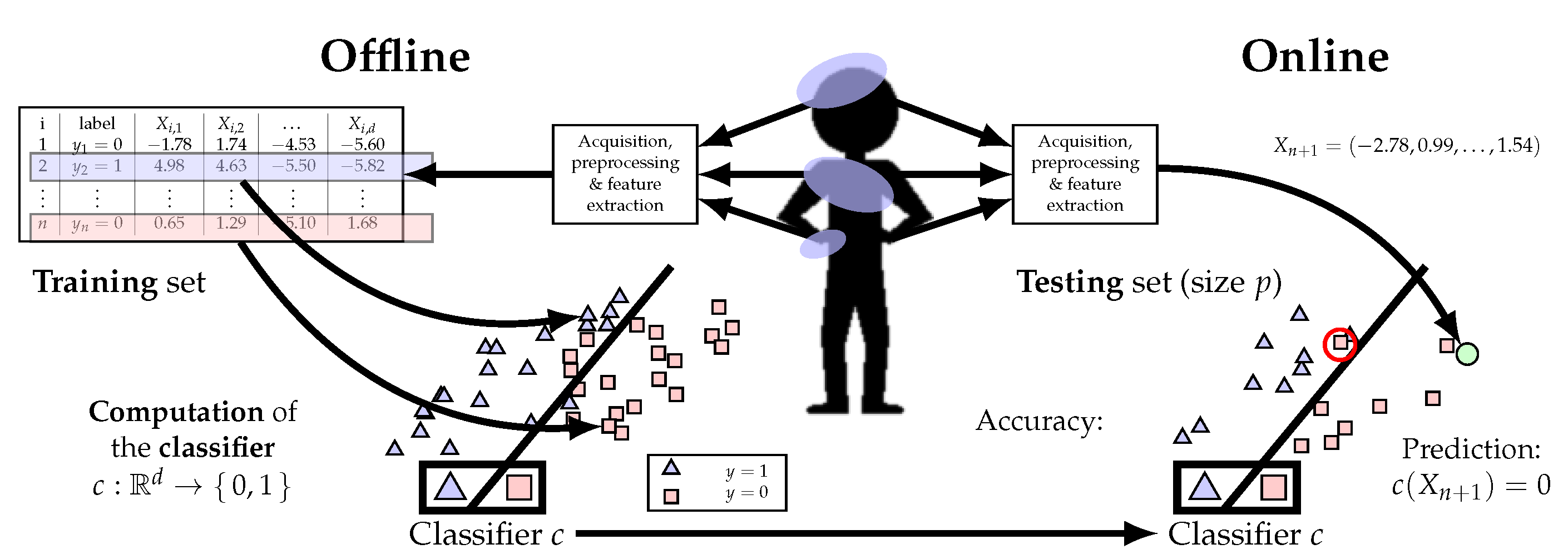

3.2. Learning and Classification

3.2.1. Classification Principle

3.2.2. Classification Performance

3.3. Some Famous Classifiers

3.3.1. Linear and Quadratic Discriminant Analyses

3.3.2. Support Vector Machine

3.3.3. k-Nearest Neighbors

3.4. Other Algorithms, Recent Advances, and Challenges

- Finding physiological features that are robust to the acquisition environment and tasks. Indeed, interactions between features have been found to significantly decrease classification performance [86,95]. Therefore, one should seek markers that are context-independent and that can be used efficiently both in the lab and in the field.

- Developing classification pipelines that are capable of transfer learning. Classifiers are rarely immune to the performance decrements caused by a change of task, participant, or even session. Pipelines that are robust to inter-subject, inter-session, and inter-task variability are therefore needed.

- Performing the estimation online and closing the loop, that is to say, feeding the mental state estimates to a decision-making system that can adapt the functioning of the whole system accordingly (e.g., by assigning tasks or sending alarms to the operator). This topic is addressed in the next section.
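The last challenge, closing the loop, can be illustrated with a minimal sketch. It assumes that an upstream classifier already delivers a workload estimate between 0 and 1 at regular intervals. The class name `LoopController`, the thresholds, the window length, and the action labels are all hypothetical and chosen only for illustration; they are not taken from any of the cited systems.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List


@dataclass
class LoopController:
    """Hypothetical decision layer fed by online mental state estimates."""
    high: float = 0.7   # assumed threshold above which workload counts as high
    low: float = 0.4    # assumed threshold below which workload counts as nominal
    window: int = 5     # number of recent estimates averaged for smoothing
    overloaded: bool = False
    _recent: Deque[float] = field(default_factory=deque)

    def update(self, estimate: float) -> str:
        """Take one workload estimate in [0, 1] and return an adaptation action."""
        self._recent.append(estimate)
        if len(self._recent) > self.window:
            self._recent.popleft()
        smoothed = sum(self._recent) / len(self._recent)
        # Hysteresis: two thresholds avoid flip-flopping around a single cut-off.
        if not self.overloaded and smoothed >= self.high:
            self.overloaded = True
            return "offload_task_to_robot"
        if self.overloaded and smoothed <= self.low:
            self.overloaded = False
            return "return_task_to_operator"
        return "no_change"


def run_loop(estimates: List[float]) -> List[str]:
    """Feed a sequence of estimates to a fresh controller and collect its actions."""
    controller = LoopController()
    return [controller.update(e) for e in estimates]


if __name__ == "__main__":
    stream = [0.2, 0.9, 0.9, 0.9, 0.9, 0.1, 0.1, 0.1, 0.1, 0.1]
    for estimate, action in zip(stream, run_loop(stream)):
        print(f"{estimate:.1f} -> {action}")
```

Smoothing over a short window and using two thresholds are design choices meant to keep single noisy estimates from triggering an adaptation; a real system would likely replace this rule with a planning or decision-theoretic component.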

4. Closing the Loop: Towards Flexible Symbiotic Systems

4.1. Symbiotic Systems: Principle

- Physiological data obtained with sensors worn by the human operator, such as electroencephalography (EEG), electrocardiography (ECG), electrodermal activity (EDA), near-infrared spectroscopy (NIRS), electromyography (EMG), etc. [39].

4.2. One Solution: Mixed-Initiative Interaction Driving Systems

4.3. Mixed-Initiative Symbiotic Interaction Systems: Existing Work

4.3.1. Adaptive Interaction Exploiting Subjective and Behavioral Data for Human State Estimation

- Subjective measures

- Actions and sequences of actions

- Vocal commands

- Ocular behavior

4.3.2. Adaptive Interaction Exploiting Physiological Data for Human State Estimation

- Active BCIs

- Passive BCI for active BCI

- Passive BCIs for mental workload management

4.4. Research Gaps and Future Directions

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Van Erp, J.; Lotte, F.; Tangermann, M. Brain-computer interfaces: Beyond medical applications. Computer 2012, 45, 26–34.

- Cinel, C.; Valeriani, D.; Poli, R. Neurotechnologies for human cognitive augmentation: Current state of the art and future prospects. Front. Hum. Neurosci. 2019, 13, 13.

- Vasic, M.; Billard, A. Safety issues in human-robot interactions. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 197–204.

- Zacharaki, A.; Kostavelis, I.; Gasteratos, A.; Dokas, I. Safety bounds in human robot interaction: A survey. Saf. Sci. 2020, 127, 104667.

- Goodrich, M.A.; Schultz, A.C. Human-robot Interaction: A Survey. Found. Trends Hum.-Comput. Interact. 2007, 1, 203–275.

- Sheridan, T.B. Human–Robot Interaction: Status and Challenges. Hum. Factors 2016, 58, 525–532.

- Nagatani, K.; Kiribayashi, S.; Okada, Y.; Otake, K.; Yoshida, K.; Tadokoro, S.; Nishimura, T.; Yoshida, T.; Koyanagi, E.; Fukushima, M.; et al. Emergency response to the nuclear accident at the Fukushima Daiichi Nuclear Power Plants using mobile rescue robots. J. Field Robot. 2013, 30, 44–63.

- Reich, F.; Heilemann, F.; Mund, D.; Schulte, A. Self-scaling Human-Agent Cooperation Concept for Joint Fighter-UCAV Operations. In Advances in Human Factors in Robots and Unmanned Systems; Springer: Berlin/Heidelberg, Germany, 2017; pp. 225–237.

- De Souza, P.E.U. Towards Mixed-Initiative Human-Robot Interaction: A Cooperative Human-Drone Team Framework. Ph.D. Thesis, Université de Toulouse, awarded by the Institut Supérieur de l’Aéronautique et de l’Espace (ISAE-SUPAERO), Toulouse, France, 2017.

- Parasuraman, R.; Wickens, C.D. Humans: Still Vital After All These Years of Automation. Hum. Factors 2008, 50, 511–520.

- VaezMousavi, S.; Barry, R.J.; Clarke, A.R. Individual differences in task-related activation and performance. Physiol. Behav. 2009, 98, 326–330.

- Mehler, B.; Reimer, B.; Coughlin, J.F.; Dusek, J.A. Impact of incremental increases in cognitive workload on physiological arousal and performance in young adult drivers. Transp. Res. Rec. 2009, 2138, 6–12.

- Callicott, J.H.; Mattay, V.S.; Bertolino, A.; Finn, K.; Coppola, R.; Frank, J.A.; Goldberg, T.E.; Weinberger, D.R. Physiological characteristics of capacity constraints in working memory as revealed by functional MRI. Cereb. Cortex 1999, 9, 20–26.

- Parasuraman, R.; Rizzo, M. Neuroergonomics: The Brain at Work; Oxford University Press: Oxford, UK, 2008.

- Ayaz, H.; Dehais, F. Neuroergonomics: The Brain at Work and in Everyday Life; Academic Press: Cambridge, MA, USA, 2018.

- Goodrich, M.A.; Olsen, D.R.; Crandall, J.W.; Palmer, T.J. Experiments in adjustable autonomy. In Proceedings of IJCAI Workshop on Autonomy, Delegation and Control: Interacting with Intelligent Agents; American Association for Artificial Intelligence Press: Seattle, WA, USA, 2001; pp. 1624–1629.

- Huang, H.M.; Messina, E.; Albus, J. Toward a Generic Model for Autonomy Levels for Unmanned Systems (ALFUS); Technical Report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2003.

- Tang, F.; Mohammed, M.; Longazo, J. Experiments of human-robot teaming under sliding autonomy. In Proceedings of the 2016 IEEE International Conference on Advanced Intelligent Mechatronics (AIM), Banff, AB, Canada, 12–15 July 2016; pp. 113–118.

- Truszkowski, W.; Hallock, H.; Rouff, C.; Karlin, J.; Rash, J.; Hinchey, M.; Sterritt, R. Autonomous and Autonomic Systems: With Applications to NASA Intelligent Spacecraft Operations and Exploration Systems; Springer: Berlin/Heidelberg, Germany, 2009.

- Huang, H.M.; Pavek, K.; Novak, B.; Albus, J.; Messina, E. A framework for autonomy levels for unmanned systems (ALFUS). Proc. AUVSIs Unmanned Syst. N. Am. 2005, 849–863.

- Sheridan, T.B.; Verplank, W.L. Human and Computer Control of Undersea Teleoperators; Technical Report; Massachusetts Institute of Tech Cambridge Man-Machine Systems Lab: Cambridge, MA, USA, 1978.

- Asaro, P. On banning autonomous weapon systems: Human rights, automation, and the dehumanization of lethal decision-making. Int. Rev. Red Cross 2012, 94, 687–709.

- Bonnemains, V.; Saurel, C.; Tessier, C. Embedded ethics: Some technical and ethical challenges. Ethics Inf. Technol. 2018, 20, 41–58.

- Nikitenko, A.; Durst, J. Autonomous systems and autonomy quantification. Digit. Infantry Battlef. Solut. 2016, 81.

- Tessier, C.; Dehais, F. Authority Management and Conflict Solving in Human-Machine Systems. AerospaceLab 2012. Available online: http://www.aerospacelab-journal.org/al4/authority-management-and-conflict-solving (accessed on 15 November 2020).

- Pizziol, S.; Tessier, C.; Dehais, F. Petri net-based modelling of human–automation conflicts in aviation. Ergonomics 2014, 57, 319–331.

- Gangl, S.; Lettl, B.; Schulte, A. Single-seat cockpit-based management of multiple UCAVs using on-board cognitive agents for coordination in manned-unmanned fighter missions. In Proceedings of the International Conference on Engineering Psychology and Cognitive Ergonomics, Las Vegas, NV, USA, 21–26 July 2013; pp. 115–124.

- Jiang, S.; Arkin, R.C. Mixed-Initiative Human-Robot Interaction: Definition, Taxonomy, and Survey. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Kowloon, China, 9–12 October 2015; pp. 954–961.

- Hardin, B.; Goodrich, M.A. On using mixed-initiative control: A perspective for managing large-scale robotic teams. In Proceedings of the 4th ACM/IEEE International Conference on Human Robot Interaction, Boulder, CO, USA, 8–11 March 2009; pp. 165–172.

- Gombolay, M.; Bair, A.; Huang, C.; Shah, J. Computational design of mixed-initiative human–robot teaming that considers human factors: Situational awareness, workload, and workflow preferences. Int. J. Robot. Res. 2017, 36, 597–617.

- Charles, J.A.; Chanel, C.P.C.; Chauffaut, C.; Chauvin, P.; Drougard, N. Human-Agent Interaction Model Learning based on Crowdsourcing. In Proceedings of the 6th International Conference on Human-Agent Interaction, Southampton, UK, 15–18 December 2018; pp. 20–28.

- Schulte, A.; Donath, D.; Honecker, F. Human-system interaction analysis for military pilot activity and mental workload determination. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Kowloon, China, 9–12 October 2015; pp. 1375–1380.

- Fairclough, S.H.; Gilleade, K. Advances in Physiological Computing; Springer: Berlin/Heidelberg, Germany, 2014.

- Fairclough, S.H. Fundamentals of physiological computing. Interact. Comput. 2009, 21, 133–145.

- Osiurak, F.; Navarro, J.; Reynaud, E. How our cognition shapes and is shaped by technology: A common framework for understanding human tool-use interactions in the past, present, and future. Front. Psychol. 2018, 9, 293.

- Zander, T.O.; Kothe, C. Towards passive brain–computer interfaces: Applying brain–computer interface technology to human–machine systems in general. J. Neural Eng. 2011, 8, 025005.

- Chen, D.; Vertegaal, R. Using mental load for managing interruptions in physiologically attentive user interfaces. In Proceedings of the CHI’04 Extended Abstracts on Human Factors in Computing Systems, Vienna, Austria, 24–29 April 2004; pp. 1513–1516.

- Singh, G.; Bermúdez i Badia, S.; Ventura, R.; Silva, J.L. Physiologically Attentive User Interface for Robot Teleoperation - Real Time Emotional State Estimation and Interface Modification Using Physiology, Facial Expressions and Eye Movements. In Proceedings of the Eleventh International Joint Conference on Biomedical Engineering Systems and Technologies, BIOSTEC 2018, Funchal, Madeira, Portugal, 19–21 January 2018.

- Roy, R.N.; Frey, J. Neurophysiological Markers for Passive Brain–Computer Interfaces. In Brain–Computer Interfaces 1: Foundations and Methods; Clerc, M., Bougrain, L., Lotte, F., Eds.; Wiley-ISTE: Hoboken, NJ, USA, 2016.

- Allen, J.; Guinn, C.I.; Horvitz, E. Mixed-initiative interaction. IEEE Intell. Syst. Appl. 1999, 14, 14–23.

- Ai-Chang, M.; Bresina, J.; Charest, L.; Chase, A.; Hsu, J.J.; Jonsson, A.; Kanefsky, B.; Morris, P.; Rajan, K.; Yglesias, J.; et al. Mapgen: Mixed-initiative planning and scheduling for the mars exploration rover mission. IEEE Intell. Syst. 2004, 19, 8–12.

- Chanel, C.P.; Roy, R.N.; Drougard, N.; Dehais, F. Mixed-Initiative Human-Automated Agents Teaming: Towards a Flexible Cooperation Framework. In Proceedings of the International Conference on Human-Computer Interaction, Copenhagen, Denmark, 19–24 July 2020; pp. 117–133.

- Endsley, M.R. Design and Evaluation for Situation Awareness Enhancement. Proc. Hum. Factors Soc. Ann. Meet. 1988, 32, 97–101.

- Jones, D.G.; Endsley, M.R. Sources of situation awareness errors in aviation. Aviat. Space Environ. Med. 1996, 67, 507–512.

- Endsley, M.R. Theoretical Underpinnings of Situation Awareness: A Critical Review. In Situation Awareness Analysis and Measurement; Endsley, M.R., Garland, D.J., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2000; Chapter 1.

- Sarter, N.B.; Woods, D.D. Situation Awareness: A Critical But Ill-Defined Phenomenon. Int. J. Aviat. Psychol. 1991, 1, 45–57.

- Wickens, C.; Kramer, A.; Vanasse, L.; Donchin, E. Performance of concurrent tasks: A psychophysiological analysis of the reciprocity of information-processing resources. Science 1983, 221, 1080–1082.

- Wickens, C.D. Attentional tunneling and task management. In Proceedings of the 2005 International Symposium on Aviation Psychology, Dayton, OH, USA, 14–17 April 2005.

- Cheyne, J.A.; Solman, G.J.; Carriere, J.S.; Smilek, D. Anatomy of an error: A bidirectional state model of task engagement/disengagement and attention-related errors. Cognition 2009, 111, 98–113.

- Gouraud, J.; Delorme, A.; Berberian, B. Out of the Loop, in Your Bubble: Mind Wandering Is Independent From Automation Reliability, but Influences Task Engagement. Front. Hum. Neurosci. 2018, 12, 383.

- Mackworth, J.F. Vigilance, arousal, and habituation. Psychol. Rev. 1968, 75, 308.

- Lal, S.K.; Craig, A. Driver fatigue: Electroencephalography and psychological assessment. Psychophysiology 2002, 39, 313–321.

- Smallwood, J.; Beach, E.; Schooler, J.W.; Handy, T.C. Going AWOL in the brain: Mind wandering reduces cortical analysis of external events. J. Cogn. Neurosci. 2008, 20, 458–469.

- Braboszcz, C.; Delorme, A. Lost in thoughts: Neural markers of low alertness during mind wandering. Neuroimage 2011, 54, 3040–3047.

- Cummings, M.L.; Mastracchio, C.; Thornburg, K.M.; Mkrtchyan, A. Boredom and distraction in multiple unmanned vehicle supervisory control. Interact. Comput. 2013, 25, 34–47.

- Mendl, M. Performing under pressure: Stress and cognitive function. Appl. Anim. Behav. Sci. 1999, 65, 221–244.

- Cain, B. A Review of the Mental Workload Literature; Technical Report; Defence Research And Development Toronto (Canada): Toronto, ON, Canada, 2007.

- Dehais, F.; Tessier, C.; Christophe, L.; Reuzeau, F. The perseveration syndrome in the pilot’s activity: Guidelines and cognitive countermeasures. In Human Error, Safety and Systems Development; Springer: Berlin/Heidelberg, Germany, 2010; pp. 68–80.

- Macdonald, J.S.; Lavie, N. Visual perceptual load induces inattentional deafness. Atten. Percept. Psychophys. 2011, 73, 1780–1789.

- Sarter, N.B.; Woods, D.D.; Billings, C.E. Automation surprises. Handb. Hum. Factors Ergon. 1997, 2, 1926–1943.

- Dehais, F.; Causse, M.; Pastor, J. Embedded eye tracker in a real aircraft: New perspectives on pilot/aircraft interaction monitoring. In Proceedings from The 3rd International Conference on Research in Air Transportation; Federal Aviation Administration: Fairfax, VA, USA, 2008.

- Peysakhovich, V.; Dehais, F.; Causse, M. Pupil diameter as a measure of cognitive load during auditory-visual interference in a simple piloting task. Procedia Manuf. 2015, 3, 5199–5205.

- Derosiere, G.; Mandrick, K.; Dray, G.; Ward, T.; Perrey, S. NIRS-measured prefrontal cortex activity in neuroergonomics: Strengths and weaknesses. Front. Hum. Neurosci. 2013, 7, 583.

- Durantin, G.; Dehais, F.; Delorme, A. Characterization of mind wandering using fNIRS. Front. Syst. Neurosci. 2015, 9, 45.

- Gateau, T.; Durantin, G.; Lancelot, F.; Scannella, S.; Dehais, F. Real-time state estimation in a flight simulator using fNIRS. PLoS ONE 2015, 10, e0121279.

- Malik, M.; Bigger, J.T.; Camm, A.J.; Kleiger, R.E.; Malliani, A.; Moss, A.J.; Schwartz, P.J. Heart rate variability: Standards of measurement, physiological interpretation, and clinical use. Eur. Heart J. 1996, 17, 354–381.

- Sörnmo, L.; Laguna, P. Bioelectrical Signal Processing in Cardiac and Neurological Applications; Elsevier: Amsterdam, The Netherlands; Academic Press: Boston, MA, USA, 2005.

- Roy, R.N.; Charbonnier, S.; Campagne, A. Probing ECG-based mental state monitoring on short time segments. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 6611–6614.

- Heard, J.; Harriott, C.E.; Adams, J.A. A Survey of Workload Assessment Algorithms. IEEE Trans. Hum. Mach. Syst. 2018, 48, 434–451.

- Borghini, G.; Astolfi, L.; Vecchiato, G.; Mattia, D.; Babiloni, F. Measuring neurophysiological signals in aircraft pilots and car drivers for the assessment of mental workload, fatigue and drowsiness. Neurosci. Biobehav. Rev. 2014, 44, 58–75.

- Dehais, F.; Causse, M.; Vachon, F.; Tremblay, S. Cognitive conflict in human–automation interactions: A psychophysiological study. Appl. Ergon. 2012, 43, 588–595.

- Luck, S.J. An Introduction to the Event-Related Potential Technique, 2nd ed.; The MIT Press: Cambridge, MA, USA, 2014.

- Murata, A.; Uetake, A.; Takasawa, Y. Evaluation of mental fatigue using feature parameter extracted from event-related potential. Int. J. Ind. Ergon. 2005, 35, 761–770.

- Fu, S.; Parasuraman, R. Event-related potentials (ERPs) in Neuroergonomics. In Neuroergonomics: The Brain at Work; Parasuraman, R., Rizzo, M., Eds.; Oxford University Press, Inc.: New York, NY, USA, 2007; pp. 15–31.

- Holm, A.; Lukander, K.; Korpela, J.; Sallinen, M.; Müller, K.M. Estimating Brain Load from the EEG. Sci. World J. 2009, 9, 639–651.

- Roy, R.N.; Bovo, A.; Gateau, T.; Dehais, F.; Chanel, C.P.C. Operator Engagement During Prolonged Simulated UAV Operation. IFAC-PapersOnLine 2016, 49, 171–176.

- Giraudet, L.; St-Louis, M.E.; Scannella, S.; Causse, M. P300 event-related potential as an indicator of inattentional deafness? PLoS ONE 2015, 10, e0118556.

- Scannella, S.; Roy, R.; Laouar, A.; Dehais, F. Auditory neglect in the cockpit: Using ERPs to disentangle early from late processes in the inattentional deafness phenomenon. Proc. Int. Neuroergon. Conf. 2016.

- Ferrez, P.W.; Millán, J.R. Error-related EEG potentials generated during simulated brain–computer interaction. IEEE Trans. Biomed. Eng. 2008, 55, 923–929.

- Chavarriaga, R.; Sobolewski, A.; Millán, J.R. Errare machinale est: The use of error-related potentials in brain-machine interfaces. Front. Neurosci. 2014, 8, 208.

- Pope, A.T.; Bogart, E.H.; Bartolome, D.S. Biocybernetic system evaluates indices of operator engagement in automated task. Biol. Psychol. 1995, 40, 187–195.

- Coull, J. Neural correlates of attention and arousal: Insights from electrophysiology, functional neuroimaging and psychopharmacology. Prog. Neurobiol. 1998, 55, 343–361.

- Klimesch, W. EEG alpha and theta oscillations reflect cognitive and memory performance: A review and analysis. Brain Res. Rev. 1999, 29, 169–195.

- Gevins, A.; Smith, M.E. Neurophysiological measures of working memory and individual differences in cognitive ability and cognitive style. Cereb. Cortex 2000, 10, 829–839.

- Lotte, F.; Congedo, M. EEG feature extraction. In Brain–Computer Interfaces 2: Signal Processing and Machine Learning; Clerc, M., Bougrain, L., Lotte, F., Eds.; Wiley-ISTE: Hoboken, NJ, USA, 2016.

- Roy, R.N.; Bonnet, S.; Charbonnier, S.; Campagne, A. Mental fatigue and working memory load estimation: Interaction and implications for EEG-based passive BCI. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 6607–6610.

- Charbonnier, S.; Roy, R.N.; Bonnet, S.; Campagne, A. EEG index for control operators’ mental fatigue monitoring using interactions between brain regions. Expert Syst. Appl. 2016, 52, 91–98.

- Roy, R.N.; Charbonnier, S.; Bonnet, S. Eye blink characterization from frontal EEG electrodes using source separation and pattern recognition algorithms. Biomed. Signal Process. Control 2014, 14, 256–264.

- Alpaydin, E. Introduction to Machine Learning; MIT Press: Cambridge, MA, USA, 2009.

- Kotsiantis, S.B.; Zaharakis, I.; Pintelas, P. Supervised machine learning: A review of classification techniques. Emerg. Artif. Intell. Appl. Comput. Eng. 2007, 160, 3–24.

- Lotte, F.; Bougrain, L.; Cichocki, A.; Clerc, M.; Congedo, M.; Rakotomamonjy, A.; Yger, F. A review of classification algorithms for EEG-based brain–computer interfaces: A 10 year update. J. Neural Eng. 2018, 15, 031005.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Drougard, N.; Chanel, C.P.C.; Roy, R.N.; Dehais, F. Mixed-initiative mission planning considering human operator state estimation based on physiological sensors. In Proceedings of the IROS-2017 workshop on Human-Robot Interaction in Collaborative Manufacturing Environments (HRI-CME), Vancouver, BC, Canada, 24 September 2017.

- Dehais, F.; Dupres, A.; Di Flumeri, G.; Verdiere, K.; Borghini, G.; Babiloni, F.; Roy, R. Monitoring pilot’s cognitive fatigue with engagement features in simulated and actual flight conditions using an hybrid fNIRS-EEG passive BCI. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 544–549.

- Mühl, C.; Jeunet, C.; Lotte, F. EEG-based workload estimation across affective contexts. Front. Neurosci. 2014, 8, 114.

- Roy, R.N.; Bonnet, S.; Charbonnier, S.; Campagne, A. Efficient workload classification based on ignored auditory probes: A proof of concept. Front. Hum. Neurosci. 2016, 10, 519.

- Dehais, F.; Roy, R.; Scannella, S. Inattentional deafness to auditory alarms: Inter-individual differences, electrophysiological signature and single trial classification. Behav. Brain Res. 2019, 360, 51–59.

- Dehais, F.; Duprès, A.; Blum, S.; Drougard, N.; Scannella, S.; Roy, R.N.; Lotte, F. Monitoring Pilot’s Mental Workload Using ERPs and Spectral Power with a Six-Dry-Electrode EEG System in Real Flight Conditions. Sensors 2019, 19, 1324.

- Lachenbruch, P.A.; Goldstein, M. Discriminant analysis. Biometrics 1979, 35, 69–85.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Vert, J.P.; Tsuda, K.; Schölkopf, B. A primer on kernel methods. Kernel Methods Comput. Biol. 2004, 47, 35–70.

- Altman, N.S. An introduction to kernel and nearest-neighbor nonparametric regression. Am. Stat. 1992, 46, 175–185.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Widrow, B.; Lehr, M.A. 30 years of adaptive neural networks: Perceptron, madaline, and backpropagation. Proc. IEEE 1990, 78, 1415–1442.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Craik, A.; He, Y.; Contreras-Vidal, J.L. Deep learning for electroencephalogram (EEG) classification tasks: A review. J. Neural Eng. 2019, 16, 031001.

- LeCun, Y.A.; Bottou, L.; Orr, G.B.; Müller, K.R. Efficient backprop. In Neural networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; pp. 9–48.

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Map. 2017, 38, 5391–5420.

- Lotte, F.; Congedo, M.; Lécuyer, A.; Lamarche, F.; Arnaldi, B. A review of classification algorithms for EEG-based brain–computer interfaces. J. Neural Eng. 2007, 4, R1.

- Choi, S.; Cichocki, A.; Park, H.M.; Lee, S.Y. Blind source separation and independent component analysis: A review. Neural Inform. Process.-Lett. Rev. 2005, 6, 1–57.

- Barachant, A.; Bonnet, S.; Congedo, M.; Jutten, C. Classification of covariance matrices using a Riemannian-based kernel for BCI applications. Neurocomputing 2013, 112, 172–178.

- Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain–computer interfaces for communication and control. Clin. Neurophysiol. 2002, 113, 767–791.

- Cutrell, E.; Tan, D. BCI for passive input in HCI. Proc. CHI 2008, 8, 1–3.

- Zander, T.O.; Kothe, C.; Welke, S.; Rötting, M. Utilizing secondary input from passive brain-computer interfaces for enhancing human-machine interaction. In International Conference on Foundations of Augmented Cognition; Springer: Berlin/Heidelberg, Germany, 2009; pp. 759–771.

- Prinzel, L.J.; Freeman, F.G.; Scerbo, M.W.; Mikulka, P.J.; Pope, A.T. Effects of a psychophysiological system for adaptive automation on performance, workload, and the event-related potential P300 component. Hum. Factors 2003, 45, 601–614.

- De Souza, P.E.U.; Chanel, C.P.C.; Dehais, F. MOMDP-based target search mission taking into account the human operator’s cognitive state. In Proceedings of the 2015 IEEE 27th International Conference on Tools with Artificial Intelligence (ICTAI), Vietri sul Mare, Italy, 9–11 November 2015; pp. 729–736.

- Gateau, T.; Chanel, C.P.C.; Le, M.H.; Dehais, F. Considering human’s non-deterministic behavior and his availability state when designing a collaborative human-robots system. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 4391–4397.

- Sellner, B.; Heger, F.W.; Hiatt, L.M.; Simmons, R.; Singh, S. Coordinated multiagent teams and sliding autonomy for large-scale assembly. Proc. IEEE 2006, 94, 1425–1444.

- Atrash, A.; Pineau, J. A Bayesian method for learning POMDP observation parameters for robot interaction management systems. In Proceedings of the POMDP Practitioners Workshop, Toronto, ON, Canada, 12 May 2010.

- Hoey, J.; Poupart, P.; von Bertoldi, A.; Craig, T.; Boutilier, C.; Mihailidis, A. Automated handwashing assistance for persons with dementia using video and a partially observable Markov decision process. Comput. Vis. Image Underst. 2010, 114, 503–519.

- Dehais, F.; Goudou, A.; Lesire, C.; Tessier, C. Towards an anticipatory agent to help pilots. In Proceedings of the AAAI 2005 Fall Symposium “From Reactive to Anticipatory Cognitive Embodied Systems”, Arlington, VA, USA, 4–6 November 2005.

- Cummings, M.L.; Mitchell, P.J. Predicting controller capacity in supervisory control of multiple UAVs. IEEE Trans. Syst. Man Cybern.-Part A Syst. Hum. 2008, 38, 451–460.

- Dehais, F.; Peysakhovich, V.; Scannella, S.; Fongue, J.; Gateau, T. Automation surprise in aviation: Real-time solutions. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015; pp. 2525–2534.

- Nikolaidis, S.; Ramakrishnan, R.; Gu, K.; Shah, J. Efficient Model Learning from Joint-Action Demonstrations for Human-Robot Collaborative Tasks. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction; ACM: New York, NY, USA, 2015; pp. 189–196.

- Atrash, A.; Pineau, J. A bayesian reinforcement learning approach for customizing human-robot interfaces. In Proceedings of the 14th International Conference on Intelligent User Interfaces, Sanibel Island, FL, USA, 8–11 February 2009; pp. 355–360.

- Regis, N.; Dehais, F.; Rachelson, E.; Thooris, C.; Pizziol, S.; Causse, M.; Tessier, C. Formal Detection of Attentional Tunneling in Human Operator-Automation Interactions. IEEE Trans. Hum.-Mach. Syst. 2014, 44, 326–336.

- Nicolas-Alonso, L.F.; Gomez-Gil, J. Brain Computer Interfaces, a Review. Sensors 2012, 12, 1211–1279.

- Ghosh, D. An Adaptive Human Brain to Computer Interface System for Robotic or Wheel-Chair based Navigational Tasks. Master’s Thesis, TUM Technische Universität München, KTH Industrial Engineering and Management Master of Science, Stockholm, Sweden, 2012.

- Aricò, P.; Borghini, G.; Di Flumeri, G.; Colosimo, A.; Bonelli, S.; Golfetti, A.; Pozzi, S.; Imbert, J.P.; Granger, G.; Benhacene, R.; et al. Adaptive automation triggered by EEG-based mental workload index: A passive brain-computer interface application in realistic air traffic control environment. Front. Hum. Neurosci. 2016, 10, 539.

- Chanel, C.P.; Roy, R.N.; Dehais, F.; Drougard, N. Towards Mixed-Initiative Human–Robot Interaction: Assessment of Discriminative Physiological and Behavioral Features for Performance Prediction. Sensors 2020, 20, 296.

- Appriou, A.; Cichocki, A.; Lotte, F. Modern Machine-Learning Algorithms: For Classifying Cognitive and Affective States From Electroencephalography Signals. IEEE Syst. Man Cybern. Mag. 2020, 6, 29–38.

- Pongsakornsathien, N.; Lim, Y.; Gardi, A.; Hilton, S.; Planke, L.; Sabatini, R.; Kistan, T.; Ezer, N. Sensor networks for aerospace human-machine systems. Sensors 2019, 19, 3465.

- Cavallo, F.; Limosani, R.; Fiorini, L.; Esposito, R.; Furferi, R.; Governi, L.; Carfagni, M. Design impact of acceptability and dependability in assisted living robotic applications. Int. J. Interact. Des. Manuf. 2018, 12, 1167–1178.

- Gimhae, G.N. Six human factors to acceptability of wearable computers. Int. J. Multimed. Ubiquitous Eng. 2013, 8, 103–114.

- Dias, R.D.; Ngo-Howard, M.C.; Boskovski, M.T.; Zenati, M.A.; Yule, S.J. Systematic review of measurement tools to assess surgeons’ intraoperative cognitive workload. Br. J. Surg. 2018, 105, 491–501.

- Omurtag, A.; Roy, R.N.; Dehais, F.; Chatty, L.; Garbey, M. Tracking mental workload by multimodal measurements in the operating room. In Neuroergonomics; Elsevier: Amsterdam, The Netherlands, 2019; pp. 99–103.

- Memar, A.H.; Esfahani, E.T. Objective Assessment of Human Workload in Physical Human-robot Cooperation Using Brain Monitoring. ACM Trans. Hum. Robot Interact. 2019, 9, 1–21.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Roy, R.N.; Drougard, N.; Gateau, T.; Dehais, F.; Chanel, C.P.C. How Can Physiological Computing Benefit Human-Robot Interaction? Robotics 2020, 9, 100. https://doi.org/10.3390/robotics9040100