1. Introduction

Research and development of robots that play with humans have been conducted for more than three decades. Robots that play a game were developed as tools to investigate the learning mechanisms of humans and robots, such as a robot that played hide-and-seek [1] or a robot that learned how to play from children [2].

Education is another important purpose of playing robots. There have been many works on developing robots for special education [3,4], such as those for children with physical disabilities [5,6] or with autism [7,8,9,10]. Other works have developed robots not only for children with disabilities but also for typically developing children, to help their social development [11,12].

Developing robots that play physical games with children is a big challenge. We need to ensure the safety of both the human and the robot, and we need methods that achieve human–robot communication and an understanding of human behavior.

We have been developing a robot that plays the darumasan-ga-koronda game with children [13,14,15]. Darumasan-ga-koronda is a traditional Japanese children’s game. Our final goal is to develop a robot that plays darumasan-ga-koronda with children while fulfilling the two roles mentioned above. In our previous works, we developed methods that realize some of the functions needed to play the game as “it.” First, the robot tracks the players’ movement and determines whether a player is moving when the player should freeze [13]. To track the players [14], we applied a human detection method based on a laser range finder (LRF) that was originally developed for human following [16]. Then, we achieved robust detection and tracking that accounts for the various postures of the players [15].

In this paper, we describe the total robot system that plays darumasan-ga-koronda as “it.” We only briefly describe the parts already published [13,14,15] and then describe the other functions needed to achieve the total play, such as touching the human players and returning to the original position after a game.

The paper is organized as follows. The darumasan-ga-koronda game and the robot design are described in Section 2. Section 3 describes the development of the robot hardware, including the expandable and contractible arm. In Section 4, we describe the function to detect and track the players. Section 5 describes the “out” judgment method. The function to touch the human player is described in Section 6. Then, Section 7 describes the function to return to the origin to play the game iteratively. The total system is evaluated in Section 8.

2. The Darumasan-Ga-Koronda Game and Robot Design

The Rule of the Game

First, we briefly describe the rules of the darumasan-ga-koronda game. Darumasan-ga-koronda is a multiplayer game that resembles the “red light, green light” or “statue” game [17].

The game is played in a field with three or more players. One of the players becomes “it” and stands at the edge of the field. The supplemental material (video) provides an example of the game played by three people. The game proceeds through the following states.

(1) In the beginning, “it” and the other players stand at a distance from each other.

(2) “It” turns away from the other players and chants “Da-ru-ma-sa-n-ga-ko-ro-n-da.” While “it” is chanting, the other players approach “it.”

(3) After chanting, “it” looks back. At this moment, the other players should freeze. If “it” finds a player moving, “it” utters “Out!”

(4) Players who are declared “out” are caught by “it.” If “it” catches all the other players, “it” wins, and the game finishes.

(5) If a player touches “it,” the caught players are released.

(6) At the moment of release, “it” shouts “Stop!”, and the other players should stop.

(7) “It” moves toward the nearest player in three steps. If “it” can touch the player, “it” says “Out!” to the touched player, and that player becomes the next “it.”

When designing the robot that plays darumasan-ga-koronda, we changed the rules of the game as follows so that the robot could actually participate in the game.

- The role of the robot is fixed as “it.”

- The game involves touching in both directions. For a player touching “it,” we changed the rule so that the player clicks a mouse button instead of touching the robot’s body. For the robot touching a player, we developed a new robotic arm.

- When the robot moves toward a player in Step (7) above, it moves (using its wheels) a distance equivalent to three steps of an adult.

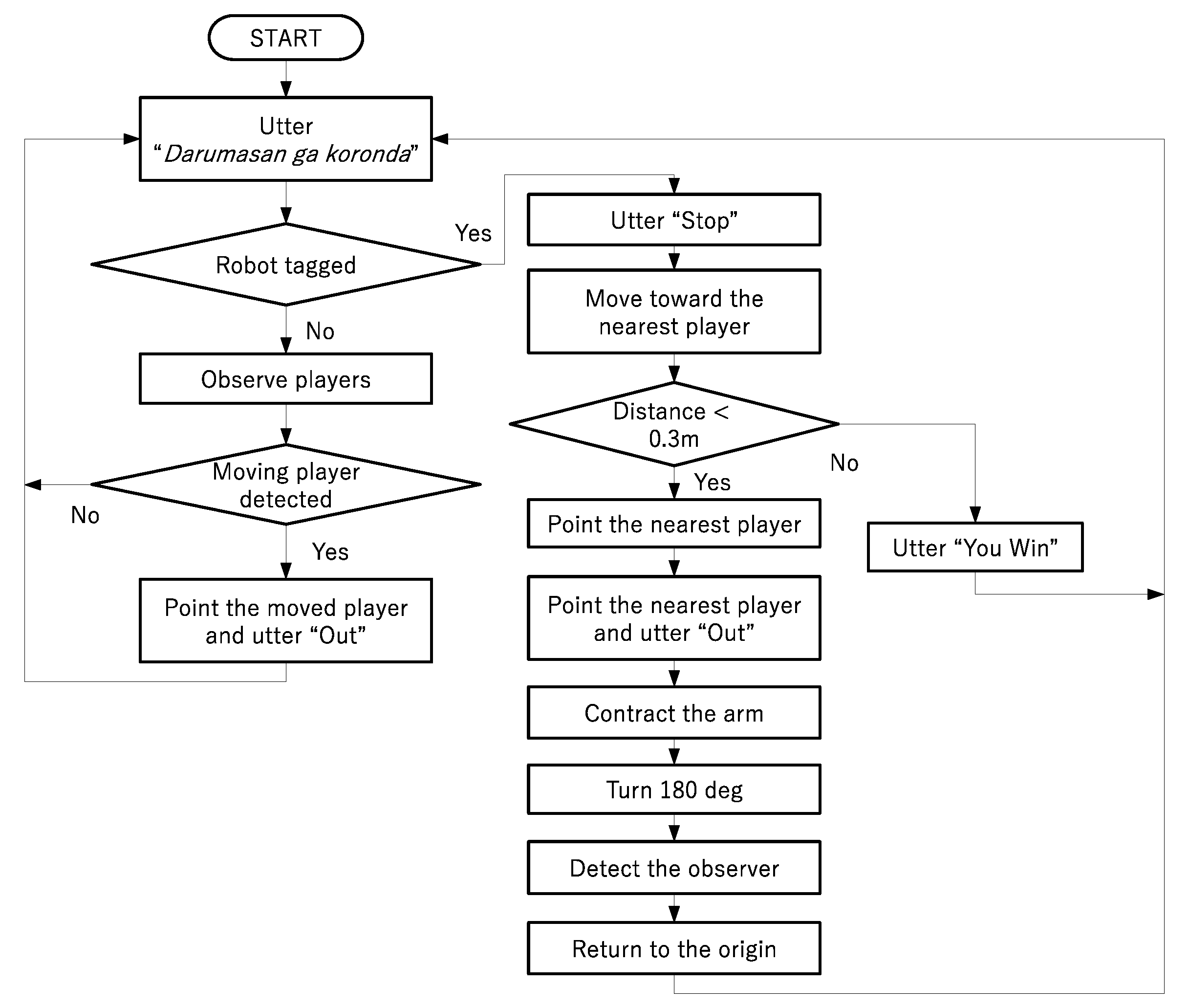

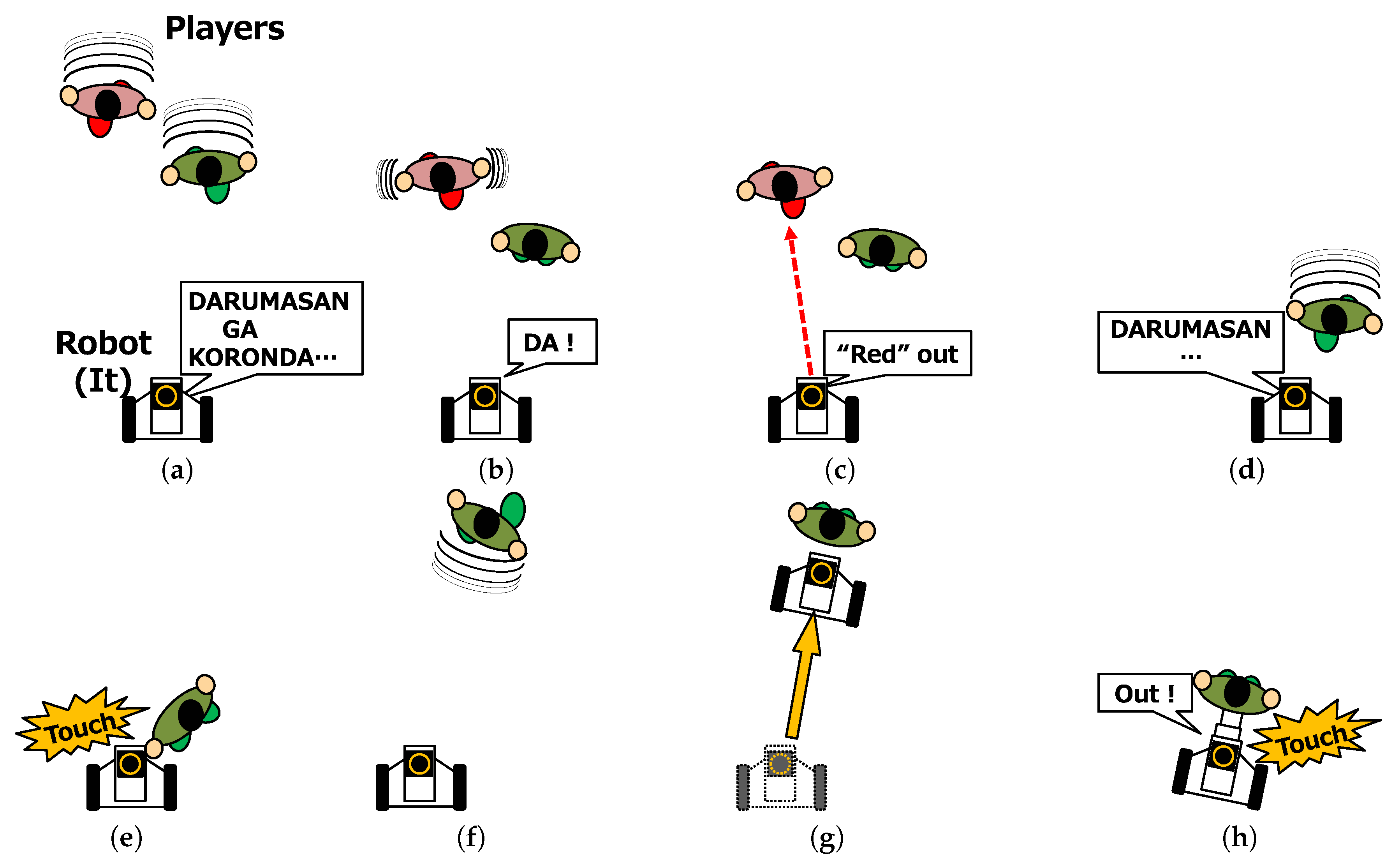

Figure 1 shows a flowchart of the robot’s behavior.
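The game flow of Section 2, under the modified rules, can be summarized as a small state machine. The Python sketch below is a hypothetical simplification written from the rules above, not the actual control program behind Figure 1; the state names and the `next_state` function are illustrative assumptions.

```python
from enum import Enum, auto


class State(Enum):
    CHANT = auto()      # robot faces away and chants; players approach
    LOOK_BACK = auto()  # robot looks back and judges whether players are freezing
    STOP = auto()       # a player clicked the mouse ("touched" the robot); robot shouts "Stop!"
    APPROACH = auto()   # robot drives three adult steps toward the nearest player
    TOUCH = auto()      # robot extends its arm to touch that player and calls "Out!"
    RETURN = auto()     # robot returns to the origin and faces front
    GAME_OVER = auto()  # current game finished


def next_state(state: State, *, tagged: bool = False, all_out: bool = False) -> State:
    """Hypothetical transition function for the simplified game flow.

    tagged:  a player clicked the mouse button while the robot was chanting.
    all_out: every player has been called "out" (the robot wins).
    """
    if state is State.CHANT:
        return State.STOP if tagged else State.LOOK_BACK
    if state is State.LOOK_BACK:
        return State.GAME_OVER if all_out else State.CHANT
    if state is State.STOP:
        return State.APPROACH
    if state is State.APPROACH:
        return State.TOUCH
    if state is State.TOUCH:
        return State.RETURN
    # RETURN and GAME_OVER both end the current game.
    return State.GAME_OVER
```

For example, a tag during chanting leads through `STOP`, `APPROACH`, `TOUCH`, and `RETURN` before the game ends; with continuous play (Section 7), the robot would then start again at `CHANT`.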

Including the above, we developed (in this work or previously) the following techniques for a robot that plays darumasan-ga-koronda.

- (A) Detect the players

- (B) Track the detected players [15]

- (C) Decide whether players are freezing [13]

- (D) Move to the nearest player [15]

- (E) Touch the player

- (F) Move back to the original position

3. Development of the Robot Hardware

3.1. The Hardware

Figure 2 shows the developed robot that plays the game. We developed it based on Carry-PM3, a mobile robot developed by our group [13]. The base of the robot was the mobile robot i-Cart edu [18]. A robot avatar, a small communication robot, was mounted on Carry-PM3; the avatar makes pointing gestures and communicates with the players [19]. The robot weighed 11.5 kg, and its maximum moving speed was 0.8 m/s.

3.2. Development of an Expandable and Contractible Arm

From observations of human–human play of darumasan-ga-koronda, we found that “it” touched the other players’ backs between the middle of the back and the shoulders. Thus, we designed the robot to touch a player below the shoulders. Since the average axilla (underarm) height of Japanese adults is 1220.6 mm [20], we set the height of the robot’s arm to 1220 mm.

It is difficult to design a robot mechanism that directly contacts a human. When humans played the game, “it” touched the players mostly by swinging an arm downward, and in children’s play, “it” sometimes slaps a player on the body. If the robot’s arm swung downward, as shown in Figure 3a, its range of motion would be relatively wide, which may cause a collision with other players. Thus, we designed the arm to move linearly and touch a player with a pushing motion, as shown in Figure 3b.

When a robot touches a player, a large force may harm the player. There have been several works on the safety of manipulators [21,22]. Jeong and Takahashi [22] proposed two indices for safety and dexterity. In our work, the arm has only one function, touching the player; thus, we addressed safety by restricting the pushing force of the arm. Players freeze in various postures, so the pushing force of the arm must be small enough to be harmless regardless of where it touches. ISO/TS 15066 specifies that the static contact force of a robot on the human abdomen should not exceed 110 N [23]. Considering that players may be children, we decided that the pushing force of the arm should not exceed 20 N.

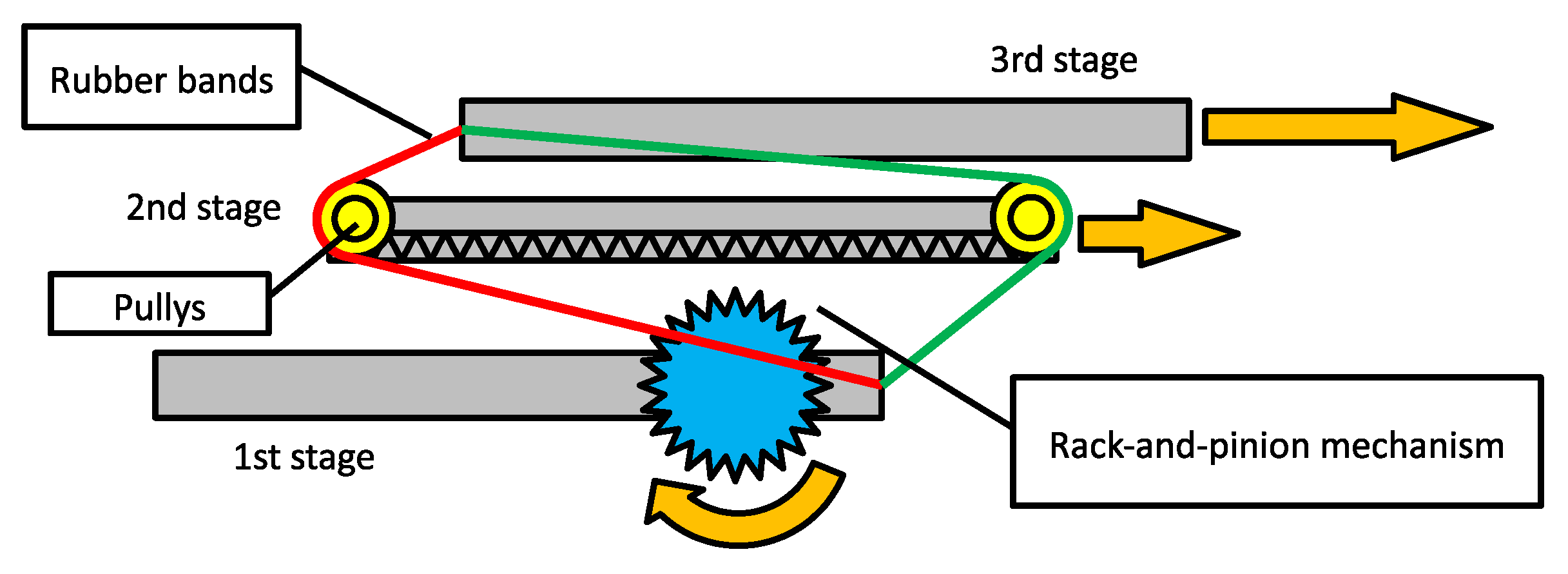

We employed a rack-and-pinion mechanism for the linear-motion arm. Since the expected distance between the robot and a player when touching is 300 mm, the arm should extend at least 300 mm. To keep the arm compact when retracted, we employed a three-stage telescopic mechanism, as shown in Figure 4. An actuator (Dynamixel MX-64AR) was attached to the first stage of the arm, and the second stage moved linearly via the rack and pinion gears. Two rubber bands were attached to the right side of the first stage and the left side of the third stage, and pulleys attached to the second stage held the rubber bands. When the second stage moved, it moved the rubber bands so that the bands pushed the third stage. With this mechanism, the third stage automatically extended and retracted along with the second stage.

Figure 5 illustrates the extending mechanism of the arm. Because of the rubber bands, the arm yields flexibly when it is pushed back, which reduces the pushing force.

4. Detection and Tracking of the Players

The detection and tracking of the players are the central parts of the robot. Their details were described by Nakamori et al. [15], so we outline the system only briefly here.

We used an LRF to detect players. The detection method was based on a human-following method [16], which detects persons by considering the size of a human body. The LRF scanned the environment from right to left and measured the distance between the LRF and an obstacle at each angle. We segmented objects using a threshold on the distance difference between two contiguous points. After detecting the objects, we decided whether each object was a human by considering the size of a human body.
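The detection step described above can be sketched as follows. This is a minimal illustration, not the implementation of [15,16]: it assumes the scan arrives as ordered `(angle_rad, range_mm)` pairs, measures an object's size as the chord between its end points, and uses hypothetical values for the jump threshold `JUMP_MM` and the body-width bounds `MIN_WIDTH_MM` and `MAX_WIDTH_MM`.

```python
import math

# Hypothetical parameters; the values used in [15,16] are not given here.
JUMP_MM = 300.0       # range jump between contiguous points that starts a new object
MIN_WIDTH_MM = 200.0  # narrowest object accepted as a human body
MAX_WIDTH_MM = 800.0  # widest object accepted as a human body


def segment(scan):
    """Split an ordered scan [(angle_rad, range_mm), ...] into objects of contiguous (x, y) points."""
    objects, current, prev_range = [], [], None
    for angle, rng in scan:
        if prev_range is not None and abs(rng - prev_range) > JUMP_MM:
            objects.append(current)  # large range jump: close the current object
            current = []
        current.append((rng * math.cos(angle), rng * math.sin(angle)))
        prev_range = rng
    if current:
        objects.append(current)
    return objects


def detect_people(scan):
    """Return the center (x, y) of every object whose width is plausible for a human body."""
    people = []
    for pts in segment(scan):
        width = math.dist(pts[0], pts[-1])  # chord between the object's end points
        if MIN_WIDTH_MM <= width <= MAX_WIDTH_MM:
            cx = sum(p[0] for p in pts) / len(pts)
            cy = sum(p[1] for p in pts) / len(pts)
            people.append((cx, cy))
    return people
```

In a synthetic scan of a person about 2 m away in front of a wall 5 m away, the person forms a short segment that passes the width check, while the long wall segments are rejected as too wide.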

After detecting the players, the robot needed to track each player. We employed a simple tracking algorithm in which two persons observed in two contiguous time frames were regarded as the same person when the distance between their central points was shorter than a predefined threshold.
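The frame-to-frame association can be sketched as below. The sketch assumes a greedy nearest-neighbor matching in which each previous track is used at most once and unmatched detections start new tracks; the threshold `MATCH_MM` and the `track` function are hypothetical, not taken from [15].

```python
import math

MATCH_MM = 300.0  # hypothetical distance threshold between consecutive frames


def track(prev, detections, next_id):
    """Associate current detections with previous tracks by center distance.

    prev:       dict track_id -> (x, y) center from the previous frame
    detections: list of (x, y) centers in the current frame
    next_id:    first unused track id
    Returns (tracks, next_id) for the current frame.
    """
    tracks = {}
    unused = dict(prev)
    for p in detections:
        best_id, best_d = None, MATCH_MM
        for tid, q in unused.items():
            d = math.dist(p, q)
            if d < best_d:  # closer than the threshold and than any earlier candidate
                best_id, best_d = tid, d
        if best_id is None:
            best_id = next_id  # no match: treat as a new player
            next_id += 1
        else:
            del unused[best_id]  # a previous track can be matched only once
        tracks[best_id] = p
    return tracks, next_id
```

Greedy matching keeps the sketch short; it can mislabel players who pass very close to each other, which is one reason more careful association methods exist.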

5. The “Out” Judgment

5.1. Judgment of a Player’s Movement

When the players should freeze, “it” calls “Out!” if it finds a player moving. To realize this play, the robot judged whether the players were freezing. For this judgment, we used the same method as for player detection and tracking.

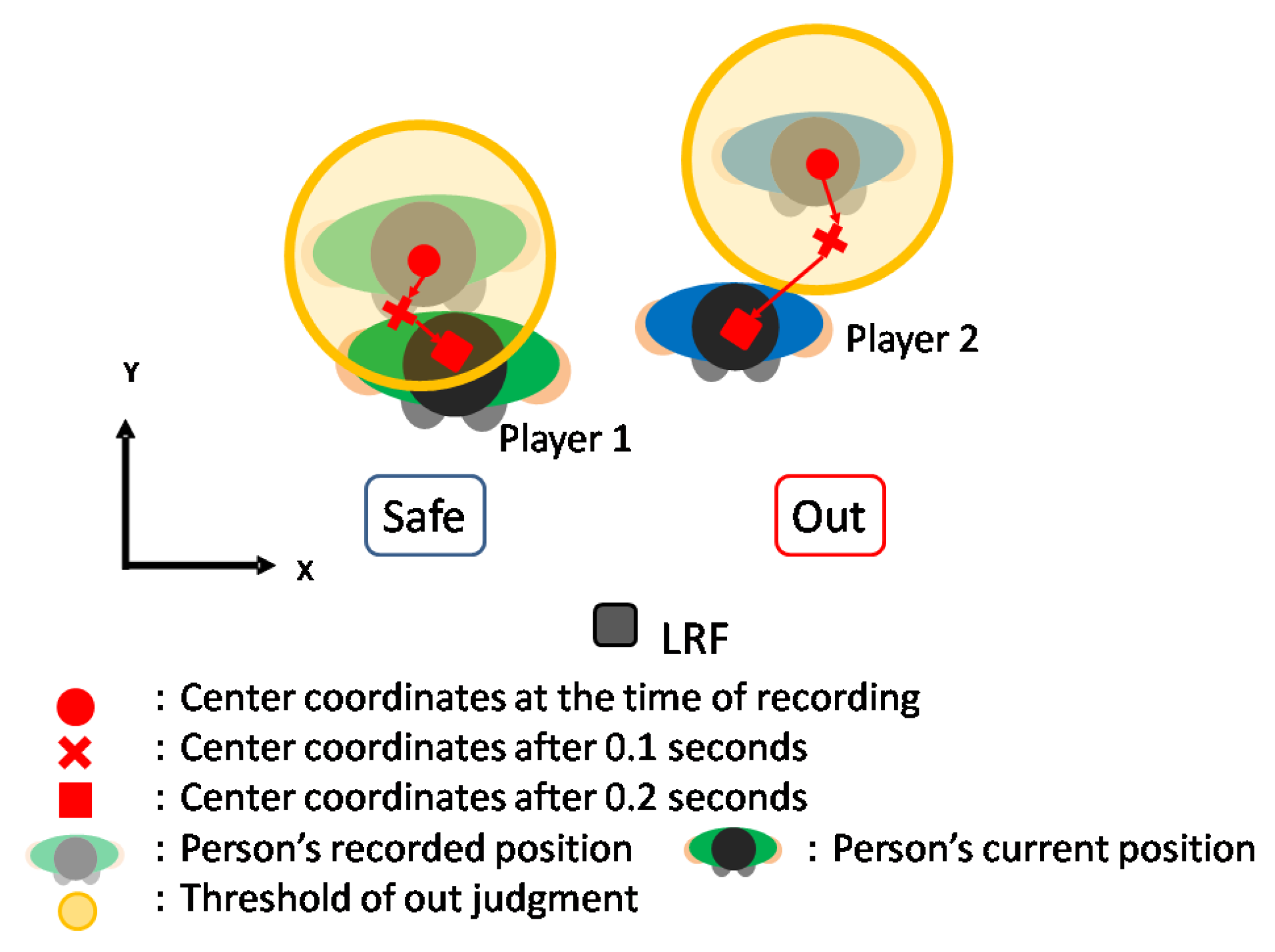

The basic idea was to measure the fluctuation of a player’s center point while the player was freezing. By measuring this fluctuation, the robot can determine whether a player should be judged as “out.”

Figure 6 shows the “out” judgment. First, the robot observes the players’ center positions. Let $t_0$ be the time when the robot starts the observation, and denote the time of the next LRF scan as $t_0 + 1$, where one scan takes 0.1 s. Let $\mathbf{p}_i(t)$ be the two-dimensional position of the $i$-th player at time $t$. Then, the robot judges that player $i$ moves when the fluctuation of $\mathbf{p}_i(t)$ during the observation exceeds the judgment threshold $\theta$ (Equation (1)).
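Writing $\mathbf{p}_i(t)$ for the position of player $i$ at scan $t$, $t_0$ for the start of the observation, $T$ for the number of scans observed, and $\theta$ for the threshold, one plausible form of the condition, assuming the fluctuation is measured as the largest displacement from the position at $t_0$, is

$$\max_{1 \le k \le T} \left\lVert \mathbf{p}_i(t_0 + k) - \mathbf{p}_i(t_0) \right\rVert > \theta .$$

For example, $T = 100$ scans corresponds to a 10-s observation. A Python sketch of this assumed rule follows; the function name `is_moving` is hypothetical, and positions are in millimeters.

```python
import math


def is_moving(positions, threshold_mm):
    """Assumed "out" rule: True if the center moved more than threshold_mm from its start.

    positions: [(x, y), ...] one center per LRF scan (0.1 s apart), starting at t0.
    """
    x0, y0 = positions[0]
    return any(math.hypot(x - x0, y - y0) > threshold_mm for x, y in positions[1:])
```

With the thresholds of Section 5.2, the call would be `is_moving(track_of_player, 40.0)` in the static condition and `is_moving(track_of_player, 80.0)` in the dynamic condition.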

5.2. Experiment for Determining the Detection Threshold

We carried out an evaluation experiment to determine an appropriate threshold

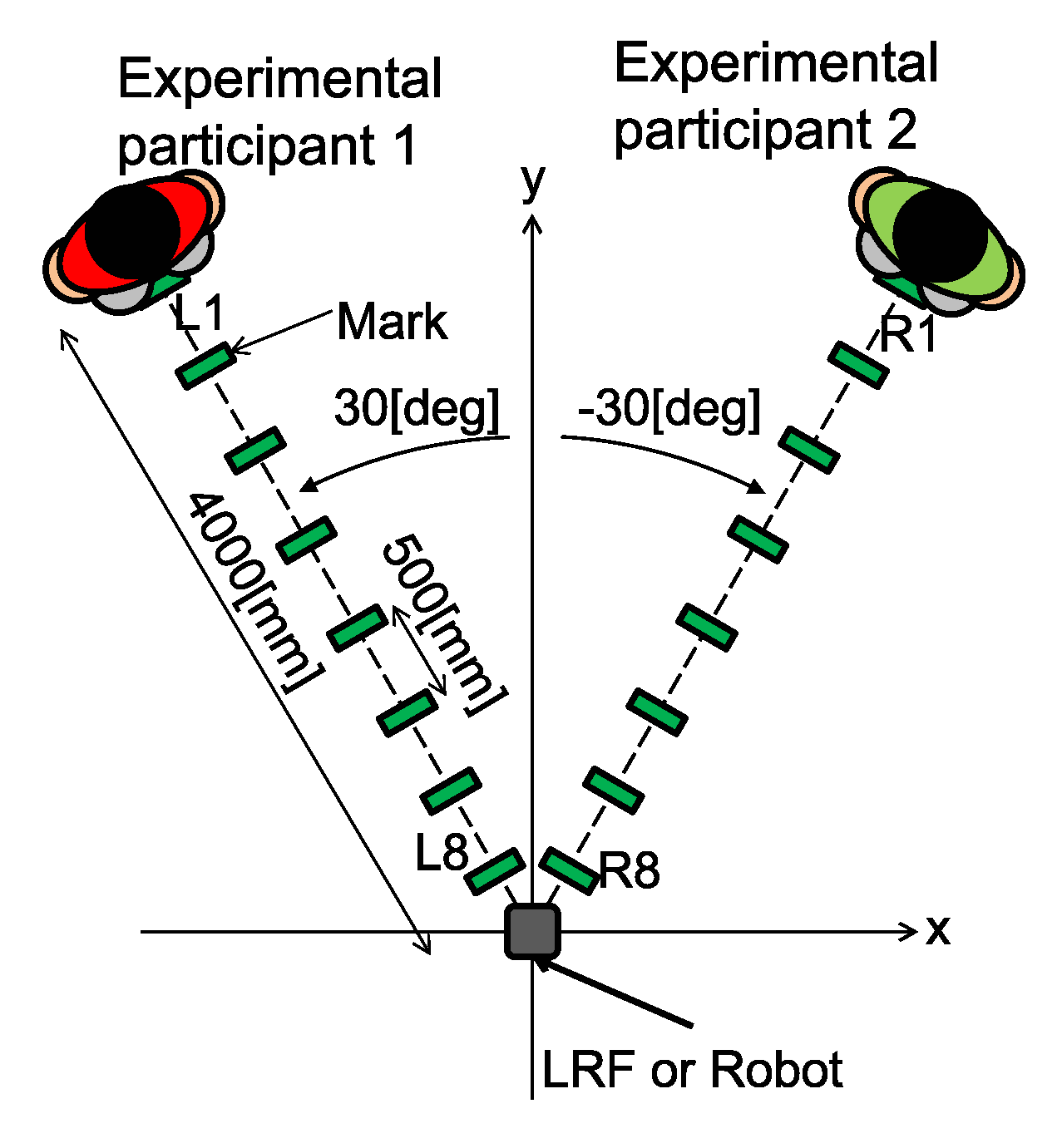

. We conducted two experiments: in the first experiment (the static condition), we observed standing persons and observed their movement; in the second experiment (the dynamic condition), the persons walked and stopped, and we observed the movement after they stopped. The experimental environment is depicted in

Figure 7. We set the LRF at the origin, and a participant stood at a position of 4000 mm from the origin with an angle of

deg. We marked every 500 mm from the initial position to the origin. In the static condition, we observed the fluctuation of the participant’s position for 10 s (i.e.,

in Equation (

1)). We repeated this observation at every mark. In the dynamic condition, the participant moved from one mark to the next one near the origin while the robot was counting. Then, we observed the movement for 30 s at every mark.

Figure 8 shows the experimental result. The bars show the average fluctuations, and the error bars show the standard deviations. The result suggests that the distance from the LRF to the person did not affect the measurement. Thus, we set a distance-independent threshold for the judgment.

Table 1 shows the average and standard deviation of the fluctuation for each condition. We set each threshold to be larger than the corresponding value in Table 1 and finally determined the threshold as 40 mm for the static condition and 80 mm for the dynamic condition.

5.3. The “Out” Judgment in the Actual Play

Next, the robot and human players actually played the game to confirm that the proposed system was sufficient for playing the game and that the “out” thresholds were adequate.

We employed two male graduate students as players. The experimental environment was the same as that of the previous experiment shown in Figure 7. We conducted three trials for each of the two thresholds. While the robot was making its first utterance, the players walked toward the robot. After the utterance, the players froze for five seconds while the robot judged whether they were “out.” The players tagged the robot in two of the six trials, one with the 40-mm threshold and the other with the 80-mm threshold.

Next, we compared the judgments made by the robot with those made by a human evaluator. Table 2 shows the number of judgments, the number of correct judgments (judgments identical to the human evaluator’s), and the accuracy. The accuracy was around 80% for both the 40-mm and 80-mm thresholds. All of the robot’s misjudgments were cases in which the robot judged a player as “out” while the human evaluator did not.

The overdetection of the players’ movement was caused by the system detecting small arm movements. The human evaluator tended to overlook arm movements, whereas the system judged strictly regardless of which body part moved.

6. Touching a Player

6.1. Overview

When a player tags the robot, the robot calls “stop”, and the players freeze. Then, the robot moves to the nearest player. If the robot can touch the player, the touched player is called “out.”

It is not easy to develop a robot that touches a human safely. There have been several works in which a robot touched a human or a human touched a robot [24,25,26,27]. The main concern of those works was the mental effect of physical contact with a robot on a person. Thus, these works used small robots (such as Nao), the contact between the robot and the human was gentle, and the robots did not move around. In contrast, in our system, the players are excited during the game, and the robot needs to approach a player and touch him or her.

To realize this play, we implemented a mechanism for touching a player. Figure 9 shows the procedure of the play until the robot touched the player.

6.2. Controlling an Extendable Arm Using an LRF

As described in Section 3.2, we used an extendable and contractible arm to touch the player, and the touch had to be safe. To ensure safety, the arm should extend exactly as far as the distance between the robot and the player, so that the arm stops moving exactly when the hand touches the player. Moreover, if the player moves his or her body and the distance to the player changes, the robot should follow the change. To realize this function, we used the LRF to measure the distance to the player; this is the same LRF used to detect and track the players.

While the robot extends its arm to touch the player, it keeps measuring the distance to the player using the LRF, as shown in Figure 10. We assumed that the distance between the robot and the player did not exceed 0.3 m. We measured the distance to the player as the average of the distances to three points: the player’s center point and the leftmost and rightmost points observed on the player.

We calculated the extension distance of the arm $d$ using the distance between the LRF and the player $d_p$ and the distance between the LRF and the front of the contracted arm $d_a$, as shown in Figure 11.
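The distance computation can be sketched in Python. The sketch assumes that the extension distance is the LRF-to-player distance minus the LRF-to-arm-front distance (our reading of Figure 11), optionally adds the 75-mm posture compensation of Section 6.4, and clamps the result to the 360-mm upper end of the range evaluated in Section 6.3; the function names and the clamping are illustrative assumptions.

```python
import math

POSTURE_OFFSET_MM = 75.0  # Section 6.4: extra reach for players bending backward
MAX_EXTENSION_MM = 360.0  # assumption: longest extension evaluated in Section 6.3


def player_distance(points):
    """Average LRF range (mm) to the player's center, leftmost, and rightmost points (x, y)."""
    if len(points) != 3:
        raise ValueError("expected exactly three points")
    return sum(math.hypot(x, y) for x, y in points) / 3.0


def extension_distance(d_player_mm, d_arm_front_mm, bend_compensation=True):
    """Assumed geometry: the arm must cover the gap between its front and the player."""
    d = d_player_mm - d_arm_front_mm
    if bend_compensation:
        d += POSTURE_OFFSET_MM
    return min(max(d, 0.0), MAX_EXTENSION_MM)  # never retract past zero or overextend
```

For example, a player 500 mm from the LRF with the arm front at 250 mm gives a 250-mm gap, or 325 mm with the posture compensation.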

We used the Dynamixel MX-64AR in multi-turn mode as the actuator of the arm. This smart actuator rotates to the angle designated by a command, which can range from −2520 to 2520 deg. The target angle for an extension distance $d$ (m) is computed from $d$ and $R$ (m), the radius of the pinion gear (Equation (2)).

While the arm is extending, the LRF measures the distance every 0.1 s and sends it to the actuator subsystem, which updates the target angle of the actuator accordingly. This process is repeated 50 times (5 s).
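For an extension distance $d$ (m) and pinion radius $R$ (m), and assuming the extension equals the arc length rolled by the pinion (that is, ignoring any motion multiplication by the telescopic stages), the target angle in degrees would be

$$\phi = \frac{180}{\pi}\,\frac{d}{R}.$$

The update loop might then look like the sketch below. The callables `read_distance` and `set_goal_angle` are hypothetical stand-ins for the LRF and actuator interfaces; no real Dynamixel driver API is used.

```python
import math
import time
from typing import Callable

ANGLE_LIMIT_DEG = 2520.0  # multi-turn range of the actuator: -2520 to 2520 deg
PERIOD_S = 0.1            # LRF scan period
ITERATIONS = 50           # 50 updates = 5 s


def target_angle_deg(d_m: float, r_m: float) -> float:
    """Assumed rack-and-pinion relation: pinion rotation (deg) for extension d_m (m)."""
    angle = math.degrees(d_m / r_m)
    return max(-ANGLE_LIMIT_DEG, min(ANGLE_LIMIT_DEG, angle))


def extend_arm(read_distance: Callable[[], float],
               set_goal_angle: Callable[[float], None],
               r_m: float, period_s: float = PERIOD_S) -> None:
    """Re-target the arm every scan so that it follows changes in the player's distance."""
    for _ in range(ITERATIONS):
        d_m = read_distance()                       # latest extension distance (m)
        set_goal_angle(target_angle_deg(d_m, r_m))  # send the new goal angle
        time.sleep(period_s)                        # wait for the next LRF scan
```

Because the goal angle is recomputed on every scan, a player who leans back simply produces a larger goal angle on the next iteration.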

6.3. Evaluation of the Arm

We measured the pushing force of the arm with a force gauge at four distances: 150, 200, 250, and 300 mm. We conducted the measurement three times and took the average. The result is shown in Table 3. As explained in Section 3.2, we designed the arm so that the pushing force did not exceed the maximum force (20 N). The result showed that the actual pushing forces were about one-tenth of this maximum.

Next, we measured the accuracy of the arm’s extension distance over the range of 60–360 mm. When we set the target angle of the actuator according to Equation (2), the actual extension distances were systematically smaller than the target distances. Thus, we added a bias to the target distance so that the actual extension distance became close to the target distance. As a result, the root-mean-square error of the extension distance was 4.7 mm, which is small enough for this purpose.
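The bias correction and the error metric can be sketched as follows, assuming a single constant bias estimated as the mean shortfall between target and measured extensions. The function names are hypothetical, and the numbers in the example are made up for illustration; they are not the measurements behind the reported 4.7-mm error.

```python
import math


def estimate_bias(targets_mm, actuals_mm):
    """Constant bias (mm): mean shortfall of the actual extension below the target."""
    return sum(t - a for t, a in zip(targets_mm, actuals_mm)) / len(targets_mm)


def corrected_target(target_mm, bias_mm):
    """Command a longer extension so the arm's systematic shortfall is cancelled."""
    return target_mm + bias_mm


def rmse(targets_mm, actuals_mm):
    """Root-mean-square error between target and actual extensions (mm)."""
    return math.sqrt(sum((t - a) ** 2 for t, a in zip(targets_mm, actuals_mm)) / len(targets_mm))
```

For instance, with made-up targets of 60, 160, 260, and 360 mm reached as 50, 151, 249, and 350 mm, the estimated bias is 10 mm; if the shortfall stays constant, commanding `corrected_target(t, 10.0)` leaves only the scatter around the mean as error.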

6.4. Considering Players’ Posture

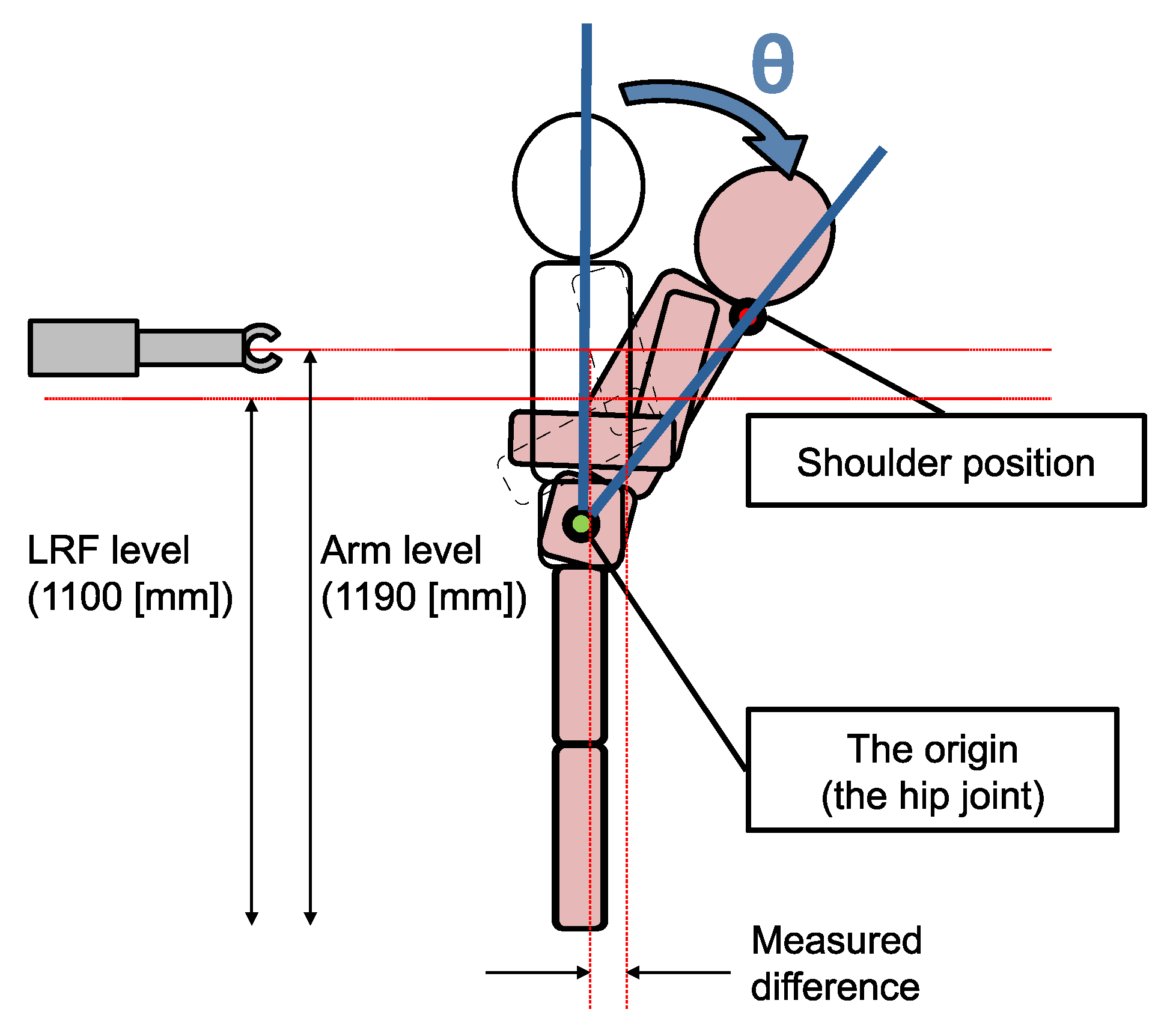

Players sometimes bent backward to avoid being touched. A possible problem is that the LRF and the arm were at different heights. Because the robot measured the distance with the LRF and extended the arm according to that measurement, a difference between the distance to the player at the LRF level and that at the arm level could prevent the arm from reaching the player. Thus, we measured the actual difference between the distances at the two levels.

One male adult participated in the experiment. The participant bent backward three times, and the positions of his shoulder and hip joint were measured. Then, as shown in Figure 12, the difference between the horizontal positions of the body at the arm level (1190 mm) and at the LRF level (1100 mm) was calculated.

As a result, the average difference between the two levels was 77.3 mm. Thus, we increased the target extension distance of the arm by 75 mm.

7. Continuous Play

7.1. Returning to the Original Position

We have participated in many events to demonstrate the proposed robot system (see Section 8.2). In these events, the game was usually played several times in a row. In the first implementation of the robot, the program finished when one game finished, and the operator needed to bring the robot back to the original position and restart the system. However, bringing the heavy robot back to the origin and restarting the system takes much time and effort. To realize a more practical system, we implemented a mechanism that makes the robot automatically return to the original position after a game finishes and start the next game.

To do this, the robot needs some means of remembering its original position, such as an environmental map or landmarks. However, it is not easy to build a map or find landmarks in a large space such as a park. Thus, we developed a method that uses the position of an operator as a landmark.

In the current operation of the robot, an operator (observer) is needed to bring the robot to the site, start the system, and stop it in case of an emergency. We therefore came up with the idea of using this observer as a landmark for returning to the origin.

Figure 13 shows an overview of the play and the return to the origin. As this figure shows, the robot first approached the nearest player, touched the player, and called “out.” Considering the average step length of an adult, we set the distance the robot moves toward the player to 4090.5 mm. Then, the robot turned around and found the observer. Here, we assumed that only the observer was near the origin at this time. The robot regarded the observer’s position as the origin and moved to that point. Finally, it turned to face the front, returning to the initial state.
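Geometrically, the return amounts to turning toward the observer, driving there, and turning back to the initial heading. The sketch below assumes the observer's position is available in the robot frame (x forward, y left, in meters) and that the robot knows its heading relative to its initial front direction, for example from odometry; `plan_return`, `wrap`, and the `standoff_m` margin (so that the robot stops short of the observer) are hypothetical.

```python
import math


def wrap(angle_rad):
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle_rad + math.pi) % (2.0 * math.pi) - math.pi


def plan_return(observer_xy, heading_rad, standoff_m=0.0):
    """Plan (turn toward observer, drive distance, final turn back to the initial front).

    observer_xy: observer position in the robot frame (x forward, y left), in meters.
    heading_rad: robot heading relative to its initial front direction (assumed known).
    standoff_m:  hypothetical margin so the robot stops short of the observer.
    """
    x, y = observer_xy
    turn_to_observer = math.atan2(y, x)
    distance = max(math.hypot(x, y) - standoff_m, 0.0)
    # After the first turn the heading is heading_rad + turn_to_observer;
    # the final turn cancels it so that the robot faces its initial front again.
    final_turn = wrap(-(heading_rad + turn_to_observer))
    return turn_to_observer, distance, final_turn
```

For example, after driving 4090.5 mm straight toward a player and turning around (a heading of π), the observer appears straight ahead at 4.0905 m, and the plan is to drive that far and then turn by π.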

7.2. Experiment

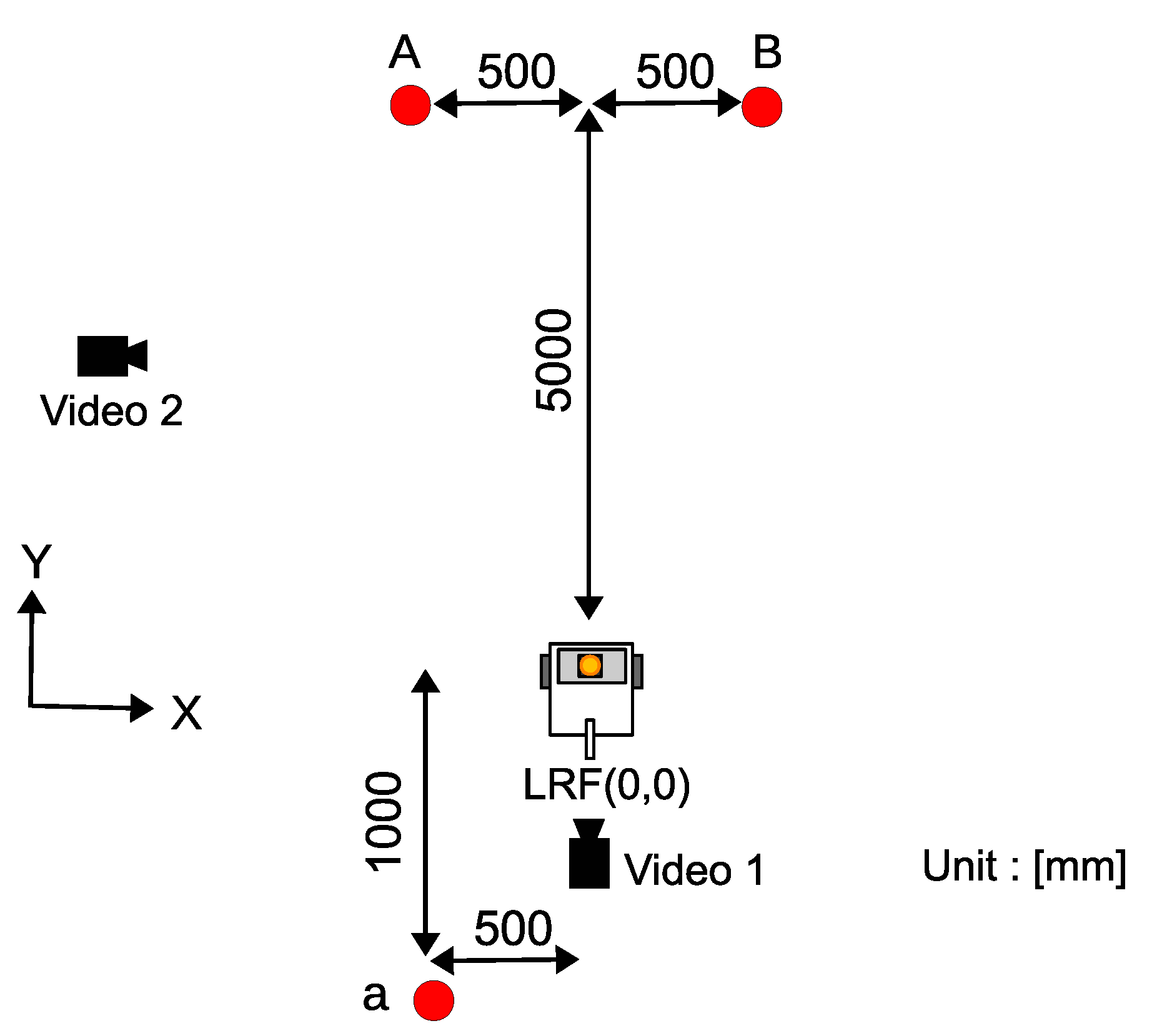

We conducted an evaluation experiment for continuous play. Two persons participated in the experiment. The experimental environment is shown in Figure 14. We used two video cameras to record the plays. The two players stood at Points A and B, respectively, and played the game three times. The observer first stood at Point A and moved to the origin when the robot moved toward a player to touch him or her. One game finished when two players were called “out” or when the robot touched a player.

As a result, the robot chanted “darumasan-ga-koronda” twelve times. In all three games, a player touched the robot, after which the robot moved to the player, touched the player, and called “out.” The robot completed the three games without any trouble.

Figure 15 shows the images captured by the two cameras. After being touched by a player, the robot detected the nearest player (Figure 15a). Then, it moved to the player (Figure 15b) and called “You are out.” After the call, the robot detected the observer at the origin (Figure 15c) and moved to that place (Figure 15d). The robot turned to the front at the origin (Figure 15e), and it started the game again (Figure 15f).

8. Evaluation of the Total System

8.1. Evaluation Experiment

Finally, we conducted an experiment to evaluate the total system. Two players participated in the experiment and played the darumasan-ga-koronda game according to the usual rules. The configuration of the playing field was the same as that shown in Figure 14. The players started 5 m from the robot and approached it to touch it. When the robot touched a player, we instructed the player to take one of three different postures, as shown in Figure 16:

- Facing away from the robot, standing straight

- Facing the robot, standing straight

- Facing the robot, bending back

We conducted three trials for each of the above three conditions. The recorded video is available as supplemental material. As a result, the robot and the human players could complete the game, and the robot could touch the player in most of the trials. In three trials, the player moved while being touched by the robot and was called “out” by the robot.

The pushing force of the robot arm was so mild that the players felt no pain. Even when the players were touched on the back, there was no risk of them falling from being pushed by the robot.

8.2. Track Records of Demonstrations in Events

The robot described here has been demonstrated at 13 events in which at least 168 people played the game with the robot. The events included open campus events and other events of Osaka Institute of Technology, Japan Robot Week 2016, the Open Challenge of the RoboCup@Home League at RoboCup 2017 Nagoya, several town events, and two TV events. Many people of various ages, genders, heights, and weights participated in the demonstrations and played the game without major problems. In addition to Japanese adults and children, children from other countries participated in Japan Robot Week 2016. At Japan Robot Week 2016, the robot operated for seven hours over the entire three-day event, and the biggest concern was the operating time of the batteries. Table 4 shows the battery operating time of each component. We prepared spare batteries for all components and changed the battery of the robot avatar every hour. To ensure that the robot operated throughout the event, we also prepared two mobile robots. As a result, the robot operated without any trouble. For the TV coverage, where failure was not an option, the robot also performed the game robustly.

9. Conclusions

This paper described the development of a robot that can play the game darumasan-ga-koronda with humans. It is difficult for conventional robots to play this game because the robot needs to track players who move suddenly, detect small movements of the players, and touch the players safely. To realize such a robot, we needed to develop technologies to detect and track the players, measure their movement, detect and move to the nearest player, touch the player, and return to the origin. In this paper, we described the techniques that were not covered in our previous papers and evaluated the total system.

Here, we would like to comment on the significance of a robot that plays a game. In the current system, we aimed for the robot to play as similarly to a human player as possible; for example, the “out” judgment threshold was determined according to human judgment. However, the strictness of the “out” judgment can be controlled. For example, the robot can use a looser threshold when playing with children, or, conversely, it can judge more strictly than a human. In addition, because the robot touches a player based on the measured distance, it extends its arm further when the player tries to avoid the touch. A novel point of our system is that it achieves this kind of “battle” interaction between the robot and a human player. Together with the “out” judgment, playing elements like this one promote active interaction between a robot and a human.

Finally, the current robot can only play the role of “it” in the game. Developing a robot that can play both “it” and the other players is a challenging task, since it would need to recognize the unusually fast chant uttered by “it” from a distance, recognize the quick turn of “it,” and so on.

Author Contributions

Y.H. initiated the research, designed the experiment, and wrote the draft of the paper. A.I. advised on the design of the experiment and analysis and finished the final paper.

Funding

Part of this work was supported by JSPS Kakenhi JP16K00363.

Acknowledgments

Yuko Nakamori conducted the experiments in the paper. Shogo Tanaka helped with the development of the arm.

Conflicts of Interest

No potential conflict of interest was reported by the authors.

References

- Trafton, J.G.; Schultz, A.C.; Perzanowski, D.; Bugajska, M.D.; Adams, W.; Cassimatis, N.L.; Brock, D.P. Children and robots learning to play hide and seek. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; pp. 242–249.

- Brooks, A.G.; Gray, J.; Hoffman, G.; Lockerd, A.; Lee, H.; Breazeal, C. Robot’s play: Interactive games with sociable machines. Comput. Entertain. 2004, 2, 10.

- Amanatiadis, A.; Kaburlasos, V.G.; Dardani, C.; Chatzichristofis, S.A. Interactive social robots in special education. In Proceedings of the 2017 IEEE 7th International Conference on Consumer Electronics, Berlin, Germany, 3–6 September 2017; pp. 126–129.

- Catlin, D.; Blamires, M. Designing Robots for Special Needs Education. Technol. Knowl. Learn. 2019, 24, 291–313.

- Kronreif, G.; Prazak, B.; Mina, S.; Kornfeld, M.; Meindl, M.; Furst, M. PlayROB-robot-assisted playing for children with severe physical disabilities. In Proceedings of the 9th International Conference on Rehabilitation Robotics, Chicago, IL, USA, 28 June–1 July 2005; pp. 193–196.

- Robins, B.; Dautenhahn, K.; Ferrari, E.; Kronreif, G.; Prazak-Aram, B.; Marti, P.; Iacono, I.; Gelderblom, G.J.; Bernd, T.; Caprino, F.; et al. Scenarios of robot-assisted play for children with cognitive and physical disabilities. Interact. Stud. 2012, 13, 189–234.

- Dautenhahn, K.; Billard, A. Issues of Robot-Human Interaction Dynamics in the Rehabilitation of Children with Autism. In Proceedings of the 6th International Conference on the Simulation of Adaptive Behavior (SAB2000), Paris, France, 11–15 September 2000; pp. 519–528.

- Robins, B.; Dautenhahn, K.; Boekhorst, R.T.; Billard, A. Robotic assistants in therapy and education of children with autism: Can a small humanoid robot help encourage social interaction skills? Univers. Access Inf. Soc. 2005, 4, 105–120.

- Boccanfuso, L.; O’Kane, J.M. CHARLIE: An Adaptive Robot Design with Hand and Face Tracking for Use in Autism Therapy. Int. J. Soc. Robot. 2011, 3, 337–347.

- Wood, L.J.; Zaraki, A.; Walters, M.L.; Novanda, O.; Robins, B.; Dautenhahn, K. The iterative development of the humanoid robot Kaspar: An assistive robot for children with autism. In International Conference on Social Robotics; Springer: Cham, Switzerland, 2017; pp. 53–63.

- Abe, K.; Hieida, C.; Attamimi, M.; Nagai, T.; Shimotomai, T.; Omori, T.; Oka, N. Toward playmate robots that can play with children considering personality. In Proceedings of the Second International Conference on Human-Agent Interaction, Tsukuba, Japan, 29–31 October 2014; pp. 165–168.

- Khaliq, A.A.; Pecora, F.; Saffiotti, A. Children playing with robots using stigmergy on a smart floor. In Proceedings of the 2016 Intl IEEE Conferences on Ubiquitous Intelligence & Computing, Advanced and Trusted Computing, Scalable Computing and Communications, Cloud and Big Data Computing, Internet of People, and Smart World Congress (UIC/ATC/ScalCom/CBDCom/IoP/SmartWorld), Toulouse, France, 18–21 July 2016; pp. 1098–1122.

- Sakai, K.; Hiroi, Y.; Ito, A. Playing with a Robot: Realization of “Red Light, Green Light” Using a Laser Range Finder. In Proceedings of the 2015 Third International Conference on Robot, Vision and Signal Processing (RVSP), Kaohsiung, Taiwan, 18–20 November 2015.

- Nakamori, Y.; Hiroi, Y.; Ito, A. Enhancement of person detection and tracking for a robot that plays with human. In Proceedings of the 2017 IEEE/SICE International Symposium on System Integration (SII), Taipei, Taiwan, 11–14 December 2017; pp. 494–499.

- Nakamori, Y.; Hiroi, Y.; Ito, A. Multiple player detection and tracking method using a laser range finder for a robot that plays with human. ROBOMECH J. 2018, 5, 25.

- Hiroi, Y.; Matsunaka, S.; Ito, A. A mobile robot system with semi-autonomous navigation using simple and robust person following behavior. J. Man Mach. Technol. 2012, 1, 44–62.

- Nishibayashi, H. Idea Book 2—Teaching Japanese for Juniors; KYOZAI–L. O. T. E. Teaching Aids: Kalamunda, Australia, 1996.

- T-frog Project. Robot Assembly kit i-Cart edu (TF-ICARTEDU2016); T-frog Project-Future Robot Groundworks from Tsukuba: Tsuchiura, Japan, 2016.

- Hiroi, Y.; Ito, A.; Nakano, E. Evaluation of robot-avatar-based user-familiarity improvement for elderly people. KANSEI Eng. Int. 2009, 8, 59–66.

- National Institute of Advanced Industrial Science and Technology. AIST Anthropometric Database 1991–92; National Institute of Advanced Industrial Science and Technology: Tokyo, Japan, 1992.

- Jeong, S.; Takahashi, T. Impact Force Reduction for Manipulators using Dynamics Acceleration Polytope and Flexible Collision Detection Sensor. Adv. Robot. 2009, 23, 367–383.

- Jeong, S.; Takahashi, T. Unified Evaluation Index of Safety and Dexterity of a Human Symbiotic Manipulator. Adv. Robot. 2013, 5, 393–405.

- International Organization for Standardization. Robots and Robotic Devices—Collaborative Robots; ISO/TS 15066:2016; International Organization for Standardization: Geneva, Switzerland, 2016.

- Hirano, T.; Shiomi, M.; Iio, T.; Kimoto, M.; Tanev, I.; Shimohara, K.; Hagita, N. How Do Communication Cues Change Impressions of Human–Robot Touch Interaction? Int. J. Soc. Robot. 2018, 10, 21–31.

- Altun, K.; MacLean, K.E. Recognizing affect in human touch of a robot. Pattern Recognit. Lett. 2015, 66, 31–40.

- Willemse, C.J.A.M.; Toet, A.; van Erp, J.B.F. Affective and Behavioral Responses to Robot-Initiated Social Touch: Toward Understanding the Opportunities and Limitations of Physical Contact in Human-Robot Interaction. Front. ICT 2017, 4, 12.

- Andreasson, R.; Alenljung, B.; Billing, E.; Lowe, R. Affective Touch in Human–Robot Interaction: Conveying Emotion to the Nao Robot. Int. J. Soc. Robot. 2018, 10, 473–491.

Figure 1.

Flowchart of the robot behavior in the game.

Figure 2.

The developed robot “Carry-PM3 with arm”.

Figure 3.

Mechanism of the arm. (a) A serial-link arm touching a player by swinging downward; (b) a linear-motion arm touching a player by pushing.

Figure 4.

Arm mechanism based on the principle of a telescopic ladder.

Figure 5.

The linear-motion arm. (a) Retracted; (b) half-extended; (c) fully extended.

Figure 7.

Experimental environment.

Figure 8.

The experimental result.

Figure 9.

The robot’s play up to touching a player. (a) While the robot is chanting, players approach it; (b) when the robot finishes chanting, players must freeze; (c) the robot finds moving players and calls “you are out!”, and each “out” player leaves the field; (d) play starts again; (e) a player touches the robot; (f) the player runs away and calls “stop”; (g) the robot moves toward the nearest player; (h) the robot touches the player by extending its arm and calls “you are out”.
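
The sequence in Figure 9 can be read as a small state machine. The sketch below is a hypothetical encoding of that sequence only: the phase names and event strings (`chant_finished`, `robot_touched`, `stop_called`, and so on) are illustrative assumptions, not the authors' flowchart (Figure 1), and events that do not apply in the current phase are simply ignored.

```python
from enum import Enum, auto


class Phase(Enum):
    CHANTING = auto()      # robot chants; players approach it (Fig. 9a, 9d)
    WATCHING = auto()      # chant over; moving players are called "out" (Fig. 9b, 9c)
    WAITING_STOP = auto()  # a player touched the robot and runs away (Fig. 9e)
    CHASING = auto()       # "stop" was called; robot drives to the nearest player (Fig. 9f, 9g)
    DONE = auto()          # robot touched a player with its arm (Fig. 9h)


# Hypothetical event names; transitions follow the Figure 9 sequence only.
TRANSITIONS = {
    (Phase.CHANTING, "chant_finished"): Phase.WATCHING,
    (Phase.CHANTING, "robot_touched"): Phase.WAITING_STOP,
    (Phase.WATCHING, "restart"): Phase.CHANTING,
    (Phase.WAITING_STOP, "stop_called"): Phase.CHASING,
    (Phase.CHASING, "player_touched"): Phase.DONE,
}


def step(phase: Phase, event: str) -> Phase:
    """Return the next phase; events that do not apply leave the phase unchanged."""
    return TRANSITIONS.get((phase, event), phase)


def run(events, start: Phase = Phase.CHANTING) -> Phase:
    """Feed a sequence of events through the state machine and return the final phase."""
    phase = start
    for event in events:
        phase = step(phase, event)
    return phase


# The Figure 9 sequence: one round with an "out" call, then a touch and a chase.
print(run(["chant_finished", "restart", "robot_touched", "stop_called", "player_touched"]))
# prints Phase.DONE
```

A lookup table keeps the allowed transitions in one place, so a missing or extra rule is easy to spot when comparing against a flowchart.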

Figure 10.

Measurement of the distance to the player using the LRF.
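
As an illustration of measuring the distance to a player with a laser range finder, the sketch below takes the closest valid return inside a narrow frontal sector of one scan. This is a simple assumed approach, not necessarily the authors' method; the scan layout (`ranges`, `angle_min`, `angle_increment`), the ±15° sector, and the 5 m cutoff are hypothetical parameters.

```python
import math
from typing import Optional, Sequence, Tuple


def nearest_in_sector(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    half_width: float = math.radians(15.0),
    max_range: float = 5.0,
) -> Optional[Tuple[float, float]]:
    """Return (distance, bearing) of the closest valid return within +/- half_width.

    ranges[i] is the distance (m) measured at bearing angle_min + i * angle_increment
    (radians, 0 = straight ahead). Zero, NaN, infinite, or out-of-range readings
    are treated as "no echo" and skipped.
    """
    best: Optional[Tuple[float, float]] = None
    for i, r in enumerate(ranges):
        bearing = angle_min + i * angle_increment
        if abs(bearing) > half_width:
            continue  # outside the frontal sector of interest
        if not (0.0 < r <= max_range):
            continue  # invalid reading (NaN also fails this comparison)
        if best is None or r < best[0]:
            best = (r, bearing)
    return best


# Five beams at -20, -10, 0, +10, +20 degrees.
scan = [2.0, 1.5, 0.0, 3.0, 1.2]
print(nearest_in_sector(scan, math.radians(-20), math.radians(10)))
```

In this example the 1.2 m return is ignored because it lies at +20°, outside the sector, and the 0.0 reading is treated as invalid, so the result is the 1.5 m return at -10°.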

Figure 11.

The lengths , and .

Figure 12.

Measurement of distance when a player is bending.

Figure 13.

Return-to-origin method. (a) The robot detects the nearest player; (b) the robot moves to the nearest player and calls “out”; (c) the robot turns 180 degrees; (d) the robot detects the observer; (e) the robot utters “I move to the observer”; (f) the robot moves to the observer and turns to the front.
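
The return-to-origin steps in Figure 13 can be traced with a toy 2D kinematic model. The sketch assumes that the robot's sensor only looks forward (a hypothetical ±90° field of view), which is why it must turn 180 degrees before it can see the observer, and that the observer stands a fixed distance behind the start position. `Pose`, `move_to`, `in_view`, and the `reach`/`gap` values are illustrative names and numbers, not taken from the paper.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pose:
    x: float        # m
    y: float        # m
    heading: float  # rad; 0 = the "front" direction facing the players (assumed)


def wrap(angle: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def move_to(pose: Pose, tx: float, ty: float, stop_distance: float) -> Pose:
    """Turn toward (tx, ty) and drive straight, stopping stop_distance short of it."""
    dx, dy = tx - pose.x, ty - pose.y
    heading = math.atan2(dy, dx)
    travel = max(0.0, math.hypot(dx, dy) - stop_distance)
    return Pose(pose.x + travel * math.cos(heading), pose.y + travel * math.sin(heading), heading)


def in_view(pose: Pose, tx: float, ty: float, half_fov: float) -> bool:
    """True if (tx, ty) lies inside a forward-facing sensor's field of view."""
    bearing = wrap(math.atan2(ty - pose.y, tx - pose.x) - pose.heading)
    return abs(bearing) <= half_fov


def return_to_origin(start: Pose, player: Tuple[float, float], observer: Tuple[float, float],
                     reach: float = 0.3, gap: float = 1.0,
                     half_fov: float = math.radians(90)) -> Pose:
    pose = move_to(start, *player, stop_distance=reach)        # (a)-(b) approach and touch
    pose = Pose(pose.x, pose.y, wrap(pose.heading + math.pi))  # (c) turn 180 degrees
    if not in_view(pose, *observer, half_fov):                 # (d) observer must be visible
        raise RuntimeError("observer not visible after turning")
    pose = move_to(pose, *observer, stop_distance=gap)         # (e)-(f) move to the observer
    return Pose(pose.x, pose.y, start.heading)                 # (f) turn to the front


final = return_to_origin(Pose(0.0, 0.0, 0.0), player=(3.0, 0.0), observer=(-1.0, 0.0))
print(final)  # approximately back at (0, 0), heading 0
```

Using the observer as a landmark means the robot does not need to integrate odometry over the whole chase; it only needs to find one known person again after turning around.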

Figure 14.

Experimental environment.

Figure 15.

Evaluation experiment of the total system. (a) Detect the nearest player. (b) Move to the nearest player. (c) Detect the observer. (d) Move to the observer. (e) Turn to the front. (f) Start the next game.

Figure 16.

Postures of a player when touched by the robot. (a) Facing away from the robot, standing straight. (b) Facing the robot, standing straight. (c) Facing the robot, bending back.

Table 1.

Average and standard deviation of the fluctuation.

| Condition | Average (mm) | Standard Deviation (mm) | Average + 2 × SD (mm) |
|---|---|---|---|
| Static | 10.9 | 9.50 | 29.9 |
| Dynamic | 37.4 | 20.8 | 79.0 |
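
In both rows of Table 1, the last column is consistent with the average plus twice the standard deviation (10.9 + 2 × 9.50 = 29.9; 37.4 + 2 × 20.8 = 79.0). The short check below reproduces that arithmetic. Reading the column as a mean + 2σ bound is an inference from the numbers; how the authors used it is not stated in this section.

```python
# (mean, standard deviation) in mm, copied from Table 1.
FLUCTUATION_MM = {"Static": (10.9, 9.50), "Dynamic": (37.4, 20.8)}


def upper_bound(mean: float, sd: float, k: float = 2.0) -> float:
    """Return mean + k * sd, rounded to 0.1 mm like the table."""
    return round(mean + k * sd, 1)


for condition, (mean, sd) in FLUCTUATION_MM.items():
    print(f"{condition}: {upper_bound(mean, sd):.1f} mm")
# Static: 29.9 mm
# Dynamic: 79.0 mm
```

If the fluctuation were normally distributed, roughly 97.7% of readings would fall below mean + 2σ. That is a modelling assumption, not a property reported for this data.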

Table 2.

The experimental result.

| | 40 mm | 80 mm |
|---|---|---|
| Number of judgments | 14 | 16 |
| Judgments matching the human measurer | 11 | 13 |
| Accuracy (%) | 78.6 | 81.3 |
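
The accuracy row of Table 2 is the number of matching judgments divided by the total number of judgments (11/14 and 13/16). The sketch reproduces it with round-half-up rounding to one decimal place. Python's built-in `round()` rounds exact ties to even and would give 81.2 for 81.25, so `decimal` is used here to match the table; the function name is illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP


def accuracy_percent(matches: int, judgments: int) -> Decimal:
    """Percentage of robot judgments that agree with the human measurer (0.1 % steps)."""
    if judgments <= 0:
        raise ValueError("judgments must be positive")
    ratio = Decimal(matches) / Decimal(judgments) * 100
    return ratio.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)


print(accuracy_percent(11, 14))  # 78.6
print(accuracy_percent(13, 16))  # 81.3
```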

Table 3.

Pushing force of the arm.

| Distance (mm) | Average (N) | St. Dev. (N) |
|---|---|---|
| 150 | 1.2 | 0.010 |
| 200 | 2.0 | 0.046 |
| 250 | 1.6 | 0.056 |
| 300 | 0.87 | 0.15 |

Table 4.

The battery operation time of the main components of the robot.

| Component | Operation Time (h) |
|---|---|
| The mobile robot | 12 |
| The robot avatar | 1.5 |
| Laptop PC | 2 |
| Loudspeaker | 12 |
| Arm | 12 |
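
If every component in Table 4 must run at the same time and none is recharged or swapped during play (an assumption, not stated in the table), the whole system can only play as long as its shortest-lived battery lasts. The sketch below picks out that limiting component.

```python
from typing import Dict, Tuple

# Operation times (h) copied from Table 4.
BATTERY_HOURS: Dict[str, float] = {
    "Mobile robot": 12.0,
    "Robot avatar": 1.5,
    "Laptop PC": 2.0,
    "Loudspeaker": 12.0,
    "Arm": 12.0,
}


def limiting_component(hours: Dict[str, float]) -> Tuple[str, float]:
    """Return (name, hours) of the component whose battery runs out first."""
    name = min(hours, key=hours.get)
    return name, hours[name]


print(limiting_component(BATTERY_HOURS))  # ('Robot avatar', 1.5)
```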

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).