Abstract

Time constraints are the most critical factor facing first-responder teams in search and rescue operations in the aftermath of natural disasters and in hazardous areas. Using robotic solutions to speed up search missions would help save people who need help as quickly as possible. With such human-robot collaboration, using autonomous robotic solutions, the first-response team will be able to locate casualties and possible victims so that emergency kits can be dropped at their locations. This paper presents the design of a vision-based neural network controller for the autonomous landing of a quadrotor on fixed and moving targets for maritime search and rescue applications. The proposed controller does not require prior information about the target location and depends entirely on the vision system to estimate the target positions. Simulations of the proposed controller are presented using the ROS Gazebo environment and are validated experimentally in the laboratory using a Parrot AR Drone system. The simulation and experimental results show the successful control of the quadrotor in autonomously landing on both fixed and moving landing platforms.

Keywords:

UAV; neural network; intelligent control; ROS; unmanned aerial vehicles; search and rescue

1. Introduction

Search and rescue (SAR) operations in hazardous and hard-to-access spots are critically constrained by time limits; any delay may result in dramatic consequences and the loss of lives. It is of utmost importance that the first-responder team finds and reaches trapped victims and persons in danger within the shortest possible time to save their lives. However, SAR missions become more complicated in challenging environments such as avalanches, oceans, forests, volcanoes, and hazardous areas, due to the irregular morphology of the environment as well as threats to the lives of the rescue personnel. Studies over the past decade have established the use of Unmanned Aerial Vehicles (UAVs) in SAR missions. A considerable amount of literature has presented system designs that combine UAVs and Unmanned Ground Vehicles (UGVs) to reach victims, referred to as targets, within the minimum possible time and at low operating costs, to assist the overstretched first responders, and to avoid putting their lives at risk. UAVs can be programmed to fly autonomously or can be manually controlled from a ground station in various applications. UAVs are agile and fast and can reach targets with minimal involvement of human operators. UAVs can operate at high altitudes and withstand strong winds and low temperatures, which makes them fit to fly under various operating conditions. With proper embedded systems and equipment, UAVs can fly during the day and night and can carry payloads such as emergency kits that can be dropped at target locations [1,2,3,4].

In disaster and hazardous areas, there are always barriers that make SAR missions more challenging when using UAVs. For example, in maritime SAR missions, the winds and the speed of the ocean currents complicate the search mission and may increase the risk of losing the victims or failing to rescue them [3,5,6]. An efficient solution is to design a UAV swarm system for SAR operations. Swarm systems have been found efficient in SAR missions when UAVs are combined with other robotic systems such as Unmanned Ground Vehicles (UGVs) and Unmanned Surface Vehicles (USVs). Several studies have proposed swarm-based solutions to overcome such challenging situations by designing cooperative and cognitive UAV swarms with dynamic search algorithms based on the last known location of the humans [7,8,9]. Reference [10] has reported an optimal cooperative cognitive dynamic search algorithm for UAVs used in SAR missions. The authors have utilized elements of game theory as an enabling function for developing a dynamic search pattern. In maritime SAR missions, UAVs are utilized to detect victims moving with the tides and waves of the ocean in the aftermath of sinking ships or burning oil tankers and oil rigs. A marine SAR system has been proposed in Reference [11] using UAVs, UGVs and USVs to locate and rescue victims in offshore marine SAR missions. The UAVs are responsible for locating and tracking moving victims in the ocean and sending the location information to the USVs and the control unit to execute the rescue procedure. A review of the control methods for multiple UAVs and the system design architectures for various UAV swarm-based applications has been reported in References [12,13]. The authors have illustrated formal methods in resilient system design that are applicable to a wide range of UAV-based applications, and more specifically to multi-UAV control in uncertain, dynamic and hazardous environments.

An artificial neural network (ANN) controller for the autonomous landing of a UAV on a ship has been presented in [14]. The authors have trained the ANN to identify the helipad corner points for landing by using a video feed from an onboard camera on the UAV and to calculate the orientation and distance to the landing spot. Simulation results were illustrated and have shown the successful control of the UAV to precisely land on the target within an accuracy of ±1%.

An adaptive sliding mode relative motion controller for the autonomous carrier landing of a fixed-wing UAV has been designed and implemented in Reference [15]. The UAV was controlled using a six-degrees-of-freedom (6-DOF) relative motion model. The controller performed well in simulation, driving the UAV along the required trajectory and attitude. An artificial neural network (ANN) controller has been designed to track an object in real time and to detect and autonomously land on a safe area without the need for markers [16]. The proposed controller estimates the attitude and computes the horizontal displacement from the landing area. Simulations were carried out and verified experimentally, showing the successful control of the UAV. In addition, an artificial neural network direct inverse control (DIC-ANN) scheme for quadrotor UAV dynamics has been presented and applied in Reference [16]. The authors presented a comparative study of the DIC-ANN and a PID controller on the UAV attitude and dynamics. The DIC-ANN controller outperformed the PID controller, and the authors concluded that an ANN controller can support the autonomous flight control of UAVs. A comprehensive method for an automatic landing assist system for fixed-wing UAVs has been proposed in Reference [17]. The method is based on identifying markers on a runway: the camera locates the markers as object points for a perspective-n-point (PnP) solution with a pose estimation algorithm. Simulations were carried out, and successful control and detection of the markers were achieved.

A novel design for the vision-based autonomous landing of a UAV on a moving platform has been reported in Reference [18]. The novelty of the presented approach lies in using onboard visual odometry and computation, without motion capture systems, for the state estimation and localization of the platform position. Additionally, the authors have stated that with their state-of-the-art computer vision algorithm, no prior information about the moving platform is required to execute the autonomous landing algorithm. The proposed algorithm has been simulated using the Gazebo and RotorS simulation framework in the Robot Operating System (ROS). The speed of the moving target was varied, and the path planning and auto-landing system was found to work correctly. The authors have also verified their proposed auto-landing system experimentally and reported successful results.

A model predictive control algorithm for the autonomous landing of a UAV on moving platforms has been reported in Reference [19]. The algorithm was designed and simulated in the MATLAB environment, followed by an experimental setup that demonstrated successful landings on moving platforms with various speeds and trajectories. The model predictive control strategy requires a predicted path of the moving target in order to land correctly on it. This is achieved via live feedback from a motion capture system, whose outputs are fed into the model predictive controller to estimate the required landing trajectory. With that experimental setup, the need for a motion capture system to estimate the landing trajectory limits the system to indoor use only.

The autonomous landing of a quadcopter on a ground vehicle moving at high speed has been reported in Reference [20]. The authors demonstrated the efficiency of their control strategy through extensive experiments with the vehicle moving at speeds of up to 50 km/h. The system architecture consists of low-cost, commercially available sensors as well as a mobile phone placed on the landing pad to transmit GPS data for the localization and estimation of the vehicle position relative to the quadcopter. A simple Proportional-Derivative (PD) controller is used for the autonomous landing mode. The controller was tuned manually during simulations and adjusted accordingly during the experiments. In addition, a Kalman filter is used to estimate the position, velocity and acceleration of the moving target from the received GPS data. A high-performance onboard computer is placed on the quadcopter to carry out the computations quickly and reliably. Collectively, it can be observed that there exists a body of literature on the autonomous landing of UAVs on stationary targets. However, autonomous landing on moving targets still poses a challenge, and research on it remains scarce [19]. In addition, most of the reported work on autonomous landing on moving targets relies on prior information from external infrastructure, such as motion capture systems for indoor navigation and localization and GPS systems for outdoor navigation and localization. Overall, these studies highlight the need for further contributions toward the autonomous landing of UAVs on moving targets.

This research aims to contribute to the growing body of UAV research by presenting a vision-based intelligent neural network controller for the autonomous landing of a UAV on static and moving targets for maritime SAR applications. The novelty of the developed controller lies in its simple yet practical design for autonomous landing on static and moving targets with no prior information about the target locations from external infrastructure. The remainder of the paper is divided into four main sections. The first presents the quadrotor system modelling, the second presents the autonomous landing controller design, the third illustrates the simulation results and, lastly, the fourth illustrates the experimental results and discussion.

2. Quadrotor System

In this section, the quadrotor system modelling is presented. The Newton–Euler derivation method is used to describe the quadrotor equations of motion, covering the translational and rotational dynamics. Table 1 provides the definitions of the variables used in modelling the quadrotor system.

Table 1.

The nomenclature.

The detailed model derivation has been presented in Reference [21] with the system dynamic equation defined as

where

and system inputs are defined as

3. Intelligent Controller Design

In this section, a vision-based hybrid intelligent controller is developed for the autonomous landing on targets. The targets are defined by April tag markers. The test-bench UAV used in this research is programmed to have three operating modes. First, the UAV is commanded to operate in the exploration mode, where it follows a predefined path to find possible targets using the downward-facing camera. Once a target is detected, the target tracking mode is activated so that the UAV tracks the target. After locating and tracking the target, the UAV enters the landing mode, where it gradually descends to the marked area.
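The switching between the three operating modes can be pictured as a small state machine. The following is a minimal sketch only, not the authors' implementation: the `tolerance` threshold that triggers the landing mode and the fallback to exploration when the marker is lost are assumptions introduced for illustration, and all names are hypothetical.

```python
from enum import Enum


class Mode(Enum):
    EXPLORATION = "exploration"
    TRACKING = "tracking"
    LANDING = "landing"


def next_mode(mode, target_visible, horizontal_error, tolerance=0.2):
    """Return the next operating mode.

    target_visible: True when the downward camera currently detects the marker.
    horizontal_error: x-y distance (m) between the UAV and the marker.
    tolerance: hypothetical radius (m) inside which descent may start.
    """
    if mode is Mode.EXPLORATION:
        # Keep following the predefined search path until a marker is seen.
        return Mode.TRACKING if target_visible else Mode.EXPLORATION
    if mode is Mode.TRACKING:
        if not target_visible:
            # Assumption: resume the search if the marker is lost.
            return Mode.EXPLORATION
        return Mode.LANDING if horizontal_error <= tolerance else Mode.TRACKING
    # Assumption: once committed to landing, keep descending.
    return Mode.LANDING
```

For example, `next_mode(Mode.TRACKING, True, 0.1)` returns `Mode.LANDING`, whereas a horizontal error of 1.0 m keeps the UAV in the tracking mode.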

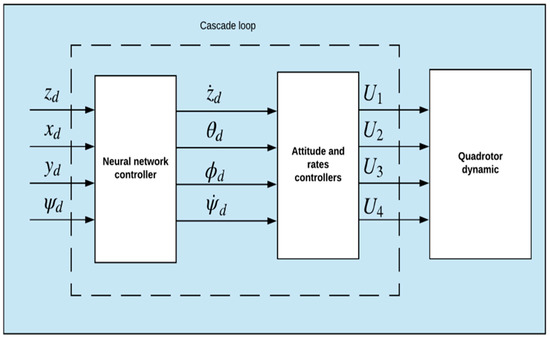

The hybrid controller consists of a neural network controller combined with a PID controller. Figure 1 presents the controller system architecture. The neural network controller is developed in an inner-outer loop scheme with a PID controller for the autonomous landing on the target. At this stage of the research, the PID controller is tuned heuristically using the Ziegler-Nichols tuning method. To solve the previous control problem, we calculate the kinematic control vector:

Figure 1.

The control system architecture.

This minimizes the error vector, defined as

The neural network controller is a three-layer feedforward neural network and the output vector is given by the following equations:

where and are the hidden and output layer vectors respectively, and are the hidden and output layer weight matrices, respectively. The activation function is a sigmoid function and is defined as

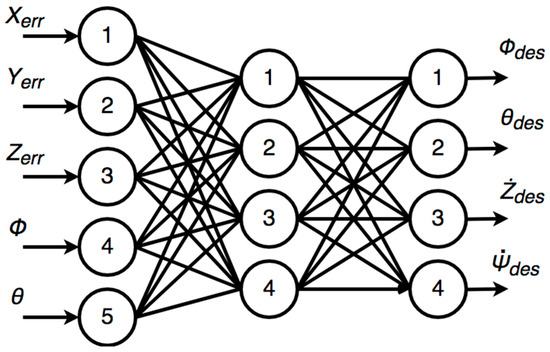

The weights are obtained by using a supervised learning mechanism; the training process aims to minimize the error between the user input commands and the kinematic output vector of the neural network depending on the quadrotor’s state. The neural network is illustrated in Figure 2.
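The forward propagation of such a three-layer network can be sketched in plain Python. This is an illustrative sketch under stated assumptions: the hidden layer uses the logistic sigmoid, the output layer is taken to be linear, bias terms are omitted because the text mentions only weight matrices, and the weight values are arbitrary placeholders rather than trained values.

```python
import math


def sigmoid(x):
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hidden, w_output):
    """Input layer -> sigmoid hidden layer -> linear output layer.

    x: input vector (for example, position errors to the target).
    w_hidden: hidden-layer weight matrix, one row per hidden neuron.
    w_output: output-layer weight matrix, one row per output.
    Returns (hidden, output) as lists of floats.
    """
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = [sum(w * h for w, h in zip(row, hidden)) for row in w_output]
    return hidden, output


# Placeholder weights: 2 inputs, 2 hidden neurons, 1 output.
hidden, output = forward([0.0, 0.0], [[1.0, -1.0], [0.5, 2.0]], [[1.0, 1.0]])
```

With a zero input, each hidden neuron evaluates to `sigmoid(0) = 0.5`, so the single output in this toy case is 1.0.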

Figure 2.

The neural network layers.

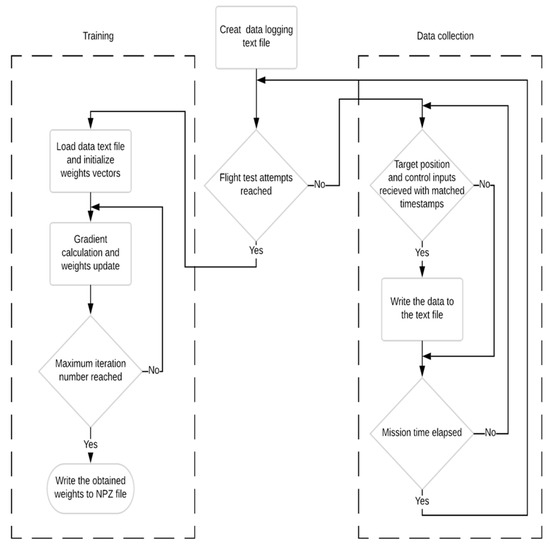

The training process is performed as follows:

● Data Collection

The quadrotor is operated and controlled manually to hover and land on the target with different initial starting positions and orientations. During the data collection, data synchronization is applied to ensure that every state change is caused by the captured control command.

● Training Phase

The backpropagation algorithm is used to calculate the gradient in every iteration of gradient descent in order to minimize the error vector. Figure 3 illustrates the data collection and training phases of the neural network controller.
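The data synchronization step mentioned under Data Collection can be illustrated with a timestamp-matching routine. The pairing rule used here (each state sample is matched with the latest command issued at or before it) is an assumption made for illustration; the paper does not specify the exact scheme, and the function name is hypothetical.

```python
from bisect import bisect_right


def synchronize(states, commands):
    """Pair each state sample with the latest command issued at or before it.

    states: list of (timestamp, state) tuples sorted by timestamp.
    commands: list of (timestamp, command) tuples sorted by timestamp.
    Returns a list of (state, command) training pairs.
    """
    command_times = [t for t, _ in commands]
    pairs = []
    for t_state, state in states:
        idx = bisect_right(command_times, t_state) - 1
        if idx >= 0:  # skip states recorded before the first command
            pairs.append((state, commands[idx][1]))
    return pairs
```

States logged before any command was issued are dropped, so that every retained pair links a state to a command that had actually been captured by that time.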

Figure 3.

The neural network training phase flowchart.
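The training phase can be sketched as per-sample gradient descent with backpropagation. This is a toy illustration, not the authors' training package: the network size, learning rate, number of epochs, linear output layer, absence of bias terms and the three training samples are all assumptions chosen to keep the example short.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train(samples, n_hidden=4, learning_rate=0.1, epochs=2000, seed=0):
    """Fit a sigmoid-hidden, linear-output network by gradient descent.

    samples: list of (input_vector, target_vector) pairs.
    Returns (w_hidden, w_output, losses); losses holds the summed
    squared error of each epoch.
    """
    rng = random.Random(seed)
    n_in, n_out = len(samples[0][0]), len(samples[0][1])
    w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    w_output = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, target in samples:
            # Forward pass.
            h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
            y = [sum(w * hj for w, hj in zip(row, h)) for row in w_output]
            err = [yk - tk for yk, tk in zip(y, target)]
            total += sum(e * e for e in err)
            # Backward pass: propagate the output error to the hidden layer
            # before any weight is changed.
            delta_h = [
                sum(err[k] * w_output[k][j] for k in range(n_out)) * h[j] * (1.0 - h[j])
                for j in range(n_hidden)
            ]
            for k in range(n_out):
                for j in range(n_hidden):
                    w_output[k][j] -= learning_rate * err[k] * h[j]
            for j in range(n_hidden):
                for i in range(n_in):
                    w_hidden[j][i] -= learning_rate * delta_h[j] * x[i]
        losses.append(total)
    return w_hidden, w_output, losses


# Hypothetical samples: position error (x, y) -> one velocity command.
samples = [([1.0, 0.0], [1.0]), ([0.0, 1.0], [-1.0]), ([1.0, 1.0], [0.0])]
w_hidden, w_output, losses = train(samples)
```

The list `losses` makes it easy to check that the error decreases over the epochs; in practice the samples would be the synchronized state and command pairs recorded during manual flights.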

4. Simulation Results

In this section, the simulation scenario and results are presented. The Robot Operating System (ROS) framework was used with the Gazebo simulator to simulate the developed controller [22]. The workstation was a PC running the Ubuntu Linux operating system with a 2.4 GHz i5 processor, 6 GB of RAM, and an NVIDIA GeForce 840M graphics card.

In ROS, multiple programs, called nodes, can run simultaneously to control the system, exchanging messages over topics so that the output of one node can be used as feedback by another. Hence, the simulation software can be divided into the following code packages:

- A controller package: contains the neural network controller forward propagation implementation.

- Data collection package: to perform data logging and synchronization of the captured data.

- Training package: trains the neural network model with the collected data and obtains the weight matrices.

- Manual operation: drives the quadrotor manually using the keyboard to land on targets for data collection.

- ARdrone autonomy package: an open-source package that receives the input commands from the controller package and sends them to the ARdrone model plugin, which is described in the Unified Robot Description Format (URDF) inside the Gazebo simulator.

- Ar_track_alvar package: an open-source package that estimates the position of the landing pad from the markers in the downward camera feed; it calculates the distance to the marker and the x, y, z coordinates of the detected landing pad.

- TUM_simulator package: a package developed by the TUM UAV research group that contains the ARdrone URDF files and the sensor plugins for the IMU, cameras, and sonar of the ARdrone.

All the previous packages are implemented as ROS nodes that can communicate with each other using specified topics in a publisher-subscriber pattern.
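The publisher-subscriber pattern can be illustrated without ROS by a toy in-process message bus. The sketch below is not the ROS API: the `Bus` class, the topic names and the proportional gain are hypothetical stand-ins, and the proportional rule merely takes the place of the neural network controller.

```python
from collections import defaultdict


class Bus:
    """Toy in-process stand-in for topic-based messaging (not the ROS API)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        for callback in self._subscribers[topic]:
            callback(message)


def make_controller(bus, gain=0.5):
    """Subscribe to target positions and publish velocity commands."""

    def on_target(position):
        # Placeholder for the neural network controller: a proportional command.
        bus.publish("cmd_vel", tuple(gain * p for p in position))

    bus.subscribe("target_position", on_target)


bus = Bus()
received = []
bus.subscribe("cmd_vel", received.append)    # stands in for the drone driver
make_controller(bus)
bus.publish("target_position", (1.0, -2.0, 0.0))  # stands in for the tracker
```

After the final call, `received` holds the single command `(0.5, -1.0, 0.0)`. The tracker, controller and driver never call each other directly; they are coupled only through topic names, which is what allows the simulator node to be swapped for the real drone.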

Remark 1: For the experimental part, all the previous packages are used except the TUM_simulator package, which is replaced by the real drone that communicates with the laptop via a Wi-Fi link.

Autonomous Landing on a Fixed Target

As previously mentioned, the quadrotor is programmed to have three operating modes: the exploration, target tracking, and autonomous landing modes. In this simulation scenario, the quadrotor takes off from an initial starting position and then starts the exploration mode with a predefined search pattern until a target is detected. Once the target markers are identified, the quadrotor starts the tracking mode, in which the Ar_Track_alvar package estimates the position and orientation of the target. In the autonomous landing phase, the neural network controller then uses the published target positions to drive the drone to land precisely on the target.

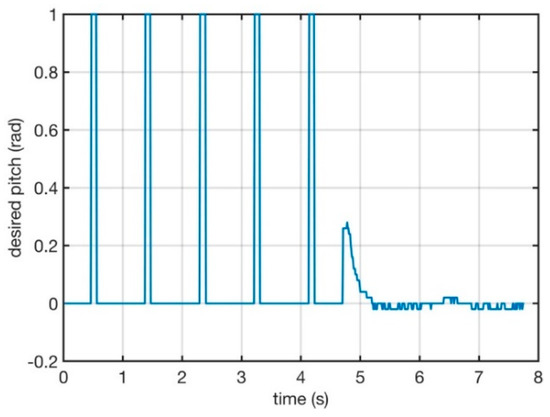

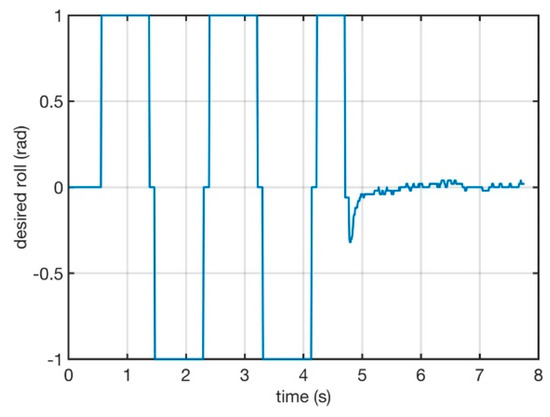

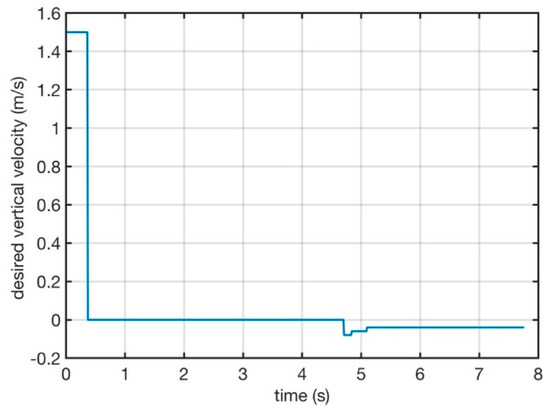

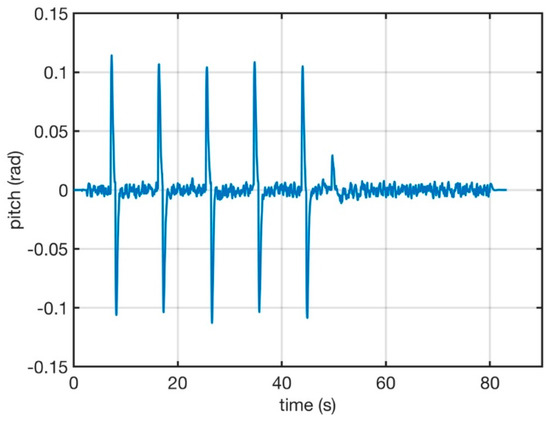

For the exploration mode, the drone is commanded to follow a predefined trajectory using reference angles. Figure 4 and Figure 5 illustrate the pitch angle and the roll angle references, respectively. In addition, the vertical velocity reference is presented in Figure 6. The controller has to drive the quadrotor system towards the given reference signals to explore the area and to detect the landing platform.

Figure 4.

The pitch angle reference signal.

Figure 5.

The roll angle reference signal.

Figure 6.

The vertical velocity reference signal.

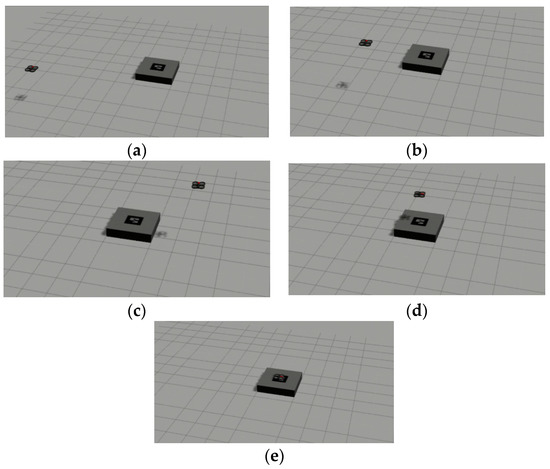

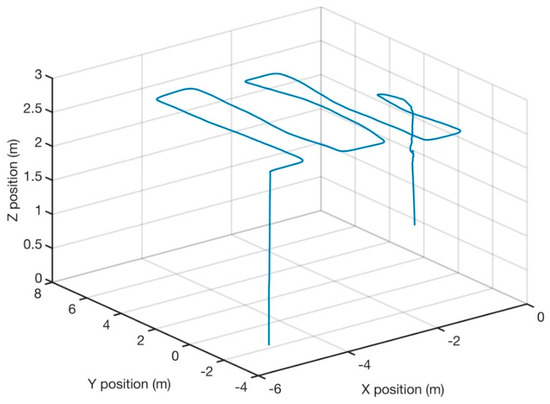

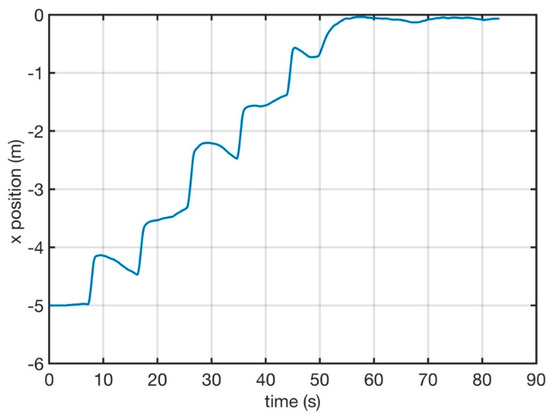

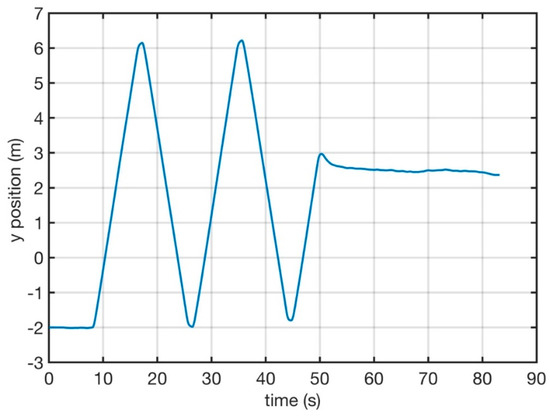

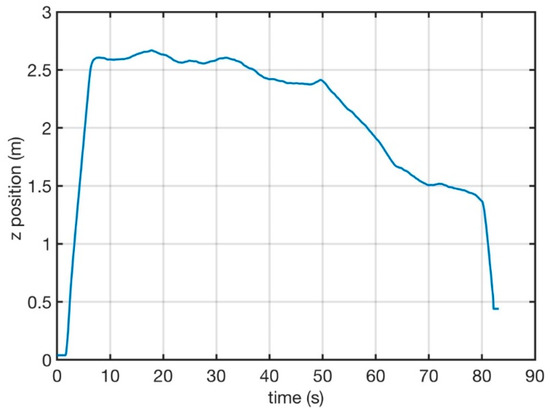

The simulation resulted in a successful landing on the fixed target, as shown in Figure 7. The resulting 3D exploration trajectory of the quadrotor is illustrated in Figure 8, showing that the controller is able to drive the quadrotor to the defined reference angles. Furthermore, Figure 9 and Figure 10 present the convergence of the x and y positions, respectively, to the target position after the detection of the landing platform. The convergence of the quadrotor altitude is illustrated in Figure 11, which shows the gradual descent of the quadrotor to the target platform. Hence, the designed intelligent controller has used the published target positions from the target tracking package to drive the drone to land successfully on the target position.

Figure 7.

The autonomous landing on a fixed target simulation in a Gazebo environment showing the start of the simulation in (a) until landing on the target in (e).

Figure 8.

The 3D trajectory of the quadrotor.

Figure 9.

The X position of the quadrotor.

Figure 10.

The Y position of the quadrotor.

Figure 11.

The Z position of the quadrotor.

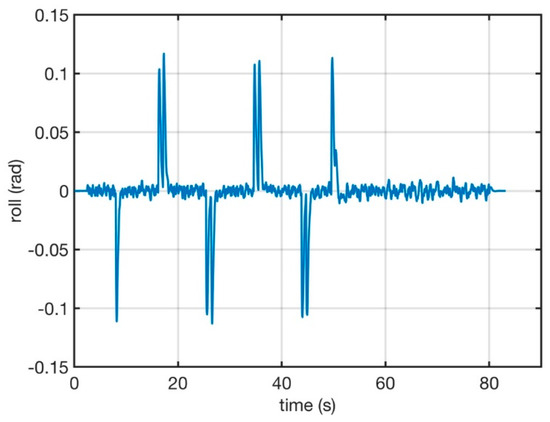

The simulated roll and pitch angles are presented in Figure 12 and Figure 13, respectively, and show that the angles are bounded and that the coupling caused by the gyroscopic effect has been successfully eliminated. It can be observed that the controller rapidly brings the system angles back to equilibrium, thus demonstrating the agility of the drone with the proposed controller.

Figure 12.

The roll angle evolution.

Figure 13.

The pitch angle evolution.

5. Experimental Validation and Results

Autonomous Landing on a Moving Target

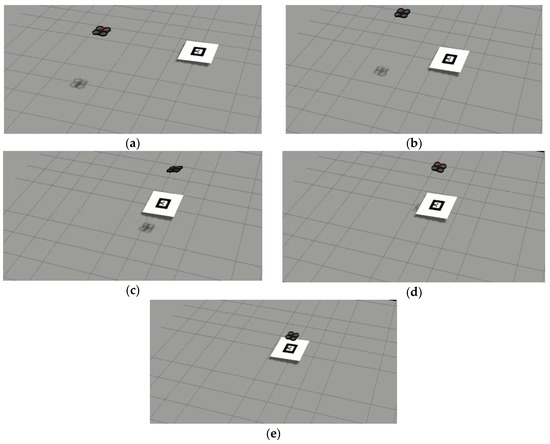

In this simulation scenario, the quadrotor is simulated to track and land on a moving target. The moving target is assumed to be a landing pad on a mobile robot that is moving in a straight line. The quadrotor takes off and then starts the autonomous exploration mode to find the target. Once the target is detected, the target tracking package is activated to estimate the target’s position relative to the quadrotor’s position and thus calculates the x,y,z position points of the target that are fed into the system controller. As a first step, the system has been simulated in a Gazebo environment and the quadrotor was able to land successfully on the landing pad as shown in Figure 14.

Figure 14.

The autonomous landing on a moving target simulation in a Gazebo environment showing the start of the simulation in (a) until landing on the target in (e).

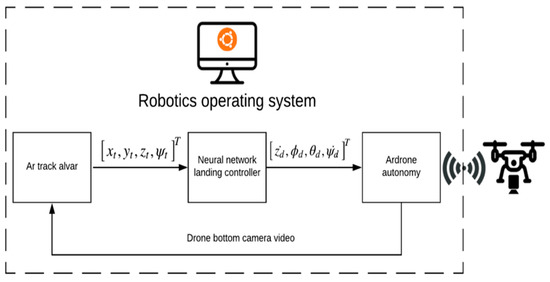

To demonstrate the feasibility of the proposed autonomous landing controller, an experimental setup was carried out using a Parrot© AR Drone quadrotor with ROS packages to conduct flight tests for autonomous landing on a moving platform in the real world. The target was placed on an iRobot Create mobile robot platform to enable a programmed motion of the target and the flight tests have been conducted in an indoor environment with the experimental setup illustrated in Figure 15.

Figure 15.

The experimental setup diagram with a real quadrotor system.

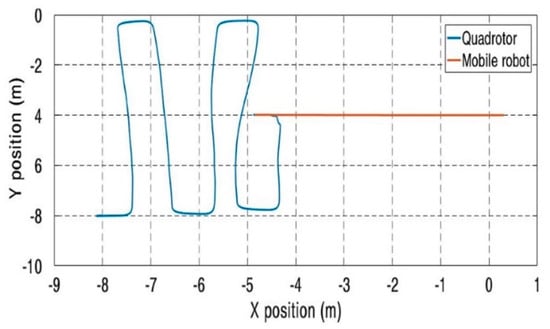

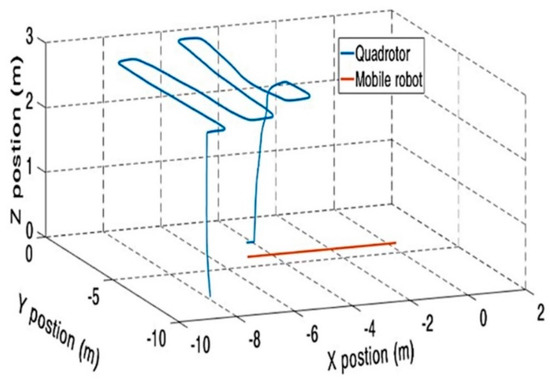

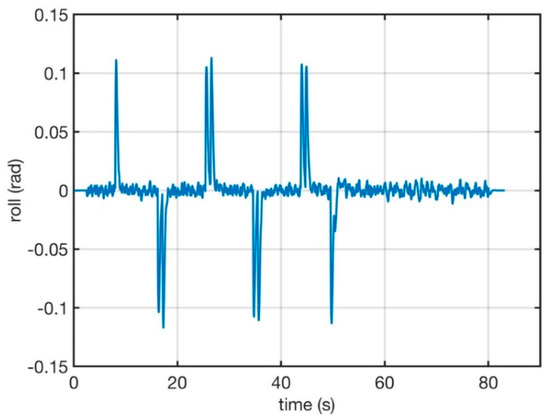

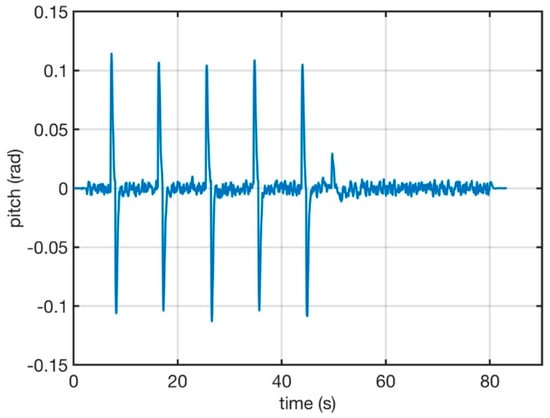

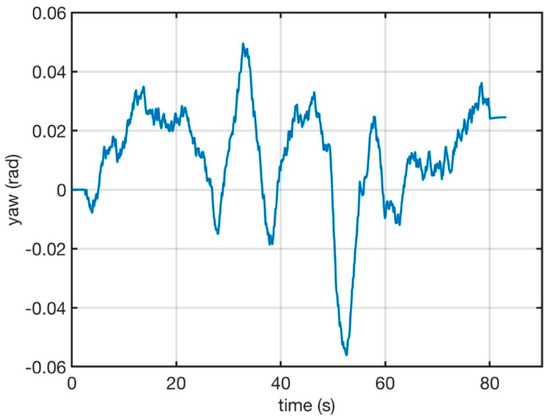

The flight data have been recorded and fed over the wireless connection into the computer running ROS and the recorded 2D and 3D trajectories of the quadrotor and the mobile robot are presented in Figure 16 and Figure 17, respectively, showing the successful landing on the moving target. In addition, the roll, pitch and yaw angles are presented in Figure 18, Figure 19 and Figure 20, respectively. It can be noted that the quadrotor has taken off and followed the predefined exploration trajectory to search for the moving landing platform whilst the tracking algorithm is executed. The platform was then detected and the quadrotor started to follow a gradual descending trajectory for a smooth landing on the moving platform. The recorded angles of the quadrotor system are bounded, showing the robustness and feasibility of the proposed intelligent controller.

Figure 16.

The moving target 2D trajectory.

Figure 17.

The moving target 3D trajectory.

Figure 18.

The roll angle evolution.

Figure 19.

The pitch angle evolution.

Figure 20.

The yaw angle evolution.

6. Conclusions

Aiming to contribute towards the design of autonomous landing controllers for UAVs on moving targets in SAR applications, this research presents a vision-based intelligent neural network controller for autonomous landing on fixed and moving landing platforms. The proposed controller requires neither prior information about the target positions nor external localization infrastructure; it relies mainly on the vision system to locate the target during the exploration phase. Simulations were carried out in a Gazebo environment, and the results demonstrated the feasibility of the implemented controller. In addition, the controller has been implemented on an AR Drone quadrotor to validate the design experimentally. The recorded flight data and results confirm the feasibility of the implemented intelligent controller to autonomously drive the quadrotor to land on a moving target. Notwithstanding the limited flight scenarios in this research, the work shows that a simple neural-network-based controller can be used to land on fixed and moving targets. Further flight scenarios will be considered as future work to evaluate the performance and effectiveness of the proposed control system under various operating conditions.

Author Contributions

Conceptualization, A.A.; Data curation, M.R.A.; Methodology, A.A. and M.R.A.; Resources, A.A. and M.R.A.; Visualization, A.A.; Writing—review & editing, M.R.A.

Funding

The experimental setup has been carried out at the robotics research laboratory in the college of technological studies of the Public Authority for Applied Education and Training (PAAET), State of Kuwait.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sampedro, C.; Rodriguez-Ramos, A.; Bavle, H.; Carrio, A.; de la Puente, P.; Campoy, P. A Fully-Autonomous Aerial Robot for Search and Rescue Applications in Indoor Environments using Learning-Based Techniques. J. Intell. Robot. Syst. Theory Appl. 2018, 1–27.

- Gue, I.H.V.; Chua, A.Y. Development of a Fuzzy GS-PID Controlled Quadrotor for Payload Drop Missions. J. Telecommun. Electron. Comput. Eng. 2018, 10, 55–58.

- Hayat, S.; Yanmaz, E.; Brown, T.X.; Bettstetter, C. Multi-objective UAV path planning for search and rescue. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 5569–5574.

- Waharte, S.; Trigoni, N. Supporting Search and Rescue Operations with UAVs. In Proceedings of the 2010 International Conference on Emerging Security Technologies, Canterbury, UK, 6–7 September 2010.

- Skinner, S.W.; Urdahl, S.; Harrington, T.; Balchanos, M.G.; Garcia, E.; Mavris, D.N. UAV Swarms for Migration Flow Monitoring and Search and Rescue Mission Support. In Proceedings of the 2018 AIAA Information Systems Infotech @ Aerospace, Kissimmee, FL, USA, 8–12 January 2018; pp. 1–13.

- Wu, Y.; Sui, Y.; Wang, G. Vision-Based Real-Time Aerial Object Localization and Tracking for UAV Sensing System. IEEE Access 2017, 5, 23969–23978.

- Fawaz, W.; Atallah, R.; Assi, C.; Khabbaz, M. Unmanned Aerial Vehicles as Store-Carry-Forward Nodes for Vehicular Networks. IEEE Access 2017, 5, 23710–23718.

- Vanegas, F.; Campbell, D.; Roy, N.; Gaston, K.J.; Gonzalez, F. UAV tracking and following a ground target under motion and localisation uncertainty. In Proceedings of the 2017 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2017; pp. 4–11.

- Abu-jbara, K.; Alheadary, W.; Sundaramorthi, G.; Claudel, C. A Robust Vision-Based Runway Detection and Tracking Algorithm for Automatic UAV Landing. Master's Thesis, King Abdullah University of Science and Technology, Jeddah, Saudi Arabia, 2015; pp. 1148–1157.

- Rahmes, M.D.; Chester, D.; Hunt, J.; Chiasson, B. Optimizing cooperative cognitive search and rescue UAVs. In Proceedings of the Autonomous Systems: Sensors, Vehicles, Security, and the Internet of Everything, Orlando, FL, USA, 16–18 April 2018; p. 31.

- De Cubber, G.; Doroftei, D.; Serrano, D.; Chintamani, K.; Sabino, R.; Ourevitch, S. The EU-ICARUS project: Developing assistive robotic tools for search and rescue operations. In Proceedings of the IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Linkoping, Sweden, 21–26 October 2013.

- Yeong, S.P.; King, L.M.; Dol, S.S. A Review on Marine Search and Rescue Operations Using Unmanned Aerial Vehicles. World Acad. Sci. Eng. Technol. Int. J. Mech. Aerospace Ind. Mechatron. Manuf. Eng. 2015, 9, 396–399.

- Madni, A.M.; Sievers, M.W.; Humann, J.; Ordoukhanian, E.; Boehm, B.; Lucero, S. Formal Methods in Resilient Systems Design: Application to Multi-UAV System-of-Systems Control. Discip. Converg. Syst. Eng. Res. 2018, 407–418.

- Moriarty, P.; Sheehy, R.; Doody, P. Neural networks to aid the autonomous landing of a UAV on a ship. In Proceedings of the 2017 28th Irish Signals and Systems Conference (ISSC), Killarney, Ireland, 20–21 June 2017; pp. 6–9.

- Zheng, Z.; Jin, Z.; Sun, L.; Zhu, M. Adaptive Sliding Mode Relative Motion Control for Autonomous Carrier Landing of Fixed-wing Unmanned Aerial Vehicles. IEEE Access 2017, 4, 5556–5565.

- Din, A.; Bona, B.; Morrissette, J.; Hussain, M.; Violante, M.; Naseem, F. Embedded low power controller for autonomous landing of small UAVs using neural network. In Proceedings of the 10th International Conference on Frontiers of Information Technology, Islamabad, Pakistan, 17–19 December 2012; pp. 196–203.

- Ruchanurucks, M.; Rakprayoon, P.; Kongkaew, S. Automatic Landing Assist System Using IMU+PnP for Robust Positioning of Fixed-Wing UAVs. J. Intell. Robot. Syst. Theory Appl. 2018, 90, 189–199.

- Falanga, D.; Zanchettin, A.; Simovic, A.; Delmerico, J.; Scaramuzza, D. Vision-based autonomous quadrotor landing on a moving platform. In Proceedings of the IEEE International Symposium on Safety, Security and Rescue Robotics, Shanghai, China, 11–13 October 2017; pp. 11–13.

- Mendoza Chavez, G. Autonomous Landing on Moving Platforms. Ph.D. Thesis, King Abdullah University of Science and Technology, Jeddah, Saudi Arabia, 2016.

- Borowczyk, A.; Nguyen, D.-T.; Nguyen, A.P.-V.; Nguyen, D.Q.; Saussié, D.; le Ny, J. Autonomous Landing of a Multirotor Micro Air Vehicle on a High Velocity Ground Vehicle. IFAC 2017, 50, 10488–10494.

- Bouabdallah, S. Design and Control of Quadrotors with Application to Autonomous Flying. École Polytech. Fédérale Lausanne À La Fac. Des Sci. Tech. L’Ingénieur 2007, 3727, 61.

- Furrer, F.; Burri, M.; Achtelik, M.; Siegwart, R. Robot Operating System (ROS): The Complete Reference (Volume 1); Springer: Berlin, Germany, 2016; Volume 2.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).