Field Evaluation of an Autonomous Mobile Robot for Navigation and Mapping in Forest

Abstract

1. Introduction

| First Author | Ref. | Year | Robotic System | Autonomy | Onboard Sensors | Approach for Tree Trait Estimation | Output |
|---|---|---|---|---|---|---|---|
| Tang | [16] | 2015 | Skid-steered robot | ✗ | LiDAR (FARO Focus3D 120S) | Improved maximum likelihood estimation [29] | 2D tree locations |
| Pierzchala | [18] | 2018 | Skid-steered robot (Superdroid 4WD) | ✗ | LiDAR (Velodyne VLP-16) | Point cloud-based (RANSAC plane-fitting, DBSCAN clustering, circle fitting) | 3D map, DBH |
| Tremblay | [17] | 2020 | Skid-steered robot (Clearpath Husky) | ✗ | LiDAR (Velodyne HDL32E) | Point cloud-based (manual segmentation, cylinder fitting) | 3D map, DBH |
| Da Silva | [30] | 2021 | Skid-steered robot | ✗ | Camera (GoPro Hero6, FLIR M232, ZED Stereo, Allied Mako G-125) | Image-based (deep learning) | Tree bounding boxes |
| Da Silva | [31] | 2022 | Skid-steered robot | ✗ | Camera (OAK-D) | Image and depth-based (deep learning) | 2D tree locations |
| Freißmuth | [28] | 2024 | Quadruped robot (ANYmal) | ✔ | LiDAR (Velodyne VLP-16, Hesai QT64) | Point cloud-based (CSF, Voronoi-based clustering, cylinder fitting) | 3D map, DBH, height |
| Malladi | [20] | 2024 | Quadruped robot (ANYmal) | ✗ | LiDAR (Velodyne VLP-16) | Point cloud-based (CSF, density-based clustering, cylinder fitting) | 3D map, DBH |
| Sheng | [19] | 2024 | Skid-steered robot (AgileX Scout Mini) | ✗ | LiDAR (Velodyne VLP-16) | Point cloud-based (CSF, Euclidean clustering, circle fitting) | 3D map, DBH |
| Proposed approach | | 2025 | Skid-steered robot (AgileX Scout 2.0) | ✔ | LiDAR (Velodyne VLP-16), camera (Intel RealSense D435) | Based on images and LiDAR scans (deep learning, LiDAR data projection, DBSCAN clustering) | 3D map, 3D tree locations, DBH |

- An autonomous navigation approach for a wheeled mobile robot operating in a forestry environment;

- A method for detecting tree locations and estimating trunk diameters by combining point cloud data with an image-based artificial neural network;

- The experimental validation of the proposed robotic system and the tree parameter estimation approach in a wooded area.

2. Materials and Methods

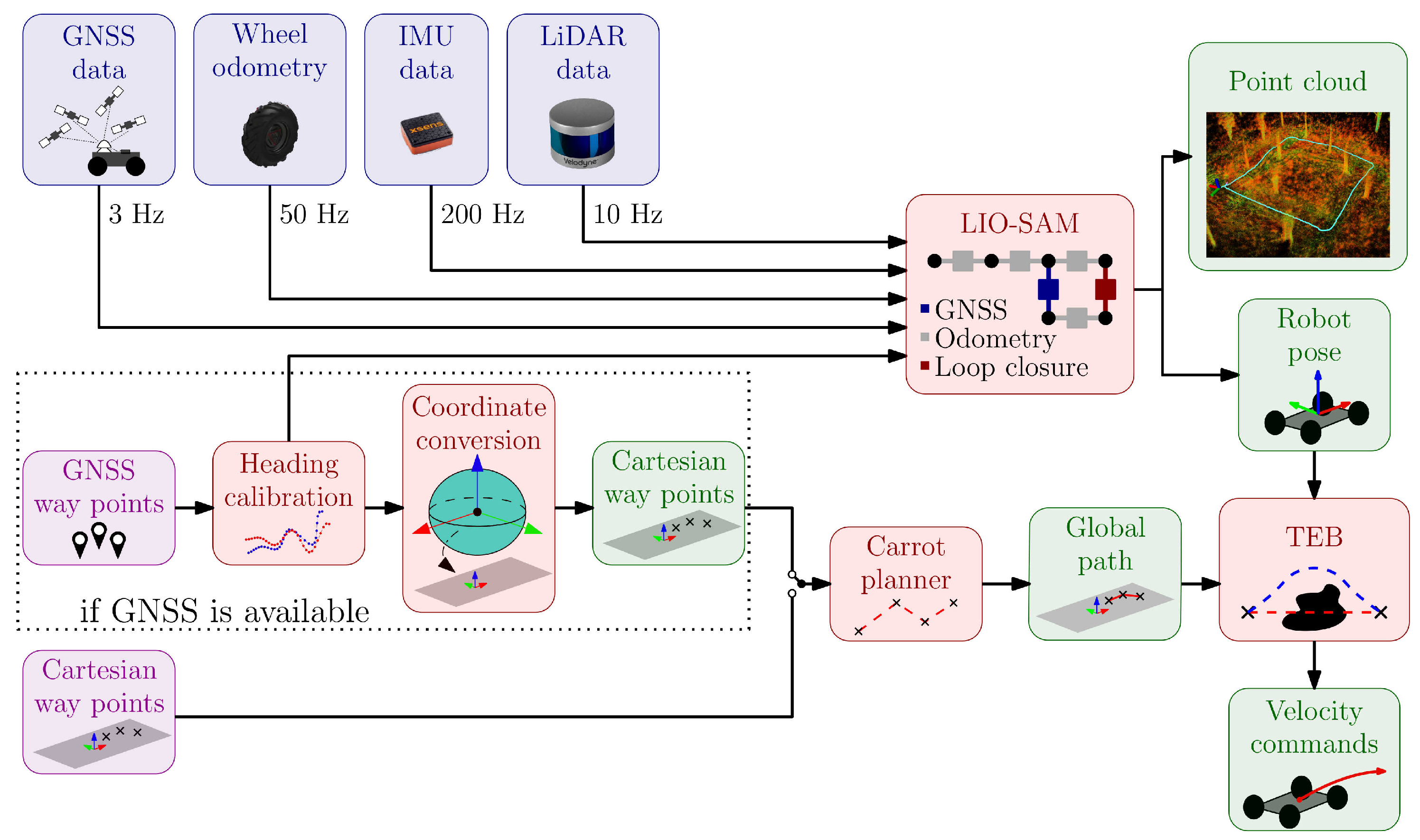

2.1. Autonomous Navigation and 3D Mapping

2.2. Vision-Based Tree Trait Identification

Algorithm 1. Tree detection and DBH estimation pipeline.
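The steps of the algorithm listing are not reproduced in this text, so the following is only a minimal sketch of the general idea named in the comparison table (LiDAR data projection into the image, followed by DBSCAN clustering), not the authors' implementation. It assumes the LiDAR points are already expressed in the camera frame, uses a rectangular box as a stand-in for the network output, and takes the lateral extent of the largest cluster as a crude proxy for the trunk diameter. All function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), the box, and the `eps` and `min_pts` values are hypothetical.

```python
import math

def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (x right, y down, z forward)."""
    x, y, z = point
    if z <= 0.0:  # behind the camera: not visible
        return None
    return (fx * x / z + cx, fy * y / z + cy)

def points_in_box(points, box, fx, fy, cx, cy):
    """Keep the 3D points whose projection falls inside a 2D detection box."""
    u_min, v_min, u_max, v_max = box
    kept = []
    for p in points:
        uv = project(p, fx, fy, cx, cy)
        if uv is not None and u_min <= uv[0] <= u_max and v_min <= uv[1] <= v_max:
            kept.append(p)
    return kept

def dbscan(points, eps, min_pts):
    """Minimal O(n^2) DBSCAN on the horizontal (x, z) plane; label -1 means noise."""
    def neighbours(i):
        xi, _, zi = points[i]
        return [j for j, (xj, _, zj) in enumerate(points)
                if math.hypot(xi - xj, zi - zj) <= eps]

    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisional noise, may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point: border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbours(j)
            if len(nbrs) >= min_pts:  # j is a core point: keep expanding
                queue.extend(nbrs)
    return labels

def trunk_width(points, labels):
    """Lateral extent (m) of the largest cluster: a crude stand-in for a diameter fit."""
    ids = [l for l in labels if l >= 0]
    if not ids:
        return None
    best = max(set(ids), key=ids.count)
    xs = [p[0] for p, l in zip(points, labels) if l == best]
    return max(xs) - min(xs)

# Synthetic example (all values hypothetical): a 0.30 m trunk about 3 m ahead,
# sampled on its camera-facing half at three heights, plus two clutter points.
R = 0.15
angles = [-math.pi / 2 + k * math.pi / 8 for k in range(9)]
trunk = [(R * math.sin(a), y, 3.0 - R * math.cos(a))
         for a in angles for y in (-0.2, 0.0, 0.2)]
clutter = [(0.05, 0.0, 8.0), (1.0, 0.0, 3.0)]
box = (285, 150, 355, 330)  # (u_min, v_min, u_max, v_max) in pixels
kept = points_in_box(trunk + clutter, box, fx=600, fy=600, cx=320, cy=240)
labels = dbscan(kept, eps=0.1, min_pts=3)
width = trunk_width(kept, labels)
print(f"{len(kept)} points in box, estimated width {width:.2f} m")
```

In this toy scene the far background point projects inside the box but is rejected as noise by the clustering step, which is the reason for clustering the projected points rather than using every point that falls inside the detection. A real pipeline would additionally need the LiDAR-to-camera extrinsic calibration and a more robust diameter fit.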

2.3. Experimental Setup

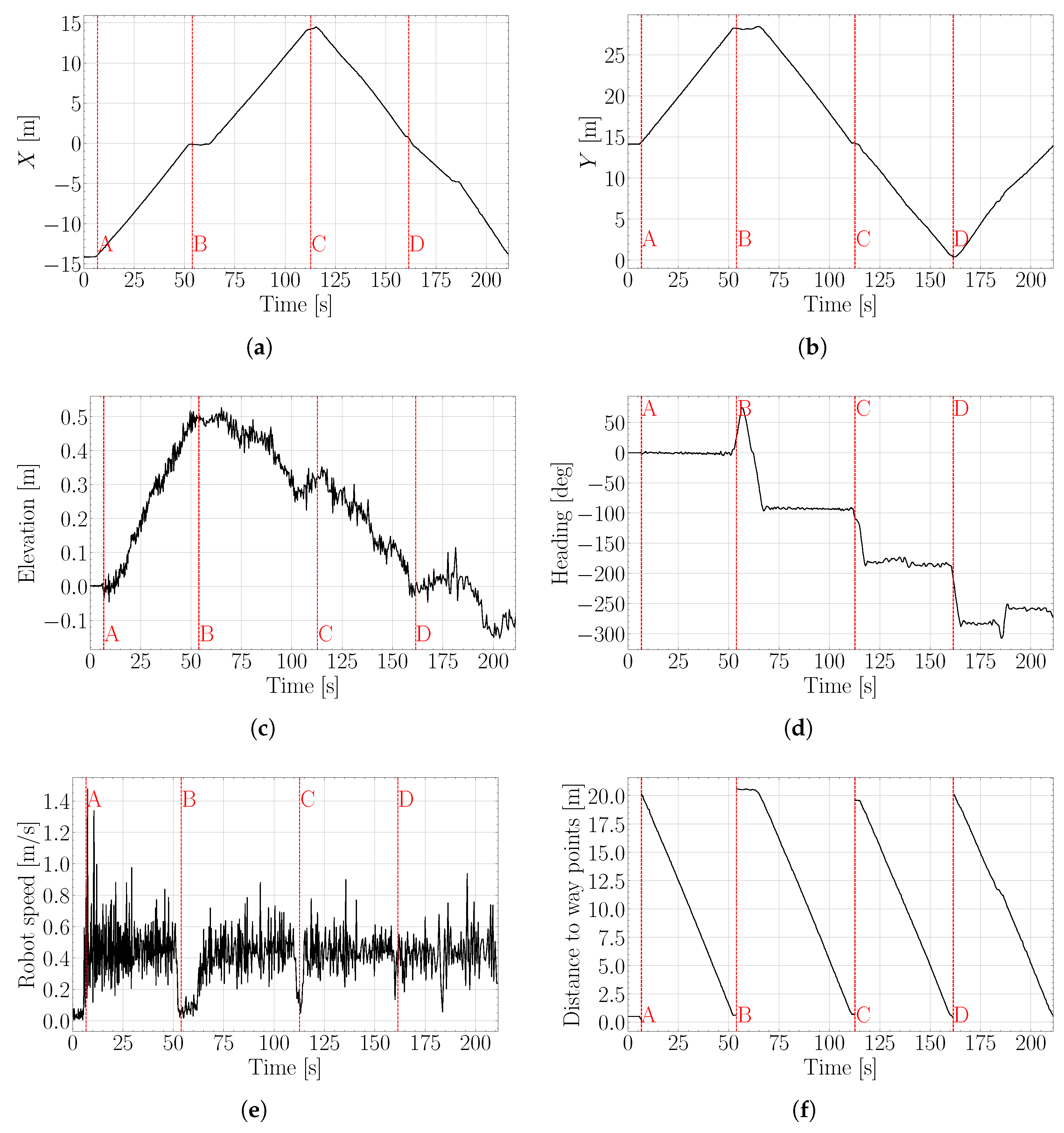

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CSF | Cloth Simulation Filter |
| DBH | Diameter at breast height |
| DBSCAN | Density-Based Spatial Clustering of Applications with Noise |
| DHD | Directed Hausdorff distance |
| ECEF | Earth-centered, Earth-fixed coordinate system |
| ENU | East–north–up |
| GNSS | Global Navigation Satellite System |
| IMU | Inertial measurement unit |
| LiDAR | Light detection and ranging |
| LIO-SAM | Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping |
| PDOP | Position Dilution of Precision Proxy |
| PLS | Portable laser scanner |
| RANSAC | Random Sample Consensus |
| RTK | Real-time kinematic |
| SLAM | Simultaneous localization and mapping |
| SfM | Structure from motion |
| TLS | Terrestrial laser scanning |
| WGS 84 | World Geodetic System 1984 |

References

1. De Frenne, P.; Lenoir, J.; Luoto, M.; Scheffers, B.R.; Zellweger, F.; Aalto, J.; Ashcroft, M.B.; Christiansen, D.M.; Decocq, G.; De Pauw, K.; et al. Forest microclimates and climate change: Importance, drivers and future research agenda. Glob. Change Biol. 2021, 27, 2279–2297.
2. Liang, J.; Crowther, T.W.; Picard, N.; Wiser, S.; Zhou, M.; Alberti, G.; Schulze, E.D.; McGuire, A.D.; Bozzato, F.; Pretzsch, H.; et al. Positive biodiversity-productivity relationship predominant in global forests. Science 2016, 354, aaf8957.
3. Xu, Z.; Shen, X.; Cao, L. Extraction of forest structural parameters by the comparison of Structure from Motion (SfM) and Backpack Laser Scanning (BLS) point clouds. Remote Sens. 2023, 15, 2144.
4. Liu, T.; Sun, Y.; Wang, C.; Zhang, Y.; Qiu, Z.; Gong, W.; Lei, S.; Tong, X.; Duan, X. Unmanned Aerial Vehicle and artificial intelligence revolutionizing efficient and precision sustainable forest management. J. Clean. Prod. 2021, 311, 127546.
5. Ferreira, J.F.; Portugal, D.; Andrada, M.E.; Machado, P.; Rocha, R.P.; Peixoto, P. Sensing and artificial perception for robots in precision forestry: A survey. Robotics 2023, 12, 139.
6. Wilkes, P.; Disney, M.; Armston, J.; Bartholomeus, H.; Bentley, L.; Brede, B.; Burt, A.; Calders, K.; Chavana-Bryant, C.; Clewley, D.; et al. TLS2trees: A scalable tree segmentation pipeline for TLS data. Methods Ecol. Evol. 2023, 14, 3083–3099.
7. Krisanski, S.; Taskhiri, M.S.; Gonzalez Aracil, S.; Herries, D.; Muneri, A.; Gurung, M.B.; Montgomery, J.; Turner, P. Forest structural complexity tool—An open source, fully-automated tool for measuring forest point clouds. Remote Sens. 2021, 13, 4677.
8. Åkerblom, M.; Kaitaniemi, P. Terrestrial laser scanning: A new standard of forest measuring and modelling? Ann. Bot. 2021, 128, 653–662.
9. Maset, E.; Cucchiaro, S.; Cazorzi, F.; Crosilla, F.; Fusiello, A.; Beinat, A. Investigating the performance of a handheld mobile mapping system in different outdoor scenarios. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 43, 103–109.
10. Liu, L.; Zhang, A.; Xiao, S.; Hu, S.; He, N.; Pang, H.; Zhang, X.; Yang, S. Single tree segmentation and diameter at breast height estimation with mobile LiDAR. IEEE Access 2021, 9, 24314–24325.
11. Henrich, J.; van Delden, J.; Seidel, D.; Kneib, T.; Ecker, A.S. TreeLearn: A deep learning method for segmenting individual trees from ground-based LiDAR forest point clouds. Ecol. Inform. 2024, 84, 102888.
12. Kim, D.H.; Ko, C.U.; Kim, D.G.; Kang, J.T.; Park, J.M.; Cho, H.J. Automated segmentation of individual tree structures using deep learning over LiDAR point cloud data. Forests 2023, 14, 1159.
13. Zhu, R.; Chen, L.; Chai, G.; Chen, M.; Zhang, X. Integrating extraction framework and methods of individual tree parameters based on close-range photogrammetry. Comput. Electron. Agric. 2023, 215, 108411.
14. Maset, E.; Scalera, L.; Beinat, A.; Visintini, D.; Gasparetto, A. Performance investigation and repeatability assessment of a mobile robotic system for 3D mapping. Robotics 2022, 11, 54.
15. Tiozzo Fasiolo, D.; Scalera, L.; Maset, E.; Gasparetto, A. Towards autonomous mapping in agriculture: A review of supportive technologies for ground robotics. Robot. Auton. Syst. 2023, 169, 104514.
16. Tang, J.; Chen, Y.; Kukko, A.; Kaartinen, H.; Jaakkola, A.; Khoramshahi, E.; Hakala, T.; Hyyppä, J.; Holopainen, M.; Hyyppä, H. SLAM-aided stem mapping for forest inventory with small-footprint mobile LiDAR. Forests 2015, 6, 4588–4606.
17. Tremblay, J.F.; Béland, M.; Gagnon, R.; Pomerleau, F.; Giguère, P. Automatic three-dimensional mapping for tree diameter measurements in inventory operations. J. Field Robot. 2020, 37, 1328–1346.
18. Pierzchała, M.; Giguère, P.; Astrup, R. Mapping forests using an unmanned ground vehicle with 3D LiDAR and graph-SLAM. Comput. Electron. Agric. 2018, 145, 217–225.
19. Sheng, Y.; Zhao, Q.; Wang, X.; Liu, Y.; Yin, X. Tree diameter at breast height extraction based on mobile laser scanning point cloud. Forests 2024, 15, 590.
20. Malladi, M.V.; Guadagnino, T.; Lobefaro, L.; Mattamala, M.; Griess, H.; Schweier, J.; Chebrolu, N.; Fallon, M.; Behley, J.; Stachniss, C. Tree instance segmentation and traits estimation for forestry environments exploiting LiDAR data collected by mobile robots. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 17933–17940.
21. Zhang, X.; Liu, Y.; Liu, J.; Chen, X.; Xu, R.; Ma, W.; Zhang, Z.; Fu, S. An autonomous navigation system with a trajectory prediction-based decision mechanism for rubber forest navigation. Sci. Rep. 2024, 14, 29495.
22. Ni, J.; Chen, Y.; Tang, G.; Cao, W.; Yang, S.X. An Integration Model of Blind Spot Estimation and Traversable Area Detection for Indoor Robots. IEEE Sens. J. 2025, 25, 17850–17866.
23. Ni, J.; Chen, Y.; Zhang, Z.; Zhao, Y.; Yang, S.X. An Improved Fuzzy Decision and Geometric Inference Based Indoor Layout Estimation Model from RGB-D Images. IEEE Trans. Instrum. Meas. 2025, 74, 5015115.
24. Ali, M.; Jardali, H.; Roy, N.; Liu, L. Autonomous navigation, mapping and exploration with gaussian processes. In Proceedings of the Robotics: Science and Systems (RSS), Daegu, Republic of Korea, 10–14 July 2023.
25. Gasparino, M.V.; Sivakumar, A.N.; Liu, Y.; Velasquez, A.E.; Higuti, V.A.; Rogers, J.; Tran, H.; Chowdhary, G. Wayfast: Navigation with predictive traversability in the field. IEEE Robot. Autom. Lett. 2022, 7, 10651–10658.
26. Fahnestock, E.; Fuentes, E.; Prentice, S.; Vasilopoulos, V.; Osteen, P.R.; Howard, T.; Roy, N. Far-Field Image-Based Traversability Mapping for A Priori Unknown Natural Environments. IEEE Robot. Autom. Lett. 2025, 10, 6039–6046.
27. Pollayil, M.J.; Angelini, F.; de Simone, L.; Fanfarillo, E.; Fiaschi, T.; Maccherini, S.; Angiolini, C.; Garabini, M. Robotic monitoring of forests: A dataset from the EU habitat 9210* in the Tuscan Apennines (central Italy). Sci. Data 2023, 10, 845.
28. Freißmuth, L.; Mattamala, M.; Chebrolu, N.; Schaefer, S.; Leutenegger, S.; Fallon, M. Online tree reconstruction and forest inventory on a mobile robotic system. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 11765–11772.
29. Tang, J.; Chen, Y.; Niu, X.; Wang, L.; Chen, L.; Liu, J.; Shi, C.; Hyyppä, J. LiDAR scan matching aided inertial navigation system in GNSS-denied environments. Sensors 2015, 15, 16710–16728.
30. da Silva, D.Q.; Dos Santos, F.N.; Sousa, A.J.; Filipe, V. Visible and thermal image-based trunk detection with deep learning for forestry mobile robotics. J. Imaging 2021, 7, 176.
31. da Silva, D.Q.; dos Santos, F.N.; Filipe, V.; Sousa, A.J.; Oliveira, P.M. Edge AI-based tree trunk detection for forestry monitoring robotics. Robotics 2022, 11, 136.
32. Arslan, A.E.; Inan, M.; Çelik, M.F.; Erten, E. Estimations of forest stand parameters in open forest stand using point cloud data from Terrestrial Laser Scanning, Unmanned Aerial Vehicle and aerial LiDAR data. Eur. J. For. Eng. 2022, 8, 46–54.
33. Proudman, A.; Ramezani, M.; Digumarti, S.T.; Chebrolu, N.; Fallon, M. Towards real-time forest inventory using handheld LiDAR. Robot. Auton. Syst. 2022, 157, 104240.
34. Laino, D.; Cabo, C.; Prendes, C.; Janvier, R.; Ordonez, C.; Nikonovas, T.; Doerr, S.; Santin, C. 3DFin: A software for automated 3D forest inventories from terrestrial point clouds. For. Int. J. For. Res. 2024, 97, 479–496.
35. Wołk, K.; Tatara, M.S. A review of semantic segmentation and instance segmentation techniques in forestry using LiDAR and imagery data. Electronics 2024, 13, 4139.
36. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 30, 5105–5114.
37. Xiang, B.; Wielgosz, M.; Kontogianni, T.; Peters, T.; Puliti, S.; Astrup, R.; Schindler, K. Automated forest inventory: Analysis of high-density airborne LiDAR point clouds with 3D deep learning. Remote Sens. Environ. 2024, 305, 114078.
38. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475.
39. Cai, Z.; Vasconcelos, N. Cascade R-CNN: High quality object detection and instance segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1483–1498.
40. Grondin, V.; Fortin, J.M.; Pomerleau, F.; Giguère, P. Tree detection and diameter estimation based on deep learning. For. Int. J. For. Res. 2022, 96, 264–276.
41. Zhou, S.; Xi, J.; McDaniel, M.W.; Nishihata, T.; Salesses, P.; Iagnemma, K. Self-supervised learning to visually detect terrain surfaces for autonomous robots operating in forested terrain. J. Field Robot. 2012, 29, 277–297.
42. Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled LiDAR Inertial Odometry via Smoothing and Mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 5135–5142.
43. Tiozzo Fasiolo, D.; Scalera, L.; Maset, E. Comparing LiDAR and IMU-based SLAM approaches for 3D robotic mapping. Robotica 2023, 41, 2588–2604.
44. Sorkine-Hornung, O.; Rabinovich, M. Least-squares rigid motion using SVD. Computing 2017, 1, 1–5.
45. Rösmann, C.; Hoffmann, F.; Bertram, T. Integrated online trajectory planning and optimization in distinctive topologies. Robot. Auton. Syst. 2017, 88, 142–153.
46. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.
47. Tiozzo Fasiolo, D.; Maset, E.; Scalera, L.; Macaulay, S.; Gasparetto, A.; Fusiello, A. Combining LiDAR SLAM and deep learning-based people detection for autonomous indoor mapping in a crowded environment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 447–452.
48. Schubert, E.; Sander, J.; Ester, M.; Kriegel, H.P.; Xu, X. DBSCAN revisited, revisited: Why and how you should (still) use DBSCAN. ACM Trans. Database Syst. (TODS) 2017, 42, 1–21.
49. Satopaa, V.; Albrecht, J.; Irwin, D.; Raghavan, B. Finding a “kneedle” in a haystack: Detecting knee points in system behavior. In Proceedings of the 2011 31st International Conference on Distributed Computing Systems Workshops, Minneapolis, MN, USA, 20–24 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 166–171.
50. Koide, K.; Oishi, S.; Yokozuka, M.; Banno, A. General, single-shot, target-less, and automatic LiDAR-camera extrinsic calibration toolbox. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 11301–11307.

| Test | Global Path [m] | Robot Path Length [m] | Survey Duration [s] | Number of Points in the 3D Map | Number of Trees Detected | DBH [cm] |
|---|---|---|---|---|---|---|
| Test (2) | 10 × 10 | 43.2 | 112 | 2,609,866 | 16 | |
| Test (3) | 15 × 15 | 66.4 | 187 | 4,552,286 | 21 | |
| Test (4) | 20 × 20 | 83.1 | 211 | 5,556,149 | 17 | |
| Test (5) | 15 × 10 | 89.2 | 317 | 2,834,634 | 24 | |
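As a reading aid for the survey table, the sketch below derives two quantities that the table itself does not report: the mean travel speed (path length divided by survey duration) and the number of map points per metre of path. The input values are copied from the table. The function names are hypothetical, and because the survey duration may include stops or processing time, the speed should be read only as a survey-level average.

```python
# Values copied from the survey table: (path length [m], duration [s], map points).
surveys = {
    "Test (2)": (43.2, 112, 2_609_866),
    "Test (3)": (66.4, 187, 4_552_286),
    "Test (4)": (83.1, 211, 5_556_149),
    "Test (5)": (89.2, 317, 2_834_634),
}

def mean_speed(length_m, duration_s):
    """Average travel speed in m/s over a whole survey."""
    return length_m / duration_s

def points_per_metre(n_points, length_m):
    """Map points accumulated per metre of travelled path."""
    return n_points / length_m

# Derived quantities per test: (mean speed [m/s], points per metre).
summary = {
    name: (mean_speed(length, duration), points_per_metre(points, length))
    for name, (length, duration, points) in surveys.items()
}

for name, (speed, density) in summary.items():
    print(f"{name}: {speed:.2f} m/s, {density:,.0f} points/m")
```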

| Tree ID | X | Y | DBH [cm] |
|---|---|---|---|
| 0 | 2.57 | 3.80 | 34 |
| 1 | −0.75 | 6.67 | 20 |
| 2 | 2.85 | 6.13 | 21 |
| 3 | 7.43 | 5.30 | 30 |
| 4 | 8.31 | 3.29 | 31 |
| 5 | 10.57 | 10.16 | 20 |
| 6 | 11.88 | 13.13 | 13 |
| 7 | 10.37 | 15.60 | 59 |
| 8 | 7.16 | 13.86 | 29 |
| 9 | −1.64 | 17.65 | 29 |
| 10 | −4.69 | 19.28 | 25 |
| 11 | −6.60 | 11.38 | 19 |
| 12 | −2.28 | 9.46 | 14 |
| 13 | −7.19 | 7.21 | 48 |
| 14 | −6.46 | 1.26 | 17 |
| 15 | −4.90 | 3.95 | 29 |

| Tree ID | X | Y | DBH [cm] |
|---|---|---|---|
| 0 | 2.86 | 3.28 | 37 |
| 1 | 0.70 | 6.62 | 17 |
| 2 | 8.65 | 2.85 | 32 |
| 3 | 8.44 | 6.98 | 4 |
| 4 | 11.54 | 8.71 | 18 |
| 5 | 12.11 | 12.67 | 18 |
| 6 | 10.46 | 15.82 | 27 |
| 7 | 6.26 | 13.04 | 13 |
| 8 | −0.6 | 17.44 | 23 |
| 9 | −0.74 | 22.18 | 22 |
| 10 | −0.73 | 25.83 | 31 |
| 11 | −4.7 | 26.41 | 13 |
| 12 | −8.36 | 22.8 | 25 |
| 13 | −4.16 | 19.91 | 22 |
| 14 | −1.84 | 20.85 | 14 |
| 15 | −10.02 | 16.71 | 34 |
| 16 | −5.84 | 11.86 | 19 |
| 17 | −12.73 | 13.1 | 15 |
| 18 | −4.43 | 4.8 | 29 |
| 19 | −4.89 | 2.85 | 11 |
| 20 | 5.76 | 0.13 | 30 |

| Tree ID | X | Y | DBH [cm] |
|---|---|---|---|
| 0 | −9.64 | 22.92 | 24 |
| 1 | −5.44 | 18.84 | 14 |
| 2 | −1.21 | 31.25 | 20 |
| 3 | 2.01 | 37.91 | 10 |
| 4 | −4.58 | 35.17 | 10 |
| 5 | 8.92 | 24.24 | 34 |
| 6 | 12.39 | 18.37 | 23 |
| 7 | 11.3 | 15.04 | 24 |
| 8 | 15.8 | 15.36 | 19 |
| 9 | 6.25 | 0.18 | 21 |
| 10 | 0.86 | −1.53 | 17 |
| 11 | −3.92 | 2.05 | 40 |
| 12 | −5.27 | 6.66 | 22 |
| 13 | −2.34 | 8.86 | 29 |
| 14 | −15.48 | 11.8 | 23 |
| 15 | −15.6 | 18.82 | 15 |
| 16 | −19.26 | 16.23 | 10 |

| Tree ID | X | Y | DBH [cm] |
|---|---|---|---|
| 0 | −8.07 | −5.44 | 36 |
| 1 | −5.16 | −7.47 | 91 |
| 2 | −5.75 | −8.47 | 21 |
| 3 | −11.11 | −15.1 | 27 |
| 4 | −2.76 | −11.25 | 22 |
| 5 | 1.39 | −13.2 | 23 |
| 6 | −1.19 | −6.79 | 36 |
| 7 | 1.53 | −3.95 | 20 |
| 8 | 5.7 | 0.07 | 24 |
| 10 | 10.86 | −2.96 | 24 |
| 12 | 8.77 | −14.37 | 18 |
| 13 | −3.58 | −19.71 | 27 |
| 14 | 5.52 | −18.71 | 19 |
| 15 | 4.73 | −24.45 | 27 |
| 16 | 10.18 | −23.8 | 14 |
| 17 | 17.99 | −5.84 | 23 |
| 18 | 17.79 | −10.34 | 6 |
| 19 | 15.32 | −10.25 | 9 |
| 20 | −2.5 | −1.27 | 34 |
| 21 | −0.44 | 5.29 | 24 |
| 22 | −5.19 | 3.32 | 58 |
| 23 | −3.15 | 5.76 | 78 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tiozzo Fasiolo, D.; Scalera, L.; Maset, E.; Gasparetto, A. Field Evaluation of an Autonomous Mobile Robot for Navigation and Mapping in Forest. Robotics 2025, 14, 89. https://doi.org/10.3390/robotics14070089
