Exploiting a Variable-Sized Map and Vicinity-Based Memory for Dynamic Real-Time Planning of Autonomous Robots

Abstract

1. Introduction

- A unified autonomous navigation system that dynamically adapts to complex environments with robust obstacle avoidance. Its low computational overhead enables onboard processing for visual odometry, local mapping, and trajectory planning without requiring prior knowledge of the environment or reliance on complex sensors.

- An adaptive local ESDF map whose size and resolution are adjusted dynamically according to vehicle velocity, enabling high-frequency updates for efficient and responsive path planning.

- A novel mechanism that combines adaptive map offset positioning, based on angular velocity, with a short-term map memory that retains information from previously observed areas in the vicinity of the robot, minimizing redundant calculations and preventing unnecessary maneuvers.
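The map-adaptation ideas listed above can be illustrated with a minimal sketch. It assumes a square 2D grid, a linear mapping from speed to map size and cell size, and a lateral map-centre offset proportional to yaw rate. `MapConfig`, `adapt_map`, `shift_grid` and all numeric defaults are hypothetical choices made for illustration; they are not the formulas or parameters used in the paper. `shift_grid` shows one simple way a moving map window can keep the cells it still overlaps, which is the basic idea behind retaining recently observed areas.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class MapConfig:
    # Hypothetical tuning values, not taken from the paper.
    min_size: float = 4.0      # smallest map side length [m]
    max_size: float = 12.0     # largest map side length [m]
    t_horizon: float = 3.0     # look-ahead time used to size the map [s]
    min_res: float = 0.05      # finest cell size [m], used when stationary
    max_res: float = 0.20      # coarsest cell size [m]
    max_speed: float = 2.0     # speed at which max_res is reached [m/s]
    offset_gain: float = 0.5   # lateral centre shift per unit yaw rate [m per rad/s]
    max_offset: float = 1.5    # bound on the lateral shift [m]


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def adapt_map(v: float, omega: float, yaw: float,
              cfg: Optional[MapConfig] = None) -> Tuple[float, float, Tuple[float, float]]:
    """Return (side_length, resolution, (dx, dy)) for a square local map.

    v: forward speed [m/s]; omega: yaw rate [rad/s], positive when turning left;
    yaw: heading [rad]. (dx, dy) is the offset of the map centre from the robot,
    expressed in the odometry frame.
    """
    if cfg is None:
        cfg = MapConfig()
    speed = abs(v)
    # Side length spans the distance travelled in t_horizon on either side of the robot.
    size = clamp(2.0 * speed * cfg.t_horizon, cfg.min_size, cfg.max_size)
    # Coarser cells at higher speed keep the cell count, and thus the update cost, bounded.
    frac = clamp(speed / cfg.max_speed, 0.0, 1.0)
    res = cfg.min_res + frac * (cfg.max_res - cfg.min_res)
    # Shift the centre sideways towards the turn (body frame, +y is the robot's left)...
    lateral = clamp(cfg.offset_gain * omega, -cfg.max_offset, cfg.max_offset)
    # ...then rotate that body-frame offset into the odometry frame.
    dx = -lateral * math.sin(yaw)
    dy = lateral * math.cos(yaw)
    return size, res, (dx, dy)


def shift_grid(grid: List[List[float]], sx: int, sy: int,
               fill: float = 0.0) -> List[List[float]]:
    """Move a grid window by (sx, sy) cells while keeping the overlapping cells.

    Cells that remain inside the window keep their stored values (a simple
    short-term memory); cells that newly enter the window are set to `fill`.
    """
    h, w = len(grid), len(grid[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ox, oy = x + sx, y + sy  # index of the same world cell in the old window
            if 0 <= ox < w and 0 <= oy < h:
                out[y][x] = grid[oy][ox]
    return out
```

With the assumed defaults, `adapt_map(2.0, 0.0, 0.0)` returns a 12 m map with 0.2 m cells, whereas a stationary robot gets a 4 m map with 0.05 m cells. When the window is re-centred, `shift_grid` would be called with the centre displacement expressed in whole cells; handling a simultaneous change of resolution would additionally require resampling, which this sketch omits.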

2. Mapping and Path Planning Algorithm

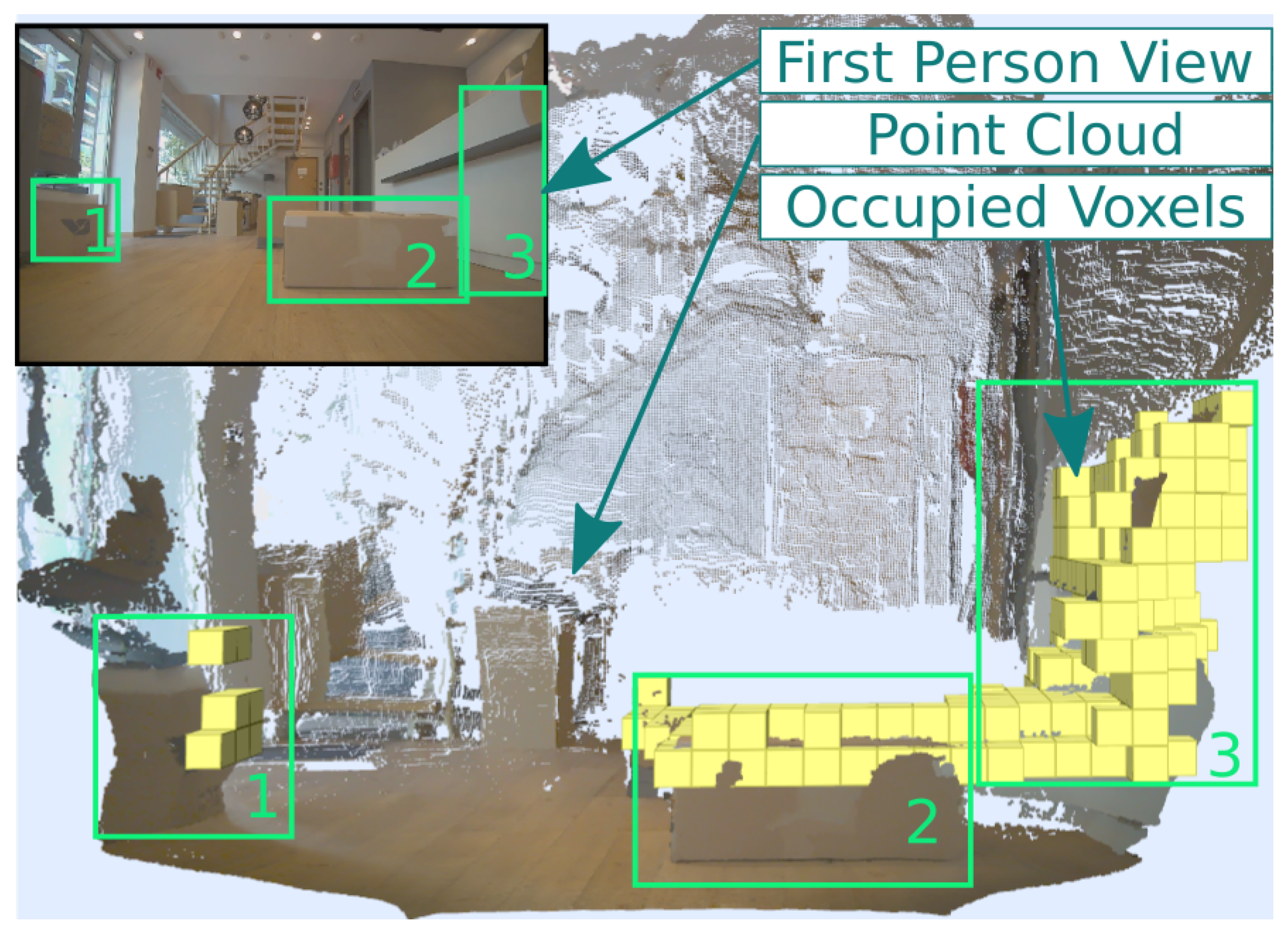

2.1. Local Mapping

Algorithm 1. Occupancy update algorithm.
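The body of Algorithm 1 is not reproduced here. For orientation, a common way to update an occupancy grid from depth returns is the clamped log-odds scheme: cells that a ray passes through become more likely free, and the cell where the ray ends becomes more likely occupied. The sketch below assumes a 2D grid indexed as `grid[y][x]`, integer cell indices, Bresenham ray traversal, and hypothetical parameter values (`L_HIT`, `L_MISS`, `L_MIN`, `L_MAX`, `L_OCC`); it is a generic illustration, not the authors' Algorithm 1.

```python
import math

# Hypothetical log-odds parameters (not from the paper).
L_HIT = math.log(0.7 / 0.3)    # increment for the cell containing a depth return
L_MISS = math.log(0.4 / 0.6)   # decrement for cells the ray passes through
L_MIN, L_MAX = -2.0, 3.5       # clamping bounds
L_OCC = 0.0                    # cells with log-odds above this are treated as occupied


def bresenham(x0, y0, x1, y1):
    """Yield the integer grid cells on the segment (x0, y0) -> (x1, y1), inclusive."""
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        yield x0, y0
        if x0 == x1 and y0 == y1:
            return
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def update_occupancy(grid, sensor, hits):
    """Integrate one scan into a 2D log-odds grid (list of lists, grid[y][x]).

    sensor: (x, y) cell index of the sensor.
    hits: iterable of (x, y) cell indices where depth returns landed.
    """
    h, w = len(grid), len(grid[0])

    def inside(x, y):
        return 0 <= x < w and 0 <= y < h

    for hx, hy in hits:
        cells = list(bresenham(sensor[0], sensor[1], hx, hy))
        for x, y in cells[:-1]:                 # free space along the ray
            if inside(x, y):
                grid[y][x] = max(L_MIN, grid[y][x] + L_MISS)
        if inside(hx, hy):                      # the endpoint is the observed surface
            grid[hy][hx] = min(L_MAX, grid[hy][hx] + L_HIT)


def occupied(grid):
    """Return the set of (x, y) cells currently classified as occupied."""
    return {(x, y) for y, row in enumerate(grid) for x, v in enumerate(row) if v > L_OCC}
```

Clamping the log-odds between `L_MIN` and `L_MAX` keeps every cell able to change state after a bounded number of contradicting observations, which matters when obstacles move.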

Algorithm 2. ESDF update algorithm.
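The body of Algorithm 2 is likewise not reproduced. As a point of reference, the sketch below computes an exact 2D ESDF from a boolean occupancy grid in a single batch, using the separable squared-distance transform of Felzenszwalb and Huttenlocher (one 1D pass along columns, then one along rows). This is a swapped-in, non-incremental technique chosen for brevity; incremental ESDF updaters typically propagate local changes with a wavefront instead. The sign convention (positive in free space, negative inside obstacles), the large finite `INF` placeholder, and the names `esdf` and `resolution` are assumptions.

```python
import math

INF = 1e20  # finite stand-in for "no target cell", keeps the arithmetic free of NaNs


def _sq_edt_1d(f):
    """Exact 1D squared Euclidean distance transform (lower envelope of parabolas)."""
    n = len(f)
    v = [0] * n            # positions of the parabolas forming the lower envelope
    z = [0.0] * (n + 1)    # boundaries between consecutive parabolas
    k = 0
    z[0], z[1] = -math.inf, math.inf
    for q in range(1, n):
        while True:
            p = v[k]
            s = ((f[q] + q * q) - (f[p] + p * p)) / (2 * q - 2 * p)
            if s > z[k]:
                break
            k -= 1         # parabola p is hidden by q; drop it from the envelope
        k += 1
        v[k] = q
        z[k] = s
        z[k + 1] = math.inf
    out = [0.0] * n
    k = 0
    for q in range(n):
        while z[k + 1] < q:
            k += 1
        out[q] = (q - v[k]) ** 2 + f[v[k]]
    return out


def _sq_edt_2d(mask):
    """Squared distance (in cells) from every cell to the nearest cell where mask is True.

    If mask has no True cell, the values stay close to INF.
    """
    h, w = len(mask), len(mask[0])
    f = [[0.0 if mask[y][x] else INF for x in range(w)] for y in range(h)]
    for x in range(w):                       # pass 1: along columns
        col = _sq_edt_1d([f[y][x] for y in range(h)])
        for y in range(h):
            f[y][x] = col[y]
    return [_sq_edt_1d(row) for row in f]    # pass 2: along rows


def esdf(occ, resolution=1.0):
    """Signed distance in metres: positive in free space, negative inside obstacles.

    occ: 2D list of bools, occ[y][x] is True for an occupied cell.
    resolution: cell size in metres.
    """
    d_out = _sq_edt_2d(occ)                                   # to nearest occupied cell
    d_in = _sq_edt_2d([[not c for c in row] for row in occ])  # to nearest free cell
    return [
        [resolution * (-math.sqrt(d_in[y][x]) if c else math.sqrt(d_out[y][x]))
         for x, c in enumerate(row)]
        for y, row in enumerate(occ)
    ]
```

Because each 1D pass is linear in the number of cells it processes, the whole transform is linear in the grid size, which helps keep recomputation of a small local map cheap.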

2.2. Path Planning

3. The Developed UGV

3.1. Hardware

3.2. Control

4. Experimental Results

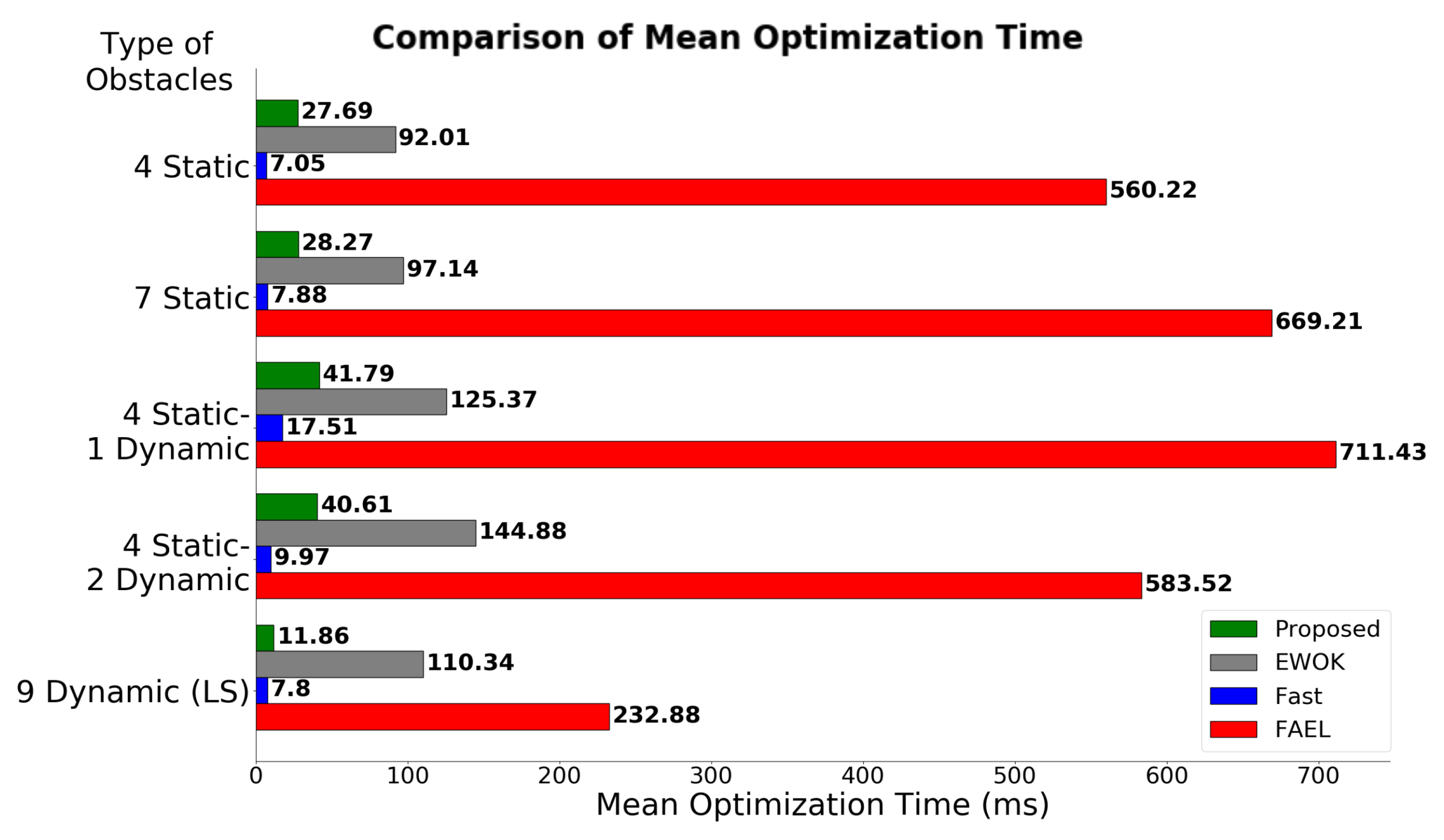

4.1. Simulation Experiments

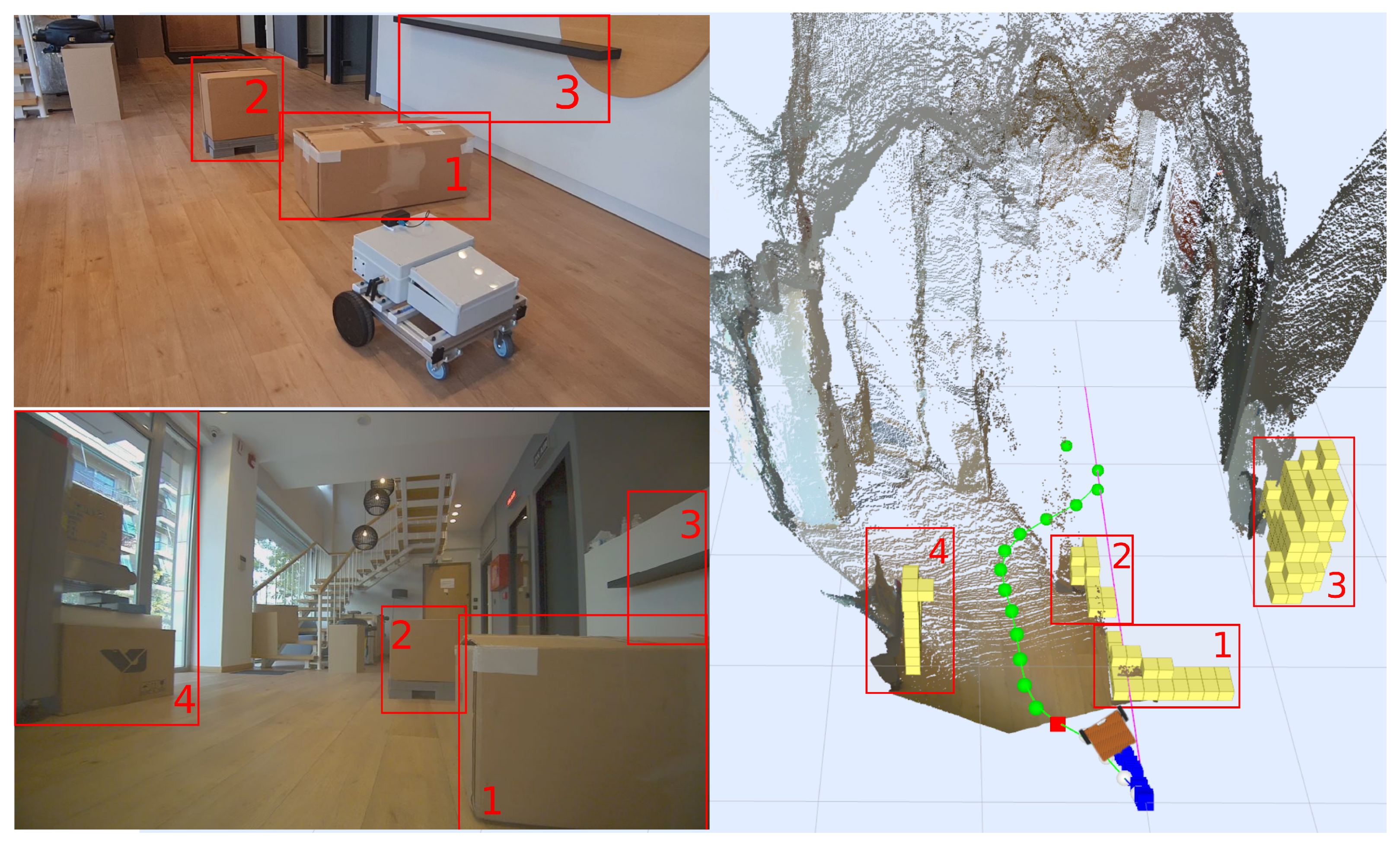

4.2. Real-World Experiments

5. Discussion

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| GPS | Global Positioning System |
| ESDF | Euclidean Signed Distance Field |
| RGB-D | Red Green Blue–Depth |
| TSDF | Truncated Signed Distance Field |
| 3D | Three-Dimensional |
| LiDAR | Light Detection and Ranging |
| UGV | Unmanned Ground Vehicle |
| CPU | Central Processing Unit |
| CPR | Counts Per Revolution |
| CAN | Controller Area Network |
| PID | Proportional–Integral–Derivative |
| PP | Projected Point |
| RAM | Random-Access Memory |


| Method | 4S | 7S | 4S-1D | 4S-2D |
| --- | --- | --- | --- | --- |
| Proposed | 1.100 | 1.113 | 1.266 | 1.153 |
| FAST-Planner | 1.095 | 1.128 | 1.268 | 1.154 |

| Method | Narrow | Winding | Sparse |
| --- | --- | --- | --- |
| Proposed | 0.83 | 1.0 | 0.8 |
| FAST-Planner | 0.0 | 0.0 | 0.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Geladaris, A.; Papakostas, L.; Mastrogeorgiou, A.; Polygerinos, P. Exploiting a Variable-Sized Map and Vicinity-Based Memory for Dynamic Real-Time Planning of Autonomous Robots. Robotics 2025, 14, 44. https://doi.org/10.3390/robotics14040044
