Abstract

Manipulation involves both fine tactile feedback, with dynamic transients perceived by fingerpad mechanoreceptors, and kinaesthetic force feedback, involving the musculoskeletal structure of the whole hand. In teleoperation experiments, these two aspects are usually split between different setups on the operator side: those using lightweight gloves and optical tracking systems, oriented toward tactile-only feedback, and those using exoskeletons or grounded manipulators as haptic devices delivering kinaesthetic force feedback. In hand interfaces, exoskeletons providing kinaesthetic force feedback face a trade-off between the maximum rendered force and the bandwidth of the embedded actuators, which prevents them from properly rendering tactile feedback. To overcome this limitation, we investigate a full upper limb exoskeleton, covering all upper limb segments from the shoulder to the finger phalanges, coupled with linear voice coil actuators at the fingertips. These actuators render wide-bandwidth tactile feedback alongside the kinaesthetic force feedback provided by the hand exoskeleton. We evaluate the system in a pick-and-place teleoperation task under two feedback conditions (visual-only and visuo-haptic), comparing performance in terms of the measured interaction forces and the number of correct trials. The study demonstrates the feasibility and effectiveness of a complex full upper limb exoskeleton (seven actuated arm and wrist DoFs plus five hand DoFs) capable of combined kinaesthetic and tactile haptic feedback. Quantitative results show significant performance improvements when haptic feedback is provided, in particular lower mean and peak exerted forces and a higher correct rate in the pick-and-place task.

1. Introduction

Teleoperation systems for controlling remote robots have been tested in fields ranging from surgery, as with the da Vinci™ robot [1], to inspection and intervention in dangerous or contaminated areas [2,3]. Teleoperated systems enable intervention in inaccessible, unhealthy, or risky environments and make it possible to accomplish complex manual tasks that unsupervised autonomous robotic systems cannot yet achieve.

Teleoperation stability and, at the same time, the perception of interaction forces are two key requirements for a successful telemanipulation system, and both remain open challenges for the scientific community [4,5]. At the level of the teleoperation architecture, meeting these requirements entails ensuring stability and robustness against randomly varying communication delays and information loss [6]. Several control techniques have been proposed to address these problems, e.g., passivity-based approaches [7] (wave variables, the time-domain passivity approach (TDPA), and adaptive controllers), the four-channel architecture [8], and model-mediated control [9]. On the operator’s side, kinaesthetic force feedback can be rendered through different interfaces: desktop haptic devices are a conventional choice when the workspace is limited, as in surgical or micro-assembly operations [10,11]. Robotic manipulators with adequate force sensing and control, in terms of dynamics and transparency, can also serve as haptic interfaces covering a wide workspace. This solution has been adopted in advanced bilateral telemanipulation systems, such as the DLR Bimanual Haptic Device [12] or the telepresence system of the NimbRo team, winner of the ANA Avatar XPRIZE international competition in 2022 [13]. Other solutions use a custom operator arm [14,15] to deliver haptic feedback. Finally, solutions such as those in [16,17] provide force interaction with the remote environment only at the level of the fingers, while arm motion is captured by a separate tracking system. With the above systems, the interaction is limited to the end effector. Active robotic exoskeletons can make control more immediate, allowing both direct tracking of the operator’s movements and force interaction at the level of each body segment.

Complex exoskeleton systems covering the full upper limb, from shoulder to hand, have been presented for teleoperation [18]. Despite their hardware complexity, they offer advantages over other control interfaces (such as 6-DoF joysticks and desktop haptic devices): they provide intuitive control of the arm pose and perception of interaction forces even in complex teleoperated movements. A dual-arm exoskeleton is presented in [19] and evaluated in the teleoperation of a humanoid robot; however, no force feedback is provided at the level of the fingers, since the operator can only use a trigger to close the remote robotic hand. Within the EU project CENTAURO, our group proposed an exoskeleton-based telepresence suit, tested in different manipulation tasks [18]. In that project, most tasks involved gross manipulation, where force feedback on the whole arm was the most informative sensory source for the operator, and no performance measurements focused on fine manipulation.

At the level of the hands, hand exoskeletons can provide force feedback related to finger closing [20,21]. However, the high number of degrees of freedom and the spatial constraints of a compact structure introduce a trade-off between the intensity and the dynamics of the delivered feedback. The bandwidth of haptic rendering is often limited by high gear reductions, tendon transmissions, or remote pneumatic actuators. Other solutions, tested in lightweight gloves [22,23], are based on passive force feedback with actuated brakes [24,25], exploiting the fact that force rendering in grasping is unidirectional. This solution is also common in commercial haptic gloves for virtual reality applications, such as the CyberGrasp, SenseGlove, and HaptX devices.

This limitation matters because, consistent with the richness of mechanoreceptors in the fingerpads [26], high-frequency cues and fast transients are highly informative signals in haptic perception, especially in fine manipulation [27,28]. Such tactile signals are commonly rendered by thimble-like haptic devices, with different solutions proposed for rendering high-frequency cues such as textures and surface features. Vibrotactile feedback based on vibration motors or linear resonant actuators (LRAs) is a closely related approach, widely used in haptic devices owing to the compactness, robustness, and ease of integration of these actuators [29,30,31]. Vibration motors do not require moving parts to be coupled to and calibrated with the user; on the other hand, their signals are limited to pure vibrations, with narrow frequency bands for LRAs and with frequency coupled to intensity for eccentric-mass actuators. The rendering of transients (i.e., sharp edges) is also limited by their dynamic properties [32]. Wearable haptic devices at the fingertips can provide further tactile information, such as modulated normal indentation and lateral skin stretch, resembling the force interactions typically present in manipulation [33,34,35]. In teleoperation applications, wearable devices conveying tactile feedback at the fingertips have been proposed in the form of haptic thimbles [36], passive controllers or trackers augmented with tactile feedback [37,38,39], and gloves [40,41].
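As a short worked illustration of why frequency and intensity are coupled in eccentric-mass actuators: an unbalanced mass $m$ at radius $r$ spinning at angular speed $\omega$ produces a rotating centripetal force whose amplitude is (symbols introduced here for illustration only)

$$F = m\,r\,\omega^{2} = 4\pi^{2}\,m\,r\,f^{2}, \qquad f = \frac{\omega}{2\pi},$$

where $f$ is the vibration frequency. For a given motor ($m$ and $r$ fixed), doubling $f$ quadruples $F$, so the two cannot be commanded independently. An LRA, by contrast, is driven near its mechanical resonance, which is why its usable frequency band is narrow.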

Other teleoperation and prosthetic approaches deliver vibrotactile feedback on the forearm while the user controls a robotic hand, using the tactile channel to convey information such as proximity to obstacles [42] and contact transients [43,44].

In the above studies, tactile feedback in teleoperation was combined with optical or lightweight tracking devices, either added to desktop haptic devices conveying kinaesthetic feedback at the end effector or embedded in surgical robots that deliver no other haptic feedback modality. In the telepresence system cited above [13], vibrotactile feedback coupled with kinaesthetic feedback of hand closing (by means of a SenseGlove device) was implemented in a bimanual upper limb teleoperation system. There, however, the tactile feedback was vibrotactile rather than delivered by linear actuators, and the kinaesthetic feedback of hand closing was limited to a passive-brake actuation system.

In this paper, we investigate combined kinaesthetic and wide-bandwidth tactile feedback in telemanipulation, conveyed through a full upper limb exoskeleton system. With respect to previous work, we propose the following novelties:

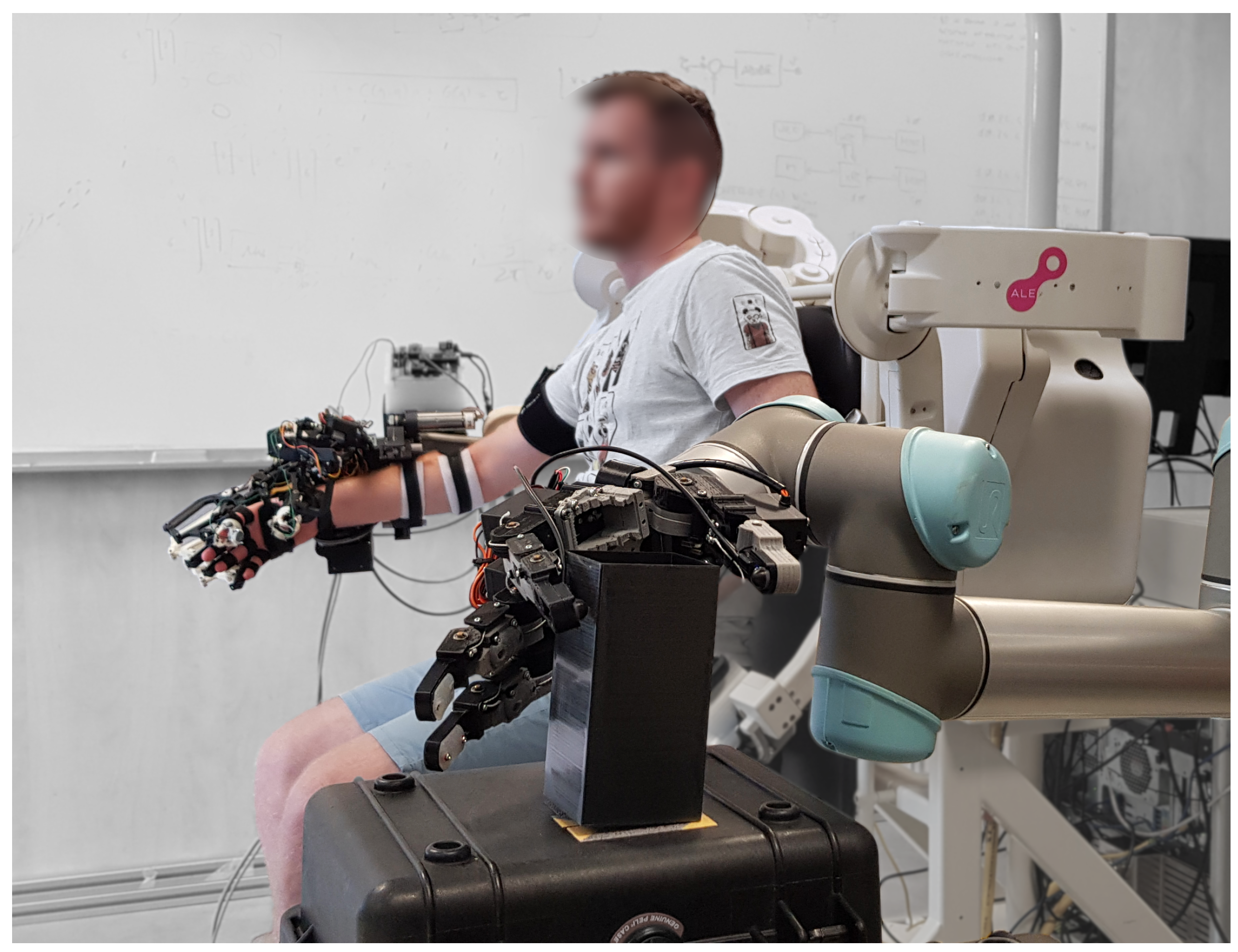

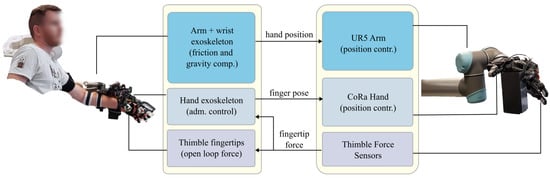

- The proposed system is composed of a full upper limb exoskeleton including a hand exoskeleton that provides both kinaesthetic force feedback at the fingers and wide-bandwidth cutaneous force feedback through dedicated linear tactile actuators. On the follower side, sensitive force sensors at the fingertips provide clean contact and force-modulated signals (Figure 1).

Figure 1. The proposed system is composed of a hand exoskeleton integrating additional cutaneous force feedback actuators. The teleoperated robotic hand includes force sensors at the fingertips.

- The full arm exoskeleton enables transparent use of the hand exoskeleton, providing tracking, gravity compensation, and dynamic compensation over a large upper limb workspace; it also offers the possibility (not implemented in this setup) of delivering full kinaesthetic force feedback at each segment of the upper limb.

- Using a hand exoskeleton instead of a haptic glove allows larger and heavier mechanisms to transmit forces properly to the fingers, as well as heavier actuators that convey more intense, wide-bandwidth tactile feedback. In this paper, the consistency and richness of the resulting haptic feedback are evaluated by measuring the interaction forces in a pick-and-place teleoperation task.

2. Materials and Methods

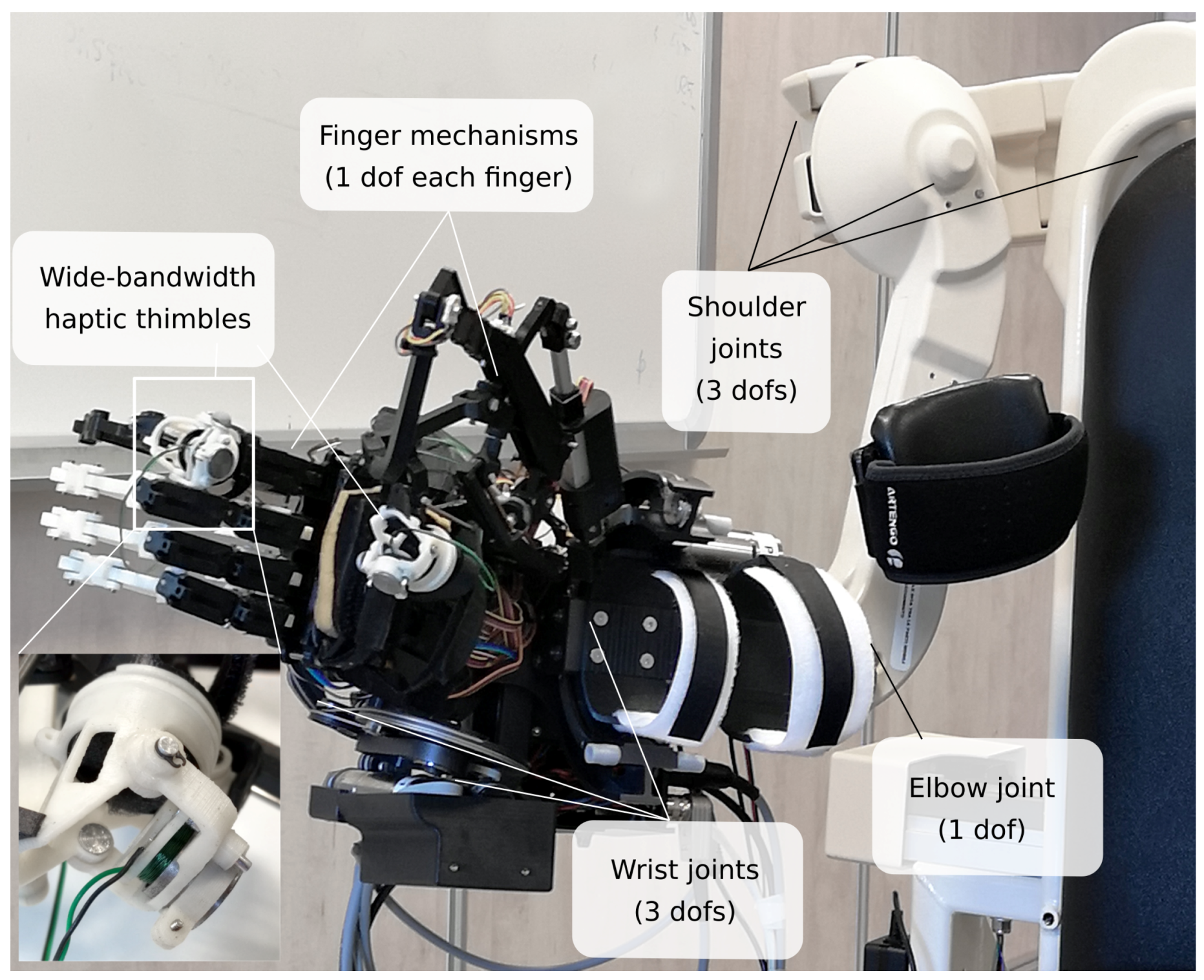

The leader system is a fully actuated upper limb exoskeleton divided into several sub-modules. It includes 3 actuated DoFs for the shoulder, 1 for the elbow, and 3 for the wrist. The hand exoskeleton includes 5 actuators, one for each finger, each driving an underactuated parallel mechanism. Dedicated tactile actuators are embedded at the index and thumb fingertips. The sub-modules are described in the following subsections.

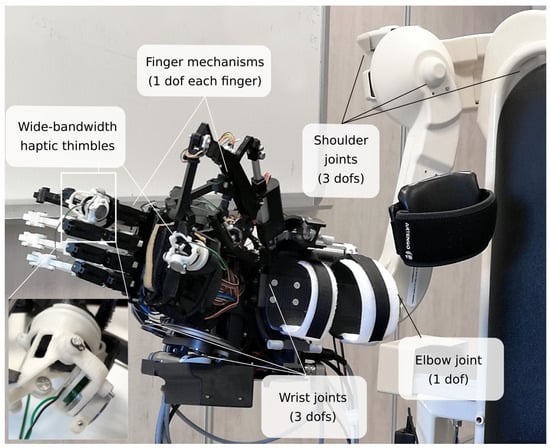

2.1. The Exoskeleton Interface

The leader interface is a grounded, fully actuated exoskeleton covering all the upper limb joints, from the shoulder to the fingers (Figure 2). In detail, it consists of an arm exoskeleton called ALEX [45], a spherical wrist called WRES [46], and an underactuated hand exoskeleton covering both the long fingers [20] and the thumb [47]. In this work, the hand exoskeleton was integrated with linear, wide-bandwidth haptic thimble devices [48]. This combines the kinaesthetic force feedback of the hand exoskeleton mechanism with wide-bandwidth cutaneous feedback able to convey fine contact transients and dynamic force modulation.

Figure 2.

Detail of the leader interface consisting of the developed full upper limb exoskeleton integrated with haptic thimbles.

2.1.1. The ALEX Arm Exoskeleton Module

The ALEX exoskeleton [45] is a wearable arm interface featuring an extended range of motion and other design features suited to teleoperation. The shoulder and elbow actuators are placed at the base, with backdrivable wire transmissions, which reduces the moving masses and the resulting inertia perceived by the user. The device also implements an embedded force sensing method for smooth interaction with the operator: each actuator has two high-resolution encoders, one mounted on the motor shaft and the other at the link. External forces are obtained by comparing the two encoder readings, which measures the deformation of the tendon transmission. This method allows the user to interact with each link of the interface, not only with the end effector. The ALEX kinematics is isomorphic to the human arm and therefore introduces no singularities other than those of the human arm itself.
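The dual-encoder force sensing described above can be sketched as follows. This is a minimal illustration assuming a linear tendon stiffness `k_tendon` referred to the joint and a fixed motor-to-link ratio `gear_ratio`; both names and the linear model are hypothetical, since the actual ALEX calibration is not described here.

```python
def estimate_joint_torque(theta_motor: float, theta_link: float,
                          gear_ratio: float, k_tendon: float) -> float:
    """Estimate the torque transmitted by an elastic tendon transmission.

    Hypothetical linear model: the transmission behaves as a torsional
    spring of stiffness k_tendon [N*m/rad] between motor and link.
    In quasi-static conditions this torque balances the external torque
    applied by the user (gravity being accounted for separately).
    """
    # Link angle the motor encoder predicts for an ideally rigid transmission
    theta_link_rigid = theta_motor / gear_ratio
    # Transmission deflection: disagreement between the two encoders
    deflection = theta_link_rigid - theta_link
    # Hooke's law on the compliant transmission
    return k_tendon * deflection


# Example: motor encoder at 10 rad (ratio 10 -> 1.0 rad), link encoder at 0.99 rad
tau = estimate_joint_torque(10.0, 0.99, gear_ratio=10.0, k_tendon=100.0)
print(f"estimated transmission torque: {tau:.3f} N*m")
```

The stiffer the tendon, the smaller the deflection for a given torque, so encoder resolution directly bounds the force resolution of this scheme.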

2.1.2. The WRES Wrist Exoskeleton Module

For the wrist joint, a 3-DoF spherical actuated wrist called WRES [46] was used. Like the arm module, the wrist exoskeleton has isomorphic kinematics, with the center of the actuated spherical joint aligned with the center of the user’s wrist. Joint actuation is achieved through a tendon-based transmission, as in the ALEX arm exoskeleton. Table 1 reports the allowed range of motion and the maximum torques for each of the three wrist rotations: pronation–supination (PS), flexion–extension (FE), and radial–ulnar deviation (RU). Besides its wide range of motion and high available torques, a key feature of the adopted wrist device is the distribution of its bulk: most of the device volume lies on the outer side of the user’s forearm, which allows the user to bring their hands close to each other, a useful feature in bimanual teleoperation tasks.

Table 1.

Specifications of the ALEX arm and WRES wrist exoskeletons.

The palm of the user’s hand, together with the arm and forearm, represented the three contact points between the operator and the exoskeleton. Velcro bands were used to keep the user’s arm attached to the exoskeleton and to transmit force feedback to the user.

2.1.3. The Hand Exoskeleton Module

The adopted hand exoskeleton was designed to provide kinaesthetic force feedback for finger closing and opening. The base link of the device was fixed to the last link of the wrist module and firmly attached to the user’s palm. Given the numerous DoFs of the human hand, the device adopts an underactuation concept, focusing on grasping with independent fingers. Wearability was addressed by a specific design with parallel, underactuated kinematics in which the user’s finger is part of the parallel kinematic chain [20]. A similar concept, albeit resulting in a more complex parallel underactuated mechanism, was followed for the thumb [47]. All five finger mechanisms are grounded at the hand exoskeleton base. The four long-finger mechanisms are connected to the user’s fingers at the proximal and intermediate phalanges, whereas the thumb mechanism is connected to the user’s thumb at the metacarpal and distal phalanges. For actuation, linear screw actuators driven by DC motors were used as a compact, lightweight solution with a high maximum output force. Miniaturized strain gauge force sensors (SMD Sensors S215, 55 N full scale) were mounted at the base of each linear actuator to make the device actively backdrivable through a force-to-velocity control loop. Table 2 summarizes the main features of the hand exoskeleton module.

Table 2.

Specifications of the adopted hand exoskeleton and its actuators.

2.1.4. The Tactile Feedback Module

Kinaesthetic grasping force feedback provided by the hand exoskeleton was enriched by the addition of linear, wide-bandwidth actuators for tactile rendering. The additional wearable fingertip haptic modules (Figure 2) were added for the index and thumb fingers and integrated into the hand exoskeleton structure at the level of the distal index phalanx link and of the intermediate thumb phalanx link. Each tactile actuator was based on a voice-coil structure with a permanent magnet and moving coil, as presented in [48]. This design can achieve wide bandwidth, up to 250 Hz, due to the direct drive layout and the moving plate in contact with the fingerpad. Rendering also included static and low-frequency force components, up to a maximum continuous force of 0.4 N. Table 3 summarizes the main features of the implemented tactile modules.

Table 3.

Features of the fingertip modules.

3. Teleoperation Experimental Setup

The proposed operator interface, described in Section 2, was evaluated in a pick-and-place teleoperation task. The follower robotic system is described in Section 3.1, the teleoperation setup in Section 3.2, and the teleoperation task and experimental procedure in Section 3.3.

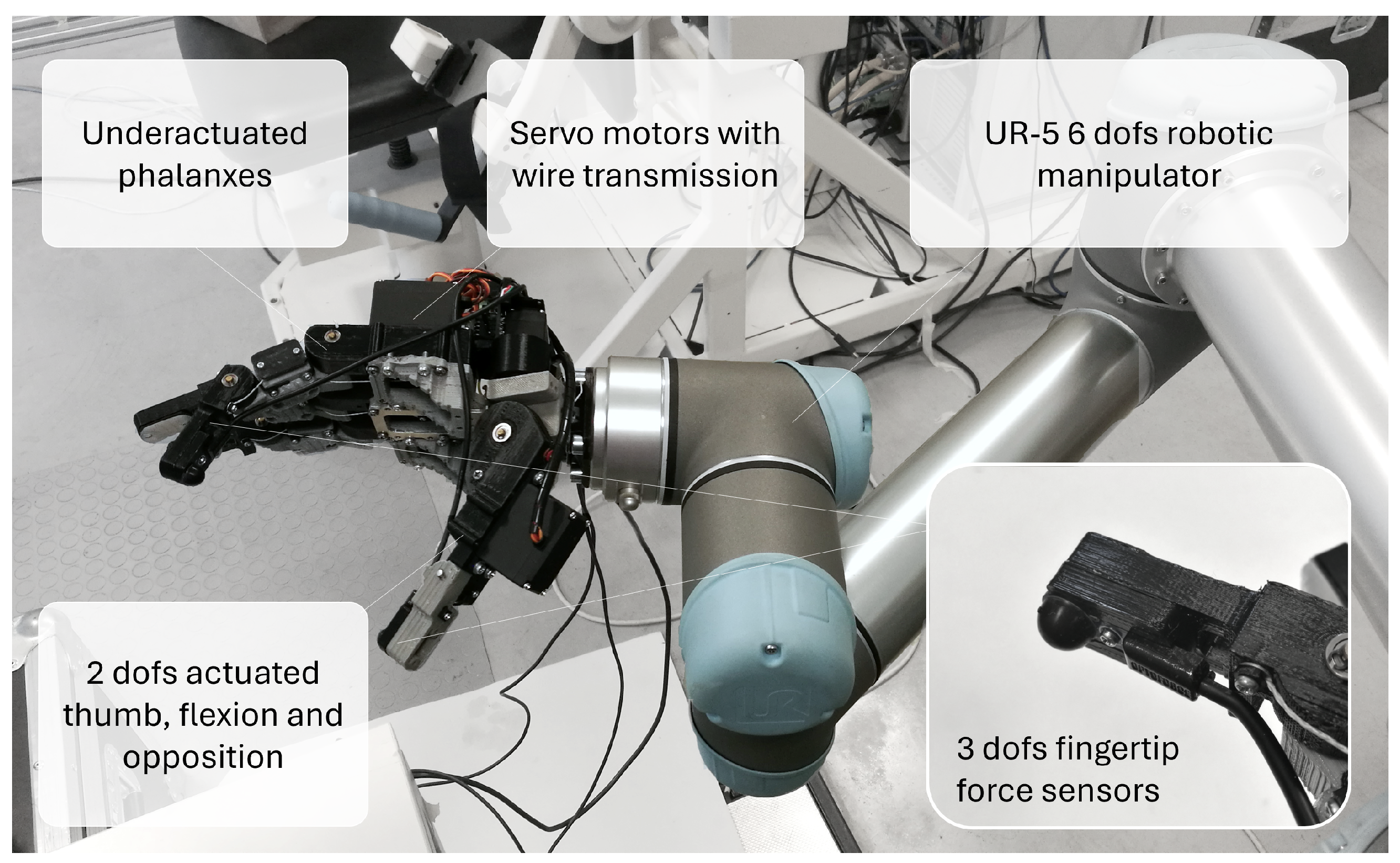

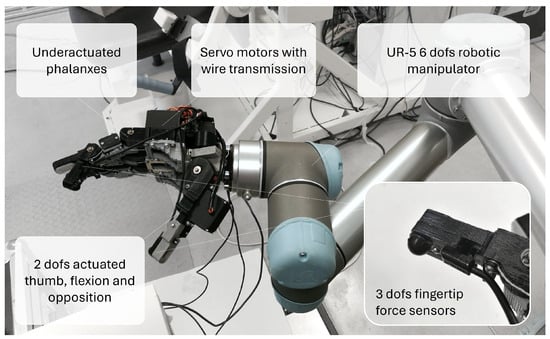

3.1. Follower Robotic System

The follower system consisted of a six-DoF UR5 collaborative robot (Universal Robots [49]) equipped with a custom robotic hand (CoRA hand [50]) and two sensitive force sensors at the index and thumb fingertips, as depicted in Figure 3. The robotic hand was designed for whole-hand and pinch grasping with independent finger control. The CoRA hand was designed for robustness and compliance in teleoperated or autonomous manipulation tasks, at the cost of a reduced number of DoFs: the fingers have parallel planes of actuation, and the tendon-driven actuation is reduced to a single DoF for the closing motion of each finger. An additional DoF allows the plane of actuation of the thumb to rotate about the axis normal to the palm surface.

Figure 3.

Detail of the follower interface: the robotic hand equipped with two sensitive force sensors (index and thumb).

The hand was actuated by compact electromagnetic servomotors with gear reduction, embedded in the palm together with the control electronics and motor drivers. The servomotors were driven by a position control loop with modulated saturation, which made it possible to modulate the actuator torque in a feed-forward manner under quasi-static contact conditions. To obtain a reliable measurement of the contact forces, two miniaturized force sensors based on a deformable silicone hemisphere (Optoforce 10NTM), with a diameter of 10 mm and a resolution of 1 mN, were mounted at the index and thumb fingertips of the robotic hand, in direct contact with the grasped object. Although the hemispherical shape of the sensors was not ideal for preventing rotation of the object around the contact point, they were chosen for their compactness, high sensitivity, and force range suitable for the proposed manipulation task.
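A position loop with modulated saturation can be sketched as a proportional controller whose output is clipped to a commanded torque limit. When the fingers are blocked by the object, the position error stays large, the output saturates, and the grip torque is set directly by the limit: the feed-forward torque modulation described above. The gain `kp` and the limit `tau_max` are hypothetical, since the servomotors’ internal control law is not specified.

```python
def servo_command(q_ref: float, q_meas: float, kp: float, tau_max: float) -> float:
    """Proportional position control with a modulated (commanded) saturation.

    q_ref, q_meas: reference and measured finger positions (same units)
    kp: proportional gain; tau_max: torque limit, modulated at run time
    """
    if tau_max < 0:
        raise ValueError("tau_max must be non-negative")
    tau = kp * (q_ref - q_meas)
    # Clip to [-tau_max, tau_max]: in quasi-static contact the error is large,
    # so the output equals tau_max and the grip torque is set feed-forward.
    return max(-tau_max, min(tau_max, tau))


# Free motion (small error): the loop behaves as a plain P controller
print(servo_command(0.01, 0.0, kp=10.0, tau_max=0.5))
# Fingers blocked by the object (large error): torque equals the limit
print(servo_command(1.0, 0.0, kp=10.0, tau_max=0.5))
```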

3.2. Teleoperation Setup

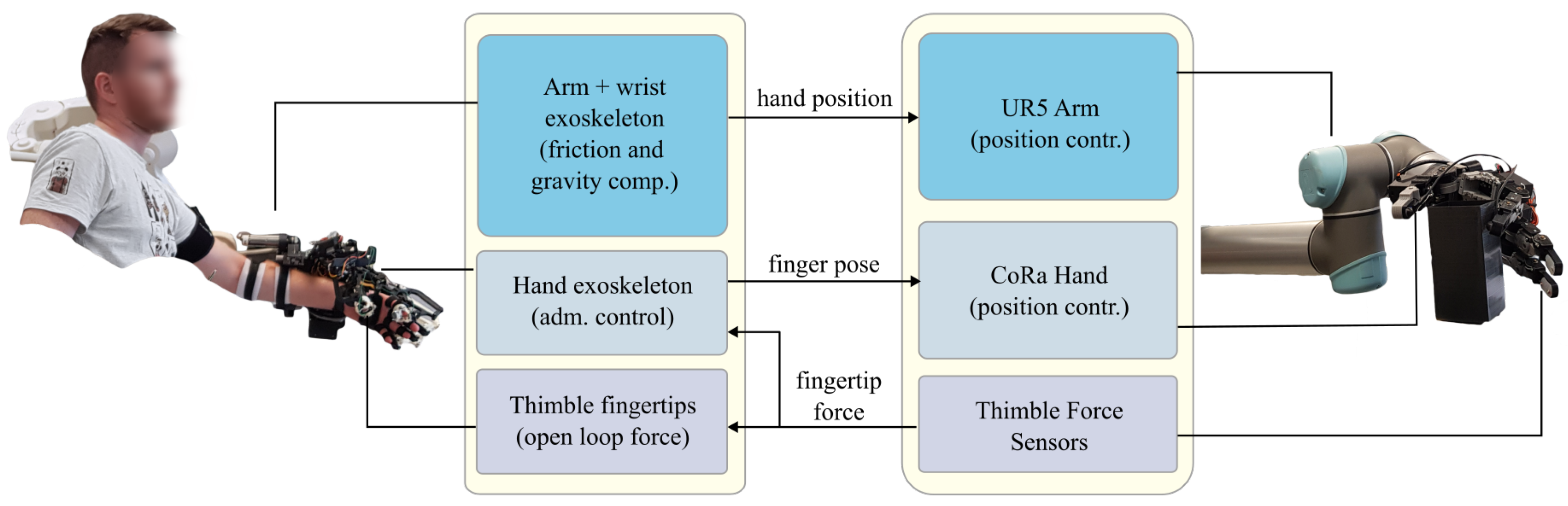

Teleoperation was performed locally, with the leader and follower systems connected via wired Ethernet. At the arm level, the follower manipulator was locally controlled by a position control loop whose end-effector reference was set from the end-effector position of the leader. On the leader side, the arm exoskeleton was actively controlled for transparency, with gravity and friction compensation. Transparency was obtained through a local force–velocity control loop; the user’s forces on the exoskeleton links were sensed, as built into the device design, by comparing the readings of redundant high-resolution position sensors placed at the motor and at the end of the wire transmission of each joint. In this experimental setup, no force feedback was provided at the arm level. Gravity compensation for the whole arm-to-hand exoskeleton chain was implemented at the arm and wrist exoskeletons, using the mass distribution obtained from the CAD design and the current pose of the exoskeleton. For friction compensation, a static plus viscous friction model was implemented for each joint, taking the joint velocity as input to estimate the friction torque. The gravity and friction compensation torques computed at each joint were fed forward to the actuators, in addition to the output of the main force–velocity control loop. Finally, dynamic compensation was implemented using the mass distribution model of the robot and the desired acceleration, obtained by differentiating the desired velocity of the force–velocity control loop.
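The arm-side control structure described above (a force–velocity loop plus feed-forward gravity, friction, and dynamic compensation) can be sketched for a single joint. This is a simplified illustration under stated assumptions: a scalar `inertia` stands in for the CAD-derived mass distribution, `tau_gravity` is assumed to be computed elsewhere from the current pose, the sign function of static friction is smoothed with `tanh`, and all names and gains are hypothetical.

```python
import math
from typing import Tuple


def friction_torque(qd: float, tau_static: float, b_viscous: float,
                    eps: float = 1e-3) -> float:
    """Static (Coulomb-like) plus viscous friction estimated from joint velocity.

    tanh(qd / eps) is a smooth stand-in for sign(qd) that avoids chattering
    around zero velocity.
    """
    return tau_static * math.tanh(qd / eps) + b_viscous * qd


def joint_torque_command(tau_user: float, qd: float, qd_des_prev: float,
                         dt: float, k_adm: float, kv: float, inertia: float,
                         tau_gravity: float, tau_static: float,
                         b_viscous: float) -> Tuple[float, float]:
    """One control step for a single joint; returns (torque, desired velocity)."""
    # Force-velocity loop: the sensed user torque sets a desired velocity
    qd_des = k_adm * tau_user
    # Desired acceleration from numerical differentiation of the desired velocity
    qdd_des = (qd_des - qd_des_prev) / dt
    # Feedback term tracking the desired velocity
    tau_loop = kv * (qd_des - qd)
    # Feed-forward terms: gravity, friction, and dynamic (inertial) compensation
    tau_ff = (tau_gravity
              + friction_torque(qd, tau_static, b_viscous)
              + inertia * qdd_des)
    return tau_loop + tau_ff, qd_des
```

With no user torque and the joint at rest, the command reduces to `tau_gravity`, i.e., pure gravity compensation; a push from the user raises the desired velocity, and its derivative adds an inertial feed-forward term during the transient.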

At the level of the hand, the follower’s robotic hand was driven in position control with position reference signals coming from the leader’s hand exoskeleton, normalized between the fully open and closed pose of the user. Force signals measured by the fingertip force sensors were used as force reference signals for the leader’s hand exoskeleton. There, an admittance force-to-velocity control was implemented for each finger, based on the strain-gauge sensors placed at the mounting point of each linear actuator.
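The per-finger admittance control can be sketched as a force-to-velocity law: the velocity command is proportional to the difference between the force measured by the actuator strain gauge and the force reference from the robotic fingertip sensor, and it is integrated into a position command at the 150 Hz hand loop rate. With a zero reference the finger follows the user (transparency); with a nonzero reference the exoskeleton yields only once the user pushes harder than the remote contact force. The gain `k_adm`, the velocity limit `v_max`, and the sign convention (positive = closing) are assumptions for illustration.

```python
def admittance_step(x: float, f_meas: float, f_ref: float, k_adm: float,
                    dt: float, v_max: float) -> float:
    """One step of a force-to-velocity (admittance) loop for a single finger.

    x: current actuator position command; f_meas: strain-gauge force;
    f_ref: force reference from the robotic fingertip sensor.
    Convention (assumed): positive force and velocity mean finger closing.
    """
    # Positive error: the user pushes harder than the rendered force -> yield
    v = k_adm * (f_meas - f_ref)
    # Limit the commanded velocity for safety
    v = max(-v_max, min(v_max, v))
    # Integrate velocity into the next position command
    return x + v * dt


DT = 1.0 / 150.0  # 150 Hz hand control loop

# Transparency: zero reference, the device moves with the user's push
x = admittance_step(0.0, f_meas=2.0, f_ref=0.0, k_adm=0.01, dt=DT, v_max=0.05)
# Contact rendering: user force equals the remote contact force -> device holds
x_hold = admittance_step(x, f_meas=1.0, f_ref=1.0, k_adm=0.01, dt=DT, v_max=0.05)
```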

To couple the additional tactile feedback with the kinaesthetic feedback of the hand exoskeleton, the robotic fingertip sensor signals were rendered in a feed-forward manner after high-pass filtering, which removed the slow and continuous force components that were instead rendered as kinaesthetic force feedback. The teleoperation layout is depicted in Figure 4.

The control loops and data processing were implemented in hardware as follows. The arm and wrist exoskeletons were driven by a dedicated embedded PC running a 1 kHz control loop on the xPC Target real-time operating system. On the follower side, the UR5 robotic arm was controlled by its proprietary control unit and interface, which provided a 150 Hz reference update. The hand exoskeleton was equipped with a Teensy 3.6 microcontroller board with an SPI-to-Ethernet module, running a 150 Hz control loop; the same electronics drove the two voice-coil actuators through dedicated H-bridge drivers (DRV8835 IC). An identical Teensy 3.6 board was used on the follower side in the CoRA robotic hand. A host PC supervised the overall teleoperation setup, sent high-level commands to the different modules, and recorded data. The two fingertip force sensors, connected through a native USB interface, were read by the host PC, which forwarded their data at 150 Hz to the driving electronics of the haptic thimbles. All Ethernet interfaces were wired locally to a common Ethernet switch (typical latency below 10 ms) and used UDP messages, except for the proprietary UR5 control interface, which used TCP/IP.
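The split between slow (kinaesthetic) and fast (tactile) force components can be sketched with a first-order complementary filter running at the 150 Hz update rate reported above: the low-pass output would drive the hand exoskeleton, and the remainder, a first-order high-pass component, the voice-coil thimble. The cutoff `fc` and the class name are hypothetical, since the filter design is not reported.

```python
import math
from typing import Optional, Tuple


class ForceSplitter:
    """Split a fingertip force signal into slow and fast components.

    The slow part is a first-order low-pass (exponential smoothing); the fast
    part is the complementary first-order high-pass, so slow + fast == input.
    """

    def __init__(self, fs: float = 150.0, fc: float = 5.0) -> None:
        dt = 1.0 / fs                        # sample period [s]
        rc = 1.0 / (2.0 * math.pi * fc)      # filter time constant [s]
        self.alpha = dt / (rc + dt)          # smoothing factor in (0, 1)
        self.slow: Optional[float] = None    # low-pass state, set on first sample

    def update(self, force: float) -> Tuple[float, float]:
        if self.slow is None:
            # Initialise on the first sample to avoid a spurious start-up transient
            self.slow = force
        else:
            self.slow += self.alpha * (force - self.slow)
        fast = force - self.slow             # high-pass (tactile) component
        return self.slow, fast


splitter = ForceSplitter(fs=150.0, fc=5.0)
for f in [0.0, 0.0, 1.0, 1.0, 1.0]:  # a contact step at the fingertip [N]
    slow, fast = splitter.update(f)
```

At a contact step, the fast component jumps to most of the step and then decays, while the slow component rises toward the sustained grip force; by construction, the two always sum to the measured force.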

Figure 4.

Teleoperation scheme with two force feedback modalities rendered to the user: kinaesthetic feedback and tactile feedback at the fingerpads.

3.3. Experimental Procedure

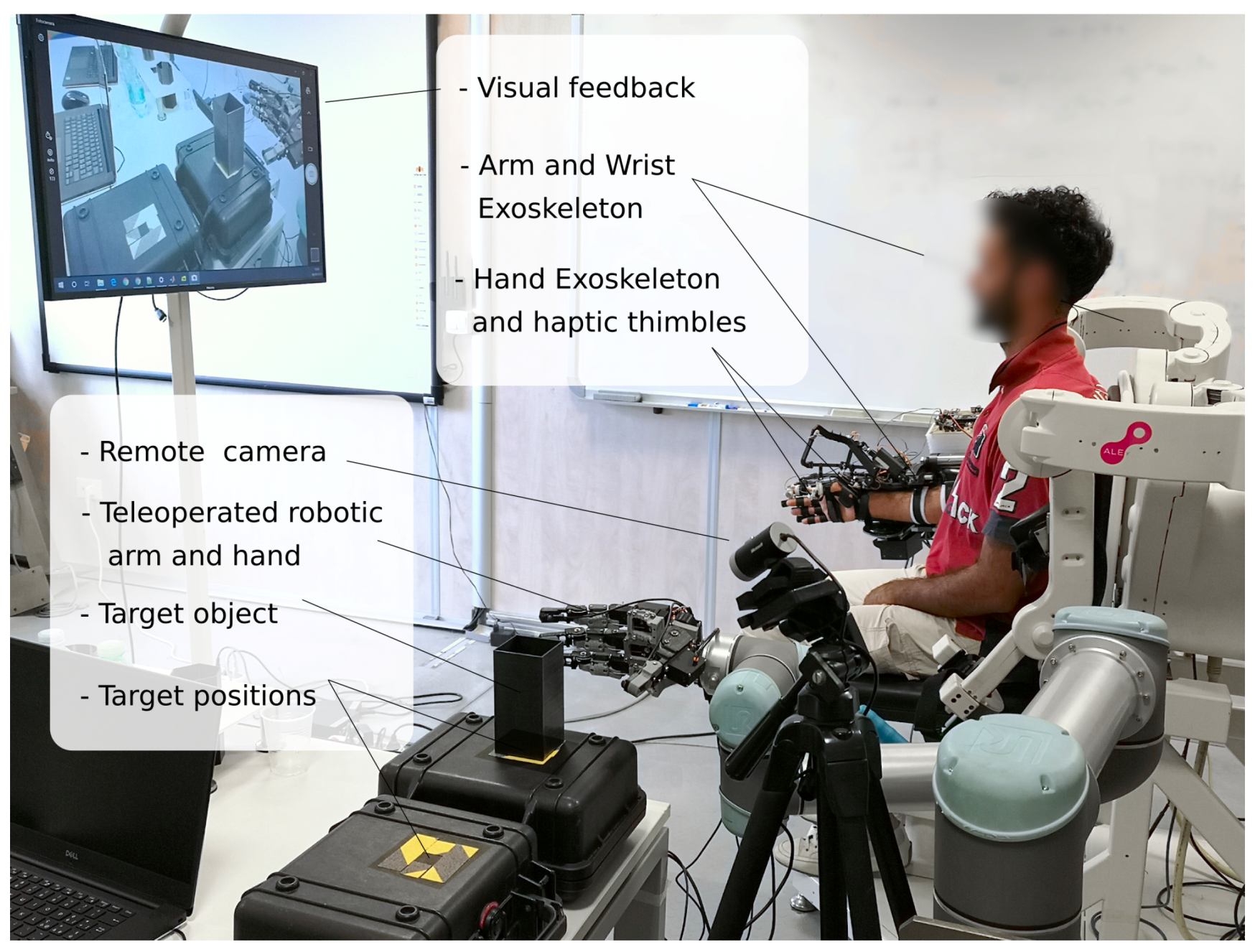

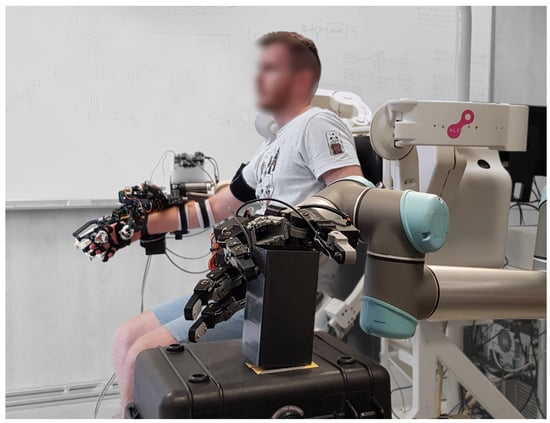

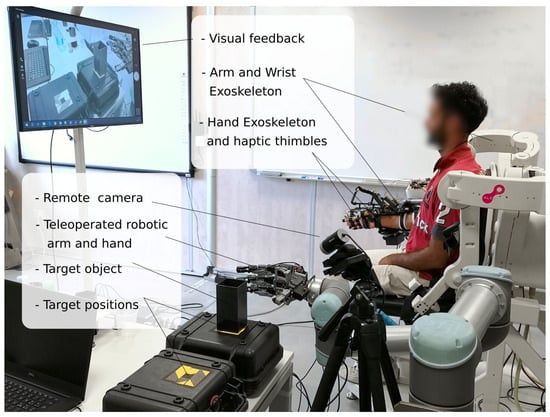

The experimental procedure consisted of a teleoperated pick-and-place task performed under two feedback conditions: visual feedback only (V condition) and visual plus haptic feedback (HV condition). Seven healthy subjects participated in the study (aged 28–34; five males and two females). The procedure was approved by the Ethics Committee of the Scuola Superiore Sant’Anna. Subjects had technical expertise in engineering and mechatronics but no experience with teleoperation systems. Subjects wore the leader interface (the upper limb exoskeleton modules) on their right arm. A short calibration phase of about 2–3 min was performed to match the pose of the hand exoskeleton with the reference pose sent to the robotic hand: the fully open and fully closed hand exoskeleton joint positions were acquired, interpolated, and normalized to serve as reference signals for the follower robotic hand. The leader and follower systems (described in Sections 2 and 3.1) were placed side by side in the same room, separated by a white screen (removed in Figure 5 to show the whole setup). Participants received visual feedback of the teleoperated environment through a remote camera, whose video stream was shown on an LCD monitor placed about 1 m in front of them (see Figure 5). Camera-mediated visual feedback was used to better resemble real teleoperation conditions, which inevitably limited the visual information compared with a direct-sight laboratory setup.
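The calibration step can be sketched as a linear interpolation between the two recorded hand exoskeleton poses, mapping each reading to a normalized closure in [0, 1] that is then rescaled to the robotic hand’s own range. Linear interpolation, clamping, the function names, and the example readings are assumptions; the text states only that the two poses were acquired, interpolated, and normalized.

```python
def normalize_pose(q: float, q_open: float, q_closed: float) -> float:
    """Map an exoskeleton finger reading to [0, 1] (0 = fully open, 1 = fully closed)."""
    if q_closed == q_open:
        raise ValueError("open and closed calibration poses must differ")
    s = (q - q_open) / (q_closed - q_open)
    # Clamp readings slightly beyond the calibrated range
    return min(1.0, max(0.0, s))


def robot_reference(s: float, r_open: float, r_closed: float) -> float:
    """Rescale a normalized closure s to the robotic finger's own range."""
    return r_open + s * (r_closed - r_open)


# Hypothetical calibration readings: open at 12.0, closed at 48.0 (arbitrary units)
s = normalize_pose(30.0, q_open=12.0, q_closed=48.0)
ref = robot_reference(s, r_open=0.0, r_closed=1000.0)
```

Working in a normalized range decouples the two hands: each user’s individual finger range of motion is absorbed by the calibration, and the robotic hand only ever sees a closure fraction.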

Figure 5.

The experimental teleoperation setup.

The remote environment consisted of a desk with two raised platforms. The starting and final positions of the pick-and-place task were marked by yellow tape on the two platforms. The distance between the start and final positions of the task was 0.4 m. A 3D-printed rigid plastic box (dimensions 60 × 60 × 120 mm, mass 0.06 kg) was the target object to be grasped.

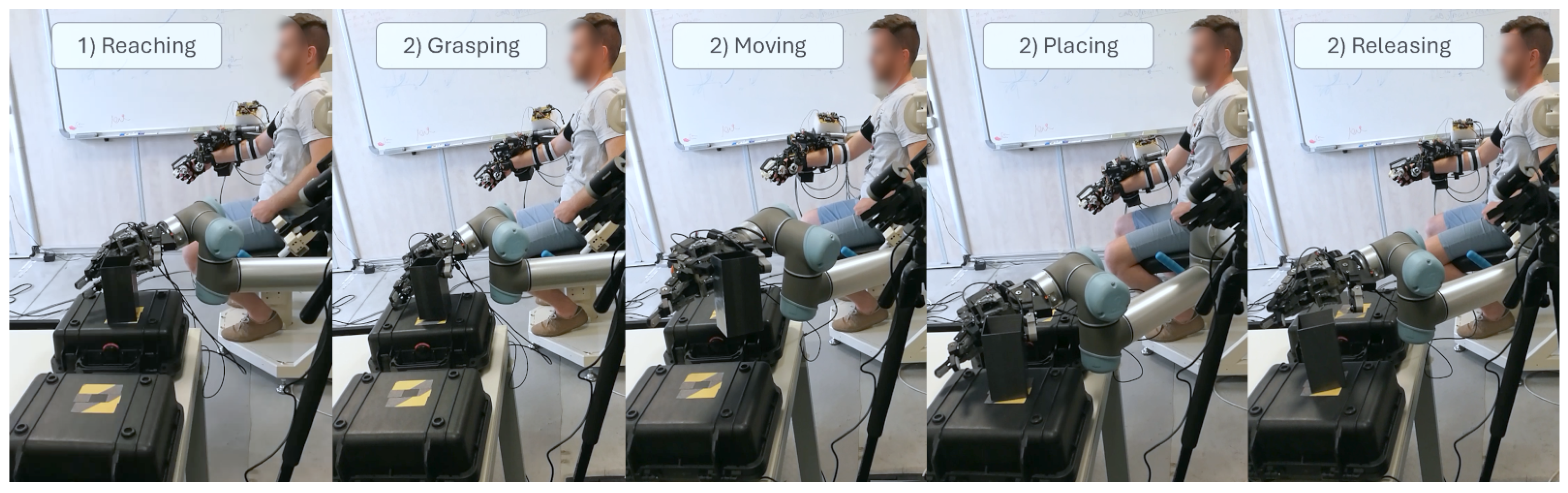

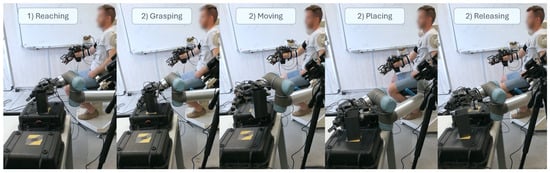

At the beginning of the experiment, participants underwent a familiarization phase of approximately 5 min, during which they became familiar with the teleoperation system, the task, and the feedback conditions. They were instructed to drive the robotic hand to grasp the target object with enough force to lift it without slipping. A maximum grasping force threshold was introduced as a virtual breaking condition of the object. The threshold was set at 4.0 N and applied in real time to the average of the thumb and index interaction forces; whenever the force exceeded the threshold, an auditory cue warned the subject. The threshold was chosen as five times the measured minimum grasping force required to lift the object (0.8 N). The sequence of the pick-and-place task is depicted in Figure 6.
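The virtual breaking rule can be written as a one-line check on the averaged thumb and index forces, with the threshold derived from the measured 0.8 N minimum lifting force. The function name and the strict inequality are assumptions; in the experiment, exceeding the threshold triggered an auditory cue.

```python
MIN_LIFT_FORCE_N = 0.8                    # measured minimum force to lift the box
BREAK_THRESHOLD_N = 5 * MIN_LIFT_FORCE_N  # 4.0 N virtual breaking threshold


def object_broken(f_index: float, f_thumb: float,
                  threshold: float = BREAK_THRESHOLD_N) -> bool:
    """True if the averaged thumb/index grasp force exceeds the threshold."""
    return 0.5 * (f_index + f_thumb) > threshold
```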

Figure 6.

Sequence of the proposed pick-and-place task.

Each subject performed the task 10 times in each of the two conditions: V, with visual feedback only, and HV, with haptic and visual feedback. The presentation order of the two conditions was pseudo-randomized across subjects. In the V condition, the hand exoskeleton was controlled in transparency, so no forces from the follower robotic hand were rendered, and the haptic thimbles were turned off. In the HV condition, both the kinaesthetic feedback of the hand exoskeleton and the tactile feedback of the haptic thimbles were active. In both conditions, the arm exoskeleton was active to compensate for the weight of the modules. A trial was considered successful when the target object was picked from the starting position and placed in the final position without falling and without the maximum force threshold ever being exceeded.

To compare the two experimental conditions, the following metrics were considered: the correct rate, the time taken to complete each trial, and the mean and peak interaction forces at the fingertips.
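The metrics above, together with the success criterion of the previous paragraph, can be sketched as follows. The `Trial` record, its field names, and the rule that an over-threshold force marks a trial as broken even if the object later fell are assumptions for illustration; the per-trial force samples are assumed to be the averaged thumb/index force during the grasp.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Trial:
    placed: bool          # object reached the final position
    fell: bool            # object slipped or fell at some point
    forces: List[float]   # averaged thumb/index grasp force samples [N]
    duration_s: float     # time taken to complete the trial [s]


def outcome(trial: Trial, threshold: float = 4.0) -> str:
    # Assumed precedence: exceeding the force threshold marks the object broken
    if max(trial.forces) > threshold:
        return "broken"
    if trial.fell or not trial.placed:
        return "fallen"
    return "correct"


def summarize(trials: List[Trial], threshold: float = 4.0) -> Dict[str, float]:
    """Per-condition metrics: correct rate, mean/peak force, and mean time."""
    labels = [outcome(t, threshold) for t in trials]
    return {
        "correct_rate": labels.count("correct") / len(trials),
        "mean_force": mean(mean(t.forces) for t in trials),
        "peak_force": mean(max(t.forces) for t in trials),
        "mean_time_s": mean(t.duration_s for t in trials),
    }
```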

4. Results and Discussion

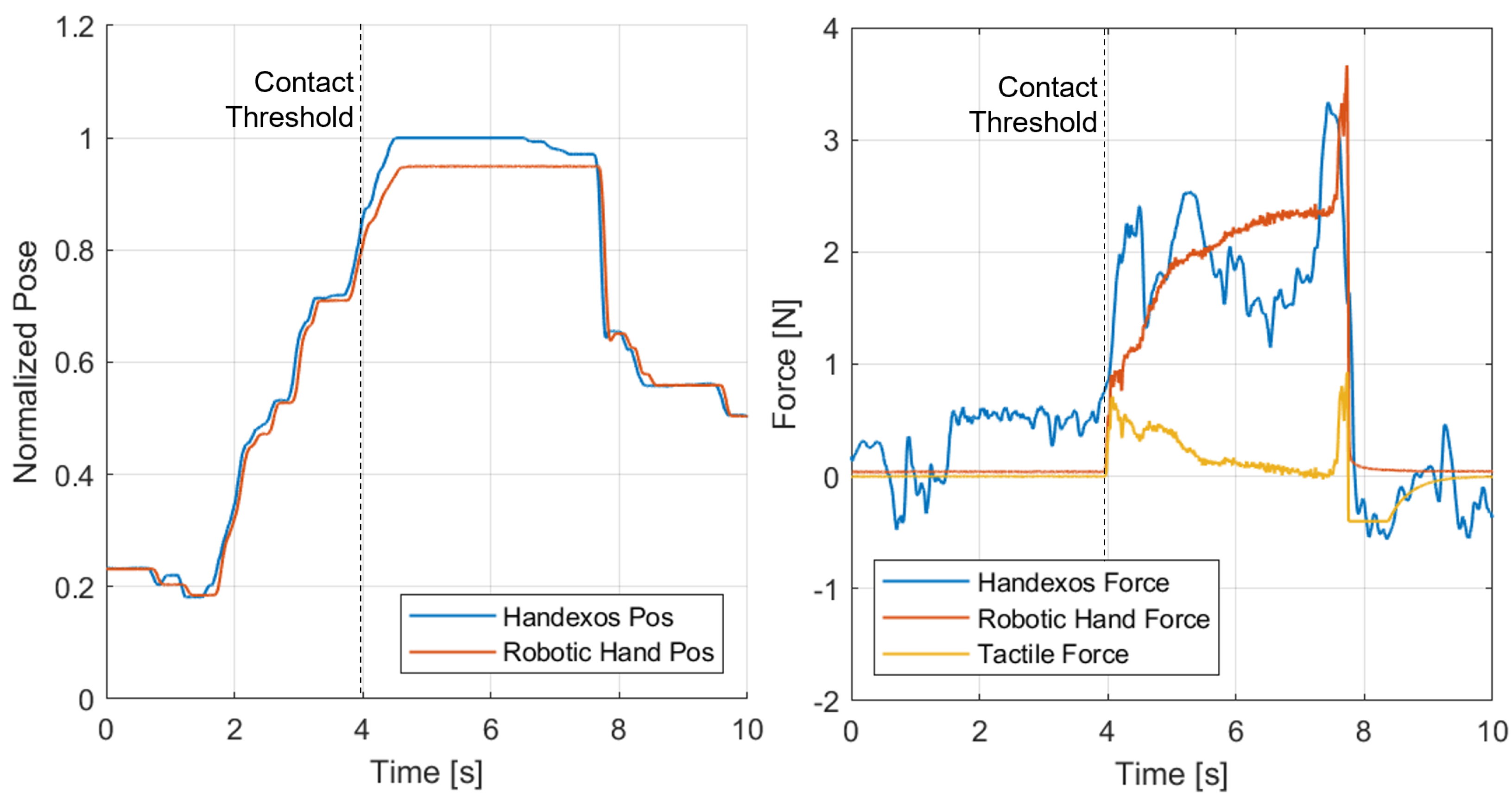

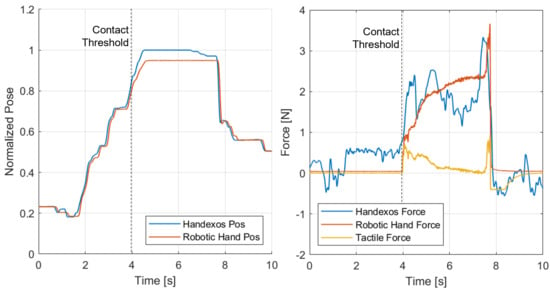

The performance in the pick-and-place task is reported and compared between the two experimental conditions. Figure 7 shows a representative time plot of one grasping repetition, depicting the magnitude of the different feedback signals. At the contact threshold, the fingertip force measured at the robotic hand was reproduced by both the kinaesthetic feedback (blue line) and the tactile feedback (yellow line), the latter with lower intensity but faster dynamics. The blue line represents the force measured by the strain-gauge sensors of the hand exoskeleton (index finger only); hence, it sums the contribution of the hand exoskeleton actuators (kinaesthetic force feedback) and the user’s interaction. Before the contact threshold, a residual interaction force (mean 0.5 N) was measured at the hand exoskeleton, generated by the user’s fingers moving the exoskeleton in free air toward the object. Just before the release phase, a sudden increase in grasping force is visible, probably produced by the user during a pose adjustment and then reflected to the robotic hand (red signal). Over a representative full sequence of ten pick-and-place tasks, the mean absolute force tracking error was 0.54 N, and the mean absolute position tracking error was 0.027 of the normalized finger pose range.
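The tracking errors reported above are mean absolute errors between a reference and a tracked signal. A minimal sketch, assuming both signals have already been time-aligned and resampled on a common time base (for example, the 150 Hz hand loop); which exact signal pairs were compared is an assumption here. For the position error, both signals are expressed in the normalized finger pose range, so the result is dimensionless.

```python
from typing import Sequence


def mean_abs_error(reference: Sequence[float], measured: Sequence[float]) -> float:
    """Mean absolute tracking error between two time-aligned signals."""
    if len(reference) != len(measured) or len(reference) == 0:
        raise ValueError("signals must be non-empty and sampled on the same time base")
    return sum(abs(r - m) for r, m in zip(reference, measured)) / len(reference)


# Hypothetical samples: robotic fingertip force (reference) vs exoskeleton force [N]
force_err = mean_abs_error([0.0, 1.0, 2.0], [0.5, 1.0, 1.0])
```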

Figure 7.

Representative time plot of the position and force profiles during grasping in the HV condition.

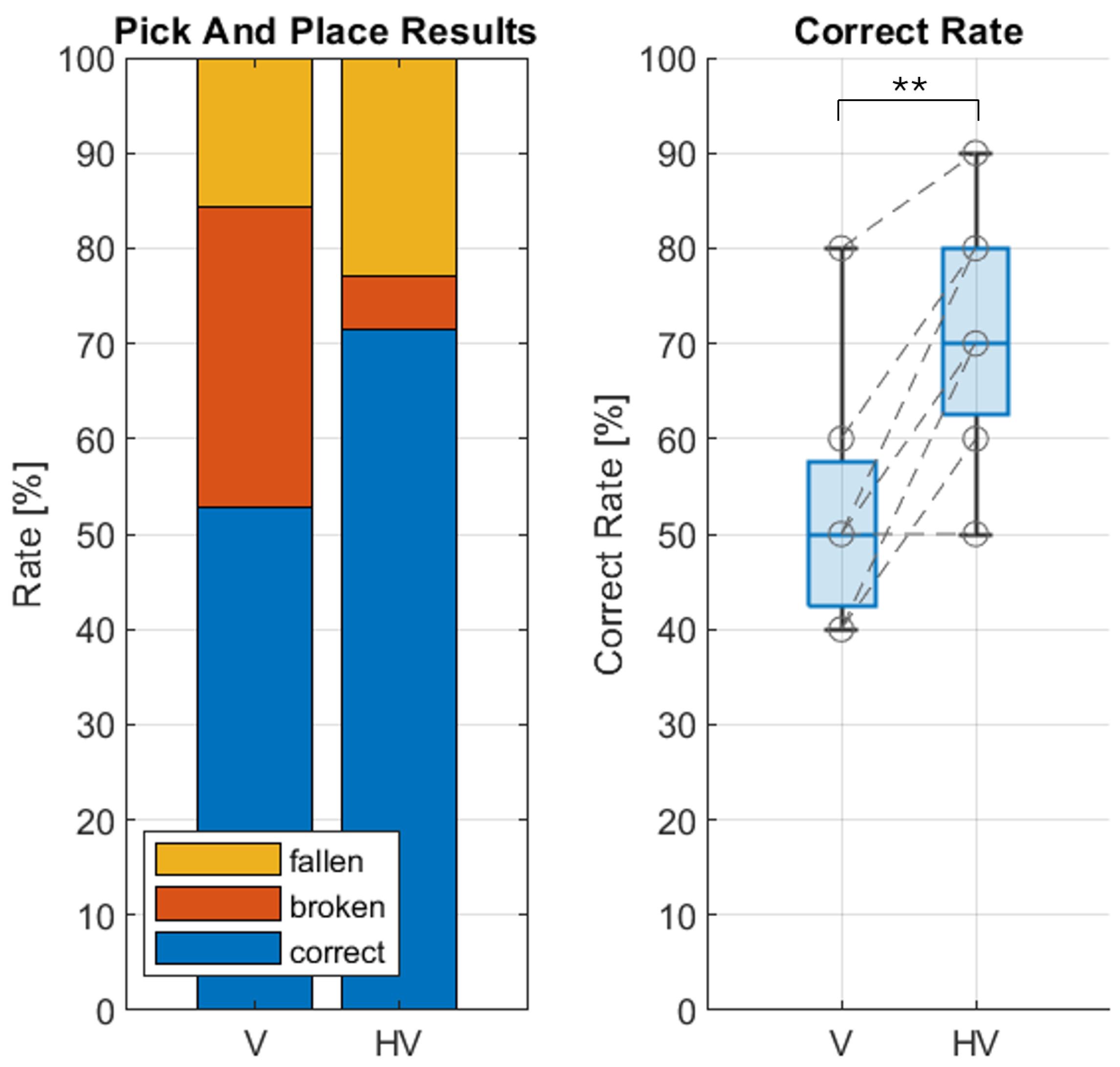

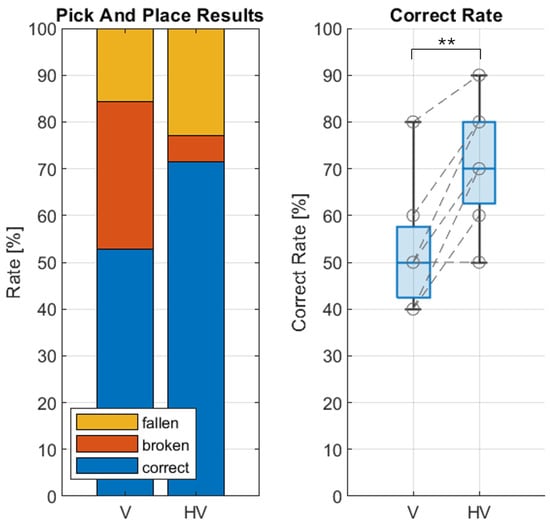

Figure 8 compares the task performance of the two experimental conditions, averaged over subjects, as horizontal bar plots of the percentage of correctly placed, slipped, and virtually broken objects. Since the subjects had no prior experience with teleoperation systems, these results indicate that the proposed system was intuitive enough to be used after a very short training phase. One advantage of an exoskeleton interface is that it enables direct control of the body pose, providing interaction and robust tracking at the level of each body segment.

Figure 8.

Results of the pick-and-place tasks with respect to the correctly placed, fallen, or virtually broken objects (left). Plot of the correct rate results with circles and dotted lines showing the performance of each subject (right). On each box, the central mark indicates the median, and the bottom and top edges of the box indicate the 25th and 75th percentiles, respectively. The ** symbol represents a statistically significant difference with .

The task was also fully teleoperated, with vision mediated by a camera and a monitor, which may have altered or limited the perception of depth and self-location in the remote environment. This, however, better resembled the envisaged real teleoperation applications. Future developments of the setup could include stereoscopic vision, although calibrating stereoscopic video streaming and visualizing it effectively for the user is not straightforward and requires dedicated development.

Regarding the limitations of the setup, the sensitive force sensors at the robotic fingertips feature a small hemispherical rubber dome, which offers only a small contact surface and thus little resistance to the object pivoting around the contact point. During the experiment, we observed that the object could be grasped stably with a pinch grasp; yet, depending on the grasping point, it oscillated visibly under gravity upon lifting, around the axis connecting the thumb and index contact points. This may have induced a more cautious manipulation behavior.

As a further limitation, manipulation was restricted to a pinch grasping task, with tactile feedback at the thumb and index finger only. This restriction was due to the complexity of the instrumentation; in principle, tactile feedback can be extended to the other fingers in future developments.

The number of successfully picked-and-placed objects increased significantly when visuo-haptic feedback was provided ( correct trials in the HV, in the V condition, ). Regarding incorrect trials, interestingly, the V condition yielded a relatively high number of broken objects compared with fallen objects ( broken, fallen). This trend can be explained by the information the subjects could retrieve from visual feedback in the V condition: since the grasped object was rigid, the modulation of the grasping force could not be observed visually, whereas close-to-slip conditions could be detected through subtle movements of the object. Rigid objects were chosen to better resemble the majority of manipulated physical objects, resulting in a more difficult yet more realistic manipulation task. Conversely, compliant objects might have biased the V condition and made the overall manipulation easier.

The HV condition yielded a percentage of fallen objects and just broken objects. Here, the combined kinaesthetic and tactile feedback provided information about the interaction force, allowing participants to modulate their grasp accordingly. With the more informative feedback in the HV condition, subjects appeared to adopt a different task execution strategy, reducing the grasping force closer to the slip limit (rather than keeping it balanced between the slip limit and the breaking threshold). In general, this behavior agrees with natural grasping: it has been shown that during natural execution of a grasping task, forces are held just above the minimum level required by static friction to avoid slippage [51], while in conditions where sensory feedback is limited, such as in virtual environments, the safety margin of the grip force above the level required to grasp and lift an object increases. Compared with a previous study [52], it is interesting to note that in the V condition, interacting with a real remote cube reduced the correct rate relative to a similar V condition performed with a virtual cube (it was ). Conversely, adding haptic feedback restored the outcome of the test: the correct rate in the HV condition agrees with that of the previous study performed in a virtual environment (it was ). The reduction in broken cubes due to haptic feedback is consistent with previous findings and of similar magnitude to that found in [32], where a comparable pick-and-place study was performed in a modified box-and-block setup by amputees using a hand prosthesis with a haptic feedback cue.
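The idea of a grip force held just above the slip limit can be quantified with the standard static friction model for a two-digit pinch: with two opposing contacts of friction coefficient μ supporting an object of mass m, the object slips when the normal force per digit falls below F_slip = m g / (2 μ). The sketch below computes that limit, a relative safety margin, and the grip force midway between slip and breaking. The object mass, friction coefficient, and breaking threshold are hypothetical, and inertial loads during lifting are ignored.

```python
G = 9.81  # gravitational acceleration [m/s^2]


def slip_force_n(mass_kg: float, mu: float) -> float:
    """Minimum normal force per digit to hold an object statically in a two-digit pinch.

    Two opposing contacts each provide up to mu * F_n of tangential friction,
    so static equilibrium requires 2 * mu * F_n >= m * g.
    """
    if mass_kg < 0 or mu <= 0:
        raise ValueError("mass must be non-negative and mu positive")
    return mass_kg * G / (2.0 * mu)


def relative_safety_margin(grip_force_n: float, mass_kg: float, mu: float) -> float:
    """Excess grip force above the slip limit, as a fraction of the applied grip force."""
    if grip_force_n <= 0:
        raise ValueError("grip force must be positive")
    return (grip_force_n - slip_force_n(mass_kg, mu)) / grip_force_n


def balanced_grip_n(slip_n: float, break_n: float) -> float:
    """Grip force midway between the slip limit and a breaking threshold."""
    return 0.5 * (slip_n + break_n)


if __name__ == "__main__":
    # Hypothetical values: 100 g object, mu = 0.5, breaking threshold 5 N.
    f_slip = slip_force_n(0.1, 0.5)
    print(round(f_slip, 3))                                   # 0.981
    print(round(relative_safety_margin(1.962, 0.1, 0.5), 3))  # 0.5
    print(round(balanced_grip_n(f_slip, 5.0), 2))             # 2.99
```

In these terms, the HV behavior described above corresponds to a smaller relative safety margin, with grip forces closer to `slip_force_n` than to `balanced_grip_n`.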

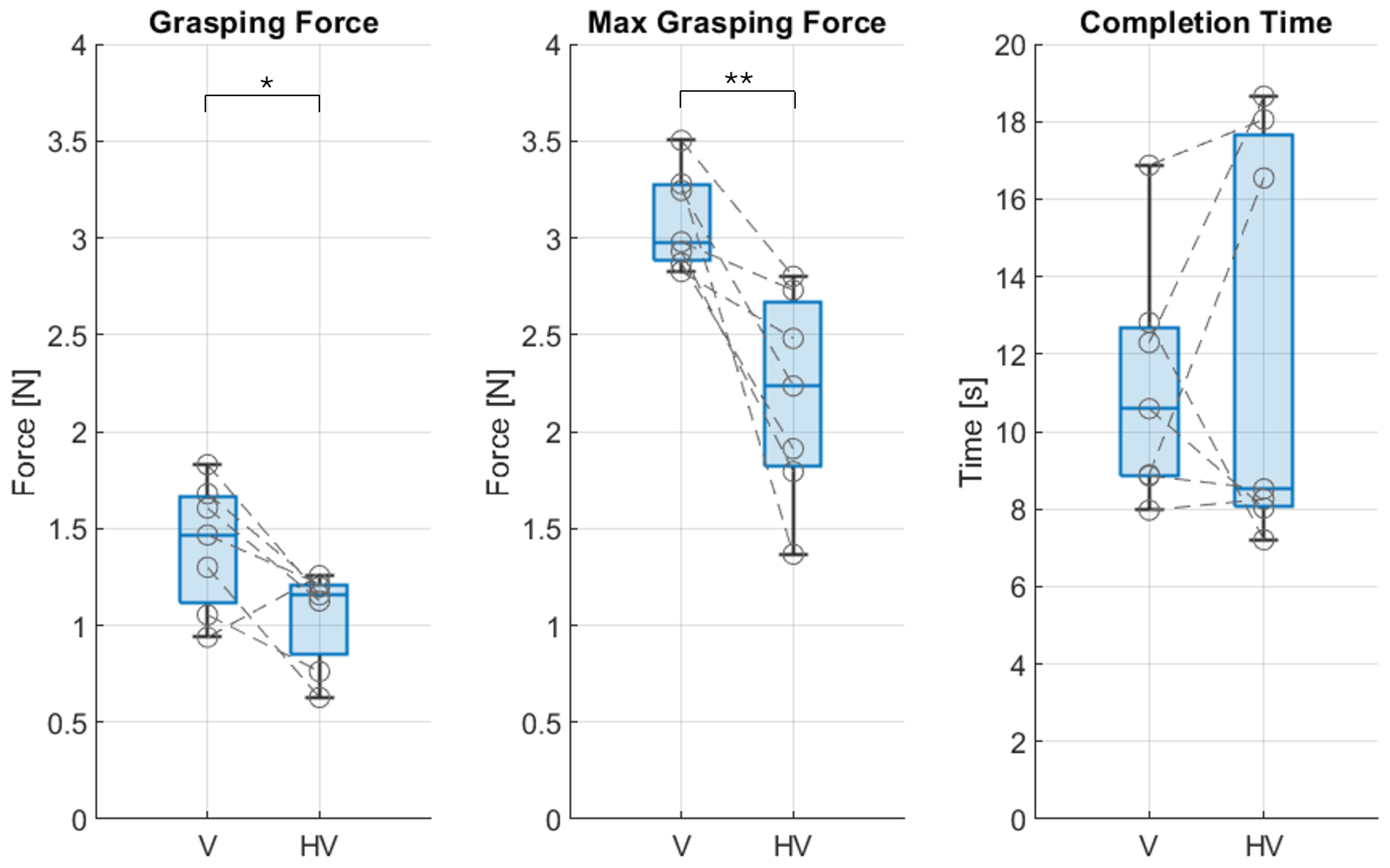

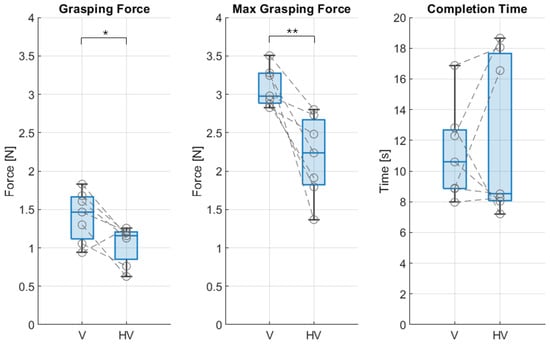

This trend also emerges in the average and peak force metrics measured during task execution. The box plots in Figure 9 show that users significantly decreased the interaction forces when haptic feedback was provided (mean force, V: N HV: N, ; peak force, V: N HV: N, ).
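Mean and peak grasping forces of this kind can be extracted from each sampled force trace. The sketch below assumes uniformly sampled grip force, restricts the computation to samples above a hypothetical contact threshold `CONTACT_THRESHOLD_N` to isolate the grasp phase, and averages per-trial values over a subject's successful trials, as in Figure 9. The processing pipeline actually used in the study is not described in this section.

```python
from statistics import fmean
from typing import List, Sequence, Tuple

# Hypothetical force level [N] above which the object is considered grasped.
CONTACT_THRESHOLD_N = 0.1


def force_metrics(force_n: Sequence[float],
                  contact_threshold: float = CONTACT_THRESHOLD_N) -> Tuple[float, float]:
    """Return (mean, peak) grip force over the in-contact samples of one trial.

    With uniform sampling, the sample mean equals the time-averaged force.
    """
    in_contact = [f for f in force_n if f > contact_threshold]
    if not in_contact:
        return 0.0, 0.0
    return fmean(in_contact), max(in_contact)


def subject_summary(successful_traces: List[Sequence[float]]) -> Tuple[float, float]:
    """Average per-trial mean and peak forces over one subject's successful trials."""
    if not successful_traces:
        raise ValueError("no successful trials")
    metrics = [force_metrics(trace) for trace in successful_traces]
    return fmean([m[0] for m in metrics]), fmean([m[1] for m in metrics])


if __name__ == "__main__":
    # Illustrative traces only, not experimental data.
    traces = [[0.0, 1.0, 3.0, 2.0, 0.05], [0.0, 2.0, 2.0, 0.0]]
    print(subject_summary(traces))  # (2.0, 2.5)
```

One summary pair per subject and condition is what the per-subject markers in a plot such as Figure 9 would display.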

Figure 9.

Pick-and-place task results: average grasping force (left), peak grasping force (middle), and completion time (right). Only successful trials are considered. In each box, circles and dotted lines show the results of individual subjects, the central mark indicates the median, and the bottom and top edges of the box indicate the 25th and 75th percentiles, respectively. The * and ** symbols denote statistically significant differences with and , respectively.

Regarding completion time, the additional haptic feedback did not make task execution faster (completion time, V: vs. HV: s). This can be explained by several factors. While haptic feedback could help subjects modulate the force once the object was grasped, it did not add spatial information to the visual feedback when approaching the object and pre-shaping the hand. Moreover, the additional feedback may require greater mental effort to be processed and used effectively, especially during the learning period of untrained users. Compared with a pick-and-place task performed in the first person, however, completion times were remarkably longer. As qualitatively observed by the experimenters, subjects were particularly cautious in performing the task, owing to the limited feedback information (both visual and haptic) compared with first-person execution and possibly to concerns about collisions between the teleoperated robotic hand and the rigid platforms.

Regarding the possible effects of feedback latency, teleoperation was performed locally through a wired Ethernet connection, hence with low latency (typically below 10 ms). The main limits on the bandwidth and dynamics of the system were set by the geared servomotors of the robotic hand and hand exoskeleton modules. Owing to their relatively high reduction ratio, these compact embedded actuators typically show step-response rise times on the order of 0.1 s, as seen in the response curves of previous studies [47,50].
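To put the 0.1 s rise time in perspective, the servo dynamics can be approximated, as an assumption, by a first-order lag. The 10-90 % rise time is then t_r = τ ln 9 ≈ 2.2 τ and the -3 dB bandwidth is f_c = 1/(2πτ) ≈ 0.35/t_r, roughly 3.5 Hz for t_r = 0.1 s. This is far below the frequency content of the fingertip transients that the dedicated tactile actuators are meant to render. A pure transport delay T adds a phase lag of 360 f T degrees. The sketch below evaluates both quantities. Real geared servos under position control are generally higher-order and nonlinear, so these figures are order-of-magnitude estimates only.

```python
import math


def first_order_bandwidth_hz(rise_time_s: float) -> float:
    """-3 dB bandwidth of a first-order lag given its 10-90 % step rise time."""
    if rise_time_s <= 0:
        raise ValueError("rise time must be positive")
    tau = rise_time_s / math.log(9.0)  # t_r = tau * ln(9) for a first-order lag
    return 1.0 / (2.0 * math.pi * tau)


def delay_phase_lag_deg(freq_hz: float, delay_s: float) -> float:
    """Phase lag (degrees) introduced by a pure transport delay at freq_hz."""
    return 360.0 * freq_hz * delay_s


if __name__ == "__main__":
    f_c = first_order_bandwidth_hz(0.1)  # servo rise time of about 0.1 s
    print(f"{f_c:.1f} Hz")               # 3.5 Hz
    # Phase lag of a 10 ms network delay at that bandwidth.
    print(f"{delay_phase_lag_deg(f_c, 0.010):.1f} deg")  # 12.6 deg
```

Under these assumptions, a 10 ms delay costs only about 13 degrees of phase at the servo bandwidth. This supports the view that actuator dynamics, rather than network latency, were the dominant limitation.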

Regarding possible learning effects, the order of the V and HV sessions was randomized for each subject. Yet, given the relatively small sample size, possible order effects were further tested: no significant difference emerged for any of the evaluated metrics when sessions were grouped by order rather than by feedback condition.
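A check of this kind regroups each subject's two sessions by presentation order (first vs. second) instead of by feedback condition, then tests the paired difference. The test used in the study is not stated in this section. The sketch below substitutes an exact sign-flip permutation test on the mean paired difference, which suits small samples because all 2^n sign patterns can be enumerated. The per-subject record layout is hypothetical.

```python
from itertools import product
from statistics import fmean
from typing import Dict, List, Sequence, Tuple


def regroup_by_order(subjects: List[Dict]) -> Tuple[List[float], List[float]]:
    """Split per-subject metrics into first-session and second-session values.

    Each record is assumed (hypothetically) to look like
    {"order": ("V", "HV"), "V": metric_in_V, "HV": metric_in_HV}.
    """
    first = [s[s["order"][0]] for s in subjects]
    second = [s[s["order"][1]] for s in subjects]
    return first, second


def sign_flip_pvalue(diffs: Sequence[float]) -> float:
    """Exact two-sided paired permutation (sign-flip) test on the mean difference."""
    if not diffs:
        raise ValueError("need at least one paired difference")
    observed = abs(fmean(diffs))
    extreme = 0
    patterns = 0
    for signs in product((1.0, -1.0), repeat=len(diffs)):
        stat = abs(fmean([s * d for s, d in zip(signs, diffs)]))
        if stat >= observed - 1e-12:  # tolerance for floating-point ties
            extreme += 1
        patterns += 1
    return extreme / patterns


def order_effect_pvalue(subjects: List[Dict]) -> float:
    """p-value for a difference between second and first sessions, ignoring condition."""
    first, second = regroup_by_order(subjects)
    return sign_flip_pvalue([b - a for a, b in zip(first, second)])


if __name__ == "__main__":
    # Illustrative per-subject mean forces [N], not experimental data.
    subjects = [
        {"order": ("V", "HV"), "V": 3.1, "HV": 2.2},
        {"order": ("HV", "V"), "V": 2.9, "HV": 2.4},
        {"order": ("V", "HV"), "V": 3.4, "HV": 2.6},
        {"order": ("HV", "V"), "V": 3.0, "HV": 2.1},
    ]
    print(order_effect_pvalue(subjects))
```

Because randomization balances the two orders, a genuine feedback effect largely cancels in the order grouping. A small p-value here would therefore point to learning or fatigue rather than to the feedback condition.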

5. Conclusions

This work investigated combined kinaesthetic and tactile feedback at the fingers, with the aim of improving perception in teleoperation tasks. To this end, we proposed a full upper limb exoskeleton interface extended with dedicated actuators for fine tactile feedback. In the literature, tactile feedback is often provided alone, in VR or in lightweight teleoperation setups, while kinaesthetic feedback interfaces, such as exoskeletons or robotic manipulators, rarely offer the bandwidth and linearity needed to properly render tactile information.

We experimentally evaluated the provided feedback, and the proposed operator interface in general, in a teleoperated pick-and-place task requiring precise modulation of the pinching contact forces. The results showed a positive and significant effect of haptic feedback on the correct rate, i.e., the proportion of objects picked and placed at the target location without falling and without exceeding the maximum force threshold. The balance between fallen and broken objects and the measured average forces also highlight a different task execution strategy adopted by subjects with haptic feedback: while with visual feedback only, subjects were prone to over-squeezing the object, with haptic feedback, forces were kept closer to the minimum level required to avoid slippage. The latter behavior better resembles natural human grasping, where forces are held just above the level required by static friction to maintain a stable grasp.

Yet, compared with a pick-and-place task performed in the first person, the task was carried out cautiously and took noticeably longer. This indicates that limitations remain in the richness of the visual and haptic perception provided. From a broader perspective, although the relatively complex setup includes a full upper limb exoskeleton and linear tactile units, the resulting perception is still far from the natural sense of touch. In particular, at the fingerpads, the variety of mechanical stimuli also includes multiple degrees of freedom, spatially distributed cues, and continuous slip sensations, which are hard to reproduce with compact, wearable devices. With these technological limitations in mind, there is room for further progress, through both the development of novel actuation principles and rendering methods and the investigation of which haptic stimuli are most informative for a given telemanipulation application.

Author Contributions

Conceptualization, D.L. and M.G.; formal analysis, M.B.; funding acquisition, D.L. and A.F.; investigation, D.L., M.G., S.M., M.B. and F.P.; methodology, D.L., M.G., S.M., M.B., D.C. and F.P.; software, S.M. and F.P.; writing—original draft preparation, D.L., M.G., M.B. and D.C.; writing—review and editing, A.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by project SUN—the European Union’s Horizon Europe research and innovation program under grant agreement No. 101092612.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and approved by the Ethical Review Board of the Scuola Superiore Sant’Anna, protocol number 152021.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Da Vinci. Intuitive Surgical Systems. Available online: https://www.intuitive.com/en-us/products-and-services/da-vinci/systems (accessed on 15 July 2023).
2. KHG, Kerntechnische Hilfsdienst GmbH. Available online: https://khgmbh.de (accessed on 15 July 2023).
3. Ministry of Defence, UK. British Army Receives Pioneering Bomb Disposal Robots. Available online: https://www.gov.uk/government/news/british-army-receives-pioneering-bomb-disposal-robots (accessed on 15 July 2023).
4. Patel, R.V.; Atashzar, S.F.; Tavakoli, M. Haptic feedback and force-based teleoperation in surgical robotics. Proc. IEEE 2022, 110, 1012–1027.
5. Porcini, F.; Chiaradia, D.; Marcheschi, S.; Solazzi, M.; Frisoli, A. Evaluation of an Exoskeleton-based Bimanual Teleoperation Architecture with Independently Passivated Slave Devices. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 10205–10211.
6. Chan, L.; Naghdy, F.; Stirling, D. Application of adaptive controllers in teleoperation systems: A survey. IEEE Trans. Hum.-Mach. Syst. 2014, 44, 337–352.
7. Anderson, R.; Spong, M. Asymptotic stability for force reflecting teleoperators with time delays. In Proceedings of the 1989 International Conference on Robotics and Automation, Scottsdale, AZ, USA, 14–19 May 1989; pp. 1618–1625.
8. Lawrence, D.A. Stability and transparency in bilateral teleoperation. In Proceedings of the 31st IEEE Conference on Decision and Control, Tucson, AZ, USA, 16–18 December 1992; pp. 2649–2655.
9. Mitra, P.; Niemeyer, G. Model-mediated telemanipulation. Int. J. Robot. Res. 2008, 27, 253–262.
10. Pacchierotti, C.; Scheggi, S.; Prattichizzo, D.; Misra, S. Haptic feedback for microrobotics applications: A review. Front. Robot. AI 2016, 3, 53.
11. Bolopion, A.; Régnier, S. A review of haptic feedback teleoperation systems for micromanipulation and microassembly. IEEE Trans. Autom. Sci. Eng. 2013, 10, 496–502.
12. Hulin, T.; Hertkorn, K.; Kremer, P.; Schätzle, S.; Artigas, J.; Sagardia, M.; Zacharias, F.; Preusche, C. The DLR bimanual haptic device with optimized workspace. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3441–3442.
13. Lenz, C.; Behnke, S. Bimanual telemanipulation with force and haptic feedback through an anthropomorphic avatar system. Robot. Auton. Syst. 2023, 161, 104338.
14. Luo, R.; Wang, C.; Keil, C.; Nguyen, D.; Mayne, H.; Alt, S.; Schwarm, E.; Mendoza, E.; Padır, T.; Whitney, J.P. Team Northeastern’s approach to ANA XPRIZE Avatar final testing: A holistic approach to telepresence and lessons learned. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 7054–7060.
15. Park, S.; Kim, J.; Lee, H.; Jo, M.; Gong, D.; Ju, D.; Won, D.; Kim, S.; Oh, J.; Jang, H.; et al. A Whole-Body Integrated AVATAR System: Implementation of Telepresence With Intuitive Control and Immersive Feedback. IEEE Robot. Autom. Mag. 2023, 2–10.
16. Cisneros-Limón, R.; Dallard, A.; Benallegue, M.; Kaneko, K.; Kaminaga, H.; Gergondet, P.; Tanguy, A.; Singh, R.P.; Sun, L.; Chen, Y.; et al. A cybernetic avatar system to embody human telepresence for connectivity, exploration, and skill transfer. Int. J. Soc. Robot. 2024, 1–28.
17. Marques, J.M.; Peng, J.C.; Naughton, P.; Zhu, Y.; Nam, J.S.; Hauser, K. Commodity Telepresence with Team AVATRINA’s Nursebot in the ANA Avatar XPRIZE Finals. In Proceedings of the 2nd Workshop Toward Robot Avatars, IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 1–3.
18. Klamt, T.; Schwarz, M.; Lenz, C.; Baccelliere, L.; Buongiorno, D.; Cichon, T.; DiGuardo, A.; Droeschel, D.; Gabardi, M.; Kamedula, M.; et al. Remote mobile manipulation with the centauro robot: Full-body telepresence and autonomous operator assistance. J. Field Robot. 2019, 37, 889–919.
19. Mallwitz, M.; Will, N.; Teiwes, J.; Kirchner, E.A. The capio active upper body exoskeleton and its application for teleoperation. In Proceedings of the 13th Symposium on Advanced Space Technologies in Robotics and Automation. ESA/Estec Symposium on Advanced Space Technologies in Robotics and Automation (ASTRA-2015), Noordwijk, The Netherlands, 11–13 May 2015.
20. Sarac, M.; Solazzi, M.; Leonardis, D.; Sotgiu, E.; Bergamasco, M.; Frisoli, A. Design of an underactuated hand exoskeleton with joint estimation. In Advances in Italian Mechanism Science; Springer: Cham, Switzerland, 2017; pp. 97–105.
21. Wang, D.; Song, M.; Naqash, A.; Zheng, Y.; Xu, W.; Zhang, Y. Toward whole-hand kinesthetic feedback: A survey of force feedback gloves. IEEE Trans. Haptics 2018, 12, 189–204.
22. Baik, S.; Park, S.; Park, J. Haptic glove using tendon-driven soft robotic mechanism. Front. Bioeng. Biotechnol. 2020, 8, 541105.
23. Hosseini, M.; Sengül, A.; Pane, Y.; De Schutter, J.; Bruyninckx, H. Exoten-glove: A force-feedback haptic glove based on twisted string actuation system. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 320–327.
24. Xiong, Q.; Liang, X.; Wei, D.; Wang, H.; Zhu, R.; Wang, T.; Mao, J.; Wang, H. So-EAGlove: VR haptic glove rendering softness sensation with force-tunable electrostatic adhesive brakes. IEEE Trans. Robot. 2022, 38, 3450–3462.
25. Zhang, Y.; Wang, D.; Wang, Z.; Zhang, Y.; Xiao, J. Passive force-feedback gloves with joint-based variable impedance using layer jamming. IEEE Trans. Haptics 2019, 12, 269–280.
26. Westling, G.; Johansson, R.S. Responses in glabrous skin mechanoreceptors during precision grip in humans. Exp. Brain Res. 1987, 66, 128–140.
27. Jansson, G.; Monaci, L. Identification of real objects under conditions similar to those in haptic displays: Providing spatially distributed information at the contact areas is more important than increasing the number of areas. Virtual Real. 2006, 9, 243–249.
28. Edin, B.B.; Westling, G.; Johansson, R.S. Independent control of human finger-tip forces at individual digits during precision lifting. J. Physiol. 1992, 450, 547–564.
29. Preechayasomboon, P.; Rombokas, E. Haplets: Finger-worn wireless and low-encumbrance vibrotactile haptic feedback for virtual and augmented reality. Front. Virtual Real. 2021, 2, 738613.
30. Jung, Y.H.; Yoo, J.Y.; Vázquez-Guardado, A.; Kim, J.H.; Kim, J.T.; Luan, H.; Park, M.; Lim, J.; Shin, H.S.; Su, C.J.; et al. A wireless haptic interface for programmable patterns of touch across large areas of the skin. Nat. Electron. 2022, 5, 374–385.
31. Luo, H.; Wang, Z.; Wang, Z.; Zhang, Y.; Wang, D. Perceptual Localization Performance of the Whole Hand Vibrotactile Funneling Illusion. IEEE Trans. Haptics 2023, 16, 240–250.
32. Clemente, F.; D’Alonzo, M.; Controzzi, M.; Edin, B.B.; Cipriani, C. Non-invasive, temporally discrete feedback of object contact and release improves grasp control of closed-loop myoelectric transradial prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 24, 1314–1322.
33. Minamizawa, K.; Fukamachi, S.; Kajimoto, H.; Kawakami, N.; Tachi, S. Gravity grabber: Wearable haptic display to present virtual mass sensation. In Proceedings of the SIGGRAPH07: Special Interest Group on Computer Graphics and Interactive Techniques Conference, San Diego, CA, USA, 5–9 August 2007; p. 8-es.
34. Schorr, S.B.; Okamura, A.M. Three-dimensional skin deformation as force substitution: Wearable device design and performance during haptic exploration of virtual environments. IEEE Trans. Haptics 2017, 10, 418–430.
35. Giraud, F.H.; Joshi, S.; Paik, J. Haptigami: A fingertip haptic interface with vibrotactile and 3-DoF cutaneous force feedback. IEEE Trans. Haptics 2021, 15, 131–141.
36. Musić, S.; Salvietti, G.; Chinello, F.; Prattichizzo, D.; Hirche, S. Human–robot team interaction through wearable haptics for cooperative manipulation. IEEE Trans. Haptics 2019, 12, 350–362.
37. McMahan, W.; Gewirtz, J.; Standish, D.; Martin, P.; Kunkel, J.A.; Lilavois, M.; Wedmid, A.; Lee, D.I.; Kuchenbecker, K.J. Tool contact acceleration feedback for telerobotic surgery. IEEE Trans. Haptics 2011, 4, 210–220.
38. Pacchierotti, C.; Prattichizzo, D.; Kuchenbecker, K.J. Displaying sensed tactile cues with a fingertip haptic device. IEEE Trans. Haptics 2015, 8, 384–396.
39. Palagi, M.; Santamato, G.; Chiaradia, D.; Gabardi, M.; Marcheschi, S.; Solazzi, M.; Frisoli, A.; Leonardis, D. A Mechanical Hand-Tracking System with Tactile Feedback Designed for Telemanipulation. IEEE Trans. Haptics 2023, 16, 594–601.
40. Liarokapis, M.V.; Artemiadis, P.K.; Kyriakopoulos, K.J. Telemanipulation with the DLR/HIT II robot hand using a dataglove and a low cost force feedback device. In Proceedings of the 21st Mediterranean Conference on Control and Automation, Platanias, Greece, 25–28 June 2013; pp. 431–436.
41. Martinez-Hernandez, U.; Szollosy, M.; Boorman, L.W.; Kerdegari, H.; Prescott, T.J. Towards a wearable interface for immersive telepresence in robotics. In Interactivity, Game Creation, Design, Learning, and Innovation, Proceedings of the 5th International Conference, ArtsIT 2016, and First International Conference, DLI 2016, Esbjerg, Denmark, 2–3 May 2016; Proceedings 5; Springer: Cham, Switzerland, 2017; pp. 65–73.
42. Bimbo, J.; Pacchierotti, C.; Aggravi, M.; Tsagarakis, N.; Prattichizzo, D. Teleoperation in cluttered environments using wearable haptic feedback. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 3401–3408.
43. Campanelli, A.; Tiboni, M.; Verité, F.; Saudrais, C.; Mick, S.; Jarrassé, N. Innovative Multi Vibrotactile-Skin Stretch (MuViSS) haptic device for sensory motor feedback from a robotic prosthetic hand. Mechatronics 2024, 99, 103161.
44. Barontini, F.; Obermeier, A.; Catalano, M.G.; Fani, S.; Grioli, G.; Bianchi, M.; Bicchi, A.; Jakubowitz, E. Tactile Feedback in Upper Limb Prosthetics: A Pilot Study on Trans-Radial Amputees Comparing Different Haptic Modalities. IEEE Trans. Haptics 2023, 16, 760–769.
45. Pirondini, E.; Coscia, M.; Marcheschi, S.; Roas, G.; Salsedo, F.; Frisoli, A.; Bergamasco, M.; Micera, S. Evaluation of a new exoskeleton for upper limb post-stroke neuro-rehabilitation: Preliminary results. In Replace, Repair, Restore, Relieve–Bridging Clinical and Engineering Solutions in Neurorehabilitation; Springer: Cham, Switzerland, 2014; pp. 637–645.
46. Buongiorno, D.; Sotgiu, E.; Leonardis, D.; Marcheschi, S.; Solazzi, M.; Frisoli, A. WRES: A novel 3 DoF WRist ExoSkeleton with tendon-driven differential transmission for neuro-rehabilitation and teleoperation. IEEE Robot. Autom. Lett. 2018, 3, 2152–2159.
47. Gabardi, M.; Solazzi, M.; Leonardis, D.; Frisoli, A. Design and evaluation of a novel 5 DoF underactuated thumb-exoskeleton. IEEE Robot. Autom. Lett. 2018, 3, 2322–2329.
48. Gabardi, M.; Solazzi, M.; Leonardis, D.; Frisoli, A. A new wearable fingertip haptic interface for the rendering of virtual shapes and surface features. In Proceedings of the 2016 IEEE Haptics Symposium (HAPTICS), Philadelphia, PA, USA, 8–11 April 2016; pp. 140–146.
49. UR5, Universal Robots. Available online: https://www.universal-robots.com/products/ur5-robot/ (accessed on 27 May 2024).
50. Leonardis, D.; Frisoli, A. CORA hand: A 3D printed robotic hand designed for robustness and compliance. Meccanica 2020, 55, 1623–1638.
51. Cole, K.J.; Abbs, J.H. Grip force adjustments evoked by load force perturbations of a grasped object. J. Neurophysiol. 1988, 60, 1513–1522.
52. Leonardis, D.; Solazzi, M.; Bortone, I.; Frisoli, A. A 3-RSR haptic wearable device for rendering fingertip contact forces. IEEE Trans. Haptics 2016, 10, 305–316.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).